4.4.1. Comparison of Different Algorithms

This paper uses YOLOv11n as the baseline for improvement. To validate the effectiveness of the proposed YOLO-FAD algorithm, we compared it against a range of existing and improved models. The specific selections are as follows:

YOLO series models: YOLOv8n [23], YOLOv9s [24], YOLOv10n [25], YOLOv12 [26].

RT-DETR [27] series models: RT-DETR-l, RT-DETR-x, RT-DETR-Resnet50, RT-DETR-Resnet101.

Other models: Improved Faster-RCNN [28], some improved models based on SOTA such as BiTNet [29] and DHC-YOLO [30].

To ensure a fair comparison, all models (YOLOv8n, YOLOv9s, YOLOv10n, YOLOv11n, YOLOv12, the RT-DETR series, Improved Faster-RCNN, BiTNet, and DHC-YOLO) were fully retrained on the dataset presented in this paper, with training parameters and hyperparameters kept consistent across models. The input image resolution was uniformly set to 640 × 640. The optimizer was stochastic gradient descent (SGD) with a momentum of 0.9 and a weight decay coefficient of 0.0005. The initial learning rate was 0.01, scheduled with the OneCycleLR strategy. Training ran for 300 epochs in total, of which the first 3 formed a warmup phase in which the learning rate increased linearly from 0.001 to 0.01. The batch size was set to 16, and mixed-precision training (FP16) was used to accelerate training.
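The warmup phase described above can be sketched in a few lines of Python. This is only an illustration, not the training code used in the experiments: the function name `warmup_lr` and the use of a fractional epoch value are assumptions, and the OneCycleLR annealing that follows the warmup is deliberately not modeled.

```python
def warmup_lr(epoch: float, warmup_epochs: float = 3.0,
              start_lr: float = 0.001, peak_lr: float = 0.01) -> float:
    """Linear warmup from start_lr to peak_lr over the first warmup_epochs.

    `epoch` may be fractional (1.5 means halfway through the second epoch).
    After the warmup this sketch simply holds peak_lr; the subsequent
    OneCycleLR annealing is NOT reproduced here.
    """
    if epoch >= warmup_epochs:
        return peak_lr
    fraction = max(epoch, 0.0) / warmup_epochs
    return start_lr + fraction * (peak_lr - start_lr)


if __name__ == "__main__":
    for e in (0.0, 1.5, 3.0):
        print(f"epoch {e:.1f}: lr = {warmup_lr(e):.4f}")
```

Halfway through the warmup (epoch 1.5) the sketch yields a learning rate midway between 0.001 and 0.01.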

All models were initialized from officially released open-source pre-trained weights. For the four defect categories in this dataset, only the output dimension of the classification head was adjusted (from the default 80 classes to 4 classes). The remaining network architecture (e.g., backbone, neck) and model-specific settings (e.g., anchor box sizes, loss functions) retained the official defaults. Specifically, the encoder layers and decoder heads of the RT-DETR series models remained unchanged. For Improved Faster-RCNN, the Region Proposal Network (RPN) anchor sizes were adapted to the photovoltaic defect size range (16 × 16–128 × 128). GFLOPs, FPS, and parameter counts (Params) for all models were measured on identical hardware (NVIDIA RTX 3080 Ti GPU, Intel Core i9-12900K CPU). The batch size was set to 1 during testing so that all models were measured under the same conditions and device variations did not affect the comparison.

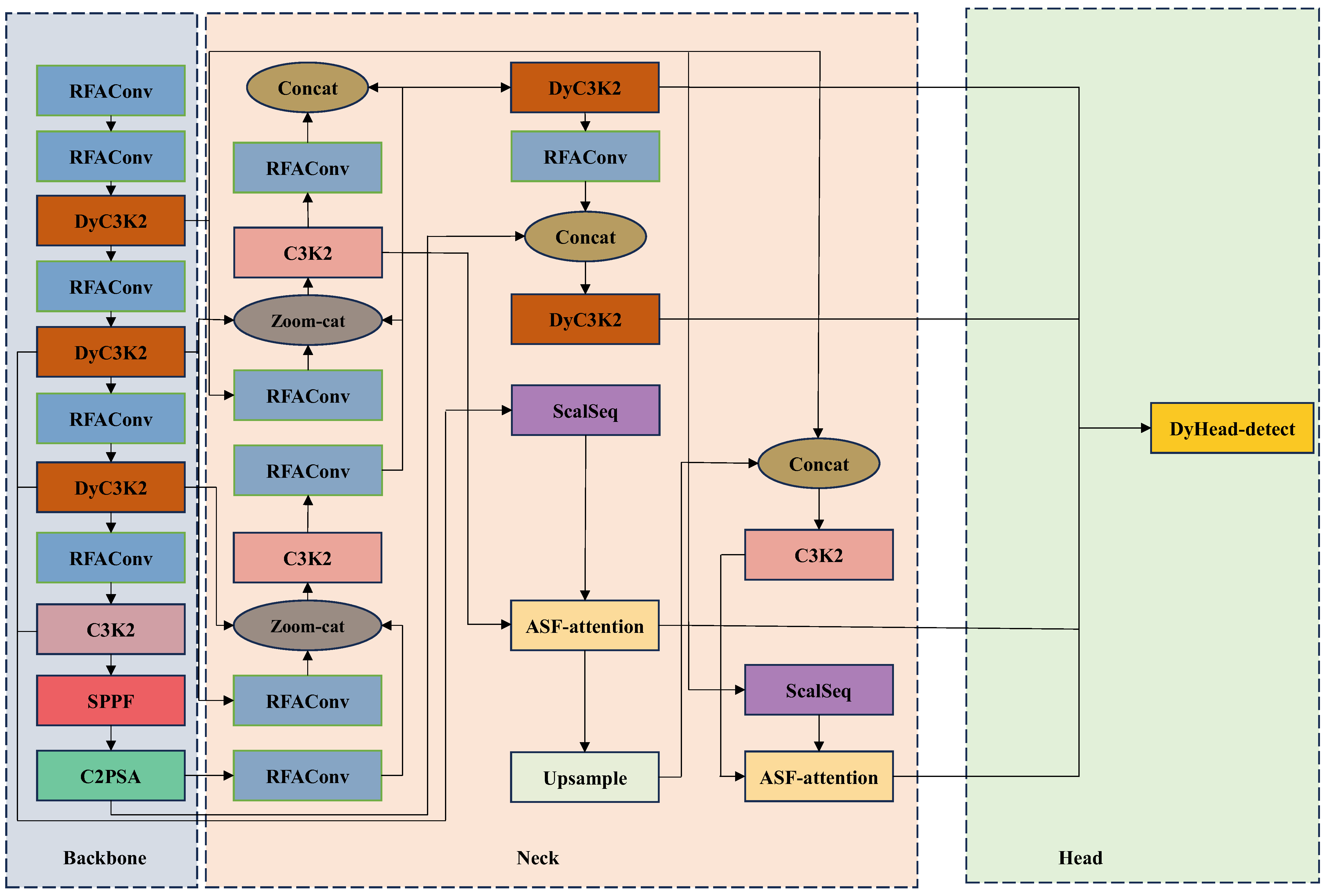

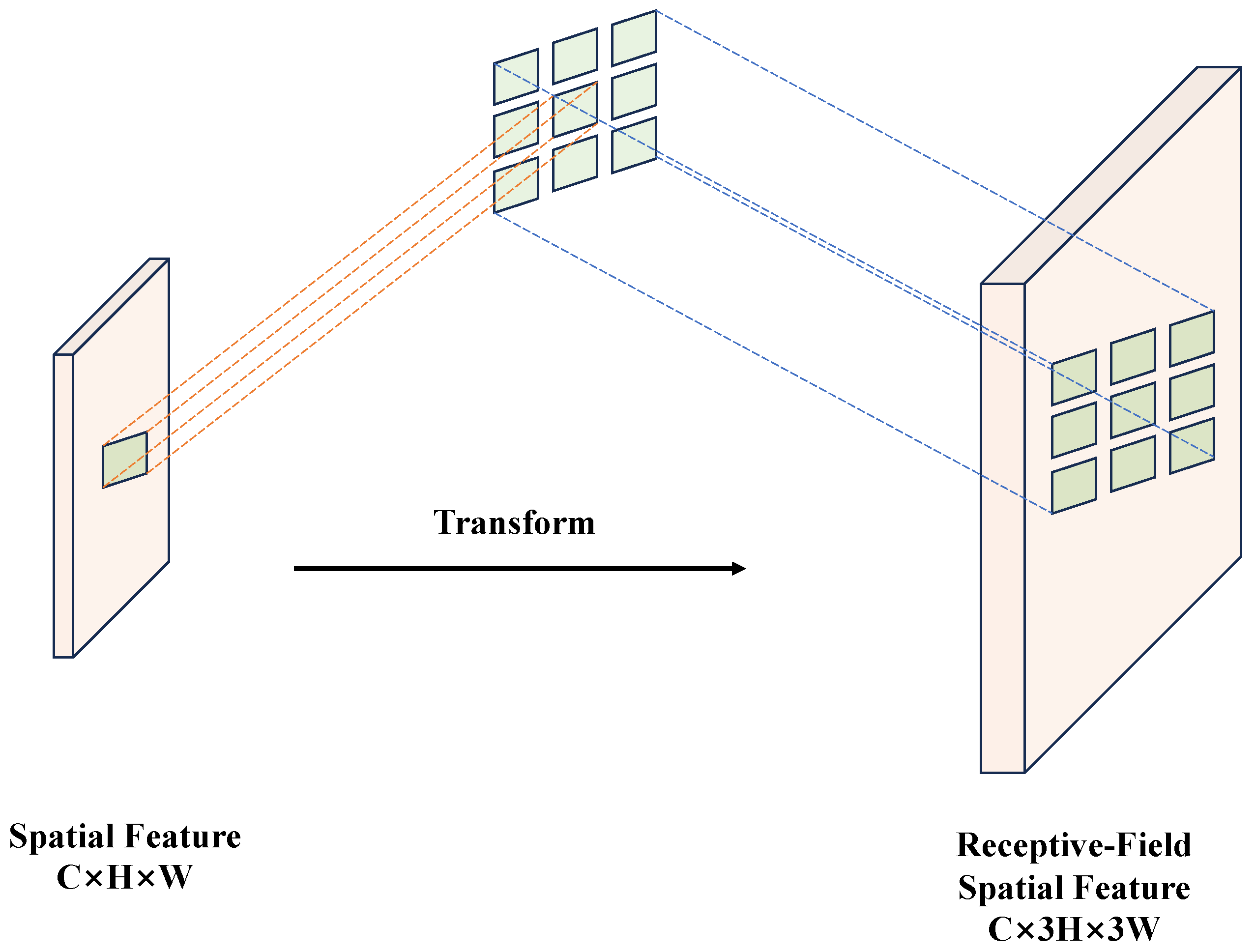

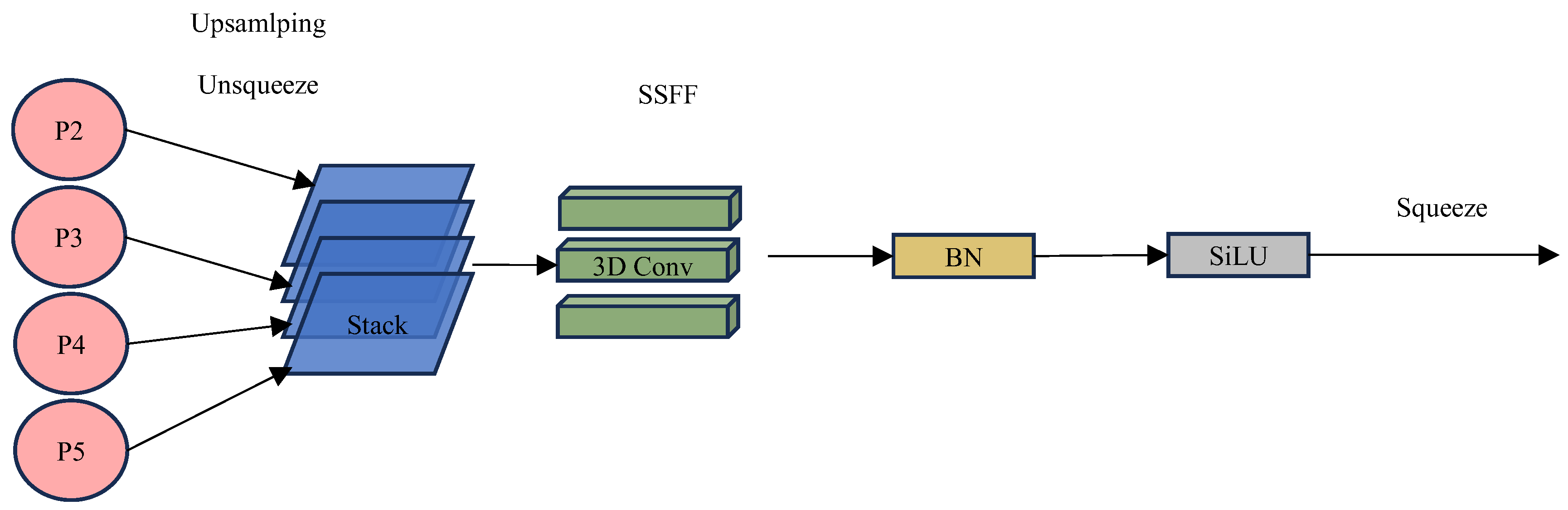

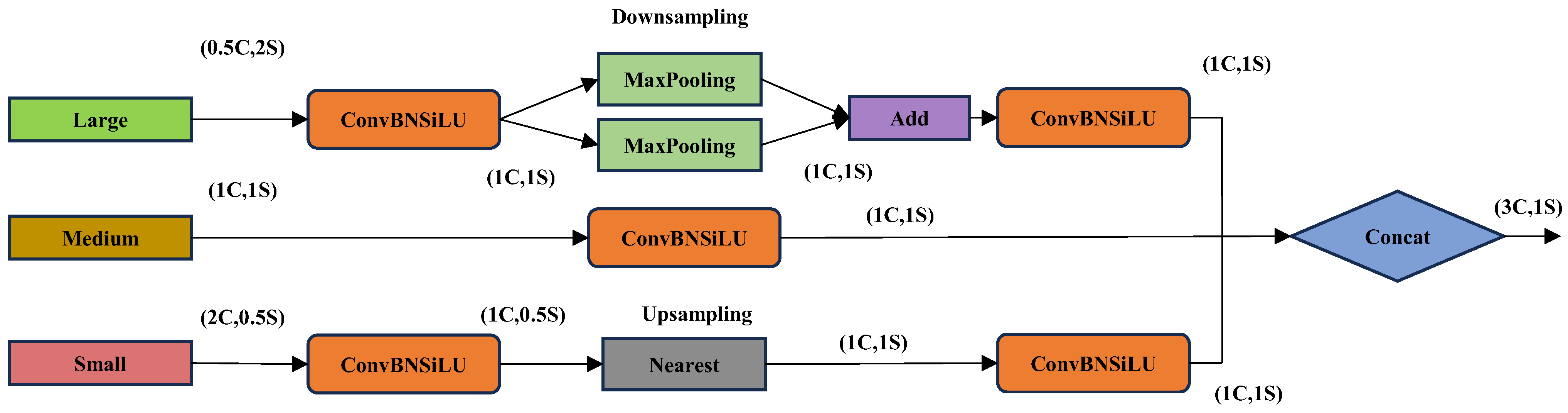

To validate the statistical significance of the performance differences, we conducted two-tailed t-tests on the mAP@0.5 values of YOLO-FAD and the comparison models, treating the 5-fold cross-validation results as independent samples. The results show that YOLO-FAD achieves a statistically significant improvement over YOLOv11n (p = 0.003 < 0.05), DHC-YOLO (p = 0.012 < 0.05), and BiTNet (p = 0.001 < 0.05). This indicates that the improvements are not due to random variability but to the effective integration of the RFAConv, ASF, DyC3K2, and DyHead modules.
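As an illustration of the kind of test described above, the following sketch computes a pooled-variance (Student) two-sample t statistic from two sets of five fold-level mAP@0.5 values. The fold values are hypothetical placeholders, not the paper's measurements, and the choice of the pooled-variance variant is an assumption, since the text does not state which t-test variant was used. The p-value is not computed; instead the statistic is compared with the standard two-tailed critical value for 8 degrees of freedom at α = 0.05.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Two-sample Student t statistic with pooled variance.

    Returns (t, degrees_of_freedom).
    """
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    standard_error = sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean(a) - mean(b)) / standard_error, n1 + n2 - 2


# Hypothetical 5-fold mAP@0.5 values (percent), for illustration only.
folds_proposed = [94.4, 94.5, 94.6, 94.7, 94.8]
folds_baseline = [91.4, 91.5, 91.6, 91.7, 91.8]

T_CRIT_TWO_TAILED_DF8 = 2.306  # alpha = 0.05, 8 degrees of freedom

if __name__ == "__main__":
    t, df = pooled_t_statistic(folds_proposed, folds_baseline)
    print(f"t = {t:.2f}, df = {df}, significant = {abs(t) > T_CRIT_TWO_TAILED_DF8}")
```

With real fold-level results, a statistics library would normally be used to obtain the exact p-value; the sketch only shows how the statistic is formed.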

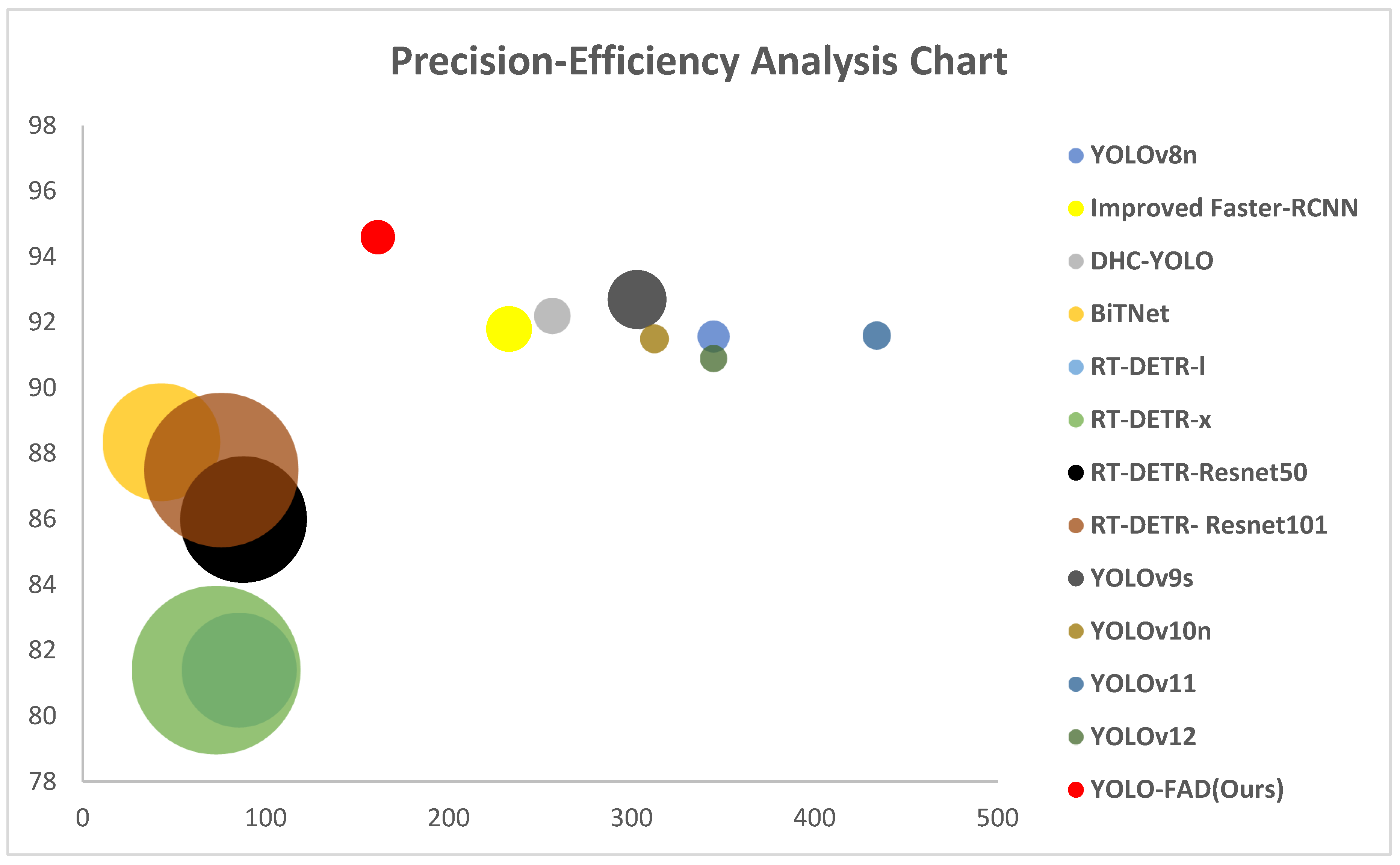

The specific comparison results are shown in Table 1.

1. YOLO Series Models

YOLOv8n: Precision of 91.38%, recall of 84.96%, and mAP@0.5 of 91.57%, with strong detection of cracks (99.2%) and battery strings (99.5%). Its low computational cost (8.1 GFLOPs), fast inference (344.8 FPS), and small parameter count (3 M) make it lightweight and efficient, suitable for photovoltaic module defect detection scenarios that demand high speed.

YOLOv9s: Precision of 85.38%, recall of 89.44%, and mAP@0.5 of 92.7%. Crack detection accuracy is 99.4%, while hotspot detection accuracy is 78.1%, leaving room for improvement. Computational cost: 26.7 GFLOPs; inference speed: 303 FPS; parameter count: 7.2 M. It is a standard-performance model within the YOLO series.

YOLOv10n: Precision of 90.7%, recall of 83.14%, and mAP@0.5 of 91.5%. Crack detection accuracy is 99.3% and hotspot detection accuracy is 76.8%. Computational cost: 6.5 GFLOPs; inference speed: 312.5 FPS; parameter count: 2.26 M. It is lightweight with good accuracy.

YOLOv11n: Precision of 89.89%, recall of 88.14%, and mAP@0.5 of 91.6%, a solid baseline for defect detection (e.g., hotspot detection at 75.2%). Computational cost: 6.3 GFLOPs; inference speed: 434 FPS (extremely fast); parameter count: 2.6 M. It serves as the baseline that YOLO-FAD improves upon and has a significant speed advantage.

YOLOv12: Precision of 82.9%, recall of 90%, and mAP@0.5 of 90.9%; battery string detection accuracy is slightly lower at 96.8%. Computational cost: 5.8 GFLOPs; inference speed: 344.8 FPS; parameter count: 2.39 M. Its accuracy fluctuates, but its efficiency is notable.

2. RT-DETR Series Models

RT-DETR-l: Precision of 76.6%, recall of 76.7%, and mAP@0.5 of 81.4%. Crack detection accuracy (97.5%) is acceptable, but hotspot detection accuracy (64.6%) is poor. Computational cost: 103.4 GFLOPs; inference speed: 85.5 FPS; parameter count: 32 M. Both detection performance and efficiency require improvement.

RT-DETR-x: Precision of 79%, recall of 76.5%, and mAP@0.5 of 81.4%. Crack detection accuracy (98.5%) is good, but hotspot detection accuracy (61.9%) is weak. Computational cost: 222.5 GFLOPs; inference speed: 72.9 FPS; parameter count: 65.5 M, a noticeable efficiency shortfall.

RT-DETR-Resnet50: Precision of 82.4%, recall of 82.5%, and mAP@0.5 of 86%. Crack detection accuracy is excellent (99.2%), while hotspot detection accuracy is average (68.5%). Computational cost: 125.6 GFLOPs; inference speed: 87.7 FPS; parameter count: 42 M. With the Resnet50 backbone, accuracy and efficiency are both moderate.

RT-DETR-Resnet101: Precision of 86.5%, recall of 81%, and mAP@0.5 of 87.5%. Crack detection accuracy is excellent (99.8%), but hotspot detection accuracy is insufficient (71%). Computational cost: 186.2 GFLOPs; inference speed: 75.75 FPS; parameter count: 61 M. Crack detection is strong, but overall efficiency is low.

3. Other Models

Improved Faster-RCNN: Precision of 90.4%, recall of 89.2%, and mAP@0.5 of 91.8%. Crack detection accuracy is excellent (98.6%), and hotspot detection accuracy is slightly weaker (81.3%). Computational cost: 16.2 GFLOPs; inference speed: 233 FPS; parameter count: 41.4 M (relatively large). As a traditional two-stage model, it delivers good accuracy at slightly lower efficiency.

BiTNet: Precision of 88.6%, recall of 82.8%, and mAP@0.5 of 88.35% (relatively low); per-defect performance is average (e.g., hot spots at 76.4%). Computational cost: 108.6 GFLOPs; inference speed: 42.98 FPS (slow); parameter count: 17.68 M. Overall performance is slightly inferior.

DHC-YOLO: Precision of 89.4%, recall of 87.2%, and mAP@0.5 of 92.2%; battery string detection accuracy is good (98.1%). Computational cost: 10.5 GFLOPs; inference speed: 256.7 FPS; parameter count: 9.8 M. It strikes a reasonable balance between accuracy and efficiency.

In photovoltaic module defect detection, YOLO-FAD shows clear performance advantages. Compared to YOLOv11n, it improves precision, recall, and mAP@0.5. For small-object defects such as hot spots in particular, detection accuracy rises from 75.2% to 85.3%. Detection accuracy for the other defect types (such as cracks and battery strings) also improves.

Compared to other models in the YOLO series (such as YOLOv11n), YOLO-FAD delivers better overall detection performance. Compared to the RT-DETR series, it is stronger in accuracy, small-object recognition, and the balance with efficiency. Compared to other detection frameworks (such as Improved Faster-RCNN), it achieves a better trade-off between detection accuracy and inference speed.

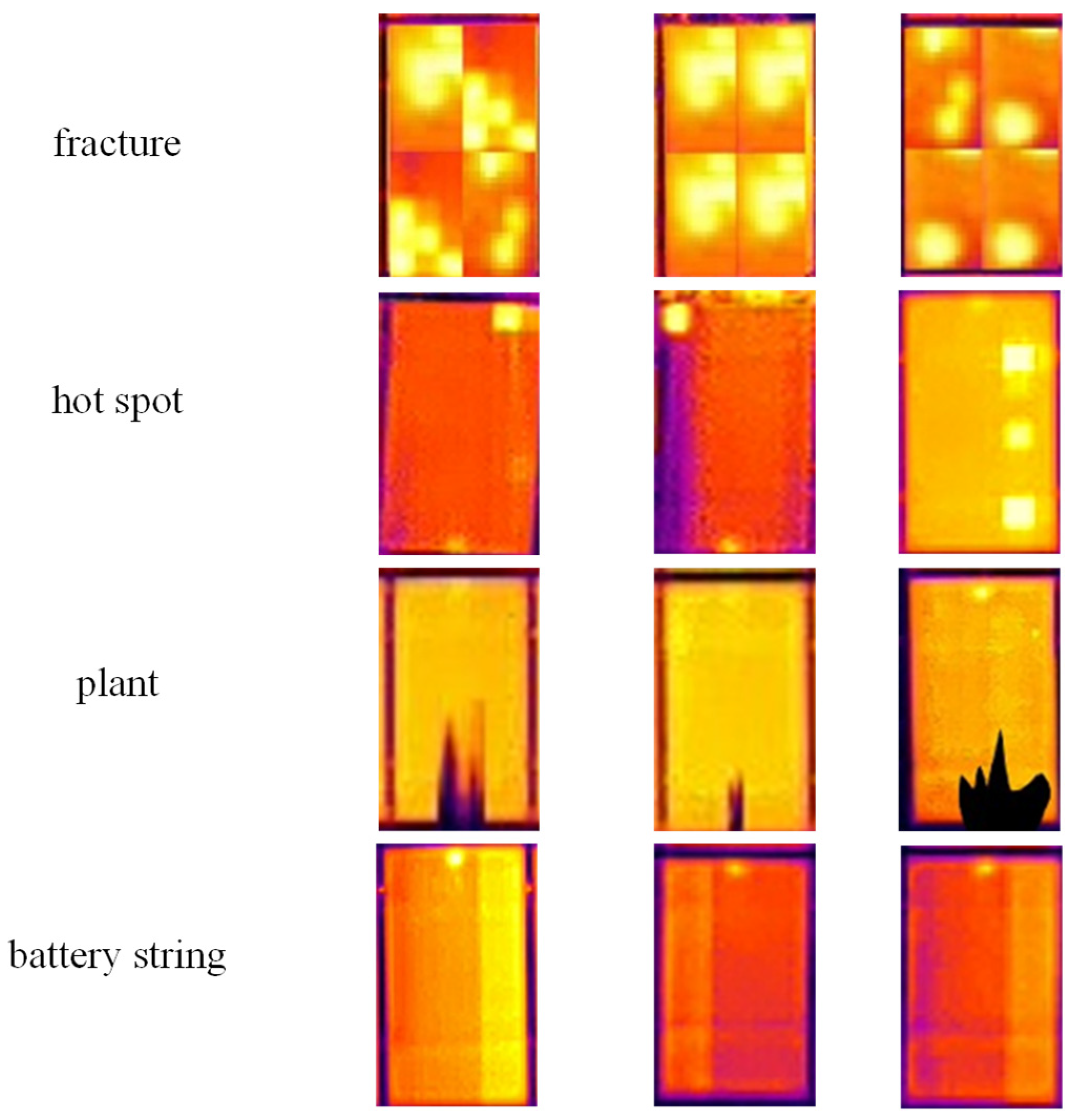

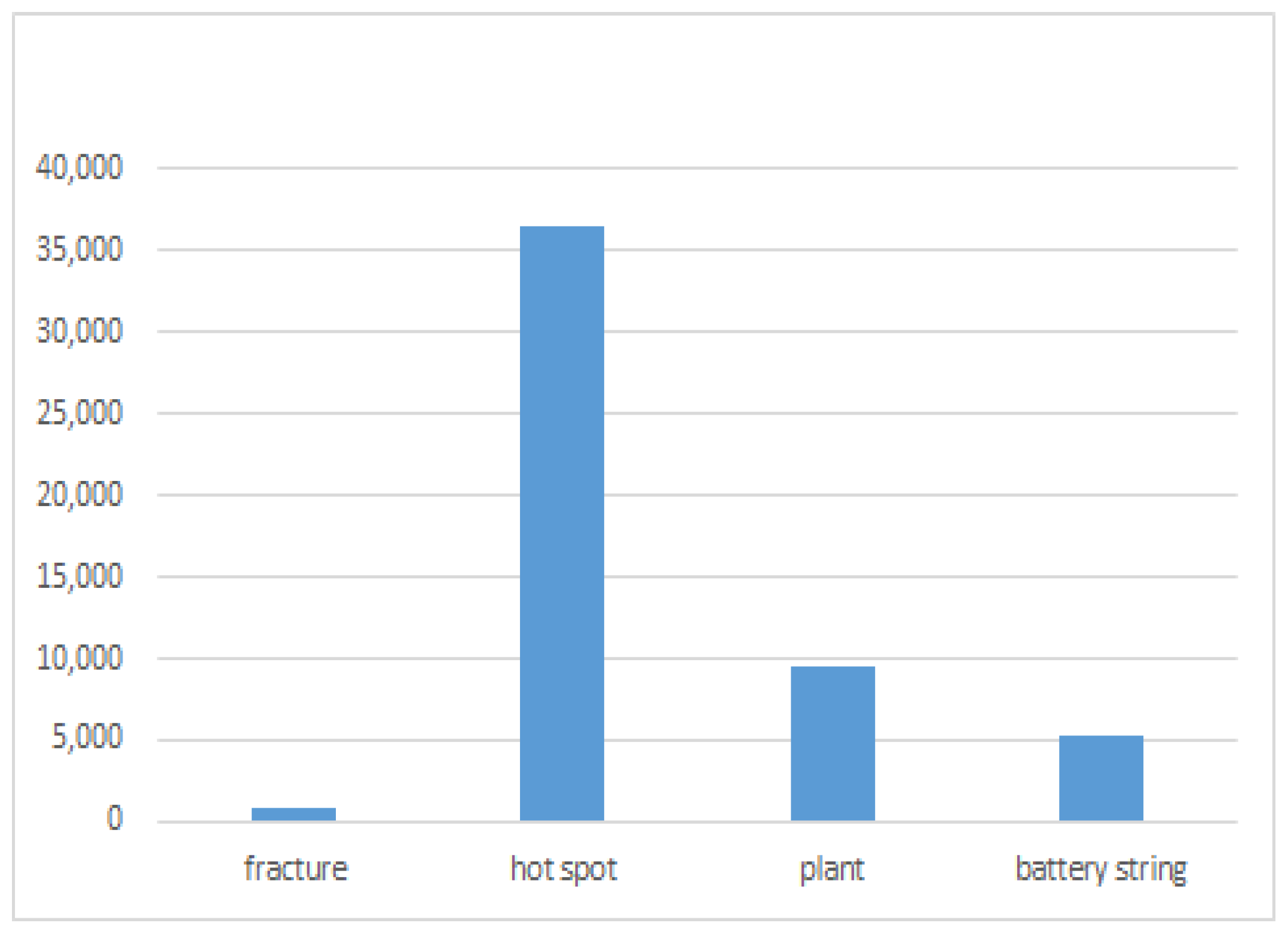

The AP discrepancy among the defect categories in the dataset can be explained by the following factors:

(1) Intrinsic detection difficulty of defects: Hot spot defects are mostly tiny, low-contrast regions (especially prone to confusion with normal heating areas in complex backgrounds), and some hot spots have irregular shapes and blurred boundaries, making it difficult for the model to distinguish them accurately. In contrast, fracture defects are mostly obvious linear features with large texture differences and high distinctiveness, which can be effectively learned by the model even with a small number of samples.

(2) Data distribution characteristics: Although hot spot samples are large in quantity, there are a large number of “similar redundant samples” (such as continuous hot spot areas of the same module), which limits the generalization ability of the model. For fracture samples, despite the small quantity, the annotation accuracy is high (the boundaries of linear defects are clear, and the annotation error is small), and there is no obvious category confusion, so the AP is higher.

(3) Annotation quality difference: Due to the small size and scattered distribution of hot spots, manual annotation is prone to slight deviations (such as incomplete coverage of small hot spots), which introduces subtle noise into the training data. Fracture defects are large in size and distinct from the background, so the annotation consistency is high, and the training data is more reliable.

This discrepancy also confirms the necessity of the proposed YOLO-FAD model, which is optimized for small, low-contrast defects like hot spots and effectively mitigates the impact of the above factors.

Deployment trade-offs: We tested inference latency on an NVIDIA Jetson Xavier NX (UAV-mounted edge device). YOLO-FAD takes 32 ms per 640 × 640 image, meeting the real-time requirement (≤50 ms) at a UAV flight speed of 5 m/s. Compared to YOLOv11n (28 ms), the 4 ms increase is small relative to the 10.1 percentage-point gain in small-defect (hot spot) detection accuracy, from 75.2% to 85.3%. For low-power devices (e.g., DJI Manifold 2), a lightweight variant (with one DyC3K2 module removed) achieves 25 ms latency with mAP@0.5 = 93.2%, a favorable trade-off.
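The latency figures above translate directly into frame rate and into the distance the UAV travels while one frame is processed. The short sketch below works through that arithmetic; the function name `frame_budget` is hypothetical, and the calculation assumes frames are processed strictly one after another with no other pipeline overhead.

```python
def frame_budget(latency_ms: float, speed_m_s: float):
    """Return (frames per second, metres travelled during one inference)."""
    fps = 1000.0 / latency_ms
    distance_m = speed_m_s * latency_ms / 1000.0
    return fps, distance_m


if __name__ == "__main__":
    UAV_SPEED = 5.0  # m/s, flight speed stated in the text
    for name, latency in (("YOLOv11n", 28.0), ("YOLO-FAD", 32.0),
                          ("lightweight variant", 25.0), ("real-time limit", 50.0)):
        fps, dist = frame_budget(latency, UAV_SPEED)
        print(f"{name}: {fps:.2f} FPS, {dist:.3f} m per frame")
```

At 32 ms per image, the UAV moves 0.16 m between consecutive frames at 5 m/s, compared with 0.25 m at the 50 ms limit.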

In summary, YOLO-FAD successfully addresses the challenge of detecting small objects (such as hot spots) by precisely improving detection accuracy for various defects, while balancing detection efficiency and performance, thereby providing a superior solution for photovoltaic module defect detection.

4.4.3. Analysis of the Impact of Different Datasets on Results

To verify the generalization ability of the proposed model, two publicly available defect detection datasets with different application scenarios were selected for cross-domain testing:

1. NEU-DET dataset [31]: Released by Northeastern University, China, it contains 1800 images of six types of hot-rolled steel strip surface defects: Crazing, Inclusion, Patches, Pitted-surface, Roll-in scale, and Scratches. Each image contains a single defect. The dataset is characterized by high contrast between defects and backgrounds and by diverse defect morphologies, and it is widely used for evaluating small-defect detection algorithms on metal surfaces.

2. PCB-DET dataset [32]: A classic dataset for printed circuit board (PCB) defect detection, containing 1468 images of six common PCB defects: Missing hole, Mouse bite, Open, Short, Spur, and Spurious. The defects in this dataset are small, densely distributed, and low in contrast with the background, which closely matches the characteristics of small defects in photovoltaic modules and makes the dataset suitable for verifying the model’s ability to detect subtle defects.

Table 2 presents the results on the PCB-DET dataset, which includes the following defect types: Missing hole, Mouse bite, Open (open circuit defect), Short (short circuit defect), Spur (burr defect), and Spurious (false defect).

YOLO-FAD significantly improves detection accuracy for all defect types (e.g., Missing hole from 97.1% to 99.3% and Mouse bite from 90.2% to 93.5%), particularly for small, fine defects such as Mouse bite that are prone to misclassification, demonstrating that the improved model identifies complex defects more precisely.

The mAP@0.5 rises from 87.6% to 93.17%, showing that YOLO-FAD’s overall detection performance clearly exceeds that of YOLOv11n. Although GFLOPs increase from 6.3 to 9 (slightly higher computational cost), the FPS remains at 161.3 (meeting industrial real-time detection requirements), and the parameter count of 4.18 M is not excessive, achieving a balance between accuracy and efficiency.

Table 3 presents the results on the NEU-DET dataset, which includes the following defect types: Crazing, Inclusion, Patches, Pitted-surface, Roll-in-scale (rolled-in scale), and Scratches.

YOLO-FAD significantly improves detection accuracy for all defect types (e.g., Crazing from 67.2% to 76.5% and Inclusion from 83.3% to 88.4%), with Scratches rising from 94.5% to 98.4%, indicating that the improved model identifies complex and subtle defects more precisely.

Overall performance: the mAP@0.5 rises from 82.16% to 88.55%, showing that YOLO-FAD’s overall detection performance clearly exceeds that of YOLOv11n. Although GFLOPs increase from 6.3 to 9 (slightly higher computational cost), the FPS remains at 161.3 (meeting industrial real-time detection requirements), and the parameter count (4.18 M) has not increased excessively, achieving a better balance between accuracy and efficiency.

On both public datasets, which differ in characteristics and application scenarios (PCB-DET for printed circuit boards and NEU-DET for hot-rolled steel strip), YOLO-FAD outperforms YOLOv11n across the defect detection accuracy metrics. This indicates that YOLO-FAD is not limited to a specific type of data or application domain, supporting its generalization capability and its applicability to diverse real-world scenarios.
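The cross-dataset gains can be expressed both in percentage points and relative to the baseline, which makes the two datasets easier to compare. The sketch below uses only the mAP@0.5 values reported in the text; the helper name `gain` is hypothetical.

```python
def gain(baseline: float, improved: float):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    points = improved - baseline
    relative = 100.0 * points / baseline
    return points, relative


# mAP@0.5 (percent) for YOLOv11n and YOLO-FAD, as reported in the text.
REPORTED_MAP50 = {
    "PCB-DET": (87.6, 93.17),
    "NEU-DET": (82.16, 88.55),
}

if __name__ == "__main__":
    for dataset, (base, ours) in REPORTED_MAP50.items():
        points, relative = gain(base, ours)
        print(f"{dataset}: +{points:.2f} points ({relative:.2f}% relative)")
```

On these numbers the absolute gains are 5.57 points on PCB-DET and 6.39 points on NEU-DET.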

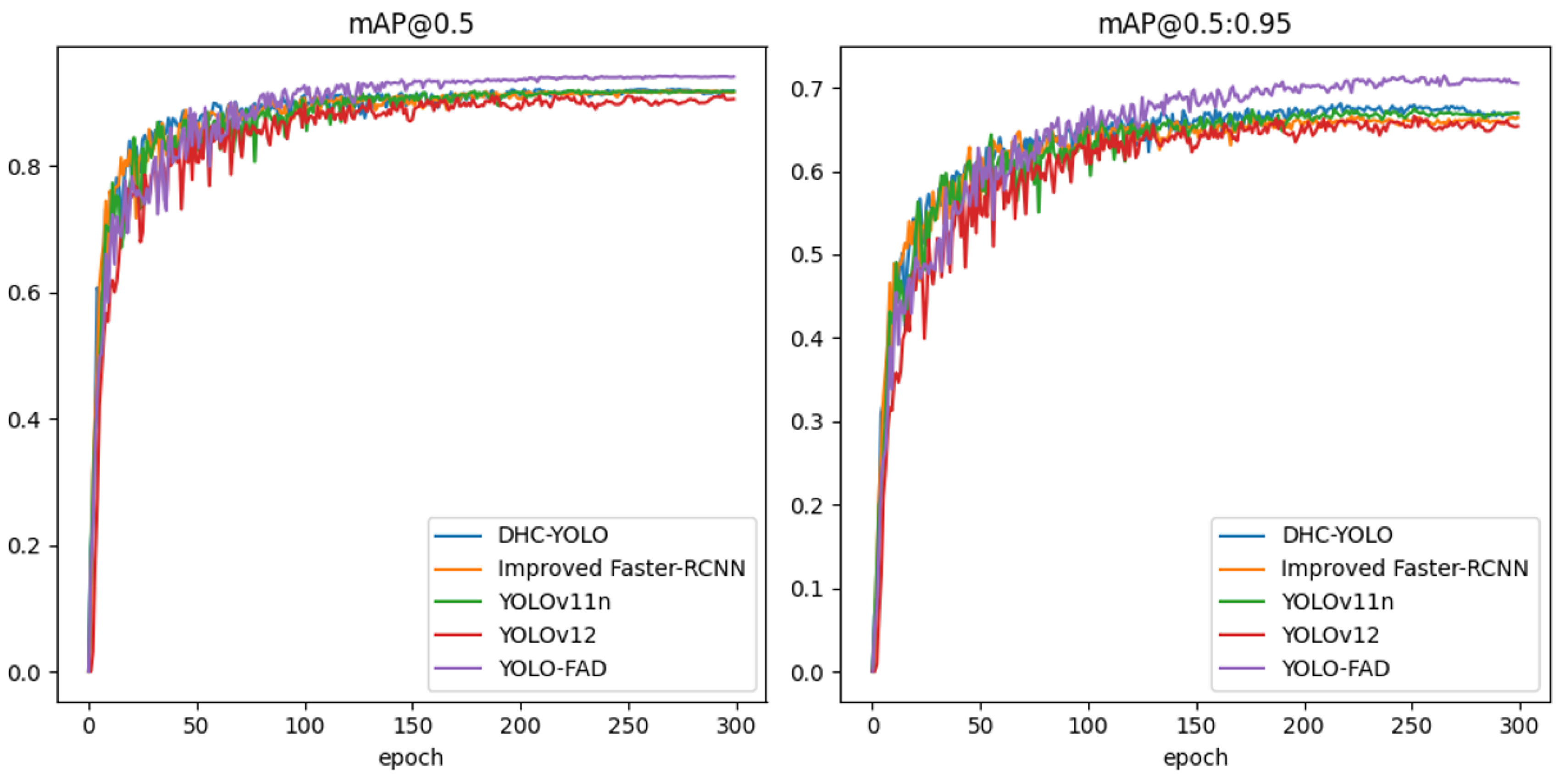

4.4.6. Comparison Analysis of Curve Charts

In the photovoltaic module defect detection experiment, we compared Improved Faster-RCNN, the SOTA-based improved DHC-YOLO, YOLOv11n, YOLOv12, and YOLO-FAD, our improved algorithm based on YOLOv11n. Each model was trained for 300 epochs, and the resulting curves are compared in Figure 10.

1. mAP@0.5

mAP@0.5 reflects the average precision when the IoU threshold is 0.5 (a relatively loose matching standard). In the early stages of training (the first 50 epochs), all algorithms quickly learn the features of photovoltaic modules, and the mAP value rises steeply. The YOLO series algorithms (DHC-YOLO, YOLOv11n, YOLOv12, and YOLO-FAD) benefit from their single-stage detection framework and converge faster than the two-stage Improved Faster-RCNN, showing early adaptability to the photovoltaic module detection task.

As training progresses (50–300 epochs), the curves gradually stabilize. YOLO-FAD ultimately reaches an mAP@0.5 above 0.9, the best among the compared algorithms. DHC-YOLO and YOLOv11n also achieve high accuracy, but YOLO-FAD, with its improvements over YOLOv11n, performs better on the photovoltaic module task, identifying targets more precisely while reducing false negatives and false positives.
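For reference, the IoU criterion behind mAP@0.5 can be sketched as follows. Boxes are assumed to be given as `(x1, y1, x2, y2)` corner coordinates, and the function names are illustrative; this is a generic IoU computation, not code from the evaluated frameworks.

```python
def iou(box_a, box_b) -> float:
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def is_match(prediction, ground_truth, threshold: float = 0.5) -> bool:
    """A prediction counts as a match when its IoU reaches the threshold."""
    return iou(prediction, ground_truth) >= threshold


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    print(is_match((2, 0, 12, 10), truth))  # IoU = 80 / 120
    print(is_match((5, 0, 15, 10), truth))  # IoU = 50 / 150
```

A box shifted by 2 units out of 10 still matches at the 0.5 threshold, while one shifted by 5 units does not.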

2. mAP@0.5:0.95: Balancing Precision Across Multiple Strictness Levels

mAP@0.5:0.95 measures the average precision over IoU thresholds from 0.5 to 0.95 in steps of 0.05, testing the algorithm’s ability to balance precise bounding box localization and classification confidence for photovoltaic modules. In the early stages of training, all algorithms improve rapidly, but because multiple IoU thresholds must be satisfied, the curves rise less steeply than for mAP@0.5.

In the later stages of training (150–300 epochs), the YOLO-FAD curve remains high and smooth, demonstrating strong generalization across strictness levels. In contrast, Improved Faster-RCNN is limited by its two-stage framework and adapts slightly less well to multiple IoU thresholds. DHC-YOLO, YOLOv11n, and YOLOv12 also perform well, but YOLO-FAD further optimizes bounding box regression and the classification head. It therefore maintains detection accuracy across strictness levels while keeping training stable, addresses the bounding box localization difficulties caused by viewing angles and occlusions, and accurately identifies locations and categories.
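The averaging over thresholds can be made concrete with a short sketch. The ten thresholds follow the definition above; the per-threshold AP values are hypothetical placeholders chosen only to show the calculation, and the helper names are illustrative.

```python
# IoU thresholds 0.50, 0.55, ..., 0.95 (built from integers to avoid float drift).
IOU_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]


def thresholds_passed(iou_value: float) -> int:
    """Number of the ten thresholds at which a box with this IoU still matches."""
    return sum(iou_value >= t for t in IOU_THRESHOLDS)


def map_50_95(ap_per_threshold) -> float:
    """Mean of the AP values measured at each of the ten IoU thresholds."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)


# Hypothetical AP values that fall as the threshold tightens (illustration only).
example_ap = [(95 - 5 * i) / 100 for i in range(10)]

if __name__ == "__main__":
    print(len(IOU_THRESHOLDS), thresholds_passed(0.67))
    print(f"mAP@0.5:0.95 = {map_50_95(example_ap):.3f}")
```

A prediction with IoU 0.67 counts as correct at only four of the ten thresholds, which is why this metric rises more slowly than mAP@0.5.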

3. Superiority of the YOLO-FAD Algorithm

Leading comprehensive performance: YOLO-FAD demonstrates outstanding detection capabilities in both the ‘mAP@0.5’ (loose matching) and ‘mAP@0.5:0.95’ metrics, adapting to different precision requirements in photovoltaic module detection scenarios. Whether for rapid screening (IoU = 0.5) or detailed inspection (high IoU threshold), it efficiently completes tasks.

Convergence and Stability: The algorithm converges quickly during the initial training phase, rapidly learning the features of photovoltaic modules. In the later stages, the performance curve remains stable, with minimal impact from increased epochs, ensuring consistent high-precision detection results even during prolonged training. This guarantees the reliability and consistency of photovoltaic module detection tasks.

Optimized for photovoltaic scenarios: Based on improvements to YOLOv11n, it precisely meets the requirements for photovoltaic module detection, effectively addressing the challenge of detecting small defects caused by background interference. In real-world scenarios such as photovoltaic power plant inspections and production line quality control, it can more accurately identify module status, supporting efficient operations and quality management in the photovoltaic industry.

YOLO-FAD demonstrates superior detection accuracy, generalization, and stability in photovoltaic module detection tasks, significantly outperforming other comparison algorithms. It provides a more efficient and precise technical solution for object detection in the photovoltaic field.

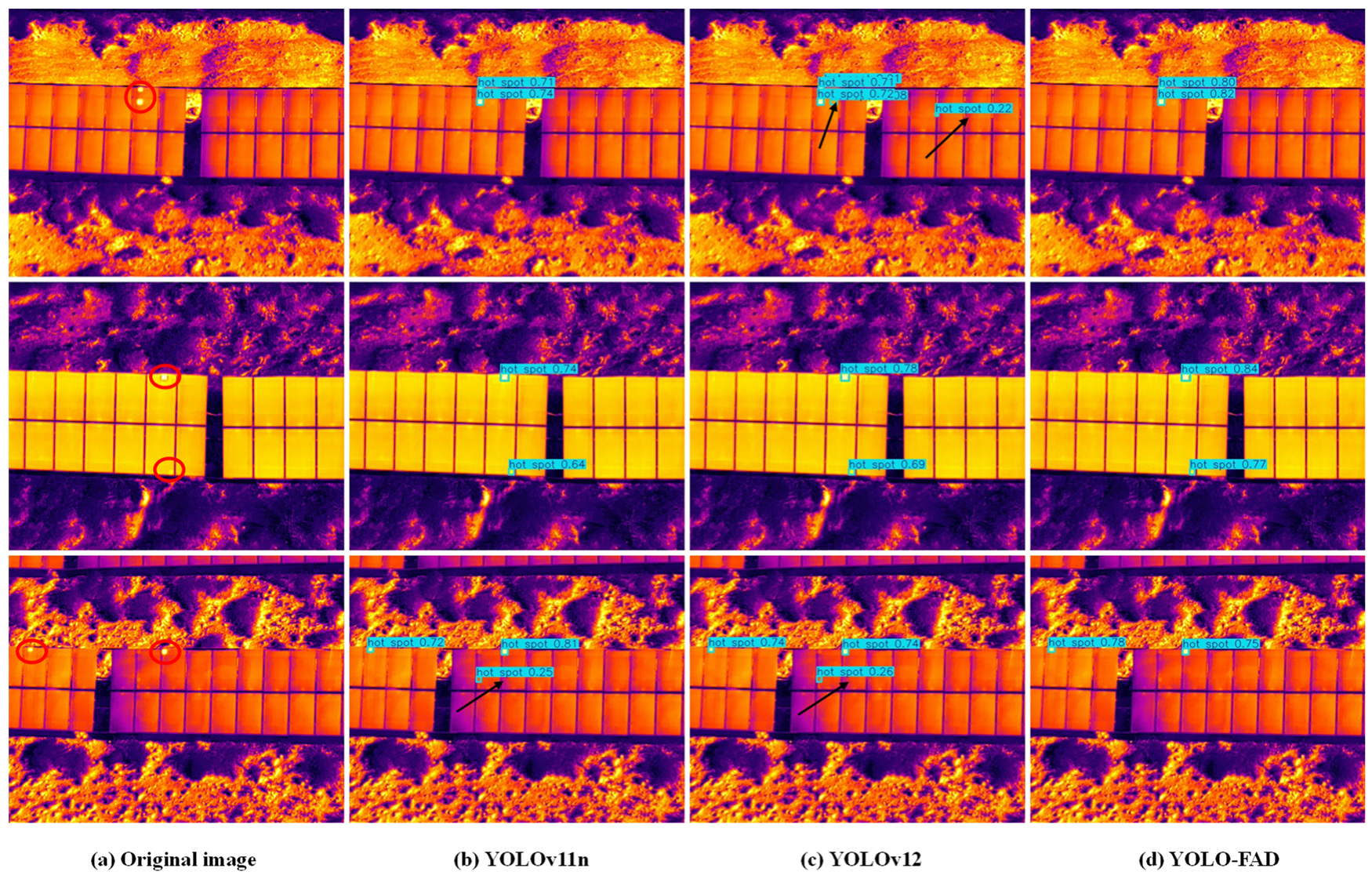

4.4.7. Visualization Analysis

To further analyze the performance of the YOLO-FAD model in detecting small defects in photovoltaic modules, a visual comparison experiment with YOLOv11n and YOLOv12 was conducted on the test set, as shown in Figure 11. The defects marked with red circles in the original image are the key detection targets, and the false positives are highlighted with black arrows. The specific details are as follows:

1. Upper region (first row)

Figure 11a (Original image): a noticeable hot spot (small defect) in the left module is highlighted with a red circle.

Figure 11b (YOLOv11n): detected two hot spots (confidence: 0.71/0.74); false positives: the black arrow points to the right module, where multiple non-defective areas were incorrectly detected (hot spot confidence: 0.72/0.92); missed detection: the defect marked by the red circle was not covered.

Figure 11c (YOLOv12): detected slightly more hot spots than YOLOv11n, but false positives still existed (e.g., upper right area); the area indicated by the black arrow has no defect but was marked, and the detection box overlap with the red circle is moderate.

Figure 11d (YOLO-FAD): correctly detected all defects within the red circle; no obvious false positives, and the detection results are concentrated in the actual defect areas; high detection confidence (hot spot: 0.80/0.89) and accurate positioning.

2. Central region (second row)

Figure 11a (Original image): two hot spot defects (marked with red circles) in the left-middle and lower-right areas.

Figure 11b (YOLOv11n): the positions of the detection boxes are slightly offset; one defect was missed; false positives exist: the central section was incorrectly identified as a defect.

Figure 11c (YOLOv12): the positions of the detection boxes are improved, but missed detections and minor false positives still exist; one hot spot has low confidence (0.64).

Figure 11d (YOLO-FAD): successfully detected both defects marked by red circles; the detection boxes are more accurate with almost complete overlap; no false positives; excellent detection confidence (0.84/0.77).

3. Lower region (third row)

Figure 11a (Original image): three defect areas marked with red circles, all of which are small in size.

Figure 11b (YOLOv11n): detected two targets but did not cover all red circles; severe false positives (area indicated by black arrows), where the hot background was misclassified as defects; low hot spot confidence (0.25).

Figure 11c (YOLOv12): detected all three targets, but false positives still exist; the bounding box offset is slightly large, and some boxes are not accurately aligned with the defect points.

Figure 11d (YOLO-FAD): detected all defects within the red circles; no false positives; the highest positioning accuracy of detection boxes, with hot spot confidence concentrated between 0.75 and 0.78; stable performance even in complex backgrounds.

YOLO-FAD shows significant advantages in detecting small defects in photovoltaic modules and maintains strong detection performance in complex backgrounds and in scenes with subtle defects. Compared to YOLOv11n and YOLOv12, it offers more accurate detection box positioning, markedly fewer false positives, and higher sensitivity to small hot spots. In the examples shown, it covers nearly all red-circled defects while avoiding the false positives indicated by black arrows, reflecting the benefits of its improved small-object perception, feature fusion, and confidence assessment.

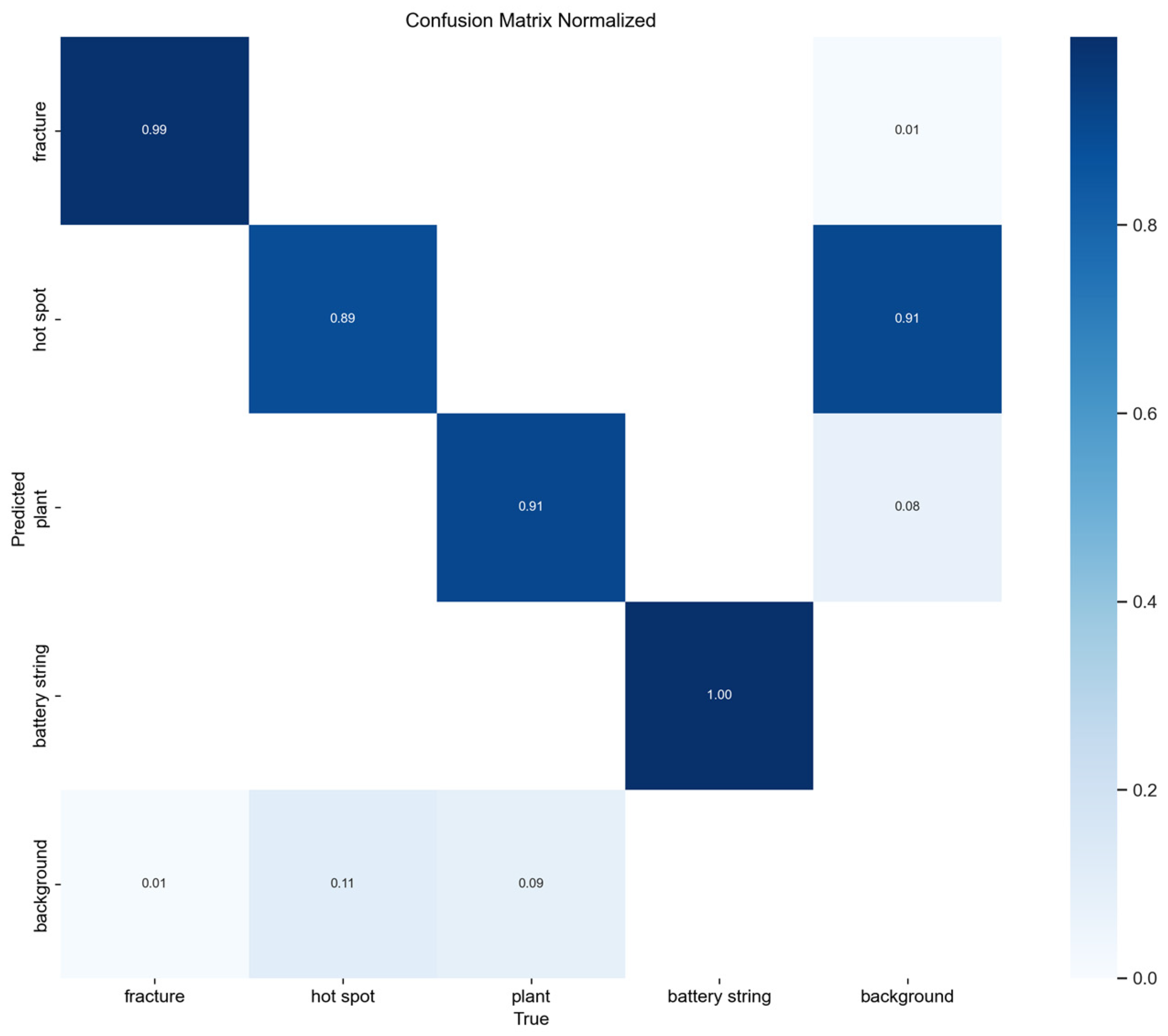

To quantitatively evaluate the classification performance and class confusion of YOLO-FAD, we supplement the analysis of the normalized confusion matrix in Figure 12. The horizontal axis represents the true classes (fracture, hot spot, plant, battery string, background), and the vertical axis represents the predicted classes. The values indicate the normalized classification accuracy between classes, from which the false positive rate (FPR) and false negative rate (FNR) can be derived:

Fracture: 99% of true fractures are predicted correctly, with only 1% misclassified as background, giving an FNR of 1% (1 − 0.99 = 0.01). On the false positive side, only 0.01 of the background is misclassified as fracture. This indicates that the model almost never misses fracture defects, with only a negligible share of the background misclassified as fractures.

Hot Spot: 89% of true hot spots are predicted correctly, while 11% are misclassified as background, giving an FNR of 11% (1 − 0.89 = 0.11). On the false positive side, 0.11 of the background is misclassified as hot spot. This reflects the inherent challenge of distinguishing small hot spots from the background in infrared photovoltaic images, where their grayscale features overlap to some extent.

Plant: 91% of true plant occlusions are predicted correctly, with 9% misclassified as background, giving an FNR of 9% (1 − 0.91 = 0.09). On the false positive side, 0.09 of the background is misclassified as plant. This suggests there is still room for improvement in distinguishing plant occlusions from the background.

Battery String: 100% of true battery string defects are predicted correctly, with no false negatives (FNR = 0) or false positives (FPR = 0), demonstrating the model’s strong recognition capability for this defect type.

Background: Only 0.01 is misclassified as fracture, 0.11 as hot spot, and 0.09 as plant, indicating a low overall false positive rate and good ability to distinguish non-defective background regions.

In summary, the confusion matrix quantitatively validates YOLO-FAD’s classification performance: it achieves near-perfect recognition for fractures and battery string defects. Misclassifications for hot spots and plants mainly stem from feature confusion with the background (which also points to future optimization directions), while the overall false positive rate remains controllable.
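The false negative rates quoted above follow directly from the diagonal of the normalized confusion matrix, since each true-class column sums to 1. The sketch below reproduces that step using only the diagonal values stated in the text; the dictionary layout and function name are assumptions made for illustration.

```python
# Share of each true class predicted correctly (diagonal of Figure 12, as stated in the text).
CORRECT_RATE = {
    "fracture": 0.99,
    "hot spot": 0.89,
    "plant": 0.91,
    "battery string": 1.00,
}


def false_negative_rates(correct_rate):
    """FNR per class = 1 - recall, rounded to two decimals for display."""
    return {name: round(1.0 - rate, 2) for name, rate in correct_rate.items()}


if __name__ == "__main__":
    for name, fnr in false_negative_rates(CORRECT_RATE).items():
        print(f"{name}: FNR = {fnr:.2f}")
```

The FPR cannot be derived in the same way from the diagonal alone, because it also depends on how the background column is normalized.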

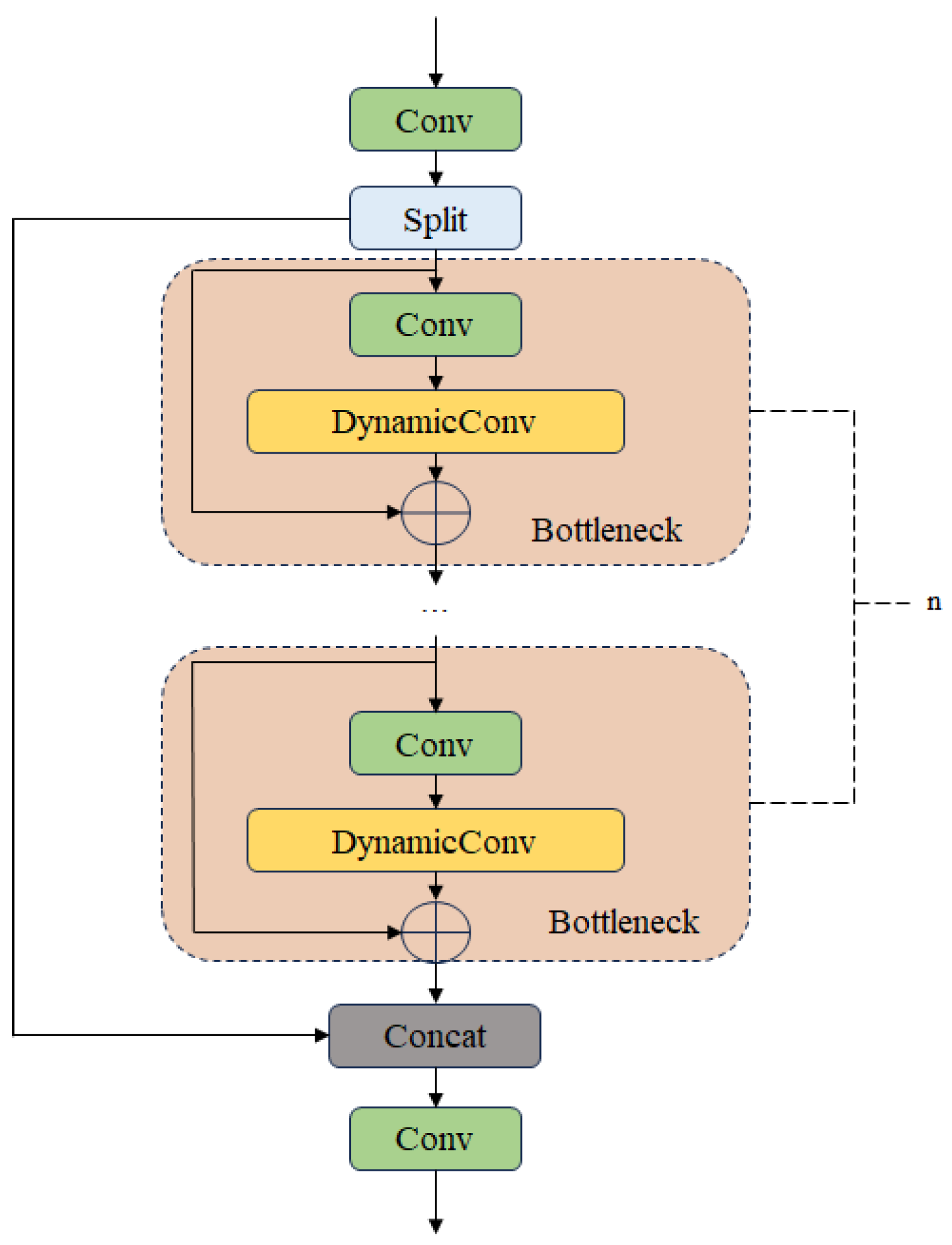

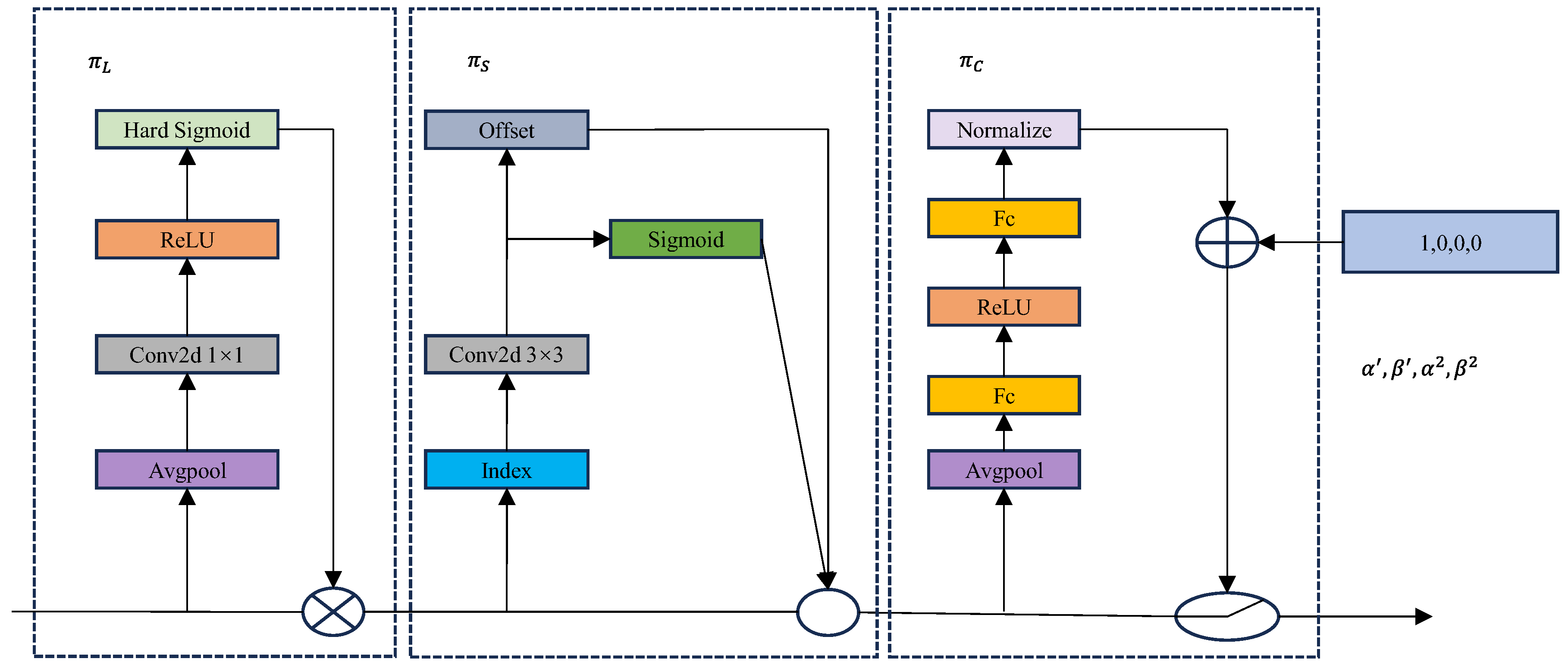

4.4.10. Ablation Experiment

The improved YOLO-FAD algorithm consists of four core modules: RFAConv, ASF, DyC3K2, and the DyHead detection head. The contribution of each module to the performance of YOLO-FAD was validated experimentally, with the results shown in Table 8.

As shown in Table 8, YOLOv11n achieves only 75.2% accuracy in detecting small targets and has limited capability in identifying small target defects (such as hot spots), resulting in high risks of missed detections and false positives.

When using a single module (adding only one module), the accuracy for detecting small target defects ranges from 78.4% to 79.5%, indicating that a single module can enhance feature extraction to some extent but has limited improvement in detecting small target defects.

The two-module combination achieves a detection accuracy of 81.3% to 82.6% for small target defects, indicating that module collaboration begins to take effect, further improving detection accuracy for small target defects, though it has not yet reached the optimal level.

The three-module combination achieves a detection accuracy of 84.2% for small targets, indicating that the complementary nature of multiple modules is enhanced, enabling more precise localization and identification of small target defects (hot spots).

The combination of four modules achieves a small-object detection accuracy of 85.3%, indicating that the model achieves deep collaboration among the four modules, comprehensively optimizing feature extraction, defect enhancement, and bounding box regression, resulting in the best performance for small-object defect detection.

The combination of the four YOLO-FAD modules achieves a significant breakthrough in small-object defect (hot spot) detection through collaborative optimization, validating the rationality and practicality of the model improvements with data, and providing an optimal solution for photovoltaic module defect detection.
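The structure of such an ablation study can be sketched by enumerating module subsets by size. The snippet below lists every possible combination of the four modules and attaches the hot spot accuracy ranges reported in the text for each group size. Whether every subset was actually evaluated in Table 8 is an assumption here; the text names only one three-module combination explicitly.

```python
from itertools import combinations

MODULES = ("RFAConv", "ASF", "DyC3K2", "DyHead")

# Hot spot detection accuracy (percent) by number of enabled modules, as reported in the text.
REPORTED_HOT_SPOT_RANGE = {
    0: (75.2, 75.2),  # YOLOv11n baseline
    1: (78.4, 79.5),
    2: (81.3, 82.6),
    3: (84.2, 84.2),
    4: (85.3, 85.3),  # full YOLO-FAD
}


def ablation_configs(modules=MODULES):
    """Return all module subsets, grouped by how many modules are enabled."""
    return {k: list(combinations(modules, k)) for k in range(len(modules) + 1)}


if __name__ == "__main__":
    for k, subsets in ablation_configs().items():
        low, high = REPORTED_HOT_SPOT_RANGE[k]
        print(f"{k} module(s): {len(subsets)} possible configs, "
              f"reported hot spot accuracy {low}-{high}%")
```

Grouping by subset size mirrors how the results are discussed above: baseline, single module, two modules, three modules, and the full model.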

To validate the reliability of the ablation results, each module combination was run five times under consistent experimental conditions (NVIDIA RTX 3080 Ti GPU, PyTorch 1.11.0 framework), and the standard deviation (Std) and 95% confidence interval (CI) were calculated. The results show that YOLO-FAD (full four-module configuration) achieved an mAP@0.5 of 94.6% (95% CI: 94.3–94.9%) and a hotspot detection accuracy of 85.3% (95% CI: 84.8–85.8%), with standard deviations below 0.25 for both metrics, indicating excellent experimental stability. An independent-samples t-test (α = 0.05) showed that the difference in mAP between YOLO-FAD and the baseline model (YOLOv11n) was statistically significant (p < 0.01), and that the difference from the three-module combination (RFAConv + DyC3K2 + ASF) was also significant (p < 0.05). This confirms that the improvement in detection performance achieved through the synergistic optimization of the four modules is not coincidental, further validating the rationality of the module design.
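A mean, standard deviation, and 95% confidence interval over five repeated runs can be computed as in the following sketch. The run values are hypothetical placeholders, not the measured results, and the use of a Student t interval with 4 degrees of freedom is an assumption, since the text does not state how the interval was constructed.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 95% critical value of Student's t with 4 degrees of freedom (n = 5 runs).
T_CRIT_95_DF4 = 2.776


def mean_std_ci95(runs):
    """Return (mean, sample std, (ci_low, ci_high)) for exactly five runs."""
    if len(runs) != 5:
        raise ValueError("the critical value used here is only valid for five runs")
    m = mean(runs)
    s = stdev(runs)
    half_width = T_CRIT_95_DF4 * s / sqrt(len(runs))
    return m, s, (m - half_width, m + half_width)


# Hypothetical mAP@0.5 values (percent) from five repeated runs, for illustration only.
example_runs = [94.4, 94.5, 94.6, 94.7, 94.8]

if __name__ == "__main__":
    m, s, (low, high) = mean_std_ci95(example_runs)
    print(f"mean = {m:.2f}, std = {s:.3f}, 95% CI = [{low:.2f}, {high:.2f}]")
```

With only five runs the t critical value is noticeably larger than the normal-approximation value of 1.96, so the interval is wider than a z-based one would be.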