Abstract

The integration of a P300-based brain–computer interface (BCI) into virtual reality (VR) environments is promising for the video game industry. However, it faces several limitations, mainly due to hardware constraints and to constraints imposed by the stimulation that the BCI requires. The main restriction is still the low transfer rate that can be achieved by current BCI technology, preventing movement while using VR. The goal of this paper is to review current limitations and to provide application creators with design recommendations to overcome them, thus significantly reducing development time and making the domain of BCI more accessible to developers. We review the design of video games from the perspective of BCI and VR with the objective of enhancing the user experience. An essential recommendation is to use the BCI only for non-complex and non-critical tasks in the game. Also, the BCI should be used to control actions that are naturally integrated into the virtual world. Finally, adventure and simulation games, especially if cooperative (multi-user), appear to be the best candidates for designing an effective VR game enriched by BCI technology.

1. Introduction

A video game can be defined as “a mental contest, played with a computer according to certain rules, for amusement, recreation, or winning a stake” [1]. It has also been defined, more briefly, as “story, art, and software” [1]. In some cases, for example in serious games, amusement is not the main goal. To date, however, amusement still plays a major role in the video game industry. Albeit by different means, virtual reality (VR) and brain–computer interfaces (BCIs) are both excellent candidates for enhancing entertainment and satisfaction in video games. Indeed, both enhance immersion, and it is commonly believed that immersion fosters amusement. The concept of immersion was defined in [2], where it was observed that everybody may enjoy a game with immersion, even if the game controls seem to play the main role in the user’s enjoyment. According to Zyda [1], this feeling of immersion is created by computer graphics, sound, haptics, affective computing and advanced user interfaces that increase the sense of presence. Virtual reality refers to a collection of devices and technologies enabling the end user to interact in 3D [3], e.g., spatialized sound and haptic gloves (for example Dexmo, Dexta Robotics, Shenzhen, China). Steuer [4] emphasizes the particular type of experience that is created by VR. Such an experience is named telepresence, defined as the experience of presence in an environment by means of a communication medium [4], which relates to the concept of presence [1]. A BCI can also enhance the feeling of presence in the virtual world, since it can replace or enhance mechanical inputs. According to Brown and Cairns [2], immersive games are played using three kinds of inputs: visual, auditory and mental; since a BCI may transform ‘mental’ signals into input commands, such an interface may play a unique role in the mentalization process involved in the feeling of immersion. 
However, considering the limitations of a BCI system (to be analysed later), it is still not clear to what extent current BCI technology may improve immersion. As pointed out by Brown and Cairns [2], “engagement, and therefore enjoyment through immersion, is impossible if there are usability and control problems”.

An element of amusement derives from the originality and futuristic aspect of BCI technology compared to traditional inputs such as a mouse, a joystick or a keyboard. Nonetheless, as often happens in the technology industry, BCI technology risks being abandoned by the public if the improvement it brings is not worth the effort needed to use it. Virtual reality has already benefited from the “wow-factor”, and VR systems are nowadays often employed at commercial events precisely to produce this effect (Feel Wimbledon by Jaguar, Coca Cola’s Santa’s Virtual Reality Sleigh Ride, McDonald’s Happy Meal VR Headset and Ski App, Michelle Obama’s VR Video, XC90 Test Drive by Volvo, etc.) (http://mbryonic.com/best-vr/). The “wow-factor” is defined in the Cambridge dictionary as “a quality or feature of something that makes people feel great excitement or admiration”, and was previously studied in the domains of marketing and education (e.g., [5,6]). The recent development of dedicated VR headsets, that is, head-mounted displays (HMDs, e.g., the Oculus, Facebook, Menlo Park, CA, USA; HTC Vive, HTC, Taoyuan, Taiwan; Google Cardboard, Google, Mountain View, CA, USA) has paved the way for the commercialization of combined BCI+VR technology. Indeed, HMDs provide a built-in structure that can support embedded EEG (electroencephalography) electrodes, which are needed for the BCI. The Neurable company (Cambridge, MA, USA) has recently announced a product combining an HTC Vive (Taoyuan, Taiwan) with an EEG cap. The company claims to be developing an everyday BCI and has successfully raised 6 million dollars for this purpose (https://www.forbes.com/sites/solrogers/2019/12/17/exclusive-neurable-raises-series-a-to-build-an-everyday-brain-computer-interface/). 
The HTC Vive (Taoyuan, Taiwan) and other HMDs such as the SamsungGear (Samsung, Seoul, Korea) or the Oculus Quest (Facebook, Menlo Park, CA, USA) use on-board electronics; herein we therefore refer to them as active devices. In contrast, passive HMDs consist of a simple mask with lenses into which a smartphone is inserted (Figure 1). Passive HMDs are particularly promising for the BCI+VR field, since they are very affordable and smartphones are nowadays ubiquitous. In addition, passive HMDs, as well as the SamsungGear and the Oculus Quest, are easily transported, since they do not require additional equipment such as personal computers or external sensors.

Figure 1. The SamsungGear HMD (a) can be used in passive mode (the smartphone is inserted without being plugged into the mask through the micro-USB port) or in active mode (with on-board electronics, mainly the gyroscope, supplied through the micro-USB port). The Neurable headset (b) combines EEG with the HTC Vive (c), an active VR headset linked to a powerful computer. The Oculus Quest (d) is an active device that offers functionality similar to that of the HTC Vive without the need for external sensors; thus, it is easily transported.

Prototypes of BCI-based video games already exist [7,8,9,10,11,12,13,14,15,16,17,18]. They are mainly based on three BCI paradigms: the steady-state visual evoked potential (SSVEP), P300 event-related potentials (ERP) and mental imagery (MI). The first two require sensory stimulation of the user, usually visual, and are defined as synchronous because the application decides when to activate the stimulation so that the user can give a command [19]. In this article, we focus on P300-based BCIs. Compared to MI-based BCIs, P300-based BCIs require shorter training, achieve a higher information transfer rate (amount of information sent per unit of time), and allow a higher number of possible commands [20,21]. Compared to SSVEP-based BCIs, they feature a lower information transfer rate. However, the flickering used for eliciting SSVEPs is annoying and tiring, besides presenting an increased risk of eliciting epileptic seizures [22]. Modern P300-based BCI was introduced in 1988 by Farwell and Donchin as a means of communication for patients suffering from the ‘locked-in’ syndrome [23]. They were probably influenced by prior work of Vidal, who proposed in the 1970s a conceptual framework for online ERP-based BCI [24], coining along the way the expression brain–computer interface [25,26]. P300-based BCIs are based on the so-called oddball paradigm, an experimental design consisting of the successive presentation of discrete stimuli, most of which are neutral (non-TARGET) while a few (rare) are TARGET stimuli [27]. In the case of P300-based BCI, items are flashed on the screen, typically in groups. A sequence of flashes covering all available items is named a repetition. The goal of the BCI is to analyse the ERPs in one or more repetitions to identify which item has produced a P300, a positive ERP that appears around 300–600 ms after the item the user wants to select (TARGET) has flashed. 
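To make the selection step concrete, the sketch below shows one simple decision rule: for each item, average the classifier scores of every flash that contained it over all repetitions, and select the item with the highest mean. It is an illustration under stated assumptions, not the method of the cited works. The function name `select_target`, the 2 × 2 grid flashed by rows and columns, and the per-flash scores are hypothetical; in a real system the scores would come from a trained classifier applied to the EEG epoch that follows each flash.

```python
from collections import defaultdict


def select_target(flashes):
    """Return the item whose flashes produced the highest mean P300 score.

    `flashes` is a list of (group, score) pairs, one per flash: `group` is the
    set of items lit during that flash and `score` is a hypothetical
    classifier output (higher means "this epoch looks like a P300").
    """
    total = defaultdict(float)
    count = defaultdict(int)
    for group, score in flashes:
        for item in group:
            total[item] += score
            count[item] += 1
    # Average rather than sum, so items flashed more often are not favoured.
    return max(total, key=lambda item: total[item] / count[item])


# Hypothetical 2x2 grid flashed by rows and columns: one repetition = 4 flashes.
rows = [{"A", "B"}, {"C", "D"}]
cols = [{"A", "C"}, {"B", "D"}]
# Simulated scores for two repetitions while the user attends to item "C".
repetition_1 = [(rows[0], -0.2), (rows[1], 0.9), (cols[0], 0.7), (cols[1], -0.1)]
repetition_2 = [(rows[0], 0.1), (rows[1], 0.6), (cols[0], 0.8), (cols[1], 0.0)]
print(select_target(repetition_1 + repetition_2))  # prints C
```

Averaging over several repetitions is what lets the rule tolerate a single noisy flash, which is why accuracy grows with the number of repetitions at the cost of a longer selection time.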
The typical accuracy of P300-based BCIs has risen over the past years from around 75% after 15 repetitions of flashes [28] to around 90% after three repetitions using modern machine learning algorithms based on Riemannian geometry [29,30,31,32]. In practice, this means that such a BCI needs at least one second to issue a command, and more time may be needed to issue reliable commands.
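The information transfer rate mentioned above is commonly computed with the formula of Wolpaw et al.: one selection among N equally likely items, made with accuracy P, conveys log2(N) + P log2(P) + (1 − P) log2((1 − P)/(N − 1)) bits, assuming errors are spread uniformly over the N − 1 wrong items. The sketch below applies it; the 36-item grid and the 1 s and 3 s selection times are illustrative assumptions, not values reported in [28,29,30,31,32].

```python
import math


def bits_per_selection(n_items, accuracy):
    """Wolpaw information per selection, in bits.

    Assumes equally likely items and errors spread uniformly over the
    n_items - 1 wrong items. Accuracies at or below chance give 0 bits.
    """
    if n_items < 2:
        raise ValueError("need at least two items")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if accuracy <= 1.0 / n_items:
        return 0.0
    bits = math.log2(n_items) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:  # the (1 - P) term vanishes when P == 1
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_items - 1))
    return bits


def itr_bits_per_minute(n_items, accuracy, seconds_per_selection):
    """Information transfer rate given the time needed for one selection."""
    return bits_per_selection(n_items, accuracy) * 60.0 / seconds_per_selection


# Illustrative only: a hypothetical 36-item grid at 90% accuracy, with one
# selection every 1 s or every 3 s (e.g., fewer or more repetitions).
for seconds in (1.0, 3.0):
    print(seconds, round(itr_bits_per_minute(36, 0.9, seconds), 1))
```

The time per selection divides the rate directly, so the extra repetitions needed for reliable commands reduce the information transfer rate even when they increase accuracy.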

We anticipate that the integration of BCI in VR games, made possible by the development of integrated HMD-EEG devices, will foster the acceptance of this technology by both the video game industry and gamers, thus pushing the technology into the real world. The development of a concrete application for the general public faces several limitations, though. In the domain of virtual reality, motion sickness appears to be one of the most severe limitations. However, this limitation seems relatively weak in comparison to those raised by the BCI system. Above all, BCIs are often unsightly, and the electrodes are not easy to use. Also, users in virtual reality may move a lot, and this jeopardizes the quality of the EEG signal.

In this work, we analyse these limitations and make recommendations to circumvent them. This work has been inspired by previous contributions along these lines concerning BCI technology [12,33,34] and its use in virtual reality [35]. Here, we integrate these previous works with similar guidelines found in the literature on virtual reality [36,37,38,39] and spatial mobility [14,40,41,42], focusing on P300 technology for BCI and on gaming applications for VR. In the following section, we present the limitations concerning use by the general public, grouped according to whether they are introduced by (1) the HMD, (2) the BCI system (in general or in VR), or (3) the P300. For clarity, we present the recommendations directly after each corresponding limitation. Numerous limitations and recommendations already discussed in the literature are considered, and others are added here. Since the level of evidence differs among them, the limitations and recommendations we report are labelled according to the following taxonomy:

- Level of evidence (LoE) A: The recommendation or limitation is a fact, or there is strong evidence supporting it; for instance, it has been reported in a review paper or in several studies.

- LoE B: The evidence supporting the limitation or recommendation is weak for one or more of the following reasons:
  - It appears relevant, but it is still not exploited in BCI or VR.
  - The limitation or recommendation was stated in some papers but challenged in others.
  - The limitation or recommendation has been sparsely reported.
  - The limitation or recommendation has appeared in old publications and is possibly outdated given technological improvements.

- LoE C: The limitation or recommendation is introduced here by the authors. Thus, it requires independent support and validation.

The authors acknowledge that not all listed limitations and recommendations are equally relevant when designing a BCI+VR system. For example, the limitation of the field of view (discussed later) is very specific to the VR domain. It is not a major concern for a BCI+VR system in comparison to other limitations, such as the need for an ergonomic EEG cap. In parallel to the LoE just defined, we therefore also label the limitations and recommendations according to their level of interest (LoI), that is, their pertinence to designing a BCI+VR system:

- LoI 1: The recommendation or limitation deeply impacts the design of a BCI coupled with VR.

- LoI 2: The recommendation or limitation is relevant to the field, but might be ignored for a prototype of a BCI+VR game.

- LoI 3: The recommendation or limitation is secondary.

2. Type of Game Recommendations

Marshall et al. [33] studied the possible applications of BCI technology depending on the type of game. In the following we review the recommendations given by these authors.

- -

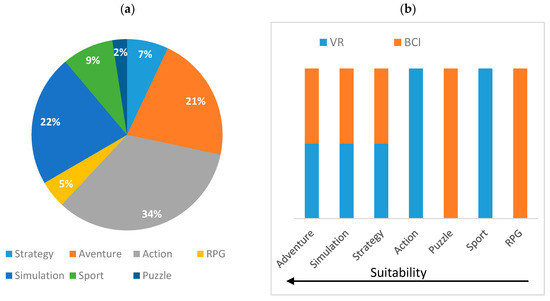

- Real Time Strategy (RTS) games are too complex and need continuous control; thus, P300 does not suit them (LoE A; LoI 1). In RTS games, P300 can still be used if restricted to non-critical aspects of control. In general, however, strategy games are not particularly well suited to VR, as only 7% of VR games are of this kind (Figure 2a). For these games, we recommend a third-person point of view. For example, the player’s avatar controls a map representing the game field, and this map is an object in a virtual room. Although it is not a strategy game, MOSS (Polyarc, Seattle, WA, USA) illustrates the use of a third-person point of view in VR.

Figure 2. Benchmark of the types of game in VR and BCI. (a) Distribution of VR games by type according to the Steam Platform (Valve Corporation, Bellevue, WA, USA); see https://store.steampowered.com/search/?tags=597&category1=998&vrsupport=402. (b) Classification of the different types of game with regard to the recommendations given in this section, and their representation in the VR market. The colour code indicates whether the type of game is suitable for VR or for BCI. Suitability for VR or BCI increases from right to left.

- Role-playing games (RPGs) are also problematic for P300-based BCI because of their complexity (LoE A; LoI 1). The general recommendations are the same as for RTS games: the RPG should be turn-based, and the BCI should be restricted to minor aspects of the game. An example is “Alpha wow” [9], where the user’s mental state is used to change the avatar’s behaviour in the virtual world.

- Action games are the most popular type of game employing BCI technology (LoE A; LoI 1). This is surprising, since action games often involve fast-paced gameplay. For this reason, the use of BCI is not recommended in action games without adaptation.

- Sport games have the same requirements as action games [33]: they often involve fast-paced gameplay and continuous control. Therefore, we do not recommend the use of BCI for sport games without adaptation (LoE A; LoI 1), such as the one given as an example in Table 1. In VR, sport games represent a moderate share of the games (9%, Figure 2a).

- Puzzle games are very well suited to P300-based applications (LoE A; LoI 1). They should be turn-based, allowing users to make simple choices at their own pace. Using existing popular puzzles helps players, because they are already familiar with the game’s rules. However, the problem is the same as for strategy games and board games in general: there is little benefit in adopting a 3D perspective for a board game (puzzle games represent only 2% of VR games, Figure 2a). We suggest using the same workaround as for strategy games, that is, a third-person point of view (LoE C; LoI 1). In such a scenario, puzzle games may be presented as a board game inside a virtual room. Another idea is to design a puzzle in 3D, allowing the player to move the pieces in all directions (and, why not, to move inside the puzzle itself).

- Adventure games are well suited to P300-based BCI if the player is given a limited set of options within a given time (LoE A; LoI 1).

- Simulation games (for example, for training or education purposes) are also well suited to P300-based BCI, especially management simulations. Simulation games should feature slow gameplay, allowing the player to adjust and learn how to control the BCI. In addition, simulation games are not based on a “score”; therefore, the player can relax and achieve better performance with BCI control (LoE A; LoI 1).

Furthermore, we should consider the means of interaction with the virtual environment (VE), that is, tracking of the user’s movements in a room or use of traditional inputs (joystick, mouse, keyboard) while sitting. Indeed, room-scale VR games seem less suitable for BCI, as rotating the head or walking through the game area could perturb the EEG signal due to muscular artefacts or swinging of the electrode cables (as discussed in Section 3.2). However, it could be challenging to design a VR game using traditional inputs: according to the Steam Platform (Valve Corporation, Bellevue, WA, USA), a ubiquitous game distribution platform, only 13% of VR games are played seated with a keyboard or a mouse.

In conclusion, the P300 paradigm is well suited to turn-based strategy games (board games such as chess, or PC games such as Civilization or Heroes of Might and Magic). Adventure and simulation games appear to be the types of game best adapted to a BCI+VR game (LoE A; LoI 1; Figure 2b), provided that they implement slow gameplay and restrict the player’s movements. Unsurprisingly, the over-representation of adventure and simulation games in VR has not changed since our first investigation in 2017 [43], indicating a stable interest of the general public in these types of game. Interestingly, independent studios are much better represented in the VR industry than in the PC and console industry. In our opinion, this can be explained by (1) the early integration of VR into game editors such as Unity 3D (Unity, San Francisco, CA, USA) or Unreal Engine (Epic Games, Cary, NC, USA) and (2) a greater willingness to experiment with new technologies (https://www.vrfocus.com/2020/05/why-now-is-the-time-for-aaa-studios-to-consider-vr/; https://labusinessjournal.com/news/2015/jun/17/independent-virtual-reality-studios-benefit-early-/).

3. Limitations and Recommendations

3.1. Limitations of the HMD Device

3.1.1. IMU Accuracy

The IMU (inertial measurement unit) is not accurate enough (LoE A; LoI 3). The position and rotation of the smartphone in space are determined by the IMU, whose accuracy varies widely across smartphone models (see [44] for a benchmark of different smartphones, and [45] for an in-depth review of this problem). Therefore, a VR device may detect movement when the user is not moving. This creates the perception that the virtual world moves slightly around the user, forcing the user to rotate his/her head to compensate and follow the scene. In turn, these movements generate artefacts in the EEG signal.
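A minimal numerical sketch shows why a small IMU error becomes visible. If the gyroscope reports a constant bias while the head is still, integrating the angular rate to obtain orientation makes the estimated yaw drift linearly with time, so the virtual world slowly rotates around a motionless user. The bias value, sampling rate and function name `integrate_yaw` are hypothetical illustrations, not measurements from [44,45].

```python
def integrate_yaw(rates_deg_s, dt):
    """Integrate angular-rate samples (deg/s) into a yaw angle (deg) using the
    rectangle rule, as a naive orientation tracker would."""
    yaw = 0.0
    for rate in rates_deg_s:
        yaw += rate * dt
    return yaw


sample_rate_hz = 100   # hypothetical IMU sampling rate
bias_deg_s = 0.05      # hypothetical constant gyroscope bias
duration_s = 60        # the user keeps the head still for one minute
true_rate_deg_s = 0.0  # the head is not actually rotating

samples = [true_rate_deg_s + bias_deg_s] * (sample_rate_hz * duration_s)
drift = integrate_yaw(samples, 1.0 / sample_rate_hz)
print(f"apparent rotation after {duration_s} s: {drift:.1f} deg")
```

Under these assumptions the error is simply bias × time, so it keeps growing for as long as no absolute reference corrects it.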

Recommendations

This problem seems restricted to passive HMDs (in which the smartphone is the only device used for virtualization). It is solved in the active SamsungGear device (Samsung, Seoul, Korea), which incorporates a good-quality IMU. It may also be solved by tracking the user’s head with an external tracker (LoE C; LoI 3). A new generation of VR devices based on sensor fusion (the combination of sensory data, or data derived from disparate sources) solves this problem, and additionally provides positional tracking, by correcting the IMU bias using video camera input (LoE A; LoI 3: movement is not suitable for BCI). This technology is known as inside-out positional tracking, and it is implemented in the following commercial products:

- The Oculus Go and Quest (Facebook, Menlo Park, CA, USA).

- The Daydream SDK (Google, Mountain View, CA, USA), which is associated with Lenovo (Lenovo, Hong Kong, China) and Vive (HTC, Taipei, Taiwan; Valve, Bellevue, WA, USA).

- The Structure Sensor (Occipital, Boulder, CO, USA).

- The ZED camera (Stereolabs, San Francisco, CA, USA).

- The Windows Mixed Reality platform (Microsoft, Redmond, WA, USA); among others, Lenovo (Hong Kong, China), HP (Palo Alto, CA, USA), Acer (Taipei, Taiwan) and Samsung (Seoul, South Korea) have already built headsets for this platform.
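The bias correction performed by sensor fusion can be illustrated with a complementary filter, one of the simplest fusion schemes: the gyroscope rate is integrated for short-term responsiveness, while the estimate is continuously pulled towards an absolute reference, here a hypothetical camera-based yaw measurement. This generic technique is chosen only for illustration; the commercial products listed above rely on their own fusion pipelines, which are not described here. All names and values are assumptions.

```python
def complementary_filter(gyro_rates, reference_yaws, dt, alpha=0.98):
    """Fuse gyroscope rates (deg/s) with absolute yaw readings (deg).

    alpha close to 1 trusts the integrated gyroscope in the short term, while
    the (1 - alpha) share pulls the estimate towards the absolute reference,
    which bounds the drift caused by a constant gyroscope bias.
    Angle wrap-around at +/-180 deg is ignored for simplicity.
    """
    yaw = reference_yaws[0]
    for rate, reference in zip(gyro_rates, reference_yaws):
        yaw = alpha * (yaw + rate * dt) + (1.0 - alpha) * reference
    return yaw


dt = 0.01    # hypothetical 100 Hz update rate
bias = 0.05  # hypothetical constant gyroscope bias (deg/s)
n = 6000     # one minute with the head held still at yaw = 0 deg

gyro = [bias] * n          # the gyroscope reports only its bias
camera = [0.0] * n         # hypothetical camera-based yaw, correct on average
fused = complementary_filter(gyro, camera, dt)
gyro_only = bias * dt * n  # what pure integration would report (about 3 deg)
print(f"gyro only: {gyro_only:.2f} deg, fused: {fused:.4f} deg")
```

With a constant bias, the fused estimate settles at alpha × bias × dt / (1 − alpha) instead of growing without bound; a larger `alpha` gives smoother output but a larger residual offset.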

3.1.2. Locomotion in Virtual Reality

Tracking the user’s position is problematic (LoE A; LoI 3). Tracking the user’s position in an indoor environment such as a room is a general issue for VR devices. Low-cost or portable devices use a gyroscope to track the user’s head rotation, but they cannot determine the user’s position. More expensive devices, such as the Vive or the Oculus, can track the user’s position within a specific area. When such devices do not implement inside-out positional tracking, this area is limited by the size of the room where the game takes place, as well as by the position of the motion capture sensors (around 25 m² for the Vive and 5 m² for the Oculus). Game designers and application creators often want to allow movement in a virtual world that is substantially bigger than the room where the user is. This limitation applies specifically to VR systems. Indeed, the use of locomotion with BCI is not recommended (see limitations of the BCI system in general).

Recommendations

We propose here six solutions for locomotion in Virtual Reality. The reader is referred to [46] for a systematic review of locomotion in virtual reality.

(1) Teleportation (LoI 1: very relevant for BCI, since this technique does not require any movement), used for example in the following games: Portal Series in VR (Valve, Bellevue, WA, USA), Robo Recall (Epic Games, Cary, NC, USA) or Raw Data (Survios, Los Angeles, CA, USA). The user focuses on the area of interest and then clicks to teleport to the selected place. This solution is simple to implement, but its effect on motion sickness is not clear. On the one hand, an abrupt, unnatural scene cut could induce motion sickness (LoE C), although fading the screen instead of cutting can mitigate this effect (LoE C). On the other hand, teleportation may also reduce motion sickness, since it does not involve visible motion (a hypothesis presented in [47], with mixed results) (LoE B: according to the systematic review of Boletsis [46], teleportation is a mainstream technique, but empirical studies about it are lacking).

(2) Walk-in-place [36,48], e.g., VR-Step (VRMersive, Reno, NV, USA) and RIPMotion (RIPMotion, Raleigh, NC, USA): the user first focuses on the area where s/he wants to move, then walks in place to execute the movement and reach the selected destination. This solution reduces motion sickness, as the user experiences a sensation of movement while the virtual world moves. In practice, it is more complicated to implement, because accelerometer data are needed to detect the vertical movements of the user (walking). The accelerometers ordinarily employed are not always accurate enough to obtain information about step length, and the movement may appear unnatural, again possibly resulting in motion sickness and a lack of immersion. Moreover, walk-in-place input is restricted to situations where the user must walk, since the in-place gesture cannot easily be mimicked in applications where the player swims or flies, for example (LoE A: stated in many games and studies; LoI 3: this technique is secondary, since motion is not recommended with BCI and it is limited to a few situations).

(3) Gesture recognition, implemented by Raptor-lab (Lyon, France): for example, it reproduces the way skiers push their poles to move forward. The company claims that their solution “offers human locomotion in VR with full freedom of movement, ‘as real life’ agility and liberty of action, which means, walking, running, climbing, jumping, crawling, and even swimming. And all that while avoiding motion sickness”. The gesture system was used in a game called “Art of Fight” for the HTC Vive, which has reached 10,000 players (http://steamspy.com/app/531270), and was reproduced in the well-known game “The Tower” (Codivo GmbH, Munich, Germany), where the user moves from tower to tower by grappling (LoE B: used in games, but not evaluated in scientific studies; LoI 3: it requires motion).

(4) Motion platforms (similar to a fitness treadmill) allow the user to navigate through physical movement while staying in a restricted area. Commercial motion platforms already exist, such as the Virtuix Omni (Virtuix, Austin, TX, USA), WalkOVR (WalkOVR, Istanbul, Turkey) or VR Motion Simulators (Virtec Attractions, Balerna, Switzerland); see Figure 3. So far, the use of this technology has been limited because the platforms cannot be easily transported and because different physical movements require different platforms. As a result, movement is sometimes unnatural; in particular, the Virtuix Omni was criticized for reproducing an unnatural walk. Thus, these VR motion platforms are likely to remain restricted to arcade rooms (LoE A; LoI 3).

Figure 3. Examples of motion platforms: (a) Virtuix omni and (b) VR Motion Simulator.

(5) Sensor fusion (LoI 3: sensor fusion is used when the player is expected to physically move). Accelerometers, such as the ones found in smartphones, are not accurate enough to determine the position of the user by double integration of their output. By adding information from gyroscopes, magnetometers and cameras (image recognition), the new generation of devices can accurately determine relative position without any external sensors. This allows the game area to be expanded and reduces the bulkiness of the system. In fact, the Oculus Quest mentioned above can track the user’s position, indoors or outdoors, in an area of 58 square metres (https://developer.oculus.com/blog/down-the-rabbit-hole-w-oculus-quest-the-hardware-software/), which is significantly larger than the area available to devices using external sensors, as described in the limitation above. Such use of elements from the real world in the virtual world is known as augmented virtuality (Figure 4) and is part of the mixed reality domain. The reader may refer to [37,49] for a classification of virtualization technologies and a description of mixed reality (LoE A).

Figure 4. Representation of Milgram and Kishino [49] virtuality continuum of real and virtual environments (Figure rearranged from [49]).

(6) Redirected walking is a locomotion technique that imperceptibly rotates the user’s view in one direction while s/he walks along a straight path in the VE, with the purpose of allowing real walking in VEs that are larger than the room. It relies on the fact that vision subtly dominates proprioceptive and vestibular inputs when these senses slightly conflict. Other methods and paradigms have been developed, respectively modifying walking speed or body rotation (methods), and redirecting the user towards targets or towards the centre of the room (paradigms). However, independently of the chosen paradigm and method, the use of redirected walking is limited by the need for resetting phases and by the need to determine manipulation thresholds above which cybersickness occurs. In addition, there are open controversies concerning the impact of this technique on spatial performance, such as interference with spatial learning or modification of distance perception, as well as the impact of overt manipulation on virtual sickness. Note that we do not discuss redirected space in this paragraph, a similar technique that consists of manipulating the VE, but we refer the reader to [50,51] for an extensive literature review on redirected walking (LoE B: although redirected walking looks promising for decreasing cybersickness and virtually extending the game area, there is a lack of applied research in the VR games industry; LoI 3: it requires motion, and thus interferes with P300 detection).
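Since teleportation is the locomotion option most compatible with BCI control, the sketch below illustrates the fade-based variant mentioned under (1): the screen fades to black, the viewpoint jumps only while the screen is fully dark, and the screen then fades back in, so that neither an abrupt cut nor visible motion is shown. The function name, the 0.2 s fade durations and the 72 Hz polling loop are hypothetical; in a game engine such as Unity or Unreal, the returned opacity would drive a full-screen overlay from the engine's own update loop.

```python
def teleport_state(t, start, target, fade_out=0.2, fade_in=0.2):
    """Return (position, overlay_opacity) t seconds after a teleport request.

    Opacity 1.0 means a fully black screen. The position switches from
    `start` to `target` only once the screen is fully black (t == fade_out),
    so the jump itself is never visible.
    """
    if t < fade_out:
        return start, t / fade_out                       # fading to black
    if t < fade_out + fade_in:
        return target, 1.0 - (t - fade_out) / fade_in    # fading back in
    return target, 0.0                                   # transition finished


# Usage: poll once per frame (here every 5th frame at a hypothetical 72 Hz).
frame = 1.0 / 72
for i in range(0, 30, 5):
    print(teleport_state(i * frame, (0, 0, 0), (4, 0, 2)))
```

With a P300 interface, the destination would typically be chosen among a few highlighted locations, and this transition would run once the selection is made.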

3.1.3. Motion Sickness

Motion sickness (LoE A; LoI 1: the comfort of the user is a main concern). The HMD may induce motion sickness [52,53]. In general, motion sickness arises when there is a mismatch between the visual and the vestibular systems, for example when travelling on a ship or in a car without seeing the horizon or the road [54]. Sensory conflict is commonly used to explain this sensation of discomfort in a VR context [39,55], but this explanation remains unproven [39,56] (LoE B: there is no agreement concerning the cause of motion sickness). Practical factors that have been found to induce motion sickness in virtual environments include:

- The user observes a movement that does not happen in the real world [53] (LoE B; LoI 1).

- The lag between the movement of the user and the movement of the avatar in the virtual world [52]. For instance, this lag may be due to the refresh rate of the screen and/or to computation time due to high-quality graphics (LoE B; LoI 1).

- Motion sickness increases with a wider field of view, with an asymptote starting at 140° [57]. This figure is criticized by Xiao and Benko [58], but these authors did not use the same measure of motion sickness as Lin et al. [57] (LoE B; LoI 2).

- Postural instability [59,60] (LoE A; LoI 3: since movements are limited with BCI, this factor does not really apply here).
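The contribution of the display to the lag listed above can be estimated with simple arithmetic. At a refresh rate f, a new image is shown every 1/f seconds; with vertical synchronization, a frame that is not rendered in time waits for the next refresh, so its on-screen time becomes a whole number of frame periods. The sketch below computes this for common HMD refresh rates (60, 72 and 90 Hz); the 12 ms rendering time is a hypothetical value, and sensor, transmission and pixel-switching delays are ignored, so the numbers are only an order-of-magnitude illustration.

```python
import math


def frame_period_ms(refresh_hz):
    """Time between two consecutive screen refreshes, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def displayed_frame_time_ms(refresh_hz, render_ms):
    """With vertical sync, a frame not ready in time waits for the next
    refresh, so its effective duration is a whole number of frame periods."""
    period = frame_period_ms(refresh_hz)
    return math.ceil(render_ms / period) * period


for hz in (60, 72, 90):
    print(hz, "Hz:", round(frame_period_ms(hz), 1), "ms per frame;",
          "12 ms render ->", round(displayed_frame_time_ms(hz, 12.0), 1), "ms")
```

The example shows why heavy graphics matter: at 90 Hz, a 12 ms frame misses one refresh and effectively doubles its display time, whereas the same frame fits within a single refresh at 60 or 72 Hz.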

In evaluating the risk of motion sickness, the following factors should be considered:

- Mental rotation ability and field dependence/independence: better mental rotation ability and a weak tendency towards field dependence or independence may result in less motion sickness (LoE A; LoI 3: this limitation applies to the end user, independently of the application design).

- People who experience motion sickness in real life are more likely to experience motion sickness in virtual reality environments [61] (LoE B; LoI 3).

- Age and gender are correlated with virtual sickness: motion sickness is less common in the 21–50 age range [39,54,62], and women are more susceptible than men [63,64,65] (LoE B; LoI 3).

The motion sickness limitation applies specifically to VR. However, it appears to be a major limitation for a BCI+VR system, since people who feel sick will not be able to use the system.

Recommendations

The following recommendations are useful to reduce motion sickness in a VR setting:

(1) Avoid the motion parallax effect [66,67] (LoE A; LoI 1).

(2) Use a refresh rate above 70 Hz [39] (LoI 1). There is clear evidence that vection, that is, an illusion of self-motion, increases as a function of the refresh rate [39,68]. Although there is an established relationship between vection and motion sickness, the above studies demonstrate that vection can both increase and control sickness. Recommendations about the refresh rate are in fact tied to (1) the screen refresh rate of the device and (2) the minimum average number of frames per second below which motion is perceived as unnatural (https://www.quora.com/Why-does-Virtual-reality-need-90-fps-or-higher-rates-What-are-the-technical-problems-in-terms-of-computer-graphics-in-the-lower-rates). Indeed, a drop in the frame rate of the game may induce flickering, that is, a visible fading of the screen. In practice, the screen refresh rate is about 90 Hz for older versions of the Oculus and HTC Vive, and 60 or 72 Hz for newer headsets such as the Oculus Quest and Oculus Go (LoE B: this recommendation is due to the technical configuration of the headsets, but there is no clear evidence that a high refresh rate diminishes sickness).

(3) Avoid abrupt scene cuts, since such transitions do not occur in the real world (LoE C; LoI 3: see locomotion in virtual reality).

- (4)

- Avoid extreme downward viewing angles, such as looking down at a point a short distance in front of the virtual feet [69] (LoE B; LoI 1).

- (5)

- Consider using stroboscopic vision and overlay glasses, as suggested in [38] (LoE C: stated for motion sickness but not for virtual sickness; LoI 3).

- (6)

- Take breaks from VR immersion, as motion sickness increases with playing time [69] (LoE B; LoI 1).

- (7)

- Introduce a static frame of reference [70,71,72] (LoE A; LoI 1).

- (8)

- Dynamically reduce the field of view in response to the visually perceived motion [73] (LoE B; LoI 2).

- (9)

- Try movement in zero gravity (https://www.theverge.com/2016/10/13/13261342/virtual-reality-oculus-rift-touch-lone-echo-robo-recall; https://developer.oculus.com/documentation/native/pc/asynchronous-spacewarp/) (LoE C; LoI 2: this technique may be relevant for application design but must be studied in depth). The first link describes the game “Lone Echo” (Ready at Dawn Studios, 2017), which was nominated at The Game Awards for best VR game. This game takes place in space and reproduces the sensation of floating by disabling gravity and continuously moving the player with an endless drift. The article suggests that this might diminish motion sickness.
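The refresh-rate recommendation can also be read as a time budget: at a refresh rate of f Hz, the application has 1000/f ms to render each frame for both eyes, and a frame that overruns this budget is dropped or repeated, which is how a drop in frame rate becomes visible to the player. The following Python sketch is a minimal illustration assuming a simple fixed-budget model that ignores reprojection techniques such as asynchronous spacewarp; the helper names and the sample frame timings are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame at the given refresh rate, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def missed_frame_ratio(frame_times_ms: list, refresh_hz: float) -> float:
    """Fraction of frames whose render time exceeds the budget (hypothetical helper)."""
    if not frame_times_ms:
        return 0.0
    budget = frame_budget_ms(refresh_hz)
    missed = sum(1 for t in frame_times_ms if t > budget)
    return missed / len(frame_times_ms)


if __name__ == "__main__":
    # Refresh rates quoted in the text for older and newer headsets.
    for hz in (60, 72, 90):
        print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
    # Hypothetical render times of five consecutive frames, in milliseconds.
    samples = [10.2, 11.5, 12.8, 14.9, 11.0]
    for hz in (72, 90):
        print(f"{hz} Hz: {missed_frame_ratio(samples, hz):.0%} of frames miss the budget")
```

With these sample timings, three of the five frames overrun the 11.1 ms budget at 90 Hz, whereas only one overruns the 13.9 ms budget at 72 Hz.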

3.1.4. Unsolved Limitations: Asset Restriction, Field of View and Optical Distortion

The following limitations are specific to VR. Their impact on BCI+VR systems appears limited.

- Asset restriction (LoE A). HMDs are ill-suited to large assets: graphics are restricted in terms of polygon count and texture size. This restriction is due to the graphics engine, the hardware and stereoscopic vision. Stereoscopic vision appears to be the main problem, since everything has to be drawn twice, once for each eye. In addition, large assets require more computation and power, which is mainly limited by the hardware capability, especially on a mobile platform such as the Samsung Gear VR (Samsung, Seoul, South Korea) (https://developer.oculus.com/blog/squeezing-performance-out-of-your-unity-gear-vr-game/). The graphics engine may also have a great impact on performance when multithreading, batching or fixed foveated rendering (e.g., [74,75]), among others, are enabled. The last technique renders the centre of the image at a high level of detail and the borders at a lower resolution, taking advantage of our natural tendency to focus on what happens in front of our eyes rather than at the periphery (LoI 3: the quality of the graphics may be considered secondary when designing an ergonomic interface).

- Field of View (FOV) is limited (LoE A; LoI 3). The HTC Vive (HTC, Taoyuan, Taiwan) has one of the largest FOVs among mainstream virtual reality headsets: about 100°, that is, around 80° less than the human FOV. Such a restricted FOV limits the feeling of immersion, while a wider FOV causes optical distortion of the image and increases the sensation of motion sickness (e.g., [57,58]). To our knowledge, the Pimax 5K (Pimax, Shanghai, China) is the only HMD on the market that offers good comfort along with a wide FOV (up to 200°).

- Optical aberration (LoE C). In our opinion, the image quality of VR screens is low compared with a PC monitor, with visible artefacts due to the limited resolution of the screen and to the lenses. Mainly, images intended to be displayed on a flat surface are distorted when projected through curved lenses, resulting in blurring. This blurring can be coupled with a flare effect, which appears when light from outside the FOV of the lens is trapped and reflected inside it. These effects are mitigated using pre-rendering algorithms along with high-quality lenses (LoI 3: visual aspects may be considered secondary compared with game design or gameplay).
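Fixed foveated rendering, mentioned under asset restriction, can be pictured as a map from the position of a screen region to a resolution scale. The sketch below assumes concentric tiers with hypothetical radii and scales, and assumes that shading cost grows with the square of the linear scale; real engines and headsets expose their own foveation levels, and none of the names here correspond to an actual API.

```python
import math

# Hypothetical foveation tiers: (outer radius, linear resolution scale).
# Radii are normalized: 0 is the lens centre, 1 the edge of the inscribed circle.
TIERS = [(0.4, 1.0), (0.7, 0.5), (math.inf, 0.25)]


def resolution_scale(x: float, y: float) -> float:
    """Linear resolution scale for a point with normalized coordinates in [-1, 1]."""
    r = math.hypot(x, y)
    for outer_radius, scale in TIERS:
        if r <= outer_radius:
            return scale
    return TIERS[-1][1]


def shading_cost(grid: int = 100) -> float:
    """Approximate shading cost relative to the whole eye buffer at full resolution.

    Assumption: the number of shaded pixels scales with the square of the linear scale.
    """
    total = 0.0
    for i in range(grid):
        for j in range(grid):
            x = -1 + (2 * i + 1) / grid  # centre of grid cell (i, j)
            y = -1 + (2 * j + 1) / grid
            total += resolution_scale(x, y) ** 2
    return total / (grid * grid)
```

With these illustrative tiers, the estimated cost lies between a fifth and a quarter of full-resolution rendering per eye; because both eyes are rendered, the saving applies twice.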

3.2. Limitations of the BCI Systems

3.2.1. BCI System in General

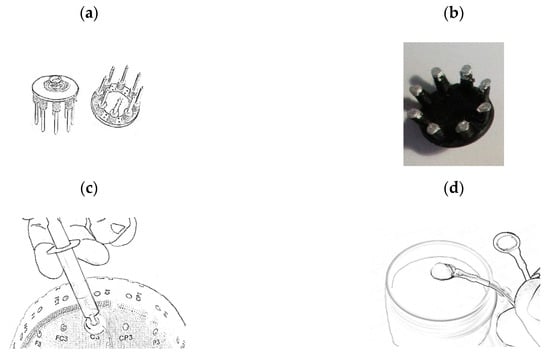

- Comfort and Ergonomics of the electrodes (LoI 1: the use of electrodes introduces a major discomfort): Traditional EEG caps require either a gel or paste to establish contact between the electrodes and the scalp, or dry electrodes, which are often more uncomfortable (Figure 5). This limitation is a major concern for the end user, since an unsightly, messy, or painful product has little chance of success (LoE C). Research is ongoing to develop EEG caps that are easy to set up, easy to clean and comfortable, with the key requirement of allowing accurate EEG signal recording [14,40,41,76]. Commercial products include the “Mark IV” (OpenBCI, New York, NY, USA); the “Muse” headband (Muse, Toronto, ON, Canada), which is simple to set up but covers only a small portion of the head; and the “Quick-20” (Cognionics, San Diego, CA, USA), a dry EEG headset that can be set up in a few minutes. Most of these systems reduce the set-up time by providing a built-in support structure and dry electrodes. Nevertheless, the claim that dry electrodes are easier to install was challenged in [77]. This study concluded that the set-up time was equal or even longer with dry electrodes, since they do not easily adapt to the shape of the head. However, this comparison ignores the fact that the user may have to wash their hair after using gel-based electrodes. A common concern for the aforementioned products is that the number of electrodes and/or the quality of the signal is not sufficiently high for P300-based applications (Debener et al. [41] and Mayaud et al. [78] have a mixed view, whereas Guger et al. [79] are more optimistic) (LoE A: wet electrodes are more accurate, stable and comfortable than dry electrodes; LoE B: dry electrodes are easier to install and remove if the user is experienced and the cap easily adjusts to the shape of the head).

- Locomotion with the electrodes: The use of a BCI while moving around is highly debatable. It has been shown that the P300 component can still be recognized while walking, but the performance of the system is reduced [41] (LoE B; LoI 2: it is possible to design applications with limited movements, at the cost of the immersion feeling and the VR capabilities).

- Tagging: An important technical aspect of P300-based BCIs is that the BCI engine needs to be informed of the exact moments at which the stimulations (flashes) are delivered. Traditionally, this is obtained by hardware tagging, that is, through a serial or parallel port by which the user interface (UI) sends a tag to the EEG acquisition unit at each flash; the unit in turn synchronizes the tags with the incoming EEG data. This is an accurate method, allowing a tagging error within ±2 ms. The alternative is known as software tagging and can be achieved in several ways. Software tagging is best achieved by synchronizing the clocks of the machine running the UI software and of the EEG acquisition device; without such synchronization, the tagging error may be rather high [6]. This synchronization problem is well known in networking, where multiple servers must be synchronized, and when dealing with arrays of wireless sensors [80]. If hardware tagging is used, the wire connecting the UI to the EEG acquisition unit may limit the movement of the user (LoE A; LoI 1: from the perspective of the user, it is important to guarantee the accuracy of the system while minimizing its bulkiness).

Figure 5.

Dry electrodes: (a) ‘g.Sahara’ (Guger Technologies, Graz, Austria) and (b) ‘Flex sensor’ (Cognionics, San Diego, CA, USA). Wet electrodes: (c) gel being injected into a ‘g.LADYbird’ (Guger Technologies, Graz, Austria) electrode attached to an elastic cap and (d) ‘Gold cup electrodes’ (OpenBCI, New York, NY, USA), which can be attached to the scalp using a fixating paste.
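Software tagging, as described under Tagging, depends on estimating the offset between the UI clock and the EEG acquisition clock. One common approach from the networking domain is the NTP-style four-timestamp exchange: the UI records the send time t0 and receive time t3 on its own clock, while the acquisition device stamps the receive time t1 and reply time t2 on its clock. The sketch below assumes symmetric network delay (the offset error of an exchange is then bounded by half its round-trip time) and keeps the exchange with the shortest round trip; the class and function names are hypothetical, and clock drift is not modelled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exchange:
    t0: float  # request sent (UI clock, seconds)
    t1: float  # request received (EEG device clock)
    t2: float  # reply sent (EEG device clock)
    t3: float  # reply received (UI clock)

    @property
    def offset(self) -> float:
        """Estimated (EEG clock - UI clock), assuming symmetric network delay."""
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) / 2

    @property
    def round_trip(self) -> float:
        """Network round-trip time, excluding processing time on the device."""
        return (self.t3 - self.t0) - (self.t2 - self.t1)


def best_offset(exchanges: List[Exchange]) -> float:
    """Offset from the exchange with the shortest round trip (tightest error bound)."""
    return min(exchanges, key=lambda e: e.round_trip).offset


def flash_to_eeg_time(flash_time_ui: float, offset: float) -> float:
    """Convert a flash timestamp from the UI clock to the EEG acquisition clock."""
    return flash_time_ui + offset
```

In a game, the exchange would typically be repeated periodically, since the offset drifts over a session, and each flash time would be converted with the latest estimate before being sent as a tag.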

Recommendations

- (1)

- To reduce the set-up time, the EEG cap could be embedded in the VR device (LoE C; LoI 1). For this purpose, since pin-shaped dry electrodes are uncomfortable, a new generation of dry electrodes based on conductive polymers could be preferred (e.g., [81]) (LoE B; LoI 1). For gaming, this solution is preferable to wet electrodes, as flexible dry electrodes do not require a long set-up and cleaning (LoE C; LoI 2: see the previous discussion on dry and wet electrodes). The problem concerning the quality of the signal might be addressed by a shield placed over the electrodes to prevent electromagnetic contamination (prototype of A. Barachant (http://www.huffingtonpost.fr/2017/11/22/brain-invaders-le-jeu-video-qui-se-controle-par-la-pensee_a_23284488/); LoE C; LoI 1). Another option is the use of miniaturized electrodes (LoE B; LoI 1). Bleichner et al. [82] conceived a system made of 13 miniaturized electrodes placed in and around the ear, in addition to the traditional sites for P300 recognition (central, parietal and occipital locations). An offline analysis showed that the accuracy of this system was comparable to that obtained in a previous study with state-of-the-art equipment [42]. However, the authors point out that such a system lacks robustness when the user moves around.

- (2)

- The camera and accelerometers could be used to detect the user’s movements and remove the corresponding EEG segments from the analysis (LoE C; LoI 3). It has also been suggested to use an automatic online artefact rejection method such as [83], or new features such as the weighted phase lag index when walking [84]. However, we note a lack of real-life studies such as [41] (LoE B: it works in laboratory conditions, but out-of-the-lab studies are needed; LoI 1: the removal of movement artefacts, if effective, will allow users to move freely in VR).

- (3)

- When the UI and the BCI engine run on separate platforms and the EEG acquisition unit is not mounted on the head (e.g., [42]), prefer software tagging over hardware tagging (LoE B; LoI 1; see Figure 6b).

Figure 6. Examples of a BCI-VR system when the EEG acquisition unit and the BCI engine (Analysis) are running on different platforms: (a) the HMD is linked to a PC; (b) the HMD works without the need for a PC. In (a) the PC could be miniaturized and embedded into the mobile HMD-EEG system. In (b) the HMD is in charge of running and displaying the UI, but also of acquiring and tagging the signal (software tagging).

- (4)

- It would be even better to directly embed a wireless EEG acquisition unit in the VR device. With such a system, the tagging problem would be solved for good [42] (LoE B; LoI 1).

- (5)

- Embed the EEG acquisition unit and the EEG analysis within the EEG cap, making the BCI completely independent of an external computer (Figure 6a; LoE C; LoI 1). Such a system would also avoid problems related to wireless communication (e.g., data loss, signal perturbation; LoE B; LoI 1). A simple application of this recommendation consists of placing the PC on which the BCI engine runs in a backpack [42]. For HMDs linked to a PC, MSI (Zhonghe, Taiwan) has released VR-ready backpack PCs (http://vr.msi.com/Backpacks/vrone).
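Recommendation (2) above suggests using motion sensors to discard EEG segments recorded during movement. The following is a minimal sketch, assuming that accelerometer samples are aligned with each P300 epoch and that a fixed threshold on the deviation of the acceleration magnitude from gravity is an adequate movement detector; both are simplifying assumptions, the threshold value and the dictionary keys are hypothetical, and pure head rotations (which a gyroscope would capture) are ignored.

```python
import math

GRAVITY = 9.81  # m/s^2, magnitude measured by a motionless accelerometer


def movement_score(accel_samples) -> float:
    """Largest deviation of the acceleration magnitude from gravity within one epoch."""
    return max((abs(math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY)
                for ax, ay, az in accel_samples), default=0.0)


def keep_epochs(epochs, threshold: float = 1.5):
    """Keep only the epochs recorded while the head was (nearly) still.

    Each epoch is a dict with the hypothetical keys 'eeg' (the EEG segment)
    and 'accel' (a list of (x, y, z) accelerometer samples in m/s^2).
    """
    return [ep for ep in epochs if movement_score(ep["accel"]) < threshold]
```

Every rejected epoch has to be compensated by additional flash repetitions, so aggressive thresholds trade movement freedom against transfer rate.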

3.2.2. Limitations That Are Common to VR and BCI

A major limitation of both VR and BCI hardware is the price. Prices are currently decreasing steadily, and we expect them to become very affordable in the next few years (LoE A; LoI 2). This tendency can be explained by the increasing interest in these technologies, which fosters larger production volumes and thus lowers the price of a single unit. All studies agree that both the BCI and VR markets are growing. For example, the consulting company Grand View Research (San Francisco, CA, USA) predicts that the VR and BCI markets will grow at a compound annual growth rate of 21.6 and 15.5 percent, respectively (https://www.grandviewresearch.com/industry-analysis/virtual-reality-vr-market (VR) and https://www.grandviewresearch.com/industry-analysis/brain-computer-interfaces-market (BCI)). In 2019, the VR and BCI markets were valued at 10.32 and 1.2 billion dollars, respectively. By comparison, the BCI market was estimated at around 807 million dollars in 2017, and the VR market was negligible until 2015. According to the same studies, HMDs and non-invasive BCIs are the best-selling products.
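To make the compound annual growth rates concrete: a rate r applied to a base value V0 gives V0 (1 + r)^n after n years, and the constant rate implied by two observations is (Vn / V0)^(1/n) - 1. The sketch below only applies this arithmetic to the figures quoted above; it assumes that the rates stay constant, which market forecasts do not guarantee, and the 2024 horizon is an arbitrary choice for illustration, not a figure from the cited reports.

```python
def project(value: float, rate: float, years: int) -> float:
    """Value after `years` of compound growth at annual `rate` (e.g., 0.216 for 21.6%)."""
    return value * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text, in billions of US dollars.
vr_2019, bci_2019, bci_2017 = 10.32, 1.2, 0.807
print(f"VR 2024 (illustrative): {project(vr_2019, 0.216, 5):.1f}")
print(f"BCI 2024 (illustrative): {project(bci_2019, 0.155, 5):.2f}")
print(f"Implied BCI growth 2017-2019: {implied_cagr(bci_2017, bci_2019, 2):.1%}")
```

With these inputs, the 2017 and 2019 BCI figures imply about 21.9% annual growth over that period, above the 15.5% forecast rate.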

It should also be noted that combining VR and BCI technologies raises additional limitations, since some interactions in VR are incompatible with BCI [43]. In fact, player movement is a major concern for a ubiquitous VR+BCI technology, as movement is an essential interaction within the VE but at the same time perturbs the EEG signal. The player is also likely to lack the focus and patience required to use the BCI because of her/his excitement on entering the VE. In addition, the precise synchronization between the EEG acquisition system and the display of stimuli on screen, required by P300-based BCIs, is a technical bottleneck for mobile EEG. These considerations are detailed in the following section.

3.3. Limitations of P300-Based BCIs

Possibly the most severe limitations arise from the P300 paradigm itself. We list here four limitations, the first three being well known [12] (LoE A; LoI 1). The last one is a suggestion from the authors (LoE C; LoI 1).

3.3.1. Synchronous BCI

As we have seen, the P300-based BCI is synchronous; thus, it cannot control a continuous process where constant error correction is required, for example, driving a car. Rather, it can perform a goal selection task, such as choosing the destination of the vehicle.

Recommendations

- (1)

- Enable goal selection strategies and gradual control strategies [12]: gradual control means controlling a continuous process in a discrete way by means of separate, limited goals. For example, the player may control speed by focusing on items such as SLOW, MODERATE and FAST (LoE A; LoI 1).

- (2)

- Use the concept of a cone of guidance [33], inspired by a game described in [85], where the player has to guide a helicopter through floating rings. As the player approaches a ring, an invisible cone assists them and improves their performance, although this assistance is not necessary to win the game. More broadly, the cone of guidance may refer to any optional computer assistance that helps the user to perform a task while still requiring enough input from the user to finish it (LoE B; LoI 1).

- (3)

- Use high-level commands [35]: they lead to the desired result faster, although they are less intuitive. Lotte [35] shows an example of navigation in a museum using high-level commands. In this example, the user has to select a point of interest in the museum using three commands: two commands select the point of interest through a succession of binary choices, and the third cancels the last binary choice. The authors compared this method of navigation with navigation using low-level commands (such as turn left/right and go forward). The results of a subjective questionnaire show that high-level navigation is faster and less fatiguing than low-level navigation, but less intuitive because of the succession of binary choices (LoI 1; LoE B: the given reference is about MI-based BCI; it is not clear to what extent the result applies to P300-based BCI, since the latter allows a higher number of choices).

- (4)

- Do not separate stimuli and actions; always incorporate them in the virtual world [12]. A more radical solution is to use P300 BCI control for actions that are normally “synchronous”, such as stopping when a traffic light switches to red [12,86] (LoE A; LoI 1).

- (5)

- Limit the use of complex actions such as controlling speed and movement at the same time (LoE C; LoI 1).

- (6)

- Design cooperative BCI games (whenever possible) in which each player controls one parameter of the game. For example, one player could be responsible only for changing the direction and another could control the speed of a moving avatar or vehicle (LoE C; LoI 1). Korczowski et al. [87] studied multi-user interaction with a P300-based BCI playing Brain Invaders [17], showing the feasibility of cooperative BCIs for gaming.
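The cone of guidance of recommendation (2) can be sketched as a small, optional correction of the player’s heading toward the current target whenever the target lies inside a cone around that heading. The code below is our own 2D illustration, assuming a hypothetical half-angle and assistance gain; it is not the implementation of [33] or [85].

```python
import math


def angle_between(a: float, b: float) -> float:
    """Smallest signed difference b - a between two angles, in radians."""
    return (b - a + math.pi) % (2 * math.pi) - math.pi


def guided_heading(heading: float, pos: tuple, target: tuple,
                   half_angle: float = math.radians(20), gain: float = 0.3) -> float:
    """Nudge `heading` toward `target` if the target lies inside the guidance cone.

    Outside the cone the player's own heading is returned unchanged, and inside it
    only a fraction (`gain` < 1) of the error is corrected, so the player still has
    to provide enough input to reach the target.
    """
    to_target = math.atan2(target[1] - pos[1], target[0] - pos[0])
    error = angle_between(heading, to_target)
    if abs(error) <= half_angle:
        return heading + gain * error
    return heading
```

Keeping the gain below 1 reflects the requirement that the assistance be optional: the game remains winnable without it, but it smooths out the imprecision of discrete BCI commands.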

To show how these recommendations may be applied in practice, Table 1 gives practical examples in relation to the design of a car race game. We have chosen this type of game for this example because, due to its nature, it is not the best candidate game for introducing BCI control. This gives us an opportunity to show that an adequate design may make BCI control possible even in unfavourable situations.

Table 1.

Examples of practical implementations of the design recommendations given in this section.

| Recommendation | When It Applies | Example of a Car Race Game |
|---|---|---|
| Goal Control | Every time a synchronous BCI is used | Do not control the movement; instead, set objectives that the car must reach. |
| High-Level Commands | As much as possible, while trying to keep them intuitive | Control the speed of the car through a simple interface (SLOW, MODERATE and FAST). Avoid real-time commands such as “activate clutch, select driving gear from one to six”. |
| Incorporate stimuli in the game | As much as possible | At the start of the race, incorporate the stimuli in the signal light. The car direction can be set by looking at different billboards on the left or right side of the road. |
| Separate complex actions | When controlling an action that has more than two possibilities, or when each possibility can take too many values | The user’s task is to control the trajectory of the car, which depends on speed and direction. Usually these are set simultaneously with a keyboard or joystick, but they have to be set one after the other when using a synchronous BCI. |
| Enumerate all possibilities for an action | When an action can only take a small set of discrete values | The speed can be slow or fast and the direction can be right or left. Rather than two separate tasks, these can be combined into one choice with four possibilities: right-slow, right-fast, left-slow and left-fast. |
| Multiplayer interaction | Whenever the game is multiplayer | The first player can control the speed and the second the direction. |
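The “Enumerate all possibilities for an action” row can be implemented by taking the Cartesian product of the discrete values of each parameter, so that a single P300 selection sets several parameters at once. The sketch below uses the speed and direction values of the car race example; the list layout and the `decode` helper are hypothetical.

```python
from itertools import product

SPEEDS = ("slow", "fast")
DIRECTIONS = ("left", "right")

# One flashing item per combination: a single selection sets both parameters.
ITEMS = [f"{d}-{s}" for d, s in product(DIRECTIONS, SPEEDS)]


def decode(item: str) -> dict:
    """Map a selected item back to the game parameters it controls."""
    direction, speed = item.split("-")
    return {"direction": direction, "speed": speed}
```

The number of items grows multiplicatively with the number of parameters, so this approach suits only the small sets of values mentioned in the table; beyond that, separating the actions keeps the set of flashing items small.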

3.3.2. Visual Fatigue

The flashing in a P300-based BCI is more tiring than a normal visual scene, and the risk of photosensitive irritation should be considered. Furthermore, since P300-based BCIs work by detecting brain responses to stimulation (e.g., flashing of items on the screen), they continuously draw on the user’s cognitive resources.

Recommendations

Ways to reduce this fatigue include:

- (1)

- Incorporation of the flashing items in the game scene [12,86] (LoE A; LoI 1): Kaplan et al. [12] concluded that stimuli should be natural discrete events occurring at expected locations. Examples are blinking lights in the sky, advertisements in a city at night, amusement park and horror scenes (graphical references from video games: Planet Coaster, Until Dawn) or a diving experience (graphical reference from video games: Subnautica). Moreover, during goal selection, only the controls that are specific to the current context should appear. For example, only the navigation commands should be displayed when moving an avatar, and these commands should disappear when the user is no longer controlling the movement of the avatar. A game could therefore automatically switch among different control panels depending on the context.

- (2)

- Adopting the least tiring stimulation possible: the use of audio stimuli, which may lower visual fatigue when coupled with visual stimulation, was recommended in [88] (LoE B; LoI 2). This study shows that combining auditory and visual stimulation is a good choice for a BCI speller to lower the workload. It also reports that audio stimuli alone lead to worse performance and a higher workload than unimodal visual stimulation; thus, the use of audio stimuli alone is not recommended. We should mention a promising study [89], which used as stimuli spoken sounds representing concepts as close as possible to the actions they represent. Again, audio stimuli should be natural for the gaming environment and should vary according to the type of game (horror game, game with enigmas, infiltration game, etc.). In the same vein, [90] investigated the use of mixed tactile and audio stimulation. Although the results are mixed, the findings could easily be adapted to VR, as most controllers provide haptic feedback. We reported considerations about the use of multiple sensory stimulations in [91] (LoE C; LoI 2). Another solution could be to couple the visual P300 paradigm with other BCI paradigms such as the motion-onset visual evoked potential (mVEP) (LoE B; LoI 1). The mVEP is a type of visual evoked potential (like SSVEP or P300) allowing more elegant stimuli [92]. Guo et al. [92] demonstrated that moving targets with low contrast and luminance could evoke a prominent mVEP. The protocol was nearly the same as for P300, but used moving instead of flashing targets. The usability of this paradigm needs to be studied specifically in the VR context, where targets can move in 3D around the user, so that the user may have to turn the head to follow them (LoE C; LoI 3).

- (3)

- Lowering the stimulation time: BCI systems that do not need calibration are definitely preferable [87] (LoE A; LoI 1). Also, when designing a game, BCI control may be activated only in some situations, accounting for a small fraction of the gaming time (LoE C; LoI 1).
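Recommendations (1) and (3) suggest flashing only the controls relevant to the current context and activating the BCI only in some situations. A minimal sketch of such context-dependent control panels follows; the contexts and item names are hypothetical.

```python
from enum import Enum, auto


class Context(Enum):
    NAVIGATING = auto()
    INTERACTING = auto()
    IDLE = auto()


# Hypothetical control panels: the items allowed to flash in each context.
PANELS = {
    Context.NAVIGATING: ["forward", "turn-left", "turn-right", "stop"],
    Context.INTERACTING: ["open", "take", "leave"],
    Context.IDLE: [],  # no flashing at all, which shortens the total stimulation time
}


def items_to_flash(context: Context) -> list:
    """Stimuli for the current context (a copy, so callers cannot alter the panel)."""
    return list(PANELS[context])
```

Fewer items per panel also means fewer flashes per repetition, which shortens each selection.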

3.3.3. Low Transfer Rate

The low transfer rate of a P300-based BCI refers to the fact that several repetitions of flashes are needed to achieve accurate item selection and that, unless a large number of repetitions is employed, selection errors are unavoidable [12]. This introduces the need for repetitive actions instead of single actions to achieve a goal, and the frustration of not being able to issue a command immediately, which matters in critical gaming situations.
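The transfer rate of a BCI is commonly quantified with the information transfer rate (ITR) defined by Wolpaw and colleagues: for N items and a selection accuracy P, each selection conveys B = log2 N + P log2 P + (1 - P) log2((1 - P)/(N - 1)) bits, and the rate in bits per minute is B multiplied by the number of selections per minute. The formula assumes equiprobable targets and errors spread uniformly over the non-target items. The sketch below shows how the number of flash repetitions trades accuracy against speed; clamping below-chance accuracy to zero is a common convention, and the accuracies and durations used in the usage note are hypothetical.

```python
import math


def bits_per_selection(n_items: int, accuracy: float) -> float:
    """Wolpaw information transfer per selection, in bits."""
    if n_items < 2:
        raise ValueError("need at least two items")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if accuracy <= 1.0 / n_items:
        return 0.0  # at or below chance level, no information is conveyed
    bits = math.log2(n_items) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1 - accuracy) * math.log2((1 - accuracy) / (n_items - 1))
    return bits


def itr_bits_per_minute(n_items: int, accuracy: float, seconds_per_selection: float) -> float:
    """Information transfer rate in bits per minute."""
    return bits_per_selection(n_items, accuracy) * 60.0 / seconds_per_selection
```

For a 36-item speller, a hypothetical setting with 90% accuracy at 15 s per selection gives about 16.8 bits/min, whereas 70% accuracy at 6 s per selection gives about 27.5 bits/min. Fewer repetitions can thus yield the higher ITR even though three selections in ten are wrong, which is why recommendation (3) pairs short selections with non-critical commands.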

Recommendations

There are at least nine ways to circumvent this limitation:

- (1)

- Using a-priori, user and/or context information to improve item selection (LoE C; LoI 1): to this end, we may employ a so-called passive BCI to monitor physiological information about the user and adapt the gameplay accordingly [33]. A passive BCI is a cognitive monitoring technology that can provide valuable information about the user’s intention, situational interpretation and emotional state [93]. For example, in the game Alphawow [9], the avatar’s character changes its behaviour according to the player’s relaxation state. Statistics are also relevant for predicting the user’s behaviour. The use of natural blinking objects, such as advertisements, may inform the system of the user’s preferences and help to determine his/her choices. It can also be useful to keep a database of statistics from other users. For example, if 80% of people answer “yes” to a form in the game, the “yes” button could be given a higher weight (visually, or by weighting the BCI engine output) to facilitate this selection. In addition, VR devices for head and eye tracking or movement recognition (e.g., Leap Motion, San Francisco, CA, USA) might improve the transfer rate by detecting muscular artefacts and providing useful information on the user’s point of interest. Note that some HMDs, such as the Vive Pro Eye (HTC, Taoyuan, Taiwan), already include an eye tracker.

- (2)

- Using appropriate stimulation: the recommendations given in the section ‘Visual Fatigue’ also apply here. In addition, spatial frequency in the visual stimuli is known to generate high-frequency oscillations in the EEG that can help to detect the P300 [94] (LoE C; LoI 3). The shape, colour and timing of the stimuli may also play a role: Jin et al. [95] showed that stimuli representing faces lead to better classification (LoE A; LoI 1), while Jin et al. [95,96] suggest that the use of contrasting colours and the modification of flash duration affect the accuracy (LoE B; LoI 1).

- (3)

- Reduce the time needed to trigger an action and make each action nonessential: increasing the number of flash repetitions leads to higher classification accuracy, but forces the user to stay focused for a longer time (LoE A; LoI 1). As a result, the fatigue of the user increases, the task is perceived as more difficult and the application is less responsive. A compromise between accuracy and responsiveness is to keep the number of repetitions low while making the BCI commands non-critical. For example, in a car-driving application, the trajectory may be slightly adjusted in the sought direction at each repetition of flashes; thus, despite occasional errors, in the long run the player will succeed in giving the car the sought trajectory. Two other examples from previous studies are Brain Invaders [17] and Brain Painting [97]. In Brain Invaders, an alien is destroyed after each repetition. However, this action is not critical, since the player has eight chances to hit the target and hence to finish the level. Brain Painting is used by patients with locked-in syndrome [34]. It consists of a P300 speller whose selection items are special drawing tools. The concept is of particular interest because errors are not critical: the painting can always be retouched without the need to start again.

- (4)

- Dynamic stopping (LoE B; LoI 2): current P300-based BCIs usually use a fixed number of repetitions, forcing the user to keep focusing even if the BCI may have already successfully detected the target. Dynamic stopping consists of determining the optimal number of repetitions required to identify the target; it can thus decrease the time required for selection and provide higher robustness and performance [98,99].

- (5)

- Use feedback (LoE B; LoI 2): Marshall et al. [33] recommend the use of positive feedback. Nevertheless, feedback is mainly used in motor imagery-based BCIs and finds little use in P300-based BCIs. In Brain Invaders [17], a binary feedback indicates whether the result is correct or wrong. However, it does not indicate how close the system was to an accurate selection. Also, people playing video games are used to immediate feedback: when driving a virtual car, there is no appreciable delay between the command and its effect on the scene. The feedback must therefore be given as soon as possible. In the presentation of an EEG acquisition unit prototype, A. Barachant (http://www.huffingtonpost.fr/2017/11/22/brain-invaders-le-jeu-video-qui-se-controle-par-la-pensee_a_23284488/) used a probabilistic feedback that set the size of each item according to its probability of being the target chosen by the user. The feedback is updated after each item is flashed. This idea could be a starting point for designing appropriate feedback for P300. Another established way to use feedback is to analyse error-related potentials, which the brain produces after an error feedback is delivered to the subject. These can be used to automatically correct erroneous BCI commands, effectively increasing the consistency and transfer rate of the BCI [100,101,102].

- (6)

- Control non-critical aspects of the game (LoE C; LoI 1): in a race game, for example, speed is a critical aspect of the game and should not be controlled by a BCI, especially when mechanical inputs (e.g., mouse or keyboard) can achieve the same goal faster and with less concentration. However, a BCI may be used to trigger a “boost effect” that would help the player by temporarily increasing the speed of the vehicle. Such a triggered effect would impact the score but would not be an obstacle to finishing the game. We also suggest restricting the use of the BCI to a limited set of aspects. This runs counter to the design of BCI experiences looking for a “wahoo effect”, as is the case in contests or events. For instance, in the BCI game developed by Mentalista (Paris, France) for the 2016 European Football Championship, two players were asked to score against each other by moving a ball towards the opposing player’s goal through concentration (https://mentalista.fr/foot). Although it works surprisingly well for a short session, the lack of alternative ways to win hinders long-term use of this type of game.

- (7)

- Use a cone of guidance, as already defined in the section ‘Synchronous BCI’ (LoE B; LoI 1).

- (8)

- Define levels of difficulty (LoE C; LoI 1). The above parameters could be set as a level of difficulty in the game, with the following limitations. First, the expected behaviour must be known by the game. This is the case for Brain Invaders [17], where the player is expected to concentrate on a specific alien, but not the case for a P300 puzzle game for example, where the player can place the puzzle pieces in any desired order. Second, lowering the difficulty lowers the impression of control. In general, it is not recommended to use adaptive difficulty, as suggested in [103].

- (9)

- Use collaboration (LoI 1: collaboration is a ubiquitous aspect of video games): Korczowski et al. [87] demonstrated that collaboration between two users is an efficient way to improve performance, at least when both users focus on the same goal and have homogeneous performance when playing alone. Interestingly, this study also shows that combining the individual results of the two players playing together is better than treating the two resulting EEGs as the product of a single brain with twice as many electrodes (LoE B: while a significant amount of data is available on multi-player interaction using BCIs [104,105,106,107], analysis is still ongoing).
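Several of the recommendations above, namely a-priori information (1), probabilistic feedback (5) and collaboration (9), can be combined in a single evidence-accumulation loop. The sketch below assumes that the classifier returns, for each flash, a log-likelihood ratio score for the flashed item and that flashes are independent; it sums these scores per item, adds a log prior derived from other users’ answers, converts the totals into probabilities with a softmax, and maps them to on-screen item sizes. Fusing two players by summing their scores is our simplification of decision-level combination, not the exact method of [87]; all names and numbers are hypothetical.

```python
import math


def log_prior(items, counts=None):
    """Log prior over items from usage statistics (add-one smoothing); uniform if no counts."""
    counts = counts or {}
    weights = {item: counts.get(item, 0) + 1 for item in items}
    total = sum(weights.values())
    return {item: math.log(w / total) for item, w in weights.items()}


def accumulate(items, flashes):
    """Sum classifier scores per item; `flashes` is a list of (flashed item, score) pairs.

    Scores are assumed to be log-likelihood ratios (target vs non-target) and
    flashes are assumed independent, so that summing them is meaningful.
    """
    totals = {item: 0.0 for item in items}
    for item, score in flashes:
        totals[item] += score
    return totals


def posterior(prior, *player_totals):
    """Softmax of the log prior plus the summed evidence of one or more players."""
    logits = {item: lp + sum(t[item] for t in player_totals) for item, lp in prior.items()}
    peak = max(logits.values())  # subtract the maximum for numerical stability
    exps = {item: math.exp(v - peak) for item, v in logits.items()}
    norm = sum(exps.values())
    return {item: e / norm for item, e in exps.items()}


def feedback_sizes(probs, min_size=0.5, max_size=1.5):
    """On-screen scale of each item, growing with its probability of being the target."""
    return {item: min_size + (max_size - min_size) * p for item, p in probs.items()}


if __name__ == "__main__":
    items = ["yes", "no"]
    prior = log_prior(items, counts={"yes": 80, "no": 20})  # 80% of users answered "yes"
    player_a = accumulate(items, [("no", 1.0), ("yes", -0.5)])  # hypothetical scores
    player_b = accumulate(items, [("no", 0.8), ("yes", -0.2)])
    print(posterior(prior))                      # prior alone favours "yes"
    print(posterior(prior, player_a))            # one player's evidence
    print(posterior(prior, player_a, player_b))  # two players' evidence combined
    print(feedback_sizes(posterior(prior, player_a, player_b)))
```

Because the posterior is recomputed after every flash, the same values can drive the size-based feedback described in recommendation (5).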

3.3.4. Intention to Select

Looking at a stimulus does not mean we want to trigger an action. For example, one can look at a door without having the intention to open it.

Recommendation

Current designs of P300 applications assume that the user is focusing on a target, even when the user is not looking at the screen at all. It has been suggested to use motor imagery [86] or the analysis of alpha rhythms [14] as a supplementary input enabling the user to signal the intention to select (LoE B; LoI 2).

Another option is to define a threshold on the confidence of the P300 classifier, below which the application does not make any decision. This is what dynamic stopping (see also the recommendations for the ‘low transfer rate’) does, by dynamically changing the number of repetitions according to the confidence of the P300 classifier. Mak et al. [108] present a benchmark of dynamic stopping methods (LoE B; LoI 1).
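A confidence threshold combined with a cap on the number of repetitions provides both dynamic stopping and a simple way to abstain when the user does not seem to intend any selection. The sketch below is a minimal illustration under stated assumptions: `posterior_after` is a hypothetical callback returning the current probability of each item after a given repetition, and the threshold and cap values are arbitrary; actual dynamic stopping methods are compared in [108].

```python
from typing import Callable, Dict, Optional, Tuple


def select_with_stopping(posterior_after: Callable[[int], Dict[str, float]],
                         threshold: float = 0.9,
                         max_repetitions: int = 10) -> Tuple[Optional[str], int]:
    """Stop as soon as one item reaches `threshold`; abstain if none does.

    Returns (selected item or None, number of repetitions used).
    """
    for rep in range(1, max_repetitions + 1):
        probs = posterior_after(rep)
        best = max(probs, key=probs.get)
        if probs[best] >= threshold:
            return best, rep
    # No confident target: interpret as "no intention to select" and take no action.
    return None, max_repetitions
```

Raising the threshold reduces unintended selections at the cost of longer, or abstained, selections.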

4. Discussion

In this review, we chose to focus on selected aspects of mixed reality devices, such as mobility, motion sickness, cost and ease of use. Other aspects, such as the projection of the VE into the real world (e.g., CAVE), the use of augmented reality or interactions with holograms, were not discussed in this paper. Several concerns indicate that these technologies are not ready for the market, at least for personal use, such as the lack of transparent LCD screens or of a mature holographic technology for augmented reality. In our opinion, the early adoption of the Oculus Quest (Facebook, Menlo Park, CA, USA) indicates a general preference for standalone headsets with inside-out positional tracking, that is, with six degrees of freedom. A few studies (e.g., [43,109]) have investigated the interaction of a P300-based BCI with a VR headset, but the headset was either linked to a PC or based on a heavily modified smartphone. To our knowledge, the coupling of a P300-based BCI with a fully standalone HMD has never been investigated.

The recommendations we have listed are numerous, and we believe that a framework is required to maximize their usefulness. The global picture is further complicated by the heterogeneity of gameplay modes across different types of games. Building such a framework requires a posteriori data and a method that can evaluate the impact of each recommendation. Otherwise, there is a high risk of designing a BCI+VR application that reproduces the same interaction pattern as a computer, but with less responsiveness. As stated by Lécuyer et al. [7], the current methods and paradigms devoted to interaction with games and BCIs based on visual stimulation remain in their infancy. Future investigations in the Human–Computer Interaction (HCI) domain are needed to overcome the limits of BCI and facilitate its use within virtual worlds. Along these lines, Marshall et al. [33] suggest that Fitts’ law may be used to compare BCI application designs. Using Fitts’ law presumes that, in any game, the objective is to minimize both the time required to accomplish a mission and the concentration or effort required from the user. It is therefore a good way to evaluate designs and elaborate patterns for BCI games in conjunction with VR applications. As an example of such a design process in the healthcare domain, references [34,77] describe several concerns about the daily use of BCIs by patients with disabilities, including the ergonomics of the electrodes and the patients’ functional requirements. The authors then designed and built a system meeting these requirements, before testing it with a standardized satisfaction questionnaire [110]. The recommendations presented here appear to be a first and necessary step toward creating a BCI+VR game intended for use outside the lab.
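To make such a comparison concrete, the widely used Shannon formulation of Fitts’ law predicts movement (or selection) time as MT = a + b·log2(D/W + 1), where D is the distance to the target, W its width, and a and b are constants fitted per design from measured data. The sketch below assumes this formulation and computes the index of difficulty and the throughput. How D and W map onto a given BCI+VR interaction is a design assumption, and the constants in the usage example are hypothetical.

```python
import math

def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width > 0")
    return math.log2(distance / width + 1)

def predicted_time(distance, width, a, b):
    """Fitts' law prediction MT = a + b * ID (a in s, b in s/bit, fitted per design)."""
    return a + b * index_of_difficulty(distance, width)

def throughput(distance, width, movement_time):
    """Bits per second achieved on one condition: ID / measured MT."""
    return index_of_difficulty(distance, width) / movement_time
```

For instance, a target at D = 3 with width W = 1 has an index of difficulty of 2 bits; a design that completes it in 0.5 s achieves 4 bits/s, whereas one needing 4 s achieves only 0.5 bits/s, which gives designers a single number to compare alternatives.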

Finally, an extended discussion is needed concerning the ethical concerns and medical consequences of video game designs [111,112]. Regarding the latter, Cobb et al. [113] introduce the term VRISE (virtual reality induced symptoms and effects) and report that VRISE may be serious for a small percentage of people, even if the symptoms seem to be short-lived and minor for the majority. Virtual reality may modify heart rate, induce nausea and increase the level of aggressiveness [114,115]. It is not clear, however, whether these effects persist (for more than a few days) or are temporary. Concerning the positive effects of VR-based therapy, after analysing 50 studies on the subject, Gregg and Tarrier [116] concluded that the effectiveness of such therapy remains unproven. We are not aware of any study concerning the long-term side effects of control-oriented BCIs. However, an extensive and long-standing literature demonstrates the potential of BCI technology for neurotherapy (i.e., neurofeedback, see [117]). This naturally raises the question of the possible long-term side effects and therapeutic effects of using BCI technology in VR environments.

5. Conclusions

In this article, we have presented the limitations of current BCI-enriched virtual environments and recommendations to work around them (Table 2). The recommendations address several software and hardware problems of currently available systems, and we have proposed different ways to resolve or circumvent these limitations. We hope this will help and encourage game creators to incorporate BCI in VR. We have focused on P300-based BCI since, to date, this paradigm offers the best trade-off between usability and transfer rate. An essential recommendation is to use the BCI only for small and non-critical tasks in the game. Concerning software limitations, using actions naturally integrated into the virtual world is important. A cooperative game is also a good solution, since it enables multiple actions and enhances social interaction and entertainment. In addition, the use of passive BCIs appears essential to bring a unique perspective to VR technology: only a BCI can provide information on the user’s mental state, whereas for giving commands, traditional input devices are largely superior to current BCIs. However, more promising results can be obtained by combining different stimulations for BCI, such as coupling a visual P300 with an auditory P300, or an mVEP with an SSVEP. In general, BCI integrates more easily with turn-based games that require high levels of concentration and logical thinking (for example: strategy, artificial life, simulation, puzzle, and society games). Among these games, simulation and adventure games appear to be the best choice for VR, as long as they implement slow gameplay and reduced movements. However, P300-based BCI technology may also be used in other types of games to control a specific action and to increase enjoyability (e.g., sports games or RPGs).
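The usability/transfer-rate trade-off can be quantified with Wolpaw’s information transfer rate, commonly used in the BCI literature: for N equiprobable items selected with accuracy P, each selection carries B = log2 N + P·log2 P + (1 − P)·log2((1 − P)/(N − 1)) bits. The sketch below implements that formula. Treating accuracy at or below chance as zero bits is a common convention that we adopt here as an assumption, and the timing in the usage note is illustrative.

```python
import math

def wolpaw_bits_per_selection(n_classes, accuracy):
    """Wolpaw information transfer rate, in bits per selection."""
    if n_classes < 2:
        raise ValueError("need at least two selectable items")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if accuracy <= 1.0 / n_classes:
        return 0.0  # at or below chance: conventionally no information
    bits = math.log2(n_classes) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:  # the error term vanishes (0 * log 0 -> 0) at P = 1
        bits += (1 - accuracy) * math.log2((1 - accuracy) / (n_classes - 1))
    return bits

def itr_bits_per_minute(n_classes, accuracy, seconds_per_selection):
    """Scale bits per selection by the number of selections per minute."""
    return wolpaw_bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection
```

For example, four items selected perfectly every 12 s give 2 bits per selection, i.e., 10 bits/min. Adding repetitions raises P but also the seconds per selection, which is precisely the trade-off discussed above.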
Concerning hardware limitations, an ideal solution would be to use a VR device with an embedded EEG headset, together with sensor fusion capabilities to precisely track the position of the user and the rotation of the head. The main recommendation for avoiding user sickness is to avoid unnatural effects, such as a lag in the animation, moving the user’s viewpoint while the user is not moving, or modifying the user’s natural parallax. Furthermore, locomotion in a virtual world should involve the user’s own motion, which is not always possible given the gesture to be performed (such as swimming, climbing or flying) and the space in which the game takes place (the virtual world can be larger than the real space). In such cases, designers can use teleportation, walking-in-place, or redirected walking to help the user move in the virtual world, keeping in mind that scene cuts and translations should be avoided. Although redirected walking is not yet applied in video games, studios should consider this option since it reproduces real walking. Motion sickness also varies according to individual characteristics such as age, gender or psychological abilities, but it can be reduced with experience and by taking regular breaks when using VR devices.
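Redirected walking is often implemented with gains that slightly scale the user’s real movements in the virtual world. The sketch below illustrates only a rotation gain, where the virtual view turns by gain × the real head turn. The clamping range (0.8 to 1.2) is a hypothetical placeholder, not a validated perceptual threshold, and real bounds would have to be measured for the target users.

```python
def clamp(value, lo, hi):
    """Restrict value to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))

def redirected_yaw(virtual_yaw_deg, real_delta_yaw_deg, gain,
                   min_gain=0.8, max_gain=1.2):
    """Return the new virtual yaw (degrees, in [0, 360)) after a rotation gain.

    The view turns gain * (real head turn). The gain is clamped to a
    hypothetical range assumed to go unnoticed by the user.
    """
    g = clamp(gain, min_gain, max_gain)
    return (virtual_yaw_deg + g * real_delta_yaw_deg) % 360.0
```

Because the injected rotation is proportional to the user’s own turn, the camera never moves while the user stands still, which is consistent with the recommendation to avoid viewpoint motion the user did not perform.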

Table 2.

Summary of the recommendations, according to their level of interest and evidence. The colour indicates whether a recommendation is evidence-based and pertinent (deep blue) or a less significant suggestion (light blue), according to our classification.

Finally, coupling two technologies, each with its own limitations, is a difficult task. The recommendations in this article aim to ensure that a VR+BCI game can be successfully designed and implemented. Without them, the time lost by developers would make the domain of VR+BCI highly unattractive, while poor game design would cause the general public to lose interest in such technology.

Author Contributions

G.C., E.V. and A.A. wrote this paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zyda, M. From visual simulation to virtual reality to games. Computer 2005, 38, 25–32.

- Brown, E.; Cairns, P. A grounded investigation of game immersion. In Proceedings of the Extended Abstracts of the 2004 Conference on Human Factors and Computing Systems-CHI’04; ACM: New York, NY, USA, 2004; pp. 1297–1300.

- Harvey, D. Invisible Site: A Virtual Sho. (George Coates Performance Works, San Francisco, California). Variety 1992, 346, 87.

- Steuer, J. Defining Virtual Reality: Dimensions Determining Telepresence. J. Commun. 1992, 42, 73–93.

- Tokman, M.; Davis, L.M.; Lemon, K.N. The WOW factor: Creating value through win-back offers to reacquire lost customers. J. Retail. 2007, 83, 47–64.

- Bamford, A. The Wow Factor: Global Research Compendium on the Impact of the Arts in Education; Waxmann Verlag: Münster, Germany, 2006; ISBN 978-3-8309-6617-3.

- Lécuyer, A.; Lotte, F.; Reilly, R.B.; Leeb, R.; Hirose, M.; Slater, M. Brain-Computer Interfaces, Virtual Reality, and Videogames. Computer 2008, 41, 66–72.

- Andreev, A.; Barachant, A.; Lotte, F.; Congedo, M. Recreational Applications of OpenViBE: Brain Invaders and Use-the-Force; John Wiley & Sons: Hoboken, NJ, USA, 2016; Volume 14, ISBN 978-1-84821-963-2.

- Van De Laar, B.; Gurkok, H.; Bos, D.P.-O.; Poel, M.; Nijholt, A. Experiencing BCI Control in a Popular Computer Game. IEEE Trans. Comput. Intell. AI Games 2013, 5, 176–184.

- Mühl, C.; Gürkök, H.; Bos, D.P.-O.; Thurlings, M.E.; Scherffig, L.; Duvinage, M.; Elbakyan, A.A.; Kang, S.; Poel, M.; Heylen, D. Bacteria Hunt. J. Multimodal User Interfaces 2010, 4, 11–25.

- Angeloni, C.; Salter, D.; Corbit, V.; Lorence, T.; Yu, Y.C.; Gabel, L.A. P300-based brain-computer interface memory game to improve motivation and performance. In Proceedings of the 2012 38th Annual Northeast Bioengineering Conference (NEBEC), Philadelphia, PA, USA, 16–18 March 2012; pp. 35–36.

- Kaplan, A.Y.; Shishkin, S.L.; Ganin, I.P.; Basyul, I.A.; Zhigalov, A.Y. Adapting the P300-Based Brain–Computer Interface for Gaming: A Review. IEEE Trans. Comput. Intell. AI Games 2013, 5, 141–149.

- Pires, G.; Torres, M.; Casaleiro, N.; Nunes, U.; Castelo-Branco, M. Playing Tetris with non-invasive BCI. In Proceedings of the 2011 IEEE 1st International Conference on Serious Games and Applications for Health (SeGAH), Braga, Portugal, 16–18 November 2011; pp. 1–6.

- Liao, L.-D.; Chen, C.-Y.; Wang, I.-J.; Chen, S.-F.; Li, S.-Y.; Chen, B.-W.; Chang, J.-Y.; Lin, C.-T. Gaming control using a wearable and wireless EEG-based brain-computer interface device with novel dry foam-based sensors. J. Neuroeng. Rehabil. 2012, 9, 5.

- Edlinger, G.; Guger, C. Social Environments, Mixed Communication and Goal-Oriented Control Application Using a Brain-Computer Interface. In Universal Access in Human-Computer Interaction. Users Diversity; Springer: Berlin/Heidelberg, Germany, 2011; pp. 545–554.

- Gürkök, H. Mind the Sheep! User Experience Evaluation & Brain-Computer Interface Games; University of Twente: Enschede, The Netherlands, 2012.

- Congedo, M.; Goyat, M.; Tarrin, N.; Ionescu, G.; Varnet, L.; Rivet, B.; Phlypo, R.; Jrad, N.; Acquadro, M.; Jutten, C. “Brain Invaders”: A prototype of an open-source P300-based video game working with the OpenViBE platform. In Proceedings of the 5th International Brain-Computer Interface Conference 2011 (BCI 2011), Styria, Austria, 22–24 September 2011; pp. 280–283.

- Ganin, I.P.; Shishkin, S.L.; Kaplan, A.Y. A P300-based Brain-Computer Interface with Stimuli on Moving Objects: Four-Session Single-Trial and Triple-Trial Tests with a Game-Like Task Design. PLoS ONE 2013, 8, e77755.

- Wolpaw, J.; Wolpaw, E.W. Brain-Computer Interfaces: Principles and Practice; Oxford University Press: New York, NY, USA, 2012; ISBN 978-0-19-538885-5.

- Zhang, Y.; Xu, P.; Liu, T.; Hu, J.; Zhang, R.; Yao, D. Multiple Frequencies Sequential Coding for SSVEP-Based Brain-Computer Interface. PLoS ONE 2012, 7, e29519.

- Sepulveda, F. Brain-actuated Control of Robot Navigation. In Advances in Robot Navigation; IntechOpen: London, UK, 2011; Volume 8, ISBN 978-953-307-346-0.

- Fisher, R.S.; Harding, G.; Erba, G.; Barkley, G.L.; Wilkins, A.; Epilepsy Foundation of America Working Group. Photic- and pattern-induced seizures: A review for the Epilepsy Foundation of America Working Group. Epilepsia 2005, 46, 1426–1441.

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

- Vidal, J.J. Real-time detection of brain events in EEG. Proc. IEEE 1977, 65, 633–641.

- Lotte, F.; Nam, C.S.; Nijholt, A. Introduction: Evolution of Brain-Computer Interfaces; Taylor & Francis (CRC Press): Abingdon-on-Thames, UK, 2018; pp. 1–11. ISBN 978-1-4987-7343-0.

- Vidal, J.J. Toward Direct Brain-Computer Communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180.

- Squires, N.K.; Squires, K.C.; Hillyard, S.A. Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalogr. Clin. Neurophysiol. 1975, 38, 387–401.

- Guger, C.; Daban, S.; Sellers, E.; Holzner, C.; Krausz, G.; Carabalona, R.; Gramatica, F.; Edlinger, G. How many people are able to control a P300-based brain-computer interface (BCI)? Neurosci. Lett. 2009, 462, 94–98.

- Congedo, M. EEG Source Analysis, Habilitation à Diriger des Recherches; Université de Grenoble: Grenoble, France, 2013.

- Barachant, A.; Bonnet, S.; Congedo, M.; Jutten, C. Multiclass brain-computer interface classification by Riemannian geometry. IEEE Trans. Biomed. Eng. 2012, 59, 920–928.

- Barachant, A.; Congedo, M. A Plug&Play P300 BCI Using Information Geometry. ArXiv14090107 Cs Stat. August 2014. Available online: http://arxiv.org/abs/1409.0107 (accessed on 27 April 2017).

- Congedo, M.; Barachant, A.; Bhatia, R. Riemannian geometry for EEG-based brain-computer interfaces; a primer and a review. Brain-Comput. Interfaces 2017, 4, 155–174.

- Marshall, D.; Coyle, D.; Wilson, S.; Callaghan, M. Games, Gameplay, and BCI: The State of the Art. IEEE Trans. Comput. Intell. AI Games 2013, 5, 82–99.