1. Introduction

Augmented Reality (AR) means enhancing the user’s perception “with additional, artificially generated sensory input to create a new experience including, but not restricted to, enhancing human vision by combining natural with digital offers” [

1]. Augmented Reality typically has three characteristics [

2]: (1) AR combines the virtual with the real world; (2) objects are registered from both the real and virtual world in one coordinate system; and (3) the interaction between the objects of both worlds is possible in real time.

Hands-on training is important for many disciplines and professions, such as medical workers, mechanics, technicians, electricians, engineers, sailors, pilots, and firefighters. In the past decade, AR has been increasingly employed for a number of training applications, such as medical education [

3], rehabilitation engineering [

4], automotive safety [

5], task assistance [

6], and manufacturing [

7].

For the successful adoption of AR-based training across different domains, one of the key factors is user satisfaction. User satisfaction is defined as a combination of different factors associated with the usage of the AR application and the associated delivery device [

8]. These factors include: a feeling of powerfulness and achievement; an efficient use of time, effort, and other resources; meaningful content; a better insight into the training environment; a natural interaction; a feeling of amazement; performance that exceeds expectations; playfulness; the invoking of positive feelings and pleasing memories; immersion and engagement; a transparent interaction; the feeling of participation in a community; a sense of privacy of the user’s content; inspiration, encouragement, and motivation; and, finally, artistic creativity [

8].

The main objective of this study was to test and observe user satisfaction with AR applications and AR glasses. The evaluation methods included questionnaires and interviews. The AR app used in this evaluation has two parts: in one, an expert records his or her experience in the workplace; in the other, novices train on work-related procedures using these recordings. In this study, we evaluated the following research hypotheses: that experts and students are satisfied with the prototype application, that the application can increase interest in learning new skills, and that users find the application easy to use.

The rest of this paper is organized as follows. First, we turn to the state of the art, summarizing what the research has found so far with respect to AR user interaction, AR user satisfaction, and questionnaires used for evaluating user satisfaction. Next, the AR app used in the trials is described. The research methodology is then presented, together with a summary of the participants, devices, design of trial tasks, and evaluation methods. Finally, the findings and results are presented, followed by the discussion and conclusion.

2. State of the Art

2.1. AR User Interaction

AR technologies provide a different user experience than that of, for example, mobile phone apps. The user interacts with the surrounding real world, combining inputs from the environment with digital augmentations. Popular examples include PokemonGO and SnapChat. These types of apps certainly brought the term “augmented reality” into the spotlight [

9]. With the advent of consumer-grade AR glasses, different types of AR user interactions are becoming necessary. For example, a user who is wearing Microsoft HoloLens can communicate diagrams and other types of graphics directly embedded into the environment to a different, remote user (see

Figure 1).

2.2. AR User Satisfaction and Questionnaires for Evaluating User Satisfaction

AR technology has evolved from offline to online, from static devices to mobile devices, and from desktop and mobile to wearable devices [

11]. Consequently, with AR development over the past decade or so, special attention has been drawn to the maximization of AR user satisfaction. AR user satisfaction is dependent on both the design of the user interface (UI) and the choice of the AR hardware. Personalization of AR glasses can lead to greater AR user satisfaction [

12]. AR apps designed for a good user experience result in a more satisfied AR user overall. This applies to AR navigation apps, AR health apps, AR education apps, and certain AR smart glasses games [

13].

There are several concepts and subjective measures for evaluating the user experience of AR services. With regard to the user, satisfaction questionnaires are common tools used to evaluate a user’s experience. One such tool, the Questionnaire for User Interaction Satisfaction (QUIS), is designed to assess users’ subjective satisfaction with specific aspects of the human–computer interface [

14]. QUIS was developed to address the reliability and validity problems found in earlier satisfaction measurements, and its results facilitate new developments. The measure is therefore highly reliable across many types of interfaces.

QUIS consists of a demographic questionnaire, a six-scale measure of overall system satisfaction, and hierarchically organized measures. The measures include the following specific interface factors [

14]: screen factors, terminology and system feedback, learning factors, system capabilities, technical manuals, online tutorials, multimedia, teleconferencing, and software installation. Each area is measured by a seven-point scale according to the user’s overall satisfaction with the interface and the above factors [

14].

3. The AR Application

The AR application consists of two modes: recorder and player. This AR application is part of the work of the WEKIT (Wearable Experience for Knowledge Intensive Training) project.

The recorder is designed for capturing an expert’s workplace experience and combining it with technical documentation associated with a given scenario. The player is used to reenact the scenario to verify the recordings and is usually employed to train a novice for the scenario.

To capture an expert’s experience, a set of transfer mechanisms was defined by Limbu et al. [

15]. The so-called transfer mechanisms allow us to map the key aspects of an expert’s performance to low-level data and subsequent sensors. For more details on the different sensor components and their integration, please see the work by Sharma et al. [

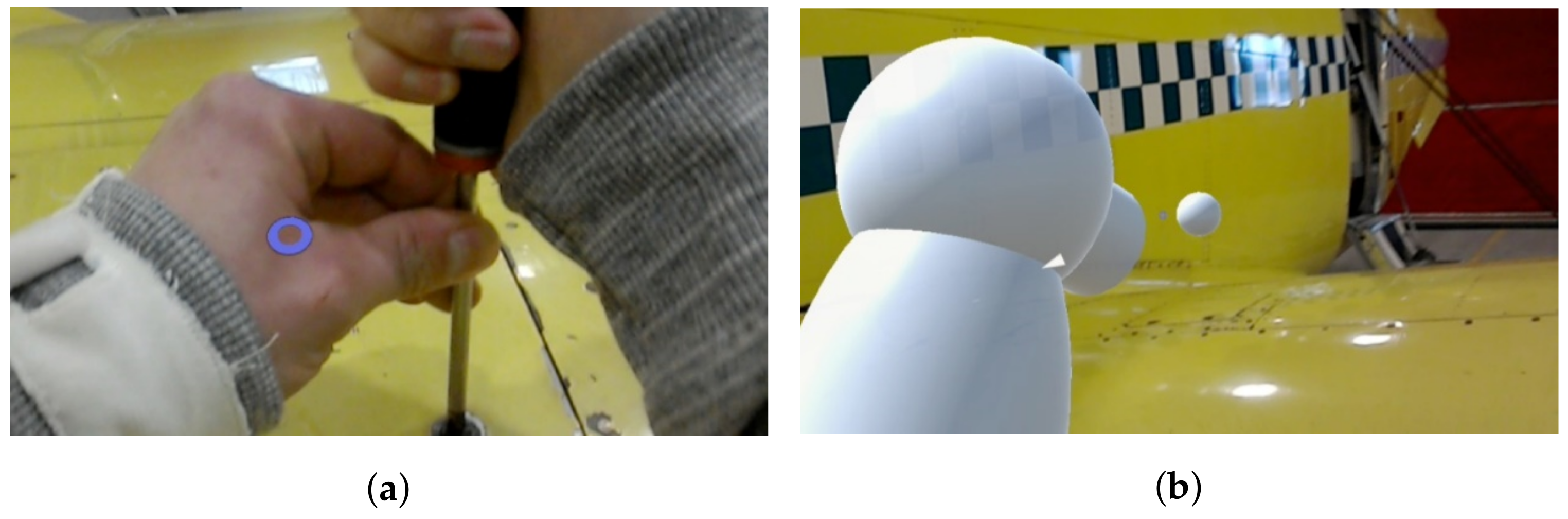

16]. The recorder (as shown in

Figure 2 [

17]) consists of a radial menu that allows us to select different options for capturing diverse annotations, such as pictures, videos, text annotations (for adding text information to different objects in the environment), audio, ghost hands (to capture the locations and movements of the user’s hands), and 3D models (useful for performing the task).

Trainers can use a so-called “ghost track” to record their own position and indoor movement, while at the same time recording voice explanations. When such a recording is replayed to the trainees, the holographic “ghost” representation of the expert provides more intuitive guidance on where to be, where to focus, and what to do than merely reading about the task to be learned in a manual using text and illustration.

Figure 3 shows an example of such a ghost track recording and replay for an aircraft maintenance task. The app recorded the expert while he or she was maintaining the aircraft (

Figure 3a [

18]). After recording, in the replay, as shown in

Figure 3b [

18], we can see a representation of the expert’s position and his or her hand position (represented by the white sphere).

The player is the mode designed for trainees to learn how to perform procedural operations (a kind of “do-torial” mode). The app executes AR learning experience models (IEEE Standards Association, working group P1589) and thus allows loading different learning and training activities. Activities can be transferred from device to device as well as from place to place, using a calibration marker to recalculate the relative positions of all points of interest, while utilizing 3D environmental mapping to provide stable projections.

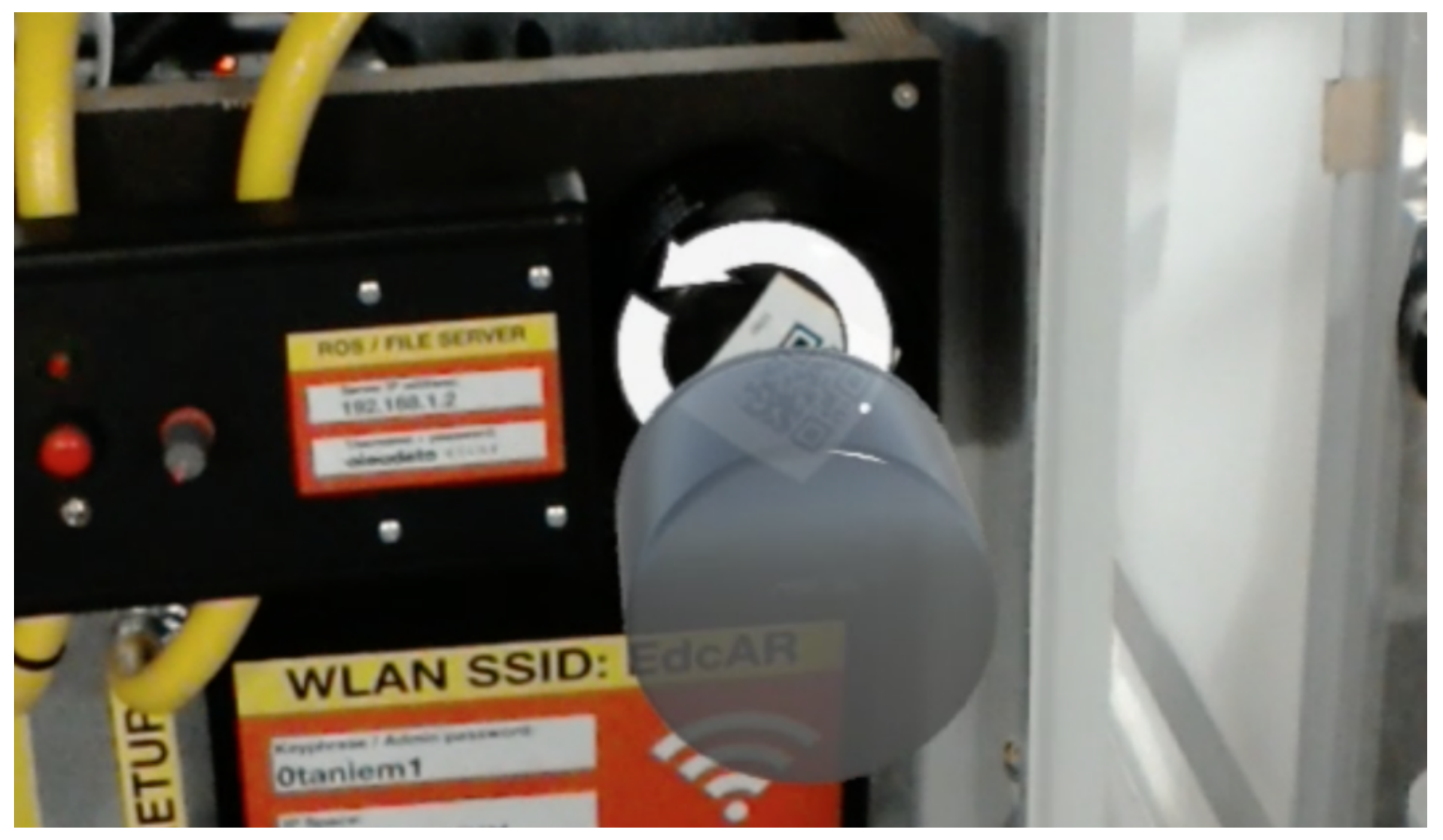

The WEKIT player starting screen is shown in

Figure 4 [

17]. Once the task starts, the first action step and its associated augmentations are shown on the smart glasses display. From the perspective of the users, this typically means that the visual annotations overlay onto their unimpeded real-world view (optical see-through). Step by step, they guide the user through the learning task at hand. Gesture commands, voice commands, and the hardware clicker are all available when using the app.

Figure 5 [

19] shows an example of the WEKIT player in action. When the sensors on the HoloLens detect the particular tangible object, the virtual button is displayed in front of the trainee, while instructions on handling and movement are given at the same time.

4. Research Design/Experiment Methodology

4.1. Participants

To evaluate satisfaction with the user interaction and the smart glasses user experience, the WEKIT application was deployed in three different pilot testing scenarios: aviation, medical imaging, and space. In total, 142 people participated in the three scenarios: 55 in aviation, 48 in medical imaging, and 39 in space. In the experiments, the test population was divided into two main groups: experts and students. A total of 47 experts (8 females and 39 males) with a high level of technological competency in their respective fields were recruited. A total of 95 learners (23 females and 72 males) from the three different fields voluntarily participated in the trials. The majority of the participants (68) were in the 18–24 age group, followed by 48 participants in the 25–34 age group. Most of the participants had moderate or better computer knowledge and Internet knowledge, expressed on a five-point Likert scale (very poor, poor, moderate, good, and very good). All participants gave written consent for their participation in the trials.

4.2. Material and Apparatus

The trial used the Microsoft HoloLens as wearable AR glasses for assessing the user’s satisfaction with AR training. There are two parts in the WEKIT technology platform [

20] deployed on HoloLens. One is a recorder for capturing expert experience and the other is a player for presenting the expert’s experience to the trainees. During the trial, all interactions and manipulations were done using gestures and voice commands only.

4.3. Trial Design/Task

The trial tasks were separated into three different areas, as mentioned in

Section 4.1. Tasks in the aeronautics use case were performed at Lufttransport, Norway. The scenario used for the aeronautics use case was a pre-flight inspection consisting of checking and securing different items such as baggage, exits, and locks, and checking the status of components such as landing gears, brakes, engine switches, battery, and fuel. The experts comprised maintenance apprentices, skilled workers (mechanics), and technicians working on base maintenance at Lufttransport. The novice group comprised student volunteers from UiT The Arctic University of Norway [

18].

Figure 6 shows a novice engaging in the pre-flight inspection task. Experts had been using the different types of annotations to create the required instruction for the training procedure, which then was provided to the trainee in the player mode of the AR app. The novice followed the instructions in order to complete the task in the cockpit.

The pre-flight inspection scenario consisted of the steps shown in

Table 1.

The medical task involved imaging and diagnostic workers and was conducted at EBIT (Esaote’s Healthcare IT Company) in Genoa, Italy [

22]. This task was for training medical students and radiologist apprentices on using MyLab8, an ultrasound machine produced by ESAOTE [

23]. Similar to the trial at Lufttransport, the users executed the steps of the procedure using the player mode of the application. The scenario for the medical use case was to perform a particular ultrasound examination to analyze a patient’s condition. The patient was a paid actor. During the task, the novice doctors needed to combine data from different sources in order to arrive at the correct diagnosis. As for the holographic training instruction, the guidance was set up for the player mode again using experts, adding the step-by-step description needed to guide the trainee through the full scanning and assessment procedure. The novice doctors then wore the HoloLenses and tried to perform the examination. The tasks are shown in

Table 2. In

Figure 7, we can see a novice performing a task by positioning the probe in the target direction and taking measurements using the player application.

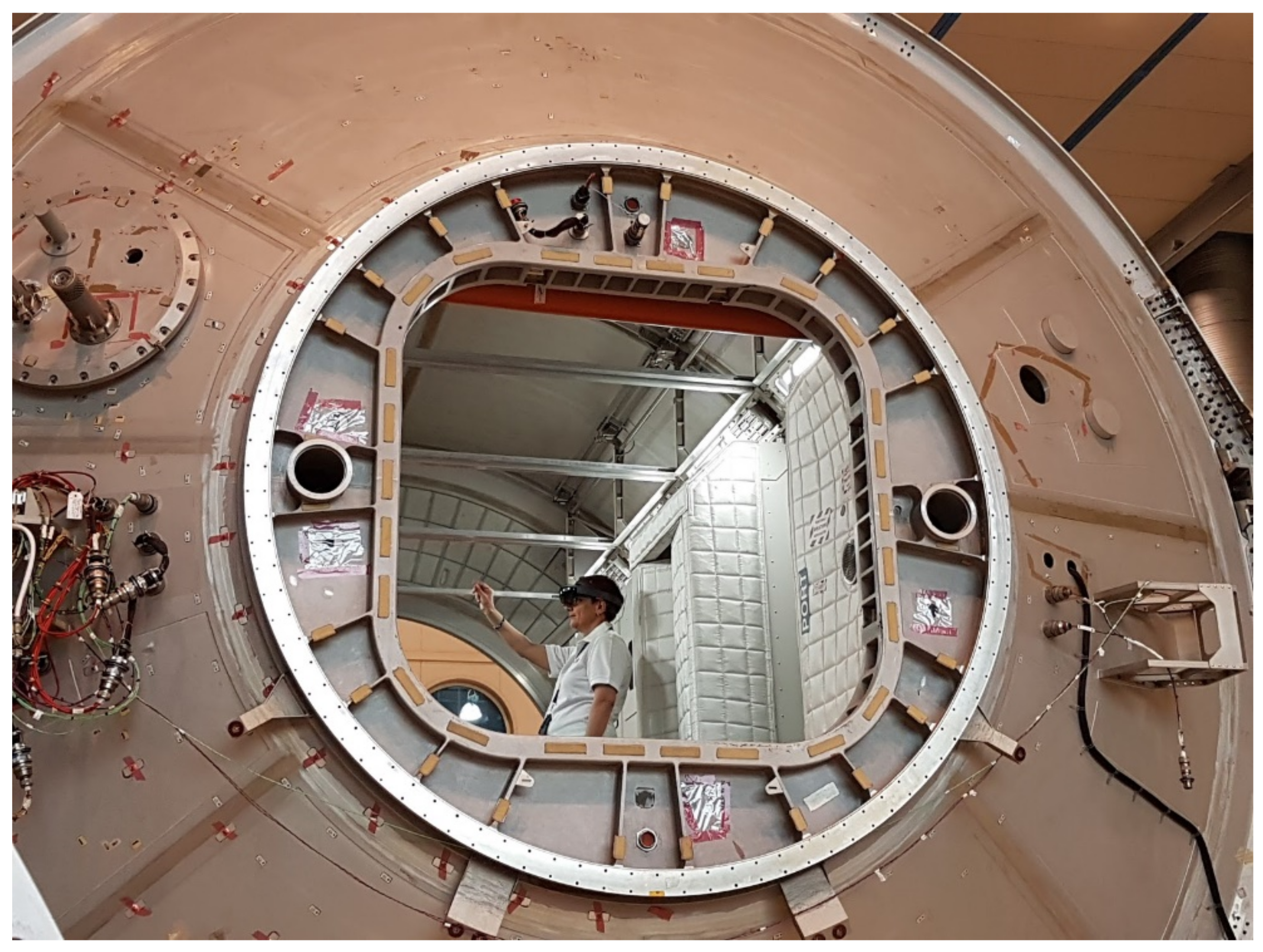

The space task was conducted at the facilities of ALTEC in Turin, Italy, and involved training astronauts on how to install a Temporary Stowage Rack (TSR). The TSR installation is a procedure that astronauts have to perform on the International Space Station (ISS) [

24]. Similar to the trials at the other two organizations, experts designed the training scenario, while a larger number of trainees then executed the scenario on the player application. The evaluation of the expert’s experience was conducted using the recorder mode of the app as well as the player, while the trainees used only the player mode. The steps for this procedure are as follows. First, trainees were asked to scan the working area to create the 3D model of the environment, and then to identify the locations of the six seat track studs on the structure and the positions of the ball bearing and brackets. Next, they were instructed to fix the six studs in specific locations. Finally, they were asked to extract the TSR, deploy it, and fix it to the correct places. The novices performed the task based on the recorded content.

Table 3 shows the details of the steps. In

Figure 8, we can see a participant of the trials performing a task in a replica module of the International Space Station.

4.4. Smart Glasses User Satisfaction (SGUS)

The Smart Glasses User Satisfaction (SGUS) questionnaire was created for the WEKIT trials. It is a tool designed to assess users’ subjective satisfaction with smart glasses. SGUS is a method and measure to scrutinize aspects, such as an enhanced perception of the environment, interaction with the augmented environment, implications of location and object awareness, the user-created AR content, and the new AR features that users typically use [

8]. The general objective of the questionnaire is to understand the potential end users’ central expectations of AR services with smart glasses, especially from an experiential point of view [

8]. In this study, the smart glasses used for the different use cases were Microsoft HoloLens. SGUS measures subjective satisfaction on the basis of different features associated with user satisfaction, such as the content and interaction with the content. SGUS is based on evaluation criteria for web-based learning [

25] and statements evaluating the user experience of mobile augmented reality services [

8]. Some of the items from the table “Evaluation criteria for web-based learning—a framework” [

25] and table “Examples of formative subjective statements with regard to the value and overall goodness of the service in terms of the UX category in question” [

8] were picked and modified. SGUS consists of 11 items (statements) on a seven-point Likert scale (1–7) [

18]. The 11 statements include three categories of evaluation criteria, which are general interface usability criteria, AR interaction-specific criteria for an educational AR app, and learner-centered effective learning [

25].

4.5. Questionnaire for User Interaction Satisfaction (QUIS)

The Questionnaire for User Interaction Satisfaction (QUIS) measures subjective satisfaction with specific aspects of the interface and interaction between the user and the AR application [

26]. In this study, QUIS was modified for AR glasses, i.e., HoloLens. There are five sections in the “User Evaluation of an Interactive Computer System” (see

Appendix A) [

26]. We picked some items from this questionnaire for our study: all the questions in the

overall reactions to the software section; No. 44 and 46 in

screen section; No. 50 and 51 in

terminology and system information; No. 54, 55, and 57 in

learning section; and No. 60 and 61 in

system capabilities section. All of these were used directly, except No. 54, which was adapted to AR glasses for this study. The rest of the items were not applicable to our setting and were therefore not used. Hence, a questionnaire with 15 items was used. To maintain consistency with the survey in other sections, each item was mapped to a numeric value of 1–7 instead of the nine-point scale.

4.6. Procedure

As most participants had no experience with AR glasses, at the beginning of the trial they were asked to familiarize themselves with the AR glasses, i.e., HoloLens. To do this, gesture training with HoloLens was done before they started using the application. The application comprised a scenario that the participants had to complete in a particular use case setting. The content of the application was generated by experts in that specific use case. After the participants completed all the tasks, they were provided with the QUIS and SGUS questionnaires to complete.

5. Results/Findings

5.1. Descriptive Statistics

In this section, we report on descriptive statistics for the smart glasses user interaction and the interaction satisfaction. We organize the findings alongside the investigation of eight hypotheses, with the summary of these shown in

Table 4.

Hypothesis 1. Does gender matter? In Science and Engineering, gender is not balanced and there are fewer women than men [27]. Gender stereotypes can affect use of established technologies. We therefore investigated whether the influence on user satisfaction of these new media will be moderated by gender.

Hypothesis 2. Does age matter? Studies imply that younger people embrace new technologies more easily [28]. Since we used AR glasses and applications for training, we wanted to know whether age affects user satisfaction.

Hypothesis 3. Are experts more tech savvy? It is likely that experts have more experience with technology applications, as in general they also have more domain-specific knowledge and skills. We assumed that they would be more able to grasp the app concept, thus be more satisfied with the interaction. The novices, however, may have less knowledge and skills, hence, may find the app difficult to use.

Hypothesis 4. Does education matter? Higher levels of education go hand in hand with higher levels of ICT skills. It is justified to hypothesize that the educational level predicts satisfaction.

Hypothesis 5. Does computer knowledge matter? Higher levels of ICT and media skills typically involve transfer skills. The AR smart glasses headset used, Microsoft HoloLens, is a stand-alone device, and basic computer knowledge is needed to use it. Those with better computer knowledge might find it easier to use and hence give a higher score in terms of user satisfaction.

Hypothesis 6. Does Internet knowledge matter? In analogy to computer skills, one can expect Internet skills to influence the user satisfaction levels in a positive manner.

Hypothesis 7. Are there differences in satisfaction levels between the participants of the three test-beds? The trials involved three different learning tasks, in three different environments, with three different groups of participants. As all three trials are about training a particular procedure, we expected no differences across test-beds.

Hypothesis 8. Is there any interaction between the above-mentioned factors?

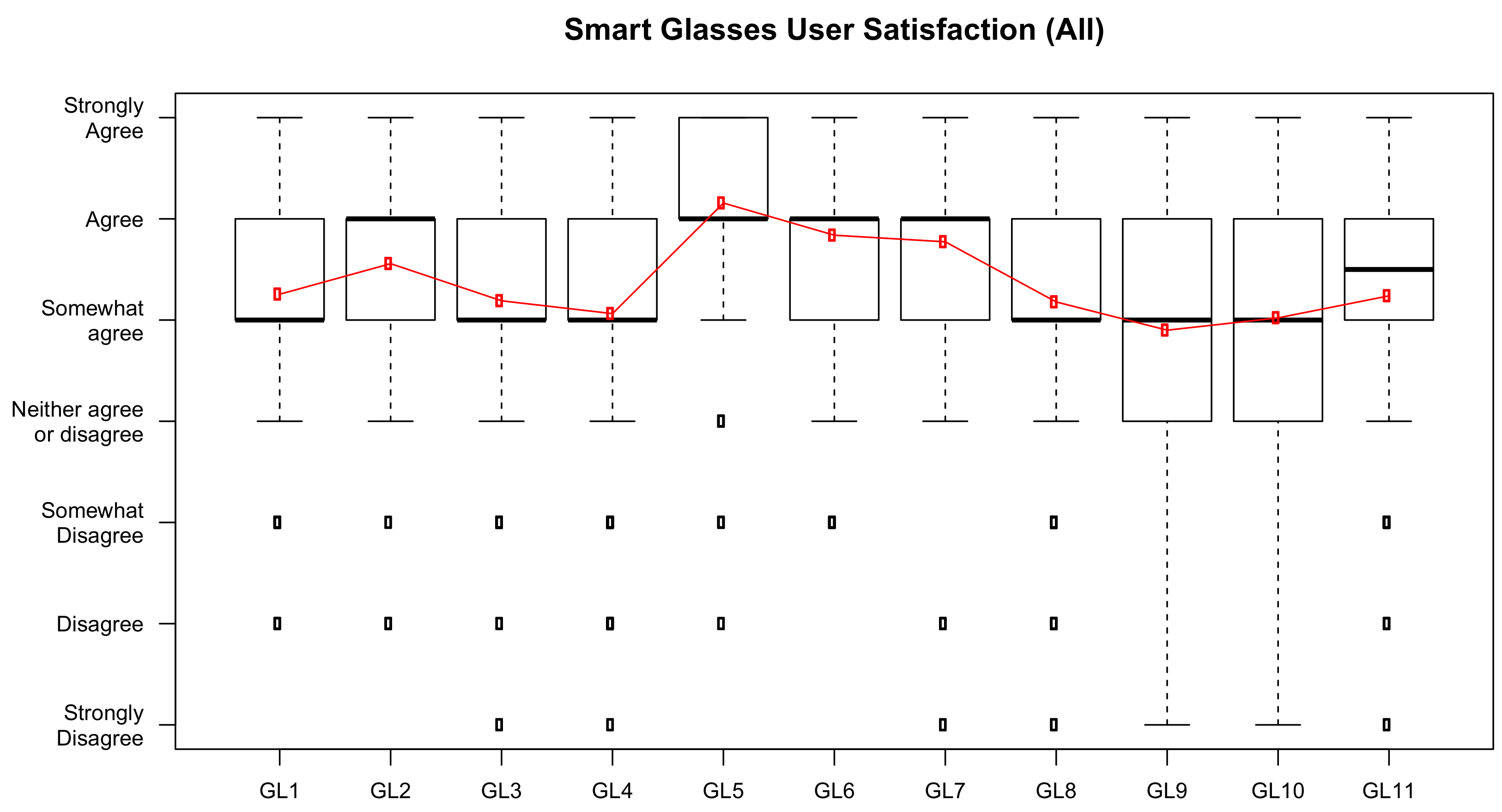

5.1.1. SGUS

As mentioned before, SGUS has 11 items. The summation of the score for the 11 items is the SGUS score. As shown in

Table 5, we provide the following data: n (number of participants), mean, standard deviation, minimum value, Q1 (the first quartile: “middle” value in the first half of the rank-ordered data set), median, Q3 (the third quartile: “middle” value in the second half of the rank-ordered data set), and maximum value for the variables gender, education level, roles, and organizations. Based on these results, it is clear that the mean scores are similar across the different levels associated with the variables.
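As an illustration of how such a summary can be obtained, the following minimal Python sketch sums 11 item ratings into an SGUS score and computes the descriptive statistics listed above. The function names and the ratings are hypothetical and are not data from the trials; for an odd number of scores the sketch excludes the median from both halves when computing Q1 and Q3, which is one common convention and an assumption here.

```python
from statistics import mean, median, stdev

def sgus_score(item_ratings):
    """Sum 11 seven-point ratings into one SGUS score (possible range 11-77)."""
    if len(item_ratings) != 11:
        raise ValueError("SGUS has exactly 11 items")
    if any(not 1 <= r <= 7 for r in item_ratings):
        raise ValueError("each rating must lie on the 1-7 scale")
    return sum(item_ratings)

def describe(scores):
    """Return n, mean, sd, min, Q1, median, Q3, and max for a list of scores."""
    ordered = sorted(scores)
    n = len(ordered)
    half = n // 2
    lower = ordered[:half]             # first half of the rank-ordered data
    upper = ordered[half + n % 2:]     # second half (median skipped if n is odd)
    return {
        "n": n,
        "mean": mean(ordered),
        "sd": stdev(ordered),          # sample standard deviation
        "min": ordered[0],
        "q1": median(lower),
        "median": median(ordered),
        "q3": median(upper),
        "max": ordered[-1],
    }

# Hypothetical ratings from four participants (illustration only).
responses = [
    [6, 5, 6, 4, 7, 6, 6, 5, 4, 5, 5],
    [5, 5, 4, 4, 6, 5, 6, 4, 3, 4, 4],
    [7, 6, 6, 5, 7, 6, 7, 5, 5, 6, 5],
    [4, 5, 5, 3, 6, 5, 5, 4, 4, 4, 4],
]
scores = [sgus_score(r) for r in responses]
summary = describe(scores)
```

The same `describe` helper could be applied separately to each level of a variable (for example, to experts and to students) to reproduce the layout of a per-group summary table.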

5.1.2. QUIS

Similarly, the overall Questionnaire for User Interaction Satisfaction (QUIS) score was calculated by summation of the score for the 15 QUIS items. Summary data for all questions in QUIS are presented in

Table 6. The 15 items were designed independently from each other. These items aim to investigate the satisfaction of users with different aspects of the interface, including usability and user experience in using AR applications.

5.2. Correlation

In this section, we discuss correlation for SGUS and correlation for QUIS.

5.2.1. Correlation of SGUS

Spearman’s correlation coefficient measures the strength and direction of association between two ranked variables in the range [−1, 1]. Based on the 11 items, the results of Spearman’s rank correlation are shown in

Table 7: the first value of each row represents Spearman’s correlation coefficient, and the second value of each row represents the p value. It can be seen that almost all items are statistically significant (

p < 0.05) and have a low positive correlation. This suggests that the items are only weakly related, each capturing a largely distinct aspect of satisfaction.
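To make the rank correlation concrete, the sketch below computes Spearman’s coefficient for two questionnaire items as the Pearson correlation of their ranks, with tied ratings receiving the average of their ranks. It is a plain-Python illustration rather than the software used in the study; the function names and the ratings are hypothetical, and the p value reported alongside each coefficient is not computed here.

```python
from math import sqrt

def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1       # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if x or y is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical seven-point ratings of two items by six participants.
item_a = [5, 6, 4, 7, 5, 6]
item_b = [4, 6, 5, 6, 5, 7]
rho = spearman(item_a, item_b)
```

Because seven-point ratings produce many ties, the average-rank treatment matters in practice; the shortcut formula based on squared rank differences is exact only when there are no ties.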

In the study of SGUS, each of the items investigates a different aspect of the user experience. For the analysis, the overall averages for all items were calculated.

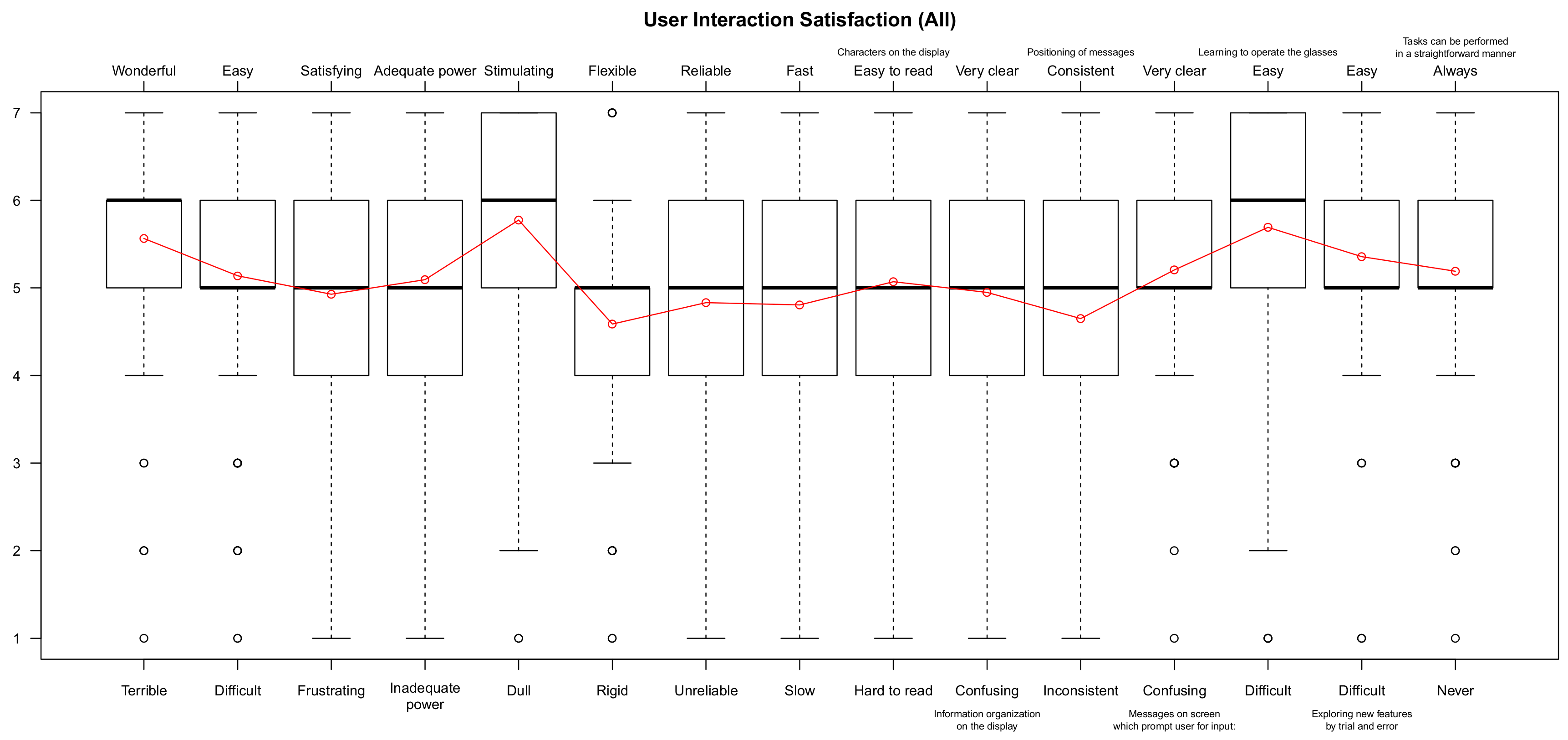

Figure 9 shows the plot of the average score from individual items. The box in the plot depicts the answer of 50% of the participants, with the line in the middle indicating the median. The dotted lines span the 95% confidence interval. Outliers are depicted with black dots. The connected red dots indicate the medians. The results imply that most of the participants had a good conception of what is real and what is augmented when using AR-glasses (GL5). The participants indicated that the system and content helped them to accomplish the task quite well (GL7) and their attention was captivated in a positive way (GL6). The provided content was also seen as contextually meaningful (GL2). However, performing the task with AR glasses was experienced as less natural (GL9 and GL4), and following and understanding the task phases (GL8 and GL10–11) was not very easy [

18]. The results were very much in line across the three test-beds.

5.2.2. Correlation of QUIS

The correlation for QUIS is based on 15 items. The results of Spearman’s rank correlation are shown in

Table A1 (see

Appendix A). The values in the table have the same meaning as in

Table 7. The results are similar to those of SGUS; most of the items are statistically significant (

p < 0.05) and have a low positive correlation. This suggests that most of the items are only weakly related to one another.

In the study of QUIS, each of the items investigated different aspects of the user experience. For the analysis, the overall average from all items was calculated.

Figure 10 shows the plot of the average score from individual items, and the description of the plot is the same as that of the SGUS plot. The results imply that most of the participants agreed that learning to operate the AR glasses (QS13) was rather easy, and the overall enthusiasm towards the system (QS1 and QS5) seemed to be very positive. The characters on the screen were relatively easy to read (QS9). The means of QS3, QS4, QS6, QS7, and QS8 indicate that the system was experienced as rigid, unreliable, and slow, which may cause frustration [

18].

5.3. Analysis of Variance and Interaction Plots

The participants were described by seven factors: gender, age, role, education level, computer knowledge level, Internet knowledge level, and organization. Each factor had two levels, except for organization, which had three. Please note that none of the participants reported a poor or very poor Internet knowledge level. The following sections discuss the analysis of variance (ANOVA) of SGUS and of QUIS. In this ANOVA study, the SGUS and QUIS scores for using the application on the AR glasses were investigated with six independent variables: age distribution, gender, roles, highest level of education, organization, and computer knowledge. There were therefore six main effects and 57 interactions (all combinations of two or more of the six factors). We were interested in whether there is a relationship between the satisfaction levels (measured by the questionnaires) and these factors.
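The count of 57 interactions follows from counting every combination of two or more of the six factors. The short Python check below reproduces this arithmetic; the factor labels are shorthand for the variables named above.

```python
from math import comb

factors = ["age", "gender", "role", "education", "organization",
           "computer knowledge"]
k = len(factors)

# Number of interaction terms of each order (2 = two-factor, ..., 6 = six-factor).
by_order = {order: comb(k, order) for order in range(2, k + 1)}
total_interactions = sum(by_order.values())

# Equivalent closed form: all subsets, minus the empty set and the k main effects.
closed_form = 2 ** k - 1 - k
```

Of these terms, 15 are two-factor interactions, which are the ones examined in the interaction plots.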

5.3.1. ANOVA of SGUS

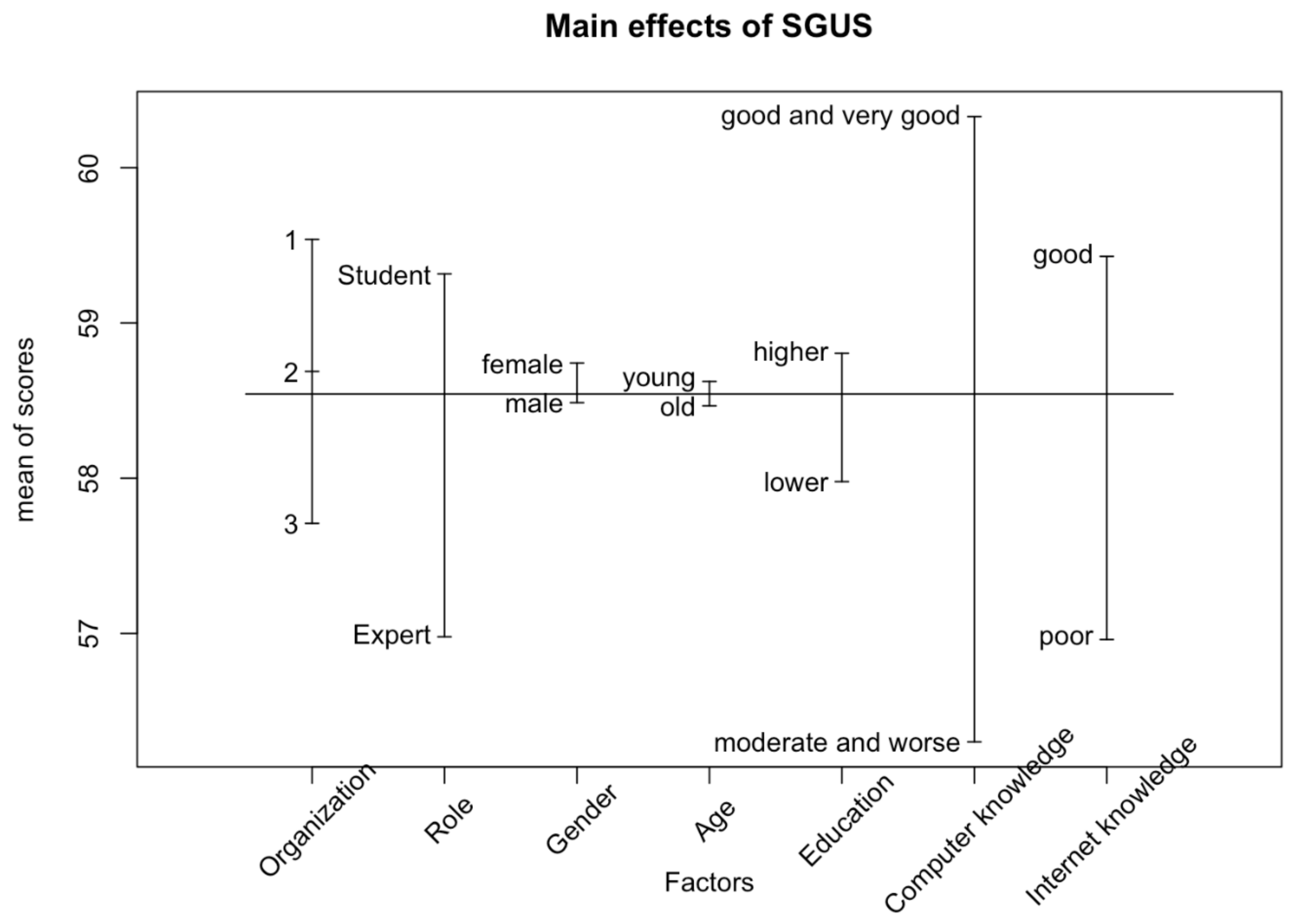

In this study, we investigated whether the age, gender, roles, computer knowledge level, or different organizations have an effect on the satisfaction of using AR glasses. To determine this, we needed to look at the simple main effects: the main effect of one independent variable (e.g., age) at each level of another independent variable (e.g., for students and for experts).

Figure 11 shows the main effects of the six factors. Participants with different computer knowledge levels have the greatest differences in the SGUS results. This means that the participants with good computer knowledge and poor computer knowledge gave different scores for user satisfaction. The results show that participants with good or very good computer knowledge were, in general, more satisfied with the smart glasses application, and there is a significant effect from computer knowledge levels (F value = 8.87,

p = 0.003). The result implies that the SGUS score was affected by the participants’ computer knowledge level.
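For readers less familiar with the F value, the following sketch shows how it is formed for a single factor: the variance between group means is divided by the variance within groups. This one-way version is a simplification of the multi-factor ANOVA reported here, the scores are hypothetical, and the p value (which requires the F distribution) is not computed.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [s for g in groups for s in g]
    grand_mean = sum(all_scores) / len(all_scores)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((s - m) ** 2
                    for g, m in zip(groups, group_means) for s in g)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Hypothetical SGUS scores for two computer knowledge levels (illustration only).
moderate_or_worse = [50, 52, 54]
good_or_better = [58, 60, 62]
f_value, df_between, df_within = one_way_anova_f(
    [moderate_or_worse, good_or_better])
```

A large F value indicates that the difference between the group means is large relative to the spread of scores inside each group.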

Table 8 shows the summary results of the linear model of the independent variables. The estimate for the model intercept is 54.688 and the coefficient measuring the slope of the relationship with computer knowledge level is 4.324. There is strong evidence that this coefficient is different from zero: as the computer skill level increases, so does the satisfaction. The standard errors of these estimates are also provided in the coefficients table. In the multiple regression model, only 8.8% of the variance in the SGUS scores is explained by the factors together (multiple R-squared is 0.088), and the model as a whole is not statistically significant at the 0.05 level (overall p value is 0.08).
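The quantities in such a summary (intercept, slope, and R-squared) can be illustrated with a least-squares fit on a single predictor. The sketch below assumes, for illustration only, that computer knowledge is coded as 0 (moderate or worse) and 1 (good or better); the data are hypothetical, and the model reported above additionally contains the other factors.

```python
def fit_line(x, y):
    """Least-squares fit y = intercept + slope * x; returns (intercept, slope, R^2)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # R^2 is the share of the total variance explained by the fitted line.
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    r_squared = 1 - ss_res / ss_tot
    return intercept, slope, r_squared

# Hypothetical coding and scores (illustration only).
knowledge = [0, 0, 0, 1, 1, 1]
sgus = [50, 52, 54, 58, 60, 62]
intercept, slope, r_squared = fit_line(knowledge, sgus)
```

With a 0/1 predictor, the intercept is the mean score of the group coded 0 and the slope is the difference between the two group means, which is one way to read a positive coefficient for computer knowledge level.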

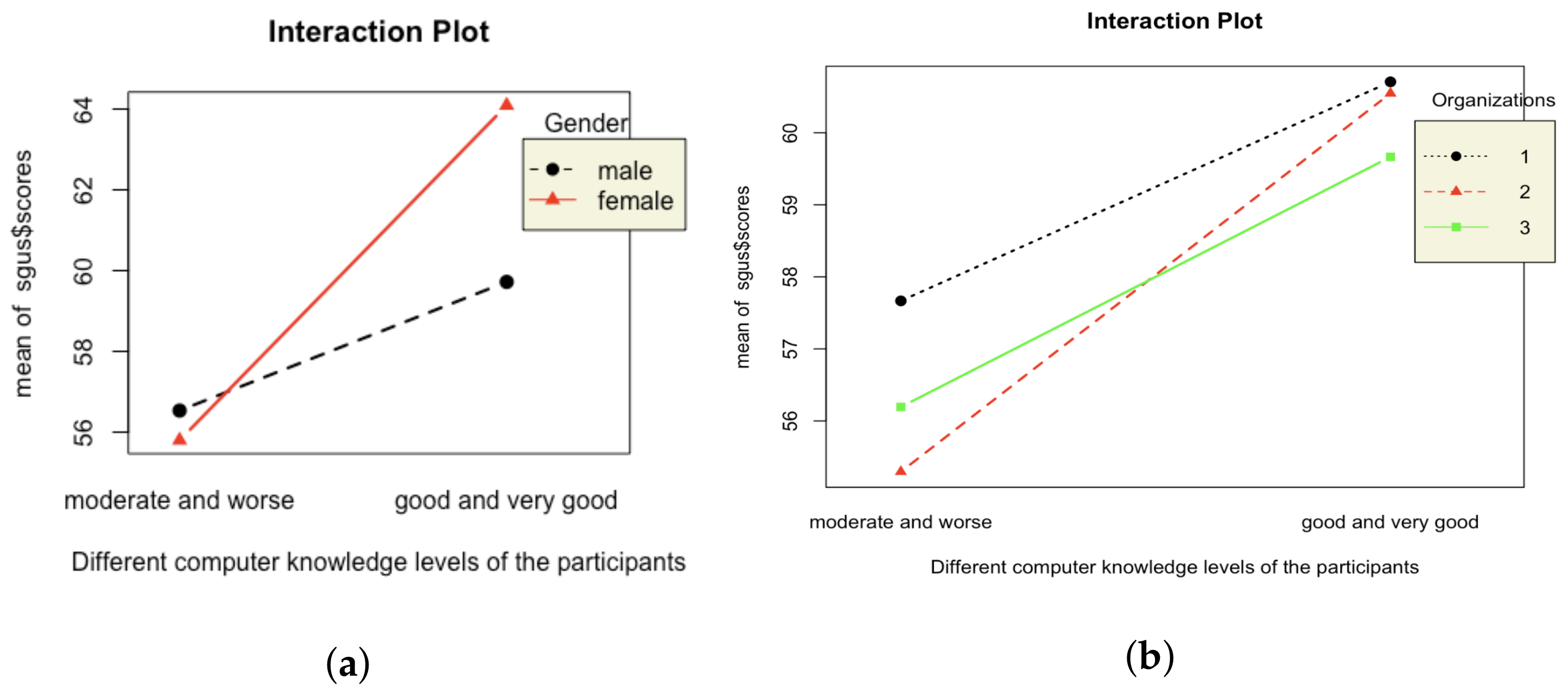

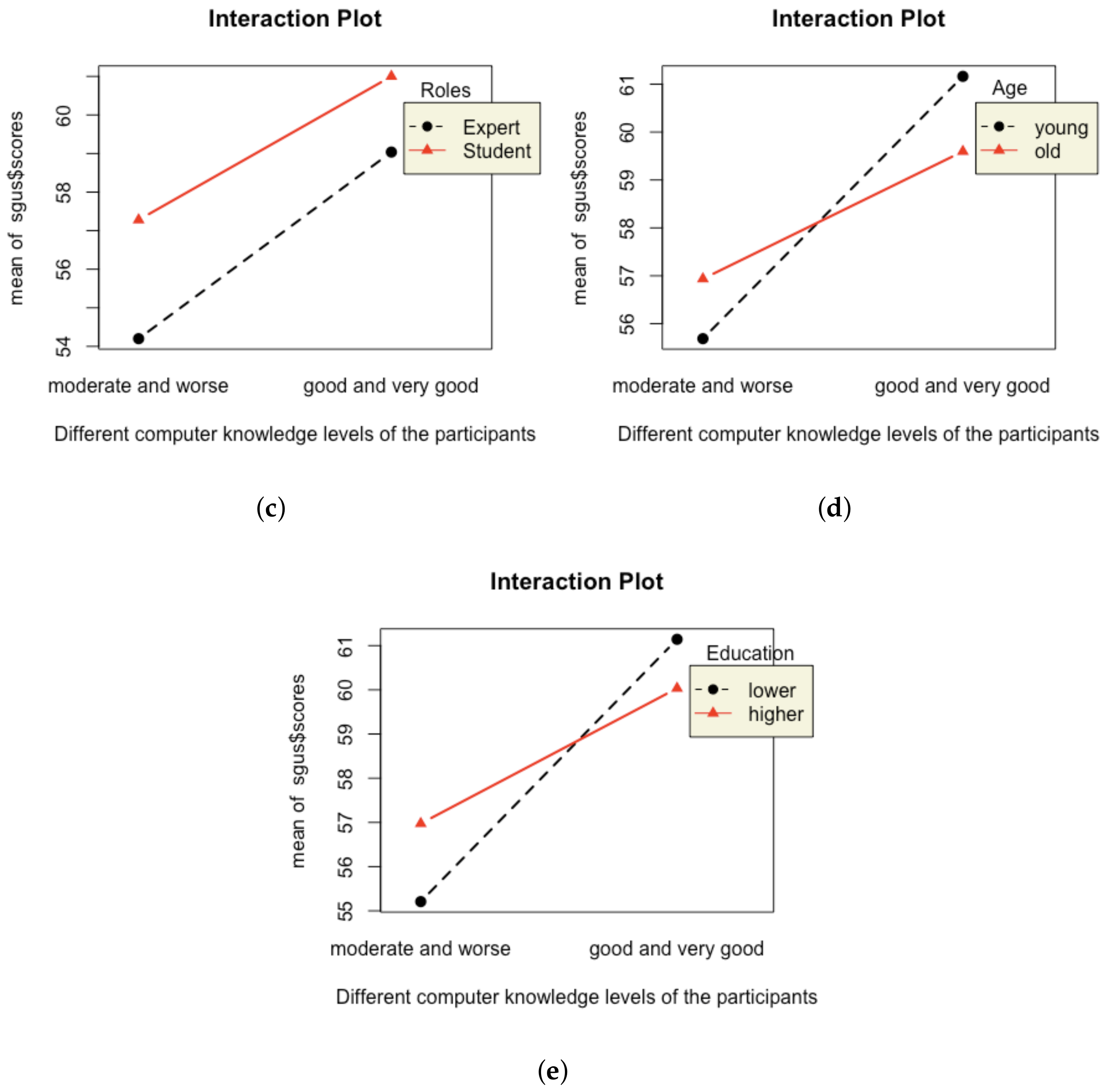

To investigate the interaction, we examined whether the SGUS score depends on an interaction between good computer knowledge and the other factors. The two-factor interaction plot is shown in

Figure 12. The following are the findings from the plot:

Female participants with good computer knowledge have a higher SGUS score than males with good computer knowledge; both females and males with moderate and worse computer knowledge have nearly the same, lower SGUS score (

Figure 12a).

Participants from medicine with good computer knowledge tended toward a higher SGUS score than participants from engineering, and there is no significant difference between them and the participants with good computer knowledge from astronautics and medicine (

Figure 12b).

There is no significant interaction between participants with different computer knowledge levels from astronautics and engineering (

Figure 12b).

There is no significant interaction between students and experts with different computer knowledge levels (

Figure 12c).

Participants younger than 25 years old with good computer knowledge tended toward a higher SGUS score than participants older than 25 years old; however, participants younger than 25 years old with a moderate and worse computer knowledge level tended toward a lower SGUS score (

Figure 12d).

Participants with secondary school or lower education level and good computer knowledge tended toward a higher SGUS score than participants with a bachelor’s or higher education level and good computer knowledge level. However, participants with secondary school or lower education level and moderate and worse computer knowledge tended toward a lower SGUS score than participants with a bachelor’s or higher education level and moderate and worse computer knowledge level (

Figure 12e).

From the result of the ANOVA table (

Table 9), there is insufficient evidence of statistical significance for two-factor interactions, since all

p values are higher than 0.05.
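The cell means behind a two-factor interaction plot, and a simple measure of how far the lines depart from parallel, can be sketched as follows. The records are hypothetical and merely mimic the pattern described for gender and computer knowledge; the function names are illustrative, and no significance test is performed.

```python
from collections import defaultdict

def cell_means(records):
    """Mean score for each (factor_a_level, factor_b_level) combination."""
    sums = defaultdict(lambda: [0.0, 0])
    for a, b, score in records:
        sums[(a, b)][0] += score
        sums[(a, b)][1] += 1
    return {cell: total / count for cell, (total, count) in sums.items()}

def interaction_contrast(means, a_levels, b_levels):
    """Difference of differences in a 2x2 layout; 0 means parallel lines."""
    (a0, a1), (b0, b1) = a_levels, b_levels
    effect_at_b1 = means[(a1, b1)] - means[(a0, b1)]
    effect_at_b0 = means[(a1, b0)] - means[(a0, b0)]
    return effect_at_b1 - effect_at_b0

# Hypothetical (computer knowledge, gender, SGUS score) records.
records = [
    ("moderate or worse", "female", 52), ("moderate or worse", "female", 54),
    ("good or better", "female", 62), ("good or better", "female", 64),
    ("moderate or worse", "male", 52), ("moderate or worse", "male", 54),
    ("good or better", "male", 58), ("good or better", "male", 60),
]
means = cell_means(records)
contrast = interaction_contrast(
    means, ("moderate or worse", "good or better"), ("male", "female"))
```

A contrast near zero corresponds to roughly parallel lines in the plot; whether a nonzero contrast is statistically meaningful is what the interaction terms in the ANOVA table assess.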

5.3.2. ANOVA of QUIS

In this section, the effect of the six independent variables (age, gender, roles, education level, computer knowledge level, and different organizations) on user interaction satisfaction is reported. Satisfaction includes specific aspects of the interface, usability, and user experience of the AR application.

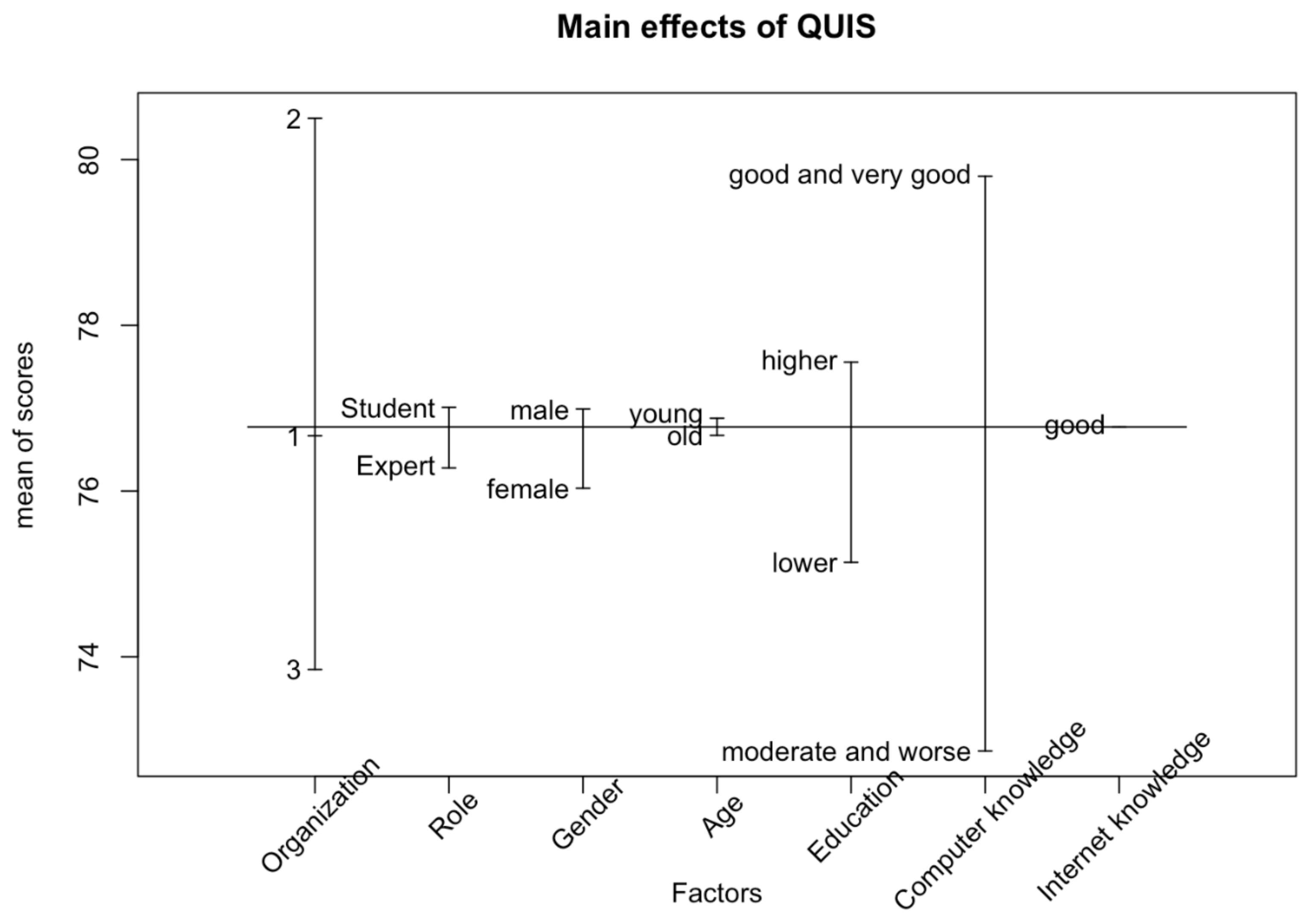

A total of 133 participants completed the questionnaire and were included in this part of the study. The simple main effects are shown in

Figure 13. The results obtained by using the ANOVA in

Table 10 indicate that the two-factor interaction of computer knowledge levels and organizations is not statistically significant, since all

p values are more than 0.05.

Table 10 also shows that the computer knowledge levels and different organizations have a significant effect on QUIS (

p value is 0.008 for computer knowledge levels and 0.041 for different organizations).

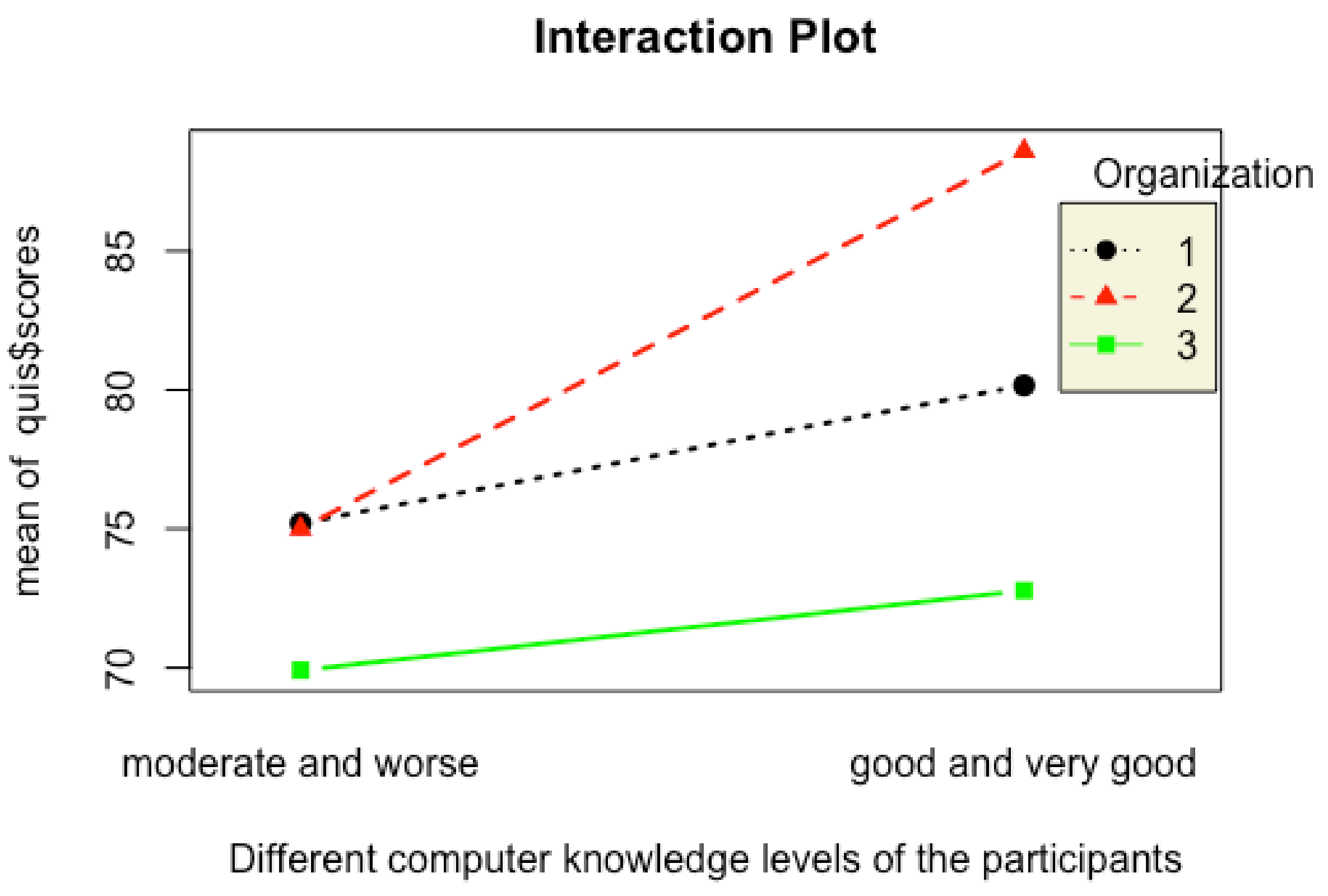

Figure 14 shows that in all three organizations, participants with moderate or worse computer knowledge levels gave lower scores than participants with good and very good computer knowledge levels. There are no significant interactions between them.

We selected the factors of organization and computer knowledge level to investigate the interaction between them, and the summary results of the linear model regression (see

Table 11) show that the estimate for the model intercept is 73.533, while there is no significant interaction between them. The information about the standard errors of these estimates is also provided in the coefficients table (

Table 11). In the multiple regression model, 10.6% of the variance in QUIS scores is explained by the factors together (multiple R-squared is 0.106). The model as a whole is statistically significant (overall

p value is 0.0133).

6. Discussion

This study established a set of norms to be used for evaluating satisfaction with AR glasses and AR applications. The questionnaire items are only weakly correlated with one another, both in SGUS and in QUIS. Each questionnaire item is designed to evaluate a specific aspect of satisfaction with the smart glasses and AR applications. From the mean scores of both questionnaires, we observed that most of the participants were satisfied with the AR glasses and the AR applications. It was found that the system and content helped the participants to accomplish the task quite well and their attention was captivated in a positive way. In other words, the results suggest that the user interface is well designed. The user sees “useful information” displayed next to each part.

The main factors age, gender, education level, roles of the participants, and organizations do not have significant effects on the satisfaction of using smart glasses and AR applications. However, computer/Internet knowledge level does influence user satisfaction. Participants who have better computer/Internet knowledge are more satisfied with the smart glasses and AR applications. There is no significant interaction among these factors. Since most participants have a moderate or better level of knowledge of computers and the Internet, it can be predicted that most educated people can easily accept smart glasses and AR applications. The summary of the findings is shown in

Table 12.

Based on the results associated with the eight hypotheses, we outline the following statements:

Statement 1. Based on the results, we could not identify any gender differences in user satisfaction. This could be a limitation of our experimental setup: because we asked for volunteers, we ended up mainly with people who were interested in the technology, which did not allow us to explore whether there would be any gender differences in the general population with respect to AR training satisfaction.

Statement 2. Our results suggest that user satisfaction is not influenced by age. A possible explanation is that the target group had no prior exposure to AR smart glasses. Younger people are typically more open to experimenting with emerging technologies in their home context, but without such prior exposure this age effect could not yet influence the picture.

Statement 3. The result for participant role (expert versus student) probably has to do with our applications. The recorder is the more complicated application and challenges experts in their interaction. Even though experts would usually be more technically savvy, in this case the findings probably reflect differences in the user-friendliness of the applications more than differences between the roles.

Statement 4. Only the space case had people in higher education. Most participants in the aviation test-bed came from upper secondary backgrounds. However, no differences were found in the impact of education level on user satisfaction. The differences may not be obvious in satisfaction levels, but, judging from observation during the trials, there were differences across test-beds in how long it took to explain the applications and their use. The application and the use cases enabled everyone, regardless of whether they had secondary or tertiary education, to use the app.

Statement 5. Computer knowledge possibly matters: Better computer knowledge can drive satisfaction with holographic applications. However, in self-assessment tests, users tend to overestimate their computer knowledge [29,30]. It is therefore also possible that user satisfaction levels are not influenced by computer knowledge. Nevertheless, existing knowledge still seems to be relevant. At the same time, this clearly indicates that the required support and assistance need to be provided in order to make the introduction of AR applications on smart glasses a success. Not everyone is a digital native.

Statement 6. Internet knowledge matters: Almost all participants in the trial claimed to have good Internet knowledge, and very few claimed to have poor Internet knowledge, so there was no chance to observe any differences.

Statement 7. There is no difference between the three test-beds in SGUS: We did not find significant differences between the three test-beds. This indicates that occupation does not have a direct influence on satisfaction with the AR glasses. Procedure-oriented training seems to be covered well. There are some differences between the three test-beds in QUIS. The medicine test-bed has the highest satisfaction with the AR app, while the engineering test-bed gave the lowest scores. The procedures of the tasks might affect satisfaction with the AR app.

Statement 8. There are no interaction effects for the QUIS results, but there are some interaction effects among the SGUS results. Young people with good computer knowledge are more satisfied with the AR glasses. People with lower education and good computer knowledge are more satisfied with the AR glasses than the others.

7. Conclusions

This study started from the observation that AR applications for hands-on training are scarce. As a first step toward incorporating recorded teaching activities into learning procedures, an AR application was developed for AR glasses. In this work, the Questionnaire for Smart Glasses User Satisfaction (SGUS) and the Questionnaire for User Interaction Satisfaction (QUIS) were investigated for augmented reality applications using Microsoft HoloLens.

The results of this study show that the approach is feasible. The experts wore the AR glasses to demonstrate the process, and their activities were recorded. The AR applications can help students learn the process. The results show that satisfaction with both teaching and learning is acceptable. They indicate that satisfaction increases when participants have higher computer knowledge levels. They also show that gender, age, education level, and role (student or expert) do not have a significant effect on user satisfaction.