Attention-Pool: 9-Ball Game Video Analytics with Object Attention and Temporal Context Gated Attention

Abstract

1. Introduction

1.1. Problem Statement and Motivation

1.2. Research Scope and Objectives

2. Related Work

3. Material and Methodology

3.1. Datasets

- Clear shots: 12,847 positive samples, 45,231 negative samples.

- Win conditions: 3,456 positive samples, 54,622 negative samples.

- Potted balls: Multi-class labels with counts ranging from 0 to 9 balls.
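The two binary tasks are imbalanced, and the arithmetic below makes the ratios explicit. From the counts above, it computes two things:

- the neg/pos ratio that is often used as the positive-class weight in a weighted binary cross-entropy loss;
- the accuracy that a trivial majority-class predictor would reach.

Using these ratios as loss weights is an assumption for illustration, because the text does not say whether the authors reweighted their loss. The names `COUNTS`, `positive_weight` and `majority_accuracy` are hypothetical.

```python
"""Class-imbalance arithmetic for the two binary tasks (counts from Section 3.1).

Treating neg/pos as a loss weight is an illustrative assumption, not a
training detail reported in the text.
"""

COUNTS = {
    "clear_shot": (12_847, 45_231),     # (positive, negative)
    "win_condition": (3_456, 54_622),
}


def positive_weight(pos: int, neg: int) -> float:
    """neg/pos: a common weight for the positive term of a weighted BCE loss."""
    return neg / pos


def majority_accuracy(pos: int, neg: int) -> float:
    """Accuracy of a trivial classifier that always predicts the larger class."""
    return max(pos, neg) / (pos + neg)


if __name__ == "__main__":
    for task, (pos, neg) in COUNTS.items():
        print(f"{task:14s} total={pos + neg:,} "
              f"pos_weight={positive_weight(pos, neg):.2f} "
              f"majority_acc={majority_accuracy(pos, neg):.1%}")
```

Always predicting "no win" would already be about 94% accurate on these overall counts, and always predicting "no clear shot" would be about 78% accurate. F1 and AUC are therefore the more discriminating measures for these tasks, assuming the test split preserves the overall class ratio.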

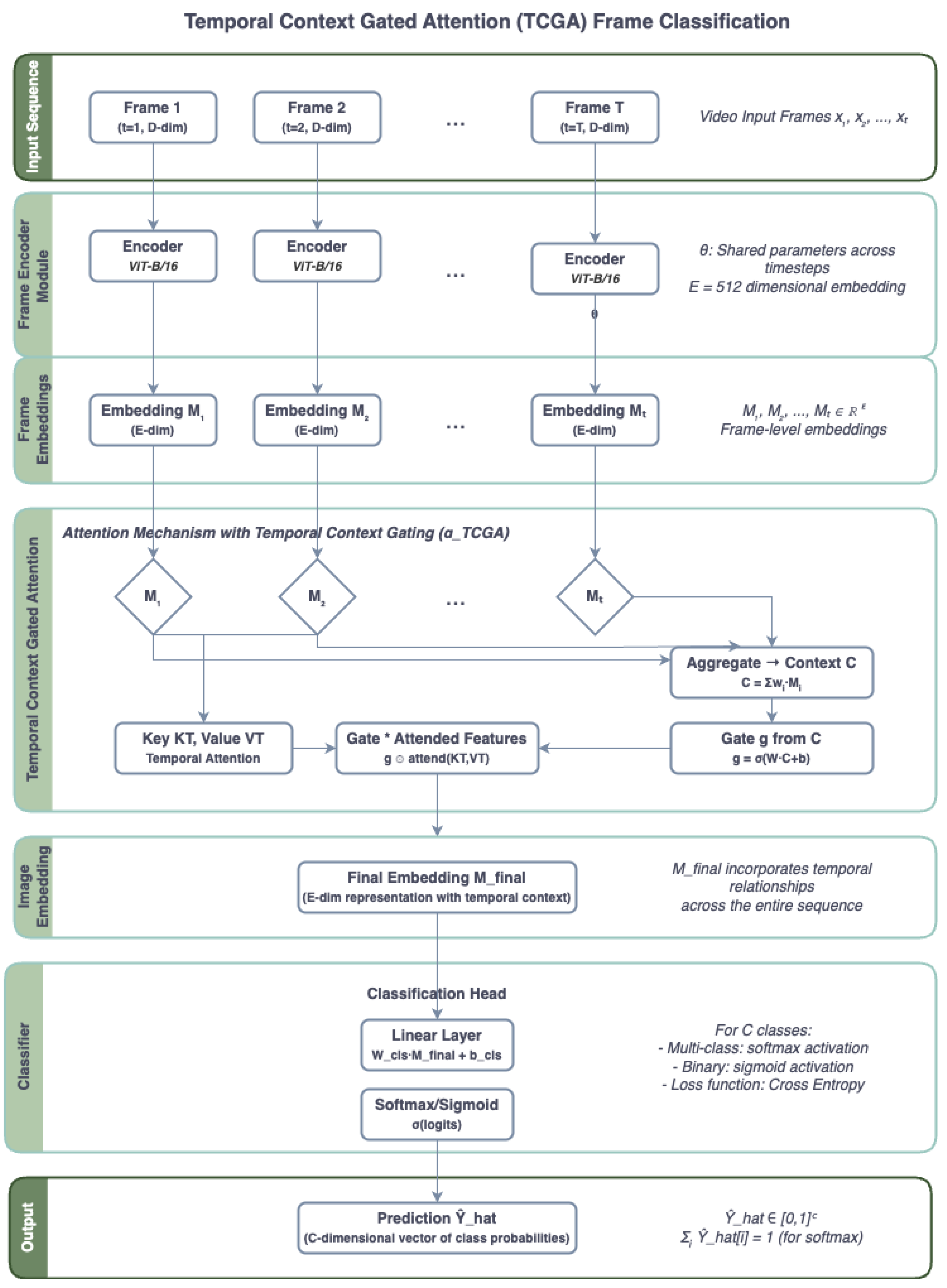

3.2. Proposed TCGA-Pool Architecture

- (1)

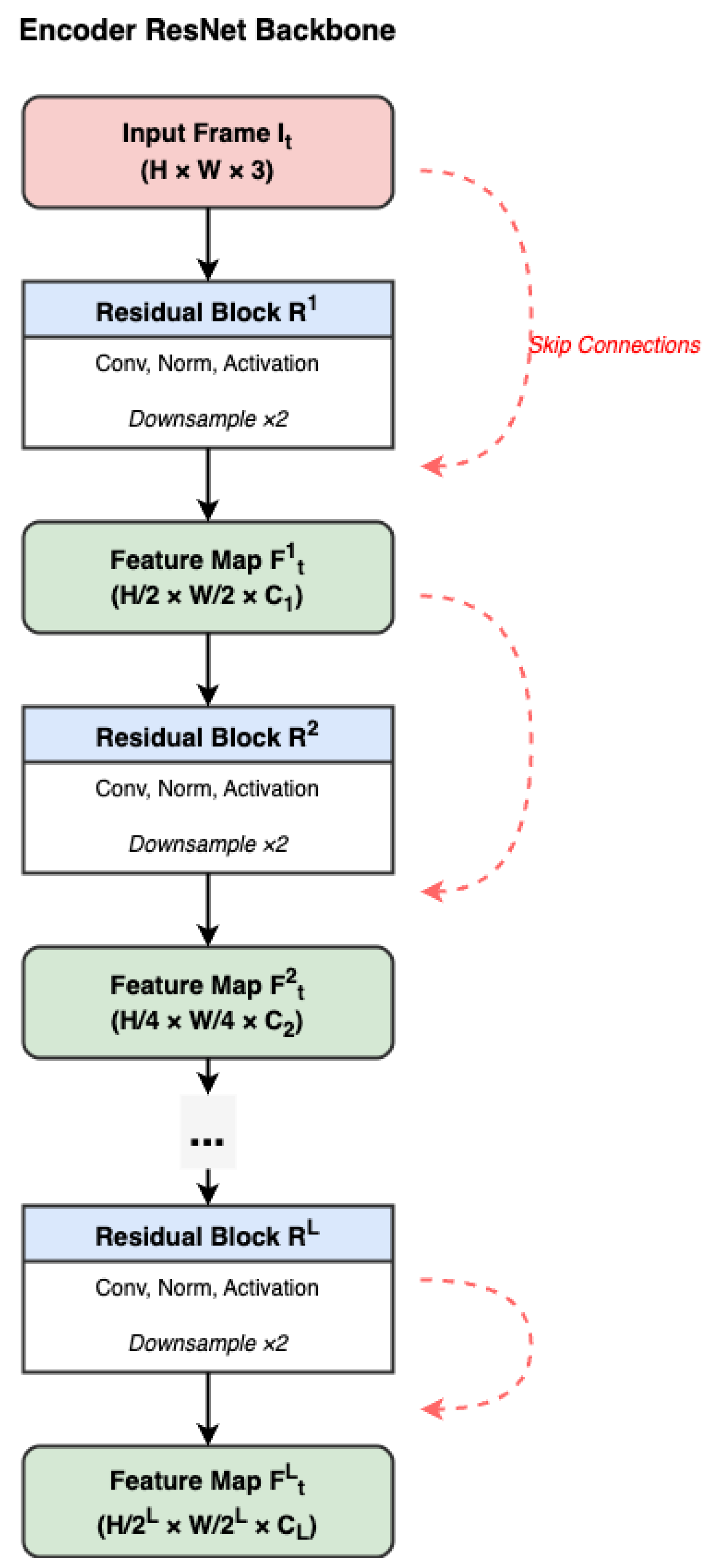

- Frame Encoder (e): The frame encoder serves as the foundation of our architecture, transforming input video frames into meaningful feature representations. Formally defined as ec: R (H×W×Cin) → R(DM), the encoder converts an input video frame Fωt (with height H, width W, and Cin input channels) into a DM-dimensional embedding vector Mωt.Architecture Design: The frame encoder utilizes a ResNet-50 backbone pre-trained on ImageNet, modified with additional convolutional layers for domain-specific feature extraction. The architecture incorporates multi-resolution feature fusion as shown in Figure 2, enabling the capture of both local ball details and global table context. The encoder processes frames independently, generating a sequence of embeddings M = {Mω1, …, MωT} that serve as input to the temporal modeling component.Feature Extraction Strategy: The encoder implements a hierarchical feature extraction approach, combining low-level visual features (edges, colors, textures) essential for ball detection with high-level semantic features necessary for understanding game context. Batch normalization and dropout layers are incorporated to improve training stability and generalization performance.

- (2) Temporal Context Gated Attention Module ($g_{\text{TCGA}}$): The TCGA module is the central contribution of this work. It receives the sequence of frame embeddings $M^{\omega} = \{M_1^{\omega}, \dots, M_T^{\omega}\}$ from the encoder. It applies an attention mechanism that is both guided and gated by a global temporal context derived from the entire sequence. Its purpose is to focus dynamically on the frames and feature dimensions most relevant to the classification objective. At the same time, it modulates the aggregated information according to the context of the whole sequence.

- (3) Classifier: The prediction head, typically one or more fully connected layers followed by an output activation suited to the task (e.g., sigmoid for a binary task, softmax for a multi-class one). It takes the final context-aware representation $M_{\text{final}}^{\omega}$ produced by the TCGA module and outputs the predicted probability distribution $Y^{\omega}$ over the target classes.
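The following minimal, dependency-free sketch makes the data flow through components (2) and (3) concrete. It operates on embeddings assumed to come from the frame encoder. The names `tcga_pool`, `classify`, `w_q`, `w_g`, `b_g`, `w_c` and `b_c` are hypothetical.

The specific operations are assumptions chosen to match the qualitative description, not the published formulation:

- a mean-pooled global context;
- context-guided scaled dot-product scores over frames;
- a sigmoid gate over feature dimensions.

```python
"""Hypothetical sketch of context-guided, context-gated attention pooling.

The TCGA module is described only qualitatively; the mean-pooled context,
scaled dot-product frame scores and sigmoid feature gate are illustrative
assumptions, not the authors' equations. Pure Python, toy sizes.
"""
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    """Matrix-vector product with w given as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(scores: Sequence[float]) -> Vector:
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tcga_pool(frames: Sequence[Sequence[float]], w_q: Matrix, w_g: Matrix,
              b_g: Sequence[float]) -> Tuple[Vector, Vector]:
    """Map T frame embeddings of size D to (M_final, per-frame weights alpha)."""
    t_len, d = len(frames), len(frames[0])
    # 1) Global temporal context: mean of all frame embeddings (assumed).
    context = [sum(f[k] for f in frames) / t_len for k in range(d)]
    # 2) Context-guided attention: context -> query, scaled dot-product scores.
    query = matvec(w_q, context)
    scores = [sum(q * x for q, x in zip(query, f)) / math.sqrt(d) for f in frames]
    alpha = softmax(scores)
    pooled = [sum(a * f[k] for a, f in zip(alpha, frames)) for k in range(d)]
    # 3) Context gate: a per-dimension sigmoid gate rescales the pooled features.
    gate = [sigmoid(z + b) for z, b in zip(matvec(w_g, context), b_g)]
    return [g * p for g, p in zip(gate, pooled)], alpha


def classify(m_final: Sequence[float], w_c: Matrix, b_c: Sequence[float]) -> Vector:
    """Linear prediction head followed by softmax over the target classes."""
    logits = [z + b for z, b in zip(matvec(w_c, m_final), b_c)]
    return softmax(logits)


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    frames = [[0.1, 0.2], [2.0, 1.5], [0.0, 0.1]]  # T=3 toy embeddings, D=2
    m_final, alpha = tcga_pool(frames, w_q=eye, w_g=eye, b_g=[0.0, 0.0])
    print("alpha:", [round(a, 3) for a in alpha])
    print("probs:", classify(m_final, w_c=eye, b_c=[0.0, 0.0]))
```

The frame weights `alpha` select informative frames, while the gate acts on individual feature dimensions. Both read the same global context, which is the property the description of (2) emphasizes. A trained model would learn these weights end to end together with the encoder.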

The dataset is split into training (70%), validation (15%), and test (15%) sets, with no game appearing in more than one split, to prevent data leakage.
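The game-level split described above can be sketched as follows. Games, not clips, are shuffled and partitioned, so every clip of a given game lands in exactly one split. The `(clip_id, game_id)` input format, the function name `split_by_game` and the seeded shuffle are assumptions. The text specifies only the 70/15/15 ratios and the no-overlap rule.

```python
"""Hypothetical game-level 70/15/15 split: no game contributes clips to more
than one partition. Input layout and seeding are illustrative assumptions."""
import random
from collections import defaultdict
from collections.abc import Hashable
from typing import Dict, List, Sequence, Tuple


def split_by_game(clips: Sequence[Tuple[Hashable, Hashable]],
                  ratios: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                  seed: int = 0) -> Dict[str, List[Hashable]]:
    """clips: (clip_id, game_id) pairs. Returns the clip ids assigned to each split."""
    by_game: Dict[Hashable, List[Hashable]] = defaultdict(list)
    for clip_id, game_id in clips:
        by_game[game_id].append(clip_id)

    games = sorted(by_game, key=str)      # deterministic order before shuffling
    random.Random(seed).shuffle(games)    # seeded, so the split is reproducible

    n = len(games)
    n_train = round(ratios[0] * n)
    n_val = round(ratios[1] * n)
    parts = {
        "train": games[:n_train],
        "val": games[n_train:n_train + n_val],
        "test": games[n_train + n_val:],
    }
    # Ratios are applied to games, so clip-level proportions are approximate.
    return {name: [c for g in gs for c in by_game[g]] for name, gs in parts.items()}


if __name__ == "__main__":
    # Toy data: 100 hypothetical games with 5 clips each.
    clips = [(f"g{g}_c{c}", f"g{g}") for g in range(100) for c in range(5)]
    parts = split_by_game(clips)
    print({name: len(ids) for name, ids in parts.items()})
```

Because the ratios apply to games, clip-level proportions only approximate 70/15/15 when games contain different numbers of clips.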

4. Results and Evaluation

- Accuracy: Overall classification accuracy.

- Precision, Recall, F1-score: For each class individually.

- Mean Average Precision (mAP): For multi-class scenarios.

- Area Under ROC Curve (AUC): For binary classification tasks.
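For reference, the metrics listed above can be computed from their standard definitions, as in the dependency-free sketch below. It is not the authors' evaluation code, and the function names are hypothetical. AP is the non-interpolated variant here; other toolkits interpolate or break ties differently, which can shift mAP slightly.

```python
"""Evaluation metrics from their standard definitions (dependency-free sketch,
not the authors' evaluation code)."""
from typing import Dict, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        cls: int) -> Dict[str, float]:
    """One-vs-rest precision, recall and F1 for class `cls`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == cls and p == cls for t, p in pairs)
    fp = sum(t != cls and p == cls for t, p in pairs)
    fn = sum(t == cls and p != cls for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC = P(random positive outscores random negative), ties count 1/2
    (normalized Mann-Whitney U). O(P*N), which is fine for a sketch."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Non-interpolated AP: mean of precision@k over the ranks k of the positives."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, precisions = 0, []
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(y_true: Sequence[int],
                           class_scores: Sequence[Sequence[float]]) -> float:
    """One-vs-rest AP averaged over classes; class_scores[i][c] scores class c
    for sample i. Classes with no positives contribute 0 here."""
    n_classes = len(class_scores[0])
    aps = [average_precision([int(t == c) for t in y_true], [s[c] for s in class_scores])
           for c in range(n_classes)]
    return sum(aps) / n_classes
```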

- TimeSformer: Transformer-based video classification.

- X3D: Efficient video network with progressive expansion.

- Pool-CNN: Our implementation of a pool-specific CNN baseline.

- Clear Shot Detection: 4.7 percentage-point accuracy improvement over the best baseline, Pool-CNN (87.4% vs. 82.7%).

- Win Condition Identification: 3.2 percentage-point accuracy improvement (94.8% vs. 91.6%) and a 5.4-point higher F1-score (91.6 vs. 86.2).

- Potted Ball Counting: 6.2 percentage-point accuracy improvement (82.1% vs. 75.9%), the largest gain of the three tasks. This indicates the effectiveness of the attention mechanism in multi-object scenarios.
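The gains above are differences between two accuracies, so they are percentage points rather than relative percentages. A short recomputation from the main results table shows both views. For example, +6.2 points over a 75.9% baseline is roughly an 8.2% relative improvement. `ACCURACY` and `gains` are illustrative names.

```python
"""Absolute (percentage-point) and relative accuracy gains of TCGA-Pool over
Pool-CNN, recomputed from the main results table."""
from typing import Tuple

# (TCGA-Pool, Pool-CNN) accuracy in %, copied from the main results table.
ACCURACY = {
    "clear_shot": (87.4, 82.7),
    "win_condition": (94.8, 91.6),
    "potted_balls": (82.1, 75.9),
}


def gains(ours: float, baseline: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    delta = ours - baseline
    return round(delta, 1), round(100.0 * delta / baseline, 1)


if __name__ == "__main__":
    for task, (ours, base) in ACCURACY.items():
        pp, rel = gains(ours, base)
        print(f"{task:14s} +{pp} pp (relative +{rel}%)")
```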

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Liang, C. Prediction and analysis of sphere motion trajectory based on deep learning algorithm optimization. J. Intell. Fuzzy Syst. 2019, 37, 6275–6285.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the Advances in Neural Information Processing Systems 27, Montreal, QC, Canada, 8–13 December 2014.

- Huang, W.; Chen, L.; Zhang, M.; Liu, X. Pool game analysis using computer vision techniques. Pattern Recognit. Lett. 2018, 115, 23–31.

- Li, K.; He, Y.; Wang, Y.; Li, Y.; Wang, W.; Luo, P.; Wang, Y.; Wang, L.; Qiao, Y. VideoChat: Chat-centric video understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6823–6833.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28, Montreal, QC, Canada, 7–12 December 2015.

- Siddiqui, M.H.; Ahmad, I. Automated billiard ball tracking and event detection. In Proceedings of the International Conference on Image Processing, Taipei, Taiwan, 22–25 September 2019; pp. 1234–1238.

- Lin, J.; Gan, C.; Han, S. TSM: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7083–7093.

- Zheng, Y.; Zhang, H. Video analysis in sports by lightweight object detection network under the background of sports industry development. Comput. Intell. Neurosci. 2022, 2022, 3844770.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 20–36.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Naik, B.T.; Hashmi, M.F.; Bokde, N.D. A comprehensive review of computer vision in sports: Open issues, future trends and research directions. Appl. Sci. 2022, 12, 4429.

- Rahmad, N.A.; As’Ari, M.A.; Ghazali, N.F.; Shahar, N.; Sufri, N.A. A survey of video based action recognition in sports. Indones. J. Electr. Eng. Comput. Sci. 2018, 11, 987–993.

- Wu, M.; Fan, M.; Hu, Y.; Wang, R.; Wang, Y.; Li, Y.; Wu, S.; Xia, G. A real-time tennis level evaluation and strokes classification system based on the Internet of Things. Internet Things 2022, 17, 100494.

- Ekin, A.; Tekalp, A.M.; Mehrotra, R. Automatic soccer video analysis and summarization. IEEE Trans. Image Process. 2003, 12, 796–807.

- Yoon, S.; Rameau, F.; Kim, J.; Lee, S.; Kang, S.; Kweon, I.S. Online detection of action start in untrimmed, streaming videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 58–66.

- Tang, J.; Chen, C.Y. A billiards track and score recording system by RFID trigger. Procedia Environ. Sci. 2011, 11, 465–470.

- Cioppa, A.; Deliège, A.; Giancola, S.; Ghanem, B.; Van Droogenbroeck, M.; Gade, R.; Moeslund, T.B. Camera calibration and player localization in SoccerNet-v2 and investigation of their representations for action spotting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 19–25 June 2021; pp. 4537–4546.

- Lu, Y. Artificial intelligence: A survey on evolution, models, applications and future trends. J. Manag. Anal. 2019, 6, 1–29.

- Nie, B.X.; Wei, P.; Zhu, S.C. Monocular 3D human pose estimation by predicting depth on joints. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3467–3475.

- Zhang, Q.; Wang, Z.; Long, C.; Yiu, S. Billiards sports analytics: Datasets and tasks. ACM Trans. Knowl. Discov. Data 2025, 18, 1–27.

- Teachabarikiti, K.; Chalidabhongse, T.H.; Thammano, A. Players tracking and ball detection for an automatic tennis video annotation. In Proceedings of the 2010 11th International Conference on Control Automation Robotics & Vision, Singapore, 7–10 December 2010.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Song, H.; Wang, W.; Zhao, S.; Shen, J.; Lam, K.M. Exploring temporal preservation networks for precise temporal action localization. In Proceedings of the AAAI Conference on Artificial Intelligence 32, New Orleans, LA, USA, 2–7 February 2018.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017.

- Herzig, R.; Ben-Avraham, E.; Mangalam, K.; Bar, A.; Chechik, G.; Rohrbach, A.; Darrell, T.; Globerson, A. Object-region video transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Thomas, G.; Gade, R.; Moeslund, T.B.; Carr, P.; Hilton, A. Computer vision in sports: A survey. Comput. Vis. Image Underst. 2017, 159, 3–18.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Xu, M.; Orwell, J.; Lowey, L.; Thirde, D. Algorithms and system for segmentation and structure analysis in soccer video. In Proceedings of the IEEE International Conference on Multimedia and Expo, Tokyo, Japan, 22–25 August 2001; pp. 928–931.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Rodriguez-Lozano, F.J.; Gámez-Granados, J.C.; Martínez, H.; Palomares, J.M.; Olivares, J. 3D reconstruction system and multiobject local tracking algorithm designed for billiards. Appl. Intell. 2023, 53, 19.

- Faizan, A.; Mansoor, A.B. Computer vision based automatic scoring of shooting targets. In Proceedings of the 2008 IEEE International Multitopic Conference, Karachi, Pakistan, 23–24 December 2008.

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6202–6211.

- Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2021, 7, 283–309.

- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. ViViT: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6836–6846.

- Wang, X.; Zhao, K.; Zhang, R.; Ding, S.; Wang, Y.; Shen, F. Deep learning for sports analytics: A survey. ACM Comput. Surv. 2022, 55, 1–37.

- Zhang, Y.; Yao, L.; Xu, M.; Qiao, Y.; Liu, Q. Video understanding with large language models: A survey. arXiv 2023, arXiv:2312.17432.

| Method | Clear Shots Acc | Clear Shots F1 | Clear Shots AUC | Win Conditions Acc | Win Conditions F1 | Win Conditions AUC | Potted Balls Acc | Potted Balls mAP | Potted Balls F1 |
|---|---|---|---|---|---|---|---|---|---|
| TimeSformer | 81.2 | 78.6 | 86.9 | 90.3 | 84.7 | 94.1 | 73.8 | 72.4 | 74.9 |
| X3D | 79.6 | 76.8 | 85.2 | 88.7 | 81.9 | 93.4 | 70.5 | 68.9 | 71.6 |
| TSN | 76.4 | 73.2 | 83.1 | 86.9 | 78.6 | 90.8 | 68.3 | 65.7 | 69.2 |
| Pool-CNN | 82.7 | 80.1 | 87.8 | 91.6 | 86.2 | 94.9 | 75.9 | 74.1 | 76.8 |
| TCGA-Pool (ours) | 87.4 | 85.2 | 92.3 | 94.8 | 91.6 | 97.2 | 82.1 | 80.7 | 83.5 |

| Model Variant | Description | Accuracy | F1-Score | AUC |
|---|---|---|---|---|
| Baseline | ResNet-50 + FC layers | 75.8 | 72.1 | 82.4 |
| Object Attention | Add spatial object attention | 82.1 | 79.3 | 88.6 |
| Temporal Context | Add temporal modeling (LSTM) | 84.3 | 81.7 | 90.1 |
| Gated Mechanism | Add gating for attention fusion | 86.2 | 83.9 | 91.5 |
| Full TCGA-Pool | Complete framework | 87.4 | 85.2 | 92.3 |
| Ablation on Individual Components | | | | |
| TCGA-Pool w/o Object Attention | Remove spatial attention | 79.6 | 76.8 | 85.2 |
| TCGA-Pool w/o Temporal Context | Remove temporal modeling | 81.2 | 78.4 | 87.9 |
| TCGA-Pool w/o Gated Fusion | Remove attention gating | 83.7 | 80.9 | 89.8 |
| TCGA-Pool w/o Multi-scale | Remove multi-scale features | 84.9 | 82.1 | 90.7 |

| Attention Type | Accuracy | F1-Score | Parameters (M) |
|---|---|---|---|
| No Attention | 75.8 | 72.1 | 23.5 |
| Global Average Pooling | 78.2 | 74.9 | 23.5 |
| CBAM [30] | 81.4 | 78.6 | 25.8 |
| Self-Attention | 83.1 | 80.2 | 28.7 |
| Non-local [11] | 83.9 | 81.1 | 31.2 |
| SENet [29] | 80.7 | 77.8 | 24.9 |
| Object Attention (Ours) | 87.4 | 85.2 | 27.3 |
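The attention-type comparison above contrasts learned attention with global average pooling (GAP). The sketch below shows the basic difference for spatial pooling over one frame's feature map. GAP weights every location equally, while attention pooling reweights locations by a score, so a strong ball response can dominate the pooled vector. The scoring vector `w` and all function names are hypothetical stand-ins. The paper does not give the equations of its object-attention branch.

```python
"""Hypothetical spatial attention pooling versus global average pooling (GAP).

The scoring vector `w` stands in for a learned object-attention branch; the
object-attention equations are not given in the text, so this is illustrative.
"""
import math
from typing import List, Sequence, Tuple

FeatureMap = Sequence[Sequence[Sequence[float]]]  # indexed [H][W][D]


def _cells(fmap: FeatureMap) -> List[Sequence[float]]:
    """Flatten the H x W grid into a list of D-dimensional cell vectors."""
    return [cell for row in fmap for cell in row]


def global_average_pool(fmap: FeatureMap) -> List[float]:
    cells = _cells(fmap)
    d = len(cells[0])
    return [sum(c[k] for c in cells) / len(cells) for k in range(d)]


def spatial_attention_pool(fmap: FeatureMap,
                           w: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Score each cell with w . x, softmax over all H*W cells, return weighted sum."""
    cells = _cells(fmap)
    scores = [sum(wi * xi for wi, xi in zip(w, c)) for c in cells]
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    d = len(cells[0])
    pooled = [sum(a * c[k] for a, c in zip(attn, cells)) for k in range(d)]
    return pooled, attn


if __name__ == "__main__":
    # 2 x 2 map, D = 2: a "ball" response top-left, clutter bottom-right.
    fmap = [[[1.0, 0.0], [0.0, 0.0]],
            [[0.0, 0.0], [0.0, 1.0]]]
    print("GAP:      ", global_average_pool(fmap))
    print("attention:", spatial_attention_pool(fmap, w=[5.0, 0.0])[0])
```

When `w` is all zeros, every cell receives weight 1/(H·W) and attention pooling reduces exactly to GAP.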

| Architecture Variant | Accuracy | F1-Score | AUC | FLOPs (G) |
|---|---|---|---|---|
| Concatenation Fusion | 84.7 | 81.9 | 89.8 | 15.2 |
| Element-wise Addition | 85.1 | 82.3 | 90.2 | 12.8 |
| Attention Fusion | 86.3 | 83.7 | 91.1 | 14.6 |
| Gated Fusion (Ours) | 87.4 | 85.2 | 92.3 | 13.9 |
| Gating Mechanism Variants | | | | |
| Sigmoid Gating | 86.8 | 84.1 | 91.7 | 13.9 |
| Tanh Gating | 86.2 | 83.5 | 91.2 | 13.9 |
| Learnable Gating (Ours) | 87.4 | 85.2 | 92.3 | 13.9 |
| Softmax Gating | 86.9 | 84.3 | 91.8 | 13.9 |
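The fusion variants compared above differ in how two feature streams are combined. The minimal sketch below shows three standard forms applied to two feature vectors `a` and `b` (for instance, object-attention and temporal-context features):

- concatenation;
- element-wise addition;
- a sigmoid-gated convex combination.

These formulas are common textbook forms assumed for illustration. The text does not define its gating equation or what distinguishes "learnable" from sigmoid gating. The weights are plain arguments rather than trained parameters, and all names are hypothetical.

```python
"""Illustrative fusion operators for two feature vectors a and b. Common
textbook forms assumed for illustration; the fusion equations are not given
in the text, and the weights are plain arguments, not trained parameters."""
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def concat_fusion(a: Sequence[float], b: Sequence[float]) -> List[float]:
    return list(a) + list(b)  # output width is len(a) + len(b)


def add_fusion(a: Sequence[float], b: Sequence[float]) -> List[float]:
    return [x + y for x, y in zip(a, b)]


def gated_fusion(a: Sequence[float], b: Sequence[float], w_a: Matrix, w_b: Matrix,
                 bias: Sequence[float]) -> Tuple[List[float], List[float]]:
    """g = sigmoid(W_a a + W_b b + bias); out = g * a + (1 - g) * b elementwise."""
    gate = [
        sigmoid(sum(w * x for w, x in zip(ra, a)) + sum(w * y for w, y in zip(rb, b)) + c)
        for ra, rb, c in zip(w_a, w_b, bias)
    ]
    fused = [g * x + (1.0 - g) * y for g, x, y in zip(gate, a, b)]
    return fused, gate


if __name__ == "__main__":
    a, b = [1.0, 2.0], [3.0, 4.0]
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    print(concat_fusion(a, b), add_fusion(a, b))
    print(gated_fusion(a, b, zeros, zeros, bias=[0.0, 0.0])[0])    # g = 0.5: mean of a and b
    print(gated_fusion(a, b, zeros, zeros, bias=[20.0, -20.0])[0])  # g near 1, then near 0
```

Unlike addition, the gate decides per feature dimension how much of each stream to keep. Unlike concatenation, it preserves the feature width.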

| Method | Parameters (M) | FLOPs (G) | Training Time (h) | Inference (ms/frame) |
|---|---|---|---|---|
| 3D ResNet-50 | 46.2 | 12.1 | 18.5 | 28.3 |
| SlowFast | 59.9 | 16.8 | 24.2 | 35.7 |
| TimeSformer | 121.4 | 38.5 | 41.6 | 67.2 |
| X3D | 38.1 | 9.7 | 16.3 | 22.8 |
| TCGA-Pool (Ours) | 27.3 | 13.9 | 21.7 | 31.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, A.; Yan, W.Q. Attention-Pool: 9-Ball Game Video Analytics with Object Attention and Temporal Context Gated Attention. Computers 2025, 14, 352. https://doi.org/10.3390/computers14090352

Zheng A, Yan WQ. Attention-Pool: 9-Ball Game Video Analytics with Object Attention and Temporal Context Gated Attention. Computers. 2025; 14(9):352. https://doi.org/10.3390/computers14090352

Chicago/Turabian Style: Zheng, Anni, and Wei Qi Yan. 2025. "Attention-Pool: 9-Ball Game Video Analytics with Object Attention and Temporal Context Gated Attention" Computers 14, no. 9: 352. https://doi.org/10.3390/computers14090352

APA Style: Zheng, A., & Yan, W. Q. (2025). Attention-Pool: 9-Ball Game Video Analytics with Object Attention and Temporal Context Gated Attention. Computers, 14(9), 352. https://doi.org/10.3390/computers14090352