An Intelligent Hybrid AI Course Recommendation Framework Integrating BERT Embeddings and Random Forest Classification

Abstract

1. Introduction

- Content-based filtering: This method analyzes item features and matches them against the user’s preferences, recommending items similar to those the user has interacted with in the past, using techniques such as TF-IDF and machine learning. It is highly personalized, but it suffers from the cold-start problem for new users and can create a filter bubble, because the diversity of the recommended content is reduced. For example, a movie streaming service may recommend movies based on a viewer’s preferred actors and genres [7].

- Collaborative filtering: This method recommends items based on the behavior patterns of users rather than on item features. It identifies similar users or items using user-based and item-based collaborative filtering. Although effective, it suffers from data sparsity and the cold-start problem. For example, Netflix suggests programs based on what users with similar viewing histories have watched [8].
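As a minimal sketch of the content-based idea described above, the following Python code ranks course titles by the cosine similarity between the TF-IDF vector of a user profile and the TF-IDF vector of each title. The titles, the profile string, and the function names are hypothetical; the smoothed IDF formula is the same one scikit-learn’s `TfidfVectorizer` uses by default, and everything else is plain standard library.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fit_idf(docs):
    """Smoothed IDF: ln((1 + N) / (1 + df)) + 1, computed over the catalogue."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(tokenize(doc)))
    return {term: math.log((1 + n) / (1 + d)) + 1 for term, d in df.items()}


def tfidf_vector(text, idf):
    """Sparse TF-IDF vector as a dict; terms unseen in the catalogue are ignored."""
    tf = Counter(tokenize(text))
    return {t: count * idf[t] for t, count in tf.items() if t in idf}


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(profile, titles, k=2):
    """Return the k titles whose TF-IDF vectors are most similar to the profile."""
    idf = fit_idf(titles)
    profile_vec = tfidf_vector(profile, idf)
    vectors = {t: tfidf_vector(t, idf) for t in titles}
    return sorted(titles, key=lambda t: cosine(profile_vec, vectors[t]), reverse=True)[:k]


# Hypothetical catalogue and user profile (e.g., built from previously liked courses)
COURSE_TITLES = [
    "Deep Learning with Neural Networks",
    "Intro to Speech Recognition",
    "Sentiment Analysis for Beginners",
    "Neural Networks from Scratch",
]
print(recommend("neural networks deep learning", COURSE_TITLES))
```

Because the profile is compared only with item features, a newly added course can be ranked as soon as its title is known, which is why content-based filtering copes well with new items.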

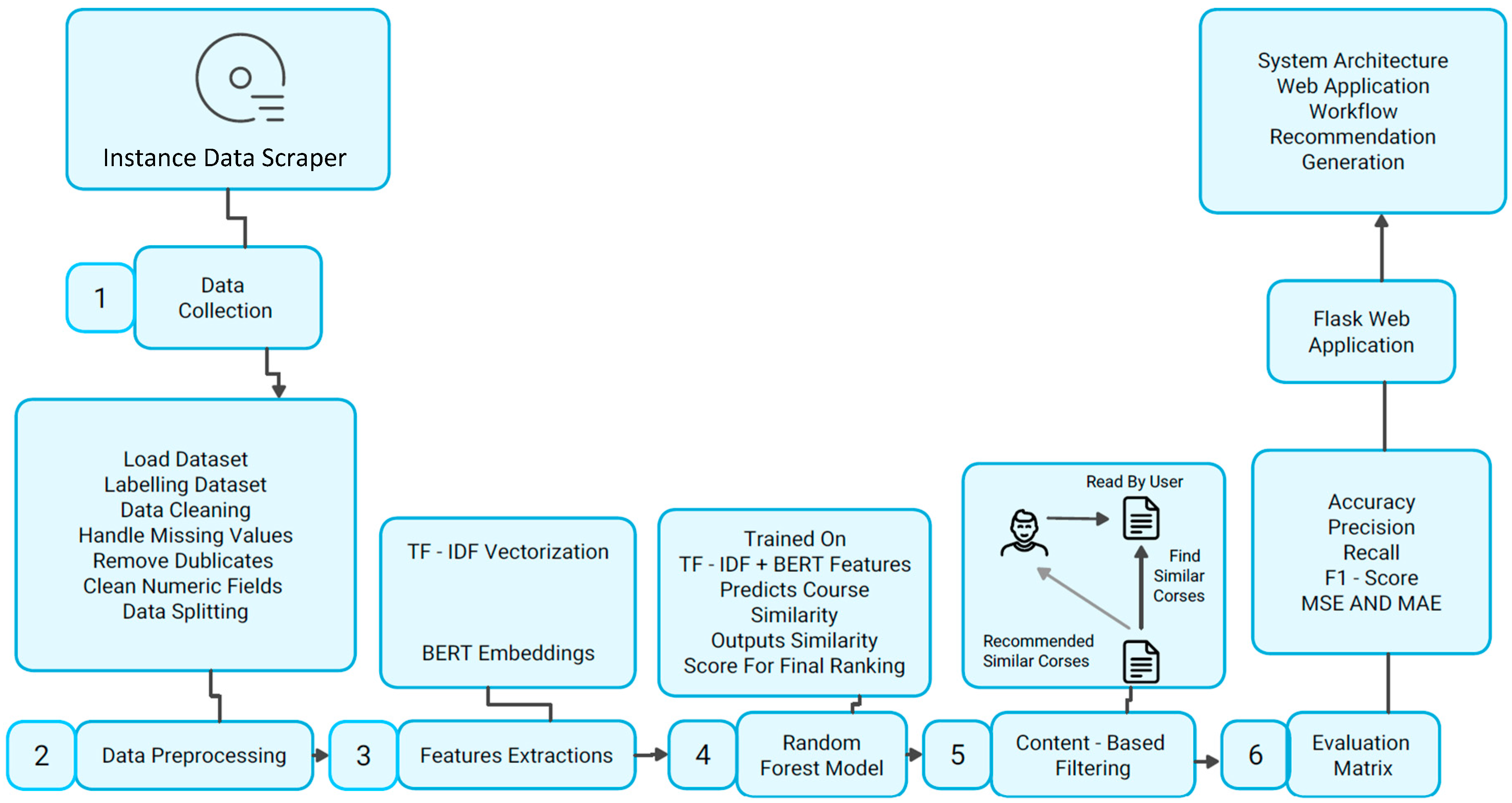

- We constructed a real-world dataset of 2238 AI-related courses collected from Udemy over multiple scraping sessions, followed by rigorous cleaning and preprocessing. We also provide a quantitative analysis of the dataset’s characteristics (course levels, ratings, and price points) and discuss their potential impact on generalizability.

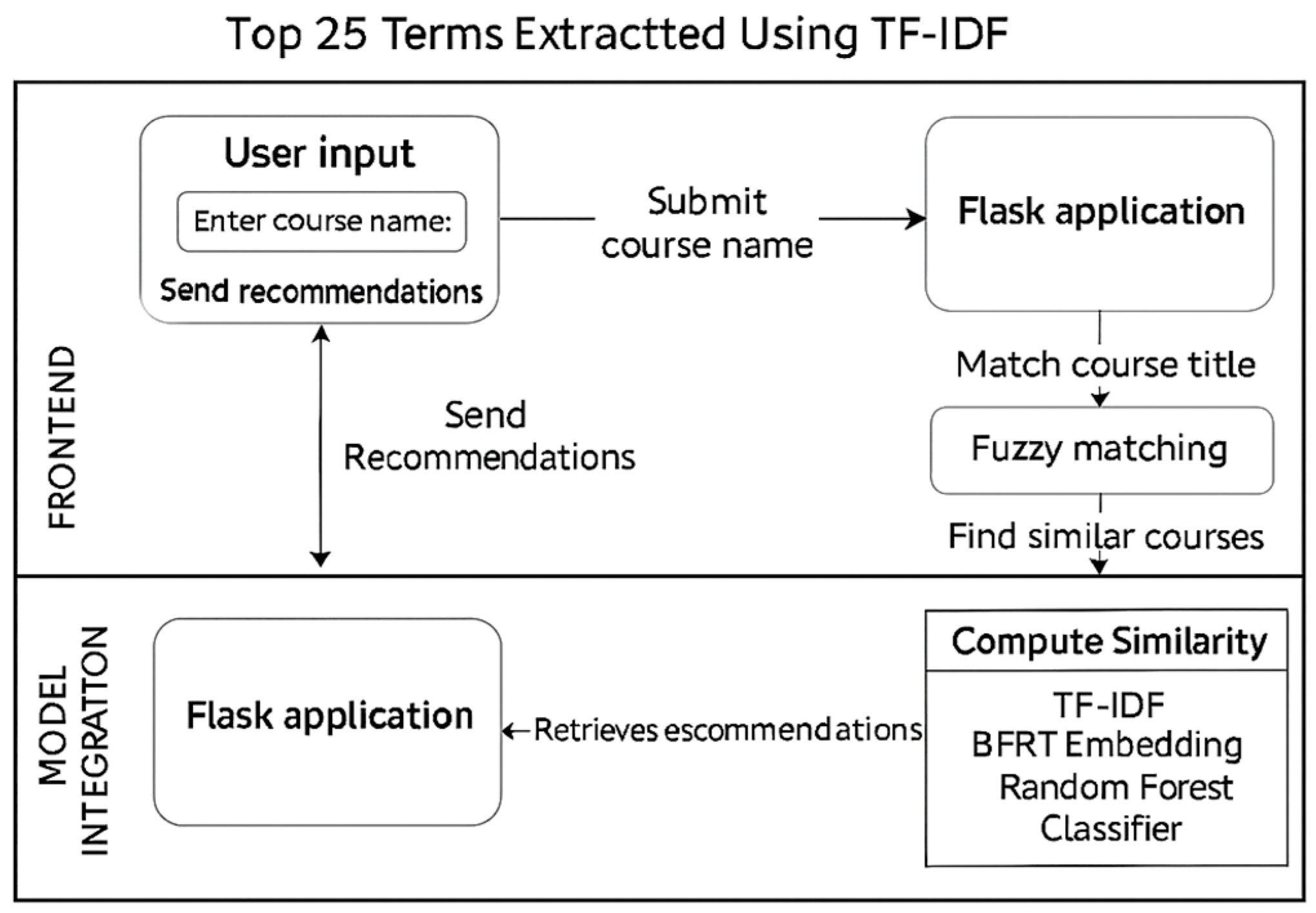

- This work presents a hybrid recommendation architecture that combines TF-IDF for lexical feature extraction, BERT embeddings for contextual semantic representation, and a Random Forest classifier to enhance predictive accuracy. The architecture is fully metadata-driven, enabling deployment in a real-time Flask-based application for immediate, user-friendly recommendations.

- By relying solely on course metadata rather than historical interaction data, the proposed system mitigates the cold-start problem, allowing recommendations to be made for new users and unseen courses.

- Extensive empirical evaluation, including statistical significance testing (bootstrap, McNemar’s test) with 95% confidence intervals, demonstrates that the proposed approach achieves 91.25% accuracy and a 90.77% F1-Score, outperforming recent baselines.

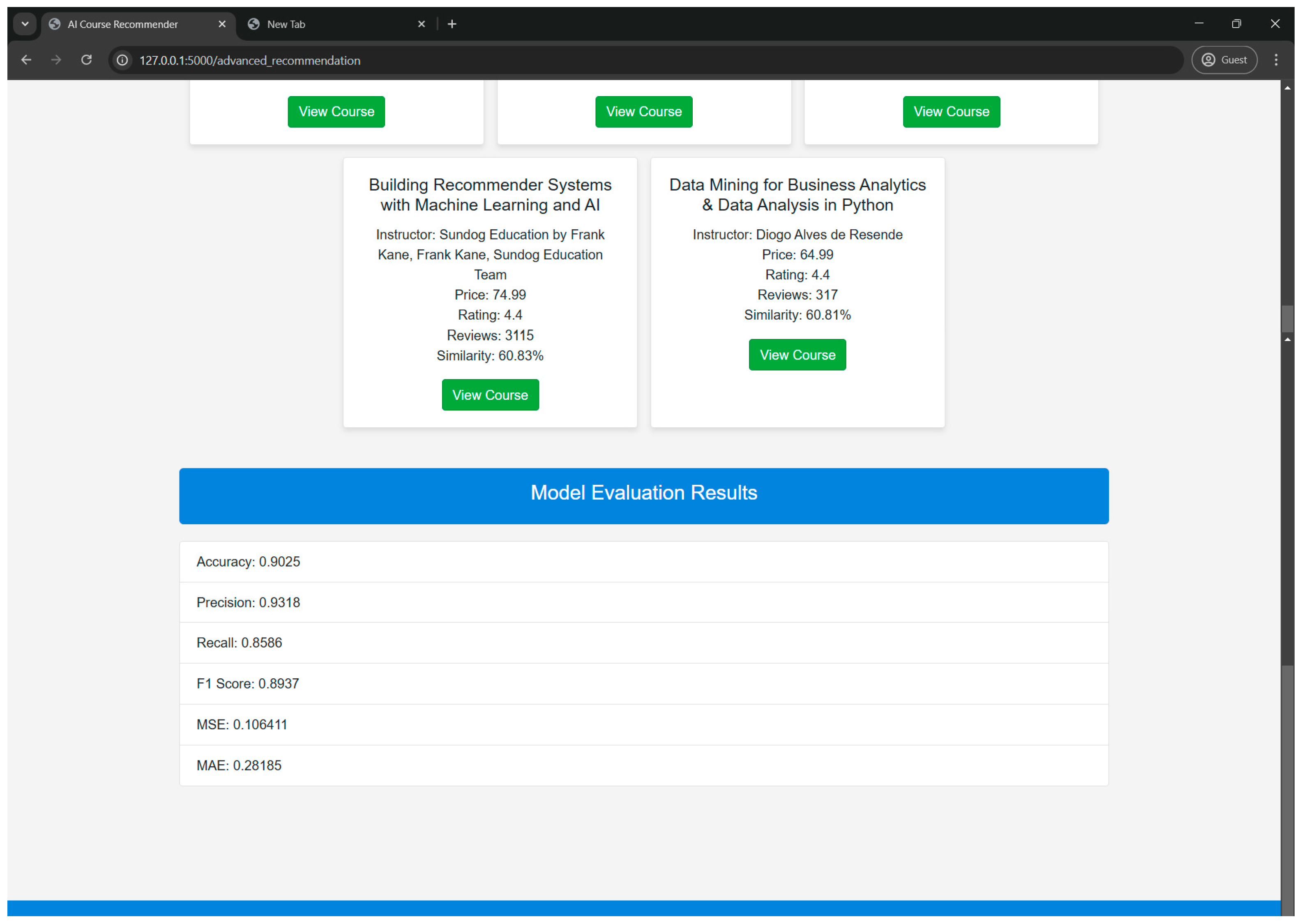

- The system is deployed as a real-time interactive web application using Flask, offering immediate recommendations via a user-friendly interface. A pilot usability study with five undergraduate participants was also conducted; their Likert-scale ratings were consistently high (every response was 4 or 5, with an average of 4.6/5), indicating preliminary usability and paving the way for larger-scale evaluations in future work.
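The significance tests named in the contributions (bootstrap confidence intervals and McNemar’s test) can be sketched with the Python standard library alone. The code below assumes a percentile bootstrap over per-example correctness with 2000 resamples and the continuity-corrected form of McNemar’s test; these settings, the function names, and the toy predictions are illustrative assumptions, since the exact procedure is not given here.

```python
import math
import random


def bootstrap_accuracy_ci(y_true, y_pred, n_boot=2000, alpha=0.05, seed=42):
    """Accuracy with a percentile bootstrap (1 - alpha) confidence interval."""
    correct = [int(t == p) for t, p in zip(y_true, y_pred)]
    n = len(correct)
    rng = random.Random(seed)  # fixed seed for reproducibility
    boot_accs = []
    for _ in range(n_boot):
        # Resample the per-example correctness indicators with replacement
        resample = [rng.choice(correct) for _ in range(n)]
        boot_accs.append(sum(resample) / n)
    boot_accs.sort()
    k = int(n_boot * alpha / 2)  # number of resamples trimmed from each tail
    return sum(correct) / n, boot_accs[k], boot_accs[n_boot - 1 - k]


def mcnemar_test(y_true, pred_a, pred_b):
    """Continuity-corrected McNemar test comparing two classifiers on paired data."""
    pairs = list(zip(y_true, pred_a, pred_b))
    b = sum(1 for t, pa, pb in pairs if pa == t and pb != t)  # only A correct
    c = sum(1 for t, pa, pb in pairs if pa != t and pb == t)  # only B correct
    if b + c == 0:
        return 0.0, 1.0  # no discordant pairs, so no evidence of a difference
    chi2 = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical predictions for 20 test courses (1 = relevant)
y_true = [1] * 20
pred_a = [1] * 10 + [0] * 2 + [1] * 8  # model A: 18/20 correct
pred_b = [0] * 10 + [1] * 2 + [1] * 8  # model B: 10/20 correct
acc, lo, hi = bootstrap_accuracy_ci(y_true, pred_a)
chi2, p = mcnemar_test(y_true, pred_a, pred_b)
print(f"accuracy={acc:.2f}, 95% CI=[{lo:.2f}, {hi:.2f}], chi2={chi2:.3f}, p={p:.4f}")
```

McNemar’s test only uses the discordant pairs (cases where exactly one model is correct), which is why it suits comparing two classifiers evaluated on the same test set.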

2. Literature Review

- Limited use of metadata: Only a few studies make full use of course metadata, such as levels, classifications, and descriptions, to produce relevant recommendations.

- Cold-start problem: Collaborative filtering methods cannot handle new users and new items well; content-based filtering addresses this limitation.

- Scalability and simplicity: Many existing solutions rely on complex algorithms that can be difficult to scale and deploy.

- Over-reliance on collaborative filtering: Many systems depend on user interaction data, which makes them ineffective for new courses and new users.

3. Theoretical Backgrounds

3.1. Techniques Used in Feature Extraction

3.1.1. TF-IDF Vectorization

3.1.2. Bidirectional Encoder Representations from Transformers (BERT)

3.2. Random Forest

4. Methodology

4.1. Data Collection

4.2. Data Preprocessing

- Loading the Dataset: The dataset was loaded using Python 3.11.3 (Python Software Foundation, Wilmington, NC, USA) and the Pandas library, which made it straightforward to handle course attributes such as name, description, instructor name, price, and rating. This step prepared the dataset for further processing.

- Data Cleaning: Several steps were applied to make the dataset consistent and accurate, including text normalization, removal of extra spaces and special characters, and standardization of numerical fields. Standardizing textual and numerical values in this way also simplified and sped up later processing.

- Handling Missing Values: While processing the dataset, we identified missing values in fields such as instructor name and course description, which had to be handled before further processing.

- Removing Duplicates: Some courses were duplicated because data from several scraping sessions were combined. Pandas’ drop_duplicates() method was applied first, but some courses still appeared twice because their records differed slightly in other columns. Since such records shared the same course URL, we then removed duplicates based on the Course-URL column, ensuring that each course appeared only once and that the dataset was accurate and well structured.

- Text Preprocessing: Natural language preprocessing was applied to the course titles and descriptions to prepare them for similarity computation. The following techniques were used:

- Tokenization and lemmatization: course names were split into individual words (tokens), and each token was lemmatized, i.e., reduced to its base form (e.g., “learning” → “learn”).

- Stop-word removal: common words that carry little descriptive meaning in this context (e.g., “the”, “and”, “for”) were removed, making the text more informative and more efficient to process for the machine learning models.

- Final dataset and export: The cleaned dataset was exported and then used for feature extraction and for developing the recommendation model.
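The cleaning steps above can be sketched with pandas. The column names follow the dataset sample table in this article; the `"Unknown"` placeholder for missing instructors, the small stop-word list, and the function names are assumptions for illustration, since the exact strategies are not stated. Lemmatization is omitted to keep the example self-contained; a library lemmatizer such as NLTK’s `WordNetLemmatizer` (which requires its WordNet data to be downloaded) is a common choice.

```python
import re

import pandas as pd

# Illustrative stop-word list (assumption); NLP libraries ship fuller lists
STOP_WORDS = {"a", "an", "and", "for", "in", "of", "the", "to", "with"}


def normalize_text(text):
    """Lowercase, replace special characters with spaces, collapse extra spaces."""
    text = re.sub(r"[^a-z0-9\s]", " ", str(text).lower())
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Split normalized text into tokens and drop stop words."""
    return [tok for tok in normalize_text(text).split() if tok not in STOP_WORDS]


def preprocess(df):
    """Clean a raw course table; in practice df would come from pd.read_csv(...)."""
    df = df.copy()
    # Missing values: placeholder strategy, assumed for illustration
    df["Course-Instructor"] = df["Course-Instructor"].fillna("Unknown")
    df["Course-Name"] = df["Course-Name"].fillna("").map(normalize_text)
    # Standardize numeric fields, e.g. "$69.99" -> 69.99
    df["Course-Price"] = pd.to_numeric(
        df["Course-Price"].str.replace(r"[$,]", "", regex=True), errors="coerce"
    )
    # Exact duplicates first, then records that share the same course URL
    df = df.drop_duplicates().drop_duplicates(subset="Course-URL", keep="first")
    df = df.reset_index(drop=True)
    df["tokens"] = df["Course-Name"].map(tokenize)
    return df


# Hypothetical raw rows: the first two differ only in the instructor field
raw = pd.DataFrame({
    "Course-URL": ["u1", "u1", "u2"],
    "Course-Name": ["IBM Watson for Artificial Intelligence"] * 2 + ["Sentiment Analysis!"],
    "Course-Instructor": ["Packt Publishing", "Packt", None],
    "Course-Price": ["$69.99", "$69.99", "$49.99"],
})
print(preprocess(raw))
```

The second deduplication pass mirrors the URL-based step described above: `drop_duplicates()` alone keeps both copies of the first course because their instructor fields differ.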

4.3. Feature Extraction

4.3.1. Term Frequency–Inverse Document Frequency (TF-IDF)

4.3.2. BERT Embeddings

4.3.3. Fuzzy Matching for User Queries
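The fuzzy-matching library used for user queries is not named here. As a stand-in, Python’s standard-library `difflib.get_close_matches` can map a misspelled query onto catalogue titles; the catalogue, the cutoff of 0.6 (difflib’s default), and the `match_course` helper are illustrative assumptions.

```python
import difflib


def match_course(query, titles, n=3, cutoff=0.6):
    """Return up to n catalogue titles ranked by similarity to a possibly misspelled query."""
    by_lower = {t.lower(): t for t in titles}  # compare case-insensitively
    matches = difflib.get_close_matches(query.lower(), list(by_lower), n=n, cutoff=cutoff)
    return [by_lower[m] for m in matches]  # map back to the original capitalization


# Hypothetical catalogue
catalogue = ["Sentiment Analysis", "Speech Recognition", "IBM Watson for Artificial Intelligence"]
print(match_course("sentimnt analysis", catalogue))
```

Matching the query to a known title first lets the downstream recommender work from clean catalogue text even when the user mistypes.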

4.4. Random Forest Model

4.5. Content-Based Filtering

4.6. Evaluation Metrics

- Accuracy: The proportion of courses whose relevance was predicted correctly out of all courses evaluated. It provides an overall estimate of the system’s performance. The formula for accuracy is $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where $TP$, $TN$, $FP$, and $FN$ denote true positives, true negatives, false positives, and false negatives, respectively.

- Precision: The proportion of recommended courses that were actually relevant, i.e., true positives divided by all predicted positive cases. The formula is $\mathrm{Precision} = \frac{TP}{TP + FP}$.

- Recall: The proportion of truly relevant courses that the system recommended, i.e., true positives divided by all actual positive cases. The formula is $\mathrm{Recall} = \frac{TP}{TP + FN}$.

- F1-Score: The harmonic mean of precision and recall, which balances the two. It is most informative when both false positives and false negatives are costly or when the classes are imbalanced. The formula is $F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$.

- Mean Squared Error (MSE): The mean of the squared differences between the predicted and true relevance values of the courses. It estimates how far the recommendations deviate from the expected relevance, penalizing large deviations more heavily. The formula is $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$, where $y_i$ is the true relevance of course $i$, $\hat{y}_i$ its predicted relevance, and $n$ the number of courses.

- Mean Absolute Error (MAE): The mean of the absolute differences between the predicted and true relevance values, $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}|y_i - \hat{y}_i|$.

- Mean Relative Error (MRE): The mean of the absolute errors expressed relative to the true values, $\mathrm{MRE} = \frac{1}{n}\sum_{i=1}^{n}\frac{|y_i - \hat{y}_i|}{|y_i|}$ (defined only when every $y_i \neq 0$). It is useful when the size of the error relative to the actual values matters more than its absolute magnitude.
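The metric definitions above translate directly into code. A minimal, dependency-free Python sketch follows, assuming binary relevance labels (1 = relevant) for the classification metrics and numeric relevance scores for the error metrics; the function names and data are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary relevance task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1, guarding against empty denominators."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def error_metrics(y_true, y_pred):
    """MSE, MAE and MRE between true and predicted relevance scores."""
    if any(t == 0 for t in y_true):
        raise ValueError("MRE is undefined when a true value is zero")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mre = sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n
    return {"mse": mse, "mae": mae, "mre": mre}


# Hypothetical labels and scores
print(classification_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 1, 1, 1, 0]))
print(error_metrics([4.0, 3.0, 5.0, 2.0], [3.5, 3.0, 4.0, 2.5]))
```

Raising an error for zero true values makes the MRE restriction explicit; with purely binary relevance labels, MSE and MAE remain usable while MRE does not.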

4.7. Flask Web Application
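A minimal sketch of a Flask endpoint of the kind this section refers to is shown below. The `/recommend` route, the `q` query parameter, the in-memory `COURSES` list, and the keyword-overlap `recommend_courses` helper are hypothetical placeholders standing in for the trained TF-IDF/BERT/Random Forest pipeline; this is not the authors’ application.

```python
from flask import Flask, jsonify, request

# Hypothetical catalogue; a real deployment would load the cleaned course dataset
COURSES = ["Sentiment Analysis", "Speech Recognition Basics", "IBM Watson for Artificial Intelligence"]

app = Flask(__name__)


def recommend_courses(query, k=3):
    """Placeholder recommender: titles sharing at least one word with the query."""
    words = set(query.lower().split())
    return [title for title in COURSES if words & set(title.lower().split())][:k]


@app.route("/recommend")
def recommend():
    """Return recommendations for the ?q= query string as JSON."""
    query = request.args.get("q", "")
    return jsonify({"query": query, "recommendations": recommend_courses(query)})


# To serve locally (Flask 2.2+), save as app.py and run: flask --app app run
```

Keeping the model behind a single function such as `recommend_courses` lets the web layer stay unchanged when the underlying recommender is swapped or retrained.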

5. Results and Discussion

5.1. Results of the Feature Extraction and Representation

5.1.1. TF-IDF (Term Frequency-Inverse Document Frequency)

5.1.2. BERT (Bidirectional Encoder Representations from Transformers) Embeddings

5.2. Model Training and Evaluation

Performance Analysis

5.3. Deployment of the Flask Web Application

5.4. Comparing the Proposed System with Other Recommenders

5.5. Use Case Scenario

6. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gm, D.; Goudar, R.H.; Kulkarni, A.A.; Rathod, V.N.; Hukkeri, G.S. A digital recommendation system for personalized learning to enhance online education: A review. IEEE Access 2024, 12, 34019–34041.

- Yurchenko, A.; Drushlyak, M.; Sapozhnykov, S.; Teplytska, A.; Koroliova, L.; Semenikhina, O. Using Online IT-Industry Courses in Computer Sciences Specialists’ Training. Int. J. Comput. Sci. Netw. Secur. 2021, 21, 97–104.

- Madhavi, A.; Nagesh, A.; Govardhan, A. A study on E-Learning and recommendation system. Recent Adv. Comput. Sci. Commun. Former. Recent Pat. Comput. Sci. 2022, 15, 748–764.

- Urdaneta-Ponte, M.C.; Mendez-Zorrilla, A.; Oleagordia-Ruiz, I. Recommendation systems for education: Systematic review. Electronics 2021, 10, 1611.

- Algarni, S.; Sheldon, F. Systematic Review of Recommendation Systems for Course Selection. Mach. Learn. Knowl. Extr. 2023, 5, 560–596.

- Hassan, R.H.; Hassan, M.T.; Sameem, M.S.I.; Rafique, M.A. Personality-Aware Course Recommender System Using Deep Learning for Technical and Vocational Education and Training. Information 2024, 15, 803.

- Zhang, S.; Yao, L.; Sun, A.; Tay, Y. Deep learning based recommender system: A survey and new perspectives. ACM Comput. Surv. CSUR 2019, 52, 1–38.

- Zhong, M.; Ding, R. Design of a personalized recommendation system for learning resources based on collaborative filtering. Int. J. Circuits Syst. Signal Process. 2022, 16, 122–131.

- Guo, Q.; Zhuang, F.; Qin, C.; Zhu, H.; Xie, X.; Xiong, H.; He, Q. A survey on knowledge graph-based recommender systems. IEEE Trans. Knowl. Data Eng. 2020, 34, 3549–3568.

- Burke, R. Hybrid Recommender Systems: Survey and Experiments. User Model. User-Adapt. Interact. 2002, 12, 331–370.

- Monsalve-Pulido, J.; Aguilar, J.; Montoya, E.; Salazar, C. Autonomous recommender system architecture for virtual learning environments. Appl. Comput. Inform. 2024, 20, 69–88.

- Chen, W.; Shen, Z.; Pan, Y.; Tan, K.; Wang, C. Applying machine learning algorithm to optimize personalized education recommendation system. J. Theory Pract. Eng. Sci. 2024, 4, 101–108.

- Tian, Y.; Zheng, B.; Wang, Y.; Zhang, Y.; Wu, Q. College library personalized recommendation system based on hybrid recommendation algorithm. Procedia CIRP 2019, 83, 490–494.

- Dai, Y.; Takami, K.; Flanagan, B.; Ogata, H. Beyond recommendation acceptance: Explanation’s learning effects in a math recommender system. Res. Pract. Technol. Enhanc. Learn. 2024, 19, 1–21.

- Usman, A.; Roko, A.; Muhammad, A.B.; Almu, A. Enhancing personalized book recommender system. Int. J. Adv. Netw. Appl. 2022, 14, 5486–5492.

- Li, Q.; Kim, J. A Deep Learning-Based Course Recommender System for Sustainable Development in Education. Appl. Sci. 2021, 11, 8993.

- Guruge, D.B.; Kadel, R.; Halder, S.J. The State of the Art in Methodologies of Course Recommender Systems—A Review of Recent Research. Data 2021, 6, 18.

- Lee, E.L.; Kuo, T.T.; Lin, S.D. A Collaborative Filtering-Based Two Stage Model with Item Dependency for Course Recommendation. In Proceedings of the 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Tokyo, Japan, 19–21 October 2017; pp. 496–503.

- Ghatora, P.S.; Hosseini, S.E.; Pervez, S.; Iqbal, M.J.; Shaukat, N. Sentiment Analysis of Product Reviews Using Machine Learning and Pre-Trained LLM. Big Data Cogn. Comput. 2024, 8, 199.

- Ramzan, B.; Bajwa, I.S.; Jamil, N.; Amin, R.U.; Ramzan, S.; Mirza, F.; Sarwar, N. An intelligent data analysis for recommendation systems using machine learning. Sci. Program. 2019, 2019, 5941096.

- Thakkar, A.; Chaudhari, K. Predicting stock trend using an integrated term frequency–inverse document frequency-based feature weight matrix with neural networks. Appl. Soft Comput. 2020, 96, 106684.

- Zalte, J.; Shah, H. Contextual classification of clinical records with bidirectional long short-term memory (Bi-LSTM) and bidirectional encoder representations from transformers (BERT) model. Comput. Intell. 2024, 40, e12692.

- Selva Birunda, S.; Kanniga Devi, R. A Review on Word Embedding Techniques for Text Classification. In Innovative Data Communication Technologies and Application: Proceedings of ICIDCA 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 267–281.

- Parmar, A.; Katariya, R.; Patel, V. A Review on Random Forest: An Ensemble Classifier. In International Conference on Intelligent Data Communication Technologies and Internet of Things (ICICI) 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 758–763.

- Pawar, A.; Patil, P.; Hiwanj, R.; Kshatriya, A.; Chikmurge, D.; Barve, S. Language Model Embeddings to Improve Performance in Downstream Tasks. In Proceedings of the 2024 IEEE 16th International Conference on Computational Intelligence and Communication Networks (CICN), Indore, India, 22–23 December 2024; pp. 1097–1101.

- Javed, U.; Shaukat, K.; Hameed, I.A.; Iqbal, F.; Alam, T.M.; Luo, S. A review of content-based and context-based recommendation systems. Int. J. Emerg. Technol. Learn. 2021, 16, 274–306.

- Sultan, L.R.; Abdulateef, S.K.; Shtayt, B.A. Prediction of student satisfaction on mobile learning by using fast learning network. Indones. J. Electr. Eng. Comput. Sci. 2022, 27, 488–495.

- Kiran, R.; Kumar, P.; Bhasker, B. DNNRec: A novel deep learning based hybrid recommender system. Expert Syst. Appl. 2020, 144, 113054.

- Shuwandy, M.L.; Alasad, Q.; Hammood, M.M.; Yass, A.A.; Abdulateef, S.K.; Alsharida, R.A.; Qaddoori, S.L.; Thalij, S.H.; Frman, M.; Kutaibani, A.H.; et al. A Robust Behavioral Biometrics Framework for Smartphone Authentication via Hybrid Machine Learning and TOPSIS. J. Cybersecur. Priv. 2025, 5, 20.

- San, K.K.; Win, H.H.; Chaw, K.E.E. Enhancing Hybrid Course Recommendation with Weighted Voting Ensemble Learning. J. Future Artif. Intell. Technol. 2025, 1, 337–347.

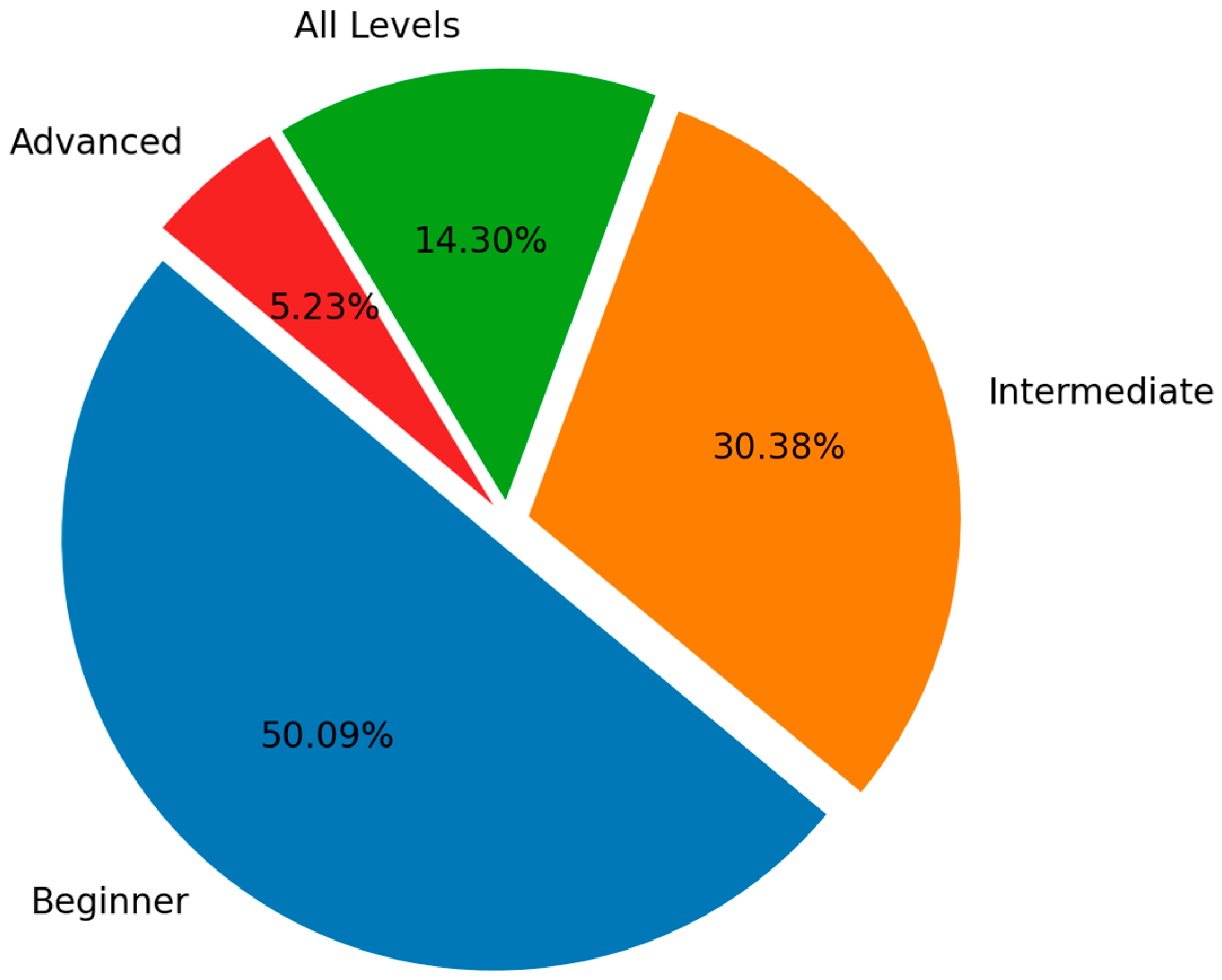

| Course Level | Number of Courses | Percentage (%) |
|---|---|---|
| Beginner | 1120 | 50.04 |
| Intermediate | 680 | 30.38 |
| All Levels | 320 | 14.30 |
| Advanced | 118 | 5.27 |
| Total | 2238 | 100 |
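Percentages in a distribution table like this follow directly from the counts, so recomputing them is a quick consistency check. A minimal Python sketch (variable names are illustrative):

```python
# Course counts per level, taken from the table above
level_counts = {"Beginner": 1120, "Intermediate": 680, "All Levels": 320, "Advanced": 118}

total = sum(level_counts.values())
# Share of each level in percent, rounded to two decimals
percentages = {level: round(100 * count / total, 2) for level, count in level_counts.items()}

print(total, percentages)
```

Rounded to two decimals, the recomputed shares sum to 99.99 rather than exactly 100 because of rounding.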

| No. | Course-Card-Image | Course-URL | Course-Name | Course-Caption | Course-Instructor | Course-Price | Course-Reviews | Course-Hours | Course-Lectures | Course-Level | Course-Rating | Course-Classification |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | https://img-c.udemycdn.com/course/240x135/2284943_3b99_2.jpg (accessed on 2 March 2025) | https://www.udemy.com/course/ibm-watson-for-artificial-intelligence-cognitive-computing/?couponCode=MT260825G1 (accessed on 2 March 2025) | IBM Watson for Artificial Intelligence | Build smart, AI, and ML applications and so on | Packt Publishing | $69.99 | 82 | 15 total hours | 77 | Beginner | 3.4 | Cognitive Computing |
| 2 | https://img-c.udemycdn.com/course/240x135/3978988_25d9_3.jpg (accessed on 2 March 2025) | https://www.udemy.com/course/cognitive-behavioural-therapy/?couponCode=MT260825G1 (accessed on 2 March 2025) | Cognitive Behavioral | Become a Certified Behavioral | Kain Ramsay | $79.99 | 35548 | 31.5 total hours | 121 | All Levels | 4.6 | Cognitive Computing |
| … | … | … | … | … | … | … | … | … | … | … | … | … |
| 2237 | https://img-c.udemycdn.com/course/240x135/5282600_e201_2.jpg (accessed on 5 March 2025) | https://www.udemy.com/course/developing-implementing-employee-recognition-programs/?couponCode=MT260825G1 (accessed on 5 March 2025) | Mastering Employee | Design & Implement Effective Employee | GenMan Solutions | $19.99 | 106 | 2 total hours | 27 | All Levels | 4.2 | Speech Recognition |
| 2238 | https://img-c.udemycdn.com/course/240x135/2881844_69a7_4.jpg (accessed on 13 March 2025) | https://www.udemy.com/course/sentiment-analysis-beginner-to-expert/?couponCode=MT260825G1 (accessed on 13 March 2025) | Sentiment Analysis | Sentiment Analysis | Taimoor khan | $49.99 | 92 | 8.5 total hours | 79 | All Levels | 4.2 | Speech Recognition |

| Component | Parameter | Value | Tuning / Rationale |
|---|---|---|---|
| BERT | Model name | all-MiniLM-L6-v2 | |
| | Embedding size | 384 dimensions | Determined by the model architecture |
| TF-IDF | ngram_range | (1, 2) | Unigrams plus bigrams, which add local context |
| | min_df | 2 | Tested min_df ∈ {1, 2, 5} |
| | max_df | 0.9 | Tested max_df ∈ {0.85, 0.9, 0.95} |
| Random Forest | n_estimators | 100 | Balances performance and speed |
| | random_state | 42 | Ensures reproducibility |
| | criterion | Gini impurity | Default |
| | max_depth | None | Trees are grown to full depth |
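The parameter names in this configuration table match scikit-learn’s `TfidfVectorizer` and `RandomForestClassifier`. The sketch below wires them together under stated assumptions: the toy titles and topic labels are hypothetical, the BERT embeddings are replaced by random 384-dimensional vectors so the example runs offline, and the two feature sets are fused by simple concatenation, which is one common choice and not necessarily the authors’ exact fusion step.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical toy course titles and topic labels (not the paper's data)
titles = [
    "deep learning for beginners", "deep learning with python",
    "speech recognition basics", "speech recognition with python",
    "sentiment analysis basics", "sentiment analysis with python",
    "cognitive computing for beginners", "cognitive computing basics",
]
labels = [
    "Deep Learning", "Deep Learning", "Speech Recognition", "Speech Recognition",
    "Sentiment Analysis", "Sentiment Analysis", "Cognitive Computing", "Cognitive Computing",
]

# Lexical features with the TF-IDF settings from the table
tfidf = TfidfVectorizer(ngram_range=(1, 2), min_df=2, max_df=0.9)
X_tfidf = tfidf.fit_transform(titles).toarray()

# Stand-in for 384-dimensional all-MiniLM-L6-v2 sentence embeddings.
# With the sentence-transformers package installed, one could instead use:
#   SentenceTransformer("all-MiniLM-L6-v2").encode(titles)
rng = np.random.default_rng(42)
X_bert = rng.normal(size=(len(titles), 384))

# Assumed fusion strategy: concatenate lexical and semantic features column-wise
X = np.hstack([X_tfidf, X_bert])

# Random Forest with the hyperparameters from the table
clf = RandomForestClassifier(
    n_estimators=100, criterion="gini", max_depth=None, random_state=42
)
clf.fit(X, labels)
print(X.shape, clf.predict(X[:2]))
```

For the full dataset, keeping the TF-IDF matrix sparse and combining it with `scipy.sparse.hstack` avoids materializing a large dense array.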

| Study | Accuracy (%) [95% CI] | F1-Score (%) | MSE |
|---|---|---|---|
| Proposed system | 91.25 [88.79, 93.95] | 90.77 | 0.10 |
| [6] | 91 [88.37, 93.51] | 79.4 | 0.18 |
| [8] | 81.9 [78.48, 85.65] | 86.7 | 0.13 |
| [30] | 51.1 [46.41, 55.83] | 45.7 | - |

| Participant | Ease of Use (Q1) | Relevance (Q2) | Satisfaction (Q3) |
|---|---|---|---|
| Student 1 | 5 | 5 | 5 |
| Student 2 | 4 | 5 | 4 |
| Student 3 | 5 | 4 | 4 |
| Student 4 | 4 | 4 | 5 |
| Student 5 | 5 | 5 | 5 |
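The average reported for the pilot usability study can be reproduced from this table. A short check in Python (variable names are illustrative):

```python
from statistics import mean

# Likert ratings (1-5) from the pilot usability table: (Q1, Q2, Q3)
ratings = {
    "Student 1": (5, 5, 5),
    "Student 2": (4, 5, 4),
    "Student 3": (5, 4, 4),
    "Student 4": (4, 4, 5),
    "Student 5": (5, 5, 5),
}
questions = ("Ease of Use (Q1)", "Relevance (Q2)", "Satisfaction (Q3)")

# Mean rating per question across the five participants
per_question = {q: mean(r[i] for r in ratings.values()) for i, q in enumerate(questions)}
# Mean over all 15 responses
overall = mean(score for r in ratings.values() for score in r)

print(per_question, overall)
```

Each question, and the overall mean, comes out at 4.6; with only five participants these figures remain preliminary.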

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hasoon, A.N.; Abdulateef, S.K.; Abdulameer, R.S.; Shuwandy, M.L. An Intelligent Hybrid AI Course Recommendation Framework Integrating BERT Embeddings and Random Forest Classification. Computers 2025, 14, 353. https://doi.org/10.3390/computers14090353

Hasoon AN, Abdulateef SK, Abdulameer RS, Shuwandy ML. An Intelligent Hybrid AI Course Recommendation Framework Integrating BERT Embeddings and Random Forest Classification. Computers. 2025; 14(9):353. https://doi.org/10.3390/computers14090353

Chicago/Turabian Style: Hasoon, Armaneesa Naaman, Salwa Khalid Abdulateef, R. S. Abdulameer, and Moceheb Lazam Shuwandy. 2025. "An Intelligent Hybrid AI Course Recommendation Framework Integrating BERT Embeddings and Random Forest Classification" Computers 14, no. 9: 353. https://doi.org/10.3390/computers14090353

APA Style: Hasoon, A. N., Abdulateef, S. K., Abdulameer, R. S., & Shuwandy, M. L. (2025). An Intelligent Hybrid AI Course Recommendation Framework Integrating BERT Embeddings and Random Forest Classification. Computers, 14(9), 353. https://doi.org/10.3390/computers14090353