Abstract

The use of learning success prediction models is increasingly becoming a part of practice in educational institutions. While recent studies have primarily focused on the development of predictive models, the issue of their temporal stability remains underrepresented in the literature. This issue is critical as model drift can significantly reduce the effectiveness of Learning Analytics applications in real-world educational contexts. This study aims to identify effective approaches for assessing the degradation of predictive models in Learning Analytics and to explore retraining strategies to address model drift. We assess model drift in deployed academic success prediction models using statistical analysis, machine learning, and Explainable Artificial Intelligence. The findings indicate that students’ Digital Profile data are relatively stable, and models trained on these data exhibit minimal model drift, which can be effectively mitigated through regular retraining on more recent data. In contrast, Digital Footprint data from the LMS show moderate levels of data drift, and the models trained on them significantly degrade over time. The most effective strategy for mitigating model degradation involved training a more conservative model and excluding features that exhibited SHAP loss drift. However, this approach did not yield substantial improvements in model performance.

1. Introduction

It is difficult to imagine a modern educational institution today without tools for data analysis. The development of data-driven education was significantly accelerated by the COVID-19 pandemic, which shifted nearly the entire educational process in higher educational institutions (HEIs) to an electronic environment [1,2,3]. On the one hand, this led to the rapid development of modern and innovative methods for conducting classes in a remote format [4,5,6]; on the other hand, it stimulated the advancement of universities’ digital infrastructure and the growth of processes for collecting, storing, processing, and utilizing educational data [7,8,9]. This factor brought Learning Analytics (LA) as a discipline and predicting learning success as its most prominent task to a new level.

Predicting academic success aims at improving the quality of education—whether by identifying at-risk students or by optimizing the resources of HEIs [10,11]. These predictions benefit various stakeholders: students can assess their performance and identify weaknesses; instructors can offer personalized support and adaptive learning strategies; and administrators can make data-driven decisions for institutional management [12,13,14].

The task of predicting learning success, as well as the very notion of “success”, may vary significantly depending on the specific goals and challenges faced by HEIs. In the literature, the following formulations of this task are commonly encountered: predicting academic performance, including identifying students at high risk of academic failure in completing a semester [15]; identifying students at risk of dropout [16]; forecasting the grade point average (GPA) [17]; assessing the probability of completing a course or module [18]; and evaluating the level of student satisfaction with the learning experience [19]. Of these formulations, the identification of students at high risk of academic failure in completing a semester and the assessment of the likelihood of course completion hold the greatest potential for early interventions to support student engagement.

A wide spectrum of data available within digital educational environments is used to predict academic performance. These data can generally be divided into two major categories: Digital Profile data [20,21] and Digital Footprint data [22,23]. Digital Profile data includes student data reflecting demographic, socio-economic, academic, and other personal characteristics. These are historical and relatively static indicators, which rarely change during the course of study, typically being updated only once a semester or annually. They are usually obtained from university databases associated with the student admissions process or other administrative systems. Digital Footprint data comprises data on students’ interactions with digital educational services, platforms, or tools, reflecting their dynamic learning activity. The primary source of learning trace data is Learning Management Systems (LMSs). Digital Footprint data are characterized by frequent updates and provide valuable insights into student engagement in the learning process.

An analysis of existing research in LA related to learning success prediction has revealed three key problems:

First problem: The majority of studies focus on building predictive models using publicly available benchmark datasets (e.g., the UCI Student Performance dataset, PISA datasets, or xAPI-Edu-Data) [24,25]. This approach has a significant advantage: it eliminates data collection and minimizes the necessity for data preprocessing before training machine learning (ML) models. However, these datasets often lack real educational context and fail to capture the complexity and diversity of actual university learning processes. As a result, models trained on such datasets may demonstrate high performance metrics on synthetic data [26,27], but their scalability and applicability to other educational institutions are significantly limited. Moreover, these publicly available datasets do not reflect the dynamic changes inherent in real educational processes, leading to rapid obsolescence and a considerable gap between research and practice.

Second problem: Many studies focus on building models using real, institution-specific datasets [28,29]. However, in many of these studies, the ML model development cycle for predicting academic success often ends at the stage of evaluating model performance on a hold-out test set. Unfortunately, there is typically no information about the model’s deployment or monitoring of its performance over time in the educational process. This gap is often attributed to a lack of infrastructure needed for integrating the developed model into existing educational platforms or academic resistance. The lack of real-world deployment hinders the ability to assess the long-term effects of predictive model use—such as its actual impact on academic performance, interpretation of key performance indicators, relationships between academic indicators, and the model’s stability over time.

Third problem: There is a significant lack of published real-world case studies describing the implementation of learning success prediction models. Only a few studies report on existing systems and longitudinal research [13,30,31,32]. However, research from related domains—such as finance, healthcare, and others [33,34,35,36]—highlights a critical challenge: the degradation of ML model performance over time due to data drift, i.e., changes in the distribution of input features or target variables in new data compared with the training data. Such drift can be triggered by both external and internal factors affecting the educational process, including curriculum updates, changes in student demographics, or the modernization of the institution’s digital infrastructure. We should also note that the educational sphere is characterized by the high variability of the research object itself—the human being. It is well known that the psychological and cognitive characteristics of students change significantly under the influence of social processes. However, methods for detecting data drift and adaptation strategies are rarely applied in LA. Addressing these gaps is essential to ensure the long-term robustness and reliability of academic success prediction models.

It is noteworthy that changes in data distribution pose a common challenge across many applied ML domains. However, the nature of drift can vary significantly depending on the context. In streaming data scenarios (e.g., industrial systems, e-commerce), both sudden drift and incremental drift may occur, with the latter leading to gradual model degradation over subsequent instances. Other fields, such as climate analysis and medicine, can exhibit seasonal drift, where data patterns shift cyclically due to periodic factors. Educational data in learning success forecasting models present a unique case. Academic assessment cycles are synchronized with large student cohorts evaluated simultaneously. Even if the underlying relationships between model variables evolve incrementally, the discrepancy between the predicted and true outcomes becomes observable only after exams, thus data drift manifests at the end of the learning period. It would be valuable to test various methods for detecting and mitigating data drift specifically for this scenario.

To address the aforementioned challenges, we examined existing models developed and deployed in a digital academic success prediction service called “Pythia” (https://p.sfu-kras.ru/ (accessed on 19 August 2025)). This service was developed at the Siberian Federal University (SibFU) in 2023 to provide early predictions of students’ examination session outcomes and to visualize student performance dynamics through a “traffic light” interface [37]. The service enables faculty and administrative staff to identify students experiencing learning difficulties, allowing for timely pedagogical support and interventions [38].

The service’s predictive models address two key tasks: (1) forecasting student success in mastering a course, (2) predicting academic debts during exams. This enables both the early detection of at-risk students and the identification of courses requiring interventions.

Based on monitoring predictive models implemented in the Pythia service, this study aims to explore the practical aspects of deploying ML models in education. We pursue the following research objectives related to model degradation in deployed LA applications:

RA1. Assess the stability of student Digital Profile data and Digital Footprint data, which form the foundation for training academic success prediction models.

RA2. Estimate the performance degradation of the predictive models used in the Pythia system over time.

RA3. Propose and evaluate retraining strategies for learning success prediction models in the presence of data drift and model degradation, with a focus on their effectiveness in the context of the Pythia service.

2. Materials and Methods

2.1. Educational Data and Corresponding Predictive Models

In this study, we examine the degradation of models embedded into the Pythia service. The prediction of learning success is based on a hybrid approach. The work by [39] provides a detailed schematic diagram illustrating the relationships between the models used in the service. It also justifies why such an approach, involving the use of multiple models, can be useful for large HEIs with heterogeneous levels of digitalization. Below, we briefly describe these models and the educational data used for their training.

2.1.1. Digital Profile Dataset and the LSSp Model

We utilized two types of educational data: student Digital Profiles and Digital Footprints from LMSs. The Digital Profile data were extracted from various university electronic services, as detailed in [39]. After preprocessing and feature engineering, we compiled the Digital Profile dataset, which represents the digital personal portraits of students and their previous educational history (where “previous” refers to the students’ academic trajectory and performance in prior semesters).

From the Digital Profile dataset we extracted 115,635 educational profile records corresponding to 38,550 students across the 2018–2021 academic years. On these data we trained the LSSp model—a Model for Forecasting Learning Success in Completing the Semester Based on Previous Educational History, which is described in detail in [39]. This model is a binary classifier, which predicts whether a student has a high risk of failing at least one course in the current semester.

The target variable, “at risk,” is defined as follows: it takes the value 1 for students who failed at least one course in the current semester, and 0 for those who successfully passed all courses. The set of predictors includes the following features:

- Demographic data: age, gender, citizenship status (domestic or international);

- General education data: type of funding, admission and social benefits, field of study, school/faculty, level of degree program (Bachelor’s, Specialist, or Master’s), type of study plan (general or individual), current year of study, and current semester (spring or fall);

- Relocation history: number of transfers between academic programs, academic leaves, academic dismissals, and reinstatements; reasons for the three most recent academic dismissals, reasons for the most recent academic leave and the most recent transfer; types of relocation in the current semester (if applicable);

- Student grade book data: final course grades from the three most recent semesters (both before and after retakes), number of exams taken, number of retakes, and number of academic debts.

An overview of the LSSp model’s features and the target variable, including their definitions, data sources, variable types, and update frequencies, is provided in Table A1.

The LSSp model is implemented as an ensemble of four tree-based algorithms: Random Forest (RF), XGBoost, CatBoost, and LightGBM. Hyperparameter tuning was performed individually for each base model. The resulting ensemble achieved a weighted F1-score of 0.741 on the validation dataset. The predictive performance of the LSSp model is not sufficiently high, suggesting that educational profile data alone may be insufficient for identifying academic performance issues in the current semester.

2.1.2. Digital Footprint Dataset and the LSSc Model

The most significant source of data on students’ current educational behavior and performance is the university’s Moodle-based e-learning platform, “e-Courses”. Data on students’ learning activities and current course scores are extracted weekly from the “e-Courses” platform. These data are used to construct the Digital Footprint dataset.

However, the degree of digitalization varies across academic programs at SibFU. For example, a number of academic programs still rely exclusively on face-to-face instruction, and students in these programs are not represented in the Digital Footprint dataset. Consequently, data from the Digital Footprint dataset cannot be directly incorporated as predictors into the general LSSp model, which was developed for the entire student population.

To address this, a hybrid forecasting model was developed. First, the LSSp model generates a baseline prediction of successful semester completion for all students. Then, for those students with available Digital Footprint data from the “e-Courses” platform, this prediction is refined using an additional model—the Model for Forecasting Learning Success for Completing the Semester Based on Current Educational History (LSSc model).

The LSSc model utilizes data on students’ activity and current performance, as well as information about the individual set of electronic courses studied by each student to predict the same target variable, “at risk”.

We define students’ activity on the “e-Courses” platform in terms of clicks. For this purpose, all user actions in an electronic course are classified into one of two categories:

- Active clicks—interactions with reading materials and viewing the course elements;

- Effective clicks—interactions with the e-course that change its content, e.g., submitting assignments, test attempts, etc.

The current performance of a student on an e-course is represented by the following:

- The overall score in the gradebook of the e-course at a given moment in time;

- The course-specific performance forecasts made by the Model for Forecasting Learning Success in Mastering a Course (LSC Model) [40].

To define a student’s individual set of electronic courses we consider both the number of e-courses the student is enrolled in and the types of these courses in terms of their relevance to the educational process. Based on the Digital Footprint from “e-Courses” we identify the following types of courses:

- Assessing e-courses (in which course grades changed at least once for one of the subscribed users last week).

- Frequently visited e-courses (which were accessed by their subscribers at least 50 times last week).

The LSSc model uses the following features from the Digital Footprint dataset as predictors:

- Week of semester, semester (spring or fall), year of study;

- Number of e-courses a student is subscribed to (total/for assessing/for frequently visited courses);

- Average grades (for all/assessing/frequently visited courses);

- Number of active clicks and number of effective clicks (for all/assessing/frequently visited courses);

- Averages of the course-specific forecasts made by the LSC model (across all/assessing/frequently visited e-courses).

Characteristics such as the number of clicks and grades depend not only on a student’s learning behavior but also on the structure of the e-course. Therefore, to reduce the impact of course design on these variables, we standardize the number of active clicks, the number of effective clicks, and the overall grade using both z-score normalization and min–max normalization. The standardized versions of these variables are then added to the set of predictors defined above. A detailed overview of the LSSc model’s features and target variable is provided in Table A2.
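As a rough illustration of this standardization step, the sketch below rescales a raw indicator within each e-course using both z-scores and min–max scaling. Grouping by course, the record layout, and the function name `standardize_within_course` are assumptions made for the example; the exact grouping used in the service is not specified here.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_within_course(records, field):
    """Add z-score and min-max versions of `field`, computed per course.

    `records` is a list of dicts with a "course_id" key (hypothetical layout).
    """
    by_course = defaultdict(list)
    for rec in records:
        by_course[rec["course_id"]].append(rec[field])

    result = []
    for rec in records:
        values = by_course[rec["course_id"]]
        mu, sigma = mean(values), pstdev(values)
        lo, hi = min(values), max(values)
        out = dict(rec)
        # A constant indicator carries no information, so map it to 0.0.
        out[field + "_z"] = (rec[field] - mu) / sigma if sigma > 0 else 0.0
        out[field + "_minmax"] = (rec[field] - lo) / (hi - lo) if hi > lo else 0.0
        result.append(out)
    return result


# Illustrative records: the two courses differ tenfold in typical click volume.
records = [
    {"student": "s1", "course_id": "math", "active_clicks": 10},
    {"student": "s2", "course_id": "math", "active_clicks": 30},
    {"student": "s1", "course_id": "history", "active_clicks": 200},
    {"student": "s3", "course_id": "history", "active_clicks": 400},
]
scaled = standardize_within_course(records, "active_clicks")
```

After scaling, the less active student in each course receives the same standardized values even though the raw click counts differ by an order of magnitude, which is the effect the normalization is meant to achieve.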

The initial LSSc model [39] was trained for forecasting both in the fall and spring academic semesters.

However, during the analysis of academic curricula and predictor distributions, it was found that the fall and spring semesters differ significantly both in terms of curriculum (e.g., most mathematics courses are taught in the fall, while academic internships typically take place in the spring) and in the distributions of LSSc predictors.

As a result, two separate models were trained on 2022 data, LSSc-fall and LSSc-spring, using an identical set of predictors which included indicators of students’ Digital Footprints from the Digital Footprint dataset and the probability of incurring academic debts as predicted by the LSSp model.

Both models were implemented using the XGBoost classifier (from the xgboost 2.1.3 package), which is robust to multicollinearity [41] and demonstrated superior predictive accuracy in prior studies. Model training was performed using nested cross-validation, which simultaneously optimized predictor selection and hyperparameter tuning.

The key characteristics of the models are presented in Table 1. As shown in the table, the LSSc-fall and LSSc-spring models both demonstrated high performance, achieving a weighted F-score (with the weight β = 2) and recall of over 0.99 in 5-fold cross-validation.
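To make the role of the weight β concrete, the following minimal sketch assumes that the weighted F-score refers to the standard F_β measure, which weights recall β times as heavily as precision. The function name `f_beta` and the input values are illustrative and are not taken from Table 1.

```python
def f_beta(precision, recall, beta=2.0):
    """Standard F-beta score: recall is weighted beta times as much as precision."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom > 0 else 0.0


# With perfect recall but modest precision, F2 rewards the model more than F1.
f2 = f_beta(0.5, 1.0, beta=2.0)
f1 = f_beta(0.5, 1.0, beta=1.0)
```

Choosing β = 2 favors models that miss few at-risk students, at the cost of tolerating more false alarms.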

Table 1.

Model characteristics and top predictors for the LSSc models.

2.2. Data Drift and Model Degradation

In real-world applications of ML models, various factors can lead to a decline in prediction quality. The deterioration of model performance over time is known as model drift or model degradation [35,42,43]. The broad category of issues relating to model performance due to changes in the underlying data is referred to as data shift (or data drift).

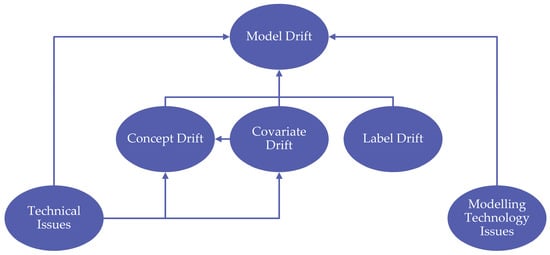

Let $X_1, \dots, X_n$ denote the model features, $Y$ the target variable, $t$ the time of model training, and $T$ the time elapsed since training. Data shift refers to a situation in which $P_t(Y, X_1, \dots, X_n) \neq P_{t+T}(Y, X_1, \dots, X_n)$. Since $P(Y, X_1, \dots, X_n) = P(X_1, \dots, X_n) \cdot P(Y \mid X_1, \dots, X_n)$, the following types of shift can be distinguished:

- Covariate drift: changes in the distribution of features, $P_t(X_1, \dots, X_n) \neq P_{t+T}(X_1, \dots, X_n)$;

- Label drift: changes in the distribution of the target variable, $P_t(Y) \neq P_{t+T}(Y)$;

- Concept drift: changes in the relationships between features and the target, $P_t(Y \mid X_1, \dots, X_n) \neq P_{t+T}(Y \mid X_1, \dots, X_n)$.

These types of drift are illustrated in Figure 1, which also highlights their potential causes related to technical issues and modeling technology.

Figure 1.

Types of model drift and their causes.

Concept shift directly affects the prediction performance since it requires an adaptation of the model parameters to maintain accuracy. At the same time, changes in $P(Y \mid X_1, \dots, X_n)$ might or might not be accompanied by changes in $P(X_1, \dots, X_n)$, i.e., by covariate shift. Conversely, covariate shift does not necessarily lead to a change in the decision boundary, and thus it might not degrade prediction performance. Label shift may affect the prediction performance in cases of significant change, for example, when the number of classes in the classification problem changes [43].

Various types of technical issues can lead to model drift. These include changes in data extraction methods and encoding techniques. Even a change in software can negatively impact model performance. In addition, the modeling approach itself can be a source of model drift. For example, the model may have been trained on a non-representative dataset. A model not designed to handle changes in the data distribution may have been selected due to a better performance on the validation set. Overfitting, the wrong choice of hyperparameters, and similar issues can also occur.

2.3. Methods for Model Drift Detection

To detect data drift and model degradation, both statistical and ML model-based approaches are used. The Population Stability Index (PSI), the Kullback–Leibler divergence, the Jensen–Shannon distance, and the Kolmogorov–Smirnov test are used to compare the distributions of training sets and new data.

In streaming data scenarios, sequential analysis techniques are employed, such as the drift detection method and the early drift detection method, which monitor the classification error rate over time [44,45]. Window-based drift detectors monitor the performance of the most recent observations by comparing it with the performance in a reference window. Adaptive windowing is the most popular method based on the windowing technique [46].

Various methods address data drift using machine learning models. For instance, in [47], a k-Nearest Neighbors approach is employed to identify regions in the feature space where the most significant regional drift occurs. This enables a focused analysis of the most problematic areas. To assess how similar or dissimilar the training and the test datasets are, classification methods can also be used to distinguish between the old and the new data. Such models can be referred to as Classifier-Based Drift Detectors. Unlike statistical tests, they are less prone to producing false positives when applied to high-dimensional data.

From the perspective of further combating data drift, promising approaches include detection techniques based on explainable AI methods, such as SHapley Additive exPlanations (SHAP).

Since our study aims not only to detect drift in high-dimensional educational data, but also to identify the sources of model degradation, we focus on several techniques that are particularly well suited for this purpose:

- PSI—to evaluate drift in univariate marginal and conditional distributions of educational features;

- Classifier-Based Drift Detector—to assess changes in the multivariate structure of student data;

- SHAP—to analyze model degradation in terms of performance metrics.

2.3.1. PSI

To detect drift in the univariate distributions of students’ Digital Profile and Digital Footprint data, this study employed PSI [48,49]. PSI provides a quantitative measure of the presence and magnitude of shifts in distributions, using the following formula:

$$\mathrm{PSI} = \sum_{i=1}^{n} (p_i - q_i) \ln \frac{p_i}{q_i},$$

where $p_i$ and $q_i$ represent the proportions of observations in the $i$-th bin of the compared histograms for distributions $P$ and $Q$, respectively, and $n$ is the number of bins used for grouping the values of distributions $P$ and $Q$.

The categorical features of the Digital Profile and Digital Footprint datasets were analyzed based on the number of unique categories present in the combined dataset from the years being compared. For continuous features, the data were divided into ten equal-width bins. The resulting PSI values were interpreted using established empirical guidelines [50]: PSI < 0.1 indicates negligible shift; 0.1 ≤ PSI < 0.25 indicates moderate shift; and PSI ≥ 0.25 indicates significant shift. It should be noted that the chosen threshold values are not theoretically derived but are widely adopted as practical guidelines in fields such as credit scoring and model validation. The PSI metric is based on the Kullback–Leibler divergence, and these thresholds correspond to empirically established levels of informational divergence between populations.
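The PSI computation for a continuous feature can be sketched as follows. This is a minimal illustration rather than the code used in the study: the equal-width bins are taken over the combined range of both samples, and empty bins are replaced by a small constant `eps` to avoid taking the logarithm of zero. Both choices are assumptions of the sketch, as are the function names and the toy data.

```python
import math


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index between two samples of a continuous feature.

    Bins are equal-width over the combined range; `eps` replaces empty-bin
    proportions to avoid log(0) (an assumption of this sketch).
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for constant data

    def proportions(sample):
        counts = [0] * n_bins
        for x in sample:
            idx = min(int((x - lo) / width), n_bins - 1)
            counts[idx] += 1
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((pi - qi) * math.log(pi / qi) for pi, qi in zip(p, q))


def interpret_psi(value):
    """Map a PSI value to the empirical categories used in the text."""
    if value < 0.1:
        return "negligible"
    if value < 0.25:
        return "moderate"
    return "significant"


# Toy data: an unchanged feature versus one whose values moved by 30 units.
reference = [i % 100 for i in range(1000)]
unchanged = list(reference)
shifted = [x + 30 for x in reference]

print(interpret_psi(psi(reference, unchanged)))
print(interpret_psi(psi(reference, shifted)))
```

For categorical features, the same sum would run over categories instead of bins, with proportions computed per category.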

2.3.2. Classifier-Based Drift Detectors

To identify systematic changes in the multivariate structure of students’ data, classification models were employed [51,52,53]. The target variable for these models was the year in which the corresponding data were collected, and the baseline classification algorithm was the RandomForestClassifier (RF-Drift Detector) with default parameters (n_estimators = 100, criterion = “gini”, max_depth = None, min_samples_split = 2) from the scikit-learn library (v1.6.1) for Python. This algorithm was chosen due to its ability to model nonlinear relationships and interactions between features, which is particularly important for analyzing complex multivariate data.

The original dataset was split into training and testing sets in a 70/30 ratio. Model performance was evaluated using the metrics accuracy, precision, recall, and F1-score. To prevent overfitting, a 10-fold cross-validation was applied. In cases where the classifier achieved a high performance (F1-score > 0.8), it was concluded that the data contained strong temporal patterns, indicating significant data drift across the compared years. Additionally, a feature importance analysis of the trained classifier helped identify the key features responsible for predicting the data collection year, and thus for detecting potential drift in the structure of the Digital Profile and Digital Footprint data.
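The idea of a classifier-based drift detector can be sketched with scikit-learn as shown below. The sketch uses synthetic two-period data, a stratified 70/30 split, and a weighted F1-score. The data, the stratification, the fixed random seed, and the helper name `drift_detector_f1` are assumptions made for illustration, and the 10-fold cross-validation described above is omitted for brevity.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split


def drift_detector_f1(X_old, X_new, seed=0):
    """Train a classifier to tell two periods apart; return (F1, importances)."""
    X = np.vstack([X_old, X_new])
    # The target is the period label: 0 for the old data, 1 for the new data.
    period = np.concatenate(
        [np.zeros(len(X_old), dtype=int), np.ones(len(X_new), dtype=int)]
    )
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, period, test_size=0.3, stratify=period, random_state=seed
    )
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(X_tr, y_tr)
    score = f1_score(y_te, clf.predict(X_te), average="weighted")
    return score, clf.feature_importances_


# Synthetic data: three features, with drift injected into the second one only.
rng = np.random.default_rng(0)
X_2022 = rng.normal(0.0, 1.0, size=(500, 3))
X_2023_stable = rng.normal(0.0, 1.0, size=(500, 3))
X_2023_drifted = X_2023_stable.copy()
X_2023_drifted[:, 1] += 4.0

f1_stable, _ = drift_detector_f1(X_2022, X_2023_stable)
f1_drifted, importances = drift_detector_f1(X_2022, X_2023_drifted)
print(f"stable: {f1_stable:.2f}, drifted: {f1_drifted:.2f}")
print("most important feature index:", int(importances.argmax()))
```

When the two periods come from the same distribution, the detector performs close to chance; when one feature drifts, the F1-score exceeds the 0.8 threshold and the feature importances point to the drifting feature.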

2.3.3. SHAP Loss

SHAP, introduced in [54], is a method for explaining individual predictions using Shapley values. The Shapley value represents the fair and proportional contribution of each player in a cooperative game to the total payoff. In the context of ML models, SHAP treats each predictor as a “player” and assesses its individual contribution to the predicted outcome.

SHAP values can be used to diagnose model drift. The idea is that if new data are distributed similarly to the training set, then a fixed value of a given feature in the new dataset should contribute to the result in the same way as the corresponding feature value in the training data. A significant number of violations of this principle indicates the presence of model drift. Building on this idea, the authors of [55] introduced the L-CODE framework, which enables the detection of model drift in the absence of the true outcomes for incoming data points.

However, a shift in SHAP does not always indicate a decline in prediction performance. To better capture model degradation, the outcome of the cooperative game can be defined in terms of the loss function rather than the model’s prediction. SHAP values computed with respect to the model’s loss—referred to as SHAP loss—can be used to assess the contribution of each feature to prediction errors. In the study [56], the SHAP loss values were employed to monitor both the model performance and the predictive power of individual features in deployed models.

Monitoring models using SHAP loss offers several advantages over standard monitoring based solely on the loss function or prediction quality metrics. When monitoring overall loss, issues related to individual predictors may be masked by compensatory effects from other predictors. This can lead to undetected problems, such as the misencoding of categorical variables—for example, when categories that occur with similar frequency are mistakenly interchanged. By tracking the contribution of each predictor to the loss function, we can more effectively identify both genuine model drift and arising technological issues, for instance, from changes in dictionaries or encoding procedures.

In our study, we detect model drift for each individual predictor using SHAP loss values. To do this, for each feature $X$ we test the hypothesis that there is a significant difference in SHAP loss values between the training dataset ($\mathrm{SHAP\,loss}_{\mathrm{train}}(X)$) and the new dataset ($\mathrm{SHAP\,loss}_{\mathrm{new}}(X)$).

When sample sizes are large, using Student’s t-test may lead to statistically significant differences that do not meaningfully affect prediction performance. Therefore, conclusions about data drift should consider both the statistical significance of the difference in SHAP loss values and the effect size. Moreover, at conventional significance levels (e.g., α = 0.1, 0.05, 0.01), the power of the t-test may be excessively high, increasing the risk of detecting trivial differences. Thus, we recommend conducting a power analysis before hypothesis testing and potentially lowering the significance level to balance Type I and Type II error rates.

We propose the following procedure:

- Define a practically meaningful effect size (prac_effect).

- For each predictor $X$, using the samples $\mathrm{SHAP\,loss}_{\mathrm{train}}(X)$ and $\mathrm{SHAP\,loss}_{\mathrm{new}}(X)$, the following steps can be performed:

  - (a) Perform a power analysis to determine an appropriate significance level α;

  - (b) Using the t-test, compare the means of $\mathrm{SHAP\,loss}_{\mathrm{train}}(X)$ and $\mathrm{SHAP\,loss}_{\mathrm{new}}(X)$;

  - (c) Calculate the observed effect size (Cohen’s d);

  - (d) Conclude that data drift is present for predictor $X$ if the difference in SHAP loss is statistically significant and Cohen’s d ≥ prac_effect.

To determine prac_effect, i.e., the effect that is meaningful from a practical point of view, it is possible either to establish the minimally significant effect in terms of the possible values of the model’s prediction [57] or to use Cohen’s d values. In our study, we use the second approach and, following the gradation of Cohen’s d suggested by Sawilowsky [58], classify an effect size below 0.01 as very small, between 0.01 and 0.2 as small, between 0.2 and 0.5 as medium, between 0.5 and 0.8 as large, between 0.8 and 1.2 as very large, and above 1.2 as huge.
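The procedure above can be sketched for a single predictor as follows, assuming the SHAP loss values for the training and new data have already been computed. Several simplifications are assumptions of this sketch rather than details from the study: a large-sample normal approximation replaces the exact t distribution, a Welch-type statistic is used, the target power is set to 0.8, and the significance level is capped at 0.05. The function name `shap_loss_drift` and the toy data are hypothetical.

```python
from math import erfc, sqrt
from statistics import NormalDist, mean, variance


def shap_loss_drift(train, new, prac_effect=0.2, power=0.8, max_alpha=0.05):
    """Test one predictor for drift in its SHAP loss values."""
    n1, n2 = len(train), len(new)
    m1, m2 = mean(train), mean(new)
    v1, v2 = variance(train), variance(new)

    # (a) Power analysis: critical value at which an effect of size
    # prac_effect is detected with the requested power (never looser than
    # the conventional level max_alpha).
    norm = NormalDist()
    noncentrality = prac_effect * sqrt(n1 * n2 / (n1 + n2))
    z_crit = max(noncentrality - norm.inv_cdf(power),
                 norm.inv_cdf(1 - max_alpha / 2))
    alpha = erfc(z_crit / sqrt(2))  # implied two-sided significance level

    # (b) Large-sample test of equal means (Welch-type statistic).
    z = (m2 - m1) / sqrt(v1 / n1 + v2 / n2)
    p_value = erfc(abs(z) / sqrt(2))

    # (c) Observed effect size: Cohen's d with the pooled standard deviation.
    pooled_sd = sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    d = abs(m2 - m1) / pooled_sd

    # (d) Drift requires both statistical and practical significance.
    # Comparing |z| with z_crit is equivalent to p_value <= alpha but avoids
    # floating-point underflow for very large samples.
    drift = abs(z) >= z_crit and d >= prac_effect
    return {"alpha": alpha, "p_value": p_value, "cohens_d": d, "drift": drift}


# Toy SHAP loss samples: a small and a large shift of the same base values.
base = [((i * 37) % 101) / 100 for i in range(2000)]
small_shift = [x + 0.03 for x in base]
large_shift = [x + 0.30 for x in base]

result_small = shap_loss_drift(base, small_shift)
result_large = shap_loss_drift(base, large_shift)
print(result_small["drift"], result_large["drift"])
```

In this toy example, the small shift would be declared significant at the conventional level of 0.05, yet it is not flagged as drift because its effect size stays below prac_effect, which is the behavior the procedure is designed to achieve for large samples.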

To compute SHAP loss values, we use the SHAP library (version 0.46.0) for Python.

3. Results

3.1. Data Drift in the Digital Profile Dataset

To study the stability of the Digital Profile dataset, we used data from the 2018 to 2023 academic years. Two methods were employed to detect structural changes in the data: calculating PSI and training Classifier-Based Drift Detectors to classify Digital Profile data by year. The use of both methods is motivated by their complementary nature. PSI provides an assessment of the distributions of individual features, allowing for the identification of features exhibiting significant data drift. However, this method does not account for potential interactions between features or their combined influence on the overall data structure. In contrast, Drift Detector models can assess the distinguishability of data across time periods, taking into account both individual features and their combinations, which is important for uncovering hidden patterns of data drift.

The Digital Profile data were divided into three periods: 2018–2021 (data used for training the LSSp model), 2022 (data used for training the LSSc model), and 2023 (data which is new for both models).

The PSI was calculated for both unconditional and conditional distributions given the target values. For computing PSI, the feature distributions were divided into 10 bins.

For unconditional distributions of features the PSI values were calculated between the periods 2018–2021 and 2022, 2022 and 2023, and 2018–2021 and 2023. It was found that only three features exhibited PSI values indicating a moderate data shift between the data of 2018–2021 and 2023: “Number of courses in the previous semester” (PSI = 0.122), “Number of academic debts in the previous semester” (PSI = 0.145), and “Year of study_3” (PSI = 0.111). For all other comparisons, all features had PSI values less than 0.1, indicating a high stability of the univariate distributions of the Digital Profile characteristics.

A similar PSI calculation was performed for conditional distributions of features given different values of the target variable (at_risk = 0 and at_risk = 1). The analysis of conditional distributions allows for the identification of data shift in the group of successful students (at_risk = 0) and the group of students with academic debts (at_risk = 1), which is important for understanding the dynamics of academic success over time. The feature “Year of study_3” exhibited a moderate shift (PSI = 0.146) between 2018–2021 and 2022 for students without academic debts, as well as between 2018–2021 and 2023 for both at_risk = 0 (PSI = 0.188) and at_risk = 1 (PSI = 0.110). Three other features demonstrated a moderate shift between 2018–2021 and 2023: “Number of courses from two semesters prior” (PSI = 0.121 for at_risk = 1), “Number of academic debts from two semesters prior” (PSI = 0.139 for at_risk = 1), and “Number of courses in the previous semester” (PSI = 0.116 for at_risk = 0 and 0.164 for at_risk = 1). All other conditional distributions compared across the observed years had PSI values less than 0.1.

To gain a deeper understanding of the stability of the Digital Profile data and to uncover hidden patterns of data drift, we trained the RF-Drift Detector model with default hyperparameters. The model classifies data by year (2018–2021, 2022, and 2023) and exhibits the following quality metrics on the test sample (see Table 2).

Table 2.

Classification metrics of the RF-Drift Detector.

The RF-Drift Detector demonstrates high classification performance on older data (2018–2021); however, its performance decreases on more recent data (2022 and 2023). This indicates a significant distribution shift between the older and newer datasets, while the data from 2022 and 2023 are harder to distinguish from each other and thus appear to be more stable. The significance of data drift related to specific features can be assessed using their feature importance (FI) scores in the RF-Drift Detector. The following features exhibit the highest importance scores: “Age” (FI = 0.166), “Number of courses in the previous semester” (FI = 0.06), “GPA after retakes from the previous semester” (FI = 0.031), “Number of courses from two semesters prior” (FI = 0.03), and “GPA after the previous semester” (FI = 0.03). The high feature importance for “Age” may indicate a shift in the demographic composition of the data within the digital educational profile, while the other four top-ranked predictors likely reflect changes in the curricula and academic workload. An additional factor contributing to these shifts may be the COVID-19 pandemic, which significantly altered the format of instruction. The results of the RF-Drift Detector-based analysis partially align with those of the PSI, while also revealing complex changes in joint feature distributions that were not captured by univariate analysis.
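The idea of a classifier-based drift detector can be illustrated with scikit-learn. The sketch below is not the study's pipeline: it uses synthetic data with two periods instead of three, and the variable names are hypothetical. A random forest with default hyperparameters (only `random_state` is fixed for reproducibility) is trained to predict the period label. A high score means the periods are distinguishable, and the feature importances point to the features that drive the separation.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 600
# Synthetic data: feature 0 shifts between periods, feature 1 does not.
X_old = np.column_stack([rng.normal(0, 1, n), rng.normal(0, 1, n)])
X_new = np.column_stack([rng.normal(2, 1, n), rng.normal(0, 1, n)])
X = np.vstack([X_old, X_new])
period = np.array([0] * n + [1] * n)  # the detector's target is the period label

X_tr, X_te, y_tr, y_te = train_test_split(
    X, period, test_size=0.3, random_state=0, stratify=period
)
detector = RandomForestClassifier(random_state=0).fit(X_tr, y_tr)

score = f1_score(y_te, detector.predict(X_te), average="weighted")
importances = detector.feature_importances_
print(f"weighted F1 = {score:.2f}, importances = {importances.round(2)}")
```

If the periods were drawn from the same distribution, the score would stay close to chance level, which is the baseline against which detector performance should be read.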

In summary, the PSI results indicate that most features exhibit high stability (PSI < 0.1) and show no significant changes in univariate distributions, with the exception of a few features demonstrating moderate drift. In the multidimensional feature space, the RF-Drift Detector identified data shift between older and newer datasets along with a moderate stability of features’ distribution in the new data from 2022–2023.

3.2. Model Degradation of the LSSp Model

The LSSp model, trained on digital educational profile data from the 2018–2021 academic years, was used to make predictions for the 2022–2024 academic years. The prediction performance slightly declined during this period (see Table 3, lines 1, 2, and 5).

Table 3.

Performance of the LSSp model.

Prediction quality in the fall semesters is consistently lower than in the spring semesters (see Table 3, lines 3, 4, 6, 7, and 8). The decline in model performance, especially recall, results in more at-risk students being missed, reducing the effectiveness of the Pythia support service. Delayed interventions increase the risk of academic failure for students who could benefit from early help. This highlights the real-world impact of model degradation and the need for continuous monitoring and recalibration.

We found that the primary contributor to this decline in quality is the model’s performance on first-year students. Table 4 presents the average weighted F-score of the LSSp model’s predictions on new data from fall 2022 to fall 2024. The results show that in the spring semesters, the prediction quality for first-year students is nearly identical to that for students in the second year of study and above; moreover, the average F-score for first-year students is slightly higher. In contrast, during the fall semesters of 2022, 2023, and 2024, the average weighted F-score for first-year students is significantly lower than for upper-year students (by 20%, 18%, and 16%, respectively).

Table 4.

Comparison of the weighted F-scores for first-year and upper-year students.
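For readers who want to reproduce this kind of subgroup comparison, the following sketch computes a support-weighted F-score separately for each student group. The function names and the toy labels are hypothetical, and the data are made up purely to show the mechanics.

```python
import numpy as np

def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's share of y_true."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    total = 0.0
    for cls in np.unique(y_true):
        tp = np.sum((y_pred == cls) & (y_true == cls))
        fp = np.sum((y_pred == cls) & (y_true != cls))
        fn = np.sum((y_pred != cls) & (y_true == cls))
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        total += f1 * np.mean(y_true == cls)
    return float(total)

def weighted_f1_by_group(y_true, y_pred, group):
    """Weighted F-score computed separately within each group label."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    return {str(g): weighted_f1(y_true[group == g], y_pred[group == g])
            for g in np.unique(group)}

# Toy example: 1 = at risk, 0 = not at risk.
y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 0]
group = ["first", "first", "first", "first", "upper", "upper"]
print(weighted_f1_by_group(y_true, y_pred, group))
```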

This decline is likely due to the absence of academic performance data from previous semesters for first-year students in the fall semester. Since they have only recently entered the university, all predictors related to prior academic performance are missing. Notably, predictors such as “GPA in the previous semester”, “GPA after second retakes (previous semester)”, and “Number of academic debts in the previous semester” consistently receive high feature importance scores across all the models in the LSSp ensemble. Thus, these predictors significantly influence the model’s output. In spring, first-year students have already received grades for the fall term, and consequently, information about their prior academic performance becomes available. As a result, the prediction quality for these students improves, becoming comparable to that of students in higher years.

The decline in prediction quality may be attributed to data drift. In Section 3.1, we assessed the shifts in the distributions of model predictors. However, to identify the root causes of performance degradation, it is also important to assess model drift by comparing the SHAP loss values between older and newer data.

Since the LSSp model is an ensemble of tree-based algorithms, we use the TreeExplainer algorithm [56] with interventional feature perturbation to calculate SHAP loss values for all predictors in the base models. These values are computed on the training dataset (2018–2021) and on new data (the fall and spring semesters of 2022, the fall and spring semesters of 2023, and the fall semester of 2024). We then compare the SHAP loss distributions from the 2018–2021 dataset to those from each of the new datasets using the methodology described in Section 2.3.3.

Most of the statistically significant differences in SHAP loss exhibit a small effect size (Cohen’s d < 0.2). Only two predictors show a medium effect size for one of the base models (RF): “Number of retakes from the previous session”, with Cohen’s d = 0.25 when comparing the 2018–2021 data to fall 2023; and “Age”, with Cohen’s d = 0.2 when comparing the 2018–2021 data to fall 2022. Thus, from the perspective of SHAP loss analysis, there is no evidence of substantial data drift for the predictors used in the LSSp model. Therefore, to improve the prediction quality on new data, we do not exclude any predictors from the LSSp model. Instead, we explore retraining the model on more recent educational data.

Two strategies are considered:

- Extend the training dataset by adding all academic semesters up to the target year. For example, to make predictions for 2023, the model is trained on data from 2018 to 2022; for predictions in 2024, the training data includes the years from 2018 to 2023.

- Restrict the training dataset to the previous academic year only.
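The two strategies differ only in which academic years enter the training window. A small sketch, with a hypothetical function name and strategy labels, and assuming that 2018 is the first available year as in the Digital Profile dataset:

```python
def training_years(target_year, strategy, first_year=2018):
    """Return the academic years used to train a model that predicts target_year."""
    if strategy == "expanding":
        # All years from the first available one up to the year before the target.
        return list(range(first_year, target_year))
    if strategy == "previous_year":
        # Only the most recent completed year.
        return [target_year - 1]
    raise ValueError(f"unknown strategy: {strategy}")

print(training_years(2023, "expanding"))
print(training_years(2024, "previous_year"))
```

The expanding window maximizes the amount of training data, while the single-year window keeps the training distribution closest to the period being predicted.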

Both retraining strategies—using extended historical data or data from only the previous year—lead to slight improvements in prediction metrics for spring 2023 and fall 2024 (see Table 5). However, since including the oldest data in training may introduce noise over time, we have adopted the strategy of retraining the LSSp model on data from the previous academic year prior to making predictions for the upcoming year.

Table 5.

Metrics of the LSSp models related to different refitting strategies.

In summary, no substantial model drift was observed for the LSSp model over the analyzed time period. Stable prediction quality can be maintained by retraining the model on data from the previous academic year, using the same set of predictors and the same hyperparameter values for the ensemble’s base models.

3.3. Data Drift in the Digital Footprint Dataset

Following the approach used for the Digital Profile dataset, a similar analysis of temporal data stability was conducted for the Digital Footprint dataset. The Digital Footprint data related to the 2022–2024 academic years were collected via the “e-Courses” electronic learning platform. For the analysis, the data were grouped into three fall semesters (2022, 2023, and 2024) and two spring semesters (2022 and 2023).

PSI values for three pairs of datasets—2022 and 2023, 2023 and 2024, 2022 and 2024—were computed for both the unconditional and conditional distributions of features separately for the fall and spring semesters.

The results for unconditional distributions in fall semesters were the following:

- All PSI values for feature distributions between 2022 and 2023 were below 0.1, indicating no significant data drift.

- For the 2023–2024 comparison, the feature “Year of study” exhibited a substantial drift with PSI = 0.5. Two other features, “Average grade for assessing e-courses (min–max scaled)” with PSI = 0.123 and “Average grade for all e-courses (min–max scaled)” with PSI = 0.124, showed moderate drift. For all other features, PSI values were below 0.1.

- A comparison between 2022 and 2024 revealed that half of the features demonstrated drift that was moderate to significant. A new feature exhibiting substantial drift was “Number of active clicks for assessing e-courses” (PSI = 0.252), which had previously been stable. The moderate drift with PSI in the range from 0.1 to 0.25 was detected for the following features: “Number of effective clicks for frequently visited e-courses”, “Average forecast of model LSC for assessing/frequently visited/all e-courses”, “Number of active/effective clicks”, “Number of all/assessing e-courses”, “Average grade for assessing e-courses (min–max scaled)”, and “Average grade for all e-courses (min–max scaled)”.

For the conditional distributions of features given the target values, the following results were obtained:

- For students without academic debts (at_risk = 0), PSI values between 2022 and 2023 showed a moderate shift in the features “Number of active clicks” (PSI = 0.142), “Number of active clicks for assessing e-courses” (PSI = 0.143), and “Number of active clicks for frequently visited e-courses” (PSI = 0.142). All other features remained within the acceptable range (PSI < 0.1).

- All conditional distributions of features given at_risk = 1 were stable between 2022 and 2023 (PSI < 0.1).

- Conditional distributions given at_risk = 0 remained within the range of insignificant change between 2023 and 2024 with PSI < 0.1, except for the feature “Year of study”, which exhibited substantial drift (PSI = 0.575).

- A significant drift in the conditional distribution given at_risk = 1 between 2023 and 2024 was also observed for “Year of study” (PSI = 0.411). Moderate drift was found for “Average forecast of model LSC for assessing/frequently visited/all e-courses”, “Average grade for assessing e-courses (min–max scaled)”, and “Average grade for all e-courses (min–max scaled)”.

- Out of the 30 Digital Footprint features compared between 2022 and 2024, only 10 remained within the threshold for insignificant drift (PSI < 0.1) for both conditional distributions given at_risk = 0 and at_risk = 1. These included “Average grade for all/assessing/frequently visited e-courses”, “Number of frequently visited courses”, and their min–max scaled variants.

For the unconditional distributions of features in the spring semesters, PSI values were calculated between 2022 and 2023. A moderate drift was observed for four features: “Year of study” (PSI = 0.121), “Number of active clicks” (PSI = 0.234), “Number of active clicks for assessing e-courses” (PSI = 0.235), and “Number of active clicks for frequently visited e-courses” (PSI = 0.234). Importantly, the PSI values for the activity-related features are on the threshold of significant drift.

In the conditional distributions of features between 2022 and 2023, for learners with at least one academic debt, moderate drift was observed in the activity features “Number of active clicks” (PSI = 0.17), “Number of active clicks for assessing e-courses” (PSI = 0.172), “Number of active clicks for frequently visited e-courses” (PSI = 0.170), and in the feature “Prediction_proba_LSSp” (PSI = 0.118).

The data for conditional distributions given at_risk = 0 showed significant drift in the features “Number of active clicks” (PSI = 0.317), “Number of active clicks for assessing courses” (PSI = 0.319), and “Number of active clicks in frequently visited courses” (PSI = 0.318), and moderate drift in “Year of study” (PSI = 0.158), “Number of effective clicks” (PSI = 0.113), “Number of effective clicks for assessing courses” (PSI = 0.115), and “Number of effective clicks in frequently visited courses” (PSI = 0.113).

The analysis of both conditional and unconditional distributions of Digital Footprint data revealed the following trends:

- The distributions can be considered stable in the short term, particularly during the fall semesters. The results show only minor drift between the years 2022 and 2023, and between 2023 and 2024.

- In the long term, data drift tends to accumulate, leading to the emergence of moderate to significant drift across several features.

- The analysis of conditional distributions demonstrated that data drift depends on learner groups as defined by the target variable. For students without academic debts, moderate changes in activity on the e-learning platform “e-Courses” were observed, with more pronounced variations during spring semesters.

Two Classifier-Based Drift Detector models were also trained on student Digital Footprint data—one for the fall semesters and another for the spring semesters. For the fall semesters, a multiclass classification model was used with three different target labels corresponding to the academic years (2022, 2023, and 2024). For the spring semesters, a binary classification model was developed (2022, 2023).

The detector model for the fall semesters demonstrated excellent classification performance on the test set, with precision, recall, and F1-score metrics exceeding 0.97. Feature importance analysis for the multiclass model did not reveal a strong dominance of any particular feature. The most significant feature was “Year of study” (FI = 0.086), while the importance of the remaining features gradually decreased from 0.044 to 0.025.

The binary classification model for the spring semesters yielded similarly strong results, with precision, recall, and F1-score values of 0.98. The ranked list of feature importances closely mirrored that of the fall semester model (in terms of order and magnitude).

The high classification performance of the detector models indicates that data from different years differ significantly in the multidimensional feature space. This confirms the presence of a data shift in students’ Digital Footprints, which may be attributed to changes in student behavior or external factors (e.g., modifications in the university’s digital environment or academic programs). Despite the high stability of most individual features (PSI < 0.1), the RF-Drift Detectors identified hidden patterns of change that were not detected through the PSI analysis of univariate and conditional distributions.

3.4. Model Degradation of the LSSc Models

The predictive performance of the LSSc-fall and LSSc-spring models dropped sharply on new data:

- Fall 2023: recall = 0.58; weighted F-score = 0.695;

- Fall 2024: recall = 0.689; weighted F-score = 0.748;

- Spring 2023: recall = 0.741; weighted F-score = 0.757.

Comparing these performance metrics with those reported for 2022 in Table 1 (fall 2022: recall = 0.991, weighted F-score = 0.990; spring 2022: recall = 0.993, weighted F-score = 0.994), we concluded that the models were overfitted. Retraining the models using a more conservative set of hyperparameters may help improve their generalization and predictive performance on new data.

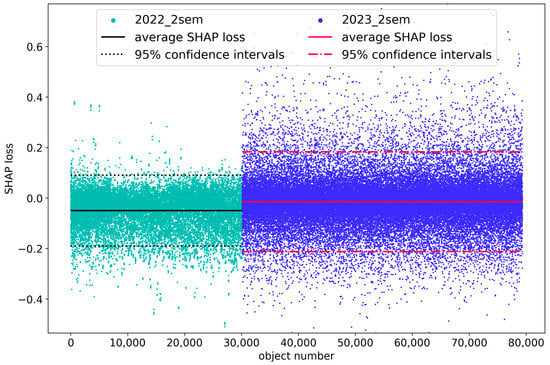

Next, we examine model drift for the LSSc-fall and LSSc-spring models. To assess how significantly each feature’s contribution to the loss function has changed, we conducted a SHAP loss analysis of both the LSSc-fall and LSSc-spring models.

The week of the semester during which predictors are computed and predictions are made may affect the distribution of predictors and their SHAP loss values. For instance, due to varying processes for enrolling students in electronic courses, a predictor like “Number of active clicks” may follow different distributions in the first week of instruction across academic years. However, by the end of the semester, such differences may diminish. Therefore, SHAP loss values for model features were calculated and compared on a weekly basis.

Figure 2 displays the SHAP loss distributions for the predictor “Average forecast of model LSC” in week 14 of the semester, comparing the training dataset (spring 2022) with new data (spring 2023). Weeks are aligned according to the academic schedule, starting from week 1 of each semester, to ensure temporal consistency in pedagogical context across years. A substantial shift in the mean SHAP loss is evident: the average SHAP loss for this predictor in the training data is −0.05, while in the 2023 data it equals 0.01. This indicates that in 2022, the predictor improved model performance, whereas in 2023, its inclusion slightly degraded prediction quality. The difference in mean SHAP loss is statistically significant (p-value < 10⁻⁶) with Cohen’s d = 0.39, which corresponds to a medium effect. Furthermore, the figure shows that the variance of SHAP loss values increased notably in 2023, particularly toward higher values of the loss function.

Figure 2.

SHAP loss distributions for the predictor “Average Forecast of Model LSC”.

Table A3 presents a comparison of the SHAP loss values for the LSSc-spring model when applied to data from 2022 and 2023. The predictors are grouped based on the maximum observed Cohen’s d values across different weeks of the semester and are sorted in descending order by these values.
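The grouping used in Table A3 (maximum Cohen’s d across weeks, sorted in descending order) can be sketched as follows. The data layout, the predictor names, and the pooled-standard-deviation form of Cohen’s d are assumptions made for illustration. The SHAP loss values themselves are assumed to have been computed beforehand, and each sample must have non-zero variance.

```python
import numpy as np

def cohens_d(a, b):
    """Absolute Cohen's d using a pooled standard deviation (assumed form)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    pooled_var = ((len(a) - 1) * a.var(ddof=1) + (len(b) - 1) * b.var(ddof=1)) / (len(a) + len(b) - 2)
    return abs(a.mean() - b.mean()) / np.sqrt(pooled_var)

def rank_by_max_weekly_d(train, new):
    """train/new: {predictor: {week: SHAP loss values}}; returns (predictor, max d) pairs."""
    max_d = {}
    for name in train:
        # Compare only weeks present in both periods, aligned by week number.
        weeks = sorted(set(train[name]) & set(new[name]))
        max_d[name] = float(max(cohens_d(train[name][w], new[name][w]) for w in weeks))
    return sorted(max_d.items(), key=lambda kv: kv[1], reverse=True)

# Made-up SHAP loss values for two hypothetical predictors over two weeks.
train = {"feature_a": {1: [0, 1, 2], 2: [0, 1, 2]},
         "feature_b": {1: [0, 1, 2], 2: [0, 1, 2]}}
new = {"feature_a": {1: [0, 1, 2], 2: [2, 3, 4]},
       "feature_b": {1: [1, 2, 3], 2: [0, 1, 2]}}
print(rank_by_max_weekly_d(train, new))
```

Taking the maximum over weeks is a conservative choice: a predictor is ranked by its worst week, even if its SHAP loss is stable for the rest of the semester.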

As shown in Table A3, the majority of the predictors in both models exhibit a medium level of drift in 2023 in terms of SHAP loss—25 out of 33 predictors in the LSSc-spring model and 28 out of 35 for the LSSc-fall model. The least stable were the features describing the total set of e-courses in which the student is enrolled: “Total number of e-courses”, “Average grade for all e-courses”, as well as the predictor “Week”. For these predictors, Cohen’s d > 0.4, which can be interpreted as a medium level of drift. The most stable (for both the fall and spring semesters) were “Prediction_proba_LSSp” and “Year of study” (for values 3, 4, and 5). For these predictors, Cohen’s d < 0.2, which corresponds to a small level of drift. In the Discussion section, we will explore the possible reasons for the observed instability of data. This substantial shift in the distributions may have contributed to the decline in prediction performance on new data. Based on this, we hypothesize that removing predictors with the most significant SHAP loss drift could improve model accuracy.

To explore ways to improve prediction quality on new data, we implemented the following model modification strategies:

- Retraining the LSSc-spring and LSSc-fall models on 2022 data using the full set of predictors but with a more conservative set of hyperparameters;

- Sequentially removing predictors from the models—starting from the top of Table A3—that showed the most pronounced SHAP loss drift in 2023, followed by retraining on 2022 data;

- Combining both approaches: applying a more conservative set of hyperparameters and removing predictors with the most significant SHAP loss drift in 2023.

Strategies 1 and 2 did not lead to a noticeable improvement in prediction quality for 2023 and 2024. However, strategy 3 yielded a slight positive effect. Therefore, we examine the resulting models in more detail. The key characteristics of these modified models are presented in Table 6.

Table 6.

Impact of predictor removal and hyperparameter regularization on the LSSc model’s stability.

As shown in Table 6, using strategy 3 resulted in the following modifications:

- Model complexity was controlled through regularization parameters: max_depth, min_child_weight, reg_alpha, reg_lambda, and gamma.

- Robustness to noise was enhanced by using non-default values for subsample and colsample_bytree.

- Predictors that exhibited the most significant SHAP loss drift in 2023 compared with 2022 were excluded from the models. After modification, both the LSSc-fall and the LSSc-spring models include only nine predictors.
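The combined strategy can be sketched as a configuration fragment plus a small helper for feature exclusion. The default values shown are XGBoost's library defaults for these parameters. The "conservative" values are illustrative assumptions only and are not the values reported in Table 6. The helper name and the example feature names are likewise hypothetical.

```python
# XGBoost library defaults for the parameters named in the text.
xgb_defaults = {
    "max_depth": 6, "min_child_weight": 1, "reg_alpha": 0.0, "reg_lambda": 1.0,
    "gamma": 0.0, "subsample": 1.0, "colsample_bytree": 1.0,
}

# Illustrative conservative settings (assumed values, NOT those of Table 6).
conservative_params = {
    "max_depth": 3,           # shallower trees
    "min_child_weight": 5,    # larger minimum leaf weight
    "reg_alpha": 1.0,         # L1 penalty on leaf weights
    "reg_lambda": 5.0,        # stronger L2 penalty on leaf weights
    "gamma": 1.0,             # minimum loss reduction required to split
    "subsample": 0.8,         # row subsampling per tree
    "colsample_bytree": 0.8,  # column subsampling per tree
}

def keep_most_stable(features_ranked_by_drift, n_keep):
    """Keep the n_keep least-drifting features; input is sorted from most to least drift."""
    return features_ranked_by_drift[-n_keep:] if n_keep > 0 else []

ranked = ["feature_a", "feature_b", "feature_c", "feature_d"]  # hypothetical ranking
print(keep_most_stable(ranked, 2))
```

A dictionary of this form could be passed as keyword arguments to `xgboost.XGBClassifier` before refitting on the reduced feature set.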

The modified models exhibit slight underfitting on the 2022 training data but show improved predictive performance on the new data, with modest gains in the weighted F-score and more noticeable improvements in recall. The performance metrics of the modified LSSc-spring and LSSc-fall models now exceed those of the baseline LSSp model across all datasets. However, this improvement is relatively small, raising questions about the overall effectiveness of incorporating LMS Digital Footprint data to refine predictions of academic success based on students’ Digital Profiles.

4. Discussion

4.1. Data Stability

The analysis of the Digital Profile data indicates its stability over time, with only moderate PSI shifts in a few features. However, models trained on this data show relatively low precision and recall (Table 5). These results imply that static characteristics of a student’s profile, such as demographics, prior academic achievements, etc., although providing long-term model stability, are limited in their ability to reflect the dynamics of learning behavior. This highlights the need to supplement profile data with Digital Footprint features, providing more recent information about students.

At the same time, the results of this study also reveal instability in both the Digital Footprint data itself and the LSSc models trained on it. Moreover, the applied model modification strategies aimed at improving performance did not lead to substantial gains. This raises important questions regarding the causes of Digital Footprint instability and potential approaches to address it.

The primary source of this instability lies in the high variability of key components within the digital educational ecosystem.

The volume, quality, and structure of students’ Digital Footprints are directly dependent on the university’s electronic learning environment. Instructors significantly influence Digital Footprint formation by designing curricular content and e-courses, as well as by selecting the instructional mode—blended, traditional, or fully online. These choices determine the tools used and directly shape students’ Digital Footprints, even when course content is identical.

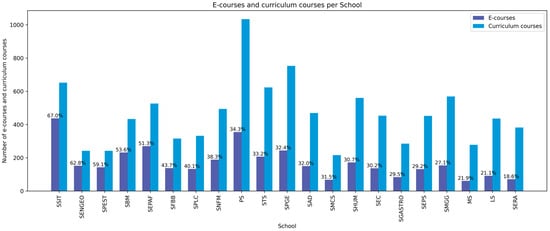

This variability in e-course design likely explains the moderate SHAP loss drifts in click- and grade-related features (e.g., “Number of active clicks (z-scaled)”, “Average grade for all e-courses”, “Average grade for assessing e-courses”, and “Number of effective clicks for assessing e-courses (z-scaled)”, see Table A3).

Another factor contributing to the variability of the Digital Footprint data is the differing levels of digitalization across institutions or departments. This leads to imbalances in both the volume and quality of collected data, as well as in the degree to which students’ educational behavior is captured in digital learning environments. For example, SibFU includes 21 different schools with a wide range of specializations—from culinary studies to aerospace—and the extent to which curricular disciplines are supported by electronic learning courses varies greatly (see, e.g., Figure 3). As illustrated in Figure 3, there is substantial heterogeneity in the availability of e-courses across schools: only 5 out of 21 exceed 50% coverage of curriculum courses with digital counterparts in Moodle, indicating that in the majority of schools, less than half of the disciplines are supported by e-learning resources. This uneven distribution raises concerns about the representativeness of the data, as fields of study with a heavy reliance on offline teaching are underrepresented in the Digital Footprint data. In addition to these quantitative differences, the quality of the electronic courses themselves may also differ significantly, introducing varying levels of noise in the recorded Digital Footprints. It is important to note that the LMS primarily captures online activities and does not account for offline learning unless explicitly included in course design. As a result, the Digital Footprint data reflect only a partial view of the educational experience, particularly for fields of study where offline learning is predominant.

Figure 3.

Number of e-courses vs. number of curriculum courses per school of SibFU.

Uneven e-learning adoption and varying digitalization rates across schools likely explain the drift in e-course count features (e.g., “Total number of e-courses”, “Number of assessing e-courses”, “Number of frequently visited e-courses”, see Table A3).

Finally, Digital Footprint variability originates from students themselves. Beyond measurable LMS data, latent factors (motivation, cognitive styles, learning strategies, etc.) significantly influence learning behavior but are not directly recorded.

The combination of the factors discussed above presents a complex challenge of Digital Footprint data instability when forecasting academic success. While LMSs generate large volumes of data that are indeed related to academic success, the effective utilization of this data requires targeted efforts to understand and mitigate the causes of instability.

Several approaches can be considered to address the instability of students’ Digital Footprint data. One option is to identify and select the most stable Digital Footprint features. For instance, based on the conducted analyses (PSI, detector models, and SHAP loss), it is possible to identify a set of features that are both stable over time and demonstrate sufficient predictive power based on their feature importance scores (e.g., “Number of active clicks for assessing e-courses” and “Number of effective clicks for assessing e-courses”).

Another approach to improving data stability involves the application of specific preprocessing techniques. In our study, the predictors used in the LSSc models for academic performance prediction included both aggregated indicators of student activity in the digital environment and different normalization strategies. Normalization by the group mean (z-score standardization) captures deviations from average group behavior and is better suited for analyzing group dynamics. In contrast, normalization by the group maximum (min–max standardization) highlights individual achievements and indicates how close a student is to the group leader. However, our analysis revealed that, over time, features normalized by the group mean tend to be more stable. Using a PSI threshold of ≥ 0.1 to assess potential feature instability across the 2022–2024 period, it was found that none of the z-scaled features exceeded this threshold, whereas all six min–max scaled features were classified as potentially unstable. This suggests that z-score standardization may offer greater robustness against data drift, especially for click-based and relative activity features, and should be prioritized in feature selection when stability is a concern. However, its generalizability across educational settings needs further validation.
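The two normalization strategies can be compared on a toy example. The sketch below assumes the common textbook formulas, (x − group mean) / group standard deviation for z-scaling and (x − group min) / (group max − group min) for min–max scaling. The exact formulas used for the LSSc predictors may differ, and the function names and data are hypothetical.

```python
import numpy as np

def z_scale_by_group(x, group):
    """Standardize each value by the mean and standard deviation of its group."""
    x, group = np.asarray(x, dtype=float), np.asarray(group)
    out = np.zeros_like(x)
    for g in np.unique(group):
        m = group == g
        sd = x[m].std()
        out[m] = (x[m] - x[m].mean()) / sd if sd > 0 else 0.0
    return out

def min_max_scale_by_group(x, group):
    """Rescale each value to [0, 1] using the minimum and maximum of its group."""
    x, group = np.asarray(x, dtype=float), np.asarray(group)
    out = np.zeros_like(x)
    for g in np.unique(group):
        m = group == g
        lo, hi = x[m].min(), x[m].max()
        out[m] = (x[m] - lo) / (hi - lo) if hi > lo else 0.0
    return out

clicks = [10, 20, 30, 100, 300]          # made-up click counts
groups = ["g1", "g1", "g1", "g2", "g2"]  # hypothetical study groups
print(z_scale_by_group(clicks, groups).round(3))
print(min_max_scale_by_group(clicks, groups))
```

Min–max scaling depends on only two students per group (the extremes), so a single unusually active student rescales everyone else. This is one plausible reason for the lower stability observed for min–max scaled features.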

Conservative hyperparameter tuning and feature engineering provided only marginal stability gains despite high implementation effort. Thus, purely technical solutions have limited practical value, and more meaningful improvements may come from data-level interventions, such as the design of digital learning environments.

The most complex, yet potentially most effective, approach to stabilizing Digital Footprint data is the targeted selection of e-learning courses. A key contributor to data instability is the high variability among e-courses. Thus, it may be valuable to develop design criteria for e-courses that support the stability of recorded digital traces. Such criteria could include pedagogical design principles for digital environments (interaction formats, content elements, and instructional guidelines), as well as a standardized set of content elements such as quizzes, assignments, seminars, essays, etc.

The findings of this study have implications beyond the specific context of SibFU. Moodle is widely used across HEIs, and the Digital Footprint features studied—such as clicks, quizzes, and assignments—are commonly collected. Similarly, Digital Profile data (e.g., demographics, academic performance indicators) are standard institutional records. This suggests that the observed patterns of data stability may be generalizable to other HEIs with similar LMS and data infrastructures.

However, the degree of instability and mitigation effectiveness may vary by institution, depending on digitalization, course design, and teaching practices. Institutions using additional data sources (e.g., surveys, dashboards) may observe different stability patterns, especially for Digital Profile features. Therefore, while the core challenges of Digital Footprint instability are likely shared, optimal solutions should be adapted to local educational and technological contexts.

4.2. Mitigating Model Drift Caused by Modeling Technology Issues

In our study, we found that selecting hyperparameters corresponding to a more conservative model—combined with the exclusion of features exhibiting the highest SHAP loss drift—improved predictive performance on new data. While in our case the improvement was modest, in general, conservative hyperparameter selection represents one possible technique for mitigating model drift as it helps to reduce overfitting.

It can be valuable to expand the range of strategies for addressing model stability issues through the following approaches:

- Adaptive selection of models based on “aging” metrics. Instead of selecting a single machine learning model, it can be useful to consider a diverse portfolio of models. For example, in our study, alongside XGBoost, we could have also included k-Nearest Neighbors, Random Forest, and regularized logistic regression, despite their lower performance on the 2018–2021 test set. The idea is to initially deploy the best-performing model in the forecasting system while continuing to generate forecasts using the remaining models in parallel. After several periods of deployment on new data, evaluate each model’s performance and rate of degradation (e.g., via relative model error, as described in [36]). Based on both performance and “aging” metrics, a data-driven decision can then be made regarding which model to adopt for the forecasting service in the next academic term.

- Stress testing during model training. It can be beneficial to incorporate stress tests during the model training phase to identify which types of models are most robust to noise and data perturbations. An example of the adoption of such an approach can be found in [59].
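The portfolio idea in the first item can be illustrated with a short sketch. It is not the procedure used in this study. The aging metric is assumed here to be the ratio of a model's error in the latest period to its error at deployment, which is only one simple reading of "relative model error" (the definition in [36] may differ). The function names, the weight `aging_weight`, and the error values are hypothetical.

```python
def relative_error(errors):
    """Ratio of the latest-period error to the error at deployment.

    `errors` holds one error value per period, oldest first. A value above 1
    means the model has degraded since deployment. This definition is an
    assumption made for illustration only.
    """
    if len(errors) < 2:
        raise ValueError("need at least two periods")
    if errors[0] <= 0:
        raise ValueError("baseline error must be positive")
    return errors[-1] / errors[0]


def select_model(error_history, aging_weight=0.5):
    """Return the model with the lowest combined score, plus all scores.

    score = latest error + aging_weight * max(0, relative error - 1)
    """
    scores = {}
    for name, errors in error_history.items():
        aging = relative_error(errors)
        scores[name] = errors[-1] + aging_weight * max(0.0, aging - 1.0)
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical error rates over three deployment periods (not study results).
history = {
    "xgboost": [0.20, 0.26, 0.33],
    "random_forest": [0.24, 0.25, 0.27],
    "logistic_regression": [0.28, 0.28, 0.29],
}
best, scores = select_model(history)
```

With `aging_weight=0.0` the choice reduces to the model with the lowest latest error. A larger weight favors models that degrade slowly even if their initial performance is lower.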

4.3. Pipelines for Monitoring and Refinement of the Deployed Models

In a scenario where true labels become available only at the end of the examination period, significant interventions to counteract model drift, such as selecting a new model for the forecasting system or retraining models, can be made only twice a year.

At the current stage of our research, we propose the following pipeline to support decisions in learning success forecasting systems:

- Assess predictive performance of all models from the portfolio on new data and compute aging metrics.

- In cases of significant model degradation, conduct SHAP loss analysis, using the procedure described in Section 2.3.3 to evaluate the extent and the nature of model drift.

- Retrain all models of the portfolio using different strategies for incorporating the most recent data, as described in Section 3.2. Additionally, explore retraining variants that exclude features showing the most significant SHAP loss drift.

- From the set of retrained models, select the one with the best performance and the lowest aging metric to deploy in the forecasting system for the upcoming semester.

- If retrained models still underperform, consider deeper modifications like feature engineering, prioritizing interpretable features. This improves transparency in academic success predictions for staff involved in student guidance.

An open question remains regarding the feasibility of detecting model degradation during the semester. Until true labels become available at the end of the examination period, we are limited to monitoring the distribution of predictors and the model’s output, as forecasts are generated on a weekly basis. Thus, during the semester, we can monitor covariate drift (for example, using PSI) and, to some extent, model drift, by analyzing weekly SHAP values using the L-CODE framework [55]. However, to date, we have not identified a clear relationship between shifts in SHAP values and SHAP loss. Similarly, the extent and the direction of covariate drift’s contribution to model degradation remain unclear. These questions require further investigation.
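As an illustration of label-free monitoring during the semester, the sketch below computes PSI for a single numerical predictor using the standard definition, PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the shares of the reference and new samples falling into bin i. The equal-width binning on the reference range, the smoothing constant `eps`, and the sample data are assumptions made for this example and may differ from the settings used in the study.

```python
import math


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index of `actual` relative to `expected`.

    Bins are equal-width over the range of the reference sample. New values
    outside that range are counted in the first or last bin. Empty bins are
    replaced by `eps` so that the logarithm stays defined.
    """
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has zero range")
    width = (hi - lo) / n_bins

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clip out-of-range values
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training period vs. a shifted current week.
reference = [i / 100 for i in range(100)]
shifted = [v + 0.3 for v in reference]

psi_same = psi(reference, reference)
psi_shifted = psi(reference, shifted)
```

In a weekly setting, such a function could be applied to each predictor and to the model's output scores, with the training-period distribution serving as the reference.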

4.4. Detection of Biased Subgroups for Predictive Models

As noted in Section 3.2, a significant decline in the prediction performance of the LSSp model was observed for first-year students during fall semesters, which, in our view, is due to the lack of information about their prior educational background. To address this issue, it is necessary to properly fill in the missing data in the Digital Profile dataset. For instance, admission data and entrance exam results can be used to fill in the gaps related to students’ prior academic performance using comparable indicators.

Low predictive performance for first-year students raises a critical pedagogical and ethical concern: when models underperform for this group, at-risk first-year students are less likely to be identified and may miss timely support. This risk of systemic under-assistance, especially for those who need help most, underscores the need for equitable access to early interventions and fairer model performance.

The conservative variant of the LSSc model, which we intend to further explore due to its lower susceptibility to model drift, currently underperforms. It may be valuable to identify subgroups of students for which the model demonstrates poor performance. For this purpose, clustering methods or techniques specially designed for detecting biased subgroups in classification tasks—such as those described in [60,61]—can be employed.
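Before turning to dedicated subgroup-discovery techniques, a simple slice analysis can already flag groups in which at-risk students are missed. The sketch below is a basic stand-in, not one of the methods from [60,61]: it computes recall of the at-risk class for predefined subgroups and reports those falling below the overall recall by a chosen margin. The function names, the `margin` parameter, and the toy data are hypothetical.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Recall of the at-risk class (label 1) for each subgroup."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            if p == 1:
                tp[g] += 1
            else:
                fn[g] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}


def weak_groups(y_true, y_pred, groups, margin=0.1):
    """Subgroups whose recall is more than `margin` below overall recall."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not positives:
        raise ValueError("no at-risk students in the sample")
    overall = sum(1 for p in positives if p == 1) / len(positives)
    per_group = recall_by_group(y_true, y_pred, groups)
    return {g: r for g, r in per_group.items() if r < overall - margin}


# Toy data: 1 = at risk, 0 = not at risk (not study results).
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [0, 0, 1, 1, 1, 1, 0, 1]
groups = ["year1", "year1", "year1", "year2", "year2", "year2", "year1", "year2"]

flagged = weak_groups(y_true, y_pred, groups)
```

Groups could be defined by year of study, school, or the set of electronic courses a student is enrolled in. The last option ties the analysis to the course selection criteria discussed next.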

The results of this analysis may contribute not only to improving classification quality but also to developing criteria for selecting electronic courses that form the basis of students’ Digital Footprint.

4.5. Limitations of the Study and Future Research Directions

It is important to discuss some limitations of our study. Firstly, educational data are to some extent determined by the specifics of the national educational system. In this regard, conclusions about the stability of Digital Profile data may differ for other educational systems. Secondly, the stability of Digital Footprint data is largely determined by the level of digitalization of specific educational institutions; therefore, conclusions drawn for SibFU are more likely to be applicable to similar large universities with heterogeneous levels of digitalization across different faculties. However, the methodology proposed in this article for identifying data drift and model degradation can be applied to other educational contexts without significant modifications.

Some aspects of the proposed methodology merit separate investigation, for example, determining the size of practically significant data drift effects specific to the educational domain.

In the context of ensuring model stability within the Pythia service, promising research directions include conducting additional feature engineering using available data and developing alternative approaches to mitigate overfitting in the LSSc model.

These directions will form the basis of our future research.

5. Conclusions

Our study contributes to a deeper understanding of model drift in Learning Analytics applications. Addressing the degradation of predictive models is essential for ensuring the effectiveness and fairness of recommender and decision-making systems in education.

We considered and applied several techniques to detect data drift and model degradation in two predictive models designed to forecast academic success in completing a semester. These models, trained on two distinct types of educational data—Digital Profile data and Digital Footprint data—were deployed in a university service for predicting students’ learning performance. We analyzed their performance for three consecutive academic years and assessed the stability of the underlying data.

The key findings of our study are as follows:

- We proposed a methodology for the comprehensive analysis of learning performance prediction models under data drift, incorporating the following techniques: calculating PSI, training and analyzing Classifier-Based Drift Detector models, and testing hypotheses about SHAP loss value shifts. This allows the evaluation of concept drift, covariate drift, and label drift, as well as the overall model degradation.

- We found that educational data of different types could exhibit varying degrees of data drift. Specifically, Digital Profile data in our study demonstrated only a minor covariate shift, and the academic success prediction model based on these data showed slight performance degradation. At the same time, Digital Footprint data from the online course platform changed significantly over time. This was revealed both through covariate shift detection methods and SHAP loss drift analysis. The corresponding predictive model demonstrated a substantial degree of model drift. However, the observed improvements in recall for the LSSc model suggest that LMS-derived behavioral features can enhance the detection of at-risk students if data quality is sufficient. Improving the pedagogical design and standardization of e-courses could significantly enhance the predictive power and stability of LMS-based features.

- We explored and tested several strategies for mitigating model drift: using conservative hyperparameters, retraining the model on data that include more recent periods, and excluding features with large SHAP loss drift. While we successfully mitigated the degradation of the predictive model based on Digital Profile data, we did not achieve a significant improvement in prediction performance on new data for the model based on Digital Footprint data. The most effective strategy for mitigating model degradation in our case is likely to be the development and application of criteria for selecting the electronic courses whose Digital Footprint data will be used for prediction.

We hope that our findings contribute to a deeper understanding of the problems related to the deployment of Learning Analytics-based services. We believe that further in-depth research is needed to understand the relationships between different types of drift in Learning Analytics models and to develop approaches for stabilizing the performance of deployed predictive models.

Author Contributions

Conceptualization, T.A.K. and R.V.E.; methodology, T.A.K. and R.V.E.; software, T.A.K. and R.V.E.; validation, T.A.K. and R.V.E.; formal analysis, T.A.K. and R.V.E.; investigation, T.A.K. and R.V.E.; resources, T.A.K. and R.V.E.; data curation, T.A.K. and R.V.E.; writing—original draft preparation, T.A.K. and R.V.E.; writing—review and editing, T.A.K., R.V.E., and M.V.N.; visualization, T.A.K. and R.V.E.; supervision, T.A.K., R.V.E., and M.V.N.; project administration, T.A.K., R.V.E., and M.V.N.; funding acquisition, T.A.K., R.V.E., and M.V.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study, as this study involves no more than a minimal risk to subjects.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study. Informed consent for the analysis of the Digital Footprint data and the Digital Profile data was also provided, as all students agreed to the general terms and conditions of using SibFU’s electronic information and educational environment, including the conduct of statistical and other research based on anonymized data.

Data Availability Statement

The samples of anonymized data used in this study can be provided upon appropriate request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| GPA | Grade Point Average |
| FI | Feature Importance |
| HEI | Higher Educational Institution |
| LA | Learning Analytics |
| LMS | Learning Management System |
| ML | Machine Learning |
| Model LSC | Model for Forecasting Learning Success in Mastering a Course |
| Model LSSc | Model for Forecasting Learning Success in Completing the Semester Based on Current Educational History |
| Model LSSp | Model for Forecasting Learning Success in Completing the Semester Based on Previous Educational History |
| PSI | Population Stability Index |
| RF | Random Forest |
| RF-Drift Detector | Random Forest based drift detector model |
| SHAP | SHapley Additive exPlanations |
| SHAP Loss | SHAP values computed with respect to the model’s loss |
| SibFU | Siberian Federal University |

Appendix A

Table A1.

Input features in the LSSp Model.

| Feature | Source | Description | Type | Update Frequency |
|---|---|---|---|---|
| At risk (target variable) | Grade book | Whether a student failed at least one exam in the current semester | Categorical | Dynamic (once a week) |
| Age | Student profile | Student’s age | Numerical | Dynamic (once a week) |
| Sex | Student profile | Gender | Categorical | Static |
| International student status | Student profile | Indicator of foreign citizenship or international enrollment | Categorical | Static |
| Social benefits status | Student profile | Whether the student is eligible for state benefits | Categorical | Static |
| School | Student profile | Faculty within the university | Categorical | Dynamic (once a week) |
| Year of study | Student profile | Current year of study | Categorical | Dynamic (once a year) |
| Current semester (term) | Student profile | Current semester of study | Categorical | Dynamic (once a semester) |
| Study mode | Student profile | Full-time, part-time, or distance | Categorical | Static |
| Funding type | Student profile | Enrollment category: state-funded, tuition-paying, or targeted training | Categorical | Dynamic (once a week) |
| Level of academic program | Student profile | Degree level: Bachelor’s, Specialist, or Master’s program | Categorical | Dynamic (once a week) |
| Field of study category | Student profile | Broad academic domain: humanities, technical, natural sciences, social sciences, etc. | Categorical | Dynamic (once a week) |
| Individual study plan | Student profile | Indicator of non-standard/personalized curriculum | Categorical | Dynamic (once a year) |
| Amount of academic leave | Administrative records | Total amount of academic leave taken since enrollment | Numerical | Dynamic (once a week) |
| Reason for the last academic leave | Administrative records | Primary justification for the most recent academic leave | Categorical | Dynamic (once a week) |
| Number of transfers | Administrative records | Total number of transfers to other degree programs within the university since enrollment | Numerical | Dynamic (once a week) |
| Reason for the last transfer | Administrative records | Stated reason for the most recent transfer to another degree program | Categorical | Dynamic (once a week) |
| Number of academic dismissals | Administrative records | Total number of prior academic dismissals from the university | Numerical | Dynamic (once a week) |
| Reason for the last academic dismissal | Administrative records | Official reason for the most recent dismissal | Categorical | Dynamic (once a week) |
| GPA in the current semester | Grade book | Grade point average across all courses in the current semester, before retakes | Numerical | Dynamic (once a semester) |
| Number of courses in the current semester | Grade book | Total number of courses enrolled in this semester | Numerical | Dynamic (once a semester) |
| Number of pass/fail exams in current semester | Grade book | Number of courses in the current semester evaluated through pass/fail exams | Numerical | Dynamic (once a semester) |