Abstract

The Internet of Things (IoT) holds transformative potential in fields such as power grid optimization, defense networks, and healthcare. However, the constrained processing capacities and resource limitations of IoT networks make them especially susceptible to cyber threats. This study addresses the problem of detecting intrusions in IoT environments by evaluating the performance of deep learning (DL) models under different data and algorithmic conditions. We conducted a comparative analysis of three widely used DL models, namely Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM), and Bidirectional LSTM (biLSTM), across four benchmark IoT intrusion detection datasets: BoTIoT, CiCIoT, ToNIoT, and WUSTL-IIoT-2021. Each model was assessed under balanced and imbalanced dataset configurations and evaluated using three loss functions (cross-entropy, focal loss, and dual focal loss). By analyzing model efficacy across these datasets, we highlight the importance of generalizability and adaptability to varied data characteristics, which are essential for real-world applications. The results demonstrate that the CNN trained using the cross-entropy loss function consistently outperforms the other models, particularly on balanced datasets. LSTM and biLSTM show strong potential in temporal modeling, but their performance is highly dependent on the characteristics of the dataset. These findings provide actionable insights for developing secure, interpretable IoT systems.

1. Introduction

Robust intrusion detection is a crucial requirement in Internet of Things (IoT) networks, in which many devices communicate with each other autonomously. The distributed architecture of these networks, the diversity of their communication protocols, their limited resources, and their often inadequate security measures make them particularly vulnerable to a variety of cyberthreats. The cybersecurity company Kaspersky stated that cyberattacks on IoT devices have risen by over 300% in the past three years alone, and that millions of devices are already infected across the globe. These attacks include malware infections, Distributed Denial of Service (DDoS) attacks, unauthorized access attempts, and information disclosures, which pose an extreme threat to IoT networks and the sensitive information they process [1]. Hence, to protect IoT devices against vulnerabilities and harmful actions, it is critical to implement effective intrusion detection system (IDS) solutions.

The IDS, which is part of the cybersecurity infrastructure, should be able to classify different types of attacks. Through proper classification, the IDS can identify whether an action in the network is malicious or legitimate. Thus, threat detection is a key concern in addition to defending the network’s resources [2]. This process improves the detection, analysis, and resolution of cybersecurity problems in IoT networks and other computing environments. Artificial intelligence (AI) [3] and deep learning (DL) [4] have redefined IDSs by providing them with advanced analytical capabilities to process the large amounts of data generated by Internet-enabled devices and to improve their decision-making. AI- and DL-based IDSs have been used to detect anomalous and zero-day threats and to identify suspicious cases by differentiating them from actual network breaches [5]. However, the quality and comprehensiveness of the training datasets have a significant impact on the performance of these algorithms [6].

Datasets play a very important role in IoT intrusion detection since they are used to assess and compare IDSs. They usually contain information about network traffic and features observed during simulated or real attacks [7]. CICIDS is one of the most widely used datasets and includes samples of benign and hostile traffic identified in IoT networks and devices [8]. Another interesting dataset is IoT-23, which emphasizes the attacks and anomalies unique to IoT devices, such as spoofing, data exfiltration, and command injection. This dataset gives researchers an opportunity to develop and evaluate IDSs that are specifically tailored to IoT environments [9]. Moreover, some datasets that were not initially created for IoT analysis have been adjusted to include threats related to IoT networks. For example, the NSL-KDD dataset, initially developed for general network intrusion detection, was altered and enhanced to evaluate the effectiveness of IDSs on IoT networks. Researchers can use the above-mentioned datasets, along with others such as UNSW-NB15 and CTU-IoT, to determine the effectiveness, precision, and robustness of an IDS in protecting IoT networks against cyberattacks.

The remainder of this paper is organized as follows. In Section 2, we discuss the contributions of other researchers to the topic. In Section 3, we discuss the proposed model in detail. In Section 4, we discuss the experimental setup, including the dataset used, data processing, and performance metrics used to evaluate the performance of the proposed system. Section 5 presents the results and compares the performance of various DL models on the datasets used. Finally, Section 6 concludes the work and provides future directions.

2. Related Work

Researchers have increasingly focused on applying deep learning methods to IoT-based network intrusion detection systems, especially because such methods can improve cybersecurity in changing environments. Building robust deep learning models for the underlying IDS demands careful selection and tuning of hyperparameters. The ideal number of hidden layers and neurons in Generative Adversarial Networks (GANs) was investigated in [10], highlighting the significance of parameter adjustment for efficient IDS creation. In [11], the authors achieved a classification accuracy of 93% on the NSL-KDD dataset with a deep neural network (DNN) with 200 hidden layers. In [12], the authors experimented with DNN models with varying numbers of hidden layers and used a hyperparameter selection method to find the optimal number of layers. On the other hand, in [13], the authors evaluated three datasets on a simpler DNN that consisted of one input layer, three hidden layers, and one output layer.

The researchers in another study [14] offered a comparison of ANN, RNN, and DNN performance using an enhanced UNSW-NB15 dataset. They demonstrated that a DNN with a specific number of layers can achieve an accuracy of 99.22% and 99.59% on binary and multivariate classification, respectively. In [15,16], the authors combined a DNN and a stacked auto-encoder (SAE) for IDS applications. CNNs have also been used in IDSs alongside ANNs by many researchers. The authors in [17] presented a comparative study of the CNN and LSTM/GRU models and demonstrated that this particular model had an accuracy of 97.01% in comparison with other models. In contrast, other studies have explored the fusion of CNN and RNN models to leverage the feature extraction capabilities of CNNs and the strong sequence modeling abilities of RNNs. In [18], a CNN is integrated with the Spark data processing platform for binary classification, followed by machine learning for multiclass classification of anomalies. This integration enhances the discrimination between normal and abnormal events while minimizing potential coupling issues and reducing data preprocessing time.

In [14], the authors compared LSTM-based intrusion detection systems (IDSs) with other models and found that LSTM alone did not perform as well as the combined models. To bolster LSTM’s feature extraction capability, CNNs are integrated into IDS [18]. Another strategy, as demonstrated in [19], involved using autoencoders (AE) to reduce the dimensionality of the features before combining with LSTM, yielding positive intrusion detection outcomes. Additionally, ref. [20] introduced a weighted LSTM (WDLSTM) variant to address concerns of overfitting caused by circular connections. Bidirectional LSTM (BiLSTM) was also leveraged in [21] to enhance prediction accuracy by incorporating temporal information from both past and future instances.

3. Proposed Method

3.1. System Model

The proposed system is described by a supervised learning setup where the input data is represented as a matrix $X \in \mathbb{R}^{N \times D}$ with N samples, with each sample $x_i$ being a vector in a D-dimensional feature space. Associated with each sample is a label $y_i$, where $y_i \in \{1, \dots, L\}$, indicating L distinct attack categories. The goal is to learn a function $f: \mathbb{R}^{D} \to \{1, \dots, L\}$ that maps feature vectors to their corresponding labels. In supervised learning, this function is approximated by a learnable function $\hat{f}_{\theta}$ using a labeled training dataset. The objective is to make $\hat{f}_{\theta}$ as close as possible to the true underlying function $f$ to accurately predict labels for new, unseen data points.

3.2. DL Models

3.2.1. CNN

CNNs have demonstrated exceptional performance across various domains. In CNNs, input features are transformed into meaningful information through convolutions, whose outputs are then used to build subsequent layers of the neural network. A convolutional layer serves the purpose of feature extraction and performs linear operations. Convolutions are achieved by employing multiple kernels or filters. Mathematically, a convolution operation can be expressed as

$$y_{i,j} = \sum_{p=1}^{n} \sum_{q=1}^{m} k_{p,q} \, x_{i+p-1,\, j+q-1} + b$$

Here, the kernel k is of dimensions $n \times m$, where n and m represent the height and width of the kernel, respectively. x denotes the input to the convolution operation, b represents the bias term, and $y_{i,j}$ is the output at position (i, j).

Our proposed model for classifying attacks in IoT systems uses a sequence input layer to handle temporal IoT data, followed by two convolutional layers. The second convolutional layer has twice as many filters as the first, allowing for more complex feature extraction. We employed causal padding and a rectified linear unit (ReLU) after each convolutional layer to efficiently capture sequential dependencies. In addition, normalization layers are used to improve training convergence. Afterwards, we applied a global average pooling layer to aggregate the features over the time dimension. A fully connected layer with softmax activation serves as the final layer, allowing attacks to be categorized into multiple classes.
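The layer sequence described above can be sketched as a plain NumPy forward pass. This is an illustrative sketch only, not the implementation used in the experiments: the kernel size of 3, the filter counts (16 and 32), the random weights, and all function names are assumptions, and the normalization layers are omitted for brevity.

```python
import numpy as np

def causal_conv1d(x, kernels, bias):
    """x: (T, C_in); kernels: (K, C_in, C_out); bias: (C_out,). Returns (T, C_out)."""
    k = kernels.shape[0]
    # Causal padding: prepend K-1 zero steps so output[t] only sees x[0..t].
    padded = np.vstack([np.zeros((k - 1, x.shape[1])), x])
    out = np.empty((x.shape[0], kernels.shape[2]))
    for t in range(x.shape[0]):
        window = padded[t:t + k]  # (K, C_in) slice ending at time step t
        out[t] = np.tensordot(window, kernels, axes=([0, 1], [0, 1])) + bias
    return out

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def cnn_forward(x, params):
    h = relu(causal_conv1d(x, params["k1"], params["b1"]))
    h = relu(causal_conv1d(h, params["k2"], params["b2"]))  # twice as many filters
    pooled = h.mean(axis=0)  # global average pooling over the time dimension
    return softmax(pooled @ params["w_out"] + params["b_out"])

# Hypothetical sizes: T time steps, D features, F filters, L attack classes.
rng = np.random.default_rng(0)
T, D, F, L = 10, 8, 16, 5
params = {
    "k1": rng.normal(scale=0.1, size=(3, D, F)), "b1": np.zeros(F),
    "k2": rng.normal(scale=0.1, size=(3, F, 2 * F)), "b2": np.zeros(2 * F),
    "w_out": rng.normal(scale=0.1, size=(2 * F, L)), "b_out": np.zeros(L),
}
probs = cnn_forward(rng.normal(size=(T, D)), params)  # one probability per class
```

Because of the causal padding, changing the input at the last time step leaves the convolution outputs at all earlier steps unchanged.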

3.2.2. LSTM and biLSTM

Long Short-Term Memory (LSTM) is a recurrent neural network (RNN) architecture designed to identify sequential patterns and long-range dependencies in data. The fundamental components of LSTM are memory cells and gates. The cells are used to store data over time, and gates control the information flow into and out of these cells. An LSTM cell’s main constituents are the input gate ($i_t$), forget gate ($f_t$), output gate ($o_t$), and cell state ($c_t$). The input gate decides how much fresh information to add, the forget gate decides how much information to discard, the output gate controls the cell’s output, and the cell state reflects the long-term memory. The computations within an LSTM cell involve activation functions like the sigmoid function for gate outputs and the hyperbolic tangent (tanh) function for candidate cell state updates. The equations governing an LSTM cell’s behavior are

$$
\begin{aligned}
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
\tilde{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Here, $x_t$ represents the input at time step t, $h_{t-1}$ is the previous hidden state, and W and b are the weight matrices and bias terms, respectively. The symbol $\sigma$ denotes the sigmoid activation function, and $\odot$ denotes element-wise multiplication. These equations represent the standard LSTM implementation mechanism, and the architecture used in our experiments adheres to this formulation. LSTM and biLSTM are employed in this work due to their proven effectiveness in learning long-term dependencies in sequential data. IoT traffic data, especially attack patterns, often follow temporal sequences, making LSTM and biLSTM a suitable choice for intrusion detection systems [22].
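As an illustration of the standard LSTM cell update described above, a single time step can be sketched in NumPy as follows. The dictionary layout of the weights, the dimensions, and the random initialization are assumptions made for this sketch and do not come from the experiments.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One standard LSTM step. W[g] has shape (H, H + D) and b[g] shape (H,)
    for the gates g in 'i' (input), 'f' (forget), 'o' (output), 'c' (candidate)."""
    z = np.concatenate([h_prev, x_t])        # [h_{t-1}, x_t]
    i_t = sigmoid(W["i"] @ z + b["i"])       # how much new information to add
    f_t = sigmoid(W["f"] @ z + b["f"])       # how much old memory to keep
    o_t = sigmoid(W["o"] @ z + b["o"])       # how much of the cell to expose
    c_tilde = np.tanh(W["c"] @ z + b["c"])   # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde       # updated long-term memory
    h_t = o_t * np.tanh(c_t)                 # new hidden state
    return h_t, c_t

# Hypothetical sizes: D input features, H hidden units.
rng = np.random.default_rng(0)
D, H = 4, 3
W = {g: rng.normal(scale=0.1, size=(H, H + D)) for g in "ifoc"}
b = {g: np.zeros(H) for g in "ifoc"}

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(5, D)):  # run the cell over a 5-step sequence
    h, c = lstm_step(x_t, h, c, W, b)
```

A bidirectional layer would run a second cell of this kind over the reversed sequence and combine the two hidden states.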

Our suggested model for categorizing attacks in IoT systems starts with a sequence input layer followed by three LSTM layers with 64, 128, and 128 hidden units, respectively. These LSTM layers are appropriate for modeling temporal patterns in IoT attack data because they are able to capture long-term dependencies in sequential data. For multi-class classification, a fully connected layer performs feature transformation and dimensionality reduction, and a softmax layer then produces the class probabilities. In the next experiment, we replaced the LSTM layers with BiLSTM layers of the same sizes, which process data both forward and backward to capture bidirectional dependencies. This is intended to improve the model’s capacity to precisely categorize attacks.

4. Experiment Design

In our experiments, we trained all deep learning models using the Adam optimizer with a learning rate of 0.001, a batch size of 32, and up to 100 epochs. Additionally, the training data is shuffled at the beginning of each epoch to prevent the model from overfitting to the order of the input samples and to encourage better generalization. These hyperparameters were selected based on preliminary tuning to balance convergence speed and model generalization.

4.1. Data Preparation and Pre-Processing

The original datasets are available as CSV files with multiple features and records, and we prepared the data according to the requirements of the experiments. First, we removed the columns (features) with missing values. Afterwards, we removed the records with missing values. Although other options such as duplication, interpolation, and average value replacement are possible, we aimed to maintain the originality of the data; therefore, we chose to remove the records with missing values. Then, text features such as udp packet, source ip, and destination ip were converted into numeric values using one-hot encoding. Finally, we prepared two separate sets of data for each dataset: a balanced dataset and an imbalanced dataset.
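A minimal sketch of two of these steps, removing incomplete records and one-hot encoding a text feature, is given below using only the Python standard library. The field names (`proto`, `bytes`, `label`) and the sample rows are hypothetical, and the column-removal step is not shown because the text does not state the criterion used to select columns.

```python
def drop_incomplete(records):
    """Keep only records in which no feature value is missing (None or '')."""
    return [r for r in records if all(v not in (None, "") for v in r.values())]

def one_hot(records, feature):
    """Replace a text feature with one 0/1 column per distinct value."""
    categories = sorted({r[feature] for r in records})
    encoded = []
    for r in records:
        row = {k: v for k, v in r.items() if k != feature}
        for cat in categories:
            row[f"{feature}={cat}"] = 1 if r[feature] == cat else 0
        encoded.append(row)
    return encoded

# Hypothetical records standing in for rows of a dataset CSV file.
rows = [
    {"proto": "udp", "bytes": 120, "label": "DDoS"},
    {"proto": "tcp", "bytes": None, "label": "Normal"},  # dropped: missing value
    {"proto": "tcp", "bytes": 64, "label": "Normal"},
]
clean = one_hot(drop_incomplete(rows), "proto")
```

Note that one-hot encoding high-cardinality features such as IP addresses can produce a very large number of columns.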

4.1.1. Balanced Dataset

Data imbalance is a common challenge in many machine learning tasks, particularly in domains like intrusion detection where certain classes of data are significantly underrepresented compared to others. To address this issue and ensure fair model training, we employed a data balancing technique known as undersampling in our study. The undersampling reduces the number of samples in the majority class to achieve the balanced class distribution. In our experiments, the undersampling approach is chosen instead of oversampling (which balances the data by synthetic generation of samples) or duplication of existing samples to preserve the originality of the dataset. As a result, a balanced dataset is achieved while ensuring that the remaining samples in the class are still authentic and representative of the actual data distribution. The undersampling process involves careful selection of samples from categories with a higher number of instances and removing them until each category has a similar number of samples. This step is crucial to prevent bias and to maintain the integrity of the dataset. While undersampling helps to address the challenges of class imbalance, it also comes with considerations. One such consideration is the potential loss of information due to the removal of samples, which could impact the model’s ability to capture complex patterns in the data. In the context of deep learning techniques, data balancing through undersampling plays a vital role in improving model performance by reducing overfitting and ensuring a fair representation of all classes during training. By including this data balancing approach in our methodology, we aimed to enhance the robustness and accuracy of our deep learning-based intrusion detection system. The balanced datasets were subsequently split into 80% for training and 20% for testing. The resulting sample distribution is shown in Table 1.

Table 1.

Dataset split between training and testing samples after data balancing.
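The undersampling and 80/20 split described in this subsection can be sketched as follows. This is a sketch under stated assumptions: every class is reduced to the size of the smallest class (the text only says that the classes end up with a similar number of samples), the removed samples are chosen at random, and the class names and counts are made up for illustration.

```python
import random
from collections import defaultdict

def undersample(samples, labels, seed=0):
    """Randomly reduce every class to the size of the smallest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for s, y in zip(samples, labels):
        by_class[y].append(s)
    n_min = min(len(items) for items in by_class.values())
    balanced = []
    for y, items in by_class.items():
        # Only original samples are kept; nothing is duplicated or synthesized.
        balanced.extend((s, y) for s in rng.sample(items, n_min))
    rng.shuffle(balanced)
    return balanced

def train_test_split(pairs, train_fraction=0.8):
    """Split an already shuffled list into training and testing parts."""
    cut = int(len(pairs) * train_fraction)
    return pairs[:cut], pairs[cut:]

# Hypothetical class counts: 50 Normal, 200 DDoS, 10 Scan records.
labels = ["Normal"] * 50 + ["DDoS"] * 200 + ["Scan"] * 10
samples = list(range(len(labels)))
balanced = undersample(samples, labels)
train, test = train_test_split(balanced)
```

In this toy example each class keeps 10 samples, which illustrates the information loss mentioned above: 190 of the 200 DDoS records are discarded.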

4.1.2. Imbalanced Dataset

To ease the handling of the datasets, we extracted a portion of the records of each original dataset, of which 80% is used for training and 20% for testing. This split was applied consistently across all datasets to ensure comparability in model evaluation. Specifically, we used 5% of the samples of each category of the original datasets. This sampling strategy was adopted to reduce the computational load while retaining representative distributions across classes. The sampling was applied uniformly, ensuring that the overall class proportions remain unchanged. Beyond this uniform sampling, the number of records in the minority classes was not reduced. This decision was made to preserve the natural class imbalance present in real-world IoT traffic, where certain attack types are significantly underrepresented compared to others. By maintaining the original imbalance in class distributions, this setup allowed us to evaluate how well each model performs under realistic data conditions. In such scenarios, performance metrics like recall and MCC become critical, particularly for identifying rare but high-impact attack types. For WUSTL-IIoT-2021, only binary classification is considered in the imbalanced setup as well, with ‘Normal’ and ‘Attacked’ categories. This dataset is included to examine performance under simpler class distributions and industrial IoT settings. The numbers of observations used in the experiments on the imbalanced datasets are shown in Table 2.

Table 2.

Dataset records’ distribution for training and testing.
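The per-category 5% sampling followed by the 80/20 split can be sketched as below. The class names and counts are hypothetical, and keeping at least one record per class and splitting after a single shuffle are assumptions of this sketch, not details given in the text.

```python
import random
from collections import defaultdict

def stratified_sample(labels, fraction=0.05, seed=0):
    """Return shuffled indices that keep `fraction` of every class,
    so the class proportions of the original data are preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    kept = []
    for idxs in by_class.values():
        n_keep = max(1, round(len(idxs) * fraction))  # assumption: never drop a class
        kept.extend(rng.sample(idxs, n_keep))
    rng.shuffle(kept)
    return kept

# Hypothetical imbalanced label distribution.
labels = ["Normal"] * 2000 + ["DDoS"] * 8000 + ["Theft"] * 100
kept = stratified_sample(labels)

cut = int(len(kept) * 0.8)  # 80% training, 20% testing
train_idx, test_idx = kept[:cut], kept[cut:]
```

Here the rare class keeps only 5 of its 100 records, which is why metrics such as recall and MCC matter more than accuracy in this setting.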

4.2. Dataset Details and Comparison

The experiments conducted in this study utilize four key datasets: BoTIoT [23], ToNIoT [25,26], CiCIoT [24], and WUSTL-IIoT-2021 [27]. Each dataset provides distinct insights into IoT and IIoT security challenges.

The BoTIoT dataset is built upon a realistic IoT network testbed designed at the University of New South Wales (UNSW), Australia, to simulate a smart home and smart city IoT environment. The testbed integrates multiple IoT devices and services with normal and malicious traffic streams to capture comprehensive network activity for intrusion detection research. The network consists of normal IoT devices, such as smart fridges, smart thermostats, smart garage doors, and surveillance cameras, all connected through a software-defined networking (SDN) environment to reflect modern IoT deployment models. These devices interact with remote cloud services via edge and gateway nodes, simulating real-world network behavior under normal operations. To generate malicious traffic, the environment includes simulated attack tools like Hping3, Slowloris, and Nmap, which are used to perform a variety of cyberattacks. These attacks are launched from compromised virtual machines within the network to mimic attacker behavior targeting the IoT infrastructure. The publicly available dataset is composed of multiple features and includes both training and testing subsets with detailed distributions of data items for different attack types.

The IoT network scenario in ToNIoT simulates a smart industrial environment composed of multiple interconnected devices, services, and operating systems. These include IoT/IIoT sensors and actuators (temperature, motion, humidity sensors), edge devices and gateways, operating systems (Windows 7, Windows 10, Ubuntu, and Android), and network infrastructure components. These entities are connected through a network topology that mirrors a smart manufacturing environment. The devices generate telemetry and communication data during normal operations and under various cyberattack scenarios. The attack traffic in the dataset is generated using tools such as Metasploit, Hping3, Nmap, GoldenEye, and Loic. The ToNIoT dataset incorporates diverse data sources from telemetry datasets of IoT and IIoT sensors, operating systems datasets, and network traffic datasets. It was collected from a network at the Australian Defence Force Academy, encompassing various virtual machines and hosts running different operating systems. This dataset includes many attack methods such as DoS, DDoS, and ransomware, against web apps, IoT gateways, and computer systems, thus providing a rich resource for developing intrusion detection systems.

The CiCIoT dataset was created by the Canadian Institute for Cybersecurity and is designed for deep learning-based intrusion detection in IoT contexts. The dataset is based on a smart home testbed environment that includes 105 IoT devices such as smart thermostats, lights, and cameras. These devices communicate through a Wi-Fi router to simulate real-world consumer IoT setups. The dataset captures both benign and malicious traffic to enable comprehensive evaluation of intrusion detection models in home IoT networks.

The WUSTL-IIoT-2021 dataset was developed by Washington University in St. Louis. The dataset includes telemetry data, network traffic data, and system logs. The testbed was built by connecting industrial sensors and Programmable Logic Controllers (PLCs) using industrial protocols (e.g., Modbus/TCP) and edge computing gateways. The dataset collected from the testbed emulates an industrial IoT environment. This dataset supports binary classification by capturing normal and attack traffic flows, including unauthorized access and protocol misuse. Hence, it provides a comprehensive resource for analyzing device behavior in industrial settings.

The comparison of various features of datasets is crucial for evaluating the performance of the deep learning models in IoT-based intrusion detection systems. Table 3 compares these four datasets presented in this work, considering a variety of parameters.

Table 3.

Comparison of datasets.

4.3. Performance Metrics

The performance metrics taken into account when assessing the performance of our intrusion detection models are discussed below.

4.3.1. Accuracy

Accuracy is one of the indicators used to assess the quality of the model. It is the percentage of samples that were correctly classified relative to the total number of samples in the dataset. A higher accuracy implies that the model’s predictions are correct more often; however, when the data is not balanced, accuracy alone may not provide a complete picture of the performance of the model.

4.3.2. Recall

Recall is another important measure of the performance of an intrusion detection system. It is the ratio of correctly detected attacks (true positives) to all actual attacks (true positives plus false negatives). Good recall ensures that real threats are not overlooked, which is critical in cybersecurity applications, since failure to detect an intrusion can result in extensive damage.

4.3.3. Precision

Precision is another metric used to evaluate the performance of a machine learning model. It refers to the quality of the positive predictions. Precision is the number of true positives divided by the overall number of positive predictions (i.e., the sum of true positives and false positives). In an IDS, high precision indicates that if the system flags an event as an attack, it is more likely to be a genuine attack and less likely to be a harmless event (false alarm).

4.3.4. F1 Score

The F1 score is a balanced metric that takes into account both precision and recall. It provides a single figure that shows the trade-off between false positives and false negatives. It is computed as the harmonic mean of precision and recall. Recall and precision are better balanced when the F1 score is higher.

4.3.5. MCC (Matthews Correlation Coefficient)

The Matthews correlation coefficient (MCC) takes false positives, false negatives, true positives, and true negatives into consideration. It offers a fair assessment of the model’s effectiveness and is especially important when there is an imbalance between the classes. The MCC is a number between −1 and 1, where 1 denotes perfect predictions, 0 corresponds to random predictions, and −1 denotes total disagreement between predictions and actual results.
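For the binary case (such as the ‘Normal’ versus ‘Attacked’ setting), the five metrics above can be computed from the confusion-matrix counts as sketched below. The function name and the example counts are illustrative, the sketch does not guard against zero denominators, and it does not cover the averaging used for multi-class results, which is not specified here.

```python
import math

def binary_metrics(tp, fp, fn, tn):
    """Compute the metrics of Section 4.3 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    recall = tp / (tp + fn)        # share of actual attacks that were detected
    precision = tp / (tp + fp)     # share of raised alarms that were real attacks
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1, "mcc": mcc}

# Hypothetical imbalanced test set: 120 attacks among 1000 flows.
m = binary_metrics(tp=90, fp=10, fn=30, tn=870)
```

In this example the accuracy is 0.96 even though a quarter of the attacks are missed (recall 0.75), which illustrates why accuracy alone is not sufficient on imbalanced data.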

5. Results and Discussion

In this section, we present and explain the performance of the CNN, LSTM, and biLSTM models presented in Section 3.2 on the datasets discussed in Section 4.2. For all of these models, three loss functions are tested: cross-entropy (CE), focal loss (FL), and dual focal loss (DFL). To validate our results, we repeated all experiments multiple times with different random seeds, and the average values of the performance metrics are reported. Furthermore, we benchmarked our models against baseline methods from the existing literature [28], demonstrating clear improvements in accuracy, recall, and MCC.
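To make the difference between two of these loss functions concrete, the sketch below computes cross-entropy and the commonly used form of focal loss for a single sample. The focusing parameter `gamma=2.0`, the weight `alpha=1.0`, and the example probability vectors are assumptions chosen for illustration; the settings used in the experiments are not stated here, and dual focal loss is omitted because its formulation is not given in this text.

```python
import math

def cross_entropy(probs, target):
    """Cross-entropy for one sample: -log of the true-class probability."""
    return -math.log(probs[target])

def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: cross-entropy scaled by (1 - p_t)^gamma,
    which down-weights samples that are already classified confidently."""
    p_t = probs[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)

# Hypothetical softmax outputs for a 3-class problem; the true class is 0.
easy = [0.90, 0.05, 0.05]  # confidently correct prediction
hard = [0.20, 0.50, 0.30]  # misclassified sample

easy_ratio = focal_loss(easy, 0) / cross_entropy(easy, 0)
hard_ratio = focal_loss(hard, 0) / cross_entropy(hard, 0)
```

With `gamma=2.0`, the easy sample’s loss is scaled by (1 − 0.9)² = 0.01, while the hard sample’s loss is scaled by (1 − 0.2)² = 0.64, so training focuses on the hard sample. Setting `gamma=0` recovers cross-entropy.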

5.1. Comparison of Loss Function Performance

5.1.1. Balanced BoTIoT Dataset

The results in Table 4 highlight a key contrast between the data-balancing strategies used in this study and those in the baseline models from [28]. While the baseline models rely on oversampling techniques either through random duplication (Rand) or synthetic data generation using CTGAN, the models in this study use undersampling to achieve a balanced dataset.

Table 4.

Performance metrics for DL models with different loss functions for the BoTIoT balanced dataset.

The findings indicate that undersampling led to significantly better model performance across all deep learning architectures. For instance, the CNN model trained with cross-entropy loss in this study achieved an accuracy of 0.98824 and an MCC of 0.98541. This notable performance of CNN can be explained by BoTIoT’s high-dimensional structured feature space, which benefits from CNN’s capacity to learn spatial hierarchies. The model effectively captured local interactions among features that are crucial for distinguishing between attack classes. In comparison, the best-performing CNN baseline (CNN-CTGAN) achieved an accuracy of only 0.8168 and an MCC of 0.6878, suggesting that oversampling with synthetic data may have introduced noise or redundant patterns, thereby hindering the model’s ability to generalize effectively. Additionally, the precision of the CNN-CTGAN model (0.4298) is significantly lower than that of any CNN variant in this study, further indicating that the synthetic data generation may have negatively affected class separation.

Similarly, the LSTM and biLSTM models trained on undersampled data showed superior performance compared to the baseline models that used oversampling. The LSTM model with DFL, for instance, achieved an accuracy of 0.96797 and an MCC of 0.962, whereas the best FNN-based model from the baseline (FNN-CTGAN) had only 0.8808 accuracy and 0.7885 MCC. The recall values in this study are also consistently higher, indicating that the undersampled models retained strong sensitivity to minority class instances without the risk of overfitting to synthetic patterns.

One of the key weaknesses of the oversampling approach used in the baseline study is evident in the MCC values. MCC is particularly important in imbalanced datasets as it considers both false positives and false negatives. The fact that the highest MCC in the baseline study (0.7885 for FNN-CTGAN) is significantly lower than those in this study (e.g., CNN with cross-entropy: 0.98541, LSTM with DFL: 0.962) demonstrates that undersampling led to a better overall decision boundary.

5.1.2. Balanced CiCIoT Dataset

When evaluating the CiCIoT dataset shown in Table 5, we noted that the CNN model achieved the best performance with DFL, displaying high accuracy, recall, precision, F1 score, and MCC. Cross-entropy loss and FL also performed well, indicating that CNNs are versatile across different loss functions. On the other hand, the LSTM and biLSTM models exhibited strong performance with all loss functions, with cross-entropy loss providing the best balance of accuracy and MCC. This robustness indicates that LSTM-based architectures are well suited for the CiCIoT dataset, regardless of the loss function used.

Table 5.

Performance metrics for DL models with different loss functions for the CiCIoT balanced dataset.

5.1.3. Balanced WUSTL-IIoT-2021 Dataset

The analysis of DL models on the WUSTL-IIoT-2021 dataset (Table 6) shows that CNN performs exceptionally well across metrics, with FL being slightly better than cross-entropy and DFL. This showcases the CNN’s effectiveness with complex data regardless of the loss function used. LSTM excels with cross-entropy, highlighting its superior generalization, while biLSTM performs remarkably with specialized loss functions, emphasizing their role in handling class imbalances effectively.

Table 6.

Performance metrics for DL models with different loss functions for the WUSTL-IIoT-2021 balanced dataset.

5.1.4. Balanced ToNIoT Dataset

In Table 7, we present the performance comparison of the proposed DL algorithms for different loss functions on the ToNIoT dataset. As with the other datasets, the CNN model shows solid performance across all metrics and loss functions. Using cross-entropy loss, CNN achieves high accuracy, precision, and F1 score. Focal loss results in slightly lower accuracy, which suggests that FL is less effective on balanced data. For this dataset, the CNN and biLSTM models exhibit robust performance across all loss functions, with DFL providing a slight edge in precision and accuracy. The LSTM model performs exceptionally well with cross-entropy loss as compared to FL and DFL.

Table 7.

Performance metrics for DL models with different loss functions for the ToNIoT balanced dataset.

5.2. Comparison of the Performance of Various DL Models

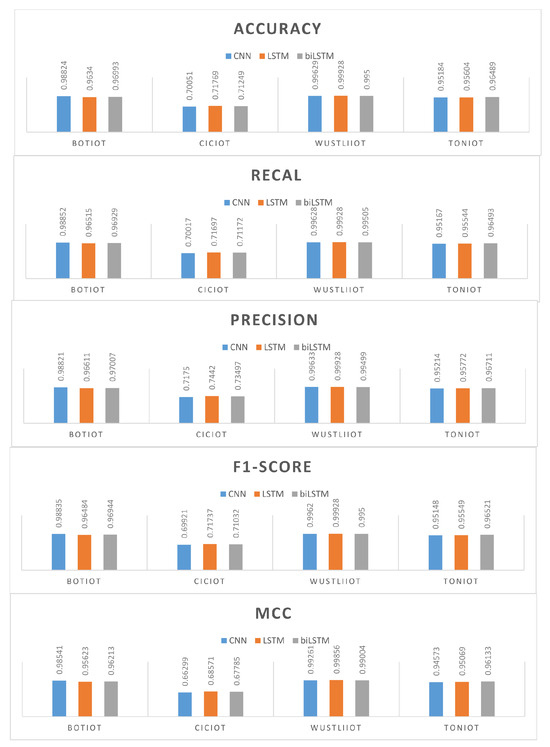

A performance analysis of the deep learning models across the four IoT datasets is shown in Figure 1. The results demonstrate that the CNN model consistently performs well across all datasets. This superior performance can be attributed to CNN’s ability to effectively capture the high-dimensional spatial features that are typically present in IoT environments. The high accuracy, recall, precision, F1 score, and MCC values for these datasets indicate that CNNs, through their convolutional layers, are adept at distinguishing between classes of data with complex patterns and noise.

Figure 1.

Comparison of performance of DL models for balanced datasets.

The LSTM and biLSTM models demonstrate strong performance on the WUSTL-IIoT-2021 dataset. The results suggest that these models are effective in handling sequential data and capturing long-term dependencies. This capability is crucial in IoT environments where data streams are continuous. On the other hand, the performance of LSTM and biLSTM on the CiCIoT dataset is lower than that of CNN. This is likely due to dataset characteristics that favor the spatial feature extraction strength of CNNs over the temporal modeling of LSTMs. The analysis and the performance trends indicate that CNNs are highly effective for datasets that contain rich spatial features, such as BoTIoT and CiCIoT. Meanwhile, for datasets that include significant sequential data or require handling of long-term dependencies, as present in WUSTL-IIoT-2021, LSTMs and biLSTMs are more suitable than CNNs.

5.3. Analysis for Imbalanced Dataset

5.3.1. Comparison of DL Models

The evaluation of the CNN, LSTM, and biLSTM models across different IDS datasets reveals insightful trends in performance, as shown in Table 8 and Table 9. CNN achieves consistently high accuracy across datasets, with the best performance on WUSTL-IIoT-2021 (accuracy: 0.99976, F1 score: 0.99912, MCC: 0.99823). However, CiCIoT remains a challenging dataset, where CNN shows a drop in accuracy (0.83789) and F1 score (0.72318), indicating difficulty in handling this dataset’s complexity.

Table 8.

Performance analysis of CNN, LSTM, and biLSTM models on IDS datasets.

Table 9.

Performance metrics of different models on IDS imbalanced datasets.

LSTM shows superior recall values compared to CNN, particularly for CiCIoT, indicating better performance in detecting minority class instances. This suggests that the sequential nature of LSTM helps in identifying attack patterns over time. Additionally, LSTM achieves near-perfect classification on WUSTL-IIoT-2021. However, for datasets like ToNIoT, the improvement over CNN is marginal, suggesting that temporal dependencies in attack detection are less pronounced for this dataset.

The biLSTM model demonstrates mixed performance, showing some improvements in accuracy but a notable drop in recall for the BoTIoT and CiCIoT datasets. This reduction in recall may be attributed to the presence of overlapping or sparse temporal patterns in these datasets, which can hinder the model’s ability to effectively capture sequential dependencies. Although biLSTM maintains relatively high precision, the lower recall indicates challenges in generalizing temporal features across all classes. In contrast, on the WUSTL-IIoT dataset, biLSTM achieves the highest overall performance, highlighting its effectiveness in handling more structured and temporally consistent data.

From these results, we conclude that CNN performs well across most datasets but struggles with CiCIoT, where LSTM provides better recall. LSTM consistently delivers strong results, while biLSTM offers marginal improvements for certain datasets but struggles with recall. Therefore, the choice of model depends on the dataset’s characteristics, with LSTM being preferable for datasets with temporal dependencies and CNN excelling in structured datasets.
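The gap between accuracy and recall discussed above can be illustrated with a minimal sketch. The function name `per_class_metrics` and the toy labels below are illustrative assumptions, not the evaluation code or data used in this study; the sketch only shows how the standard definitions of accuracy, precision, recall, and F1 score behave when a classifier misses most minority class samples.

```python
import numpy as np

def per_class_metrics(y_true, y_pred, n_classes):
    """Return accuracy plus per-class precision, recall, and F1 from integer labels."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1  # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0).astype(float)  # how often each class was predicted
    actual = cm.sum(axis=1).astype(float)     # how many true samples each class has
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1

# Hypothetical imbalanced toy data: 95 benign (class 0) and 5 attack (class 1) samples.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 95 + [1] * 1 + [0] * 4  # only 1 of the 5 attacks is detected
acc, prec, rec, f1 = per_class_metrics(y_true, y_pred, n_classes=2)
print(f"accuracy={acc:.2f}, attack precision={prec[1]:.2f}, attack recall={rec[1]:.2f}")
```

In this toy case the accuracy and attack-class precision are high while the attack-class recall is low, which is the same qualitative pattern as a model that keeps high precision but loses recall.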

5.3.2. Comparison of Loss Functions

In this section, we analyze how different loss functions affect the intrusion detection models and compare their effectiveness across the datasets.

Table 10 shows that the cross-entropy (CE) loss performs well overall compared with the other loss functions, achieving the highest accuracy for WUSTL-IIoT-2021 and BoTIoT, along with strong precision and recall values. However, it struggles with CiCIoT, where accuracy drops to 0.83789 and the F1 score is relatively lower, indicating challenges in handling class imbalance.

Table 10.

Performance analysis of different loss functions.

Focal loss (FL), which is designed to address class imbalance by reducing the weight of well-classified instances and focusing on hard-to-classify cases, improves recall in CiCIoT; however, it does not significantly improve accuracy and even reduces precision compared to CE. On the other hand, on the WUSTL-IIoT dataset, FL performs better, showing some performance gains over CE. However, for ToNIoT, FL results in a slightly lower accuracy and MCC than CE.
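The down-weighting of well-classified instances described above can be sketched as follows. This is a minimal NumPy illustration of the standard focal loss form, in which the cross-entropy term is scaled by (1 - p_t)^gamma; the value gamma = 2 and the toy probabilities are assumptions for illustration and are not the settings used in our experiments.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Per-sample cross-entropy given predicted class probabilities."""
    p_t = probs[np.arange(len(labels)), labels]  # probability assigned to the true class
    return -np.log(np.clip(p_t, 1e-12, 1.0))

def focal_loss(probs, labels, gamma=2.0):
    """Per-sample focal loss: cross-entropy scaled by (1 - p_t) ** gamma."""
    p_t = probs[np.arange(len(labels)), labels]
    return (1.0 - p_t) ** gamma * cross_entropy(probs, labels)

# Hypothetical predictions for three samples over two classes.
probs = np.array([[0.95, 0.05],   # easy sample, true class 0
                  [0.30, 0.70],   # moderately easy sample, true class 1
                  [0.80, 0.20]])  # hard sample, true class 1
labels = np.array([0, 1, 1])

ce = cross_entropy(probs, labels)
fl = focal_loss(probs, labels, gamma=2.0)
print("CE:", np.round(ce, 4))
print("FL:", np.round(fl, 4))
```

With gamma = 0 the focal loss reduces to cross-entropy. For gamma > 0 the easy sample contributes far less to the total loss than the hard one, which is why FL tends to shift the model toward higher recall on difficult classes, sometimes at the cost of precision.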

Dual focal loss (DFL) results in the best overall accuracy for WUSTL-IIoT-2021 and BoTIoT, along with the highest precision values. However, DFL does not significantly improve CiCIoT performance, where the accuracy remains close to that of FL. The impact of DFL is more visible in datasets with balanced distributions, whereas its benefits for handling imbalanced datasets such as CiCIoT are marginal. Although DFL is designed to enhance performance on imbalanced datasets, its limited improvement on CiCIoT likely stems from high inter-class similarity and feature overlap among attack types. In such cases, loss function tuning alone does not yield significant performance gains without additional feature engineering or model complexity.

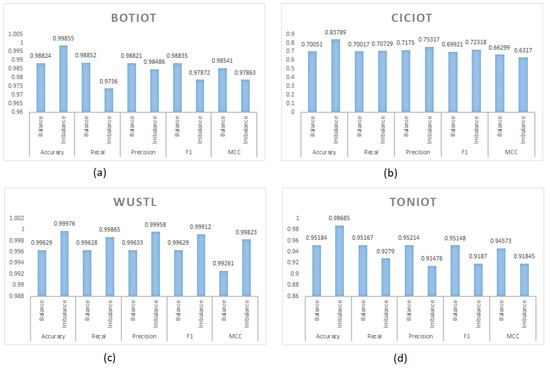

5.4. Effects of the Data-Balancing Technique

Data balancing through undersampling significantly influences the performance of IDS models across different datasets, as shown in Figure 2. In general, the imbalanced datasets yield higher accuracy than their balanced counterparts. For the BoTIoT and WUSTL-IIoT-2021 datasets, accuracy remains consistently high even when balancing is applied. However, the CiCIoT dataset experiences a substantial accuracy drop when balanced (0.70051 vs. 0.83789 in the imbalanced case), suggesting that balancing increases classification difficulty due to higher class overlap or insufficient attack representation in the reduced dataset.

Figure 2.

Comparison of performance of DL models for diverse datasets: (a) imbalanced BoTIoT dataset, (b) imbalanced CiCIoT dataset, (c) imbalanced WUSTL-IIoT-2021 dataset, (d) imbalanced ToNIoT dataset.

A closer look at the recall values reveals that BoTIoT and WUSTL-IIoT-2021 maintain high recall scores across both balanced and imbalanced datasets, indicating the suitability of the models for detecting attacks in these environments. However, CiCIoT exhibits a lower recall value when balanced using undersampling. This pattern suggests that while undersampling can correct biases in heavily skewed datasets, it may also eliminate essential attack instances in some datasets and thereby affect the model’s generalization ability.
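To make the data reduction concrete, the following is a minimal sketch of random undersampling in which every class is reduced to the size of the rarest class. The function name `random_undersample`, the seed, and the toy class counts are illustrative assumptions; the sketch is not the exact balancing procedure applied to the datasets in this study.

```python
import numpy as np

def random_undersample(X, y, seed=0):
    """Randomly drop rows so that every class keeps as many rows as the rarest class."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    n_keep = counts.min()
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_keep, replace=False)
        for c in classes
    ])
    keep.sort()  # preserve the original row order
    return X[keep], y[keep]

# Hypothetical toy data: 100 rows with class counts 90 / 7 / 3.
X = np.arange(200).reshape(100, 2)
y = np.array([0] * 90 + [1] * 7 + [2] * 3)
X_bal, y_bal = random_undersample(X, y)
print(np.bincount(y), "->", np.bincount(y_bal))
```

In this toy case 91 of the 100 rows are discarded, including more than half of the class 1 samples. A reduction of this kind is one plausible way in which balancing can remove informative attack instances and lower recall on a dataset such as CiCIoT.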

The precision results show that imbalanced datasets generally achieve higher precision scores, particularly for the WUSTL-IIoT-2021 dataset. However, the CiCIoT and ToNIoT datasets show precision losses after balancing, which implies that the models struggle with attack class discrimination when fewer training samples are available. This precision drop is also reflected in the F1 score, where CiCIoT reaches only 0.69921 under balanced conditions, reinforcing the difficulty of making accurate attack predictions with a reduced dataset.

We also evaluate the MCC scores to highlight the overall reliability of the models. The WUSTL-IIoT-2021 dataset shows the most stable MCC results. The MCC for CiCIoT drops when balanced, indicating that undersampling does not improve classification reliability for this dataset. This suggests that removing excess data from the majority class does not always lead to better classification performance, especially when the dataset structure is complex.
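The reason MCC is a useful complement to accuracy can be seen in a small worked example. The sketch below uses the standard binary form of MCC with hypothetical confusion matrix counts; the multi-class results reported in this study are not reproduced here, and the helper name `binary_mcc` is an assumption for illustration.

```python
import math

def binary_mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient for a binary confusion matrix."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # common convention when a row or column of the matrix is empty
    return (tp * tn - fp * fn) / denom

# Hypothetical counts: 95 benign and 5 attack samples, only 1 attack detected.
tp, fn, tn, fp = 1, 4, 95, 0
accuracy = (tp + tn) / (tp + tn + fp + fn)
mcc = binary_mcc(tp, tn, fp, fn)
print(f"accuracy={accuracy:.2f}, MCC={mcc:.2f}")
```

Here the accuracy is 0.96 while the MCC is well below 0.5, because MCC accounts for all four cells of the confusion matrix and penalizes the missed attacks that accuracy largely hides on skewed data.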

6. Conclusions

In this study, we conducted a comprehensive performance analysis of three deep learning models (CNN, LSTM, and biLSTM) on four datasets (BoTIoT, CiCIoT, ToNIoT, and WUSTL-IIoT-2021) using three distinct loss functions (cross-entropy, focal loss, and dual focal loss). We have shown that data balancing through undersampling is more effective than data balancing through oversampling or the synthetic generation of data. Moreover, the findings indicate that CNN is generally more effective than the LSTM and biLSTM models across the datasets, whereas LSTM and biLSTM are effective on datasets with a temporal character. This higher performance can be explained by the greater capacity of CNNs to capture complex features, which is essential in IoT-based intrusion detection systems. In the future, we aim to explore how combining the spatial feature extraction capabilities of CNNs with the temporal analysis strengths of LSTMs can enhance detection performance in more complex IoT settings. Model interpretability, through methods such as SHAP or LIME, can also support practical deployment. Lastly, testing each model under time constraints would help determine its practicality and the feasibility of deploying it in edge-based or cloud-based IoT security systems.

Author Contributions

Conceptualization, A.W. and S.D.K.; methodology, A.W. and S.D.K.; software, A.W. and Z.U.; validation, S.D.K., Z.U.; formal analysis, A.W. and S.D.K.; investigation, A.W.; resources, A.W., S.D.K. and Z.U.; writing—original draft preparation, A.W., M.U. and H.U.; writing—review and editing, A.W., M.U. and H.U.; supervision, S.D.K.; project administration, S.D.K., M.U. and H.U.; funding acquisition, S.D.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Program for Universities (NRPU) of the Higher Education Commission (HEC) of Pakistan, grant number 20-17332/NRPU/R&D/HEC/2021-2020.

Data Availability Statement

The sources of these datasets are clearly cited in the manuscript with appropriate references.

Acknowledgments

We gratefully acknowledge the Norwegian University of Science and Technology (NTNU), Norway, for covering the article processing charge (APC) through its Open Access Publishing Fund.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Aldhaheri, A.; Alwahedi, F.; Ferrag, M.A.; Battah, A. Deep learning for cyber threat detection in IoT networks: A review. Internet Things-Cyber-Phys. Syst. 2023, 4, 110–128.

- Srivastava, D.; Singh, R.; Chakraborty, C.; Maakar, S.K.; Makkar, A.; Sinwar, D. A framework for detection of cyber attacks by the classification of intrusion detection datasets. Microprocess. Microsyst. 2024, 105, 104964.

- Heyden, W.; Ullah, H.; Siddiqui, M.S.; Machot, F.A. RevCD–Reversed Conditional Diffusion for Generalized Zero-Shot Learning. arXiv 2024, arXiv:2409.00511.

- Tasfe, M.; Nivrito, A.; Al Machot, F.; Ullah, M.; Ullah, H. Deep Learning based Models for Paddy Disease Identification and Classification: A Systematic Survey. IEEE Access 2024, 12, 100862–100891.

- Azam, Z.; Islam, M.M.; Huda, M.N. Comparative analysis of intrusion detection systems and machine learning based model analysis through decision tree. IEEE Access 2023, 11, 80348–80391.

- Islam, N.; Farhin, F.; Sultana, I.; Kaiser, M.S.; Rahman, M.S.; Mahmud, M.; SanwarHosen, A.; Cho, G.H. Towards Machine Learning Based Intrusion Detection in IoT Networks. Comput. Mater. Contin. 2021, 69, 1801–1821.

- Al-Hadhrami, Y.; Hussain, F.K. Real time dataset generation framework for intrusion detection systems in IoT. Future Gener. Comput. Syst. 2020, 108, 414–423.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), Madeira, Portugal, 22–24 January 2018; Volume 1, pp. 108–116.

- Parmisano, A.; Garcia, S.; Erquiaga, M.J. A Labeled Dataset with Malicious and Benign IoT Network Traffic; Stratosphere Laboratory: Praha, Czech Republic, 2020.

- Lamjid, A.; Ariffin, K.A.Z.; Aziz, M.J.A.; Sani, N.S. Determine the optimal Hidden Layers and Neurons in the Generative Adversarial Networks topology for the Intrusion Detection Systems. In Proceedings of the 2022 International Conference on Cyber Resilience (ICCR), Dubai, United Arab Emirates, 6–7 October 2022; pp. 1–7.

- Liu, Z.; Ghulam, M.U.D.; Zhu, Y.; Yan, X.; Wang, L.; Jiang, Z.; Luo, J. Deep learning approach for IDS: Using DNN for network anomaly detection. In Proceedings of the Fourth International Congress on Information and Communication Technology: ICICT 2019, London, UK, 25–26 February 2019; Springer: Singapore, 2020; Volume 1, pp. 471–479.

- Vinayakumar, R.; Alazab, M.; Soman, K.P.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep learning approach for intelligent intrusion detection system. IEEE Access 2019, 7, 41525–41550.

- Thakkar, A.; Lohiya, R. Fusion of statistical importance for feature selection in Deep Neural Network-based Intrusion Detection System. Inf. Fusion 2023, 90, 353–363.

- Aleesa, A.; Younis, M.; Mohammed, A.A.; Sahar, N. Deep-intrusion detection system with enhanced UNSW-NB15 dataset based on deep learning techniques. J. Eng. Sci. Technol. 2021, 16, 711–727.

- Tang, C.; Luktarhan, N.; Zhao, Y. SAAE-DNN: Deep learning method on intrusion detection. Symmetry 2020, 12, 1695.

- Mennour, H.; Mostefai, S. A hybrid deep learning strategy for an anomaly based N-ids. In Proceedings of the 2020 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 9–11 June 2020; pp. 1–6.

- Al-Emadi, S.; Al-Mohannadi, A.; Al-Senaid, F. Using deep learning techniques for network intrusion detection. In Proceedings of the 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), Doha, Qatar, 2–5 February 2020; pp. 171–176.

- Liu, C.; Gu, Z.; Wang, J. A hybrid intrusion detection system based on scalable K-means+ random forest and deep learning. IEEE Access 2021, 9, 75729–75740.

- Hnamte, V.; Nhung-Nguyen, H.; Hussain, J.; Hwa-Kim, Y. A novel two-stage deep learning model for network intrusion detection: LSTM-AE. IEEE Access 2023, 11, 37131–37148.

- Hassan, M.M.; Gumaei, A.; Alsanad, A.; Alrubaian, M.; Fortino, G. A hybrid deep learning model for efficient intrusion detection in big data environment. Inf. Sci. 2020, 513, 386–396.

- Imrana, Y.; Xiang, Y.; Ali, L.; Abdul-Rauf, Z. A bidirectional LSTM deep learning approach for intrusion detection. Expert Syst. Appl. 2021, 185, 115524.

- Vellela, S.S.; Roja, D.; Purimetla, N.R.; Thalakola, S.; Vuyyuru, L.R.; Vatambeti, R. Cyber threat detection in industry 4.0: Leveraging GloVe and self-attention mechanisms in BiLSTM for enhanced intrusion detection. Comput. Electr. Eng. 2025, 124, 110368.

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the development of realistic botnet dataset in the internet of things for network forensic analytics: Bot-iot dataset. Future Gener. Comput. Syst. 2019, 100, 779–796.

- Neto, E.C.P.; Dadkhah, S.; Ferreira, R.; Zohourian, A.; Lu, R.; Ghorbani, A.A. CICIoT2023: A real-time dataset and benchmark for large-scale attacks in IoT environment. Sensors 2023, 23, 5941.

- Moustafa, N. A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets. Sustain. Cities Soc. 2021, 72, 102994.

- Booij, T.M.; Chiscop, I.; Meeuwissen, E.; Moustafa, N.; Den Hartog, F.T. ToN_IoT: The role of heterogeneity and the need for standardization of features and attack types in IoT network intrusion data sets. IEEE Internet Things J. 2021, 9, 485–496.

- Zolanvari, M.; Teixeira, M.A.; Gupta, L.; Khan, K.M.; Jain, R. WUSTL-IIOT-2021 Dataset for IIoT Cybersecurity Research; Washington University: St. Louis, MO, USA, 2021.

- Dina, A.S.; Siddique, A.; Manivannan, D. A deep learning approach for intrusion detection in Internet of Things using focal loss function. Internet Things 2023, 22, 100699.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).