Abstract

The spread of fake news on social media is complicated by the fact that false information spreads extremely fast in both textual and visual formats. Traditional approaches to fake news detection mainly analyze text or image features in isolation, thereby missing valuable information that emerges from combining images and text. In response, we propose a multimodal fake news detection method based on BERT that combines the post text with text extracted from images through Optical Character Recognition (OCR). We use BERT_base_uncased to process both inputs and to compute a confidence score that indicates the probability that the news is authentic. We report extensive experimental results on the ISOT, WELFAKE, TRUTHSEEKER, and ISOT_WELFAKE_TRUTHSEEKER datasets. Our proposed model demonstrates better generalization on the TRUTHSEEKER dataset, with an accuracy of 99.97% and an F1-score of 0.98, a substantial improvement over existing methods. Experimental results indicate a potential accuracy gain of +3.35% over the latest baselines. These results highlight the potential of our approach as a strong resource for automatic fake news detection that effectively integrates textual and visual data streams. The findings also suggest that training on diverse datasets makes detection systems more resilient to misinformation strategies.

1. Introduction

In recent years, the proliferation of fake news on social media has emerged as a serious challenge to public opinion, democratic processes, and societal trust. This challenge is intensified by the increasing reliance on digital platforms as the primary news source, making the public more vulnerable to misinformation [1]. According to a recent survey, 54% of U.S. adults obtain news from social media platforms, with Facebook and YouTube being the most preferred, followed by Instagram, TikTok, and X (formerly Twitter) [2]. In the same study, 67% of respondents identified Facebook, 65% social media in general, and 60% the broader internet as key sources of fake news exposure—significantly more than traditional outlets like television and print media [3].

This convergence of news consumption and misinformation exposure underscores the need for advanced detection mechanisms tailored to social media environments. Social media content is inherently multimodal, often combining text, images, and videos, which complicates the detection of fake news. In response, we propose a novel multimodal detection framework based on BERT (Bidirectional Encoder Representations from Transformers) to address the evolving nature of misinformation on social platforms [4].

While social media has democratized access to information, it has also fueled the spread of fake news—defined as deliberately misleading information—which poses risks to democracy, public health, and social cohesion [5,6]. Platforms like X, Facebook, and Instagram enable users to instantly share content, often without editorial oversight. This lack of gatekeeping facilitates misinformation campaigns, as seen in the 2016 U.S. election and during the COVID-19 pandemic, where online content influenced both public behavior and policy decisions [7,8].

Traditional fake news detection techniques rely on linguistic features such as sentiment, lexical diversity, and syntax, analyzed through machine learning [9]. While these methods are effective to some extent, they struggle to capture the complexity of media-rich environments. Visual content can enhance deception, and the integration of text and imagery forms multimodal messages that require joint analysis to be properly understood.

The societal consequences of fake news are extensive. It can influence voter decisions [10], erode institutional trust, and promote public health misinformation [11]. The virality of such content—often spreading faster than factual news—further amplifies its impact [12].

Text-only detection systems, despite leveraging methods such as term frequency–inverse document frequency (TF-IDF), part-of-speech tagging, and named entity recognition (NER) [13], remain limited in scope. Fake news creators continuously evolve their language and strategies to evade detection. Moreover, the growing use of images and videos in posts necessitates systems that analyze both textual and visual cues simultaneously.

Advances in deep learning have introduced powerful tools to address these challenges. BERT has revolutionized NLP by capturing contextual word representations, enhancing the accuracy of text understanding [14]. In multimodal contexts, the presence of inconsistencies between textual and visual content often signals deception [15], highlighting the need for integrated analysis of both modalities to improve detection performance.

This paper introduces a novel methodology for detecting fake news on social media using a multimodal BERT-based framework. Our approach incorporates both linguistic analysis and image-derived textual content, further enhanced by a cross-attention fusion mechanism. The major contributions of this work are:

- Demonstration of BERT’s power as an accurate tool for analyzing social media text to detect fake news.

- Development of a new framework that combines text and image features for multimodal fake news detection.

- Evaluation of the system’s effectiveness and generalizability on real-world datasets.

This research therefore contributes to building more robust and accurate fake news detection systems. Such systems can protect online discourse, increase public trust in credible news sources, and reduce the adverse effects of fake news on society.

The following sections review the existing literature on fake news detection, describe the proposed BERT-based multimodal framework in detail, and present our evaluation results using benchmark datasets.

2. Literature Review

2.1. Early Approaches

Early research on fake news detection relied on linguistic analysis with traditional machine learning techniques. Researchers used various linguistic and stylistic features to identify deceptive content in news articles. These methods relied mostly on keyword-based analysis, syntactic structures, and sentiment analysis to separate true from false information. However, these early attempts struggled with the sophistication and nuance of fake news, especially once social media became the primary news source for most people [16].

Fake news further exploited multimedia elements such as images and videos on social media platforms, including X, Facebook, Instagram, Reddit, and TikTok, to create an illusion of credibility. This new mode of news consumption compelled researchers to examine multimodal methods that use both visual and textual data [17].

Early experiments on multimodal fake news detection used simple feature concatenation, combining text and image features with low-level fusion techniques. Though promising, the techniques were not sophisticated enough to exploit the complementarity of textual and visual information. Thus, the challenge was to find models that would effectively exploit the interactions between different modalities to improve detection accuracy [18].

The focus in this era remained on textual analysis of news reports, as early social media applications were text-intensive [19]. Examples include SVM and Naive Bayes classifiers applied to features such as n-grams and TF-IDF scores [20]. Most of these models, however, could not capture complex relationships in language, which led to false positives and false negatives during testing.
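To make the classic text-only pipeline concrete, the following is a minimal TF-IDF sketch in plain Python. It assumes whitespace tokenization, term frequency as raw count divided by document length, and an unsmoothed idf of log(N / df). Libraries such as scikit-learn use a smoothed variant by default. In the SVM and Naive Bayes approaches described above, vectors like these would be the classifier's input features.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    tf = raw count / document length; idf = log(N / df), unsmoothed
    (an assumption made for readability).
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: the number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


w = tf_idf(["shocking news today", "news today"])
# Terms present in every document get zero weight; rarer terms are boosted.
print(w[0])
```

Terms such as "news" that appear in every document receive zero weight. This illustrates why such surface statistics struggle with the more complex linguistic relationships noted above.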

The late 2010s were a period of immense progress in fake news detection, with deep learning techniques being the driving force behind it. CNNs had become the go-to modeling choice for visual information and RNNs and their variants had proven to be highly effective for sequential text modeling [21].

Deep learning partly alleviated the bottlenecks discussed above, but efficiently fusing textual and visual information remained a problem. Various fusion schemes, such as attention mechanisms, have been proposed. Attention lets a model weigh information across features and focus selectively on the details most relevant to deciding whether a piece of news is fake [22].

During this stage, the field shifted towards combining multimodal data to improve detection performance. Researchers proposed models that fuse text and image features with attention-based techniques, which improved classification performance. However, the lack of a unified framework for integrating the modalities remained a bottleneck, since models could not fully exploit the complementary capabilities of textual and visual data [23].

CNNs also opened the way for analyzing visual data, allowing new and complex patterns to be extracted from images [24]. This capability helps significantly in identifying manipulated images, which are prevalent in fake news narratives. Meanwhile, RNNs extend language analysis beyond static textual evaluation by modeling word sequences over time. Research at this point exposed the limitations of traditional feature extraction techniques and shifted the emphasis towards dynamic models that can keep pace with how quickly fake news evolves. Deep learning techniques, with their ability to learn and generalize from vast amounts of data, presented a promising direction.

2.2. BERT and the Rise of Multimodal Approaches

The emergence of the BERT model in 2018 sparked new developments. By integrating contextual and semantic information from its input text, BERT enabled more productive feature extraction from textual sources and became vital for fake news detection [25]. Research during this period explored whether BERT’s transformer architecture could capture the complex interactions between text and image data, making it another promising candidate for multimodal integration [26].

Several works used BERT to extract text features and pre-trained visual models, such as VGG-19, for image analysis [27]. While BERT performed well at capturing textual semantics, extending it to capture visual data effectively was a challenge. This challenge led to complex fusion techniques designed to exploit the complementarity between textual and visual information [28]. These advances employ the self-attention mechanism of transformers to determine which text and image features are most relevant, improving the ability to identify inconsistencies and manipulations [29].

However, how to combine textual and visual information effectively remains an open question. Fusion techniques such as hierarchical attention networks and contrastive learning have tried to address modality interaction. These methods let models learn to attend more to the features that matter for fake news detection while assigning lower weights to others [30].

2.3. Advances in Multimodal Fusion and BERT

Subsequent work built on these earlier attempts to develop more sophisticated multimodal fusion techniques capable of fully exploiting the complementary nature of textual and visual data. Strategies emerged that improved multimodal integration through attention mechanisms, contrastive learning, or cross-attention networks [31,32]. For example, [33] proposed a method that relied on BERT for textual and visual feature extraction, with a cross-attention network to fuse the two modalities.

Another study proposed a contrastive learning framework that used BERT for text analysis and a ResNet model [34] for image feature extraction. It applied a contrastive loss between text and image representations to improve fake news detection. Other research explored hierarchical encoding networks that extract the rich hierarchical semantics of textual content. By using BERT as the backbone for textual feature extraction, such models could capture subtle relationships between words and phrases and improve fake news detection performance [22].

Detection accuracy can therefore be improved by incorporating different fusion strategies. Researchers have discussed and applied several of them, such as attention-based mechanisms [35], contrastive learning, and cross-attention networks, to capture inter-modality interactions and represent multimodal data effectively [36].

Capturing complex relations between text and images effectively is a major challenge with multimodal data. Researchers have proposed techniques such as entity-enhanced fusion frameworks and multimodal consistency networks to address it. These advanced fusion mechanisms integrate text and image features, capture intricate relationships between modalities, and improve fake news detection performance [37].

Recent advances in fusion techniques and cross-modal integration have shown that BERT can be applied successfully to both visual and textual data [38]. Leveraging BERT’s transformer architecture, researchers have modeled complex interactions between modalities to obtain more accurate and robust fake news detection models [39]. Such approaches outperform traditional methods, showing BERT’s potential to improve multimodal integration and detection precision [40,41].

Generative Adversarial Networks (GANs) [42] have been extensively used for tasks that involve multimodal data, because they can learn and generate complex distributions. For the detection of fake news, GANs have been used to generate multimodal data and augment the training of models by overcoming issues related to data imbalance or generating adversarial examples to make robust model evaluation possible. GAN-based approaches typically consist of a generator, which generates synthetic samples, and a discriminator, which distinguishes between real and generated samples. Although effective, such methods often require substantial computational resources and risk introducing artifacts or biases during synthetic data generation, which can negatively impact downstream performance.

In contrast, the approach proposed in this paper uses a cross-attention mechanism and multimodal feature integration to directly model interactions and discrepancies across different modalities, such as text and images. Unlike GANs, this approach does not involve synthetic data generation but instead focuses on enhancing feature representation through attention mechanisms. This allows the model to dynamically prioritize critical features without the computational overhead associated with adversarial training. In addition, the employment of the BERT model in the proposed framework enables a strong understanding of the textual data, which helps complement the cross-modal interactions.

While GANs are particularly strong in data augmentation and adversarial testing, the proposed methodology is designed with real-world applications in mind, where interpretability, computational efficiency, and direct multimodal feature integration are valued. This makes the proposed approach a complementary alternative to generative models like GANs in the domain of multimodal fake news detection.

Despite these advances, multimodal fake news detection still faces many problems. One of the most difficult is the limited availability of large multimodal datasets, since models perform well only when trained on richly representative multimodal data. Moreover, fake news is dynamic: new content and formats emerge daily, testing whether models remain relevant over time [41].

Another challenge is model interpretability, or explainability. As fake news detection systems grow more complex, researchers must develop methods that enable users to understand and trust model decisions. This includes techniques for visualizing attention mechanisms and feature importance, as well as frameworks for evaluating model performance and reliability [43].

2.4. Comparison with State-of-the-Art (SoTA) Approaches

Most existing SoTA approaches are restricted by the unavailability of large, richly representative multimodal datasets, which usually hinders their performance and generalizability. Our work addresses this limitation by incorporating text extracted from images directly into a single BERT-based framework. The approach combines publicly available datasets with an image-text extraction pipeline, making a much larger multimodal corpus available. Our method thus reduces the data scarcity challenge by effectively fusing text and images into a unified model. BERT’s contextual embeddings allow the model to adapt more easily to new linguistic variations and emerging social media trends. Furthermore, our pipeline supports periodic retraining or fine-tuning with newly collected data to maintain relevance and robustness against evolving fake news formats.

Table 1 presents a comparative analysis of recent state-of-the-art multimodal fake news detection approaches and their key findings.

Table 1.

Comparative analysis of state-of-the-art approaches with the proposed approach.

3. Dataset

For this research, the ISOT Fake News Detection Dataset was utilized. This well-curated dataset is specifically designed for binary text classification tasks in fake news detection and is publicly available on Kaggle [44]. Renowned for its robustness and comprehensiveness, the ISOT dataset provides a reliable foundation for developing and evaluating emotion-aware fake news detection models.

The dataset consists of a total of 44,919 news articles, divided into two classes: 21,417 labeled as “true” and 23,502 labeled as “fake”. This balanced distribution allows the model to learn from a diverse range of linguistic styles and rhetorical patterns characteristic of both authentic and deceptive content [45].

To ensure generalizability and robustness, the dataset was split into training, testing, and validation sets. Specifically, 50% of the samples were used for training, 20% for testing, and the remaining 30% for validation. This stratified split supports effective learning while providing a strong benchmark for performance evaluation on unseen samples [46].

The data is provided in two primary files: fake.csv and true.csv, each containing respective news articles. This format simplifies preprocessing and integration into the model pipeline. Given its source and detailed compilation by Appen, the dataset serves as a solid basis for building a sophisticated emotion-aware fake news detection system. Its focus on emotional content in relation to article veracity provides valuable insight into the psychological and linguistic dynamics of misinformation [47].
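The two-file layout can be merged into one labeled corpus in a few lines. The following is a minimal standard-library sketch. The function name `load_isot` is hypothetical, and the column names `title` and `text` are assumptions about the CSV headers. The labels follow the convention used in this paper: 0 for fake and 1 for true.

```python
import csv
import random


def load_isot(fake_path="fake.csv", true_path="true.csv", seed=42):
    """Hypothetical loader: merge the two ISOT files into one shuffled list.

    Each row becomes (title, text, label) with 0 = fake and 1 = true.
    The "title" and "text" column names are assumed, not confirmed.
    """
    rows = []
    for path, label in ((fake_path, 0), (true_path, 1)):
        with open(path, newline="", encoding="utf-8") as f:
            for record in csv.DictReader(f):
                rows.append((record["title"], record["text"], label))
    # Shuffle so that fake and true articles are interleaved before splitting.
    random.Random(seed).shuffle(rows)
    return rows
```

A fixed seed keeps the shuffle reproducible, so the same split can be regenerated across runs.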

4. Problem Statement

Social media platforms make it easy to distribute information, and misinformation, at incredibly fast rates, which makes them fertile ground for fake news. Because such content is often multimodal, containing images or video alongside text, detection mechanisms that rely on textual analysis alone prove inadequate. This limitation points to the need for an approach that integrates text and visual information within a single framework. Accordingly, this paper aims to combine the linguistic depth of BERT with multimodal fusion techniques to detect fake news accurately. By incorporating both text and images into a single framework in real time, it aims to produce a reliable confidence score that measures the authenticity of content posted on social media.

4.1. Computational Environment

All training and scripting were done in Google Colab’s GPU environment, which provided an NVIDIA Tesla K80 with 12 GB of VRAM. This was sufficient for the large datasets and computationally demanding deep learning models. Local computations were performed on a laptop with a 10th-generation Intel Core i7 processor, 16 GB of RAM, and a 256 GB SSD. This configuration allowed quick development cycles, initial data processing, and performance tests without cloud resources [48].

4.2. Data Collection

Three publicly available datasets—ISOT [49], WELFAKE [50], and TRUTHSEEKER [51]—were utilized to ensure a comprehensive evaluation of misinformation detection across diverse sources. Additionally, a concatenated dataset, ISOT_WELFAKE_TRUTHSEEKER, was created by merging the three datasets to provide a broader representation of real and fake news variations.

Each dataset underwent text cleaning, tokenization, and normalization, preserving key linguistic and stylistic features. For image-based text, Optical Character Recognition (OCR) techniques were employed to extract embedded textual content, ensuring robust cross-modal feature alignment.

To maintain authenticity, punctuation and errors in the fake news texts were preserved in the dataset. Most of the records fall between 2016 and 2017, detailing metadata such as title, text, publication date, and type of article. Each sample gathered was timestamped with metadata that included source platform details, date of publication, among other data, which, in turn, facilitated subsequent analytical processing.

Each sample therefore combines textual data T, from tweets, and image data I, from Instagram posts.
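The paper does not detail how the concatenated ISOT_WELFAKE_TRUTHSEEKER dataset was assembled. The following sketch shows one plausible way to do it. It assumes each source dataset has already been reduced to (text, label) pairs with 0 = fake and 1 = real. The function name `merge_datasets` is hypothetical. Dropping exact duplicate texts (after trimming and lowercasing) is our assumption, not a step stated in the paper. It reduces the risk that one article lands in both the training and test splits of the merged corpus.

```python
def merge_datasets(named_datasets):
    """Hypothetical helper: concatenate labeled datasets, tagging each row's source.

    named_datasets maps a dataset name to a list of (text, label) pairs.
    When the same text appears twice, the first occurrence wins.
    """
    seen = set()
    merged = []
    for name, rows in named_datasets.items():
        for text, label in rows:
            key = text.strip().lower()  # normalize only for duplicate detection
            if key in seen:
                continue
            seen.add(key)
            merged.append({"text": text, "label": label, "source": name})
    return merged


corpus = merge_datasets({
    "ISOT": [("Story A", 0), ("Story B", 1)],
    "WELFAKE": [("story a ", 0), ("Story C", 1)],
    "TRUTHSEEKER": [("Story D", 0)],
})
```

Keeping a `source` field makes it possible to report per-dataset results on the merged corpus.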

4.3. Preprocessing

Preprocessing included several critical steps to normalize and enhance the data before analysis.

Text Cleaning: Natural language processing techniques were applied to remove noise such as URLs, special characters, and emojis. Regular expressions and natural language toolkits were used to standardize the text for processing.

Image Preprocessing: Image quality was improved, for example by enhancing contrast and removing noise, so that any text contained in an image would be easily readable during OCR extraction.

The output of this stage is a preprocessed image ready for OCR and a cleaned text.
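The text-cleaning step can be sketched with Python's `re` module. The patterns below are assumptions, since the paper does not list its exact expressions. The emoji ranges are deliberately rough rather than exhaustive. Basic punctuation is kept because Section 4.2 states that punctuation in the fake news texts was preserved. Lowercasing is left to the uncased BERT tokenizer.

```python
import re

# Illustrative patterns for the noise categories named in the text (assumptions).
URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Rough emoji and pictograph ranges; not an exhaustive emoji definition.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
# Anything other than letters, digits, whitespace, and basic punctuation.
SPECIAL_RE = re.compile(r"[^A-Za-z0-9\s.,!?'\"-]")
SPACE_RE = re.compile(r"\s+")


def clean_text(text):
    """Remove URLs, emojis, and special characters, then collapse whitespace."""
    text = URL_RE.sub(" ", text)
    text = EMOJI_RE.sub(" ", text)
    text = SPECIAL_RE.sub(" ", text)
    return SPACE_RE.sub(" ", text).strip()


print(clean_text("Breaking!!! see https://t.co/xyz \U0001F631 #fake @user"))
```

In this example, the URL, the emoji, and the `#` and `@` symbols are stripped, while the exclamation marks survive.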

4.4. Feature Extraction

- BERT for Text Analysis: The cleaned text data is passed through the BERT model to obtain deep contextual embeddings. These embeddings capture subtle nuances and semantic relationships in the text, representing the meaning the message conveys.

- Text Extraction by OCR: OCR was applied to the preprocessed images to extract the text embedded in them [52]. The OCR-extracted text proved useful as a supplement, especially when images contained headlines or other textual news content. The features passed to the next stage therefore consist of the textual features embedded by BERT and the text extracted from images.

4.5. Multimodal Integration

At this stage, the features derived from the post text and from the image text extracted by OCR are combined into a comprehensive feature set.

Feature Concatenation: The text features and the OCR-extracted text were concatenated, combining the strengths of textual and image-based text features into a robust dataset for analysis.
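One common way to feed two text sources to a single BERT model is the sentence-pair layout `[CLS] post [SEP] ocr [SEP]`, where segment IDs distinguish the two parts. The sketch below builds that layout from pre-tokenized words. It is an illustration under that assumption, not the paper's confirmed input format. The helper name `build_pair_input` is hypothetical. Trimming the longer segment first mirrors the common "longest first" truncation strategy.

```python
def build_pair_input(post_tokens, ocr_tokens, max_len=16):
    """Hypothetical helper: join post and OCR tokens as a BERT-style sentence pair.

    Returns (tokens, segment_ids): segment 0 covers [CLS], the post, and the
    first [SEP]; segment 1 covers the OCR text and the final [SEP].
    """
    if max_len < 3:
        raise ValueError("max_len must leave room for [CLS] and two [SEP] tokens")
    post, ocr = list(post_tokens), list(ocr_tokens)
    budget = max_len - 3
    # Truncate the longer segment first until both fit.
    while len(post) + len(ocr) > budget:
        if len(post) >= len(ocr):
            post.pop()
        else:
            ocr.pop()
    tokens = ["[CLS]"] + post + ["[SEP]"] + ocr + ["[SEP]"]
    segment_ids = [0] * (len(post) + 2) + [1] * (len(ocr) + 1)
    return tokens, segment_ids


tokens, segments = build_pair_input(["vaccine", "causes", "harm"], ["breaking", "news"])
```

With Hugging Face tokenizers, the same layout is produced by passing the OCR text as the second (`text_pair`) argument of the tokenizer call.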

Cross-Attention Mechanism: An attention mechanism was applied across the concatenated dataset to identify important features [53]. Cross-attention is effective at highlighting mismatches between input modalities. Targeting these discrepancies and anomalies strengthened the model’s ability to classify news articles as genuine or fake.

The mechanism computes attention scores across all the modalities. Specifically, given the integrated feature set $F$, the cross-attention mechanism generates enhanced features $F'$ as follows:

- Query-Key-Value Construction: Each modality contributes to the construction of the query, key, and value matrices, $Q = F W_Q$, $K = F W_K$, and $V = F W_V$, where $W_Q$, $W_K$, and $W_V$ are learnable weight matrices.

- Attention Score Computation: The attention scores are calculated as $A = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right)$, where $d_k$ is the dimensionality of the key vectors and the softmax function normalizes the scores.

- Weighted Aggregation of Features: The attention scores are then used to weight the value vectors, $F' = A V$.

- Integration with the Classifier: The enhanced features are passed to the classifier for the final prediction, $p = \mathrm{Classifier}(F')$, where $p$ represents the probability of the content being fake.
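The following is a minimal, framework-free sketch of the scaled dot-product attention described in these steps. Matrices are plain lists of rows. The function name `attention` and its two-argument design are assumptions made for illustration. Passing the same integrated feature matrix as both arguments reproduces the form described above. Passing text features as queries and OCR-text features as context gives a cross-modal variant in which each text token attends over the image-derived text.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query_feats, context_feats, w_q, w_k, w_v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Returns (enhanced_features, attention_weights).
    """
    q = matmul(query_feats, w_q)
    k = matmul(context_feats, w_k)
    v = matmul(context_feats, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights


identity = [[1.0, 0.0], [0.0, 1.0]]
text = [[1.0, 0.0]]                           # one text-token feature vector
ocr = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]    # three OCR-token feature vectors
enhanced, weights = attention(text, ocr, identity, identity, identity)
```

In the example, the single text vector assigns the most weight to the OCR vector it most resembles. In a trained model, $W_Q$, $W_K$, and $W_V$ would be learned rather than set to the identity.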

This mechanism allows the model to dynamically focus on the most relevant aspects of the input data, enabling it to detect subtle inconsistencies across modalities. The model’s ability to leverage the cross-attention mechanism makes it capable of superior performance in identifying fake news with a higher degree of accuracy and robustness.

4.6. Classification

Classification was performed on the enhanced integrated features $F'$ using a neural network classifier. This deep neural network refines the features through multiple successive layers to produce the final outcome.

The classification process is based on probability estimation and thresholding. The neural network outputs a probability score $p$ indicating the likelihood that the news is fake. A classification decision is then made using a predefined threshold $T$:

$$\text{Label} = \begin{cases} \text{Fake}, & p \ge T \\ \text{Real}, & p < T \end{cases}$$

This threshold-based approach ensures that news items are labeled as fake if the probability score meets or exceeds the threshold, and as real otherwise [54]. This method improves interpretability and reliability in the classification process, leveraging refined features and advanced neural network capabilities.
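The decision rule reduces to a single comparison. The following is a minimal sketch of it. The paper does not state the value of the threshold T, so the default of 0.5 below is an assumption. The function name `classify` is illustrative.

```python
def classify(p, threshold=0.5):
    """Label content "fake" when p >= threshold, otherwise "real".

    p is the predicted probability that the content is fake.
    The 0.5 default is an assumed value for the unspecified threshold T.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return "fake" if p >= threshold else "real"


labels = [classify(p) for p in (0.49, 0.5, 0.93)]
```

Raising the threshold trades recall on fake news for fewer false alarms on genuine content. The appropriate value depends on which error is more costly in a given deployment.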

4.7. Confidence Score Calculation

In this study, the “Confidence Score” is a direct, interpretable measure of the model’s probability outputs. Specifically, if $p$ denotes the model’s predicted probability that a piece of content is fake, then we define:

$$\text{Confidence Score}_{\text{fake}} = p \times 100\%, \qquad \text{Confidence Score}_{\text{real}} = (1 - p) \times 100\%$$

This transformation of the raw probability into a percentage enables end-users to clearly gauge how likely a piece of content is to be fake or real. For instance, if $p = 0.75$, then $\text{Confidence Score}_{\text{fake}} = 75\%$ and $\text{Confidence Score}_{\text{real}} = 25\%$.

This simple and transparent metric is crucial for enhancing the interpretability of the model’s predictions and guiding informed decision-making.
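The confidence-score definition above translates directly into code. The following is a minimal sketch. The function name `confidence_scores` and the rounding to two decimals are illustrative choices, not details from the paper.

```python
def confidence_scores(p):
    """Convert the fake-class probability p into percentage confidence scores.

    Returns (confidence_fake, confidence_real), rounded to two decimals.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return round(p * 100, 2), round((1 - p) * 100, 2)


fake_pct, real_pct = confidence_scores(0.75)  # the worked example in the text
```

Because the two scores are complementary, they always sum to 100% (up to rounding). Reporting both therefore adds readability rather than new information.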

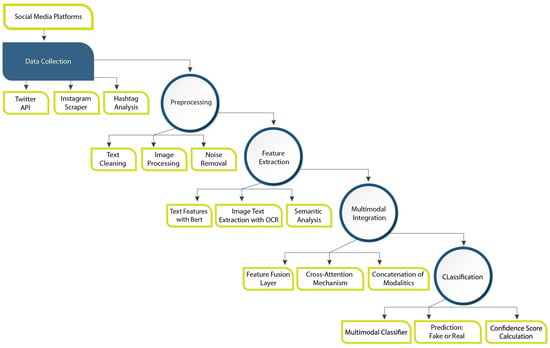

4.8. Block Diagram

The methodology flow diagram outlines a comprehensive detection scheme that integrates data from X, Instagram, and other social media platforms. The data first passes through preprocessing, which comprises text cleaning, image processing, and noise removal. Key features are then extracted for semantic analysis, using BERT for text and OCR for image text. A multimodal integration step combines these features through concatenation and cross-attention into a single fused representation. Finally, the multimodal classifier labels the news as real or fake, and the confidence score quantifies how reliable the prediction is. Figure 1 illustrates the overall methodology workflow of the proposed model.

Figure 1.

Block diagram representing the overall methodology workflow.

5. Experimental Setup and Results

This work focuses on multimodal fake news detection and reports a significant advance by using pre-trained transformer-based models, particularly BERT. This paper tests a BERT-based multimodal approach to fake news detection across four different datasets: ISOT, WELFAKE, TRUTHSEEKER, and ISOT_WELFAKE_TRUTHSEEKER.

Results are systematically analyzed to emphasize the effectiveness of our approach. The pre-trained model, BERT-Base-Uncased, is renowned for its robust NLP capabilities and thus serves as the backbone of our model. Using multiple transformer layers that employ self-attention mechanisms, BERT can capture contextual relationships between words effectively. The model is further enhanced by fine-tuning it with textual and embedded image text, thereby allowing it to differentiate between authentic and deceptive news.

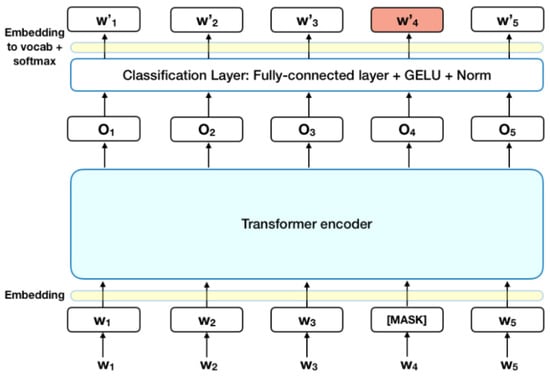

The architecture of the BERT_base_uncased model used in our study is illustrated in Figure 2.

Figure 2.

BERT_base_uncased model architecture.

- Input Layer: The model receives tokenized text sequences. Each token is represented as a numerical ID, and sequences are padded to a fixed length so that all inputs are uniform.

- Embedding Layer: The token IDs are converted into dense vector representations that encode the meaning and syntax of each word.

- Encoder Layers: The input sequence then passes through a stack of encoder layers, each consisting of a multi-head self-attention mechanism and a feedforward neural network. Self-attention lets the model focus on particular words in a sequence according to how strongly each word interacts with the other words.

- Pooling Layer: At the end of the encoder, a pooling operation, typically mean or max pooling, produces a fixed-size representation of the input sequence.

- Classifier Layer: The pooled representation is passed through a fully connected layer with softmax activation to predict the probability that the input sequence belongs to the fake or the real class.
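The input-layer padding and the mean-pooling step can be sketched in plain Python. The following is an illustration, not the model's internal code. The helper names are hypothetical. In the bert-base-uncased vocabulary, ID 0 is `[PAD]`, 101 is `[CLS]`, and 102 is `[SEP]`. The truncation here is naive and can cut off the final `[SEP]`, which real tokenizers preserve. Note also that Hugging Face's built-in BERT pooler instead passes the `[CLS]` hidden state through a dense layer with tanh activation. The masked mean below corresponds to the mean-pooling option named in the text.

```python
def pad_ids(token_ids, max_len, pad_id=0):
    """Truncate or pad a token-ID sequence; returns (ids, attention_mask).

    The mask is 1 for real tokens and 0 for padding. Truncation is naive.
    """
    ids = list(token_ids[:max_len])
    mask = [1] * len(ids)
    padding = max_len - len(ids)
    return ids + [pad_id] * padding, mask + [0] * padding


def masked_mean_pool(hidden_states, mask):
    """Average the token vectors whose mask is 1, ignoring padding positions."""
    kept = [vec for vec, m in zip(hidden_states, mask) if m]
    if not kept:
        raise ValueError("mask selects no tokens")
    dim = len(hidden_states[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


ids, mask = pad_ids([101, 7592, 102], max_len=5)
pooled = masked_mean_pool([[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]], [1, 1, 0])
```

Masking matters here: averaging over padding vectors would make the pooled representation depend on how much padding a sequence received.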

This paper uses the BERT-Base-Uncased model with 12 transformer layers, 768-dimensional hidden states, and 12 attention heads. It has about 109 million parameters, reflecting its capacity to learn complex representations of the input data.

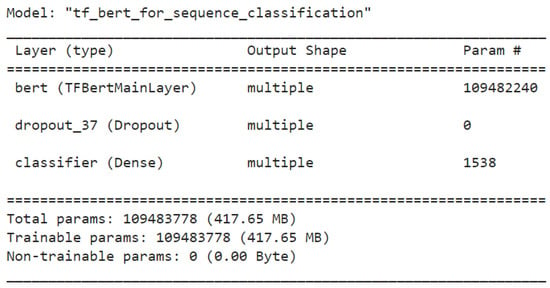

The key parameters of the BERT_base_uncased model are summarized in Figure 3.

Figure 3.

Parameters of the BERT_base_uncased model.

TFBertMainLayer: The core of the model, a pre-trained BERT language model that processes the input sequence to extract suitable features.

Dropout: A dropout layer randomly drops the neurons during training to prevent overfitting.

Classifier: A dense layer with two output units (768 × 2 weights plus 2 biases, i.e., 1,538 parameters), followed by a softmax function for classification.

The model has 109,483,778 parameters, all of them trainable, which gives it a very large capacity to learn complex patterns in the data.
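The reported total can be checked by counting parameters layer by layer. The sketch below uses the standard bert-base-uncased configuration: a vocabulary of 30,522, 512 position embeddings, 2 token types, a hidden size of 768, an intermediate size of 3,072, and 12 layers. It adds a two-unit linear classifier head. The function name is illustrative. The count assumes one LayerNorm in the embeddings and two per encoder layer, as in the standard architecture.

```python
def bert_param_count(vocab=30522, hidden=768, layers=12, intermediate=3072,
                     max_pos=512, type_vocab=2, num_labels=2):
    """Count parameters of a BERT encoder plus pooler and a linear classifier head.

    Returns (encoder_with_pooler, classifier, total).
    """
    def dense(n_in, n_out):
        return n_in * n_out + n_out  # weights + biases

    layer_norm = 2 * hidden  # gamma + beta
    embeddings = (vocab + max_pos + type_vocab) * hidden + layer_norm
    per_layer = (4 * dense(hidden, hidden)       # Q, K, V, and attention output
                 + layer_norm
                 + dense(hidden, intermediate)   # feedforward expansion
                 + dense(intermediate, hidden)   # feedforward projection
                 + layer_norm)
    pooler = dense(hidden, hidden)
    encoder = embeddings + layers * per_layer + pooler
    classifier = dense(hidden, num_labels)
    return encoder, classifier, encoder + classifier


encoder, classifier, total = bert_param_count()
```

The encoder and pooler account for 109,482,240 parameters. The classifier head adds 768 × 2 + 2 = 1,538, giving the 109,483,778 reported above.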

To achieve generalizability and robustness, we split the data into training, testing, and validation sets in an 80:10:10 ratio. This stratified split enables proper training of the model while providing a good benchmark for evaluating its performance on unseen data [46]. The datasets are divided mainly into two files, fake.csv and true.csv, which contain all the relevant articles. This structure allows easy preprocessing and integration into the model development pipeline [47].
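A stratified split keeps the fake/real proportion the same in every subset. The following is a minimal standard-library sketch that returns index lists in the order (training, testing, validation). The function name and seed are illustrative. The ratios are a parameter, so the same function covers both the 80:10:10 split used here and the 50:20:30 split described in Section 3. Per-class counts are rounded, and any remainder goes to the validation set.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.8, 0.1, 0.1), seed=42):
    """Split sample indices into (train, test, validation), preserving class proportions."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    splits = ([], [], [])
    for indices in by_class.values():
        rng.shuffle(indices)
        n = len(indices)
        n_train = round(n * ratios[0])
        n_test = round(n * ratios[1])
        splits[0].extend(indices[:n_train])
        splits[1].extend(indices[n_train:n_train + n_test])
        splits[2].extend(indices[n_train + n_test:])  # remainder -> validation
    return splits


labels = [0] * 50 + [1] * 50
train_idx, test_idx, val_idx = stratified_split(labels)
```

For larger corpora, library routines such as scikit-learn's `train_test_split` with its `stratify` argument, applied twice, achieve the same effect.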

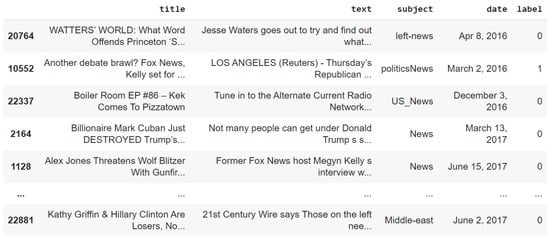

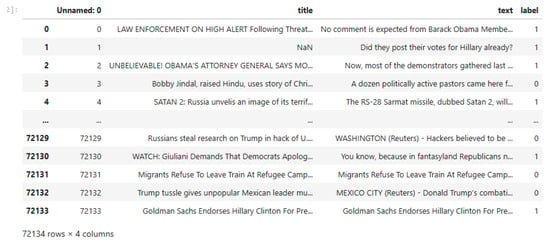

Figure 4 is an example of ISOT data where each news article has a label as either ‘fake’ or ‘true’. In this representation, ‘0’ is labeled for fake news and ‘1’ is labeled for true news. The columns here include the title of the article, the text body, the subject category, the date of publication, and the corresponding label. The labeling is important because the model needs to learn how to differentiate “True” from “Fake” news content.

Figure 4.

Sample ISOT dataset showcasing news articles labeled as ‘0’ for fake and ‘1’ for true.

Figure 5 shows a sample of the WELFake dataset, a large aggregation of news articles intended to make machine learning models that classify news as real or fake more generalizable. The dataset contains a total of 72,134 news articles, 35,028 labeled as authentic and 37,106 as deceptive. To reduce the bias that comes from relying on a single dataset, it aggregates data from multiple sources: Kaggle, McIntire, Reuters, and BuzzFeed Political. Each article has four columns: a serial number, a headline (Title), the main text (Text), and the corresponding binary label (Label: 0 for fake, 1 for real). The dataset covers a wide range of textual misinformation and provides a broader framework for assessing the effectiveness of fake news detection models.

Figure 5.

Sample WELFake dataset showcasing news articles labeled as ‘0’ for fake and ‘1’ for true.

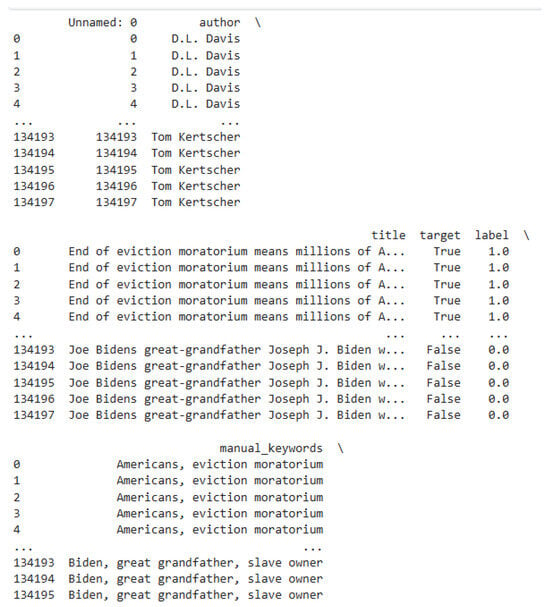

Figure 6 presents the TruthSeeker2023 dataset, one of the most extensive benchmark datasets for fake news identification on social media, particularly Twitter, with over 180,000 labeled tweets. The tweets relate to both genuine and fabricated news events derived from the PolitiFact dataset. The labels were verified through a three-factor active learning process based on crowdsourced annotation by 456 expert annotators on Amazon Mechanical Turk. The dataset also provides three social media metrics: Bot Score, Credibility Score, and Influence Score. By combining tweet features with user metadata, it supports both deep learning and traditional machine learning detection methods. The dataset has been rigorously tested with different BERT models, clustering-based event detection approaches, and methods that assess the authenticity of X posts from the content features of their originators and spreaders.

Figure 6.

Sample TruthSeeker dataset showcasing news articles labeled as ‘0’ for fake and ‘1’ for true.

5.1. Model Training and Validation

The datasets were split into training, validation, and test sets to provide a robust framework for evaluation. The split ratios were adjusted to balance data availability against model generalization. The training phase on all datasets showed high accuracy; the variations in generalization ability were reflected in the validation and test phases.

The ISOT dataset yielded near-perfect results, with 99.7% accuracy across the training, validation, and test phases. On the WELFAKE dataset, the model generalizes moderately, at 94% accuracy, which implies that this dataset contains more diverse or challenging samples. On the TRUTHSEEKER dataset, the model reached 98% accuracy, balancing model complexity with real-world applicability (see Table 2).

Table 2.

Model Performance Metrics Across Training, Validation, and Test Phases.

The ISOT_WELFAKE_TRUTHSEEKER dataset had the most diverse input, with 80% training accuracy but 96–98% validation and test accuracy, which implies that the model adapted well to varied patterns. This resembles results reported in previous work [55], in which BERT models trained on structured datasets performed well at classification but lacked domain adaptation and broader generalization.

The training and evaluation steps of the BERT model are summarized in Algorithm 1.

Algorithm 1. BERT Model Training and Evaluation Steps

Input: Dataset D containing real and fake news.
Output: Trained BERT model, best model checkpoint, and evaluation metrics.
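Because the body of Algorithm 1 is not reproduced here, the following is only a generic sketch of the checkpointing logic its output implies: train for a number of epochs, evaluate on the validation split after each epoch, and keep a copy of the best-scoring model. The callables `train_one_epoch` and `evaluate` are hypothetical placeholders for the actual BERT fine-tuning and validation steps, and the early-stopping `patience` parameter is an assumption not stated in the text.

```python
import copy
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]


def train_with_checkpointing(
    state: State,
    train_one_epoch: Callable[[State, int], State],  # hypothetical: one pass over the training split
    evaluate: Callable[[State], float],               # hypothetical: validation accuracy
    epochs: int,
    patience: int = 2,
) -> Tuple[State, float, List[float]]:
    """Return the best checkpoint, its validation accuracy, and the per-epoch history."""
    best_state, best_score = copy.deepcopy(state), float("-inf")
    history: List[float] = []
    stale_epochs = 0
    for epoch in range(epochs):
        state = train_one_epoch(state, epoch)
        score = evaluate(state)
        history.append(score)
        if score > best_score:
            # New best model: snapshot it so later epochs cannot overwrite it.
            best_state, best_score = copy.deepcopy(state), score
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:  # early stopping
                break
    return best_state, best_score, history


# Toy run: validation accuracy peaks at epoch 1, then fails to improve for two epochs.
scores = [0.90, 0.95, 0.93, 0.94, 0.96]
best, best_acc, history = train_with_checkpointing(
    {"epoch": None},
    train_one_epoch=lambda s, e: {**s, "epoch": e},
    evaluate=lambda s: scores[s["epoch"]],
    epochs=len(scores),
)
print(best, best_acc, history)
```

The toy run also shows the trade-off of early stopping: with a patience of 2, training halts before the later improvement at epoch 4, so the saved checkpoint is the one from epoch 1.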

The inference and deployment steps of the BERT model are described in Algorithm 2.

Algorithm 2. BERT Model Inference and Deployment

Input: Best-performing BERT model from Algorithm 1 and a new unlabeled dataset.
Output: Predicted labels (real/fake) and evaluation metrics on
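The confidence scores reported in Section 5.4 for image-derived headlines (for example, 52.21% real versus 47.79% fake) are the kind of output a softmax over the classifier's two logits produces. The sketch below shows that final inference step under stated assumptions: the logits are taken as given (the BERT forward pass is omitted), index 0 is fake and index 1 is real following the datasets' 0/1 labeling, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

LABELS = ("fake", "real")  # index 0 = fake, 1 = real, as in the datasets' labeling


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw classifier scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float]) -> Tuple[str, float]:
    """Return the predicted label and its probability (the confidence score)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return LABELS[best], probs[best]


def accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of predictions that match the reference labels."""
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


# A small logit margin yields a near coin-flip confidence; a large one yields near certainty.
print(predict([0.0, 0.0885]))  # label 'real', confidence about 0.522
print(predict([4.0, -4.5]))    # label 'fake', confidence about 0.9998
```

With two classes, the softmax reduces to a logistic function of the logit difference, so a confidence of 52.21% corresponds to a margin of only about 0.09 between the two logits.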

5.2. Classification Report

The classification report in Table 3 shows significant differences in model performance across the four datasets, highlighting the influence of dataset structure, variability, and complexity on classification accuracy. The ISOT dataset yields an overall accuracy of 99.25%, with high precision and recall for both authentic and fake news. The marginally lower recall for Class 0 (fake news) indicates that some misleading articles closely resemble genuine news formats, which leads to misclassification. On the WELFAKE dataset, accuracy declines slightly to 97.95%, and recall for Class 0 drops markedly to 0.91, showing that the model has trouble identifying the more varied and less structured characteristics of fake news in this dataset. Because WELFAKE draws on multiple news sources, it spans a more linguistically and contextually diverse range of text, which makes classification harder.
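The per-class precision, recall, and F1-scores discussed here follow from counts of true positives, false positives, and false negatives, with each class treated in turn as the positive class. The sketch below computes them from label lists; it is an illustration on toy data with a hypothetical function name, not the evaluation code used in the study (scikit-learn's `classification_report` produces the same per-class metrics).

```python
from typing import Dict, Sequence


def per_class_report(y_true: Sequence[int], y_pred: Sequence[int],
                     classes: Sequence[int] = (0, 1)) -> Dict[int, Dict[str, float]]:
    """Precision, recall, F1, and support for each class (0 = fake, 1 = real)."""
    pairs = list(zip(y_true, y_pred))
    report = {}
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)  # other class predicted as c
        fn = sum(1 for t, p in pairs if t == c and p != c)  # instances of c that were missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1,
                     "support": tp + fn}
    return report


# Toy example: one fake article (class 0) is misclassified as real.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 1]
for label, metrics in per_class_report(y_true, y_pred).items():
    print(label, {k: round(v, 3) for k, v in metrics.items()})
```

In the toy run, the single missed fake article lowers recall for class 0 to 0.75 while its precision stays at 1.0, and the same error lowers precision for class 1 to 0.8. This is how reduced Class 0 recall appears in a classification report.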

The TRUTHSEEKER dataset shows outstanding classification performance, with 99.99% accuracy and precision, recall, and F1-scores of 1.00. This would suggest a near-optimal model; however, it raises questions about possible biases in the dataset, or overfitting to the well-organized nature of false information on social media and to the credibility scores included in the dataset. Such artificially well-differentiated patterns may generalize poorly to real-world settings, where misinformation is more complex. By contrast, accuracy on the ISOT_WELFAKE_TRUTHSEEKER dataset falls sharply to 80.67% because of its multiple sources and increased variability.

The gap between precision and recall is most pronounced for Class 1 (real news): the model struggles to generalize across the different styles and contexts of both real and fabricated news. This is consistent with related studies noting that greater dataset diversity tends to lower classification scores while improving generalizability. In short, although structured datasets such as ISOT and TRUTHSEEKER produce higher accuracy, more varied datasets such as ISOT_WELFAKE_TRUTHSEEKER offer a more realistic evaluation of model efficacy in real-world misinformation detection.

The classification metrics, including precision, recall, and F1-scores for both fake and real news detection, are detailed in Table 3.

Table 3.

Classification Report Detailing Precision, Recall, and F1-Scores for Fake and Real News Detection.

Compared to earlier approaches [56], which demonstrated large discrepancies between real and fake news detection, our model showcases a much more balanced performance across datasets. The relatively uniform precision-recall balance highlights its suitability for real-world deployment, particularly in automated content moderation and misinformation filtering systems.

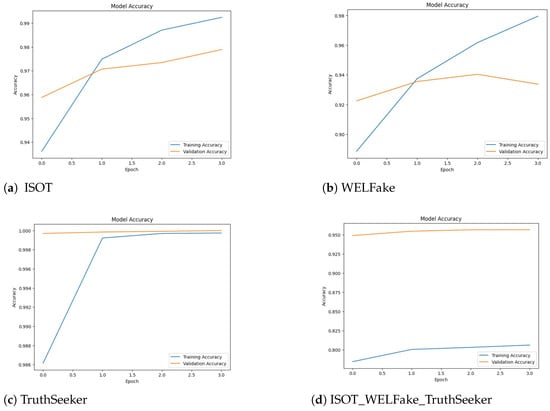

5.3. Model Accuracy, Loss, Confusion Matrix

Figure 7 shows the accuracy curves for all four datasets. ISOT and TRUTHSEEKER maintain a stable upward trend, while WELFAKE shows small fluctuations attributable to the natural variability of that dataset. The loss curves show that ISOT reaches a training loss close to zero, whereas ISOT_WELFAKE_TRUTHSEEKER has a slightly higher loss but a stable validation loss, which can be an advantage in practical applications [57].

Figure 7.

Accuracy curves for the BERT-based multimodal fake news detection model across four datasets.

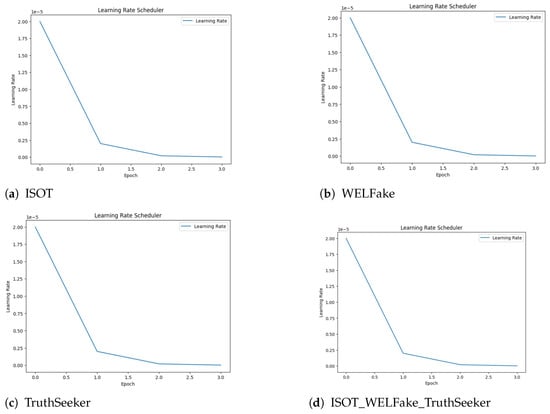

Model Loss: Figure 8 plots the training and validation loss curves for all datasets. The training loss decreases smoothly as the model learns to make fewer mistakes. The validation loss is non-monotonic: it decreases, rises slightly, and then decreases again. This behavior may indicate that the model encountered more complicated samples during validation that challenged its generalization capability. Similar trends have been documented by Liu et al. [58].

Figure 8.

Learning rate schedules illustrating the gradual reduction in learning rate across epochs for four datasets.

ISOT shows practically zero loss, which suggests overfitting. WELFake’s validation loss rises, indicating that the model does not generalize well on this dataset. TruthSeeker shows a good balance between training and validation loss. Although the loss on ISOT_WELFAKE_TRUTHSEEKER is higher, the model remains stable.

Learning Rate Scheduler: Figure 8 depicts the gradual decay of the learning rate across epochs, a critical component of fine-tuning the model’s parameters. A higher initial learning rate lets the model converge faster in the early stages of training; as the learning rate decreases, the model refines its weight adjustments to reach higher accuracy and stability. This adaptive learning rate strategy prevents abrupt updates that could disrupt convergence, contributing to the performance metrics reported in the results [59].
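The text does not specify the form of the decay, so the following sketch assumes, purely for illustration, an exponential per-epoch schedule, lr_e = lr_0 · γ^e. The initial rate of 2e-5 and the decay factor of 0.5 are hypothetical values, not settings reported in the study.

```python
from typing import List


def exponential_decay(initial_lr: float, decay_rate: float, epochs: int) -> List[float]:
    """Learning rate used in each epoch: initial_lr * decay_rate ** epoch."""
    if not 0.0 < decay_rate <= 1.0:
        raise ValueError("decay_rate must lie in (0, 1]")
    return [initial_lr * decay_rate ** epoch for epoch in range(epochs)]


# Hypothetical settings: start at 2e-5 and halve the rate every epoch.
for epoch, lr in enumerate(exponential_decay(2e-5, 0.5, 4)):
    print(f"epoch {epoch}: lr = {lr:.2e}")
```

In a TensorFlow/Keras setup such as the one implied by TFBertMainLayer, the same rule written as a function of the epoch index could be passed to `tf.keras.callbacks.LearningRateScheduler`; Keras also provides `tf.keras.optimizers.schedules.ExponentialDecay`, which decays per optimizer step rather than per epoch.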

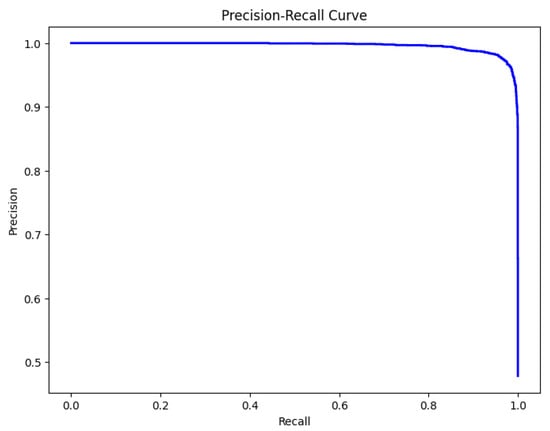

Precision-Recall Curve: The Precision–Recall Curve in Figure 9 illustrates the model’s performance in distinguishing fake from real news. The x-axis represents recall, the proportion of actual positive instances that the model correctly identifies, while the y-axis represents precision, the proportion of positive predictions that are correct. A curve that stays close to the top-right corner indicates that the model maintains high precision as recall increases, reflecting its effectiveness in minimizing both false positives and false negatives. This balance is crucial in fake news detection, where identifying misinformation while avoiding false alarms is essential [60].
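To make the axes concrete, the sketch below traces the points of a precision-recall curve by sweeping a decision threshold over predicted probabilities. It is a toy illustration with hypothetical names; which class counts as positive (label 1 by default here) is a choice the caller makes, and scikit-learn provides the same computation in `precision_recall_curve`.

```python
from typing import List, Sequence, Tuple


def precision_recall_points(y_true: Sequence[int], scores: Sequence[float],
                            positive: int = 1) -> List[Tuple[float, float, float]]:
    """(threshold, precision, recall) for each distinct score used as a decision threshold."""
    total_positives = sum(1 for t in y_true if t == positive)
    if total_positives == 0:
        raise ValueError("no positive examples")
    points = []
    for threshold in sorted(set(scores), reverse=True):
        predicted = [s >= threshold for s in scores]  # predict positive at or above threshold
        tp = sum(1 for p, t in zip(predicted, y_true) if p and t == positive)
        fp = sum(1 for p, t in zip(predicted, y_true) if p and t != positive)
        # tp + fp >= 1 because the threshold itself is one of the scores.
        points.append((threshold, tp / (tp + fp), tp / total_positives))
    return points


# Toy scores: probability assigned to the positive class for five examples.
labels = [1, 1, 0, 1, 0]
probs = [0.9, 0.8, 0.7, 0.6, 0.2]
for threshold, precision, recall in precision_recall_points(labels, probs):
    print(f"threshold {threshold:.1f}: precision {precision:.2f}, recall {recall:.2f}")
```

Lowering the threshold raises recall because more positive items are caught, but it can admit false positives, which lowers precision; a curve that hugs the top-right corner keeps precision high throughout the sweep.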

Figure 9.

Precision-Recall Curve Illustrating High Model Precision Across Varying Recall Thresholds.

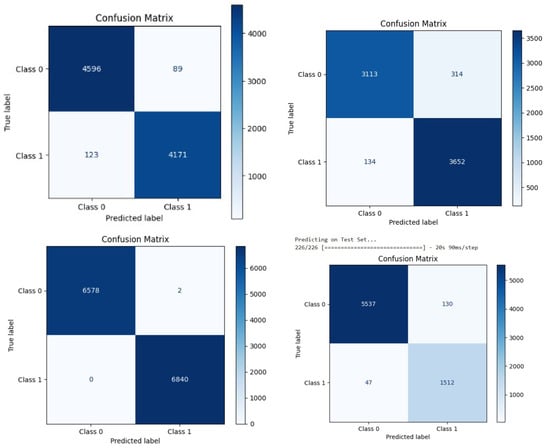

Confusion Matrix: Figure 10 presents the confusion matrix, which shows the model’s per-class performance. Misclassifications are low, with 122 false positives and 115 false negatives out of 8979 predictions in total. The balance of misclassifications across the two classes reflects the strength of the model, particularly in keeping false positives low, which is critical to the reliability of fake news detection systems [61].
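The counts reported for Figure 10 can be turned into summary rates directly. In a binary confusion matrix every error is either a false positive or a false negative, so 237 misclassifications out of 8979 predictions correspond to an accuracy of about 97.36%. The short function below, a hypothetical helper, makes the arithmetic explicit.

```python
from typing import Dict


def summarize_confusion(false_positives: int, false_negatives: int, total: int) -> Dict[str, float]:
    """Accuracy and error rate of a binary classifier from its error counts."""
    errors = false_positives + false_negatives  # binary case: every error is an FP or an FN
    correct = total - errors                    # true positives + true negatives
    return {
        "errors": errors,
        "correct": correct,
        "accuracy": correct / total,
        "error_rate": errors / total,
    }


summary = summarize_confusion(false_positives=122, false_negatives=115, total=8979)
print(f"{summary['correct']} correct, accuracy {summary['accuracy']:.2%}")
# prints: 8742 correct, accuracy 97.36%
```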

Figure 10.

Confusion Matrix Illustrating Model Accuracy with Minimal Misclassifications Across Two Classes.

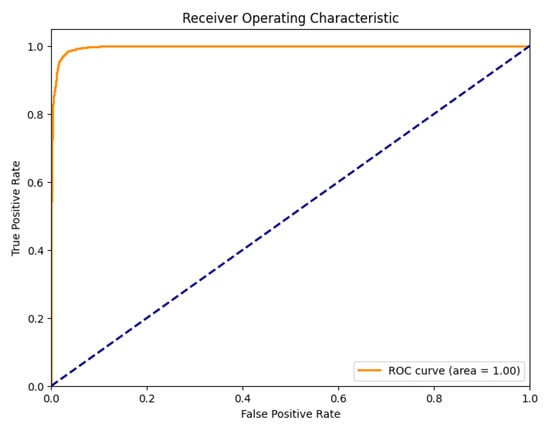

Receiver Operating Characteristic (ROC) Curve: The ROC curve in Figure 11 indicates that the model has strong discriminative power, with an area under the curve (AUC) approaching 1.0. This near-perfect AUC means that the model distinguishes almost perfectly between real and fake news, achieving a high true positive rate with a minimal false positive rate. This result is particularly relevant in comparison with baseline models, as it reflects a substantial improvement in classification performance, consistent with the other results obtained.
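An AUC close to 1.0 has a concrete interpretation: it is the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes AUC directly from that definition (the Mann–Whitney formulation, counting ties as one half). It is quadratic in the number of examples and is intended only to illustrate the metric, not to reproduce the study's evaluation; the function name is hypothetical.

```python
from typing import Sequence


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # correctly ranked pair
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(positives) * len(negatives))


print(roc_auc([1, 1, 0, 1, 0], [0.9, 0.8, 0.7, 0.6, 0.2]))  # 5 of 6 pairs ranked correctly
print(roc_auc([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1]))          # perfect separation
```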

Figure 11.

ROC Curve Demonstrating High Model Discriminative Power (AUC ≈ 1.0).

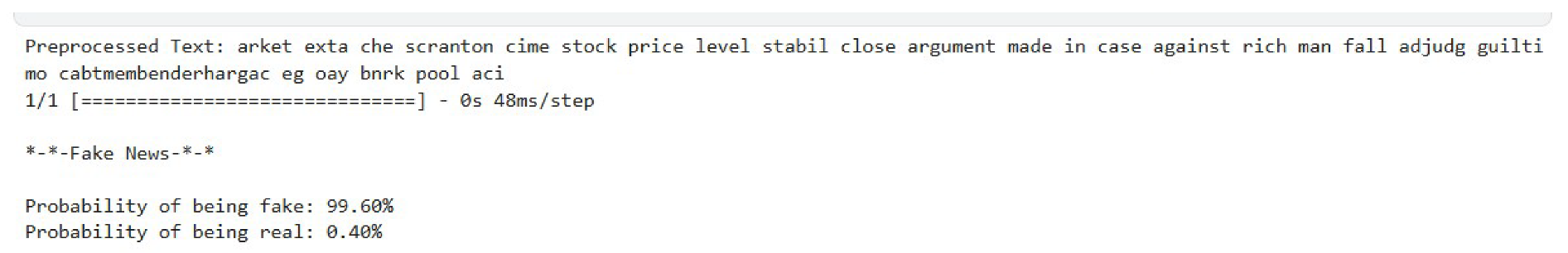

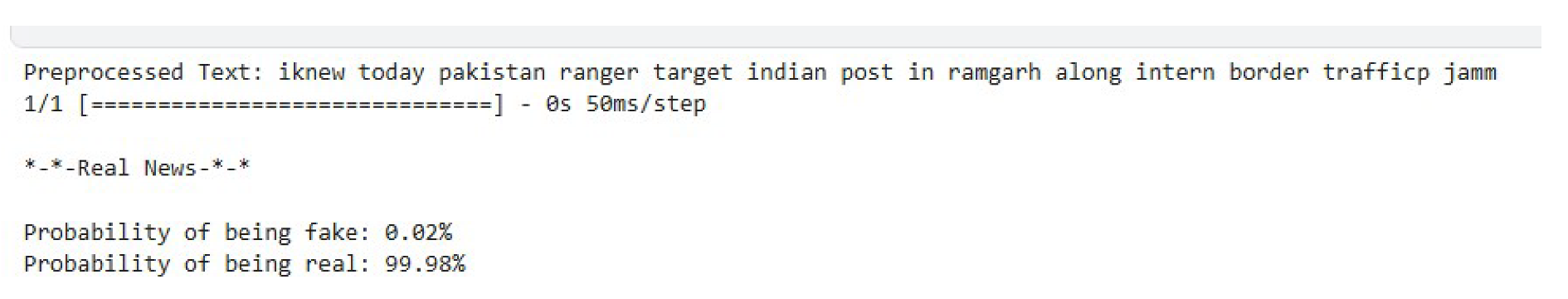

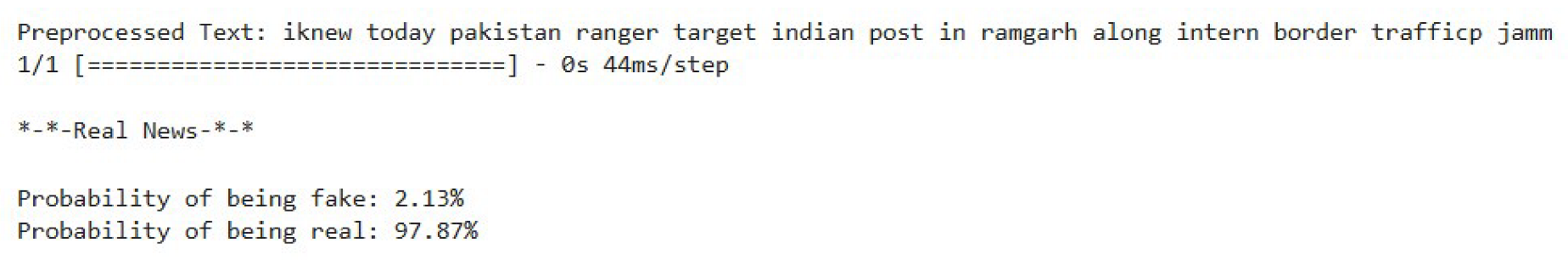

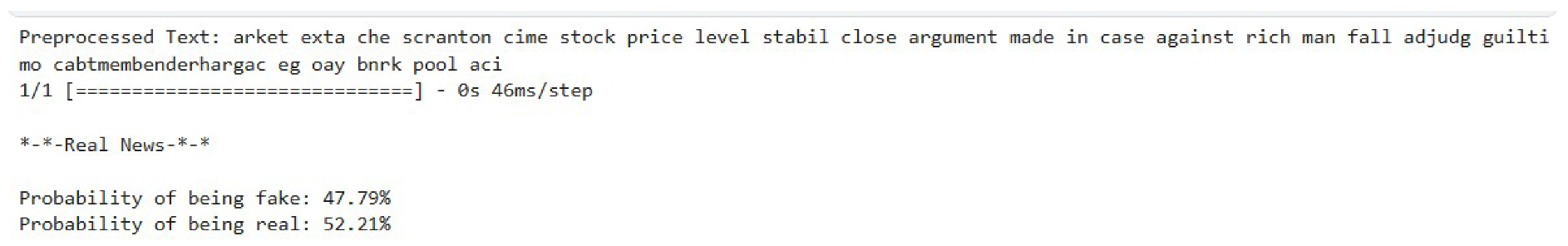

5.4. Image News Classification Results

The results of OCR-based image classification show that model confidence and performance vary across datasets. For example, the model trained on ISOT classifies a specific news headline as real with an unusually high confidence of 99.98%, indicating that the textual structures extracted from images correlate strongly with the linguistic patterns in that dataset. The model trained on TRUTHSEEKER also labels the same headline as real, with a somewhat lower confidence of 97.87%, which suggests that this dataset contains more stylistic or contextual variation in news structure, slightly reducing the certainty of the classification.

In contrast, the model trained on the ISOT-WELFAKE-TRUTHSEEKER dataset struggles to classify newspaper-style content, producing almost equal probabilities for the real and fake classes (52.21% real, 47.79% fake). This indicates an inherent difficulty in drawing a distinction when the OCR-extracted text is distorted, written in an outdated style, or vaguely phrased, and it calls for better pre-processing techniques and domain adaptation strategies to make OCR more robust across news formats. These results are consistent with previous studies on multimodal misinformation detection, which highlight the need for dataset-specific adjustments to improve classification across diverse news outlets [62,63,64].
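As one example of such pre-processing, the sketch below normalizes raw OCR output before tokenization. It is a hypothetical helper, not part of the described pipeline, and its rules are assumptions: rejoin words hyphenated across line breaks, delete invisible formatting characters such as soft hyphens, turn other control characters into spaces, and collapse whitespace.

```python
import re
import unicodedata


def _normalize_char(ch: str) -> str:
    """Drop invisible format characters; map other control characters to spaces."""
    category = unicodedata.category(ch)
    if category == "Cf":          # e.g. soft hyphen U+00AD, zero-width space U+200B
        return ""
    if category.startswith("C"):  # e.g. newline, tab, form feed
        return " "
    return ch


def clean_ocr_text(raw: str) -> str:
    """Normalize raw OCR output into a single line of text for tokenization."""
    # Rejoin words split by a hyphen at a line break: "Lev-\nels" -> "Levels".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", raw)
    text = "".join(_normalize_char(ch) for ch in text)
    # Collapse runs of whitespace into single spaces.
    return re.sub(r"\s+", " ", text).strip()


print(clean_ocr_text("Stock Price Lev-\nels\x0c  Stabilized\n"))
# prints: Stock Price Levels Stabilized
```

The hyphen rule is a trade-off: a genuine compound such as "well-known" that happens to break after its hyphen will be merged into "wellknown".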

These findings affirm that each module contributes meaningfully to model performance. OCR text from images enhances the contextual integrity of posts containing visual misinformation. The cross-attention mechanism ensures robust interaction across modalities, and BERT remains critical in capturing nuanced linguistic patterns compared to simpler encoders.

Table 4 summarizes the results of the cross-dataset evaluation, demonstrating the model’s ability to generalize across different news domains.

Table 4.

Cross-dataset evaluation results: Input images, extracted news content, and model predictions across datasets.

Table 5 presents a comparative analysis of various multimodal models on fake news detection (FND) datasets, highlighting the performance of our proposed approach.

Table 5.

Performance Comparison of Multimodal Models on FND Datasets.

Table 6 summarizes the ablation study, showing the impact of removing each component on the model’s performance.

Table 6.

Ablation Study: Performance Comparison of Model Variants.

5.5. Advanced Critical Discussion

Comparing these fake news datasets highlights the strengths and weaknesses inherent in each. Although the ISOT and WELFake datasets yield high classification accuracy and confidence, their structured nature may limit their usefulness in real-world misinformation scenarios [63]. They consist mainly of news articles, which makes them effective for detecting fake news in traditional media but less reliable for addressing the dynamic, ever-changing nature of misinformation on social media. In contrast, the ISOT-WELFAKE-TRUTHSEEKER dataset is both a challenge and an opportunity for improved fake news detection. The headline “Stock Price Levels Stabilized” was classified as 52.21% authentic and 47.79% fake, an almost even split. While this result may be seen as a weakness of the model, it also reflects the dataset’s inclusion of nuanced cases in which fake news is increasingly sophisticated and harder to detect.

The TruthSeeker dataset, by contrast, serves as a demanding and dynamic benchmark. Its integration of diverse sources, such as tweets, bot-generated content, and human-verified claims, presents a more challenging yet realistic environment for fake news identification [56].

The dataset effectively captures the contextual nuances of misinformation spread on digital platforms, enabling models to generalize better to real-world scenarios. Its credibility and influence scores also add a layer of interpretability, which is particularly valuable for machine learning approaches that incorporate user behavior analysis. Therefore, although all these datasets shed light on different aspects of fake news detection, TruthSeeker is the most holistic and practically useful. Its ability to challenge models while still allowing high classification confidence underlines its utility for designing adaptive and robust fake news detection systems. Future work could enrich these datasets with multimodal features, including user engagement patterns, sentiment analysis, and deepfake detection, to produce more effective misinformation detection frameworks [64].

5.6. Future Directions in Model Development

There are ample opportunities to develop fake news detection systems further, making them more scalable, adaptable, and deployable in real time, especially for misinformation detection across different datasets.

One primary area for further exploration is an AI-based application that accepts news in any form (textual, photo-based, or video-based) as input and classifies it as real or fake. Such an application would combine pre-trained NLP models with computer vision techniques to analyze both textual and visual inputs, fine-tuned within multimodal deep learning frameworks. By implementing mechanisms such as real-time verification through cross-checking with reliable sources and metadata integrity analysis, it could offer users instant, reliable assessments of news credibility.

Another crucial direction is embedding fake news detection models in social media through browser extensions, APIs, or AI-driven moderation tools. Such AI agents could operate within social media environments, continuously monitoring and flagging potentially misleading content as it is posted.

Such a system would need robust streaming data pipelines and federated learning approaches to ensure the model stays responsive to the changing misinformation patterns while preserving user privacy and platform integrity.

Another significant research direction is expanding cross-modal capabilities to address artificially created misinformation, including deepfakes and edited images. Building on the use of GANs for anomaly detection, future work could extend this approach with multi-agent AI frameworks.

Besides technological advances, future research should also pay attention to ethics and policy implications that arise when machine-generated misinformation is detected. This includes the model’s fairness, transparency, and explainability and the necessity to address potential biases that may evolve within AI-assisted moderation mechanisms. Collaboration with fact-checking organizations, lawmakers, and media outlets will be key to the design of sensible and effective deployment strategies for AI-based detection of misinformation in real-world settings.

With these improvements in place, future research can help build more sophisticated, context-sensitive, and ethically responsible AI systems that better address the global problem of misinformation in digital spaces.

6. Conclusions

In this study, we introduce a BERT-based multimodal framework that utilizes both textual and image-based content to effectively detect misinformation with high accuracy and robustness. The comparative analysis across four datasets—ISOT, WELFAKE, TRUTHSEEKER, and ISOT_WELFAKE_TRUTHSEEKER—demonstrates the model’s adaptability across varying data distributions. Notably, the model achieves near-perfect classification on the ISOT dataset while exhibiting more generalized performance on the TRUTHSEEKER dataset, which encompasses a broader spectrum of real-world misinformation patterns. These findings highlight the critical role of dataset diversity in enhancing the model’s generalization ability, reinforcing the necessity of diverse data sources in developing scalable and reliable misinformation detection systems.

The results of this study provide clear evidence of the benefits of integrating textual and visual data, demonstrating that multimodal approaches enhance misinformation detection by capturing both textual semantics and embedded text within images. The classification reports, confusion matrices, and evaluation metrics reveal that while ISOT has minimal misclassifications, its overfitting tendencies limit its applicability in broader contexts. In contrast, the TRUTHSEEKER dataset offers a more challenging yet realistic evaluation setting, leading to improved model adaptability in detecting misinformation across dynamic digital environments. These findings align with prior research advocating for cross-platform training data to mitigate overfitting and improve real-world performance.

Despite the strong performance, certain limitations remain, particularly in cross-modal feature alignment and handling ambiguous or complex misinformation formats. The study also highlights that OCR-based classification of news headlines introduces variability due to text extraction from images, necessitating further refinement in text preprocessing and context preservation mechanisms. Additionally, scalability and real-time efficiency present significant challenges for deploying this system in practical applications such as social media content moderation and misinformation detection tools.

Future research should focus on enhancing model interpretability, optimizing attention mechanisms for multimodal integration, and expanding datasets to include emerging misinformation formats, such as deepfake videos and synthetic media. The development of real-time AI agents for misinformation detection, integrated into social media platforms or independent fact-checking applications, represents a promising direction that could significantly enhance digital media integrity.

Ultimately, this study underscores the importance of dataset diversity, multimodal integration, and scalable AI-driven solutions in combating fake news. While all datasets provided valuable insights, the TRUTHSEEKER dataset emerges as the most practical benchmark, as it best reflects the complexities of real-world misinformation. These findings establish a solid foundation for advancing AI-driven misinformation detection and emphasize the need for continued interdisciplinary research to refine automated verification systems for public discourse and digital media analysis.

Author Contributions

Conceptualization, M.A.-a. and D.B.R.; Methodology, M.A.-a. and D.B.R.; Software, M.A.-a.; Validation, C.L.; Formal Analysis, M.A.-a.; Investigation, M.A.-a., D.B.R., and C.L.; Resources, D.B.R. and C.L.; Data Curation, M.A.-a.; Writing—Original Draft Preparation, M.A.-a.; Writing—Review and Editing, M.A.-a. and D.B.R.; Visualization, M.A.-a.; Supervision, D.B.R.; Project Administration, D.B.R.; Funding Acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the NSF under grant agreement DMS-2022448.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We would like to acknowledge the anonymous reviewers.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Aimeur, E.; Amri, S.; Brassard, G. Fake news, disinformation and misinformation in social media: A review. Soc. Netw. Anal. Min. 2023, 13, 30.

- Atske, S. Social Media and News Fact Sheet. Pew Research Center. Available online: https://www.pewresearch.org/journalism/fact-sheet/social-media-and-news-fact-sheet/ (accessed on 16 October 2024).

- Konopliov. Fake News Statistics & Facts (2024)—Redline Digital. Available online: https://redline.digital/fake-news-statistics/ (accessed on 26 June 2024).

- Zhao, J.; Zhao, Z.; Shi, L.; Kuang, Z.; Liu, Y. Collaborative mixture-of-experts model for multi-domain fake news detection. Electronics 2023, 12, 3440.

- Ruffo, G.; Semeraro, A.; Giachanou, A.; Rosso, P. Studying fake news spreading, polarisation dynamics, and manipulation by bots: A tale of networks and language. Comput. Sci. Rev. 2023, 47, 100531.

- Bontridder, N.; Poullet, Y. The role of artificial intelligence in disinformation. Data Policy 2021, 3, e32.

- Al-Alshaqi, M.; Rawat, D.B. Disinformation Classification Using Transformer-Based Machine Learning. In Proceedings of the 2023 6th International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Batam, Indonesia, 11–12 December 2023; pp. 169–174.

- Al-Alshaqi, M.; Rawat, D.B.; Liu, C. Emotion-Aware Fake News Detection on Social Media with BERT Embeddings. In Proceedings of the 2023 International Conference on Modeling & E-Information Research, Artificial Learning and Digital Applications (ICMERALDA), Karawang, Indonesia, 24 November 2023; pp. 1–7.

- Zhang, Z.; Hamadi, H.A.; Damiani, E.; Yeun, C.Y.; Taher, F. Explainable Artificial Intelligence Applications in Cyber Security: State-of-the-Art in Research. IEEE Access 2022, 10, 93104–93139.

- Karlson, N. Reviving Classical Liberalism Against Populism; Springer Nature: Berlin/Heidelberg, Germany, 2024.

- Ringsmuth, A.K.; Otto, I.M.; van den Hurk, B.; Lahn, G.; Reyer, C.P.; Carter, T.R.; Magnuszewski, P.; Monasterolo, I.; Aerts, J.C.; Benzie, M.; et al. Lessons from COVID-19 for managing transboundary climate risks and building resilience. Clim. Risk Manag. 2022, 35, 100395.

- Hartzog, W.; Selinger, E.; Gunawan, J. Privacy Nicks: How the Law Normalizes Surveillance. SSRN Electron. J. 2023, 101, 717.

- Barrett, C.M. Automated Essay Evaluation and the Computational Paradigm: Machine Scoring Enters the Classroom. Ph.D. Thesis, University of Rhode Island, Kingston, RI, USA, 2015.

- Al-Alshaqi, M.; Rawat, D.B.; Liu, C. Ensemble Techniques for Robust Fake News Detection: Integrating Transformers, Natural Language Processing, and Machine Learning. Sensors 2024, 24, 6062. [Google Scholar] [CrossRef] [PubMed]

- Sándor, A.V. The Relationship Between Self-Representation on Social Media and Affective or Anxiety Disorders in the Perspective of the COVID-19 Pandemic. Ph.D. Thesis, Eötvös Loránd University, Budapest, Hungary, 2023. [Google Scholar] [CrossRef]

- Giachanou, A.; Zhang, G.; Rosso, P. Multimodal Multi-image Fake News Detection. In Proceedings of the 2020 IEEE 7th International Conference on Data Science and Advanced Analytics (DSAA), Sydney, Australia, 6–9 October 2020; pp. 647–654. [Google Scholar] [CrossRef]

- Singhal, S.; Shah, R.R.; Chakraborty, T.; Kumaraguru, P.; Satoh, S. SpotFake: A Multi-modal Framework for Fake News Detection. In Proceedings of the 2019 IEEE Fifth International Conference on Multimedia Big Data (BigMM), Singapore, 11–13 September 2019; pp. 39–47. [Google Scholar] [CrossRef]

- Duc Tuan, N.M.; Quang Nhat Minh, P. Multimodal Fusion with BERT and Attention Mechanism for Fake News Detection. In Proceedings of the 2021 RIVF International Conference on Computing and Communication Technologies (RIVF), Hanoi, Vietnam, 2–4 December 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Uppada, S.K.; Patel, P. An image and text-based multimodal model for detecting fake news in OSN’s. J. Intell. Inf. Syst. 2023, 61, 367–393. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, D.; Chen, H.; Zeng, Z.; Guo, W.; Miao, C.; Cui, L. BDANN: BERT-Based Domain Adaptation Neural Network for Multi-Modal Fake News Detection. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Segura-Bedmar, I.; Alonso-Bartolome, S. Multimodal Fake News Detection. Information 2022, 13, 284. [Google Scholar] [CrossRef]

- Palani, B.; Elango, S.; Viswanathan K, V. CB-Fake: A multimodal deep learning framework for automatic fake news detection using capsule neural network and BERT. Multimed. Tools Appl. 2022, 81, 5587–5620. [Google Scholar] [CrossRef] [PubMed]

- Lindsay, G. Convolutional Neural Networks as a Model of the Visual System: Past, Present, and Future. J. Cogn. Neurosci. 2020, 33, 2017–2031. [Google Scholar] [CrossRef] [PubMed]

- Hua, J.; Cui, X.; Li, X.; Tang, K.; Zhu, P. Multimodal fake news detection through data augmentation-based contrastive learning. Appl. Soft Comput. 2023, 136, 110125. [Google Scholar] [CrossRef]

- Xue, J.; Wang, Y.; Tian, Y.; Li, Y.; Shi, L.; Wei, L. Detecting fake news by exploring the consistency of multimodal data. Inf. Process. Manag. 2021, 58, 102610. [Google Scholar] [CrossRef]

- Giachanou, A.; Zhang, G.; Rosso, P. Multimodal Fake News Detection with Textual, Visual and Semantic Information. In Proceedings of the Text, Speech, and Dialogue; Sojka, P., Kopeček, I., Pala, K., Horák, A., Eds.; Springer: Cham, Switzerland, 2020; pp. 30–38. [Google Scholar] [CrossRef]

- Jaiswal, R.; Singh, U.P.; Singh, K.P. Fake News Detection Using BERT-VGG19 Multimodal Variational Autoencoder. In Proceedings of the 2021 IEEE 8th Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON), Dehradun, India, 11–13 November 2021; pp. 1–5. [Google Scholar] [CrossRef]

- Ying, L.; Yu, H.; Wang, J.; Ji, Y.; Qian, S. Multi-Level Multi-Modal Cross-Attention Network for Fake News Detection. IEEE Access 2021, 9, 132363–132373. [Google Scholar] [CrossRef]

- Hangloo, S.; Arora, B. Combating multimodal fake news on social media: Methods, datasets, and future perspective. Multimed. Syst. 2022, 28, 2391–2422. [Google Scholar] [CrossRef]

- Ghorbanpour, F.; Ramezani, M.; Fazli, M.; Rabiee, H. FNR: A similarity and transformer-based approach to detect multi-modal fake news in social media. Soc. Netw. Anal. Min. 2023, 13, 56. [Google Scholar] [CrossRef]

- Peng, X.; Xintong, B. An effective strategy for multi-modal fake news detection. Multimed. Tools Appl. 2022, 81, 13799–13822. [Google Scholar] [CrossRef]

- Qian, S.; Wang, J.; Hu, J.; Fang, Q.; Xu, C. Hierarchical Multi-modal Contextual Attention Network for Fake News Detection. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’21, New York, NY, USA, 11–15 July 2021; pp. 153–162. [Google Scholar] [CrossRef]

- Yang, P.; Ma, J.; Liu, Y.; Liu, M. Multi-modal transformer for fake news detection. Math. Biosci. Eng. 2023, 20, 14699–14717. [Google Scholar] [CrossRef]

- Qi, P.; Cao, J.; Li, X.; Liu, H.; Sheng, Q.; Mi, X.; He, Q.; Lv, Y.; Guo, C.; Yu, Y. Improving Fake News Detection by Using an Entity-enhanced Framework to Fuse Diverse Multimodal Clues. In Proceedings of the 29th ACM International Conference on Multimedia, MM ’21, New York, NY, USA, 20–24 October 2021; pp. 1212–1220. [Google Scholar] [CrossRef]

- Comito, C.; Caroprese, L.; Zumpano, E. Multimodal fake news detection on social media: A survey of deep learning techniques. Soc. Netw. Anal. Min. 2023, 13, 101.

- Alzubaidi, L.; Bai, J.; Al-Sabaawi, A.; Santamaría, J.; Albahri, A.; Al-dabbagh, B.; Fadhel, M.; Manoufali, M.; Zhang, J.; Al-Timemy, A.; et al. A survey on deep learning tools dealing with data scarcity: Definitions, challenges, solutions, tips, and applications. J. Big Data 2023, 10, 46.

- Allan, J.; Aslam, J.; Belkin, N.; Buckley, C.; Callan, J.; Croft, B.; Dumais, S.; Fuhr, N.; Harman, D.; Harper, D.J.; et al. Challenges in information retrieval and language modeling: Report of a workshop held at the center for intelligent information retrieval, University of Massachusetts Amherst, September 2002. ACM SIGIR Forum 2003, 37, 31–47.

- Martínez Hernández, L.A.; Sandoval Orozco, A.L.; García Villalba, L.J. Analysis of Digital Information in Storage Devices Using Supervised and Unsupervised Natural Language Processing Techniques. Future Internet 2023, 15, 155.

- Ahmed, S.F.; Alam, M.S.; Hassan, M.; Rodela, M.; Ishtiak, T.; Rafa, N.; Mofijur, M.; Ali, A.B.M.S.; Gandomi, A. Deep learning modelling techniques: Current progress, applications, advantages, and challenges. Artif. Intell. Rev. 2023, 56, 13521–13617.

- Dwivedi, Y.K.; Ismagilova, E.; Hughes, D.L.; Carlson, J.; Filieri, R.; Jacobson, J.; Jain, V.; Karjaluoto, H.; Kefi, H.; Krishen, A.S.; et al. Setting the future of digital and social media marketing research: Perspectives and research propositions. Int. J. Inf. Manag. 2021, 59, 102168.

- Li, W.; Gu, C.; Chen, J.; Ma, C.; Zhang, X.; Chen, B.; Wan, S. DLS-GAN: Generative Adversarial Nets for Defect Location Sensitive Data Augmentation. IEEE Trans. Autom. Sci. Eng. 2024, 21, 5173–5189.

- Al-Fuqaha, A.; Guizani, M.; Mohammadi, M.; Aledhari, M.; Ayyash, M. Internet of Things: A Survey on Enabling Technologies, Protocols, and Applications. IEEE Commun. Surv. Tutor. 2015, 17, 2347–2376.

- Kaggle. Fake-and-Real-News-Dataset. Available online: https://www.kaggle.com/datasets/clmentbisaillon/fake-and-real-news-dataset (accessed on 19 April 2024).

- Callard, F.; Fitzgerald, D. Rethinking Interdisciplinarity across the Social Sciences and Neurosciences; Palgrave Macmillan London: London, UK, 2015.

- Sarstedt, M.; Danks, N.P. Prediction in HRM research–A gap between rhetoric and reality. Hum. Resour. Manag. J. 2022, 32, 485–513.

- Roberge, J.; Lebrun, T. KI-Realitäten; Transcript Verlag: Bielefeld, Germany, 2023; pp. 39–66.

- Dwivedi, Y.K.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Duan, Y.; Dwivedi, R.; Edwards, J.; Eirug, A.; et al. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2021, 57, 101994.

- Kaggle. Fake News Detection Datasets. Available online: https://www.kaggle.com/datasets/emineyetm/fake-news-detection-datasets (accessed on 7 December 2022).

- Kaggle. Welfake Dataset for Fake News. Available online: https://www.kaggle.com/datasets/syedsubahani/wel-fake-news-dataset (accessed on 19 February 2024).

- Dadkhah, S.; Zhang, X.; Weismann, A.G.; Firouzi, A.; Ghorbani, A.A. The Largest Social Media Ground-Truth Dataset for Real/Fake Content: TruthSeeker. IEEE Trans. Comput. Soc. Syst. 2024, 11, 3376–3390.

- Salawu, S.; He, Y.; Lumsden, J. Approaches to Automated Detection of Cyberbullying: A Survey. IEEE Trans. Affect. Comput. 2020, 11, 3–24.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Ma, X.; Wu, J.; Xue, S.; Yang, J.; Zhou, C.; Sheng, Q.Z.; Xiong, H.; Akoglu, L. A Comprehensive Survey on Graph Anomaly Detection With Deep Learning. IEEE Trans. Knowl. Data Eng. 2023, 35, 12012–12038.

- Cao, S.; Wang, L. CLIFF: Contrastive Learning for Improving Faithfulness and Factuality in Abstractive Summarization. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic and Online, 7–11 November 2021; pp. 6633–6649.

- Verma, G.; Mujumdar, R.; Wang, Z.J.; Choudhury, M.D.; Kumar, S. Overcoming Language Disparity in Online Content Classification with Multimodal Learning. Proc. Int. AAAI Conf. Web Soc. Media 2022, 16, 1040–1051.

- Ferri Borredà, P. Deep Continual Multimodal Multitask Models for Out-of-Hospital Emergency Medical Call Incidents Triage Support in the Presence of Dataset Shifts. Ph.D. Thesis, Universitat Politècnica de València, Valencia, Spain, 2024.

- Lampe, B.; Meng, W. Intrusion Detection in the Automotive Domain: A Comprehensive Review. IEEE Commun. Surv. Tutor. 2023, 25, 2356–2426.

- Barnes, T.; Drake, R.; Paton, C.; Cooper, S.; Deakin, B.; Ferrier, I.; Gregory, C.; Haddad, P.; Howes, O.; Jones, I.; et al. Evidence-based guidelines for the pharmacological treatment of schizophrenia: Updated recommendations from the British Association for Psychopharmacology. J. Psychopharmacol. 2019, 34, 3–78.

- Munger, K. The YouTube Apparatus; Cambridge University Press: Cambridge, UK, 2024.

- Das, A.; Liu, H.; Kovatchev, V.; Lease, M. The state of human-centered NLP technology for fact-checking. Inf. Process. Manag. 2023, 60, 103219.

- Ras, G.; Xie, N.; van Gerven, M.; Doran, D. Explainable Deep Learning: A Field Guide for the Uninitiated. J. Artif. Intell. Res. 2022, 73.

- Gu, S.; Kelly, B.; Xiu, D. Empirical Asset Pricing via Machine Learning. Technical Report 25398, National Bureau of Economic Research. Rev. Financ. Stud. 2020, 33, 2223–2273.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).