Abstract

The adoption of Intelligent Virtual Assistants (IVAs) in the banking sector presents new opportunities to enhance customer service efficiency, reduce operational costs, and modernize service delivery channels. However, the factors driving IVA adoption and usage, particularly in specific regional contexts such as Portugal, remain underexplored. This study examined the determinants of IVA adoption intention and actual usage in the Portuguese banking sector, drawing on the Technology Acceptance Model (TAM) as its theoretical foundation. Data were collected through an online questionnaire distributed to 154 banking customers after they interacted with a commercial bank’s IVA. The analysis was conducted using Partial Least Squares Structural Equation Modeling (PLS-SEM). The findings revealed that perceived usefulness significantly influences the intention to adopt, which in turn significantly impacts actual usage. In contrast, other variables—including trust, ease of use, anthropomorphism, awareness, service quality, and gendered voice—did not show a significant effect. These results suggest that Portuguese users adopt IVAs based primarily on functional utility, highlighting the importance of outcome-oriented design and communication strategies. This study contributes to the understanding of technology adoption in mature digital markets and offers practical guidance for banks seeking to enhance the perceived value of their virtual assistants.

1. Introduction

The growing expansion of e-commerce is partially explained by the use of Intelligent Virtual Assistants (IVAs) to enhance the customer experience, as these assistants can improve the overall efficiency of the process. In e-commerce, IVAs improve the customer experience by providing personalized recommendations and assistance at every touchpoint of a brand’s customer journey [1,2].

The application of IVAs has grown rapidly in many sectors, addressing consumers’ demand for immediate access to information. Banks worldwide have long invested in technologies such as IVAs to increase their performance and technical efficiency [3,4]. Banks in Portugal are also adopting this technology, offering IVAs in their apps to respond to clients’ needs and lifestyles, but they are still trying to fully understand the drivers of adoption intention.

To study the adoption of IVAs in banking, we used the Technology Acceptance Model (TAM). This model has proven its value in studies of technology adoption in banking [3,5,6,7,8]. TAM has also been used in various other areas, such as education [9,10,11,12,13], health [14,15,16] and tourism [17,18].

1.1. Perceived Usefulness and Perceived Ease of Use

Perceived usefulness and perceived ease of use appear in numerous adoption theories and models, such as TAM, and have been found to have strong implications for usage intentions in the m-banking sector [19,20,21]. The user’s intention concerning new technologies is shaped by the user’s belief in the usefulness of the solution and its ease of use [22]. Interactivity with IVAs is likewise affected by the user’s perceived ease of use and perceived usefulness of the assistant [23]. Thus, we hypothesize the following:

H1.

Perceived usefulness affects the adoption intention of chatbots for banking.

H2.

Perceived ease of use affects the adoption intention of chatbots for banking.

1.2. Perceived Trust

Trust can not only be established between people but also between a person and an automated system. The process of forming trust incorporates people’s emotions and feelings. The perceived trust toward an IVA can be defined as the user’s willingness to be susceptible to the assistant [1,24]. The trust in IVAs can also be described as the IVA’s being capable, reliable and credible [25].

H3.

Perceived Trust affects the adoption intention of chatbots for banking.

1.3. Anthropomorphism

The presence of anthropomorphism on an IVA indicates that some human characteristics or behaviors have been implemented in the system. With this presence can also come a negative side effect, where a high level of anthropomorphism can reach the point of being uncanny, where humans are deeply repulsed by the IVA [26]. A negative effect can also occur when users realize that they are communicating with these AI systems while they were under the impression that they are interacting with humans [27].

H4.

Anthropomorphism affects the adoption intention of chatbots for banking.

1.4. Awareness of the Service

The awareness of the service, which is the knowledge that a service exists, is often linked with familiarity with the IVA, and together they play a vital role in decreasing privacy concerns toward these assistants [28]. The low awareness of these assistants and of their benefits in the banking sector is related to customers’ low acceptance and usage of this technology [29,30]. Additionally, a lack of familiarity with the assistant is responsible for users’ discomfort with the IVA, as they have reservations about the accuracy of the tasks completed with the solution [31].

H5.

Awareness of the service affects the adoption intention of chatbots for banking.

1.5. Service Quality

Service quality can be defined as a personal evaluation of the provided service given to the solution user [32]. Service quality is significantly impacted by the functional capabilities and the sensorial components of the service [33]. Implementing IVAs is responsible for altering consumers’ understanding of service quality as parameters like response time present a significant change between humans and IVA-based services. For this reason, the previously known scales to measure service quality had to be adapted to this new reality [27,34].

H6.

Service quality affects the adoption intention of chatbots for banking.

1.6. Gendered Voice

The introduction of voice features to IVAs has been gaining traction, resulting in the increased use of voice assistants offered to help users [35]. These types of assistants provide a more humanized and engaging service thanks to the presence of anthropomorphism seen in characteristics such as voice pitch and voice tone [36]. The attribution of gender to the IVA, and consequently to its voice, impacts the perceived human likeness of the assistant, as studies state that female IVAs have an advantage over male IVAs in this respect [37]. Additionally, ref. [38] stated that there is a female gender favoritism when designing an IVA, resulting in assistants with female features having a prominent presence in the areas of sales, customer service and branded conversations. To counter this favoritism, genderless voices have been introduced to IVAs to reduce the impact that a gendered voice has on users [39]. Thus, we hypothesize the following:

H7.

A gendered voice moderates the relationship between the adoption intention and the actual usage of a chatbot for banking.

1.7. Adoption Intention

While the adoption intention, which is the subjective likelihood of having a specific behavior, is a convincing indication that the actual adoption will take place, it does not ensure that it will happen despite the high level of influence between the actual usage and the intention to use [18,40,41]. Previous research about the adoption intention of IVAs in the banking sector found that IVAs’ adoption intention is dependent on factors such as a positive intention toward these types of technology, perceived usefulness of the solution, perceived ease of use and anthropomorphism [3,42,43]. Thus, we hypothesize the following:

H8.

Adoption intention affects the actual usage of the chatbot for banking.

The goal of this study is to address the research gap regarding the adoption of IVAs for banking in Portugal, where the potential for this type of technology is massive, by answering the research question: What influences the experience with IVAs in the banking sector? Since these chatbots are still underdeveloped, their potential to provide users with the best experience has not been fully explored. The study’s findings shed light on the main variables that influence IVA adoption intentions and actual usage of this technology in the banking sector in Portugal.

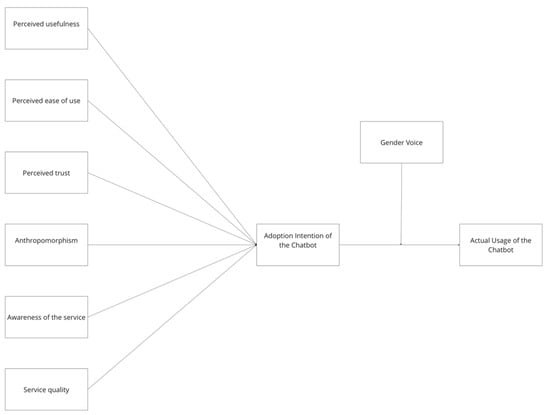

Taking the above into consideration, we propose the study model shown in Figure 1, which expands upon TAM by analyzing the determinants of perceived usefulness, perceived ease of use, perceived trust and awareness of the service that were adapted from [3]. The proposed model aims to analyze whether the user’s perceived usefulness, perceived ease of use, perceived trust, anthropomorphism, awareness of the service and service quality affect the intention to adopt the chatbot and the actual usage of this technology. It also aims to identify whether a gendered voice moderates the relationship between the adoption intention and the actual usage of the chatbot for banking.

Figure 1.

Proposed model.

2. Materials and Methods

2.1. Sample, Data Collection and Analysis

To validate the comprehension of the questions, we tested the questionnaire on a small pilot sample of 30 people. Based on the results of this pilot study, we adjusted some of the items to ensure that all of them were clear and that the participants could understand each question. After that, the questionnaire was finalized in Microsoft Forms. As the survey was applied in Portugal, its content was translated from English into Portuguese. Data were collected through an online survey over a period of 1 month. The survey was made available on the chatbot of Bank X, a Portuguese commercial bank with more than 10% of the market share and a large client base, through a link to Microsoft Forms shown after the respondent used the virtual assistant. The participants were informed that the survey was anonymous and would not collect any personal information, such as names or any form of contact, to ensure the anonymity of the contributors. No exclusion criteria were applied to the participants. A total of 154 questionnaire responses were collected. The data collected did not present any missing values, so a sample of 154 questionnaires was used to analyze the model. A normality test was performed on the dataset to assess whether the variables followed a normal distribution. Non-parametric Mann–Whitney tests were performed to check whether the responses collected earlier in the process differed significantly from the ones collected closer to the end of the collection period. No significant differences were found, suggesting the absence of non-response bias. The skewness values of all the variables were within the −2/+2 interval, meaning that the variables do not depart substantially from normality [44].
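The two checks described above (a skewness screen against the −2/+2 interval and an early-versus-late Mann–Whitney comparison) can be illustrated with a minimal sketch. It is not the procedure actually run for this study: the function names, the response values, and the choice of a normal approximation with tie correction (and no continuity correction) are assumptions made for illustration only.

```python
import math
from collections import Counter

def skewness(values):
    """Fisher-Pearson skewness g1 = m3 / m2**1.5, using population moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

def mann_whitney_u(early, late):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected)."""
    pooled = sorted(early + late)
    # Tied observations share the mean of the ranks they occupy.
    rank_of = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    n1, n2 = len(early), len(late)
    n = n1 + n2
    u1 = sum(rank_of[v] for v in early) - n1 * (n1 + 1) / 2
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    z = (u1 - n1 * n2 / 2) / sigma
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    return u1, p_value

# Hypothetical 5-point answers to one item, split by response date.
early_responses = [4, 5, 3, 4, 4, 5, 2, 4]
late_responses = [4, 4, 3, 5, 4, 3, 5, 4]

within_range = -2 <= skewness(early_responses + late_responses) <= 2
u_stat, p_value = mann_whitney_u(early_responses, late_responses)
print(within_range, u_stat, p_value)
```

A p value above 0.05 for every item would be read, as in the text, as no detectable difference between early and late respondents.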

2.2. Sample Characteristics

Regarding the characteristics of the sample (Table 1), the proportion of men (51%) was comparable to that of women (49%), with only one person preferring not to disclose their gender. Age ranged from 18 to 76 years, with a mean age of 41 years. Concerning educational qualification, 66% of the respondents had an undergraduate degree, 29% had a graduate degree, 5% had a master’s degree and 1% had a doctoral degree.

Table 1.

Sample demographics.

2.3. Measurement Instruments

Firstly, four demographic questions were asked. Secondly, three questions regarding the experience with the solution were answered using a ten-point Likert scale ranging from “Bad” (1) to “Excellent” (10). Since the goal was to continuously record perceived experience, a neutral choice was excluded. Based on the literature, the variables were evaluated using 33 items. Answers to the items in Tables 2–8 were given on 5-point scales (strongly disagree–strongly agree). Thirdly, answers about a gendered voice were given on a 3-point scale (male voice, female voice, without humanization). Table A1 in Appendix A presents all the measurement items and survey questions and their respective scales.

Table 2.

Perceived usefulness scale.

Table 3.

Perceived ease of use scale.

Table 4.

Perceived trust scale.

Table 5.

Anthropomorphism scale.

Table 6.

Awareness of the service scale.

Table 7.

Service quality scale.

Table 8.

Actual usage scale.

Perceived usefulness (PU) was rated on three items as follows, adapted from [3] (Table 2). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Perceived ease of use (PEU) was rated on three items as follows, adapted from [3] (Table 3). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Perceived trust (PT) was rated on four items as follows, adapted from [18] (Table 4). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Anthropomorphism (ANM) was rated on four items as follows, adapted from [18] (Table 5). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Awareness of the service (AS) was rated on three items as follows, adapted from [3] (Table 6). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Service quality (SQ) was rated on eleven items as follows, adapted from [45] (Table 7). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Actual usage (AU) was rated on four items as follows, adapted from [18] (Table 8). Answers were provided on a 5-point scale (strongly disagree–strongly agree).

Adoption intention (ADI) was rated on a single item, an approach used previously in [46,47,48,49], adapted from [18] (Table 9). Answers were provided on a 10-point scale (strongly disagree–strongly agree).

Table 9.

Adoption intention scale.

3. Results

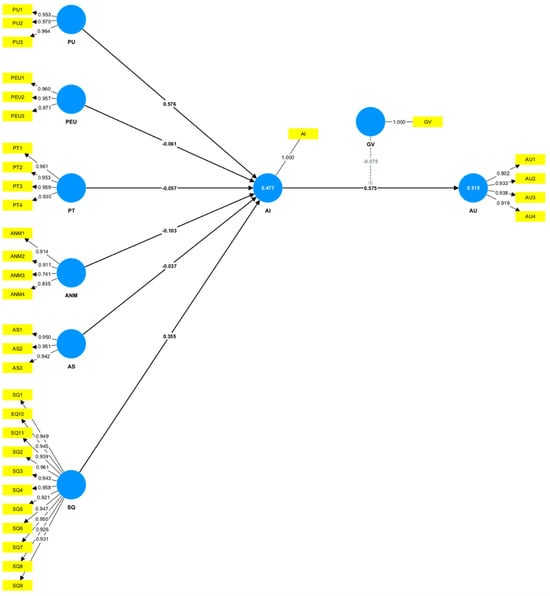

The research model was analyzed through PLS structural equation modeling (PLS-SEM), using SmartPLS software, version 4.0.9.6 [44]. In the analysis, we also explored the moderating effect of the gender of the voice on the Adoption Intention → Actual Usage relationship.

3.1. Measurement Models

Firstly, we estimated the relationships between the constructs and their indicators. Table 10 displays the convergent validity, the internal consistency reliability, and the discriminant validity. All of the outer loadings of the reflective constructs are above the threshold value of 0.708, which suggests sufficient levels of indicator reliability [44]. The indicator ANM3 has the lowest indicator reliability, with a value of 0.549 (0.741²), whereas ADI and GV have the highest indicator reliabilities, with a value of 1 (1²), as each of these constructs is measured by a single indicator. All the AVE values are also above the required minimum of 0.50, meaning that the seven reflective constructs have high levels of convergent validity [44]. As the ρA of all the constructs is above the threshold value of 0.70, all of them have a high level of internal consistency reliability [44]. The Cronbach’s alpha and the ρc values for the constructs also all exceed the threshold value of 0.70, further supporting the internal consistency reliability of the constructs analyzed [44].

Table 10.

Results of the measurement models.
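The quantities discussed above follow directly from the outer loadings, which the sketch below illustrates using the standard formulas for standardized loadings. Only the ANM3 loading (0.741) is taken from the text; the other three loadings and all function names are hypothetical, so the AVE and ρc it produces are not the values in Table 10.

```python
def indicator_reliability(loading):
    """Indicator reliability is the squared standardized outer loading."""
    return loading ** 2

def average_variance_extracted(loadings):
    """AVE: mean of the squared loadings of one construct."""
    return sum(l ** 2 for l in loadings) / len(loadings)

def composite_reliability(loadings):
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)

# ANM3 = 0.741 comes from the text; the other three loadings are hypothetical.
anm_loadings = [0.85, 0.82, 0.741, 0.88]

print(round(indicator_reliability(0.741), 3))  # 0.549, as reported for ANM3
print(average_variance_extracted(anm_loadings) > 0.50)
print(composite_reliability(anm_loadings) > 0.70)
```

A single-indicator construct has a loading of 1 by construction, which is why its indicator reliability is 1² = 1.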

After that, we analyzed the discriminant validity of the constructs through the heterotrait–monotrait ratio (HTMT). Table 11 presents the HTMT values for all the pairs of constructs as well as the bootstrap confidence intervals. To examine whether the HTMT values are significantly different from the threshold value, we ran a bootstrapping procedure to compute the bootstrap confidence intervals. This test focuses on the right tail of the bootstrap distribution to show that the HTMT values are significantly lower than the corresponding threshold value of 0.90 with a 5% probability of error [44]. HTMT values above the 0.90 threshold occur only among PT, PEU, SQ and AU, meaning that discriminant validity has not been established between the affected pairs of reflective constructs. For the other constructs, discriminant validity has been established [44].

Table 11.

Heterotrait–monotrait (HTMT) values.
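For readers unfamiliar with the HTMT ratio, the following sketch shows how it can be computed for a pair of multi-item constructs: the mean correlation between items of different constructs divided by the geometric mean of the average within-construct item correlations. The item scores and function names are hypothetical, and the sketch does not cover single-item constructs or the bootstrap confidence intervals reported in Table 11.

```python
import math
from itertools import combinations, product

def pearson(x, y):
    """Pearson correlation between two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def htmt(items_a, items_b):
    """HTMT for two constructs, each given as a list of item score columns."""
    # Heterotrait-heteromethod: items of construct A against items of B.
    hetero = mean(pearson(a, b) for a, b in product(items_a, items_b))
    # Monotrait-heteromethod: distinct item pairs within each construct.
    mono_a = mean(pearson(x, y) for x, y in combinations(items_a, 2))
    mono_b = mean(pearson(x, y) for x, y in combinations(items_b, 2))
    return hetero / math.sqrt(mono_a * mono_b)

# Hypothetical answers of six respondents to two items per construct.
trust_items = [[4, 5, 3, 4, 2, 5], [4, 4, 3, 5, 2, 5]]
ease_items = [[3, 5, 2, 4, 3, 4], [3, 4, 2, 4, 2, 5]]

ratio = htmt(trust_items, ease_items)
print(ratio, ratio < 0.90)  # below 0.90 would indicate discriminant validity
```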

3.2. Structural Model

To evaluate the structural model, we first checked it for collinearity issues by examining the Variance Inflation Factor (VIF) values. Table 12 presents the significance testing results of the structural model path coefficients. Some of the VIF values were above the threshold of five [44], meaning that some of the predictor constructs (PEU → ADI, PT → ADI, PU → ADI and SQ → ADI) presented collinearity. Then, we assessed the significance and relevance of the structural model relationships by examining the path coefficients. All the path coefficient values were within the expected range of −1 to +1 [44], with the strongest positive relationship between PU and ADI and the strongest negative relationship between ANM and ADI. Then, we ran the bootstrapping procedure to calculate the t values, p values and 95% bias-corrected (BC) confidence intervals and to assess the significance of the relationships in the structural model. As Table 12 shows, only two relationships are significant: ADI → AU, meaning that the adoption intention affects the actual usage of the chatbot for banking, which confirms H8, and PU → ADI, meaning that the perceived usefulness affects the adoption intention of the chatbot for banking, which confirms H1.

Table 12.

Significance testing results of the structural model path coefficients.
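As an illustration of the collinearity check, the sketch below computes VIF values as the diagonal of the inverse of the predictors' correlation matrix, which is equivalent to 1 / (1 − R²) for each predictor regressed on the others. The correlation values are hypothetical and are not the latent variable correlations of this study; the helper names are likewise assumptions.

```python
def invert(matrix):
    """Gauss-Jordan inverse of a small square matrix (list of lists)."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def variance_inflation_factors(corr):
    """VIF of predictor j is the j-th diagonal entry of the inverse correlation matrix."""
    inverse = invert(corr)
    return [inverse[i][i] for i in range(len(corr))]

# Hypothetical correlations among three predictors of adoption intention.
predictors = ["PU", "PEU", "PT"]
corr = [
    [1.00, 0.90, 0.85],
    [0.90, 1.00, 0.88],
    [0.85, 0.88, 1.00],
]

for name, vif in zip(predictors, variance_inflation_factors(corr)):
    print(name, round(vif, 2), "collinearity concern" if vif > 5 else "ok")
```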

Then, we turned our attention to the assessment of the model’s explanatory power. Table 13 presents the explanatory power of the model. The R² value of ADI can be considered moderate, whereas the R² value of AU is rather weak [44]. From Table 8, we can see that ADI has a large effect size of 0.421 on AU, PU has a small effect size of 0.113 on ADI, and SQ has a small effect size of 0.033 on ADI. None of the other predictors has an effect on the outcome, as their f² values are below 0.02 [44,50].

Table 13.

Explanatory power.
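The effect size labels used above follow the conventional f² cut-offs of 0.02, 0.15 and 0.35. The sketch below shows how f² is obtained from the R² of a model with and without a given predictor and how the labels are assigned. The three f² values are those reported in the text; the function names and the R² values in the last line are hypothetical.

```python
def f_squared(r2_included, r2_excluded):
    """Effect size of one predictor: change in R² relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1 - r2_included)

def effect_label(f2):
    """Conventional thresholds: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "no effect"

# f² values reported in the text for the three non-negligible paths.
reported = {"ADI -> AU": 0.421, "PU -> ADI": 0.113, "SQ -> ADI": 0.033}
for path, f2 in reported.items():
    print(path, f2, effect_label(f2))

# Hypothetical R² values, only to show how f² itself is computed.
print(round(f_squared(0.50, 0.40), 3))
```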

To summarize, H1 is confirmed, meaning that perceived usefulness affects the adoption intention of chatbots for banking, agreeing with [7,14,16,18] and contradicting [11]. H2 is not confirmed, meaning that perceived ease of use does not affect the adoption intention of chatbots for banking, agreeing with [11] and contradicting [14,16,18]. H3 is not confirmed, meaning that perceived trust does not affect the adoption intention of chatbots for banking, agreeing with [7] and contradicting [18,51]. H4 is not confirmed, meaning that anthropomorphism does not affect the adoption intention of chatbots for banking, which contradicts [18]. H5 is not confirmed, meaning that the awareness of the service does not affect the adoption intention of chatbots for banking. H6 is not confirmed, meaning that service quality does not affect the adoption intention of chatbots for banking. H7 is not confirmed, meaning that a gendered voice does not moderate the relationship between the adoption intention and the actual usage of the chatbot for banking. Finally, H8 is confirmed, meaning that the adoption intention affects the actual usage of the chatbot for banking, agreeing with [3,7,17].

3.3. Mediation

To perform the mediation analysis, we tested the significance of the indirect effect of perceived usefulness (PU) on actual usage (AU) via adoption intention (ADI), which is the product of the path coefficients from PU to ADI and from ADI to AU. We ran the bootstrapping routine to test the significance of this product of path coefficients [44]. Table 14 presents the analysis of the direct and indirect effects. The indirect effect is significant, as the 95% confidence interval does not include zero [44]. The direct effect of ADI on AU is pronounced (0.575) and significant (p < 0.05). We therefore concluded that ADI partially mediates the relationship, since both the direct and the indirect effects are significant. To further substantiate the type of partial mediation, we computed the product of the direct and indirect effects. Since both are positive, the sign of their product is also positive (i.e., 0.575 × 0.0311 = 0.0179) [44]. Our findings provide empirical support for the mediating role of adoption intention in the studied model. The adoption intention serves as a complementary mediator for the relationship between perceived usefulness and actual usage.

Table 14.

Analysis of the indirect effects.
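The classification logic described above can be written as a small decision rule. The sketch below is an illustration under assumptions: the function name and category labels are hypothetical, and the significance flags are supplied by hand rather than derived from the bootstrap results. The two coefficients (0.575 and 0.0311) are the values reported in the text.

```python
def mediation_type(indirect, direct, indirect_significant, direct_significant):
    """Classify mediation from the sign and significance of the two effects."""
    if not indirect_significant:
        return "direct-only (no mediation)" if direct_significant else "no effect"
    if not direct_significant:
        return "indirect-only (full mediation)"
    # Both effects significant: the sign of their product decides the type.
    if indirect * direct > 0:
        return "complementary (partial mediation)"
    return "competitive (partial mediation)"

direct_effect = 0.575     # reported in the text
indirect_effect = 0.0311  # reported in the text

print(round(direct_effect * indirect_effect, 4))  # 0.0179
print(mediation_type(indirect_effect, direct_effect, True, True))
```

With both effects positive and significant, the rule returns the complementary type, matching the conclusion stated in the text.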

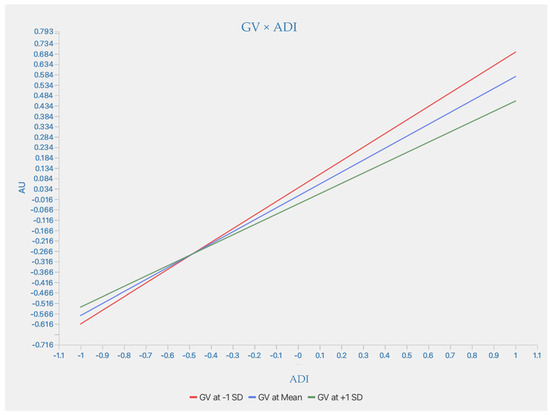

3.4. Moderation

To conduct the moderation analysis of GV on the relationship between ADI and AU, we first checked the size of the moderating effect. Figure 2 shows the test of whether GV moderates (i.e., changes the strength or direction of) the relationship between ADI and AU. In other words, it examines whether the type or prominence of the chatbot’s gendered voice influences how likely people are to actually use the assistant once they intend to adopt it.

Figure 2.

Moderator Analysis Results.

The base path coefficient from adoption intention to actual usage (main effect of ADI → AU) is 0.575, which is positive and statistically significant (p < 0.001). This indicates that higher adoption intention clearly leads to more actual use of the IVA.

In terms of the moderating effect of GV × ADI, the interaction term (GV × ADI) has a path coefficient of −0.079. This means that when GV increases (i.e., more gendered or human-like voices), the strength of the relationship between ADI and AU slightly decreases. However, the effect is not statistically significant (p = 0.288), and the confidence interval includes zero. So, no real moderating effect is confirmed.

We can therefore conclude that, although there is a small interaction effect in which more gendered voices slightly weaken the influence of adoption intention on usage, the moderation is not statistically significant. A gendered voice does not significantly alter user behavior, and adoption intention remains the dominant predictor of actual usage.

Figure 3 illustrates how the strength of the relationship between adoption intention and actual usage varies according to the level of the moderating variable—gendered voice. The graph includes three regression lines, each representing a different level of GV: middle line: average (mean) level of gendered voice; upper line: one standard deviation below the mean (i.e., “lower” GV—possibly gender-neutral or less salient voice); lower line: one standard deviation above the mean (i.e., “higher” GV—more pronounced gendered voice).

Figure 3.

Relationship between ADI and AU.

All three lines have a positive slope, confirming that higher adoption intention is associated with higher actual usage, regardless of the level of GV. However, the steepness of the lines varies slightly:

- The steepest line (GV below the mean) indicates that when the gendered voice is less prominent, the impact of adoption intention on actual usage is stronger.

- The flattest line (GV above the mean) shows that when the gendered voice is more noticeable or gendered, the impact of adoption intention on usage is slightly weaker.

- The middle line represents the effect at the average GV level.
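The three lines described above can be reproduced from the two reported coefficients. The sketch below assumes standardized variables, so that "one standard deviation above or below the mean" corresponds to GV values of +1 and −1; the function and variable names are hypothetical.

```python
def simple_slope(main_effect, interaction, moderator_level):
    """Slope of ADI -> AU at a given level of the standardized moderator."""
    return main_effect + interaction * moderator_level

ADI_TO_AU = 0.575  # main effect reported in the text
GV_X_ADI = -0.079  # interaction coefficient reported in the text

levels = {"GV at -1 SD": -1, "GV at mean": 0, "GV at +1 SD": 1}
slopes = {label: simple_slope(ADI_TO_AU, GV_X_ADI, z) for label, z in levels.items()}

for label, slope in slopes.items():
    print(label, round(slope, 3))
```

Under this assumption the slopes are 0.654, 0.575 and 0.496, which matches the ordering of the steepest, middle and flattest lines. The differences are small, in line with the non-significant interaction.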

The results of the moderation analysis are provided in Table 15. The moderation effect is not significant, as the p value is greater than 0.05. Similarly, as the 95% bias-corrected bootstrap confidence interval of the interaction term’s effect includes zero, we conclude that the effect is not significant [44]. This means that a gendered voice does not influence the relationship between the adoption intention and the actual usage of the chatbot for banking.

Table 15.

Analysis of the moderation.

4. Discussion

This study proposed eight hypotheses to investigate the determinants influencing the adoption intention and actual usage of Intelligent Virtual Assistants (IVAs) in the Portuguese banking sector. Rooted in the Technology Acceptance Model (TAM), the research hypothesized that perceived usefulness (H1), ease of use (H2), trust (H3), anthropomorphism (H4), awareness of the service (H5), and service quality (H6) would affect adoption intention, while gendered voice (H7) would moderate the relationship between adoption intention and actual usage, and that adoption intention would influence actual usage (H8). Using PLS-SEM on data from 154 banking customers, the analysis confirmed only two hypotheses: H1, showing that perceived usefulness significantly impacts adoption intention, and H8, demonstrating that adoption intention positively influences actual usage. The remaining hypotheses (H2 to H7) were not supported. The novel contribution of this manuscript lies in its contextual focus on a digitally mature market (Portugal) where traditional adoption drivers such as trust, ease of use, and anthropomorphism are no longer significant, and perceived usefulness alone emerges as the central determinant. This insight highlights a shift toward a pragmatic, outcome-oriented evaluation of IVAs, advancing existing adoption models by demonstrating how maturity in user behavior and market infrastructure can reconfigure technology acceptance patterns.

According to the findings, in the context of Portuguese banking, variables such as trust, ease of use, anthropomorphism, awareness, service quality, and gendered voice do not significantly influence the adoption or usage of IVAs. This outcome is largely attributed to the high digital literacy and familiarity with banking technologies among Portuguese consumers, who evaluate these tools mainly based on their practical benefits rather than emotional or aesthetic characteristics [52,53]. Trust in IVAs appears to be derived from the broader trust in established banking institutions, while features like anthropomorphism and gendered voice are either underutilized or less culturally significant in Portugal [54]. Additionally, because many users had prior exposure to IVAs, awareness of the service did not substantially impact usage, and service quality is still strongly associated with human interaction, reducing its perceived relevance in automated contexts [53].

These findings reflect a pragmatic, utility-driven approach to technology adoption in Portugal, where perceived usefulness is the primary determinant of user behavior [52,53]. Culturally, Portuguese users adopt a task-focused attitude toward digital banking, prioritizing efficiency and usefulness over emotional or design features such as anthropomorphism or gendered voices. Technologically, Portugal’s high digital literacy and widespread experience with online banking make ease of use and awareness less decisive, as users already expect digital tools to be accessible and available [55]. From a market perspective, the Portuguese banking sector is digitally mature, with IVAs increasingly seen as standard features rather than innovations. In this environment, users assess IVAs primarily based on their utility and ability to improve task performance, making perceived usefulness the only factor with a significant impact [52,53].

To enhance the usefulness of IVAs, Portuguese banks should focus on increasing their practical value in customers’ daily financial activities. This includes integrating IVAs with a broader range of services, such as personalized financial advice, transaction automation, account management, and real-time assistance for complex tasks [56]. Ensuring that IVAs are responsive, accurate, and capable of handling nuanced interactions will further improve their effectiveness. Highlighting features that save time or simplify banking processes can also strengthen their perceived value [55,56]. Additionally, continuous learning and adaptation based on user preferences and history can result in more efficient and personalized interactions [54]. Marketing efforts should clearly communicate these advantages, emphasizing how IVAs contribute to convenience and productivity. Ultimately, IVAs should evolve from basic tools to proactive, multifunctional assistants that deliver tangible benefits in banking [52,53].

The findings of this study suggest that banks need to refine both the design and communication strategies of their IVAs to emphasize functionality and value. With perceived usefulness as the principal driver of adoption, design efforts should center on facilitating streamlined interactions and automating tasks [56]. IVAs must be equipped to manage complex banking activities, such as bill payments and budget planning, before they can be regarded as indispensable tools. Communication strategies should focus on clearly demonstrating the capabilities of IVAs, avoiding excessive emphasis on emotional or anthropomorphic features, and positioning the IVA as a smart assistant that enhances user control and productivity in banking [52,53].

5. Conclusions

This paper presented empirical research on the Portuguese banking sector to answer the research question, “What influences the experience with IVAs in the banking sector?” From this analysis, we highlight the importance of perceived usefulness for the adoption intention of IVAs, supporting H1 and complementing other studies [7,14,16,18]. This suggests that other banking companies should focus on the usability of their assistants and provide solutions that help their customers complete daily tasks, aiming to give a positive customer experience in every small task. With this, once again, IVAs prove their value by assisting individuals with their daily tasks, increasing their productivity, and freeing them to do other personal activities that add more value to their lives. In addition, the adoption intention of IVAs for banking also influences the actual usage of this type of technology, supporting H8 and corroborating other investigations [3,7,17]. The findings suggest that users are ready and willing to use assistants that enhance their daily lives. These robust IVAs provide another layer of service for banking customers, giving them advice when needed, offering services in the palm of their hands, and serving as an essential connecting point with the bank. As the banking industry is sometimes seen as complex, developing this type of assistant can also challenge that perception and provide a more people-centered experience, offering users an easy way to manage their finances.

5.1. Contributions to Theory and Practice

This study suggests some theoretical implications and practical contributions for several industries.

In terms of theory, it presents a model to analyze the adoption intention and actual usage of IVAs for banking, serving as a basis for further studies in this area. It also provides the first analysis of the banking sector in Portugal, opening the way for more researchers to investigate this field. Furthermore, we hope that this analysis of a Portuguese sample will encourage more researchers to study this population, not only on the topic of IVAs but also on other topics.

For management actions, this paper provides a guide for banking employees to analyze their IVAs and understand what the Portuguese consumer values more for this type of technological solution.

5.2. Limitations and Future Studies

This study is not without limitations. To begin, identifying the papers relevant for this study was a significant challenge, as the topic is evolving quickly. The next limitation is the scarcity of articles focusing on the application of IVAs in the banking sector, which left few points of comparison for the empirical investigation. Another limitation is that this study was restricted to a single bank in Portugal, which could be mitigated by expanding the research to encompass additional banks across diverse demographics.

This paper identified multiple open questions that could lead to further research on this topic.

Regarding the implementation of IVAs in Portugal, future investigators could focus on the research question: How can the experience with IVAs in the retail sector improve the experience with brands in Portugal? On this topic, it would be fundamental for brands to understand what they could gain from implementing these assistants and to have an investigation that provides guidance on which features are essential for their consumers. In addition, as education is an area where IVAs have recently been implemented, future research could analyze what influences the users’ experience by studying various types of education, whether it is online or remote, at different levels of the academic system, and comparing the experiences that these different groups of students have with this growing technology. This investigation could focus on the research question: What influences the experience with IVAs in education?

Author Contributions

The authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from a third party (banking institution) and are available from the authors with the permission of the third party.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ADI | Adoption Intention |
| ANM | Anthropomorphism |
| AS | Awareness of the Service |
| AU | Actual Usage |
| GV | Gendered Voice |
| IVA | Intelligent Virtual Assistant |
| PEU | Perceived Ease of Use |
| PLS-SEM | Partial Least Squares Structural Equation Modeling |
| PT | Perceived Trust |
| PU | Perceived Usefulness |
| SQ | Service Quality |
| TAM | Technology Acceptance Model |

Appendix A

Table A1.

Measurement items and survey questions.


| Code | Item | Scale |
|---|---|---|
| PU1 | I find the banking chatbot useful in my daily life. | 5-point scale (strongly disagree–strongly agree) |
| PU2 | Using the banking chatbot helps me accomplish things more quickly. | 5-point scale (strongly disagree–strongly agree) |
| PU3 | Using the banking chatbot increases my productivity. | 5-point scale (strongly disagree–strongly agree) |
| PEU1 | Learning how to use the banking chatbot is easy for me. | 5-point scale (strongly disagree–strongly agree) |
| PEU2 | My interaction with the banking chatbot is clear and understandable. | 5-point scale (strongly disagree–strongly agree) |
| PEU3 | I find the banking chatbot easy to use. | 5-point scale (strongly disagree–strongly agree) |
| PT1 | I feel that the information provided by the chatbot for banking is honest and authentic. | 5-point scale (strongly disagree–strongly agree) |
| PT2 | I feel that chatbots for banking have clarity of services provided and frank opinions that are reliable. | 5-point scale (strongly disagree–strongly agree) |
| PT3 | I feel that chatbots in banking are trustworthy. | 5-point scale (strongly disagree–strongly agree) |
| PT4 | I feel that chatbots for banking have the necessary ability to provide financial counseling. | 5-point scale (strongly disagree–strongly agree) |
| ANM1 | Chatbots for banking have their own mind. | 5-point scale (strongly disagree–strongly agree) |
| ANM2 | Chatbots for banking can experience emotions. | 5-point scale (strongly disagree–strongly agree) |
| ANM3 | I feel that chatbots for banking are inanimate: living. | 5-point scale (strongly disagree–strongly agree) |
| ANM4 | I feel that chatbots for banking are computer-animated: real. | 5-point scale (strongly disagree–strongly agree) |
| AS1 | My bank has communicated a banking chatbot usage policy to me. | 5-point scale (strongly disagree–strongly agree) |
| AS2 | My bank has a strategy regarding the usage of the banking chatbot. | 5-point scale (strongly disagree–strongly agree) |
| AS3 | I have received sufficient information from my bank regarding the usage of the banking chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ1 | When I have problems, this chatbot is sympathetic and reassuring. | 5-point scale (strongly disagree–strongly agree) |
| SQ2 | I felt that I could rely on the chatbot’s services to fulfill my needs. | 5-point scale (strongly disagree–strongly agree) |
| SQ3 | The chatbot responds promptly to my requests. | 5-point scale (strongly disagree–strongly agree) |
| SQ4 | I trust the chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ5 | I feel safe and reassured while having a conversation with the chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ6 | The chatbot has adequate knowledge to answer my questions. | 5-point scale (strongly disagree–strongly agree) |
| SQ7 | I can be in control of my personal needs through the chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ8 | The chatbot gives me the opportunity to respond. | 5-point scale (strongly disagree–strongly agree) |
| SQ9 | I feel valued by the brand through my conversations with the chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ10 | I feel empathetically understood through the conversations with the chatbot. | 5-point scale (strongly disagree–strongly agree) |
| SQ11 | I feel that the chatbot was developed to meet my personal needs. | 5-point scale (strongly disagree–strongly agree) |
| AU1 | I use the chatbot to clarify my doubts. | 5-point scale (strongly disagree–strongly agree) |
| AU2 | I use the chatbot to manage my banking account. | 5-point scale (strongly disagree–strongly agree) |
| AU3 | I use the chatbot to gain knowledge about financial products. | 5-point scale (strongly disagree–strongly agree) |
| AU4 | I use the chatbot to get financial advice. | 5-point scale (strongly disagree–strongly agree) |
| ADI1 | There is a possibility that I will recommend the use of the banking chatbot to my family and friends. | 5-point scale (strongly disagree–strongly agree) |
| GV1 | What kind of voice do you prefer in your personal assistant? | (Male, Female, Without humanization) |
| SQ1 | What is your gender? | (Male, Female, Not Disclosed) |
| SQ2 | What is your educational background? | (Undergraduate, Graduation, Master, PhD) |
| SQ3 | How old are you? | Numerical |
| SQ4 | What is your profession? | Open Answer |
| SQ5 | How would you rate your experience with this virtual assistant from Bank X? | 10-point scale (bad–excellent) |
| SQ6 | How would you rate your experience with virtual assistants in general? | 10-point scale (bad–excellent) |
| SQ7 | How often do you use the Bank X Contact Center? | 10-point scale (bad–excellent) |

References

1. Cheng, X.; Bao, Y.; Zarifis, A.; Gong, W.; Mou, J. Exploring consumers’ response to text-based chatbots in e-commerce: The moderating role of task complexity and chatbot disclosure. Internet Res. 2022, 32, 496–517.
2. Cui, R.M.; Li, M.; Zhang, S.C. AI and Procurement. Manuf. Serv. Oper. Manag. 2022, 24, 691–706.
3. Alt, M.A.; Ibolya, V. Identifying Relevant Segments of Potential Banking Chatbot Users Based on Technology Adoption Behavior. Market-Trziste 2021, 33, 165–183.
4. Mor, S.; Gupta, G. Artificial intelligence and technical efficiency: The case of Indian commercial banks. Strateg. Chang. 2021, 30, 235–245.
5. Alenizi, A.S. Understanding the subjective realties of social proof and usability for mobile banking adoption: Using triangulation of qualitative methods. J. Islam. Mark. 2022, 14, 2027–2044.
6. Al-Khasawneh, M.H. A Mobile Banking Adoption Model in the Jordanian Market: An Integration of TAM with Perceived Risks and Perceived Benefits. J. Internet Bank. Commer. 2015, 20, 1–35.
7. Marakarkandy, B.; Yajnik, N.; Dasgupta, C. Enabling internet banking adoption: An empirical examination with an augmented technology acceptance model (TAM). J. Enterp. Inf. Manag. 2017, 30, 263–294.
8. Mutahar, A.M.; Daud, N.M.; Ramayah, T.; Isaac, O.; Aldholay, A.H. The effect of awareness and perceived risk on the technology acceptance model (TAM): Mobile banking in Yemen. Int. J. Serv. Stand. 2018, 12, 180–204.
9. Alismaiel, O.A.; Cifuentes-Faura, J.; Al-Rahmi, W.M. Social Media Technologies Used for Education: An Empirical Study on TAM Model During the COVID-19 Pandemic. Front. Educ. 2022, 7, 882831.
10. Almogren, A.S.; Aljammaz, N.A. The integrated social cognitive theory with the TAM model: The impact of M-learning in King Saud University art education. Front. Psychol. 2022, 13, 1050532.
11. Mailizar, M.; Almanthari, A.; Maulina, S. Examining teachers’ behavioral intention to use e-learning in teaching of mathematics: An extended tam model. Contemp. Educ. Technol. 2021, 13, 1–16.
12. Prieto, J.C.S.; Miguelánez, S.O.; García-Peñalvo, F.J. Mobile learning adoption from informal into formal: An extended TAM model to measure mobile acceptance among teachers. In Proceedings of the Second International Conference on Technological Ecosystems for Enhancing Multiculturality (TEEM ’14), Salamanca, Spain, 1–3 October 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 595–602.
13. Sánchez-Prieto, J.C.; Olmos-Migueláñez, S.; García-Peñalvo, F.J. MLearning and pre-service teachers: An assessment of the behavioral intention using an expanded TAM model. Comput. Hum. Behav. 2017, 72, 644–654.
14. Dutta, B.; Peng, M.H.; Sun, S.L. Modeling the adoption of personal health record (PHR) among individual: The effect of health-care technology self-efficacy and gender concern. Libyan J. Med. 2018, 13, 1500349.
15. Melas, C.D.; Zampetakis, L.A.; Dimopoulou, A.; Moustakis, V. Modeling the acceptance of clinical information systems among hospital medical staff: An extended TAM model. J. Biomed. Inform. 2011, 44, 553–564.
16. Tung, F.C.; Chang, S.C.; Chou, C.M. An extension of trust and TAM model with IDT in the adoption of the electronic logistics information system in HIS in the medical industry. Int. J. Med. Inform. 2008, 77, 324–335.
17. Mathew, V.; Soliman, M. Does digital content marketing affect tourism consumer behavior? An extension of technology acceptance model. J. Consum. Behav. 2021, 20, 61–75.
18. Pillai, R.; Sivathanu, B. Adoption of AI-based chatbots for hospitality and tourism. Int. J. Contemp. Hosp. Manag. 2020, 32, 3199–3226.
19. Dahlberg, T.; Guo, J.; Ondrus, J. A critical review of mobile payment research. Electron. Commer. Res. Appl. 2015, 14, 265–284.
20. Selamat, M.A.; Windasari, N.A. Chatbot for SMEs: Integrating customer and business owner perspectives. Technol. Soc. 2021, 66, 101685.
21. Shaikh, A.A.; Karjaluoto, H. Mobile banking adoption: A literature review. Telemat. Inform. 2015, 32, 129–142.
22. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. Manag. Inf. Syst. 1989, 13, 319–339.
23. Orús, C.; Ibáñez-Sánchez, S.; Flavián, C. Enhancing the customer experience with virtual and augmented reality: The impact of content and device type. Int. J. Hosp. Manag. 2021, 98, 103019.
24. Yagoda, R.E.; Gillan, D.J. You Want Me to Trust a ROBOT? The Development of a Human-Robot Interaction Trust Scale. Int. J. Soc. Robot. 2012, 4, 235–248.
25. You, S.; Kim, J.H.; Lee, S.H.; Kamat, V.; Robert, L.P. Enhancing perceived safety in human–robot collaborative construction using immersive virtual environments. Autom. Constr. 2018, 96, 161–170.
26. Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.
27. Noor, N.; Rao Hill, S.; Troshani, I. Recasting Service Quality for AI-Based Service. Australas. Mark. J. 2022, 30, 297–312.
28. Bouhia, M.; Rajaobelina, L.; PromTep, S.; Arcand, M.; Ricard, L. Drivers of privacy concerns when interacting with a chatbot in a customer service encounter. Int. J. Bank Mark. 2022, 40, 1159–1181.
29. Al-Somali, S.A.; Gholami, R.; Clegg, B. An investigation into the acceptance of online banking in Saudi Arabia. Technovation 2009, 29, 130–141.
30. Sathye, M. Adoption of Internet Banking by Australian Consumers: An Empirical Investigation. Int. J. Bank Mark. 1999, 17, 324–334.
31. Lishomwa, L.; Phiri, J. Adoption of Internet Banking Services by Corporate Customers for Forex Transactions Based on the TRA Model. Open J. Bus. Manag. 2020, 08, 329–345.
32. Chen, Q.; Gong, Y.; Lu, Y.; Tang, J. Classifying and measuring the service quality of AI chatbot in frontline service. J. Bus. Res. 2022, 145, 552–568.
33. Kushwaha, A.K.; Kumar, P.; Kar, A.K. What impacts customer experience for B2B enterprises on using AI-enabled chatbots? Insights from Big data analytics. Ind. Mark. Manag. 2021, 98, 207–221.
34. Tran, A.D.; Pallant, J.I.; Johnson, L.W. Exploring the impact of chatbots on consumer sentiment and expectations in retail. J. Retail. Consum. Serv. 2021, 63, 102718.
35. Malodia, S.; Islam, N.; Kaur, P.; Dhir, A. Why Do People Use Artificial Intelligence (AI)-Enabled Voice Assistants. IEEE Trans. Eng. Manag. 2021, 71, 491–505.
36. Fan, H.; Han, B.; Gao, W.; Li, W. How AI chatbots have reshaped the frontline interface in China: Examining the role of sales–service ambidexterity and the personalization–privacy paradox. Int. J. Emerg. Mark. 2022, 17, 967–986.
37. Borau, S.; Otterbring, T.; Laporte, S.; Fosso Wamba, S. The most human bot: Female gendering increases humanness perceptions of bots and acceptance of AI. Psychol. Mark. 2021, 38, 1052–1068.
38. Feine, J.; Gnewuch, U.; Morana, S.; Maedche, A. Gender Bias in Chatbot Design. In Chatbot Research and Design. CONVERSATIONS 2019; Følstad, A., Araujo, T., Papadopoulos, S., Law, E.L.-C., Granmo, O.-C., Luger, E., Brandtzaeg, P.B., Eds.; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2020; Volume 11970, pp. 79–93.
39. Mooshammer, S.; Etzrodt, K. Social Research with Gender-Neutral Voices in Chatbots—The Generation and Evaluation of Artificial Gender-Neutral Voices with Praat and Google WaveNet. In Chatbot Research and Design Workshop; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2022; Volume 13171, pp. 176–191.
40. Gu, J.C.; Lee, S.C.; Suh, Y.H. Determinants of behavioral intention to mobile banking. Expert Syst. Appl. 2009, 36, 11605–11616.
41. Mutambik, I.; Lee, J.; Almuqrin, A.; Zhang, J.Z.; Baihan, M.; Alkhanifer, A. Privacy Concerns in Social Commerce: The Impact of Gender. Sustainability 2023, 15, 12771.
42. Abdennebi, H.B. M-banking adoption from the developing countries perspective: A mediated model. Digit. Bus. 2023, 3, 100065.
43. Dhiman, N.; Jamwal, M.; Kumar, A. Enhancing value in customer journey by considering the (ad)option of artificial intelligence tools. J. Bus. Res. 2023, 167, 114142.
44. Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); SAGE Publications: Thousand Oaks, CA, USA, 2022.
45. Yun, J.; Park, J. The Effects of Chatbot Service Recovery with Emotion Words on Customer Satisfaction, Repurchase Intention, and Positive Word-of-Mouth. Front. Psychol. 2022, 13, 922503.
46. Caycho-Rodríguez, T.; Tomás, J.M.; Yupanqui-Lorenzo, D.E.; Valencia, P.D.; Carbajal-León, C.; Vilca, L.W.; Ventura-León, J.; Paredes-Angeles, R.; Arias Gallegos, W.L.; Reyes-Bossio, M.; et al. Relationship Between Fear of COVID-19, Conspiracy Beliefs About Vaccines and Intention to Vaccinate Against COVID-19: A Cross-National Indirect Effect Model in 13 Latin American Countries. Eval. Health Prof. 2023, 46, 371–383.
47. Charles-Leija, H.; Castro, C.G.; Toledo, M.; Ballesteros-Valdés, R. Meaningful Work, Happiness at Work, and Turnover Intentions. Int. J. Environ. Res. Public Health 2023, 20, 3565.
48. Fishman, J.; Lushin, V.; Mandell, D.S. Predicting implementation: Comparing validated measures of intention and assessing the role of motivation when designing behavioral interventions. Implement. Sci. Commun. 2020, 1, 81.
49. Matteau, L.; Toupin, I.; Ouellet, N.; Beaulieu, M.; Truchon, M.; Gilbert-Ouimet, M. Nursing students’ academic conditions, psychological distress, and intention to leave school: A cross-sectional study. Nurse Educ. Today 2023, 129, 105877.
50. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 1988.
51. Tanihatu, M.C.; Suryanto; Syahchari, D.H. H. Technologies (ICICT), Lalitpur, Nepal, 26–28 April 2023; pp. 846–851.
52. Afonso, V.O. The adoption of conversational assistants in the banking industry. Heliyon 2023, 9, e17428.
53. Silva, M.; Pereira, J.; Costa, P. Factors influencing the adoption of Intelligent Virtual Assistants in Portuguese banking. J. Digit. Bank. Res. 2023, 12, 45–61.
54. Bhanu, P.; Vivek, S. Exploring users’ adoption intentions of intelligent virtual assistants in financial services: An anthropomorphic perspectives and socio-psychological perspectives. Comput. Hum. Behav. 2023, 146, 107912.
55. Foulds, S. How Virtual Assistants Take Mobile Banking Apps to the Next Level; The Financial Brand: Gig Harbor, WA, USA, 2023.
56. James, C. The CX Benefits of Implementing an Intelligent Virtual Agent (IVA); CMSWire: San Francisco, CA, USA, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).