Abstract

Regularization techniques are critical in the development of machine learning models. Complex models, such as neural networks, are particularly prone to overfitting, that is, to fitting the training data well but performing poorly on unseen data. L1 regularization is an effective way to enforce sparsity; it does not lead to an NP-hard problem, but the non-differentiability of the 1-norm at the origin prevents the direct use of gradient methods. The L1 regularization problem can nonetheless be solved efficiently, with good convergence speed, by proximal methods. In this paper, we propose a batch gradient learning algorithm with smoothing L1 regularization (BGSL1) for learning and pruning a feedforward neural network with hidden nodes. To this end, we propose a smoothing (differentiable) function that removes the non-differentiability of L1 regularization at the origin, speeds up convergence, improves the network's ability to prune its structure, and builds a stronger mapping. Under this setting, weak and strong convergence theorems are established. We used N-dimensional parity problems and function approximation problems in our experiments. Preliminary findings indicate that BGSL1 converges faster and has good generalization ability compared with BGL1/2, BGL1, BGL2, and BGSL1/2. We also show that the error function decreases monotonically and that the norm of the gradient of the error function approaches zero, thereby validating the theoretical findings and the superiority of the proposed technique.

1. Introduction

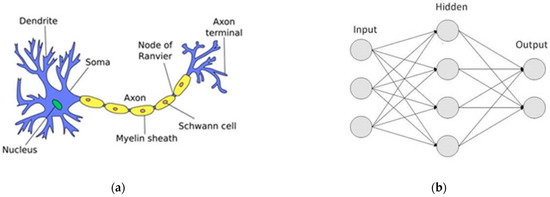

Artificial neural networks (ANNs) are computational networks inspired by biological neural networks, which form the structure of the human brain. Similar to the neurons in a human brain, ANNs have neurons that are interconnected through a variety of layers; these neurons are known as nodes. The human brain is made up of about 86 billion nerve cells known as neurons, each linked to thousands of other cells by axons. Dendrites receive stimuli from the external environment as well as inputs from sensory organs. These inputs generate electric impulses that travel quickly through the neural network. A neuron can then either forward the message to another neuron to address the issue or not forward it at all. ANNs are made up of multiple nodes that mimic the biological neurons of the human brain (see Figure 1). A feedforward neural network (FFNN) is the first and simplest type of ANN, and it now contributes significantly and directly to our daily lives in a variety of fields, such as educational tools, health-condition assessment, economics, sports, and chemical engineering [1,2,3,4,5].

Figure 1.

(a) The biological neural networks (b) The artificial neural network structure.

The most widely used learning strategy in FFNNs is the backpropagation method [6]. There are two methods for training the weights: batch and online [7,8]. In the batch method, the error is accumulated during an epoch and the weights are modified only after the entire training set has been presented, whereas in the online method, the weights are modified after each training pattern is presented to the network.
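To make the difference between the two update rules concrete, here is a minimal sketch on a hypothetical one-weight linear model y = w·x with squared error (chosen only for brevity; it is not the FFNN of Section 2). The helper names `batch_epoch` and `online_epoch` are illustrative: the first accumulates the gradient over the whole training set and updates once per epoch, the second updates after every pattern.

```python
# Minimal sketch: batch vs. online gradient updates on a hypothetical
# one-weight linear model y = w * x with squared error 1/2 * (t - y)^2.

def batch_epoch(w, data, eta):
    """Batch rule: accumulate the gradient over all patterns, then update once."""
    grad = sum(-(t - w * x) * x for x, t in data)
    return w - eta * grad


def online_epoch(w, data, eta):
    """Online rule: update the weight immediately after each pattern."""
    for x, t in data:
        w = w - eta * (-(t - w * x) * x)
    return w


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated by t = 2 * x
w_batch = w_online = 0.0
for epoch in range(50):
    w_batch = batch_epoch(w_batch, data, eta=0.05)
    w_online = online_epoch(w_online, data, eta=0.05)
print(w_batch, w_online)  # both approach the true slope 2.0
```

With a small enough learning rate both rules reach the same minimizer here; they differ in how often the weights change within an epoch.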

Overfitting in mathematical modeling is the creation of an analysis that is precisely tailored to a specific set of data and, thus, may fail to fit additional data or predict future findings accurately [9,10]. An overfitted model is one that includes more parameters than can be justified by the data [11]. Several techniques, such as cross-validation [12], early stopping [13], dropout [14], regularization [15], big data analysis [16], or Bayesian regularization [17], are used to reduce the amount of overfitting.

Regularization methods are frequently used in the FFNN training procedure and have been shown to be effective in improving generalization performance and decreasing the magnitude of network weights [18,19,20]. A term proportional to the magnitude of the weight vector is one of the simplest regularization penalty terms added to the standard error function [21,22]. Many successful applications have used various regularization terms, such as weight decay [23], weight elimination [24], elastic net regularization [25], matrix regularization [26], and nuclear norm regularization [27].

Several regularization terms are made up of the weights, resulting in the following new error function:

where is the standard error function depending on the weights , is the regularization parameter, and is the q-norm is given by

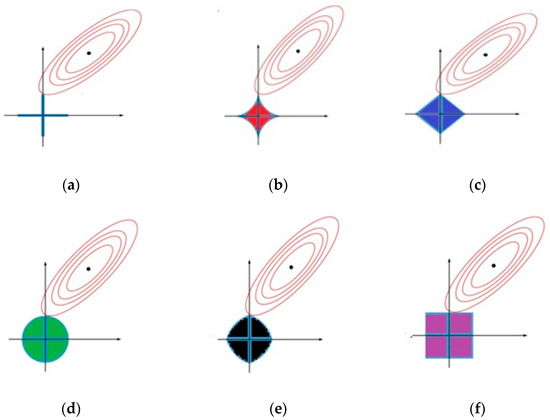

where (). The gradient descent algorithm is a popular method for solving this type of problem (1). The graphics of the , , , , elastic net, and regularizers in Figure 2 show the sparsity. The sparsity solution, as shown in Figure 2, is the first point at which the contours touch the constraint region, and this will coincide with a corner corresponding to a zero coefficient. It is obvious that the regularization solution occurs at a corner with a higher possibility, implying that it is sparser than the others. The goal of network training is to find so that . The weight vectors’ corresponding iteration formula is
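As a small numerical illustration of the penalty $\|W\|_q^q$ and of why small q favors sparse solutions, the sketch below evaluates the penalty for several q. The helper names `lq_penalty` and `lq_norm` are hypothetical; the comparison uses a sparse and a dense vector with the same 2-norm.

```python
import math


def lq_penalty(weights, q):
    """Return sum_k |W_k|^q (that is, ||W||_q^q); q = 0 counts nonzero weights."""
    if q == 0:
        return sum(1 for w in weights if w != 0.0)
    return sum(abs(w) ** q for w in weights)


def lq_norm(weights, q):
    """Return the q-norm ||W||_q = (sum_k |W_k|^q)^(1/q) for q > 0."""
    return lq_penalty(weights, q) ** (1.0 / q)


w = [0.0, 0.5, -2.0, 0.0, 1.0]
for q in (0, 0.5, 1, 2):
    print(f"q={q}: penalty={lq_penalty(w, q):.4f}")

# A sparse and a dense vector with the same 2-norm: smaller q penalizes
# the dense vector more, which is why small q favors sparse solutions.
sparse = [1.0, 0.0]
dense = [1.0 / math.sqrt(2.0), 1.0 / math.sqrt(2.0)]
for q in (0.5, 1, 2):
    print(q, lq_penalty(sparse, q), lq_penalty(dense, q))
```

For q = 2 both vectors receive the same penalty, while for q = 1 and q = 0.5 the dense vector is penalized more heavily.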

L0 regularization has a wide range of applications in sparse optimization [28]. Because L0 regularization leads to an NP-hard problem, optimization algorithms such as the gradient method cannot be applied directly [29]. To address this issue, ref. [30] proposes smoothing L0 regularization with the gradient method for training FFNNs. Based on the L1 regularization concept, Lasso regression was proposed to obtain sparse solutions and thereby reduce the complexity of the mathematical model [31]. Lasso quickly evolved into a wide range of models due to its outstanding performance. To achieve maximally sparse networks with minimal performance degradation, neural networks with smoothed Lasso regularization were used [32]. Due to its oracle properties, sparsity, and unbiasedness, the L1/2 regularizer has been widely used in various studies [33]. A novel method for forcing neural network weights to become sparser was developed by applying L1/2 regularization to the error function [34]. L2 regularization is one of the most common types of regularization, since the 2-norm is differentiable and learning can proceed with a gradient method [35,36,37,38]; with L2 regularization, the weights remain bounded [37,38]. As a result, L2 regularization is useful for dealing with overfitting problems.

Figure 2.

The sparsity property of different regularization (a) regularization, (b) regularization, (c) regularization, (d) regularization, (e) elastic net regularization, (f) regularization.

The batch update rule, which updates all weights once after each pass through the whole training set, has become the most prevalent. Therefore, in this article, we concentrate on the gradient method with a batch update rule and smoothing L1 regularization for FFNNs. We first show that if Propositions 1–3 are satisfied, the error sequence decreases monotonically and the algorithm is weakly convergent during training. Secondly, if the set of stationary points of the error function is finite (Proposition 4), the weakly convergent algorithm is also strongly convergent. Furthermore, numerical experiments demonstrate that the proposed algorithm eliminates oscillation and learns more efficiently than the standard L1/2, L1, and L2 regularization methods, and even the smoothing L1/2 regularization method.

The rest of this paper is organized as follows. Section 2 describes the FFNN, the batch gradient method with L1 regularization (BGL1), and the batch gradient method with smoothing L1 regularization (BGSL1). Section 3 (Materials and Methods) presents the propositions and the convergence theorem. Section 4 reports numerical simulation results that support the claims made in Section 3. Section 5 discusses the results, and Section 6 provides a brief conclusion. The proof of the convergence theorem is provided in Appendix A.

2. Network Structure and Learning Algorithm Methodology

2.1. Network Structure

We consider a three-layer neural network trained by error back-propagation, consisting of $p$ input nodes, $q$ hidden nodes, and 1 output node. Let $g:\mathbb{R}\to\mathbb{R}$ be the transfer function of the hidden and output layers; it is typically, but not necessarily, a sigmoid function. Let $w_0=(w_{10},w_{20},\dots,w_{q0})^T\in\mathbb{R}^q$ be the weight vector between the hidden layer and the output node, and let $w_i=(w_{i1},w_{i2},\dots,w_{ip})^T\in\mathbb{R}^p$ be the weight vector between the input layer and the hidden node $i$ ($i=1,\dots,q$). For convenience, we write all the weight parameters in the compact form $W=(w_0^T,w_1^T,\dots,w_q^T)^T\in\mathbb{R}^{q(p+1)}$ and define the matrix $V=(w_1,w_2,\dots,w_q)^T\in\mathbb{R}^{q\times p}$. Furthermore, we define the vector function

$$G(z)=\big(g(z_1),g(z_2),\dots,g(z_q)\big)^T, \qquad (4)$$

where $z=(z_1,z_2,\dots,z_q)^T\in\mathbb{R}^q$. For any given input $\xi\in\mathbb{R}^p$, the output of the hidden neurons is $G(V\xi)$, and the final output of the network is

$$y=g\big(w_0\cdot G(V\xi)\big), \qquad (5)$$

where $w_0\cdot G(V\xi)$ represents the inner product between the vectors $w_0$ and $G(V\xi)$.
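The forward pass of this p-q-1 network can be sketched in a few lines of plain Python. This is a minimal sketch assuming the logistic sigmoid for g and no explicit bias terms (a bias can be emulated by appending a constant 1 to each input); `forward` and `sigmoid` are hypothetical helper names, `V` is a list of q rows (the input-to-hidden weight vectors), and `w0` holds the q hidden-to-output weights.

```python
import math


def sigmoid(t):
    """Logistic sigmoid, assumed here as the transfer function g."""
    return 1.0 / (1.0 + math.exp(-t))


def forward(w0, V, xi, g=sigmoid):
    """Return (y, hidden) for a p-q-1 network without explicit bias terms.

    V is a list of q rows (the input-to-hidden weight vectors w_i), w0 holds the
    q hidden-to-output weights, and xi is one input pattern of length p.
    """
    hidden = [g(sum(v * x for v, x in zip(row, xi))) for row in V]  # G(V xi)
    y = g(sum(a * h for a, h in zip(w0, hidden)))                   # g(w0 . G(V xi))
    return y, hidden


y, hidden = forward([1.0, -1.0], [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], [1.0, 0.0, 1.0])
print(y, hidden)  # zero input weights give hidden = [0.5, 0.5] and y = 0.5
```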

2.2. Modified Error Function with Smoothing Regularization (BGS)

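Let the training set be $\{\xi^j,O^j\}_{j=1}^{J}\subset\mathbb{R}^p\times\mathbb{R}$, where $O^j$ is the desired (ideal) output for the input $\xi^j$. With the notation of Section 2.1, the standard error function without a regularization term is

$$\tilde{E}(W)=\frac{1}{2}\sum_{j=1}^{J}\Big(O^j-g\big(w_0\cdot G(V\xi^j)\big)\Big)^2=\sum_{j=1}^{J}g_j\big(w_0\cdot G(V\xi^j)\big), \qquad (6)$$

where $g_j(t)=\frac{1}{2}\big(O^j-g(t)\big)^2$, $t\in\mathbb{R}$. Furthermore, the gradient of the error function is given by

$$\nabla_{w_0}\tilde{E}(W)=\sum_{j=1}^{J}g_j'\big(w_0\cdot G(V\xi^j)\big)\,G(V\xi^j),\qquad \nabla_{w_i}\tilde{E}(W)=\sum_{j=1}^{J}g_j'\big(w_0\cdot G(V\xi^j)\big)\,w_{i0}\,g'(w_i\cdot\xi^j)\,\xi^j,\quad i=1,\dots,q. \qquad (7)$$

The modified error function with L1 regularization is given by

$$\hat{E}(W)=\tilde{E}(W)+\lambda\sum_{i=1}^{q}\Big(|w_{i0}|+\sum_{k=1}^{p}|w_{ik}|\Big), \qquad (8)$$

where $|\cdot|$ denotes the absolute value of a weight. The purpose of network training is to find $W^*$ such that

$$\hat{E}(W^*)=\min_{W}\hat{E}(W). \qquad (9)$$

The gradient method is a popular way to solve this type of problem. However, Equation (8) involves the absolute value, which is not differentiable at the origin, so the gradient method cannot be applied directly to minimize it. To overcome this, we replace the absolute value of the weights in (8) by a continuous and differentiable smoothing function $f$. The error function with smoothing L1 regularization then becomes

$$E(W)=\tilde{E}(W)+\lambda\sum_{i=1}^{q}\Big(f(w_{i0})+\sum_{k=1}^{p}f(w_{ik})\Big), \qquad (10)$$

where $f$ is any continuous and differentiable function approximating $|x|$. Specifically, we use a piecewise polynomial function for $f$, in which $a>0$ is a suitable constant. Then the gradient of the error function (10) with respect to $w_0$ is given by

$$\nabla_{w_0}E(W)=\sum_{j=1}^{J}g_j'\big(w_0\cdot G(V\xi^j)\big)\,G(V\xi^j)+\lambda\big(f'(w_{10}),\dots,f'(w_{q0})\big)^T, \qquad (12)$$

and the gradient of the error function (10) with respect to $w_i$ is given by

$$\nabla_{w_i}E(W)=\sum_{j=1}^{J}g_j'\big(w_0\cdot G(V\xi^j)\big)\,w_{i0}\,g'(w_i\cdot\xi^j)\,\xi^j+\lambda\big(f'(w_{i1}),\dots,f'(w_{ip})\big)^T,\quad i=1,\dots,q. \qquad (13)$$

Starting from an arbitrary initial value $W^0$, the weights are updated iteratively by

$$w_0^{m+1}=w_0^m+\Delta w_0^m,\qquad w_i^{m+1}=w_i^m+\Delta w_i^m,\quad i=1,\dots,q,\ m=0,1,2,\dots \qquad (14)$$

and

$$\Delta w_0^m=-\eta\nabla_{w_0}E(W^m),\qquad \Delta w_i^m=-\eta\nabla_{w_i}E(W^m), \qquad (15)$$

where $\eta>0$ is the learning rate and $\lambda>0$ is the regularization parameter.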
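The gradient step described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's implementation: the smoothing function `f_smooth` is an assumed quartic that equals |x| for |x| ≥ a and is twice continuously differentiable at ±a (a common choice in the smoothing-regularization literature; the paper's exact polynomial may differ), g is assumed to be the logistic sigmoid, and the penalty is applied to every weight. All function names are hypothetical.

```python
import math


def f_smooth(x, a):
    """Assumed smoothing of |x|: |x| outside (-a, a), a quartic polynomial inside."""
    if abs(x) >= a:
        return abs(x)
    return -x**4 / (8 * a**3) + 3 * x**2 / (4 * a) + 3 * a / 8


def f_smooth_grad(x, a):
    """Derivative f'(x); it is continuous at x = +-a and f'(0) = 0."""
    if abs(x) >= a:
        return 1.0 if x > 0 else -1.0
    return -x**3 / (2 * a**3) + 3 * x / (2 * a)


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def bgsl1_step(w0, V, samples, eta, lam, a):
    """One batch step W <- W - eta * grad E(W), E = squared error + lam * sum f(weights)."""
    q, p = len(V), len(V[0])
    # Penalty parts of the gradients: lam * f'(w) for every weight.
    g_w0 = [lam * f_smooth_grad(w, a) for w in w0]
    g_V = [[lam * f_smooth_grad(V[i][k], a) for k in range(p)] for i in range(q)]
    for xi, target in samples:
        h = [sigmoid(sum(V[i][k] * xi[k] for k in range(p))) for i in range(q)]  # G(V xi)
        y = sigmoid(sum(w0[i] * h[i] for i in range(q)))
        d = -(target - y) * y * (1.0 - y)  # g_j'(w0 . G(V xi)) for the logistic g
        for i in range(q):
            g_w0[i] += d * h[i]
            s = d * w0[i] * h[i] * (1.0 - h[i])  # chain rule through hidden node i
            for k in range(p):
                g_V[i][k] += s * xi[k]
    new_w0 = [w0[i] - eta * g_w0[i] for i in range(q)]
    new_V = [[V[i][k] - eta * g_V[i][k] for k in range(p)] for i in range(q)]
    return new_w0, new_V
```

Because every term is differentiable, the step can be checked cheaply against a finite-difference estimate of the smoothed error function.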

3. Materials and Methods

The convergence theorem below is proved under the following propositions.

Proposition 1.

and are uniformly bounded for .

Proposition 2.

The sequence $\{w_0^m\}$ ($m=0,1,2,\dots$) of output-layer weights is uniformly bounded.

Proposition 3.

and are chosen to satisfy: , where

Proposition 4.

There exists a closed bounded region $\Phi$ such that $\{W^m\}\subset\Phi$, and the set $\Phi_0=\{W\in\Phi:\nabla E(W)=0\}$ contains only finitely many points.

Remark 1.

Both the hidden layer and the output layer use the same transfer function $g$. Propositions 1 and 2 ensure uniform boundedness, which makes Proposition 4 reasonable. Equation (10) and Proposition 1 provide the uniform bounds needed for Proposition 3. Regarding Proposition 2, we make the following observation. This paper focuses mainly on simulation problems in which $g$ is a sigmoid function satisfying Proposition 1. Typically, such problems require outputs of 0 and 1, or −1 and 1. To control the magnitude of the weights $w_0$, one can change the desired outputs to 0 + α and 1 − α, or −1 + α and 1 − α, respectively, where α > 0 is a small constant. A more important reason for doing so is to prevent overtraining, cf. [39]. For sigmoid transfer functions, keeping the desired outputs away from the saturation values in this way keeps the output-layer weights bounded.

Theorem 1.

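Let the weight sequence $\{W^m\}$ be generated by the iteration algorithm (14) from an arbitrary initial value $W^0$, and let the error function $E(W)$ be defined by (10). If Propositions 1–3 are valid, then we have

- I. $E(W^{m+1})\le E(W^m)$, $m=0,1,2,\dots$;
- II. There exists $E^*\ge 0$ such that $\lim_{m\to\infty}E(W^m)=E^*$;
- III. $\lim_{m\to\infty}\|\nabla_{w_0}E(W^m)\|=0$;
- IV. $\lim_{m\to\infty}\|\nabla_{w_i}E(W^m)\|=0$, $i=1,\dots,q$.

Further, if Proposition 4 is also valid, we have the following strong convergence:

- V. There exists a point $W^*$ such that $\lim_{m\to\infty}W^m=W^*$.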

Note:

Conclusions (I) and (II) show that the error sequence $\{E(W^m)\}$ decreases monotonically and has a limit. Conclusions (III) and (IV) state the weak convergence of $\nabla_{w_0}E(W^m)$ and $\nabla_{w_i}E(W^m)$. Conclusion (V) states the strong convergence of $\{W^m\}$.

To decide whether a hidden neuron survives or is removed after training, we use a simple neuron-selection criterion: the norm of all the outgoing weights of that neuron. There is no standard threshold value in the literature for eliminating redundant weighted connections and redundant neurons from the initially assumed structure of a neural network. In ref. [40], the sparsity of the learning algorithm was measured by the number of weights with absolute values ≤ 0.0099 and ≤ 0.01, respectively. In this study, we arbitrarily chose 0.00099 as the threshold value, which is smaller than the thresholds used in the literature. This procedure is repeated ten times. Algorithm 1 describes the experimental procedure.
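The pruning criterion can be sketched as follows, using the threshold 0.00099 from the text. This is a minimal sketch with hypothetical helper names; it assumes a neuron survives when the norm of its outgoing weights is strictly above the threshold, and for the single-output network of Section 2 each hidden neuron has exactly one outgoing weight.

```python
import math

THRESHOLD = 0.00099  # threshold value chosen in the text


def outgoing_norm(weights):
    """Euclidean norm of one hidden neuron's outgoing weights."""
    return math.sqrt(sum(w * w for w in weights))


def surviving_neurons(outgoing, threshold=THRESHOLD):
    """Indices of hidden neurons kept after training (norm strictly above threshold)."""
    return [i for i, ws in enumerate(outgoing) if outgoing_norm(ws) > threshold]


def count_small_weights(weights, threshold=THRESHOLD):
    """Sparsity measure: the number of weights with |w| <= threshold."""
    return sum(1 for w in weights if abs(w) <= threshold)


# Hypothetical trained output weights w_{i0} of a single-output network,
# so each hidden neuron has exactly one outgoing weight.
w0 = [0.8, 0.0005, -1.2, 0.0]
outgoing = [[w] for w in w0]
print(surviving_neurons(outgoing))   # neurons 1 and 3 would be pruned
print(count_small_weights(w0))
```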

| Algorithm 1 | The learning algorithm |
|---|---|
| Input | Input the dimension $p$, the number of hidden nodes $q$, the maximum iteration number $M$, the learning rate $\eta$, the regularization parameter $\lambda$, and the training set $\{\xi^j,O^j\}_{j=1}^{J}$. |
| Initialization | Randomly initialize the weight vectors $w_0^0$ and $w_i^0$ ($i=1,\dots,q$). |
| Training | For $m=0,1,\dots,M-1$ do: compute the error function by Equation (10); compute the gradients by Equation (15); update the weights $w_0^m$ and $w_i^m$ by Equation (14). End for. |
| Output | Output the final weight vectors $w_0^M$ and $w_i^M$. |
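Algorithm 1 can be sketched end to end in plain Python. This is a minimal, self-contained sketch under the same assumptions as before (logistic sigmoid for g, an assumed quartic smoothing of |x| with constant `a`, penalty on every weight); the uniform initialization in [−0.5, 0.5], the constant bias input, and the XOR usage example are assumptions for illustration, and `train_bgsl1` is a hypothetical name. The returned history records E(W^m) before each batch update.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def f_smooth(x, a):
    """Assumed smoothing of |x| (quartic inside (-a, a), |x| outside)."""
    if abs(x) >= a:
        return abs(x)
    return -x**4 / (8 * a**3) + 3 * x**2 / (4 * a) + 3 * a / 8


def f_grad(x, a):
    """Derivative of f_smooth."""
    if abs(x) >= a:
        return 1.0 if x > 0 else -1.0
    return -x**3 / (2 * a**3) + 3 * x / (2 * a)


def train_bgsl1(samples, q, max_iter, eta, lam, a=0.05, seed=0):
    """Sketch of Algorithm 1; returns final (w0, V) and the history of E(W^m)."""
    p = len(samples[0][0])
    rng = random.Random(seed)
    # Initialization: random weights (uniform in [-0.5, 0.5] is an assumption here).
    w0 = [rng.uniform(-0.5, 0.5) for _ in range(q)]
    V = [[rng.uniform(-0.5, 0.5) for _ in range(p)] for _ in range(q)]
    history = []
    for _ in range(max_iter):
        # Smoothing L1 penalty value and the penalty part of the gradients.
        err = lam * (sum(f_smooth(w, a) for w in w0)
                     + sum(f_smooth(v, a) for row in V for v in row))
        g_w0 = [lam * f_grad(w, a) for w in w0]
        g_V = [[lam * f_grad(v, a) for v in row] for row in V]
        for xi, target in samples:
            h = [sigmoid(sum(v * x for v, x in zip(row, xi))) for row in V]
            y = sigmoid(sum(w * hi for w, hi in zip(w0, h)))
            err += 0.5 * (target - y) ** 2
            d = -(target - y) * y * (1.0 - y)
            for i in range(q):
                g_w0[i] += d * h[i]
                s = d * w0[i] * h[i] * (1.0 - h[i])
                for k in range(p):
                    g_V[i][k] += s * xi[k]
        history.append(err)
        # Batch update of all weights at once.
        w0 = [w - eta * g for w, g in zip(w0, g_w0)]
        V = [[v - eta * g for v, g in zip(row, grow)] for row, grow in zip(V, g_V)]
    return w0, V, history


# XOR (2-bit parity) with a constant third input acting as a bias (an assumption).
xor = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
       ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 0.0)]
w0, V, history = train_bgsl1(xor, q=4, max_iter=2000, eta=0.5, lam=1e-4)
print(history[0], history[-1])
```

Plotting `history` gives the error curve that Theorem 1 predicts to be non-increasing for a sufficiently small learning rate.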

4. Experimental Results

The simulation results for evaluating the performance of the proposed BGSL1 algorithm are presented in this section. We compare the performance of BGSL1 with that of four common regularization algorithms: the batch gradient method with L1/2 regularization (BGL1/2), the batch gradient method with smoothing L1/2 regularization (BGSL1/2), the batch gradient method with L1 regularization (BGL1), and the batch gradient method with L2 regularization (BGL2). Numerical experiments on the N-dimensional parity and function approximation problems support our theoretical conclusions.

4.1. N-Dimensional Parity Problems

The N-dimensional parity problem is another popular benchmark task that has been widely studied. If the input pattern contains an odd number of ones, the desired output is one; otherwise, it is zero. An N-M-1 architecture (N inputs, M hidden nodes, and 1 output) is employed to solve the N-bit parity problem. The well-known XOR problem is simply the 2-bit parity problem [41]. Here, the 3-bit and 6-bit parity problems are used as examples to test the performance of BGSL1. The network has three layers: N input nodes, M hidden nodes, and one output unit.
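The N-bit parity training set described above can be generated as follows; this is a minimal sketch with a hypothetical helper name that enumerates all 2^N binary patterns and assigns target 1 to patterns with an odd number of ones.

```python
from itertools import product


def parity_dataset(n):
    """All 2^n binary patterns; target 1 for an odd number of ones, else 0."""
    return [(list(bits), sum(bits) % 2) for bits in product((0, 1), repeat=n)]


for pattern, target in parity_dataset(3):
    print(pattern, target)
```

The 3-bit and 6-bit problems therefore have 8 and 64 training patterns, respectively, and `parity_dataset(2)` reproduces the XOR truth table.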

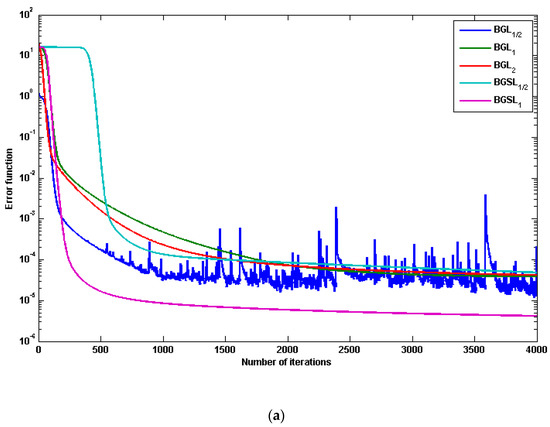

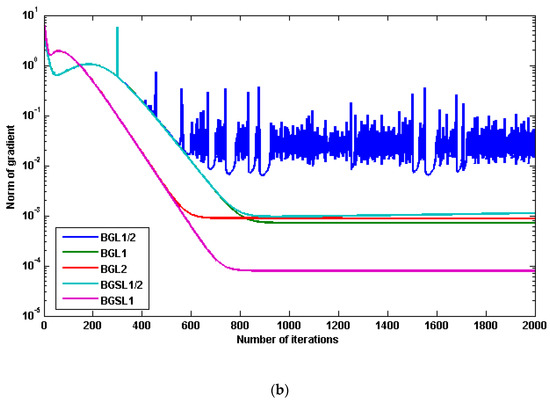

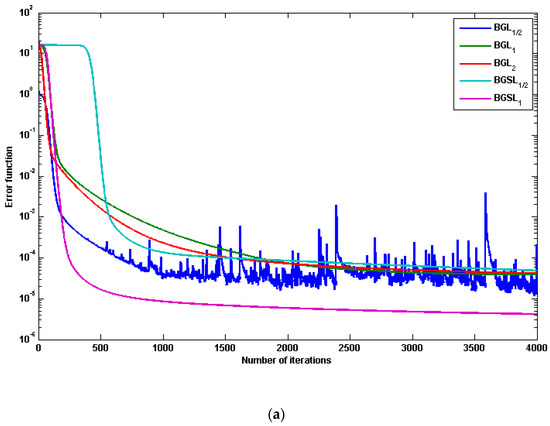

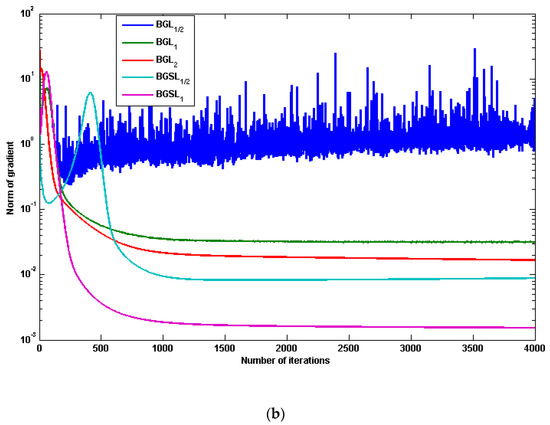

Table 1 shows the parameter settings for the corresponding networks, where LR and RP abbreviate the learning rate and the regularization parameter, respectively. Figure 3 and Figure 4 show the performance of BGL1/2, BGL1, BGL2, BGSL1/2, and BGSL1 on the 3-bit and 6-bit parity problems, respectively. As predicted by Theorem 1, the error function decreases monotonically (Figure 3a and Figure 4a), and the norm of the gradient of the error function approaches zero (Figure 3b and Figure 4b). According to the comparison results, BGSL1 demonstrated superior learning ability and faster convergence, which agrees with our theoretical analysis. Table 2 displays the average error and running time over ten experiments, indicating that BGSL1 not only converges faster but also has better generalization ability than the other methods.

Table 1.

The learning parameters for parity problems.

Figure 3.

Performance of the five algorithms on the 3-bit parity problem: (a) curve of the error function; (b) curve of the norm of the gradient.

Figure 4.

Performance of the five algorithms on the 6-bit parity problem: (a) curve of the error function; (b) curve of the norm of the gradient.

Table 2.

Numerical results for parity problems.

4.2. Function Approximation Problem

A nonlinear function has been devised to compare the approximation capabilities of the above algorithms:

where the input lies in [−4, 4] and 101 evenly spaced training samples are chosen from this interval. The initial weights of the network are chosen at random in [−0.5, 0.5]; training starts from this initial point and moves toward a minimum of the error along the negative gradient of the error function. The training parameters are a learning rate of η = 0.02 and a regularization parameter of λ = 0.0005. Training stops after 1000 training cycles.
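The experimental setup above can be sketched as follows. The target function of the text is not reproduced here, so `math.sin` is used only as a placeholder target; the constant 1.0 input acting as a bias and the choice of 10 hidden nodes are likewise hypothetical. Only the grid of 101 evenly spaced points on [−4, 4], the uniform initialization in [−0.5, 0.5], η = 0.02, λ = 0.0005, and the 1000 training cycles come from the text.

```python
import math
import random


def make_grid(n=101, lo=-4.0, hi=4.0):
    """n evenly spaced training inputs on [lo, hi], both endpoints included."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def make_samples(target, xs):
    """Pair each input with target(x); the constant 1.0 input acts as a bias (assumption)."""
    return [([x, 1.0], target(x)) for x in xs]


def init_weights(p, q, low=-0.5, high=0.5, seed=0):
    """Random initial weights w0 (length q) and V (q x p), uniform on [low, high]."""
    rng = random.Random(seed)
    w0 = [rng.uniform(low, high) for _ in range(q)]
    V = [[rng.uniform(low, high) for _ in range(p)] for _ in range(q)]
    return w0, V


ETA, LAM, MAX_ITER = 0.02, 0.0005, 1000  # settings reported in the text
xs = make_grid()
samples = make_samples(math.sin, xs)  # math.sin is only a placeholder target
w0, V = init_weights(p=2, q=10)       # q = 10 hidden nodes is a hypothetical choice
```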

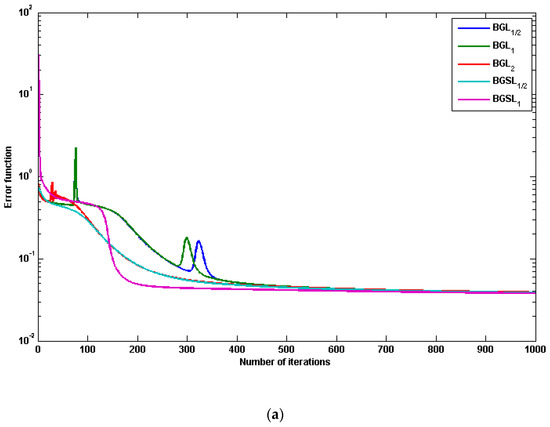

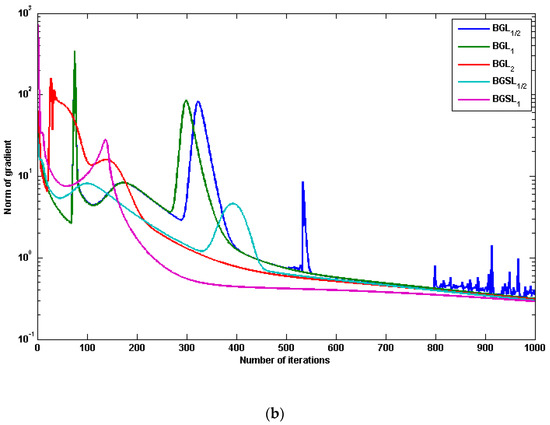

The average error, norm of the gradient, and running time over 10 experiments are presented in Table 3. Figure 5a,b show that BGSL1 has a better mapping capability than BGSL1/2, BGL1/2, BGL1, and BGL2, with the error decreasing monotonically as learning proceeds and the norm of its gradient approaching zero. The preliminary results in Table 3 are very encouraging and indicate that the speedup and generalization ability of BGSL1 are better than those of BGSL1/2, BGL1/2, BGL1, and BGL2.

Table 3.

Numerical results for function approximation problem.

Figure 5.

Performance of the five algorithms on the function approximation problem: (a) curve of the error function; (b) curve of the norm of the gradient.

5. Discussion

Table 2 and Table 3 compare the average error and the average norm of the gradient of the five methods over the 10 trials. Table 2 shows the results for the N-dimensional parity problems, and Table 3 shows the results for the function approximation problem; within each table, all methods use the same parameters.

The comparison in Table 2 and Table 3 shows that BGSL1 is more efficient and has better sparsity-promoting properties than BGL1/2, BGL1, BGL2, and even BGSL1/2. In addition, Table 2 and Table 3 show that the proposed algorithm is the fastest in all the numerical experiments. Smoothing L1 regularization yields sparser solutions than traditional L1 regularization. Recently, ref. [34] also showed that BGSL1/2 achieves better sparsity than BGL1/2. The results of ref. [33] show that L1/2 regularization has the following properties: unbiasedness, sparsity, and oracle properties.

All three sets of numerical results for the five methods were obtained with single-hidden-layer FFNNs. The proposed sparsification technique for FFNNs can, in principle, be extended to networks with any number of hidden layers.

6. Conclusions

Sparsity-inducing regularization is thought to be an excellent pruning method for neural networks. However, L0 regularization leads to an NP-hard problem, and L1 regularization is non-differentiable at the origin. In this paper, we proposed BGSL1, a batch gradient learning algorithm with smoothing L1 regularization for training and pruning feedforward neural networks, in which the L1 regularizer is approximated by a smoothing function. We established weak and strong convergence results under this setting, and the computational results validated the theoretical findings. The proposed algorithm has the potential to be extended to train other neural network architectures. In the future, we will study the online gradient learning algorithm with a smoothing L1 regularization term.

Funding

The researcher would like to thank the Deanship of Scientific Research, Qassim University for funding the publication of this project.

Data Availability Statement

All data has been presented in this paper.

Acknowledgments

The researcher would like to thank the referees for their careful reading and helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

To prove the strong convergence, we use the following result, which is essentially Lemma 3 in [38]; its proof is therefore omitted.

Lemma A1.

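Let $F:\Phi\subset\mathbb{R}^n\to\mathbb{R}$ be continuous on a bounded closed region $\Phi$, and let $\Phi_0=\{z\in\Phi:F(z)=0\}$. Suppose that the projection of $\Phi_0$ on each coordinate axis does not contain any interior point. Let the sequence $\{z^m\}\subset\Phi$ satisfy:

- (a) $\lim_{m\to\infty}F(z^m)=0$;
- (b) $\lim_{m\to\infty}\|z^{m+1}-z^m\|=0$.

Then, there exists a unique $z^*\in\Phi_0$ such that $\lim_{m\to\infty}z^m=z^*$.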

The proof of Theorem 1 is divided into the four parts given below. We use the following notations for convenience:

From error function (10), we can write

and

Proof to (I) of Theorem 1.

Using (A2), (A3), and the Taylor expansion, we have

where the two intermediate points lie between the corresponding arguments of the expansion. From (16), Proposition 1, and the Lagrange mean value theorem, we have

and

where the intermediate point lies between the corresponding arguments. According to the Cauchy–Schwarz inequality, Proposition 1, (16), (A5), and (A6), we have

where

and . In the same way, we have

where . It follows from Propositions 1 and 2 that

where .

Let .

Combining (A4)–(A9), we easily obtain the required estimates, and we have

Hence, Conclusion (I) of Theorem 1 holds whenever Proposition 3 is valid. □

Proof to (II) of Theorem 1.

Since the nonnegative sequence $\{E(W^m)\}$ is monotonically decreasing and bounded below, there exists $E^*\ge 0$ such that

$$\lim_{m\to\infty}E(W^m)=E^*.$$

So conclusion (II) is proved. □

Proof to (III) of Theorem 1.

By Proposition 1 and (A10), we have

Thus, we can write

when for any and set then we have

Combining this with (12), (14), and (A1), we have

□

Proof to (V) of Theorem 1.

We now prove the strong convergence. Noting Conclusions (III) and (IV), we take $F(W)=\|\nabla E(W)\|$ and $z^m=W^m$ in Lemma A1. This, together with the finiteness of $\Phi_0$ (cf. Proposition 4), (A13), and Lemma A1, leads directly to Conclusion (V). This completes the proof. □

References

- Deperlioglu, O.; Kose, U. An educational tool for artificial neural networks. Comput. Electr. Eng. 2011, 37, 392–402.

- Abu-Elanien, A.E.; Salama, M.M.A.; Ibrahim, M. Determination of transformer health condition using artificial neural networks. In Proceedings of the 2011 International Symposium on Innovations in Intelligent Systems and Applications, Istanbul, Turkey, 15–18 June 2011; pp. 1–5.

- Huang, W.; Lai, K.K.; Nakamori, Y.; Wang, S.; Yu, L. Neural networks in finance and economics forecasting. Int. J. Inf. Technol. Decis. Mak. 2007, 6, 113–140.

- Papic, C.; Sanders, R.H.; Naemi, R.; Elipot, M.; Andersen, J. Improving data acquisition speed and accuracy in sport using neural networks. J. Sport. Sci. 2021, 39, 513–522.

- Pirdashti, M.; Curteanu, S.; Kamangar, M.H.; Hassim, M.H.; Khatami, M.A. Artificial neural networks: Applications in chemical engineering. Rev. Chem. Eng. 2013, 29, 205–239.

- Li, J.; Cheng, J.H.; Shi, J.Y.; Huang, F. Brief introduction of back propagation (BP) neural network algorithm and its improvement. In Advances in Computer Science and Information Engineering; Springer: Berlin/Heidelberg, Germany, 2012; pp. 553–558.

- Hoi, S.C.; Sahoo, D.; Lu, J.; Zhao, P. Online learning: A comprehensive survey. Neurocomputing 2021, 459, 249–289.

- Fukumizu, K. Effect of batch learning in multilayer neural networks. Gen 1998, 1, 1E-03.

- Hawkins, D.M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 2004, 44, 1–12.

- Dietterich, T. Overfitting and undercomputing in machine learning. ACM Comput. Surv. 1995, 27, 326–327.

- Everitt, B.S.; Skrondal, A. The Cambridge Dictionary of Statistics; Cambridge University Press: Cambridge, UK, 2010.

- Moore, A.W. Cross-Validation for Detecting and Preventing Overfitting; School of Computer Science, Carnegie Mellon University: Pittsburgh, PA, USA, 2001.

- Yao, Y.; Rosasco, L.; Caponnetto, A. On early stopping in gradient descent learning. Constr. Approx. 2007, 26, 289–315.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Santos, C.F.G.D.; Papa, J.P. Avoiding overfitting: A survey on regularization methods for convolutional neural networks. ACM Comput. Surv. 2022, 54, 1–25.

- Waseem, M.; Lin, Z.; Yang, L. Data-driven load forecasting of air conditioners for demand response using levenberg–marquardt algorithm-based ANN. Big Data Cogn. Comput. 2019, 3, 36.

- Waseem, M.; Lin, Z.; Liu, S.; Jinai, Z.; Rizwan, M.; Sajjad, I.A. Optimal BRA based electric demand prediction strategy considering instance-based learning of the forecast factors. Int. Trans. Electr. Energy Syst. 2021, 31, e12967.

- Alemu, H.Z.; Wu, W.; Zhao, J. Feedforward neural networks with a hidden layer regularization method. Symmetry 2018, 10, 525.

- Li, F.; Zurada, J.M.; Liu, Y.; Wu, W. Input layer regularization of multilayer feedforward neural networks. IEEE Access 2017, 5, 10979–10985.

- Mohamed, K.S.; Wu, W.; Liu, Y. A modified higher-order feed forward neural network with smoothing regularization. Neural Netw. World 2017, 27, 577–592.

- Reed, R. Pruning algorithms-a survey. IEEE Trans. Neural Netw. 1993, 4, 740–747.

- Setiono, R. A penalty-function approach for pruning feedforward neural networks. Neural Comput. 1997, 9, 185–204.

- Nakamura, K.; Hong, B.W. Adaptive weight decay for deep neural networks. IEEE Access 2019, 7, 118857–118865.

- Bosman, A.; Engelbrecht, A.; Helbig, M. Fitness landscape analysis of weight-elimination neural networks. Neural Process. Lett. 2018, 48, 353–373.

- Rosato, A.; Panella, M.; Andreotti, A.; Mohammed, O.A.; Araneo, R. Two-stage dynamic management in energy communities using a decision system based on elastic net regularization. Appl. Energy 2021, 291, 116852.

- Pan, C.; Ye, X.; Zhou, J.; Sun, Z. Matrix regularization-based method for large-scale inverse problem of force identification. Mech. Syst. Signal Process. 2020, 140, 106698.

- Liang, S.; Yin, M.; Huang, Y.; Dai, X.; Wang, Q. Nuclear norm regularized deep neural network for EEG-based emotion recognition. Front. Psychol. 2022, 13, 924793.

- Candes, E.J.; Tao, T. Decoding by linear programming. IEEE Trans. Inf. Theory 2005, 51, 4203–4215.

- Wang, Y.; Liu, P.; Li, Z.; Sun, T.; Yang, C.; Zheng, Q. Data regularization using Gaussian beams decomposition and sparse norms. J. Inverse Ill Posed Probl. 2013, 21, 1–23.

- Zhang, H.; Tang, Y. Online gradient method with smoothing ℓ0 regularization for feedforward neural networks. Neurocomputing 2017, 224, 1–8.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 267–288.

- Koneru, B.N.G.; Vasudevan, V. Sparse artificial neural networks using a novel smoothed LASSO penalization. IEEE Trans. Circuits Syst. II Express Briefs 2019, 66, 848–852.

- Xu, Z.; Zhang, H.; Wang, Y.; Chang, X.; Liang, Y. L1/2 regularization. Sci. China Inf. Sci. 2010, 53, 1159–1169.

- Wu, W.; Fan, Q.; Zurada, J.M.; Wang, J.; Yang, D.; Liu, Y. Batch gradient method with smoothing L1/2 regularization for training of feedforward neural networks. Neural Netw. 2014, 50, 72–78.

- Liu, Y.; Yang, D.; Zhang, C. Relaxed conditions for convergence analysis of online back-propagation algorithm with L2 regularizer for Sigma-Pi-Sigma neural network. Neurocomputing 2018, 272, 163–169.

- Mohamed, K.S.; Liu, Y.; Wu, W.; Alemu, H.Z. Batch gradient method for training of Pi-Sigma neural network with penalty. Int. J. Artif. Intell. Appl. IJAIA 2016, 7, 11–20.

- Zhang, H.; Wu, W.; Liu, F.; Yao, M. Boundedness and convergence of online gradient method with penalty for feedforward neural networks. IEEE Trans. Neural Netw. 2009, 20, 1050–1054.

- Zhang, H.; Wu, W.; Yao, M. Boundedness and convergence of batch back-propagation algorithm with penalty for feedforward neural networks. Neurocomputing 2012, 89, 141–146.

- Haykin, S. Neural Networks: A Comprehensive Foundation, 2nd ed.; Tsinghua University Press: Beijing, China; Prentice Hall: Hoboken, NJ, USA, 2001.

- Liu, Y.; Wu, W.; Fan, Q.; Yang, D.; Wang, J. A modified gradient learning algorithm with smoothing L1/2 regularization for Takagi–Sugeno fuzzy models. Neurocomputing 2014, 138, 229–237.

- Iyoda, E.M.; Nobuhara, H.; Hirota, K. A solution for the n-bit parity problem using a single translated multiplicative neuron. Neural Process. Lett. 2003, 18, 233–238.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).