Abstract

The correct detection of negations is essential to the performance of sentiment analysis tools. The evaluation of such tools is currently conducted through the use of corpora as an opportunistic approach. In this paper, we advocate using a different evaluation approach based on a set of intentionally built sentences that include negations, which aim to highlight those tools’ vulnerabilities. To demonstrate the effectiveness of this approach, we propose a basic testset of such sentences. We employ that testset to evaluate six popular sentiment analysis tools (with eight lexicons) available as packages in the R language distribution. By adopting a supervised classification approach, we show that the performance of most of these tools is largely unsatisfactory.

1. Introduction

Sentiment analysis now has a fairly long history, with the first reviews of the subject appearing roughly a decade ago [1,2]. Sentiment analysis techniques can be broadly classified according to whether they rely on lexicons or on examples. A significant problem in sentiment analysis is identifying text polarity, i.e., assessing whether the text conveys a positive, negative or neutral sentiment. Lexicon-based tools approach that problem by resorting to lexicons, i.e., lists of words whose polarity has been assessed, and arrive at the overall polarity of the text by examining the amount, intensity and relation of polarity-conveying words in the text. In contrast, example-based tools adopt a machine learning approach: they consider large annotated corpora whose sentences have been manually assigned a polarity and assign the text under examination a polarity based on those examples (e.g., by assigning the polarity of the closest example, after adopting a representation model for words and sentences and defining a distance in that model).

In this paper, we limit ourselves to lexicon-based tools. However, we must mention that significant advances have been made in applying machine learning, and particularly deep learning methods, to sentiment analysis. The techniques deployed to mine the sentiment embedded in unstructured text include supervised approaches (where any classification technique can be used, such as Support Vector Machines as in [3]), unsupervised approaches (see, e.g., the approach based on syntactic patterns in [4]) and their combination [5]. Deep learning tools are particularly suitable for performing text analysis tasks since they can easily analyse instances where many features are present. The application of deep learning techniques in sentiment analysis is well surveyed, e.g., in [6,7]. A theme partially overlapping with sentiment analysis is the discovery of emotions expressed through the text, which has been surveyed, e.g., in [8], together with the closely related theme of affective computing, examined in [9,10,11].

Another interesting new approach is the so-called attention-based sentiment analysis, where learning is focused on specific elements both at the word and sentence level so that features are selected based on their importance rather than being considered all equal. Notable proposals for this new approach are put forward in [12,13].

A move beyond the use of individual words or their simple combinations (e.g., bigrams and trigrams) in lexicon-based tools is represented by the definition of primitives that can represent several word combinations that convey the same concept. For example, the FOOD primitive can represent noun concepts such as pasta, cheese_cake or steak. This approach, which goes under the name of symbolic AI, is well described in [14].

However, among the challenges facing researchers in the field, negation detection still ranks at the top of the list, as highlighted in the survey in [15], which builds on previous works underlining that issue [16,17,18,19,20,21,22,23]. Some more recent papers still struggle with the issue of correctly detecting negations [24,25,26]. Moreover, the recent book by Díaz and López attests to the high relevance that the topic still has [27].

We are not dealing with that problem here, but we note that any method proposed to detect negations must be adequately tested. The test must strain the negation-detection algorithm so that it can be safely relied upon to detect any occurrence of negations. So far, the dominant approach has employed annotated corpora, where the presence of negations has been spotted and manually labelled as such [28]. Despite the large size of the corpora (and the vast effort employed in annotating them), these do not cover either the wide set of application domains or the vast range of modes of negation.

What we put forward in this paper is a different approach to testing, which relies on devising a set of sentences that purposely include negations (the testset) and can be fed to the sentiment analysis tool. Our aim is to create a testset that encompasses not only examples where negation appears in all the different positions and regards all the different parts of speech (e.g., negations affecting verbs or adjectives) but also sentences where negations do not affect the polarity, whose correct detection is the crucial goal of any sentiment analysis tool. The polarity of those sentences is fixed when they are constructed, so that no additional labelling effort is needed. Comparing the output of a sentiment analysis tool with that ground truth allows us to evaluate the tool under test easily. This approach is less demanding and more thorough at the same time, allowing the tester to pinpoint specific occurrences of sentences incorporating negations. As mentioned earlier, our approach applies to lexicon-based tools and has the advantage of not requiring the massive datasets needed for machine learning-based tools, where negations may not be adequately represented. Corpora employed in machine learning approaches may be heavily imbalanced, exhibiting an overwhelming majority of sentences that do not include negations, causing the sentiment analysis tool to be biased.

In this paper, after reviewing the theme of negations in Section 2, we describe this new testset-based approach and propose a basic testset, respectively, in Section 3 and Section 4. This testset is by no means to be considered exhaustive and has the sole purpose of providing a basis for the construction of more complete testsets and demonstrating its use with a selection of sentiment analysis tools (namely, tools widely available and used as packages in the R language), reviewed in Section 5.

Applying the testset to those tools with the procedures of Section 6 (the results are reported in Section 7) reveals that one of the tools under test (the syuzhet package) exhibits very poor performance when it comes to detecting negations, failing to recognise the negative polarity of a sentence in over 90% of the instances. Though the other tools under test perform significantly better, their capacity to correctly detect negative-polarity sentences may be as low as 67%.

2. A Theoretical Background of Negations

In this section, we provide an overview of recent advances concerning negations in the linguistic literature. We also provide a very brief overview of the current computer science approaches to negation detection. A summary of the relevant literature is shown in Table 1.

Table 1.

Summary of relevant literature (P: Conference proceedings; B: Book; A: Scientific article).

Targeting negations is a crucial issue within the general task of sentiment analysis. Determining automatically whether a product review posted online moves along positive or negative polarity is an ordinary (and at the same time one of the most strategic) application of sentiment analysis. Councill et al. show how the performance of their sentiment analysis system has been positively affected thanks to the introduction of negation-scope detection [29]. Wiegand et al. present a study of the role of negation in sentiment analysis and claim that an effective negation model for sentiment analysis requires knowledge of polarity expressions [22]. Since negation is not constructed through common negation words only, this model should also include other lexical units such as, for example, diminishers. Additionally, sometimes negation expressions are ambiguous, particularly when negation is implicit or entailed pragmatically. At this stage of the analysis, we focus on explicit negation exclusively, i.e., when negative polarity is clearly marked by negation words or particles.

Over the centuries, speculations on negation have interested many scholars from different research fields. Here, we look at negations from a linguistic and grammatical point of view, providing a brief overview of the most significant taxonomies of negation. At the base of these classifications, there are two theoretical masterpieces, the works in [30,31], that have posed the major questions concerning this topic. We find these points developed in some studies that address negation detection, a few of which are related to sentiment analysis and opinion mining [29,32,33]. Tottie offers one of the most utilised taxonomies for analysing English negation, although it mainly focuses on clausal negations [34]. A clausal negation differs from a constituent negation in that the former negates the entire proposition (“I don’t have any idea”) while the latter is combined with some constituent (“I have no idea”). In syntactic analysis, a constituent is a word or a group of words that function as a single unit within a hierarchical structure; in a constituent negation, the negative marker is placed immediately after the relative constituent (see the lecture notes on negations available at http://web.mit.edu/rbhatt/www/24.956/neg.pdf (accessed on 10 January 2023)). Tottie’s classification comprises five categories: denials, rejections, imperatives, questions, supports and repetitions. Denials are the most common form and mirror an unambiguous negation of a particular clause (e.g., “I don’t like this movie, yet reviews are positive.”). Rejections appear in discourse where one speaker explicitly rejects an offer or suggestion of another as, e.g., in “Do you like this book? No.” Imperatives direct an audience away from a particular action, e.g., in “Do not hesitate to contact me”. Questions can be rhetorical or otherwise (e.g., “Why couldn’t they include a decent speaker in this phone?”, implying that the speaker does not work well).
Supports and repetitions express agreement and add emphasis or clarity, respectively, when multiple expressions of negation are involved. According to Tottie, while denials, imperatives and questions are examples of clausal negations, rejections and supports are constituent or interclausal negations. A more comprehensive taxonomy of negation in English is that elaborated by Huddleston and Pullum, who describe four binomial categories as follows [35]: (1) verbal vs. non-verbal; (2) analytic vs. synthetic negation; (3) clausal vs. subclausal negation; (4) ordinary vs. metalinguistic negation. In verbal negations, the negative marker particle is grammatically associated with the verb, the head of the clause, whereas in non-verbal negation, it is associated with a dependent of the verb, an adjunct or an affix (e.g., “He is not polite” vs. “He is impolite”). Analytic negation is marked by words whose exclusive syntactic function is to mark negation, i.e., “not” as well as “no” meant as in contrast with “yes”, for example, in answering a question. In synthetic negations, the words that mark negation also have other functions in the sentence (e.g., “The report is not complete” vs. “The report isn’t complete”). Synthetic non-verbal negation is marked by elements of three kinds: (a) absolute negators, including “no” (and its compounds “nobody”, “nothing”, etc., as well as the independent form “none”), “neither”, “nor”, “never”; (b) approximate negators, such as “few”, “little”, “barely”, “hardly”, “scarcely”, “rarely”, “seldom”; (c) affixal negators, such as “un-”, “in-”, “non-”, “-less”, etc. Clausal negation is negation that yields a negative clause, whereas subclausal negation does not make the whole clause negative (e.g., “My colleagues aren’t reliable” vs. “My colleagues are unreliable”).
In ordinary negation, the negative indicates that something is not the case, i.e., not true, while metalinguistic negation does not dispute the truth but rather rejects and reformulates a statement (e.g., “You didn’t drive my old banger: you didn’t have the licence!” vs. “You didn’t drive my ‘old banger’: it was still in good condition!”).

Linguistic negation is a complex topic and becomes more crucial when detecting negation combined with determining sentiments’ polarity. Negative polarity affects the sentiment expressed in a sentence in many ways, but negation detection in sentiment analysis is problematic. According to Liu (see pages 116–122 in [36]), there are three primary modalities thanks to which negative polarity shifts sentiment orientation and, at the same time, can create ambiguity: (1) A negation marker directly negates a positive or negative sentiment expression. However, this sentiment reversal is questionable as shifting sentiment orientation of the sentence “I like it” means to say “I don’t like it” or “I dislike it”, which slightly differ in terms of pragmatic meaning and sentiment orientation accordingly. (2) A negation marker is used to express that some expected or desirable functions or actions cannot be performed. In these cases, sentiment words are unnecessary (e.g., “When I click the start button, the program does not launch”). The negation words do not function as sentiment shifters and, hence, sentiment is difficult to detect. (3) A negation marker negates a desirable or undesirable state expressed without using a sentiment word (e.g., “The water that comes out of the fridge is not cold”). Moreover, it is difficult to recognise whether the sentence expresses positive or negative polarity in this case. There are diverse negation sentiment shifters, such as double negation, negation in comparative sentences, not followed by a noun phrase, never, etc., that make sentiment analysis limited and sometimes unsuccessful. Negation detection has been long investigated through different approaches, such as the rule-based approach [37,38] and machine learning techniques [23,29,39,40]. Recently, many studies have demonstrated the efficiency of deep learning algorithms when applied to the negation-detection process [41,42].

3. Corpus-Based vs. Testset-Based Approach

As hinted in the Introduction, we focus on negation detection in sentiment analysis, one of the major areas where negation detection is critical. Testing the performance of sentiment analysis tools for negation detection is crucial for their overall performance assessment: negations may completely change the polarity of a sentence and therefore produce a mistake in sentiment interpretation. In this section, we describe the current approach for performance testing, based on the use of annotated corpora and the approach we propose based on a testset. We also describe the performance metrics that can be used for this purpose.

3.1. The Corpus-Based Approach

The corpus-based approach to testing is currently the most widely adopted in the literature. In this approach, a corpus of documents is considered and annotated for negations. The negation-detection algorithm is then tested for its capacity to find annotated negative expressions and avoid misclassifying the positive ones. This approach has been widely adopted in the medical context (see Section 2.3 of [39]), where the documents are mostly medical reports and the focus is on detecting statements about the presence or absence of diseases. Though this context and the related purpose are different from ours, we may mention a few examples of that approach. Among the group of rule-based detection algorithms, we find the following: NegEx, developed in [43]; ConText, developed in [44]; NegHunter, developed in [45]. Though the biomedical area is the most explored one, several corpora devoted to sentiment analysis are also present. For example, the Simon Fraser University (SFU) corpus includes 400 documents (50 of each type) of movie, book and consumer product reviews from the website Epinions.com [46]. The corpus was annotated by one linguist for negations, with a second linguist annotating a random selection through stratified sampling, amounting to 10% of the documents. Negated sentences account for a proportion of the sentences ranging from 13.4% (music) to 23.7% (movies). That corpus was employed, e.g., in [47] for Twitter data. The SFU Opinion and Comments Corpus (SOCC) is another corpus annotated for negation in the sentiment analysis domain [48]. It comprises 1043 comments written in response to a subset of the articles published in the Canadian newspaper “The Globe and Mail” in the five years between 2012 and 2016, annotated with three layers of annotations: constructiveness, negation and appraisal. For the negation annotation, the authors developed extensive and detailed guidelines for annotating negative keywords, scope and focus [48].

There are three significant limitations to this approach. First, it requires the availability of large corpora, exhaustively annotated with negations, which is not the case at present, as stated in [28]. Second, sentences with negations represent a limited fraction of the overall sentences found in a corpus. According to the survey conducted in [28], sentences with negations represent a percentage between 9.37% and 32.16% in English corpora and between 10.67% and 34.22% in Spanish corpora. Third, corpora represent a limited sample of negations in texts, making this approach resemble what is called opportunity testing, i.e., testing using available datasets rather than stressing the system under test through a specifically devised testset.

3.2. The Testset-Based Approach

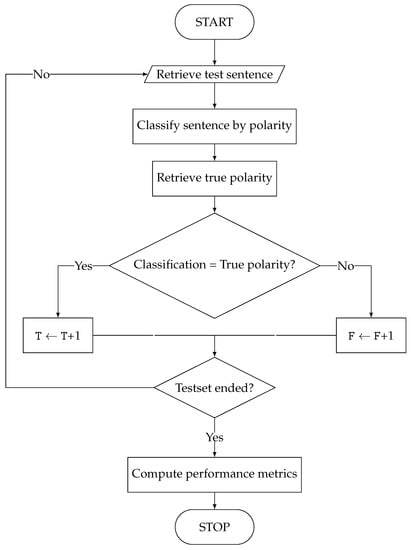

In this approach, we devise a set of testing sentences where negations are purposely included. The focus is not just on creating sentences where negations are included in all the different positions and concerning all the different parts of speech (e.g., negations affecting verbs or adjectives), but also on including examples where negations do not affect the polarity of the sentence, which is the first and foremost goal of any sentiment analysis tool. Each sentence in the testset is labelled with its polarity. That testset can then be fed to a sentiment analysis tool to assess whether it can correctly recognise the polarity of the sentence. We can go through the whole assessment process in Figure 1. Let us assume we have a polarity detection algorithm that we wish to test for its capacity to correctly detect negations. Let us also assume that we have built a testset made of n sentences whose polarity we know since we have labelled them. We retrieve each sentence in the testset in turn and feed it to the polarity detection algorithm under test. That algorithm will perform a classification task and will assign that sentence a polarity value. Since we know the true polarity of each sentence, we can retrieve the true polarity and compare the polarity output by the polarity detection algorithm against the true polarity. If the result is correct, we increase the TRUE counter T by one. Otherwise, we increase the FALSE counter F by one. For the sake of brevity, here we do not distinguish between True Positives and True Negatives (and similarly, between False Positives and False Negatives), but we would keep separate counters for each class. After updating counters, we go to the next sentence in the testset. When we do not have further sentences in the testset, we can compute the performance metrics as described in Section 6.

Figure 1.

Assessment process.
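The assessment process of Figure 1 can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: the function name `assess`, the encoding of polarity as +1/-1, the toy sentences and the deliberately naive stand-in classifier are all hypothetical and are not part of any package under test.

```python
from typing import Callable, Iterable, Tuple


def assess(testset: Iterable[Tuple[str, int]],
           classify: Callable[[str], int]) -> Tuple[int, int]:
    """Return (T, F): the numbers of correct and incorrect classifications.

    Polarity is encoded as +1 (positive) or -1 (negative); this encoding
    is an assumption made for the sketch.
    """
    true_count = 0   # TRUE counter T
    false_count = 0  # FALSE counter F
    for sentence, true_polarity in testset:
        predicted = classify(sentence)      # tool under test
        if predicted == true_polarity:      # compare with the known label
            true_count += 1
        else:
            false_count += 1
    return true_count, false_count


# Hypothetical stand-in for a tool under test: it ignores negations entirely.
def naive_classifier(sentence: str) -> int:
    return 1 if "perfect" in sentence.split() else -1


toy_testset = [
    ("This device is perfect", 1),
    ("This device is not perfect", -1),
    ("This device is useless", -1),
]

T, F = assess(toy_testset, naive_classifier)
print(T, F)
```

The naive classifier fails only on the negated sentence, which is exactly the kind of error the testset is meant to expose.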

An early example of the testset-based approach is the testing method adopted for NegMiner [49], which employed a set of 500 testing sentences, again for the biomedical context. However, its applicability outside that context appears dubious; moreover, neither the list of sentences nor a structured approach to their definition is reported in the paper, making its reuse practically impossible.

4. The Testset

As hinted in Section 3.2, the testset is a list of sentences that have been purposefully devised to assess the negation-detection capabilities of polarity detection/sentiment analysis tools. The sentences included in the testset are (mainly) negative ones disguised as positive ones to fool the sentiment analysis tool. The testset was created manually. At present, the testset includes 32 sentences whose polarity is manually annotated. Being aimed at detecting negations, the testset is heavily imbalanced towards negative sentences: it comprises just five positive sentences. Its present form is not intended to be exhaustive and is reported here just to demonstrate the application of the evaluation method.

To describe the current form of the testset, we adopt a simplified and modified version of the Penn TreeBank tagset [50,51]. We simplify the tagset by adopting a general form for each tag family. For example, we adopt the more general VB tag to identify a verbal form as a representative of any of the specialised tags VBD, VBG, VBN, VBP and VBZ. Similarly, we employ the general form NN for a noun as a representative of any of the tags NNS, NNP and NNPS. In order to account for the presence of negations, we add the tag NEG, which is not present in the Penn TreeBank tagset. In fact, negative markers (such as not or never) are binned into the wider category of adverbs (hence tagged as RB) [52]. A list of the most frequent negative markers is reported in [46].
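The tag simplification described above can be sketched as a small lookup. The set of negative markers below is an illustrative subset (not the list reported in [46]), the function name `simplify_tag` is hypothetical, and mapping the Penn TreeBank determiner tag DT to DET is our assumption, based on the DET notation used for the basic sentence structure.

```python
# Specialised Penn TreeBank tags collapsed into their general family.
TAG_FAMILIES = {
    "VBD": "VB", "VBG": "VB", "VBN": "VB", "VBP": "VB", "VBZ": "VB",
    "NNS": "NN", "NNP": "NN", "NNPS": "NN",
    "DT": "DET",  # assumption: the text writes the determiner tag as DET
}

# Illustrative subset of negative markers (assumption for this sketch).
NEGATIVE_MARKERS = {"not", "never"}


def simplify_tag(word: str, penn_tag: str) -> str:
    """Map a (word, Penn TreeBank tag) pair to the simplified tagset."""
    # Negative markers are tagged RB in the Penn TreeBank; give them NEG.
    if penn_tag == "RB" and word.lower() in NEGATIVE_MARKERS:
        return "NEG"
    return TAG_FAMILIES.get(penn_tag, penn_tag)


tagged = [("This", "DT"), ("device", "NN"), ("is", "VBZ"),
          ("not", "RB"), ("perfect", "JJ")]
simplified = [simplify_tag(word, tag) for word, tag in tagged]
print(simplified)
```

Adverbs that are not negative markers keep their RB tag, so only genuine negators are singled out.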

The testset sentences are built starting from the basic structure <DET><NN><VB> and adding a verbal predicate that involves negations and may have multiple variants. For example, we use the structure <DET><NN><VB><NEG><JJ><CC><JJ>, as in the sentence This device is not perfect and useless, whose polarity is negative but may generate ambiguous interpretations due to the presence of both a negative marker (not) and a negative-polarity adjective (useless).
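Although the testset was created manually, the idea that polarity is known at construction time can be illustrated with a small template sketch. The slot fillers, the function name and the restriction to the pattern <DET><NN><VB><NEG><JJ> are hypothetical choices made for illustration only; they do not reproduce the sentences of Appendix A.

```python
from itertools import product

# Hypothetical slot fillers for the basic structure <DET><NN><VB>.
DETERMINERS = ["This"]
NOUNS = ["device", "phone"]
POSITIVE_ADJECTIVES = ["perfect", "reliable"]


def negated_positive_sentences():
    """Instantiate <DET><NN><VB><NEG><JJ> with positive adjectives.

    Negating a positive adjective yields a negative sentence, so every
    generated sentence carries the label -1 by construction.
    """
    for det, noun, adj in product(DETERMINERS, NOUNS, POSITIVE_ADJECTIVES):
        yield f"{det} {noun} is not {adj}", -1


generated = list(negated_positive_sentences())
for sentence, polarity in generated:
    print(polarity, sentence)
```

Each template fixes the label of all its instances, so no separate annotation step is needed for the generated sentences.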

In Appendix A, we present the complete list of sentences included in the current version of our testset. We strongly underline that it is not intended as a closed list and we expect to enlarge it to cover more occurrences of negative polarity sentences. We aim to put forward the testset-based approach rather than a specific list of sentences.

5. Libraries under Test

In this section, we briefly review the main characteristics of the current sentiment analysis tools. In order to test the effectiveness of our method in gauging the negation-detection performance of these tools, we employed several widely used sentiment analysis packages coded in R. In order to select a significant set of packages, we consulted the list available on www.cran.r-project.org (accessed on 10 January 2023). The set of packages devoted to sentiment analysis is shown in Table 2 together with the number of downloads, which can be obtained by consulting the website http://cran-logs.rstudio.com (accessed on 10 January 2023).

Table 2.

R packages for sentiment analysis by popularity.

Misuraca et al. had already performed a similar analysis, arriving at a shortlist of 5 packages [53]. An extended analysis of most of those R packages can be found in [54]. From that list, we have chosen the following ones, which are the most popular:

- syuzhet;

- sentimentR;

- SentimentAnalysis;

- RSentiment [55];

- meanr;

- VADER (Valence Aware Dictionary for Sentiment Reasoning).

The syuzhet package applies a straightforward approach since it just accounts for the occurrences of polarised words, outputting the algebraic sum of the polarity scores of individual words [56]. However, it allows the choice of the lexicon among three: the similarly named syuzhet, afinn and bing. Examples of usage of the syuzhet package include its application to the reviews on Trip Advisor [57], the speeches of political leaders [58] and the analysis of self-report stress notes [59].
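To see why a pure sum of word scores is vulnerable to negations, consider the following sketch. It is not the syuzhet implementation: the lexicon and its scores are made up for illustration, and the function name `summed_polarity` is hypothetical.

```python
# Toy lexicon with made-up scores (not taken from any package lexicon).
TOY_LEXICON = {"perfect": 1.0, "good": 0.5, "useless": -1.0, "bad": -0.5}


def summed_polarity(sentence: str) -> float:
    """Algebraic sum of the lexicon scores of the words in the sentence."""
    words = sentence.lower().replace(".", "").split()
    # Words missing from the lexicon (including "not") contribute zero.
    return sum(TOY_LEXICON.get(word, 0.0) for word in words)


score_plain = summed_polarity("This device is perfect")
score_negated = summed_polarity("This device is not perfect")
print(score_plain, score_negated)
```

Since the negative marker carries no score of its own, the negated sentence receives the same positive score as the plain one.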

The sentimentR package adopts a dictionary lookup approach to assign sentiment scores to sentences [60]. It also considers valence shifters, including both negators and amplifiers/deamplifiers. Each sentence is first parsed to look for polarised words (i.e., words that have been tagged as either positive or negative in the dictionary) and then a window around each polarised word is examined to look for valence shifters. The resulting polarity score is unbounded. It employs a lexicon made of 11,709 terms. The sentimentR package has been employed, e.g., in [61] to analyse the opinions expressed on Twitter by UK energy consumers and in [62] to extract the sentiment out of clinical notes in intensive care units.
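The window-based treatment of negators can be illustrated with a deliberately simplified sketch. It is not the sentimentR algorithm: the toy lexicon, the negator list, the symmetric window of two words and the rule that an odd number of negators flips the sign are all assumptions made for illustration, and amplifiers/deamplifiers are ignored.

```python
# Toy resources (assumptions for this sketch, not the package's data).
TOY_LEXICON = {"perfect": 1.0, "useless": -1.0}
NEGATORS = {"not", "never", "no"}


def windowed_polarity(sentence: str, window: int = 2) -> float:
    """Sum word scores, flipping a score when its window holds a negator."""
    words = sentence.lower().split()
    total = 0.0
    for i, word in enumerate(words):
        score = TOY_LEXICON.get(word)
        if score is None:
            continue  # not a polarised word
        # Words within `window` positions before and after the polarised word.
        context = words[max(0, i - window):i] + words[i + 1:i + 1 + window]
        negations = sum(1 for w in context if w in NEGATORS)
        if negations % 2 == 1:  # an odd number of negators flips the sign
            score = -score
        total += score
    return total


print(windowed_polarity("This device is perfect"))
print(windowed_polarity("This device is not perfect"))
print(windowed_polarity("This device is not perfect and useless"))
```

With this rule the negated sentences receive negative scores, in contrast with a plain sum of word scores; the choice of window size determines how far a negator can act.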

The SentimentAnalysis package employs several existing dictionaries, such as QDAP, Harvard IV or finance-specific dictionaries. It can also employ customised dictionaries and generate dictionaries specialised for the financial domain by resorting to LASSO regression applied to stock returns [63]. The full description is available at https://cran.r-project.org/web/packages/SentimentAnalysis/SentimentAnalysis.pdf (accessed on 10 January 2023).

The RSentiment package employs a PoS (Parts of Speech) tagger so that it can track adverbs located close to nouns and assign a polarity score accordingly [55]. The output score is the algebraic sum of the polarity of individual words and is therefore unbounded. It has been used for the analysis of political preferences as expressed on Twitter [64] and the opinion of transit riders [65].

The meanr package returns the algebraic sum of the number of positive and negative scored words. It uses the Hu and Liu sentiment dictionary available on https://www.cs.uic.edu/~liub/FBS/sentiment-analysis.html (accessed on 10 January 2023) [66].

Finally, VADER employs a set of rules to obtain the polarity score of a sentence [67]. The rules consider punctuation (e.g., the presence of an exclamation point), capitalisation, degree modifiers (such as adverbs), conjunctions and preceding tri-grams, which allow detection of the presence of negations that flip the polarity of a sentence. The output includes the proportion of text classified as either positive, negative or neutral and a compound score in the [−1, +1] range. It has gained a wide acceptance, being employed, e.g., for students’ evaluation of teaching [68] and to label the email messages of customers [69].

6. Performance Evaluation Metrics

After describing the testset in Section 4, we can use the approach sketched in Figure 1 to evaluate the performance of any sentiment analysis tool. The evaluation approach described in Section 3.2 stops at obtaining the figures that make up the confusion matrix. However, several performance metrics can be computed based on those four basic figures. In this section, we review the performance metrics that we adopt for that purpose.

Since each sentence in the testset is labelled with a polarity, the task represented by carrying out a sentiment analysis is a supervised one, namely a binary classification. In fact, though the output of a sentiment analysis tool is typically numeric, we can bin the sentiment scores into either of the two classes of positive and negative polarity, respectively. The sentiment score ranges for the most common tools in R are reported in [54]. For example, the sentiment ranges from −5 to +5 if we use the syuzhet package with the afinn lexicon, but from −1 to 1 if we use the default syuzhet lexicon [56]. However, a fair assumption is that all tools employ a symmetric scale around zero so that positive scores imply a positive opinion (and similarly, negative scores imply a negative opinion).
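Under the symmetric-scale assumption stated above, binning a numeric score reduces to taking its sign. The sketch below is a hypothetical helper (the name `polarity_class` and the +1/-1/0 encoding are our own); a score of exactly zero is returned as a separate neutral value.

```python
def polarity_class(score: float) -> int:
    """Bin a numeric sentiment score by its sign.

    Returns +1 for a positive score, -1 for a negative score and
    0 when the tool outputs exactly zero (neutral).
    """
    if score > 0:
        return 1
    if score < 0:
        return -1
    return 0


# The same rule applies whatever the range of the tool's scale.
print(polarity_class(3.0), polarity_class(-0.25), polarity_class(0.0))
```

Because only the sign matters, tools with different score ranges (e.g., −5 to +5 or −1 to 1) can be compared on the same binary task.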

By comparing the actual polarity with that estimated by the sentiment analysis tool at hand, we obtain the confusion matrix reported in Table 3. In this confusion matrix, the terms Positive and Negative refer to the sentence polarity. Hence a True Positive (TP) is a positive sentence that has been correctly classified as positive, while a False Positive (FP) is a negative sentence that has been incorrectly classified as positive. In this context, we are particularly interested in the mishandling of negative sentences, i.e., the error we make when classifying negative sentences as positive.

Table 3.

Confusion matrix.

However, the sentiment analysis tool may output a neutral (0) value. In that case, since the tool has failed the classification task of detecting the right polarity (misclassifying either a positive or negative sentence as a neutral one), we count that case as a classification error: we add it to the number of False Negatives (FNs) if the sentence was classified as neutral but was actually positive, and to the number of False Positives (FPs) if the sentence was classified as neutral but was actually negative. It is, however, to be noted that most research papers adopt the simpler binary classification considering just positive and negative polarity, as reported in Section 3.1 of [36]. In addition, when ambivalence is present (sentences containing a mixture of positive and negative sentiments), the ambivalence is resolved by the use of transferring rules that map sentences to either positive or negative sentiment, as in [70]. However, since we propose a testing method that should be compatible with tools adopting even the simpler binary classification scheme, our testset does not include neutral sentences and adopts the binary classification scheme.
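The counting rule for neutral outputs can be made explicit with a short sketch. The function name `confusion_counts` and the +1/0/-1 encoding are assumptions made for illustration; the sample pairs are invented and do not come from our testset.

```python
def confusion_counts(pairs):
    """Build the confusion matrix entries from (true, predicted) pairs.

    True polarity is +1 or -1; predicted polarity is +1, -1 or 0 (neutral).
    A neutral output is an error: FN for a positive sentence,
    FP for a negative sentence.
    """
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, predicted in pairs:
        if truth == 1:
            # Anything other than +1 (i.e., -1 or neutral) is a False Negative.
            counts["TP" if predicted == 1 else "FN"] += 1
        else:
            # Anything other than -1 (i.e., +1 or neutral) is a False Positive.
            counts["TN" if predicted == -1 else "FP"] += 1
    return counts


# Invented example: two positive and three negative sentences.
sample = [(1, 1), (1, 0), (-1, -1), (-1, 0), (-1, 1)]
print(confusion_counts(sample))
```

In the example, the neutral output on the positive sentence adds to FN and the neutral output on the negative sentence adds to FP, so the four entries still sum to the number of sentences.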

The entries in the confusion matrix allow us to compute any of the many performance metrics that may be employed for binary classification [71]. Since each metric has its own advantages and disadvantages (related, e.g., to the imbalance of the testset), we consider the major ones rather than choosing a single one (see Chapter 22 of [72] for a complete overview of classification metrics):

- Accuracy;

- Sensitivity;

- Specificity;

- Precision;

- F-score.

Accuracy is the ratio of correctly classified sentences and shows how much we err overall (i.e., by classifying positive sentences as negative ones and vice versa):

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

However, it is well known that Accuracy may provide misleading indications in the case of class imbalance since it tends to replicate the performance over the dominant class, overlooking the classification performance over the minority class.
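A short worked example makes the point concrete. We take the composition of our testset (5 positive and 27 negative sentences out of 32) and a hypothetical degenerate tool that labels every sentence as negative; the tool and the variable names are assumptions made purely for illustration.

```python
n_positive, n_negative = 5, 27  # composition of the 32-sentence testset

# Hypothetical degenerate tool: every sentence is labelled as negative.
tp, fn = 0, n_positive   # all positive sentences are missed
tn, fp = n_negative, 0   # all negative sentences are (trivially) right

# Accuracy: share of correctly classified sentences overall.
accuracy = (tp + tn) / (tp + tn + fp + fn)
# Share of positive sentences that are correctly classified.
positive_hit_rate = tp / (tp + fn)

print(accuracy, positive_hit_rate)
```

The tool reaches an Accuracy above 0.84 while recognising none of the positive sentences, which is why Accuracy alone is not sufficient on an imbalanced testset.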

Two performance metrics that separate the errors made over the class of positives from those made over the class of negatives are Sensitivity and Specificity. Sensitivity measures the proportion of positives that are correctly identified; i.e., it provides the correct classification ratio just over the class of positive instances. Its mathematical expression is

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

On the other hand, Specificity measures the proportion of correctly identified negatives, i.e., it provides the correct classification ratio just over the class of negative instances. Given that we are mostly interested in the misclassification of negative sentences, this metric is probably the one to look at more carefully. Its mathematical expression is

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

An alternative pair that is often used in order to obtain a different view of performance is Precision and Recall. Precision is the percentage of true positive instances among those labelled as positive ones and helps us see how many negative sentences are not detected and end up being considered as positive ones:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall is just another name for Sensitivity, so we do not repeat its definition here.

Most papers in the medical context have employed either of the previous combinations of two metrics, i.e., Sensitivity and Specificity, or Precision and Recall [43,44].

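There is arguably more consensus on F-score (also named F1-score, as in Section 5.7.2.3 of [73]) when it comes to detecting negations [27]. It is the harmonic mean of Precision and Recall:

$$F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$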

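F-score ranges in the [0,1] interval; it is 1 for the perfect classifier, which makes no mistakes, and 0 for the opposite case. If the testset were made of negative polarity sentences only, and negative polarity sentences were taken as the positive class, a random classifier would report $TP = n/2$, $FP = 0$, $FN = n/2$ on average over $n$ sentences, obtaining the following F-score:

$$F = \frac{2\,TP}{2\,TP + FP + FN} = \frac{n}{n + n/2} = \frac{2}{3}$$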
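The five metrics listed in this section follow directly from the four confusion-matrix entries. The sketch below uses the standard textbook definitions; the names `Confusion` and `metrics` and the example counts are hypothetical and are not taken from the evaluation reported in this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Entries of a binary confusion matrix."""
    tp: int
    tn: int
    fp: int
    fn: int


def _ratio(numerator: float, denominator: float) -> float:
    # Return NaN when a metric is undefined (zero denominator).
    return numerator / denominator if denominator else float("nan")


def metrics(c: Confusion) -> dict:
    """Compute Accuracy, Sensitivity, Specificity, Precision and F-score."""
    return {
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # also known as Recall
        "specificity": _ratio(c.tn, c.tn + c.fp),
        "precision": _ratio(c.tp, c.tp + c.fp),
        "f_score": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


# Hypothetical counts, for illustration only.
example = metrics(Confusion(tp=6, tn=3, fp=1, fn=2))
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

Returning NaN for an undefined ratio is a design choice of this sketch: it keeps, e.g., a Precision with no predicted positives from being silently reported as zero.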

7. Results

We can finally employ our testset and the metrics described in Section 6 to assess the performance of the libraries reviewed in Section 5. In this section, we compare the R libraries under examination and rank them according to their performance.

The results are presented in Table 4. We recall that, in this context, the aim is to detect negations so that the instances where the sentence has a negative polarity and is detected as such by the sentiment computation tools are considered True Positives. Conversely, the instances where the sentence has a positive polarity (and is detected as such by the sentiment computation tool) are considered True Negatives.
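The labelling convention just described can be made concrete with a short sketch that counts confusion-matrix entries from gold and predicted polarity labels. The function name `confusion_counts`, the label strings and the toy labels are assumptions made for illustration; they are not the output of any of the packages examined.

```python
from collections import Counter


def confusion_counts(gold, predicted, positive_class="negative"):
    """Count TP, TN, FP and FN, treating `positive_class` as the class to detect.

    With the default, a negative-polarity sentence recognised as such is a
    True Positive, and a positive-polarity sentence recognised as such is a
    True Negative.
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    counts = Counter()
    for g, p in zip(gold, predicted):
        if g == positive_class:
            counts["TP" if p == positive_class else "FN"] += 1
        else:
            counts["FP" if p == positive_class else "TN"] += 1
    return {key: counts[key] for key in ("TP", "TN", "FP", "FN")}


# Toy labels, for illustration only.
gold = ["negative", "negative", "positive", "negative"]
predicted = ["negative", "positive", "positive", "positive"]
counts = confusion_counts(gold, predicted)
print(counts)
```

Any predicted label other than `positive_class` (including a neutral one, if a tool returns it) is counted as a failure to detect that class.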

Table 4.

Test results (* = best result).

If we focus on detecting negative polarity sentences, given the heavy imbalance of our testset, the metric of greatest interest is Specificity. The best sentiment analysis package for both metrics is RSentiment, with sentimentR and VADER as two (not so close) runners-up. The performance of the syuzhet package is abysmal under the Specificity metric, regardless of the lexicon: that package fails to detect most negative polarity sentences, performing worse than a random classifier. Its Precision scores, though poor in general, are nevertheless better than its Specificity scores, which means that the syuzhet package also tends to disguise positive polarity sentences as negative polarity ones.

If we consider the F-score, the metric most often considered for detecting negations, the picture is basically the same. RSentiment ranks first again, with sentimentR second and VADER third. The syuzhet package again performs quite badly, with a score well below 0.5.

The large differences we have observed so far between the best performing packages (RSentiment, sentimentR and VADER) and the worst performing one (syuzhet) disappear when we focus on positive polarity sentences only through the Sensitivity metric. In that case, though RSentiment is still the best package (on a par with sentimentR), the syuzhet package is not too far off, with a score that is in any case larger than that of a random classifier.

In conclusion, after considering all the metrics, the best package is clearly RSentiment, which ranks first on all of them, though its performance is far from perfect: it misses roughly 17% of the positive sentences and nearly one-quarter of the negative ones. Though its results for positive sentences are masked by the heavy imbalance of our dataset towards negative sentences, a better algorithm should be employed.

8. Discussion and Conclusions

We put forward a testset-based approach to assess whether a sentiment analysis tool can adequately account for negations in the evaluation of the polarity of a sentence. This approach allows us to evaluate lexicon-based sentiment analysis tools. It represents an alternative to the currently dominant corpus-based approach, where corpora are used both for training the algorithm and for testing it.

We also proposed a basic testset made of 32 manually labelled sentences to demonstrate our approach. Even with this basic testset, some well-known sentiment analysis packages exhibit very poor performance, with the percentage of correctly classified negative-polarity sentences falling even below 10%. On the other hand, the RSentiment package achieves an accuracy close to 80%, being the best in class but still far from what is expected, as it misses the polarity of nearly one-quarter of the negative sentences.

Those results tell us two things. First, widely used sentiment analysis packages still fail when dealing with negative sentences. Second, our testset-based method allows us to spot such poor performances because it compels the algorithm to deal with particularly critical sentences whose polarity may be tricky for the algorithm to understand.

It could be argued that machine learning-based approaches could easily outperform the lexicon-based ones that we examined here. This may be true, but machine learning algorithms require massive amounts of labelled data for training. Aside from the difficulty of obtaining or creating those datasets, they may fail to include the kind of negative sentences that are most difficult for the algorithm to classify correctly. At any rate, creating a focussed dataset, as we have proposed in this paper, may prove valuable for machine learning-based approaches as well. In fact, our testset, or a similarly built one, could be added to the set of datasets employed to train the machine learning algorithm.

Of course, the analysis conducted in this paper exhibits some limitations. First, the testset we have built is made of just 32 sentences, created by starting from the basic pattern made of a determiner followed by a noun and a verb. More complex patterns may be considered, where negations are included in less explicit ways than we did, e.g., by using pronouns such as none or neither, or adverbs. However, we state again that we proposed an approach and employed a testset made of 32 sentences as an example. We do expect that richer testsets can be created following the same approach. A second limitation is related to the number of packages employed to test the approach. More packages are available in R and a whole lot more in Python, as well as some commercial tools. However, our intent was not to carry out an exhaustive survey of the negation-detection performance of sentiment analysis tools. Rather, we wish to highlight that widely used sentiment analysis tools fail when dealing with negative sentences and our method allows us to spot such failures.

In order to overcome those limitations, we envisage the following future research activities. First, we aim to enlarge the testset. For example, we intend to add sentences that employ different ways to include negations. We also intend to include neutral sentences or partly negative sentences (e.g., “I’m not at all sure...”) to obtain a more comprehensive selection of instances of negation appearances that may fool the sentiment analysis tool. Second, we aim to consider a broader selection of sentiment analysis tools developed in languages other than R or even commercial software. We also aim to extend the analysis to machine learning-based tools by specifically testing them with the testset we have developed.

Author Contributions

Conceptualization, M.N. and S.P.; methodology, M.N. and S.P.; software, M.N.; validation, S.P.; formal analysis, M.N. and S.P.; investigation, M.N. and S.P.; resources, M.N. and S.P.; data curation, S.P.; writing—original draft preparation, M.N. and S.P.; writing—review and editing, M.N. and S.P.; visualization, M.N.; supervision, M.N. and S.P.; project administration, M.N. and S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Sample Availability

Not applicable.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| DOAJ | Directory of open access journals |
| FN | False Negative |
| FP | False Positive |
| MDPI | Multidisciplinary Digital Publishing Institute |
| PoS | Parts of Speech |
| SFU | Simon Fraser University |
| SOCC | SFU Opinion and Comments Corpus |
| TN | True Negative |
| TP | True Positive |
| UK | United Kingdom |
| VADER | Valence Aware Dictionary for sEntiment Reasoning |

Appendix A. Sentence Testset

In this appendix, we report the current full set of sentences employed to test sentiment analysis tools. The sentences are accompanied by their parts-of-speech composition, which has been obtained by using the online PoS tagger available at http://www.infogistics.com/posdemo.htm (accessed on 10 January 2023). A tool adopting a simplified tagset is available at https://parts-of-speech.info (accessed on 10 January 2023). The structure of the sentences is more important than their actual content: the sentence "This device is not perfect" could be replaced by the similar sentence "This product is not excellent", which exhibits the same PoS composition. In marking the parts of speech, we use a slightly modified version of the Penn Treebank tagset [50], where we have adopted VB as a short form for all the VBx tags (i.e., VBD, VBG, VBN, VBP and VBZ) and have added a polarity marker to accompany the JJ and RB tags (pertaining to adjectives and adverbs, respectively). The testset we show in Table A1 is the current one but is amenable to being enlarged, since we are interested here in establishing the notion of a different approach to testing rather than in setting a definitive testset.
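The tag simplification described above can be sketched as follows. This is only an illustration under stated assumptions: the input is a hand-written list of (word, Penn Treebank tag) pairs rather than the output of the online tagger, and the function name `simplify_tags` and the tiny polarity lexicon are hypothetical.

```python
# Penn Treebank verb tags that are collapsed into the short form VB.
VERB_TAGS = {"VBD", "VBG", "VBN", "VBP", "VBZ"}
# Tags that may carry a polarity marker (adjectives and adverbs).
POLARITY_TAGS = {"JJ", "RB"}


def simplify_tags(tagged, polarity_lexicon):
    """Map (word, tag) pairs to a PoS composition string with polarity markers."""
    simplified = []
    for word, tag in tagged:
        if tag in VERB_TAGS:
            tag = "VB"
        if tag in POLARITY_TAGS:
            marker = polarity_lexicon.get(word.lower())
            if marker:
                tag = f"({marker}){tag}"
        simplified.append(tag)
    return " ".join(simplified)


# Hypothetical polarity lexicon, for illustration only.
lexicon = {"not": "NEG", "perfect": "POS", "useless": "NEG"}

# Hand-written tagging of "This device is not perfect".
tagged = [("This", "DT"), ("device", "NN"), ("is", "VBZ"),
          ("not", "RB"), ("perfect", "JJ")]

composition = simplify_tags(tagged, lexicon)
print(composition)
```

Words that do not appear in the lexicon keep their plain JJ or RB tag, so the marker is added only where a polarity is known.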

Table A1.

Sentence testset.

| Sentence | PoS Composition |
|---|---|
| This device is perfect, isn’t it | DT NN VB (POS)JJ VB RB PRP |
| This device is not perfect | DT NN VB (NEG)RB (POS)JJ |
| This device isn’t perfect | DT NN VB (NEG)RB (POS)JJ |
| This device is not perfect! | DT NN VB (NEG)RB (POS)JJ. |
| This device is not perfect at all | DT NN VB (NEG)RB (POS)JJ IN DT |
| This device is not perfect, is it? | DT NN VB (NEG)RB (POS)JJ, VB PRP. |
| This device is not perfect and useless | DT NN VB (NEG)RB (POS)JJ CC (NEG)JJ |
| This device is not perfect and almost useless | DT NN VB (NEG)RB (POS)JJ CC RB (NEG)JJ |
| This device is useless and not perfect | DT NN VB (NEG)JJ CC (NEG)RB (POS)JJ |
| She is unreliable | PRP VB (NEG)JJ |
| This device is neither perfect nor useful | DT NN VB RB JJ CC JJ |
| This device is not either perfect or useful | DT NN VB RB RB JJ CC JJ |
| This device is not perfect, but very useful | DT NN VB RB JJ, CC RB JJ |
| She is not reliable | PRP VB RB JJ |
| This device is not perfect, but useless | DT NN VB RB JJ, CC JJ |
| This device is not perfect and useless | DT NN VB RB JJ, CC JJ |
| This device is not perfect and hardly useless | DT NN VB RB JJ, CC RB JJ |
| This device is useless and not perfect | DT NN VB JJ, CC RB JJ |
| She is not unreliable | PRP VB RB JJ |
| This device is not the most perfect yet it is very useful | DT NN VB RB DT RBS JJ CC PRP VB RB JJ |
| I do not think this device is perfect | PRP VBP RB VB DT NN VB JJ |
| I do not think this device could be perfect | PRP VBP RB VB DT NN MD VB JJ |
| This device has never been perfect | DT NN VB RB VBN JJ |
| This device has never been imperfect | DT NN VB RB VBN JJ |
| This device has never been perfect and useful | DT NN VB RB VBN JJ CC JJ |
| This device has never been perfect but useful | DT NN VB RB VBN JJ CC JJ |
| This device has never been either perfect or useful | DT NN VB RB VBN RB JJ CC JJ |
| I do not like it at all | PRP VBP RB VB PRP IN DT |
| I don’t like it | PRP VBP RB VB PRP |
| I dislike anything | PRP VBP NN |
| I like nothing | PRP VBP NN |
| Nobody likes it | NN VB PRP |

References

- Feldman, R. Techniques and applications for sentiment analysis. Commun. ACM 2013, 56, 82–89.

- Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Eng. J. 2014, 5, 1093–1113.

- Joachims, T. Making large-scale support vector machine learning practical. In Advances in Kernel Methods: Support Vector Learning; MIT Press: Cambridge, MA, USA, 1999; pp. 169–184.

- Turney, P.D. Thumbs up or thumbs down? Semantic orientation applied to unsupervised classification of reviews. arXiv 2002, arXiv:cs/0212032.

- Prabowo, R.; Thelwall, M. Sentiment analysis: A combined approach. J. Inf. 2009, 3, 143–157.

- Zhang, L.; Wang, S.; Liu, B. Deep learning for sentiment analysis: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1253.

- Wang, J.; Xu, B.; Zu, Y. Deep learning for aspect-based sentiment analysis. In Proceedings of the 2021 International Conference on Machine Learning and Intelligent Systems Engineering (MLISE), Chongqing, China, 9–11 July 2021; pp. 267–271.

- Kratzwald, B.; Ilić, S.; Kraus, M.; Feuerriegel, S.; Prendinger, H. Deep learning for affective computing: Text-based emotion recognition in decision support. Decis. Support Syst. 2018, 115, 24–35.

- Cambria, E. Affective Computing and Sentiment Analysis. IEEE Intell. Syst. 2016, 31, 102–107.

- Cambria, E.; Das, D.; Bandyopadhyay, S.; Feraco, A. Affective computing and sentiment analysis. In A Practical Guide to Sentiment Analysis; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–10.

- Guo, F.; Li, F.; Lv, W.; Liu, L.; Duffy, V.G. Bibliometric analysis of affective computing researches during 1999~2018. Int. J. Hum.-Comput. Interact. 2020, 36, 801–814.

- Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An attention-based bidirectional CNN-RNN deep model for sentiment analysis. Future Gener. Comput. Syst. 2021, 115, 279–294.

- Usama, M.; Ahmad, B.; Song, E.; Hossain, M.S.; Alrashoud, M.; Muhammad, G. Attention-based sentiment analysis using convolutional and recurrent neural network. Future Gener. Comput. Syst. 2020, 113, 571–578.

- Cambria, E.; Li, Y.; Xing, F.Z.; Poria, S.; Kwok, K. SenticNet 6: Ensemble application of symbolic and subsymbolic AI for sentiment analysis. In Proceedings of the 29th ACM International Conference on Information & knowledge Management, Virtual Event, Ireland, 19–23 October 2020; pp. 105–114.

- Hussein, D.M.E.D.M. A survey on sentiment analysis challenges. J. King Saud Univ.-Eng. Sci. 2018, 30, 330–338.

- Heerschop, B.; van Iterson, P.; Hogenboom, A.; Frasincar, F.; Kaymak, U. Accounting for negation in sentiment analysis. In Proceedings of the 11th Dutch-Belgian Information Retrieval Workshop (DIR 2011). Citeseer, Amsterdam, The Netherlands, 4 February 2011; pp. 38–39.

- Hogenboom, A.; Van Iterson, P.; Heerschop, B.; Frasincar, F.; Kaymak, U. Determining negation scope and strength in sentiment analysis. In Proceedings of the 2011 IEEE International Conference on Systems, Man and Cybernetics, Anchorage, AK, USA, 9–12 October 2011; pp. 2589–2594.

- Asmi, A.; Ishaya, T. Negation identification and calculation in sentiment analysis. In Proceedings of the second international conference on advances in information mining and management, Venice, Italy, 21–26 October 2012; pp. 1–7.

- Dadvar, M.; Hauff, C.; de Jong, F. Scope of negation detection in sentiment analysis. In Proceedings of the Dutch-Belgian Information Retrieval Workshop (DIR 2011). Citeseer, Amsterdam, The Netherlands, 27–28 January 2011; pp. 16–20.

- Jia, L.; Yu, C.; Meng, W. The effect of negation on sentiment analysis and retrieval effectiveness. In Proceedings of the 18th ACM Conference on Information and Knowledge Management, Hong Kong, China, 2–6 November 2009; pp. 1827–1830.

- Remus, R. Modeling and Representing Negation in Data-driven Machine Learning-based Sentiment Analysis. In Proceedings of the ESSEM@ AI*IA, Turin, Italy, 3 December 2013; pp. 22–33.

- Wiegand, M.; Balahur, A.; Roth, B.; Klakow, D.; Montoyo, A. A survey on the role of negation in sentiment analysis. In Proceedings of the Workshop on Negation and Speculation in Natural Language Processing, Uppsala, Sweden, 10 July 2010; pp. 60–68.

- Lapponi, E.; Read, J.; Øvrelid, L. Representing and resolving negation for sentiment analysis. In Proceedings of the 2012 IEEE 12th International Conference on Data Mining Workshops, Brussels, Belgium, 10–13 December 2012; pp. 687–692.

- Farooq, U.; Mansoor, H.; Nongaillard, A.; Ouzrout, Y.; Qadir, M.A. Negation Handling in Sentiment Analysis at Sentence Level. JCP 2017, 12, 470–478.

- Barnes, J.; Velldal, E.; Øvrelid, L. Improving sentiment analysis with multi-task learning of negation. arXiv 2019, arXiv:1906.07610.

- Pröllochs, N.; Feuerriegel, S.; Lutz, B.; Neumann, D. Negation scope detection for sentiment analysis: A reinforcement learning framework for replicating human interpretations. Inf. Sci. 2020, 536, 205–221.

- Díaz, N.P.C.; López, M.J.M. Negation and speculation detection; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2019; Volume 13.

- Jiménez-Zafra, S.M.; Morante, R.; Teresa Martín-Valdivia, M.; Ureña-López, L.A. Corpora Annotated with Negation: An Overview. Comput. Linguist. 2020, 46, 1–52.

- Councill, I.G.; McDonald, R.; Velikovich, L. What’s great and what’s not: Learning to classify the scope of negation for improved sentiment analysis. In Proceedings of the Workshop on Negation and Speculation in Natural Language Processing, Uppsala, Sweden, 10 July 2010; pp. 51–59.

- Jespersen, O. Negation in English and Other Languages; Host: Kobenhavn, Denmark, 1917.

- Horn, L. A Natural History of Negation; University of Chicago Press: Chicago, IL, USA, 1989.

- Morante, R.; Sporleder, C. Modality and negation: An introduction to the special issue. Comput. Linguist. 2012, 38, 223–260.

- Laka, I. Negation in syntax: On the nature of functional categories and projections. Anu. Semin. Filol. Vasca Julio Urquijo 1990, 25, 65–136.

- Tottie, G. Negation in English Speech and Writing: A Study in Variation; Academic Press: Cambridge, MA, USA, 1991; Volume 4.

- Huddleston, R.D.; Pullum, G.K. The Cambridge Grammar of the English Language; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2002.

- Liu, B. Sentiment Analysis: Mining Opinions, Sentiments and Emotions; Cambridge University Press: Cambridge, UK, 2015.

- De Albornoz, J.C.; Plaza, L.; Díaz, A.; Ballesteros, M. Ucm-i: A rule-based syntactic approach for resolving the scope of negation. In Proceedings of the First Joint Conference on Lexical and Computational Semantics-Volume 1: Proceedings of the Main Conference and the Shared Task and Volume 2: Proceedings of the Sixth International Workshop on Semantic Evaluation, Montréal, QC, Canada, 7–8 June 2012; pp. 282–287.

- Ballesteros, M.; Francisco, V.; Díaz, A.; Herrera, J.; Gervás, P. Inferring the scope of negation in biomedical documents. In Proceedings of the International Conference on Intelligent Text Processing and Computational Linguistics; Springer: Berlin/Heidelberg, Germany, 2012; pp. 363–375.

- Cruz, N.P.; Taboada, M.; Mitkov, R. A machine learning approach to negation and speculation detection for sentiment analysis. J. Assoc. Inf. Sci. Technol. 2016, 67, 2118–2136.

- Skeppstedt, M.; Paradis, C.; Kerren, A. Marker Words for Negation and Speculation in Health Records and Consumer Reviews. In Proceedings of the 7th International Symposium on Semantic Mining in Biomedicine (SMBM), Potsdam, Germany, 4–5 August 2016; pp. 64–69.

- Qian, Z.; Li, P.; Zhu, Q.; Zhou, G.; Luo, Z.; Luo, W. Speculation and negation scope detection via convolutional neural networks. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 815–825.

- Lazib, L.; Zhao, Y.; Qin, B.; Liu, T. Negation scope detection with recurrent neural networks models in review texts. Int. J. High Perform. Comput. Netw. 2019, 13, 211–221.

- Chapman, W.W.; Bridewell, W.; Hanbury, P.; Cooper, G.F.; Buchanan, B.G. A simple algorithm for identifying negated findings and diseases in discharge summaries. J. Biomed. Inform. 2001, 34, 301–310.

- Harkema, H.; Dowling, J.N.; Thornblade, T.; Chapman, W.W. ConText: An algorithm for determining negation, experiencer and temporal status from clinical reports. J. Biomed. Inform. 2009, 42, 839–851.

- Gindl, S.; Kaiser, K.; Miksch, S. Syntactical negation detection in clinical practice guidelines. Stud. Health Technol. Inform. 2008, 136, 187.

- Konstantinova, N.; De Sousa, S.C.; Díaz, N.P.C.; López, M.J.M.; Taboada, M.; Mitkov, R. A review corpus annotated for negation, speculation and their scope. In Proceedings of the LREC, Istanbul, Turkey, 21–27 May 2012; pp. 3190–3195.

- Reitan, J.; Faret, J.; Gambäck, B.; Bungum, L. Negation scope detection for Twitter sentiment analysis. In Proceedings of the 6th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Lisbon, Portugal, 17 September 2015; pp. 99–108.

- Kolhatkar, V.; Wu, H.; Cavasso, L.; Francis, E.; Shukla, K.; Taboada, M. The SFU opinion and comments corpus: A corpus for the analysis of online news comments. Corpus Pragmat. 2019, 4, 155–190.

- Elazhary, H. Negminer: An automated tool for mining negations from electronic narrative medical documents. Int. J. Intell. Syst. Appl. 2017, 9, 14.

- Marcus, M.P.; Marcinkiewicz, M.A. Building a Large Annotated Corpus of English: The Penn Treebank. Comput. Linguist. 1994, 19, 273.

- Taylor, A.; Marcus, M.; Santorini, B. The Penn Treebank: An overview. In Treebanks; Springer: Berlin/Heidelberg, Germany, 2003; pp. 5–22.

- Santorini, B. Part-of-Speech Tagging Guidelines for the Penn Treebank Project (3rd Revision); Tech. Rep. (CIS); University of Pennsylvania: Philadelphia, PA, USA, 1990; MS-CIS-90-47.

- Misuraca, M.; Forciniti, A.; Scepi, G.; Spano, M. Sentiment Analysis for Education with R: Packages, methods and practical applications. arXiv 2020, arXiv:2005.12840.

- Naldi, M. A review of sentiment computation methods with R packages. arXiv 2019, arXiv:1901.08319.

- Bose, S.; Saha, U.; Kar, D.; Goswami, S.; Nayak, A.K.; Chakrabarti, S. RSentiment: A tool to extract meaningful insights from textual reviews. In Proceedings of the 5th International Conference on Frontiers in Intelligent Computing: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 2017; pp. 259–268.

- Jockers, M. Package ‘Syuzhet’. 2017. Available online: https://cran.r-project.org/web/packages/syuzhet/index.html (accessed on 10 January 2023).

- Valdivia, A.; Luzón, M.V.; Herrera, F. Sentiment analysis in Tripadvisor. IEEE Intell. Syst. 2017, 32, 72–77.

- Liu, D.; Lei, L. The appeal to political sentiment: An analysis of Donald Trump’s and Hillary Clinton’s speech themes and discourse strategies in the 2016 US presidential election. Discourse Context Media 2018, 25, 143–152.

- Yoon, S.; Parsons, F.; Sundquist, K.; Julian, J.; Schwartz, J.E.; Burg, M.M.; Davidson, K.W.; Diaz, K.M. Comparison of different algorithms for sentiment analysis: Psychological stress notes. Stud. Health Technol. Inform. 2017, 245, 1292.

- Rinker, T. Package ‘Sentimentr’. 2017. Available online: https://cran.r-project.org/web/packages/sentimentr/index.html (accessed on 10 January 2023).

- Ikoro, V.; Sharmina, M.; Malik, K.; Batista-Navarro, R. Analyzing sentiments expressed on Twitter by UK energy company consumers. In Proceedings of the 2018 Fifth International Conference on Social Networks Analysis, Management and Security (SNAMS), Valencia, Spain, 15–18 October 2018; pp. 95–98.

- Weissman, G.E.; Ungar, L.H.; Harhay, M.O.; Courtright, K.R.; Halpern, S.D. Construct validity of six sentiment analysis methods in the text of encounter notes of patients with critical illness. J. Biomed. Inform. 2019, 89, 114–121.

- Pröllochs, N.; Feuerriegel, S.; Neumann, D. Generating Domain-Specific Dictionaries using Bayesian Learning. In Proceedings of the European Conference on Information Systems (ECIS), Münster, Germany, 26–29 May 2015.

- Kassraie, P.; Modirshanechi, A.; Aghajan, H.K. Election Vote Share Prediction using a Sentiment-based Fusion of Twitter Data with Google Trends and Online Polls. In Proceedings of the 6th International Conference on Data Science, Technology and Applications (DATA 2017), Madrid, Spain, 24–26 July 2017; pp. 363–370.

- Haghighi, N.N.; Liu, X.C.; Wei, R.; Li, W.; Shao, H. Using Twitter data for transit performance assessment: A framework for evaluating transit riders’ opinions about quality of service. Public Transp. 2018, 10, 363–377.

- Hu, M.; Liu, B. Mining opinion features in customer reviews. In Proceedings of the AAAI, San Jose, CA, USA, 25–29 July 2004; Volume 4, pp. 755–760.

- Hutto, C.; Gilbert, E. Vader: A parsimonious rule-based model for sentiment analysis of social media text. In Proceedings of the International AAAI Conference on Weblogs and Social Media, Ann Arbor, MI, USA, 1–4 June 2014; Volume 8.

- Newman, H.; Joyner, D. Sentiment analysis of student evaluations of teaching. In Proceedings of the International Conference on Artificial Intelligence in Education; Springer: Berlin/Heidelberg, Germany, 2018; pp. 246–250.

- Borg, A.; Boldt, M. Using VADER sentiment and SVM for predicting customer response sentiment. Expert Syst. Appl. 2020, 162, 113746.

- Wang, Z.; Ho, S.B.; Cambria, E. Multi-level fine-scaled sentiment sensing with ambivalence handling. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2020, 28, 683–697.

- Seliya, N.; Khoshgoftaar, T.M.; Hulse, J.V. A Study on the Relationships of Classifier Performance Metrics. In Proceedings of the 2009 21st IEEE International Conference on Tools with Artificial Intelligence, Newark, NJ, USA, 2–5 November 2009; pp. 59–66.

- Zaki, M.J.; Meira Jr, W. Data Mining and Machine Learning: Fundamental Concepts and Algorithms; Cambridge University Press: Cambridge, UK, 2019.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).