A Unifying Framework and Comparative Evaluation of Statistical and Machine Learning Approaches to Non-Specific Syndromic Surveillance

Abstract

1. Introduction

1.1. Contributions

1.2. Outline

2. Syndromic Surveillance

2.1. Overview of Prior Work in Syndromic Surveillance

2.2. Data for Syndromic Surveillance

2.3. Relation to Data Mining and Machine Learning

3. Non-Specific Syndromic Surveillance

3.1. Problem Definition

3.2. Modeling

3.2.1. Global Modeling

3.2.2. Local Modeling

Problem Formulation

Aggregation of p-Values

Problem of Multiple Testing

Relation to Syndromic Surveillance

4. Machine Learning Approaches to Non-Specific Syndromic Surveillance

4.1. Data Mining Surveillance System (DMSS)

4.2. What Is Strange about Recent Events? (WSARE)

- WSARE 2.0 merges the patients of the 35, 42, 49 and 56 prior days. The authors specifically selected these days to account for the day-of-the-week effect, i.e., the fact that observations differ depending on the day of the week: since all four offsets are multiples of seven, every reference day falls on the same weekday as the current day. For example, this effect can have a significant impact on the number of emergency department visits [55].

- WSARE 2.5 merges the patients of all prior days that have the same values for the environmental attributes as the current day. This has the advantage that the expectations are conditioned on the environmental attributes and that more patients are contained in the reference set, which allows more precise estimates.

- WSARE 3.0 learns a Bayesian network over all recent data, from which the reference set of patients is sampled. To learn the network, the patients of all previous days are merged into a single data set in which each row represents a patient. Each patient is characterized by the response attributes as well as by the environmental attributes of the day on which the patient arrived. Moreover, the authors use domain knowledge for the structure learning of the Bayesian network and prevent the nodes of the environmental attributes from having parent nodes. This is possible because the environmental attributes only serve as evidence for the sampling; predicting their distribution is not of interest. For the reference set, the authors generate 10,000 samples given the environmental attributes of the current day as evidence.
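The reference sets of WSARE 2.0 and WSARE 2.5 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the original WSARE implementation: the data layout (patients grouped by an integer day index, environmental attributes stored per day) and the function names `reference_set_wsare20` and `reference_set_wsare25` are hypothetical. WSARE 3.0 is left out because it additionally requires learning and sampling from a Bayesian network.

```python
from typing import Dict, List

# Hypothetical data layout: a patient is a dict of response attributes,
# patients are grouped by an integer day index, and each day has a dict
# of environmental attributes.
Patient = Dict[str, str]


def reference_set_wsare20(patients_by_day: Dict[int, List[Patient]], today: int) -> List[Patient]:
    """WSARE 2.0: pool the patients seen 35, 42, 49 and 56 days before `today`.

    All offsets are multiples of 7, so each reference day falls on the same
    weekday as `today`, which controls for the day-of-the-week effect.
    """
    reference: List[Patient] = []
    for offset in (35, 42, 49, 56):
        # Days without records simply contribute no patients.
        reference.extend(patients_by_day.get(today - offset, []))
    return reference


def reference_set_wsare25(
    patients_by_day: Dict[int, List[Patient]],
    env_by_day: Dict[int, Dict[str, str]],
    today: int,
) -> List[Patient]:
    """WSARE 2.5: pool the patients of all prior days whose environmental
    attributes are identical to those of `today`."""
    target = env_by_day[today]
    reference: List[Patient] = []
    for day in sorted(patients_by_day):
        if day < today and env_by_day.get(day) == target:
            reference.extend(patients_by_day[day])
    return reference


# Toy data: one patient per day over 60 days, two "seasons".
patients_by_day = {d: [{"symptom": "rash" if d % 2 else "nausea"}] for d in range(60)}
env_by_day = {d: {"season": "winter" if d < 30 else "spring"} for d in range(60)}
print(len(reference_set_wsare20(patients_by_day, today=59)))  # 4 (days 24, 17, 10, 3)
print(len(reference_set_wsare25(patients_by_day, env_by_day, today=59)))  # 29 (days 30 to 58)
```

The toy run shows the trade-off described above: WSARE 2.0 draws on only four days, whereas WSARE 2.5 pools every matching day and therefore obtains a much larger reference set.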

4.3. Eigenevent

5. Basic Statistical Approaches to Non-Specific Syndromic Surveillance

5.1. Parametric Distributions

- Gaussian. Although it is not suitable for count data, the Gaussian distribution is often used. It serves as a reference for the other distributions, which are specifically designed for count data.

- Poisson. The Poisson distribution is designed specifically for count data. To estimate its rate parameter, we use the maximum likelihood estimate, which is the mean of the observed counts.

- Negative Binomial. Since the distributional assumptions of the Poisson distribution are often violated due to overdispersion, we also include the Negative Binomial distribution. This distribution has an additional parameter, also referred to as the dispersion parameter, which allows controlling the variance of the distribution [56]. We estimate the parameters with and .

- Fisher’s Test. Since Fisher’s exact test has been used by most of the previously proposed approaches to non-specific syndromic surveillance, we also include it as a benchmark. Based on the hypergeometric distribution, it compares the proportion of cases with the syndrome among all cases in the previous data with the corresponding proportion on the current day. In particular, Fisher’s test is known to yield good results on small sample sizes [57], which is the case for the estimates derived from .
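The four local tests above can be sketched with SciPy as upper-tail p-values, i.e., the probability of observing at least the current count under a distribution fitted to the previous counts. The one-sided formulation, the function names, and in particular the method-of-moments estimators for the Negative Binomial distribution are assumptions made for this illustration; they are not necessarily the estimators used in the paper.

```python
import numpy as np
from scipy import stats


def p_gaussian(history, observed):
    """P(X >= observed) under a Gaussian fitted to the previous counts."""
    mu, sigma = np.mean(history), np.std(history, ddof=1)
    if sigma == 0:  # constant history: only counts above the mean are anomalous
        return 1.0 if observed <= mu else 0.0
    return float(stats.norm.sf(observed, loc=mu, scale=sigma))


def p_poisson(history, observed):
    """P(X >= observed) under a Poisson whose rate is the ML estimate (the mean)."""
    lam = np.mean(history)
    return float(stats.poisson.sf(observed - 1, lam))


def p_negbin(history, observed):
    """P(X >= observed) under a Negative Binomial fitted by the method of
    moments (an assumption of this sketch)."""
    m, v = np.mean(history), np.var(history, ddof=1)
    if v <= m:  # no overdispersion: moment estimates undefined, fall back to Poisson
        return p_poisson(history, observed)
    # In SciPy's (size, prob) parameterization these give mean m and variance v.
    size, prob = m * m / (v - m), m / v
    return float(stats.nbinom.sf(observed - 1, size, prob))


def p_fisher(syn_today, total_today, syn_past, total_past):
    """One-sided Fisher's exact test: is the syndrome's share higher today?"""
    table = [[syn_today, total_today - syn_today],
             [syn_past, total_past - syn_past]]
    _, p = stats.fisher_exact(table, alternative="greater")
    return float(p)


history = [1, 9, 2, 8, 5]  # mean 5, sample variance 12.5 (overdispersed)
print(p_poisson(history, 12), p_negbin(history, 12))
print(p_fisher(10, 20, 10, 200))
```

With an overdispersed history such as `[1, 9, 2, 8, 5]`, the Negative Binomial p-value for a count of 12 is larger than the Poisson one because the fitted distribution has a heavier right tail, so the same count is flagged less readily.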

Modifications for Adapting the Sensitivity

5.2. Syndromic Surveillance Methods

- EARS C1 and EARS C2 are variants of the Early Aberration Reporting System [37,59], which rely on the assumption of a Gaussian distribution. The difference between C1 and C2 lies in the gap of two time points that C2 adds between the reference values and the currently observed count; in both methods, the count is assumed to be normally distributed with mean and standard deviation estimated from the respective reference values.

- EARS C3 combines the results of the C2 method over the current and the two previous observations. For convenience of notation, the incidence counts for the C3 method are transformed according to the statistic so that they fit a normal distribution.

- Bayes method. In contrast to the family of EARS C-algorithms, the Bayes algorithm [62] relies on the assumption of a Negative Binomial distribution. Its parameters are initialized such that the variance of the distribution, relative to its mean, only depends on the window size w. For small w, a larger variance is assumed due to insufficient data, while for large w the distribution converges to a Poisson distribution.

- RKI method. Since the Gaussian distribution is not suitable for count data with a low mean, the RKI algorithm, as implemented by Salmon et al. [58], assumes a Poisson distribution.
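A compact sketch of the five methods above, operating on a plain list of daily counts. The window conventions (C1 uses the w counts immediately preceding t, C2 skips the two most recent ones, C3 sums max(0, C2 − 1) over t, t − 1 and t − 2) follow the usual EARS definitions. The Negative Binomial parameters of the Bayes method (size equal to the window sum plus 1/2, success probability w/(w + 1)) reflect our reading of the implementation in the R package surveillance [58]. Both should be treated as assumptions, and all function names are hypothetical.

```python
import numpy as np
from scipy import stats


def ears_window(counts, t, w, gap):
    """Reference values c[t-w-gap], ..., c[t-1-gap] for the count at index t."""
    start, end = t - w - gap, t - gap
    if start < 0:
        raise ValueError("not enough history for this window")
    return np.asarray(counts[start:end], dtype=float)


def ears_z(counts, t, w=7, gap=0):
    """C1 (gap=0) or C2 (gap=2) statistic: standardized distance to the window mean."""
    ref = ears_window(counts, t, w, gap)
    sd = max(ref.std(ddof=1), 1e-9)  # hypothetical floor for a constant window
    return (counts[t] - ref.mean()) / sd


def ears_p_value(counts, t, w=7, gap=0):
    """Upper-tail Gaussian p-value of the C1/C2 statistic."""
    return float(stats.norm.sf(ears_z(counts, t, w, gap)))


def ears_c3(counts, t, w=7):
    """C3: sum of the exceedances max(0, C2 - 1) at t, t-1 and t-2."""
    return sum(max(0.0, ears_z(counts, t - k, w, gap=2) - 1.0) for k in range(3))


def bayes_p_value(counts, t, w=7):
    """P(X >= c[t]) under NegBin(size = window sum + 1/2, prob = w / (w + 1))."""
    ref = ears_window(counts, t, w, gap=0)
    size, prob = ref.sum() + 0.5, w / (w + 1.0)
    return float(stats.nbinom.sf(counts[t] - 1, size, prob))


def rki_p_value(counts, t, w=7):
    """P(X >= c[t]) under a Poisson with the window mean as rate."""
    lam = ears_window(counts, t, w, gap=0).mean()
    return float(stats.poisson.sf(counts[t] - 1, lam))


counts = [5, 4, 6, 5, 5, 4, 6, 5, 5, 4, 6, 20]  # spike on the last day
t = len(counts) - 1
print(ears_p_value(counts, t), ears_p_value(counts, t, gap=2), ears_c3(counts, t))
print(bayes_p_value(counts, t), rki_p_value(counts, t))
```

With these parameters, the variance-to-mean ratio of the Bayes predictive distribution is 1/prob = (w + 1)/w, which shrinks toward 1 (the Poisson case) as the window grows, matching the behaviour described for the Bayes method.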

6. Evaluation

6.1. Evaluation Setup

6.1.1. Evaluation Measures

6.1.2. Parameter Configurations

6.1.3. Additional Baselines

6.1.4. Implementation

6.2. Experiments on Synthetic Data

6.2.1. Data

6.2.2. Results

Comparison to the Benchmarks

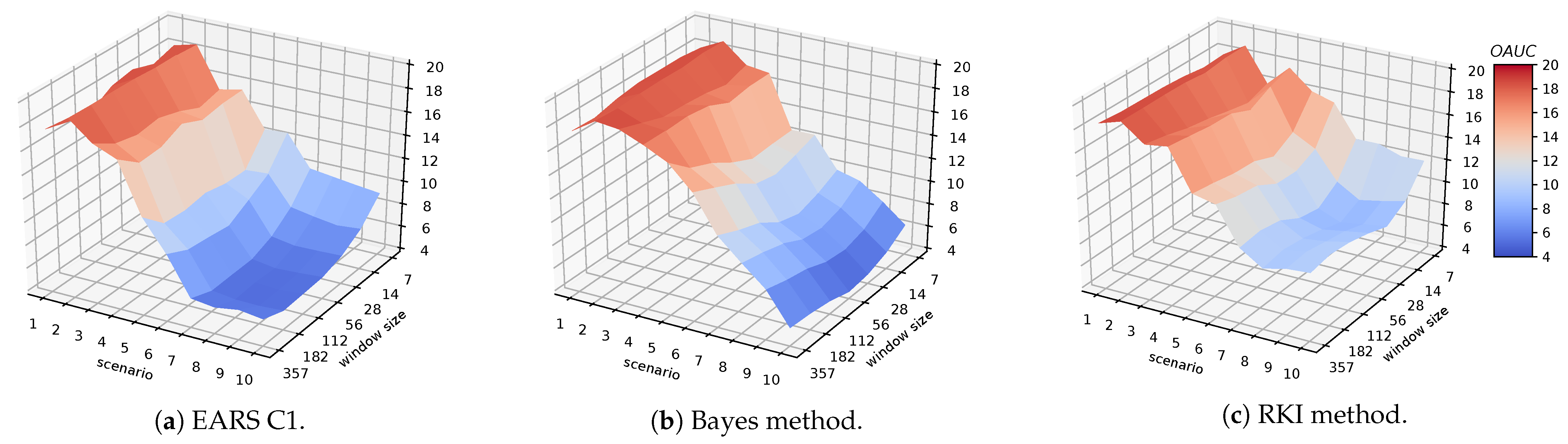

Evaluation of Syndromic Surveillance Methods

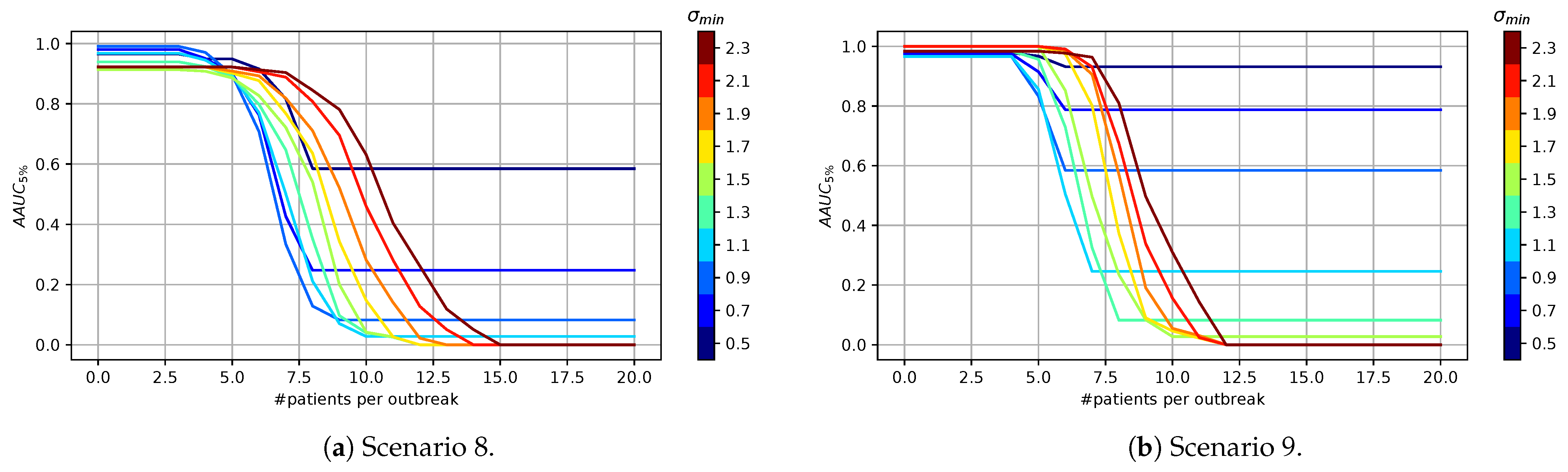

Sensitivity of Statistical Tests

6.3. Experiments on Real Data

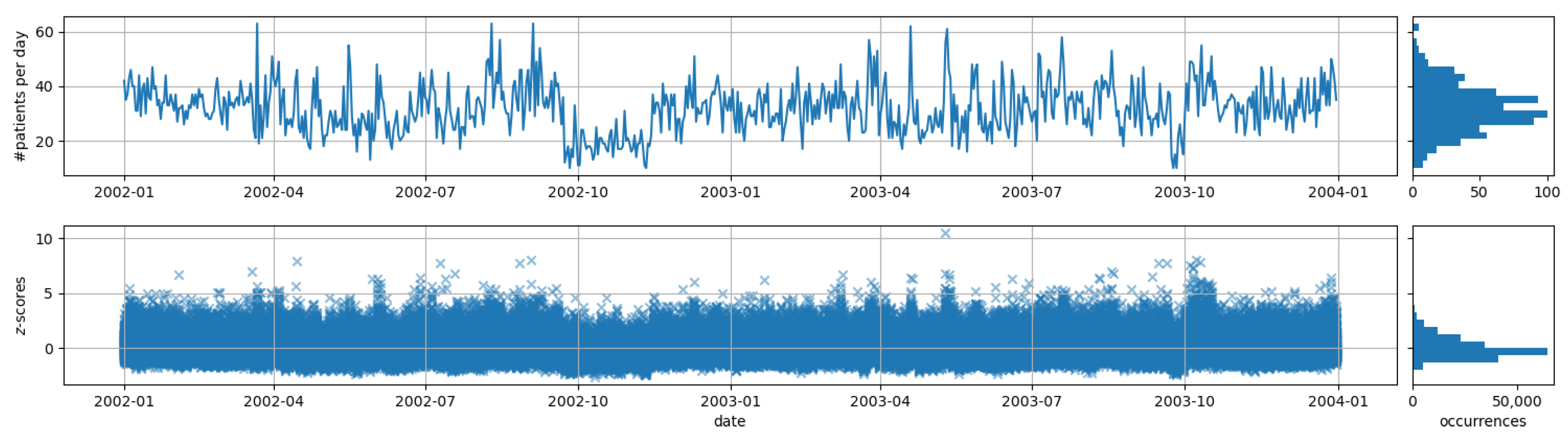

6.3.1. Data

6.3.2. Evaluation Process

6.3.3. Configuration of the Algorithms

6.3.4. Results

Comparison to the Benchmarks

Evaluation of Syndromic Surveillance Methods

Results on Specific Syndromic Surveillance

Sensitivity of Statistical Tests

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Noufaily, A.; Enki, D.; Farrington, P.; Garthwaite, P.; Andrews, N.; Charlett, A. An improved algorithm for outbreak detection in multiple surveillance systems. Stat. Med. 2013, 32, 1206–1222.
2. Henning, K.J. What is syndromic surveillance? Morb. Mortal. Wkly. Rep. Suppl. 2004, 53, 7–11.
3. Buckeridge, D.L. Outbreak detection through automated surveillance: A review of the determinants of detection. J. Biomed. Inform. 2007, 40, 370–379.
4. Shmueli, G.; Burkom, H. Statistical challenges facing early outbreak detection in biosurveillance. Technometrics 2010, 52, 39–51.
5. Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable. 2020. Available online: http://christophm.github.io/interpretable-ml-book/ (accessed on 20 October 2020).
6. Wong, W.K.; Moore, A.; Cooper, G.; Wagner, M. Bayesian Network Anomaly Pattern Detection for Disease Outbreaks. In Proceedings of the 20th International Conference on Machine Learning (ICML), Washington, DC, USA, 21–24 August 2003; Volume 2, pp. 808–815.
7. Fanaee-T, H.; Gama, J. EigenEvent: An Algorithm for Event Detection from Complex Data Streams in Syndromic Surveillance. Intell. Data Anal. 2015, 19, 597–616.
8. Kulessa, M.; Loza Mencía, E.; Fürnkranz, J. Revisiting Non-Specific Syndromic Surveillance. In Proceedings of the 19th International Symposium Intelligent Data Analysis (IDA), Konstanz, Germany, 27–29 April 2021.
9. Fricker, R.D. Syndromic surveillance. In Wiley StatsRef: Statistics Reference Online; American Cancer Society, 2014. Available online: https://onlinelibrary.wiley.com/doi/full/10.1002/9781118445112.stat03712 (accessed on 19 August 2020).
10. Buehler, J.W.; Hopkins, R.S.; Overhage, J.M.; Sosin, D.M.; Tong, V. Framework for Evaluating Public Health Surveillance Systems for Early Detection of Outbreaks. 2008. Available online: https://www.cdc.gov/mmwr/preview/mmwrhtml/rr5305a1.htm (accessed on 14 July 2020).
11. Rappold, A.G.; Stone, S.L.; Cascio, W.E.; Neas, L.M.; Kilaru, V.J.; Carraway, M.S.; Szykman, J.J.; Ising, A.; Cleve, W.E.; Meredith, J.T.; et al. Peat bog wildfire smoke exposure in rural North Carolina is associated with cardiopulmonary emergency department visits assessed through syndromic surveillance. Environ. Health Perspect. 2011, 119, 1415–1420.
12. Hiller, K.M.; Stoneking, L.; Min, A.; Rhodes, S.M. Syndromic surveillance for influenza in the emergency department: A systematic review. PLoS ONE 2013, 8, e73832.
13. Hope, K.; Durrheim, D.N.; Muscatello, D.; Merritt, T.; Zheng, W.; Massey, P.; Cashman, P.; Eastwood, K. Identifying pneumonia outbreaks of public health importance: Can emergency department data assist in earlier identification? Aust. N. Z. J. Public Health 2008, 32, 361–363.
14. Edge, V.L.; Pollari, F.; King, L.; Michel, P.; McEwen, S.A.; Wilson, J.B.; Jerrett, M.; Sockett, P.N.; Martin, S.W. Syndromic surveillance of norovirus using over the counter sales of medications related to gastrointestinal illness. Can. J. Infect. Dis. Med. Microbiol. 2006, 17.
15. Reis, B.Y.; Pagano, M.; Mandl, K.D. Using temporal context to improve biosurveillance. Proc. Natl. Acad. Sci. USA 2003, 100, 1961–1965.
16. Reis, B.Y.; Mandl, K.D. Time series modeling for syndromic surveillance. BMC Med. Inform. Decis. Mak. 2003, 3, 1–11.
17. Ansaldi, F.; Orsi, A.; Altomonte, F.; Bertone, G.; Parodi, V.; Carloni, R.; Moscatelli, P.; Pasero, E.; Oreste, P.; Icardi, G. Emergency department syndromic surveillance system for early detection of 5 syndromes: A pilot project in a reference teaching hospital in Genoa, Italy. J. Prev. Med. Hyg. 2008, 49, 131–135.
18. Wu, T.S.J.; Shih, F.Y.F.; Yen, M.Y.; Wu, J.S.J.; Lu, S.W.; Chang, K.C.M.; Hsiung, C.; Chou, J.H.; Chu, Y.T.; Chang, H.; et al. Establishing a nationwide emergency department-based syndromic surveillance system for better public health responses in Taiwan. BMC Public Health 2008, 8, 18.
19. Heffernan, R.; Mostashari, F.; Das, D.; Karpati, A.; Kulldorff, M.; Weiss, D. Syndromic Surveillance in Public Health Practice, New York City. Emerg. Infect. Dis. 2004, 10, 858–864.
20. Lober, W.B.; Trigg, L.J.; Karras, B.T.; Bliss, D.; Ciliberti, J.; Stewart, L.; Duchin, J.S. Syndromic surveillance using automated collection of computerized discharge diagnoses. J. Urban Health 2003, 80, i97–i106.
21. Ising, A.I.; Travers, D.; MacFarquhar, J.; Kipp, A.; Waller, A.E. Triage note in emergency department-based syndromic surveillance. Adv. Dis. Surveill. 2006, 1, 34.
22. Reis, B.Y.; Mandl, K.D. Syndromic surveillance: The effects of syndrome grouping on model accuracy and outbreak detection. Ann. Emerg. Med. 2004, 44, 235–241.
23. Begier, E.M.; Sockwell, D.; Branch, L.M.; Davies-Cole, J.O.; Jones, L.H.; Edwards, L.; Casani, J.A.; Blythe, D. The national capitol region’s emergency department syndromic surveillance system: Do chief complaint and discharge diagnosis yield different results? Emerg. Infect. Dis. 2003, 9, 393.
24. Fleischauer, A.T.; Silk, B.J.; Schumacher, M.; Komatsu, K.; Santana, S.; Vaz, V.; Wolfe, M.; Hutwagner, L.; Cono, J.; Berkelman, R.; et al. The validity of chief complaint and discharge diagnosis in emergency department–based syndromic surveillance. Acad. Emerg. Med. 2004, 11, 1262–1267.
25. Ivanov, O.; Wagner, M.M.; Chapman, W.W.; Olszewski, R.T. Accuracy of three classifiers of acute gastrointestinal syndrome for syndromic surveillance. In Proceedings of the AMIA Symposium. American Medical Informatics Association, San Antonio, TX, USA, 9–13 November 2002; p. 345.
26. Centers for Disease Control and Prevention. Syndrome Definitions for Diseases Associated with Critical Bioterrorism-Associated Agents. 2003. Available online: https://emergency.cdc.gov/surveillance/syndromedef/pdf/syndromedefinitions.pdf (accessed on 19 August 2020).
27. Roure, J.; Dubrawski, A.; Schneider, J. A study into detection of bio-events in multiple streams of surveillance data. In NSF Workshop on Intelligence and Security Informatics; Springer: Berlin/Heidelberg, Germany, 2007; pp. 124–133.
28. Held, L.; Höhle, M.; Hofmann, M. A statistical framework for the analysis of multivariate infectious disease surveillance counts. Stat. Model. 2005, 5, 187–199.
29. Kulldorff, M.; Mostashari, F.; Duczmal, L.; Katherine Yih, W.; Kleinman, K.; Platt, R. Multivariate scan statistics for disease surveillance. Stat. Med. 2007, 26, 1824–1833.
30. Webb, G.I.; Hyde, R.; Cao, H.; Nguyen, H.L.; Petitjean, F. Characterizing concept drift. Data Min. Knowl. Discov. 2016, 30, 964–994.
31. Hughes, H.; Morbey, R.; Hughes, T.; Locker, T.; Shannon, T.; Carmichael, C.; Murray, V.; Ibbotson, S.; Catchpole, M.; McCloskey, B.; et al. Using an emergency department syndromic surveillance system to investigate the impact of extreme cold weather events. Public Health 2014, 128, 628–635.
32. Dirmyer, V.F. Using Real-Time Syndromic Surveillance to Analyze the Impact of a Cold Weather Event in New Mexico. J. Environ. Public Health 2018, 2018, 2185704.
33. Johnson, K.; Alianell, A.; Radcliffe, R. Seasonal patterns in syndromic surveillance emergency department data due to respiratory illnesses. Online J. Public Health Inform. 2014, 6, e66.
34. Buckeridge, D.L.; Burkom, H.; Campbell, M.; Hogan, W.R.; Moore, A.W. Algorithms for rapid outbreak detection: A research synthesis. J. Biomed. Inform. 2005, 38, 99–113.
35. Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. 2009, 41, 1–58.
36. Wong, W.K.; Moore, A.; Cooper, G.; Wagner, M. Rule-Based Anomaly Pattern Detection for Detecting Disease Outbreaks. In Proceedings of the 18th National Conference on Artificial Intelligence (AAAI), Edmonton, AB, Canada, 28 July–1 August 2002; American Association for Artificial Intelligence: Menlo Park, CA, USA, 2002; pp. 217–223.
37. Hutwagner, L.; Thompson, W.; Seeman, G.; Treadwell, T. The bioterrorism preparedness and response early aberration reporting system (EARS). J. Urban Health 2003, 80, i89–i96.
38. Dong, G.; Li, J. Efficient mining of emerging patterns: Discovering trends and differences. In Proceedings of the 5th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 15–18 August 1999; pp. 43–52.
39. Bay, S.; Pazzani, M. Detecting group differences: Mining contrast sets. Data Min. Knowl. Discov. 2001, 5, 213–246.
40. Novak, P.K.; Lavrač, N.; Webb, G.I. Supervised descriptive rule discovery: A unifying survey of contrast set, emerging pattern and subgroup mining. J. Mach. Learn. Res. 2009, 10, 377–403.
41. Wrobel, S. An algorithm for multi-relational discovery of subgroups. In European Symposium on Principles of Data Mining and Knowledge Discovery; Springer: Berlin/Heidelberg, Germany, 1997; pp. 78–87.
42. Poon, H.; Domingos, P. Sum-product networks: A New Deep Architecture. In Proceedings of the 27th Conference on Uncertainty in Artificial Intelligence (UAI), Barcelona, Spain, 14–17 July 2011; pp. 337–346.
43. Jensen, F.V. An Introduction to Bayesian Networks; UCL Press: London, UK, 1996; Volume 210.
44. Duivesteijn, W.; Feelders, A.J.; Knobbe, A. Exceptional model mining. Data Min. Knowl. Discov. 2016, 30, 47–98.
45. Li, S.C.X.; Jiang, B.; Marlin, B. MisGAN: Learning from incomplete data with generative adversarial networks. arXiv 2019, arXiv:1902.09599.
46. Gao, J.; Tembine, H. Distributed mean-field-type filters for big data assimilation. In Proceedings of the 2016 IEEE 18th International Conference on High Performance Computing and Communications; IEEE 14th International Conference on Smart City; IEEE 2nd International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Sydney, NSW, Australia, 12–14 December 2016; pp. 1446–1453.
47. Brossette, S.; Sprague, A.; Hardin, J.; Waites, K.; Jones, W.; Moser, S. Association Rules and Data Mining in Hospital Infection Control and Public Health Surveillance. J. Am. Med. Inform. Assoc. 1998, 5, 373–381.
48. Wong, W.K.; Moore, A.; Cooper, G.; Wagner, M. What’s Strange About Recent Events (WSARE): An Algorithm for the Early Detection of Disease Outbreaks. J. Mach. Learn. Res. 2005, 6, 1961–1998.
49. Amrhein, V.; Greenland, S.; McShane, B. Scientists rise up against statistical significance. Nature 2019, 567, 305–307.
50. Knobbe, A.; Crémilleux, B.; Fürnkranz, J.; Scholz, M. From local patterns to global models: The LeGo approach to data mining. In Workshop Proceedings: From Local Patterns to Global Models (Held in Conjunction with ECML/PKDD-08); Utrecht University: Antwerp, Belgium, 2008; Volume 8, pp. 1–16.
51. Heard, N.A.; Rubin-Delanchy, P. Choosing between methods of combining p-values. Biometrika 2018, 105, 239–246.
52. Vial, F.; Wei, W.; Held, L. Methodological challenges to multivariate syndromic surveillance: A case study using Swiss animal health data. BMC Vet. Res. 2016, 12, 288.
53. Lindquist, M.A.; Mejia, A. Zen and the art of multiple comparisons. Psychosom. Med. 2015, 77, 114.
54. Leek, J.T.; Storey, J.D. A general framework for multiple testing dependence. Proc. Natl. Acad. Sci. USA 2008, 105, 18718–18723.
55. Faryar, K.A. The Effects of Weekday, Season, Federal Holidays, and Severe Weather Conditions on Emergency Department Volume in Montgomery County, Ohio; Wright State University: Dayton, OH, USA, 2013.
56. Hilbe, J.M. Modeling Count Data. In International Encyclopedia of Statistical Science; Springer: Berlin/Heidelberg, Germany, 2011; pp. 836–839.
57. Fisher, R.A. Statistical Methods for Research Workers, 5th ed.; Oliver and Boyd: Edinburgh, UK; London, UK, 1934.
58. Salmon, M.; Schumacher, D.; Höhle, M. Monitoring count time series in R: Aberration detection in public health surveillance. J. Stat. Softw. 2016, 70, 1–35.
59. Fricker, R., Jr.; Hegler, B.; Dunfee, D. Comparing syndromic surveillance detection methods: EARS’ versus a CUSUM-based methodology. Stat. Med. 2008, 27, 3407–3429.
60. Bédubourg, G.; Le Strat, Y. Evaluation and comparison of statistical methods for early temporal detection of outbreaks: A simulation-based study. PLoS ONE 2017, 12, e0181227.
61. Hutwagner, L.; Browne, T.; Seeman, G.; Fleischauer, A. Comparing aberration detection methods with simulated data. Emerg. Infect. Dis. 2005, 11, 314–316.
62. Riebler, A. Empirischer Vergleich von Statistischen Methoden zur Ausbruchserkennung bei Surveillance Daten. Bachelor’s Thesis, Department of Statistics, University of Munich, Munich, Germany, 2004.
63. Fawcett, T.; Provost, F. Activity monitoring: Noticing interesting changes in behavior. In Proceedings of the 5th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 15–18 August 1999; pp. 53–62.
64. Gonzales, C.; Torti, L.; Wuillemin, P.H. aGrUM: A Graphical Universal Model framework. In Proceedings of the 30th International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Arras, France, 27–30 June 2017; pp. 171–177.
65. Raschka, S. MLxtend: Providing machine learning and data science utilities and extensions to Python’s scientific computing stack. J. Open Source Softw. 2018, 3, 638.
66. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
67. Fernandes, S.; Fanaee, T.H.; Gama, J. The Initialization and Parameter Setting Problem in Tensor Decomposition-Based Link Prediction. In Proceedings of the 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Tokyo, Japan, 19–21 October 2017; pp. 99–108.
68. Gräff, I.; Goldschmidt, B.; Glien, P.; Bogdanow, M.; Fimmers, R.; Hoeft, A.; Kim, S.C.; Grigutsch, D. The German version of the Manchester Triage System and its quality criteria: First assessment of validity and reliability. PLoS ONE 2014, 9, e88995.

| Data Source | Type |
|---|---|
| emergency department visits | clinical |
| emergency hotline calls | clinical |
| insurance claims | clinical |
| laboratory results | clinical |
| … | … |
| school or work absenteeism | alternative |
| pharmacy sales | alternative |
| internet-based searches | alternative |
| animal illnesses or deaths | alternative |
| … | … |

| Notation | Meaning |
|---|---|
| | response attributes |
| | environmental attributes |
| | population of cases |
| | a single case |
| t | index for the time slot |
| | cases of time slot t |
| | environmental setting for time slot t |
| | information about time slot t |
| | information about previous time slots |
| | global model |
| | expectation for |
| | set of characteristics |
| | expectations for the characteristics |
| | local model creator |
| | a local model monitoring characteristic X |
| | set of all possible syndromes |
| | a particular syndrome |
| | count of syndrome s for time slot t |
| | time series of counts for syndrome s |
| | reference set of patients |

| Attribute | Type | Values | #Values |
|---|---|---|---|
| age | response | child, senior, … | 3 |
| gender | response | female, male | 2 |
| action | response | purchase, evisit, … | 3 |
| symptom | response | nausea, rash, … | 4 |
| drug | response | aspirin, nyquil, … | 4 |
| location | spatial | center, east, … | 9 |
| flu level | environmental | high, low, … | 4 |
| day of week | environmental | weekday, sunday, … | 3 |
| weather | environmental | cold, hot | 2 |
| season | environmental | fall, spring, … | 4 |

| Field | Type | Values | #Values |
|---|---|---|---|
| age | response | child, senior, … | 3 |
| gender | response | female, male | 2 |
| MTS | response | diarrhea, asthma, … | 16 |
| fever | response | normal, high, very high | 3 |
| pulse | response | low, normal, high | 3 |
| respiration | response | low, normal, high | 3 |
| oxygen level | response | low, normal | 2 |
| blood pressure | response | normal, high, very high | 3 |
| season | environmental | fall, spring, … | 4 |
| weekday | environmental | Monday, Tuesday, … | 7 |

| Approach | Micro | Macro |
|---|---|---|
| Global modeling | | |
| Control Chart | 5.090 | 5.086 |
| Moving Average | 6.977 | 7.012 |
| Linear Regression | 3.308 | 3.279 |
| Eigenevent (rerun) | 5.721 | 4.993 |
| Eigenevent (imported p-values) | 4.596 | 4.391 |
| Local modeling | | |
| Gaussian | 0.971 | 0.941 |
| Poisson | 1.329 | 1.347 |
| Negative Binomial | 1.031 | 0.966 |
| Fisher’s test | 1.057 | 1.057 |
| WSARE 2.0 (min. p-value) | 3.054 | 2.963 |
| WSARE 2.5 (min. p-value) | 1.359 | 1.321 |
| WSARE 3.0 (min. p-value) | 0.925 | 0.898 |
| WSARE 2.0 (permutation test) | 3.922 | 3.805 |
| WSARE 2.5 (permutation test) | 1.656 | 1.614 |
| WSARE 3.0 (permutation test) | 1.348 | 1.325 |
| WSARE 2.0 (imported p-values) | 4.943 | 4.925 |
| WSARE 2.5 (imported p-values) | 1.966 | 1.931 |
| WSARE 3.0 (imported p-values) | 1.608 | 1.610 |
| DMSS (, ) | 3.270 | 3.310 |
| DMSS (, ) | 3.078 | 3.011 |
| DMSS (, ) | 3.384 | 3.433 |
| DMSS (, ) | 2.935 | 2.985 |
| DMSS (, ) | 2.838 | 2.817 |
| DMSS (, ) | 3.057 | 3.070 |
| DMSS (, ) | 2.839 | 2.819 |
| DMSS (, ) | 2.702 | 2.764 |
| DMSS (, ) | 2.955 | 2.996 |

| Attribute | Coefficient | Value | Attribute | Coefficient | Value |
|---|---|---|---|---|---|
| flu level | 2.805 | | season | 0.266 | |
| | 6.221 | | | 2.805 | |
| | −5.830 | | | 3.345 | |
| | −3.197 | | | −6.417 | |
| day of week | 1.063 | | weather | −8.776 | |
| | 0.683 | | | 8.776 | |
| | −1.746 | | | | |

| Algorithm / size of the sliding window | 7 | 14 | 28 | 56 | 112 | 182 | 357 |
|---|---|---|---|---|---|---|---|
| EARS C1 | 4.885 | 2.826 | 1.905 | 1.620 | 1.572 | 1.533 | 0.997 |
| EARS C2 | 4.642 | 2.445 | 1.723 | 1.651 | 1.635 | 1.568 | 0.969 |
| EARS C3 | 3.858 | 2.580 | 2.008 | 1.846 | 1.708 | 1.605 | 1.309 |
| RKI | 2.370 | 2.038 | 1.767 | 1.851 | 2.033 | 2.125 | 1.386 |
| Bayes | 2.302 | 1.662 | 1.760 | 1.857 | 2.003 | 2.203 | 1.312 |

| Scenario | Syndrome | Daily | |
|---|---|---|---|
| 1 | MTS = unease | 22.175 | 6.432 |
| 2 | age = adult AND blood pressure = very high | 16.568 | 4.554 |
| 3 | fever = high | 11.952 | 5.662 |
| 4 | gender = female AND MTS = abdominal pain | 7.274 | 2.787 |
| 5 | age = senior AND fever = high | 3.403 | 2.162 |
| 6 | gender = male AND MTS = diarrhea | 2.108 | 1.570 |
| 7 | MTS = gastrointestinal bleeding | 1.168 | 1.104 |
| 8 | age = adult AND MTS = collapse | 0.618 | 0.777 |
| 9 | fever = high AND respiration = high | 0.327 | 0.584 |
| 10 | MTS = asthma | 0.068 | 0.263 |

| Approach | Scenario 1 | Scenario 2 | Scenario 3 | Scenario 4 | Scenario 5 | Scenario 6 | Scenario 7 | Scenario 8 | Scenario 9 | Scenario 10 | Avg. Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Global modeling | | | | | | | | | | | |
| Linear Regre. | 16.43 (1) | 17.97 (1) | 15.88 (1) | 18.09 (15) | 17.76 (12) | 13.97 (10) | 15.83 (13) | 17.06 (13) | 15.91 (13) | 13.33 (13) | 9.2 |
| Control Chart | 17.32 (2) | 18.45 (7) | 16.08 (4) | 18.08 (14) | 18.38 (15) | 16.02 (14) | 16.77 (15) | 17.46 (14) | 16.87 (15) | 15.35 (15) | 11.5 |
| Moving Avg. | 17.45 (4) | 18.47 (8) | 17.67 (12) | 17.42 (8) | 18.28 (14) | 17.67 (16) | 17.89 (16) | 17.96 (15) | 18.05 (16) | 15.08 (14) | 12.3 |
| Eigenevent | 17.77 (9) | 18.30 (5) | 16.85 (7) | 18.69 (16) | 18.50 (16) | 16.95 (15) | 16.58 (14) | 18.20 (16) | 16.83 (14) | 16.35 (16) | 12.8 |
| Local modeling | | | | | | | | | | | |
| Gaussian | 17.55 (6) | 18.88 (11) | 18.02 (13) | 16.95 (5) | 14.19 (7) | 9.82 (5) | 5.80 (3) | 5.78 (4) | 6.15 (5) | 5.84 (4) | 6.3 |
| Poisson | 18.26 (15) | 18.92 (14) | 17.58 (11) | 17.46 (9) | 14.79 (8) | 12.14 (8) | 9.04 (7) | 9.17 (8) | 9.83 (11) | 10.01 (11) | 10.2 |
| Neg. Binomial | 17.65 (8) | 18.41 (6) | 17.16 (10) | 14.04 (1) | 13.33 (5) | 8.93 (4) | 5.13 (1) | 5.37 (2) | 5.83 (4) | 6.06 (8) | 4.9 |
| Fisher’s Test | 17.43 (3) | 19.00 (16) | 18.93 (16) | 17.66 (13) | 17.77 (13) | 15.69 (13) | 12.25 (11) | 10.57 (11) | 9.03 (10) | 6.05 (7) | 11.3 |
| WSARE 2.0 | 17.49 (5) | 18.25 (4) | 17.06 (8) | 17.50 (12) | 16.39 (10) | 15.64 (12) | 14.40 (12) | 12.61 (12) | 12.23 (12) | 11.47 (12) | 9.9 |
| WSARE 2.5 | 18.29 (16) | 18.20 (3) | 18.80 (15) | 17.31 (7) | 15.90 (9) | 13.68 (9) | 11.33 (9) | 9.83 (9) | 8.90 (8) | 6.77 (9) | 9.4 |
| WSARE 3.0 | 17.78 (10) | 18.14 (2) | 18.68 (14) | 17.46 (10) | 16.90 (11) | 14.70 (11) | 11.34 (10) | 10.04 (10) | 7.60 (7) | 6.04 (6) | 9.1 |
| C1 () | 17.85 (12) | 18.88 (10) | 16.03 (2) | 15.99 (3) | 10.52 (1) | 8.13 (1) | 5.65 (2) | 5.33 (1) | 5.42 (1) | 5.57 (2) | 3.5 |
| C2 () | 17.59 (7) | 18.95 (15) | 16.07 (3) | 16.16 (4) | 10.86 (3) | 8.47 (2) | 6.00 (4) | 5.75 (3) | 5.81 (3) | 5.96 (5) | 4.9 |
| C3 () | 17.81 (11) | 18.89 (13) | 16.71 (6) | 15.93 (2) | 10.69 (2) | 8.82 (3) | 6.07 (5) | 5.82 (5) | 5.69 (2) | 5.71 (3) | 5.2 |
| Bayes () | 18.14 (14) | 18.79 (9) | 17.15 (9) | 17.49 (11) | 13.15 (4) | 11.78 (6) | 8.92 (6) | 7.87 (6) | 6.42 (6) | 4.27 (1) | 7.2 |
| RKI () | 18.05 (13) | 18.88 (12) | 16.16 (5) | 17.07 (6) | 13.33 (6) | 11.83 (7) | 9.22 (8) | 8.66 (7) | 9.00 (9) | 9.32 (10) | 8.3 |

| Attribute | Coefficient | Value | Attribute | Coefficient | Value |
|---|---|---|---|---|---|
| weekday | 11.219 | | season | −8.385 | |
| | 0.840 | | | 6.225 | |
| | −2.436 | | | 5.527 | |
| | −0.021 | | | −3.367 | |
| | 3.886 | | | | |
| | −5.411 | | | | |
| | −8.077 | | | | |

| Local Modeling | Scenario 1 | Scenario 2 | Scenario 3 | Scenario 4 | Scenario 5 | Scenario 6 | Scenario 7 | Scenario 8 | Scenario 9 | Scenario 10 | Avg. Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Gaussian | 14.79 (10) | 9.64 (6) | 13.02 (10) | 5.31 (6) | 2.76 (3) | 3.10 (7) | 2.16 (8) | 1.31 (9) | 0.76 (8) | 0.48 (12) | 7.9 |
| Poisson | 14.63 (8) | 9.57 (4) | 12.63 (9) | 5.29 (4) | 2.75 (2) | 3.08 (6) | 2.09 (4) | 1.23 (4) | 0.69 (2) | 0.40 (5) | 4.8 |
| Neg. Binomial | 14.81 (11) | 9.66 (7) | 13.13 (11) | 5.32 (7) | 2.76 (4) | 3.06 (5) | 2.25 (11) | 1.23 (4) | 0.69 (2) | 0.40 (5) | 6.7 |
| Fisher’s Test | 14.65 (9) | 8.67 (1) | 13.15 (12) | 5.09 (1) | 2.74 (1) | 2.90 (3) | 2.11 (5) | 1.16 (1) | 0.74 (6) | 0.40 (4) | 4.3 |
| WSARE 2.0 | 14.93 (12) | 10.18 (10) | 11.62 (7) | 6.80 (12) | 3.92 (12) | 3.23 (11) | 2.29 (12) | 1.46 (12) | 0.69 (5) | 0.40 (3) | 9.6 |
| WSARE 2.5 | 13.94 (3) | 8.72 (3) | 11.16 (6) | 5.73 (9) | 2.97 (7) | 2.88 (2) | 2.21 (9) | 1.17 (2) | 0.75 (7) | 0.27 (1) | 4.9 |
| WSARE 3.0 | 14.34 (6) | 8.70 (2) | 11.66 (8) | 5.20 (2) | 3.09 (10) | 2.81 (1) | 2.12 (6) | 1.21 (3) | 0.68 (1) | 0.40 (2) | 4.1 |
| C1 () | 14.16 (4) | 10.17 (9) | 9.63 (1) | 5.80 (11) | 3.09 (9) | 3.16 (9) | 2.03 (3) | 1.26 (7) | 0.78 (9) | 0.41 (10) | 7.2 |
| C2 () | 14.40 (7) | 10.20 (11) | 9.76 (2) | 5.80 (10) | 3.09 (11) | 3.15 (8) | 2.01 (2) | 1.27 (8) | 0.79 (10) | 0.41 (8) | 7.7 |
| C3 () | 13.83 (1) | 11.01 (12) | 10.95 (5) | 5.65 (8) | 3.08 (8) | 3.52 (12) | 2.25 (10) | 1.40 (11) | 0.81 (11) | 0.41 (8) | 8.6 |
| Bayes () | 13.84 (2) | 9.87 (8) | 9.91 (4) | 5.30 (5) | 2.85 (5) | 2.94 (4) | 1.98 (1) | 1.32 (10) | 0.89 (12) | 0.44 (11) | 6.2 |
| RKI () | 14.17 (5) | 9.64 (5) | 9.86 (3) | 5.24 (3) | 2.91 (6) | 3.19 (10) | 2.12 (7) | 1.23 (4) | 0.69 (2) | 0.40 (5) | 5.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kulessa, M.; Mencía, E.L.; Fürnkranz, J. A Unifying Framework and Comparative Evaluation of Statistical and Machine Learning Approaches to Non-Specific Syndromic Surveillance. Computers 2021, 10, 32. https://doi.org/10.3390/computers10030032
