Ensuring Reproducibility and Deploying Models with the Image2Radiomics Framework: An Evaluation of Image Processing on PanNET Model Performance

Simple Summary

Abstract

1. Introduction

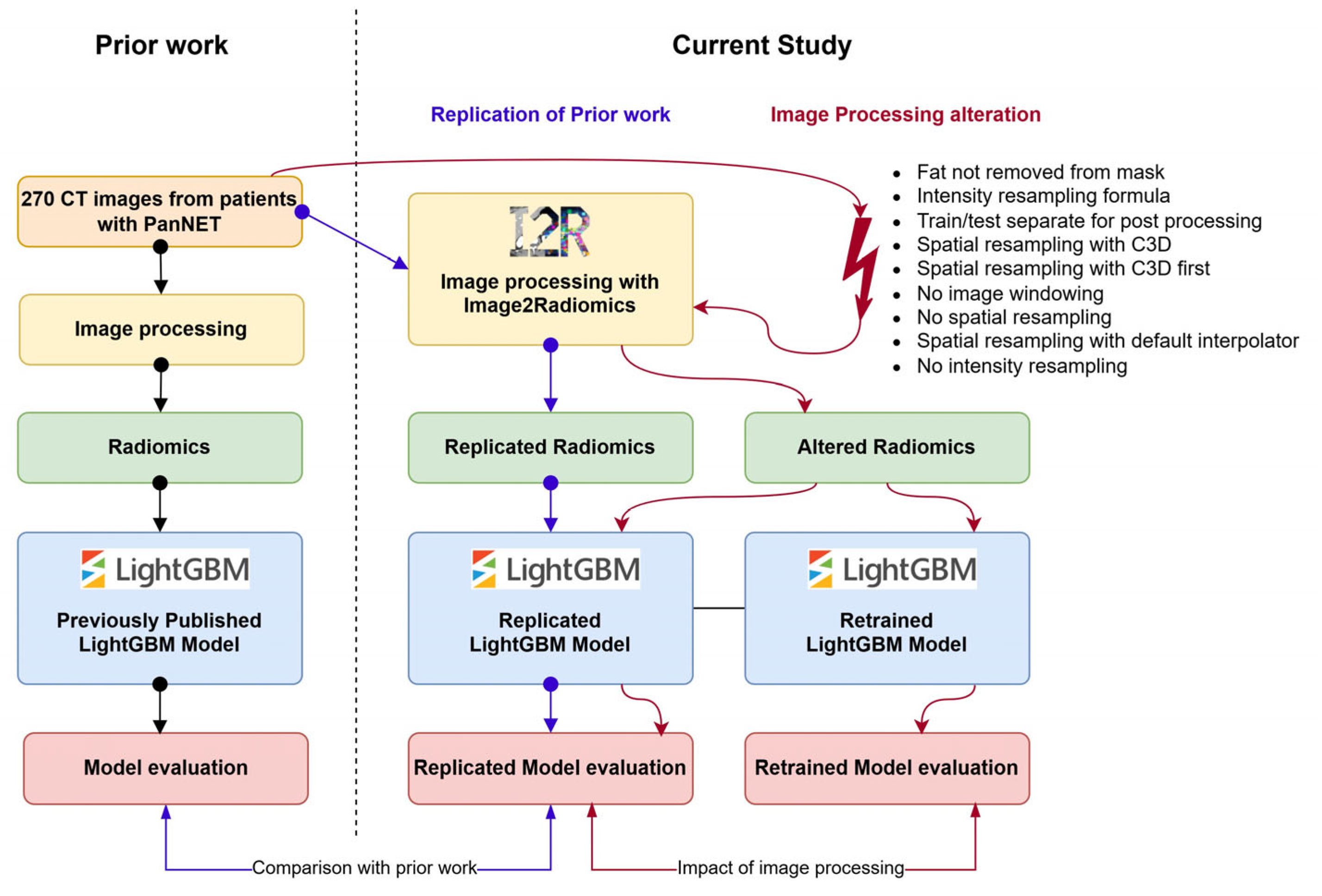

2. Materials and Methods

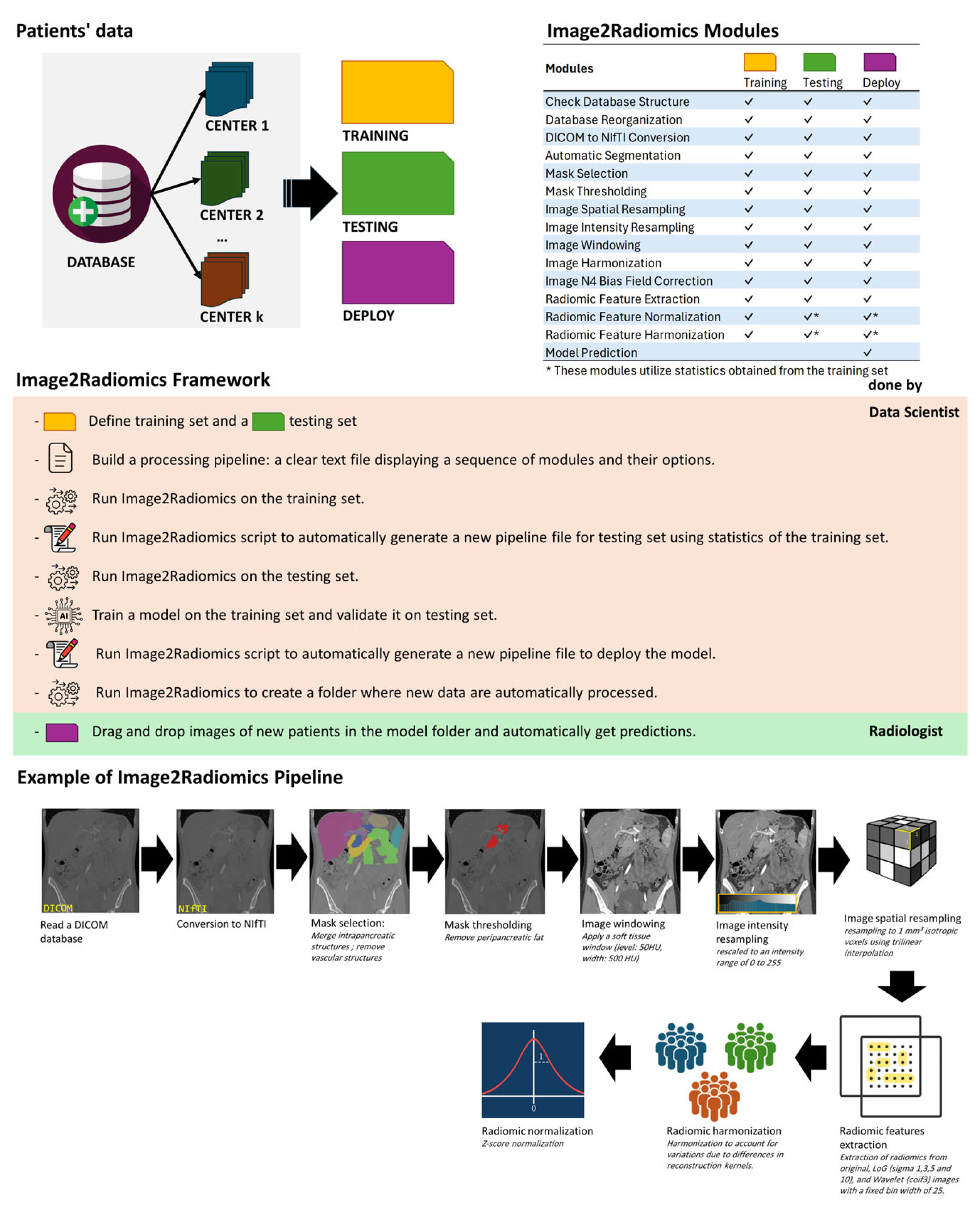

2.1. Image2Radiomics Framework

2.2. PanNETs Model

2.3. Image Processing Pipeline Alteration

2.4. Model Evaluation

3. Results

3.1. Replication of PanNET Study

3.2. Impact of the Altered Image Processing Pipelines on the Model Performance

3.3. Impact of the Altered Image Processing Pipelines on the Model Predictions

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AUC | Area Under the Curve |
| CT | Computed Tomography |
| HPC | High-Performance Computing |
| HU | Hounsfield Unit |
| IBSI | Image Biomarker Standardisation Initiative |
| LightGBM | Light Gradient Boosting Machine |
| MRI | Magnetic Resonance Imaging |
| PanNET | Pancreatic Neuroendocrine Tumor |
| PET | Positron Emission Tomography |
| SD | Standard Deviation |
| SLURM | Simple Linux Utility for Resource Management |
| SGE | Sun Grid Engine |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tixier, F.; Lopez-Ramirez, F.; Syailendra, E.A.; Blanco, A.; Javed, A.A.; Chu, L.C.; Kawamoto, S.; Fishman, E.K. Ensuring Reproducibility and Deploying Models with the Image2Radiomics Framework: An Evaluation of Image Processing on PanNET Model Performance. Cancers 2025, 17, 2552. https://doi.org/10.3390/cancers17152552
