Simple Summary

Early diagnosis of skin cancer is vital for providing effective treatment for patients. Dermoscopy is a non-invasive approach that utilizes specific equipment to examine the skin and is helpful in determining the specific patterns and features that might confirm the existence of skin cancer. Recently, Machine Learning (ML) algorithms have been developed to analyze dermoscopic images and classify such images as either benign or malignant. Convolutional Neural Networks (CNNs) and other ML techniques, such as the Support Vector Machine (SVM) and Random Forest classifiers, have been used in the extraction of the features from the dermoscopic images. The extracted features are then used to classify the dermoscopic images as either benign or malignant. Therefore, the current study develops a new Deep Learning-based skin cancer classification method for dermoscopic images, which has the potential to improve the accuracy and efficiency of the skin cancer diagnosis process and produce better outcomes for the patients.

Abstract

Artificial Intelligence (AI) techniques have changed the general perception of medical diagnostics, especially after the introduction and development of Convolutional Neural Networks (CNNs) and advanced Deep Learning (DL) and Machine Learning (ML) approaches. In general, dermatologists visually inspect the images and assess morphological variables such as borders, colors, and shapes to diagnose the disease. Against this background, AI techniques use algorithms and computer systems to mimic the cognitive functions of the human brain and assist clinicians and researchers. In recent years, AI has been applied extensively in dermatology, especially for the detection and classification of skin cancer and other general skin diseases. In this research article, the authors propose an Optimal Multi-Attention Fusion Convolutional Neural Network-based Skin Cancer Diagnosis (MAFCNN-SCD) technique for the detection of skin cancer in dermoscopic images. The primary aim of the proposed MAFCNN-SCD technique is to classify skin cancer on dermoscopic images. In the presented MAFCNN-SCD technique, data pre-processing is performed at the initial stage. Next, the MAFNet method is applied as a feature extractor, with the Henry Gas Solubility Optimization (HGSO) algorithm as a hyperparameter optimizer. Finally, the Deep Belief Network (DBN) method is used for the detection and classification of skin cancer. A series of simulations was conducted to assess the performance of the proposed MAFCNN-SCD approach. The comprehensive comparative analysis confirmed the superior performance of the proposed MAFCNN-SCD technique over other methodologies.

1. Introduction

Melanoma is the most severe type of skin cancer and can appear on any part of the skin or near a mole. This skin cancer is characterized by an uncontrollable growth of cells without apoptosis [1]. In this scenario, the affected cells become tumorous and start spreading to other parts of the body. Compared with other skin cancer types, such as basal cell carcinoma and squamous cell carcinoma, melanoma is less common. However, melanoma is far more dangerous than the other skin cancer types, as it spreads to other body parts if left undiagnosed or untreated in its early stages. Melanoma spreads rapidly across the body and affects almost all body parts [2]. Dermatologists use photographic or microscopic instruments to see additional details that are relevant to the lesions. When skin cancer is diagnosed, the clinician refers the individual to a tumor specialist who performs surgery on the lesions [3]. Dermoscopy is a microscopic technique that inspects the surface of the skin. This technique is used to distinguish benign lesions from malignant ones based on the captured images, without removing skin or performing other uncomfortable tests. This analytical procedure depends entirely on the oncologist’s expertise and experience [4]. This limitation motivated the authors of the current study to develop a computer-aided technique that takes dermoscopic images as input and presents its outcomes as a supporting tool for dermatologists. Various studies have been conducted so far to attain superior outcomes in the disease diagnosis process.

Numerous methods have been proposed for the automatic detection of melanoma-affected skin regions [5]. At first, methods based on handcrafted features were presented for diagnosing melanoma. However, such methods did not yield good outcomes due to variations in the color, shape, and size of melanoma moles [6]. Then, segmentation-based methods such as Iterative Selection Thresholding (ISO) and adaptive thresholding were proposed to enhance the detection accuracy of such automated mechanisms. These methods work on the segmented part of the melanoma, called the Region of Interest (RoI) [7]. DL-based techniques have gained popularity in medical imaging and diagnostics in recent years. In techniques such as the CNN, small portions of the images containing melanoma-affected regions are used to train the automatic identification mechanism [8]. There are numerous potential applications for deep unsupervised learning-based feature extraction from these images, such as object detection, image classification, image retrieval, anomaly detection, and generative modeling. The trained model then executes the segmentation process on the test images. DL-based techniques exhibit superior performance in detecting and segmenting melanoma images compared with methods based on handcrafted features [9]. Such procedures can automatically compute a complex and representative feature set. Furthermore, DL methods can also easily trace skin moles of different sizes in the presence of noise, blur, and variations in illumination, color, and intensity [10].

The current research article develops an Optimal Multi-Attention Fusion Convolutional Neural Network-based Skin Cancer Diagnosis (MAFCNN-SCD) technique for the diagnosis of melanoma from dermoscopic images. In the presented MAFCNN-SCD technique, data pre-processing is performed initially. Next, the MAFNet method is applied as a feature extractor, with the Henry Gas Solubility Optimization (HGSO) algorithm as a hyperparameter optimizer. Finally, the Deep Belief Network (DBN) method is used for the detection and classification of skin cancer. A series of experiments was conducted to validate the performance of the proposed MAFCNN-SCD approach. The key contributions of the current research work are listed below.

- An automated MAFCNN-SCD technique has been proposed in this study with pre-processing, MAFNet-based feature extraction, DBN classification, and HGSO-based hyperparameter tuning processes for skin cancer detection and classification. To the best of the authors’ knowledge, the proposed MAFCNN-SCD model is the first of its kind in this domain.

- The authors employed MAFNet as a feature extractor, with DBN as the classifier for skin cancer detection and classification.

- The hyperparameter optimization of the MAFNet model, using the HGSO algorithm with cross-validation, helped to boost the predictive outcomes of the proposed MAFCNN-SCD model for unseen data.

2. Literature Review

Shorfuzzaman [11] presented an interpretable CNN-based stacked ensemble structure for the detection of melanoma skin tumors at earlier stages. In this study, the Transfer Learning (TL) model was employed in the stacked ensemble framework, whereas a distinct number of CNN sub-models that apply similar classifier tasks were also gathered. A novel approach called meta-learner was employed in the prediction of every sub-model, and the last prediction outcomes were attained. Bhimavarapu and Battineni [12] intended to integrate the DL techniques for the automatic classification of melanoma in dermoscopic images. A Fuzzy-based GrabCut-stacked CNN (GC-SCNN) technique was validated using the trained images. The image extraction feature and the lesion classifier were leveraged, and the model’s efficacy was tested using distinct openly-accessible databases. The purpose of the study, conducted by Lafraxo et al. [13], was to automate the procedure of classifying the dermoscopic images comprising skin lesions as either benign or malignant. Thus, an enhanced DL-based solution with CNN was presented in this study. Data augmentation, regularization, and dropout were performed to avoid the over-fitting issue that is generally experienced in the CNN technique.

Banerjee et al. [14] examined a DL-based YOLO technique on the basis of the application of DCNNs. This technique was used to detect melanoma in digital and dermoscopic images. The authors suggested a faster and a precise outcome related to the typical CNNs. But, particular resourceful models were infused under two stages of segmentation. This segmentation is created by combining a graph model using a minimal spanning tree model and an L-type fuzzy number. The latter is related to approximation and mathematical extraction of the actual affected lesion regions in the feature extraction method. Daghrir et al. [15] established a hybrid system for melanoma skin tumor classification, and the method was employed for examining a few suspicious lesions. The presented method was dependent on the prediction of three distinct approaches, such as CNN and two typical ML techniques. These techniques were trained with a group of characteristics that explain the texture, border, and color of the skin tumor.

Tan et al. [16] presented an intelligent Decision Support System (DSS) for skin tumor classification. Specifically, the authors integrated the medically-essential features such as asymmetry, color, border irregularity, and other such dermoscopic structural features with the texture extraction features using the Histogram of Oriented Gradients (HOG), Grey Level Run Length Matrix and LBPs functions for tumor representations. Afterward, the authors presented two improved PSO techniques for the optimization of the features. In literature [17], a Hybrid DL (HDL) system was proposed fusing the sub-band of 3D wavelets. It was a non-invasive and objective system that was used for the inspection of skin images. During the primary phase of the HDL system, an easy Median Filter (MF) was utilized to remove unwanted data like noise and hair. During the secondary phase, the sub-band fusion method was used, and the 3D wavelet transform was executed to obtain the textural data in the dermoscopic images. In the last phase, the HDL system carried out a multiclass classification with the help of the fused sub-band.

Although several models have been proposed in the literature, the existing models do not focus on the hyperparameter selection process. This is a crucial process, as it strongly influences the classification performance. The selection of hyperparameters such as the epoch count, batch size, and learning rate is essential to attain effective outcomes. Since trial-and-error hyperparameter tuning is a tedious and error-prone process, metaheuristic algorithms are applied instead. Therefore, in this research work, the HGSO algorithm is employed to select the parameters for the MAFNet model.

3. The Proposed Model

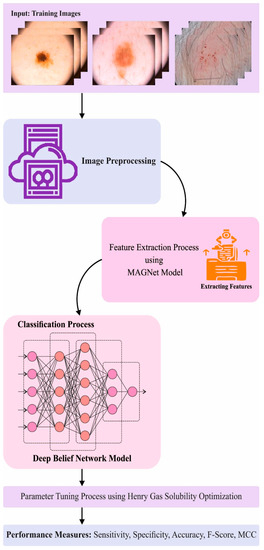

In this study, a new MAFCNN-SCD method has been proposed for the detection and classification of skin cancer from dermoscopic images. The major aim of the proposed MAFCNN-SCD technique is to diagnose and classify the type of skin cancers from dermoscopic images. It encompasses various stages such as image pre-processing, MAFNet feature extraction, HGSO hyperparameter tuning, and DBN classification. Figure 1 defines the overall procedure of the proposed MAFCNN-SCD system.

Figure 1.

The overall process of the proposed MAFCNN-SCD system.

3.1. Image Pre-Processing

In the presented MAFCNN-SCD approach, the data is initially pre-processed using the Wiener filter technique. The corrupted image is denoted by $I(x,y)$, the local mean over a pixel window by $\mu_L$, the noise variance across the entire image by $\sigma_y^2$, and the local variance in the window by $\hat{\sigma}_y^2$. Then, the denoised image $\hat{I}(x,y)$ is estimated as shown below [18]:

$$\hat{I}(x,y) = \mu_L + \frac{\hat{\sigma}_y^2 - \sigma_y^2}{\hat{\sigma}_y^2}\,\bigl(I(x,y) - \mu_L\bigr) \qquad (1)$$

Here, when the noise variance across the image is equal to 0, then $\hat{I}(x,y) = I(x,y)$. If the global noise variance is small and the local variance is much larger than the global variance, the ratio in Equation (1) becomes almost equal to 1.

If $\hat{\sigma}_y^2 \gg \sigma_y^2$, then $\hat{I}(x,y) \approx I(x,y)$. A high local variance indicates the occurrence of edges in the image window [19]. When the local and global variances are equal, the formulation reduces to $\hat{I}(x,y) = \mu_L$.
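The behaviour described above can be illustrated with a minimal pure-Python sketch of a pixel-wise adaptive Wiener filter. It is not the authors' implementation: the function name `wiener_denoise`, the 3 × 3 default window, the border handling (the window is clipped at the image edge), and the fallback estimate of the noise variance (the mean of all local variances) are assumptions made for illustration.

```python
from statistics import mean


def wiener_denoise(img, window=3, noise_var=None):
    """Adaptive Wiener filter on a 2-D list of numbers (illustrative sketch)."""
    h, w = len(img), len(img[0])
    half = window // 2
    local_mean = [[0.0] * w for _ in range(h)]
    local_var = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the pixel window, clipped at the image border.
            vals = [img[j][i]
                    for j in range(max(0, y - half), min(h, y + half + 1))
                    for i in range(max(0, x - half), min(w, x + half + 1))]
            m = mean(vals)
            local_mean[y][x] = m
            local_var[y][x] = mean((v - m) ** 2 for v in vals)
    if noise_var is None:
        # Assumption: estimate the global noise variance as the mean local variance.
        noise_var = mean(v for row in local_var for v in row)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            lv = local_var[y][x]
            # The gain is 0 where the local variance does not exceed the noise
            # variance (output = local mean) and approaches 1 near edges.
            gain = max(lv - noise_var, 0.0) / lv if lv > 0 else 0.0
            out[y][x] = local_mean[y][x] + gain * (img[y][x] - local_mean[y][x])
    return out
```

With `noise_var=0.0` the function returns the input unchanged, and with a very large `noise_var` every pixel collapses to its local mean, which mirrors the two limiting cases discussed in the text.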

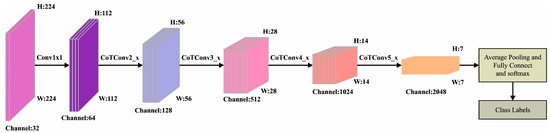

3.2. Feature Extraction Model

In this study, the MAFNet method is applied as a feature extractor. MAFNet is built from convolution layers [20]; four convolution modules exist in the middle of the network, and three convolution structures exist in each of the modules. The convolution modules are arranged in a symmetrical structure of [2,2,2,2]. Finally, the FC layer is present, with a total of 26 convolution modules [21,22]. The images are fed as input, and an initial convolutional operation is performed. Next, the features are passed through the four convolution modules. In these modules, the convolutional operation of the original ResNet convolution blocks is replaced by Contextual Transformer (CoT) blocks. After the preceding convolution, pooling, and excitation operations, the extracted features are fed as input to the FC layers, which perform the role of “classification” in the CNN technique [23]: they integrate the preceding, highly abstracted features and map the learned features to the sample space. The architecture of the MAFNet model is shown in Figure 2. The Softmax function is then employed to evaluate the classification probability. The output of the classifier is given as follows.

Figure 2.

Architecture of MAFNet.

$$\mathrm{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{c=1}^{C} e^{z_c}} \qquad (2)$$

In Equation (2), $C$ denotes the number of classes, $z_i$ represents the output value of the $i$-th node, $z_c$ indicates the output value of the $c$-th node, and $e$ is the natural constant. The cross-entropy loss is then determined as follows.

$$Loss = -\sum_{i=1}^{T} y_i \log(p_i) \qquad (3)$$

In Equation (3), $y$ refers to the one-hot encoding of the tag and $T$ indicates the number of tags; when the target label is $i$, then $y_i = 1$, whereas the entries of the rest of the labels are equal to $0$, and $p_i$ denotes the predictive probability of the $i$-th label, i.e., the Softmax value.
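As a small worked illustration of the Softmax output and the loss computed from a one-hot target, the following Python sketch evaluates both for a list of raw class scores. The function names `softmax` and `cross_entropy` are illustrative, and the subtraction of the maximum score is a standard numerical-stability step rather than something stated in the text.

```python
import math


def softmax(logits):
    """Map raw class scores to probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    """Loss for a one-hot target: only the target class term is non-zero."""
    return -math.log(probs[target_index])
```

For two equal scores, `softmax([0.0, 0.0])` gives `[0.5, 0.5]`, and the loss for either target label is then `log 2`, about 0.693.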

It must be noted that a learning rate that is too low might result in slow convergence, whereas one that is too high might cause the loss function to oscillate constantly. In order to train an accurate model with optimum parameters quickly, a dynamic-learning-rate approach is utilized. It adjusts the learning rate every 30 epochs, for which the formula is given below [24].

In Equation (4), denotes the present learning rate, indicates the primary learning rate, and represents the overall number of training rounds.
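As an illustration of a learning rate that is adjusted every 30 epochs, the following minimal Python sketch applies a step-decay schedule. Only the 30-epoch interval comes from the text; the decay factor `gamma = 0.1` and the function name `step_decay_lr` are assumptions made for illustration, and the schedule may differ from the exact form of Equation (4).

```python
def step_decay_lr(initial_lr, epoch, step_size=30, gamma=0.1):
    """Return the learning rate for a given epoch under step decay.

    The rate is multiplied by `gamma` (assumed value) once every
    `step_size` epochs; `epoch` counts from 0.
    """
    return initial_lr * gamma ** (epoch // step_size)
```

With an initial rate of 0.01, epochs 0 to 29 would use 0.01 and epochs 30 to 59 would use roughly 0.001 under these assumed settings.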

In this study, the HGSO algorithm is applied as a hyperparameter optimizer. Hashim et al. [25] presented the HGSO technique, a recent physics-inspired optimization algorithm. The technique is based on Henry’s gas law, which describes the solubility of a gas in a liquid. In general, temperature and pressure are the two major factors that affect solubility. With regard to pressure, the solubility of a gas in a liquid increases as the pressure increases. With regard to temperature, the solubility of solids increases at high temperatures, whereas gases become less soluble. Building on these two characteristics, the HGSO approach comprises eight steps, as described below. Initially, the number of gases (population size), their positions, Henry’s constant for each group $j$, and the partial pressure of each gas in every group are generated as follows.

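$$X_i(t+1) = X_{\min} + r \times (X_{\max} - X_{\min}) \qquad (5)$$

In Equation (5), $X_i$ signifies the location of the $i$-th gas in a population of size $N$, $r$ is a chaotic number between 0 and 1, $X_{\min}$ and $X_{\max}$ denote the bounds of the search space, and $t$ denotes the iteration.

$$H_j(t) = l_1 \times rand(0,1), \quad P_{i,j} = l_2 \times rand(0,1), \quad C_j = l_3 \times rand(0,1) \qquad (6)$$

In Equation (6), $H_j$ is Henry’s constant of group $j$, $P_{i,j}$ is the partial pressure of gas $i$ in group $j$, $C_j$ is a constant of group $j$, and $l_1$ to $l_3$ are constants; in the original HGSO formulation [25], they take the values $5 \times 10^{-2}$, $100$, and $1 \times 10^{-2}$, respectively.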

Next, the clustering process is executed, in which the population of gases is divided into groups according to the type of gas. Within a group, all gases have the same Henry’s constant value.

Then, each cluster is evaluated to identify its most suitable gas, i.e., the one that attains the highest equilibrium state among the gases of its type. The gases are then ranked to find the most suitable gas in the entire swarm [26].

Henry’s coefficient is updated according to the following equation.

$$H_j(t+1) = H_j(t) \times \exp\left(-C_j \times \left(\frac{1}{T(t)} - \frac{1}{T^{\theta}}\right)\right), \quad T(t) = \exp\left(-\frac{t}{iter}\right) \qquad (7)$$

In Equation (7), $H_j$ denotes Henry’s coefficient of group $j$, $T$ is the temperature, and $T^{\theta}$ is a fixed quantity with a value of 298. Furthermore, $iter$ signifies the overall iteration count. The solubility updating formula is given below, in which $S_{i,j}$ denotes the solubility of gas $i$ in group $j$, $P_{i,j}$ is the partial pressure on gas $i$ in group $j$, and $K$ denotes a fixed value.

$$S_{i,j}(t) = K \times H_j(t+1) \times P_{i,j}(t) \qquad (8)$$

In Step 6, the location of each gas is updated using Equations (9) and (10):

$$X_{i,j}(t+1) = X_{i,j}(t) + F \times r \times \gamma \times \left(X_{i,best}(t) - X_{i,j}(t)\right) + F \times r \times \alpha \times \left(S_{i,j}(t) \times X_{best}(t) - X_{i,j}(t)\right) \qquad (9)$$

$$\gamma = \beta \times \exp\left(-\frac{F_{best}(t) + \varepsilon}{F_{i,j}(t) + \varepsilon}\right) \qquad (10)$$

In Equations (9) and (10), $X_{i,j}$ denotes the position of gas $i$ in group $j$, $r$ denotes a random number between 0 and 1, $t$ denotes the iteration time, and $X_{i,best}$ is the most suitable gas in group $j$. Further, $X_{best}$ is the most suitable gas in the entire population [27]. In addition, $\gamma$ signifies the ability of gas $i$ in group $j$ to interact with the gases in its group, and $\alpha$ is the influence of the remaining gases on gas $i$ in group $j$ and takes the value of 1. Additionally, $\beta$ is a constant, $F_{i,j}$ is the fitness of gas $i$ in group $j$, and $F_{best}$ is the fitness of the most suitable gas in the entire population. Following the original HGSO formulation [25], $F$ is a flag that changes the search direction and $\varepsilon$ is a small constant. The formula to prevent the local optima situation is given below.

$$N_w = N \times \left(rand \times (c_2 - c_1) + c_1\right) \qquad (11)$$

In Equation (11), $N_w$ and $N$ indicate the number of worst agents and the number of search agents, respectively, and $c_1$ and $c_2$ are constants.

Lastly, the formula for updating the location of the worst agents is given below.

$$G_{i,j} = G_{\min(i,j)} + r \times \left(G_{\max(i,j)} - G_{\min(i,j)}\right) \qquad (12)$$

In Equation (12), $G_{i,j}$ denotes the position of gas $i$ in group $j$, $r$ denotes a random number between 0 and 1, and $G_{\min}$ and $G_{\max}$ indicate the bounds of the problem. Algorithm 1 illustrates the steps followed in the HGSO approach. The HGSO method derives a Fitness Function (FF) to enhance the classification outcome. It defines a positive value to represent the quality of the candidate solutions.

Algorithm 1: Pseudocode of HGSO Algorithm.

- Initialization: $X_i$ ($i = 1, 2, \ldots, N$), number of gas kinds $i$, $H_j$, $P_{i,j}$, $C_j$, $l_1$, $l_2$, and $l_3$.
- Split the population agents into a number of gas kinds (clusters) with the same Henry’s constant value ($H_j$).
- Evaluate every cluster $j$.
- Obtain the most suitable gas $X_{i,best}$ in each cluster and the best search agent $X_{best}$.
- While $t$ is below the maximal iteration count, do
  - For every search agent, do
    - Update the position of each search agent through Equations (9) and (10).
  - End for
  - Update Henry’s coefficient of each gas kind based on Equation (7).
  - Update the solubility of each gas based on Equation (8).
  - Rank and select the number of worst agents based on Equation (11).
  - Update the position of the worst agents based on Equation (12).
  - Update the most suitable gas $X_{i,best}$ and the best search agent $X_{best}$.
- End while
- Return $X_{best}$
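The steps of Algorithm 1 can be sketched in a few dozen lines of Python. This is a simplified illustration rather than the authors' code: the function name `hgso`, the default population settings, and the constants (`l1`, `l2`, `l3`, `alpha`, `beta`, `k_const`, `eps`, `t_theta`, `c1`, `c2`) are assumptions taken from commonly used HGSO defaults, the fitness is assumed to be non-negative and minimised, and Henry's coefficient and the solubility are refreshed just before each position update so that the solubility term is always available.

```python
import math
import random


def hgso(fitness, dim, bounds, n_gases=20, n_groups=4, max_iter=100, seed=0):
    """Minimise a non-negative `fitness` with a simplified HGSO loop."""
    rng = random.Random(seed)
    lo, hi = bounds
    l1, l2, l3 = 5e-2, 100.0, 1e-2        # initialisation constants, Eq. (6)
    alpha, beta, k_const, eps = 1.0, 1.0, 1.0, 0.05
    t_theta, c1, c2 = 298.15, 0.1, 0.2
    size = n_gases // n_groups            # gases per group (cluster)

    def random_position():                # Eq. (5) and Eq. (12)
        return [lo + rng.random() * (hi - lo) for _ in range(dim)]

    pos = [[random_position() for _ in range(size)] for _ in range(n_groups)]
    fit = [[fitness(x) for x in group] for group in pos]
    henry = [l1 * rng.random() for _ in range(n_groups)]
    c_j = [l3 * rng.random() for _ in range(n_groups)]
    press = [[l2 * rng.random() for _ in range(size)] for _ in range(n_groups)]

    best_f, best_x = min(
        (fit[j][i], pos[j][i]) for j in range(n_groups) for i in range(size)
    )
    best_x = list(best_x)

    for t in range(1, max_iter + 1):
        temp = math.exp(-t / max_iter)
        for j in range(n_groups):
            # Eq. (7): update Henry's coefficient of group j.
            henry[j] *= math.exp(-c_j[j] * (1.0 / temp - 1.0 / t_theta))
            i_best = min(range(size), key=lambda i: fit[j][i])
            group_best = list(pos[j][i_best])
            for i in range(size):
                solubility = k_const * henry[j] * press[j][i]          # Eq. (8)
                gamma = beta * math.exp(-(best_f + eps) / (fit[j][i] + eps))
                flag = rng.choice((-1.0, 1.0))  # search-direction flag F
                new = []
                for d in range(dim):                                   # Eq. (9)
                    r = rng.random()
                    x_d = pos[j][i][d]
                    step = flag * r * gamma * (group_best[d] - x_d)
                    step += flag * r * alpha * (solubility * best_x[d] - x_d)
                    new.append(min(max(x_d + step, lo), hi))  # clip to bounds
                pos[j][i], fit[j][i] = new, fitness(new)
                if fit[j][i] < best_f:
                    best_f, best_x = fit[j][i], list(new)
        # Eq. (11): number of worst agents; Eq. (12): re-initialise them.
        n_worst = int(size * n_groups * (rng.random() * (c2 - c1) + c1))
        ranked = sorted(
            ((fit[j][i], j, i) for j in range(n_groups) for i in range(size)),
            reverse=True,
        )
        for _, j, i in ranked[:n_worst]:
            pos[j][i] = random_position()
            fit[j][i] = fitness(pos[j][i])
            if fit[j][i] < best_f:
                best_f, best_x = fit[j][i], list(pos[j][i])
    return best_x, best_f
```

For hyperparameter tuning, each position vector would encode candidate settings (for example, a learning rate and a batch size mapped into the search bounds), and `fitness` would train and validate the model and return its error rate. A quick sanity check on a toy objective is `hgso(lambda v: sum(c * c for c in v), dim=2, bounds=(-5.0, 5.0))`.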

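In this work, the classifier error rate, which is to be minimized, is used as the FF, as given in Equation (13).

$$fitness(x_i) = ClassifierErrorRate(x_i) = \frac{\text{number of misclassified samples}}{\text{total number of samples}} \times 100 \qquad (13)$$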
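A fitness function of this kind can be sketched as a percentage error rate over a validation set. The function name `error_rate_fitness` and the use of plain label lists are assumptions for illustration; in practice the predictions would come from the classifier trained with the candidate hyperparameters.

```python
def error_rate_fitness(y_true, y_pred):
    """Percentage of misclassified samples; lower values are better."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return 100.0 * wrong / len(y_true)
```

For example, one wrong prediction out of four samples yields a fitness of 25.0, and a perfect classifier yields 0.0.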

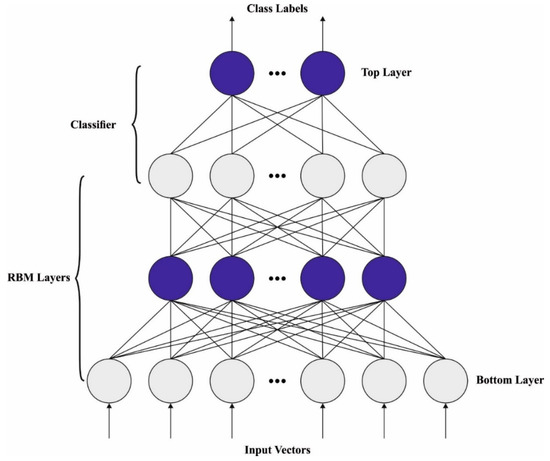

3.3. Skin Cancer Detection Model

In this last stage, the DBN model is used for the detection and classification of skin cancer. A DBN is a neural network (NN) that comprises numerous Restricted Boltzmann Machines (RBMs) [28]. The input units represent the features of the original dataset, whereas the output units represent the labels of this dataset. From the input to the output layers, the key features of the data are extracted by the deep architecture via layer-by-layer abstraction. The DBN is built by stacking numerous RBMs. The first-layer RBM receives the input of the DBN, whereas the output is given by the Hidden Layer (HL) of the last RBM. The DBN is treated as an MLP and applied to the classification task, after which the LR is added to the output. Every RBM has a visible layer (VL) and an HL. Consider $v$ and $h$ as the states of the VL and HL, respectively. The energy of the RBM joint configuration is determined by the biases and weights.

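$$E(v,h;\theta) = -\sum_{i=1}^{n}\sum_{j=1}^{m} w_{ij} v_i h_j - \sum_{i=1}^{n} b_i v_i - \sum_{j=1}^{m} a_j h_j \qquad (14)$$

Here, $\theta = \{w_{ij}, b_i, a_j\}$ represents the model parameters, $w_{ij}$ indicates the weight between visible unit $i$ and hidden unit $j$, $b_i$ and $a_j$ are the biases of the VL and HL, respectively; and $n$ and $m$ denote the counts of visible and hidden units, respectively. The joint likelihood of the VL and HL is given below.

$$P(v,h;\theta) = \frac{1}{Z(\theta)} \exp\bigl(-E(v,h;\theta)\bigr) \qquad (15)$$

In Equation (15), $Z(\theta)$ refers to the normalizing factor, which is formulated as follows.

$$Z(\theta) = \sum_{v}\sum_{h} \exp\bigl(-E(v,h;\theta)\bigr) \qquad (16)$$

Since there are no visible–visible or hidden–hidden connections, the units within a layer are conditionally independent of each other, and the conditional probabilities of the units can be formulated using the following equation [29]:

$$P(h_j = 1 \mid v;\theta) = \sigma\Bigl(a_j + \sum_{i=1}^{n} w_{ij} v_i\Bigr), \qquad P(v_i = 1 \mid h;\theta) = \sigma\Bigl(b_i + \sum_{j=1}^{m} w_{ij} h_j\Bigr) \qquad (17)$$

where $\sigma(x) = 1/(1 + e^{-x})$ is the logistic sigmoid function.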
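To make the RBM quantities concrete, the following Python sketch evaluates the joint-configuration energy and the two conditional activation probabilities for a small binary RBM. The function names, the argument names `b_vis` and `a_hid` for the visible and hidden biases, and the restriction to binary units are assumptions made for illustration.

```python
import math


def sigmoid(x):
    """Logistic function used for the unit activation probabilities."""
    return 1.0 / (1.0 + math.exp(-x))


def rbm_energy(v, h, w, b_vis, a_hid):
    """Energy of a joint configuration (v, h); w[i][j] links v[i] and h[j]."""
    interaction = sum(w[i][j] * v[i] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    visible_term = sum(b * vi for b, vi in zip(b_vis, v))
    hidden_term = sum(a * hj for a, hj in zip(a_hid, h))
    return -interaction - visible_term - hidden_term


def p_hidden_given_visible(v, w, a_hid):
    """P(h_j = 1 | v) for every hidden unit j."""
    return [sigmoid(a_hid[j] + sum(w[i][j] * v[i] for i in range(len(v))))
            for j in range(len(a_hid))]


def p_visible_given_hidden(h, w, b_vis):
    """P(v_i = 1 | h) for every visible unit i."""
    return [sigmoid(b_vis[i] + sum(w[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b_vis))]
```

Because each hidden unit depends only on the visible layer (and vice versa), both conditionals factorise over units, which is what makes layer-wise sampling and training of stacked RBMs tractable.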

The layer-wise learning mechanism of the DBN comprises three HLs. The training dataset originates from a similar pipeline with similar experimental conditions and leakage sizes. Firstly, the training dataset is transferred to the VLs on the initial RBM unit. Then, the hidden unit feeds the input dataset in the VL. At last, the VLs of the 2nd RBM unit obtain a hidden unit in the RBM [30]. The subsequent individual RBM units accomplish the exercises of the DBN structure. Figure 3 demonstrates the infrastructure of the DBN.

Figure 3.

DBN structure.

4. Performance Evaluation

The proposed model was simulated using Python 3.6.5 on a PC with an i5-8600K CPU, a GeForce 1050 Ti 4 GB GPU, 16 GB RAM, a 250 GB SSD, and a 1 TB HDD. The parameter settings are as follows: learning rate: 0.01, dropout: 0.5, batch size: 5, epoch count: 50, and activation: ReLU.

4.1. Dataset Used

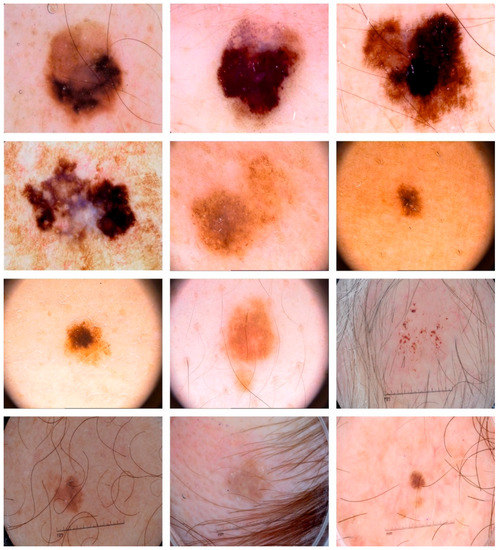

The presented MAFCNN-SCD model for the skin cancer classification process was validated using two benchmark databases, namely the ISIC 2017 database [31] and the HAM10000 database [32]. The ISIC 2017 dataset comprises 2000 images under three classes, whereas the HAM10000 dataset holds a total of 10,082 samples under seven classes. Table 1 and Table 2 show a detailed description of the datasets under study. Figure 4 shows some sample images from the datasets.

Table 1.

Details of the ISIC 2017 dataset [31].

Table 2.

Details on HAM10000 Dataset [32].

Figure 4.

Sample images.

4.2. Results Analysis

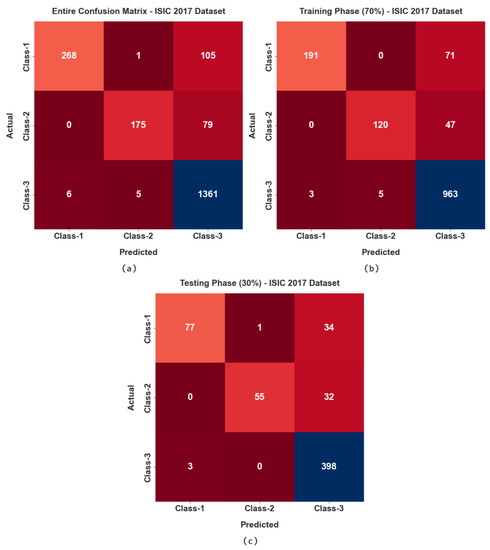

The confusion matrices generated by the proposed MAFCNN-SCD approach on ISIC 2017 dataset are demonstrated in Figure 5. The figure states that the proposed MAFCNN-SCD system proficiently recognized all three types of skin cancers from the applied dermoscopic images.

Figure 5.

Confusion matrices of the proposed MAFCNN-SCD system under ISIC 2017 dataset. (a) Entire database, (b) 70% of TR database, and (c) 30% of TS database.

Table 3 portrays the overall skin cancer classification outcomes achieved by the proposed MAFCNN-SCD method on ISIC 2017 dataset. On the entire dataset, the MAFCNN-SCD method attained the average , , , , and Mathew Correlation Coefficient (MCC) values, such as 93.47%, 79.92%, 90%, 85.50%, and 79.26%, respectively. Concurrently, on 70% TR database, the proposed MAFCNN-SCD approach achieved the average , , , , and MCC values, such as 94.00%, 81.31%, 90.61%, 86.61%, and 80.66%, correspondingly. In parallel, on 30% of the TS database, the presented MAFCNN-SCD method achieved the average , , , , and MCC values, such as 92.22%, 77.07%, 88.67%, 83.05%, and 76.23%, correspondingly.

Table 3.

Skin cancer classification outcomes of the proposed MAFCNN-SCD system upon the ISIC 2017 dataset.
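Averaged per-class measures such as accuracy and MCC can be derived from a multi-class confusion matrix by treating each class in a one-vs-rest fashion. The sketch below assumes that rows hold the true classes and columns the predicted classes, and that the reported averages are unweighted (macro) means over classes; both points, as well as the function names, are assumptions rather than details confirmed by the text.

```python
import math


def one_vs_rest_counts(cm, k):
    """Return (tp, fp, fn, tn) for class k of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                  # true class k, predicted otherwise
    fp = sum(row[k] for row in cm) - tp   # predicted k, true class differs
    tn = total - tp - fn - fp
    return tp, fp, fn, tn


def class_metrics(cm, k):
    """Accuracy and Matthews correlation coefficient for class k."""
    tp, fp, fn, tn = one_vs_rest_counts(cm, k)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return accuracy, mcc


def macro_average(cm):
    """Unweighted mean of (accuracy, MCC) over all classes."""
    per_class = [class_metrics(cm, k) for k in range(len(cm))]
    n = len(per_class)
    return tuple(sum(m[i] for m in per_class) / n for i in range(2))
```

For a hypothetical two-class matrix `[[8, 2], [1, 9]]`, both classes have a one-vs-rest accuracy of 0.85, so the macro-averaged accuracy is also 0.85.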

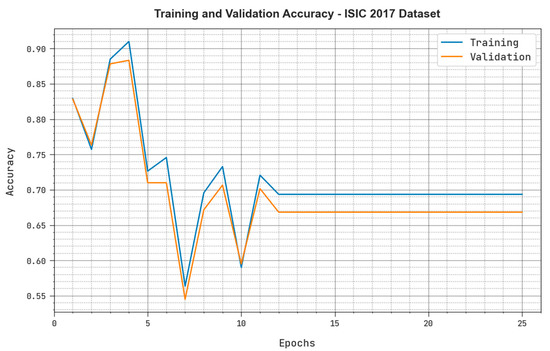

Both the Training Accuracy () and the Validation Accuracy () values acquired by the MAFCNN-SCD approach under ISIC 2017 dataset are presented in Figure 6. The simulation results emphasize that the proposed MAFCNN-SCD algorithm gained increased and values, while the values were better than the values.

Figure 6.

and results of the proposed MAFCNN-SCD system upon the ISIC 2017 dataset.

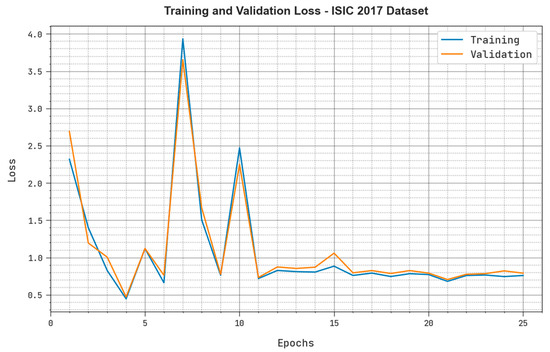

Both Training Loss () and the Validation Loss () values realized by the proposed MAFCNN-SCD system under ISIC 2017 dataset are exhibited in Figure 7. The simulation results represent that the proposed MAFCNN-SCD approach obtained the least and values, while the values were lesser than the values.

Figure 7.

and analyses outcomes of the proposed MAFCNN-SCD system upon the ISIC 2017 dataset.

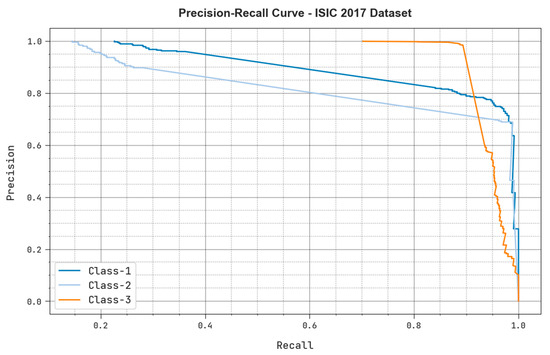

The precision-recall examination outcomes achieved by the proposed MAFCNN-SCD system under ISIC 2017 dataset are shown in Figure 8. The figure portrays that the proposed MAFCNN-SCD method produced high precision-recall values under each class label.

Figure 8.

Precision recall analysis outcomes of the proposed MAFCNN-SCD system upon the ISIC 2017 dataset.

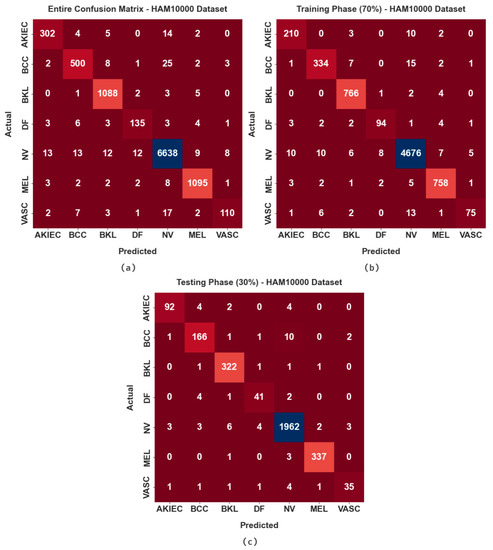

The confusion matrices generated by the proposed MAFCNN-SCD algorithm on the HAM10000 dataset are exhibited in Figure 9. The figure shows that the MAFCNN-SCD approach proficiently recognized all seven types of skin cancer on the applied dermoscopic images.

Figure 9.

Confusion matrices of the proposed MAFCNN-SCD method under HAM10000 dataset (a) Entire database, (b) 70% of TR database, and (c) 30% of TS database.

Table 4 depicts the overall skin cancer classification results attained by the proposed MAFCNN-SCD method on the HAM10000 dataset. On the entire dataset, the proposed MAFCNN-SCD system achieved the average , , , , and MCC values such as 99.39%, 92.25%, 99.49%, 93.08%, and 92.60%, correspondingly. At the same time, on 70% of the TR database, the MAFCNN-SCD algorithm attained the average , , , , and MCC outcomes such as 99.42%, 92.40%, 99.51%, 93.27%, and 92.82%, correspondingly. Concurrently, on 30% of the TS database, the proposed MAFCNN-SCD algorithm achieved the average , , , , and MCC values such as 99.34%, 91.92%, 99.44%, 92.67%, and 92.15%, correspondingly.

Table 4.

Skin cancer classification outcomes of the proposed MAFCNN-SCD system upon the HAM10000 dataset.

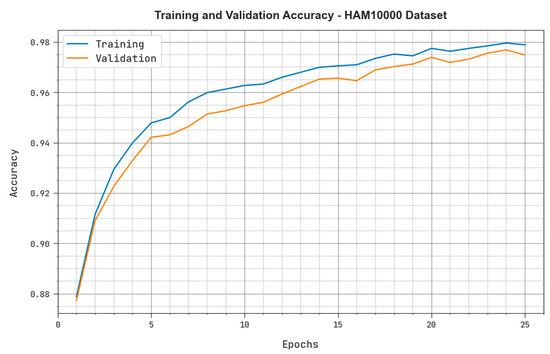

Both and values acquired by the MAFCNN-SCD methodology under the HAM10000 dataset are exhibited in Figure 10. The simulation results confirm that the proposed MAFCNN-SCD approach obtained the maximum and values, whereas the values were better than the values.

Figure 10.

and outcomes of the proposed MAFCNN-SCD system upon the HAM10000 dataset.

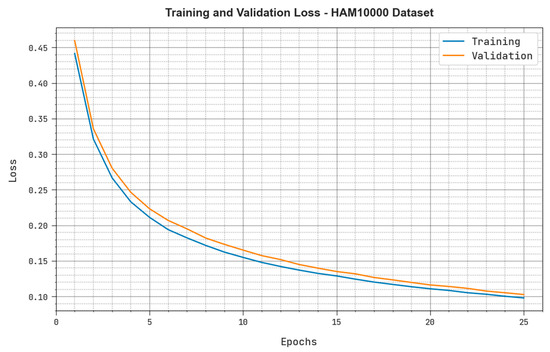

Both and values realized by the proposed MAFCNN-SCD system under the HAM10000 dataset are shown in Figure 11. The simulation results manifest that the presented MAFCNN-SCD approach obtained the least and values, while the values were lesser than the values.

Figure 11.

and results of the proposed MAFCNN-SCD system upon the HAM10000 dataset.

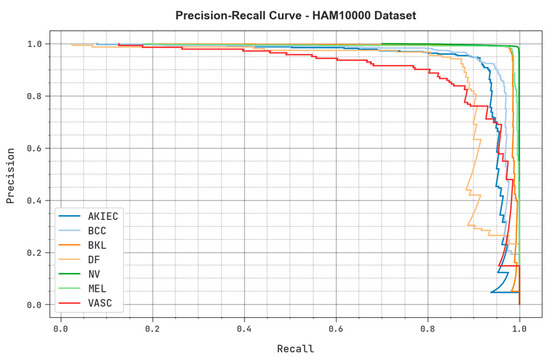

The precision-recall analysis results achieved by the MAFCNN-SCD system upon the HAM10000 dataset are exhibited in Figure 12. The figure demonstrates that the proposed MAFCNN-SCD method produced the maximum precision-recall values under each class label.

Figure 12.

Precision recall analysis outcomes of the proposed MAFCNN-SCD system upon the HAM10000 dataset.

4.3. Discussion

Table 5 and Figure 12 report the comparative study outcomes of the MAFCNN-SCD model and other recent models on the ISIC 2017 dataset. The simulation results indicate that the MobileNet method performed poorly, while the MSVM model achieved a slightly better outcome. Next, the NB, KELM, and DenseNet169 models produced closely comparable skin cancer classification performance. However, the proposed MAFCNN-SCD model accomplished the maximum performance, with an accuracy of 92.22%.

Table 5.

Comparative analysis outcomes of the proposed MAFCNN-SCD model and other recent methodologies upon the ISIC 2017 dataset [33,34].

Table 6 reports the comparative analysis outcomes achieved by the proposed MAFCNN-SCD method and other recent techniques on the HAM10000 dataset. The simulation outcomes imply that the NB methodology performed poorly, whereas the MobileNet approach acquired a slightly raised outcome.

Table 6.

Comparative analysis outcomes of the proposed MAFCNN-SCD system and other recent algorithms upon the HAM10000 Dataset [33,34].

Then, the KELM, MSVM, and DenseNet169 algorithms achieved closely comparable skin cancer classification performance. However, the proposed MAFCNN-SCD model achieved the maximum performance. The comprehensive comparative analysis established the enhanced performance of the MAFCNN-SCD technique over other methodologies, with maximum accuracy values of 92.22% and 99.34% on the ISIC 2017 and HAM10000 datasets, respectively. These results establish the effective skin cancer classification performance of the proposed MAFCNN-SCD method. The enhanced performance of the proposed model is attributed to the use of the HGSO algorithm in the hyperparameter tuning process.

5. Conclusions

In this study, a new MAFCNN-SCD approach has been developed for skin cancer detection and classification from dermoscopic images. The major aim of the proposed MAFCNN-SCD technique is to identify and classify skin cancer from dermoscopic images. In the presented MAFCNN-SCD technique, data pre-processing is performed initially. Next, the MAFNet methodology is applied as a feature extractor, with the HGSO algorithm as a hyperparameter optimizer. Finally, the DBN method is used for skin cancer detection and classification. A series of experiments was conducted to demonstrate the performance of the proposed MAFCNN-SCD approach. The comprehensive comparative analysis establishes the enhanced performance of the MAFCNN-SCD technique over other methodologies, with maximum accuracy values of 92.22% and 99.34% on the ISIC 2017 and HAM10000 datasets, respectively. Thus, the proposed model can be employed for the automated skin cancer classification process. In the future, a deep instance segmentation process can be incorporated to improve the detection rate of the MAFCNN-SCD technique and to achieve low error rates in quantifying the skin lesion’s structure, boundary, and scale. To further increase the system’s performance, large training datasets should be utilized to avoid under- and over-segmentation cases. In addition, the computational complexity of the proposed model should also be investigated in the future.

Author Contributions

Conceptualization, M.O. and M.M.; methodology, A.M.H.; software, A.S.S.; validation, A.A., M.M. and A.M.H.; formal analysis, A.A.; investigation, M.I.A.; resources, A.A.; data curation, A.S.S.; writing—original draft preparation, M.O., M.M., A.M.H., A.E.O. and A.A.A.; writing—review and editing, M.O., A.A.A., A.S.S. and A.E.O.; visualization, A.A.; supervision, M.O.; project administration, A.M.H.; funding acquisition, M.O., M.M. and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through small group Research Project under grant number (PGR.1/124/43). Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R203), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. Research Supporting Project number (RSPD2023R787), King Saud University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

This article does not contain any studies with human participants performed by any of the authors.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated during the current study.

Conflicts of Interest

The authors declare that they have no conflict of interest. The manuscript was written through the contributions of all authors. All authors have given approval for the final version of the manuscript.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).