Breast Cancer Risk Assessment Tools for Stratifying Women into Risk Groups: A Systematic Review

Abstract

Simple Summary

1. Introduction

2. Methods

2.1. Study Registration

2.2. Eligibility Criteria

2.3. Information Sources and Search Strategy

2.4. Selection Process

2.5. Data Collection

2.6. Metrics for Evaluating Risk Assessment Tools and Statistical Analysis

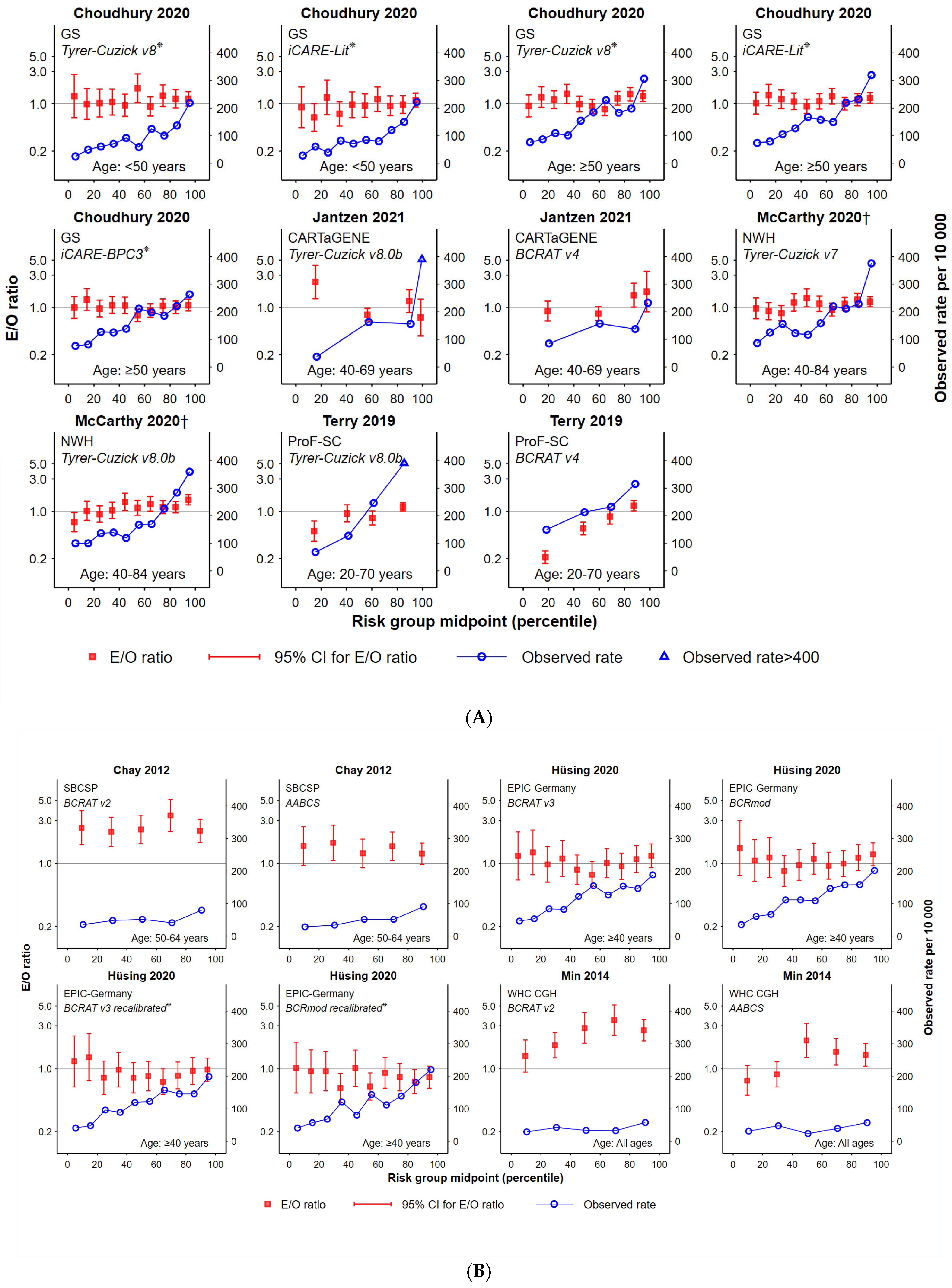

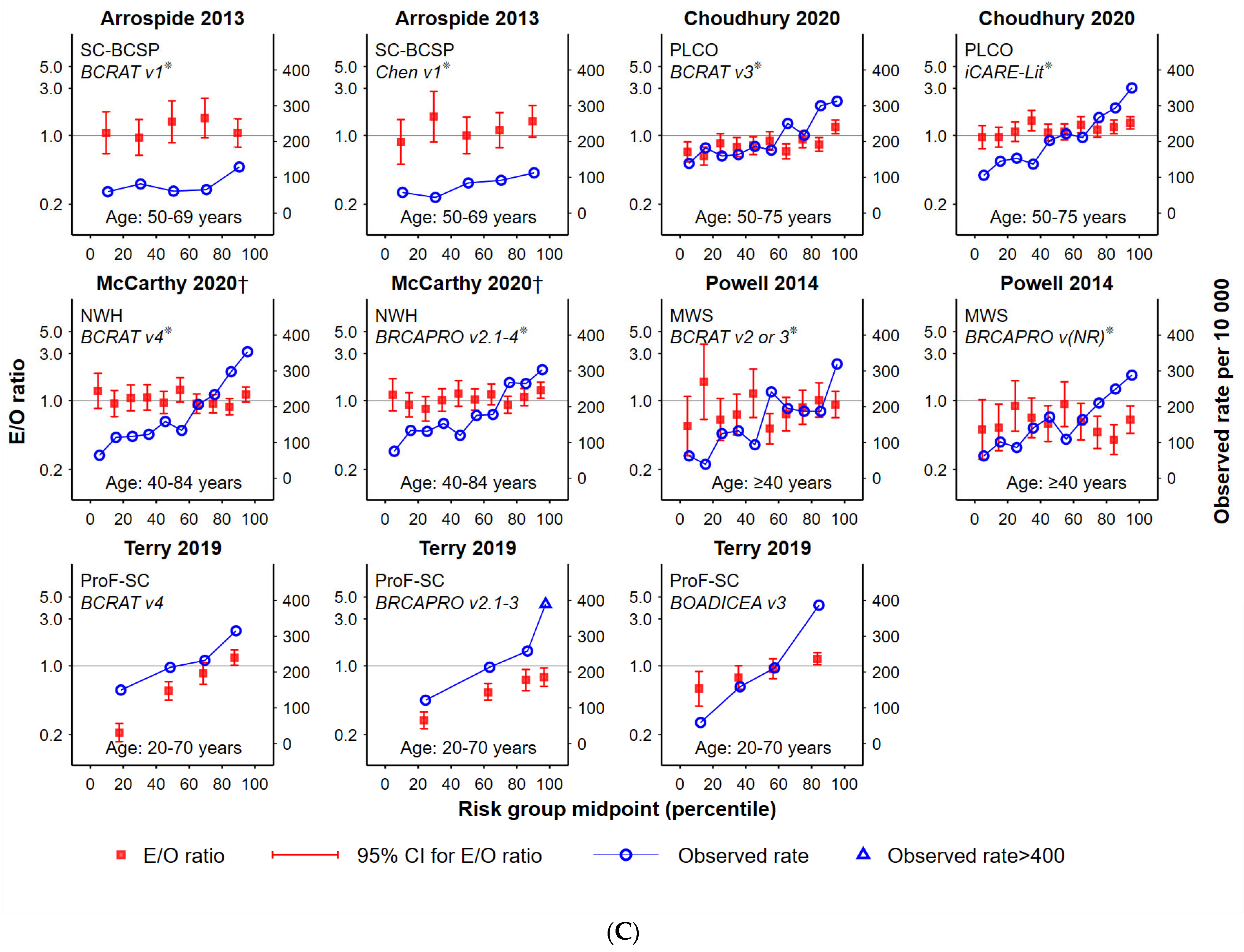

- A. Goodness of fit between expected (predicted) and observed outcomes:

- 1. Plotted ratios of expected versus observed cancers (E/O), by population percentile. E/O ratios (on a log10 scale) with 95% confidence intervals (CIs) were plotted by risk group assignment, using the mid-point percentile of each risk group in the study population. This standardised comparisons between tools that had different numbers of risk groups and/or assigned different proportions of women to each risk group.

- 2. The total number of women in each study cohort in risk groups for which the E/O 95% CI included unity. This indicated the proportion of each study cohort that was well-validated by the tool, noting that a CI including unity is more likely in smaller studies, which have wider CIs.

- 3.

- B. Analysis of observed outcomes by risk group classification:

- 1. Observed cancer rates (the number of breast cancers divided by the number of women in each risk category, expressed per 10,000 women), by mid-point percentile of each risk group in the study population. Using the mid-point percentile helped to standardise comparisons.

- 2. Characterisation of the functional form (curve) of observed cancer incidence rates across increasing risk groups, classified as one of: ‘increasing’ (observed rates consistently increasing across risk categories), ‘monotonic’ (observed rates increasing or remaining steady across groups) or ‘fluctuating’ (all other patterns).

- 3. Assessment of whether the highest-risk women could be distinguished from women at more moderate risk. We compared the observed breast cancer rate in the mid-range risk groups (usually quintiles 2–4 or deciles 3–8) with that in the highest risk group (quintile 5 or deciles 9–10). A p-value <0.05 indicated a statistically significant difference and was therefore taken to indicate good allocation of women to the highest risk group. To ensure comparability of findings, if >25% of the study cohort was allocated to the highest risk group, p-values were reported but not taken into consideration when drawing conclusions about a particular tool. Consequently, mid-range risk groups would be expected to include ≥50% of the study cohort.

- 4. Assessment of whether the lowest-risk women could be distinguished from women at more moderate risk. As for item 3 above, but comparing the lowest risk group (quintile 1 or deciles 1–2, or equivalent sub-groups representing ≤25% of the cohort) with the mid-range groups (quintiles 2–4 or deciles 3–8, or equivalent sub-groups representing ≥50% of the cohort). To ensure comparability of findings, if >25% of the study cohort was allocated to the lowest risk group, p-values were reported but not taken into consideration when drawing conclusions about a particular tool.
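
The goodness-of-fit metrics in part A can be illustrated with a minimal sketch. It is not the review's own code: the `RiskGroup` structure, the function names and the three-group cohort are hypothetical, and the confidence interval uses a common Poisson-based approximation because the text does not state which CI method was applied.

```python
import math
from dataclasses import dataclass


@dataclass
class RiskGroup:
    """One risk group, listed from lowest to highest predicted risk (hypothetical structure)."""
    n_women: int     # women assigned to this group
    expected: float  # cancers predicted by the tool (E)
    observed: int    # cancers observed during follow-up (O)


def eo_ratio_ci(expected, observed, z=1.96):
    """Return (E/O, lower, upper).

    Assumption: O is treated as Poisson, giving the approximation
    E/O * exp(-/+ z / sqrt(O)); the review does not state its CI method.
    """
    if observed <= 0:
        raise ValueError("E/O is undefined when no cancers were observed")
    ratio = expected / observed
    spread = math.exp(z / math.sqrt(observed))
    return ratio, ratio / spread, ratio * spread


def midpoint_percentiles(groups):
    """Mid-point percentile of each group within the whole cohort."""
    total = sum(g.n_women for g in groups)
    midpoints, below = [], 0
    for g in groups:
        midpoints.append(100 * (below + g.n_women / 2) / total)
        below += g.n_women
    return midpoints


def well_validated_share(groups):
    """Share of the cohort in groups whose E/O 95% CI includes 1."""
    total = sum(g.n_women for g in groups)
    covered = 0
    for g in groups:
        _, lower, upper = eo_ratio_ci(g.expected, g.observed)
        if lower <= 1.0 <= upper:
            covered += g.n_women
    return covered / total


# Hypothetical cohort of 10,000 women in three risk groups.
cohort = [
    RiskGroup(n_women=2000, expected=10.0, observed=20),
    RiskGroup(n_women=6000, expected=60.0, observed=62),
    RiskGroup(n_women=2000, expected=50.0, observed=30),
]

for group, midpoint in zip(cohort, midpoint_percentiles(cohort)):
    ratio, lower, upper = eo_ratio_ci(group.expected, group.observed)
    # log10(E/O) is the plotted y-value; the mid-point percentile is the x-value.
    print(f"percentile {midpoint:5.1f}: log10(E/O) = {math.log10(ratio):+.2f} "
          f"(E/O 95% CI {lower:.2f} to {upper:.2f})")

print(f"well-validated share: {well_validated_share(cohort):.0%}")
```

Placing each group at its mid-point percentile, rather than at its rank, is what allows a tool reporting quintiles to share an x-axis with one reporting deciles.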

2.7. Risk of Bias Assessment

3. Results

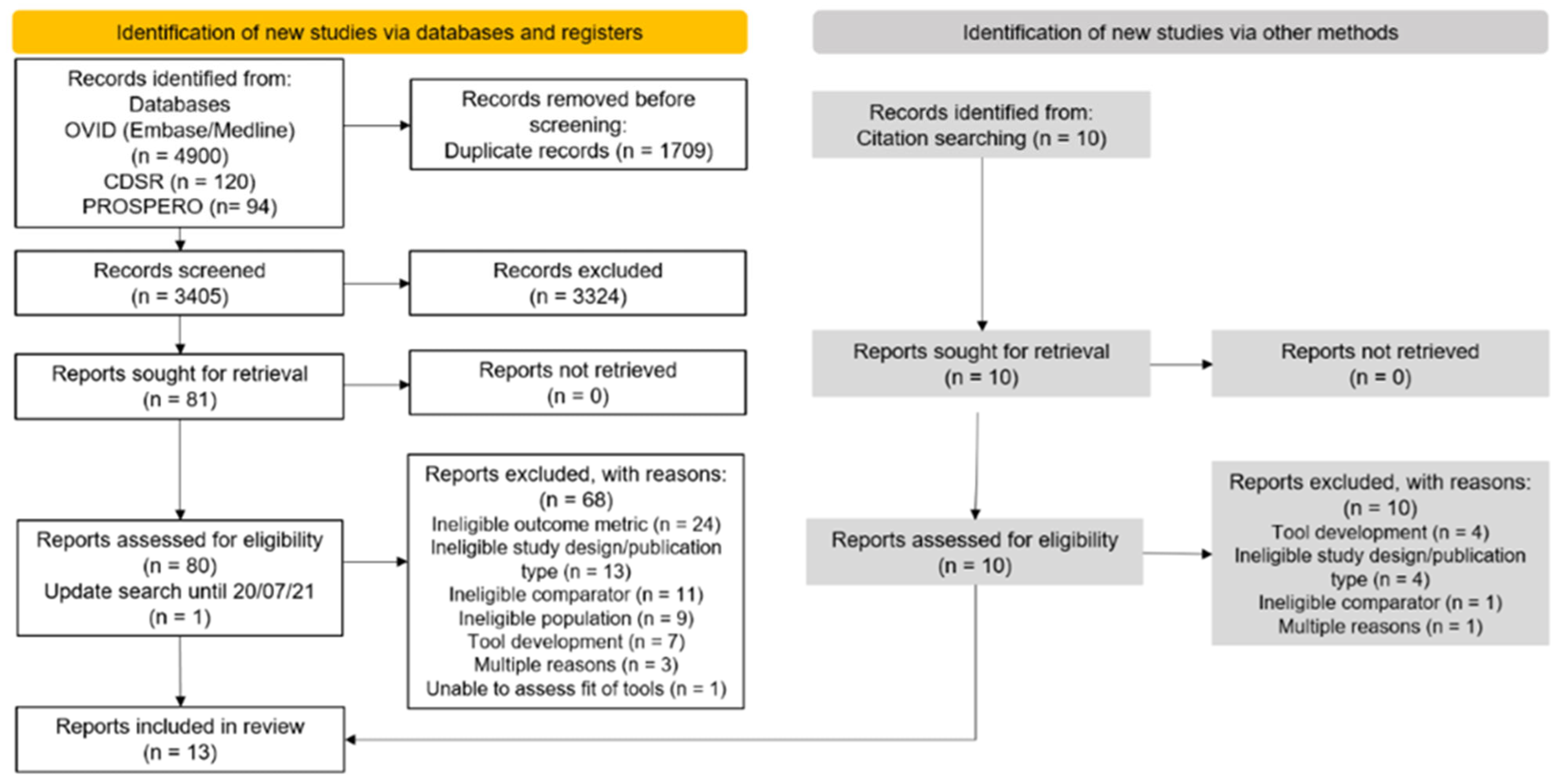

3.1. Selection of Articles and Summary Characteristics

3.2. Goodness-of-Fit

3.3. Observed Cancer Incidence by Risk Group

3.4. Risk of Bias Assessment

4. Discussion

4.1. Summary of Main Results

4.2. Comparison with Other Published Work

4.3. Applicability and Model Performance

4.4. Risk of Bias and Quality of the Evidence

4.5. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Schünemann, H.J.; Lerda, D.; Quinn, C.; Follmann, M.; Alonso-Coello, P.; Rossi, P.G.; Lebeau, A.; Nyström, L.; Broeders, M.; Ioannidou-Mouzaka, L.; et al. European Commission Initiative on Breast Cancer (ECIBC) Contributor Group. Breast Cancer Screening and Diagnosis: A Synopsis of the European Breast Guidelines. Ann. Intern. Med. 2020, 172, 46–56.
2. Monticciolo, D.L.; Newell, M.S.; Hendrick, R.E.; Helvie, M.A.; Moy, L.; Monsees, B.; Kopans, D.B.; Eby, P.R.; Sickles, E.A. Breast Cancer Screening for Average-Risk Women: Recommendations from the ACR Commission on Breast Imaging. J. Am. Coll. Radiol. 2017, 14, 1137–1143.
3. Siu, A.L.; U.S. Preventive Services Task Force. Screening for Breast Cancer: U.S. Preventive Services Task Force Recommendation Statement. Ann. Intern. Med. 2016, 164, 279–296.
4. Elder, K.; Nickson, C.; Pattanasri, M.; Cooke, S.; Machalek, D.; Rose, A.; Mou, A.; Collins, J.P.; Park, A.; De Boer, R.; et al. Treatment intensity differences after early-stage breast cancer (ESBC) diagnosis depending on participation in a screening program. Ann. Surg. Oncol. 2018, 25, 2563–2572.
5. Nelson, H.D.; Pappas, M.; Cantor, A.; Griffin, J.; Daeges, M.; Humphrey, L. Harms of Breast Cancer Screening: Systematic Review to Update the 2009 U.S. Preventive Services Task Force Recommendation. Ann. Intern. Med. 2016, 164, 256–267.
6. BreastScreen Australia Program Website. Available online: https://www.health.gov.au/initiatives-and-programs/breastscreen-australia-program (accessed on 24 October 2022).
7. UK Breast Screening Program Website. Available online: https://www.nhs.uk/conditions/breast-screening-mammogram/when-youll-be-invited-and-who-should-go/ (accessed on 24 October 2022).
8. Canadian Breast Cancer Screening Program Information. Available online: https://www.partnershipagainstcancer.ca/topics/breast-cancer-screening-scan-2019-2020/ (accessed on 24 October 2022).
9. Sankatsing, V.D.V.; van Ravesteyn, N.T.; Heijnsdijk, E.A.M.; Broeders, M.J.M.; de Koning, H.J. Risk stratification in breast cancer screening: Cost-effectiveness and harm-benefit ratios for low-risk and high-risk women. Int. J. Cancer 2020, 147, 3059–3067.
10. Trentham-Dietz, A.; Kerlikowske, K.; Stout, N.K.; Miglioretti, D.L.; Schechter, C.B.; Ergun, M.A.; van den Broek, J.J.; Alagoz, O.; Sprague, B.L.; van Ravesteyn, N.T.; et al. Tailoring Breast Cancer Screening Intervals by Breast Density and Risk for Women Aged 50 Years or Older: Collaborative Modeling of Screening Outcomes. Ann. Intern. Med. 2016, 165, 700–712.
11. Gail, M.H. Twenty-five years of breast cancer risk models and their applications. J. Natl. Cancer Inst. 2015, 107, djv042.
12. Zhang, X.; Rice, M.; Tworoger, S.S.; Rosner, B.A.; Eliassen, A.H.; Tamimi, R.M.; Joshi, A.D.; Lindstrom, S.; Qian, J.; Colditz, G.A.; et al. Addition of a polygenic risk score, mammographic density, and endogenous hormones to existing breast cancer risk prediction models: A nested case-control study. PLoS Med. 2018, 15, e1002644.
13. Shieh, Y.; Hu, D.; Ma, L.; Huntsman, S.; Gard, C.C.; Leung, J.W.; Tice, J.A.; Vachon, C.M.; Cummings, S.R.; Kerlikowske, K.; et al. Breast cancer risk prediction using a clinical risk model and polygenic risk score. Breast Cancer Res. Treat. 2016, 159, 513–525.
14. Nickson, C.; Procopio, P.; Velentzis, L.S.; Carr, S.; Devereux, L.; Mann, G.B.; James, P.; Lee, G.; Wellard, C.; Campbell, I. Prospective validation of the NCI Breast Cancer Risk Assessment Tool (Gail Model) on 40,000 Australian women. Breast Cancer Res. 2018, 20, 155.
15. Brittain, H.K.; Scott, R.; Thomas, E. The rise of the genome and personalised medicine. Clin. Med. 2017, 17, 545–551.
16. Harkness, E.F.; Astley, S.M.; Evans, D.G. Risk-based breast cancer screening strategies in women. Best Pract. Res. Clin. Obstet. Gynaecol. 2020, 65, 3–17.
17. Allman, R.; Spaeth, E.; Lai, J.; Gross, S.J.; Hopper, J.L. A streamlined model for use in clinical breast cancer risk assessment maintains predictive power and is further improved with inclusion of a polygenic risk score. PLoS ONE 2021, 16, e0245375.
18. Sherman, M.E.; Ichikawa, L.; Pfeiffer, R.M.; Miglioretti, D.L.; Kerlikowske, K.; Tice, J.; Vacek, P.M.; Gierach, G.L. Relationship of Predicted Risk of Developing Invasive Breast Cancer, as Assessed with Three Models, and Breast Cancer Mortality among Breast Cancer Patients. PLoS ONE 2016, 11, e0160966.
19. Abdolell, M.; Payne, J.I.; Caines, J.; Tsuruda, K.; Barnes, P.J.; Talbot, P.J.; Tong, O.; Brown, P.; Rivers-Bowerman, M.; Iles, S. Assessing breast cancer risk within the general screening population: Developing a breast cancer risk model to identify higher risk women at mammographic screening. Eur. Radiol. 2020, 30, 5417–5426.
20. van Veen, E.M.; Brentnall, A.R.; Byers, H.; Harkness, E.F.; Astley, S.M.; Sampson, S.; Howell, A.; Newman, W.G.; Cuzick, J.; Evans, D.G.R. Use of Single-Nucleotide Polymorphisms and Mammographic Density Plus Classic Risk Factors for Breast Cancer Risk Prediction. JAMA Oncol. 2018, 4, 476–482.
21. Eriksson, M.; Czene, K.; Pawitan, Y.; Leifland, K.; Darabi, H.; Hall, P. A clinical model for identifying the short-term risk of breast cancer. Breast Cancer Res. 2017, 19, 29.
22. Cancer Council Australia. Optimising Early Detection of Breast Cancer in Australia. Available online: https://www.cancer.org.au/about-us/policy-and-advocacy/early-detection-policy/breast-cancer-screening/optimising-early-detection (accessed on 24 October 2022).
23. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.
24. Wolff, R.F.; Moons, K.G.M.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Reitsma, J.B.; Kleijnen, J.; Mallett, S.; PROBAST Group. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann. Intern. Med. 2019, 170, 51–58.
25. Finazzi, S.; Poole, D.; Luciani, D.; Cogo, P.E.; Bertolini, G. Calibration belt for quality-of-care assessment based on dichotomous outcomes. PLoS ONE 2011, 6, e16110.
26. Li, S.X.; Milne, R.L.; Nguyen-Dumont, T.; English, D.R.; Giles, G.G.; Southey, M.C.; Antoniou, A.C.; Lee, A.; Winship, I.; Hopper, J.L.; et al. Prospective Evaluation over 15 Years of Six Breast Cancer Risk Models. Cancers 2021, 13, 5194.
27. Marshall, A.; Altman, D.G.; Royston, P.; Holder, R.L. Comparison of techniques for handling missing covariate data within prognostic modelling studies: A simulation study. BMC Med. Res. Methodol. 2010, 10, 7.
28. Sterne, J.A.C.; White, I.R.; Carlin, J.B.; Spratt, M.; Royston, P.; Kenward, M.G.; Wood, A.M.; Carpenter, J.R. Multiple imputation for missing data in epidemiological and clinical research: Potential and pitfalls. BMJ 2009, 338, b2393.
29. Hurson, A.N.; Choudhury, P.P.; Gao, C.; Hüsing, A.; Eriksson, M.; Shi, M.; Jones, M.E.; Evans, D.G.R.; Milne, R.L.; Gaudet, M.M.; et al. Prospective evaluation of a breast-cancer risk model integrating classical risk factors and polygenic risk in 15 cohorts from six countries. Int. J. Epidemiol. 2021, 23, dyab036.
30. Powell, M.; Jamshidian, F.; Cheyne, K.; Nititham, J.; Prebil, L.A.; Ereman, R. Assessing breast cancer risk models in Marin County, a population with high rates of delayed childbirth. Clin. Breast Cancer 2014, 14, 212–220.
31. Terry, M.B.; Liao, Y.; Whittemore, A.S.; Leoce, N.; Buchsbaum, R.; Zeinomar, N.; Dite, G.S.; Chung, W.K.; Knight, J.A.; Southey, M.C.; et al. 10-year performance of four models of breast cancer risk: A validation study. Lancet Oncol. 2019, 20, 504–517.
32. Chay, W.Y.; Ong, W.S.; Tan, P.H.; Leo, N.Q.J.; Ho, G.H.; Wong, C.S.; Chia, K.S.; Chow, K.Y.; Tan, M.S.; Ang, P.S. Validation of the Gail model for predicting individual breast cancer risk in a prospective nationwide study of 28,104 Singapore women. Breast Cancer Res. 2012, 14, R19.
33. Jantzen, R.; Payette, Y.; de Malliard, T.; Labbe, C.; Noisel, N.; Broet, P. Validation of breast cancer risk assessment tools on a French-Canadian population-based cohort. BMJ Open 2021, 11, e045078.
34. McCarthy, A.M.; Guan, Z.; Welch, M.; Griffin, M.E.; Sippo, D.A.; Deng, Z.; Coopey, S.B.; Acar, A.; Semine, A.; Parmigiani, G.; et al. Performance of breast cancer risk assessment models in a large mammography cohort. J. Natl. Cancer Inst. 2020, 112, djz177.
35. Choudhury, P.P.; Wilcox, A.N.; Brook, M.N.; Zhang, Y.; Ahearn, T.; Orr, N.; Coulson, P.; Schoemaker, M.J.; Jones, M.E.; Gail, M.H.; et al. Comparative validation of breast cancer risk prediction models and projections for future risk stratification. J. Natl. Cancer Inst. 2020, 112, djz113.
36. Hüsing, A.; Quante, A.S.; Chang-Claude, J.; Aleksandrova, K.; Kaaks, R.; Pfeiffer, R.M. Validation of two US breast cancer risk prediction models in German women. Cancer Causes Control 2020, 31, 525–536.
37. Jee, Y.H.; Gao, C.; Kim, J.; Park, S.; Jee, S.H.; Kraft, P. Validating breast cancer risk prediction models in the Korean Cancer Prevention Study-II Biobank. Cancer Epidemiol. Biomark. Prev. 2020, 29, 1271–1277.
38. Brentnall, A.R.; Cuzick, J.; Buist, D.S.M.; Bowles, E.J.A. Long-term Accuracy of Breast Cancer Risk Assessment Combining Classic Risk Factors and Breast Density. JAMA Oncol. 2018, 4, e180174.
39. Li, K.; Anderson, G.; Viallon, V.; Arveux, P.; Kvaskoff, M.; Fournier, A.; Krogh, V.; Tumino, R.; Sánchez, M.J.; Ardanaz, E.; et al. Risk prediction for estrogen receptor-specific breast cancers in two large prospective cohorts. Breast Cancer Res. 2018, 20, 147.
40. Min, J.W.; Chang, M.C.; Lee, H.K.; Hur, M.H.; Noh, D.Y.; Yoon, J.H.; Jung, Y.; Yang, J.H.; Korean Breast Cancer Society. Validation of risk assessment models for predicting the incidence of breast cancer in Korean women. J. Breast Cancer 2014, 17, 226–235.
41. Arrospide, A.; Forne, C.; Rue, M.; Tora, N.; Mar, J.; Bare, M. An assessment of existing models for individualized breast cancer risk estimation in a screening program in Spain. BMC Cancer 2013, 13, 587.
42. Keogh, L.A.; Steel, E.; Weideman, P.; Butow, P.; Collins, I.M.; Emery, J.D.; Mann, G.B.; Bickerstaffe, A.; Trainer, A.H.; Hopper, L.J.; et al. Consumer and clinician perspectives on personalising breast cancer prevention information. Breast 2019, 43, 39–47.
43. The Royal Australian College of General Practitioners. Guidelines for Preventive Activities in General Practice. 9th edn, updated. East Melbourne, Vic: RACGP. 2018. Available online: https://www.racgp.org.au/FSDEDEV/media/documents/Clinical%20Resources/Guidelines/Red%20Book/Guidelines-for-preventive-activities-in-general-practice.pdf (accessed on 28 October 2022).
44. Phillips, K.A.; Liao, Y.; Milne, R.L.; MacInnis, R.J.; Collins, I.M.; Buchsbaum, R.; Weideman, P.C.; Bickerstaffe, A.; Nesci, S.; Chung, W.K.; et al. Accuracy of Risk Estimates from the iPrevent Breast Cancer Risk Assessment and Management Tool. JNCI Cancer Spectr. 2019, 3, pkz066.
45. Louro, J.; Posso, M.; Hilton Boon, M.; Román, M.; Domingo, L.; Castells, X.; Sala, M. A systematic review and quality assessment of individualised breast cancer risk prediction models. Br. J. Cancer 2019, 121, 76–85.
46. Cintolo-Gonzalez, J.A.; Braun, D.; Blackford, A.L.; Mazzola, E.; Acar, A.; Plichta, J.K.; Griffin, M.; Hughes, K.S. Breast cancer risk models: A comprehensive overview of existing models, validation, and clinical applications. Breast Cancer Res. Treat. 2017, 164, 263–284.
47. Anothaisintawee, T.; Teerawattananon, Y.; Wiratkapun, C.; Kasamesup, V.; Thakkinstian, A. Risk prediction models of breast cancer: A systematic review of model performances. Breast Cancer Res. Treat. 2012, 133, 1–10.
48. Meads, C.; Ahmed, I.; Riley, R.D. A systematic review of breast cancer incidence risk prediction models with meta-analysis of their performance. Breast Cancer Res. Treat. 2012, 132, 365–377.
49. Moons, K.G.; Kengne, A.P.; Grobbee, D.E.; Royston, P.; Vergouwe, Y.; Altman, D.G.; Woodward, M. Risk prediction models: II. External validation, model updating, and impact assessment. Heart 2012, 98, 691–698.
50. Collins, G.S.; de Groot, J.A.; Dutton, S.; Omar, O.; Shanyinde, M.; Tajar, A.; Voysey, M.; Wharton, R.; Yu, L.M.; Moons, K.G.; et al. External validation of multivariable prediction models: A systematic review of methodological conduct and reporting. BMC Med. Res. Methodol. 2014, 14, 40.
51. MyPEBS. Available online: https://www.mypebs.eu/the-project/ (accessed on 24 October 2022).
52. WISDOM. Available online: https://www.thewisdomstudy.org/learn-more/ (accessed on 24 October 2022).
53. MyPeBS. Breast Cancer Risk Assessment Models. Available online: https://www.mypebs.eu/breast-cancer-screening/ (accessed on 27 October 2022).
54. The WISDOM Study. Fact Sheet for Healthcare Providers. Available online: https://thewisdomstudy.wpenginepowered.com/wp-content/uploads/2020/10/The-WISDOM-Study_Provider-Factsheet.pdf (accessed on 27 October 2022).

| Study ID | Country | Cohort | Age Range (Median), y | N | Study Start | Screening | Tool Comparisons | FU | Calibrated to Population? | Outcome: Breast Cancer | Outcome: Risk Interval (y) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Jantzen 2021 [33] | Canada | CARTaGENE | 40–69 (53.1) | 10,200 | 2009–2010 | 2-yearly, 50–69 y | TC v8.0b vs. BCRAT v4 a | 5 | No | Invasive | 5 |
| Hurson 2021 [29] | UK | UK Biobank | Age subgroups: <50 years: 40–49 at DNA collection (46); ≥50 years: 50–72 at DNA collection (61) | <50 years: 36,005; ≥50 years: 134,920 | 2006 | NR | iCARE-BPC3 vs. iCARE-BPC3 + PRS; iCARE-Lit vs. iCARE-Lit + PRS | 4 | Yes Yes | Invasive or DCIS | 5 |
| Hurson 2021 [29] | USA | WGHS b | 50–74 at DNA collection; (56) | 17,001 | 2000 | NR | iCARE-Lit vs. iCARE-Lit + PRS | 21 d | Yes Yes | Invasive or DCIS | 5 |
| McCarthy 2020 [34] | USA | Newton-Wellesley Hospital | 40–84; (53.9) d | 35,921 | 2007–2009 | NR | TC v7 vs. TC v8.0b; BCRAT v4 a vs. BRCAPRO v2.1–4 | 6.7 d | No Yes | Invasive | 6 |
| Choudhury 2020 [35] | UK | Generations Study | Age subgroups: <50 years: 35–49 (42); ≥50 years: 50–74 (58) | <50 years: 28,232; ≥50 years: 36,642 | 2003–2012 | NR | TC v8 vs. iCARE-Lit, TC v8 vs. iCARE-BPC3, BCRAT v3 vs. iCARE-Lit, iCARE-BPC3 vs. iCARE-Lit aRAT c | 5 | Yes Yes Yes | Invasive | 5 |
| Choudhury 2020 [35] | USA | PLCO | 50–75; (61) | 48,279 | 1993–2001 | NR | BCRAT v3 a vs. iCARE-Lit aRAT c | 5 | Yes | Invasive | 5 |
| Hüsing 2020 [36] | Germany | EPIC-Germany | 20–70; (40+: median 52.6) | 22,098 | 1994–1998 | NR | BCRAT v3 a vs. BCRmod; BCRAT v3 a recalibrated vs. BCRmod recalibrated | 11.8 | No Yes | Invasive | 5 |
| Jee 2020 [37] | Republic of Korea | KCPS-II Biobank | Age subgroups: <50 years: 21–49 (38); ≥50 years: 50–80 (58) | <50 years: 57,439; ≥50 years: 19,776 | 2004–2013 | 2-yearly, ≥40 years | KREA vs. KRKR (iCARE-Lit-based tools) aRAT c | 8.6 | Yes | Invasive | 5 |
| Terry 2019 [31] | USA, Canada, Australia | ProF-SC | 20–70; (NR) | 15,732 | 1992–2011 | NR | BCRAT v4 a vs. BRCAPRO v2.1–3; TC v8.0b vs. BCRAT v4 a; BOADICEA v3 vs. BRCAPRO v2.1–3; BOADICEA v3 vs. BCRAT v4 a | 11.1 | No No No No | Invasive | 5, 10 |
| Brentnall 2018 [38] | USA | Kaiser Permanente Washington BCSC | 40–75; (50) (general population: ≥50 y; high risk: ≥40 y) | 132,139 | 1996–2013 | Annually; 50–75 y; high-risk women 40–49 y e | TC v7.02 vs. TC v7.02 + breast density | 5.2 | No | Invasive | 10 |
| Li 2018 [39] | USA | WHI | 50–79; (63.2) d | 82,319 | 1993–1998 | NR | ER- vs. ER+ aRAT c | 8.2 d | No | Invasive | 5 |
| Min 2014 [40] | Republic of Korea | Women’s Healthcare Center of Cheil General Hospital, Seoul | <29 to ≥60; (NR) | 40,229 | 1999–2004 | NR | BCRAT v2 a vs. AABCS; Original Korean tool vs. Updated Korean tool | NR | No Yes | Invasive | 5 |
| Powell 2014 [30] | USA | MWS | <40 to ≥80; (NR) | 12,843 | 2003–2007 | NR | BCRAT v2 or v3 a vs. BRCAPRO v(NR) aRAT c | NR | Yes | Invasive | 5 |
| Arrospide 2013 [41] | Spain | Screening in Sabadell-Cerdanyola (EDBC-SC) area in Catalonia | 50–69; (57.0) d | 13,760 | 1995–1998 | 2-yearly; 50–69 y | BCRAT v1 a,f vs. Chen v1 | 13.3 | Yes | Invasive | 5 g |
| Chay 2012 [32] | Singapore | SBCSP | 50–64;h (NR) | 28,104 i | 1994–1997 k | Single 2-view mammogram, 50–64 y | BCRAT v2 a vs. AABCS | NR | No | Invasive | 5, 10 |

| Study (Country, Age Range) | Model | Proportion of Cohort Well-Validated a | Evidence of Miscalibration (p-Value) | Miscalibration b | Lower Q Compared to Middle Qs (p-Value) | Distinguishes Women in Lowest RG? b,c | Upper Q Compared to Middle Qs (p-Value) | Distinguishes Women in Highest RG? b,c | Trend in Observed Rates |
|---|---|---|---|---|---|---|---|---|---|
| Tyrer-Cuzick vs. BCRAT (5-year risk) | | | | | | | | | |
| Jantzen 2021 [33] (Canada, 50–69 y) | TC v8.0b | 2/4 (18%) | 0.045 | Yes | <0.001 | N/A | <0.001 | N/A | Fluctuating |
| | BCRAT v4 | 3/4 (84%) | 0.035 | Yes | <0.001 | N/A | <0.001 | N/A | Fluctuating |
| Terry 2019 [31] (USA, Canada, Australia, 20–70 y) | TC v8.0b | 2/4 (40%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | BCRAT v4 | 1/4 (16%) | <0.001 | Yes | 0.004 | N/A | 0.004 | N/A | Increasing |
| Tyrer-Cuzick vs. BCRAT (10-year risk) | | | | | | | | | |
| Terry 2019 [31] (USA, Canada, Australia, 20–70 y) | BCRAT v4 | 1/4 (26%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | TC v8.0b | 2/4 (42%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| Tyrer-Cuzick vs. its variants or other tools (5–6 year risk) | | | | | | | | | |
| Choudhury 2020 [35], 5 y risk (UK cohort) | TC v8 (<50 y) | 9/10 (90%) | 0.074 | No | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-Lit (<50 y) | 10/10 (100%) | 0.251 | No | 0.006 | Yes | <0.001 | Yes | Fluctuating |
| | TC v8 (≥50 y) | 7/10 (70%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-Lit (≥50 y) | 9/10 (90%) | 0.010 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-BPC3 (≥50 y) | 9/10 (90%) | 0.997 | No | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| McCarthy 2020 [34], 6 y risk (USA, 40–84 y) | TC v7 | 7/10 (70%) | 0.002 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | TC v8.0b | 6/10 (60%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| Tyrer-Cuzick tool variants (10-year risk) | | | | | | | | | |
| Brentnall 2018 [38], 10 y risk (USA, 40–75 y) | TC v7.02 | 2/5 (55%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | TC v7.02 + MD | 2/5 (47%) | <0.001 | Yes | <0.001 | N/A | <0.001 | Yes | Increasing |
| BCRAT vs. its modifications (5-year risk) | | | | | | | | | |
| Chay 2012 [32] (Singapore, 50–64 y) | BCRAT v2 | 0/5 (0%) | <0.001 | Yes | 0.269 | No | 0.004 | Yes | Fluctuating |
| | AABCS | 3/5 (60%) | <0.001 | Yes | 0.082 | No | <0.001 | Yes | Monotonic |
| Hüsing 2020 [36] (Germany, 20–70 y) | BCRAT v3 | 10/10 (100%) | 0.918 | No | <0.001 | Yes | 0.018 | Yes | Fluctuating |
| | BCRmod | 10/10 (100%) | 0.227 | No | 0.002 | Yes | <0.001 | Yes | Fluctuating |
| | BCRAT v3 recalibrated | 10/10 (100%) | 0.324 | No | <0.001 | Yes | 0.011 | Yes | Fluctuating |
| | BCRmod recalibrated | 7/10 (70%) | 0.007 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| Min 2014 [40] (Republic of Korea, <29 to ≥60 y) | BCRAT v2 | 1/5 (19%) | <0.001 | Yes | 0.333 | No | 0.010 | Yes | Fluctuating |
| | AABCS | 2/5 (40%) | <0.001 | Yes | 0.464 | No | 0.016 | Yes | Fluctuating |
| BCRAT vs. its modifications (10-year risk) | | | | | | | | | |
| Chay 2012 [32] (Singapore, 50–64 y) | BCRAT v2 | 0/5 (0%) | <0.001 | Yes | 0.253 | No | <0.001 | Yes | Fluctuating |
| | AABCS | 5/5 (100%) | 0.719 | No | 0.007 | Yes | <0.001 | Yes | Increasing |
| BCRAT vs. other risk assessment tools (5-year risk) | | | | | | | | | |
| Arrospide 2013 [41] (Spain, 50–69 y) | BCRAT v1 | 5/5 (100%) | 0.289 | No | 0.599 | No | 0.004 | Yes | Fluctuating |
| | Chen v1 | 5/5 (100%) | 0.124 | No | 0.430 | No | 0.060 | No | Fluctuating |
| Choudhury 2020 [35] (USA cohort, 50–75 y) | BCRAT v3 | 3/10 (30%) | <0.001 | Yes | 0.045 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-Lit | 6/10 (60%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| McCarthy 2020 [34] (6-year risk only) (USA, 40–84 y) | BCRAT v4 | 10/10 (100%) | 0.863 | No | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | BRCAPRO v2.1–4 | 9/10 (90%) | 0.061 | No | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| Powell 2014 [30] (USA, <40 to ≥80 y) | BCRAT v2 or 3 | 9/10 (90%) | 0.009 | Yes | <0.001 | Yes | 0.003 | Yes | Fluctuating |
| | BRCAPRO v(NR) | 4/10 (40%) | <0.001 | Yes | 0.012 | Yes | <0.001 | Yes | Fluctuating |
| Terry 2019 [31] (USA, Canada, Australia, 20–70 y) | BCRAT v4 | 1/4 (26%) | <0.001 | Yes | 0.004 | N/A | <0.001 | N/A | Increasing |
| | BRCAPRO v2.1–3 | 0/4 (0%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | BOADICEA v3 | 2/4 (44%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| BCRAT vs. other risk assessment tools (10-year risk) | | | | | | | | | |
| Terry 2019 [31] (USA, Canada, Australia, 20–70 y) | BCRAT v4 | 1/4 (26%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | BRCAPRO v2.1–3 | 1/4 (7%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| | BOADICEA v3 | 3/4 (66%) | <0.001 | Yes | <0.001 | N/A | <0.001 | N/A | Increasing |
| Tool comparisons with and without polygenic risk scores (5-year risk) | | | | | | | | | |
| Hurson 2021 [29] (UK cohort) | iCARE-Lit (<50 y) | 6/10 (60%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-Lit + PRS (<50 y) | 8/10 (80%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Increasing |
| | iCARE-Lit (≥50 y) | 9/10 (90%) | 0.041 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-Lit + PRS (≥50 y) | 9/10 (90%) | 0.004 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-BPC3 (≥50 y) | 10/10 (100%) | 0.020 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | iCARE-BPC3 + PRS (≥50 y) | 10/10 (100%) | 0.002 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| Other risk assessment tools (5-year risk) | | | | | | | | | |
| Jee 2020 [37] (Republic of Korea) | KREA (<50 y) | 5/10 (50%) | 0.022 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | KRKR (<50 y) | 4/10 (40%) | 0.383 | No | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| | KREA (≥50 y) | 6/10 (60%) | 0.341 | No | 0.002 | Yes | 0.160 | No | Fluctuating |
| | KRKR (≥50 y) | 3/10 (30%) | 0.127 | No | 0.005 | Yes | 0.222 | No | Fluctuating |
| Li 2018 [39] (USA, 50–79 y) | ER- | 9/10 (90%) | 0.044 | Yes | 0.810 | No | 0.380 | No | Fluctuating |
| | ER+ | 9/10 (90%) | <0.001 | Yes | <0.001 | Yes | <0.001 | Yes | Fluctuating |
| Min 2014 [40] (Republic of Korea, <29 to ≥60 y) | Original Korean tool | 1/5 (20%) | <0.001 | Yes | 0.439 | No | 0.356 | No | Fluctuating |
| | Updated Korean tool | 2/5 (40%) | <0.001 | Yes | 0.640 | No | 0.022 | Yes | Fluctuating |
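
The trend categories and the upper-versus-middle comparison reported in the table above follow the definitions in Section 2.6, and can be sketched as below. This is an illustration under stated assumptions: the function names and counts are hypothetical, and the pooled two-proportion z-test is a stand-in, since the statistical test behind the reported p-values is not named in the text.

```python
import math


def rate_per_10000(cancers, women):
    """Observed cancers per 10,000 women in a risk group."""
    return 10_000 * cancers / women


def classify_trend(rates):
    """Classify rates ordered from the lowest to the highest risk group."""
    steps = [later - earlier for earlier, later in zip(rates, rates[1:])]
    if all(step > 0 for step in steps):
        return "increasing"   # rises at every step
    if all(step >= 0 for step in steps):
        return "monotonic"    # rises or stays level, never falls
    return "fluctuating"      # any other pattern


def two_proportion_p_value(cancers_a, women_a, cancers_b, women_b):
    """Two-sided p-value from a pooled two-proportion z-test (assumed test)."""
    pooled = (cancers_a + cancers_b) / (women_a + women_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / women_a + 1 / women_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (cancers_a / women_a - cancers_b / women_b) / se
    return math.erfc(abs(z) / math.sqrt(2))


def counts_toward_conclusions(extreme_group_share):
    """Section 2.6 rule: p-values are set aside if the extreme group holds >25% of the cohort."""
    return extreme_group_share <= 0.25


# Hypothetical quintiles: (cancers, women) from lowest to highest risk.
quintiles = [(20, 2000), (24, 2000), (30, 2000), (30, 2000), (52, 2000)]
rates = [rate_per_10000(c, n) for c, n in quintiles]
print(classify_trend(rates))

# Highest quintile versus the pooled mid-range quintiles 2-4.
mid_cancers = sum(c for c, _ in quintiles[1:4])
mid_women = sum(n for _, n in quintiles[1:4])
p = two_proportion_p_value(52, 2000, mid_cancers, mid_women)
print(f"p = {p:.4f}, counted: {counts_toward_conclusions(2000 / 10000)}")
```

Using strict and non-strict inequalities for the first two categories keeps 'increasing' as a special case of 'monotonic', which matches how the two labels are defined in the Methods.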

| Study | RAT | Cohort | Risk Interval (y) | Breast Cancer Outcome | RoB: Participants | RoB: Predictors | RoB: Outcome | RoB: Analysis a | Overall RoB |
|---|---|---|---|---|---|---|---|---|---|
| Hurson 2021 [29] | iCARE-BPC3 | UK Biobank | 5 | Invasive or DCIS | LR | LR | U | HR | HR |
| Hurson 2021 [29] | iCARE-BPC3 + PRS | UK Biobank | 5 | Invasive or DCIS | LR | U | U | HR | HR |
| Hurson 2021 [29] | iCARE-Lit | UK Biobank | 5 | Invasive or DCIS | LR | LR | U | HR | HR |
| Hurson 2021 [29] | iCARE-Lit + PRS | UK Biobank | 5 | Invasive or DCIS | LR | U | U | HR | HR |
| Hurson 2021 [29] | iCARE-Lit | WGHS | 5 | Invasive or DCIS | LR | U | U | HR | HR |
| Hurson 2021 [29] | iCARE-Lit + PRS | WGHS | 5 | Invasive or DCIS | LR | U | U | HR | HR |
| Jantzen 2021 [33] | TC v8 | CARTaGENE | 5 | Invasive | LR | LR | U | HR | HR |
| Jantzen 2021 [33] | BCRAT v4 | CARTaGENE | 5 | Invasive | LR | LR | U | HR | HR |
| McCarthy 2020 [34] | TC v7 | NWH | 6 | Invasive | HR | LR | U | HR | HR |
| McCarthy 2020 [34] | TC v8.0b | NWH | 6 | Invasive | HR | LR | U | HR | HR |
| McCarthy 2020 [34] | BCRAT v4 | NWH | 6 | Invasive | LR | LR | U | HR | HR |
| McCarthy 2020 [34] | BRCAPRO v2.1–4 | NWH | 6 | Invasive | HR | LR | U | HR | HR |
| Choudhury 2020 [35] | TC v8 | GS | 5 | Invasive | LR | U | U | HR | HR |
| Choudhury 2020 [35] | iCARE-Lit | GS | 5 | Invasive | LR | U | U | HR | HR |
| Choudhury 2020 [35] | iCARE-BPC3 | GS | 5 | Invasive | LR | U | U | HR | HR |
| Choudhury 2020 [35] | BCRAT v3 | PLCO | 5 | Invasive | LR | LR | U | HR | HR |
| Choudhury 2020 [35] | iCARE-Lit | PLCO | 5 | Invasive | LR | LR | U | HR | HR |
| Hüsing 2020 [36] | BCRAT v3 | EPIC-Germany | 5 | Invasive | HR | U | HR | HR | HR |
| Hüsing 2020 [36] | BCRmod | EPIC-Germany | 5 | Invasive | LR | U | HR | HR | HR |
| Hüsing 2020 [36] | BCRAT v3 recalibrated | EPIC-Germany | 5 | Invasive | HR | U | HR | HR | HR |
| Hüsing 2020 [36] | BCRmod recalibrated | EPIC-Germany | 5 | Invasive | LR | U | HR | HR | HR |
| Jee 2020 [37] | KREA | KCPS-II Biobank | 5 | Invasive | LR | LR | U | HR | HR |
| Jee 2020 [37] | KRKR | KCPS-II Biobank | 5 | Invasive | LR | LR | U | HR | HR |
| Terry 2019 [31] | BCRAT v4 | ProF-SC | 5 | Invasive | HR | HR | HR | HR | HR |
| Terry 2019 [31] | BRCAPRO v2.1–3 | ProF-SC | 5 | Invasive | LR | HR | HR | HR | HR |
| Terry 2019 [31] | TC v8.0b | ProF-SC | 5 | Invasive | LR | HR | HR | HR | HR |
| Terry 2019 [31] | BOADICEA v3 | ProF-SC | 5 | Invasive | LR | HR | HR | HR | HR |
| Terry 2019 [31] | BCRAT v4 | ProF-SC | 10 | Invasive | HR | HR | HR | HR | HR |
| Terry 2019 [31] | BRCAPRO v2.1–3 | ProF-SC | 10 | Invasive | LR | HR | HR | HR | HR |
| Terry 2019 [31] | TC v8.0b | ProF-SC | 10 | Invasive | LR | HR | HR | HR | HR |
| Terry 2019 [31] | BOADICEA v3 | ProF-SC | 10 | Invasive | LR | HR | HR | HR | HR |
| Brentnall 2018 [38] | TC v7.02 | KPW-BCSC | 10 | Invasive | LR | HR | U | HR | HR |
| Brentnall 2018 [38] | TC v7.02 + BD | KPW-BCSC | 10 | Invasive | LR | HR | U | HR | HR |
| Li 2018 [39] | ER- | WHI | 5 | Invasive | LR | U | HR | HR | HR |
| Li 2018 [39] | ER+ | WHI | 5 | Invasive | LR | U | HR | HR | HR |
| Min 2014 [40] | BCRAT v2 | WHC CGH | 5 | Invasive | HR | LR | U | HR | HR |
| Min 2014 [40] | AABCS | WHC CGH | 5 | Invasive | HR | LR | U | HR | HR |
| Min 2014 [40] | Original Korean tool | WHC CGH | 5 | Invasive | HR | LR | U | HR | HR |
| Min 2014 [40] | Updated Korean tool | WHC CGH | 5 | Invasive | HR | LR | U | HR | HR |
| Powell 2014 [30] | BCRAT v2 or 3 | MWS | 5 | Invasive | HR | HR | U | HR | HR |
| Powell 2014 [30] | BRCAPRO v(NR) | MWS | 5 | Invasive | LR | HR | U | HR | HR |
| Arrospide 2013 [41] | BCRAT v1 | SC-BCSP | 5 | Invasive | LR | LR | HR | HR | HR |
| Arrospide 2013 [41] | Chen v1 | SC-BCSP | 5 | Invasive | LR | HR | HR | HR | HR |
| Chay 2012 [32] | BCRAT v2 | SBCSP | 5 | Invasive | LR | HR | U | HR | HR |
| Chay 2012 [32] | AABCS | SBCSP | 5 | Invasive | LR | HR | U | HR | HR |
| Chay 2012 [32] | BCRAT v2 | SBCSP | 10 | Invasive | LR | HR | U | HR | HR |
| Chay 2012 [32] | AABCS | SBCSP | 10 | Invasive | LR | HR | U | HR | HR |
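
The overall rating in the last column is consistent with the usual PROBAST convention for combining domain ratings, which can be sketched as follows. The sketch assumes that LR, HR and U denote low, high and unclear risk of bias (the table legend is not reproduced here), and the function name `overall_rob` is hypothetical.

```python
VALID_RATINGS = {"LR", "HR", "U"}  # assumed meanings: low, high, unclear risk of bias


def overall_rob(domain_ratings):
    """Combine per-domain ratings into one overall rating.

    Convention assumed here: any high-risk domain makes the overall rating
    high; otherwise any unclear domain makes it unclear; only when every
    domain is low risk is the overall rating low.
    """
    ratings = list(domain_ratings)
    unknown = set(ratings) - VALID_RATINGS
    if not ratings or unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")
    if "HR" in ratings:
        return "HR"
    if "U" in ratings:
        return "U"
    return "LR"


# Domain order: participants, predictors, outcome, analysis.
print(overall_rob(["LR", "LR", "U", "HR"]))
```

Under this convention a single high-risk domain is decisive, which is why a high rating in the analysis domain alone is enough to give every row of the table an overall high rating.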

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Velentzis, L.S.; Freeman, V.; Campbell, D.; Hughes, S.; Luo, Q.; Steinberg, J.; Egger, S.; Mann, G.B.; Nickson, C. Breast Cancer Risk Assessment Tools for Stratifying Women into Risk Groups: A Systematic Review. Cancers 2023, 15, 1124. https://doi.org/10.3390/cancers15041124
