Virtual Reality-Assisted Awake Craniotomy: A Retrospective Study

Simple Summary

Abstract

1. Introduction

2. Methods

2.1. Patients

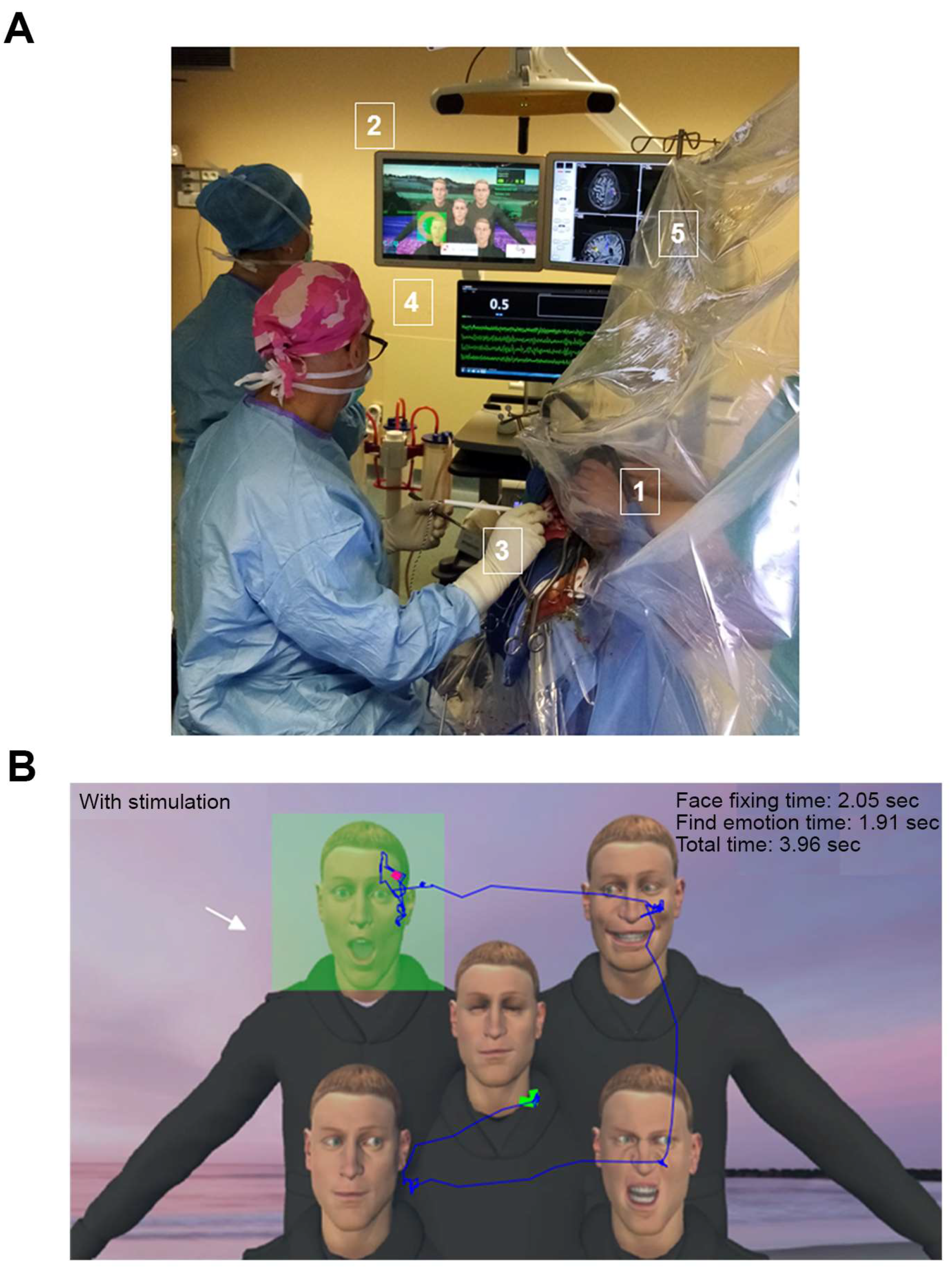

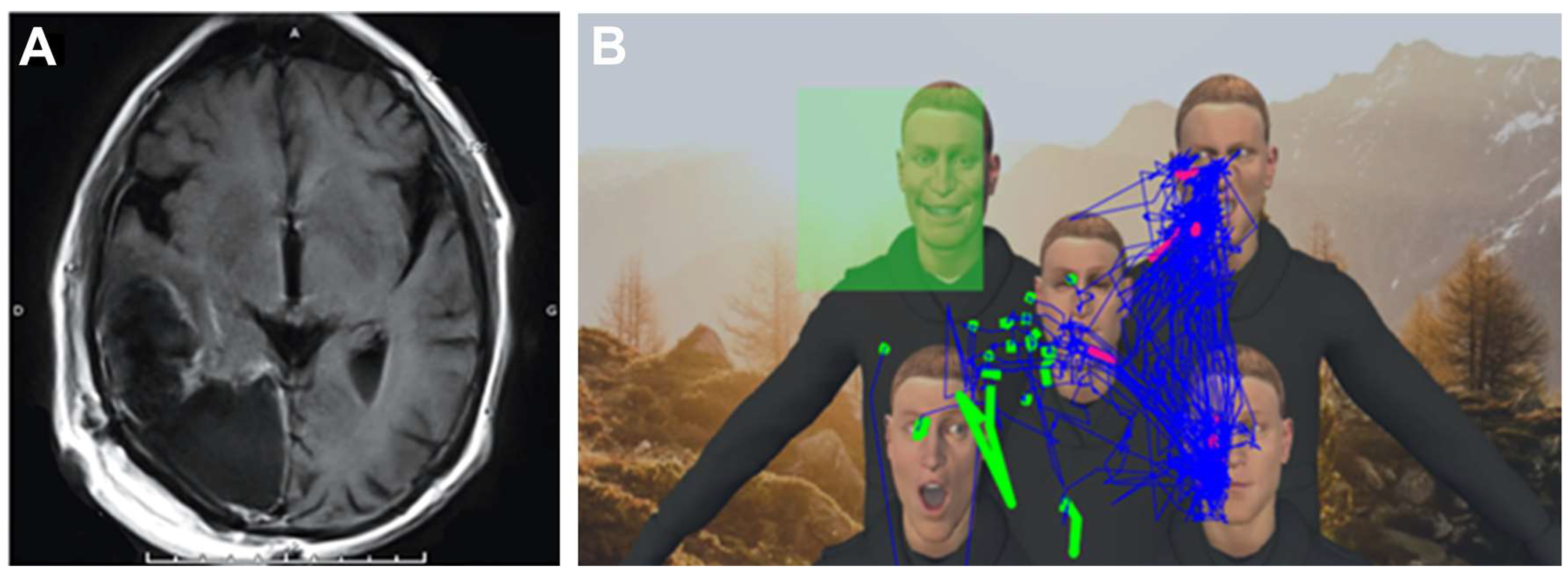

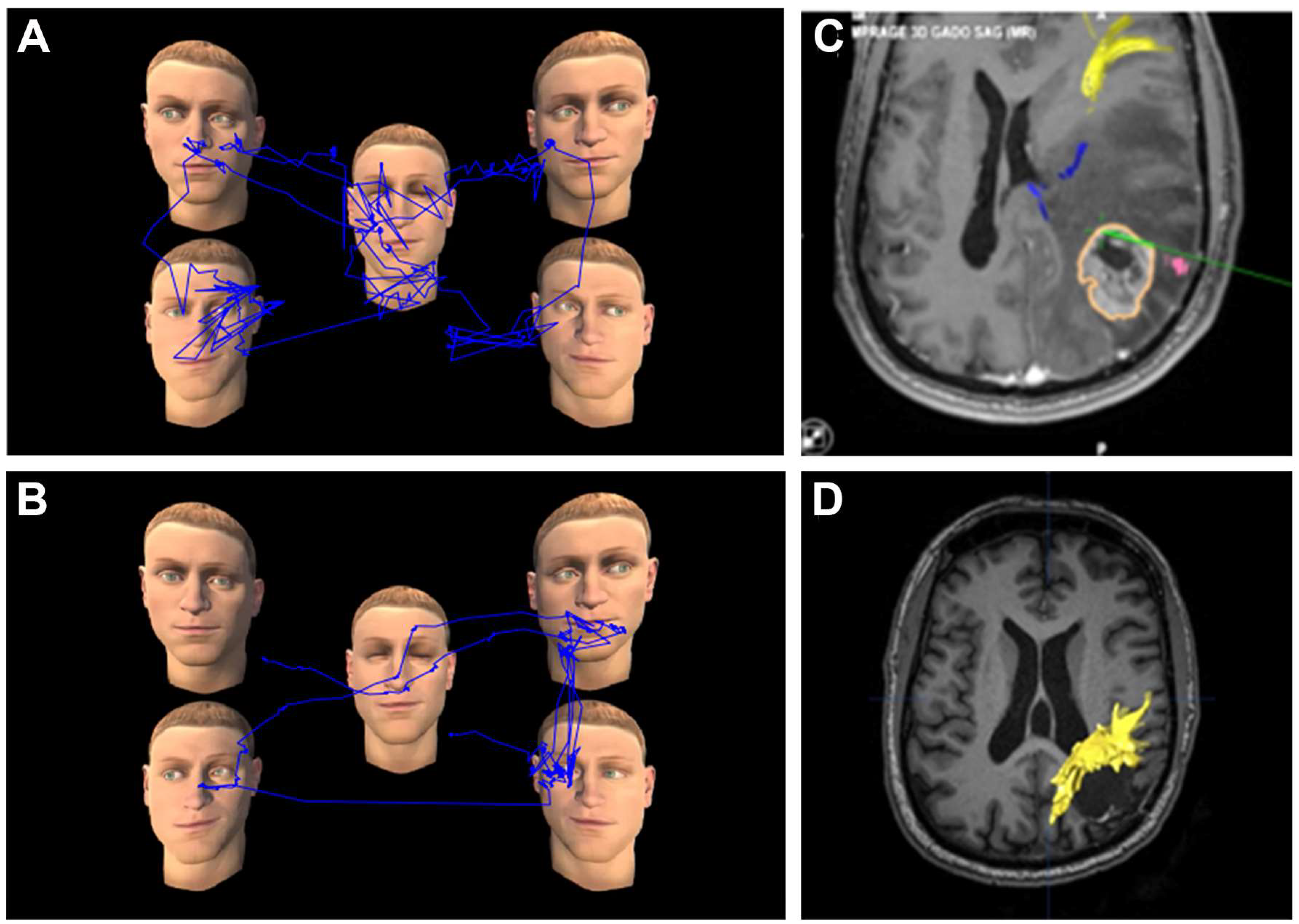

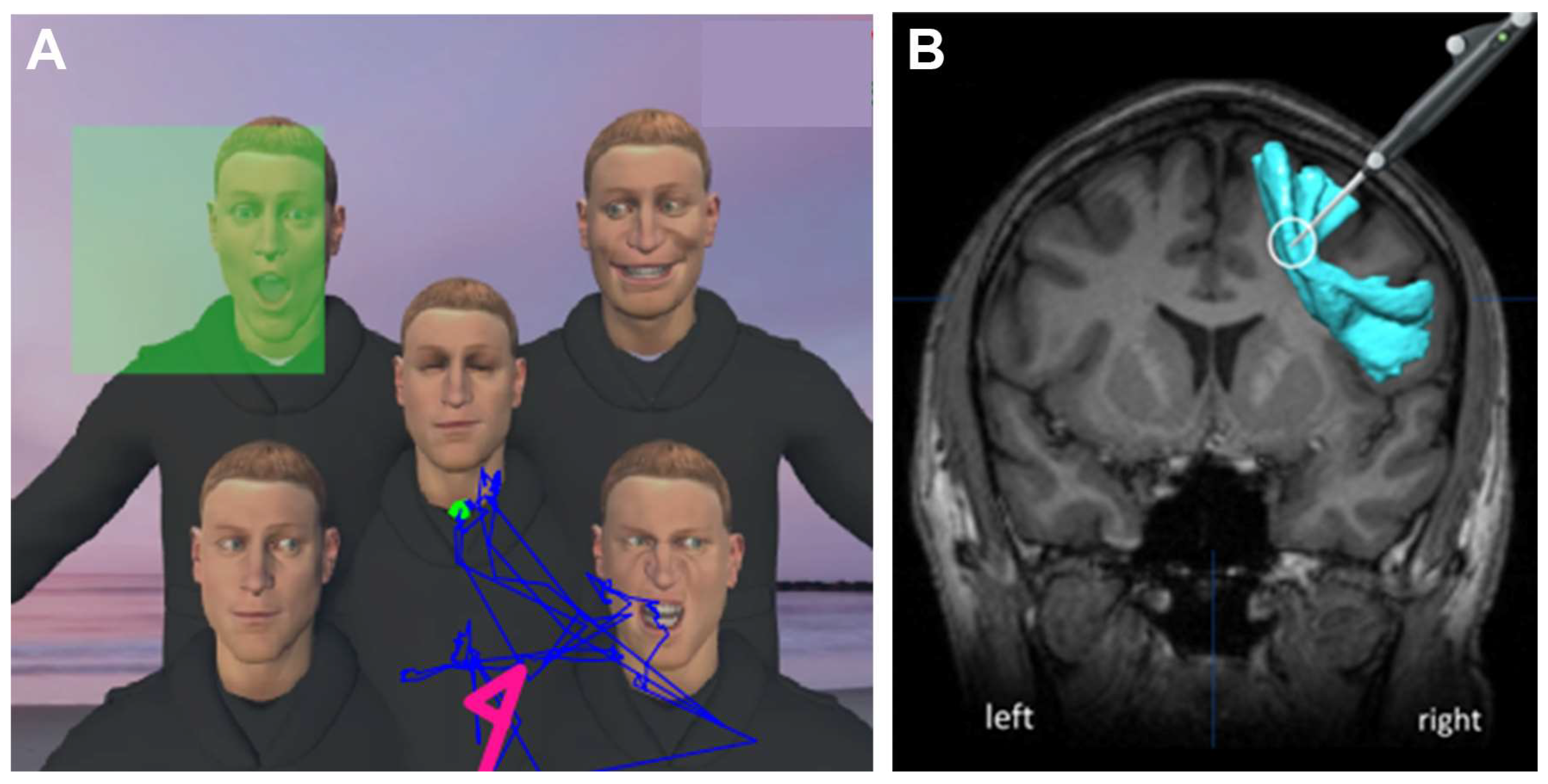

2.2. VRHs

2.3. VR Tasks

2.4. Operative Procedure

3. Results

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations


| Patients (n = 64) | n | % |
|---|---|---|
| Age (years), median (range) | 51 (23–75) | |
| <70 | 61 | 95.3 |
| ≥70 | 3 | 4.7 |
| Sex | | |
| Male | 37 | 57.8 |
| Female | 27 | 42.2 |
| Handedness | | |
| Right | 58 | 90.6 |
| Left | 6 | 9.4 |
| Tumor location: hemisphere | | |
| Left | 38 | 59.4 |
| Right | 26 | 40.6 |
| Tumor location: lobe | | |
| Frontal | 31 | 48.4 |
| Temporal | 3 | 4.7 |
| Parietal | 14 | 21.9 |
| Occipital | 2 | 3.1 |
| Insular | 1 | 1.6 |
| Temporo-parietal junction | 8 | 12.5 |
| Fronto-temporo-insular | 5 | 7.8 |
| Tumor histology | | |
| Glioblastoma | 21 | 32.8 |
| Anaplastic astrocytoma | 16 | 25.0 |
| Anaplastic oligodendroglioma | 9 | 14.1 |
| Oligodendroglioma grade 2 | 3 | 4.7 |
| Metastasis | 14 | 21.9 |
| Benign cystic lesion | 1 | 1.6 |

| Awake surgery (n = 69) | n | % |
|---|---|---|
| Duration of surgery, median (range) | 2 h 17 min (1 h–5 h 20 min) | |
| Time awake, median (range) | 1 h 39 min (38 min–4 h 30 min) | |
| Intensity of stimulation (mA), median (range) | 2.0 (0.5–8.0) | |
| Intraoperative seizures | 14 | 20.3 |
| Hemisphere location | | |
| Left | 40 | 58.0 |
| Right | 29 | 42.0 |
| Duration of VRH use per patient, median (range) | 15.5 min (3.0 min–53.0 min) | |
| VR tasks: number per procedure | | |
| One task | 54 | 78.3 |
| Two tasks | 14 | 20.3 |
| Three tasks | 1 | 1.5 |
| VR tasks: type | | |
| VR-DO80 | 42 | 60.9 |
| VR-TANGO | 33 | 47.8 |
| VR-Esterman | 10 | 14.5 |
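The percentages in the table use two different denominators: patient characteristics are reported per patient (n = 64), while surgical characteristics are reported per awake procedure (n = 69). The sketch below is a hedged arithmetic check, not part of the authors' analysis. It recomputes a few of the reported percentages and confirms that the per-task counts agree with the tasks-per-procedure breakdown, which explains why the VR task percentages add up to more than 100%. The helper `pct` and the dictionary names are illustrative only; the counts are copied from the table.

```python
# Illustrative arithmetic check on the table above (hypothetical helper names).
# Counts are copied from the table; nothing else is assumed.

PATIENTS = 64   # denominator for patient-level rows
SURGERIES = 69  # denominator for procedure-level rows


def pct(n: int, total: int) -> float:
    """Return n as a percentage of total, rounded to one decimal place."""
    return round(100 * n / total, 1)


# Patient-level rows (denominator: 64 patients).
patient_rows = {"Male": 37, "Female": 27, "Glioblastoma": 21, "Metastasis": 14}

# Procedure-level rows (denominator: 69 awake surgeries).
surgery_rows = {
    "Intraoperative seizures": 14,
    "One task": 54,
    "Two tasks": 14,
    "VR-DO80": 42,
    "VR-TANGO": 33,
    "VR-Esterman": 10,
}

# Number of procedures that used 1, 2 or 3 VR tasks.
tasks_per_procedure = {1: 54, 2: 14, 3: 1}

# A procedure with k tasks contributes k task administrations.
administrations = sum(k * n for k, n in tasks_per_procedure.items())

# Sum of the per-task counts (a procedure may appear under several tasks).
per_task_total = sum(
    surgery_rows[t] for t in ("VR-DO80", "VR-TANGO", "VR-Esterman")
)

if __name__ == "__main__":
    for label, n in patient_rows.items():
        print(f"{label}: {n}/{PATIENTS} = {pct(n, PATIENTS)}%")
    for label, n in surgery_rows.items():
        print(f"{label}: {n}/{SURGERIES} = {pct(n, SURGERIES)}%")
    # Both totals are 85, so per-task percentages exceed 100% in sum.
    print("Task administrations:", administrations, "| per-task total:", per_task_total)
```

Because 85 task administrations are spread over 69 procedures, the three per-task percentages (60.9 + 47.8 + 14.5) total roughly 123% rather than 100%.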

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bernard, F.; Clavreul, A.; Casanova, M.; Besnard, J.; Lemée, J.-M.; Soulard, G.; Séguier, R.; Menei, P. Virtual Reality-Assisted Awake Craniotomy: A Retrospective Study. Cancers 2023, 15, 949. https://doi.org/10.3390/cancers15030949
