1. Introduction

By means of Artificial Intelligence (AI) and Machine Learning (ML), digital transformation can achieve higher performance, effectiveness, and sustainability by improving manufacturing processes to reduce waste and defects [1,2]. In fact, AI and ML allow enhanced condition monitoring [3], early fault diagnosis, and reliable predictive maintenance [4], fostering the goals of zero-defect and zero-waste manufacturing [5,6]. Among the enabling technologies, Digital Twins (DT) [7,8] have been established as an effective means for digital transformation to describe, monitor, predict and control [9,10] manufacturing elements, processes, and systems [11,12]. Increasingly, ML approaches and AI are being used to model DTs of physical entities, because such data-driven approaches [13] can overcome the expensive modelling efforts required by physics-based and analytical modelling strategies [14].

However, AI and ML depend heavily on the quality of the data. Within this framework, data metrology [15] and virtual metrology [16] are gaining a core role in establishing the trustworthiness of advanced modelling tools. Specifically, data metrology aims at guaranteeing the quality and traceability of data, while providing all relevant information to quantify uncertainty for the particular application [16]. Furthermore, a critical challenge for digital transformation is the availability of big data, which is required for the application of AI and ML to state-of-the-art monitoring approaches, e.g., DTs [17,18]. Also, traceable virtual experiments and digital twins can only be achieved when trustworthy data are available [19].

Accordingly, synthetic datasets are increasingly used to augment the representativeness and the size of the training dataset. Rare or extreme conditions are often underrepresented because of their low occurrence, e.g., failures, or because of the extreme costs and risks of the related experiments. To overcome such limitations, synthetic data generation has emerged as a strategic solution. It consists of creating artificial information that accurately reflects the physical and statistical characteristics of real processes. The technique has evolved along a path of increasing sophistication and has found several applications in current industrial and academic applied research.

Synthetic data can be generated mainly by analytical models, data-driven approaches, or hybrid methods [20]. Analytical models leverage closed-form solutions based on the constitutive and descriptive equations of the physics of the considered phenomenon. Although highly elegant and computationally effective, they often rely on simplifying assumptions, which might significantly bias the estimated response. Finite element method (FEM) simulations overcome simplifying assumptions by iteratively reaching numerical solutions, albeit at the cost of introducing a large number of parameters, high computational costs, and a cost-efficiency trade-off that drives the approximation and bias of the response. Data-driven approaches stand on the opposite side of modelling. In fact, data-driven approaches build models starting from available data without prior knowledge of the system physics. Machine Learning has made data-driven approaches widely used [21,22], e.g., by exploiting generative adversarial networks [23], reinforcement learning, variational autoencoders, Markov chain models, and Gaussian process regressions [21,22]. Solutions of inverse measurement problems try to combine data-driven methods, most typically Bayesian metaheuristics, with numerical simulation, to find a set of hyperparameters that minimises the bias of the simulation [24,25,26]. However, the solution is computationally and experimentally extremely expensive, potentially requiring thousands of indentations in different conditions, and may not be unique [27]. Lastly, hybrid approaches aim to combine the simplicity and potential of ML with the prior knowledge typical of analytical methods. This is typically obtained by constraining some parameters and by defining the analytical form of the model in agreement with the physics of the system [28].

Examples of synthetic data generation can be found both for modelling complex systems and processes and for advanced quality controls.

As far as the complex systems case is concerned, for example, Toro et al. developed a synthetic data generator for smart measurement sensors of electrical quantities integral to advanced grid infrastructures. The generator was based on an analytical model and was required due to the scarcity of real-world data because of privacy, security, and grid accessibility [29]. Urgo et al. exploited synthetic image generation via virtual reality tools to augment the training dataset for a manufacturing system quality control that tracks objects on the production line [3,4]. Lopes et al. exploited synthetic data generated by a Random Graph model to train a DT of a production system, incorporating rare scenarios such as those related to failures, and to tune the response on different bottleneck, allocation, and productivity scenarios otherwise impossible or extremely expensive to obtain experimentally [30].

Examples of effective use of synthetic datasets to model and control manufacturing processes can be found in Kim et al., who leveraged synthetic data generation to improve the robustness of DT training for a pick-and-place operation by a collaborative robot within a human–machine interaction framework. Such a scenario requires both object detection and gripping, as well as obstacle avoidance, which, for robust training, requires big data collected in a safe environment. Graphic rendering simulations were leveraged to generate synthetic images used to train the object detection and gripping optimisation, while virtual reality was exploited to simulate obstacles and robot response optimisation by means of a reinforcement learning strategy [31]. Loaldi et al. overcame experimental costs to model the complex interaction of process parameters and part quality in micro-injection moulding processes by synthetic data generation through traceable FEM simulations [32]. Similarly, Solis-Rios et al. reduced the cost of investigating the relationship between process parameters for PE-Oxides nanofibres by Neural Network generation of synthetic data [33].

Similarly, measurement and quality controls have largely benefited from synthetic data. For example, Nguyen et al. generated synthetic data for automotive wiring, using a neural network for geometrical data and FEM simulation for electrical functionality, to compensate for the cost of acquiring real data [34]. Synthetic data generation by generative adversarial networks has also been largely exploited to enlarge the training datasets for Machine Vision applications, e.g., for surveillance [35], and for visual inspection of the quality of welds [36] or of the packaging of microelectronics [37].

Additionally, widespread adoption of synthetic dataset generation can be found for metrological applications to improve the accuracy and measurement uncertainty of measurement techniques. Lafon et al. developed a methodology to generate reference data to benchmark the performance of registration algorithms [38].

Extensive use can also be found in surface science to support nanometrology and increase the informativeness of characterisation techniques. Necas and Klapetek recently reviewed the use of synthetic data for nanometrology of scanning probe microscopy (SPM), highlighting benefits in terms of accuracy, uncertainty, and measurement duration. Also, synthetic data allow compiling an atlas of measurement errors useful both for training and for measurement compensation [39]. Advanced deep learning modelling of SPM-based nanoindentation was obtained by synthetic data generation based on contact models [40].

Among the other surface characterisation techniques, nanoindentation [41,42] has largely been the object of data fusion through synthetic data generation. This work aims at developing a metrological framework for synthetic data generation to support error detection and modelling in nanoindentation. The following subsections briefly introduce the fundamentals of nanoindentation, review state-of-the-art applications of synthetic data to nanoindentation, and define the scope of the work.

1.1. Fundamentals of Nanoindentation

Nanoindentation, i.e., Instrumented Indentation Test in the nano-range [42], is a depth-sensing, non-conventional hardness measuring technique which allows high-resolution characterisation of the mechanical properties of surface layers [41,43]. It allows estimating the Young's modulus, creep, and relaxation behaviour of materials [42,43] and coatings [44,45,46]. It finds extensive applications, e.g., to study grain and phase size, distribution, and mechanical properties [47], to characterise property gradients in functional coatings [46,48], directional manufacturing [47], and finishing processes [49], and it can also measure film thickness [50]. The most innovative applications are now focusing on in-operando characterisation, typically for high-temperature aerospace environments [51] and for biological materials [52].

Nanoindentation is performed by applying a loading–holding–unloading force cycle to a sample by means of an indenter. The (most typically) applied force F and the indenter penetration depth h in the sample are measured during the whole experiment, thus obtaining the Indentation Curve (IC), i.e., F(h). The characterisation at nanoscales is enabled by the calibrated correlation between the area of the contact surface of the indenter with the sample and the penetration depth, i.e., the area shape function A(h).

Traceability is obtained by calibrating the force and displacement scales, and by calibrating the area shape function parameters and the frame compliance Cf, needed to compensate for the elastic deformation of the indentation platform occurring during the load application. In particular, the corrected contact depth hc is obtained as in Equation (1a), and the maximum corrected contact depth hc,max results in Equation (1b), where h0 is the zero contact point, the frame compliance term accounts for the elastic deformation of the indentation testing machine, and the remaining term for the elastic deformation of the sample at the onset of the unloading [42,53]. The latter term includes a correction factor to cater for the indenter geometry and the measured contact stiffness, Sm.

Sm is evaluated as the derivative of the IC at the onset of the unloading. The derivative evaluation requires modelling the F(h) relationship [42]. Such a task, although other approximations have been suggested [54,55], is best obtained by leveraging Sneddon’s solution of Boussinesq’s contact problem [56]. This assumes a power-law relationship, see Equation (2), where the parameters depend on the material of the sample and the indenter and on the indenter geometry.

Two different models, i.e., sets of parameters, can be obtained by studying the loading and unloading segments of the IC, respectively. The parameters are estimated by nonlinear regression, with the constraint that the power-law exponent lies between 1 and 2 [56,57]. For the loading segment, the x-axis offset parameter estimates the zero contact point h0, while for the unloading segment, it estimates the residual indentation depth hp.
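As a minimal sketch of how the contact stiffness follows from a fitted unloading curve, the snippet below assumes the power law takes the common form F = a(h − hp)^m. The symbol names `a` and `m`, the function names, and the numerical values are illustrative assumptions and are not taken from this work.

```python
def power_law_force(h, a, m, h_offset):
    """Power-law indentation model F = a * (h - h_offset)**m (assumed form)."""
    if h < h_offset:
        raise ValueError("depth must not be smaller than the offset")
    return a * (h - h_offset) ** m


def contact_stiffness(h_max, a, m, h_p):
    """Analytical derivative dF/dh of the unloading power law, evaluated at h_max."""
    if not 1.0 < m < 2.0:
        raise ValueError("exponent m is expected in the open interval (1, 2)")
    return a * m * (h_max - h_p) ** (m - 1.0)


# Hypothetical unloading parameters: a in mN/nm^m, depths in nm.
a_u, m_u, h_p = 0.002, 1.5, 120.0
h_max = 300.0
S_m = contact_stiffness(h_max, a_u, m_u, h_p)  # stiffness in mN/nm
```

Using the analytical derivative of the fitted model, rather than a finite difference on the raw data, avoids amplifying the measurement noise at the onset of the unloading.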

Although the system is calibrated, several measurement errors can still be introduced during the experimental procedure. First, the sample material creep response shall be compensated to avoid any unwanted dynamic contribution. This is obtained by a suitably long primary holding at the maximum force

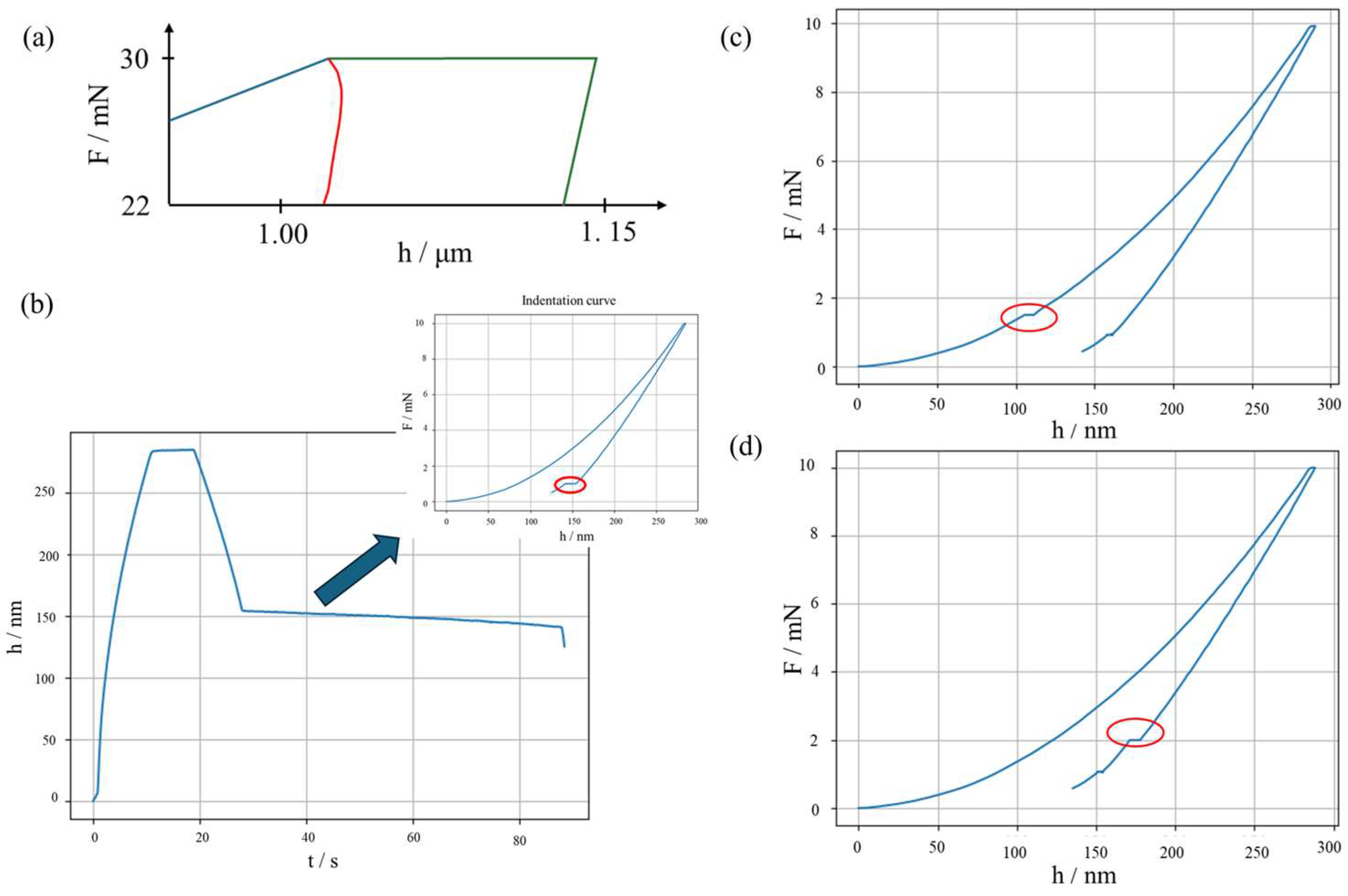

Fmax. If the holding is insufficient, i.e., in the presence of a significant room temperature creep, a “nose” at the onset of the unloading, see

Figure 1a, can be appreciated, hindering proper evaluation of the sample contact stiffness. Second, a thermal drift is generated between the indenter tip and the sample. This generates a trend in

h as a function of time

t, biasing the results. The thermal drift can be compensated by introducing a secondary holding, conventionally set a 10% of

Fmax. Such secondary holding allows estimating the slope of

h(

t), which is then used to correct the penetration depth measurements.

Figure 1b shows examples of significant thermal drifts. Last, discontinuities in the IC can indicate an abrupt change in the material behaviour, which can be attributed to phase transformation or cracking, as shown in

Figure 1c,d.
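The thermal drift compensation described above can be sketched as follows, assuming an ordinary least-squares fit of h(t) over the secondary holding and a linear correction referred to an arbitrary reference time. The function names and the choice of `t_ref` are assumptions made for illustration only.

```python
def drift_rate(t, h):
    """Least-squares slope of h(t) over the secondary holding."""
    n = len(t)
    if n < 2 or n != len(h):
        raise ValueError("need two or more paired samples")
    t_mean = sum(t) / n
    h_mean = sum(h) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (hi - h_mean) for ti, hi in zip(t, h))
    return sxy / sxx


def correct_drift(t, h, rate, t_ref=0.0):
    """Remove a linear drift from the depths, taking t_ref as the undrifted instant."""
    return [hi - rate * (ti - t_ref) for ti, hi in zip(t, h)]


# Hypothetical 60 s holding with a drift of 0.05 nm/s on a 50 nm depth.
t_hold = [float(i) for i in range(61)]
h_hold = [50.0 + 0.05 * ti for ti in t_hold]
rate = drift_rate(t_hold, h_hold)
h_corrected = correct_drift(t_hold, h_hold, rate)
```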

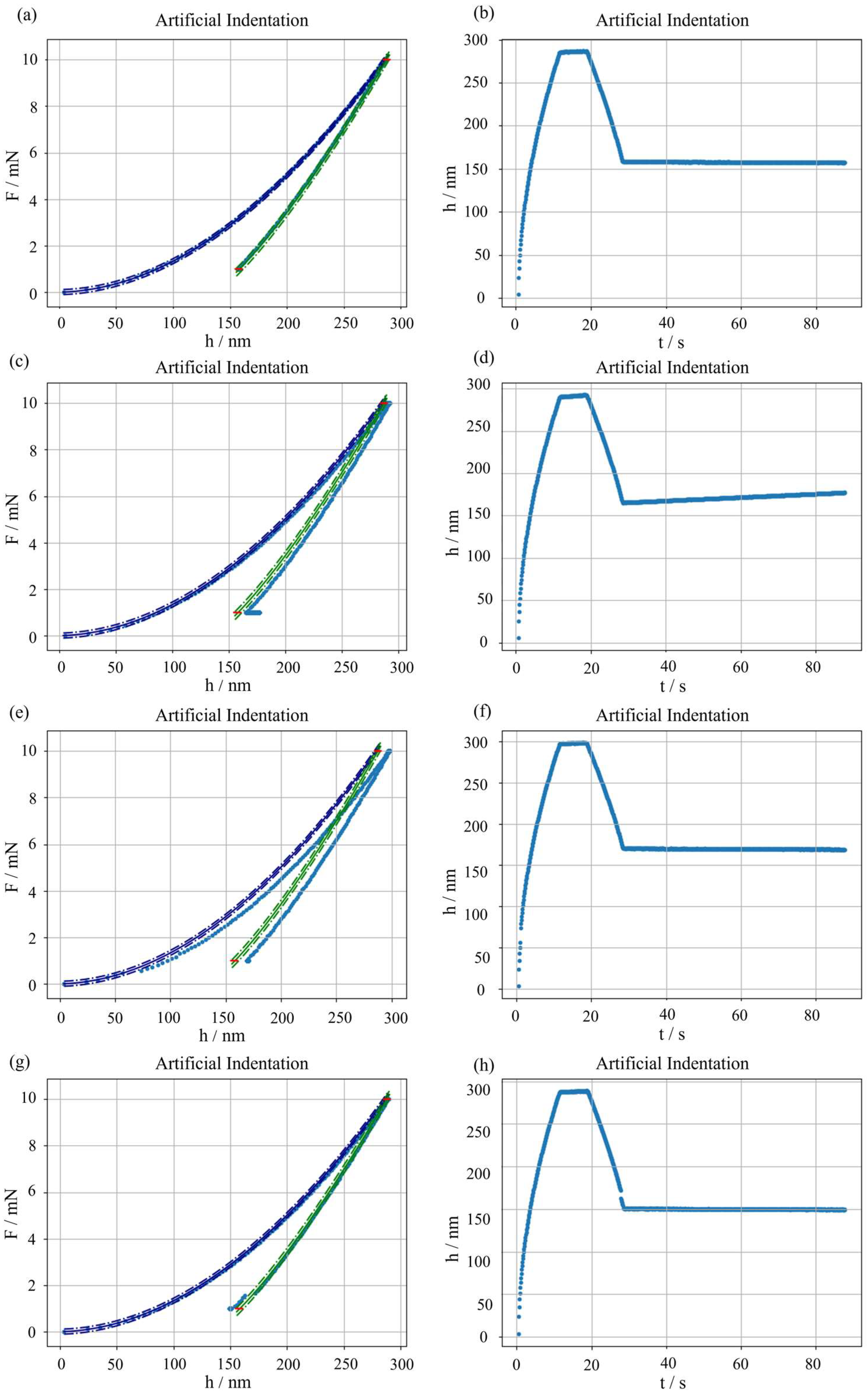

Figure 1. Typical measurement errors in nanoindentation. (a) “Nose” due to too short holding: blue, loading; red, unloading with nose; green, primary holding and unloading with correct shape. (b) Thermal drift: notice the slope in the secondary holding of h(t); the inset shows the longer secondary holding (circled in red), compared to a typical IC of Figure 2. (c) Pop-in event, highlighted by a red circle. (d) Pop-out event, highlighted by a red circle.

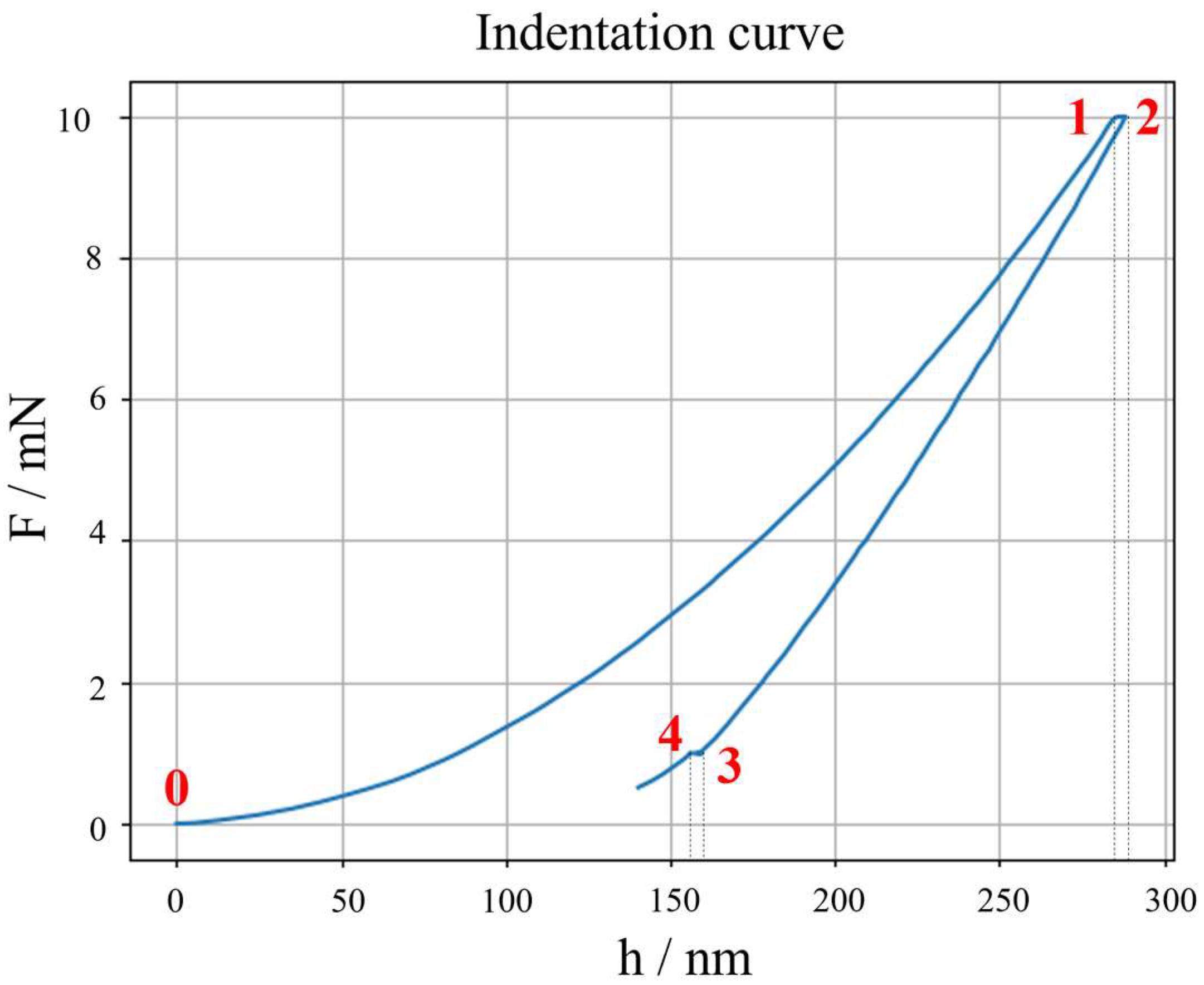

Figure 2. Annotation of critical IC points for continuity: 0: first contact point, 1: end of loading and beginning of primary holding, 2: end of primary holding and start of unloading, 3: end of unloading and start of secondary holding, 4: end of secondary holding.

1.2. Applications of Synthetic Data Generation to Nanoindentation

Nanoindentation has also been the object of synthetic data generation. Several examples can be found in the literature and, as for the other measurement techniques discussed above, the applications involve both advanced characterisation and metrology.

Applications for advanced characterisation are most often coupled with ML [58]. For example, Koumoulos et al. exploited synthetic data generation by the Synthetic Minority Over-sampling Technique (SMOTE) algorithm to increase the robustness of automatic classification and identification of reinforcement fibres in carbon fibre reinforced polymers (CFRP) [59]. Giolando et al. exploited a numerical synthetic dataset generator to solve the inverse indentation problem in biological tissues. The synthetic dataset allowed increasing the experimental conditions necessary to overcome the lack of uniqueness of the solution [60]. Bruno et al. used k-NN++ synthetic data generation to impute missing data to enhance a correlative microscopy study for transformation induced plasticity (TRIP) steels [61]. Mahmood and Zia trained a generative adversarial network to generate synthetic data to support the prediction of the hardness of diamond-like carbon (DLC) coatings under varying heat treatment processing conditions [62].

Widespread adoption of synthetic dataset generation can also be found for metrological applications. Some target the model optimisation for cutting-edge testing techniques, such as SPM-based nanoindentation. For example, synthetic data were used to train an ML model estimating mechanical parameters [40], which would otherwise require a complex choice of contact model. Other applications can be found for uncertainty estimation and to support calibration methods. In particular, data-driven approaches have been used to investigate the effect of sample size on the accuracy and uncertainty of the calibration of the area shape function parameters and of the frame compliance. They were based on Monte Carlo sampling [63] and on bootstrapping [64]. The generation of synthetic data highlighted a significant contribution of the calibration dataset [64] and a relevant sensitivity of calibration methods to the dataset and experimental conditions [65]. However, although practical, bootstrapping is severely limited in extrapolation and prediction. Similarly, to obtain accurate predictions and avoid significant overestimation of the measurement uncertainty, the Monte Carlo method requires modelling the covariance of simulated quantities, i.e., F(h).

1.3. Scope of the Work

This work aims to develop a traceable synthetic dataset generator for quasi-static room-temperature nanoindentation that can account for the correlation and covariance of simulated quantities. The synthetic dataset generator will be tested for accuracy and will enable associating uncertainty with the simulated results to enable the application within a metrological framework. The developed model will then be benchmarked with other alternatives, e.g., based on bootstrapping, to compare relative performances. Last, the synthetic dataset generator will include the possibility to simulate the most typical measurement errors. Such a feature will allow adopting the developed approach to train and validate advanced quality control tools for automatic measurement error detection in nanoindentation, e.g., a Digital Twin. Innovatively, the proposed method aims at establishing traceability for the synthetic datasets while keeping the experimental and computational cost effective.

The rest of the paper is structured as follows. Section 2 describes the modelling, highlighting methods to establish traceability and to evaluate measurement uncertainty. Section 3 presents the results, which are discussed in Section 4. Finally, Section 5 draws conclusions and provides an outlook on future research.

2. Metrological Nanoindentation Synthetic Dataset Generator

This section describes first how a metrological synthetic dataset generator is obtained, and how the uncertainty of the generated data can be estimated. Later, it addresses how the main measurement errors can be simulated. Last, it presents validation methodologies to discuss accuracy with respect to real data, and relative performances with respect to other synthetic nanoindentation dataset generators for metrological applications.

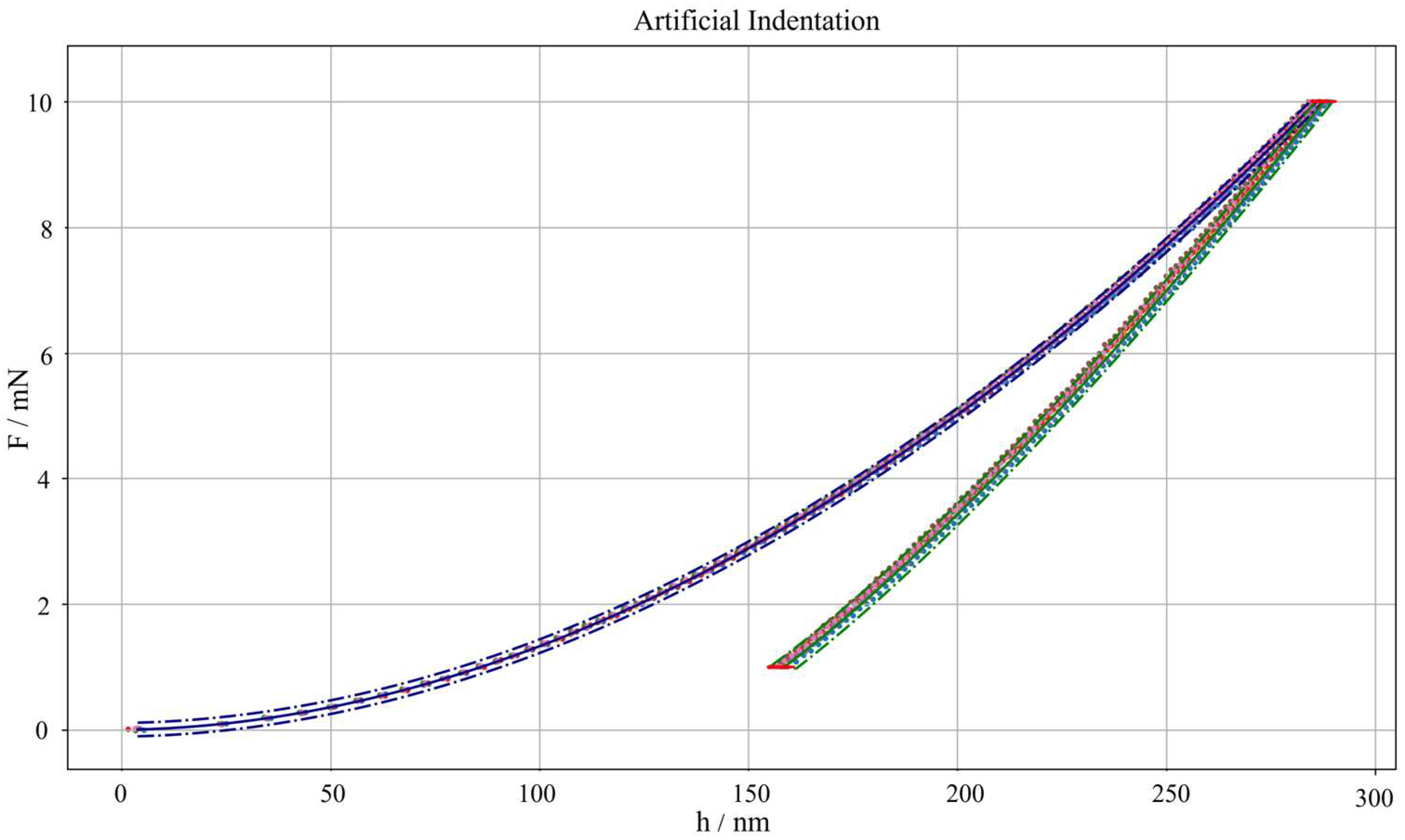

This work proposes to leverage a hybrid approach to model the synthetic data generator. Such a choice, as briefly commented in the Introduction, aims to overcome three limitations: the simplicity of analytical methods, which require a strong hypothesis of ideal elastic behaviour and isotropy of the sample; the lack of uniqueness of solutions based on the inverse indentation problem, which challenges metrological applications; and the lack of coherence of data-driven approaches with the physics of the system. Accordingly, a physics-informed modelling approach is followed.

The statistical methodology is ultimately a parametric simulation approach, where the main simulation parameters, i.e., the distribution shape and related parameters, of measured quantities shall be defined to allow synthetic data generation. The approach proposes to estimate such parameters by exploiting experimental data, thereby allowing for the establishment of traceability for the synthetic dataset. The advantage of this approach lies in the fact that it allows for both modelling correlation between measured quantities and ensuring GUM-compliance for the sake of uncertainty evaluation [66,67,68,69].

The synthetic data generation modelling is discussed assuming the most typical choice of a force-controlled loading–holding–unloading force cycle, with a secondary holding for thermal drift compensation. In such a case, both the loading and unloading segments of the IC can be modelled as per Equation (2), while the two holding segments follow the assumption of a constant force as a function of time. Continuity is then constrained, with reference to Figure 2, such that if we consider the i-th indentation, it follows:

where

indicates the average, nominally constant, force of the secondary holding, and

indicates the penetration depth at the end of the unloading.

2.1. Model Training

Model training is based on real data to guarantee traceability. In particular, we can consider a training set of K ICs, each consisting of J data collected at a certain sampling frequency; collected data are typically triplets of time, force, and penetration depth. Each IC can be modelled with a power-law model for loading and unloading:

Parameters

are estimated by nonlinear orthogonal distance regression (ODR) to account for error-in-variables. In fact, ODR assumes error both in the response (ε) and in the regressors (δ), e.g.,

, and allows obtaining an estimation of model parameters by minimising

, which are both expressed for the loading segment of the IC.

Then, it is possible to estimate the average parameters and the mean squared errors of the residuals for both the loading and unloading segments of the IC, e.g., Equations (5a), (5b) and (6) exemplify computations for the loading segment:

According to the modelling of Equation (4a) and (4b) for the loading and unloading segment of the ICs, it is also possible to invert the model to express the estimated penetration depth:

2.2. Data Generation

For each synthetic indentation and for each segment of the indentation curve, the number of points J is sampled from a normal distribution whose mean and variance are, respectively, the sample average and the sample variance of the K observations available from the training curves.

Then, for each synthetic indentation and for each segment of the indentation curve, the start time, as in Equation (9a), and end time, as in Equation (9b), are sampled from a normal distribution with mean and variance evaluated from the K curves to train the model. The time vector t, see Equation (9d), is then simulated as a linear spacing of J data in the overall duration, as in Equation (9c). The variance of the nanoindentation experiment overall duration is obtained as

.
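The sampling of the number of points and of the time vector can be sketched as below. This is only an illustration under stated assumptions: the sampled J is rounded to an integer and floored at 2, the sample standard deviation (n − 1 denominator) is used, and all function names and numerical values are hypothetical.

```python
import random
import statistics


def sample_point_count(training_counts, rng):
    """Draw J from a normal fitted to the training counts, rounded to an integer >= 2."""
    mean = statistics.mean(training_counts)
    sd = statistics.stdev(training_counts)  # sample standard deviation (n - 1)
    return max(2, round(rng.gauss(mean, sd)))


def linspace(start, stop, n):
    """n evenly spaced values from start to stop, both included."""
    step = (stop - start) / (n - 1)
    return [start + i * step for i in range(n)]


def sample_time_vector(starts, ends, counts, rng):
    """Sample start time, end time and J, then build a linearly spaced time vector."""
    t_start = rng.gauss(statistics.mean(starts), statistics.stdev(starts))
    t_end = rng.gauss(statistics.mean(ends), statistics.stdev(ends))
    n = sample_point_count(counts, rng)
    return linspace(t_start, t_end, n)


# Hypothetical training summary for one segment of K = 3 curves (times in s).
rng = random.Random(0)
t = sample_time_vector([0.0, 0.1, 0.05], [10.0, 10.2, 9.9], [1000, 1010, 990], rng)
```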

2.2.1. Loading Segment Generation

With reference to Figure 2, the force at point 0, F0, is sampled from a normal distribution with mean and variance evaluated from the K training ICs, see Equation (10), and the force at point 1, F1, is sampled from a normal distribution with mean and variance from the primary holding of the K experimental curves, as in Equation (11).

Model parameters are sampled considering that the estimates by the regression come from a multivariate normal distribution, with mean and covariance matrix as in Equation (12).

Then, the penetration depth at point 1 is evaluated as in Equation (13).
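Sampling correlated model parameters from a multivariate normal distribution can be sketched with a Cholesky factorisation of the covariance matrix. The implementation below is a generic standard-library illustration, not the authors' code; the function names and the example mean and covariance are assumptions.

```python
import math
import random


def cholesky(cov):
    """Lower-triangular L with L @ L.T == cov, for a symmetric positive-definite matrix."""
    n = len(cov)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(cov[i][i] - s)
            else:
                low[i][j] = (cov[i][j] - s) / low[j][j]
    return low


def sample_parameters(mean, cov, rng):
    """Draw one parameter vector from N(mean, cov) as mean + L z, with z standard normal."""
    low = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in mean]
    return [mu + sum(low[i][k] * z[k] for k in range(i + 1))
            for i, mu in enumerate(mean)]


# Hypothetical two-parameter example with correlated estimates.
rng = random.Random(0)
theta = sample_parameters([1.0, -1.0], [[4.0, 2.0], [2.0, 3.0]], rng)
```

Sampling the parameters jointly, rather than one at a time, is what preserves the correlation between them in the generated curves.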

Since a force-controlled cycle is considered, the force vector for the loading segment Fl is simulated as a linear spacing vector between F0 and F1, as in Equation (14a). A point-wise zero-mean random noise is then added to cater for measurement noise, as in Equation (14b).

Then, the penetration depth vector is simulated from the regression curve

, as described in Equation (7), constraining

. No further measurement noise, simulating reproducibility, is added as it is already included in the distributions of

and

.
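The loading segment generation can be sketched as follows, assuming the power law F = a(h − h0)^m and its inverse h = h0 + (F/a)^(1/m). Two choices in this sketch are assumptions rather than statements from the text: the depth is computed from the noise-free force ramp, and negative forces are clipped to zero before inversion. Names and values are hypothetical.

```python
import random


def generate_loading(f0, f1, n, a, m, h0, noise_sd, rng):
    """Return (noisy force vector, depth vector) for one synthetic loading segment."""
    step = (f1 - f0) / (n - 1)
    force = [f0 + i * step for i in range(n)]  # force-controlled linear ramp
    # Depth from the inverted power law, h = h0 + (F / a)**(1 / m).
    depth = [h0 + (max(f, 0.0) / a) ** (1.0 / m) for f in force]
    # Point-wise zero-mean normal noise on the force.
    noisy_force = [f + rng.gauss(0.0, noise_sd) for f in force]
    return noisy_force, depth


# Hypothetical parameters: forces in mN, depths in nm.
rng = random.Random(1)
F_l, h_l = generate_loading(0.0, 10.0, 101, 0.002, 1.5, 20.0, 0.01, rng)
```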

2.2.2. Primary Holding Generation

The primary holding is characterised by the maximum applied force Fmax and by the duration. The primary holding aims to compensate for the room-temperature creep. In fact, the control parameters (Fmax and duration) induce an increase in the penetration depth, computed as the difference between the first and last point of the primary holding, i.e., points 1 and 2 of Figure 2. This increase is sampled from a normal distribution with mean and variance evaluated from the K training ICs as in Equation (15), where the variance is obtained by combining individual contributions as

.

Then, from the previously sampled penetration depth at point 1, as per Equation (13), the penetration depth at point 2 is evaluated as in Equation (16a). The penetration depth vector for the primary holding is then simulated as a linear spacing vector between the penetration depths at points 1 and 2, as in Equation (16b). A point-wise random noise is added to account for the accuracy, assumed normally distributed, with zero mean, and variance proportional to the measured penetration depth, see Equation (16c) [42]. No measurement reproducibility is further added because it is already included in the distributions of the sampled quantities.

The associated force vector is simulated, as in Equation (17a), as a constant force value equal to F1 (with F1 = F2), sampled from Equation (11). A point-wise random noise is added to account for the accuracy, assumed normally distributed, with zero mean, and variance proportional to the measured force, see Equation (17b) [42]. No measurement reproducibility is further added because it is already included in the distribution of F1.

2.2.3. Unloading Generation

The process is highly similar to the generation of the loading, described in Section 2.2.1. The parameters of the regression are sampled from a multivariate normal distribution with mean and covariance matrix both evaluated from the K training ICs, i.e., similarly to Equation (12). The force at point 3, with reference to Figure 2, is sampled from a normal distribution with mean and variance from the secondary holding, empirically estimated, as in Equation (18), which allows estimating the penetration depth at point 3 by inverting the regression model, as in Equation (19).

Then, the force vector is simulated as a linear spacing vector between the force at point 2 (equal to the force at point 1) and the force at point 3, as described by Equation (20a). A point-wise random noise is added, assuming a zero-mean normal distribution with the variance estimated as in Equation (20b).

Finally, the penetration depth vector is simulated from the regression curve

, as described in Equation (7), constraining

. No further measurement noise, simulating reproducibility, is added as it is already included in the distributions of

and

.

2.2.4. Secondary Holding Generation

Also in this case, the generation process is highly similar to the one introduced in Section 2.2.2 for the primary holding. The secondary holding is introduced to compensate for thermal drifts, which will be simulated in Section 2.4. Conversely, in nominal conditions, a constant force and penetration should be obtained.

Accordingly, the penetration depth vector is created as a constant value equal to the penetration depth at point 3, which nominally coincides with that at point 4, as described in Equation (21), to which a point-wise random noise is added to account for the measurement accuracy.

The force vector is created as a constant force value equal to the force at point 3, which nominally coincides with that at point 4, to which point-wise random noise is added to account for the accuracy, as in Equation (22).

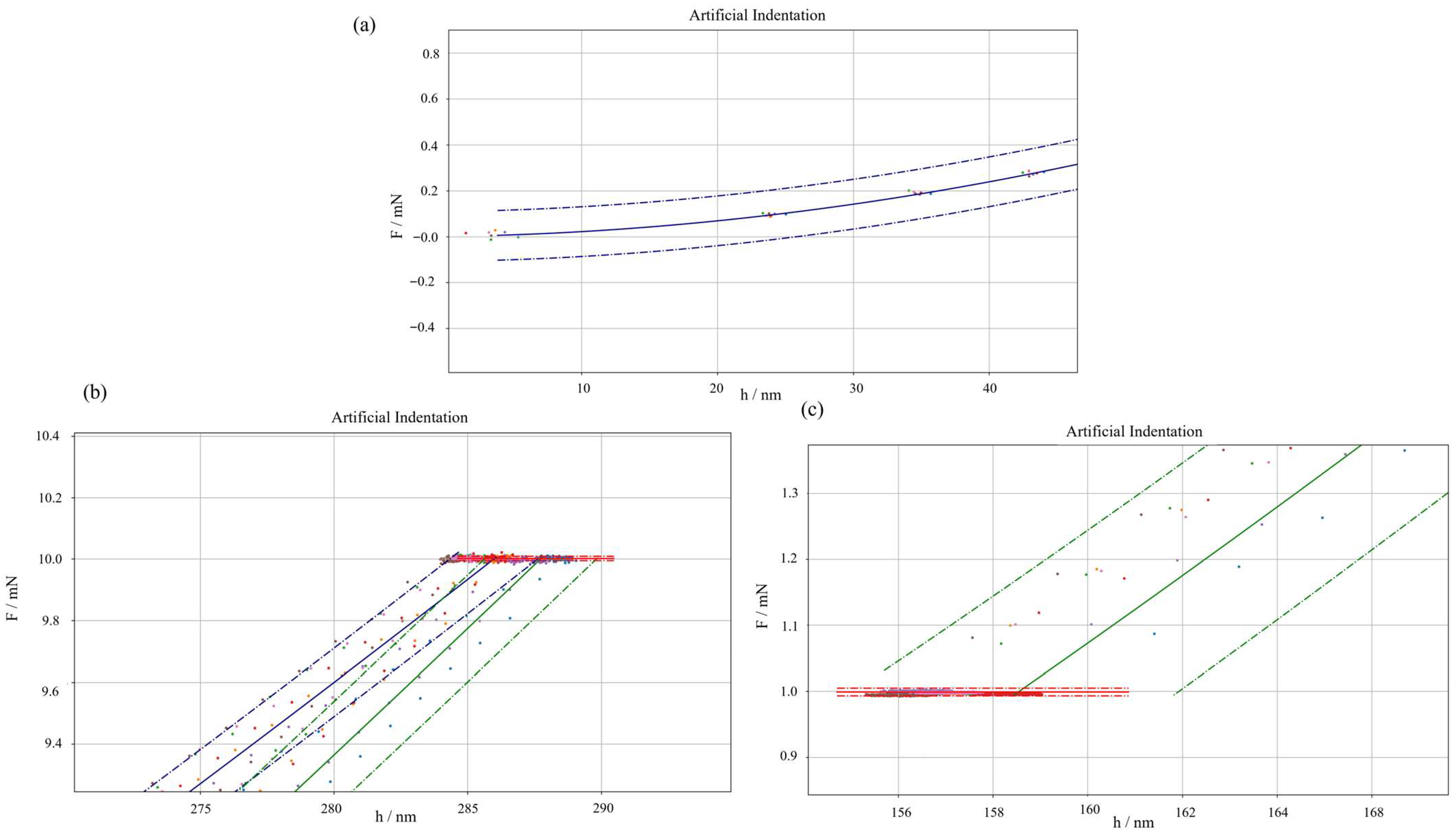

2.3. Uncertainty Evaluation

Synthetically generated quantities are obtained by sampling from underlying statistical distributions. Accordingly, it is possible to estimate the uncertainty of the synthetic indentation curve. The loading and unloading segment uncertainty evaluation leverages the law of propagation of uncertainty (LPU) [70] and caters for the fact that model parameters have been estimated by ODR. According to the LPU, the combined variance is obtained as a linear combination of the variance contributions weighted for the squared sensitivity coefficients, i.e., the partial derivatives of the response, in this case F, to the independent quantities. The model for partial derivative estimation is defined in Equation (23), e.g., for the loading segment.

In Equation (23), δ estimates the error in the regressor variable, and the noise term introduced in Equations (14b) and (20b) describes the residual error. In particular, the mse can be evaluated on the regression residuals, e.g., for the loading segment as in Equation (23). The mse includes, by definition, the error in the response ε, which is also subject to minimisation for estimating the parameters by ODR.

Applying the LPU to the metrological model of Equation (23), the variance of the simulated force can be obtained as in Equation (24), which is written, for example, for the loading segment.

In Equation (24), the standard uncertainties of the displacement sensor and the force transducer, typically obtained from a calibration certificate, shall be added to include contributions from the traceability chain. The already introduced covariance matrix of the model parameters, estimated by ODR, caters for the uncertainty in the parameter estimation.
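A generic application of the LPU to a power-law force model can be sketched as below. The model F = a(h − h0)^m, the way the contributions are summed, and all names and values are assumptions chosen for illustration; the sketch is not claimed to reproduce Equation (24) term by term.

```python
import math


def force_variance(h, params, cov, u_h, u_f, mse):
    """Combined variance of F = a * (h - h0)**m by the law of propagation of uncertainty.

    params: (a, m, h0); cov: 3x3 covariance matrix of (a, m, h0);
    u_h, u_f: standard uncertainties of the displacement and force sensors;
    mse: mean squared error of the regression residuals.
    """
    a, m, h0 = params
    x = h - h0
    if x <= 0.0:
        raise ValueError("depth must exceed the offset h0")
    # Sensitivity coefficients with respect to a, m and h0.
    grad = [x ** m, a * x ** m * math.log(x), -a * m * x ** (m - 1.0)]
    df_dh = a * m * x ** (m - 1.0)  # sensitivity with respect to the depth
    var_params = sum(grad[i] * cov[i][j] * grad[j]
                     for i in range(3) for j in range(3))
    return var_params + (df_dh * u_h) ** 2 + u_f ** 2 + mse


# Hypothetical example: only the parameter a is uncertain.
cov_a = [[1e-8, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
var_F = force_variance(200.0, (0.002, 1.5, 20.0), cov_a, 0.0, 0.0, 0.0)
```

The double sum over the covariance matrix is what carries the correlation between the parameter estimates into the combined variance; dropping the off-diagonal terms would treat the parameters as independent.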

2.4. Error Simulation Approaches

The metrological synthetic dataset generator for nanoindentation described in Section 2.2 also aims at generating the most typical measurement errors. Simulating errors is useful to allow training of error detection models, as reviewed in Section 1.2. In this work, three main errors are modelled: thermal drift, pop-in, and pop-out.

2.4.1. Thermal Drift Simulation

Even in controlled laboratory conditions, a thermal gradient can be present between the indenter tip and the sample. This can occur due to insufficient stabilisation of the sample, because of electronics heating the indenter through conduction, and because of the small amount of heat dissipated through friction during the indentation. To evaluate the thermal drift, the most robust approach, even though not the most time efficient, consists of performing a secondary holding and evaluating the slope of h(t) [42,71]. In ideal conditions, the thermal drift is null. However, this is never the case. In optimal experimental conditions, i.e., after a long thermal stabilisation of the sample in the measurement environment, the heating due to electronics plays a major role, typically inducing a thermal flux from the indenter to the sample [41,51].

The presence of thermal drift can be simulated by modifying all the penetration depths generated in Section 2.2. Although any thermal drift can be generated, if a real-world scenario traceable to experimental data is aimed at, the thermal drift can be sampled from the K training ICs, assuming a normal distribution.

2.4.2. Pop-In Simulation

A pop-in event describes a singularity in the loading segment, such that at a given force level, a discontinuity in the penetration depth occurs, suddenly increasing the penetration depth. This phenomenon is typically associated with phase changes, e.g., in semiconductors [72,73], or cracking, e.g., for coatings [74]. Accordingly, a pop-in event can be simulated by adding, from a certain time instant onwards during the loading segment, a shift to the penetration depth simulated according to Section 2.2.1, i.e.,

, with

. The specific selection of the

that induces the pop-in event shall be modelled depending on the material under study considering the specific loading cycle. In this work, to demonstrate capability of the synthetic dataset generator to include pop-in events,

was randomly selected between the instants realising

during the loading.

2.4.3. Pop-Out Simulation

In a dual manner, a pop-out event describes a sudden decrease in the penetration depth. Pop-out is typically induced by phase transformation to metastable phases [72,75], or by cracks closing [73], and is induced by the load removal. Thus, it typically takes place during the final part of the unloading.

Accordingly, a pop-out event can be simulated by subtracting, from a certain time instant onwards during the unloading segment, a shift from the penetration depth simulated according to Section 2.2.3, i.e.,

, with

. Also for pop-out, the selection of

is strictly material dependent. Thus, simply to demonstrate the capability of the synthetic dataset generator to include pop-out events,

was randomly selected between the instants realising

during the unloading.
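The pop-in and pop-out mechanisms of Sections 2.4.2 and 2.4.3 can both be sketched as a step added to the simulated penetration depth from a randomly selected onset. In this minimal sketch, the force window used to select the onset, the shift magnitudes, and the names `add_step` and `pick_onset` are hypothetical. It is also assumed that the shift persists over all subsequent points of the curve.

```python
import random


def add_step(depths, onset_index, shift):
    """Add a constant shift to all depths from onset_index onwards.

    shift > 0 mimics a pop-in, shift < 0 mimics a pop-out.
    """
    return [h + shift if i >= onset_index else h for i, h in enumerate(depths)]


def pick_onset(forces, segment, f_low, f_high, rng):
    """Randomly pick an index within `segment` whose force lies in [f_low, f_high]."""
    candidates = [i for i in segment if f_low <= forces[i] <= f_high]
    return rng.choice(candidates)


rng = random.Random(1)                                   # seeded for repeatability
forces = [0, 2, 4, 6, 8, 10, 8, 6, 4, 2, 1]              # mN, hypothetical cycle
depths = [0, 60, 110, 150, 180, 200, 190, 175, 155, 130, 115]  # nm, hypothetical
loading = range(0, 6)       # indices of the loading segment
unloading = range(5, 11)    # indices of the unloading segment

# Pop-in: onset drawn within a hypothetical force window during loading.
pop_in_at = pick_onset(forces, loading, 2.0, 8.0, rng)
with_pop_in = add_step(depths, pop_in_at, 15.0)

# Pop-out: onset drawn in the final part of the unloading.
pop_out_at = pick_onset(forces, unloading, 1.0, 4.0, rng)
with_both = add_step(with_pop_in, pop_out_at, -10.0)
```

Using a single step function with a signed shift reflects the dual nature of the two events; only the segment and the force window used to select the onset differ.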

2.5. Validation Methodology

The validation of the synthetic dataset generator is performed by testing its capability of simulating data from the real world. In particular, an Anton Paar STeP6 platform equipped with an NHT³ nanoindentation head is considered. The equipment, hosted in the metrological laboratory of the MInd4Lab of the Department of Management and Production Engineering of Politecnico di Torino, was equipped with a Berkovich indenter, calibrated [63] and used to perform a set of 15 indentations on a certified reference material, i.e., an NPL-calibrated sample of SiO₂, having a Young’s modulus of (73.0 ± 0.5) GPa and a Poisson’s ratio of 0.163 ± 0.002. The indentations implemented a force-controlled indentation cycle with a maximum force of 10 mN, and durations of loading, primary holding, unloading, and secondary holding (at 10% of Fmax) of 10 s, 8 s, 9 s, and 60 s, respectively. The force and displacement sensors have a calibrated relative accuracy of 0.061% and 0.058%, respectively. Raw force and displacement data, i.e., not automatically corrected for frame compliance, are considered in order to apply the full analysis pipeline described in the previous sections.

The validation aims to test that real-world data cannot be statistically distinguished from the synthetically generated dataset, at a confidence level of 95%. That is, a hypothesis test based on Student’s t distribution, with a null hypothesis

is built, having the test statistic

. The test statistic estimates the standard uncertainty of the synthetically generated force as

, according to Equation (24). Since Equation (24) includes traceability and reproducibility through

and the mse, to avoid overestimation, it is assumed that the dispersion of the experimental data is already included.

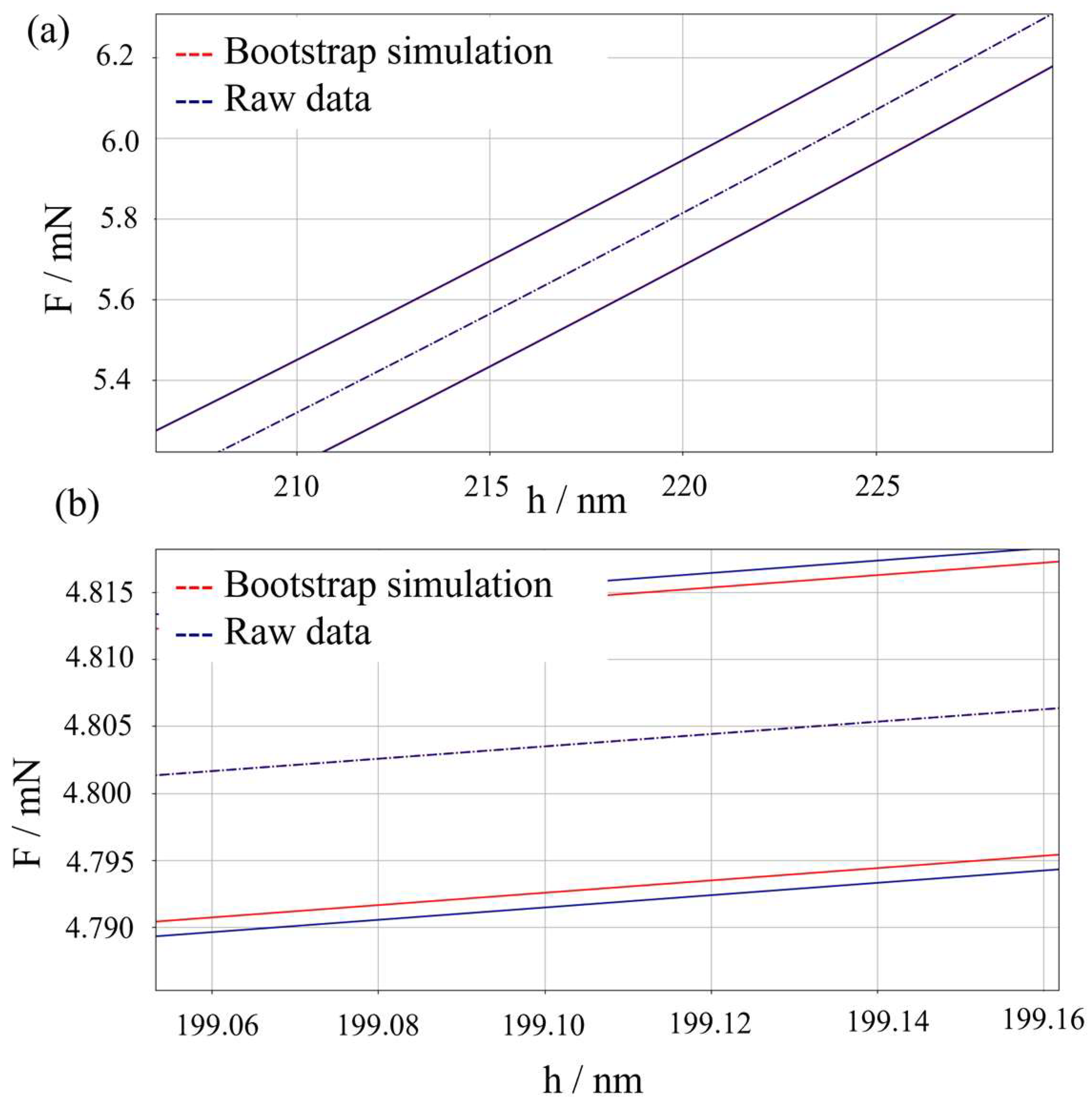

Further validation is performed by comparing the performance of the proposed metrological synthetic data generator for nanoindentation, based on parametric simulation, with other approaches available in the literature. In particular, a simulation based on non-parametric bootstrapping is considered. The methodology for bootstrap generation is reported elsewhere [64], and it has been proven effective in various metrological applications [65], overcoming the limits of Monte Carlo approaches. In particular, the presence of significant bias is tested, as well as any systematic underestimation of measurement uncertainty. The former is once more tested by means of a Student’s t hypothesis test. The latter is tested by means of a hypothesis test based on Fisher’s F distribution, having the null hypothesis

, and test statistic

.
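The bias and dispersion checks described above can be sketched as follows. The sketch assumes a Welch-type t statistic for the difference of the means and a ratio of sample variances for the dispersion, which may differ from the exact statistics adopted in this work. The data, the function names, and the critical values (to be taken from statistical tables for the relevant degrees of freedom) are hypothetical.

```python
import math
import statistics


def bias_t_statistic(a, b):
    """Welch-type t statistic for the difference between two sample means."""
    va, vb = statistics.variance(a), statistics.variance(b)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va / len(a) + vb / len(b))


def dispersion_f_statistic(a, b):
    """Ratio of sample variances, larger over smaller, so that the result is >= 1."""
    va, vb = statistics.variance(a), statistics.variance(b)
    return max(va, vb) / min(va, vb)


def not_rejected(statistic, critical_value):
    """The null hypothesis is not rejected if |statistic| does not exceed the critical value."""
    return abs(statistic) <= critical_value


# Hypothetical forces (mN) generated by the two approaches at the same instant.
parametric = [10.02, 9.98, 10.01, 9.99, 10.00]
bootstrap = [10.01, 9.97, 10.03, 9.99, 10.00]

t_stat = bias_t_statistic(parametric, bootstrap)
f_stat = dispersion_f_statistic(parametric, bootstrap)

# Illustrative table values for these sample sizes (two-sided, 95% confidence).
t_crit = 2.306   # Student's t, 8 degrees of freedom
f_crit = 9.60    # Fisher's F, (4, 4) degrees of freedom

no_bias = not_rejected(t_stat, t_crit)
same_dispersion = not_rejected(f_stat, f_crit)
```

Passing the critical values explicitly keeps the sketch free of external dependencies; in practice they would be computed from the t and F distributions for the actual degrees of freedom.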

4. Discussion

The methodology outlined in Section 2 ultimately consists of a parametric synthetic dataset generator for nanoindentation.

With respect to other parametric approaches [63], it allows modelling the correlation between input quantities, thus overcoming possible distortions in the uncertainty evaluation.

Similarly, with respect to non-parametric methods, i.e., those based on bootstrapping [64], it has shown no systematic differences, either in terms of mean or of dispersion. Compared to bootstrapping, it requires less computational power, is much faster, and overcomes the issues of sample representativeness that affect the non-parametric approach.

The method requires modelling the indentation response of a material by means of a power-law model. Although the mathematical implementation allows any set of parameters to be used, without the need for an experimental training dataset, the approach suggested in Section 2.1 establishes, by means of Orthogonal Distance Regression, a traceable and physics-informed model. Indeed, when the regression is performed to evaluate model parameters, the model representativeness is limited to a specific combination of material and indentation machine. In such a case, the proposed method limits the representativeness of the model to a specific material and specific indentation cycle parameters, i.e., the maximum force and the durations of the indentation cycle segments. Such a limitation is inherent in the proposed methodology and can be considered the cost of having a traceable synthetic dataset generator. To extend the validity of the model to other materials and indentation machines, a new training dataset is required, because their responses are intertwined through the indenter geometry and the frame compliance.

Further, an underlying assumption of the synthetic dataset generator is that the training data come from a homogeneous material. This condition is easily met for amorphous materials and for monocrystals. Conversely, for multiphase and polycrystalline materials, if the indentation scale of interest allows the different phases to be distinguished, a separate synthetic dataset generator can be trained for each phase, relying on phase-specific data.