Research on an Online Monitoring Device for the Powder Laying Process of Laser Powder Bed Fusion

Abstract

1. Introduction

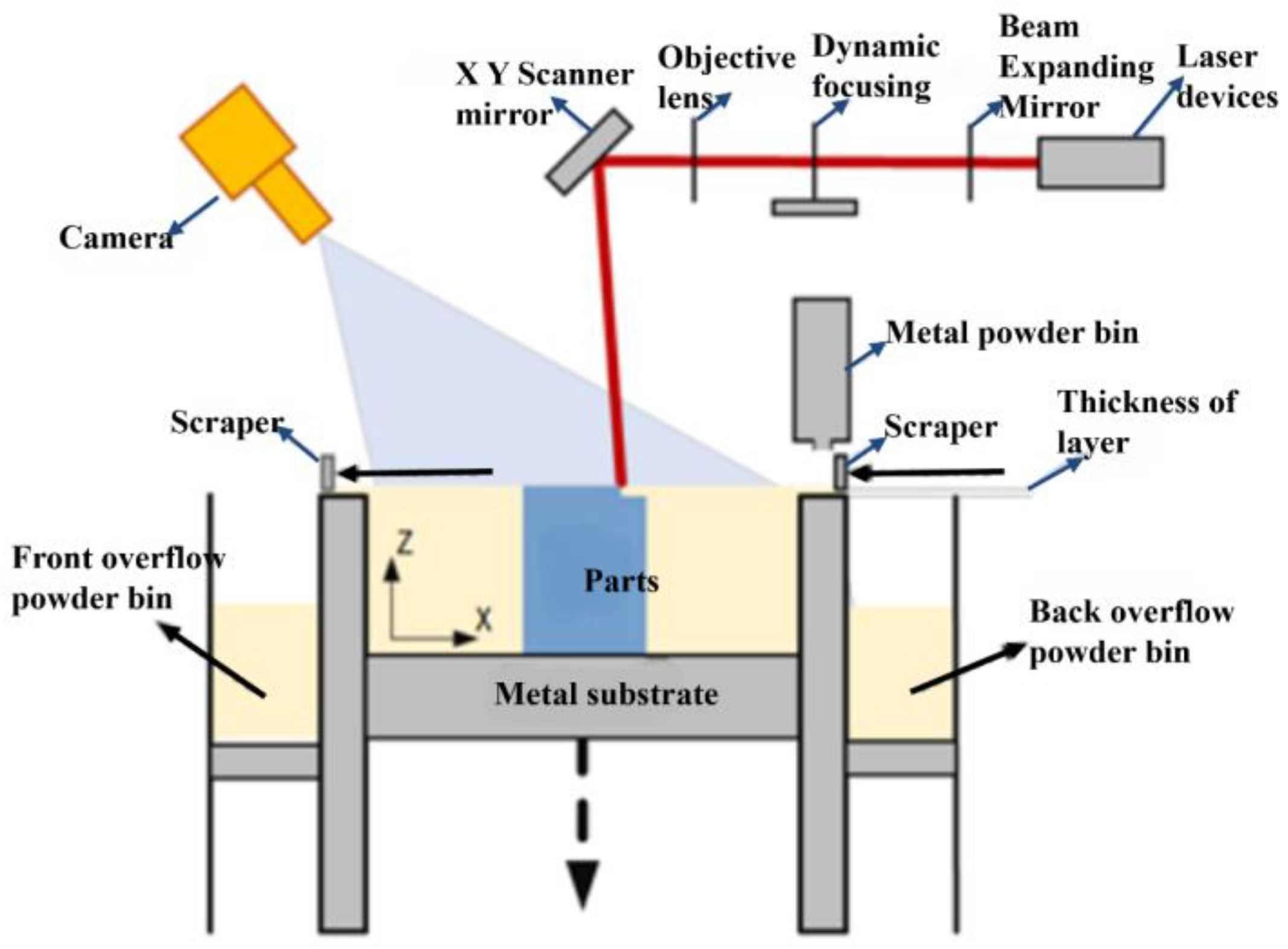

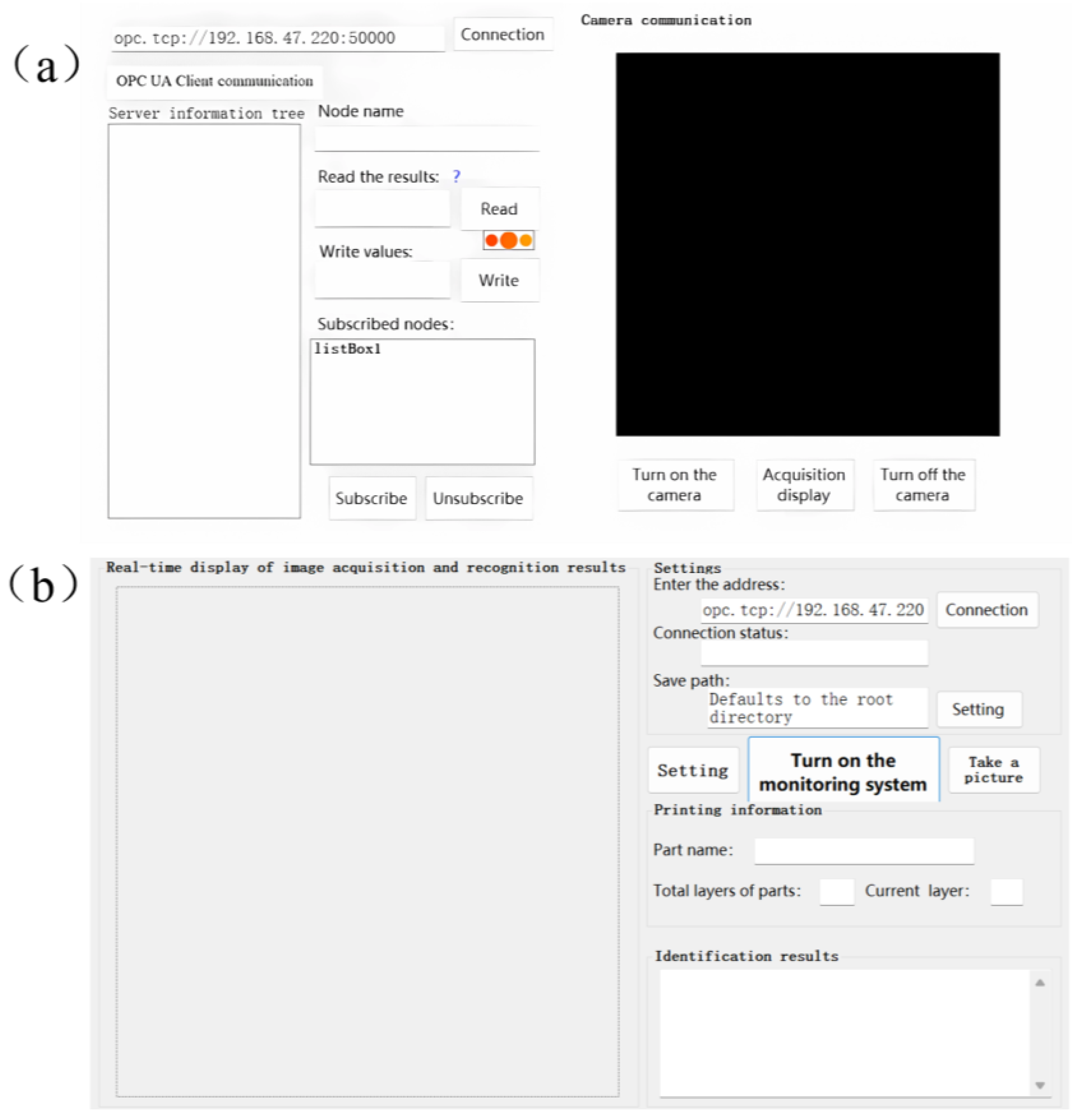

2. Program Design of Online Visual Monitoring Device for the LPBF Powder Laying Process

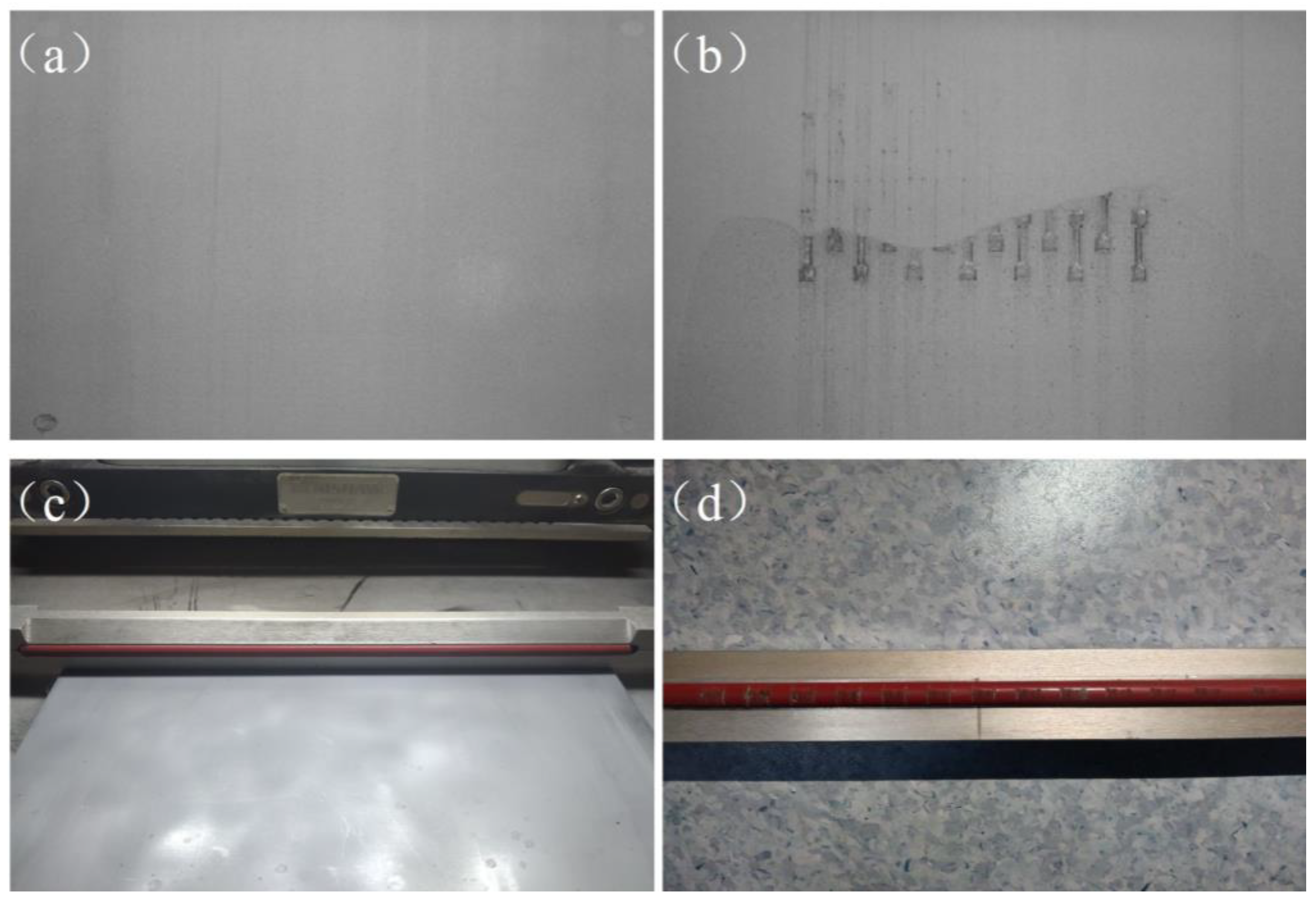

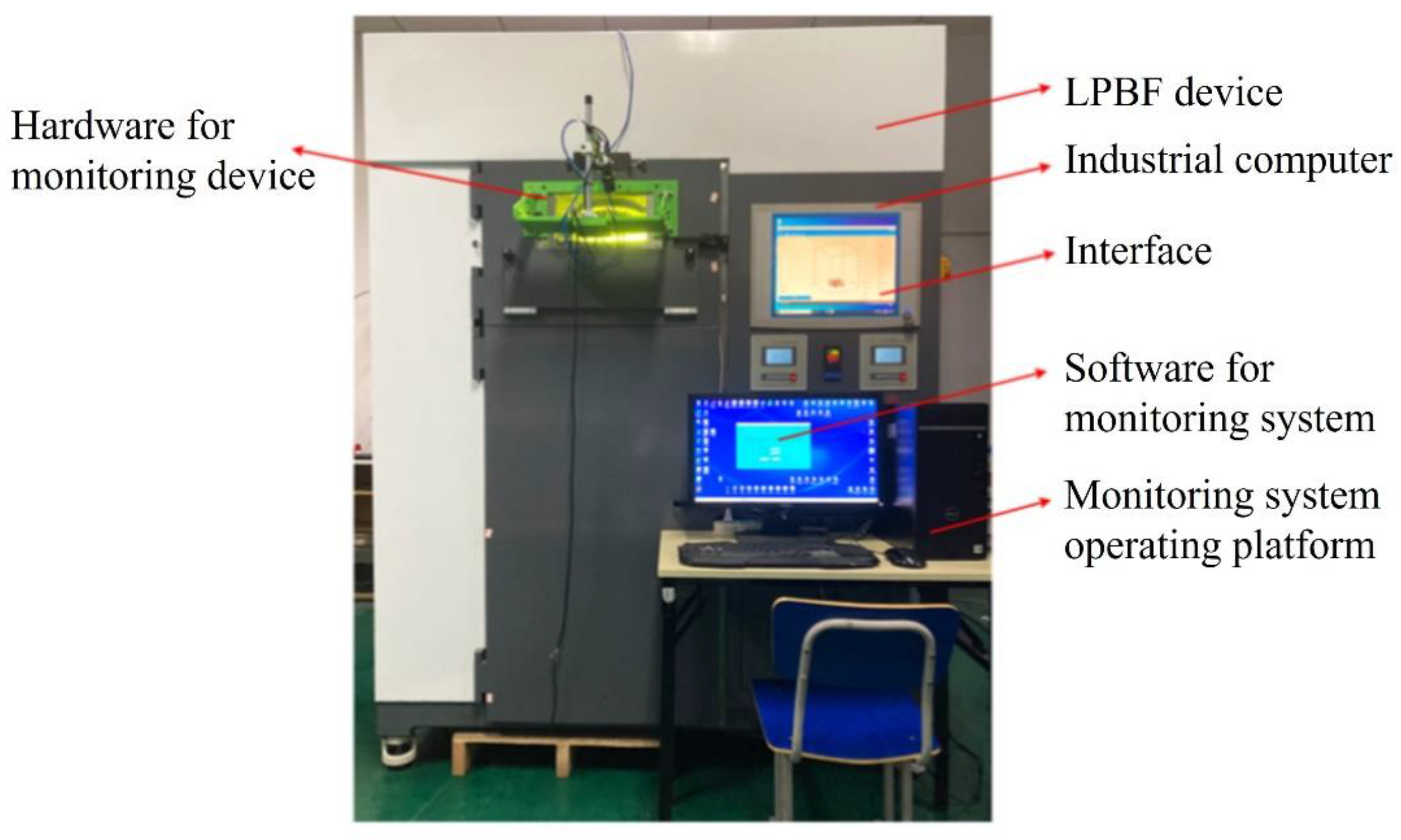

3. Construction of the LPBF Online Monitoring Device for the Powder Laying Process

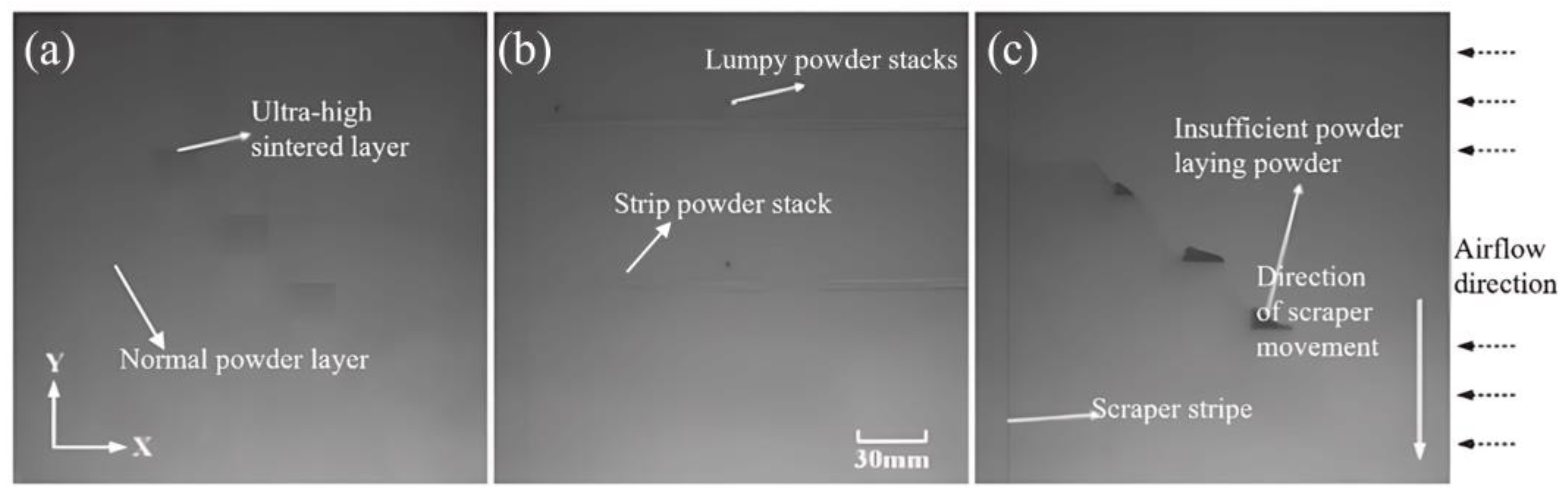

4. Research on the Defect Identification Algorithm of the LPBF Powder Laying Process

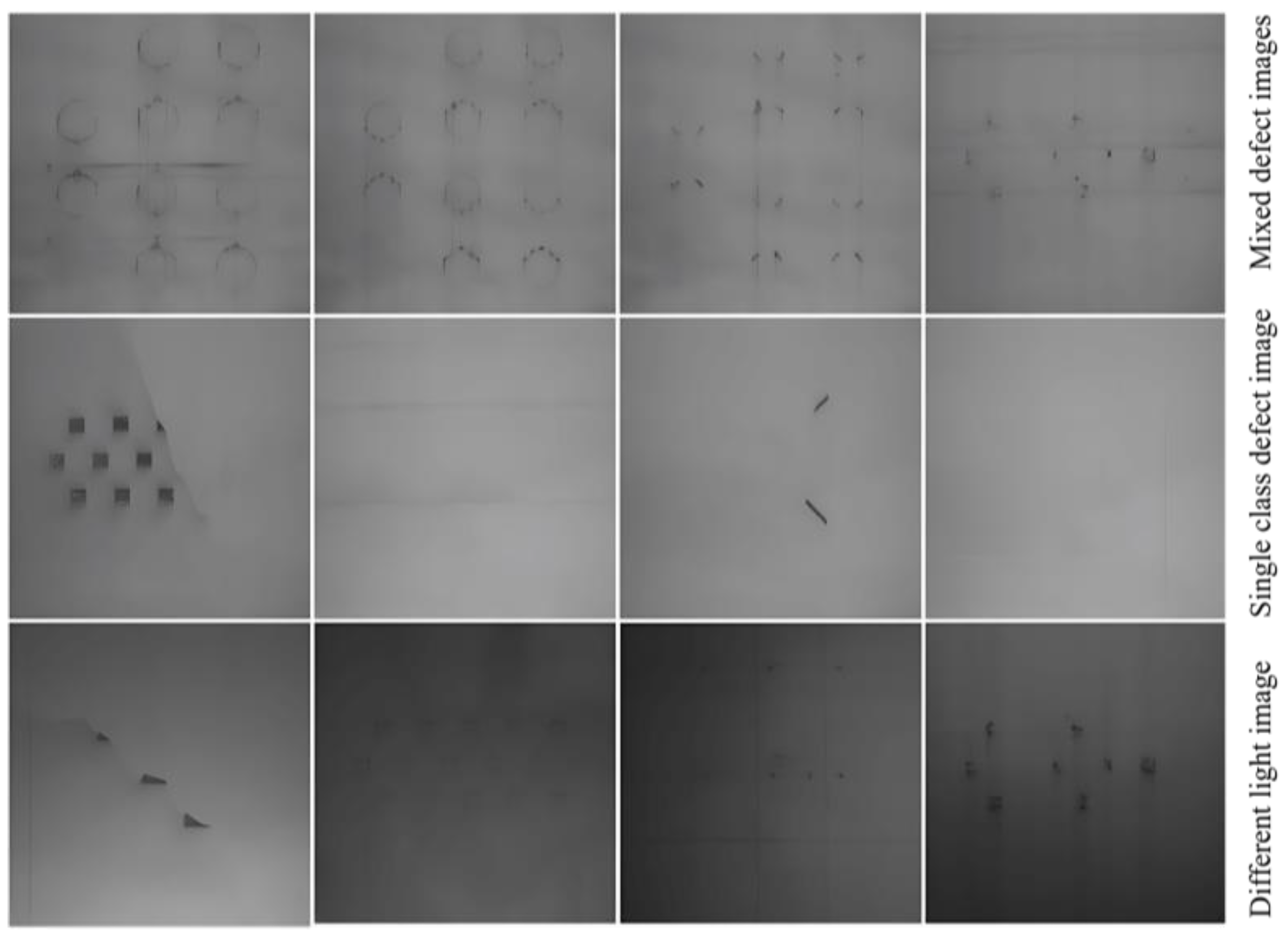

4.1. Data Set Construction and Evaluation

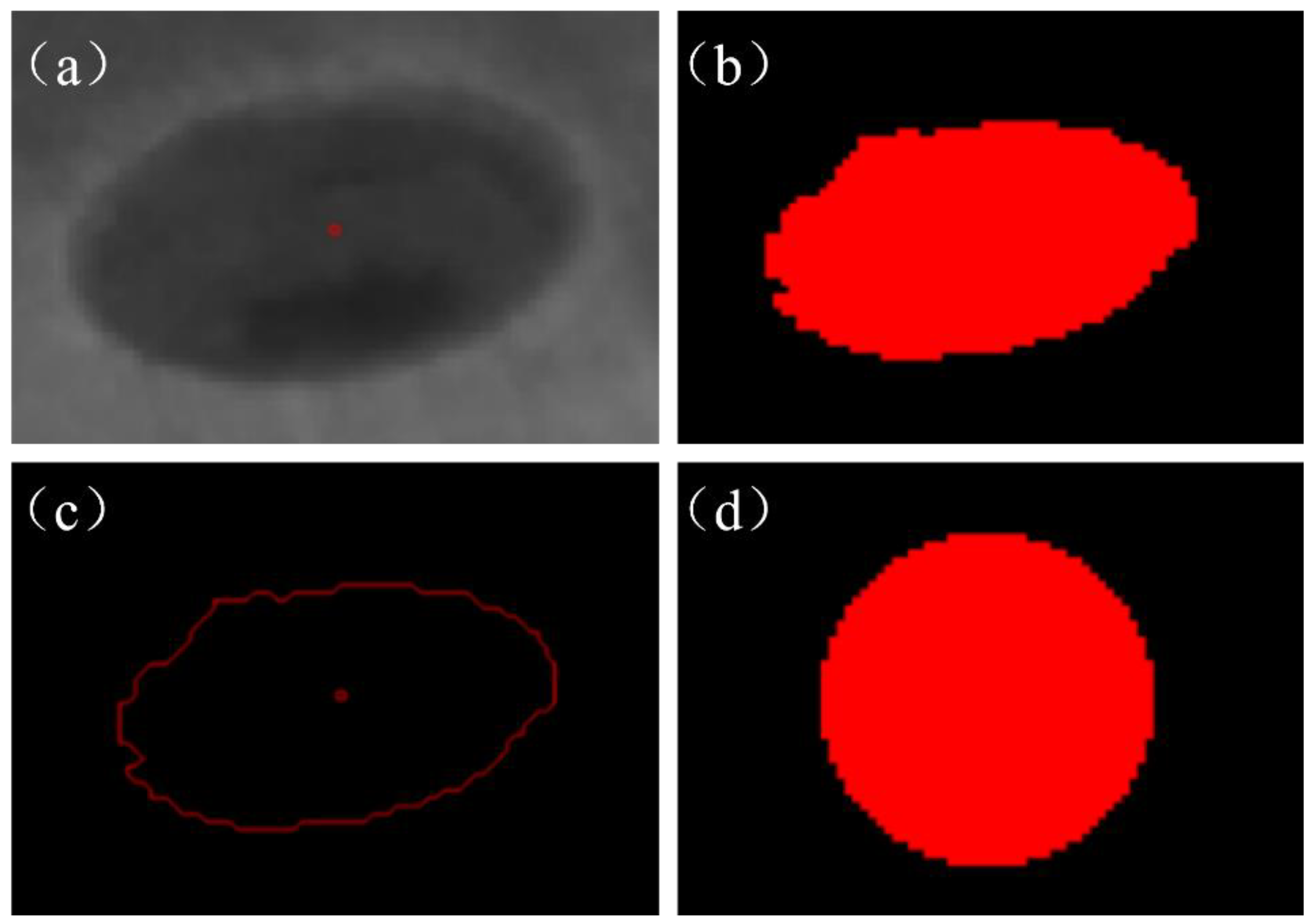

4.1.1. Tilted Image Correction

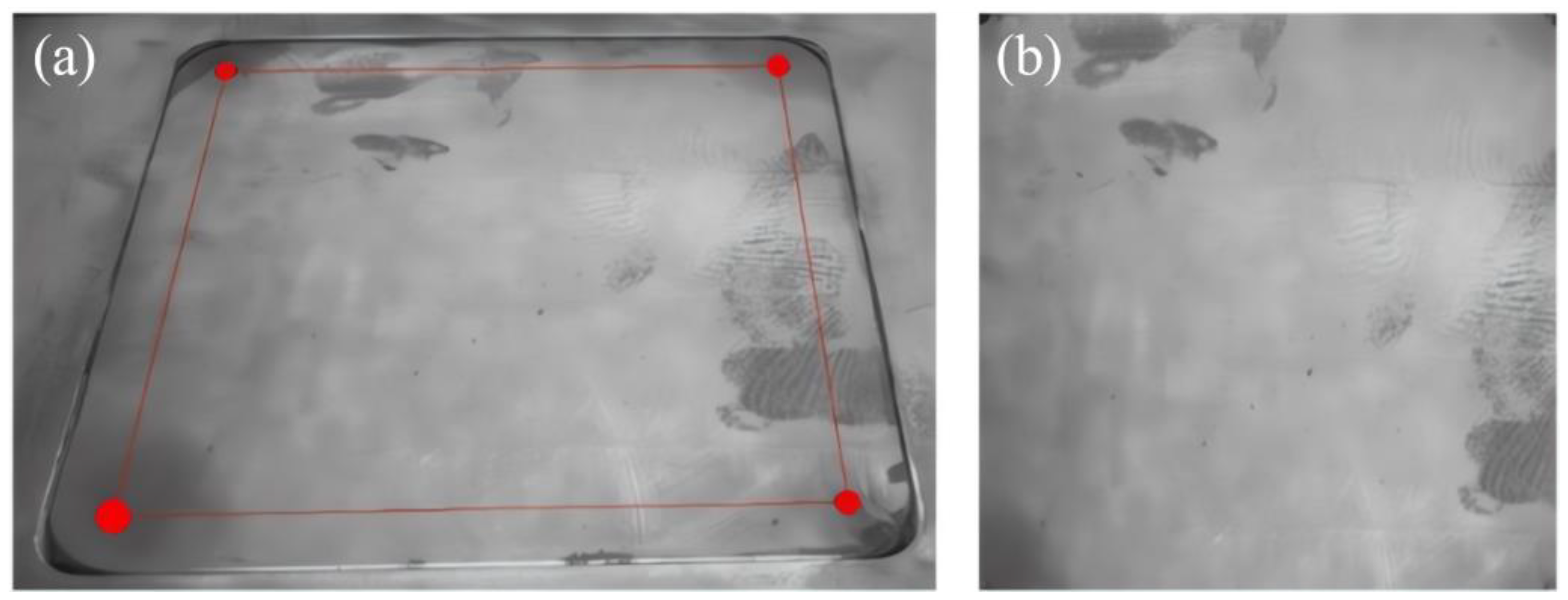

4.1.2. Perspective Correction

4.1.3. Image Processing and Data Enhancement

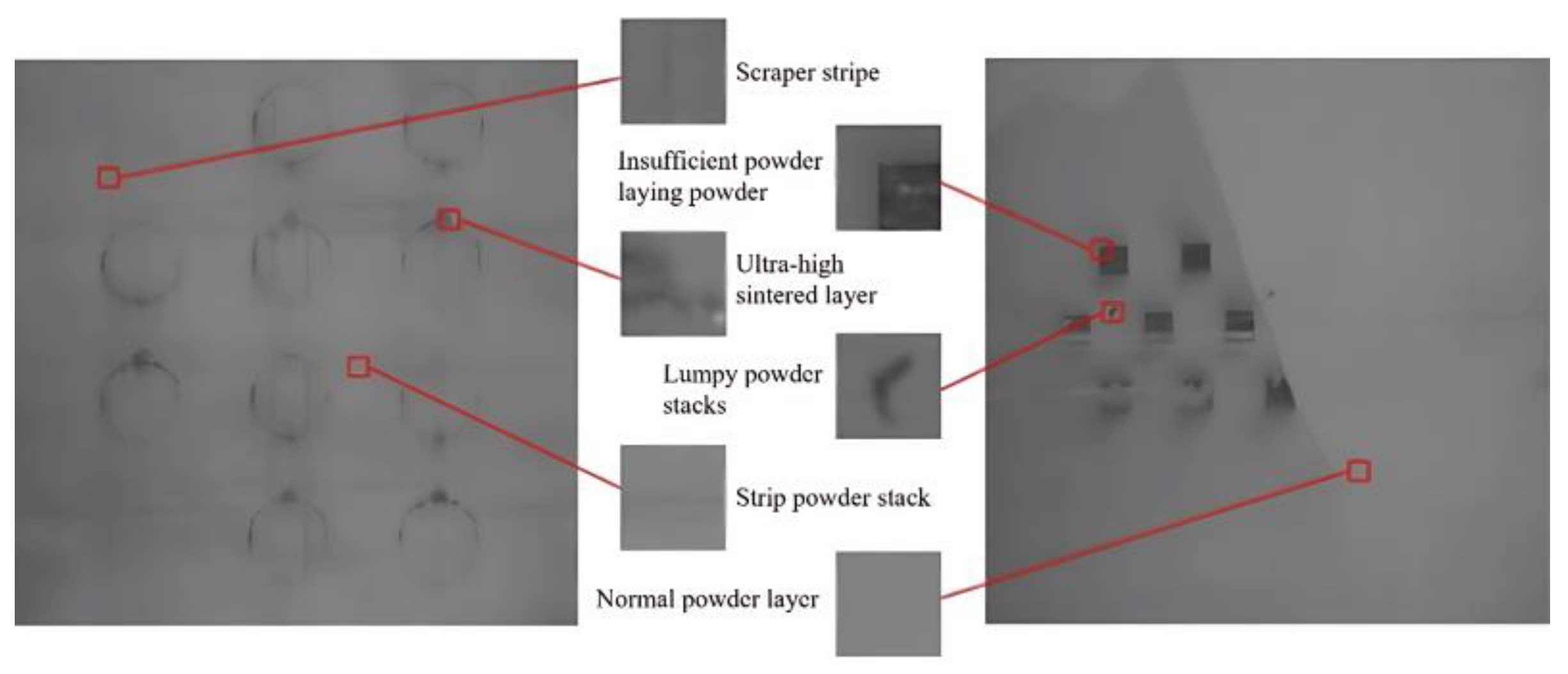

4.2. Identification of Powder Laying Defects by Small-Scale Area Division

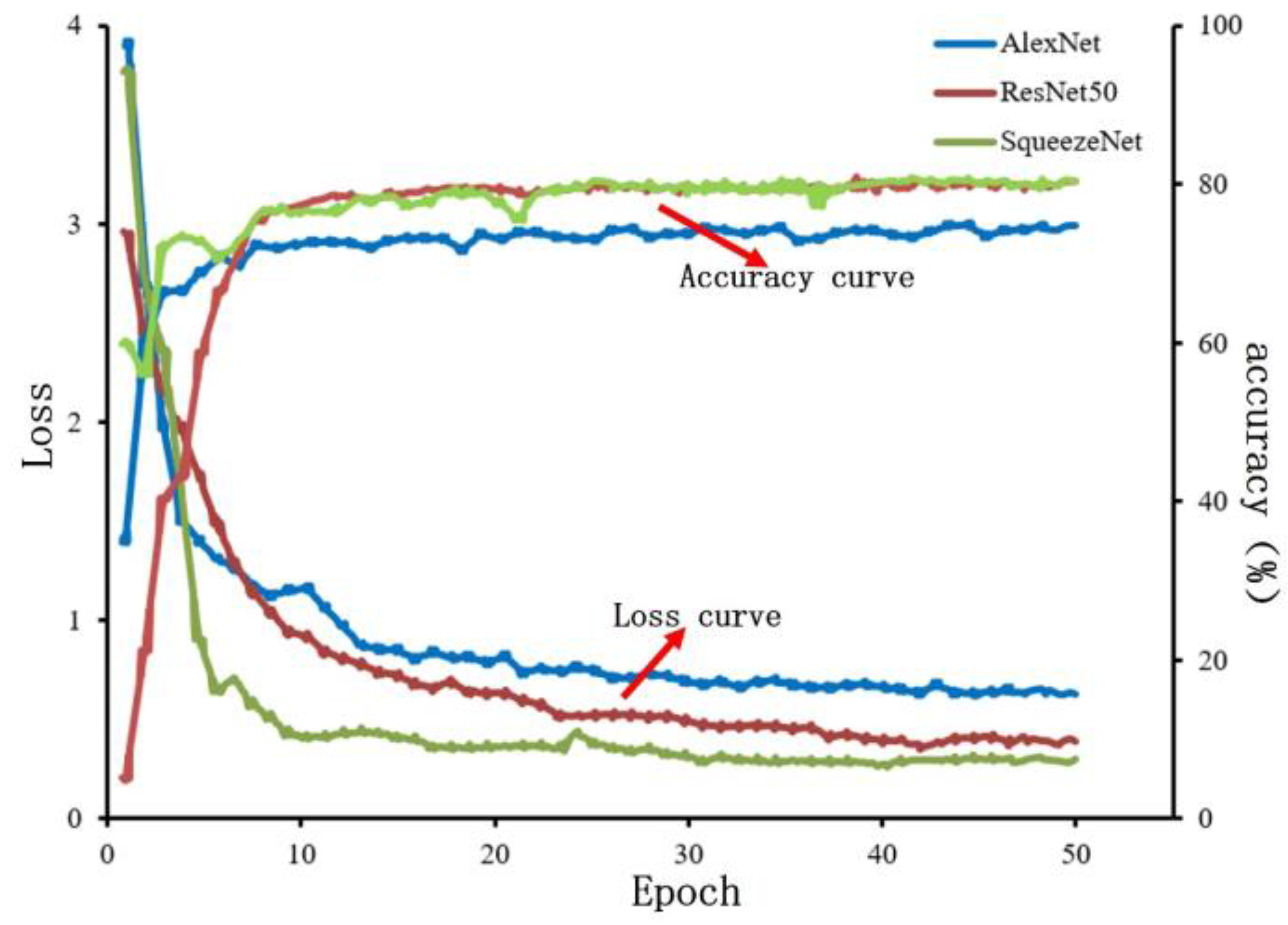

4.2.1. Model Training

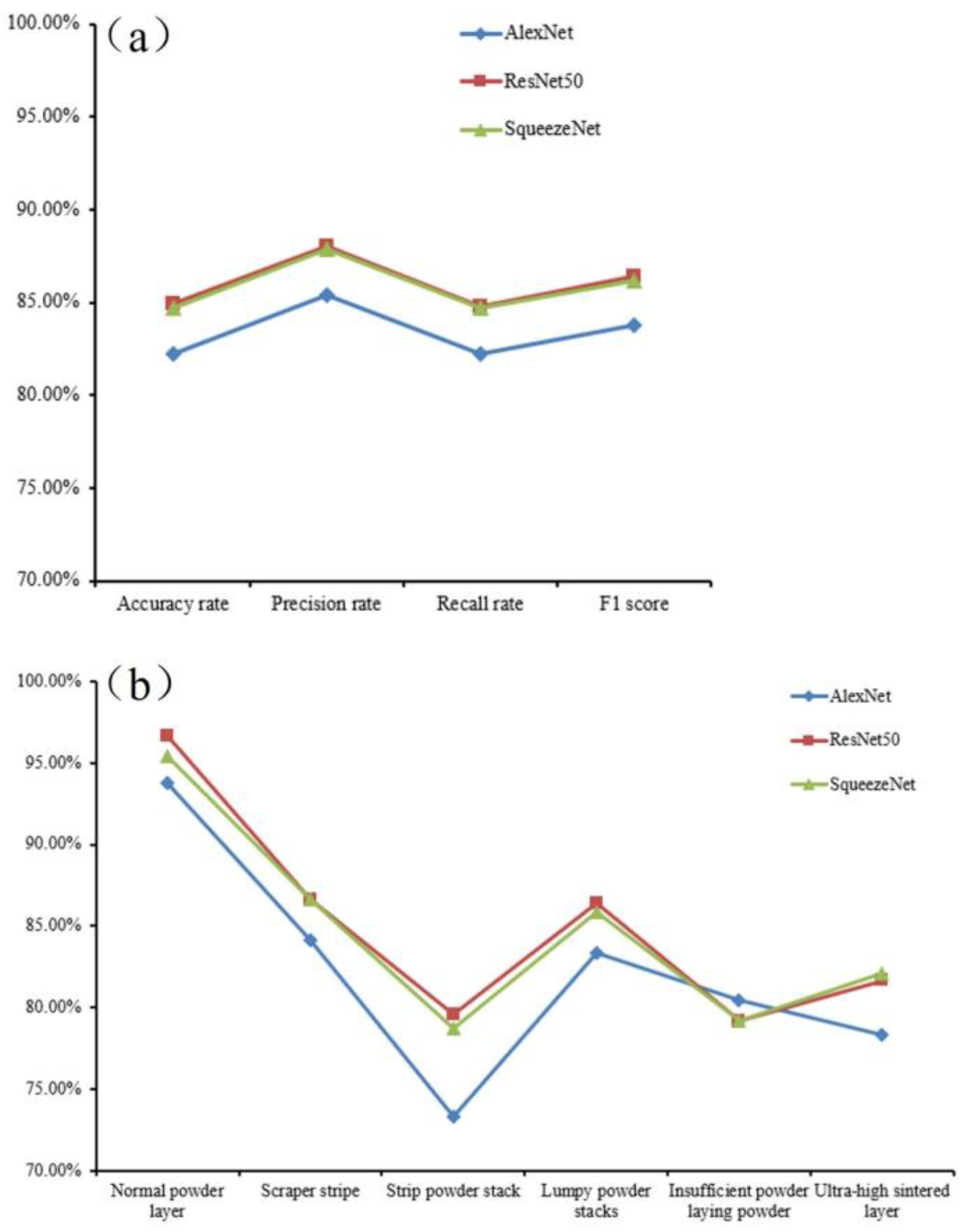

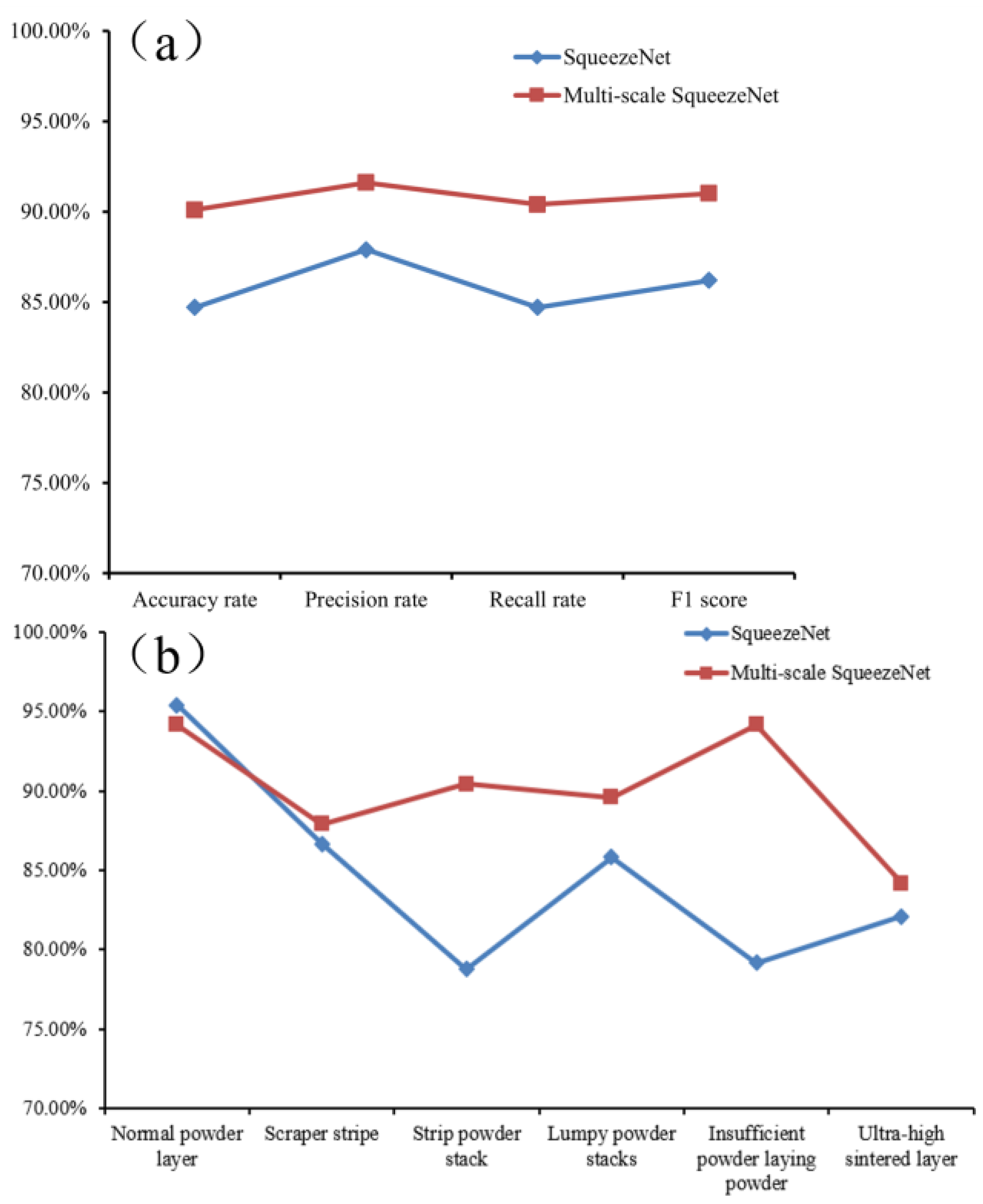

4.2.2. Evaluation of the Model

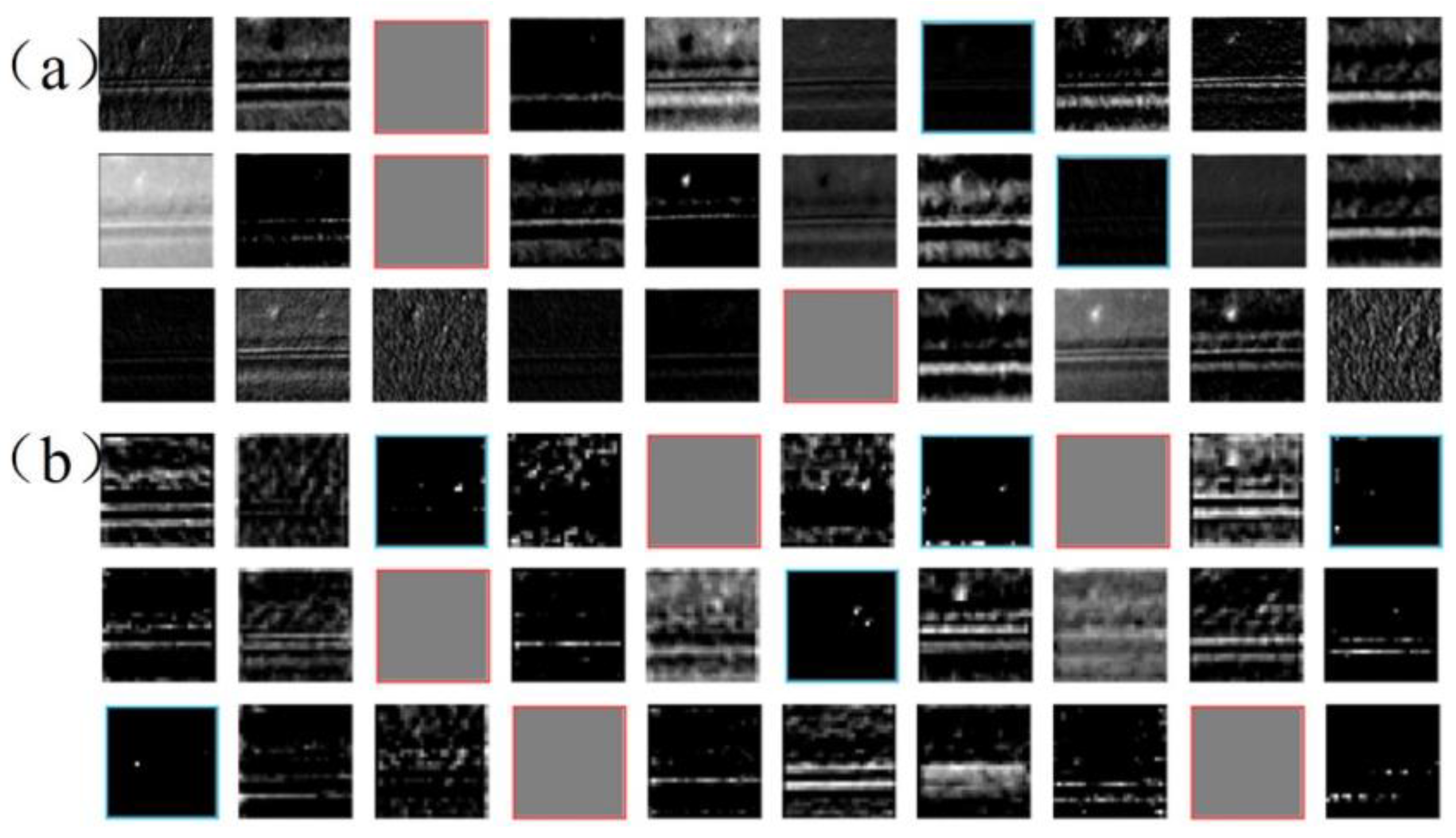

4.2.3. Heat Map Visualization and Analysis

4.3. SqueezeNet Model-Based Multiscale Improved Method for Identifying Powder Laying Defects

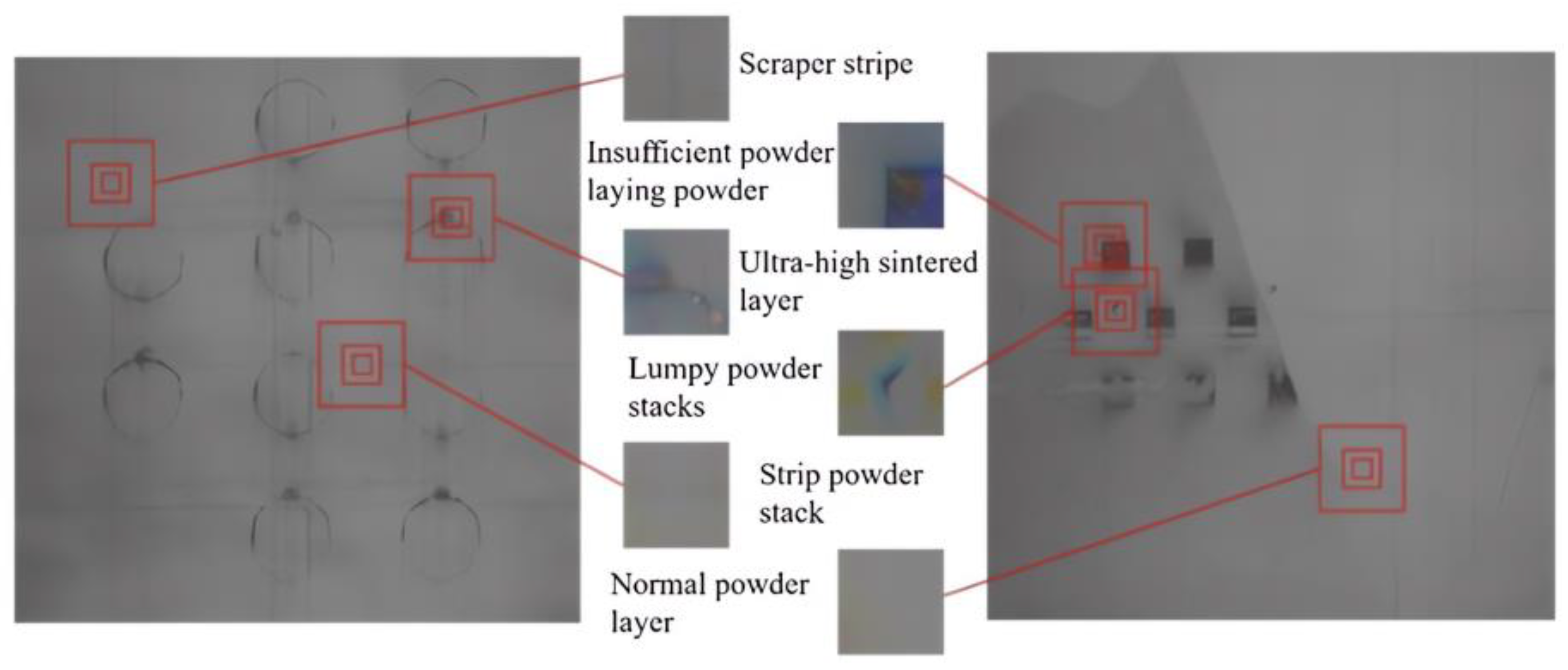

4.3.1. Multiscale Powder Laying Defect Identification Methods

4.3.2. Data Set Construction

4.3.3. Model Training

4.3.4. Model Evaluation

4.3.5. Feature Map Visualization

4.4. Channel Pruning Model Optimization Method

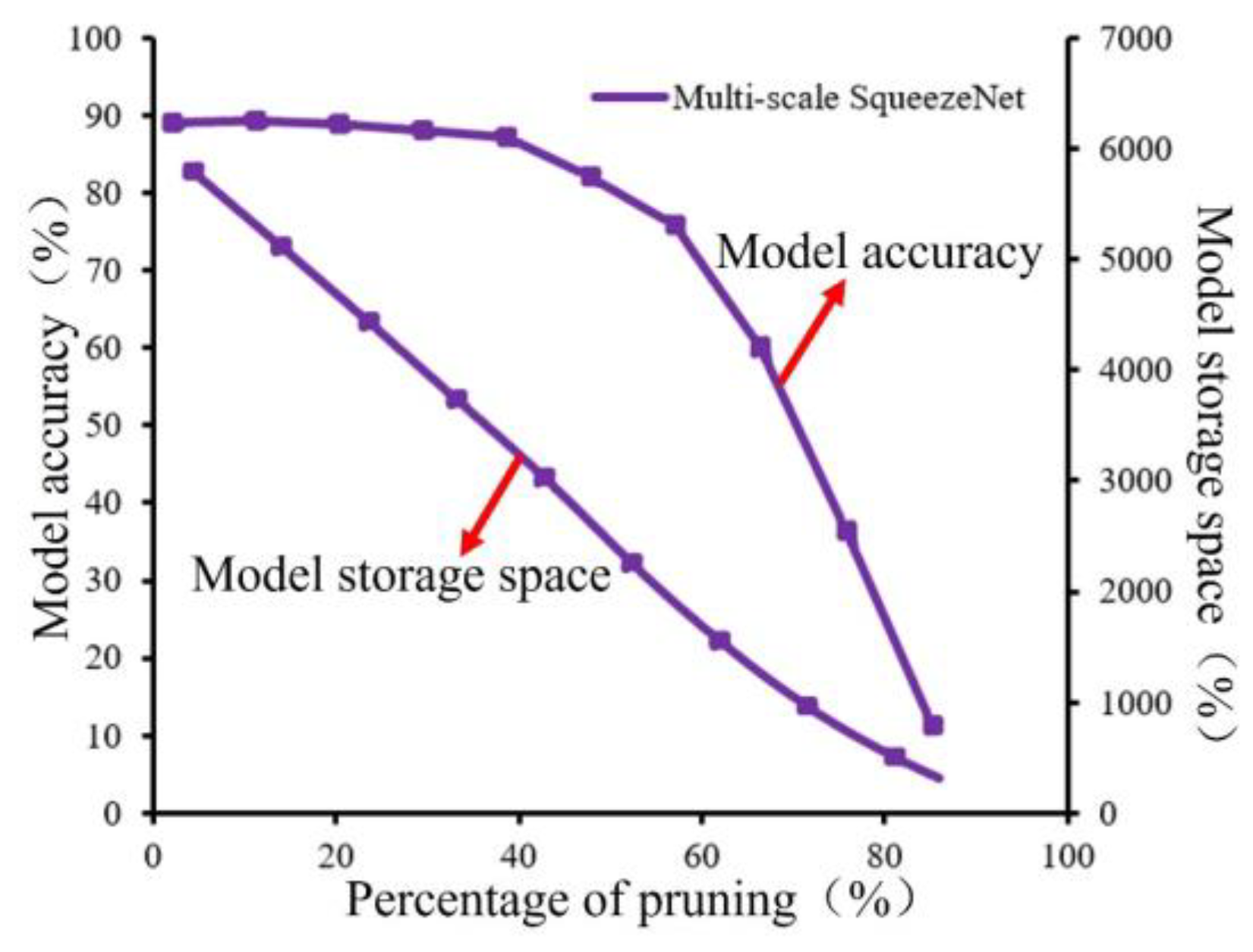

Analysis of Pruning Results

- (1) Changing patterns of model accuracy and storage space at different levels of pruning.

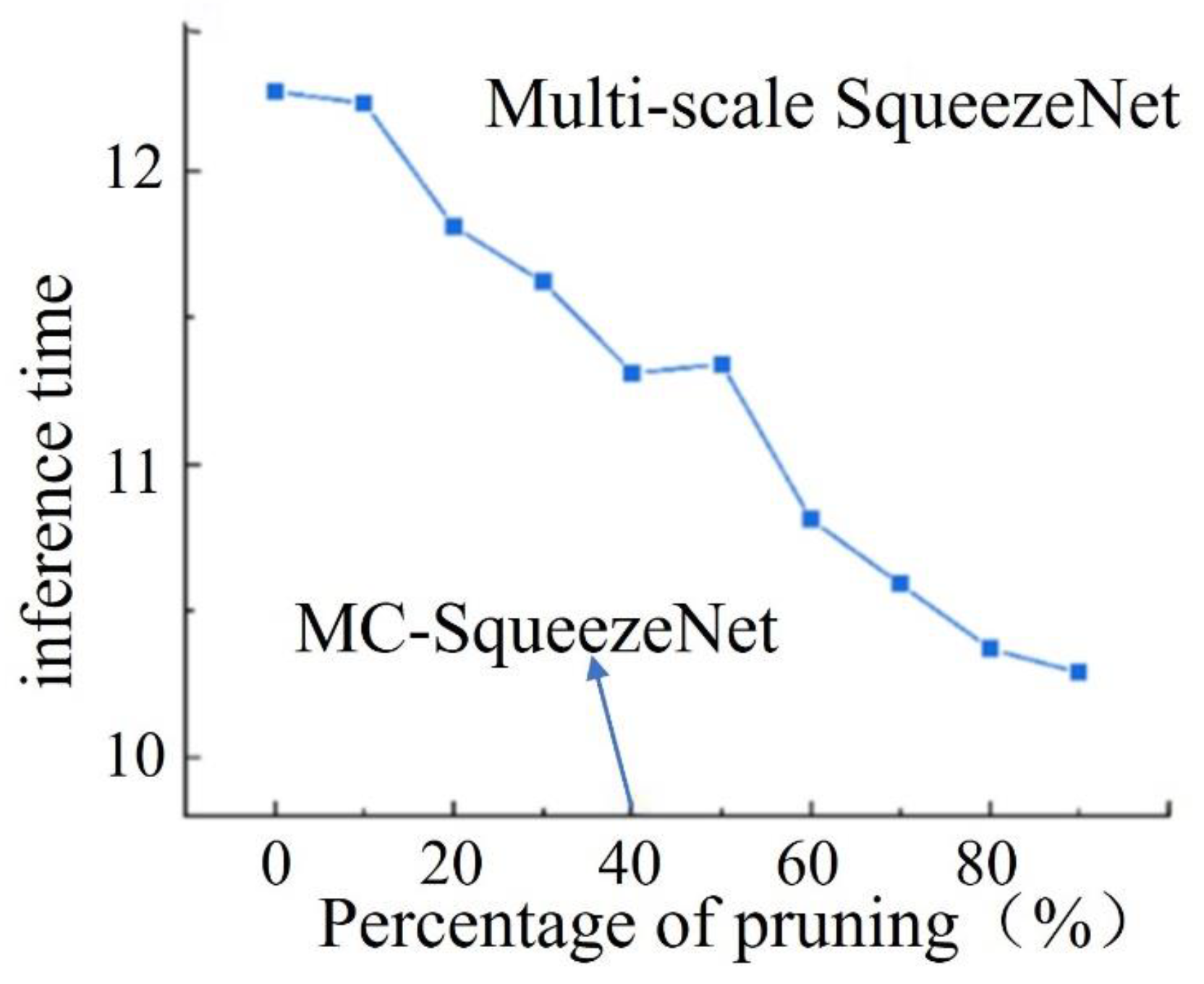

- (2) Changes in inference speed before and after model pruning.

- (3) Change in the number of convolution kernels in each layer of the model before and after pruning.

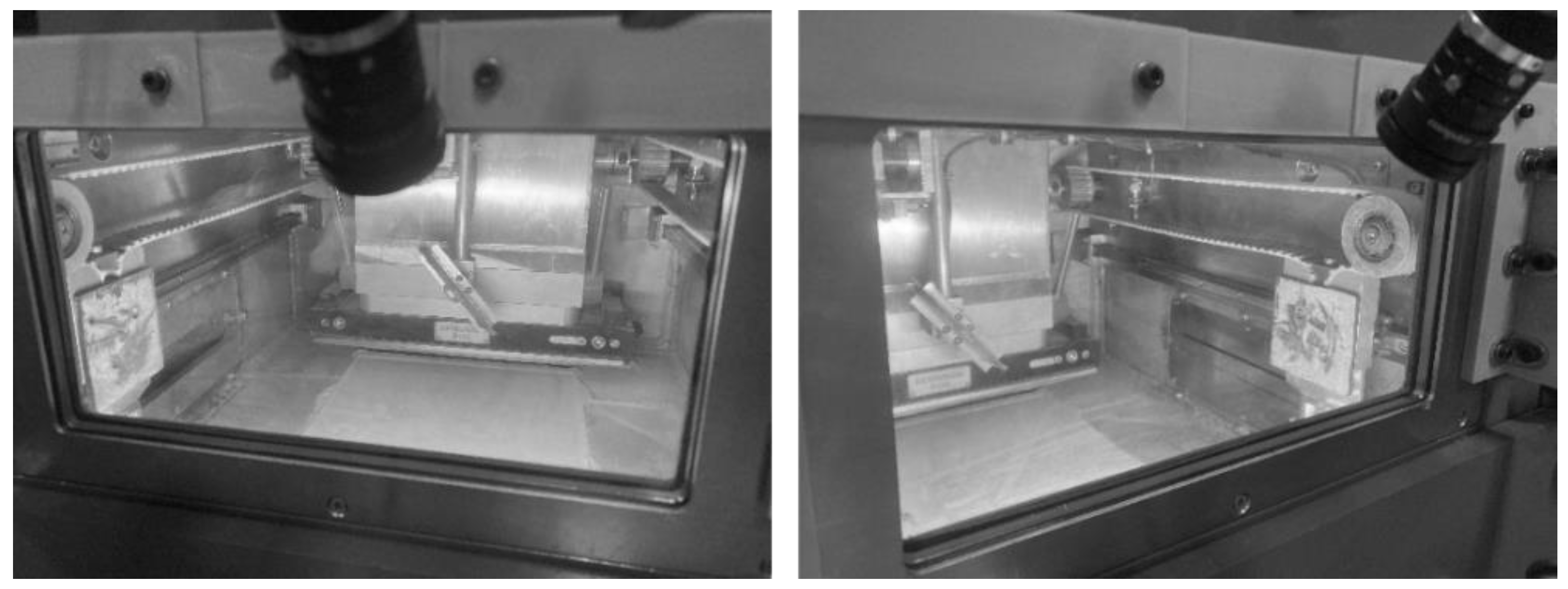

5. Experimental Validation of Defect Identification Algorithms for Metal Powder Spreading Process

5.1. Experimental Equipment and Materials

5.2. Manufacturing Experiment and Analysis

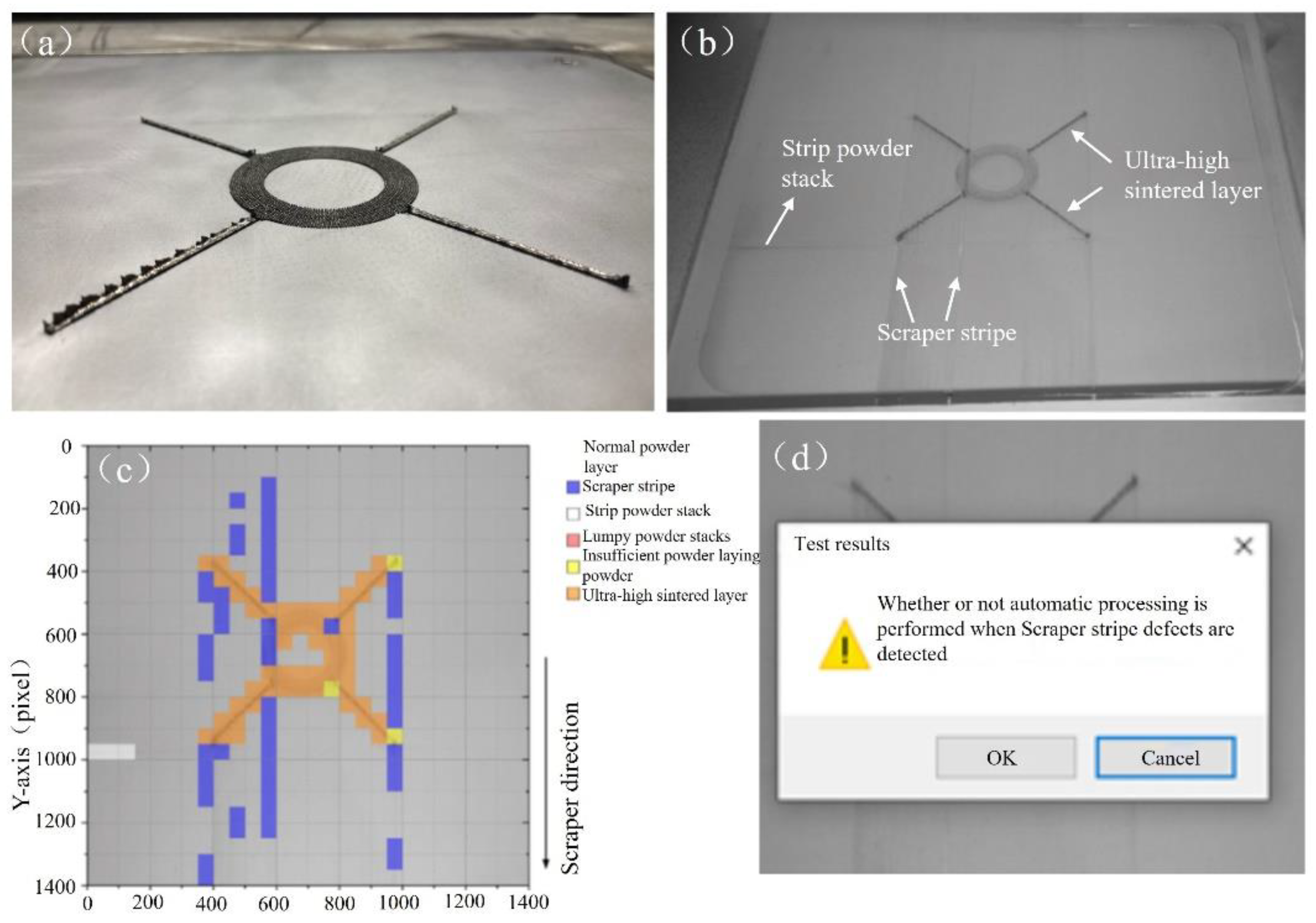

- (1) First manufacturing experiment.

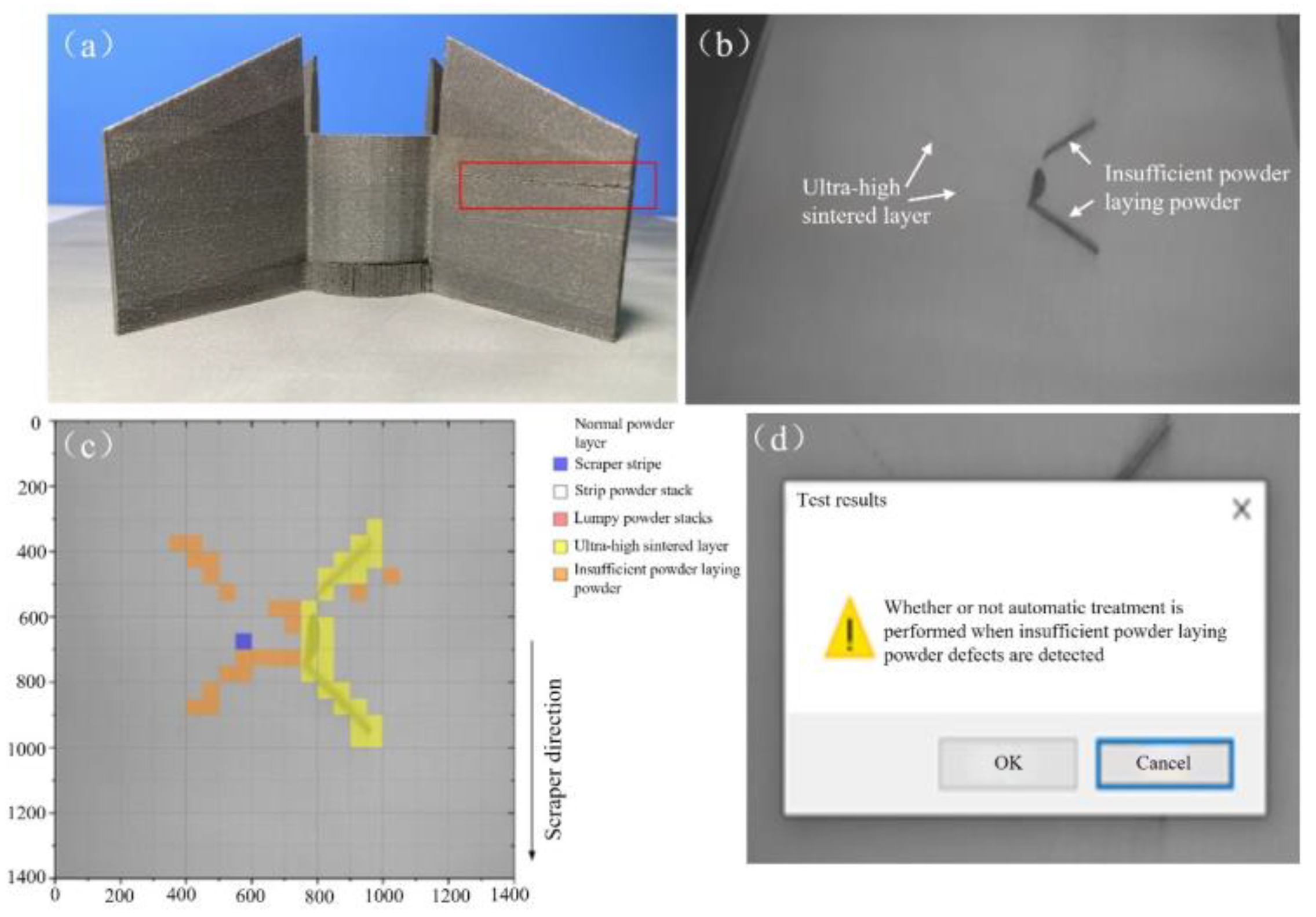

- (2) Second manufacturing experiment.

- (3) Online detection accuracy and detection time.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Laser spot diameter | 0.07 mm |
| Laser power | 0–400 W |
| Laser scanning speed | 0–5000 mm/s |
| Layer thickness | 0.05–0.1 mm |
| Inert protective gas | Argon gas |

| Axis | Position | Rotation | Angle |
|---|---|---|---|
| X | −149.196 mm | X-direction rotation | 322.433° |
| Y | −129.95 mm | Y-direction rotation | 358.278° |
| Z | 481.991 mm | Z-direction rotation | 355.92° |

| Hierarchy | Number of Convolution Kernels (Multiscale SqueezeNet) | Number of Convolution Kernels (MC-SqueezeNet) | Removal Ratio |
|---|---|---|---|
| Conv1 | 64 | 62 | 3% |
| Fire2 | 144 | 124 | 14% |
| Fire3 | 144 | 127 | 12% |
| Fire4 | 288 | 222 | 23% |
| Fire5 | 288 | 219 | 24% |
| Fire6 | 432 | 243 | 44% |
| Fire7 | 432 | 258 | 40% |
| Fire8 | 576 | 290 | 50% |
| Fire9 | 576 | 251 | 56% |
| Conv10 | 6 | 6 | 0% |
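
The removal ratios in the table can be reproduced from the kernel counts before and after pruning. The following is a minimal sketch of that arithmetic, assuming the ratio is defined as (original count minus retained count) divided by the original count and rounded to the nearest percent. The names `KERNEL_COUNTS`, `removal_ratio`, and `summarize` are illustrative and do not come from the original work.

```python
# Kernel counts per layer as (Multiscale SqueezeNet, MC-SqueezeNet),
# copied from the pruning table.
KERNEL_COUNTS = {
    "Conv1": (64, 62),
    "Fire2": (144, 124),
    "Fire3": (144, 127),
    "Fire4": (288, 222),
    "Fire5": (288, 219),
    "Fire6": (432, 243),
    "Fire7": (432, 258),
    "Fire8": (576, 290),
    "Fire9": (576, 251),
    "Conv10": (6, 6),
}


def removal_ratio(before: int, after: int) -> float:
    """Percentage of kernels removed (assumed definition, not from the paper)."""
    return 100.0 * (before - after) / before


def summarize(counts):
    """Return per-layer ratios rounded to whole percent, plus kernel totals."""
    per_layer = {
        name: round(removal_ratio(before, after))
        for name, (before, after) in counts.items()
    }
    total_before = sum(before for before, _ in counts.values())
    total_after = sum(after for _, after in counts.values())
    return per_layer, total_before, total_after


if __name__ == "__main__":
    per_layer, total_before, total_after = summarize(KERNEL_COUNTS)
    for name, pct in per_layer.items():
        print(f"{name}: {pct}%")
    overall = removal_ratio(total_before, total_after)
    print(f"Total kernels: {total_before} -> {total_after} ({overall:.1f}% removed)")
```

Under this assumed definition the per-layer values match the table, and the totals come to 2950 kernels before pruning and 1802 after, which is roughly 38.9% removed overall. The deeper Fire modules (Fire6 to Fire9) account for most of the reduction, while Conv1 and Conv10 are left almost untouched.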

| Experiment | Total Number of Layers | Number of Correctly Identified Layers | Accuracy Rate |
|---|---|---|---|
| First printing experiment | 58 | 54 | 93.1% |
| Second printing experiment | 1402 | 1386 | 98.89% |
| Both experiments (system accuracy) | | | 98.63% |
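
The accuracy figures in the table are ratios of correctly identified layers to total layers. The sketch below recomputes them from the layer counts, assuming the system accuracy pools both experiments (total correct layers over total layers). The function names are illustrative and not taken from the original work.

```python
# (correctly identified layers, total layers) for each printing experiment,
# copied from the table.
FIRST_EXPERIMENT = (54, 58)
SECOND_EXPERIMENT = (1386, 1402)


def accuracy(correct: int, total: int) -> float:
    """Accuracy in percent, rounded to two decimal places."""
    return round(100.0 * correct / total, 2)


def pooled_accuracy(*runs) -> float:
    """Accuracy over all runs combined (assumed definition of system accuracy)."""
    correct = sum(c for c, _ in runs)
    total = sum(t for _, t in runs)
    return accuracy(correct, total)


if __name__ == "__main__":
    print("first:", accuracy(*FIRST_EXPERIMENT))
    print("second:", accuracy(*SECOND_EXPERIMENT))
    print("pooled:", pooled_accuracy(FIRST_EXPERIMENT, SECOND_EXPERIMENT))
```

With these counts the first experiment evaluates to about 93.10%, the second to about 98.86%, and the pooled value to 98.63%, which agrees with the reported system accuracy.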

| Phase | Image Acquisition | Tilt Correction and Storage | Partition and Identification | Total |
|---|---|---|---|---|
| Average time consumed (s) | 0.795 | 0.159 | 2.562 | 3.516 |
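
The total in the timing table is the sum of the three per-layer phases. As a small worked example, the sketch below adds the phase times and reports each phase's share of the total; the names `PHASE_TIMES_S`, `total_time`, and `phase_shares` are illustrative assumptions.

```python
# Average time per layer for each phase, in seconds, copied from the table.
PHASE_TIMES_S = {
    "image acquisition": 0.795,
    "tilt correction and storage": 0.159,
    "partition and identification": 2.562,
}


def total_time(phases) -> float:
    """Sum of all phase times in seconds."""
    return sum(phases.values())


def phase_shares(phases) -> dict:
    """Share of the total time taken by each phase, in percent."""
    total = total_time(phases)
    return {name: 100.0 * t / total for name, t in phases.items()}


if __name__ == "__main__":
    print(f"total: {total_time(PHASE_TIMES_S):.3f} s")
    for name, share in phase_shares(PHASE_TIMES_S).items():
        print(f"{name}: {share:.1f}%")
```

The sum reproduces the 3.516 s total, and partition and identification accounts for roughly 73% of the per-layer time, so it is the phase where any further speed-up would matter most.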

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, B.; Liu, J.; Li, J.; Zhao, Z.; Liu, Y.; Yang, G.; Liu, L.; Chang, H. Research on an Online Monitoring Device for the Powder Laying Process of Laser Powder Bed Fusion. Micromachines 2024, 15, 97. https://doi.org/10.3390/mi15010097
