How to Ease the Pain of Taking a Diagnostic Point of Care Test to the Market: A Framework for Evidence Development

Abstract

1. Introduction

1.1. An Example to Pin the Ideas On

1.2. It’s a Bit More Complicated Than That

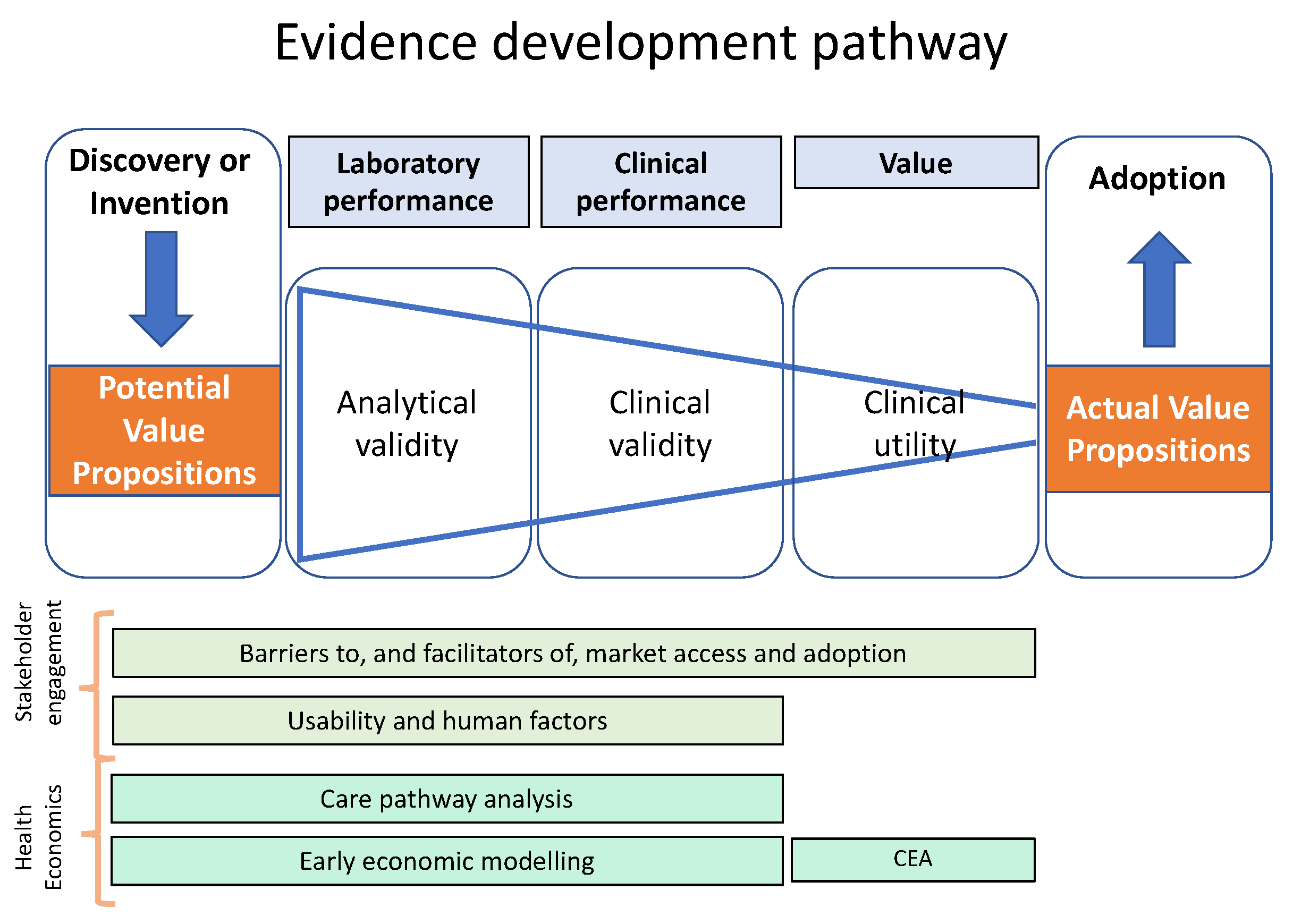

2. A Framework to Guide Evidence Development for POCT IVDs and Other Medical Devices

3. Articulating Value Propositions

- Understand the changing healthcare landscape

- Identify the most appropriate route to NHS access

- Explore their value proposition with system stakeholders.

Exercise: Value propositions

Write down the value propositions for the NewTestR and NewTestRx devices foregrounded by:

4. Care Pathway Analysis—Some Necessary Theoretical Background

4.1. What is a Care Pathway?

- The patient’s view of their journey through the healthcare system.

- The healthcare system’s view of the services provided, including workflows and information flows.

- The range of actual practices (for example, as documented by a clinical audit).

- The accepted best practice (for example as defined by clinical guidelines, standards, protocols).

4.2. What is Care Pathway Analysis?

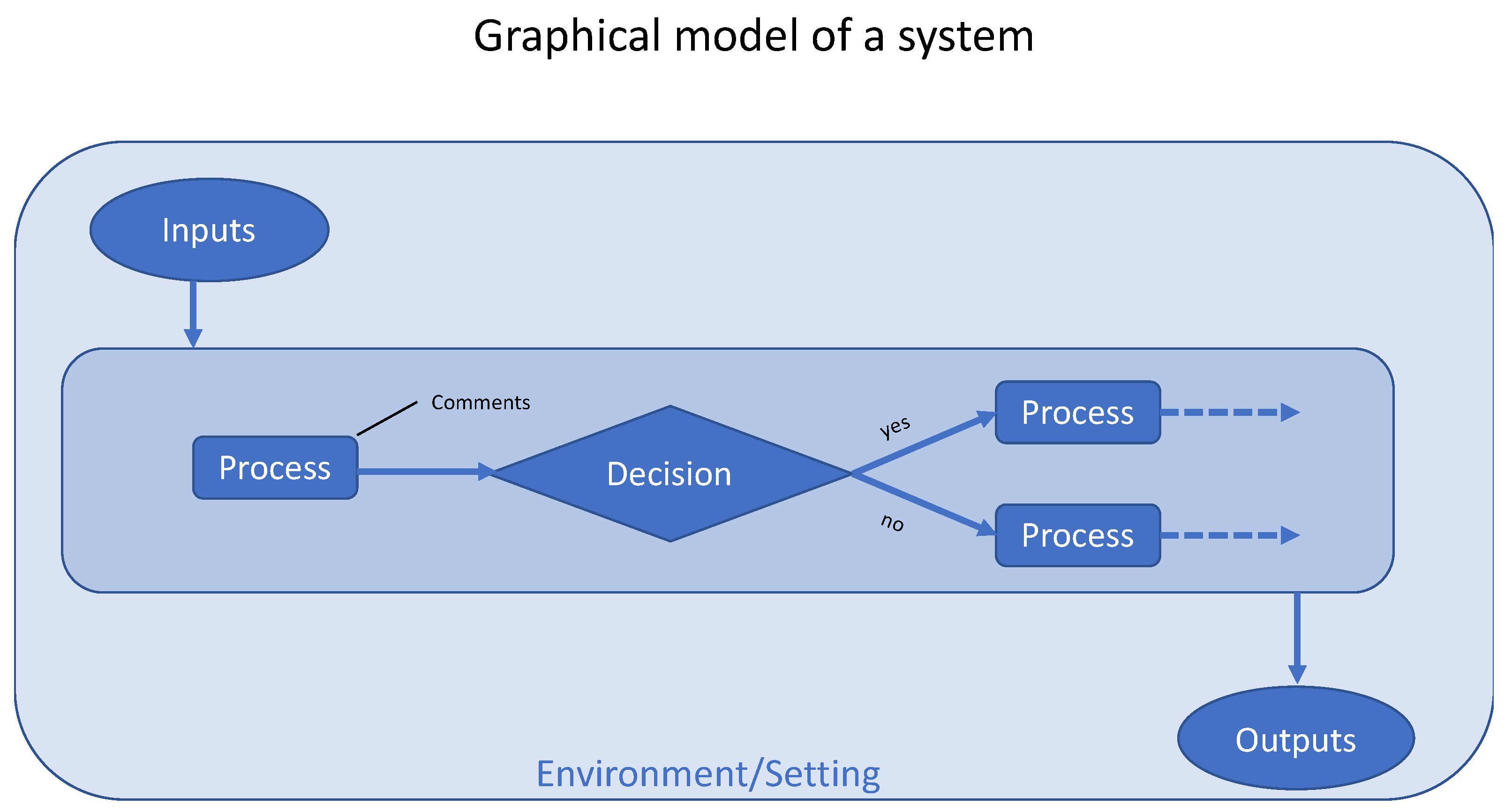

4.3. How Should a System be Represented Graphically?

5. Care Pathway Analysis—A Practical Approach

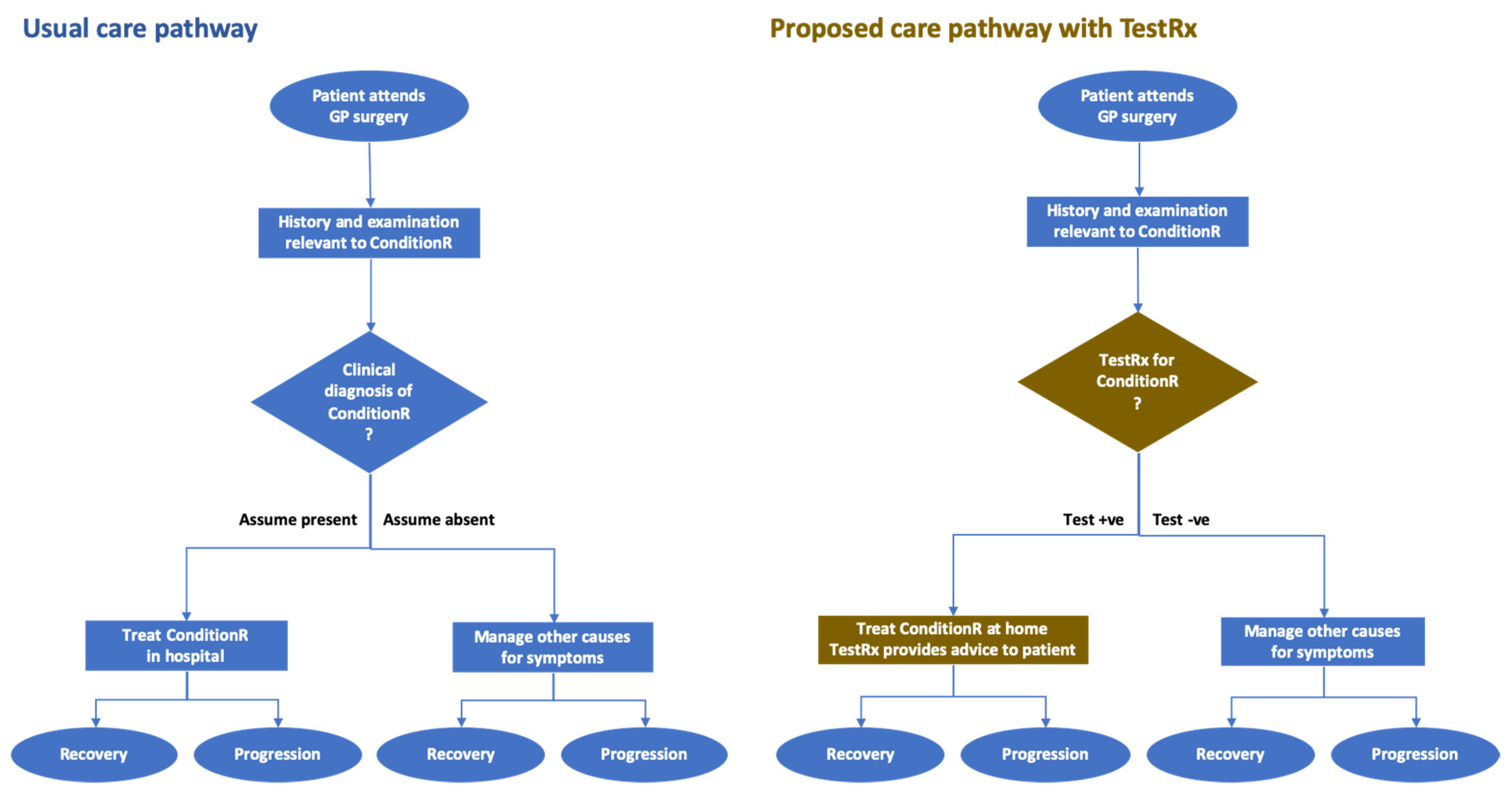

5.1. Recommended Approach to Care Pathway Analysis

- The manufacturer and their investors to understand the clinical and commercial potential of the device, develop their market access strategy, build their business plan, and guide marketing.

- The methodologists or researchers to build health economic models and to design clinical studies to generate the evidence required.

- Healthcare funders to understand the potential value of the product in clinical and financial terms, and decide whether to support further development.

- Work with the manufacturer to articulate the opportunities for improvement (these will be a source for the statement of value propositions).

- Work collaboratively with a team that has representatives (direct or proxy) of all stakeholders, including the manufacturer (who will usually act as a proxy for their investors), healthcare payers, healthcare providers (organizations, departments, and professionals), and patients. The EFLM TE-WG tool can be used as a framework for discussion. Patient and public involvement is described in the following section.

- Review local (i.e., hospital) and national guidelines relevant to the condition to understand the current recommendations on its management and the variation of recommendations across the country.

- Obtain frequent feedback on documents (narrative and graphic representations of the base case and new care pathways) as they are drafted and redrafted—see Figure 3 and the explanation in the next section. Feedback should also be sought from both experts and patients.

  - Be aware of the ethical issues and organizational processes around involving patients, the public, and staff in meetings and surveys.

  - Feedback, advice, and information can be obtained through team meetings, individual meetings, focus groups, and surveys.

- Summarize current evidence on the product, such as analytical validity studies—don’t assume that these have been done or that the results are encouraging.

- Articulate the clinical scenario: the clinical problem, setting, recommended management.

- Describe the product, indications for use, strengths and limitations, and how it will be used by whom and where.

- Describe how the information produced by the product will guide management decisions.

- Obtain comments on usability and potential utility from stakeholders—they could be patients, clinicians in the ward or clinics, laboratory staff, or methodologists such as health economists.

- Describe the outcomes (financial and clinical) that are expected.

- Visit (preferably with an example product) the places where the device and the information produced will be used and talk to potential users of the device and/or the information it provides.

  - Desk research is necessary. Getting out and seeing the real world and talking to real people is even more necessary.

5.2. Example Care Pathway

6. Patient and Public Involvement and Engagement in Healthcare Research

- Why is the device needed?

- Will there be a market for it?

- Would it benefit the patient and/or the healthcare professionals?

- Improving the quality of research delivery: public/patient involvement can help develop and review materials that participants will see as part of the research (e.g., patient information sheets and consent forms), to make these more relevant and accessible. This makes it easier for participants to decide whether to take part and helps the study meet its recruitment targets.

- Providing a different perspective: public/patient involvement can provide real-life insights and perspectives that the research team could not reach without PPIE. A research team may feel nervous about requesting certain samples for research, such as muscle or feces samples, or may make assumptions about the best place or time to recruit. People often surprise us with insights or perspectives that either affirm the research approach or offer alternatives that benefit the study’s delivery.

Putting PPIE into Practice

- Consultation: to ask for views/advice.

- Collaboration/co-production: researchers and people work together e.g. to identify research questions.

- User led: people make the decisions about research, e.g. they will be the principal investigator.

- Setting up a public/patient steering group/advisory board or attending an existing one to consult with them on the research question, study design, feasibility, methodology, recruitment strategies etc.

- Working with the public/patients who will be co-applicants when applying for funding grants.

7. Early Economic Assessment

8. Analytical Validity

Developing Evidence on Analytical Validity for an IVD

9. Clinical Validity

9.1. Developing Evidence on the Clinical Validity of a Diagnostic Test

- What is the Aim when using the test?

- What is the Setting in which the test will be used? For example, home, clinic, hospital.

- What is the Population? How will participants be recruited: practicalities as well as inclusion and exclusion criteria?

- What is the Index (new test)?

- What is the Comparison or reference test? Is a test currently available? If not, what would current practice be to provide an operational definition of making the diagnosis?

- What Outcome (diagnostic accuracy) measures will be used?

- Over what length of Time are the outcomes measured?

9.2. Study Designs for Assessing Diagnostic Accuracy

9.3. Studies to be Conducted Alongside Diagnostic Accuracy Studies

9.4. Measures of Diagnostic Accuracy

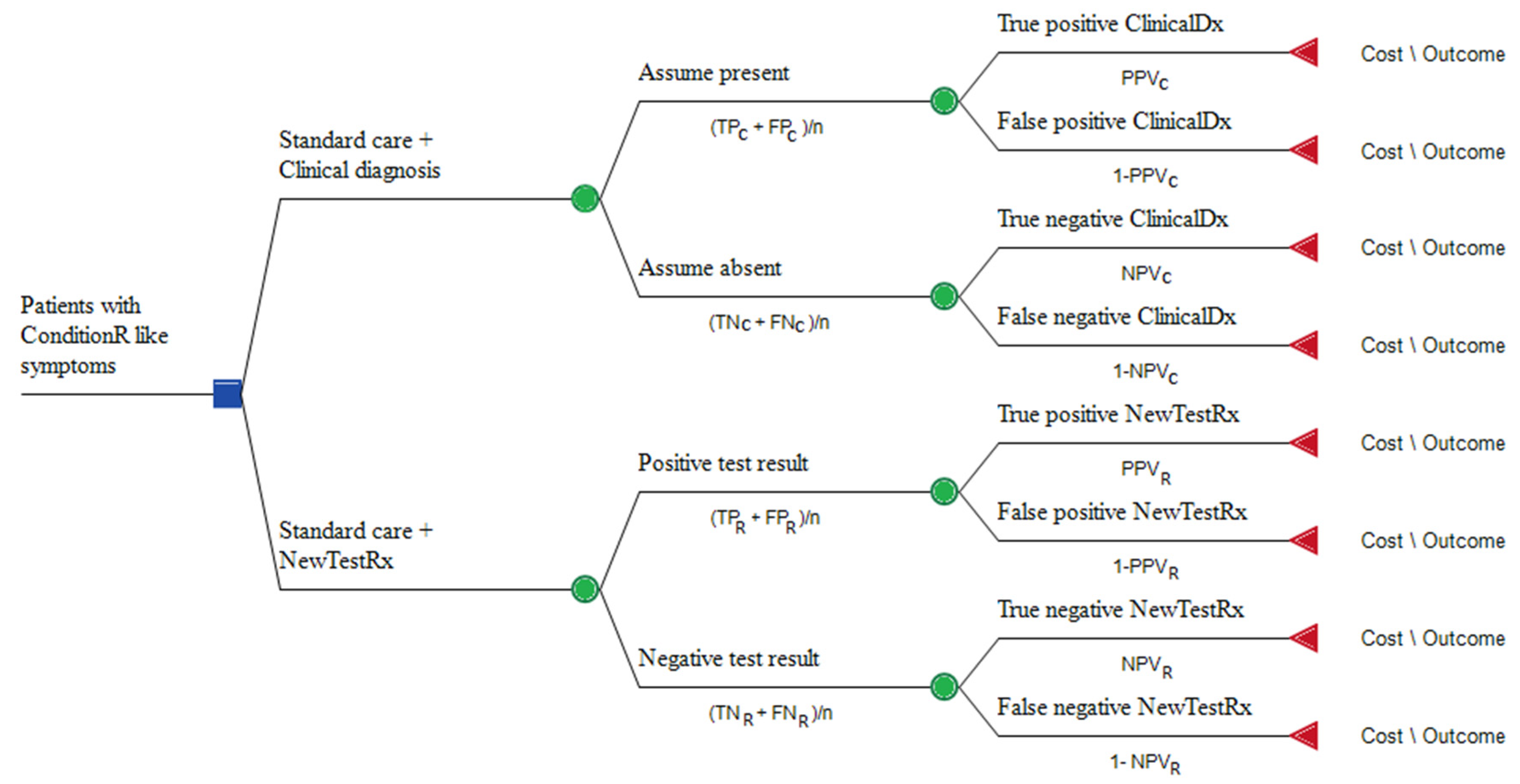

- Counts or proportions of test results: TP = number of true positive results, e.g. when a patient with ConditionR tests positive; FP = number of false positive results, e.g. when a patient without ConditionR tests positive; TN = number of true negative results, e.g. when a patient without ConditionR tests negative; FN = number of false negative results, e.g. when a patient with ConditionR tests negative.

- Sensitivity: the proportion of people with a condition who test positive = TP/(TP + FN).

- Specificity: the proportion of people who do not have the condition and who test negative = TN/(TN + FP).

- Positive predictive value (PPV): the proportion of people who test positive and actually have the condition, in the population being tested = TP/(TP + FP).

- Negative predictive value (NPV): the proportion of people who test negative and do not actually have the condition, in the population being tested = TN/(TN + FN).
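The four measures defined above follow directly from the counts in the 2×2 table. As a minimal sketch, the Python below computes them from TP, FP, TN, and FN. The class name `TwoByTwo` and the example counts are illustrative assumptions, not data from any study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts from a diagnostic accuracy 2x2 table (hypothetical container)."""
    tp: int  # condition present, test positive
    fp: int  # condition absent, test positive
    tn: int  # condition absent, test negative
    fn: int  # condition present, test negative

    def sensitivity(self) -> float:
        # Proportion of people with the condition who test positive
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Proportion of people without the condition who test negative
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # Proportion of positive results that are true positives
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # Proportion of negative results that are true negatives
        return self.tn / (self.tn + self.fn)


# Made-up counts for illustration: 100 people with the condition, 900 without
counts = TwoByTwo(tp=90, fp=45, tn=855, fn=10)
for name in ("sensitivity", "specificity", "ppv", "npv"):
    print(f"{name}: {getattr(counts, name)():.3f}")
```

Note that PPV and NPV computed this way apply only to a population with the same prevalence as the study sample (here 10%).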

9.5. Uses and Abuses of Diagnostic Accuracy Statistics

- For clinical use. A test that is accurate enough to use in a high prevalence scenario may not be accurate enough to be useful in a low prevalence scenario.
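To illustrate why prevalence matters, the sketch below applies Bayes' theorem to a fixed sensitivity and specificity at two prevalences. The function name `predictive_values` and all numbers are assumptions chosen for illustration. It also assumes that sensitivity and specificity stay constant across settings, which may not hold in practice.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a test used in a population with the given prevalence."""
    tp = sensitivity * prevalence              # expected true positive fraction
    fn = (1 - sensitivity) * prevalence        # expected false negative fraction
    tn = specificity * (1 - prevalence)        # expected true negative fraction
    fp = (1 - specificity) * (1 - prevalence)  # expected false positive fraction
    return tp / (tp + fp), tn / (tn + fn)


# Same hypothetical test (sensitivity 0.90, specificity 0.95) in two settings
for prevalence in (0.30, 0.01):
    ppv, npv = predictive_values(0.90, 0.95, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.2f}, NPV {npv:.3f}")
```

With these illustrative inputs, most positive results are true positives at 30% prevalence, whereas at 1% prevalence most positive results are false positives.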

9.6. The Tradeoff between Sensitivity and Specificity: Setting the Threshold for Classifying a Test Result

9.7. Some Tips on Statistical Analysis

1) When planning clinical studies it is good practice (and required by many funders) to estimate the minimum sample size that will allow the desired effect to be observed in the population of interest. The optimal sample size depends, amongst other things, on the acceptable risks of being wrong. For diagnostic accuracy studies, the risk of being wrong might be expressed as a confidence interval around a diagnostic accuracy statistic such as sensitivity. For studies designed to detect a difference in clinical outcomes, the risks of being wrong might be defined by (i) the P-value for accepting a result as statistically significant (the risk of a false positive conclusion), and (ii) the statistical power of the study (the risk of a false negative conclusion). There are applications that can help with the sample size calculations such as G*Power (https://stats.idre.ucla.edu/other/gpower/), which is free and covers a large range of designs (many relevant for clinical utility studies), but is not ideal for diagnostic accuracy studies [39]. JMP (https://www.jmp.com/en_gb/home.html) is more comprehensive but not free [40]. Hajian-Tilaki provides simple explanations and tables to calculate sample size for diagnostic accuracy studies [41]. Choosing the optimal sample size is often difficult and the logistical and financial implications can be large, so it is wise to consult a statistician when planning the study.

2) Sometimes the outputs of the test are not binary. Also, test results can be undetermined or inconclusive, for example if they are just above or below the threshold that separates “positives” from “negatives”. Shinkins and co-authors provide guidelines on how test results should be analyzed and reported when they are invalid (result missing or uninterpretable) or valid (interpretable but near to the threshold) [42]. As a rule of thumb, all results should be reported and, when possible, included in the analysis, for example with sensitivity analyses to assess the impacts of missing, invalid, and borderline results on the outcome.

3) When there is no perfectly accurate test to use as the gold standard, an imperfect reference standard must be used. The methods used to take account of the biases introduced by using an imperfect reference standard have recently been reviewed [43].

4) Studies reporting accuracy measures, such as sensitivity and specificity, should be replicated in a different population. This is called external validation [44]. External validation is particularly important for studies which select a subset of multiple biomarkers, as the selection results may be significantly different in different populations. Furthermore, estimates of sensitivity and specificity in the sample population where the biomarker selection has been optimized are likely to be optimistic estimates of the test accuracy.

5) Sensitivity and specificity may be difficult to translate directly into decisions about patient treatment, for which information presented in the form of probabilities of disease after a positive or a negative test result may be more useful. Two online interactive tools have been developed to clarify the relationship between pre-test (prevalence) and post-test probabilities of disease. Probabilities of disease can then be compared with decision thresholds above and below which different treatment decisions may be indicated [33].

9.8. Standards for Reporting Diagnostic Accuracy Studies

10. Clinical Utility

- It cannot be delivered quickly and to a high standard in real-life settings (e.g., in complex healthcare settings).

- Test results do not influence clinical decision-making or patient outcomes (e.g., the test is sensitive but there is no effective treatment for the condition).

10.1. Developing Evidence on the Clinical Utility of a Diagnostic Test

- What is the Aim for the test?

- What is the Setting in which the test will be used?

- What is the Population? How will participants be recruited: practicalities as well as inclusion and exclusion criteria?

- What is the Index (new test) and its care pathway? How will the test be delivered; who will be told the results and when; how will this affect subsequent treatment?

- What is the Comparison or reference test and its care pathway? Is a test currently available? If not, what would current practice be?

- What Outcome measures will be used to assess clinical utility? In which way might the new test provide advantages over current practice? How will these be measured and analyzed?

- Over what length of Time are the outcomes measured?

10.2. Study Designs for Assessing the Full Pathway Clinical Utility of a Diagnostic Test

10.3. Randomized Controlled Trials

10.4. Standards for Reporting Clinical Utility Trials

10.5. Studies to Consider Carrying out alongside a Clinical Utility Study

11. Health Economic Studies

11.1. Developing Evidence on Value for Money and Affordability

11.2. Decision Analysis

11.3. Cost Minimization Analysis

11.4. Cost-consequences Analysis

11.5. Budget Impact Analysis

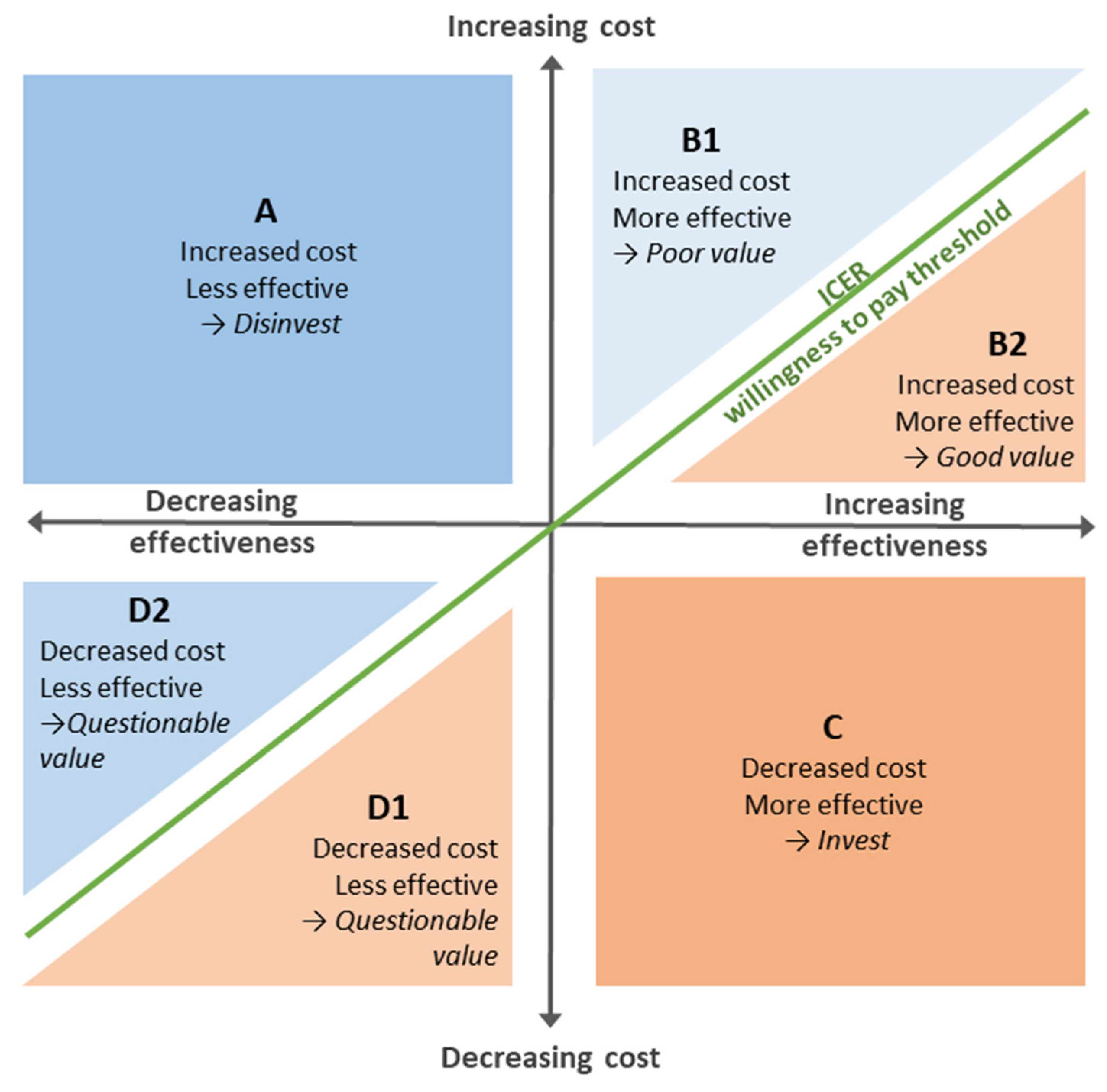

11.6. Cost-effectiveness Analysis

- A: Products that cost more, but are less effective are mapped to this quadrant. The rational decision about investing in these products is clearly to invest elsewhere.

- B: Products that cost more, and are more effective need to be assessed for the value that would be provided were they to be adopted. The green diagonal line shows the threshold above which the product would be considered poor value (B1), and below which it would be considered good value (B2). Because there are uncertainties in health economic studies, the threshold line is in reality broader and fuzzier than shown, and thus assessing the value of products near the line requires careful judgement.

- C: Products that cost less while being more effective make easy investment decisions since they clearly provide better value than the alternative.

- D: Products that cost less while being less effective require a value judgement about the benefit of the savings relative to the loss in effectiveness. Products below the diagonal green line (D1) may provide an opportunity for savings, while those above the line may not be good value.
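The quadrant logic above can be sketched as a small classifier. This assumes the plane is drawn with the difference in effectiveness on the horizontal axis and the difference in cost on the vertical axis, and that the diagonal line passes through the origin with slope equal to a willingness-to-pay threshold. The function name, the threshold of 20,000 per unit of effect, and the example values are hypothetical. Because the real threshold is fuzzy, results near the line should not be read as clear-cut.

```python
def classify_quadrant(delta_cost: float, delta_effect: float, threshold: float) -> str:
    """Map (incremental cost, incremental effect) to the quadrant labels used above.

    `threshold` is the slope of the diagonal line (cost per unit of effect).
    Points exactly on an axis or on the line are assigned arbitrarily.
    """
    on_or_below_line = delta_cost <= threshold * delta_effect
    if delta_cost > 0 and delta_effect <= 0:
        return "A"  # costs more, no more effective
    if delta_cost > 0 and delta_effect > 0:
        return "B2" if on_or_below_line else "B1"  # good value vs. poor value
    if delta_cost <= 0 and delta_effect >= 0:
        return "C"  # costs no more, at least as effective
    # Remaining case: costs less and is less effective
    return "D1" if on_or_below_line else "D (above line)"


examples = [(500, -0.1), (5000, 0.1), (1000, 0.1), (-500, 0.1), (-5000, -0.1), (-500, -0.1)]
for delta_cost, delta_effect in examples:
    label = classify_quadrant(delta_cost, delta_effect, threshold=20_000)
    print(delta_cost, delta_effect, label)
```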

11.7. Assessing Uncertainty in Health Economics Studies

1) Should the technology be adopted on the basis of current evidence and its uncertainty surrounding clinical and economic outcomes?

2) Is further evidence needed in order to support this decision now, and in the future?

11.8. Value of Information Analysis (VOI)

11.9. Standards for Reporting Health Economics Studies

12. Developing Evidence on Digital Health Devices and Data-driven Technologies

12.1. Evidence Tiers for Digital Health Devices

12.2. Code of Conduct for Data-driven Technologies

- Understand users, their needs and the context.

- Define the outcome and how the technology will contribute to it.

- Use data that is in line with appropriate guidelines for the purpose for which it is being used.

- Be fair, transparent and accountable about what data is being used.

- Make use of open standards.

- Be transparent about the limitations of the data used and algorithms deployed.

- Show what type of algorithm is being developed or deployed, the ethical examination of how the data is used, how its performance will be validated and how it will be integrated into health and care provision. Demonstrate the learning methodology of the algorithm being built. Aim to show in a clear and transparent way how outcomes are validated.

- Generate evidence of effectiveness for the intended use and value for money.

- Make security integral to the design.

- Define the commercial strategy.

12.3. Similarities between the Evidence Development Strategies for IVDs and DHTs

13. Product Management

- Project planning and management: know what must be delivered and when, identify where the dependencies are and determine the critical path of the project, which helps to define the shortest timeframe in which the project can be completed. Product management should include appropriate evaluation of potential risks and issues, change and resources management.

- Stakeholder strategy: identify the stakeholders in the project together with their requirements, and plan your communications with them and how they will be involved in the project.

- Financial planning: plan how you will fund the evaluation as well as the development of the new device. Clinical studies especially can be very expensive but there are many funding bodies which can financially support your research. In the UK, the NIHR In Vitro Diagnostic Co-operatives [65] can help you to develop a successful evidence development strategy that can feed into a grant application.

- Regulations: at an early stage in product development, identify the relevant regulators and what evidence is required for marketing approval. In the European Union the new Medical Devices Regulation (MDR, Council Regulation 2017/745) will be enforced from 26 May 2020 [66]. For IVDs the date of enforcement is 26 May 2022 (IVDR, Council Regulation 2017/746) [1]. Among other important changes, the MDR and IVDR will require increased evidence on clinical utility. In the US the main regulator for market access for medical devices is the Food and Drug Administration, which is also increasing the requirements for evidence on clinical utility [67].

- IP protection and publication strategy: consider filing patents before sharing information with the wider community through scientific posters and articles. Note that scientific publications are important to obtain funding, buy-in from clinicians, and of course to facilitate adoption in the later stages. So, timing of patents and publications should be carefully planned and pursued.

- Prevent waste: Review and mitigate avoidable sources of waste and failure in the evidence development strategy [68].
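The critical path mentioned under project planning can be found by taking, for each task, the latest finish time among its prerequisites. The sketch below does this with Python's standard `graphlib` module (Python 3.9 or later). The task names, durations in months, and dependencies are entirely hypothetical and are not a recommended schedule.

```python
from graphlib import TopologicalSorter


def critical_path(durations: dict, depends_on: dict):
    """Return (shortest project duration, tasks on the critical path)."""
    finish, previous = {}, {}
    # static_order() yields every task after all of its prerequisites
    for task in TopologicalSorter(depends_on).static_order():
        prerequisites = depends_on.get(task, ())
        start = max((finish[p] for p in prerequisites), default=0)
        finish[task] = start + durations[task]
        # Remember which prerequisite finished last (it constrains this task)
        previous[task] = max(prerequisites, key=lambda p: finish[p], default=None)
    task = max(finish, key=finish.get)  # task that finishes last
    total, path = finish[task], []
    while task is not None:
        path.append(task)
        task = previous[task]
    return total, path[::-1]


# Hypothetical evidence development plan (durations in months)
durations = {
    "care_pathway_analysis": 2,
    "analytical_validity": 4,
    "early_economic_model": 3,
    "clinical_validity_study": 9,
    "health_economic_study": 4,
}
depends_on = {
    "early_economic_model": ["care_pathway_analysis"],
    "clinical_validity_study": ["analytical_validity", "care_pathway_analysis"],
    "health_economic_study": ["clinical_validity_study", "early_economic_model"],
}
total_months, path = critical_path(durations, depends_on)
print(total_months, " -> ".join(path))
```

Tasks off the critical path have slack, so a delay to them need not delay the project, whereas any delay to a task on the path extends the overall timeframe.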

14. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Regulation (EU) 2017/746 of the European Parliament and of the Council of 5 April 2017 on in vitro diagnostic medical devices and repealing Directive 98/79/EC and Commission Decision 2010/227/EU. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32017R0746 (accessed on 2 March 2020).

- NICE Evidence Standards Framework for Digital Health Technologies. Available online: https://www.nice.org.uk/about/what-we-do/our-programmes/evidence-standards-framework-for-digital-health-technologies (accessed on 2 March 2020).

- DHSC Code of Conduct for Data-Driven Health and Care Technology. Available online: https://www.gov.uk/government/publications/code-of-conduct-for-data-driven-health-and-care-technology (accessed on 2 March 2020).

- NICE Diagnostics Assessment Programme Manual. Available online: https://www.nice.org.uk/Media/Default/About/what-we-do/NICE-guidance/NICE-diagnostics-guidance/Diagnostics-assessment-programme-manual.pdf (accessed on 2 March 2020).

- Soares, M.O.; Walker, S.; Palmer, S.J.; Sculpher, M.J. Establishing the value of Diagnostic and Prognostic Tests in Health Technology Assessment. Med. Decis. Making 2018, 38, 495–508.

- Bossuyt, P.M.; Irwig, L.; Craig, J.; Glasziou, P. Comparative accuracy: Assessing new tests against existing diagnostic pathways. BMJ 2006, 332, 1089–1092.

- Lijmer, J.G.; Leeflang, M.; Bossuyt, P.M. Proposals for a phased evaluation of medical tests. Med. Decis. Making 2009, 29, E13–E21.

- NICE Office for Market Access. Available online: https://www.nice.org.uk/about/what-we-do/life-sciences/office-for-market-access (accessed on 2 March 2020).

- Ferrante di Ruffano, L.; Hyde, C.J.; McCaffery, K.J.; Bossuyt, P.M.; Deeks, J.J. Assessing the value of diagnostic tests: A framework for designing and evaluating trials. BMJ 2012, 344, e686.

- Wurcel, V.; Cicchetti, A.; Garrison, L.; Kip, M.; Koffijberg, H.; Kolbe, A.; Leeflang, M.; Merlin, T.; Mestre-Ferrandiz, J.; Oortwijn, W. The value of diagnostic information in personalised healthcare: A comprehensive concept to facilitate bringing this technology into healthcare systems. Public Health Genomics 2019, 22, 8–15.

- Kinsman, L.; Rotter, T.; James, E.; Snow, P.; Willis, J. What is a clinical pathway? Development of a definition to inform the debate. BMC Med. 2010, 8, 31.

- Lawal, A.K.; Rotter, T.; Kinsman, L.; Machotta, A.; Ronellenfitsch, U.; Scott, S.D.; Goodridge, D.; Plishka, C.; Groot, G. What is a clinical pathway? Refinement of an operational definition to identify clinical pathway studies for a Cochrane systematic review. BMC Med. 2016, 14, 35.

- Schrijvers, G.; van Hoorn, A.; Huiskes, N. The care pathway: Concepts and theories: An introduction. Int. J. Integr. Care 2012, 12, e192.

- Bossuyt, P.M.; Reitsma, J.B.; Linnet, K.; Moons, K.G. Beyond diagnostic accuracy: The clinical utility of diagnostic tests. Clin. Chem. 2012, 58, 1636–1643.

- ISO 5807:1985 Information Processing—Documentation Symbols and Conventions for Data, Program and System Flowcharts, Program Network Charts and System Resources Charts. Available online: https://www.iso.org/standard/11955.html (accessed on 2 March 2020).

- Monaghan, P.J.; Robinson, S.; Rajdl, D.; Bossuyt, P.M.M.; Sandberg, S.; St John, A.; O’Kane, M.; Lennartz, L.; Röddiger, R.; Lord, S.J.; et al. Practical guide for identifying unmet clinical needs for biomarkers. EJIFCC 2018, 29, 129–137.

- Hanson, C.L.; Osberg, M.; Brown, J.; Durham, G.; Chin, D.P. Conducting patient-pathway analysis to inform programming of tuberculosis services: Methods. J. Infect. Dis. 2017, 216, S679–S685.

- Abel, L.; Shinkins, B.; Smith, A.; Sutton, A.J.; Sagoo, G.S.; Uchegbu, I.; Allen, A.J.; Graziadio, S.; Moloney, E.; Yang, Y.; et al. Early economic evaluation of diagnostic technologies: Experiences of the NIHR diagnostic evidence co-operatives. Med. Decis. Making 2019, 39, 857–866.

- St John, A.; Price, C.P. Economic evidence and point-of-care testing. Clin. Biochem. Rev. 2013, 34, 61–74.

- Simoens, S. Health economic assessment: A methodological primer. Int. J. Environ. Res. Public Health 2009, 6, 2950–2966.

- Girling, A.; Lilford, R.; Cole, A.; Young, T. Headroom approach to device development: Current and future directions. Int. J. Technol. Assess. Health Care 2015, 31, 331–338.

- Boyd, K.A. Employing Early Decision Analytic Modelling to Inform Economic Evaluation in Health Care: Theory & Practice. Ph.D. Thesis, University of Glasgow, Glasgow, UK, 2012. Available online: http://theses.gla.ac.uk/3685/ (accessed on 2 March 2020).

- FDA Bioanalytical Method Validation: Guidance for Industry. United States of America: Food and Drug Administration, 2018. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/bioanalytical-method-validation-guidance-industry (accessed on 2 March 2020).

- Magnusson, B.; Örnemark, U. Eurachem Guide: The Fitness for Purpose of Analytical Methods — A Laboratory Guide to Method Validation and Related Topics. 2nd Ed. 2014. Available online: https://www.eurachem.org/images/stories/Guides/pdf/MV_guide_2nd_ed_EN.pdf (accessed on 2 March 2020).

- WHO Technical Guidance Series for WHO Prequalification—Diagnostic Assessment: Guidance on Test method validation for in vitro diagnostic medical devices. World Health Organisation; 2017. Available online: https://apps.who.int/medicinedocs/documents/s23474en/s23474en.pdf (accessed on 9 March 2020).

- Borsci, S.; Uchegbu, I.; Buckle, P.; Ni, Z.; Walne, S.; Hanna, G.B. Designing medical technology for resilience: Integrating health economics and human factors approaches. Expert Rev. Med. Devices 2018, 15, 15–26.

- Borsci, S.; Buckle, P.; Walne, S. Is the LITE version of the usability metric for user experience (UMUX-LITE) a reliable tool to support rapid assessment of new healthcare technology? Appl. Ergon. 2020, 84, 103007.

- Shea, B.J.; Reeves, B.C.; Wells, G.; Thuku, M.; Hamel, C.; Moran, J.; Moher, D.; Tugwell, P.; Welch, V.; Kristjansson, E.; et al. AMSTAR 2: A critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017, 358, j4008.

- Knottnerus, J.A.; Muris, J.W. Assessment of the accuracy of diagnostic tests: The cross-sectional study. J. Clin. Epidemiol. 2003, 56, 1118–1128.

- Rutjes, A.W.; Reitsma, J.B.; Vandenbroucke, J.P.; Glas, A.S.; Bossuyt, P.M. Case-control and two-gate designs in diagnostic accuracy studies. Clin. Chem. 2005, 51, 1335–1341.

- Leeflang, M.M.; Bossuyt, P.M.; Irwig, L. Diagnostic test accuracy may vary with prevalence: Implications for evidence-based diagnosis. J. Clin. Epidemiol. 2009, 62, 5–12.

- Lendrem, B.C.; Lendrem, D.W.; Pratt, A.G.; Naamane, N.; McMeekin, P.; Ng, W.F.; Allen, A.J.; Power, M.; Isaacs, J.D. Between a ROC and a hard place: Teaching prevalence plots to understand real world biomarker performance in the clinic. Pharm. Stat. 2019, 18, 632–635.

- Fanshawe, T.R.; Power, M.; Graziadio, S.; Ordóñez-Mena, J.M.; Simpson, J.; Allen, J. Interactive visualisation for interpreting diagnostic test accuracy study results. BMJ Evid. Based Med. 2018, 23, 13–16.

- The accuracy and utility of diagnostic tests: How information from a test can usefully inform decisions. Available online: https://micncltools.shinyapps.io/ClinicalAccuracyAndUtility/ (accessed on 2 March 2020).

- Bayesian Clinical Diagnostic Model. Kennis Research. Available online: https://kennis-research.shinyapps.io/Bayes-App/ (accessed on 2 March 2020).

- Receiver Operating Characteristic (ROC) Curves. Kennis Research. Available online: https://kennis-research.shinyapps.io/ROC-Curves/ (accessed on 2 March 2020).

- Pewsner, D.; Battaglia, M.; Minder, C.; Marx, A.; Bucher, H.C.; Egger, M. Ruling a diagnosis in or out with "SpPIn" and "SnNOut": A note of caution. BMJ 2004, 329, 209–213.

- Pepe, M.S.; Janes, H.; Li, C.I.; Bossuyt, P.M.; Feng, Z.; Hilden, J. Early-phase studies of biomarkers: What target sensitivity and specificity values might confer clinical utility? Clin. Chem. 2016, 62, 737–742.

- G*POWER. UCLA Institute for Digital Research & Education: Statistical Consulting. Available online: https://stats.idre.ucla.edu/other/gpower/ (accessed on 2 March 2020).

- JMP. Statistical Discovery™. SAS. Available online: https://www.jmp.com/en_gb/home.html (accessed on 2 March 2020).

- Hajian-Tilaki, K. Sample size estimation in diagnostic test studies of biomedical informatics. J. Biomed. Inform. 2014, 48, 193–204.

- Shinkins, B.; Thompson, M.; Mallett, S.; Perera, R. Diagnostic accuracy studies: How to report and analyse inconclusive test results. BMJ 2013, 346, f2778.

- Umemneku Chikere, C.M.; Wilson, K.; Graziadio, S.; Vale, L.; Allen, A.J. Diagnostic test evaluation methodology: A systematic review of methods employed to evaluate diagnostic tests in the absence of gold standard — An update. PLoS ONE 2019, 14, e0223832.

- Van den Bruel, A.; Aertgeerts, B.; Buntinx, F. Results of diagnostic accuracy studies are not always validated. J. Clin. Epidemiol. 2006, 59, 559–566.

- STARD 2015: An Updated List of Essential Items for Reporting Diagnostic Accuracy Studies. Available online: https://www.equator-network.org/reporting-guidelines/stard/ (accessed on 2 March 2020).

- Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD): The TRIPOD Statement. Available online: https://www.equator-network.org/reporting-guidelines/tripod-statement/ (accessed on 2 March 2020).

- FDA. Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests — Guidance for Industry and FDA Staff. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/statistical-guidance-reporting-results-studies-evaluating-diagnostic-tests-guidance-industry-and-fda (accessed on 2 March 2020).

- NICE Medical Technologies Evaluation Programme Methods Guide. Process and Methods [PMG33]. Available online: https://www.nice.org.uk/process/pmg33/chapter/introduction (accessed on 2 March 2020).

- Bossuyt, P.M.; McCaffery, K. Additional patient outcomes and pathways in evaluations of testing. Med. Decis. Making 2009, 29, E30–E38.

- Glasziou, P.; Chalmers, I.; Altman, D.G.; Bastian, H.; Boutron, I.; Brice, A.; Jamtvedt, G.; Farmer, A.; Ghersi, D.; Groves, T.; et al. Taking healthcare interventions from trial to practice. BMJ 2010, 341, c3852.

- Ferrante di Ruffano, L.; Dinnes, J.; Taylor-Phillips, S.; Davenport, C.; Hyde, C.; Deeks, J.J. Research waste in diagnostic trials: A methods review evaluating the reporting of test-treatment interventions. BMC Med. Res. Methodol. 2017, 17, 32.

- Biomarker-Guided Trial Designs (BiGTeD): An Online Tool to Help Develop Personalised Medicine. Available online: http://bigted.org/ (accessed on 2 March 2020).

- Bland, J.M. Cluster randomised trials in the medical literature: Two bibliometric surveys. BMC Med. Res. Methodol. 2004, 4, 21.

- Grayling, M.J.; Wason, J.M.; Mander, A.P. Stepped wedge cluster randomized controlled trial designs: A review of reporting quality and design features. Trials 2017, 18, 33.

- Zapf, A.; Stark, M.; Gerke, O.; Ehret, C.; Benda, N.; Bossuyt, P.; Deeks, J.; Reitsma, J.; Alonzo, T.; Friede, T. Adaptive trial designs in diagnostic accuracy research. Stat. Med. 2019.

- Freidlin, B.; Jiang, W.; Simon, R. The cross-validated adaptive signature design. Clin. Cancer Res. 2010, 16, 691–698.

- Antoniou, M.; Kolamunnage-Dona, R.; Jorgensen, A.L. Biomarker-guided non-adaptive trial designs in phase II and phase III: A methodological review. J. Pers. Med. 2017, 7, 1.

- CONSORT 2010 Statement: Updated guidelines for reporting parallel group randomised trials. Available online: https://www.equator-network.org/reporting-guidelines/consort/ (accessed on 2 March 2020).

- TreeAge Pro 2019, R2. TreeAge Software, Williamstown, MA, USA. Available online: http://www.treeage.com (accessed on 2 March 2020).

- GeNIe Modeler BayesFusion: BayesFusion, LLC; 2020. Available online: https://www.bayesfusion.com/genie/ (accessed on 2 March 2020).

- Microsoft Visio Flowchart Maker & Diagram Software: Microsoft; 2020. Available online: https://products.office.com/en-gb/visio/flowchart-software (accessed on 2 March 2020).

- Briggs, A.; Claxton, K.; Sculpher, M. Decision Modelling for Health Economic Evaluation, 2nd ed.; Oxford University Press: Oxford, UK, 2006.

- Claxton, K.; Sculpher, M.; Drummond, M. A rational framework for decision making by the National Institute for Clinical Excellence (NICE). Lancet 2002, 360, 711–715.

- Husereau, D.; Drummond, M.; Petrou, S.; Carswell, C.; Moher, D.; Greenberg, D.; Augustovski, F.; Briggs, A.H.; Mauskopf, J.; Loder, E. Consolidated health economic evaluation reporting standards (CHEERS)—explanation and elaboration: A report of the ISPOR health economic evaluations publication guidelines good reporting practices task force. Value Health 2013, 16, 231–250.

- How We Support Medical Technology, Device and Diagnostic Companies. NIHR; 2020. Available online: https://www.nihr.ac.uk/partners-and-industry/industry/access-to-expertise/medtech.htm (accessed on 2 March 2020).

- Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices, amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC 2017. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32017R0745 (accessed on 2 March 2020).

- Overview of Device Regulation: US FDA, 2020. Available online: https://www.fda.gov/medical-devices/device-advice-comprehensive-regulatory-assistance/overview-device-regulation (accessed on 2 March 2020).

- Ioannidis, J.P.A.; Bossuyt, P.M.M. Waste, Leaks, and Failures in the Biomarker Pipeline. Clin. Chem. 2017, 63, 963–972.

| Question | Methods to Address the Question |
|---|---|
| Why is the device needed? | Articulating the value propositions¹ for investors, purchasers, providers, and patients²; care pathway analysis¹ |
| Will there be a market for it? | Market research including total available market, serviceable available market, and serviceable obtainable market |
| Could the product be commercially viable? | Early economic assessment¹ to estimate maximum price attainable and identify the information most important for reducing decision uncertainties; barriers to, and facilitators of, adoption, including market access |
| Does it work in the development lab? | Analytical validity studies¹ |
| Can it work in a clinical environment? | Clinical validity studies¹; human factors analysis and feasibility studies |
| Would it benefit the patient and/or the healthcare professionals? | Clinical utility studies¹ to establish safety, burdens and benefits to patients, and benefits to the healthcare system |
| How would it impact the healthcare system? | Cost-effectiveness analysis¹ (value for money); cost-consequences analysis¹ (impact of expenditure on clinical outcomes); budget impact analysis¹ (affordability within a given budget) |
| Will the product be commercially viable? | Evidence required by the regulator (e.g., equivalence, risks, safety); return on investment |
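As an illustration of the "value for money" question in the table above, cost-effectiveness results are commonly summarized as an incremental cost-effectiveness ratio (ICER): the extra cost of the new testing strategy divided by the extra health effect it delivers. The sketch below is a minimal example, not a method prescribed by this framework; the function name `icer` and all costs and effects are hypothetical.

```python
def icer(cost_new, cost_usual, effect_new, effect_usual):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    delta_cost = cost_new - cost_usual
    delta_effect = effect_new - effect_usual
    if delta_effect == 0:
        # With no difference in effect the ratio is undefined.
        raise ValueError("No difference in effect; ICER is undefined.")
    return delta_cost / delta_effect


# Hypothetical per-patient values: costs in pounds, effects in QALYs.
ratio = icer(cost_new=1500, cost_usual=1000, effect_new=8.5, effect_usual=8.25)
print(f"ICER: {ratio:.0f} per QALY gained")
```

The resulting ratio is usually compared against a willingness-to-pay threshold set by the payer. A negative ratio needs care in interpretation, because it can arise either from a cheaper and better strategy or from a costlier and worse one.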

| Characteristic | Description |
|---|---|
| Analytical sensitivity | The change in instrument response to changes in analyte concentration. |
| Analytical specificity | Ability of the method to determine an individual analyte in the presence of interferences. |
| Trueness | The agreement of the mean of an infinite number of repeated measurements with the reference value, measured with a confidence interval. |
| Analytical bias | The systematic over- or underestimation of the reference value, i.e., the error attributable to the method. |
| Precision | Describes how close the results of repeated measurements are to each other under controlled conditions. |
| Repeatability | Variability of results when measured in a single sample over a short time period. |
| Intermediate precision | Variability when repeating the test over multiple days, with different operators, and using different equipment. |
| Reproducibility | Variability of results between different laboratories. |
| Detection limits | The minimum and maximum concentrations of the analyte which can be detected by the test. |
| Quantification limits | The minimum and maximum quantities which can be accurately measured by the test. |
| Linearity | Ability of the test to give results which are directly proportional to the analyte concentration. |
| Range | The interval over which the test provides results with acceptable accuracy (often dictated by linearity). |
| Robustness | Ability of the test to withstand small variations in the method parameters, e.g., temperature or pH. |
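Two of the characteristics above, analytical bias and repeatability, can be estimated from a small set of replicate measurements of a sample with a known reference value. The sketch below is one common way to summarize them, assuming repeatability is expressed as a coefficient of variation (CV); the function name `bias_and_cv` and the replicate values are hypothetical.

```python
from statistics import mean, stdev


def bias_and_cv(measurements, reference_value):
    """Return (bias, CV%) for replicate measurements of one sample.

    bias: mean of the replicates minus the reference value.
    CV%:  sample standard deviation as a percentage of the mean.
    """
    average = mean(measurements)
    bias = average - reference_value
    cv_percent = 100 * stdev(measurements) / average
    return bias, cv_percent


# Hypothetical replicates measured on one sample over a short time period.
replicates = [5.1, 4.9, 5.2, 5.0, 5.3]
bias, cv = bias_and_cv(replicates, reference_value=5.0)
print(f"bias = {bias:+.2f}, repeatability CV = {cv:.1f}%")
```

Repeating the same calculation on data collected across days, operators, or laboratories would give corresponding estimates of intermediate precision and reproducibility.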

Population with high prevalence (population 1000; prevalence 50%; sensitivity 90%; specificity 80%)

| | Condition present | Condition absent | Totals |
|---|---|---|---|
| Test positive | 450 | 100 | 550 |
| Test negative | 50 | 400 | 450 |
| Totals | 500 | 500 | 1000 |

Positive predictive value: 82%. Negative predictive value: 89%.

Population with low prevalence (population 1000; prevalence 5%; sensitivity 90%; specificity 80%)

| | Condition present | Condition absent | Totals |
|---|---|---|---|
| Test positive | 45 | 190 | 235 |
| Test negative | 5 | 760 | 765 |
| Totals | 50 | 950 | 1000 |

Positive predictive value: 19%. Negative predictive value: 99%.
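The two populations above differ only in prevalence, yet the positive predictive value falls from 82% to 19%. A minimal sketch of the arithmetic, using the population, prevalence, sensitivity, and specificity values from the tables (the function name `predictive_values` is an illustrative choice):

```python
def predictive_values(prevalence, sensitivity, specificity, population=1000):
    """Build the 2x2 table counts and derive the predictive values."""
    condition_present = population * prevalence
    condition_absent = population - condition_present
    true_pos = condition_present * sensitivity   # present, test positive
    false_neg = condition_present - true_pos     # present, test negative
    true_neg = condition_absent * specificity    # absent, test negative
    false_pos = condition_absent - true_neg      # absent, test positive
    return {
        "true_pos": true_pos,
        "false_pos": false_pos,
        "false_neg": false_neg,
        "true_neg": true_neg,
        "ppv": true_pos / (true_pos + false_pos),
        "npv": true_neg / (true_neg + false_neg),
    }


high = predictive_values(prevalence=0.50, sensitivity=0.90, specificity=0.80)
low = predictive_values(prevalence=0.05, sensitivity=0.90, specificity=0.80)
print(f"High prevalence: PPV {high['ppv']:.0%}, NPV {high['npv']:.0%}")
print(f"Low prevalence:  PPV {low['ppv']:.0%}, NPV {low['npv']:.0%}")
```

At low prevalence, the false positives from the large condition-absent group outnumber the true positives, which is why the same test looks far less convincing when a positive result is returned.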

| Study Type | Design of Randomization and Comparison |
|---|---|
| Randomized controlled trial | Patients are randomized to have either the usual test and its management or the new test and its management. |
| Marker by treatment designs | Patients are tested, classified into groups such as test positive and test negative, and then randomized to treatment or control arms. The difference between the treatment and control groups in the test positive patients is then compared to the difference between the treatment and control groups in the test negative patients. |
| Biomarker strategy designs | Patients are randomized to usual care or to care informed by the test results. |
| Cluster-randomized designs | Study centers with groups of patients rather than individual patients are randomized to the usual or new testing strategy [53]. |
| Stepped wedge designs | The stepped wedge design is an adaptation of the cluster-randomized design in which the new testing strategy is introduced stepwise to a randomly chosen series of clusters over a number of periods of time [54]. |
| Adaptive designs | Adaptive designs allow changes to be made to a trial as information is collected [55,56,57]. |
| Umbrella designs | Several different biomarkers are measured and each determines a different care pathway. This allows several trials to be carried out in parallel. |
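To make the stepped wedge row above concrete, the sketch below generates a rollout schedule: clusters are placed in random order and switched from the usual to the new testing strategy one step at a time. It is a simplified illustration under stated assumptions (an all-control baseline period, clusters spread as evenly as possible across steps), not a substitute for a statistician-designed allocation; the function name and the site labels are hypothetical.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed=0):
    """Return {cluster: [0 or 1 per period]} for n_steps + 1 periods.

    0 = usual testing strategy, 1 = new testing strategy.
    Period 0 is an all-control baseline; once switched, a cluster stays switched.
    """
    rng = random.Random(seed)       # fixed seed so the allocation is reproducible
    order = list(clusters)
    rng.shuffle(order)              # random order of crossover
    n_periods = n_steps + 1
    schedule = {}
    for position, cluster in enumerate(order):
        # Assign each cluster a crossover period between 1 and n_steps.
        crossover = 1 + (position * n_steps) // len(order)
        schedule[cluster] = [int(period >= crossover) for period in range(n_periods)]
    return schedule


sites = ["Site A", "Site B", "Site C", "Site D", "Site E", "Site F"]
for site, periods in sorted(stepped_wedge_schedule(sites, n_steps=3).items()):
    print(site, periods)
```

Every cluster contributes data under both strategies, which is the practical appeal of the design when the new test cannot be withheld from some sites indefinitely.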

| Evidence Tier | Category of Digital Health Technology (DHT), and Required Supporting Evidence * |
|---|---|
| Tier 1 | DHTs with potential system benefits but no direct user benefits. Evidence of: credibility with UK health and social care professionals; relevance to current care pathways in the UK; acceptability with users; equalities considerations; accurate and reliable measurements (if relevant); accurate and reliable transmission of data (if relevant). |
| Tier 2 | DHTs which help users to understand healthy living and illnesses but are unlikely to have measurable user outcomes. Evidence for tier 1, and of: reliable information content; ongoing data collection to show usage of the DHT; ongoing data collection to show value of the DHT; quality and safeguarding. |
| Tier 3a | DHTs for preventing and managing diseases. They may be used alongside treatment and will likely have measurable user benefits. Evidence for tiers 1 and 2, and of: use of appropriate behavioral change techniques (if relevant); effectiveness for the intended use (compared to current best practice); value for money (compared to current best practice); budget impact; costs and non-monetary consequences; cost-utility (cost-effectiveness). |
| Tier 3b | DHTs with measurable user benefits, including tools used for treatment and diagnosis, as well as those influencing clinical management through active monitoring or calculation. It is possible DHTs in this tier will qualify as medical devices. |

| Evaluation Question | IVD Methodology | Maps to | DHT Tier |
|---|---|---|---|
| Does the device perform as expected in the development laboratory? | Analytical validity | ↔ | Tier 1 |
| Does the device perform as expected in the real world? | Clinical validity | ↔ | Tier 2 |
| Does the device provide value to patients and the healthcare system? | Clinical utility | ↔ | Tier 3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Graziadio, S.; Winter, A.; Lendrem, B.C.; Suklan, J.; Jones, W.S.; Urwin, S.G.; O’Leary, R.A.; Dickinson, R.; Halstead, A.; Kurowska, K.; Green, K.; Sims, A.; Simpson, A.J.; Power, H.M.; Allen, A.J. How to Ease the Pain of Taking a Diagnostic Point of Care Test to the Market: A Framework for Evidence Development. Micromachines 2020, 11, 291. https://doi.org/10.3390/mi11030291