Evaluation of Available Cognitive Tools Used to Measure Mild Cognitive Decline: A Scoping Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Protocol and Registration

2.2. Eligibility Criteria

2.3. Information Sources and Search

2.4. Selection of Sources of Evidence

2.5. Data Charting Process and Data Items

2.6. Synthesis of Results

3. Results

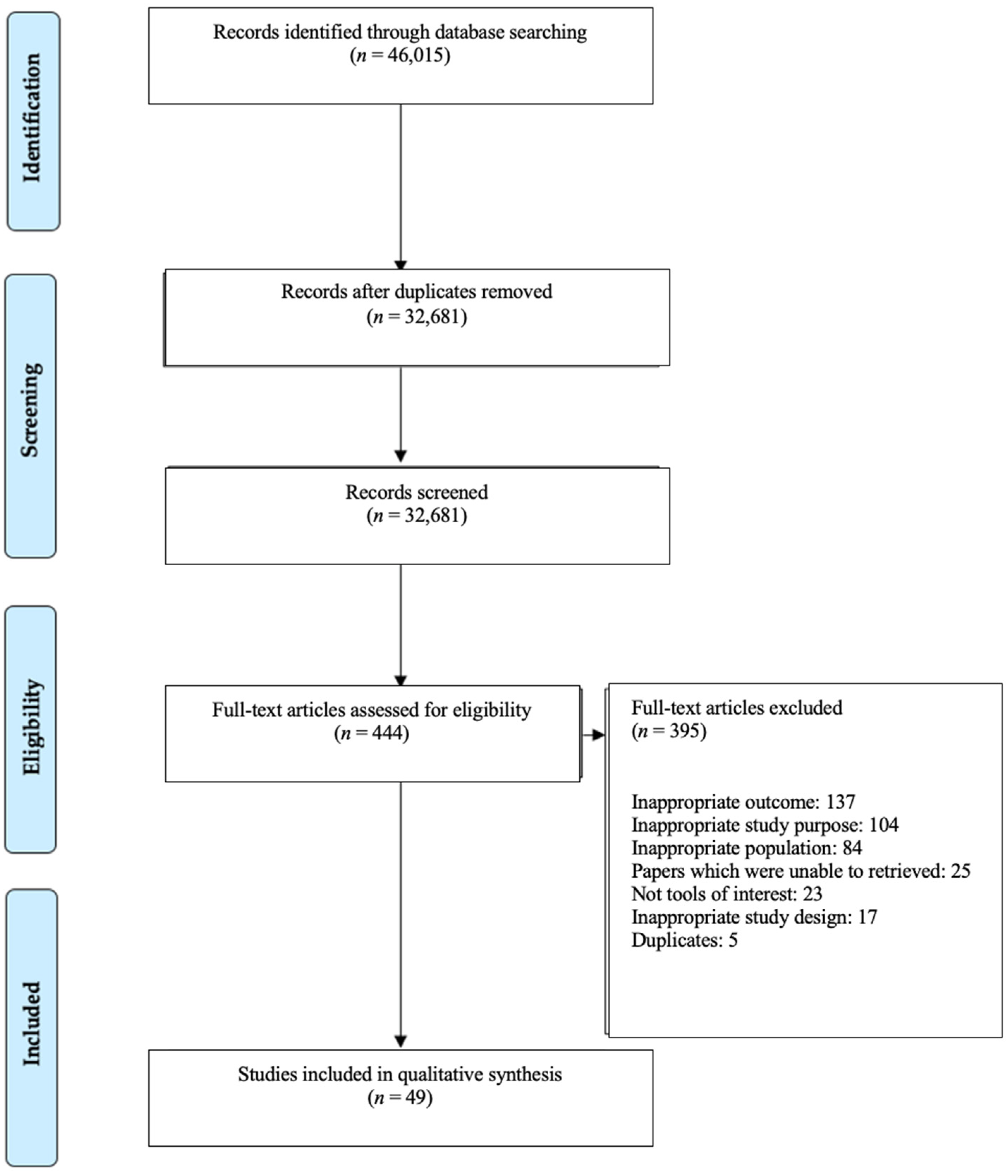

3.1. Study Selection

3.2. Study Characteristics

3.3. Cognitive Tools for Mild Cognitive Decline

3.4. Psychometric Performance of Included Cognitive Tools

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- World Health Organization. Dementia: A Public Health Priority; WHO: Geneva, Switzerland, 2012.

- Alzheimer’s Disease International. World Alzheimer Report 2019: Attitudes to Dementia; Alzheimer’s Disease International: London, UK, 2019.

- World Health Organization. Risk Reduction of Cognitive Decline and Dementia: WHO Guidelines; WHO: Geneva, Switzerland, 2019.

- Deary, I.J.; Corley, J.; Gow, A.; Harris, S.E.; Houlihan, L.M.; Marioni, R.; Penke, L.; Rafnsson, S.B.; Starr, J.M. Age-associated cognitive decline. Br. Med. Bull. 2009, 92, 135–152.

- Mild Cognitive Impairment. Available online: https://www.dementia.org.au/about-dementia-and-memory-loss/about-dementia/memory-loss/mild-cognitive-impairment (accessed on 8 October 2020).

- Knopman, D.; Petersen, R. Mild Cognitive Impairment and Mild Dementia: A Clinical Perspective. Mayo Clin. Proc. 2014, 89, 1452–1459.

- Mild Cognitive Impairment. Available online: https://memory.ucsf.edu/dementia/mild-cognitive-impairment (accessed on 2 November 2021).

- Cognitive Assessment Toolkit. Available online: https://www.alz.org/media/documents/cognitive-assessment-toolkit.pdf (accessed on 8 October 2020).

- Singh-Manoux, A.; Kivimaki, M.; Glymour, M.M.; Elbaz, A.; Berr, C.; Ebmeier, K.; Ferrie, J.E.; Dugravot, A. Timing of onset of cognitive decline: Results from Whitehall II prospective cohort study. BMJ 2012, 344, d7622.

- Cornelis, M.C.; Wang, Y.; Holland, T.; Agarwal, P.; Weintraub, S.; Morris, M.C. Age and cognitive decline in the UK Biobank. PLoS ONE 2019, 14, e0213948.

- Roberts, R.O.; Knopman, D.S.; Mielke, M.M.; Cha, R.H.; Pankratz, V.S.; Christianson, T.J.; Geda, Y.E.; Boeve, B.F.; Ivnik, R.J.; Tangalos, E.G.; et al. Higher risk of progression to dementia in mild cognitive impairment cases who revert to normal. Neurology 2013, 82, 317–325.

- Mild Cognitive Impairment. Available online: https://www.dementia.org.au/sites/default/files/helpsheets/Helpsheet-OtherInformation01-MildCognitiveImpairment_english.pdf (accessed on 27 August 2020).

- Safari, S.; Baratloo, A.; Elfil, M.; Negida, A. Evidence Based Emergency Medicine; Part 5 Receiver Operating Curve and Area under the Curve. Emergency 2016, 4, 111–113.

- Razak, M.A.A.; Ahmad, N.A.; Chan, Y.Y.; Kasim, N.M.; Yusof, M.; Ghani, M.K.A.A.; Omar, M.; Aziz, F.A.A.; Jamaluddin, R. Validity of screening tools for dementia and mild cognitive impairment among the elderly in primary health care: A systematic review. Public Health 2019, 169, 84–92.

- Cummings, J.; Aisen, P.S.; Dubois, B.; Frölich, L.; Jack, C.R., Jr.; Jones, R.W.; Morris, J.C.; Raskin, J.; Dowsett, S.A.; Scheltens, P. Drug development in Alzheimer’s disease: The path to 2025. Alzheimer’s Res. Ther. 2016, 8, 1–12.

- Mental Health: WHO Guidelines on Risk Reduction of Cognitive Decline and Dementia. Available online: https://www.who.int/mental_health/neurology/dementia/risk_reduction_gdg_meeting/en/ (accessed on 27 August 2020).

- Alice, M.; Lesley, M.W.; Amanda, P. Is Diet Quality a predictor of cognitive decline in older adults? A Systematic Review. Nutrients (manuscript in preparation).

- Snyder, P.J.; Jackson, C.E.; Petersen, R.C.; Khachaturian, A.S.; Kaye, J.; Albert, M.S.; Weintraub, S. Assessment of cognition in mild cognitive impairment: A comparative study. Alzheimers Dement. 2011, 7, 338–355.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 2018, 169, 467–473.

- EndNote X9. Available online: https://clarivate.libguides.com/endnote_training/endnote_online (accessed on 6 February 2020).

- Covidence. Systematic Review Software; Veritas Health Innovation: Melbourne, Australia, 2019.

- Parikh, R.; Mathai, A.; Parikh, S.; Sekhar, G.C.; Thomas, R. Understanding and using sensitivity, specificity and predictive values. Indian J. Ophthalmol. 2008, 56, 45.

- Greiner, M.; Pfeiffer, D.; Smith, R. Principles and practical application of the receiver-operating characteristic analysis for diagnostic tests. Prev. Vet. Med. 2000, 45, 23–41.

- Charernboon, T. Diagnostic accuracy of the Thai version of the Mini-Addenbrooke’s Cognitive Examination as a mild cognitive impairment and dementia screening test. Psychogeriatrics 2019, 19, 340–344.

- Chen, K.-L.; Xu, Y.; Chu, A.-Q.; Ding, D.; Liang, X.N.; Nasreddine, Z.S.; Dong, Q.; Hong, Z.; Zhao, Q.-H.; Guo, Q.-H. Validation of the Chinese version of Montreal Cognitive Assessment basic for screening mild cognitive impairment. J. Am. Geriatr. Soc. 2016, 64, 285–290.

- Chiu, H.F.; Zhong, B.L.; Leung, T.; Li, S.W.; Chow, P.; Tsoh, J.; Yan, C.; Xiang, Y.T.; Wong, M. Development and validation of a new cognitive screening test: The Hong Kong Brief Cognitive Test (HKBC). Int. J. Geriatr. Psychiatry 2018, 33, 994–999.

- Chiu, P.; Tang, H.; Wei, C.; Zhang, C.; Hung, G.U.; Zhou, W. NMD-12: A new machine-learning derived screening instrument to detect mild cognitive impairment and dementia. PLoS ONE 2019, 14, e0213430.

- Chu, L.; Ng, K.; Law, A.; Lee, A.; Kwan, F. Validity of the Cantonese Chinese Montreal Cognitive Assessment in Southern Chinese. Geriatr. Gerontol. Int. 2014, 15, 96–103.

- Fung, A.W.-T.; Lam, L.C.W. Validation of a computerized Hong Kong-Vigilance and memory test (HK-VMT) to detect early cognitive impairment in healthy older adults. Aging Ment. Health 2018, 24, 186–192.

- Huang, L.; Chen, K.; Lin, B.; Tang, L.; Zhao, Q.H.; Li, F.; Guo, Q.H. An abbreviated version of Silhouettes test: A brief validated mild cognitive impairment screening tool. Int. Psychogeriatr. 2018, 31, 849–856.

- Julayanont, P.; Tangwongchai, S.; Hemrungrojn, S.; Tunvirachaisakul, C.; Phanthumchinda, K.; Hongsawat, J.; Suwichanarakul, P.; Thanasirorat, S.; Nasreddine, Z.S. The Montreal Cognitive Assessment-Basic: A Screening Tool for Mild Cognitive Impairment in Illiterate and Low-Educated Elderly Adults. J. Am. Geriatr. Soc. 2015, 63, 2550–2554.

- Kandiah, N.; Zhang, A.; Bautista, D.; Silva, E.; Ting, S.K.S.; Ng, A.; Assam, P. Early detection of dementia in multilingual populations: Visual Cognitive Assessment Test (VCAT). J. Neurol. Neurosurg. Psychiatry 2015, 87, 156–160.

- Phua, A.; Hiu, S.; Goh, W.; Ikram, M.K.; Venketasubramanian, N.; Tan, B.Y.; Chen, C.L.-H.; Xu, X. Low Accuracy of Brief Cognitive Tests in Tracking Longitudinal Cognitive Decline in an Asian Elderly Cohort. J. Alzheimers Dis. 2018, 62, 409–416.

- Low, A.; Lim, L.; Lim, L.; Wong, B.; Silva, E.; Ng, K.P.; Kandiah, N. Construct validity of the Visual Cognitive Assessment Test (VCAT) – A cross-cultural language-neutral cognitive screening tool. Int. Psychogeriatr. 2019, 32, 141–149.

- Mellor, D.; Lewis, M.; McCabe, M.; Byrne, L.; Wang, T.; Wang, J.; Zhu, M.; Cheng, Y.; Yang, C.; Dong, S.; et al. Determining appropriate screening tools and cut-points for cognitive impairment in an elderly Chinese sample. Psychol. Assess. 2016, 28, 1345–1353.

- Ni, J.; Shi, J.; Wei, M.; Tian, J.; Jian, W.; Liu, J.; Liu, T.; Liu, B. Screening mild cognitive impairment by delayed story recall and instrumental activities of daily living. Int. J. Geriatr. Psychiatry 2015, 30, 888–890.

- Park, J.; Jung, M.; Kim, J.; Park, H.; Kim, J.R.; Park, J.H. Validity of a novel computerized screening test system for mild cognitive impairment. Int. Psychogeriatr. 2018, 30, 1455–1463.

- Feng, X.; Zhou, A.; Liu, Z.; Li, F.; Wei, C.; Zhang, G.; Jia, J. Validation of the Delayed Matching-to-Sample Task 48 (DMS48) in Elderly Chinese. J. Alzheimers Dis. 2018, 61, 1611–1618.

- Xu, F.; Ma, J.; Sun, F.; Lee, J.; Coon, D.W.; Xiao, Q.; Huang, Y.; Zhang, L.; Liang, Z.H. The Efficacy of General Practitioner Assessment of Cognition in Chinese Elders Aged 80 and Older. Am. J. Alzheimer’s Dis. Other Demen. 2019, 34, 523–529.

- Zainal, N.; Silva, E.; Lim, L.; Kandiah, N. Psychometric Properties of Alzheimer’s Disease Assessment Scale-Cognitive Subscale for Mild Cognitive Impairment and Mild Alzheimer’s Disease Patients in an Asian Context. Ann. Acad. Med. Singap. 2016, 45, 273–283.

- Apostolo, J.L.A.; Paiva, D.d.S.; da Silva, R.C.G.; dos Santos, E.J.F.; Schultz, T.J. Adaptation and validation into Portuguese language of the six-item cognitive impairment test (6CIT). Aging Ment. Health 2018, 22, 1184–1189.

- Avila-Villanueva, M.; Rebollo-Vazquez, A.; de Leon, J.M.R.-S.; Valenti, M.; Medina, M.; Fernández-Blázquez, M.A. Clinical relevance of specific cognitive complaints in determining mild cognitive impairment from cognitively normal states in a study of healthy elderly controls. Front. Aging Neurosci. 2016, 8, 233.

- Bartos, A.; Fayette, D. Validation of the Czech Montreal Cognitive Assessment for Mild Cognitive Impairment due to Alzheimer Disease and Czech Norms in 1,552 Elderly Persons. Dement. Geriatr. Cogn. Disord. 2018, 46, 335–345.

- Robin, H.; James, H.; Munro, C.; Lisa, D.; Barry, E.; Amy, F.; Laura, L.; Karin, M.; Dustin, W. Brief Cognitive Status Exam (BCSE) in older adults with MCI or dementia. Int. Psychogeriatr. 2015, 27, 221–229.

- Chipi, E.; Frattini, G.; Eusebi, P.; Mollica, A.; D’Andrea, K.; Russo, M.; Bernardelli, A.; Montanucci, C.; Luchetti, E.; Calabresi, P.; et al. The Italian version of cognitive function instrument (CFI): Reliability and validity in a cohort of healthy elderly. Neurol. Sci. 2018, 39, 111–118.

- Duro, D.; Freitas, S.; Tábuas-Pereira, M.; Santiago, B.; Botelho, M.A.; Santana, I. Discriminative capacity and construct validity of the Clock Drawing Test in Mild Cognitive Impairment and Alzheimer’s disease. Clin. Neuropsychol. 2018, 33, 1159–1174.

- Lemos, R.; Marôco, J.; Simões, M.; Santana, I. Construct and diagnostic validities of the Free and Cued Selective Reminding Test in the Alzheimer’s disease spectrum. J. Clin. Exp. Neuropsychol. 2016, 38, 913–924.

- Pirrotta, F.; Timpano, F.; Bonanno, L.; Nunnari, D.; Marino, S.; Bramanti, P.; Lanzafame, P. Italian Validation of Montreal Cognitive Assessment. Eur. J. Psychol. Assess. 2015, 31, 131–137.

- Rakusa, M.; Jensterle, J.; Mlakar, J. Clock Drawing Test: A Simple Scoring System for the Accurate Screening of Cognitive Impairment in Patients with Mild Cognitive Impairment and Dementia. Dement. Geriatr. Cogn. Disord. 2018, 45, 326–334.

- Ricci, M.; Pigliautile, M.; D’Ambrosio, V.; Ercolani, S.; Bianchini, C.; Ruggiero, C.; Vanacore, N.; Mecocci, P. The clock drawing test as a screening tool in mild cognitive impairment and very mild dementia: A new brief method of scoring and normative data in the elderly. Neurol. Sci. 2016, 37, 867–873.

- Serna, A.; Contador, I.; Bermejo-Pareja, F.; Mitchell, A.; Fernandez-Calvo, B.; Ramos, F.; Villarejo, A.; Benito-Leon, J. Accuracy of a Brief Neuropsychological Battery for the Diagnosis of Dementia and Mild Cognitive Impairment: An Analysis of the NEDICES Cohort. J. Alzheimers Dis. 2015, 48, 163–173.

- Van, E.; Van, J.; Jansen, I.; Van, M.; Floor, Z.; Bimmel, D.; Tjalling, R.; Andringa, G. The Test Your Memory (TYM) Test Outperforms the MMSE in the Detection of MCI and Dementia. Curr. Alzheimer Res. 2017, 14, 598–607.

- Vyhnálek, M.; Rubínová, E.; Marková, H.; Nikolai, T.; Laczó, J.; Andel, R.; Hort, J. Clock drawing test in screening for Alzheimer’s dementia and mild cognitive impairment in clinical practice. Int. J. Geriatr. Psychiatry 2016, 32, 933–939.

- Baerresen, K.M.; Miller, K.J.; Hanson, E.R.; Miller, J.S.; Dye, R.V.; Hartman, R.E.; Vermeersch, D.; Small, G.W. Neuropsychological tests for predicting cognitive decline in older adults. Neurodegener. Dis. Manag. 2015, 5, 191–201.

- Krishnan, K.; Rossetti, H.; Hynan, L.; Carter, K.; Falkowski, J.; Lacritz, L.; Munro, C.; Weiner, M. Changes in Montreal Cognitive Assessment Scores Over Time. Assessment 2016, 24, 772–777.

- Malek-Ahmadi, M.; Chen, K.; Davis, K.; Belden, C.; Powell, J.; Jacobson, S.A.; Sabbagh, M.N. Sensitivity to change and prediction of global change for the Alzheimer’s Questionnaire. Alzheimer’s Res. Ther. 2015, 7, 1.

- Mansbach, W.; Mace, R. A comparison of the diagnostic accuracy of the AD8 and BCAT-SF in identifying dementia and mild cognitive impairment in long-term care residents. Aging Neuropsychol. Cogn. 2016, 23, 609–624.

- Mitchell, J.; Dick, M.; Wood, A.; Tapp, A.; Ziegler, R. The Utility of the Dementia Severity Rating Scale in Differentiating Mild Cognitive Impairment and Alzheimer Disease from Controls. Alzheimer Dis. Assoc. Disord. 2015, 29, 222–228.

- Scharre, D.; Chang, S.; Nagaraja, H.; Vrettos, N.; Bornstein, R.A. Digitally translated Self-Administered Gerocognitive Examination (eSAGE): Relationship with its validated paper version, neuropsychological evaluations, and clinical assessments. Alzheimer’s Res. Ther. 2017, 9, 44.

- Townley, R.; Syrjanen, J.; Botha, H.; Kremers, W.; Aakre, J.A.; Fields, J.A.; MacHulda, M.M.; Graff-Radford, J.; Savica, R.; Jones, D.T.; et al. Comparison of the Short Test of Mental Status and the Montreal Cognitive Assessment Across the Cognitive Spectrum. Mayo Clin. Proc. 2019, 94, 1516–1523.

- Damin, A.; Nitrini, R.; Brucki, S. Cognitive Change Questionnaire as a method for cognitive impairment screening. Dement. Neuropsychol. 2015, 9, 237–244.

- Pinto, T.; Machado, L.; Costa, M.; Santos, M.; Bulgacov, T.M.; Rolim, A.P.P.; Silva, G.A.; Rodrigues-Júnior, A.L.; Sougey, E.B.; Ximenes, R.C. Accuracy and Psychometric Properties of the Brazilian Version of the Montreal Cognitive Assessment as a Brief Screening Tool for Mild Cognitive Impairment and Alzheimer’s Disease in the Initial Stages in the Elderly. Dement. Geriatr. Cogn. Disord. 2019, 47, 366–374.

- Radanovic, M.; Facco, G.; Forlenza, O. Sensitivity and specificity of a briefer version of the Cambridge Cognitive Examination (CAMCog-Short) in the detection of cognitive decline in the elderly: An exploratory study. Int. J. Geriatr. Psychiatry 2018, 33, 769–778.

- Clarnette, R.; O’Caoimh, R.; Antony, D.; Svendrovski, A.; Molloy, D.W. Comparison of the Quick Mild Cognitive Impairment (Qmci) screen to the Montreal Cognitive Assessment (MoCA) in an Australian geriatrics clinic. Int. J. Geriatr. Psychiatry 2016, 32, 643–649.

- Lee, S.; Ong, B.; Pike, K.; Mullaly, E.; Rand, E.; Storey, E.; Ames, D.; Saling, M.; Clare, L.; Kinsella, G.J. The Contribution of Prospective Memory Performance to the Neuropsychological Assessment of Mild Cognitive Impairment. Clin. Neuropsychol. 2016, 30, 131–149.

- Georgakis, M.K.; Papadopoulos, F.C.; Beratis, I.; Michelakos, T.; Kanavidis, P.; Dafermos, V.; Tousoulis, D.; Papageorgiou, S.; Petridou, E.T. Validation of TICS for detection of dementia and mild cognitive impairment among individuals characterized by low levels of education or illiteracy: A population-based study in rural Greece. Clin. Neuropsychol. 2017, 31, 61–71.

- Iatraki, E.; Simos, P.; Bertsias, A.; Duijker, G.; Zaganas, I.; Tziraki, C.; Vgontzas, A.; Lionis, C. Cognitive screening tools for primary care settings: Examining the ‘Test Your Memory’ and ‘General Practitioner assessment of Cognition’ tools in a rural aging population in Greece. Eur. J. Gen. Pract. 2017, 23, 172–179.

- Roman, F.; Iturry, M.; Rojas, G.; Barceló, E.; Buschke, H.; Allegri, R.F. Validation of the Argentine version of the Memory Binding Test (MBT) for Early Detection of Mild Cognitive Impairment. Dement. Neuropsychol. 2016, 10, 217–226.

- Freedman, M.; Leach, L.; Carmela, M.; Stokes, K.A.; Goldberg, Y.; Spring, R.; Nourhaghighi, N.; Gee, T.; Strother, S.C.; Alhaj, M.O.; et al. The Toronto Cognitive Assessment (TorCA): Normative data and validation to detect amnestic mild cognitive impairment. Alzheimer’s Res. Ther. 2018, 10, 65.

- Heyanka, D.; Scott, J.; Adams, R. Improving the Diagnostic Accuracy of the RBANS in Mild Cognitive Impairment with Construct-Consistent Measures. Appl. Neuropsychol. Adult 2013, 22, 32–41.

- Broche-Perez, Y.; Lopez-Pujol, H.A. Validation of the Cuban Version of Addenbrooke’s Cognitive Examination-Revised for Screening Mild Cognitive Impairment. Dement. Geriatr. Cogn. Disord. 2017, 44, 320–327.

- Yavuz, B.; Varan, H.; O’Caoimh, R.; Kizilarslanoglu, M.; Kilic, M.; Molloy, D.; Dogrul, R.T.; Karabulut, E.; Svendrovski, A.; Sagir, A.; et al. Validation of the Turkish Version of the Quick Mild Cognitive Impairment Screen. Am. J. Alzheimer’s Dis. Other Demen. 2017, 32, 145–156.

- Rosli, R.; Tan, M.; Gray, W.; Subramanian, P.; Chin, A.V. Cognitive assessment tools in Asia: A systematic review. Int. Psychogeriatr. 2015, 28, 189–210.

- Arevalo-Rodriguez, I.; Smailagic, N.; Roqué, M.; Ciapponi, A.; Sanchez-Perez, E.; Giannakou, A.; Pedraza, O.L.; Cosp, X.B.; Cullum, S. Mini-Mental State Examination (MMSE) for the detection of Alzheimer’s disease and other dementias in people with mild cognitive impairment (MCI). Cochrane Database Syst. Rev. 2015.

- Ciesielska, N.; Sokołowski, R.; Mazur, E.; Podhorecka, M.; Polak-Szabela, A.; Kędziora-Kornatowska, K. Is the Montreal Cognitive Assessment (MoCA) test better suited than the Mini-Mental State Examination (MMSE) in mild cognitive impairment (MCI) detection among people aged over 60? Meta-analysis. Psychiatr. Pol. 2016, 50, 1039–1052.

- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

- Alzheimer’s Disease. National Institute on Aging. Available online: https://www.nia.nih.gov/health/alzheimers-disease-fact-sheet (accessed on 27 October 2020).

- What Is Mild Cognitive Impairment? Available online: https://www.nia.nih.gov/health/what-mild-cognitive-impairment (accessed on 8 October 2020).

- Csukly, G.; Sirály, E.; Fodor, Z.; Horváth, A.; Salacz, P.; Hidasi, Z.; Csibri, É.; Rudas, G.; Szabó, Á. The Differentiation of Amnestic Type MCI from the Non-Amnestic Types by Structural MRI. Front. Aging Neurosci. 2016, 8, 52.

- Ghose, S.; Das, S.; Poria, S.; Das, T. Short test of mental status in the detection of mild cognitive impairment in India. Indian J. Psychiatry 2019, 61, 184–191.

| Criteria * | Interpretation | Range (%) |
|---|---|---|
| Sn and Sp | Excellent | 91–100 |
| | Good | 76–90 |
| | Fair | 50–75 |
| | Poor | <50 |
| AUC | Excellent | 91–100 |
| | Good | 81–90 |
| | Fair | 71–80 |
| | Poor | <70 |
| PPV and NPV | Excellent | 91–100 |
| | Good | 76–90 |
| | Fair | 50–75 |
| | Poor | <50 |
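The interpretation bands above can be applied mechanically when tabulating results. The following Python sketch is illustrative only: the names `interpret`, `BANDS` and `LABELS` are hypothetical, every metric is assumed to be expressed as a percentage (an AUC of 0.85 becomes 85), and values that fall between the table's integer ranges (for example, 90.5%, or an AUC of exactly 70%) are assumed to belong to the lower band.

```python
from bisect import bisect_right

# Inclusive lower bounds (in %) of the Fair, Good and Excellent bands,
# transcribed from the interpretation criteria table. Anything below the
# Fair bound is labelled Poor.
BANDS = {
    "Sn": (50, 76, 91),
    "Sp": (50, 76, 91),
    "PPV": (50, 76, 91),
    "NPV": (50, 76, 91),
    "AUC": (71, 81, 91),
}
LABELS = ("Poor", "Fair", "Good", "Excellent")


def interpret(metric: str, value_pct: float) -> str:
    """Return the qualitative label for a metric given as a percentage.

    Assumption: the table's integer ranges leave small gaps (e.g. 90.5%),
    so a value below a band's lower bound is assigned to the band beneath it.
    """
    if metric not in BANDS:
        raise KeyError(f"unknown metric: {metric!r}")
    if not 0 <= value_pct <= 100:
        raise ValueError("value_pct must be between 0 and 100")
    # bisect_right counts how many lower bounds are <= value_pct,
    # which is exactly the index of the matching label.
    return LABELS[bisect_right(BANDS[metric], value_pct)]


if __name__ == "__main__":
    # Hypothetical values, not results from any included study.
    print(interpret("Sn", 88.0))   # Good
    print(interpret("AUC", 70.0))  # Poor
    print(interpret("PPV", 92.5))  # Excellent
```

Storing only the lower bounds keeps the lookup to a single `bisect_right` call and makes the gap-handling rule explicit in one place; a reviewer preferring the opposite convention for in-between values would only need to change the bounds.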

| No. | Authors, Year, Country | Study Design | Participant Age (Mean ± SD or Range) | % Female | Education, Years (Mean ± SD or Range) | Cognitive Tool | Comparison Standard |
|---|---|---|---|---|---|---|---|
| 1 | Apostolo JLA et al., 2018, Portugal [42] | Cross-sectional | 67.7 ± 9.7 | 70.4 | 30.7% 0–2 years, 43.3% 3–6 years, 26% 7–18 years | 6CIT | MMSE |
| 2 | Avila-Villanueva M et al., 2016, Spain [43] | Cross-sectional | 74.07 ± 3.8 | 63 | 11.15 ± 6.69 | EMQ | CDR, NIA-AA criteria |
| 3 | Baerresen KM et al., 2015, US [55] | Cohort | 60.84 ± 10.76 | 60 | 16.67 ± 2.94 | BSRT, RCFT, TMT | Rigorous diagnostic methods: MRI scan, clinical consensus of neurology, geriatric psychiatry, neuropsychology and radiology staff |
| 4 | Bartos A et al., 2018, Czech Republic [44] | Cross-sectional | 70 ± 8 | 59 | 12–17 | MoCA | NIA-AA criteria |
| 5 | Bouman Z et al., 2015, Netherlands [45] | Cross-sectional | 76.6 ± 5.9 | ~46 | ~66% low level, 19% average level, 16% high level | BCSE | MMSE |
| 6 | Broche-Perez Y et al., 2018, Cuba [72] | Cross-sectional | 73.28 ± 7.16 | ~67 | 9.82 ± 4.23 | ACE, MMSE | Petersen’s criteria, CDR |
| 7 | Charernboon T, 2019, Thailand [25] | Cross-sectional | 64.9 ± 6.5 | 76.7 | 10.2 ± 4.9 | ACE | Thai version of MMSE |
| 8 | Chen K-L et al., 2016, China [26] | Cross-sectional | 68.2 ± 9.1 | ~66 | 4.8 ± 1.7 | MMSE, MoCA | CDR |
| 9 | Chipi E et al., 2017, Italy [46] | Cross-sectional | 70.9 ± 5.1 | 61.2 | 11.5 ± 4.5 | CFI | MMSE |
| 10 | Chiu HF et al., 2017, Hong Kong [27] | Cross-sectional | 75.4 ± 6.6 | 56.6 | 6.5 ± 3.8 | HKBC, MoCA, MMSE | DSM-5 |
| 11 | Chiu P et al., 2019, Taiwan [28] | Cross-sectional | 67.8 ± 10.7 | 47.2 | 6.9 ± 5.1 | MMSE, NMD-12, MoCA, IADL, AD8, CASI, NPI | NIA-AA criteria, CDR |
| 12 | Chu L et al., 2015, Hong Kong [29] | Cross-sectional | 72.2 ± 6.1 | 87 | 6.97 ± 4.69 | MMSE | Petersen’s criteria |
| 13 | Clarnette R et al., 2016, Australia [65] | Cross-sectional | 50–95 | 52 | 4–21 | Qmci, MoCA | CDR |
| 14 | Damin A et al., 2015, Brazil [62] | Cohort | 68.27 ± 7.34 | N/A | 7.48 ± 4.48 | CCQ | MMSE, CAMCog, CDR and the brief cognitive screening battery |
| 15 | Duro D et al., 2018, Portugal [47] | Cross-sectional | 69.47 ± 8.89 | 63.5 | 6.69 ± 4.14 | CDT | NIA-AA criteria |
| 16 | Freedman M et al., 2018 [70] | Cross-sectional | 75.3 ± 7.9 | ~67 | 15.02 ± 3.2 | TorCA | NIA-AA criteria |
| 17 | Fung AW-T et al., 2018, Hong Kong [30] | Cohort | 68.8 ± 6.3 | 58.4 | 9.8 ± 4.8 | HK-VMT | Combined clinical and cognitive criteria suitable for the local older population, CDR |
| 18 | Georgakis MK et al., 2017, Greece [67] | Cross-sectional | 74.3 ± 6.6 | 51.6 | 4.5 ± 2.6 | TICS | 5-objects test |
| 19 | Heyanka D et al., 2015 [71] | Cross-sectional | 71.5 ± 7.5 | ~43 | 14.8 ± 3.2 | RBANS | Petersen’s criteria |
| 20 | Huang L et al., 2018, China [31] | Cross-sectional | 65.71 ± 8.10 | ~56 | 12.78 ± 2.74 | RCFT, MoCA, VOSP, BNT, STT, JLO, ST | Petersen’s criteria, CDR |
| 21 | Iatraki E et al., 2017, Greece [68] | Cross-sectional | 71.0 ± 6.9 | 64.6 | 6.4 ± 3.1 | TYM, GPCog | Unclear |
| 22 | Julayanont P et al., 2015, Thailand [32] | Cross-sectional | 66.6 ± 6.7 | 84 | 3.6 ± 1.1 | MoCA, MMSE | CDR global |
| 23 | Kandiah N et al., 2015, Singapore [33] | Cohort | 67.8 ± 8.86 | 46.1 | 10.5 ± 6.0 | VCAT | Petersen’s criteria, CDR, NIA-AA criteria |
| 24 | Phua A et al., 2017, Singapore [34] | Cross-sectional | 66.8 ± 5.5 | 62 | 9.3 ± 4.9 | MoCA, MMSE | DSM-IV, CDR global |
| 25 | Krishnan K et al., 2016, US [56] | Cohort | 58–77 | 64 | 15.2 ± 2.7 | MoCA | History, clinical examination, CDR, and a comprehensive neuropsychological battery based on published criteria |
| 26 | Lee S et al., 2016, Australia [66] | Cross-sectional | Median 73 | 53 | Median 14 | CVLT, Envelope Task, PRMQ, Single-item Memory Scale, MMSE | HVLT-R, Logical Memory, Wechsler Memory Scale Third Edition, Verbal Paired Associates, Wechsler Memory Scale Fourth Edition, RCFT, CDR, ADFACS, NINCDS-ADRDA criteria, MMSE |
| 27 | Lemos R et al., 2016, Portugal [48] | Cohort | 70.22 ± 7.65 | 52.5 | 7.7 ± 5.01 | FCSRT | MMSE, CDR |
| 28 | Low A et al., 2019, Singapore [35] | Cross-sectional | 61.47 ± 7.19 | 70 | 12.36 ± 3.76 | VCAT | NIA-AA criteria, CDR, MRI scan |
| 29 | Malek-Ahmadi M et al., 2015, US [57] | Longitudinal cohort | 81.70 ± 7.25 | ~48 | 14.74 ± 2.54 | MMSE, AQ, FAQ | Petersen’s criteria |
| 30 | Mansbach W et al., 2016, US [58] | Cohort | 82.33 ± 9.15 | 64 | 84% with at least 12 years of education | BCAT, AD8 | Unclear; diagnosed by licensed psychologists’ evaluations |
| 31 | Mellor D et al., 2016, China [36] | Cohort | 72.54 ± 8.40 | 57.9 | 9.12 ± 4.36 | MoCA, MMSE | Petersen’s criteria |
| 32 | Mitchell J et al., 2015, US [59] | Case–control | 75.9 ± 8.5 | 50.9 | 15.2 ± 2.9 | FAQ, DSRS, CWLT, BADLS | WMS-III Logical Memory test or the CERAD Word List |
| 33 | Ni J et al., 2015, China [37] | Cross-sectional | 62.57 ± 8.61 | ~59 | 12.04 ± 3.34 | DSR | History and physical exams, MMSE, story recall (immediate and 30 min delayed), CDR, ADL |
| 34 | Park J et al., 2018, South Korea [38] | Cross-sectional | 74.93 ± 6.96 | 56.3 | 5.83 ± 4.52 | mSTS-MCI | MoCA-K, MMSE-K, neuropsychological battery (Rey Auditory Verbal Learning Test, plus the Delayed Visual Reproduction and Logical Memory subtests of the Wechsler Memory Scale) |
| 35 | Pinto T et al., 2019, Brazil [63] | Cross-sectional | 73.9 ± 6.2 | 76.4 | 10.9 ± 4.4 | MoCA | Statistically compared |
| 36 | Pirrotta F et al., 2014, Italy [49] | Cross-sectional | 70.5 ± 11.5 | 58.2 | 8.1 ± 4.6 | MoCA | MMSE |
| 37 | Radanovic M et al., 2017, Brazil [64] | Cohort | ~68.7 ± 5.85 | ~79 | ~10.35 ± 2.45 | CAMCog | Petersen’s criteria |
| 38 | Rakusa M et al., 2018, Slovenia [50] | Cohort | Median 74 | N/A | 65% secondary school, 23% university, 12% primary school | MMSE, CDT | NIA-AA criteria |
| 39 | Ricci M et al., 2016, Italy [51] | Cohort | 73.3 ± 6.9 | N/A | 7.2 ± 4.2 | CDT | NINCDS-ADRDA criteria |
| 40 | Roman F et al., 2016, Argentina [69] | Cross-sectional | 67.5 ± 8.3 | N/A | 11.5 ± 4.1 | MBT | Spanish version of MMSE, CDT, Signoret Verbal Memory Battery, TMT, VF, Spanish version of BNT, and Digit Span forward and backward |
| 41 | Scharre D et al., 2017, US [60] | Investigational | 75.2 ± 7.3 | 67 | 15.1 ± 2.7 | SAGE | Unclear |
| 42 | Serna A et al., 2015, Spain [52] | Cohort | 78.10 ± 5.04 | 59.3 | 64.2% illiterate or able to read and write, 35.8% primary/secondary or higher | Semantic Fluency/VF, Logical Memory | International Work Group criteria, MMSE |
| 43 | Townley R et al., 2019, US [61] | Cohort | ~72.4 ± 8.95 | 47–51 | ~15.05 ± 2.65 | STMS, MoCA | Clinical consensus |
| 44 | Van de Zande E et al., 2017, Netherlands [53] | Cross-sectional | 73.05 ± 8.62 | ~52 | 10.34 ± 3.66 | MMSE, TYM | Petersen’s criteria |
| 45 | Vyhnálek M et al., 2016, Czech Republic [54] | Cross-sectional | 71.20 ± 6.77 | ~64 | 15.30 ± 2.95 | CDT | CDR |
| 46 | Feng X et al., 2017, China [39] | Cross-sectional | 65.99 ± 10.45 | 62.59 | 2.88% 0 years, 7.19% 1–6 years, 51.08% 7–12 years, 38.85% ≥12 years | DMS48 | Chinese version of MMSE, MoCA, CDR, NIA-AA criteria |
| 47 | Xu F et al., 2019, China [40] | Cross-sectional | 82.87 ± 3.134 | 33.4 | 62.8% with bachelor’s degrees | MMSE, GPCog | NIA-AA criteria |
| 48 | Yavuz B et al., 2017, Turkey [73] | Cross-sectional | 75.4 ± 6.9 | 65 | 0–21 (median 5) | MMSE, Qmci | Petersen’s criteria |
| 49 | Zainal N et al., 2016, Singapore [41] | Cross-sectional | 61.81 ± 6.96 | 68.8 | 11.70 ± 3.13 | ADAS-Cog | Petersen’s criteria, CDR |
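Each study above validates its cognitive tool against a comparison standard. The sensitivity, specificity and predictive values used to judge such validations come from the 2 × 2 cross-classification of tool result against standard [23], and the AUC summarises discrimination across all cut-offs in ROC analysis [13,24]. The Python sketch below is a generic textbook illustration, not the procedure of any particular included study: the names `TwoByTwo`, `cross_classify` and `auc_lower_is_impaired` are hypothetical, the rule that a score below the cut-off flags impairment (lower scores meaning worse cognition) is an assumption, and the scores are invented. The AUC is estimated nonparametrically as the proportion of case/non-case pairs in which the case scores lower, counting ties as one half.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Cross-classification of a cognitive tool against a comparison standard."""
    tp: int  # tool flags impairment, standard says MCI
    fp: int  # tool flags impairment, standard says no MCI
    fn: int  # tool misses a case the standard calls MCI
    tn: int  # both agree there is no MCI

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


def cross_classify(scores, has_mci, cutoff):
    """Build the 2x2 table; a score below `cutoff` flags impairment (assumed rule)."""
    if len(scores) != len(has_mci):
        raise ValueError("scores and has_mci must be the same length")
    tp = fp = fn = tn = 0
    for score, case in zip(scores, has_mci):
        flagged = score < cutoff
        if flagged and case:
            tp += 1
        elif flagged:
            fp += 1
        elif case:
            fn += 1
        else:
            tn += 1
    return TwoByTwo(tp, fp, fn, tn)


def auc_lower_is_impaired(case_scores, control_scores):
    """Empirical AUC (Mann-Whitney form): share of case/control pairs in which
    the case scores lower, with ties counted as one half."""
    wins = 0.0
    for c in case_scores:
        for n in control_scores:
            if c < n:
                wins += 1.0
            elif c == n:
                wins += 0.5
    return wins / (len(case_scores) * len(control_scores))


# Hypothetical scores for seven participants; not data from any included study.
scores = [22, 24, 27, 25, 28, 29, 26]
has_mci = [True, True, True, False, False, False, False]
table = cross_classify(scores, has_mci, cutoff=26)
auc = auc_lower_is_impaired(
    [s for s, d in zip(scores, has_mci) if d],
    [s for s, d in zip(scores, has_mci) if not d],
)
print(table, round(table.sensitivity, 3), table.specificity, round(auc, 3))
```

With these invented numbers the tool flags two of the three cases judged MCI by the standard (sensitivity ≈ 0.67) and clears three of the four non-cases (specificity 0.75), while the AUC is 10/12 ≈ 0.83. Because the AUC does not depend on a single cut-off, two studies of the same tool can report similar AUCs yet different sensitivity and specificity if they chose different cut-points.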

| No. | Cognitive Tool | Article No. | Authors, Year, Country | Settings | Administration Method |
|---|---|---|---|---|---|
| 1 | 6CIT | 1 | Apostolo JLA et al., 2018, Portugal [42] | Community, Primary health care units | By untrained examiner (postgraduate student) |
| 2 | EMQ | 2 | Avila-Villanueva M et al., 2016, Spain [43] | Community | Self-administered |
| 3 | BSRT | 3 | Baerresen KM et al., 2015, US [55] | Community | NR |
| 4 | RCFT | 3 | Baerresen KM et al., 2015, US [55] | Community | NR |
| | | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |
| 5 | TMT | 3 | Baerresen KM et al., 2015, US [55] | Community | NR |
| 6 | MoCA | 4 | Bartos A et al., 2018, Czech Republic [44] | Community | By trained examiner |
| | | 8 | Chen K-L et al., 2016, China [26] | Hospital | By trained examiner |
| | | 10 | Chiu HF et al., 2017, Hong Kong [27] | Community | By untrained examiner (research assistant) |
| | | 12 | Chu L et al., 2015, Hong Kong [29] | Memory Clinic, Community | By examiner |
| | | 13 | Clarnette R et al., 2016, Australia [65] | Geriatrics Clinic | By trained professionals (geriatrician) |
| | | 22 | Julayanont P et al., 2015, Thailand [32] | Community Hospital | By trained professionals (nurse with expertise in cognitive assessment) |
| | | 24 | Phua A et al., 2017, Singapore [34] | Memory Clinic | NR |
| | | 25 | Krishnan K et al., 2016, US [56] | Community, Clinical Care | NR |
| | | 31 | Mellor D et al., 2016, China [36] | Community | By trained professionals (psychologist or attending-level psychiatrist) |
| | | 35 | Pinto T et al., 2019, Brazil [63] | Health Care Centres | By trained professionals (neurologist researcher) |
| | | 36 | Pirrotta F et al., 2014, Italy [49] | Clinical, Research | By trained professionals (psychologist) |
| | | 43 | Townley R et al., 2019, US [61] | Community | NR |
| | | 48 | Yavuz B et al., 2017, Turkey [73] | Geriatrics Clinic | By trained professionals (psychologist) |
| | | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |
| | | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |
| 7 | BCSE | 5 | Bouman Z et al., 2015, Netherlands [45] | Memory Clinic | By untrained examiner |
| 8 | ACE | 6 | Broche-Perez Y et al., 2018, Cuba [72] | Primary Care Community Centre: nursing homes (permanent residences for the elderly) and day care centres | By trained professionals (neurologist and geriatrician) |
| | | 7 | Charernboon T, 2019, Thailand [25] | Memory Clinic | NR |
| 9 | MMSE | 6 | Broche-Perez Y et al., 2018, Cuba [72] | Primary Care Community Centre: nursing homes (permanent residences for the elderly) and day care centres | By professionals (neurologist and geriatrician) |
| | | 8 | Chen K-L et al., 2016, China [26] | Hospital | By trained examiner |
| | | 10 | Chiu HF et al., 2017, Hong Kong [27] | Community | By untrained examiner (research assistant) |
| | | 12 | Chu L et al., 2015, Hong Kong [29] | Memory Clinic, Community | By examiner |
| | | 22 | Julayanont P et al., 2015, Thailand [32] | Community Hospital | By trained professionals (nurse with expertise in cognitive assessment) |
| | | 24 | Phua A et al., 2017, Singapore [34] | Memory Clinic | NR |
| | | 26 | Lee S et al., 2016, Australia [66] | Community, Memory Clinic | Unclear |
| | | 31 | Mellor D et al., 2016, China [36] | Community | By trained professionals (psychologist or psychiatrist) |
| | | 38 | Rakusa M et al., 2018, Slovenia [50] | Community | NR |
| | | 44 | Van de Zande E et al., 2017, Netherlands [53] | Memory Clinic | By trained examiner |
| | | 47 | Xu F et al., 2019, China [40] | Community | By trained examiner |
| | | 48 | Yavuz B et al., 2017, Turkey [73] | Geriatrics Clinic | By trained examiner |
| | | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |
| | | 29 | Malek-Ahmadi M et al., 2015, US [57] | Community | NR |
| 10 | CFI | 9 | Chipi E et al., 2017, Italy [46] | Memory Clinic | By professionals (neuropsychologist) |
| 11 | RBANS | 19 | Heyanka D et al., 2015 [71] | Medical Centre | NR |
| 12 | HKBC | 10 | Chiu HF et al., 2017, Hong Kong [27] | Community | By untrained examiner (research assistant) |
| 13 | NMD-12 | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |
| 14 | Qmci | 13 | Clarnette R et al., 2016, Australia [65] | Geriatrics Clinic | By trained professionals (geriatrician) |
| | | 48 | Yavuz B et al., 2017, Turkey [73] | Geriatrics Clinic | By trained examiner |
| 15 | CCQ | 14 | Damin A et al., 2015, Brazil [62] | Clinical | By professionals (physician) or self-administered |
| 16 | CDT | 15 | Duro D et al., 2018, Portugal [47] | Tertiary Centre | By professionals (neuropsychologist) |
| | | 38 | Rakusa M et al., 2018, Slovenia [50] | Community | NR |
| | | 39 | Ricci M et al., 2016, Italy [51] | Memory Clinic, Community | By untrained examiner |
| | | 45 | Vyhnálek M et al., 2016, Czech Republic [54] | Memory Clinic | By professionals (neuropsychologist, neurologist, resident) |

| 17 | HK-VMT | 17 | Fung AW-T et al., 2018, Hong Kong [30] | Community | Self-administered (touch-screen laptop) |

| 18 | TorCA | 16 | Freedman M et al., 2018 [70] | Suitable for use in any medical setting | By trained examineror professionals (health care professionals) |

| 19 | TICS | 18 | Georgakis MK et al., 2017, Greece [67] | Community, Health Centre | By professionals (health care professionals) |

| 20 | VOSP | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |

| 21 | TYM | 21 | Iatraki E et al., 2017, Greece [68] | Rural Primary Care | By trained examiner |

| 44 | Van de Zande E et al., 2017, Netherlands [53] | Memory Clinic, Primary Clinical Setting (GP practice, home care) | Self-administered (under supervision) | ||

| 22 | GPCog | 21 | Iatraki E et al., 2017, Greece [68] | Rural Primary Care | By trained examiner |

| 47 | Xu F et al., 2019, China [40] | Outpatient Clinical, Primary Care | By trained examiner | ||

| 23 | CVLT | 26 | Lee S et al., 2016, Australia [66] | Community, Memory Clinic | NR |

| 24 | The Envelope Task | 26 | Lee S et al., 2016, Australia [66] | Community, Memory Clinic | NR |

| 25 | PRMQ | 26 | Lee S et al., 2016, Australia [66] | Community, Memory Clinic | NR |

| 26 | Single-item Memory Scale | 26 | Lee S et al., 2016, Australia [66] | Community, Memory Clinic | NR |

| 27 | FCSRT | 27 | Lemos R et al., 2016, Portugal [48] | Community, Hospital | NR |

| 28 | AQ | 29 | Malek-Ahmadi M et al., 2015, US [57] | Designed for ease of use in primary care setting | NR |

| 29 | FAQ | 29 | Malek-Ahmadi M et al., 2015, US [57] | Community | NR |

| 32 | Mitchell J et al., 2015, US [59] | Community | By professionals (clinician) | ||

| 30 | BCAT | 30 | Mansbach W et al., 2016, US [58] | Long-Term Care | By professionals |

| 31 | AD8 | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |

| 30 | Mansbach W et al., 2016, US [58] | Long-Term Care | Self-administered | ||

| 32 | DSRS | 32 | Mitchell J et al., 2015, US [59] | Community | By professionals (clinician) |

| 33 | CMLT | 32 | Mitchell J et al., 2015, US [59] | Community | By professionals (clinician) |

| 32 + 33 | CWLT-5 + DSRS | 32 | Mitchell J et al., 2015, US [59] | Community | By professionals (clinician) |

| 34 | BADLS | 32 | Mitchell J et al., 2015, US [59] | Community | By professionals (clinician) |

| 35 | DSR | 33 | Ni J et al., 2015, China [37] | Memory Clinic | NR |

| 36 | mSTS-MCI | 34 | Park J et al., 2018, South Korea [38] | Clinical settings, Primary care, Geriatrics Outpatient Clinics | By professionals (occupational therapist), using mobile application |

| 37 | CAMCog | 37 | Radanovic M et al., 2017, Brazil [64] | Clinical | By professionals (physician) |

| 38 | MBT | 40 | Roman F et al., 2016, Argentina [69] | Clinical | NR |

| 39 | SAGE | 41 | Scharre D et al., 2017, US [60] | Community, Clinic, Research | Self-administered (paper-based or on tablet) |

| 40 | Semantic Fleuncy/VF | 42 | Serna A et al., 2015, Spain [52] | Community | NR |

| 41 | Logical Memory | 42 | Serna A et al., 2015, Spain [52] | Community | NR |

| 42 | STMS | 43 | Townley R et al., 2019 US [61] | Community, Primary Care | NR |

| 43 | DMS48 | 46 | Feng X et al., 2017, China [39] | Memory Clinic | By trained examiner |

| 44 | ADAS-Cog | 49 | Zainal N et al., 2016, Singapore [41] | Clinical Trials, Clinic | By trained examiner |

| 45 | IADL | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |

| 46 | CASI | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |

| 47 | NPI | 11 | Chiu P et al., 2019, Taiwan [28] | Health Care Centres | By professionals (neuropsychologist) |

| 48 | BNT | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |

| 49 | STT | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |

| 50 | JLO | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |

| 51 | ST | 20 | Huang L et al., 2018, China [31] | Memory Clinic | By trained examiner |

| 52 | VCAT | 23 | Khandiah N et al., 2015, Singapore [33] | Community, Clinical | By trained examiner |

| 28 | Low A et al., 2019, Singapore [35] | Community, Memory Clinic | By professionals (psychologist) |

| No. | Cognitive Tool | Version of Tools | Author, Year, Country | Range of Total Score | Cut-Off Point * | Sn/Sp (%) | Validity: AUC (%) | Validity: PPV/NPV (%) | Reliability | Affecting Factors | Duration (mins) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 6CIT | Portuguese Version | Apostolo JLA et al., 2018, Portugal [42] | 8–11 | ≤10 (all literacy levels) | 82.78/84.84 | 91 | 84.3/83.3 | High test–retest reliability, Strong internal consistency | Literacy Level | 2 to 3 |
| | | Portuguese Version | Apostolo JLA et al., 2018, Portugal [42] | 4–15 | ≤12 (education 0–2 years) | 93.44/68.09 | 94 | 88.4/80 | High test–retest reliability, Strong internal consistency | Literacy Level | 2 to 3 |
| | | Portuguese Version | Apostolo JLA et al., 2018, Portugal [42] | 9–12.03 | ≤10 (education 3–6 years) | 88/86.23 | 95 | 82.2/90.8 | High test–retest reliability, Strong internal consistency | Literacy Level | 2 to 3 |
| 2 | EMQ | - | Avila-Villanueva M et al., 2016, Spain [43] | NR | NR | NR | NR | NR | NR | NR | NR |
| 3 | BSRT | - | Baerresen KM et al., 2015, US [55] | NR | NR | Predicted conversion to MCI and the conversion to AD | | | NR | NR | |
| 4 | RCFT | - | Baerresen KM et al., 2015, US [55] | 0–36 | NR | Predicted conversion from normal aging to MCI | | | NR | NR | |
| | | Rey Complex Figure Test Copy (CFT-C) | Huang L et al., 2018, China [31] | 0–36 | ≤32 | 46.9/76.9 | 81.6 | NR | NR | NR | NR |
| 5 | TMT | Test B (TMT-B) | Baerresen KM et al., 2015, US [55] | NR | NR | Predicted conversion to MCI and the conversion to AD | | | NR | NR | |
| 6 | MoCA | Czech Version (MoCA-CZ) | Bartos A et al., 2018, Czech Republic [44] | 0–30 | ≤25 | 94/62 | 89 | NR | NR | NR | 12 ± 3 |
| | | Czech Version (MoCA-CZ) | Bartos A et al., 2018, Czech Republic [44] | 0–30 | ≤24 | 87/72 | 89 | NR | NR | NR | 12 ± 3 |
| | | Chinese Version (MoCA-BC) | Chen K-L et al., 2016, China [26] | 0–30 | ≤19 (education ≤6 years) | 87.9/81 | 89.6 | NR | NR | NR | NR |
| | | Chinese Version (MoCA-BC) | Chen K-L et al., 2016, China [26] | 0–30 | ≤22 (education 7–12 years) | 92.9/91.2 | 94.9 | NR | NR | NR | NR |
| | | Chinese Version (MoCA-BC) | Chen K-L et al., 2016, China [26] | 0–30 | ≤24 (education >12 years) | 89.9/81.5 | 91.6 | NR | NR | NR | NR |
| | | Cantonese Version | Chiu HF et al., 2017, Hong Kong [27] | 0–30 | ≤19/20 | 80/86 | 91.3 | 94/98 | NR | Education | NR |
| | | Cantonese Chinese Version | Chu L et al., 2015, Hong Kong [29] | 0–30 | 22/23 | 78/73 | 95 | NR | High test–retest reliability, High internal consistency, High inter-rater reliability | Education (not associated with sex or age) | ≤10 |
| | | - | Clarnette R et al., 2016, Australia [65] | 0–30 | ≤23 | 87/80 | 84–92 | 95/58 | NR | NR | NR |
| | | Basic Version (MoCA-B) | Julayanont P et al., 2015, Thailand [32] | 0–30 | 24/25 | 86/86 | NR | 85/82 | Good internal consistency | Designed to be less dependent on education and literacy | 15 to 21 |
| | | - | Phua A et al., 2017, Singapore [34] | 0–30 | NR | 63/77 | NR | 70/65 | NR | NR | NR |
| | | - | Krishnan K et al., 2016, US [56] | 0–30 | ≤26 | 51/96 | NR | NR | Good test–retest reliability | NR | 10 |
| | | - | Mellor D et al., 2016, China [36] | 0–30 | ≤22.5 | 87/73 | 89 | 54.5/93.6 | NR | Age, Gender, Education | NR |
| | | Brazilian Version (MoCA-BR) | Pinto T et al., 2019, Brazil [63] | 0–30 | NR | NR | NR | NR | Good internal consistency, Good test–retest reliability, Excellent inter-examiner reliability | NR | 13.1 ± 2.7 |
| | | Italian Version | Pirrotta F et al., 2014, Italy [49] | 0–30 | ≤15.5 | 83/97 | 96 | NR | High intra-rater reliability, High test–retest agreement, Excellent inter-rater reliability | NR | 10 |
| | | - | Townley R et al., 2019, US [61] | 0–30 | ≤26 | 89/47 | Incident MCI: 70, a-MCI: 90, na-MCI: 84 | NR | NR | NR | NR |
| | | - | Yavuz B et al., 2017, Turkey [73] | 0–30 | <26 | 59/72 | 69 | 72/71 | NR | NR | 10 |
| | | - | Chiu P et al., 2019, Taiwan [28] | 0–30 | 19/20 | 68/65 | 67 | NR | NR | Age, Education | NR |
| | | - | Huang L et al., 2018, China [31] | 0–30 | ≤24 | 81.5/65.1 | 81.8 | NR | NR | NR | NR |
| 7 | BCSE | Dutch Version | Bouman Z et al., 2015, Netherlands [45] | 0–58 | ≤46 | 81/80 | NR | 61/92 | Excellent inter-rater reliability, High internal consistency | Age | 5 to 15 |
| | | Dutch Version | Bouman Z et al., 2015, Netherlands [45] | 0–58 | ≤27 | 84/76 | NR | 57/92 | Excellent inter-rater reliability, High internal consistency | Age | 5 to 15 |
| 8 | ACE | Cuban Revised Version (ACE-R) | Broche-Perez Y et al., 2018, Cuba [72] | 0–100 | ≤84 | 89/72 | 93 | NR | Good internal consistency reliability | Age, Years of Schooling | A few mins more than MMSE |
| | | Thai Mini Version | Charernboon T, 2019, Thailand [25] | 0–100 | 21/22 | 95/85 | 90 | 80.9/96.2 | High internal consistency | NR | 8 to 13 |
| 9 | MMSE | - | Broche-Perez Y et al., 2018, Cuba [72] | 1–30 | 25/26 | 56/83 | 63 | NR | NR | NR | NR |
| | | - | Chen K-L et al., 2016, China [26] | 1–30 | ≤26 | 86.2/60.3 | 79.7 | NR | NR | NR | NR |
| | | - | Chen K-L et al., 2016, China [26] | 1–30 | ≤27 | 78.6/52.2 | 73.6 | NR | NR | NR | NR |
| | | - | Chen K-L et al., 2016, China [26] | 1–30 | ≤28 | 76.4/53.4 | 72.1 | NR | NR | NR | NR |
| | | Cantonese Version | Chiu HF et al., 2017, Hong Kong [27] | 1–30 | 25/26 | 83/84 | 90.4 | 93/98 | NR | NR | NR |
| | | Chinese Version | Chu L et al., 2015, Hong Kong [29] | 1–30 | 27/28 | 67/83 | 78 | NR | NR | Education | NR |
| | | Thai Version | Julayanont P et al., 2015, Thailand [32] | 1–30 | NR | 33/88 | 70.2 | NR | NR | NR | NR |
| | | - | Phua A et al., 2017, Singapore [34] | 1–30 | NR | 70/59 | NR | 64/66 | NR | NR | NR |
| | | - | Lee S et al., 2016, Australia [66] | 1–30 | <29 | 75.7/68.9 | 77 | NR | NR | Emotional status indices (anxiety and depression) | NR |
| | | - | Mellor D et al., 2016, China [36] | 1–30 | <25.5 | 68/83 | 85 | 60.5/87.4 | NR | Age, Gender, Educational Level | NR |
| | | - | Rakusa M et al., 2018, Slovenia [50] | 1–30 | 25/26 | 20/93 | 63 | NR | NR | NR | NR |
| | | - | Van de Zande E et al., 2017, Netherlands [53] | 1–30 | ≤23 | 57/98 | 68.5 | 96/69.5 | NR | Education | 5 to 10 |
| | | - | Xu F et al., 2019, China [40] | 1–30 | 27 ≤ score ≤ 29 | 59/58.2 | NR | NR | NR | NR | 5 to 10 |
| | | Standardised Mini Version (SMMSE) | Yavuz B et al., 2017, Turkey [73] | 1–30 | ≤23 | 36/94 | 71 | 87/56 | NR | NR | NR |
| | | - | Chiu P et al., 2019, Taiwan [28] | 1–30 | 26/27 | 64/70 | 66 | NR | NR | Age, Education | NR |
| | | - | Malek-Ahmadi M et al., 2015, US [57] | 1–30 | NR | Small sensitivity to change (helpful in detecting change over time) | | | 56% Reliability | NR | NR |
| 10 | CFI | Italian Version | Chipi E et al., 2017, Italy [46] | 0–14 | NR | NR | Accurate | | Reliable | NR | NR |
| 11 | RBANS | - | Heyanka D et al., 2015 [71] | 0–100 | NR | 52–93/35–93 (depending on subtest) | NR | 16–91/72–94 (depending on subtest) | NR | NR | NR |
| 12 | HKBC | - | Chiu HF et al., 2017, Hong Kong [27] | 0–30 | 21/22 | 90/86 | 95.5 | 94/99 | Good test–retest reliability, Excellent inter-rater reliability, Satisfactory internal consistency | NR | 7 |
| 13 | NMD-12 | - | Chiu P et al., 2019, Taiwan [28] | NR | 1/2 | 87/93 | 94 | NR | NR | NR | NR |
| 14 | Qmci | - | Clarnette R et al., 2016, Australia [65] | 0–100 | ≤60 | 93/80 | 91–97 | 95/73 | NR | NR | 4.2 |
| | | Turkish Version (Qmci-TR) | Yavuz B et al., 2017, Turkey [73] | 0–100 | <62 | 67/81 | 80 | 80/68 | Strong inter-rater reliability, Strong test–retest reliability | NR | 3 to 5 |
| 15 | CCQ | 8-item CCQ (CCQ8) | Damin A et al., 2015, Brazil [62] | NR | >1 | 97.6/66.7 | High Accuracy | 78.4/95.6 | NR | NR | NR |
| | | 8-item CCQ (CCQ8) | Damin A et al., 2015, Brazil [62] | NR | ≥2 | 78/93.9 | High Accuracy | 94.1/77.5 | NR | NR | NR |
| 16 | CDT | - | Duro D et al., 2018, Portugal [47] | 0–18 (Babins System) | ≤15 | 60/62 | 63.8 | 61/61 | High inter-rater reliability | NR | NR |
| | | - | Duro D et al., 2018, Portugal [47] | 0–10 (Rouleau System) | ≤9 | 64/58 | 63.5 | 60/62 | High inter-rater reliability | NR | NR |
| | | - | Rakusa M et al., 2018, Slovenia [50] | 0–4 | ≤3 | 69/91 | 81 | NR | NR | Age, Education | <2 |
| | | - | Ricci M et al., 2016, Italy [51] | 0–5 | ≤1.30 | 76/84 | Good Diagnostic Accuracy | | Excellent inter-rater reliability | NR | Very short and easy |
| | | - | Vyhnálek M et al., 2016, Czech Republic [54] | NR | NR | 62–84/47–63 | NR | NR | NR | NR | NR |
| 17 | TorCA | - | Freedman M et al., 2018 [70] | 0–295 | ≤275 | 80/79 | 79% Accuracy | | Good test–retest reliability, Adequate internal consistency | NR | Median 34 |
| 18 | HK-VMT | - | Fung AW-T et al., 2018, Hong Kong [30] | 0–40 | 21/22 | 86.1/75.3 | 79.3 | NR | Good test–retest reliability | Education | 15 |
| | | - | Fung AW-T et al., 2018, Hong Kong [30] | 0–40 | <22 (education <6 years) | 71.1/87.3 | 79.3 | NR | Good test–retest reliability | Education | 15 |
| | | - | Fung AW-T et al., 2018, Hong Kong [30] | 0–40 | <25 (education >6 years) | 71.4/76.5 | 79.3 | NR | Good test–retest reliability | Education | 15 |
| 19 | TICS | - | Georgakis MK et al., 2017, Greece [67] | 0–41 | 26/27 | 45.8/73.7 | 56.9 | 30.6/84.3 | Adequate internal consistency, Very high test–retest reliability | Age, Education | NR |
| 20 | VOSP | Abbreviated version of the Silhouettes subtest (Silhouettes-A) | Huang L et al., 2018, China [31] | 0–15 | ≤10 | 79.6/65.1 | 81.6 | NR | High internal consistency/inter-rater reliability, Excellent test–retest reliability | Gender, Education (unaffected by age) | 3 to 5 |
| 21 | TYM | Greek Version | Iatraki E et al., 2017, Greece [68] | 0–50 | 35/36 | 80/77 | NR | 47/93 | Good internal consistency | Age, Education | 5 to 10 |
| | | Dutch Version | Van de Zande E et al., 2017, Netherlands [53] | 0–50 | ≤38 | 74/91 | 79.5 | 87.9/79.2 | Good inter-rater reliability | Education | 10 to 15 |
| 22 | GPCog | Greek Version of GPCog-Patient | Iatraki E et al., 2017, Greece [68] | 0–9 | 7/8 | 89/61 | High discrimination accuracy for high education level population; Moderate accuracy for low education level population | 38/95 | Good internal consistency | Age, Education | <5 |
| | | Chinese Version of 2-stage method (GPCOG-C) | Xu F et al., 2019, China [40] | GPCOG-patient: 0–9; Informant Interview: 0–9 | GPCOG-patient: 5–8; Informant Interview: >4 | 62.3/84.6 | NR | NR | NR | Unaffected by education, gender and age | 4 to 6 |
| 23 | CVLT | Second Edition (CVLT-II) | Lee S et al., 2016, Australia [66] | 0–16 | <8 | 82.9/93.2 | 94 | NR | NR | Emotional status indices (anxiety and depression) | NR |
| 24 | The Envelope Task | - | Lee S et al., 2016, Australia [66] | 0–4 | <3 | 64.3/91.9 | 83 | NR | NR | Emotional status indices (anxiety and depression) | NR |
| 25 | PRMQ | - | Lee S et al., 2016, Australia [66] | 0–80 | <46 | 50/75.7 | 66 | NR | NR | Emotional status indices (anxiety and depression) | NR |
| 26 | Single-item Memory Scale | - | Lee S et al., 2016, Australia [66] | 0–5 | <3 | 55.7/89.2 | 76 | NR | NR | Emotional status indices (anxiety and depression) | NR |
| 27 | FCSRT | Portuguese Version | Lemos R et al., 2016, Portugal [48] | ITR: 0–48 | ≤35 | 72/83 | 81.8 | 81/75 | NR | Unaffected by literacy level | ~2 |
| | | Portuguese Version | Lemos R et al., 2016, Portugal [48] | DTR: 0–16 | ≤12 | 76/80 | 82.4 | 79/77 | NR | Unaffected by literacy level | ~30 |
| 28 | AQ | - | Malek-Ahmadi M et al., 2015, US [57] | 0–27 | NR | Small sensitivity to change (helpful in detecting change over time) | | | 65% Reliability | NR | NR |
| 29 | FAQ | - | Malek-Ahmadi M et al., 2015, US [57] | 0–30 | NR | Small sensitivity to change (helpful in detecting change over time) | | | 63% Reliability | NR | NR |
| | | - | Mitchell J et al., 2015, US [59] | 0–30 | NR | 47/82 | NR | NR | NR | NR | NR |
| 30 | BCAT | Short Form (BCAT-SF) | Mansbach W et al., 2016, US [58] | 0–21 | ≤19 | 82/80 | 86 | 93/57 | Good internal consistency, Reliable | NR | 3 to 4 |
| 31 | AD8 | - | Chiu P et al., 2019, Taiwan [28] | 0–8 | 1/2 | 78/93 | 92 | NR | NR | Unaffected by age, education | NR |
| | | - | Mansbach W et al., 2016, US [58] | 0–8 | ≥1 | 78/30 | 59 | 78/29 | Acceptable internal consistency | NR | NR |
| | | - | Mansbach W et al., 2016, US [58] | 0–8 | ≥2 | 68/63 | 59 | 83/34 | Acceptable internal consistency | NR | NR |
| | | - | Mansbach W et al., 2016, US [58] | 0–8 | ≥3 | 47/63 | 59 | 81/27 | Acceptable internal consistency | NR | NR |
| 32 | DSRS | - | Mitchell J et al., 2015, US [59] | 0–51 | NR | 60/81 | NR | NR | Good construct reliability | NR | 5 |
| 33 | CWLT | CERAD Word List 5-minute recall test | Mitchell J et al., 2015, US [59] | NR | NR | 62/96 | NR | NR | NR | NR | NR |
| | | CWLT-3rd Trial | Mitchell J et al., 2015, US [59] | NR | NR | 41/90 | NR | NR | NR | NR | NR |
| | | CWLT-Trials 1–3 | Mitchell J et al., 2015, US [59] | NR | NR | 57/94 | NR | NR | NR | NR | NR |
| | | CWLT-Composite | Mitchell J et al., 2015, US [59] | NR | NR | 66/95 | NR | NR | NR | NR | NR |
| 32 and 33 | CWLT-5 + DSRS | - | Mitchell J et al., 2015, US [59] | NR | NR | 76/98 | NR | NR | NR | NR | NR |
| 34 | BADLS | - | Mitchell J et al., 2015, US [59] | NR | NR | 36/86 | NR | NR | Good construct reliability | NR | NR |
| 35 | DSR | - | Ni J et al., 2015, China [37] | NR | ≤15 | 100/95.9 | 99.8 | Good diagnostic accuracy | Excellent internal consistency | NR | NR |
| 36 | mSTS-MCI | mSTS-MCI Scores | Park J et al., 2018, South Korea [38] | 0–18 | 18/19 | 99/93 | High Concurrent Validity | | High internal consistency, High test–retest reliability | NR | 15 |
| | | mSTS-MCI Reaction Time | Park J et al., 2018, South Korea [38] | 0–10 | 13.22/13.32 | 100/97 | High Concurrent Validity | | High internal consistency, High test–retest reliability | NR | 15 |
| 37 | CAMCog | Briefer Version (CAMCog-Short) | Radanovic M et al., 2017, Brazil [64] | 0–63 | 51/52 (education >9 years) | 65.2/78.8 | 79.7 | NR | NR | NR | NR |
| | | Briefer Version (CAMCog-Short) | Radanovic M et al., 2017, Brazil [64] | 0–63 | 59/60 (education ≤8 years) | 70/75.5 | 77.3 | NR | NR | NR | NR |
| 38 | MBT | Argentine Version | Roman F et al., 2016, Argentina [69] | 0–32 | NR | 69/88 | 88 | 93/55 | NR | NR | 6 |
| 39 | SAGE | - | Scharre D et al., 2017, US [60] | 6–22 | <15 | 71/90 | 88 | NR | NR | NR | Median 17.5 |
| | | Digitally Translated (eSAGE) | Scharre D et al., 2017, US [60] | 10–22 | <16 | 69/86 | 83 | NR | NR | NR | Median 16 |
| 40 | Semantic Fluency/VF | - | Serna A et al., 2015, Spain [52] | 0–17 | ≤10.5 | 53/67 | 72 | 52/75 | NR | NR | 1 |
| | | - | Serna A et al., 2015, Spain [52] | 0–17 | ≤11.5 | 62/67 | 72 | 52/75 | NR | NR | 1 |
| | | - | Serna A et al., 2015, Spain [52] | 0–17 | ≤12.5 | 70/56 | 72 | 48/76 | NR | NR | 1 |
| 41 | Logical Memory | 20-min Delayed Recall (DR) | Serna A et al., 2015, Spain [52] | 0–6 | ≤2.5 | 43/85 | 71 | 63/72 | NR | NR | 20 |
| | | 20-min Delayed Recall (DR) | Serna A et al., 2015, Spain [52] | 0–6 | ≤3.5 | 57/71 | 71 | 54/74 | NR | NR | 20 |
| | | 20-min Delayed Recall (DR) | Serna A et al., 2015, Spain [52] | 0–6 | ≤4.5 | 78/42 | 71 | 44/77 | NR | NR | 20 |
| 42 | STMS | - | Townley R et al., 2019, US [61] | N/A | <35 | 72/74 | Incident MCI: 71, a-MCI: 85, na-MCI: 91 | NR | NR | NR | NR |
| 43 | DMS48 | - | Feng X et al., 2017, China [39] | 0–48 | 42/43 | 86.6/94.2 | 96.6 | NR | NR | Age (unaffected by education) | Short |
| 44 | ADAS-Cog | ADAS-Cog 11-item | Zainal N et al., 2016, Singapore [41] | 0–70 | ≥4 | 73/69 | 78 | 90/40 | Excellent internal consistency | Age | 30 to 45 |
| | | ADAS-Cog 12-item | Zainal N et al., 2016, Singapore [41] | 0–80 | ≥5 | 90/53 | 79 | 88/58 | Excellent internal consistency | NR | 30 to 45 |
| | | ADAS-Cog Episodic Memory Composite Scale | Zainal N et al., 2016, Singapore [41] | 0–32 | ≥6 | 61/73 | 73 | 86/41 | Excellent internal consistency | NR | 30 to 45 |
| 45 | IADL | - | Chiu P et al., 2019, Taiwan [28] | NR | 7/8 | 98/27 | 63 | NR | NR | NR | NR |
| 46 | CASI | - | Chiu P et al., 2019, Taiwan [28] | NR | 82/83 | 68/68 | 72 | NR | NR | Age, Education | NR |
| 47 | NPI | - | Chiu P et al., 2019, Taiwan [28] | NR | 3/4 | 63/62 | 63 | NR | NR | NR | NR |
| 48 | BNT | - | Huang L et al., 2018, China [31] | NR | 24 | 70.6/55.2 | 67.3 | NR | NR | NR | NR |
| 49 | STT | Test B (STT-B) | Huang L et al., 2018, China [31] | NR | 169 | 50.7/80 | 68.3 | NR | NR | NR | NR |
| 50 | JLO | - | Huang L et al., 2018, China [31] | NR | 27 | 59.7/53.2 | 62 | NR | NR | NR | NR |
| 51 | ST | - | Huang L et al., 2018, China [31] | NR | 14 | 64/62.6 | 66.4 | NR | NR | NR | NR |
| 52 | VCAT | - | Khandiah N et al., 2015, Singapore [33] | 0–30 | 18–22 | 85.6/81.1 | 93.3 | 89/75.9 | NR | Unaffected by language | 15.7 ± 7.3 |
| | | - | Low A et al., 2019, Singapore [35] | 0–30 | 20–24 | 75.4/71.1 | Good construct validity | 74.4/72.3 | Good internal consistency | Unaffected by language and cultural background | NR |
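Unlike Sn/Sp, the PPV/NPV values reported above are not fixed properties of a tool: they also depend on how common MCI is in the sample being screened, which is one likely reason why rows with similar Sn/Sp report quite different PPV/NPV. The short Python sketch below shows the standard Bayes' theorem relationship between these quantities. It assumes Sn, Sp and prevalence are given as proportions; `predictive_values` is a hypothetical helper written for illustration, not a method used by the included studies, and the prevalence values in the usage block are hypothetical.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a cut-off with the given Sn, Sp and MCI prevalence.

    All three inputs are proportions between 0 and 1 (e.g. 87% -> 0.87).
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")

    # Expected proportion of the tested sample in each cell of the 2x2 table.
    true_pos = sensitivity * prevalence
    false_neg = (1.0 - sensitivity) * prevalence
    true_neg = specificity * (1.0 - prevalence)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)

    # PPV/NPV are undefined when nobody screens positive (or negative); use NaN.
    ppv = true_pos / (true_pos + false_pos) if true_pos + false_pos > 0 else float("nan")
    npv = true_neg / (true_neg + false_neg) if true_neg + false_neg > 0 else float("nan")
    return ppv, npv


if __name__ == "__main__":
    # Sn/Sp of 87/73, as reported by Mellor D et al. for the MoCA;
    # the prevalence values are hypothetical.
    for prevalence in (0.1, 0.2, 0.5):
        ppv, npv = predictive_values(0.87, 0.73, prevalence)
        print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

With Sn/Sp held fixed, PPV rises and NPV falls as prevalence increases, so PPV/NPV obtained in a memory clinic sample, where MCI is typically more common, should not be expected to carry over unchanged to community screening.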

| Tool | Cut-Off Point | Different Versions Included | Validity | Good Reliability | Affecting Factors | Administration Time ≤15 mins | Can Be Self-Administered or Conducted by Non-Professional |
|---|---|---|---|---|---|---|---|
| 6CIT | ≤4/10/12 | ✓ | Good/Excellent | ✓ | Education | ✓ | ✓ |
| EMQ | Limited results | | | | | | |
| BSRT | Limited results | | | | | | |
| RCFT | ≤32 | ✓ | Fair | - | - | - | x |
| TMT | Limited results | | | | | | |
| MoCA | ≤26 | ✓ | Fair/Good | ✓ | Education (may be affected by gender and age) | ✓ | ✓ |
| | ≤25, ≤22.5 | | Good | | | | |
| | ≤24, ≤22, ≤19, ≤15.5 | | Good/Excellent | | | | |
| | ≤20 | | Fair | | | | |
| BCSE | ≤27, ≤46 | ✓ | Fair/Good | ✓ | Age | ✓ | ✓ |
| ACE | ≤84, ≤22 | ✓ | Good/Excellent | ✓ | Age, Education | ✓ | x |
| MMSE | ≤29, ≤27 | ✓ | Fair | ✓ | Age, Education, Emotional status, Gender | ✓ | ✓ |
| | ≤28, ≤25.5, ≤23 | | Fair/Good | | | | |
| | ≤26 | | Good | | | | |
| CFI | - | ✓ | Good | ✓ | - | - | x |
| RBANS | - | - | Fair | - | - | - | - |
| HKBC | ≤22 | - | Excellent | ✓ | - | ✓ | ✓ |
| NMD-12 | ≤2 | - | Excellent | - | - | - | x |
| Qmci | <62/≤60 | ✓ | Good/Excellent | ✓ | - | ✓ | x |
| CCQ | >1, ≥2 | ✓ | Good/Excellent | - | - | - | ✓ |
| CDT | ≤15, ≤9, ≤3, ≤1.3 | - | Fair/Good | ✓ | Age, Education | ✓ | ✓ |
| TorCA | ≤275 | - | Good | - | - | x | x |
| HK-VMT | <22, ≤25 | - | Fair | ✓ | Education | ✓ | ✓ |
| TICS | ≤27 | - | Poor/Fair | ✓ | Age, Education | - | x |
| VOSP | ≤10 | - | Good | ✓ | Gender, Education | ✓ | x |
| TYM | ≤38, ≤36 | ✓ | Fair/Good | ✓ | Age, Education | ✓ | ✓ |
| GPCog | ≥4, ≥8 | ✓ | Fair/Good | ✓ | Inconsistent results | ✓ | x |
| CVLT | <8 | ✓ | Good/Excellent | - | Emotional status | - | - |
| The Envelope Task | <3 | - | Good | - | Emotional status | - | - |
| PRMQ | <46 | - | Fair | - | Emotional status | - | - |
| Single-item Memory Scale | <3 | - | Fair/Good | - | Emotional status | - | - |
| FCSRT | ≤35, ≤12 | ✓ | Good | - | - | x | - |
| AQ | Limited results | | | | | | |
| FAQ | - | - | Poor/Good | - | - | - | - |
| BCAT | ≤19 | - | Good | ✓ | - | ✓ | x |
| AD8 | ≥1, ≥2, ≥3 | - | Poor/Fair | ✓ | - | - | ✓ |
| DSRS | - | - | Fair/Good | ✓ | - | ✓ | x |
| CWLT | - | ✓ | Fair | - | - | - | x |
| CWLT + DSRS | - | - | Good/Excellent | - | - | - | x |
| BADLS | - | - | Poor | ✓ | - | - | x |
| DSR | ≤15 | - | Excellent | ✓ | - | - | - |
| mSTS-MCI | ≤19, ≤13.32 | ✓ | Excellent | ✓ | - | ✓ | x |
| CAMCog | ≤52, ≤60 | ✓ | Fair/Good | - | - | - | x |
| MBT | - | ✓ | Good | - | - | ✓ | - |
| SAGE | <15, <16 | - | Good | - | - | x | ✓ |
| Semantic Fluency/VF | ≤10.5, ≤11.5, ≤12.5 | - | Fair | - | - | ✓ | - |
| Logical Memory | ≤2.5, ≤3.5, ≤4.5 | ✓ | Poor/Fair | - | - | x | - |
| STMS | <35 | - | Good | - | - | - | - |
| DMS48 | ≤43 | - | Good/Excellent | - | Age | - | x |
| ADAS-Cog | ≥4, ≥5, ≥6 | ✓ | Good/Excellent | ✓ | - | x | x |
| IADL | ≤8 | - | Poor/Fair | - | - | - | x |
| CASI | ≤83 | - | Fair | - | Age, Education | - | x |
| NPI | ≤4 | - | Fair | - | - | - | x |
| BNT | ≤24 | - | Fair | - | - | - | ✓ |
| STT | ≤169 | - | Fair | - | - | - | ✓ |
| JLO | ≤27 | - | Fair | - | - | - | ✓ |
| ST | ≤14 | - | Fair | - | - | - | ✓ |
| VCAT | 18–22, 20–24 | - | Good/Excellent | ✓ | x | x | ✓ |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chun, C.T.; Seward, K.; Patterson, A.; Melton, A.; MacDonald-Wicks, L. Evaluation of Available Cognitive Tools Used to Measure Mild Cognitive Decline: A Scoping Review. Nutrients 2021, 13, 3974. https://doi.org/10.3390/nu13113974
