1. Introduction

Aquatic vegetation has important ecological and regulatory functions in aquatic ecosystems. Aquatic plants serve as food and habitat for many organisms, including microflora, zooplankton, macroinvertebrates, fish, and waterfowl [1]. As major primary producers, aquatic plants are important for nutrient cycling and metabolism regulation in freshwater systems [2] and can transfer nutrients and oxygen between the sediment and the water [3,4]. In the littoral zone, aquatic vegetation alters the composition of its physical environment by absorbing wave energy, thereby stabilizing sediments [1]. It forms an interface between the surrounding land and water, and intercepts terrestrial nutrient run-off [1,5]. Aquatic plant species are also important indicators for environmental pressures and hence are integrated globally in the assessment of ecological status of aquatic ecosystems [6,7,8].

Aquatic ecosystems are under increasing pressure from climate change, intensification of land use, and spread of invasive alien species [9,10,11]. As a result, the need to monitor changes in aquatic ecosystems is greater than ever. However, field data collection is often resource-demanding, and the desired monitoring cannot be achieved effectively by field work alone. Remote sensing data have been used successfully as an alternative and/or a complement to field data collection in a wide range of applications [12], for example, by increasing the surveyed area as compared to field work alone [13,14]. However, because remote sensing data need calibration and validation, field work cannot be replaced completely. The recent development of unmanned aircraft systems (UASs) has improved the prospects for remote sensing of aquatic plants by providing images with sub-decimetre resolution [15]. In a previous study based on visual interpretation, we found that true-colour digital images collected from a UAS platform allowed for the identification of 21 non-submerged aquatic and riparian species [16].

Species identification is a requirement for ecological status assessment of lakes and rivers as implemented in different parts of the world, including Europe [6], the USA [7], and Australia [8]. Traditionally, such assessments are based on submerged vegetation (e.g., [17]), which is more difficult to detect with a UAS than non-submerged vegetation, especially using true-colour sensors. Recent studies show that helophytes, i.e., emergent vegetation, are valuable indicators in bio-assessment of lakes (e.g., [18,19]), especially at high latitudes, where helophytes form a significant share of the species pool in lakes and wetlands. Birk and Ecke [20] demonstrated that a selection of non-submerged aquatic plants identifiable using very-high-resolution remote sensing was a good predictor of ecological status in coloured boreal lakes. Determination of lake ecological status should be based on a whole-lake assessment [7,21]. Common practice is to choose a number of field-survey transects intended to be representative of the whole lake [21,22]. Here, UAS-remote sensing could contribute critical information, both in the planning process (e.g., placement of transects) and by providing an overview of the whole lake area that facilitates vegetation cover estimates. Differentiation among growth forms of aquatic vegetation helps in describing the character of lakes and rivers, for example, in relation to aquatic plant succession and terrestrialization [23], and in assessing their value as habitat for a variety of species from invertebrates to migrating waterfowl (e.g., [24,25,26]), as well as for undesired invaders. Ecke et al. [27] demonstrated that lakes rich in nymphaeids (floating-leaved vegetation) and with wide belts of helophytes (emergent vegetation) had a higher risk than other lakes of being invaded by muskrat (Ondatra zibethicus), an invasive species in Europe.

Visual interpretation and manual mapping of aquatic vegetation from UAS-images is labour-intensive [16], which restricts implementation over larger areas such as entire lakes. Automated methods for image analysis are available, but they have rarely been evaluated for aquatic vegetation in very-high-resolution images, particularly at the scale of individual vegetation stands. Object-based image analysis (OBIA) first segments the image into image-objects (spectrally homogeneous areas) and then classifies these objects. It is particularly effective for automated classification of images with very high spatial but low spectral resolution [28,29,30]. In addition to spectral features, textural, geometric, and contextual features of these image-objects can be included in the automated classification [31,32,33].

The aim of our study was to investigate whether an automated classification approach using OBIA would increase the time-efficiency of the mapping process compared to manual mapping, and to assess the classification accuracy of the automatically produced maps. Based on a true-colour UAS-orthoimage with 5-cm pixel resolution taken at a lake in northern Sweden, we mapped non-submerged aquatic vegetation at three levels of detail (water versus vegetation, growth form, and dominant taxon) at five test sites (100 m × 100 m each). The five sites had varying levels of vegetation complexity, and the fifth test site was used to evaluate classification robustness under poor image quality. We used two classification methods. Threshold classification served for simple separation of spectrally distinct classes (e.g., water and vegetation), and Random Forest [34] served for classifying several spectrally similar classes. We compared the results to manual mapping, discussed them in relation to classification accuracy and time-efficiency, and assessed the potential of the methods for ecological assessment.

3. Results

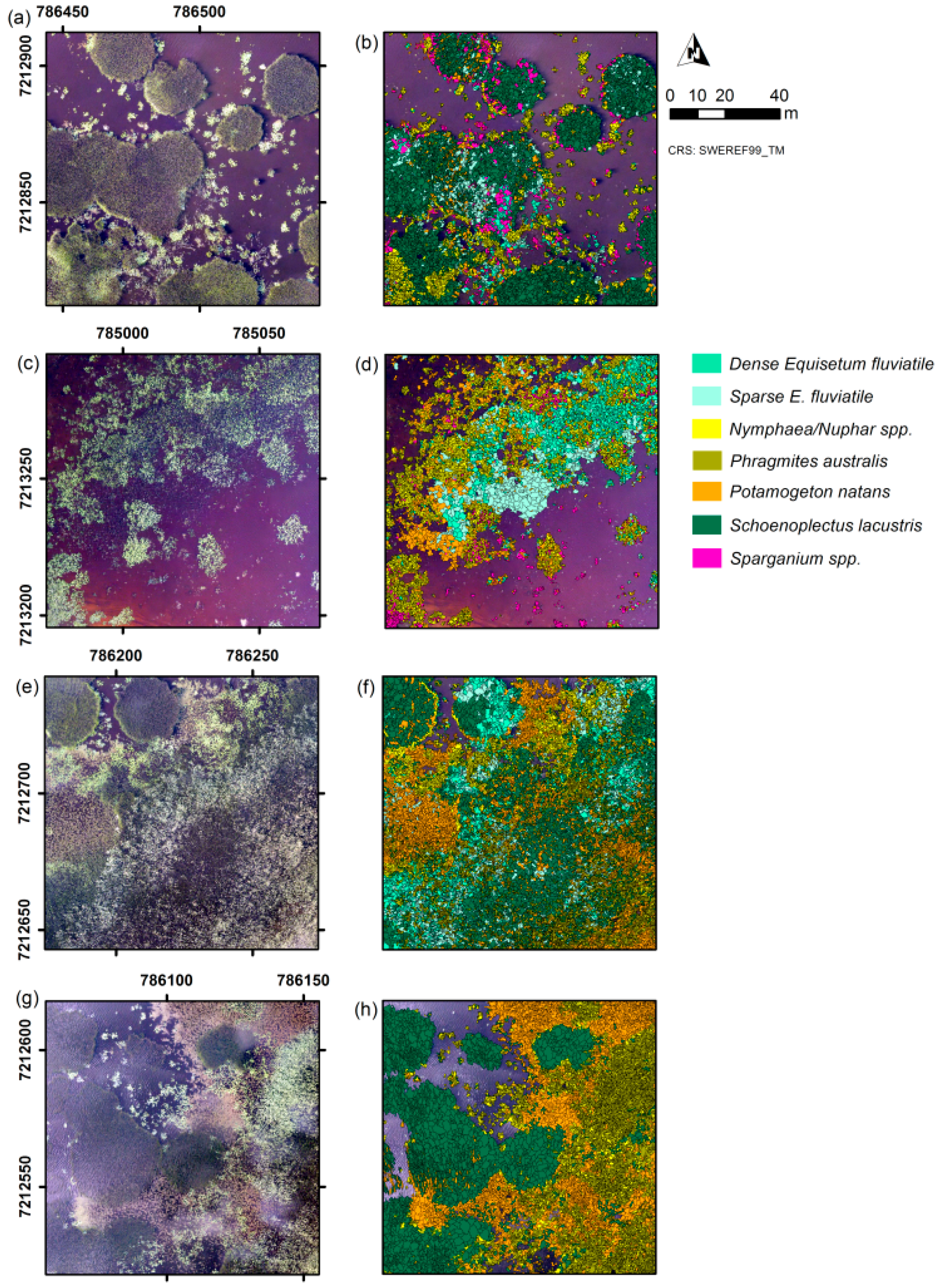

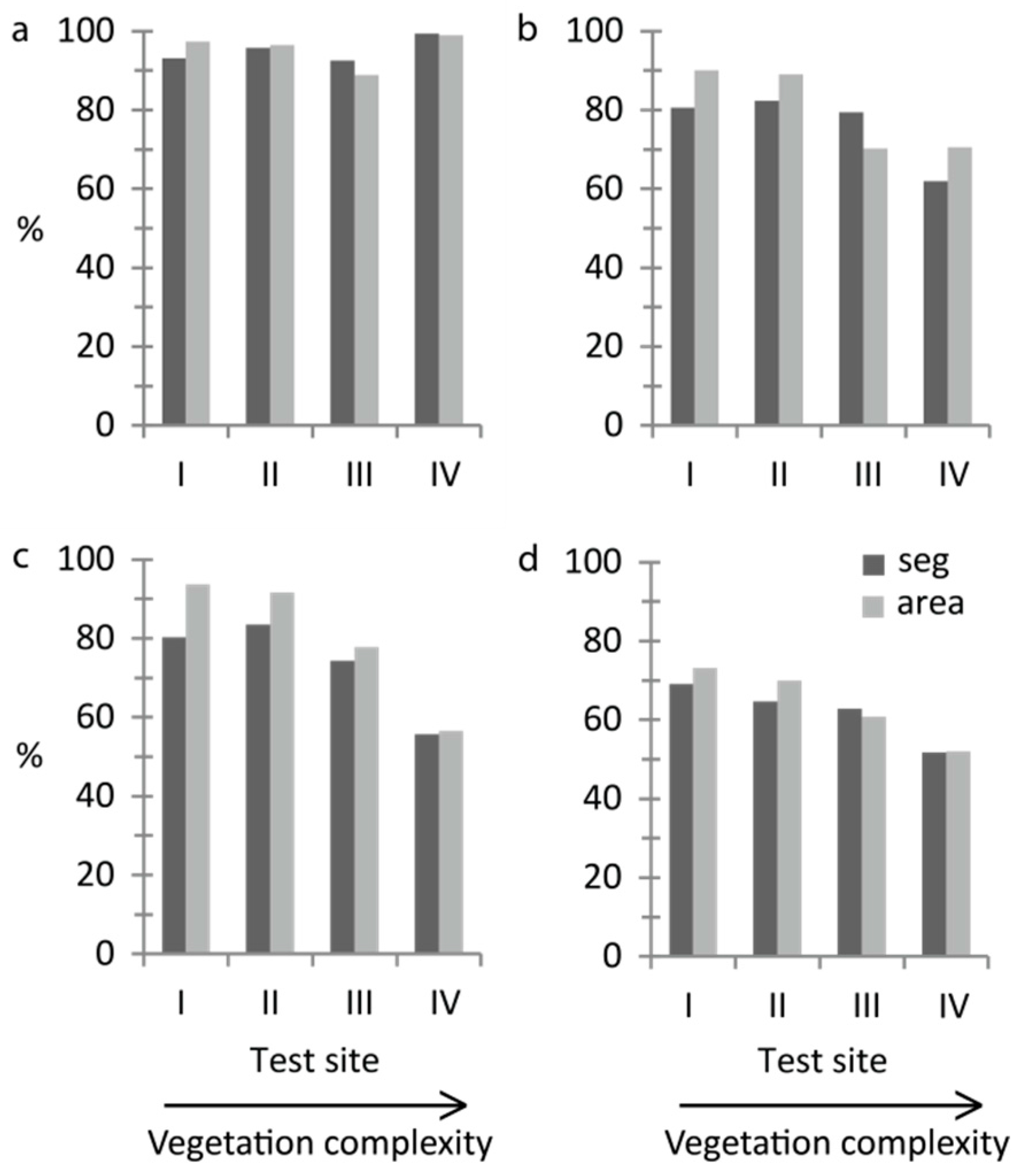

The quick and straightforward threshold method successfully separated water and vegetation. More than 90% of the validation segments were correctly classified at all sites, and at least 89% of the area was correctly mapped (Figure 3a). Kappa coefficients ranged from 0.60 to 0.83 (Table S1). At the majority of sites, quantity disagreement was larger than allocation disagreement (Table S1). In all cases, vegetation had a higher Producer’s accuracy than water (Table 5), whereas differences in User’s accuracy were less pronounced. The error matrices for all levels of detail at all sites are included in Table S1. We found the texture feature “GLCM Homogeneity” to be the best at distinguishing between water and vegetation at sites I–IV. To optimise the classification, we also used the feature “Brightness” at site II and the feature “NDI Green–Blue” at site IV. At site V, wave action had changed the characteristic texture of water, so only “NDI Green–Blue” was used.
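The following sketch illustrates why a texture measure can separate calm water from vegetation. It computes GLCM Homogeneity on a small quantised grey-level patch. It assumes the common definition, the sum of P(i, j) / (1 + (i − j)²) over a normalised, symmetric co-occurrence matrix, with a single horizontal pixel offset. The patches, the number of grey levels, and the single-offset choice are hypothetical. OBIA software may aggregate several directions or quantise differently.

```python
def glcm_homogeneity(patch, levels):
    """GLCM Homogeneity for horizontally adjacent pixel pairs.

    Builds a symmetric grey-level co-occurrence matrix (each pair counted in
    both directions), normalises it to probabilities P(i, j), and returns
    the sum over i, j of P(i, j) / (1 + (i - j)**2).
    """
    counts = [[0] * levels for _ in range(levels)]
    for row in patch:
        for a, b in zip(row, row[1:]):  # offset: one pixel to the right
            counts[a][b] += 1
            counts[b][a] += 1           # make the matrix symmetric
    total = sum(map(sum, counts))
    return sum(
        counts[i][j] / total / (1 + (i - j) ** 2)
        for i in range(levels)
        for j in range(levels)
    )


# Hypothetical 4-level patches (values 0..3), not taken from the study image.
calm_water = [[1, 1, 1, 1],
              [1, 1, 2, 2],
              [2, 2, 2, 2]]
vegetation = [[0, 3, 0, 3],
              [3, 1, 3, 0],
              [0, 2, 3, 1]]

print(round(glcm_homogeneity(calm_water, 4), 3))  # 0.944
print(round(glcm_homogeneity(vegetation, 4), 3))  # 0.189
```

A perfectly uniform patch reaches the maximum value of 1. This is why smooth water scores high, and why wave action, as at site V, can erode the contrast with vegetation.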

For growth form, overall accuracy decreased with increasing vegetation complexity (Figure 3b,c). With the threshold method, the correctly classified area was at least 70% at all sites (Figure 3b). The percentage of correctly classified validation segments was lowest at site IV (62%) but was around 80% at sites I–III (Figure 3b). Kappa coefficients for the threshold classification ranged from 0.16 to 0.52 (Table S1). At the majority of sites, quantity disagreement was larger than allocation disagreement (Table S1). The feature “Brightness” was used to separate helophytes and nymphaeids at all sites. To optimise the threshold classification, we also used the feature “Hue” at site IV and the feature “NDI Green–Red” at site V. The two classification methods gave similar results, except at the most complex site, IV, where the overall classification accuracy with Random Forest was 56% (Figure 3c). Kappa coefficients for the Random Forest classification ranged from 0.25 to 0.69 (Table S1). In contrast to the threshold classification, allocation disagreement was larger than quantity disagreement at the majority of sites (Table S1). Producer’s and User’s accuracies for growth form varied between classes and sites (Table 6).
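At the growth-form level, the threshold route chains two decisions: first water versus vegetation, then helophyte versus nymphaeid on “Brightness”. The minimal sketch below rests on several assumptions. Segments are summarised by mean RGB values. NDI Green–Blue is taken as (G − B)/(G + B), and Brightness as the plain mean of the three bands. The threshold values and the direction of each comparison are hypothetical placeholders for the empirically set, site-specific values.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Mean 8-bit RGB values of one image-object (hypothetical)."""
    red: float
    green: float
    blue: float


def ndi(a, b):
    """Normalised difference index (a - b) / (a + b); 0.0 if both are 0."""
    return (a - b) / (a + b) if a + b else 0.0


def brightness(seg):
    # Assumed definition: unweighted mean of the three visible bands.
    return (seg.red + seg.green + seg.blue) / 3


# Hypothetical thresholds; in the study they were set empirically per site.
NDI_GB_MIN_VEGETATION = 0.05
BRIGHTNESS_MIN_NYMPHAEID = 90.0


def classify_growth_form(seg):
    # Step 1: separate water from vegetation on NDI Green-Blue.
    if ndi(seg.green, seg.blue) <= NDI_GB_MIN_VEGETATION:
        return "water"
    # Step 2: split vegetation into growth forms on Brightness
    # (assumed here: nymphaeid leaves are brighter than helophyte stands).
    if brightness(seg) >= BRIGHTNESS_MIN_NYMPHAEID:
        return "nymphaeid"
    return "helophyte"


segments = [Segment(40, 45, 44), Segment(60, 85, 50), Segment(100, 140, 70)]
print([classify_growth_form(s) for s in segments])
```

Each additional class at this level would need another feature and another hand-set threshold. A Random Forest, by contrast, handles all classes in one model.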

At the most detailed level, dominant taxon (Figure 1h and Figure 2b,d,f,h), overall accuracies decreased with increasing vegetation complexity, and 70% correctly classified area was reached only at sites I and II (Figure 3d). Kappa coefficients ranged from 0.34 to 0.54 (Table S1). At the majority of sites, quantity disagreement was larger than allocation disagreement (Table S1). Producer’s and User’s accuracies showed large variation between classes and sites (Table 7).
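All of the accuracy measures reported in this section can be derived from one error matrix: overall accuracy, kappa, quantity and allocation disagreement, and Producer’s and User’s accuracies. The sketch below assumes that rows hold the classified map and columns hold the reference. It uses the usual decomposition of total disagreement (1 − overall accuracy) into quantity and allocation components. The counts are hypothetical and are not taken from Table S1.

```python
def accuracy_report(matrix, labels):
    """Accuracy measures from a square error matrix of counts.

    matrix[i][j] is the number of validation units mapped as class i whose
    reference class is j (rows: map, columns: reference). Assumes every class
    occurs at least once in both the map and the reference.
    """
    k = len(labels)
    n = sum(map(sum, matrix))
    p = [[c / n for c in row] for row in matrix]                 # proportions
    row_tot = [sum(p[i]) for i in range(k)]                       # map totals
    col_tot = [sum(p[i][j] for i in range(k)) for j in range(k)]  # reference totals
    diag = [p[g][g] for g in range(k)]

    overall = sum(diag)
    chance = sum(row_tot[g] * col_tot[g] for g in range(k))
    kappa = (overall - chance) / (1 - chance)

    # Total disagreement (1 - overall) = quantity + allocation disagreement.
    quantity = sum(abs(row_tot[g] - col_tot[g]) for g in range(k)) / 2
    allocation = sum(2 * min(row_tot[g] - diag[g], col_tot[g] - diag[g])
                     for g in range(k)) / 2

    return {
        "overall": overall,
        "kappa": kappa,
        "quantity": quantity,
        "allocation": allocation,
        # Producer's accuracy = 1 - omission error (divide by reference total).
        "producers": {labels[g]: diag[g] / col_tot[g] for g in range(k)},
        # User's accuracy = 1 - commission error (divide by map total).
        "users": {labels[g]: diag[g] / row_tot[g] for g in range(k)},
    }


# Hypothetical water/vegetation counts, not the study's error matrix.
report = accuracy_report([[40, 5], [10, 45]], ["water", "vegetation"])
for key in ("overall", "kappa", "quantity", "allocation"):
    print(key, round(report[key], 3))
```

With these counts the sketch gives an overall accuracy of 0.85 and a kappa of 0.70. Quantity and allocation disagreement come out at 0.05 and 0.10, which sum to the total disagreement of 0.15. A low User’s accuracy alongside a higher Producer’s accuracy, as for several taxa below, signals many false inclusions.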

Nymphaea/Nuphar spp. was classified most reliably, with User’s and Producer’s accuracies of at least 61% at all sites (segment-based, Table 7). S. lacustris also had a minimum User’s and Producer’s accuracy of 65%, except for the Producer’s accuracy at site IV (43% segment-based and 51% area-based). The two E. fluviatile classes, P. natans, and Sparganium spp. had in all cases a lower User’s than Producer’s accuracy, indicating a large number of false inclusions (segment-based, Table 7).

P. australis, which occurred at sites IV and V, was confused at site IV with all other taxa present there. It was confused most frequently with the E. fluviatile classes and P. natans, resulting in a Producer’s accuracy of 48%.

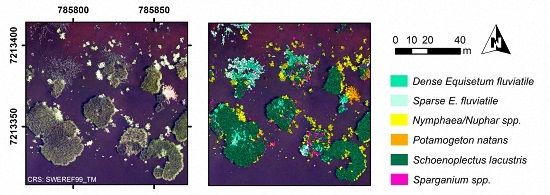

Overall accuracies at site V were within the range of those at the other test sites for all performed OBIAs (Table S1). The exception was the area-based accuracy assessment at the dominant-taxon level (Figure 2h), where site V surprisingly showed the highest overall accuracy of all sites (75%). The corresponding segment-based overall accuracy was 65%.

The increase in accuracy (I_non-dom) owing to non-dominant-taxon classifications, for segment-based and area-based accuracy assessment respectively, was:

- site I: 0.3% and 0.1%
- site II: 0.8% and 0.2%
- site III: 8.4% and 6.2%
- site IV: 3.3% and 3.4%
- site V: 4.6% and 3.5%

The increase was most pronounced at sites III–V, which had a high proportion of mixed vegetation stands (Table 2). Including non-dominant-taxon classifications, site III showed the highest segment-based accuracy of all sites (71%).

Manual mapping took 2.06 h at site I and 5.39 h at site IV, whereas the automated dominant-taxon classification took 2.25 h at site I and 2.12 h at site IV.

4. Discussion

Our results demonstrate that it is feasible to extract ecologically relevant information on non-submerged aquatic vegetation from UAS-orthoimages in an automated way. Automation increases time-efficiency compared to manual mapping but may decrease classification accuracy as vegetation complexity increases. The classification accuracies achieved in our study lie within the range reported from other OBIA studies in wetland environments, as reviewed by Dronova [33]. The exceptions are the growth-form and dominant-taxon levels at the most complex site, IV. Here, the presence of P. australis increased the number of misclassifications at both the dominant-taxon and the growth-form level. At the growth-form level, P. australis was to a large extent classified as nymphaeid, probably due to its light green colour. Site IV also had the highest number of mixed-vegetation classes in the manual mapping, but non-dominant-taxon classifications were only of minor importance in explaining misclassifications. The two taxa that were most reliably classified across all sites, Nymphaea/Nuphar spp. and S. lacustris, typically covered large areas. High within-taxon variation of S. lacustris (as also observed by Husson et al. [16]) posed problems for the automated classification. Bent stems with high sun exposure, typically at the edge of vegetation stands, as well as straight stems with low area coverage, were repeatedly misclassified. Because these areas were small in comparison to the total area of S. lacustris, accuracies for this taxon were not strongly affected. However, these misclassifications had a major impact on the classification accuracy of taxa with smaller total areas (E. fluviatile, P. natans, and Sparganium spp.). They increased the number of false inclusions, which resulted in low User’s accuracies.

At four out of five test sites, OBIA combined with Random Forest proved to be an appropriate automated classification method. In most cases it gave good classification accuracies at a high level of detail (i.e., >75% for growth forms and >60% for dominant taxa). One way to raise classification accuracy (even at site IV) could be to choose high-quality training samples manually. Because training samples were selected randomly, segments with mixed vegetation were included. This might have influenced the result, since Random Forest is sensitive to inaccurate training data [39]. The threshold method is more intuitive than Random Forest and can be quick when expert knowledge for feature selection is available. No samples and no ruleset are needed, which makes thresholding easier to use. A drawback of the threshold method is the limited number of classes that each thresholding step can separate. In contrast to Random Forest, two thresholding steps were necessary to reach the growth-form level. For more complex classification tasks, empirical determination of thresholds is not time-efficient. It also increases subjectivity and limits the transferability of classification settings to other sites. A more automated approach to determine thresholds should be evaluated in the future.
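The suggestion to favour high-quality training samples over randomly drawn ones can be illustrated with a simple purity filter. It keeps only candidate segments whose reference composition is dominated by a single class. This is a hypothetical illustration, not the procedure used in the study. The cut-off of 0.9 and the per-segment area fractions (as if from overlaying segments on the manual map) are assumptions.

```python
def select_pure_training_segments(candidates, min_purity=0.9):
    """Return (segment_id, label) pairs for segments dominated by one class.

    candidates maps a segment id to {class_label: area_fraction}, where the
    fractions are assumed to come from overlaying the segment on the
    reference map. Segments whose largest fraction is below min_purity are
    treated as mixed stands and dropped, so they cannot feed mislabelled
    samples to the classifier.
    """
    selected = []
    for seg_id, fractions in candidates.items():
        label, share = max(fractions.items(), key=lambda kv: kv[1])
        if share >= min_purity:
            selected.append((seg_id, label))
    return selected


# Hypothetical candidates: s2 is a mixed stand and is excluded.
candidates = {
    "s1": {"S. lacustris": 0.97, "water": 0.03},
    "s2": {"E. fluviatile": 0.55, "P. natans": 0.45},
    "s3": {"Nymphaea/Nuphar spp.": 1.0},
}
print(select_pure_training_segments(candidates))
```

A strict cut-off trades quantity for quality. It may also drop segments at stand edges, such as the bent S. lacustris stems discussed above, so the retained samples may under-represent within-taxon variation.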

The results for site V indicate that the method used here could cope with poor image quality. The surprisingly high overall classification accuracy at the dominant-taxon level at site V was probably caused by a favourable species composition that reduced the risk of confusion between taxa. There was a large difference between segment-based and area-based accuracy, likely because some of the correctly classified segments at site V covered a large area.
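The divergence between the two assessments follows from how each is weighted. Segment-based accuracy counts every validation segment once, whereas area-based accuracy weights each segment by its area. The minimal sketch below uses hypothetical segment areas to show how a few large, correctly classified segments can lift the area-based figure well above the segment-based one.

```python
def overall_accuracies(validation):
    """Segment- and area-based overall accuracy.

    validation: list of (area_m2, is_correct) tuples, one per segment.
    """
    n_correct = sum(1 for _, ok in validation if ok)
    segment_based = n_correct / len(validation)
    total_area = sum(area for area, _ in validation)
    area_based = sum(area for area, ok in validation if ok) / total_area
    return segment_based, area_based


# Hypothetical: two large correct segments, several small incorrect ones.
validation = [(40.0, True), (35.0, True), (2.0, True), (3.0, False),
              (2.5, False), (1.5, False), (4.0, True), (2.0, False)]
print(overall_accuracies(validation))
```

Here half of the segments are correct (0.5), but they cover 81 of 90 m² (0.9).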

A challenge in OBIA is the relative flexibility of the framework (e.g., [33]). Segmentation parameters, classification method, and discriminating object features all influence the classification result, and routines for an objective determination of these parameters are still missing. We used a relatively small scale parameter, which produced segments smaller than the objects to be classified, i.e., vegetation stands (over-segmentation). This is advantageous compared to under-segmentation [51], because if one segment contains more than one object, a correct classification is impossible. Kim et al. [44] and Ma et al. [52] found that the optimal segmentation scale varied between classes and that classification results could be optimised by using multiple segmentation scales.
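The argument against under-segmentation can be made concrete. Each segment receives a single label, so at best a classifier assigns the class that covers most of the segment, and the rest of that segment is necessarily wrong. The sketch computes this upper bound on area-based accuracy for two hypothetical segmentations of the same pair of adjacent stands.

```python
def max_achievable_area_accuracy(segments):
    """Upper bound on area-based accuracy for a given segmentation.

    segments: list of {class_label: area} dicts giving the reference
    composition of each segment. With one label per segment, at most the
    majority-class area of each segment can be classified correctly.
    """
    best = sum(max(seg.values()) for seg in segments)
    total = sum(sum(seg.values()) for seg in segments)
    return best / total


# Same hypothetical 100 m2 of two adjacent stands, segmented two ways.
under = [{"helophyte": 60.0, "nymphaeid": 40.0}]   # one segment spans both
over = [{"helophyte": 30.0}, {"helophyte": 30.0},  # segments nested in stands
        {"nymphaeid": 25.0}, {"nymphaeid": 15.0}]
print(max_achievable_area_accuracy(under))  # 0.6
print(max_achievable_area_accuracy(over))   # 1.0
```

Over-segmentation only removes this ceiling. It does not guarantee that the smaller segments are labelled correctly.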

Time measurements revealed that manual mapping of dominant taxa can be faster than OBIA where vegetation cover and complexity are low. However, in terms of overall time-efficiency, and for areas larger than our 100 m × 100 m test sites, automated classification outperforms manual mapping. This is because manual-mapping time is proportional to the mapped area, i.e., if the area doubles, so does the time needed for manual mapping. A doubling of the area would, however, have only a small effect on the time needed to execute the OBIA. The main effects would be longer processing time and potentially more time to assemble reference data over a larger area. When applying OBIA to large or multiple image files, however, limited computing power may become a constraint.
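The scaling argument can be written as a simple linear model. Manual time grows in proportion to area, whereas OBIA time is a largely fixed cost plus a smaller area-dependent part. The sketch reuses the measured times for site I, one 1-ha test site (2.06 h manual, 2.25 h OBIA). The split of the OBIA time into 2.0 h fixed and 0.25 h per hectare is a hypothetical assumption, not a measurement from the study.

```python
def manual_hours(area_ha, hours_per_ha):
    # Manual digitising assumed to scale linearly with mapped area.
    return hours_per_ha * area_ha


def obia_hours(area_ha, fixed_h, hours_per_ha):
    # Hypothetical split of OBIA effort: a fixed part (segmentation set-up,
    # feature selection, training) plus an area-dependent part
    # (processing, reference data).
    return fixed_h + hours_per_ha * area_ha


def break_even_area(manual_rate, fixed_h, obia_rate):
    """Area (ha) above which OBIA is faster; None if it never is."""
    if manual_rate <= obia_rate:
        return None
    return fixed_h / (manual_rate - obia_rate)


# Site I: 2.06 h manual and 2.25 h OBIA were measured for 1 ha.
# The 2.0 h fixed + 0.25 h/ha split of the OBIA time is an assumption.
MANUAL_RATE, OBIA_FIXED, OBIA_RATE = 2.06, 2.0, 0.25
for area in (1, 2, 10):
    print(f"{area:>3} ha  manual {manual_hours(area, MANUAL_RATE):5.2f} h"
          f"  OBIA {obia_hours(area, OBIA_FIXED, OBIA_RATE):5.2f} h")
print(f"break-even at about {break_even_area(MANUAL_RATE, OBIA_FIXED, OBIA_RATE):.2f} ha")
```

Under these assumptions, OBIA overtakes manual mapping at roughly 1.1 ha. With the site IV times (5.39 h versus 2.12 h) and the same assumed fixed cost, the break-even point falls below 1 ha. This is consistent with OBIA already being faster at that site.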

Heavy rains after the image acquisition forced us to postpone the field work to the next growing season. The one-year time lag between image acquisition and field work is a potential source of error. However, during field work the aquatic plants were at the same development stage as during image acquisition, and the vegetation appeared very similar to the printed images. In the field in 2012, we were able to locate without problems both the training and the control points selected on the 2011 UAS-orthoimage. This is supported by the high number of correct predictions during the image interpreter training. Another potential source of error is misclassification of taxa during manual mapping, which might have produced incorrect training and validation data. In a comparable lake environment but with a larger variety of taxa, Husson et al. [16] achieved an overall accuracy of 95% for visual identification of species.

Lightweight UASs are flexible and easy to handle, allowing their use even in remote areas that are difficult to access for field work. UAS-technology is also especially favourable for monitoring purposes with short and/or user-defined repetition intervals. Our automated classification approach for water versus vegetation and at the growth-form level is highly applicable in lake and river management [53] and aquatic plant control, for example in the evaluation of rehabilitation measures [54]. Valta-Hulkkonen et al. [55] also found that a lake’s degree of colonisation by helophytes and nymphaeids, as detected by remote sensing, was positively correlated with the nutrient content of the water. For a full assessment of the ecological status of lakes, field sampling cannot currently be replaced by the method proposed here. One reason is uncertainty in the classification of certain taxa, which might be solved in the future. The other is that submerged vegetation is not included. The latter is a considerably larger problem in temperate regions, where hydrophyte flora dominates, than in the boreal region, where the aquatic flora naturally has a high proportion of emergent plants [18]. In the boreal region, with its high number of lakes, the proposed method has large potential. Most boreal lakes are humic [56] with high water colour. This increases the importance of non-submerged plants, because low water transparency hinders the development of submerged vegetation [57]. The taxonomic resolution achieved in our classification allows calculation of a remote-sensing-based ecological assessment index for non-submerged vegetation in coloured lakes, as suggested by Birk and Ecke [20].

UASs are still an emerging technology facing technical and regulatory challenges [58]. In the future, a systematic approach to explore image quality problems associated with UAS-imagery is needed. Compared to conventional aerial imagery, the close-to-nadir perspective in the UAS-orthoimage reduced angular variation and associated relief displacement of the vegetation. We used high image overlap and external ground control points for georeferencing. Therefore, the geometric accuracy and quality of the orthoimage produced in our study were not affected by autopilot GPS accuracy or aircraft stability (see also [16]). Of concern for image quality, however, was that the UAS-orthoimage was assembled from different flights undertaken at different times of the day and under varying weather conditions. This resulted in different degrees of wave action, cloud reflection, and sunglint, as well as varying position and size of shadows in different parts of the orthoimage. The high image overlap allowed individual images of poor quality to be excluded from the data set prior to orthoimage production. In Sweden, UASs that may be operated by registered pilots without special authorisation at heights above 120 m can have a maximum total weight of 1.5 kg [59]. A challenge with such miniature UASs is their limited payload [15]. Therefore, we used a lightweight digital compact camera. Here, very-high-resolution true-colour images performed well without compromising UAS flexibility. However, owing to recent technical development and progress in the miniaturisation of sensors, lightweight UASs with multispectral and hyperspectral sensors are becoming available (e.g., [60]), although such equipment is still relatively expensive. Inclusion of more optical bands in UAS-imagery, especially in the (near-)infrared region [39], will likely increase classification accuracy at a high taxonomic level. Another relevant development is the derivation of 3D data from stereo images or UAS LiDAR sensors. Such data enable the inclusion of height data together with spectral data, potentially improving the accuracy of automated growth-form and taxon discrimination [61].