1. Introduction

Coastal zones face unprecedented challenges from intensifying extreme weather events and accelerating sea level rise, necessitating robust and reliable monitoring systems for surf impact detection [1,2]. Traditional monitoring approaches, including in situ sensors, numerical simulations, and manual observations, become unreliable or inoperable during severe weather conditions, precisely when monitoring is most critical [3,4,5,6]. Surf impacts during typhoons can generate forces exceeding 250 kN/m², potentially causing catastrophic damage to coastal structures and threatening public safety [7,8,9]. This creates an urgent need for automated, resilient monitoring systems capable of continuous operation in extreme conditions.

Surf constitutes a fundamental phenomenon in coastal environments, arising from interactions between ocean waves and various coastal features and seabed topographies [10,11,12]. Depending on the context, surf exhibits distinct behaviors, manifesting as either shallow-water surf over gradual slopes or high-energy impacts on steep coastal features, such as rocky shorelines [13]. In shallow water, nonlinear effects dominate wave deformation, causing the wave crest to propagate faster than the trough. This leads to steepening on the front face, flattening on the rear, and eventual forward collapse [14,15]. Conversely, surf impacts on steep coastal features produce highly localized and energetic events, characterized by prominent splash columns and high-magnitude, short-duration impact forces [16,17].

Figure 1a,b present representative surf events captured during typhoon observations.

Despite the significant impacts of surf on coastal environments, traditional monitoring methods face substantial challenges during extreme weather conditions. Field monitoring techniques (e.g., pressure sensors mounted on seawalls) often fail to capture the full spatial and temporal characteristics of surf impacts, particularly the complex splash patterns and impact forces that are critical indicators of potential structural damage [18,19,20]. The deployment of sensors in surf zones is inherently constrained by hazardous wave conditions, leading to frequent equipment loss or data discontinuity. In contrast to field monitoring, laboratory and numerical approaches are often employed to overcome these limitations. However, they also exhibit inherent constraints. While laboratory surf flumes can simulate surf, they cannot fully replicate the complex interaction between typhoon-driven surf and coastal structures, especially the violent impact processes and splash formations that characterize real-world breaking events. Numerical simulations employ various hydrodynamic equation-based approaches including refraction–diffraction equation models, Boussinesq equation models, and Navier–Stokes equation models. While refraction–diffraction models are suitable for simple topographies with gradual slopes, Boussinesq and Navier–Stokes models can handle complex topographies but require temporal steps significantly smaller than wave periods and spatial discretization much smaller than wavelengths. These computational constraints result in intensive processing demands, making it challenging to accurately model the highly nonlinear, multi-phase dynamics of surf impacts and splash generation in real time [21,22,23,24].

Recent advances in video-based monitoring and deep learning have enhanced wave height measurements, real-time breaking pattern analysis, and shoreline change tracking, particularly during extreme weather events like typhoons [25,26,27]. Video monitoring offers comprehensive spatial coverage and high temporal resolution, overcoming limitations of traditional methods such as wave buoys and pressure sensors, which are prone to equipment failure and require costly maintenance in harsh conditions [28,29,30].

Video-based monitoring and deep learning have significantly enhanced various aspects of coastal observation, offering advantages over traditional methods such as higher spatial-temporal resolution, non-intrusiveness, and the ability to capture dynamic processes in real time. These technologies have been successfully applied to tasks such as shoreline change detection, surf zone dynamics monitoring [31], and surf pattern classification [32]. Advanced deep learning approaches, including convolutional neural networks (CNNs) and optical flow techniques, have demonstrated remarkable effectiveness in extracting nearshore parameters and analyzing coastal processes. For instance, the LEUCOTEA system on the Mediterranean coast combines CNNs and optical flow to assess tidal phases, wave dynamics, and storm surges in real time, providing a robust framework for coastal hazard assessment [33]. Similarly, automated wave height monitoring through breaking zone detection [34,35] and swash zone analysis [36,37,38] further highlight the potential of video-based methods. However, despite these advancements, the specific application of video-based monitoring and deep learning to surf impact detection during extreme weather events, particularly typhoons, remains largely unexplored. A key challenge lies in the limited availability of high-quality video datasets capturing surf impacts under such conditions. The combination of harsh environmental factors, equipment durability requirements, and the sporadic nature of typhoon events makes data acquisition exceptionally difficult. Additionally, existing systems often struggle to maintain performance during adverse conditions, such as low-light periods or extreme weather [8]. These limitations underscore the critical need for further research to develop robust methods for surf impact detection during typhoons.

YOLO models have significantly advanced video-based monitoring capabilities in coastal and environmental applications, offering an optimal balance between detection accuracy and computational efficiency for real-time monitoring. The architecture has proven effective across diverse applications: achieving high accuracy in underwater object detection despite poor visibility [39], identifying shoreline plastic debris using RGB-NIR imagery [40], detecting floating debris in aquatic environments [41], and classifying species in ecological monitoring [42]. However, its use in surf or ocean wave observation, particularly during extreme weather, remains underexplored. This study leverages YOLO’s efficiency to address this gap, adapting it for real-time surf impact detection under typhoon conditions. Recent YOLO iterations (v9 [43], v10 [44], and v11 [45]) introduce architectural innovations that are particularly well-suited for typhoon surf detection scenarios. The Programmable Gradient Information (PGI) enhances the model’s ability to capture rapid surf motions, while Generalized Efficient Layer Aggregation Networks (GELAN) effectively process varying scales of wave impacts. The Cross-Stage Partial with Spatial Attention (C2PSA) mechanism improves detection reliability under different lighting conditions from day to night, and anchor-free detection strategies ensure real-time processing capability. These architectural advantages align precisely with the requirements of typhoon surf monitoring, where the detection system must process dynamic wave impacts in real time while maintaining reliability across different times of day. This study examines the performance of multiple YOLO variants in this specialized monitoring scenario.

This study proposes a lightweight YOLO-based detection framework for monitoring surf impacts on rocky shorelines during typhoon events. Previous studies have primarily focused on analyzing surf dynamics in shallow waters, leaving the critical challenge of real-time surf impact detection during extreme weather conditions largely unexplored. We address this gap by developing an efficient detection system that leverages modern YOLO architectures’ strengths in processing high-speed motions and handling varying lighting conditions. The framework achieves reliable detection performance with minimal computational requirements, making it particularly suitable for continuous monitoring in coastal safety applications. The primary contributions of this study encompass:

- (1) Development of a first-of-its-kind specialized surf impact dataset: We developed a pioneering high-resolution dataset capturing typhoon-induced surf impacts through continuous coastal monitoring during five major typhoons. This comprehensive dataset, encompassing diverse temporal and environmental conditions, addresses the critical gap in labeled data for extreme weather coastal monitoring.

- (2) A lightweight YOLO-based framework for surf detection: We present an efficient YOLO-based detection framework for real-time surf impact monitoring during typhoons, balancing detection accuracy and computational efficiency for continuous coastal surveillance.

- (3) Performance comparison under extreme conditions: We assess multiple object detection models (Faster R-CNN, RT-DETR-l, YOLO series) in typhoon conditions. YOLOv6n demonstrates superior robustness against nighttime monitoring and light interference, outperforming other models prone to overlapping boxes and missed detections.

2. Materials

2.1. Dataset Preparation

The monitoring system was deployed at the Chongwu Tide Gauge Station in Quanzhou, Fujian Province, China. The DH-SPCAW230U camera (sourced from Zhejiang Dahua Technology Co., Ltd., Hangzhou, China) was strategically mounted on the eastern side of the tide gauge station platform, approximately 15 m above sea level, providing an unobstructed view of the surf–rock interactions along the coastal outcrop (Figure 1c,d). This installation location was specifically chosen to capture breaking surf impacts against the rocky shoreline during typhoon events.

The monitoring system employs a DH-SPCAW230U coastal surveillance camera equipped with a 1/2.8-inch CMOS sensor (2.0 megapixels) and 30× optical zoom lens (focal length 4.5–135 mm). The camera system is engineered for extreme maritime environments, featuring 316L stainless steel housing with WF2, NEMA4X, and C5-M anti-corrosion certifications, and IP68 waterproof rating. The imaging system operates at 1920 × 1080 resolution with 50 fps capture rate, maintaining high-quality image acquisition across diverse lighting conditions through enhanced low-light sensitivity (0.005lux@F1.6 in color mode; 0.0005lux@F1.6 in monochrome mode) and 100 m infrared illumination capability.

The surveillance data collection period extended from 26 June 2024 (15:00:00 UTC + 8) to 20 November 2024 (09:07:50 UTC + 8), encompassing five significant typhoon events. The dataset composition, summarized in Table 1, includes observation periods, extracted frames (video frames with potential surf events identified through preprocessing), labeled images (manually annotated frames with surf impact bounding boxes), and augmented images (enhanced versions of labeled images using data augmentation to simulate diverse conditions).

Video data were processed through a Preprocessing and Initial Frame Screening pipeline (Section 2.2), yielding 59,028 frames with potential surf impacts. The pipeline uses computer vision to identify frames showing significant surf motion and activity.

From the extracted 59,028 frames, a subset of 2855 images was annotated using the LabelImg tool. These annotated images document instances of surf events and were stratified across the five typhoon events, as detailed in Table 1. The annotation process emphasized precise identification of surf impacts, thereby ensuring high-quality data for model training and evaluation.

The annotation process presented two challenges that had to be resolved to ensure the reliability of the dataset. The first is subjective bias in annotation: surf impacts vary substantially in visual features such as splash size and intensity, which can produce discrepancies between annotators. Standardized annotation guidelines were implemented to minimize annotator variability and ensure consistent labeling. The second is illumination variability: the dataset encompasses footage captured across diverse lighting conditions, ranging from bright daytime to dim nighttime settings. Nighttime videos were harder to annotate because of lower visibility, requiring annotators to rely on subtle visual cues to identify surf impacts. This additional variability was addressed by selecting frames with distinguishable features and excluding those with highly ambiguous characteristics.

The combination of automated frame screening and rigorous manual annotation established a robust foundation for training and evaluating the proposed detection model.

2.2. Preprocessing and Initial Frame Screening

The primary objective of this preprocessing pipeline is to systematically identify video frames that potentially contain surf events for dataset construction. Through automated frame selection and filtering, this pipeline significantly reduces the manual annotation workload while maintaining the quality of the training dataset. All parameters in the following stages were determined through extensive experimentation to achieve optimal performance in surf impact detection. The process of identifying potential surf impact frames for dataset annotation comprises four sequential stages, detailed below:

- (1) Frame Preprocessing

This stage optimizes video frames for analysis by isolating relevant regions and enhancing salient features: (i) Region of Interest (ROI): To optimize computational efficiency, analysis is restricted to the lower half of the video frame (0.5 H to H), where surf predominantly occurs. (ii) Grayscale Conversion: Frames undergo grayscale conversion to facilitate pixel intensity calculations and minimize computational complexity. (iii) Gaussian Blurring: A Gaussian blur (kernel size: 21 × 21) is applied to smooth the image, minimizing noise and ensuring feature consistency.

- (2) Motion Detection

Motion detection identifies regions exhibiting significant movement indicative of potential surf: (i) Background Subtraction: An improved mixture of Gaussians (MOG2) background modeling algorithm differentiates moving objects (foreground) from static areas (background). (ii) Background Model Parameters: A history of 1000 frames and a variance threshold of 32 are used to adaptively model the background and identify motion effectively. (iii) Morphological Operations: Noise in the motion mask is reduced by applying morphological operations, including opening (erosion followed by dilation) and dilation.

- (3) Surf Impact Identification

This stage implements a series of thresholds to determine frames likely to contain surf events: (i) Motion Ratio Threshold: Frames with a motion ratio exceeding 0.04 are flagged for further analysis. (ii) Motion Change Rate: Sudden motion changes greater than 0.02 are used to identify abrupt and high-energy events. (iii) Frame Interval Control: To prevent redundant detections, a minimum interval of 50 frames is enforced between consecutive detections. (iv) Dynamic Thresholding: Motion ratios are compared to the historical average (scaled by 1.5×) to dynamically adjust detection thresholds based on scene activity.

- (4) Temporal Analysis

Temporal analysis ensures that surf events are detected as abrupt deviations from historical motion patterns: (i) Motion History Window: A sliding window of 30 frames is maintained to provide temporal context. (ii) Historical Comparison: Current frame motion data are compared with the motion history to detect sudden, impactful movements characteristic of surf impacts.

The pipeline efficiently identifies potential surf impact frames, significantly reducing manual annotation workload while preserving valuable instances for dataset construction.
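The screening decision described in the stages above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the thresholds (0.04, 0.02, 50 frames, 1.5×, 30-frame window) come from the text, but the way they are combined (all conditions must hold at once), the use of the absolute frame-to-frame difference as the change rate, and the names `motion_ratio` and `screen_frames` are assumptions. The motion mask is taken as a plain 2D list standing in for the MOG2 foreground mask.

```python
from collections import deque

# Thresholds quoted in the text; how they are combined is an assumption.
MOTION_RATIO_MIN = 0.04   # motion ratio threshold
CHANGE_RATE_MIN = 0.02    # motion change rate threshold
MIN_FRAME_GAP = 50        # minimum interval between detections (frames)
DYNAMIC_SCALE = 1.5       # scale applied to the historical average
HISTORY_WINDOW = 30       # sliding motion history window (frames)


def motion_ratio(mask):
    """Fraction of foreground (non-zero) pixels in a 2D motion mask."""
    total = sum(len(row) for row in mask)
    moving = sum(1 for row in mask for px in row if px)
    return moving / total if total else 0.0


def screen_frames(ratios):
    """Return indices of frames flagged as candidate surf impacts."""
    history = deque(maxlen=HISTORY_WINDOW)
    flagged = []
    last_hit = None
    prev = None
    for idx, ratio in enumerate(ratios):
        # Assumed definition: absolute change relative to the previous frame.
        change = abs(ratio - prev) if prev is not None else 0.0
        baseline = sum(history) / len(history) if history else 0.0
        far_enough = last_hit is None or idx - last_hit >= MIN_FRAME_GAP
        if (ratio > MOTION_RATIO_MIN
                and change > CHANGE_RATE_MIN
                and ratio > DYNAMIC_SCALE * baseline
                and far_enough):
            flagged.append(idx)
            last_hit = idx
        history.append(ratio)
        prev = ratio
    return flagged
```

With a quiet sequence containing three spikes at frames 40, 61, and 102, only frames 40 and 102 are flagged under these assumptions, because the second spike falls inside the 50-frame interval after the first.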

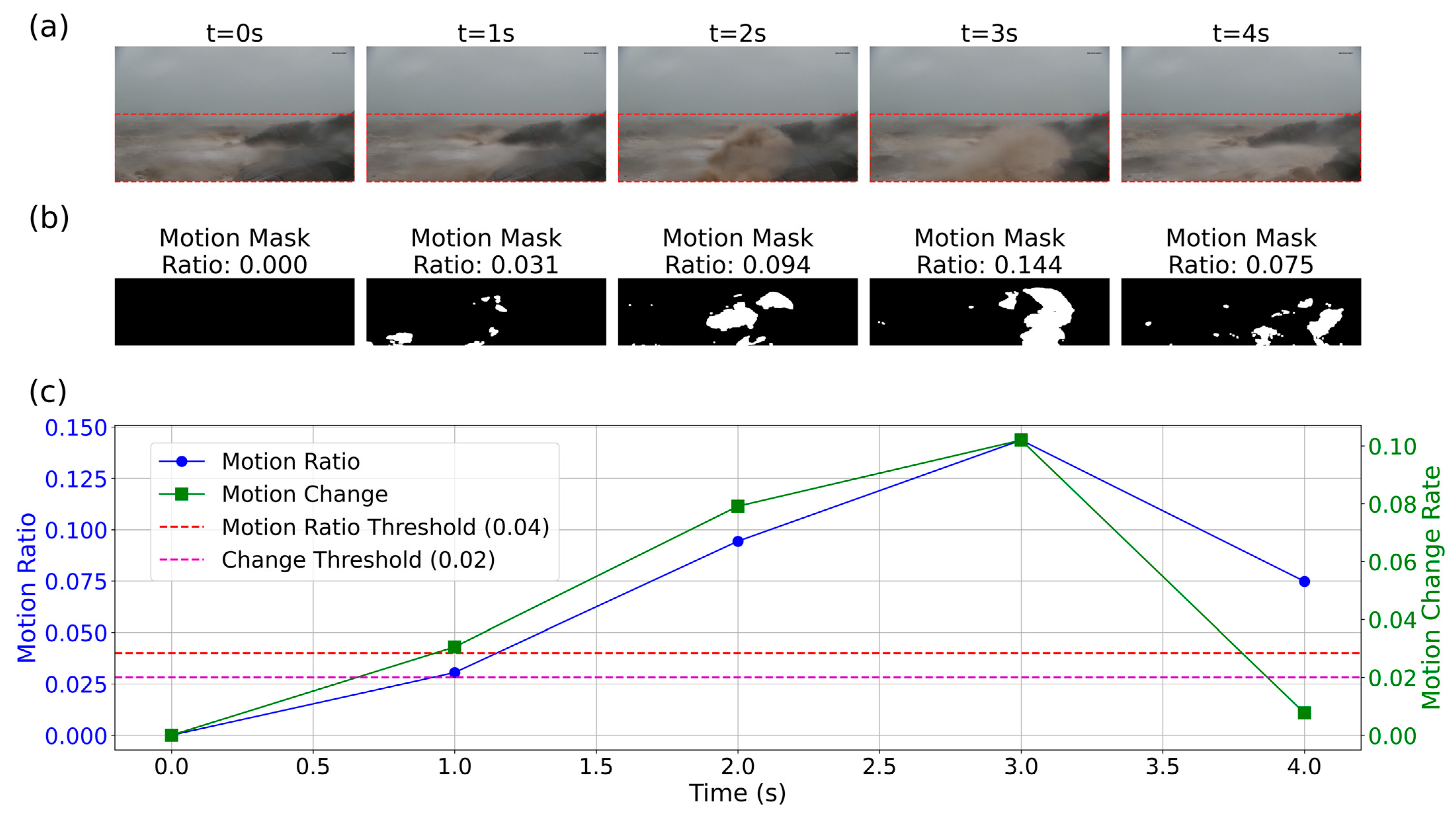

Figure 2 illustrates our detection framework through a representative surf sequence. The monitored coastal region in the original frames (Figure 2a, red dashed boundaries) captures surf evolution over a 4 s interval. The motion masks (Figure 2b), generated through MOG2-based background subtraction, effectively isolate surf impact zones from static elements. Quantitative analysis (Figure 2c) reveals temporal dynamics through two metrics: Motion Ratio, quantifying the spatial extent of movement, and Motion Change Rate, tracking temporal variations. The detection thresholds (0.04 and 0.02) successfully discriminate significant surf impacts from background motion, validated by the correlation between peak metric values and visually confirmed surf events.

2.3. Data Augmentation

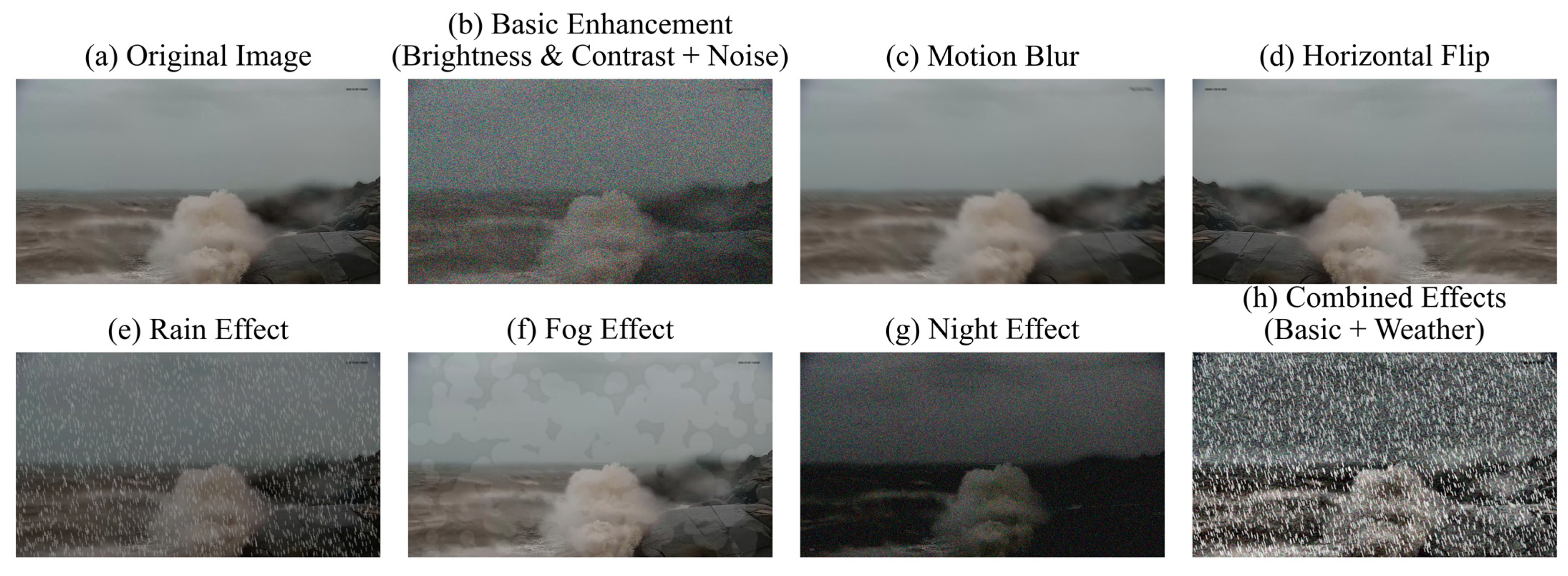

To enhance the diversity of the dataset and improve the robustness of the detection model, we applied two groups of data augmentation strategies to simulate real-world variations in image characteristics, lighting, and weather conditions. These strategies ensured the model could generalize effectively across diverse scenarios. Examples of the applied augmentation strategies are illustrated in Figure 3, which includes both Basic Enhancements and Weather and Lighting Simulations alongside the original image for comparison. The Basic Enhancements group includes the following: (i) brightness and contrast adjustment (±0.3, normalized scale) with noise (Gaussian, variance 50–150 on a 0–255 intensity scale, or multiplicative, 0.7–1.3, p = 1.0), simulating lighting and sensor noise (Figure 3b); (ii) blur or motion blur (kernel size 5–7 pixels, p = 0.8) to mimic camera motion (Figure 3c); and (iii) horizontal flipping (p = 0.5) for orientation variety (Figure 3d). The Weather and Lighting Simulations group includes the following: (i) weather effects such as rain (brightness coefficient 0.7, drop width 2 pixels, blur 5) or fog (coefficient 0.3–0.5) for environmental interference (Figure 3e,f); (ii) color jitter (brightness, contrast, saturation ± 0.3, hue ± 0.2, p = 1.0) for lighting variation (Figure 3g); and (iii) CLAHE (clip limit 6.0, 8 × 8 grid) or equalization (p = 0.8) for contrast enhancement. Each of the 2855 original images was augmented twice, once per group, yielding 8565 images, with combined effects (e.g., brightness adjustment and rain) shown in Figure 3h.

For each original image, one augmentation was applied from each group, generating two augmented images per original image. This process expanded the dataset from 2855 labeled images to 8565 images, tripling its size. The augmented image count for each typhoon event is detailed in Table 1, highlighting how the augmentation strategy contributed to the dataset’s overall size. All augmentations preserved the integrity of bounding box annotations to ensure high-quality training data for the detection model.
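To make the bounding-box bookkeeping concrete, the sketch below shows how a horizontal flip would have to update annotations, together with the dataset-size arithmetic. It assumes YOLO-style normalized labels `(class, x_center, y_center, width, height)`; the text does not state which annotation format was exported from LabelImg, and the function names are illustrative. Photometric augmentations (brightness, noise, blur, rain, fog, color jitter, CLAHE) leave box coordinates unchanged, so only geometric transforms such as the flip need this step.

```python
def hflip_yolo_boxes(boxes):
    """Mirror normalized YOLO-style boxes across the vertical image axis.

    Each box is (class_id, x_center, y_center, width, height) in [0, 1].
    Only x_center changes; width and height are preserved.
    """
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in boxes]


def augmented_dataset_size(n_labeled, n_groups=2):
    """Originals plus one augmented copy per augmentation group."""
    return n_labeled * (1 + n_groups)


boxes = [(0, 0.25, 0.6, 0.2, 0.3)]
print(hflip_yolo_boxes(boxes))        # [(0, 0.75, 0.6, 0.2, 0.3)]
print(augmented_dataset_size(2855))   # 8565
```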

3. Methods

In this study, we evaluate multiple state-of-the-art object detection models to determine their suitability for surf detection in coastal environments.

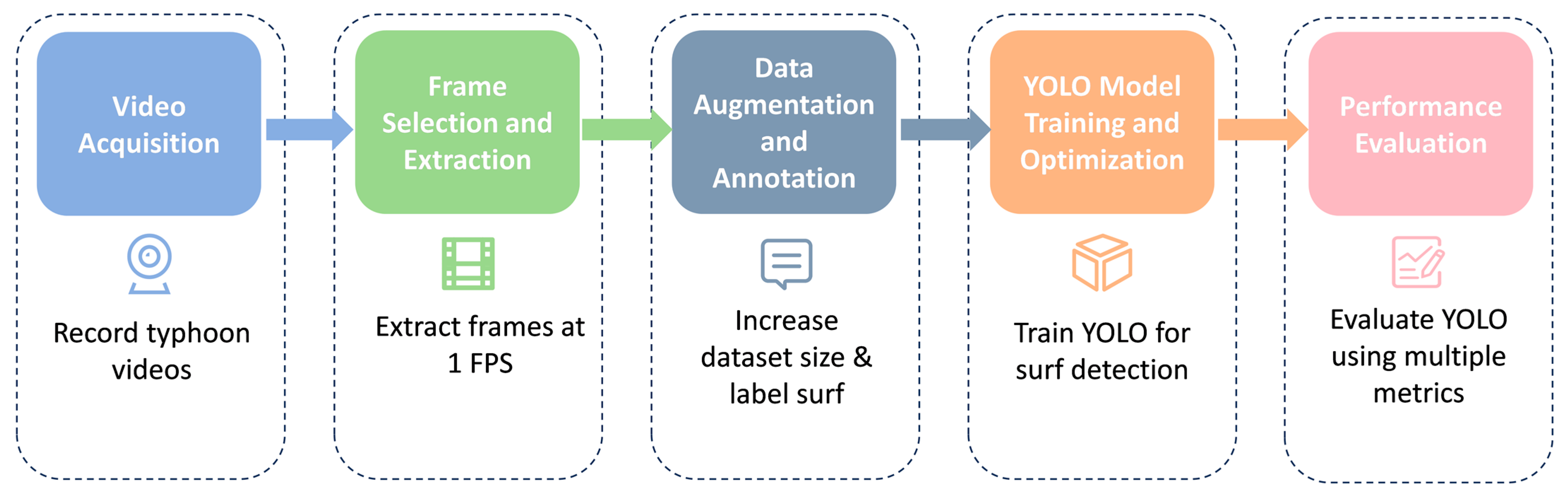

Figure 4 illustrates the technical roadmap of our proposed method, which systematically outlines the complete workflow from data acquisition to performance evaluation. These models represent recent advancements in real-time detection architectures, each featuring unique innovations to balance detection accuracy, computational efficiency, and adaptability to challenging conditions such as varying lighting and high surf dynamics.

The development of these detection models has undergone significant evolution. Starting from the traditional two-stage detector Faster R-CNN [46] (30 June 2015), through the development of efficient single-stage YOLO detectors, to the recent transformer-based RT-DETR [47] (6 July 2023) and the latest YOLOv11 [45] (30 September 2024), each model represents key advancements in detection capabilities and computational efficiency.

3.1. Overview of Model Architectures

Modern object detection models can be broadly categorized into two primary approaches. (i) Two-stage Detectors: Represented by Faster R-CNN, these detectors first generate region proposals and subsequently refine them through classification and bounding box adjustments. While known for their high accuracy, the two-stage approach is computationally expensive and less suited for real-time applications. (ii) One-stage Detectors: Exemplified by the YOLO family, these architectures combine object localization and classification into a single forward pass, significantly reducing inference time. One-stage models are ideal for real-time tasks like surf impact detection, where rapid processing of high-resolution video streams is critical.

Each architecture consists of three key components. Backbone: Functions as the primary feature extractor, transforming raw images into multi-scale feature representations. Neck: Aggregates and enhances features across different scales for improved detection of objects at varying sizes. Head: Generates final predictions, including bounding boxes and class probabilities.

These components are crucial for addressing the challenges inherent in surf detection, such as high-speed motion, complex patterns, and diverse environmental conditions.

3.2. Selected Models

The evaluation includes a diverse range of models, reflecting advancements in detection accuracy, real-time performance, and architectural innovation. These models were selected based on their potential to address the specific challenges of surf impact detection.

YOLOv5n [48]: A lightweight version of YOLOv5, optimized for speed and efficiency. It uses a CSPNet backbone to reduce computational complexity and a PANet neck for robust multi-scale feature fusion. Its compact architecture makes it particularly suitable for edge-based real-time monitoring tasks.

YOLOv6n [49]: Incorporates Balanced Inference Cost (BiC) to balance speed and accuracy, alongside SimCSPSPPF (Simple CSP-based Spatial Pyramid Pooling Fast) for efficient global context extraction. These features enhance its effectiveness in resource-constrained deployments requiring continuous operation.

YOLOv8n [50]: Introduces an anchor-free detection strategy that simplifies training and inference while improving detection robustness. Dynamic label assignment and multi-task loss functions further enhance its suitability for complex surf detection scenarios.

- (2) Latest YOLO Iterations:

YOLOv9t [43]: Features Programmable Gradient Information (PGI) for enhanced gradient flow and GELAN (Generalized Efficient Layer Aggregation Network) for multi-scale feature extraction. It is particularly effective in detecting overlapping surf impacts in turbulent environments.

YOLOv10n [44]: Employs an NMS-free training strategy, optimizing bounding box localization and reducing false positives. Its dual-label assignment mechanism further improves detection accuracy in dynamic conditions, such as rapidly changing waveforms.

YOLOv11n [45], YOLOv11m, YOLOv11s: Introduce spatial attention mechanisms (C2PSA) and optimized detection heads, making these models highly effective for detecting surf impacts in low-light conditions or complex coastal environments.

- (3) Alternative Architectures:

RT-DETR-l [47]: A transformer-based architecture designed for real-time object detection. Its self-attention mechanisms allow it to process high-resolution images efficiently, making it suitable for high-precision detection of surf impacts in challenging scenarios.

Faster R-CNN [46]: Included as a baseline model, Faster R-CNN provides insights into the trade-offs between accuracy and computational efficiency. While accurate, its two-stage architecture limits its practicality for real-time monitoring applications.

Model selection and evaluation criteria were established to address the specific challenges of coastal surf monitoring applications. First, given the requirement for continuous video analysis during extreme weather events, real-time processing capability was prioritized, with a focus on architectures that could maintain both high frame rates and detection accuracy when handling high-resolution streams. Second, to evaluate deployment flexibility across different computational environments, we included models of varying computational complexity, from lightweight architectures suitable for edge devices to more sophisticated models for centralized processing systems. Third, we incorporated both CNN-based detectors and transformer-based architectures to provide comprehensive insights into how different algorithmic approaches handle the unique challenges of surf detection, such as rapid motion patterns and varying illumination conditions. Our evaluation framework quantified model performance through multiple metrics: detection accuracy using mean Average Precision (mAP) at different Intersection over Union (IoU) thresholds (0.5–0.95), computational efficiency measured by inference speed and resource utilization, and deployment practicality assessed through model size and memory requirements. This systematic approach enables evidence-based selection of architectures that optimize the balance between detection performance and operational constraints in real-world coastal monitoring systems.

3.3. Experimental Setting

The experiments were implemented using the PyTorch framework (version 2.3.0) [51], with model training conducted on a high-performance computing server equipped with a DCU K100 GPU (sourced from Hygon Information Technology Co., Ltd., Beijing, China) and 110 GB of system memory (RAM). Evaluation was performed on a workstation with an NVIDIA GeForce RTX 4060 GPU (sourced from NVIDIA Corporation, Santa Clara, CA, USA) and 32 GB of RAM. The dataset comprises 8565 labeled images from five typhoon events. To assess the model’s generalization to an unseen typhoon, images from the first four typhoons (Gaemi, Yagi, Kong-rey, Yinxing; n = 7464) were split into training (80%, n = 5972) and validation (20%, n = 1492) sets, while the entire fifth typhoon (Toraji; n = 1101) was used as the test set. This results in approximately 70% (n = 5972), 17% (n = 1492), and 13% (n = 1101) of the total dataset for training, validation, and testing, respectively. Input images were preprocessed by resizing from 1920 × 1080 to 640 × 640 pixels. Training employed SGD optimization with a batch size of 16, initial learning rate of 0.01, and 100 epochs. The model weights corresponding to the epoch with the best performance on the validation set were selected for subsequent evaluation.
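The event-wise split described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions: the per-typhoon counts for the first four events are placeholders (only the totals 7464 and 1101 appear in the text), the shuffle seed and the function name `split_by_event` are hypothetical, and the validation size is taken as the floor of 20%, which happens to reproduce the reported 5972/1492 split.

```python
import random


def split_by_event(samples, held_out_event, val_fraction=0.2, seed=0):
    """Hold out one event as the test set; split the rest into train/val.

    samples: list of (image_id, event_name) pairs.
    """
    test = [s for s in samples if s[1] == held_out_event]
    rest = [s for s in samples if s[1] != held_out_event]
    random.Random(seed).shuffle(rest)
    n_val = int(len(rest) * val_fraction)  # floor of 20%
    return rest[n_val:], rest[:n_val], test


# Placeholder per-event counts: only the totals (7464 and 1101) come from the text.
counts = {"Gaemi": 1866, "Yagi": 1866, "Kong-rey": 1866, "Yinxing": 1866, "Toraji": 1101}
samples = [(f"{name}_{i:05d}", name) for name, n in counts.items() for i in range(n)]

train, val, test = split_by_event(samples, held_out_event="Toraji")
print(len(train), len(val), len(test))  # 5972 1492 1101
```

Holding out a whole typhoon, rather than sampling test images at random, keeps near-duplicate frames from the same event out of both training and testing, which is what makes the test score a measure of generalization to an unseen event.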

3.4. Evaluation Metrics

To quantitatively evaluate the performance of the proposed surf detection method, we established a comprehensive evaluation framework incorporating both detection accuracy metrics and computational efficiency metrics, which are widely adopted in deep learning-based object detection tasks.

- (1) Detection Performance Metrics

The detection performance is evaluated using standard metrics from object detection literature. Given the challenging nature of surf detection in coastal surveillance videos, we selected the following metrics:

Precision ($P$), which quantifies the model’s ability to avoid false detections:

$$P = \frac{TP}{TP + FP}$$

where $TP$ represents correctly identified surf (True Positives) and $FP$ denotes incorrectly identified non-surf (False Positives). This metric is particularly important in our application as false detections could lead to unnecessary coastal warning signals.

Recall ($R$), which measures the model’s capability to detect all surf present in the scene:

$$R = \frac{TP}{TP + FN}$$

where $FN$ represents surf that the model failed to detect (False Negatives). A high recall is crucial for coastal monitoring systems to ensure no significant surf is missed.

For a comprehensive evaluation of detection accuracy, we utilize the Mean Average Precision ($\mathrm{mAP}$) metric at different Intersection over Union ($\mathrm{IoU}$) thresholds. The IoU threshold determines how well the predicted bounding box must overlap with the ground truth to be considered a correct detection.

The $\mathrm{mAP}_{50}$ metric evaluates detection performance at an IoU threshold of 0.5:

$$\mathrm{mAP}_{50} = \frac{1}{N} \sum_{i=1}^{N} AP_i^{\mathrm{IoU}=0.5}$$

where $AP_i^{\mathrm{IoU}=0.5}$ represents the Average Precision for class $i$ at $\mathrm{IoU}$ threshold 0.5, and $N$ denotes the number of classes. This metric provides a baseline assessment of detection capability.

To evaluate localization accuracy more rigorously, we employ $\mathrm{mAP}_{50\text{-}95}$, which averages the $\mathrm{mAP}$ over multiple $\mathrm{IoU}$ thresholds from 0.5 to 0.95 with a step of 0.05:

$$\mathrm{mAP}_{50\text{-}95} = \frac{1}{10} \sum_{t \in \{0.5, 0.55, \ldots, 0.95\}} \mathrm{mAP}_{t}$$

where $\mathrm{mAP}_{t}$ represents the mean Average Precision at $\mathrm{IoU}$ threshold $t$. This metric provides a more stringent evaluation of the model’s localization precision.
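The quantities defined above can be illustrated with a short, dependency-free sketch. It is not the evaluation code used in the study: the corner-format box convention `(x_min, y_min, x_max, y_max)` and all function names are assumptions, and the averaging function simply takes ten per-threshold mAP values as input rather than computing them from detections.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision TP/(TP+FP) and recall TP/(TP+FN) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# The ten IoU thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [0.5 + 0.05 * k for k in range(10)]


def map_50_95(map_per_threshold):
    """Average the mAP values obtained at each of the ten IoU thresholds."""
    if len(map_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one mAP value per IoU threshold")
    return sum(map_per_threshold) / len(map_per_threshold)
```

For example, two 2 × 2 boxes offset by one unit in each direction overlap with an IoU of 1/7, so that prediction would count as a false positive at every threshold from 0.5 upward.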

- (2) Real-time Performance Metrics

For real-world coastal monitoring applications, computational efficiency is crucial. We evaluate the system’s real-time performance using Frames Per Second ($\mathrm{FPS}$):

$$\mathrm{FPS} = \frac{1}{t_{avg}}$$

where $t_{avg}$ represents the average processing time per frame (in seconds). This metric directly reflects the system’s capability to process coastal surveillance video streams in real-time conditions.
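One way such a throughput measurement could be taken is sketched below. The harness is hypothetical (the text does not describe the timing procedure): `process_frame` stands in for a model's inference call, and the `clock` parameter is injectable only so the function can be exercised deterministically.

```python
import time


def measure_fps(process_frame, frames, clock=time.perf_counter):
    """Return (average seconds per frame, frames per second).

    process_frame: callable applied to each frame (e.g. model inference).
    frames: iterable of frames.
    clock: zero-argument callable returning the current time in seconds.
    """
    start = clock()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = clock() - start
    if count == 0 or elapsed <= 0:
        raise ValueError("need at least one frame and a positive elapsed time")
    t_avg = elapsed / count
    return t_avg, 1.0 / t_avg
```

In practice a few warm-up iterations are usually run and discarded before timing, since the first GPU inferences tend to include one-off initialization costs.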

4. Results

4.1. Comparative Analysis of Model Performance in Accuracy and Efficiency

The experimental results from our extensive evaluation of surf detection models are summarized in Table 2, which presents a detailed comparison across multiple performance metrics including detection accuracy, computational efficiency, and resource utilization for all evaluated architectures. These metrics reflect model performance on the test set, consisting of 1101 images from Typhoon Toraji.

The experimental results reveal significant insights into the performance characteristics of different model architectures. In terms of detection accuracy, YOLOv6n achieved the highest $\mathrm{mAP}_{50}$ score of 99.3%, demonstrating exceptional performance in basic detection tasks. This result is particularly noteworthy given its relatively lightweight architecture with only 4.2 M parameters. The YOLOv11 series, especially the larger variants, showed superior performance in strict detection metrics, with YOLOv11l achieving the highest $\mathrm{mAP}_{50\text{-}95}$ score of 78.4%. This indicates that while basic detection capabilities are comparable across different model sizes, larger models tend to provide more precise localization of surf impacts.

The analysis of precision and recall metrics reveals interesting patterns across different architectures. YOLOv5n and YOLOv8n achieved the highest precision of 98.5%, demonstrating excellent ability to minimize false detections. Meanwhile, Faster R-CNN and YOLOv6n showed superior recall rates exceeding 98.0%, indicating robust capabilities in detecting all surf impact instances. However, Faster R-CNN’s relatively low precision of 80.8% suggests a tendency toward over-detection, highlighting the challenges in balancing precision and recall in traditional architectures.

As shown in Table 2, computational efficiency analysis revealed substantial advantages of YOLO-series architectures over traditional two-stage detectors and transformer-based models. The YOLO family demonstrated superior real-time performance, with most variants achieving over 100 FPS. YOLOv6n emerged as the most efficient model, processing 161.8 frames per second with a minimal latency of 6.18 ms. This real-time capability stems from YOLO’s efficient single-stage detection strategy and streamlined network architecture. In contrast, the two-stage Faster R-CNN showed significant computational overhead, processing only 12.7 frames per second with a high latency of 78.71 ms, primarily due to its region proposal network and separate classification stage. The transformer-based RT-DETR-l, despite its architectural innovations, achieved only 36.6 frames per second, highlighting the computational cost of self-attention mechanisms.
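As a quick sanity check, the frame rates and latencies quoted in this paragraph are mutually consistent if throughput is taken as the reciprocal of per-frame latency. The snippet below is only an arithmetic illustration; the helper name and the dictionary keys are hypothetical.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


# (latency in ms, reported FPS) as quoted in the paragraph above.
reported = {
    "YOLOv6n": (6.18, 161.8),
    "two_stage_baseline": (78.71, 12.7),
}

for name, (latency_ms, fps) in reported.items():
    implied = round(fps_from_latency_ms(latency_ms), 1)
    print(f"{name}: implied {implied} FPS, reported {fps} FPS")
```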

4.2. Performance Analysis in Challenging Conditions

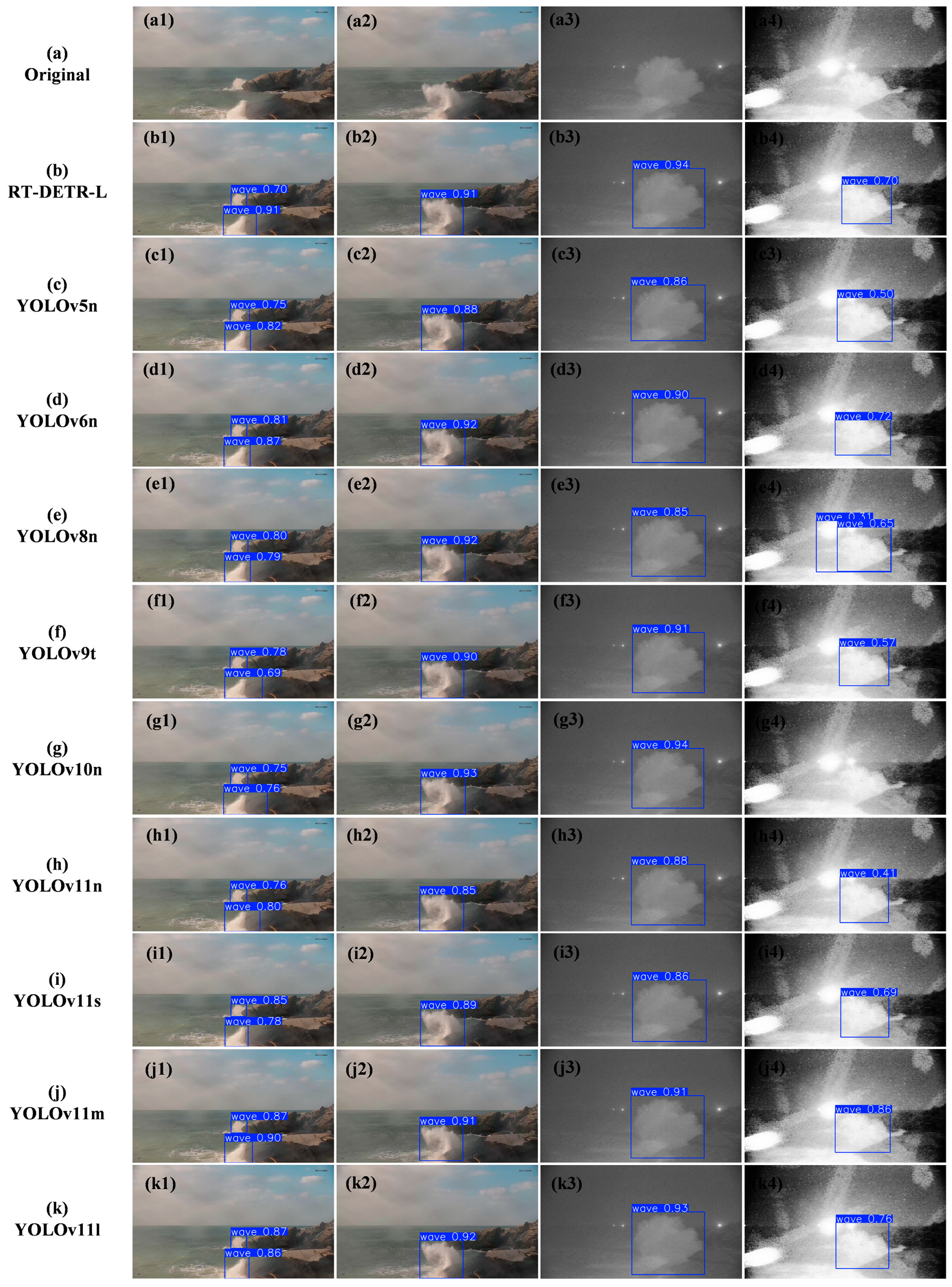

To further validate the quantitative results, we present qualitative detection results across different scenarios in Figure 5. The visualization comparison spans four representative conditions: multiple surf impacts in daylight, single surf impact in daylight, nighttime conditions, and scenarios with marine vessel light interference. Under standard daylight conditions (columns 1–2), most YOLO variants demonstrate precise bounding box localization with confidence scores typically exceeding 0.90, which aligns well with their high $\mathrm{mAP}$ scores shown in Table 2.

However, challenging scenarios with marine vessel light interference (column 4) reveal important limitations of certain architectures. For instance, YOLOv8n generates false positive detections with multiple overlapping bounding boxes, while YOLOv10n fails to detect the surf impact entirely under these conditions. These observations highlight the challenges in maintaining reliable detection performance when dealing with complex lighting conditions that simulate real-world maritime environments. Additionally, nighttime scenarios (column 3) demonstrate that while most models maintain detection capability, their confidence scores show notable variation, suggesting room for improvement in low-light conditions.

To complement these qualitative insights, we conducted a detailed quantitative analysis of YOLOv6n’s performance on two subsets extracted from the test set (Toraji, n = 1101 images): a “night” subset (n = 222 images) and a “strong interference” subset (n = 50 images). In the night subset, YOLOv6n achieved a precision of 96.2%, recall of 98.2%, mAP50 of 99.3%, and mAP50-95 of 78.9%, matching the overall test set performance (mAP50 = 99.3%). This consistency suggests robust performance in low-light conditions, likely due to data augmentation strategies such as brightness adjustment applied during training. In contrast, the strong interference subset, which represents intense light from ships or other sources, yielded a precision of 97.9%, recall of 92.0%, mAP50 of 98.5%, and mAP50-95 of 72.4%. The reduced recall and slightly lower mAP50 indicate that strong interference can lead to missed detections, though precision remains high. These findings align with Figure 5, where YOLOv6n detects surf with a confidence of 0.9 in the night scenario (column 3) and a confidence of 0.72 in the strong interference scenario (column 4).
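The text reports percentages for the strong interference subset but not the underlying detection counts. As an illustration only, the sketch below uses hypothetical counts (not taken from the paper) that happen to reproduce the reported precision and recall if each of the 50 images contained exactly one surf instance, which is itself an assumption.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from true/false positive and false negative counts."""
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("precision or recall is undefined for these counts")
    return tp / (tp + fp), tp / (tp + fn)


# Hypothetical counts, chosen for illustration: 50 ground-truth instances,
# 46 detected, 4 missed, and 1 false alarm.
precision, recall = precision_recall(tp=46, fp=1, fn=4)
print(f"precision = {precision:.1%}, recall = {recall:.1%}")
# prints "precision = 97.9%, recall = 92.0%"
```

Under these assumed counts, the drop in recall corresponds to only four missed events, which shows how sensitive the subset metrics are to a handful of images when n = 50.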

Most notably, as evident from Table 2, YOLO models achieved their superior speed while maintaining or even exceeding the accuracy of slower models. For instance, YOLOv6n’s 99.3% mAP50 surpassed both Fast R-CNN (98.3%) and RT-DETR-l (98.7%), while running 12.7× and 4.4× faster, respectively. This demonstrates that the architectural design of YOLO successfully addresses the speed–accuracy trade-off that traditionally challenges real-time object detection.
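The speed ratios quoted above follow directly from the throughput figures reported earlier in this section. A short sketch of the arithmetic, using only those FPS values (the dictionary and function names are illustrative):

```python
# Throughput in frames per second, as quoted in the text.
fps = {"YOLOv6n": 161.8, "Fast R-CNN": 12.7, "RT-DETR-l": 36.6}


def speedup(fast: str, slow: str) -> float:
    """Ratio of the throughput of `fast` to the throughput of `slow`."""
    return fps[fast] / fps[slow]


for baseline in ("Fast R-CNN", "RT-DETR-l"):
    print(f"YOLOv6n vs {baseline}: {speedup('YOLOv6n', baseline):.1f}x")
```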

4.3. Model Scalability and Resource Efficiency

A detailed analysis of the YOLOv11 family scaling characteristics was conducted by evaluating four model variants (nano, small, medium, and large) under identical conditions. As shown in Figure 6, the quantitative assessment of the YOLOv11 series demonstrated clear performance patterns: while mAP50 remained relatively stable across different scales (99.0% to 99.1%), the more stringent mAP50-95 metric showed substantial improvement from nano to large variants (74.6% to 78.4%). Precision remained consistently high across scales (97.9% to 97.8%), with recall showing slight improvement from nano to medium variants (97.2% to 97.5%).

The computational implications of model scaling were significant. As illustrated by the FPS curve in Figure 6, when model capacity increased, we observed a substantial decline in processing speed from nano to large variants (112.5 to 61.4 FPS), accompanied by increased latency (8.89 ms to 16.29 ms). Parameter count scaled approximately tenfold from the nano to the large variant (2.6 M to 25.3 M), with model size growing from 5.2 MB to 97.3 MB. This scaling pattern indicates a substantial computational cost for marginal improvements in detailed detection metrics. In contrast, YOLOv6n achieves a superior balance with its lightweight EfficientRep backbone and anchor-free detection, maintaining high accuracy (mAP50 = 99.3%) and exceptional speed (161.8 FPS), as detailed below.
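The cost of scaling can be summarized with a few ratios. The sketch below uses only the nano and large figures quoted above; the stricter accuracy metric (74.6% to 78.4%) is taken here to be mAP50-95, and the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    params_m: float   # parameter count, millions
    fps: float        # frames per second
    map50_95: float   # stricter accuracy metric, percent (assumed mAP50-95)


nano = Variant("YOLOv11n", params_m=2.6, fps=112.5, map50_95=74.6)
large = Variant("YOLOv11l", params_m=25.3, fps=61.4, map50_95=78.4)


def scaling_summary(small: Variant, big: Variant) -> dict[str, float]:
    """Compare a larger variant against a smaller one."""
    return {
        "param_ratio": big.params_m / small.params_m,
        "fps_retained": big.fps / small.fps,
        "accuracy_gain_pts": big.map50_95 - small.map50_95,
    }


summary = scaling_summary(nano, large)
print(
    f"{summary['param_ratio']:.1f}x parameters, "
    f"{summary['fps_retained']:.0%} of the throughput, "
    f"+{summary['accuracy_gain_pts']:.1f} points"
)
```

Read this way, roughly ten times the parameters buys a gain of a few points on the stricter metric while nearly halving throughput.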

The broader analysis across different architectural scales revealed that models in the 2–5 M parameter range offer the most favorable balance between performance and resource utilization. This is particularly evident when comparing YOLO variants with Fast R-CNN and RT-DETR-l. Despite having significantly fewer parameters (2.0–4.2 M vs. 28.3–32.0 M), lightweight YOLO models achieved superior detection accuracy and substantially higher processing speeds. YOLOv6n’s standout performance can be traced to its architectural innovations: an EfficientRep backbone reduces computational load (4.2 M parameters), while the Rep-PAN neck efficiently aggregates multi-scale features. Its decoupled head and anchor-free detection enhance precision (mAP50 = 99.3%) and adaptability to dynamic surf zones, supported by structural reparameterization for efficiency (161.8 FPS, 6.18 ms latency). To quantify suitability for real-time applications, we introduce a Real-time Performance Score (RPS = mAP50 × FPS/Latency). Here, YOLOv6n (2601.03) outperforms YOLOv5n (1832.31), YOLOv8n (2160.08), YOLOv11n (1253.23), Fast R-CNN (15.87), and RT-DETR-l (132.12). In contrast, YOLOv11n, while improving mAP50-95 (74.6%), sacrifices speed (112.5 FPS) due to increased complexity, making it less suited to our surf detection task, where real-time processing of a single, large target class is paramount. This metric underscores YOLOv6n’s exceptional balance of accuracy, speed, and responsiveness.
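The Real-time Performance Score can be recomputed from figures quoted in this section. The sketch below assumes that the accuracy term is mAP50 expressed in percent and that latency is in milliseconds; with those units, the rounded values in the text reproduce the reported scores to within about 0.1%. The value 99.0% for YOLOv11n is the lower end of the range quoted for the YOLOv11 family and is assumed here to be the nano result. Models whose inputs are not all quoted in the text are omitted.

```python
def rps(map50_pct: float, fps: float, latency_ms: float) -> float:
    """Real-time Performance Score: accuracy (percent) x FPS / latency (ms)."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return map50_pct * fps / latency_ms


# (mAP50 in percent, FPS, latency in ms, reported RPS), all quoted in the text.
models = {
    "YOLOv6n": (99.3, 161.8, 6.18, 2601.03),
    "YOLOv11n": (99.0, 112.5, 8.89, 1253.23),
    "Fast R-CNN": (98.3, 12.7, 78.71, 15.87),
}

for name, (acc, fps, latency, reported) in models.items():
    score = rps(acc, fps, latency)
    print(f"{name}: recomputed {score:.2f}, reported {reported:.2f}")
```

Because FPS and latency are nearly reciprocal, the score grows roughly with the square of throughput, so it weights speed far more heavily than accuracy. That suits a task where accuracy differences between models are small.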

The scaling analysis of the YOLOv11 family, combined with the comparative performance across different architectures, suggests that for real-world surf detection applications, YOLOv6n provides the most practical solution. Its high RPS (2601.03) reflects an optimal balance of accuracy (mAP50 = 99.3%), speed (161.8 FPS), and responsiveness (6.18 ms), critical for timely typhoon monitoring. Unlike newer variants like YOLOv11, which prioritize precision (e.g., mAP50-95 = 78.4% for YOLOv11l) at the expense of speed (61.4 FPS), YOLOv6n’s lightweight design aligns with the real-time demands of surf detection, a task with a single, dynamic target class in which rapid processing outweighs marginal accuracy gains. These findings demonstrate that YOLOv6n’s architectural innovations effectively address the computational challenges of real-time surf detection, making it well-suited for practical deployment in coastal monitoring systems.

5. Discussion

The superior performance of YOLO variants in surf detection stems from their architectural alignment with task-specific requirements. The single-stage detection approach achieved 99.3% mAP50 at over 100 FPS, significantly outperforming traditional two-stage detectors (98.3% mAP50, 12.7 FPS). This efficiency derives from YOLO’s grid-based prediction mechanism, which naturally suits surf characteristics where impacts present as large-scale, high-contrast phenomena occupying significant frame portions. The dense prediction nature and feature pyramid network structure effectively capture rapid shape changes and varying intensity patterns, while the compact model size (2–5 M parameters) enables deployment in resource-constrained coastal monitoring stations. Our YOLOv11 scaling analysis challenges conventional assumptions by demonstrating that lightweight variants achieve comparable accuracy to larger models while maintaining high processing speeds. Notably, among these lightweight variants, YOLOv6n excels due to its optimized design for real-time surf detection, outperforming even newer models like YOLOv11 by prioritizing the speed that is critical for typhoon monitoring over marginal precision gains, as detailed in Section 4.3.

The deployment of AI-based surf detection systems presents both opportunities and challenges in coastal monitoring applications. Our experiments demonstrate sustained detection accuracy above 95% across varying lighting conditions, weather patterns, and potential lens contamination from salt spray. The high processing speed of YOLO models enables implementation of additional preprocessing steps or ensemble methods to address environmental challenges without compromising real-time performance. While traditional instruments provide point measurements, these vision-based systems offer comprehensive spatial coverage and simultaneous tracking of multiple surf events, significantly expanding monitoring capabilities within existing infrastructure constraints. The efficiency of YOLO architectures particularly suits coastal monitoring stations, which often operate in remote locations with limited computational resources.

While YOLOv6n demonstrates robustness across various conditions, its practical deployment during typhoon events reveals certain limitations. The strong interference subset’s reduced recall (92.0% vs. 98.2% in the night subset) suggests that intense light sources, such as ship headlights, may cause missed detections, potentially compromising real-time coastal monitoring reliability. This could be mitigated by enhancing training data with additional interference simulations (e.g., artificial light or fog). Furthermore, equipment durability poses a challenge in typhoon environments, where high winds, rain, or saltwater corrosion could damage cameras. Protective casings or regular maintenance could address this issue. Data collection continuity is another concern, as heavy rain or wind may obstruct lenses, interrupting video feeds. Deploying backup cameras or employing data interpolation techniques (e.g., predicting surf presence based on historical patterns) could ensure operational consistency. These enhancements would strengthen the system’s applicability in real-world coastal safety scenarios.
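One way to picture the interference simulation suggested above is a photometric augmentation that overlays a synthetic light source on a training frame. The following is a minimal sketch, not the augmentation used in this study: the Gaussian spot model, the function name, and all parameter values are assumptions chosen for illustration. Because only pixel intensities change, existing bounding box labels remain valid.

```python
import numpy as np


def add_light_glare(image: np.ndarray, center: tuple[int, int],
                    radius: float, intensity: float = 1.0) -> np.ndarray:
    """Overlay a synthetic Gaussian light spot on an (H, W, 3) uint8 image.

    center is (row, col) in pixels, radius is the Gaussian standard deviation
    in pixels, and intensity scales the peak brightness (1.0 saturates the peak).
    """
    h, w = image.shape[:2]
    yy, xx = np.mgrid[0:h, 0:w]
    cy, cx = center
    dist2 = (yy - cy) ** 2 + (xx - cx) ** 2
    glare = intensity * 255.0 * np.exp(-dist2 / (2.0 * radius ** 2))
    # Add the same glare to every channel, then clip back to the valid range.
    out = image.astype(np.float32) + glare[..., None]
    return np.clip(out, 0, 255).astype(np.uint8)


# Usage on a dark dummy frame standing in for a nighttime image.
frame = np.full((64, 64, 3), 20, dtype=np.uint8)
augmented = add_light_glare(frame, center=(32, 32), radius=8.0)
```

In practice, the spot position, size, and intensity would be randomized per sample so that the detector sees a range of interference patterns.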

Several promising research directions emerge from our findings, focusing on system robustness and practical deployment. Key areas include developing adaptive architectures that dynamically adjust to environmental conditions, incorporating temporal feature integration for enhanced detection reliability, and extending architectural principles to related coastal monitoring tasks such as debris detection and shoreline change monitoring. Future work should also explore self-supervised learning approaches to reduce manual labeling requirements, develop comprehensive validation protocols across diverse environments, and establish automated calibration and maintenance systems. These advancements would facilitate wider adoption of AI-based coastal monitoring systems while supporting both operational needs and long-term research objectives. Integration with physical monitoring systems could provide valuable cross-validation, enhancing overall system reliability and expanding the application scope of this technology. Additionally, incorporating physical models, such as wave dynamics, into data augmentation could enhance realism of simulated surf patterns, though their computational complexity requires further optimization. This could improve detection robustness in extreme typhoon conditions.

Another promising direction is measuring surf heights. While our current 2D video-based method excels at detecting surf presence, it lacks depth data to estimate heights, a capability vital for assessing coastal hazards during typhoons. Future research could explore stereo vision to derive depth from dual-camera setups [52] or use LiDAR for precise measurements [53], though these require advanced hardware and validation. Such advancements could significantly enhance real-time coastal monitoring.
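For context on the stereo option, depth from a rectified two-camera pair follows the standard pinhole relation Z = f · B / d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity in pixels. The sketch below illustrates only this relation. The numeric values are hypothetical, and converting depth to surf height would additionally require the camera geometry, which is outside the scope of this sketch.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair.

    Uses Z = f * B / d with f in pixels, B in metres, and d in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical setup: 1200 px focal length, 0.5 m baseline, 12 px disparity.
print(depth_from_disparity(1200.0, 0.5, 12.0))  # prints 50.0
```

Since depth is inversely proportional to disparity, a one-pixel matching error matters more for distant surf than for nearby surf, which is one reason such a setup would need careful validation.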

6. Conclusions

This study addressed the challenge of maintaining reliable coastal monitoring during extreme weather conditions through the development of a deep learning-based real-time surf detection system. We established a comprehensive dataset of 2855 labeled images from five typhoon events at the Chongwu Tide Gauge Station, capturing diverse scenarios including daytime, nighttime, and varying weather conditions. Through data augmentation strategies simulating different lighting and weather variations, we expanded this dataset to 8565 images, creating a robust foundation for model development and evaluation. In our experimental results, lightweight YOLO architectures demonstrated exceptional performance in this specialized monitoring scenario, with YOLOv6n achieving an mAP50 of 99.3% at 161.8 FPS, significantly outperforming traditional two-stage detectors and transformer-based models. Models with 2–5 M parameters proved particularly effective, offering an optimal balance between detection accuracy and computational efficiency while maintaining high performance across various environmental conditions with minimal computational resources. The successful implementation of this system represents a significant advancement in coastal monitoring technology, offering a reliable and efficient solution for continuous surf impact detection during extreme weather events. This capability directly enhances coastal safety and infrastructure protection by providing real-time monitoring capabilities previously unavailable through traditional methods, while the demonstrated effectiveness of lightweight architectures opens new possibilities for widespread deployment in resource-constrained coastal monitoring stations.

Future research should address the limitations identified in Section 5 by expanding the dataset to include diverse coastal regions and environmental conditions, enhancing detection strategies for complex and extreme scenarios, and integrating surf detection systems into existing coastal monitoring infrastructures. These efforts will contribute to the development of more reliable and scalable automated surf detection systems, ultimately improving coastal hazard monitoring and disaster preparedness.