1. Introduction

The yearly seasonal fallow area encompasses 24.9 million hectares, which constitutes 25% of arable land in China [1]. Planting green manure crops on fallow land expands fertilizer sources and raises soil nitrogen and organic matter levels. Chinese milk vetch (CMV, Astragalus sinicus L.) grows vigorously in winter and is rich in nitrogen, phosphorus, potassium, and trace elements. Prevalent in the Yangtze River basin, CMV is cultivated in fallow fields after the rice harvest. At the full blooming stage, it is incorporated into the soil to increase soil organic matter and thereby substitute for a portion of chemical nitrogen fertilizer. Previous research indicated that biological nitrogen fixation contributes approximately 78% of the total nitrogen accumulated by CMV, supplying 41–146 kg/ha of nitrogen for subsequent crops [2]. Consequently, CMV has the potential to substitute for 20–40% of nitrogen fertilizer after incorporation [3]. The use of this natural nitrogen fertilizer substitute has prompted numerous studies on estimating the biological nitrogen fixation amount (BNFA) of CMV [4,5,6]. The nitrogen fixation capacity of CMV correlates with aboveground biomass (AGB), nitrogen content, and biological nitrogen fixation rate, with AGB being the primary influencing factor. Therefore, accurately estimating the AGB of CMV is pivotal for evaluating the impact of CMV on soil fertility and optimizing nitrogen fertilizer application in rice fields.

Traditional approaches for estimating AGB and related physiological and biochemical parameters, such as field surveys and vegetation growth models, have notable limitations. Field surveys are time-consuming and susceptible to investigator bias. Vegetation growth models require many parameters and depend heavily on extensive field survey data for calibration. For example, the APSIM (Agricultural Production Systems Simulator) model requires detailed calibration of parameters related to photosynthetic and respiration rates, which are specific to plant species and environmental conditions [7,8]. These parameters need to be measured under controlled conditions, often requiring complex experimental setups [7,8,9,10]. Furthermore, there is currently no comprehensive explanation of the mechanisms by which extreme atmospheric conditions affect agricultural production, which lowers the accuracy of crop simulation under extreme agricultural climates [11,12]. Therefore, crop growth models need to be improved by incorporating appropriate empirical models tailored to different crops.

Fortunately, the recent emergence of unmanned aerial vehicle (UAV) remote sensing technology offers a promising alternative to traditional AGB estimation methods. UAV remote sensing has several advantages, including high-speed data acquisition, objectivity, quantifiability, and non-destructiveness [13,14,15]. It has proven efficient and accurate in estimating vegetation AGB and other physiological and biochemical indicators [14,16,17,18]. However, the application of UAVs also faces challenges, such as regulatory issues related to airspace restrictions and flight permissions, which can complicate deployment in certain regions. Battery capacity, though less of a concern, can also limit flight duration and the area that can be covered in a single survey. Solutions to these challenges are emerging: regulatory frameworks are gradually evolving to accommodate UAV usage, and advances in battery technology and energy-efficient systems are likely to extend flight times, making these limitations manageable rather than insurmountable [19,20].

A prevalent approach for estimating vegetation parameters through UAV remote sensing capitalizes on the interaction between vegetation and solar radiation. Once correlations are established between reflectance and physiological and biochemical indices such as AGB, these indices can be estimated from reflectance [10,13,15,17,21]. To improve accuracy and mitigate interference from non-vegetated features, researchers have introduced various vegetation indices, such as the normalized difference vegetation index (NDVI), normalized green–red difference index (NGRDI), modified chlorophyll absorption ratio index (MCARI), and simple ratio index (SR) [18,22,23,24,25]. This method, based on optical remote sensing, requires few parameters and uses straightforward and accurate mathematical expressions [26,27]. However, in open field scenarios, optical remote sensing methods are affected by soil background, variations in vegetation structure across growth periods, and sowing density. These factors may result in saturation of optical measurements [28,29].

Because CMV is a creeping crop, high-density sowing fosters intense competition among individual plants and alters plant morphology. These changes can influence canopy spectral information, internal plant structure, and the composition of AGB, leading to substantial variations. It is therefore necessary to develop a reliable remote sensing method for estimating the AGB of CMV, one that accurately captures the spatial distribution and structural composition of CMV plants in open fields.

In recent years, the fusion of diverse modeling features has emerged as a pivotal strategy to improve AGB estimation. For example, textural features extracted from UAV imagery capture spatial information in the images regardless of color tones. Key texture features, such as variance, homogeneity, and contrast, are commonly used in this context. Variance measures the intensity variation across neighboring pixels, homogeneity captures the uniformity of pixel intensities within the image, and contrast quantifies the difference in intensity between adjacent pixels. When integrated into models, these texture features help discern variations in the spatial distribution of vegetation, vegetation types, and densities [30,31], thereby improving the precision of AGB estimation [32,33,34]. Furthermore, UAVs equipped with light detection and ranging (LiDAR) technology can reconstruct 3D point cloud information of vegetation [14,35,36]. Structural features derived from this 3D point cloud, such as plant height, canopy diameter, and canopy cover, facilitate efficient AGB estimation [37]. Despite its potential, the application of this method in precision agriculture management is still constrained by technology costs. Nevertheless, advancements in the structure from motion (SFM) algorithm now enable the reconstruction of vegetation 3D point cloud information from low-cost, high-resolution RGB images [38], allowing cost-effective AGB estimation [39,40,41]. Moreover, the fusion of structural and spectral features has progressively proven to be an effective approach for enhancing the accuracy of vegetation AGB estimation [42,43]. Additionally, the comprehensive fusion of spectral, structural, and image textural features captures the spatial distribution and growth status of vegetation, contributing to further improvements in AGB estimation performance [16,44].

Previous studies underscore the effectiveness of UAV multi-source map fusion technology in improving AGB estimation by resolving the saturation of optical measurements. However, no investigations have applied this technique to CMV. Given the prostrate growth and high-density sowing of CMV, a comprehensive examination is needed to assess the applicability and spatial transferability of this optimization methodology for estimating its AGB. Hence, this study aims to (1) quantitatively evaluate the impact of UAV-based spectral, textural, and structural features on AGB modeling of CMV and (2) ascertain the efficacy of various feature combinations in mitigating optical remote sensing saturation during modeling. By constructing an optimized AGB estimation model, this study contributes to the large-scale rapid estimation of BNFA and thus to optimizing fertilizer application in the CMV-rice rotation system.

2. Materials and Methods

2.1. Study Area

The study area was located at Taihu Farm, Jingzhou City, Hubei Province, China (30°21′N, 112°02′E), with an average annual temperature of 16.3 °C and an annual rainfall of 1200 mm (Figure 1). The study area implemented a CMV-rice rotation system, in which rice was cultivated as a single-season crop and CMV was grown during the winter fallow period. The CMV variety was Yijiang (Wuhu Qing Yijiang Seed Industry Co., Ltd., Wuhu, China), and the seeds were sown uniformly at a rate of 30 kg/ha. No chemical fertilizers or irrigation were applied during the CMV growth period.

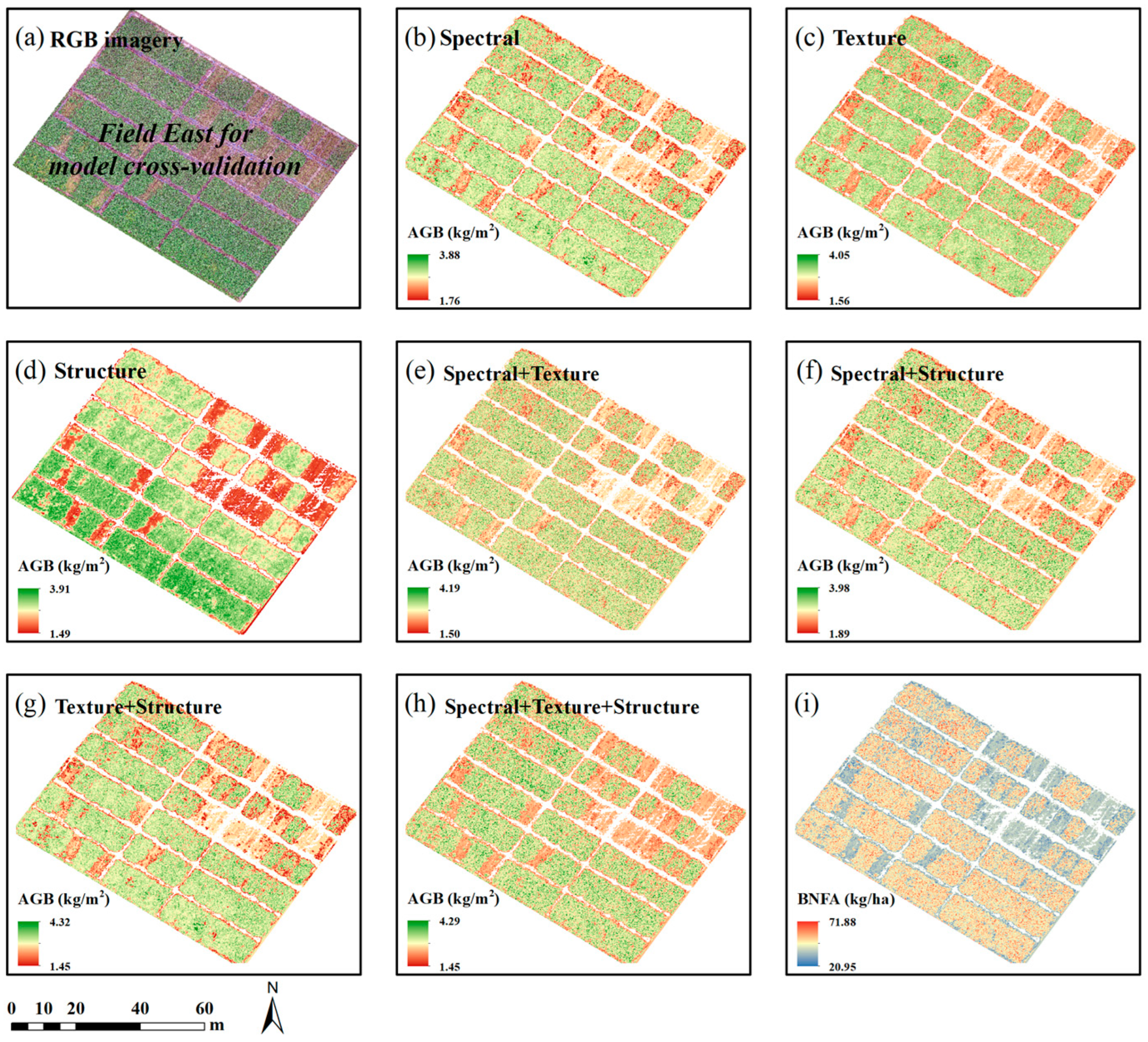

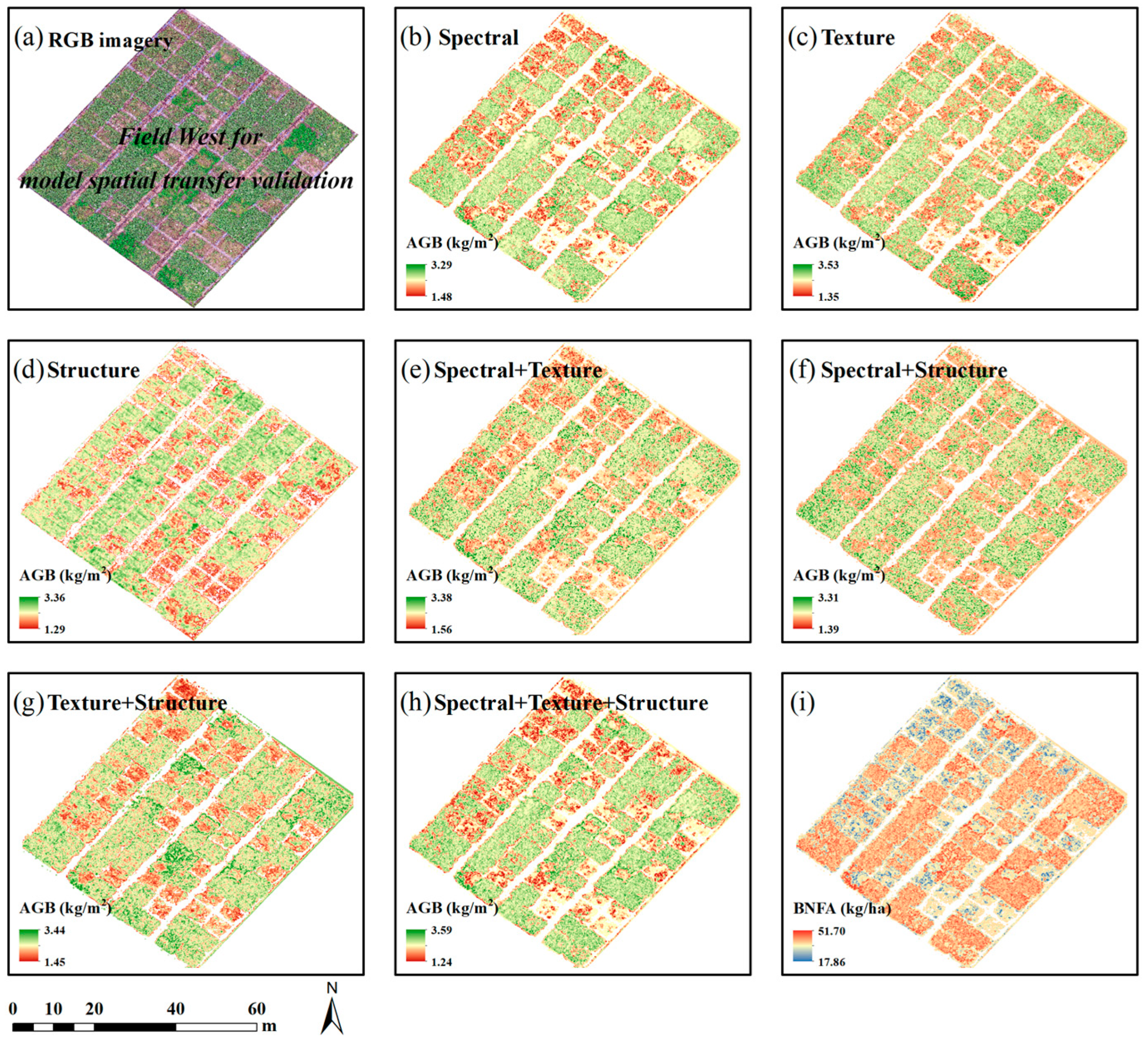

This study was conducted in two fields at Taihu Farm, referred to as Field East and Field West. The fields share similar conditions in terms of sunlight, air temperature, irrigation, and soil characteristics. Each field was subdivided into 96 plots, measuring 32 m² (4 m × 8 m) in Field East and 20 m² (4 m × 5 m) in Field West, with 30 cm wide ridges separating the plots. The field trial was set up in 2015 to evaluate the effect of CMV incorporation on soil fertility and rice growth. The primary objective of the current investigation was to develop a model for estimating the AGB of CMV using remote sensing technology. Consequently, data acquisition focused exclusively on plots with CMV cultivation. Specifically, 75 plots from Field East were selected for constructing the AGB estimation model of CMV and conducting cross-validation, while 48 plots from Field West were chosen for the spatial transferability validation.

2.2. Overall Workflow

The workflow comprises six key components: image preprocessing, feature extraction, feature selection, feature combination, model construction, and spatial transferability validation (Figure 2).

2.3. Ground Data Acquisition and Processing

- (1) Ground-based measurement of CMV plant height

Ground truth plant height data were measured on 13 April 2021, during the full blooming stage of CMV. First, a 30 cm × 30 cm quadrat was randomly placed in each plot, and the number of CMV plants within it was counted. Then, ten representative plants were selected from the plot, and the height of each fully extended plant was measured with a telescopic ruler. The average of these measurements was taken as the plant height (in centimeters) for the respective plot.

- (2) Ground-based measurement of the AGB of CMV

AGB was measured on the same day, after the plant height measurements. All CMV plants within each plot were harvested, and their fresh weight was recorded to determine the AGB of CMV (kg/m²) for the respective plot.

- (3) Ground-based measurement of CMV moisture content and nitrogen content

For each plot, approximately 500 g of the harvested CMV was sampled and its fresh weight recorded. The samples were then oven-dried, first at 105 °C for 30 min and then at 70 °C until a constant weight was reached. The dry weight was used to calculate the moisture content (%) of the CMV. The dried samples were then ground and digested with H2SO4-H2O2, and the nitrogen content (%) was measured using the Kjeldahl method. The moisture and nitrogen contents were used to estimate BNFA (kg/ha).
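The moisture content calculation can be illustrated with a minimal Python sketch. It assumes moisture content is expressed on a fresh-weight basis, which the text does not state explicitly; the function name and the sample weights are hypothetical.

```python
def moisture_content_percent(fresh_weight_g: float, dry_weight_g: float) -> float:
    """Moisture content (%) on a fresh-weight basis (assumed convention)."""
    if fresh_weight_g <= 0:
        raise ValueError("fresh weight must be positive")
    if not 0 <= dry_weight_g <= fresh_weight_g:
        raise ValueError("dry weight must lie between 0 and the fresh weight")
    return (fresh_weight_g - dry_weight_g) / fresh_weight_g * 100.0


# Hypothetical subsample: 500 g fresh weight, 61.2 g after oven drying.
mc = moisture_content_percent(500.0, 61.2)
print(f"moisture content: {mc:.2f}%")
```

Under this convention, the dry matter fraction used later for BNFA is simply one minus the moisture fraction.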

2.4. UAV Image Data Acquisition and Processing

To ensure consistency between UAV remote sensing data and ground-based data, RGB and multispectral images were acquired using DJI Mavic 2 Pro and P4 multispectral quadcopter UAVs between 11:00 and 13:00 on the day of ground data acquisition.

The Mavic 2 Pro, designed for commercial applications, is a lightweight drone equipped with an advanced omnidirectional vision system, infrared sensors, and a high-precision anti-shake gimbal. It features a 20-megapixel CMOS sensor, a 24 mm focal length lens, and an 85° angle of view for high-resolution RGB image capture. Similarly, the P4 multispectral, tailored for multispectral imaging, shares key features with the Mavic 2 Pro. It incorporates a multi-directional vision system, an infrared sensor system, and a high-precision anti-shake gimbal with a built-in real-time kinematic (RTK) system. Equipped with six CMOS image sensors, the P4 multispectral captures images in five wavelength bands: blue (450 ± 16 nm), green (560 ± 16 nm), red (650 ± 16 nm), red edge (730 ± 16 nm), and near-infrared (840 ± 16 nm). Each monochrome sensor features 2.12 million pixels, a lens focal length of 40 mm, and a viewing angle of 62.7°.

All flights took place under favorable weather conditions (clear skies and minimal wind) at an altitude of 20 m and a speed of 2 m/s. Images were acquired at regular 2 s intervals. A single-grid flight pattern was used, with forward and side overlaps both set to 80% to ensure adequate overlap between images and improve the accuracy of image stitching. Flight paths covered the entire study area, and radiometric calibration panels were placed within the flight coverage. For radiometric calibration, the empirical linearization radiometric calibration (ELRC) method [45] (Farrand et al., 1994) was applied to ensure the accuracy and consistency of radiometric values acquired by the multispectral sensors during flights. Additionally, ten ground control points (GCPs) were positioned within each study field for precise georeferencing. The horizontal geographic coordinates of the GCPs were measured using a GNSS device based on RTK technology, providing precise locations for geometric correction of the images. Moreover, RGB images of the study area were acquired during the bare ground period between 11:00 and 13:00 on 18 September 2020. These images were used to generate a digital elevation model (DEM) representing the bare ground after rice harvest and before CMV sowing.

2.4.1. Image Mosaic and Radiometric Calibration

In this study, the UAV multispectral and RGB images from the two study fields were mosaicked using Agisoft Metashape 1.8.0 software. Subsequently, radiometric calibration was performed on the multispectral images using the ELRC method:

$$R_i = DN_i \times Gain_i + Offset_i$$

Note: $R_i$ and $DN_i$ represent the surface reflectance and gray value of a specific pixel in the i-th band. The gain and offset values for the i-th band are constants derived from experimental measurements, considering the reflectance characteristics of diverse radiometric calibration targets.
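As a rough illustration of how a per-band gain and offset might be obtained, the sketch below fits a straight line between the gray values recorded over calibration panels and their known reflectances, then applies it to a pixel. It assumes an ordinary least-squares fit; the panel readings and function names are hypothetical and do not come from the study.

```python
def fit_empirical_line(panel_dn, panel_reflectance):
    """Least-squares gain and offset so that reflectance ~ gain * DN + offset."""
    n = len(panel_dn)
    if n < 2 or n != len(panel_reflectance):
        raise ValueError("need at least two matched panel readings")
    mean_dn = sum(panel_dn) / n
    mean_r = sum(panel_reflectance) / n
    sxx = sum((d - mean_dn) ** 2 for d in panel_dn)
    if sxx == 0:
        raise ValueError("panel gray values must not all be identical")
    sxy = sum((d - mean_dn) * (r - mean_r)
              for d, r in zip(panel_dn, panel_reflectance))
    gain = sxy / sxx
    offset = mean_r - gain * mean_dn
    return gain, offset


def dn_to_reflectance(dn, gain, offset):
    """Convert one gray value to surface reflectance with the fitted line."""
    return gain * dn + offset


# Hypothetical readings for one band over three calibration panels.
panel_dn = [5000.0, 25000.0, 45000.0]
panel_reflectance = [0.05, 0.25, 0.45]

gain, offset = fit_empirical_line(panel_dn, panel_reflectance)
pixel_reflectance = dn_to_reflectance(30000.0, gain, offset)
print(f"gain={gain:.6g}, offset={offset:.6g}, reflectance={pixel_reflectance:.3f}")
```

The fit is repeated independently for each band, since gain and offset are band-specific constants.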

2.4.2. Removal of Soil Background

In the quantitative assessment of vegetation physiological and biochemical indices, non-vegetative elements such as soil may distort the radiometric data of vegetation targets, lowering the accuracy of information extraction. To mitigate this, the soil background must be removed from remote sensing images before vegetation-related data are extracted. ArcGIS 10.8 and ENVI 5.3 were used in this study for soil background removal from radiometrically calibrated multispectral and RGB images.

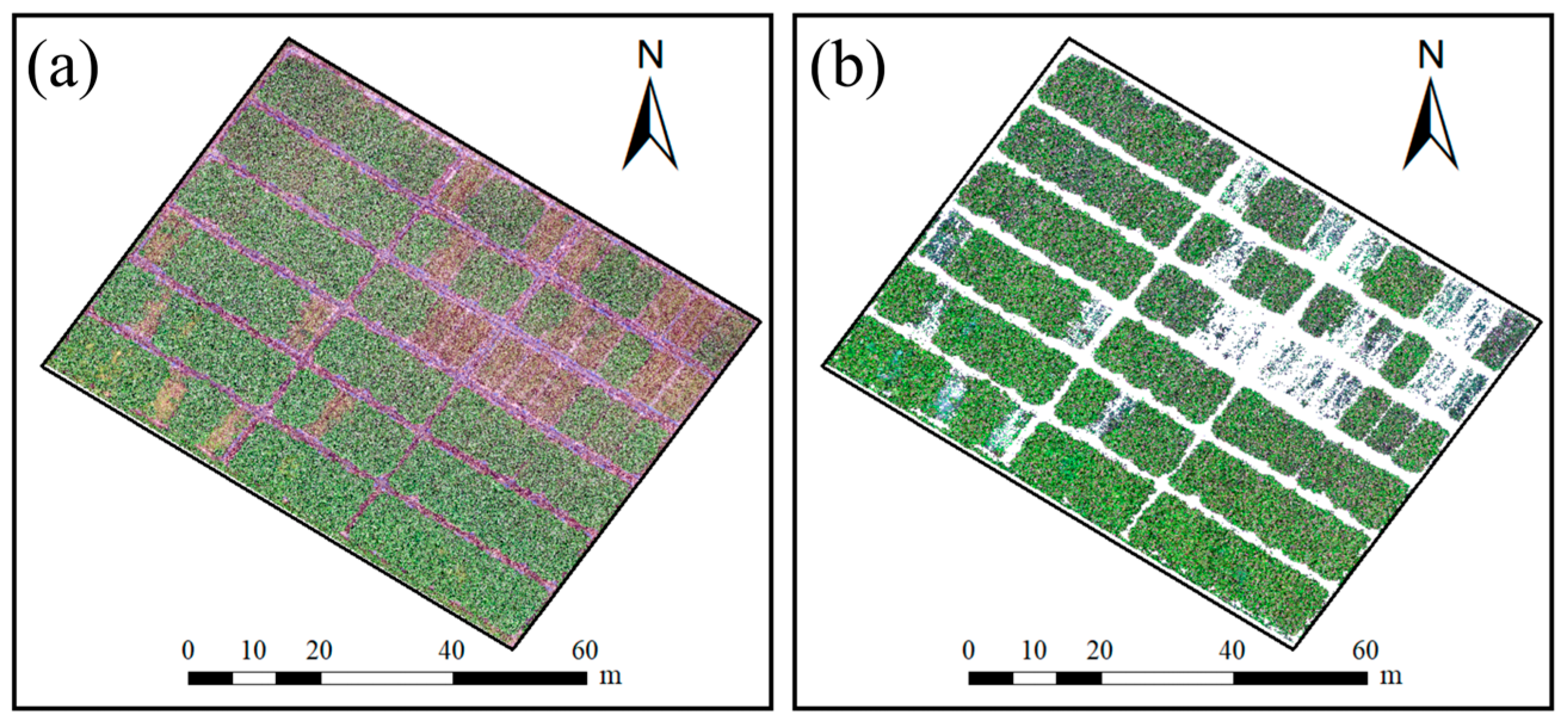

Figure 3 shows multispectral images before and after soil removal (similarly for RGB images). A soil background mask was constructed through supervised classification using a support vector machine (SVM) classifier, with visual interpretation markers established simultaneously. Ten thousand random sample points for soil and vegetation were selected to evaluate the SVM classifier’s performance. Classification results indicated overall accuracies of 95.43% for soil and 93.36% for vegetation in both multispectral and RGB images (Figure 4). The validated soil background mask was then exported as a vector file, facilitating soil background removal from the images.

2.4.3. Extraction of Spectral, Textural, and Structural Features

In the study area, a rectangular region of interest (ROI) was defined for multispectral and RGB images based on plot areas to extract spectral, structural, and textural features. To mitigate edge effects, ROI dimensions were set 0.2 m smaller than the original plots. Spectral, structural, and textural features were extracted using the “zonal statistics as table” function from the ArcPy library. Twelve vegetation indices, widely recognized for AGB estimation, were selected as spectral features. Corresponding spectral feature images were systematically computed using the geospatial data abstraction library (GDAL) in Python (version 3.10). The calculation formulas are presented in Table 1.
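To make the spectral feature step concrete, the following sketch computes three of the indices named in the Introduction (NDVI, NGRDI, and SR, using their standard definitions) and averages one of them over the vegetation pixels of a plot ROI. It is a plain-Python stand-in for the GDAL and ArcPy processing described above, not the study's code; the reflectance values, the mask, and the helper names are hypothetical, and the full set of twelve indices in Table 1 is not reproduced.

```python
import math


def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator != 0 else math.nan


def ndvi(nir: float, red: float) -> float:
    return safe_ratio(nir - red, nir + red)


def ngrdi(green: float, red: float) -> float:
    return safe_ratio(green - red, green + red)


def simple_ratio(nir: float, red: float) -> float:
    return safe_ratio(nir, red)


def zonal_mean(index_raster, vegetation_mask) -> float:
    """Mean of an index raster over the vegetation pixels of one plot ROI."""
    values = [
        value
        for value_row, mask_row in zip(index_raster, vegetation_mask)
        for value, is_vegetation in zip(value_row, mask_row)
        if is_vegetation and not math.isnan(value)
    ]
    return sum(values) / len(values) if values else math.nan


# Hypothetical 2 x 2 reflectance rasters and soil/vegetation mask for one ROI.
red = [[0.10, 0.30], [0.05, 0.08]]
nir = [[0.50, 0.35], [0.45, 0.40]]
vegetation_mask = [[True, False], [True, True]]

ndvi_raster = [
    [ndvi(n, r) for n, r in zip(nir_row, red_row)]
    for nir_row, red_row in zip(nir, red)
]
plot_ndvi = zonal_mean(ndvi_raster, vegetation_mask)
print(f"plot-mean NDVI: {plot_ndvi:.3f}")
```

Masked (soil) pixels are excluded from the plot mean, which mirrors the order of operations in the text: soil removal first, zonal statistics second.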

Textural features were computed in ENVI 5.3 from the gray level co-occurrence matrix (GLCM) and comprised eight metrics: mean (Mean), variance (Var), homogeneity (Hom), contrast (Con), dissimilarity (Dis), entropy (En), second moment (Sm), and correlation (Cor). For multispectral images, forty textural features were calculated across five bands. The second-order probabilistic statistical filter used default settings with a 3 × 3 window size and a diagonal direction of [1, 1]. Calculation formulas are presented in Table 2.
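The sketch below shows, for a single 3 × 3 window, how a co-occurrence matrix for the diagonal offset [1, 1] can be built and how several of the listed metrics follow from it. It uses the standard Haralick-style definitions, which may differ in detail from ENVI's implementation (for example in gray-level quantization or in how the offset maps to rows and columns); the window values and function names are hypothetical.

```python
import math


def glcm(window, levels, offset=(1, 1)):
    """Normalized, non-symmetric co-occurrence matrix for one pixel offset."""
    d_row, d_col = offset
    rows, cols = len(window), len(window[0])
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[window[r][c]][window[r2][c2]] += 1
                total += 1
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs in the window")
    return [[count / total for count in row] for row in counts]


def texture_metrics(p):
    """Contrast, dissimilarity, homogeneity, second moment, and entropy."""
    metrics = {"Con": 0.0, "Dis": 0.0, "Hom": 0.0, "Sm": 0.0, "En": 0.0}
    for i, row in enumerate(p):
        for j, prob in enumerate(row):
            metrics["Con"] += prob * (i - j) ** 2
            metrics["Dis"] += prob * abs(i - j)
            metrics["Hom"] += prob / (1 + (i - j) ** 2)
            metrics["Sm"] += prob * prob
            if prob > 0:
                metrics["En"] -= prob * math.log(prob)
    return metrics


# Hypothetical 3 x 3 window already quantized to four gray levels (0..3).
window = [
    [0, 0, 1],
    [0, 1, 2],
    [1, 2, 3],
]
features = texture_metrics(glcm(window, levels=4, offset=(1, 1)))
print({name: round(value, 4) for name, value in features.items()})
```

In a moving-window filter, this calculation is repeated around every pixel and the resulting value is written to the output texture band.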

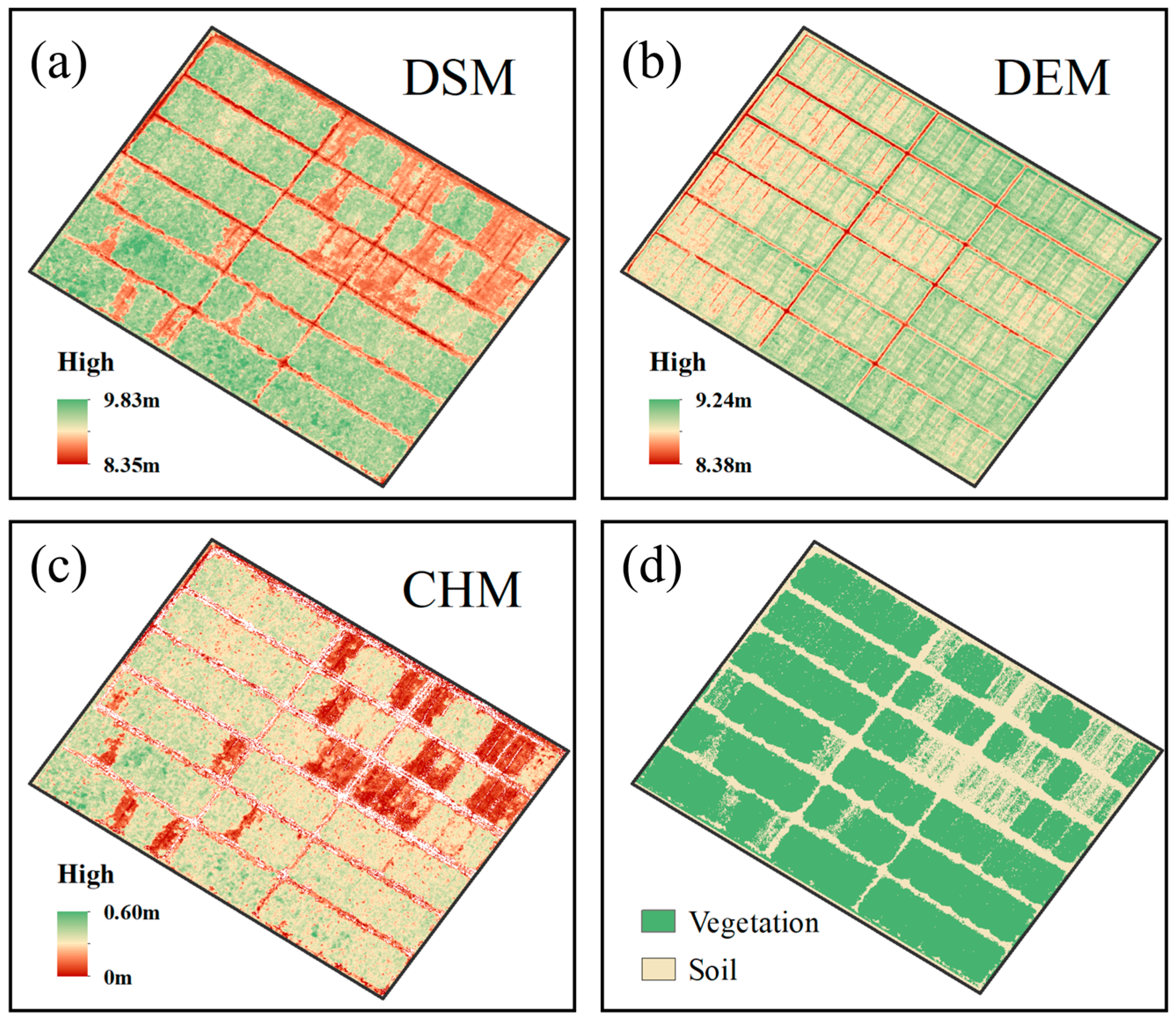

Reconstructing 3D point cloud information from RGB images with the SFM algorithm provides elevation details for the study area. However, the resulting surface gives only the elevation of the vegetation canopy rather than plant height itself. To obtain plant height, the bare ground elevation of the study field is also required. After the rice harvest on 18 September 2020, RGB images were acquired to generate the DEM of the study area (Figure 5b). Subsequently, the digital surface model (DSM) (Figure 5a), derived from RGB images acquired during the CMV full blooming stage, was differenced with the DEM by raster subtraction, yielding the CMV canopy height model (CHM) (Figure 5c). Finally, the soil background mask from Section 2.4.2 was applied to eliminate soil pixels from the CHM, ensuring more accurate plant height information and laying the foundation for subsequent feature extraction.
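The raster subtraction and masking can be sketched in a few lines. This is an illustrative stand-in using nested lists rather than georeferenced rasters; it assumes the DSM, DEM, and mask are already co-registered on the same grid, and clamping small negative differences to zero is an added assumption, not something the text specifies. All values are hypothetical.

```python
def canopy_height_model(dsm, dem, vegetation_mask):
    """CHM = DSM - DEM per pixel; soil pixels become None."""
    chm = []
    for dsm_row, dem_row, mask_row in zip(dsm, dem, vegetation_mask):
        chm.append([
            # Assumption: negative heights from reconstruction noise are set to 0.
            max(surface - ground, 0.0) if is_vegetation else None
            for surface, ground, is_vegetation in zip(dsm_row, dem_row, mask_row)
        ])
    return chm


# Hypothetical elevations in metres for a 2 x 2 patch.
dsm = [[30.42, 30.10], [30.35, 30.05]]   # full blooming stage surface
dem = [[30.00, 30.02], [30.01, 30.06]]   # bare ground after rice harvest
vegetation_mask = [[True, False], [True, True]]

chm = canopy_height_model(dsm, dem, vegetation_mask)
print(chm)
```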

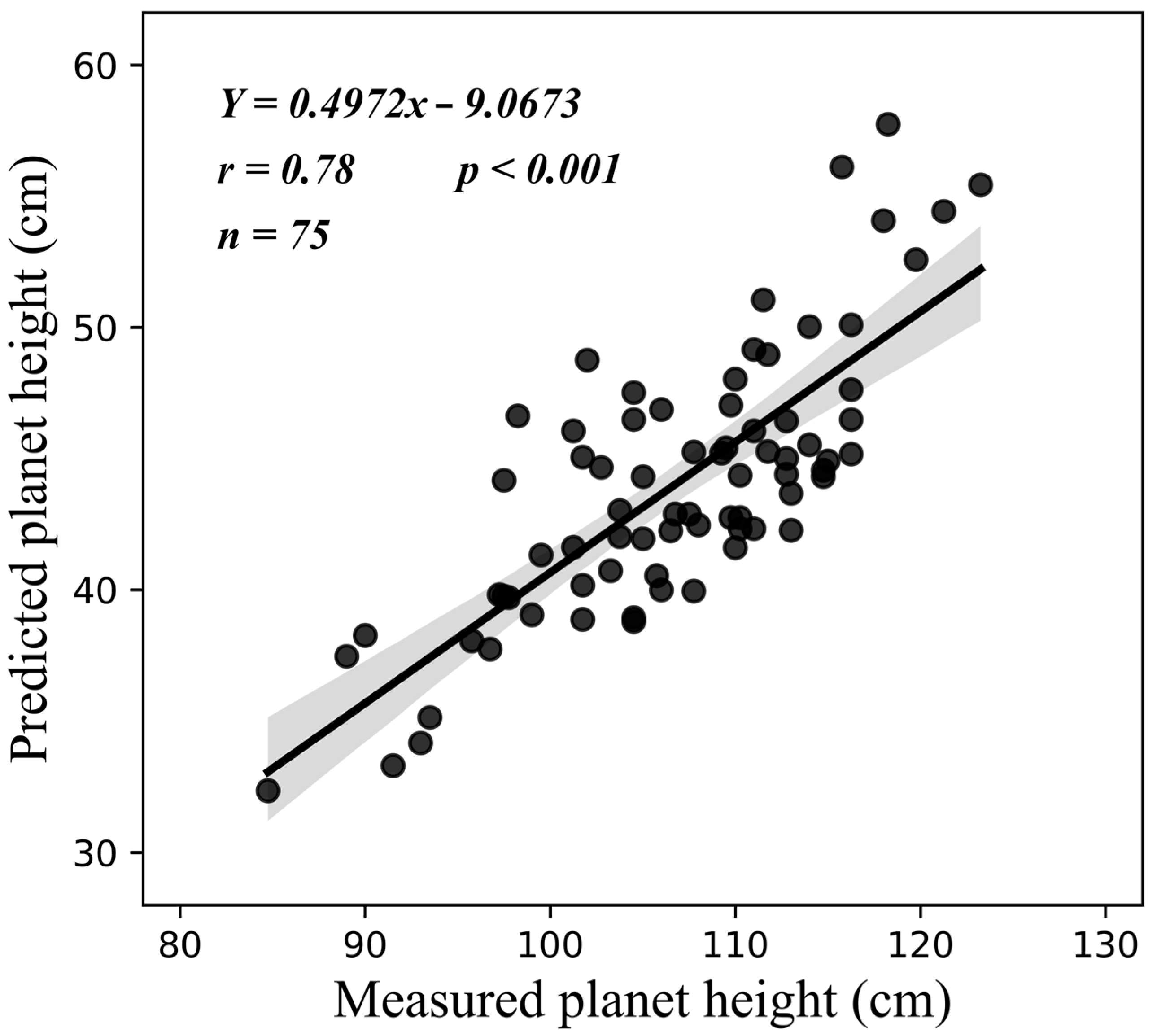

Validating the plant height extracted from the CHM is crucial for ensuring accuracy and reliability in practical applications. In this study, linear regression analysis was used to examine the correlation between the plant height estimated from the CHM and the plant height measured on the ground. Figure 6 indicates a robust correlation (r = 0.78, p < 0.001), confirming the reliability of the CHM-extracted plant height for subsequent extraction of vegetation structure features.

Methods based on volumetric pixels and cylindrical surface fitting model 3D vegetation canopy structures with optimal cylindrical shapes derived from LiDAR or photogrammetric point clouds in order to extract vegetation volume in diverse environments, such as forests and shrubs [57,58]. However, the 3D point cloud generated from RGB images with the SFM algorithm captures only the outer canopy volume (CV) and lacks information about the internal structure of complex vegetation growth [59]. To overcome this, our study employed the surface difference method to calculate canopy volume. This method determines the volume between the highest and lowest points of the canopy based on the CHM. The CV for each plot was computed as the product of the extracted plant height and the number of vegetation pixels within the plot [60,61].

Ultimately, four structural features were extracted from the CHM: mean plant height (PHmean), standard deviation of plant height (PHstd), coefficient of variation of plant height (PHcv), and CV. In addition, plot-scale canopy cover (CC) was derived from the vegetation and soil classification masks [17]. PHstd and PHcv are employed to characterize the vertical structure complexity of vegetation [62]. These structural features offer a quantitative depiction of the vertical vegetation structure. The calculation formulas are presented in Table 3.
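A minimal sketch of how these plot-level structural features could be derived from a masked CHM follows. Since Table 3 is not reproduced here, several choices are assumptions: the population standard deviation is used for PHstd, PHcv is taken as PHstd divided by PHmean, CC is the share of vegetation pixels in the ROI, and CV is the mean plant height times the number of vegetation pixels, optionally scaled by a hypothetical pixel area (set it to 1.0 to obtain the plain pixel-count product described above).

```python
import statistics


def structural_features(chm, pixel_area_m2=1.0):
    """Plot-level structural features from a CHM whose soil pixels are None."""
    heights = [h for row in chm for h in row if h is not None]
    n_total = sum(len(row) for row in chm)
    if not heights:
        raise ValueError("plot contains no vegetation pixels")
    ph_mean = sum(heights) / len(heights)
    ph_std = statistics.pstdev(heights)  # assumption: population std
    return {
        "PHmean": ph_mean,
        "PHstd": ph_std,
        "PHcv": ph_std / ph_mean if ph_mean > 0 else float("nan"),
        # Mean height x vegetation pixel count, scaled by the assumed pixel area.
        "CV": ph_mean * len(heights) * pixel_area_m2,
        "CC": len(heights) / n_total,
    }


# Hypothetical 2 x 2 CHM in metres; None marks a soil pixel.
chm_plot = [[0.4, None], [0.2, 0.3]]
features_str = structural_features(chm_plot, pixel_area_m2=0.01)
print({name: round(value, 4) for name, value in features_str.items()})
```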

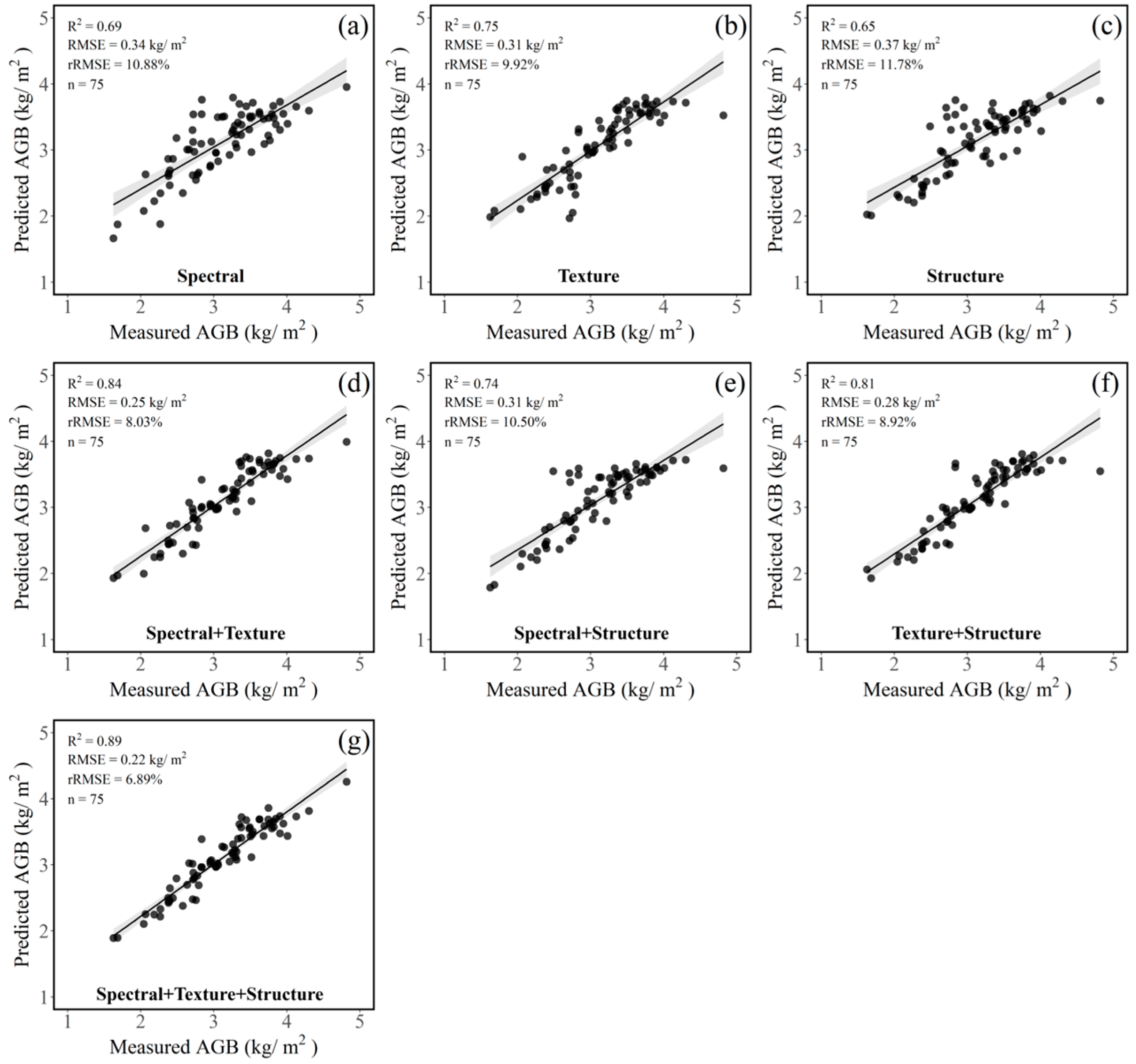

2.5. Selection and Fusion of Features

Pearson correlation analysis quantifies the linear correlation strength between two variables, commonly applied in exploratory data analysis. It calculates the correlation coefficient (“r”) as the ratio of the product of variable covariance to their standard deviation. The coefficient (“r”) ranges from −1 to 1, with a larger absolute value indicating a stronger correlation. Variable selection using random forests (VSURF) is a feature selection technique based on the random forests algorithm (RF) [

63] (Genuer et al., 2010). It efficiently selects crucial features from a vast pool, addressing model uncertainty and multiple covariance issues [

64,

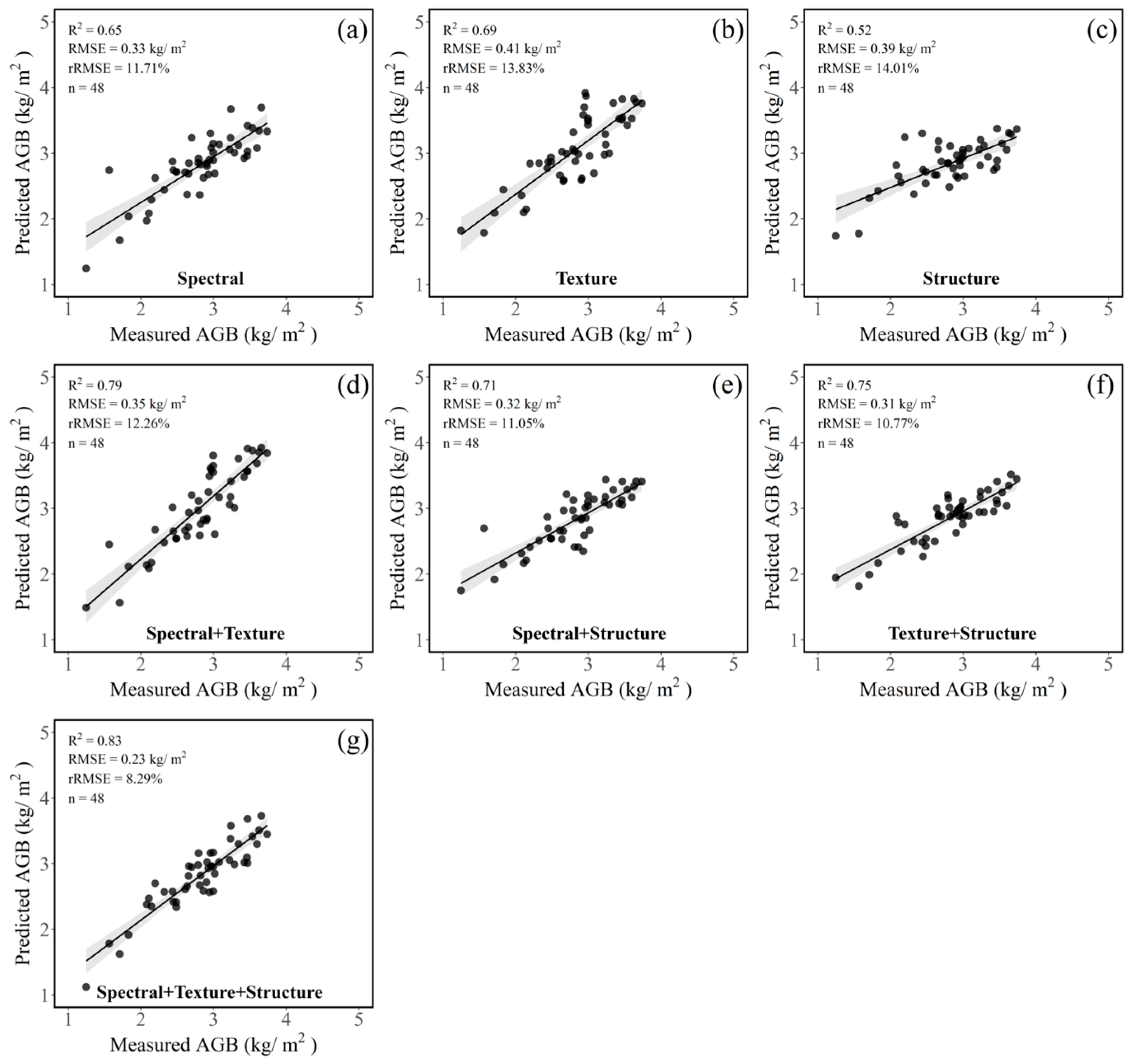

65]. In this study, RStudio 1.4 (R 4.2.0) and the R language library VSURF were employed for feature selection. The feature selection process occurred sequentially in this study using Pearson and VSURF for all feature types. Seven feature combinations were established for the retained features: Sp, Tex, Str, Sp + Tex, Sp + Str, Tex + Str, and Sp + Tex + Str.
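The Pearson step and the enumeration of feature combinations can be sketched as follows. The VSURF step is not reproduced, since it relies on the R package; the sample values and function names are hypothetical.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Covariance of x and y divided by the product of their standard deviations."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need two equally long samples with at least two values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


def feature_combinations(groups):
    """All non-empty combinations of feature groups, smallest first."""
    return [
        " + ".join(combo)
        for size in range(1, len(groups) + 1)
        for combo in combinations(groups, size)
    ]


# Hypothetical feature values and measured AGB for five plots.
feature = [0.61, 0.70, 0.74, 0.80, 0.83]
agb = [1.8, 2.3, 2.4, 2.9, 3.1]
r = pearson_r(feature, agb)
combos = feature_combinations(("Sp", "Tex", "Str"))
print(f"r = {r:.3f}")
print(combos)
```

Three feature groups yield 2³ − 1 = 7 non-empty combinations, matching the seven settings listed above.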

2.6. Model Evaluation and AGB, BNFA Mapping

In this study, the Python Scikit-learn library was employed to construct an AGB estimation model for CMV using the RF algorithm. Grid search optimization focused on key hyperparameters: the number of decision trees (n_estimators), maximum tree depth (max_depth), maximum number of features considered at each split (max_features), minimum number of samples required at a leaf node (min_samples_leaf), and minimum number of samples required to split an internal node (min_samples_split). The process involved defining a hyperparameter space, systematically varying the parameters, and selecting the set that maximized accuracy on the validation set. Model performance was assessed through repeated k-fold cross-validation and spatial transferability validation, using the coefficient of determination (R²), root mean square error (RMSE), and relative root mean square error (rRMSE) as evaluation metrics. A more robust model is indicated by higher R² and lower RMSE and rRMSE values (Equations (3)–(5)).
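A grid search of this kind might look like the sketch below, which uses scikit-learn's `GridSearchCV` with `RepeatedKFold`. The data are synthetic stand-ins for the 75 Field East plots, and the grid values, number of folds and repeats, and scoring choice are all assumptions made for illustration; the study's actual search space is not given in the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, RepeatedKFold

# Synthetic stand-in data: 75 plots, 4 selected features (not the study's data).
rng = np.random.default_rng(0)
X = rng.random((75, 4))
y = 2.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(0.0, 0.05, 75)

# Assumed, deliberately small search space over the five named hyperparameters.
param_grid = {
    "n_estimators": [50],
    "max_depth": [3, None],
    "max_features": ["sqrt", 1.0],
    "min_samples_leaf": [1, 2],
    "min_samples_split": [2, 4],
}

# Repeated k-fold cross-validation (5 folds x 2 repeats is an assumption).
cv = RepeatedKFold(n_splits=5, n_repeats=2, random_state=0)

search = GridSearchCV(
    estimator=RandomForestRegressor(random_state=0),
    param_grid=param_grid,
    scoring="neg_root_mean_squared_error",  # higher (less negative) is better
    cv=cv,
)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("cross-validated RMSE:", -search.best_score_)
```

For spatial transferability validation, the fitted `search.best_estimator_` would then be applied unchanged to features from the second field and scored against its measured AGB.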

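$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} \tag{3}$$

$$RMSE = \sqrt{\frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{n}} \tag{4}$$

$$rRMSE = \frac{RMSE}{\bar{y}} \times 100\% \tag{5}$$

Note: $n$ represents the number of samples, $y_i$ represents the measured AGB value of the i-th sample, $\hat{y}_i$ represents the predicted AGB value of the i-th sample, and $\bar{y}$ represents the average measured AGB value.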
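The three evaluation metrics can be computed directly from measured and predicted values, as in this short sketch. It assumes the usual definitions (R² as one minus the ratio of residual to total sum of squares, rRMSE as RMSE divided by the mean measured value); the sample numbers and function name are hypothetical.

```python
import math


def regression_metrics(measured, predicted):
    """Return (R2, RMSE, rRMSE in %) for paired measured and predicted values."""
    n = len(measured)
    if n == 0 or n != len(predicted):
        raise ValueError("need two equally long, non-empty samples")
    mean_y = sum(measured) / n
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(measured, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in measured)
    if ss_tot == 0 or mean_y == 0:
        raise ValueError("metrics are undefined for constant or zero-mean data")
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    rrmse = rmse / mean_y * 100.0
    return r2, rmse, rrmse


# Hypothetical measured and predicted AGB (kg/m2) for three plots.
r2, rmse, rrmse = regression_metrics([2.0, 3.0, 4.0], [2.5, 3.0, 3.5])
print(f"R2={r2:.2f}, RMSE={rmse:.3f} kg/m2, rRMSE={rrmse:.1f}%")
```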

In this study, the AGB estimation model was constructed under various feature combination settings and employed to generate AGB estimation maps for CMV. Subsequently, the BNFA of CMV was calculated with the biological nitrogen fixation rate of 66% [66] (Bolger et al., 1995). The formula is presented below:

$$BNFA = AGB \times (1 - MC\%) \times N\% \times BNF\% \times 10^{4}$$

Note: BNFA represents the biological nitrogen fixation amount (kg/ha), AGB represents the aboveground fresh weight of CMV (kg/m²), MC% represents the moisture content (%), N% represents the nitrogen content (%), and BNF% represents the rate of biological nitrogen fixation (%). The average values for BNF%, MC%, and N% were measured from the field studies (i.e., 66%, 87.76%, and 2.03%), and 10⁴ represents the unit conversion from m² to ha.
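As a worked illustration, the sketch below converts a fresh-weight AGB value into BNFA using the reported averages. It assumes BNFA is the product of dry matter (fresh AGB times one minus the moisture fraction), nitrogen content, fixation rate, and the m²-to-ha conversion, which is how the variable definitions above read; the function name and the example AGB value are hypothetical.

```python
def bnfa_kg_per_ha(agb_fresh_kg_m2, moisture_pct=87.76, nitrogen_pct=2.03, bnf_pct=66.0):
    """Biological nitrogen fixation amount (kg/ha) from fresh AGB (kg/m2)."""
    dry_matter_kg_m2 = agb_fresh_kg_m2 * (1.0 - moisture_pct / 100.0)
    fixed_nitrogen_kg_m2 = dry_matter_kg_m2 * (nitrogen_pct / 100.0) * (bnf_pct / 100.0)
    return fixed_nitrogen_kg_m2 * 1e4  # 1 ha = 10^4 m2


# Hypothetical plot with 2.0 kg/m2 of fresh CMV biomass.
bnfa = bnfa_kg_per_ha(2.0)
print(f"BNFA: {bnfa:.1f} kg/ha")
```

Applying the same function to every pixel or plot of an AGB estimation map yields the corresponding BNFA map.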