MSDF-Mamba: Mutual-Spectrum Perception Deformable Fusion Mamba for Drone-Based Visible–Infrared Cross-Modality Vehicle Detection

Highlights

- An infrared–visible fusion network, named MSDF-Mamba, is proposed for vehicle detection in aerial imagery. MSDF-Mamba addresses the misalignment between infrared and visible images by providing a mutually referenced solution for correcting target misalignment. Its fusion mechanism is also designed to improve detection performance while keeping computational cost low.

- A Mutual-Spectrum Deformable Alignment (MSDA) module is introduced to achieve precise spatial alignment of cross-modal objects, and a Selective Scan Fusion (SSF) module is designed to enhance the fusion of dual-modal features. Experimental results on two cross-modal benchmark datasets, DroneVehicle and DVTOD, show that our method achieves an mAP@0.5 of 82.5% and 86.4%, respectively, ahead of the strongest compared fusion detectors (81.8% for RemoteDet-Mamba on DroneVehicle and 85.0% for CMA-Det on DVTOD).

- By exploiting the complementary strengths of the visible and infrared modalities, this study offers a feasible fusion detection approach for all-day object detection.

- This work provides a high-accuracy, low-complexity Mamba network solution for cross-modal vehicle detection on UAV platforms.

Abstract

1. Introduction

- We propose a novel multimodal object detection framework based on the Mamba architecture, which addresses two problems specific to visible–infrared object detection: positional misalignment between the modalities and the trade-off between effectiveness and computational complexity.

- We design the Mutual-Spectrum Deformable Alignment (MSDA) module, which acquires complementary features through bidirectional cross-attention and generates a shared reference for predicting offsets. Based on these offsets, deformable convolutions explicitly align the features of the two modalities, thereby effectively overcoming modal misalignment.

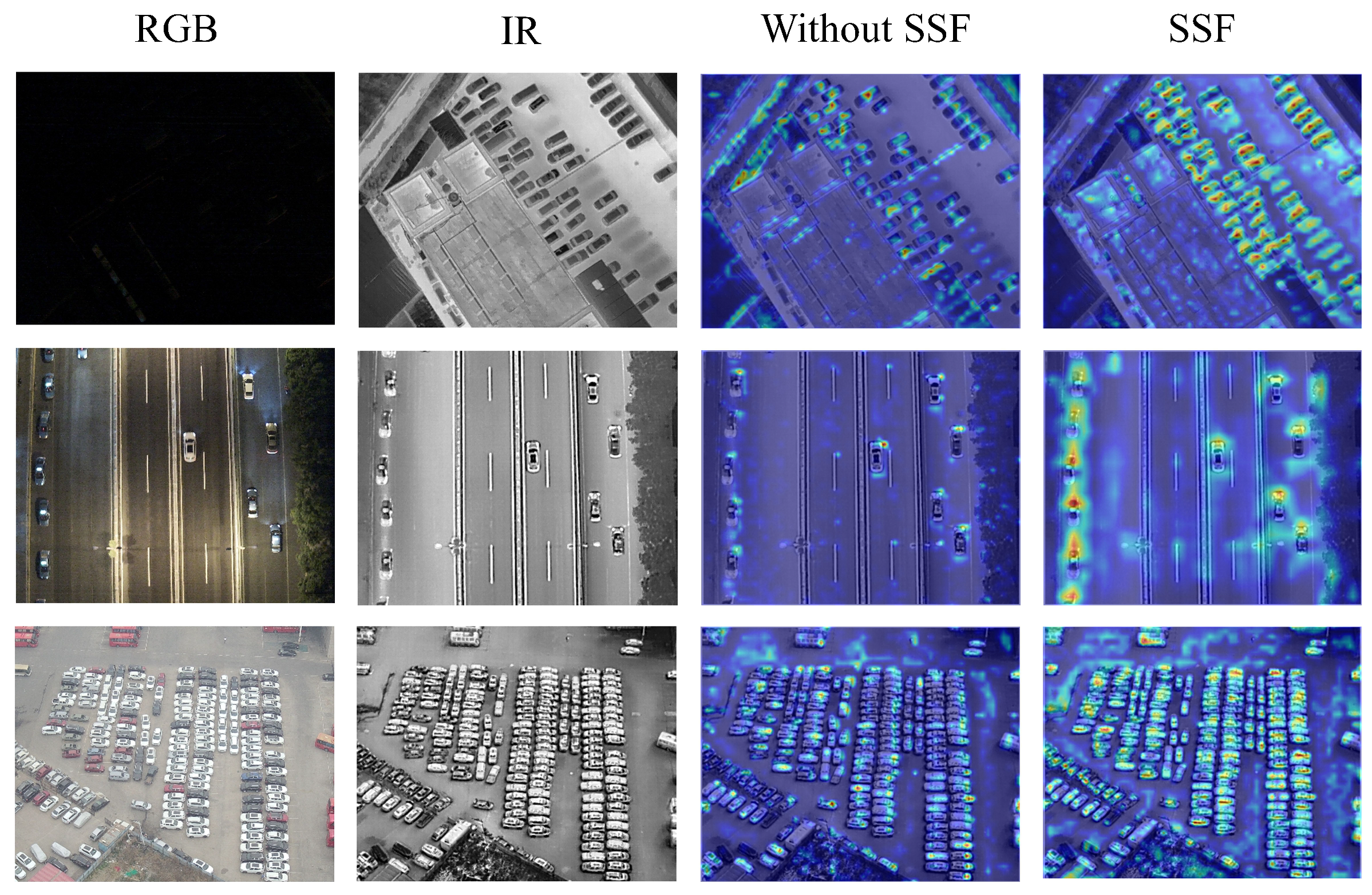

- We also design a Selective Scan Fusion (SSF) module that maps features to a shared hidden state space via a selective scanning mechanism. This module achieves efficient cross-modal feature interaction while maintaining linear computational complexity.

- We validate the effectiveness of our proposed framework on two widely used remote sensing datasets, DroneVehicle and DVTOD. Our method achieves state-of-the-art performance with 82.5% mAP on DroneVehicle and 86.4% mAP on DVTOD, significantly outperforming existing fusion detection methods in both accuracy and efficiency.

2. Materials

3. Methods

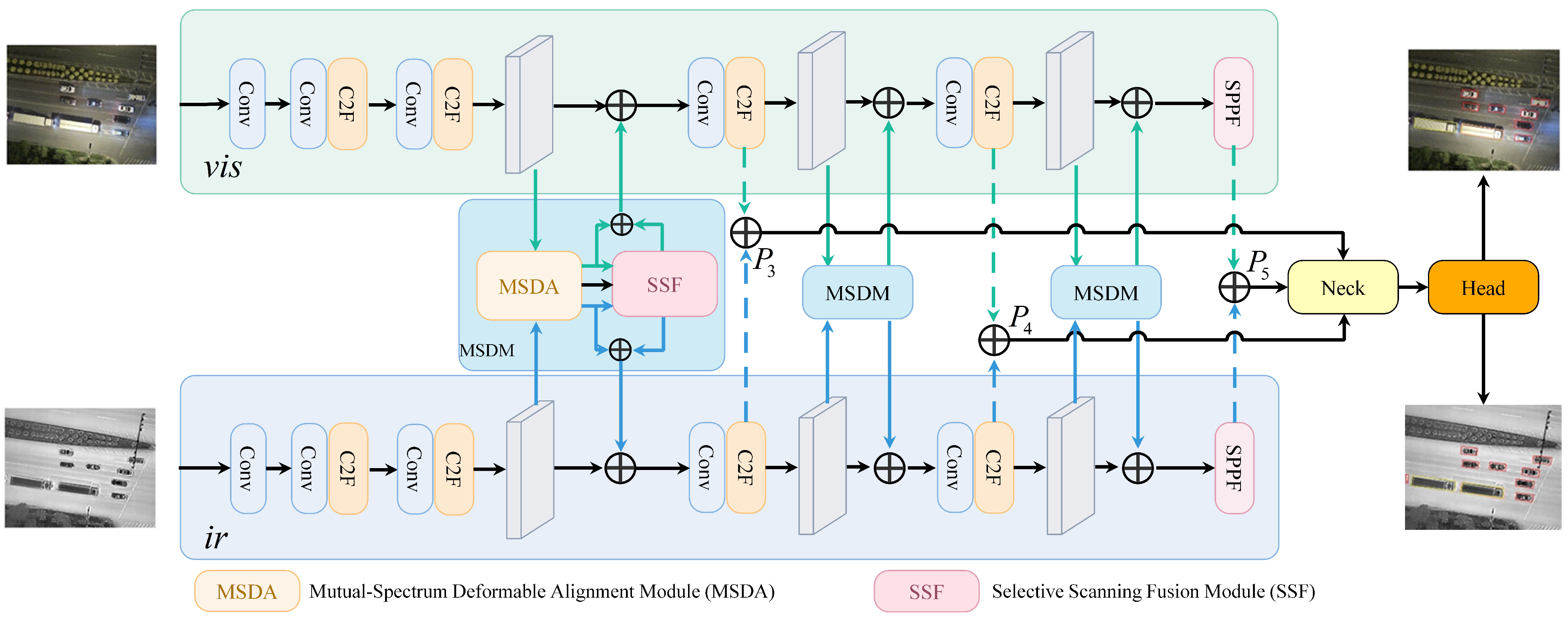

3.1. Overall Framework of MSDF-Mamba

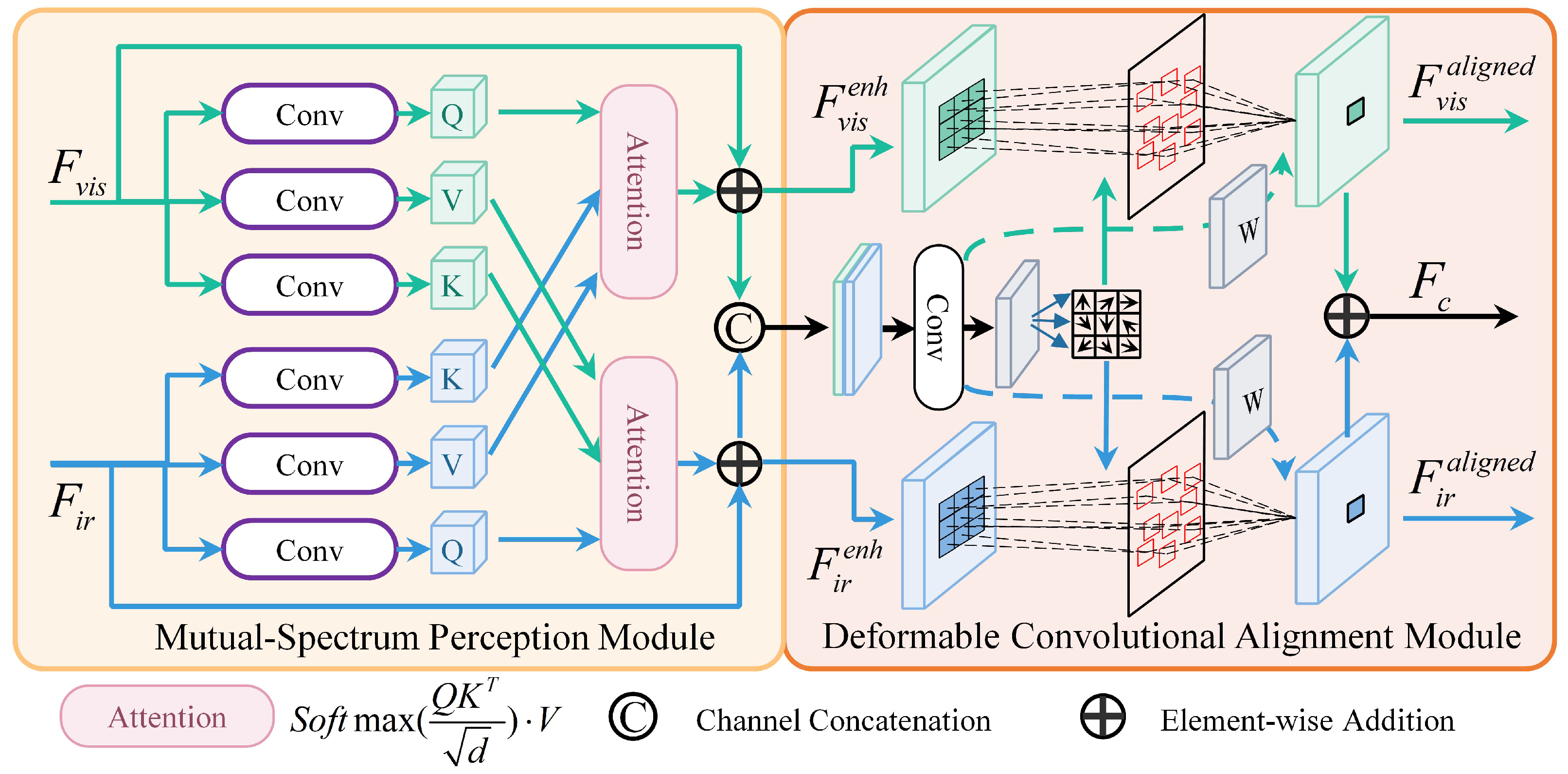

3.2. MSDA Module
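
The alignment step described in the Introduction (offsets predicted from a shared reference, then deformable sampling) can be illustrated with a minimal sketch. This is not the authors' implementation: it uses plain Python lists rather than a deep-learning framework, a single-channel feature map, and a hand-written offset field instead of a predicted one. The helper names `bilinear_sample` and `warp_with_offsets` are hypothetical. A full deformable convolution learns an offset for every kernel tap; the sketch keeps one offset per location to show only the core idea, namely that each output position reads the input at its own position plus a 2D offset, with bilinear interpolation for fractional positions.

```python
import math


def bilinear_sample(feat, y, x):
    """Sample a 2D map at a fractional (y, x); positions outside the map count as zero."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Bilinear weight: product of the 1D distances to the neighbour.
                weight = (1.0 - abs(y - yy)) * (1.0 - abs(x - xx))
                value += weight * feat[yy][xx]
    return value


def warp_with_offsets(feat, offsets):
    """Return a map whose entry (i, j) is feat sampled at (i + dy, j + dx)."""
    h, w = len(feat), len(feat[0])
    return [
        [bilinear_sample(feat, i + offsets[i][j][0], j + offsets[i][j][1])
         for j in range(w)]
        for i in range(h)
    ]


# Toy example (assumed data): the infrared response sits one pixel to the right
# of where the visible image places the same target, at column 1.
ir_feat = [
    [0, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]
# A constant offset (dy, dx) = (0, +1) makes every output pixel read one step to the right.
offsets = [[(0.0, 1.0)] * 4 for _ in range(3)]
aligned = warp_with_offsets(ir_feat, offsets)
print(aligned[1])  # [0.0, 1.0, 0.0, 0.0]
```

After warping, the infrared response sits in column 1, matching the assumed visible-image position. In a trained network the offset field would vary per location and would be predicted from both modalities rather than fixed by hand.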

3.3. SSF Module
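
The Introduction describes the SSF module as mapping features to a shared hidden state space through selective scanning, at linear cost. The following is a minimal sketch of that idea under strong simplifying assumptions, not the authors' implementation: a scalar hidden state, one channel, a fixed decay parameter `a`, and a plain sum as the rule for mixing the two modalities. The function name `shared_state_scan` and all parameter choices are hypothetical. In Mamba, the step size, input projection, and output projection are all learned functions of the input; here only the step size depends on the input.

```python
import math


def softplus(z):
    """Smooth positive function, used to keep the step size above zero."""
    return math.log1p(math.exp(z))


def shared_state_scan(vis, ir, a=-1.0):
    """One left-to-right pass in which both modalities write into a single hidden state.

    The loop touches each token once, so the cost grows linearly with sequence length.
    """
    h = 0.0
    fused = []
    for v, r in zip(vis, ir):
        x = v + r                    # assumed mixing rule for the two modalities
        delta = softplus(x)          # input-dependent step size (the selective part)
        a_bar = math.exp(delta * a)  # discretized decay, in (0, 1) when a < 0
        b_bar = delta                # simplified discretization with B = 1
        h = a_bar * h + b_bar * x    # state update
        fused.append(h)              # readout with C = 1
    return fused


# An impulse in the visible sequence decays in the shared state over later steps.
out = shared_state_scan([1.0, 0.0, 0.0], [0.0, 0.0, 0.0])
print(out)
```

Because the state is updated recurrently, each output depends on all earlier tokens of both modalities without the pairwise token comparisons that give attention its quadratic cost.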

3.4. Loss Function

4. Results

4.1. Datasets and Evaluation Metrics

4.1.1. DroneVehicle Dataset

4.1.2. DVTOD Dataset

4.1.3. Evaluation Metrics

4.2. Implementation Details

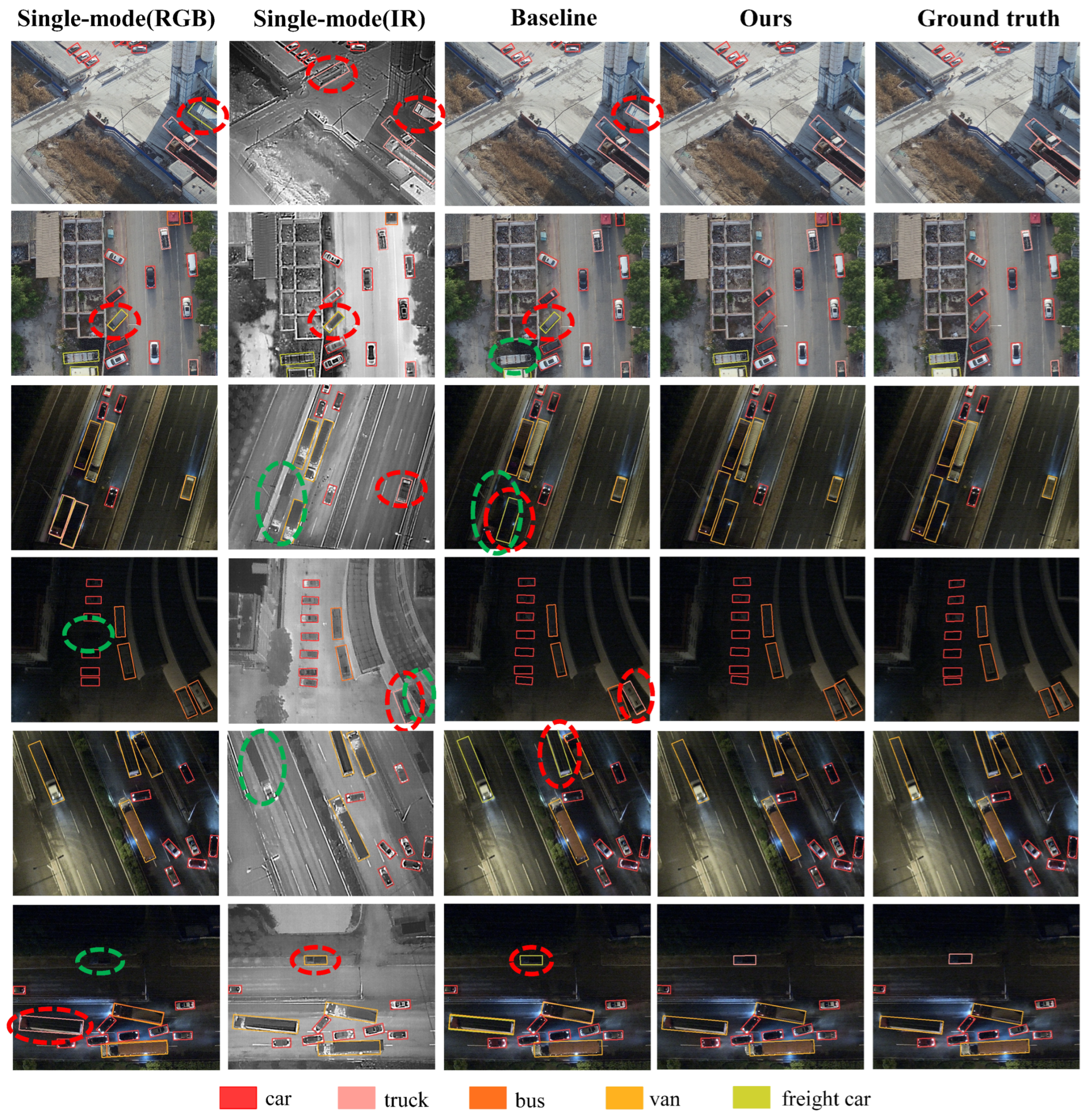

4.3. Comparison Experiments

4.3.1. Comparison on DroneVehicle Dataset

4.3.2. Comparison on DVTOD Dataset

4.3.3. Comparisons of Computational Costs and Speed

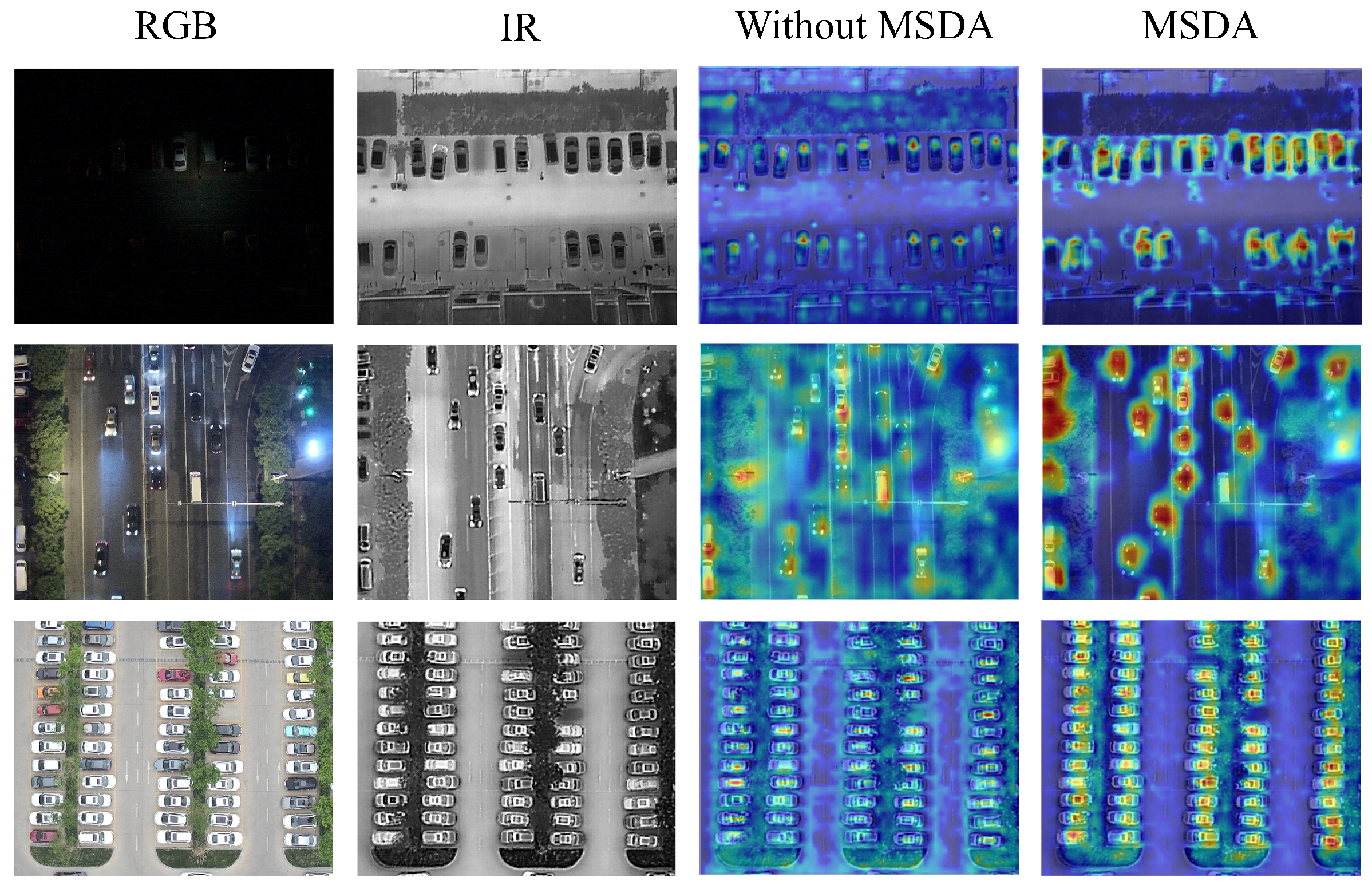

4.4. Ablation Experiments

5. Discussion

5.1. Rationality Analysis of the SSF Module

5.2. Limitations of MSDF-Mamba

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhang, Y.; Zhang, Y.; Shi, Z.; Fu, R.; Liu, D.; Zhang, Y.; Du, J. Enhanced Cross-Domain Dim and Small Infrared Target Detection via Content-Decoupled Feature Alignment. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

2. Zhang, Y.; Zhang, Y.; Fu, R.; Shi, Z.; Zhang, J.; Liu, D.; Du, J. Learning Nonlocal Quadrature Contrast for Detection and Recognition of Infrared Rotary-Wing UAV Targets in Complex Background. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.

3. Zhao, X.; Zhang, H.; Li, C.; Wang, K.; Zhang, Z. DVIF-Net: A Small-Target Detection Network for UAV Aerial Images Based on Visible and Infrared Fusion. Remote Sens. 2025, 17, 3411.

4. Li, H.; Hu, Q.; Zhou, B.; Yao, Y.; Lin, J.; Yang, K. CFMW: Cross-modality Fusion Mamba for Robust Object Detection under Adverse Weather. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 12066–12081.

5. Zhang, Y.; Rui, X.; Song, W. A UAV-Based Multi-Scenario RGB-Thermal Dataset and Fusion Model for Enhanced Forest Fire Detection. Remote Sens. 2025, 17, 2593.

6. Nag, S. Image Registration Techniques: A Survey. arXiv 2017, arXiv:1712.07540.

7. Zhang, L.; Liu, Z.; Zhu, X.; Song, Z.; Yang, X.; Lei, Z. Weakly Aligned Feature Fusion for Multimodal Object Detection. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 4145–4159.

8. Yuan, M.; Wei, X. C2Former: Calibrated and Complementary Transformer for RGB-Infrared Object Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5403712.

9. Tian, C.; Zhou, Z.; Huang, Y.; Li, G.; He, Z. Cross-Modality Proposal-Guided Feature Mining for Unregistered RGB-Thermal Pedestrian Detection. IEEE Trans. Multimed. 2024, 26, 6449–6461.

10. Yuan, M.; Wang, Y.; Wei, X. Translation, Scale and Rotation: Cross-Modal Alignment Meets RGB-Infrared Vehicle Detection. In Proceedings of the Computer Vision – ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 509–525.

11. Liu, Z.; Luo, H.; Wang, Z.; Wei, Y.; Zuo, H.; Zhang, J. Cross-modal Offset-guided Dynamic Alignment and Fusion for Weakly Aligned UAV Object Detection. arXiv 2025, arXiv:2506.16737.

12. Wang, D.; Liu, J.; Fan, X.; Liu, R. Unsupervised Misaligned Infrared and Visible Image Fusion via Cross-modality Image Generation and Registration. arXiv 2022, arXiv:2205.11876.

13. Ma, J.; Tang, L.; Xu, M.; Zhang, H.; Xiao, G. STDFusionNet: An Infrared and Visible Image Fusion Network Based on Salient Target Detection. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

14. Zhang, W.; Wang, S.; Thachan, S.; Chen, J.; Qian, Y.; Lei, Z. Deconv R-CNN for Small Object Detection on Remote Sensing Images. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2483–2486.

15. Wang, Z.; Liao, X.; Yuan, J.; Yao, Y.; Li, Z. CDC-YOLOFusion: Leveraging Cross-Scale Dynamic Convolution Fusion for Visible-Infrared Object Detection. IEEE Trans. Intell. Veh. 2024, 10, 2080–2093.

16. Hu, J.; Huang, T.; Deng, L.; Dou, H.; Hong, D.; Vivone, G. Fusformer: A Transformer-Based Fusion Network for Hyperspectral Image Super-Resolution. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6012305.

17. Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.M.; Shum, H.-Y. Mask DINO: Towards a Unified Transformer-Based Framework for Object Detection and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 3041–3050.

18. Gao, Z.; Li, D.; Kuai, Y.; Chen, R.; Wen, G. Visible-Infrared Image Alignment for UAVs: Benchmark and New Baseline. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5613514.

19. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

20. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

21. Zhou, M.; Li, T.; Qiao, C.; Xie, D.; Wang, G. DMM: Disparity-Guided Multispectral Mamba for Oriented Object Detection in Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5404913.

22. Wang, S.; Wang, C.; Shi, C.; Liu, Y.; Lu, M. Mask-Guided Mamba Fusion for Drone-Based Visible-Infrared Vehicle Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5005712.

23. Liu, C.; Ma, X.; Yang, X.; Zhang, Y.; Dong, Y. COMO: Cross-Mamba Interaction and Offset-Guided Fusion for Multimodal Object Detection. Inf. Fusion 2025, 125, 103414.

24. Dong, W.; Zhu, H.; Lin, S.; Luo, X.; Shen, Y.; Guo, G. Fusion-Mamba for Cross-Modality Object Detection. IEEE Trans. Multimed. 2025, 27, 7392–7406.

25. Gu, A.; Dao, T. Mamba: Linear-time Sequence Modeling with Selective State Spaces. arXiv 2023, arXiv:2312.00752.

26. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

27. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO 2023. Available online: https://docs.ultralytics.com/zh/models/yolov8/ (accessed on 16 October 2025).

28. Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets V2: More Deformable, Better Results. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9300–9308.

29. Zhao, Z.; Zhang, W.; Xiao, Y.; Li, C.; Tang, J. Reflectance-Guided Progressive Feature Alignment Network for All-Day UAV Object Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5404215.

30. Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. Drone-Based RGB-Infrared Cross-Modality Vehicle Detection Via Uncertainty-Aware Learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6700–6713.

31. Song, K.; Xue, X.; Wen, H.; Ji, Y.; Yan, Y.; Meng, Q. Misaligned Visible-Thermal Object Detection: A Drone-Based Benchmark and Baseline. IEEE Trans. Intell. Veh. 2024, 9, 7449–7460.

32. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

33. Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; pp. 3163–3171.

34. Han, J.; Ding, J.; Li, J.; Xia, G. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602511.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

36. Ding, J.; Xue, N.; Long, Y.; Xia, G.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2844–2853.

37. Liu, J.; Zhang, S.; Wang, S.; Metaxas, D. Multispectral Deep Neural Networks for Pedestrian Detection. arXiv 2016, arXiv:1611.02644.

38. Zhang, L.; Liu, Z.; Zhang, S.; Yang, X.; Qiao, H.; Huang, K.; Hussain, A. Cross-modality Interactive Attention Network for Multispectral Pedestrian Detection. Inf. Fusion 2019, 50, 20–29.

39. Zhou, K.; Chen, L.; Cao, X. Improving Multispectral Pedestrian Detection by Addressing Modality Imbalance Problems. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 787–803.

40. Chen, C.; Qi, J.; Liu, X.; Bin, K.; Fu, R.; Hu, X.; Zhong, P. Weakly Misalignment-Free Adaptive Feature Alignment for UAVs-Based Multimodal Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 26826–26835.

41. Ren, K.; Wu, X.; Xu, L.; Wang, L. RemoteDet-Mamba: A Hybrid Mamba-CNN Network for Multi-modal Object Detection in Remote Sensing Images. arXiv 2024, arXiv:2410.13532.

42. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

43. Jocher, G.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Ingham, F.; Poznanski, J.; Fang, J.; Yu, L.; et al. ultralytics/yolov5: v3.1 - Bug Fixes and Performance Improvements. Available online: https://zenodo.org/records/4154370 (accessed on 16 October 2025).

44. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

45. Wang, C.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

46. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

47. Zhang, J.; Liu, H.; Yang, K.; Hu, X.; Liu, R.; Stiefelhagen, R. CMX: Cross-Modal Fusion for RGB-X Semantic Segmentation with Transformers. IEEE Trans. Intell. Transp. Syst. 2023, 24, 14679–14694.

48. Fang, Q.; Han, D.; Wang, Z. Cross-Modality Fusion Transformer for Multispectral Object Detection. arXiv 2021, arXiv:2111.00273.

49. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

| Hyperparameters | Value |
|---|---|
| Image size | 640 × 640 |
| Epochs | 150 |
| Batch size | 4 |
| Optimizer | SGD |
| Momentum | 0.937 |
| Data augmentation | Mosaic |
| Weight decay | 0.0005 |
| Initial learning rate | 0.01 |
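
For reproduction purposes, the training settings listed in the table above can be collected in one place. The sketch below uses a plain Python dataclass; the class name `TrainConfig` and its field names are hypothetical and are not tied to any particular training framework. Only the values come from the table.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Training settings copied from the hyperparameter table (field names are assumed)."""
    image_size: tuple = (640, 640)   # input resolution in pixels
    epochs: int = 150
    batch_size: int = 4
    optimizer: str = "SGD"
    momentum: float = 0.937
    augmentation: str = "Mosaic"
    weight_decay: float = 0.0005
    initial_lr: float = 0.01


cfg = TrainConfig()
print(asdict(cfg))
```

Freezing the dataclass keeps the settings from being changed accidentally during a run.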

| Method | Modality | Type | Car | Truck | Freight-Car | Bus | Van | mAP@0.5 (%) |
|---|---|---|---|---|---|---|---|---|
| RetinaNet [32] | RGB | One-Stage | 78.5 | 34.4 | 24.1 | 69.8 | 28.8 | 47.1 |
| R3Det [33] | RGB | One-Stage | 80.3 | 56.1 | 42.7 | 80.2 | 44.4 | 60.8 |
| S2ANet [34] | RGB | One-Stage | 80.0 | 54.2 | 42.2 | 84.9 | 43.8 | 61.0 |
| Faster-R-CNN [35] | RGB | Two-Stage | 79.0 | 49.0 | 37.2 | 77.0 | 37.0 | 55.9 |
| RoITransformer [36] | RGB | Two-Stage | 61.6 | 55.1 | 42.3 | 85.5 | 44.8 | 61.6 |
| YOLOv8(OBB) [27] | RGB | One-Stage | 93.4 | 70.6 | 56.6 | 92.1 | 59.8 | 74.5 |
| RetinaNet [32] | IR | One-Stage | 88.8 | 35.4 | 39.5 | 76.5 | 32.1 | 54.5 |
| R3Det [33] | IR | One-Stage | 89.5 | 48.3 | 16.6 | 87.1 | 39.9 | 62.3 |
| S2ANet [34] | IR | One-Stage | 89.9 | 54.5 | 55.8 | 88.9 | 48.4 | 67.5 |
| Faster-R-CNN [35] | IR | Two-Stage | 89.4 | 53.5 | 48.3 | 87.0 | 42.6 | 64.2 |
| RoITransformer [36] | IR | Two-Stage | 89.6 | 51.0 | 53.4 | 88.9 | 44.5 | 65.5 |
| YOLOv8(OBB) [27] | IR | One-Stage | 97.3 | 73.1 | 67.8 | 93.6 | 61.2 | 78.6 |
| Ours | RGB + IR | One-Stage | 98.6 | 82.5 | 69.8 | 96.9 | 64.5 | 82.5 |
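
The mAP@0.5 column can be checked against the per-class columns. The short sketch below assumes that mAP@0.5 is the unweighted mean of the five per-class AP values, which is the usual definition but is not spelled out in the text here; the variable names are illustrative. The numbers are the "Ours" row of the table above.

```python
# Per-class AP@0.5 (%) for the "Ours" row on DroneVehicle, copied from the table.
per_class_ap = {
    "Car": 98.6,
    "Truck": 82.5,
    "Freight-Car": 69.8,
    "Bus": 96.9,
    "Van": 64.5,
}

# Assumed definition: mAP is the plain average over classes.
mean_ap = sum(per_class_ap.values()) / len(per_class_ap)
print(round(mean_ap, 1))  # 82.5
```

The result matches the reported 82.5%, which is consistent with the assumed definition for this row.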

| Method | Modality | Type | Car | Truck | Freight-Car | Bus | Van | mAP@0.5 (%) |
|---|---|---|---|---|---|---|---|---|
| UA-CMDet [30] | RGB + IR | Two-Stage | 87.5 | 60.7 | 46.8 | 87.1 | 38.0 | 64.0 |
| Halfway Fusion [37] | RGB + IR | Two-Stage | 90.1 | 62.3 | 58.5 | 89.1 | 49.8 | 70.0 |
| CIAN [38] | RGB + IR | One-Stage | 90.1 | 63.8 | 60.7 | 89.1 | 50.3 | 70.8 |
| AR-CNN [7] | RGB + IR | Two-Stage | 90.1 | 64.8 | 62.1 | 89.4 | 51.5 | 71.6 |
| MBNet [39] | RGB + IR | One-Stage | 90.1 | 64.4 | 62.4 | 88.8 | 53.6 | 71.9 |
| TSFADet [10] | RGB + IR | Two-Stage | 89.9 | 67.9 | 63.7 | 89.8 | 54.0 | 73.1 |
| C2Former [8] | RGB + IR | One-Stage | 90.2 | 68.3 | 64.4 | 89.8 | 58.5 | 74.2 |
| DMM [21] | RGB + IR | One-Stage | 90.4 | 79.8 | 68.2 | 89.9 | 68.6 | 79.4 |
| OAFA [40] | RGB + IR | One-Stage | 90.3 | 76.8 | 73.3 | 90.3 | 66.0 | 79.4 |
| RemoteDet-Mamba [41] | RGB + IR | One-Stage | 98.2 | 81.2 | 67.9 | 95.7 | 65.1 | 81.8 |
| Ours | RGB + IR | One-Stage | 98.6 | 82.5 | 69.8 | 96.9 | 64.5 | 82.5 |

| Method | Modality | Person | Car | Bicycle | mAP@0.5 (%) |
|---|---|---|---|---|---|
| YOLOv3 [42] | RGB | 25.1 | 53.8 | 24.6 | 34.5 |
| YOLOv5 [43] | RGB | 23.3 | 50.2 | 26.7 | 33.4 |
| YOLOv6 [44] | RGB | 31.0 | 52.7 | 30.5 | 38.1 |
| YOLOv7 [45] | RGB | 26.5 | 45.0 | 20.4 | 30.6 |
| YOLOv8 [27] | RGB | 28.4 | 52.7 | 24.7 | 35.3 |
| YOLOv10 [46] | RGB | 30.4 | 50.8 | 23.8 | 35.0 |
| YOLOv3 [42] | IR | 87.1 | 76.1 | 84.8 | 82.7 |
| YOLOv5 [43] | IR | 86.2 | 75.9 | 86.2 | 82.8 |
| YOLOv6 [44] | IR | 86.2 | 78.3 | 88.8 | 84.4 |
| YOLOv7 [45] | IR | 80.2 | 68.7 | 84.5 | 77.8 |
| YOLOv8 [27] | IR | 86.2 | 75.6 | 88.1 | 83.3 |
| YOLOv10 [46] | IR | 85.9 | 74.9 | 85.3 | 82.1 |
| YOLOv5 + Add | RGB + IR | 88.8 | 74.3 | 74.6 | 79.2 |
| YOLOv5 + CMX [47] | RGB + IR | 88.9 | 75.9 | 79.6 | 81.6 |
| CFT [48] | RGB + IR | 88.9 | 78.0 | 81.3 | 82.7 |
| CMA-Det [31] | RGB + IR | 90.3 | 81.6 | 83.1 | 85.0 |
| Ours | RGB + IR | 89.8 | 83.3 | 86.2 | 86.4 |

| Method | MSDA | SSF | Parameters (MB) | Speed (FPS) | mAP@0.5 (%) |
|---|---|---|---|---|---|
| Baseline | × | × | 11.70 | 337.05 | 78.9 |
| Baseline + MSDA | √ | × | 38.36 | 68.15 | 80.8 |
| Baseline + SSF | × | √ | 29.86 | 47.39 | 81.5 |
| Ours | √ | √ | 51.34 | 34.65 | 82.5 |
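
The trade-off in the ablation table is easier to read as differences against the baseline. The sketch below recomputes those differences from the table rows; the dictionary layout and the helper name `deltas` are illustrative, and nothing beyond the table values is assumed.

```python
# Rows copied from the ablation table: (parameters in MB, speed in FPS, mAP@0.5 in %).
ablation = {
    "Baseline": (11.70, 337.05, 78.9),
    "Baseline + MSDA": (38.36, 68.15, 80.8),
    "Baseline + SSF": (29.86, 47.39, 81.5),
    "Ours": (51.34, 34.65, 82.5),
}

base_params, base_fps, base_map = ablation["Baseline"]


def deltas(name):
    """Accuracy gain, added parameters, and remaining speed relative to the baseline."""
    params, fps, m_ap = ablation[name]
    return {
        "map_gain": round(m_ap - base_map, 1),
        "extra_params_mb": round(params - base_params, 2),
        "fps_ratio": round(fps / base_fps, 3),
    }


for name in ablation:
    print(name, deltas(name))
```

On these numbers, MSDA alone adds 1.9 points and SSF alone adds 2.6 points, while the full model adds 3.6 points, so the two gains are not simply additive. The full model also runs at roughly one tenth of the baseline frame rate.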

| Module | Stage 1, 128 Channels (MB) | Stage 2, 256 Channels (MB) | Stage 3, 512 Channels (MB) | All (MB) |
|---|---|---|---|---|
| Baseline | 0 | 0 | 0 | 0 |
| MSDA | 1.21 | 4.33 | 16.33 | 21.87 |
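
The per-stage figures for MSDA can be checked for internal consistency and for how they scale with channel width. The sketch below uses only the values in the table above; the variable names are illustrative.

```python
# MSDA parameter size (MB) per stage, keyed by channel count, copied from the table.
msda_params_mb = {128: 1.21, 256: 4.33, 512: 16.33}

# The per-stage values should add up to the "All" column.
total_mb = round(sum(msda_params_mb.values()), 2)
print(total_mb)  # 21.87

# Growth factor each time the channel count doubles.
channels = sorted(msda_params_mb)
growth = [
    round(msda_params_mb[hi] / msda_params_mb[lo], 2)
    for lo, hi in zip(channels, channels[1:])
]
print(growth)
```

The stage values sum to the reported 21.87 MB. Each doubling of the channel count multiplies the module size by a factor between 3.5 and 4, which is close to the fourfold growth expected when most weights scale with the square of the channel width; this reading is an interpretation of the numbers rather than a statement from the source.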

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shen, J.; He, J.; Liu, Q.; Zhang, Z.; Wang, G.; Lu, D. MSDF-Mamba: Mutual-Spectrum Perception Deformable Fusion Mamba for Drone-Based Visible–Infrared Cross-Modality Vehicle Detection. Remote Sens. 2025, 17, 4037. https://doi.org/10.3390/rs17244037
