1. Introduction

Hyperspectral remote sensing image classification is a central problem in hyperspectral data analysis, with extensive applications in fields such as vegetation cover statistics [1], resource exploration [2], military target detection and operations [3], agricultural development [4], urban planning monitoring, and meteorological and environmental monitoring [5]. While hyperspectral remote sensing images offer abundant information, they also present challenges such as high dimensionality, strong redundancy, scarce samples, and wide intra-class scatter coupled with narrow inter-class margins [6]. These challenges make it difficult for traditional classification methods to perform high-precision identification in complex scenes. Traditional classification methods mainly rely on manually designed shallow features and machine learning algorithms. Although they offer some interpretability in specific tasks, they have significant shortcomings in deep feature extraction, generalization ability, and the modeling of complex land structures [7].

Currently, representative methods for hyperspectral image classification include 1D-CNN-based spectral feature extraction [8], 2D-CNN-driven spatial feature extraction [9], and 3D-CNN-enabled spectral-spatial feature extraction [10]. However, most of these methods employ fixed-size convolutional kernels, making it difficult to adapt to geospatial objects of different scales. Meanwhile, the local receptive field of these models limits their ability to globally model distant objects of the same class during classification [11]. Mou et al. [12] fed spectral band sequences into recurrent neural networks as time-series data and optimized the activation functions, improving land cover classification accuracy but with limited capability in modeling long spectral sequences. Li et al. [13] proposed a grouped multi-kernel convolution strategy to enhance intra-group discriminative information and preserve spectral integrity, yet it failed to effectively distinguish different land cover components at the spatial scale. Three-dimensional convolutional networks have gained traction in hyperspectral analysis for their ability to extract and fuse multi-scale spectral and spatial features simultaneously [14]. Roy et al. [15] proposed HybridSN, a hybrid spectral CNN integrating the spectral-spatial joint modeling of 3D-CNNs with the deep feature extraction of 2D-CNNs. It effectively handled the classification of similar textures but tended to over-model redundant spectral bands. Li et al. [16] introduced an end-to-end 3D-CNN method for joint spectral-spatial feature extraction and pixel-level classification, but the model was prone to misclassifying edges and small targets and lacked adaptive spectral band selection, resulting in coarse handling of the spectral dimension. He et al. [17] proposed ResNet, which effectively addressed the training difficulties of deep networks through shortcut connections. Zhong et al. [18] developed SSRN (Spectral-Spatial Residual Network), which jointly optimized spectral-spatial features to enhance feature transfer and reuse, improving classification efficiency but suffering from accuracy degradation due to redundant pixels and invalid bands.

To address the redundant pixel issue, researchers introduced attention mechanisms into hyperspectral image classification [19]. Zhu et al. [20] combined 2D residual CNNs with the spectral-spatial attention mechanism (SSAM) to obtain more discriminative spectral-spatial features, but they did not adopt 3D convolution, and SSAM relied on local weighting and lacked global context modeling capability. Ma et al. [21] proposed CPDB-Net, a center-pixel-based dual-branch network that integrated a novel frequency-aware attention mechanism into the ViT-based spatial branch. This mitigated non-target interference from expanded spatial windows, yet high-dimensional spectral data increased computational complexity, making it hard for the network to capture subtle spectral differences among land covers in high-dimensional space.

To address the challenges posed by high-dimensional data, Dai et al. [22] proposed the SDAE method. It used noise in the unsupervised steps and was then fine-tuned with supervision, effectively alleviating the Hughes phenomenon and noise interference in high-dimensional data. Because it ignored spatial information, it often misclassified land types that looked similar in space but had tiny spectral differences. Zhao et al. [23] proposed a multi-scale cross-convolution method. It mined spatial-spectral correlation features at different scales and fused cross-scale semantic information, effectively addressing spectral confusion and high-dimensional redundancy. However, its generalization across datasets needed improvement. Mei et al. [24] proposed a group-aware hierarchical method. It divided bands into groups and fused spatial neighborhood information to better utilize high-dimensional data, but made insufficient use of spatial details.

As GPU computing performance has improved, various deep learning models have been developed. Early deep learning approaches used shallow networks for spectral or spatial feature extraction [25,26,27,28]. Later, deep and multi-layer architectures enabled efficient fusion of spectral and spatial information. Now, multi-scale and multi-view CNNs accurately capture subtle changes in hyperspectral images [29,30]. Models combining attention mechanisms and Transformers excel at capturing long-range dependencies, improving classification accuracy and generalization [31,32]. Spectral, spatial, and central attention methods have been increasingly proposed and applied. Spectral methods exploit subtle spectral differences. Shu et al. [33] used separated attention to strengthen spectral and spatial features, but the method lacked feature interaction and struggled with complex objects. Duan et al. [34] applied spectral attention to select discriminative bands, but the method was weak against noise. Chhapariya et al. [35] achieved high accuracy with few parameters using residual dual-branch attention, though its settings relied on experience. Han et al. [36] modeled sub-pixel spectral uncertainty for robustness but could not precisely focus on core features. Spatial methods model ground object distributions. Multi-scale CNNs use varied receptive fields to capture diverse spatial patterns [37,38]. Ge et al. [39] proposed a pyramid network with channel-spatial separation to address fixed receptive fields and attention redundancy, though its kernel scales were not fully adaptive. Central attention methods focus on key features and cross-domain fusion. Feng et al. [40] developed the CAT Transformer, using hierarchical spectral-spatial tokens to target central pixels, but it struggled with certain ground object categories. Zhang et al. [41] introduced S2CABT, which handled spectral reduction, feature extraction, and context refinement simultaneously, yet its generalization in complex scenes was limited. Jia et al. [42] presented CenterFormer, which precisely focused on core features and enhanced spectral-spatial collaboration, but fell short in capturing edge details.

In unsupervised remote sensing algorithms, breakthroughs have addressed challenges such as scarce labels and mixed-pixel interference through spatial-spectral learning, unsupervised representation mining, low-rank sparse modeling, and graph topology analysis. Wang et al. [43] proposed OraL, a two-stage (observation-regeneration) pixel-level change detector. It narrowed the gap to supervised performance but depended on the quality of the initial pseudo-labels and needed validation under extreme spectral variations. Zhou et al. [44] developed LRSnet, combining low-rank feature extraction with noise suppression and CDnet for change mining. It achieved high accuracy but struggled with large-scale pseudo-changes and hyperparameter sensitivity. Li et al. [45] introduced EDIP-Net, a two-stage unsupervised super-resolution network. It generated scene-aware coarse estimates (ZSL) and refined results (DIG) without external data, offering strong robustness at the price of high computational costs and noise sensitivity. Wang et al. [46] created CAN, using PCA compression and attention modules for adaptive spatial-spectral feature weighting. However, it showed limited accuracy for spectrally similar objects and complex classifications. Mei et al. [47] combined multi-scale low-rank representation (for hierarchical feature separation) with bidirectional recursive filtering (for spatial-spectral enhancement). The method risked blurring fine object boundaries due to over-smoothing. Wang et al. [48] proposed a dual stochastic graph-based projection clustering method for mining global topological relationships. While scalable, it lacked spatial neighborhood integration, causing confusion between similarly distributed objects.

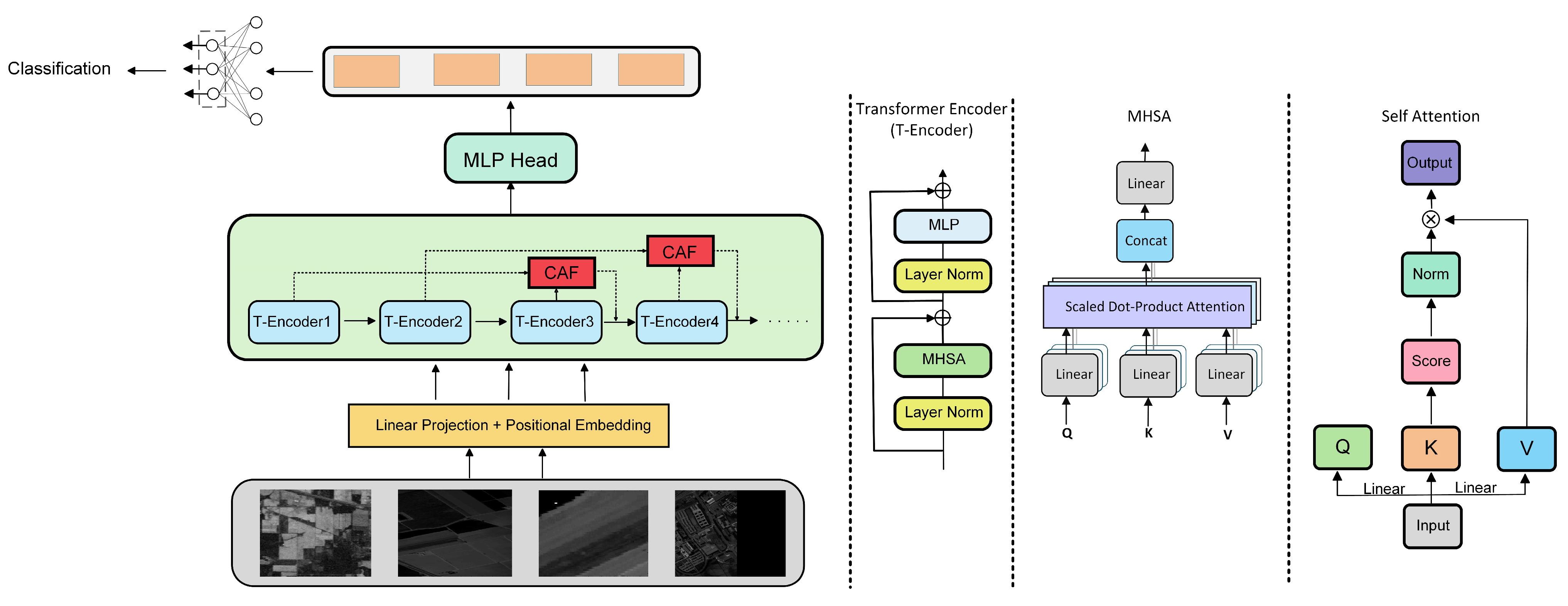

These algorithms have achieved good classification performance, and new architecture-based algorithms have been proposed in recent years. To address overfitting and poor generalization under small-sample scenarios, Hong et al. [49] proposed SpectralFormer for hyperspectral image classification, achieving breakthroughs in spectral sequence modeling via group-wise spectral embedding (GSE) and cross-layer adaptive fusion (CAF) modules, but it ignored local spectral continuity and captured spectral detail insufficiently. Zhong et al. [50] proposed SSTN, which effectively addressed long-range dependency modeling in hyperspectral classification by introducing a Transformer, yet it lacked a unified spectral-spatial joint modeling mechanism. Zhang et al. [51] proposed the SSSAN model, which simultaneously performed spatial and spectral self-attention and employed pixel-wise sliding window prediction, applying attention to each individual pixel. This resulted in a large amount of redundant computation. Moreover, the model did not incorporate spatial positional encoding, making it prone to overfitting and attention degradation in scenarios with limited training samples or complex boundaries. Li et al. [52] proposed the end-to-end CNN-based CVSSN, fusing spectral-spatial information efficiently with a center-pixel-oriented mechanism, but this mechanism amplified interference in class boundary regions, where neighborhood pixel categories were inconsistent. Xu et al. [53] proposed the robust self-ensemble network (RSEN) for hyperspectral classification, achieving high accuracy with extremely few labeled samples via self-ensemble learning. Fu et al. [54] proposed ReSC-net, enhancing inter-channel non-linear expression via the ECA mechanism and optimizing deep feature representation with dual attention, but it did not consider spectral non-linear mixing and adapted poorly to datasets with large differences in band number. Cui et al. [55] proposed DEMUNet, cascading bidirectional Mamba scanning, CNN-Transformer dual-branch encoding, and U-Net decoding to aggregate long-range spectral-spatial features with linear complexity; however, it insufficiently exploited spectral 3D continuity, and bidirectional scanning plus dual-path fusion increased computation and parameters.

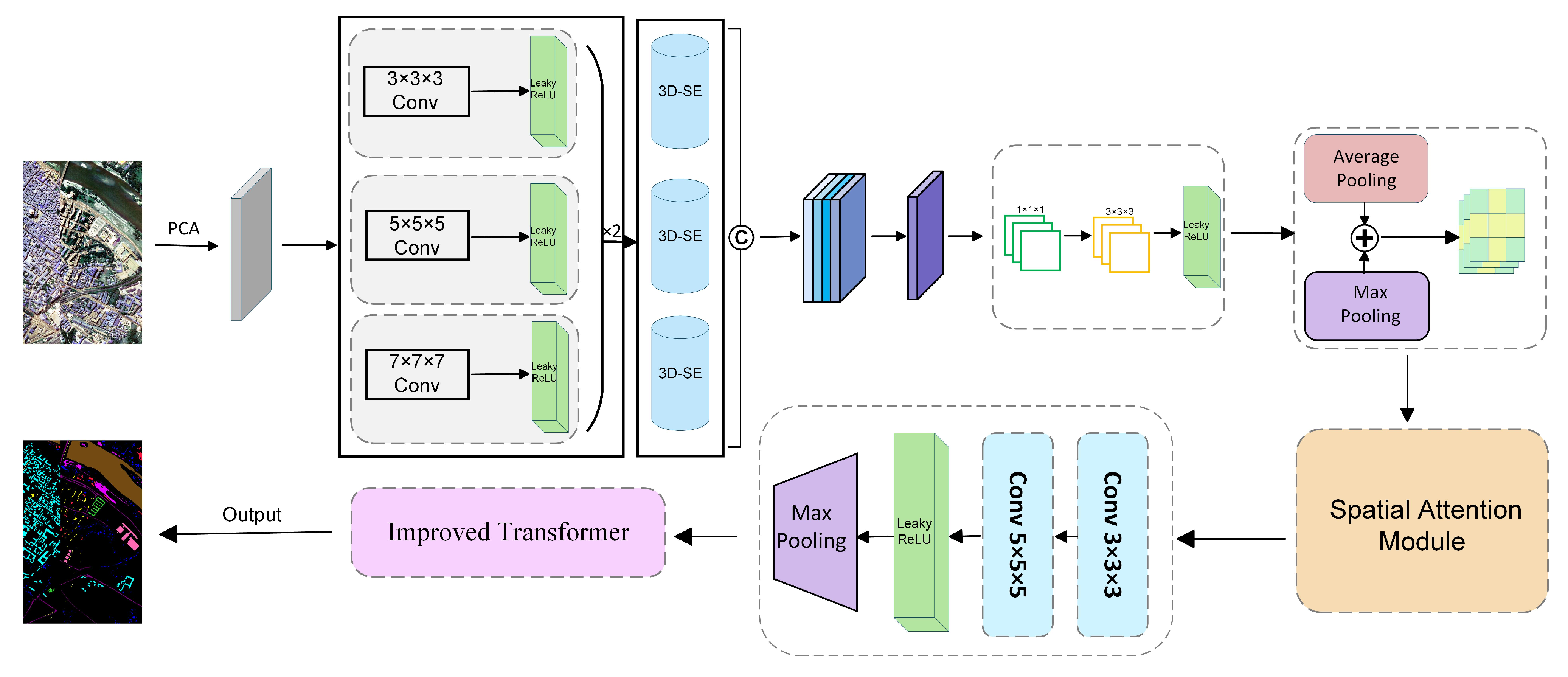

Although existing research has shown that attention mechanisms or multi-scale convolution can improve hyperspectral image classification, significant gaps persist under extreme sample scarcity. First, most models rely solely on single-scale token modeling and lack an explicit representation of multi-level granularity, causing spatial details to be suppressed by global averaging. Second, although lightweight convolutions are employed, their fixed kernel sizes cannot adaptively adjust the receptive field to the target scale, which often leads to non-negligible discriminative confusion in boundary regions. Third, unidimensional attention, whether channel-wise or spatial-wise, lacks joint optimization of the three-dimensional coupling among “channel, space, and context,” making it difficult to suppress noise and amplify common features. Therefore, this paper proposes a “Multi-scale and Multi-level” joint modeling framework. For the first time, it couples three-dimensional multi-path parallel convolution with a progressive channel-spatial self-attention mechanism into a unified optimization objective. This framework simultaneously recalibrates discriminative weights at the pixel, scale, and channel levels. Consequently, it addresses the dynamic fusion of features of different sizes, which existing methods struggle to balance under small-sample conditions, thereby significantly improving both classification accuracy and boundary preservation for hyperspectral images.

The main contributions of this study are as follows:

- (1)

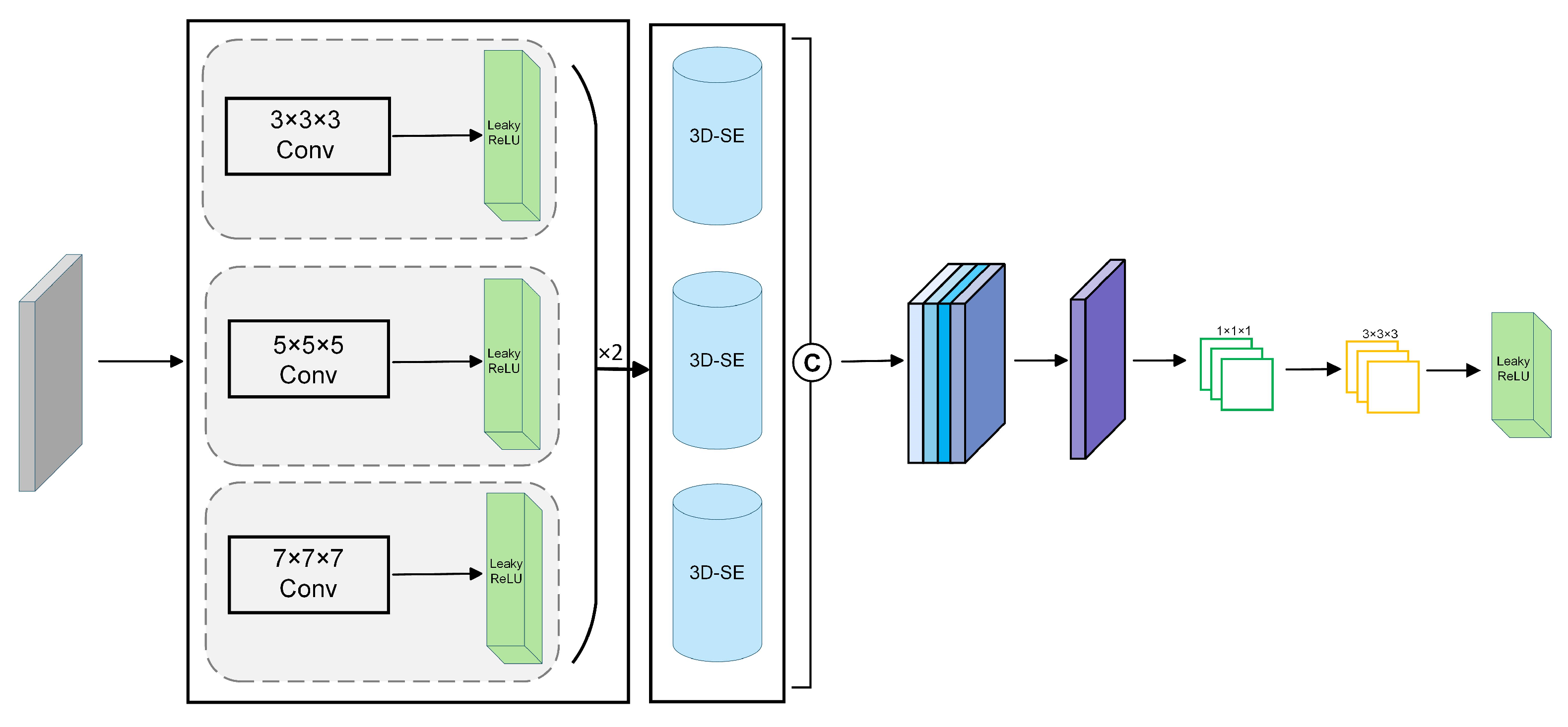

To tackle the multi-scale representation challenge inherent in hyperspectral imagery, a multi-scale spectral-spatial feature extraction mechanism is suggested. By leveraging three parallel 3D convolutional pathways, the model builds receptive fields to hierarchically extract spectral–spatial features, spanning fine local textures to coarse global contexts. These convolutional operations not only strengthen local structural representations but also support channel reconstruction and feature refinement.

- (2)

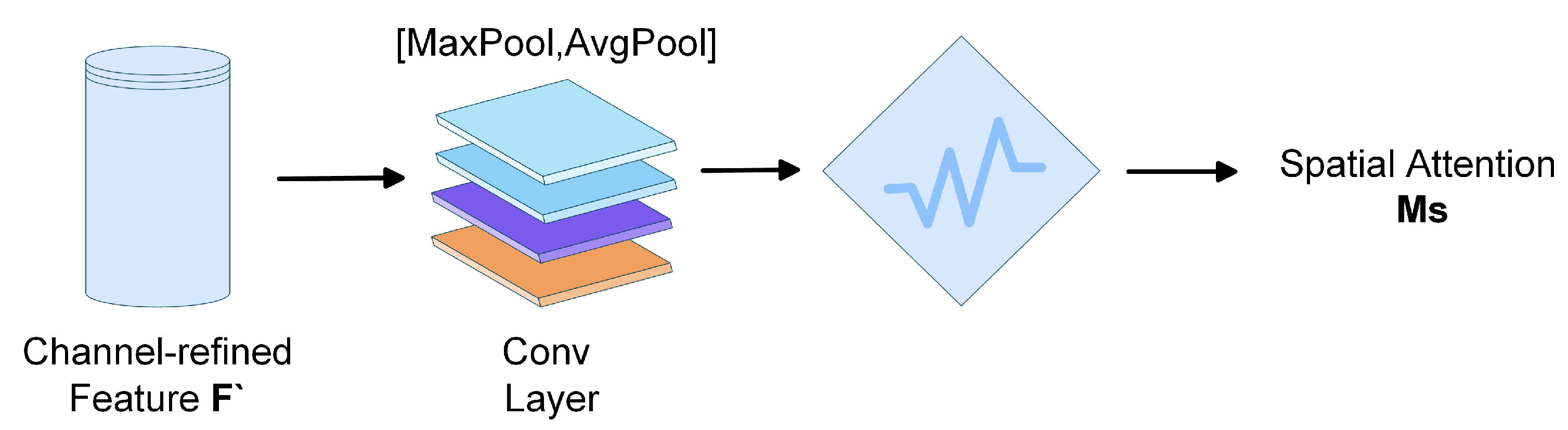

To adaptively enhance feature representations, both spatial and channel attention mechanisms are incorporated. The 3D-SE channel attention module is adopted to reinforce local semantic information and facilitate the efficient aggregation of cross-scale key features. Additionally, the CBAM mechanism is utilized to emphasize significant spectral bands and enhance focus on spatial regions of interest.

- (3) To capture global spectral dependencies, self-attention is adopted, and relative positional encoding is introduced to maintain the sequential order of channels. A cross-attention fusion mechanism is then leveraged to achieve bidirectional integration between the local spatial features extracted by the CNN and the global spectral features modeled by the Transformer.
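The three parallel 3D convolutional pathways of contribution (1) can be pictured with a minimal NumPy sketch. It is illustrative only and not the authors' implementation: the kernel sizes (3, 5, 7), the random untrained kernels, the zero padding, the single input channel, and the function names `conv3d_same` and `multi_scale_features` are all assumptions made here for the example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv3d_same(cube, kernel):
    """Naive zero-padded 3D correlation of a (bands, H, W) cube with an odd cubic kernel."""
    pad = kernel.shape[0] // 2
    padded = np.pad(cube, pad, mode="constant")
    # windows has shape (bands, H, W, k, k, k): one k*k*k neighborhood per voxel.
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("bhwijk,ijk->bhw", windows, kernel)


def multi_scale_features(cube, kernel_sizes=(3, 5, 7), seed=0):
    """Run parallel branches with different receptive fields and stack their outputs."""
    rng = np.random.default_rng(seed)
    branches = []
    for k in kernel_sizes:  # assumed kernel sizes; odd so that output size equals input size
        kernel = rng.standard_normal((k, k, k)) / k**3  # stand-in for learned weights
        branches.append(np.maximum(conv3d_same(cube, kernel), 0.0))  # ReLU
    return np.stack(branches, axis=0)  # (n_branches, bands, H, W)
```

Because every branch preserves the input shape, the branch outputs can be stacked or concatenated directly before attention is applied; a trained model would learn the kernels rather than sample them.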

The rest of this paper is structured as follows. Section 2 presents the main research methods of this paper, including the model algorithm, the datasets, the evaluation indicators, the model training strategies, the construction of the loss function, and the experimental environment in which the model operates. In Section 3, we present and analyze the model’s experimental results, including comparative and ablation experiments. In Section 4, we critically discuss the advantages and drawbacks of the proposed algorithm. Finally, in Section 5, we provide a comprehensive summary of this paper.

3. Experiments and Results

To avoid the spatial leakage caused by pixel-level random partitioning, we employed a K-fold cross-validation experimental design [67]. Specifically, the entire hyperspectral image was divided into K non-overlapping subsets (patches). In each iteration, K-1 subsets were used as the training set, while the remaining subset served as the test set. This process was repeated K times, so that each subset was used exactly once as the test set. This approach evaluates the model’s generalization capability across different spatial locations and class distributions. In this study, we set K = 5. Within each fold, a small portion of samples was further held out from the training set as a validation set for early stopping and hyperparameter tuning. We report the means and variances over the folds as the final performance metrics. The best results are shown in bold. During training, the Adam optimizer was used to update parameters, with an initial learning rate of 0.0001, a batch size of 32, and 100 training epochs to ensure convergence.
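The spatially disjoint K-fold protocol described above can be sketched as follows. This is a sketch under stated assumptions, not the authors' code: the text does not specify the geometry of the K subsets, so contiguous column strips are assumed here, and the helper names `spatial_kfold_masks` and `split_validation` as well as the 10% validation fraction are hypothetical.

```python
import numpy as np


def spatial_kfold_masks(height, width, k=5):
    """Yield (train_mask, test_mask) pairs built from k non-overlapping column strips."""
    # Assumption: each fold is one contiguous vertical strip of the image.
    fold_of_column = np.arange(width) * k // width
    fold_map = np.broadcast_to(fold_of_column, (height, width))
    for fold in range(k):
        test_mask = fold_map == fold
        yield ~test_mask, test_mask  # the other k-1 strips form the training region


def split_validation(train_indices, val_fraction=0.1, seed=0):
    """Hold out a small random part of the training indices for early stopping."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(train_indices)
    n_val = max(1, int(round(val_fraction * len(shuffled))))
    return shuffled[n_val:], shuffled[:n_val]  # (train, validation)
```

Because training and test pixels come from different spatial regions, overlapping neighborhoods of a test pixel cannot contain training pixels from the same region interior, which is the leakage that pixel-level random splits suffer from. Patches that straddle a strip border would still need to be excluded or clipped.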

3.1. Comparative Experiments

To validate the efficacy of the proposed hyperspectral image classification method, which integrates multi-scale feature fusion with a multi-level attention mechanism, comparative experiments are conducted between the proposed model and several state-of-the-art approaches: SpectralFormer [49], SSTN [50], SSSAN [51], CVSSN [52], RSEN [53], and DEMUNet [55]. Experiments are conducted on four widely adopted benchmark datasets: Indian Pines, Pavia University, Salinas Valley, and Houston 2013. Results are assessed with OA, AA, Kappa, Macro-F1, and Weighted-F1. In the classification maps, regions where the models differ significantly in their classification results are marked individually.
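For reference, the five metrics can all be derived from a single confusion matrix. The sketch below uses the standard definitions (OA as overall accuracy, AA as the mean per-class recall, Cohen's kappa, and unweighted and support-weighted F1 averages); the function name `classification_metrics` is hypothetical, and the paper's own evaluation code may differ in details such as the handling of empty classes.

```python
import numpy as np


def classification_metrics(conf):
    """conf[i, j] = number of samples of true class i predicted as class j."""
    conf = np.asarray(conf, dtype=float)
    total = conf.sum()
    support = conf.sum(axis=1)     # true samples per class
    predicted = conf.sum(axis=0)   # predictions per class
    tp = np.diag(conf)

    oa = tp.sum() / total
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    aa = recall.mean()

    # Cohen's kappa: agreement beyond what the class marginals give by chance.
    pe = (support * predicted).sum() / total**2
    kappa = (oa - pe) / (1.0 - pe)

    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "OA": oa,
        "AA": aa,
        "Kappa": kappa,
        "Macro-F1": f1.mean(),
        "Weighted-F1": (f1 * support).sum() / total,
    }
```

Macro-F1 weights every class equally and is therefore sensitive to minority classes, whereas Weighted-F1 is dominated by the large classes; this is why the two are read differently in the analysis that follows.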

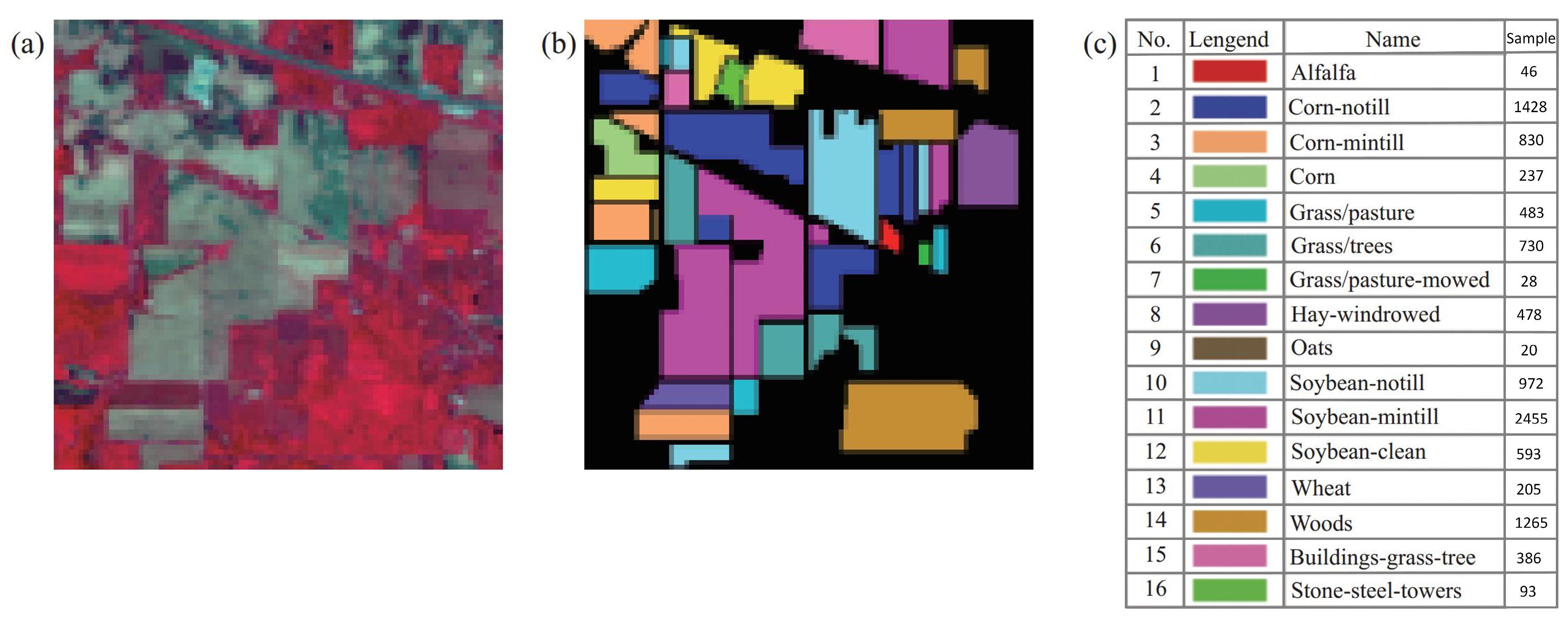

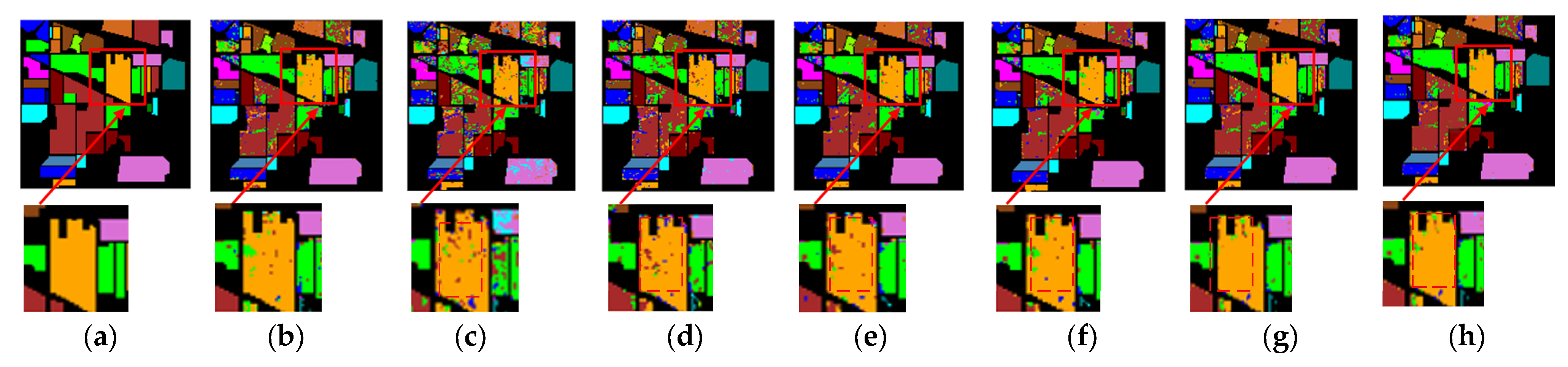

On the Indian Pines dataset, the proposed model outperforms the comparative methods on most metrics, demonstrating a distinct performance advantage. The Macro-F1 score reaches 0.8881, indicating that our method effectively addresses the prevalent class imbalance within the dataset and mitigates the resulting misclassification. The Weighted-F1 score is 0.8817. Although this is only slightly higher than that of the second-best model, DEMUNet, it still demonstrates strong classification capability on dominant categories, and overall classification performance remains good. Class 11 has historically been regarded as a quintessential example of a “highly confusing and difficult-to-discriminate” category. Its spectral curve exhibits only a subtle difference of 2–3 nm within the 720–760 nm red-edge region compared to the adjacent Soybean-notill and Corn-mintill classes. Furthermore, its spatial patches are fragmented, with edges intricately interspersed with roads and bare soil, making it prone to misclassification by conventional methods. Our proposed method achieves a classification accuracy of 0.9333 for this class, the highest among all compared algorithms. This result supports the efficacy of the introduced Transformer self-attention mechanism in capturing long-range spatial-spectral dependencies. The dual-attention mechanism, comprising SE and CBAM, further improves the model’s performance: in the channel dimension, it amplifies the weights of the red-edge features while suppressing noise from bare soil; in the spatial dimension, it generates a high-response mask outlining the plant contours. The specific effects are illustrated in Figure 10 and detailed in Table 3.

On the Pavia University dataset, the proposed method achieves a Weighted-F1 score significantly higher than those of all comparative algorithms. This demonstrates that it maintains robust performance on dominant classes despite extremely skewed category distributions and large disparities in sample size. It also leverages the synergy of multi-scale 3D convolution and dual attention (SE-CBAM) to turn the abundant samples of dominant classes into more discriminative global decision boundaries, thereby avoiding the polarization typical of traditional methods, which overfit large classes and underfit small ones. For category 2, which has complex terrain features, the classification accuracy reaches 0.9934, surpassing all comparative methods. This directly validates the effectiveness of the “multi-scale + channel-spatial dual attention” mechanism: it amplifies subtle spectral differences in edge pixels and suppresses redundant background in mixed pixels, enabling the model to output high-confidence joint feature representations even under severe spatial-spectral confusion in class 2, and thus to lead in both overall and local performance. For category 5, several classical methods achieve zero false detections, and the classification accuracy of our proposed method is close to 100%, indicating strong classification capability on easily identifiable categories. For categories 3 and 7, our method falls slightly behind, with relatively small gaps, likely because the spatial features of these categories are relatively simple, rendering the rich feature mechanisms somewhat redundant and slightly reducing classification accuracy. The specific effects are illustrated in Figure 11 and detailed in Table 4.

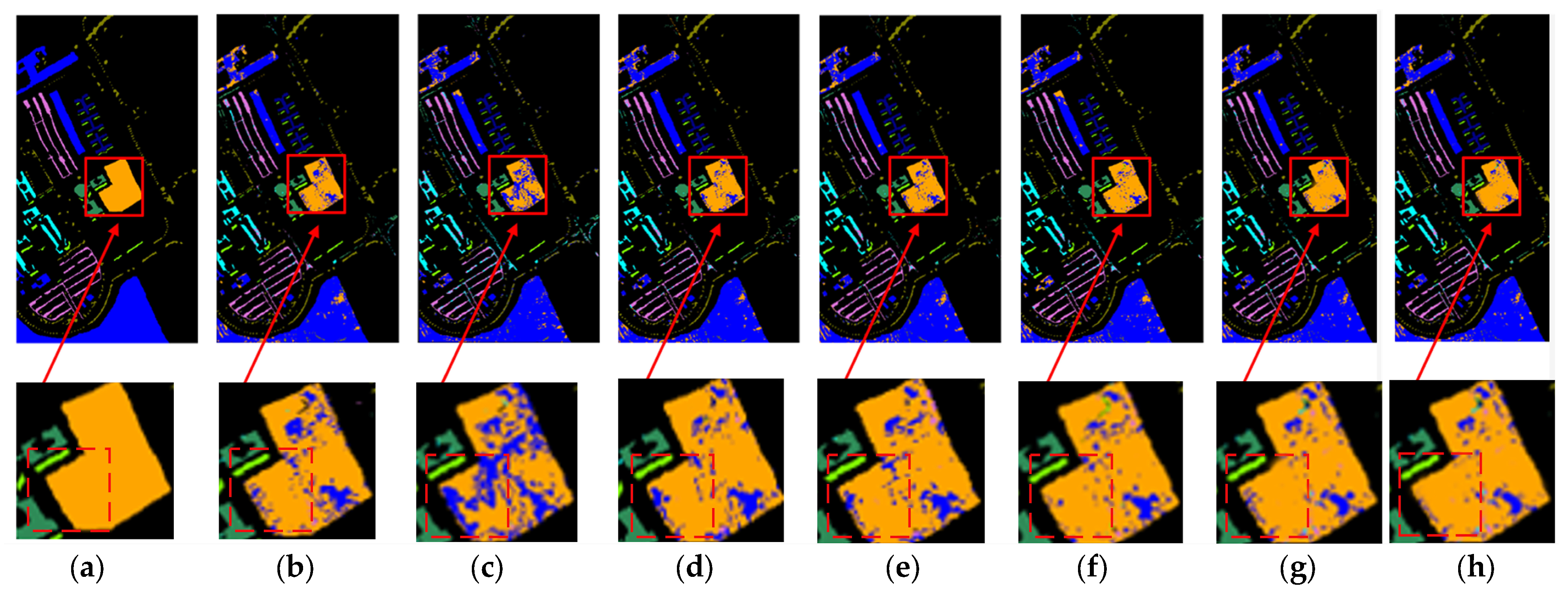

On the Salinas Valley dataset, the proposed model attains an Overall Accuracy (OA) of 0.9929, surpassing all comparative methods. Furthermore, the Average Accuracy (AA) reaches 0.9949, demonstrating exceptional class balance, while the Kappa coefficient of 0.9916 further corroborates the method’s stability and generalization capability. The classification accuracies of classes 1, 2, and 3 reach 0.9995, 0.9998, and 0.9836, respectively, higher than those of the other methods, demonstrating the effectiveness of multi-scale spatial-spectral fusion and the attention mechanism in distinguishing fine-grained classes. These three classes exhibit extreme spectral similarity and subtle spatial texture differences: all show comparable reflectance slopes at the 550 nm green peak and the 700 nm red edge, and their adjacent plots with interlaced strips often lead to misclassification in traditional methods due to “inter-class spectral confusion and intra-class spatial heterogeneity”. The proposed method separates these three easily confused classes with very high accuracy, outperforming the second-best algorithm on all of them. This success reveals a progressive amplification pathway, from differential feature extraction via 3D convolution, to spectral weighting by SE, to spatial recalibration by CBAM, in which subtle differences are progressively magnified across both channel and spatial dimensions. The performance on categories 8 and 15 is relatively unsatisfactory, prompting us to conduct a further error visualization analysis (as shown in Figure 12). The qualitative and quantitative analysis results of the model are presented in Figure 13 and Table 5, respectively.

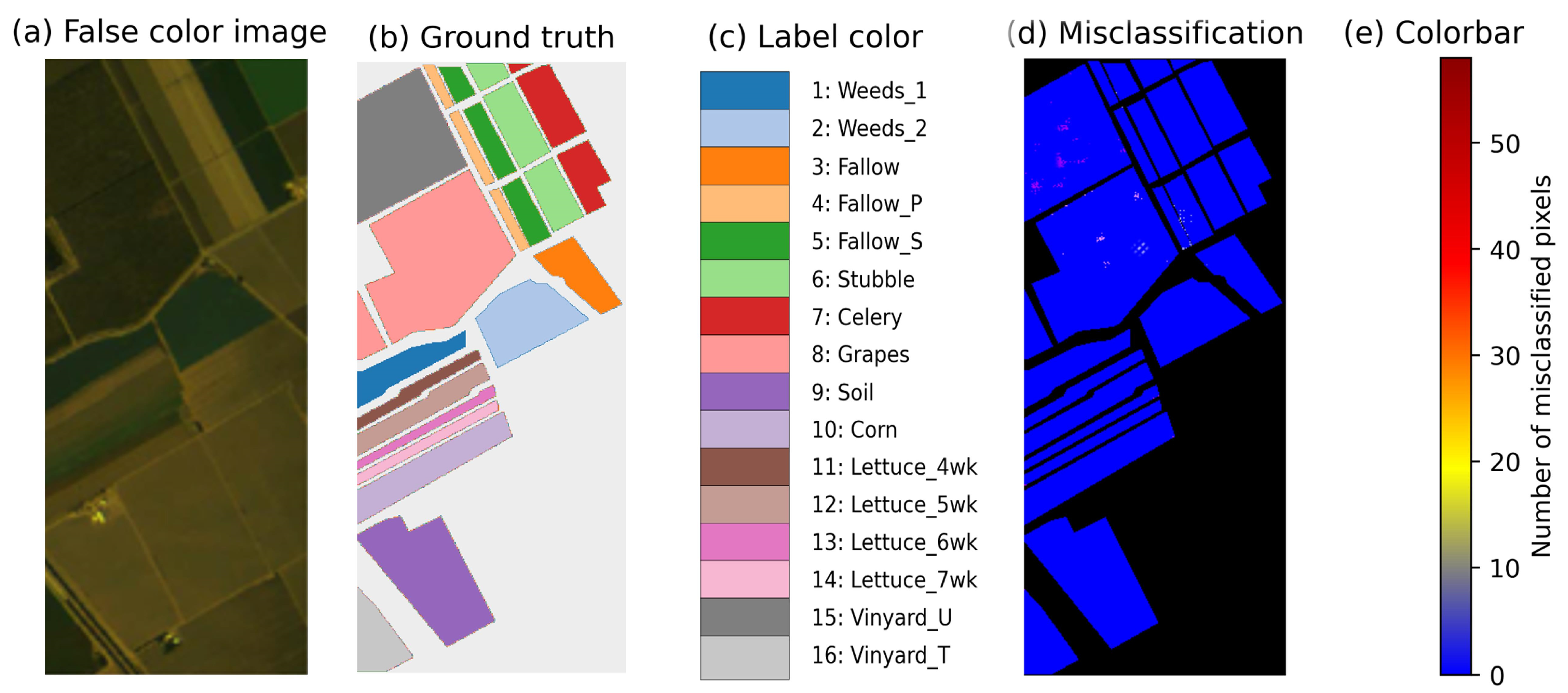

As shown in Figure 12d, misclassified pixels of Class 8 (Grapes) appear as a few local bright spots, mostly along field borders or in sparsely vegetated areas. Their reflectance is highly variable because of differences in vine density, exposed soil and shadowing, leading to substantial overlap with the spectral signatures of Fallow and Soil; edge pixels further exhibit mixed-pixel characteristics. For Class 15 (Vinyard), errors concentrate in untrimmed inter-rows where weeds are intermixed with vines, displaying a “spotty–diffuse” pattern; the weeds’ elevated green peak and shallow red trough make the canopy appear “excessively green”, causing the model to label it as grape foliage. Although SE, CBAM and Transformer modules are employed to enhance spectral-spatial modeling, the attention mechanisms in SE and CBAM are readily distracted by high-gradient edge regions, while the global attention of Transformer attenuates fine-grained spectral differences after patch embedding, thereby amplifying local noisy pixels and producing small-area misclassification. Overall, the model still has limited capability in handling mixed pixels.

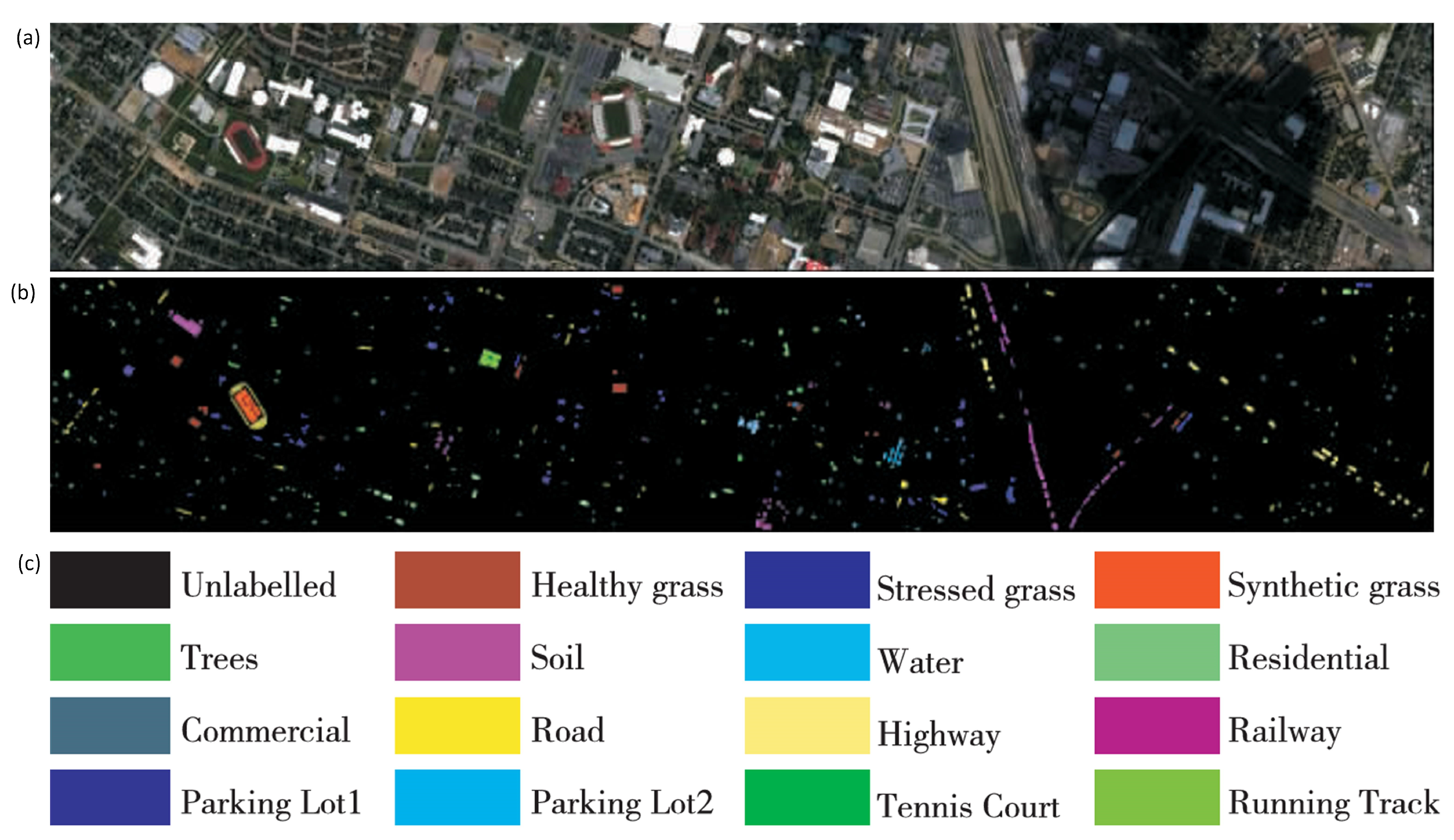

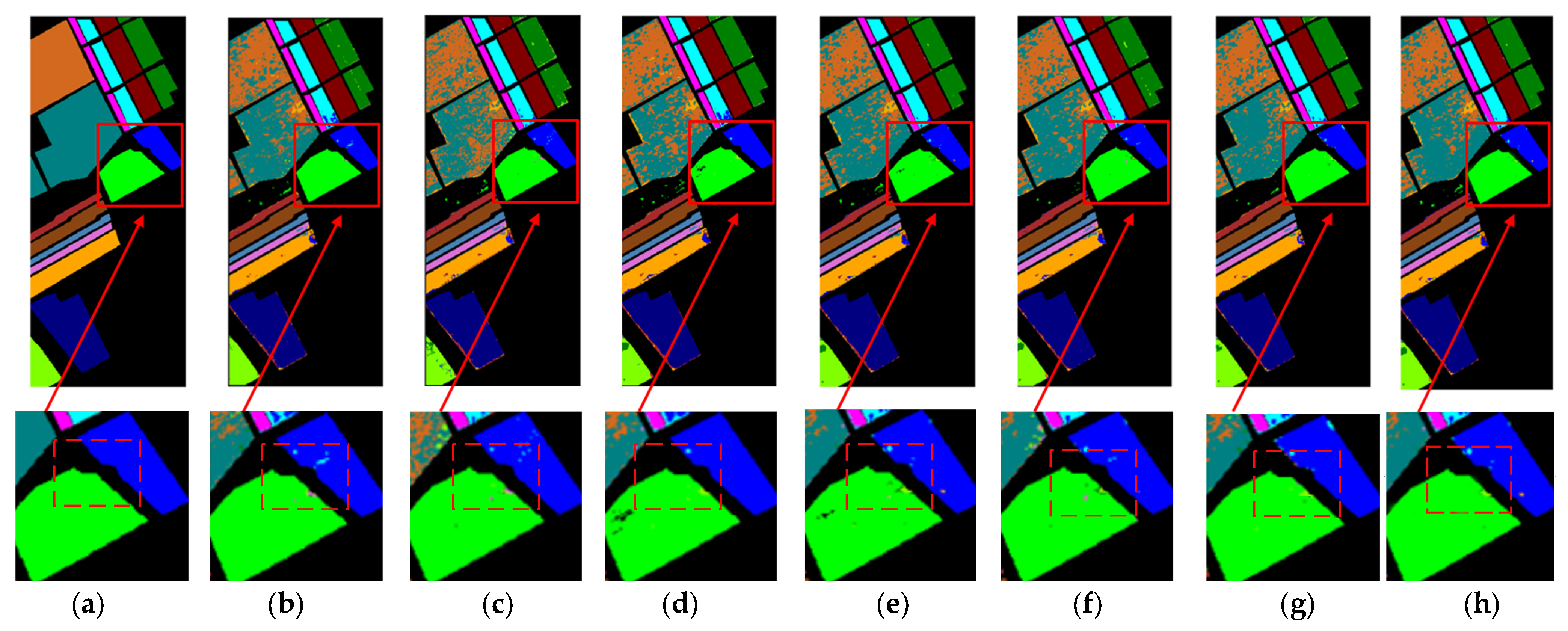

On the Houston 2013 dataset, the scene is complex, the classes are diverse, and the samples are highly imbalanced. Our model outperforms all comparative methods on multiple metrics, including AA, Kappa, and Weighted-F1. This suggests that the multi-scale 3D convolution first captures cross-scale textures of roofs, roads, and vegetation; the SE-CBAM dual attention mechanism suppresses noise along the spectral axis and sharpens edges; and the Transformer module further establishes long-range dependencies across the full image. Together, these components enable balanced recognition across all 15 classes while maintaining robust performance on dominant classes, improving both overall performance and statistical consistency. The specific effects are illustrated in Figure 14 and detailed in Table 6.

The experimental results on the four datasets demonstrate that the proposed classification method exhibits outstanding overall performance. Compared with SpectralFormer, SSTN, SSSAN, CVSSN, RSEN, and DEMUNet, the proposed method achieves improvements in OA, AA, and Kappa coefficient across all datasets. The multi-scale 3D convolutional operations, through spectral subdivision and spatial blocking mechanisms, not only exploit the physicochemical information embedded in high-dimensional spectral signals but also leverage spatial context to mitigate interference from mixed pixels and noise. By embedding the dual attention mechanism (SE and CBAM) into the 3D convolutional backbone, the model significantly enhances the adaptive suppression of noisy bands while improving recognition accuracy for edges and small targets. The spatial attention submodule of CBAM performs max-pooling and average-pooling along the spectral axis on the multi-scale 3D feature maps to generate a 2D spatial weight map. This weight map explicitly amplifies regions with significant edge gradients while suppressing homogeneous backgrounds. For small targets, the channel attention highlights the spectral bands most relevant to the target, while the spatial attention generates high-response weights within the target’s local window, suppressing surrounding background interference. Spectral signatures of edge pixels are often affected by mixing with adjacent endmembers, leading to frequent misclassifications in traditional CNNs. The dual attention mechanism, through a “channel + spatial” dual gating strategy, preserves the effective spectral features of edge pixels while suppressing mixed noise, thereby enhancing classification confidence for these pixels. The Transformer self-attention mechanism complements the “convolution + dual attention” framework and completes the hyperspectral classification network. In the spectral dimension, it establishes global dependencies across hundreds of bands, effectively distinguishing subtle spectral differences. In the spatial dimension, it overcomes the limitations of local receptive fields by aggregating semantic context from the entire image, thereby achieving global context modeling.
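The “channel + spatial” dual gating described above can be illustrated with a minimal NumPy sketch. It is a simplification under stated assumptions rather than the model's actual modules: the SE weights `w1` and `w2` are supplied by the caller instead of being learned, the learned convolution that CBAM applies to the pooled maps is replaced by a fixed weighted sum, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_channel_gate(features, w1, w2):
    """Squeeze-and-excitation weights over the channel axis of (C, H, W) features."""
    squeezed = features.mean(axis=(1, 2))      # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)    # bottleneck + ReLU -> (C // r,)
    return sigmoid(w2 @ hidden)                # one weight in (0, 1) per channel


def spatial_gate(features, w_avg=1.0, w_max=1.0):
    """CBAM-style 2D weight map from pooling along the channel (spectral) axis."""
    avg_map = features.mean(axis=0)            # (H, W)
    max_map = features.max(axis=0)             # (H, W)
    # Assumption: a weighted sum stands in for CBAM's learned convolution
    # over the stacked [avg, max] maps.
    return sigmoid(w_avg * avg_map + w_max * max_map)


def dual_gating(features, w1, w2):
    """Apply the channel gate first, then the spatial gate, as two multiplicative masks."""
    channel_w = se_channel_gate(features, w1, w2)
    refined = features * channel_w[:, None, None]
    return refined * spatial_gate(refined)[None, :, :]
```

Both gates are multiplicative masks with values between 0 and 1, so they can only attenuate responses; the relative emphasis on informative bands and locations comes from attenuating the rest more strongly.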

To further evaluate model behavior under extremely small-sample conditions, we conduct restricted-sample experiments using only 5% and 10% of the original training data. On the Salinas Valley (SA) and Pavia University (PU) datasets, the proposed approach still reaches overall accuracies of 0.9212 and 0.9234, respectively. Reducing the training set from 100% to 10% decreases OA by only 0.05 to 0.07 on each dataset, indicating robust performance even when training data are severely limited. At 5% of the training data, the model continues to deliver high accuracy, demonstrating its ability to extract discriminative spectral–spatial features in extreme few-shot scenarios. Meanwhile, performance improves steadily as more samples are added, gradually approaching saturation with the full training set; detailed results are given in Table 7.
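One way to construct such restricted training sets is class-stratified subsampling, sketched below. The text does not state how the 5% and 10% subsets were drawn, so the stratification, the guarantee of at least one sample per class, and the function name `subsample_per_class` are assumptions made for illustration.

```python
import numpy as np


def subsample_per_class(labels, fraction, seed=0):
    """Return sorted indices of a class-stratified subset of the training labels."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    keep = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        # Assumption: keep at least one sample so that no class disappears.
        n_keep = max(1, int(round(fraction * idx.size)))
        keep.append(rng.choice(idx, size=n_keep, replace=False))
    return np.sort(np.concatenate(keep))
```

Stratifying keeps the class proportions of the reduced set close to those of the full training set, so a drop in accuracy reflects the smaller sample size rather than a shifted class distribution.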

3.2. Ablation Study

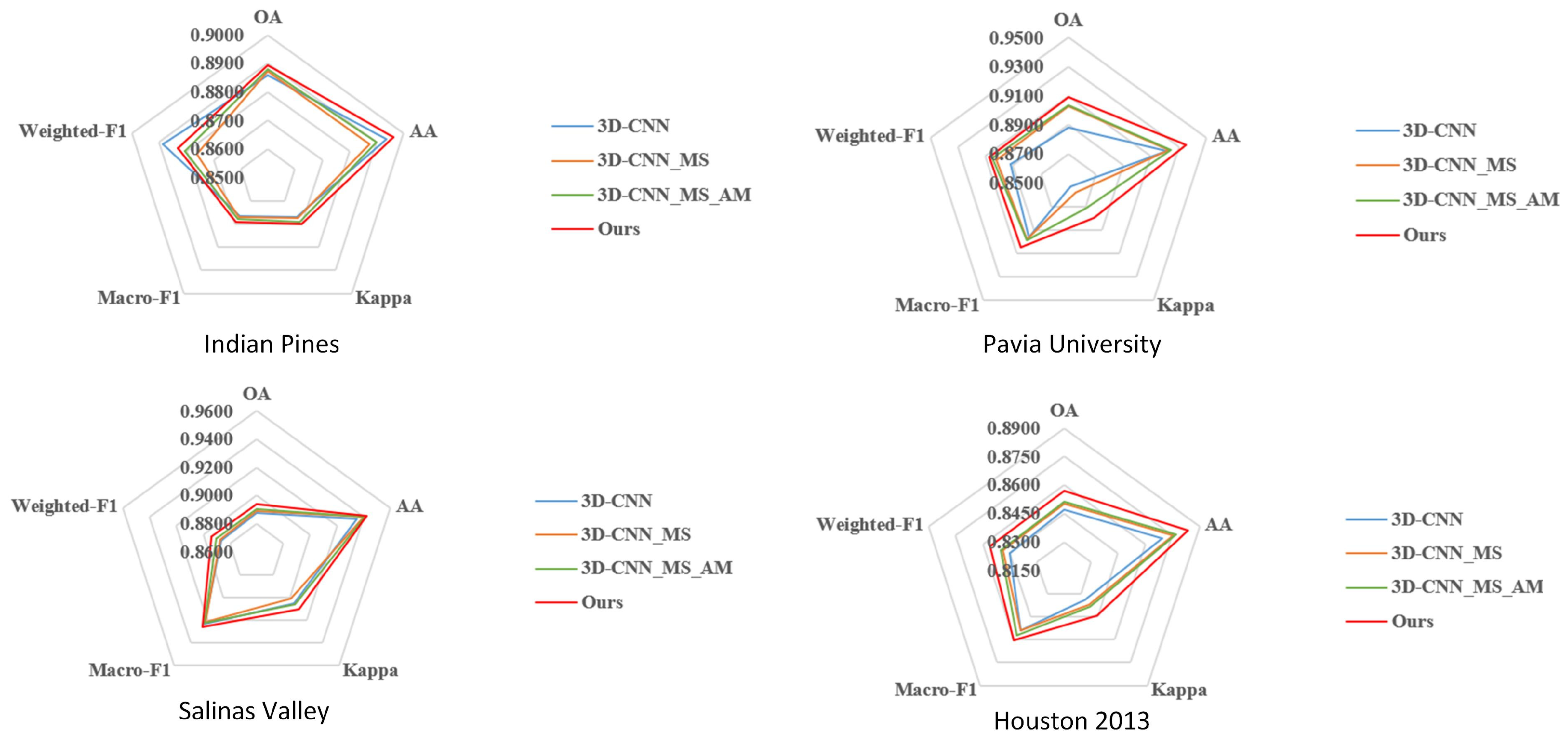

To evaluate the improvement strategies introduced in this classification method and to investigate their specific impacts on model performance, this section conducts ablation experiments. Starting from a baseline 3D-CNN model, the following components are progressively introduced: a multi-scale spectral-spatial feature extraction module (3D-CNN_MS), channel and spatial attention mechanisms (3D-CNN_MS_AM), and finally a local-global feature fusion module (Ours). The experimental results are analyzed using the aforementioned datasets and evaluation metrics, with the overall outcomes illustrated in Figure 15.

The experimental results demonstrate that after incorporating the multi-scale spectral-spatial feature extraction module (3D-CNN_MS), the model achieves improvements in overall classification accuracy, average classification accuracy, Kappa coefficient, and F1-Score across all datasets compared to the baseline 3D-CNN model. This demonstrates that this structure succeeds in more thoroughly extracting spatial and spectral features at different scales in hyperspectral remote sensing images, thereby enhancing feature representation capability and model performance. Furthermore, with the introduction of channel and spatial attention mechanisms (3D-CNN_MS_AM), the model’s performance improves again, particularly on the Pavia University and Salinas Valley datasets. This suggests that attention mechanisms play a crucial role in suppressing redundant information and highlighting key features, further strengthening the model’s discriminative ability. Finally, after incorporating the Transformer-based local-global feature fusion module (Ours), the model attains optimal performance on every metric, with especially significant improvements in OA and Kappa coefficient on structurally complex datasets such as Houston 2013 and Pavia University. This demonstrates that the introduced Transformer structure can model broader contextual dependencies, effectively compensating for the limitations of convolutional models in global modeling. It promotes richer local-global feature fusion, boosting both classification accuracy and stability.

To analyze the independent effects and synergistic gains of the attention mechanisms, additional ablation experiments evaluate the individual contributions of SE and CBAM. The newly added groups are 3D-CNN_MS with only SE (3D-CNN_MS_SE) and 3D-CNN_MS with only CBAM (3D-CNN_MS_CBAM), which are compared with the 3D-CNN_MS_AM group (the combination of SE and CBAM). The multi-indicator distribution in the radar chart shows that introducing SE or CBAM individually improves on the basic 3D-CNN_MS across tasks, but the improvement is limited. In contrast, the 3D-CNN_MS_AM group, which combines SE and CBAM, achieves a more significant gain on most indicators. The results indicate that SE and CBAM do not simply produce additive effects; instead, channel attention and spatial attention complement each other and yield a synergistic gain, which is one of the key factors in enhancing model performance. The experimental results are shown in Figure 16.

3.3. Performance Analysis

Table 8 presents the computational complexity of the proposed method compared with other models. Our model achieves a favorable balance between efficiency and representational capacity. Although the model has a relatively large number of parameters due to the Transformer structure, it maintains high inference efficiency, mainly because the computational structure is highly parallelized and the computation paths are well organized. As the overall architecture in Figure 1 shows, the input is first preprocessed with PCA and mapped into a compact low-dimensional space, which directly reduces the computational load of the convolutional and attention modules and avoids the extra overhead caused by high-dimensional inputs in deep layers. Next, small 3 × 3 × 3 convolutional kernels extract local spectral–spatial features, so that local representations are computed at very low cost and provide efficient features for subsequent global modeling. Channel attention (SE) and spatial attention (CBAM) are then introduced sequentially; their adaptive reweighting enhances important features and suppresses redundant information, improving representational efficiency and information density. The improved Transformer structure optimizes the patch processing pipeline, significantly reducing the number of attention tokens. The main operations of the network are convolutions and matrix multiplications, both of which are highly parallelizable on GPUs, allowing large numbers of parameters to be processed in batches with high throughput. This “parameter-concentrated yet computation-controlled” design enables the model to maintain very low inference latency while achieving high accuracy, balancing performance and efficiency.
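The PCA preprocessing step mentioned above can be sketched with plain NumPy. This is a generic PCA via singular value decomposition, not the authors' pipeline: the number of retained components is not given in this section, so `n_components` is left as a parameter, and the function name `pca_reduce` and the (H, W, bands) layout are assumptions.

```python
import numpy as np


def pca_reduce(cube, n_components):
    """Project an (H, W, bands) cube onto its leading principal components."""
    h, w, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(float)   # one spectrum per row
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    reduced = centered @ vt[:n_components].T
    return reduced.reshape(h, w, n_components)
```

Every later module then operates on `n_components` channels instead of the full band count, which is where the reduction in convolution and attention cost comes from.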

Some comparative models adopt complex mechanisms such as multi-scale pyramids, recursive stacking, and adaptive neighborhood construction. These architectures often involve numerous serial dependencies, multi-branch merging, and frequent operations on feature maps of varying shape, which makes true parallelism on GPUs difficult to achieve. In addition, some modules must dynamically generate attention weights or perform complex spatial resampling during inference. The scattered computation paths and complex scheduling ultimately lead to significantly slower inference. For example, the SSSAN model applies both spatial and spectral self-attention to high-dimensional hyperspectral patches, generating a large number of tokens, and the computational complexity of self-attention grows quadratically with the number of tokens. Moreover, SSSAN uses pixel-wise sliding-window prediction, so the two attention branches must be recomputed for each overlapping patch, resulting in substantial redundant computation. During inference, the model repeatedly constructs attention matrices and performs high-dimensional inner products and normalization operations, incurring considerable memory access overhead and computational delay.
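The quadratic growth mentioned above can be made concrete with a rough operation count. The sketch below counts only the multiply-accumulates of a single attention head (the score matrix and the weighted sum over values) and ignores projections and softmax. The token counts and the embedding dimension are hypothetical values, not figures from the compared models.

```python
def attention_macs(num_tokens, dim):
    """Approximate multiply-accumulates for one self-attention head."""
    scores = num_tokens * num_tokens * dim        # Q @ K^T
    weighted_sum = num_tokens * num_tokens * dim  # softmax(scores) @ V
    return scores + weighted_sum

# Hypothetical token counts, e.g. one token per pixel of a square patch.
for n in (25, 49, 81, 169):
    print(n, attention_macs(n, dim=64))
```

Doubling the number of tokens quadruples this count, which is why reducing the token count pays off more than reducing the embedding dimension by the same factor.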

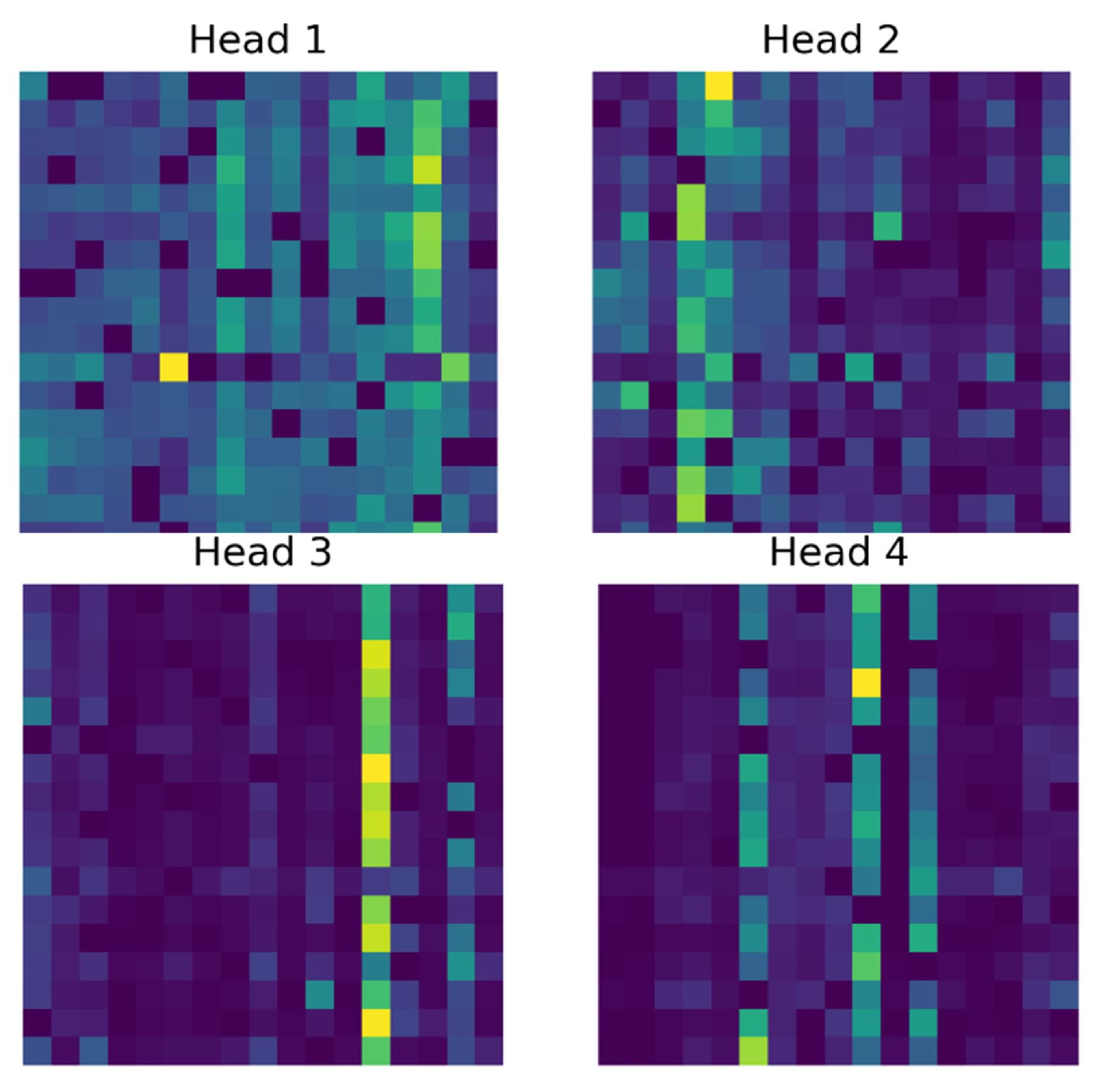

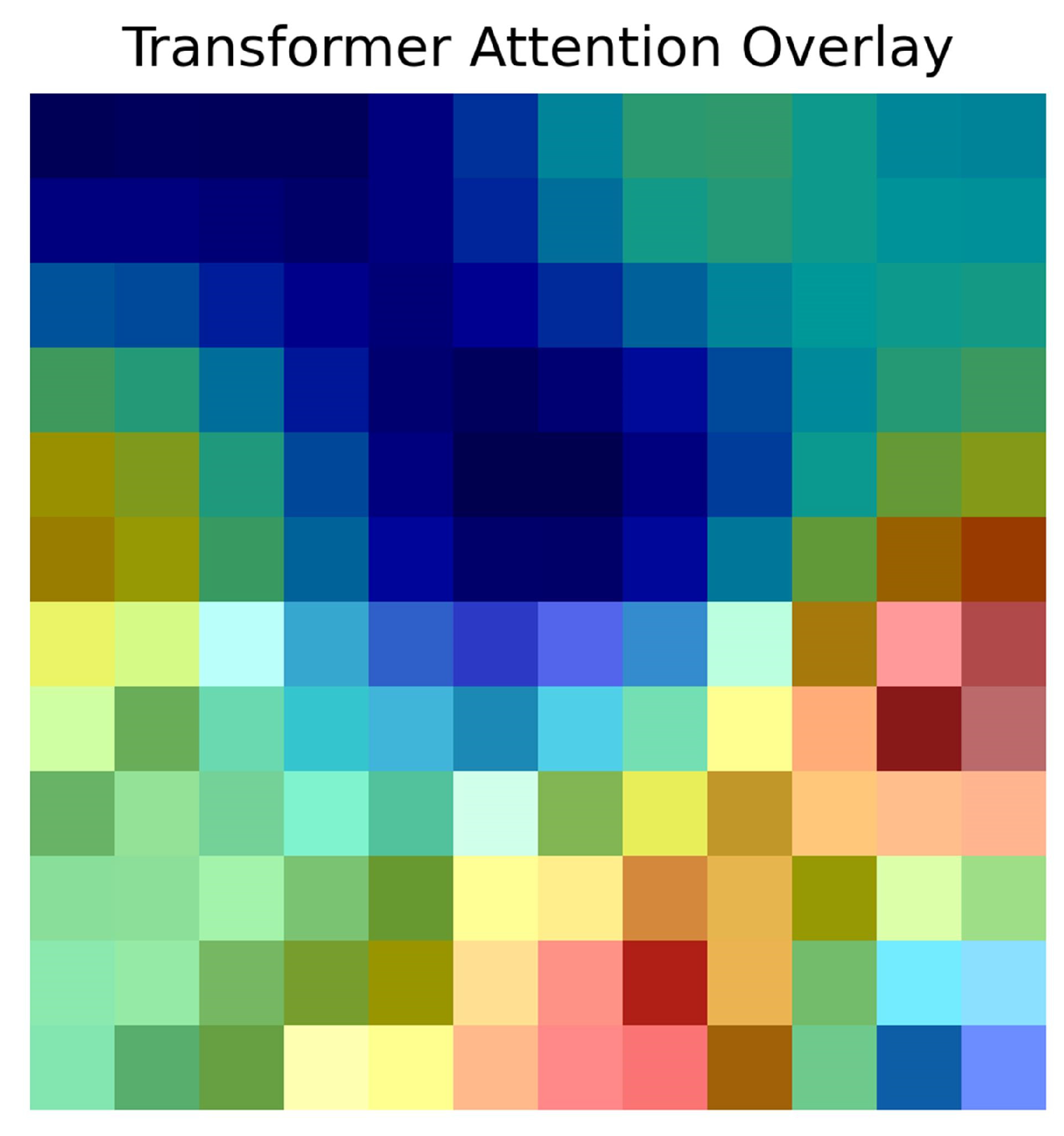

To further verify the interpretability of the proposed model during the feature extraction process and to evaluate the effectiveness of its attention mechanism, a visualization analysis of the multi-head attention weights in the Transformer model was conducted. This analysis allows an intuitive observation of the regions that the model focuses on in the spatial–spectral domain, revealing its capability to model features in complex areas such as land-cover boundaries and mixed pixels.

Figure 17 illustrates the attention weight distributions of different attention heads in the proposed Transformer model. It can be observed that the attention heads exhibit distinct focus patterns across spatial and spectral dimensions, indicating that the multi-head attention mechanism successfully captures diverse spatial–spectral dependencies. Some attention heads show concentrated responses in specific spectral channels, while others mainly focus on spatial boundary regions of land-cover classes.

Figure 18 presents the integrated attention overlay map obtained by averaging the multi-head attention matrices. The integrated map shows that the model assigns higher attention weights to the boundaries of land-cover classes and to mixed pixels, areas that are typically difficult to distinguish with conventional methods. This finding indicates that the Transformer effectively models spatial contextual relationships, thereby enhancing the discriminative capability of the learned features.
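One way such an integrated map could be computed is sketched below. It assumes one token per pixel of a square patch and reads out the attention row of the center pixel; the source states neither the tokenization nor which query is visualized, so both choices, along with the function name, are assumptions.

```python
import numpy as np

def integrated_attention_map(attn, patch_size, query_index=None):
    """Average attention over heads and reshape one query row to the patch.

    attn: (heads, tokens, tokens), each row softmax-normalised,
          with tokens == patch_size ** 2 (assumed tokenization).
    """
    heads, n, m = attn.shape
    if n != m or n != patch_size * patch_size:
        raise ValueError("expected one token per pixel of a square patch")
    mean_attn = attn.mean(axis=0)            # (tokens, tokens)
    if query_index is None:
        query_index = n // 2                 # center pixel for odd patch sizes
    return mean_attn[query_index].reshape(patch_size, patch_size)

# Random stand-in for the attention weights of a 4-head layer on a 5 x 5 patch.
rng = np.random.default_rng(0)
logits = rng.standard_normal((4, 25, 25))
attn = np.exp(logits - logits.max(axis=-1, keepdims=True))
attn /= attn.sum(axis=-1, keepdims=True)

overlay = integrated_attention_map(attn, patch_size=5)
print(overlay.shape)
```

Because each head's row sums to one, the averaged row does as well, so the resulting map can be overlaid on the patch as a normalized weight distribution.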

4. Discussion

In this paper, we propose a novel remote sensing image classification model that combines multi-scale feature fusion, a multi-level attention mechanism (SE and CBAM), and a Transformer self-attention module to integrate local and global features effectively. Our model achieves leading classification accuracy on four benchmark datasets: Indian Pines, Pavia University, Salinas Valley, and Houston 2013. What distinguishes our approach from the baseline models is the multi-scale feature fusion and the multi-level attention mechanism. Multi-scale convolution removes the constraint of a fixed, single-scale receptive field. The channel attention (3D-SE) acts as a form of band selection, suppressing noise and water absorption bands. Algorithms such as CVSSN use spatial and spectral information and fuse them efficiently, but they are weaker at identifying class boundary regions. Our CBAM-style spatial attention focuses on positional information, enhancing edges and small targets. Cascading these two attentions forms a multi-level recalibration mechanism that enables the network to discriminate with high confidence between pixels that are “spectrally similar but spatially different” or “spatially similar but spectrally distinct”. The global self-attention establishes long-range dependencies between “any pixel and any band”, addressing the limited receptive field of traditional convolutions. At the same time, positional encoding preserves spatial location information, allowing the network to perceive relationships between pixels that are spectrally similar but spatially distant, or spatially adjacent but spectrally different. Local CNN features (with strong inductive bias) and global Transformer features (with high expressive power) are fused layer by layer through gating or cross-attention mechanisms. This preserves the translation equivariance of convolutions while introducing dynamic weights, so that the network maintains stable performance and significantly reduces edge misclassification.
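As an illustration of the gating variant of this fusion, the sketch below mixes local and global features with an element-wise sigmoid gate computed from their concatenation. The exact form of the gate is not given in the text, so the formulation, the function name, and the parameter shapes are assumptions; the cross-attention variant is not shown.

```python
import numpy as np

def gated_fusion(local_feat, global_feat, w_gate, b_gate):
    """Element-wise gated mix of local (CNN) and global (Transformer) features.

    local_feat, global_feat: (N, D)
    w_gate: (2 * D, D), b_gate: (D,)  (hypothetical learned parameters)
    """
    joint = np.concatenate([local_feat, global_feat], axis=-1)   # (N, 2D)
    gate = 1.0 / (1.0 + np.exp(-(joint @ w_gate + b_gate)))      # values in (0, 1)
    return gate * local_feat + (1.0 - gate) * global_feat

# Random stand-ins for features and gate parameters.
rng = np.random.default_rng(0)
local_feat = rng.standard_normal((6, 4))
global_feat = rng.standard_normal((6, 4))
w_gate = rng.standard_normal((8, 4)) * 0.1
b_gate = np.zeros(4)

fused = gated_fusion(local_feat, global_feat, w_gate, b_gate)
print(fused.shape)
```

The gate depends on the input, which is one way to obtain the dynamic weights mentioned above: each fused value is a convex combination of the corresponding local and global values.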

Despite these advantages, our method also has limitations. The model performs suboptimally on certain classes within the four datasets. This may stem from the joint contribution of the global self-attention and the CNN’s dual attention, which amplifies a “tail dilution” effect: in the global softmax of the Transformer’s long-range attention, the feature responses of rare classes are suppressed because they are dominated by neighboring majority-class pixels, causing a sharp drop in recall for the smallest classes. Overall, the model tends to be “farmland-friendly and city-sensitive” in terms of recall. It achieves near-zero missed detections in scenarios with low-to-medium-resolution imagery, continuous land parcels, and sufficient training samples, such as the Indian Pines, Salinas Valley, and Houston 2013 datasets. The spatial resolutions of these three datasets range from 2 to 20 m, and their farmland or suburban parcels are distributed in large, continuous areas with high spectral homogeneity and few mixed edge pixels. This indicates that our spectral-spatial feature fusion strategy is highly effective for large-scale homogeneous regions. However, recall is less satisfactory in the high-resolution urban scene of Pavia University. The very high resolution exposes the sharp boundaries of roofs, roads, and trees, resulting in a large number of mixed edge pixels with complex spectra, which the model distinguishes slightly less well.
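A toy calculation shows one possible reading of the “tail dilution” effect. It computes the softmax weight that a single rare-class token receives when it competes with a growing number of majority-class tokens; the scores and token counts are hypothetical and are not measurements from the model.

```python
import numpy as np

def rare_token_weight(num_majority, rare_score=1.0, majority_score=1.0):
    """Softmax weight of one rare-class token among majority-class tokens."""
    scores = np.concatenate([[rare_score], np.full(num_majority, majority_score)])
    weights = np.exp(scores - scores.max())   # numerically stable softmax
    weights /= weights.sum()
    return weights[0]

# With equal scores the rare token's weight is 1 / (num_majority + 1).
for n in (9, 99, 999):
    print(n, rare_token_weight(n))
```

Even when the rare token scores as high as every majority token, its share of the attention shrinks in proportion to the number of competitors, so its contribution to the aggregated feature becomes small in patches dominated by another class.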