Construction and Visualization of Levels of Detail for High-Resolution LiDAR-Derived Digital Outcrop Models

Highlights

- Proposed an automated and adaptive workflow for constructing multi-scale LOD models from high-resolution LiDAR-derived digital outcrops, integrating model segmentation, pseudo-quadtree tiling, and feature-preserving simplification.

- Enhanced the traditional QEM simplification algorithm with a vertex sharpness constraint, together with boundary-freezing and fallback strategies, to maintain geometric continuity and preserve key geological structures.

- Developed a dynamic multi-scale visualization framework using an LOD index and the OSG PagedLOD mechanism, achieving real-time, view-dependent rendering of massive outcrop models on standard hardware.

- Provided a practical and efficient solution for managing and visualizing large-scale LiDAR-derived outcrop models, effectively addressing rendering bottlenecks in geological applications.

- Enabled smooth, interactive, and scalable visualization, supporting detailed multi-scale geological interpretation and paving the way for future web-based collaborative analysis platforms.

Abstract

1. Introduction

- A specialized LOD workflow that uses texture-based segmentation and a pseudo-quadtree to generate an adaptive tile pyramid, effectively handling the elongated morphology of outcrops.

- A feature-preserving simplification algorithm that introduces a vertex sharpness constraint and a boundary freezing strategy to retain critical geological features during aggressive mesh reduction.

- A dynamic visualization framework that leverages an LOD index and the OSG engine to achieve real-time, view-dependent loading and rendering, enabling interactive exploration of massive models on standard hardware.

2. Literature Review

2.1. Digital Outcrops

2.2. Level of Detail

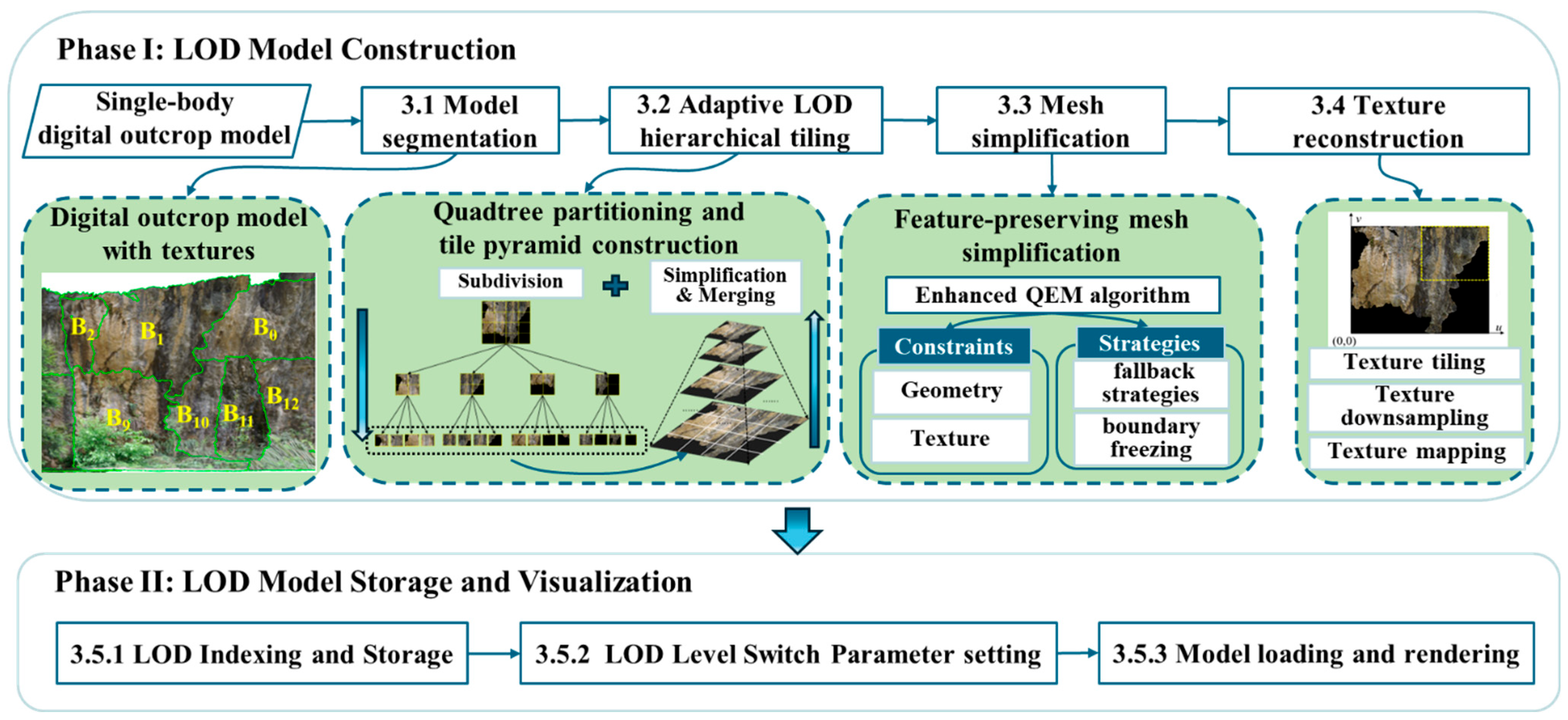

3. Methods

- Single-body Model Segmentation (Section 3.1): Import the high-resolution, large-scale LiDAR-derived single-body digital outcrop model, then partition it into multiple sub-models according to the coverage area of each texture image.

- Adaptive LOD Hierarchical Tiling (Section 3.2): For each sub-model, construct a multi-level LOD tile structure using a pseudo-quadtree partitioning approach, forming a tile pyramid through iterative subdivision, simplification, and merging processes.

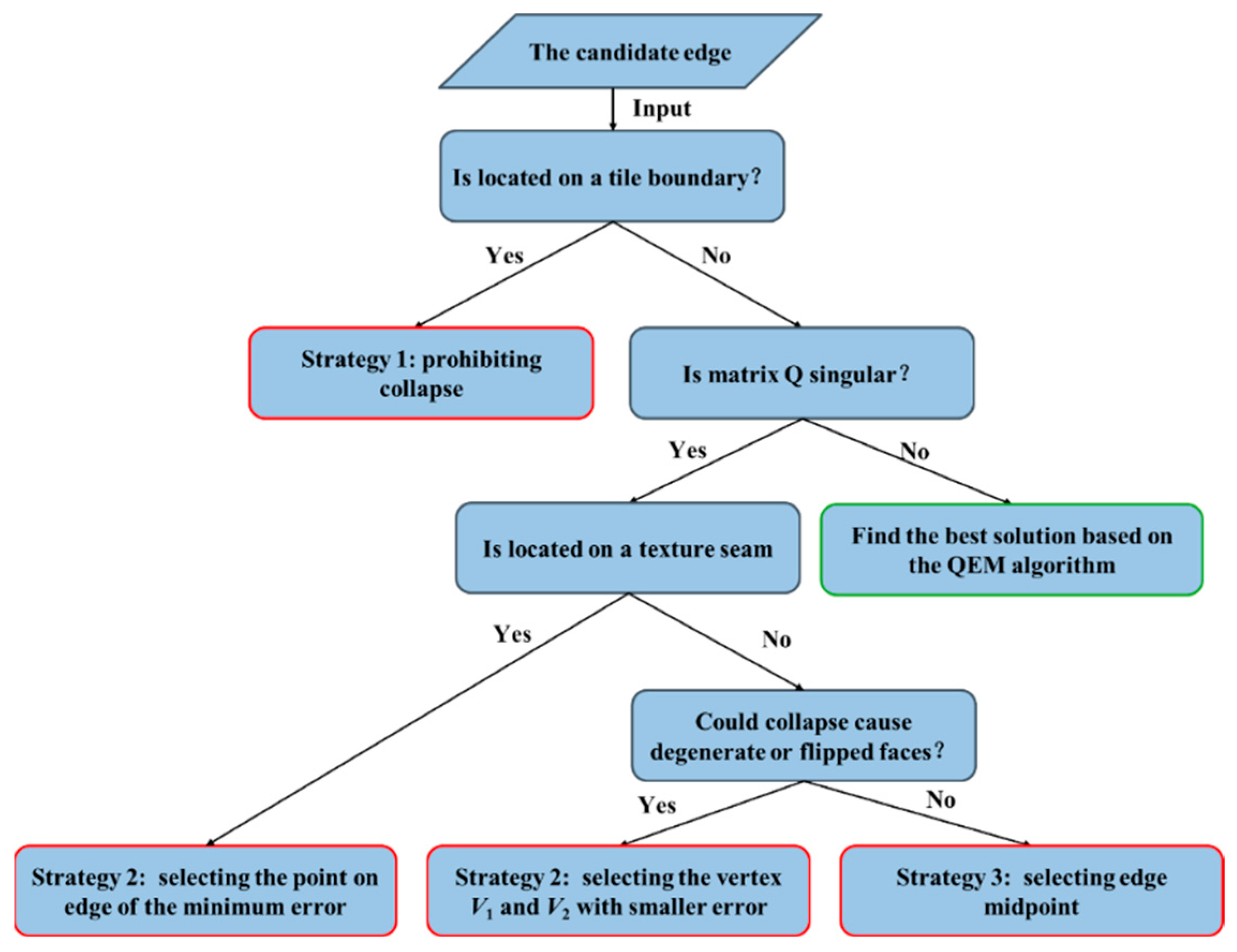

- Mesh Simplification (Section 3.3): Apply a feature-preserving QEM algorithm to simplify each tile across all LOD levels, incorporating constraints for geometry and texture preservation along with strategies such as fallback tactics and boundary freezing.

- Texture Reconstruction (Section 3.4): Reconstruct texture images and their corresponding coordinates for tiles at each LOD level, involving texture tiling, downsampling, and remapping operations.

- LOD Indexing and Storage (Section 3.5.1): Establish an LOD index file for the entire model, and store the geometric data, texture information, and other parameters of each constructed tile in OSGB format.

- Display Parameter Setting (Section 3.5.2): Configure each tile's display (LOD level-switch) parameters from its texture image size to optimize visualization quality.

- Model Loading and Rendering (Section 3.5.3): Implement multi-scale loading and rendering of the LOD digital outcrop model using the OSG engine, enabling efficient visualization across different detail levels.

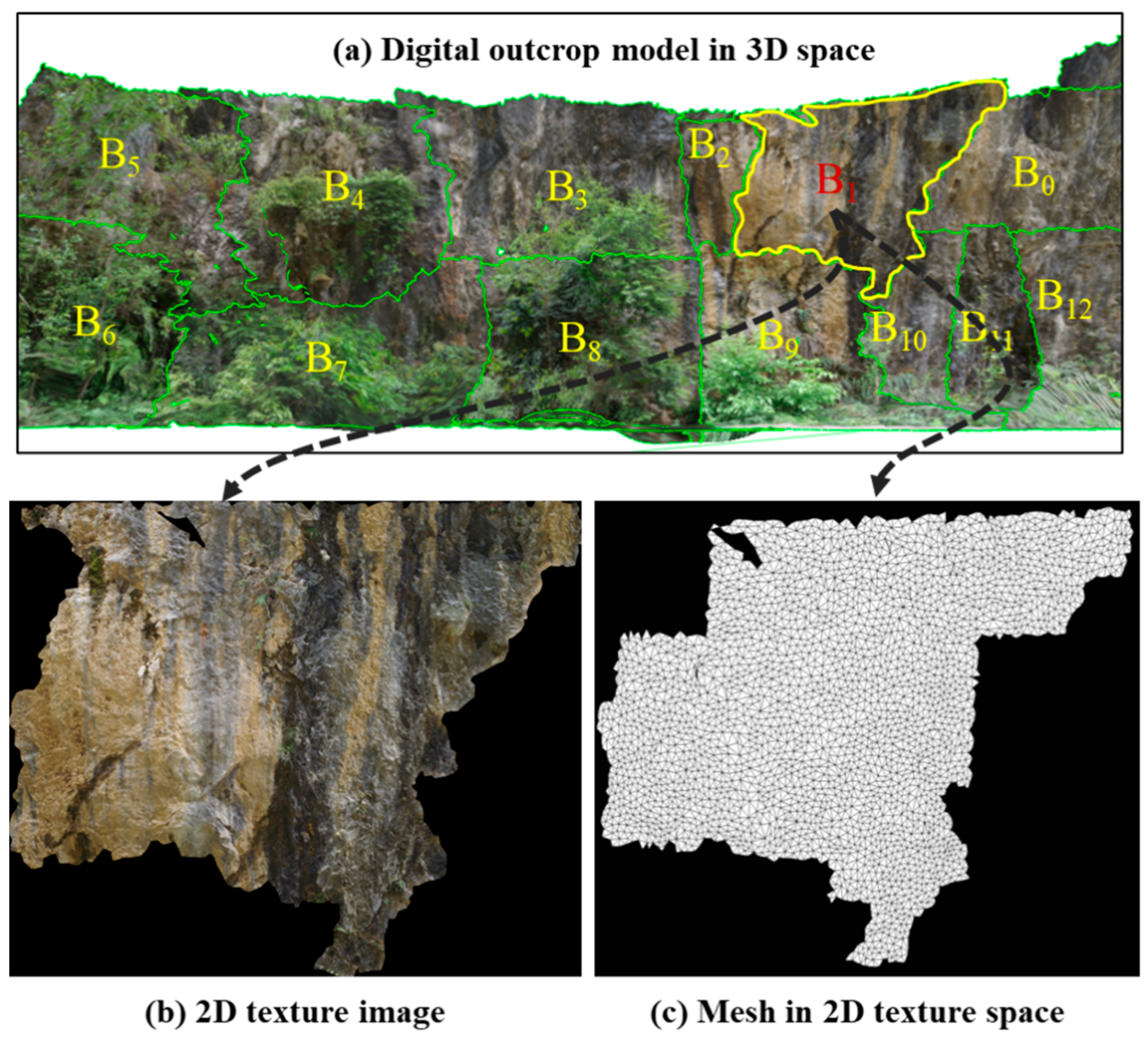

3.1. Single-Body Model Segmentation

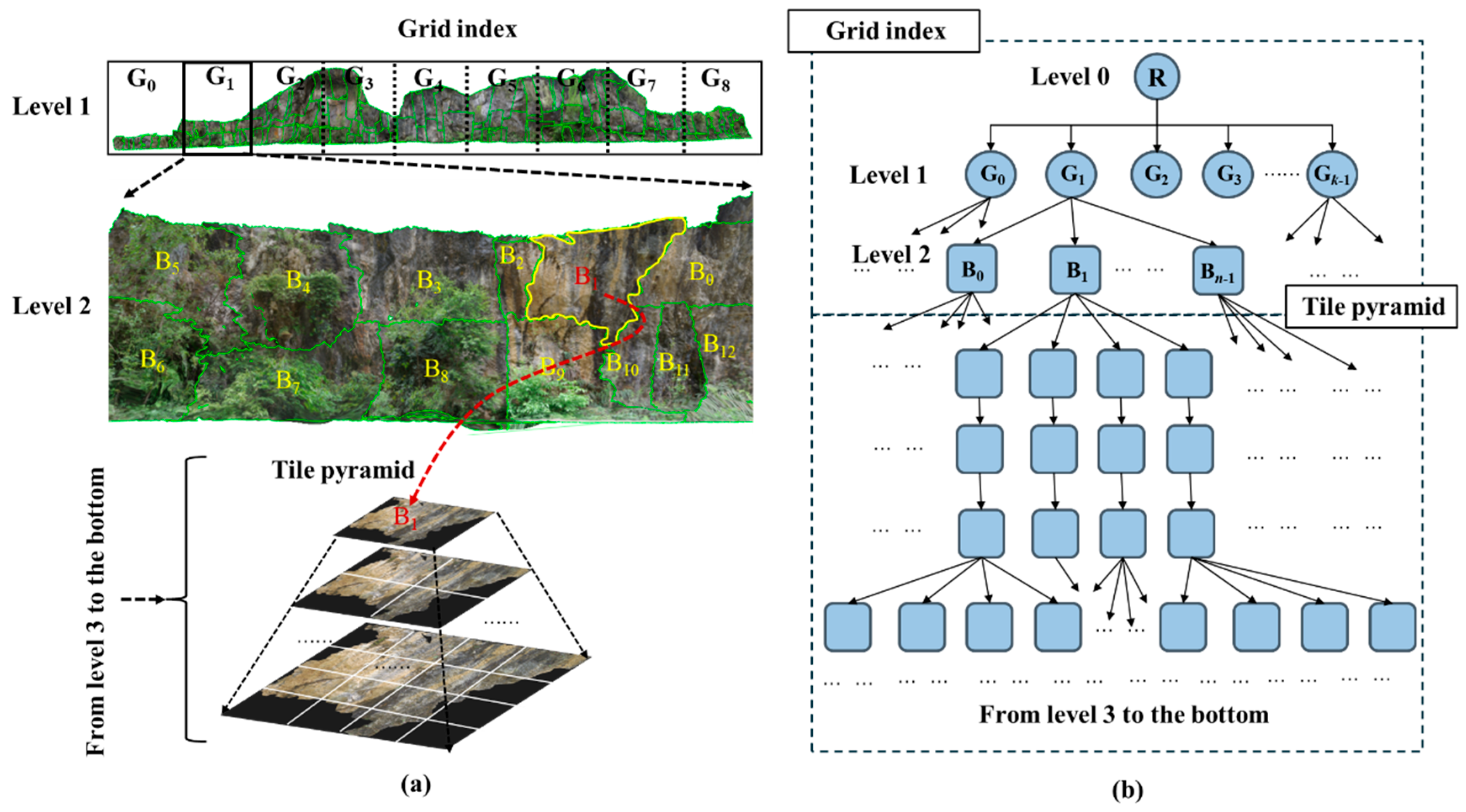

3.2. Adaptive LOD Hierarchical Tiling

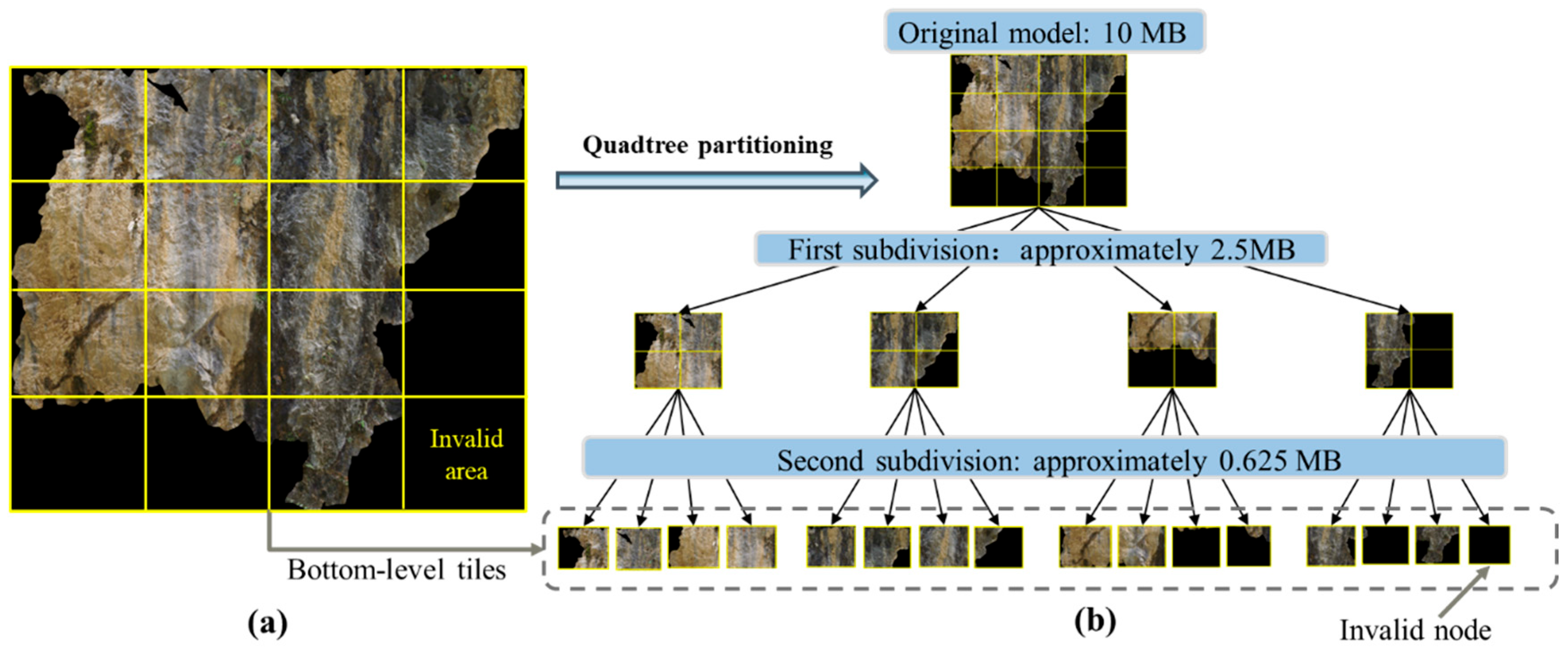

3.2.1. Bottom-Level Tile Generation Based on Quadtree Partitioning

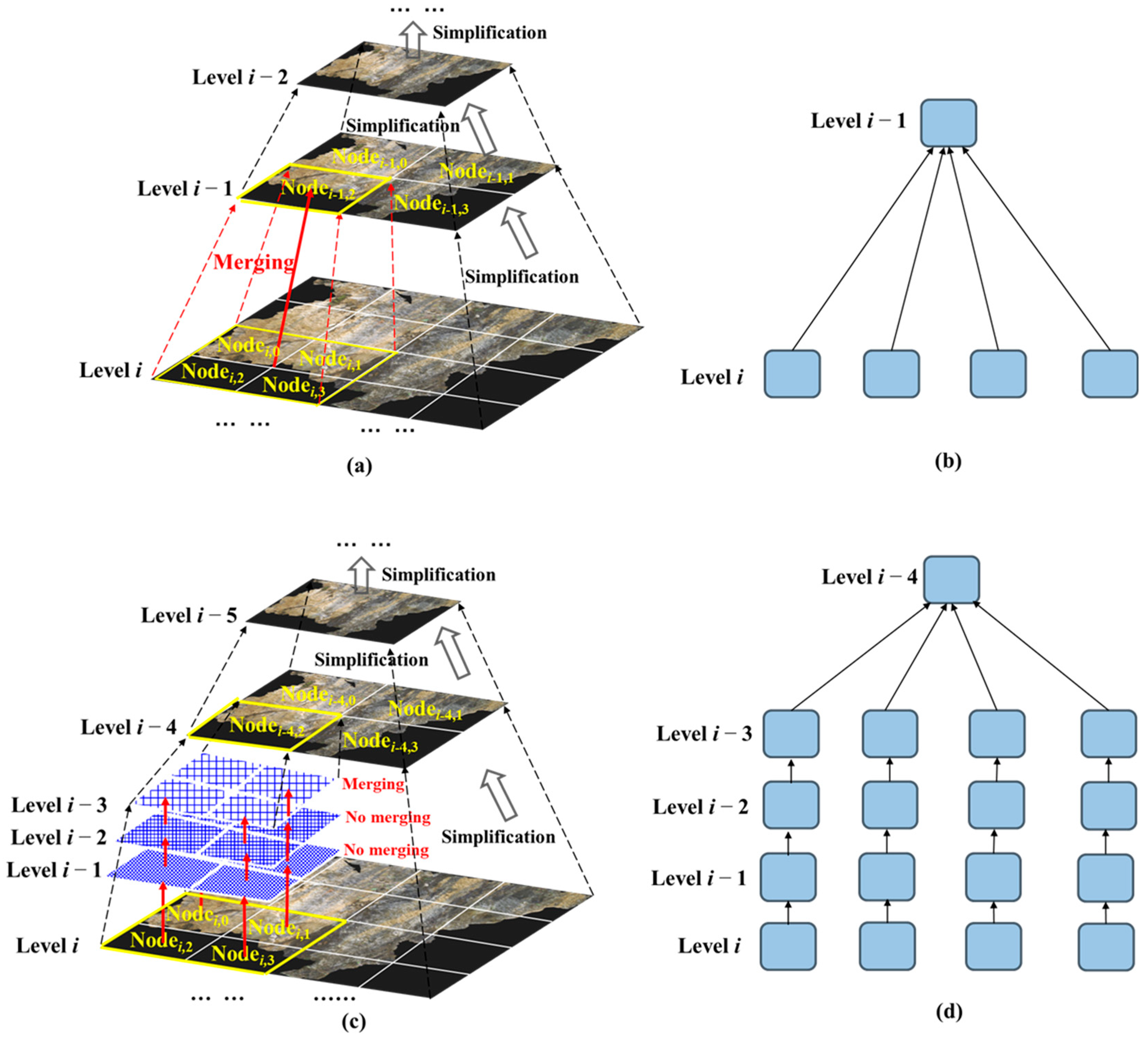
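
The tiling step summarized in the Methods overview (pseudo-quadtree subdivision of each sub-model) can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions: the partition is done in the sub-model's texture (UV) domain, each triangle is routed by its UV centroid, a tile is split while it holds more than a hypothetical `max_faces` triangles, and an elongated tile (aspect ratio above a hypothetical `max_aspect`) is halved only along its long axis, which is one way a "pseudo" quadtree could accommodate the elongated shape of outcrop textures.

```python
from dataclasses import dataclass, field

@dataclass
class Tile:
    u0: float
    v0: float
    u1: float
    v1: float
    faces: list = field(default_factory=list)     # indices of triangles in this tile
    children: list = field(default_factory=list)

def build(tile, centroids, max_faces=1000, max_aspect=2.0, depth=0, max_depth=12):
    """Recursively subdivide `tile` in UV space until every leaf is small enough."""
    if len(tile.faces) <= max_faces or depth >= max_depth:
        return tile                                   # bottom-level (leaf) tile
    w, h = tile.u1 - tile.u0, tile.v1 - tile.v0
    um, vm = (tile.u0 + tile.u1) / 2, (tile.v0 + tile.v1) / 2
    split_u = h <= max_aspect * w    # no u split for very tall tiles
    split_v = w <= max_aspect * h    # no v split for very wide tiles
    us = [(tile.u0, um), (um, tile.u1)] if split_u else [(tile.u0, tile.u1)]
    vs = [(tile.v0, vm), (vm, tile.v1)] if split_v else [(tile.v0, tile.v1)]
    kids = [Tile(a, c, b, d) for (c, d) in vs for (a, b) in us]
    for f in tile.faces:                              # route each face by its UV centroid
        u, v = centroids[f]
        iu = 1 if split_u and u >= um else 0
        iv = 1 if split_v and v >= vm else 0
        kids[iv * len(us) + iu].faces.append(f)
    tile.children = [build(k, centroids, max_faces, max_aspect, depth + 1, max_depth)
                     for k in kids if k.faces]        # empty children are dropped
    return tile

def leaves(tile):
    """Bottom-level tiles of the tree, in traversal order."""
    if not tile.children:
        return [tile]
    return [leaf for child in tile.children for leaf in leaves(child)]
```

For example, a 4 × 1 UV strip holding 400 evenly spread triangle centroids, with `max_faces=50`, is first halved along u and then split four ways, giving eight bottom-level tiles of 50 triangles each.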

3.2.2. Simplification and Merging Strategies for Bottom-Up Tile Generation
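
As outlined in the Methods overview, coarser levels of the tile pyramid are produced bottom-up by merging and simplifying child tiles. The sketch below covers only the triangle-budget bookkeeping of such a pass and is hypothetical: it assumes each parent merges the already simplified meshes of its children and keeps a fixed fraction `keep` of their triangles (0.25, so each level of a full quadtree holds roughly as many triangles as one leaf), with a floor `min_faces`. The actual merge and the QEM call are omitted.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    faces: int = 0                          # leaf: original triangle count
    children: list = field(default_factory=list)
    budget: int = 0                         # triangle count after simplification

def assign_budgets(node, keep=0.25, min_faces=64):
    """Post-order pass: a parent merges its children and keeps `keep` of their triangles."""
    if not node.children:
        node.budget = node.faces            # the bottom level keeps full resolution
        return node.budget
    merged = sum(assign_budgets(c, keep, min_faces) for c in node.children)
    # never ask for more triangles than the merged children actually have
    node.budget = min(merged, max(min_faces, math.ceil(keep * merged)))
    return node.budget
```

With four 1,000-triangle leaves under a parent, the parent is simplified to 1,000 triangles; two such parents merged into a root give a 500-triangle root.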

3.3. Feature-Preserving Mesh Simplification

3.3.1. QEM Simplification Algorithm
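
The simplification in Section 3.3 builds on the quadric error metric (QEM) of Garland and Heckbert. The sketch below shows the standard ingredients: each triangle's plane p = [a, b, c, d] (unit normal, ax + by + cz + d = 0) contributes the quadric K = p p^T to its three vertices, and collapsing edge (i, j) to position v costs v^T (Q_i + Q_j) v in homogeneous coordinates. For brevity it chooses the collapse position among the two endpoints and the midpoint, which is Garland and Heckbert's last-resort choice, instead of solving the 4 × 4 system for the optimal position; the constraints added in this paper are not included.

```python
import math

def plane_quadric(p0, p1, p2):
    """Fundamental error quadric K = p p^T of a triangle's supporting plane."""
    ux, uy, uz = (p1[k] - p0[k] for k in range(3))
    vx, vy, vz = (p2[k] - p0[k] for k in range(3))
    n = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    length = math.sqrt(sum(c * c for c in n))
    if length == 0:
        return [[0.0] * 4 for _ in range(4)]       # degenerate triangle: no constraint
    a, b, c = (x / length for x in n)              # unit normal
    d = -(a * p0[0] + b * p0[1] + c * p0[2])       # plane: ax + by + cz + d = 0
    p = [a, b, c, d]
    return [[p[i] * p[j] for j in range(4)] for i in range(4)]

def add_q(q1, q2):
    return [[q1[i][j] + q2[i][j] for j in range(4)] for i in range(4)]

def quadric_error(q, v):
    """v^T Q v with v in homogeneous coordinates (x, y, z, 1)."""
    h = [v[0], v[1], v[2], 1.0]
    return sum(h[i] * q[i][j] * h[j] for i in range(4) for j in range(4))

def vertex_quadrics(verts, tris):
    """Sum the plane quadrics of all triangles incident to each vertex."""
    qs = [[[0.0] * 4 for _ in range(4)] for _ in verts]
    for t in tris:
        k = plane_quadric(*(verts[i] for i in t))
        for i in t:
            qs[i] = add_q(qs[i], k)
    return qs

def collapse_cost(qs, verts, i, j):
    """Cheapest of {v_i, v_j, midpoint} under the combined quadric Q_i + Q_j."""
    q = add_q(qs[i], qs[j])
    mid = [(verts[i][k] + verts[j][k]) / 2 for k in range(3)]
    best = min([verts[i], verts[j], mid], key=lambda v: quadric_error(q, v))
    return quadric_error(q, best), best
```

In a full simplifier, these costs would populate a priority queue and the cheapest collapse would be applied repeatedly, updating the quadrics of the merged vertex, until a tile reaches its triangle budget.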

3.3.2. Vertex Sharpness Constraint

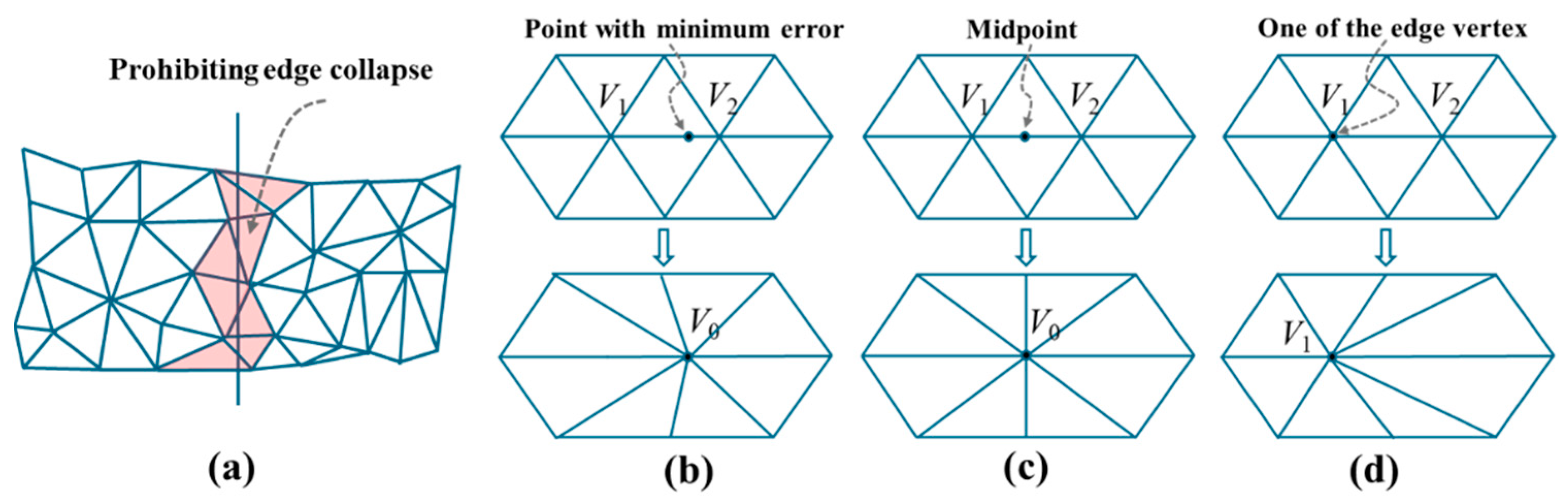
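
The exact definition of the vertex sharpness constraint is not reproduced here, so the following is only a hypothetical illustration of how such a constraint could be attached to a QEM cost. It assumes consistently oriented triangles, takes a vertex's sharpness as the largest angle between the normals of any two triangles sharing it, forbids collapses that touch a vertex sharper than `freeze_angle`, and scales other costs by `1 + weight * sharpness`. Both `weight` and `freeze_angle` are made-up defaults.

```python
import math

def face_normal(p0, p1, p2):
    ux, uy, uz = (p1[k] - p0[k] for k in range(3))
    vx, vy, vz = (p2[k] - p0[k] for k in range(3))
    n = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    length = math.sqrt(sum(c * c for c in n)) or 1.0   # avoid dividing by zero
    return tuple(c / length for c in n)

def vertex_sharpness(verts, tris):
    """Largest angle (radians) between the normals of any two faces sharing a vertex."""
    normals = [face_normal(*(verts[i] for i in t)) for t in tris]
    incident = [[] for _ in verts]
    for f, t in enumerate(tris):
        for i in t:
            incident[i].append(normals[f])
    sharp = []
    for ns in incident:
        worst = 0.0
        for a in range(len(ns)):
            for b in range(a + 1, len(ns)):
                dot = sum(ns[a][k] * ns[b][k] for k in range(3))
                worst = max(worst, math.acos(max(-1.0, min(1.0, dot))))
        sharp.append(worst)
    return sharp

def constrained_cost(base_cost, s_i, s_j, weight=10.0, freeze_angle=math.radians(60)):
    """Penalize, or forbid outright, collapses touching sharp vertices."""
    s = max(s_i, s_j)
    if s >= freeze_angle:
        return math.inf                 # treat very sharp vertices as fixed
    return base_cost * (1.0 + weight * s)
```

On two triangles meeting at a right angle, the vertices on the shared crease get a sharpness of about 1.5708 rad (90°), so any collapse involving them is rejected under these defaults.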

3.3.3. Strategies for Specific Cases
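
The Methods overview names boundary freezing and fallback strategies; the details are not reproduced here. The sketch below shows one common way to implement boundary freezing and should be read as an assumption: border vertices of a tile are those on edges used by only one triangle, and a collapse may not move them, so independently simplified neighbouring tiles still meet along identical borders.

```python
from collections import Counter

def boundary_vertices(tris):
    """Vertices lying on edges that only one triangle uses (the tile's open border)."""
    edge_use = Counter()
    for a, b, c in tris:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_use[(min(u, v), max(u, v))] += 1
    frozen = set()
    for (u, v), count in edge_use.items():
        if count == 1:
            frozen.update((u, v))
    return frozen

def collapse_position(i, j, verts, frozen, free_position):
    """Target position for collapsing edge (i, j) when border vertices are frozen."""
    if i in frozen and j in frozen:
        return None                 # both endpoints pinned: reject the collapse
    if i in frozen:
        return verts[i]             # snap onto the pinned endpoint
    if j in frozen:
        return verts[j]
    return free_position            # unconstrained: use e.g. the QEM position
```

In a 3 × 3 vertex grid triangulated as a fan around the centre vertex, all eight outer vertices are frozen and only the centre may move.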

3.4. Tile Texture Downsampling and Remapping
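
Texture reconstruction, as listed in the Methods overview, involves texture tiling, downsampling, and remapping. A minimal sketch of the three operations, under stated assumptions: each tile's UV rectangle is cropped from a `width × height` atlas image, texture coordinates are rescaled into the cropped image, and coarser levels use a 2 × 2 box filter. It assumes v runs in the same direction as image rows (many pipelines flip v) and uses nested lists in place of a real image library.

```python
import math

def crop_window(u0, v0, u1, v1, width, height):
    """Pixel window [x0, x1) x [y0, y1) covering a tile's UV rectangle."""
    x0, y0 = math.floor(u0 * width), math.floor(v0 * height)
    x1, y1 = math.ceil(u1 * width), math.ceil(v1 * height)
    return x0, y0, x1, y1

def remap_uv(u, v, window, width, height):
    """Atlas UV -> UV inside the cropped tile image."""
    x0, y0, x1, y1 = window
    return ((u * width - x0) / (x1 - x0), (v * height - y0) / (y1 - y0))

def downsample2(img):
    """2x2 box-filter downsampling of a grayscale image given as nested lists."""
    rows, cols = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4
             for c in range(cols)] for r in range(rows)]
```

Because remapped coordinates are normalized to the cropped window, halving an even-sized crop leaves them valid, so the same UVs can serve every downsampled level of that tile.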

3.5. LOD Model Storage and Visualization

3.5.1. LOD Indexing and Storage

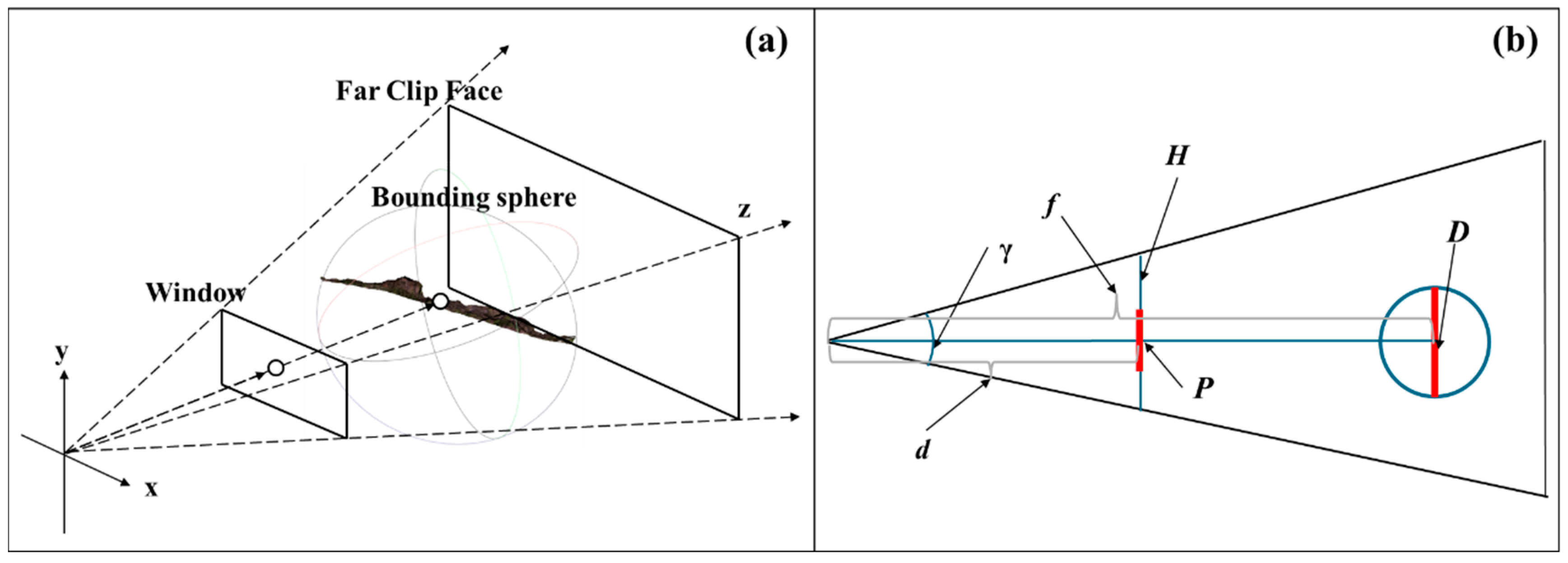

3.5.2. LOD Level Switch Parameter
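
The Methods overview states that display parameters are configured from each tile's texture image size. One plausible reading, sketched here as an assumption rather than the authors' rule, is to refine a tile once its bounding sphere covers more screen pixels than its texture has texels. The projected size uses the usual perspective approximation; the field of view and viewport height are hypothetical defaults. In OSG, a comparable rule could be expressed with an `osg::PagedLOD` in `osg::LOD::PIXEL_SIZE_ON_SCREEN` range mode, giving the coarse and refined children adjacent pixel ranges split at the texture size.

```python
import math

def projected_diameter_px(radius, distance, fovy_deg, viewport_height):
    """Approximate on-screen diameter, in pixels, of a tile's bounding sphere."""
    focal_px = viewport_height / (2.0 * math.tan(math.radians(fovy_deg) / 2.0))
    return 2.0 * radius * focal_px / max(distance, 1e-9)

def should_refine(radius, distance, texture_size, fovy_deg=30.0, viewport_height=1080):
    """Switch to the finer level once the tile covers more pixels than its texture has texels."""
    return projected_diameter_px(radius, distance, fovy_deg, viewport_height) > texture_size
```

With a 90° field of view on a 1080-pixel viewport, a tile of radius 10 seen from distance 10 spans about 1080 pixels, so a 1024-pixel texture would trigger refinement; at distance 20 it spans about 540 pixels and the coarse level is kept.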

3.5.3. LOD Model Loading and Rendering
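
View-dependent loading with the OSG PagedLOD mechanism, as described in the Methods overview, amounts to descending the tile hierarchy while a tile is too coarse for its screen footprint; in OSG the children themselves are fetched asynchronously by `osgDB::DatabasePager`. The sketch below mimics only the selection logic, not OSG's code. It reuses the hypothetical "pixel size exceeds texture size" rule, measures distance to the nearest point of the bounding sphere, and ignores frustum culling.

```python
import math
from dataclasses import dataclass, field

@dataclass
class LodTile:
    name: str
    center: tuple
    radius: float
    texture_size: int
    children: list = field(default_factory=list)

def pixel_diameter(tile, eye, focal_px):
    """On-screen diameter estimate; a camera inside the sphere yields a huge value."""
    d = math.dist(tile.center, eye) - tile.radius
    return 2.0 * tile.radius * focal_px / max(d, 1e-9)

def select(tile, eye, focal_px, out=None):
    """Collect the tiles to draw: refine while a tile's texture would be magnified."""
    if out is None:
        out = []
    if tile.children and pixel_diameter(tile, eye, focal_px) > tile.texture_size:
        for child in tile.children:
            select(child, eye, focal_px, out)
    else:
        out.append(tile.name)
    return out
```

From far away only the root tile is drawn; as the camera approaches, the root is replaced by its children, which is when a paging system would request their files.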

4. Results

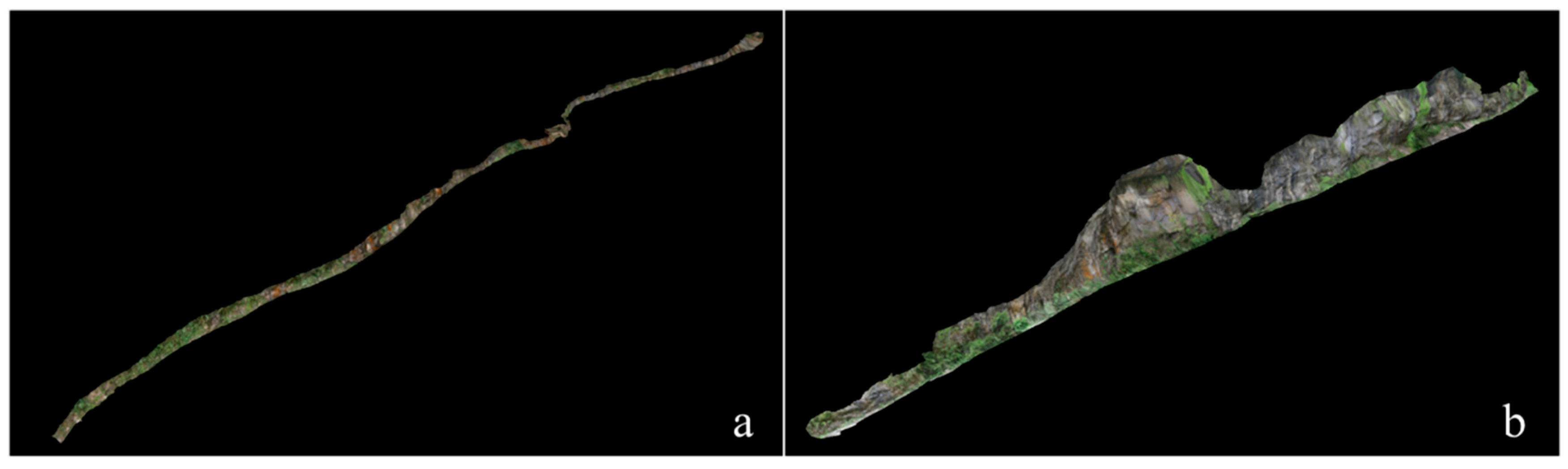

4.1. Experimental Dataset and Environment

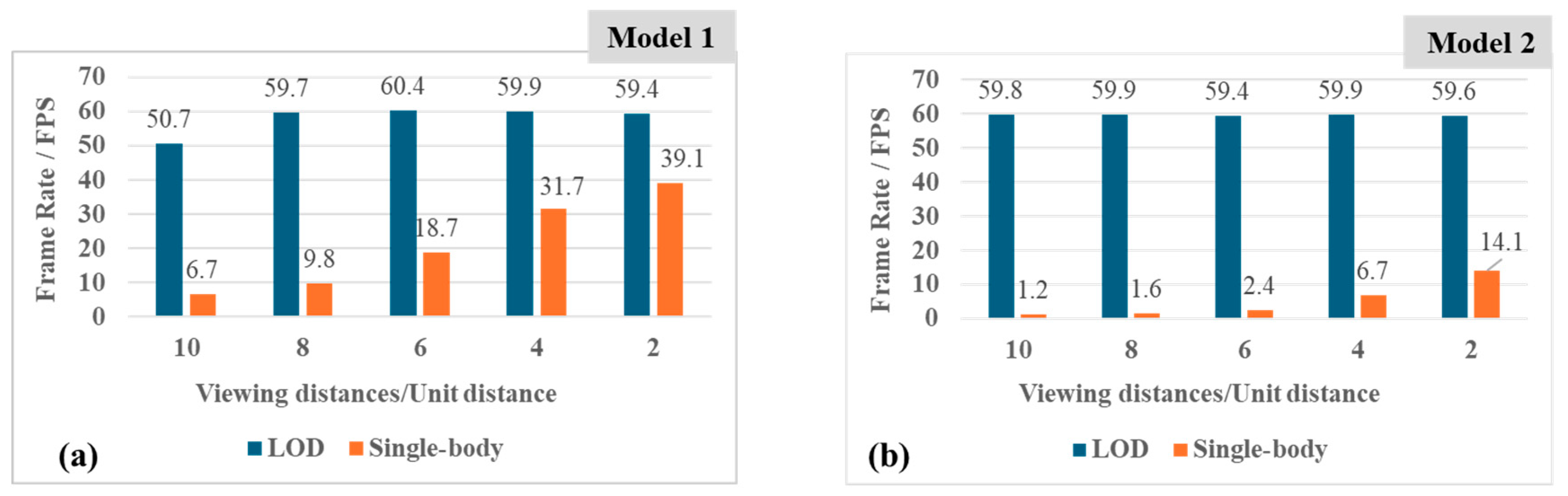

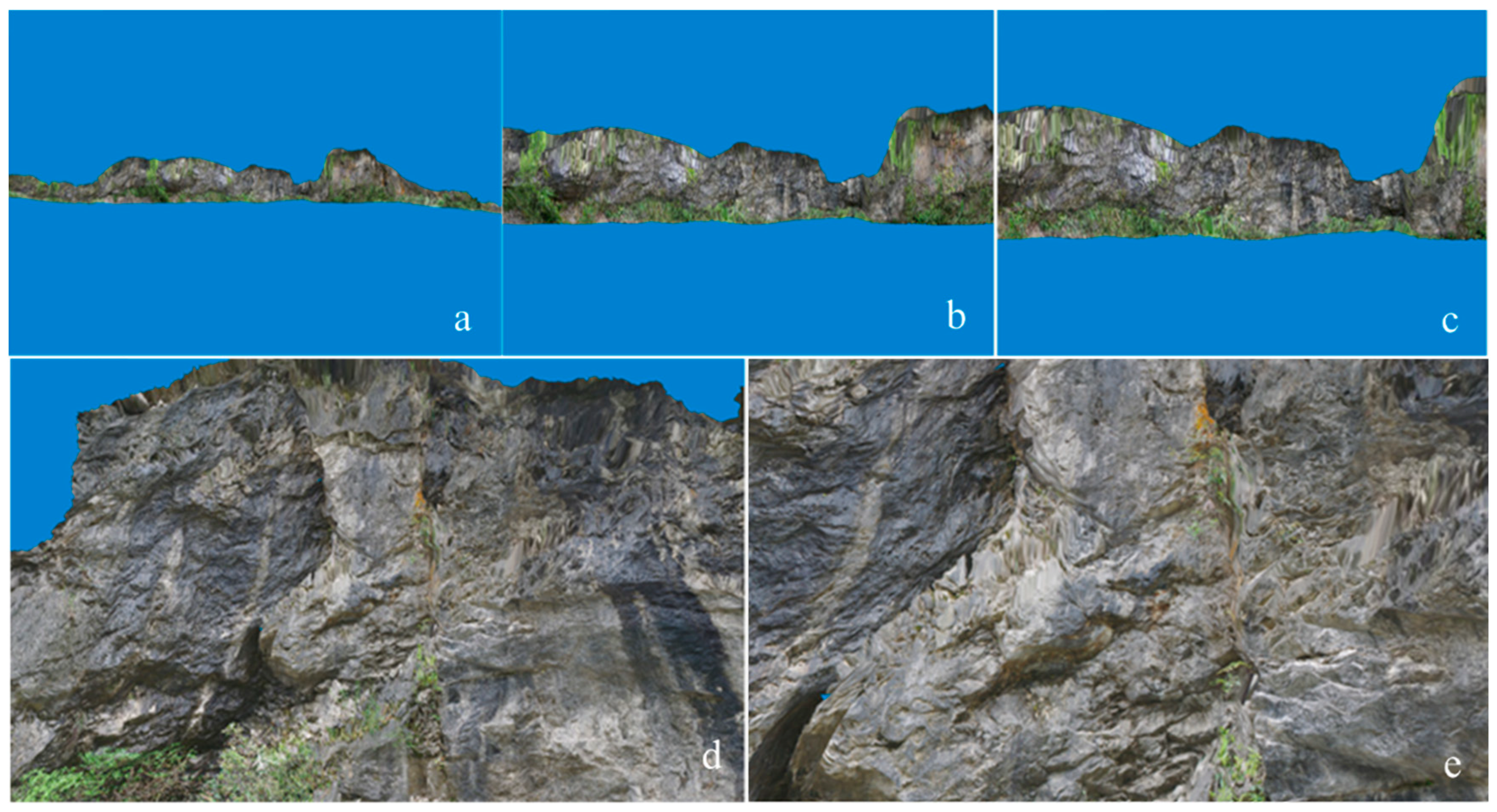

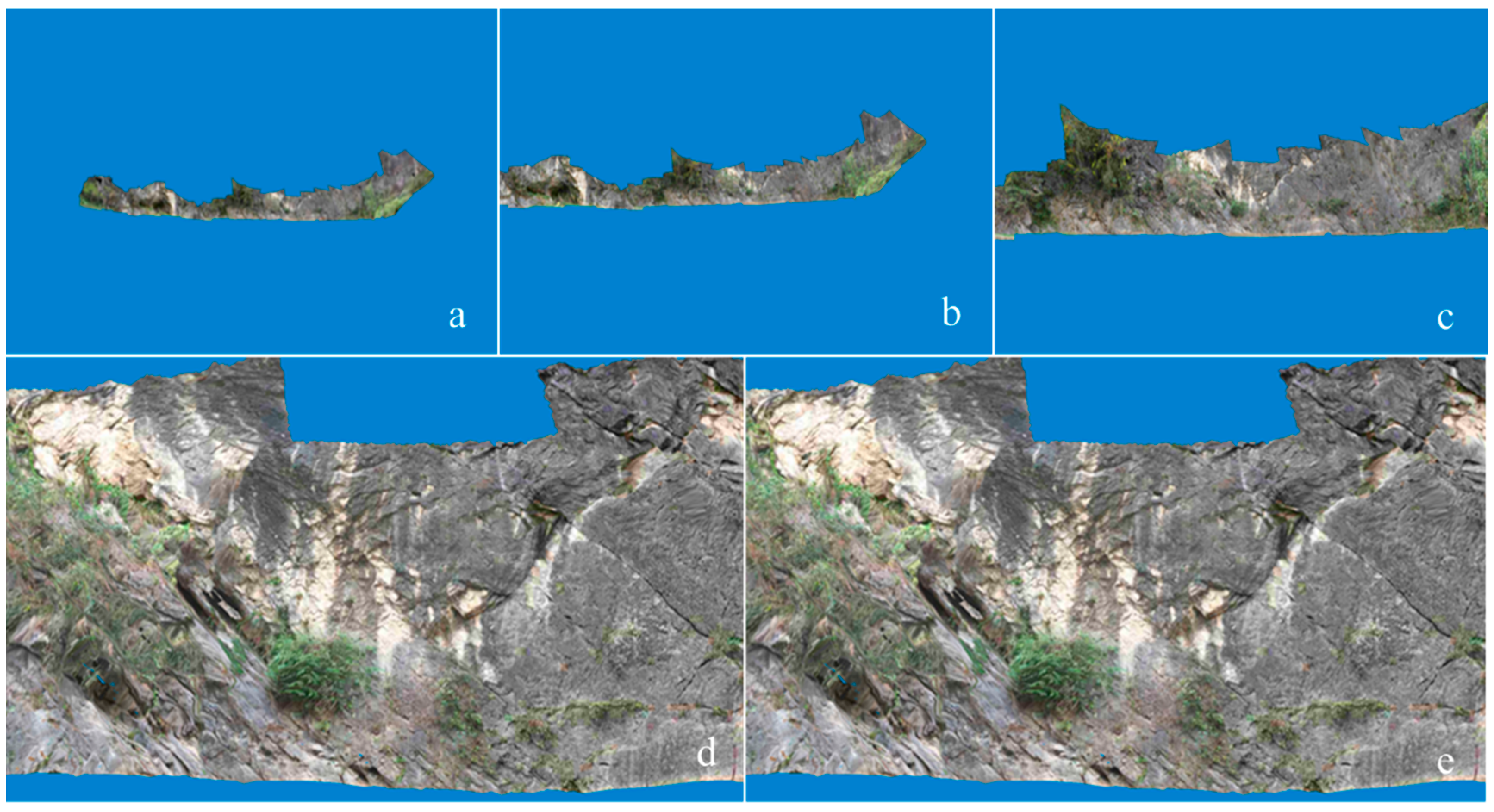

4.2. Results and Analysis of LOD Models Construction and Visualization

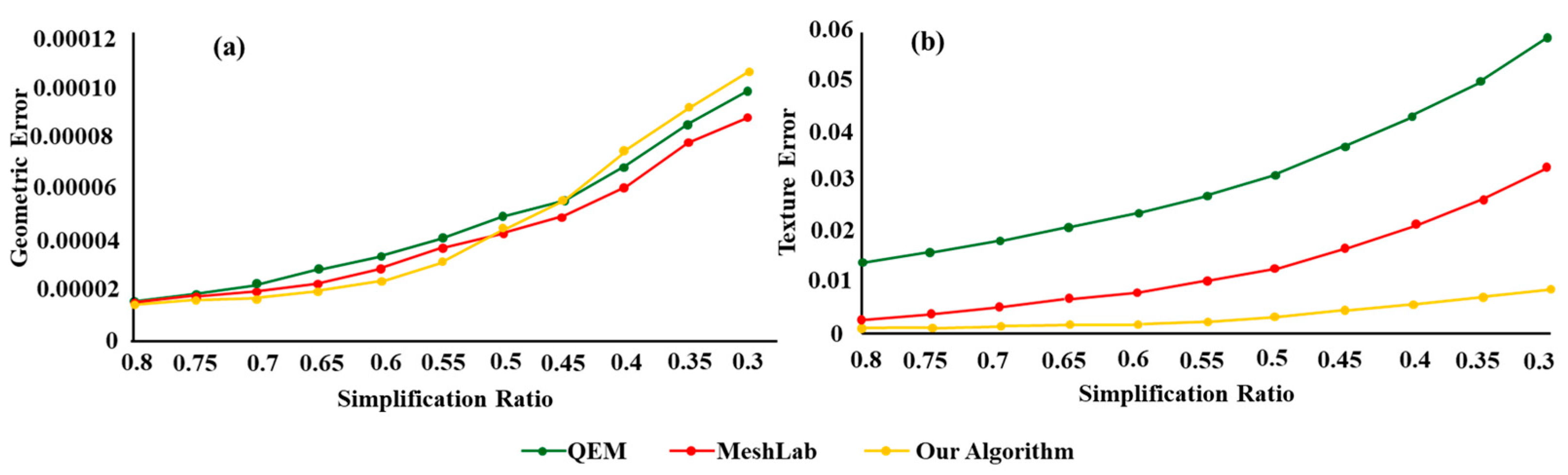

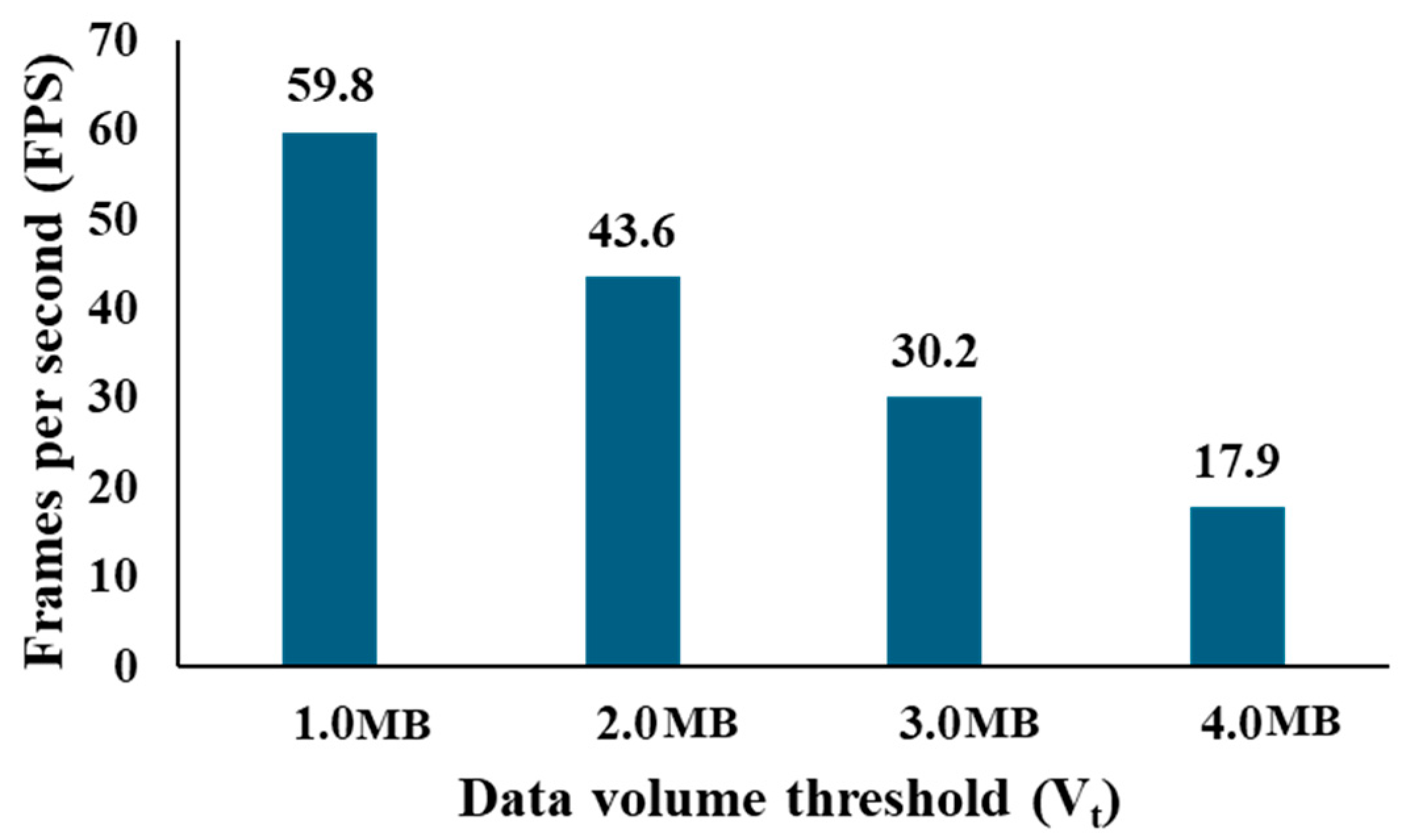

4.3. Comparative Analysis of Simplification Algorithm Results

5. Discussion

5.1. Potential Limitations of the Tiling Method

- Suboptimal Tile Boundaries on Complex Geometry: A single tile in 2D texture space may encompass disparate 3D regions with different geometric characteristics, such as the limb and hinge of a fold. Because simplification is applied tile by tile (Section 3.2.2), the algorithm cannot adapt to geometric variation within a tile, which can produce visual artifacts such as over-simplification of high-curvature features or aliasing along sharp intersections.

- Inefficient Culling and Streaming: For self-intersecting or highly folded surfaces, multiple disjoint 3D regions can map to a contiguous area of the 2D texture atlas. A quadtree partition in this 2D space then creates tiles that combine these disjoint 3D elements. During rendering, this can undermine view-frustum culling and lead to inefficient data streaming, because a single tile may have to be loaded to render several small, spatially separated parts of the model.

- Geometric Feature Analysis: Prior to partitioning, the 3D mesh will be analyzed to compute key geometric attributes such as curvature, normal vector variation, and relief intensity [20]. This will identify regions of high geometric complexity (e.g., folds, fractures) versus relatively planar regions.

- Hybrid Quadtree-Octree Partitioning: Based on this analysis, an adaptive strategy can be implemented: for planar regions, the current texture-space quadtree partitioning remains highly efficient; for regions identified as highly complex, the method would switch to a 3D octree partitioning. An octree recursively subdivides 3D space, ensuring that tiles represent spatially coherent and geometrically homogeneous regions, allowing for more effective simplification and culling [20].

- Morphology-Based Classification: We will explore classifying outcrop profiles into different morphological types (e.g., planar facade, folded structure, and intersecting bedding). A classifier could then select the optimal partitioning strategy for each segment, ensuring robustness across a wider range of geological scenarios.
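
The proposed analysis-then-switch strategy above can be illustrated with a small, hypothetical decision rule. Of the attributes mentioned (curvature, normal vector variation, relief intensity), it uses only normal variation, measured as 1 minus the length of the mean unit normal of a segment, and the `threshold` is an invented value that would need calibration on real outcrops.

```python
import math

def normal_dispersion(normals):
    """1 - |mean of unit normals|: 0 for a plane, larger for folded or rough patches."""
    n = len(normals)
    mx = sum(v[0] for v in normals) / n
    my = sum(v[1] for v in normals) / n
    mz = sum(v[2] for v in normals) / n
    return 1.0 - math.sqrt(mx * mx + my * my + mz * mz)

def choose_partition(normals, threshold=0.1):
    """Hypothetical rule: texture-space quadtree for planar segments, octree otherwise."""
    return "octree" if normal_dispersion(normals) > threshold else "quadtree"
```

A planar facade (all normals equal) scores 0 and keeps the quadtree; a segment whose faces split evenly between two perpendicular orientations scores about 0.29 and would be routed to the octree.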

5.2. Empirical and Generalizability Concerns in Algorithm Parameters

5.3. Analysis of Algorithmic Performance Bottlenecks and Optimization Directions

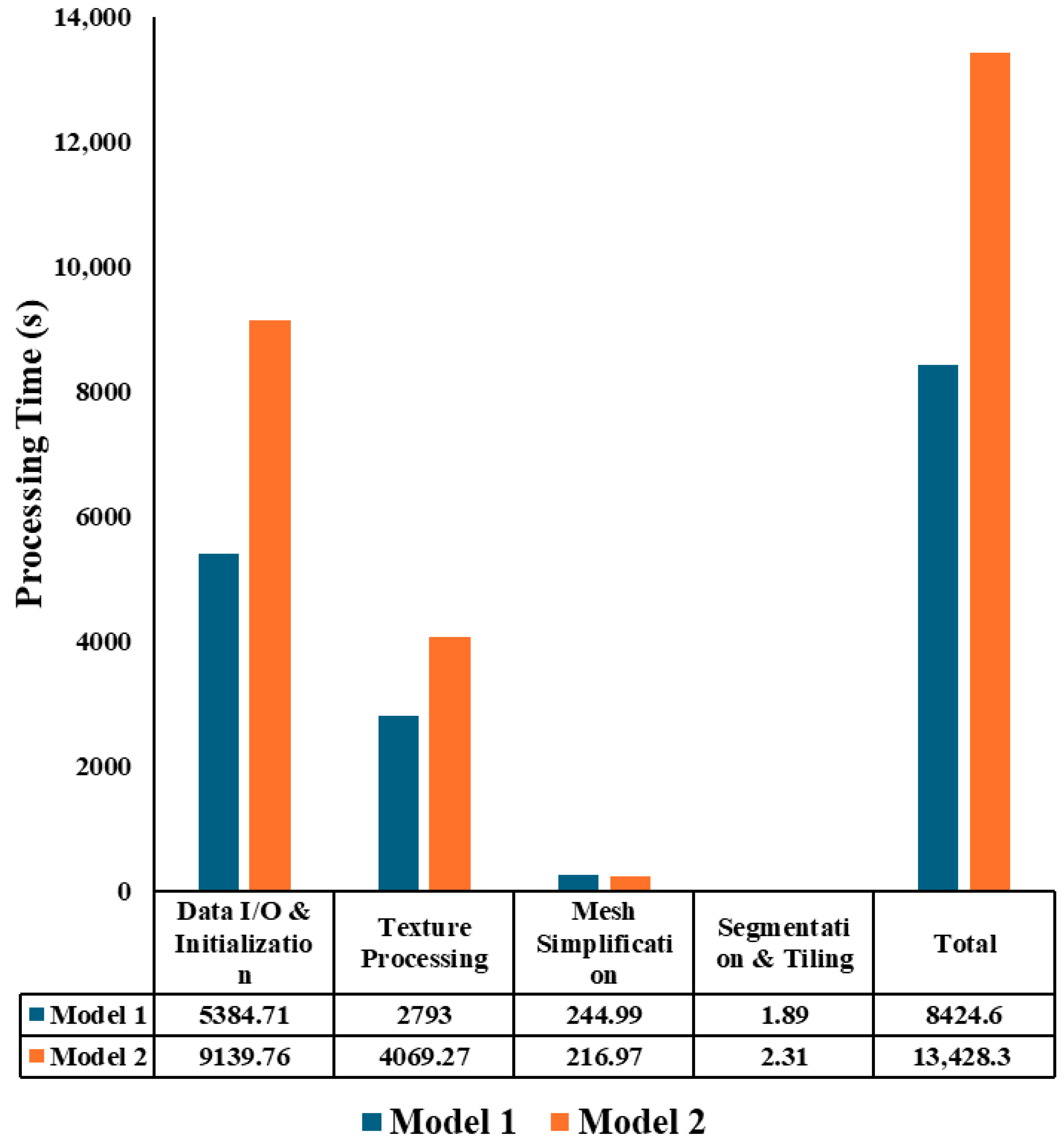

- Data I/O and Initialization: The model loading and data preparation stage (data reading and copying) is the most time-consuming component, especially for very large models. This stage reads the high-resolution mesh and texture data from storage into memory and performs the initial data structure conversions. It currently runs single-threaded and is heavily constrained by storage I/O speed and memory copy bandwidth. For Model 1 and Model 2, it accounts for approximately 64% and 68% of the total time, respectively. Our primary optimization efforts should therefore focus on the efficient reading, writing, and initialization of massive datasets.

- Texture Processing: Texture reconstruction is another major bottleneck. This stage includes texture image partitioning, downsampling, and coordinate remapping. For models with high-resolution textures (such as Model 1 and Model 2), it takes an order of magnitude longer than mesh simplification. Its cost scales with the total number of pixels in the texture images, which indicates that the image processing operations (e.g., resampling) in the current implementation need to be made more efficient. Parallelizing these operations will be a key direction for future enhancements.

- Mesh Simplification: This stage takes considerably less time than texture processing. The cost of the QEM-based simplification algorithm grows with the number of vertices in the input model, as it involves extensive iterative computation and local geometric queries. Although it could likewise be accelerated through parallelization, this component is not the primary focus of optimization.

- Segmentation and Tiling: Although this stage is the core of the algorithm, the data show that its time consumption is negligible compared with the other components. The minimal overhead of model segmentation and tiling confirms that this stage is efficiently designed and does not constitute a bottleneck.
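
The parallelization direction named for texture processing can be sketched as a per-tile parallel map, since tiles are processed independently. This is an illustrative assumption, not the authors' code: the pure-Python 2 × 2 box filter stands in for real image resampling, and threads only help when the per-tile work releases the GIL (as native image-processing code typically does); otherwise `use_processes=True` is the better choice, with `fn` defined in an importable module.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

def downsample_tile(img):
    """2x2 box-filter downsampling of one grayscale tile given as nested lists."""
    rows, cols = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4
             for c in range(cols)] for r in range(rows)]

def process_tiles(tiles, fn=downsample_tile, workers=4, use_processes=False):
    """Apply `fn` to every tile concurrently; results keep the input order."""
    pool_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    with pool_cls(max_workers=workers) as pool:
        return list(pool.map(fn, tiles))
```

`Executor.map` returns results in input order, so the processed textures can be written back to their tiles without extra bookkeeping.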

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Aydin, A. Fractures, faults, and hydrocarbon entrapment, migration and flow. Mar. Pet. Geol. 2000, 17, 797–814.

- Rimmer, S.M. Geochemical paleoredox indicators in Devonian–Mississippian black shales, central Appalachian Basin (USA). Chem. Geol. 2004, 206, 373–391.

- Howell, J.A.; Martinius, A.W.; Good, T.R. The application of outcrop analogues in geological modelling: A review, present status and future outlook. Geol. Soc. Lond. Spec. Publ. 2014, 387, 1–25.

- Bryant, I.; Carr, D.; Cirilli, P.; Drinkwater, N.; McCormick, D.; Tilke, P.; Thurmond, J. Use of 3D digital analogues as templates in reservoir modelling. Pet. Geosci. 2000, 6, 195–201.

- Bellian, J.; Kerans, C.; Jennette, D. Digital outcrop models: Applications of terrestrial scanning lidar technology in stratigraphic modeling. J. Sediment. Res. 2005, 75, 166–176.

- Liang, B.; Liu, Y.; Shao, Y.; Wang, Q.; Zhang, N.; Li, S. 3D quantitative characterization of fractures and cavities in Digital Outcrop texture model based on Lidar. Energies 2022, 15, 1627.

- Jing, R.; Shao, Y.; Zeng, Q.; Liu, Y.; Wei, W.; Gan, B.; Duan, X. Multimodal feature integration network for lithology identification from point cloud data. Comput. Geosci. 2024, 194, 105775.

- Shao, Y.; Li, P.; Jing, R.; Shao, Y.; Liu, L.; Zhao, K.; Gan, B.; Duan, X.; Li, L. A Machine Learning-Based Method for Lithology Identification of Outcrops Using TLS-Derived Spectral and Geometric Features. Remote Sens. 2025, 17, 2434.

- Riquelme, A.J.; Abellán, A.; Tomás, R.; Jaboyedoff, M. A new approach for semi-automatic rock mass joints recognition from 3D point clouds. Comput. Geosci. 2014, 68, 38–52.

- Wu, S.; Wang, Q.; Zeng, Q.; Zhang, Y.; Shao, Y.; Deng, F.; Liu, Y.; Wei, W. Automatic extraction of outcrop cavity based on a multiscale regional convolution neural network. Comput. Geosci. 2022, 160, 105038.

- Liang, B.; Liu, Y.; Su, Z.; Zhang, N.; Li, S.; Feng, W. A workflow for interpretation of fracture characteristics based on digital outcrop models: A case study on Ebian XianFeng profile in Sichuan Basin. Lithosphere 2023, 2022, 7456300.

- Yeste, L.M.; Palomino, R.; Varela, A.N.; McDougall, N.D.; Viseras, C. Integrating outcrop and subsurface data to improve the predictability of geobodies distribution using a 3D training image: A case study of a Triassic Channel–Crevasse-splay complex. Mar. Pet. Geol. 2021, 129, 105081.

- Yong, R.; Wang, C.; Barton, N.; Du, S. A photogrammetric approach for quantifying the evolution of rock joint void geometry under varying contact states. Int. J. Min. Sci. Technol. 2024, 34, 461–477.

- Clark, J.H. Hierarchical geometric models for visible surface algorithms. Commun. ACM 1976, 19, 547–554.

- Lindstrom, P.; Pascucci, V. Visualization of large terrains made easy. In Proceedings of the Visualization, San Diego, CA, USA, 21–26 October 2001; VIS’01. IEEE: New York, NY, USA, 2001; pp. 363–574.

- Lindstrom, P.; Pascucci, V. Terrain simplification simplified: A general framework for view-dependent out-of-core visualization. IEEE Trans. Vis. Comput. Graph. 2002, 8, 239–254.

- Brooks, R.; Tobias, A. Choosing the best model: Level of detail, complexity, and model performance. Math. Comput. Model. 1996, 24, 1–14.

- Cignoni, P.; Ganovelli, F.; Gobbetti, E.; Marton, F.; Ponchio, F.; Scopigno, R. Adaptive tetrapuzzles: Efficient out-of-core construction and visualization of gigantic multiresolution polygonal models. ACM Trans. Graph. (TOG) 2004, 23, 796–803.

- Liu, Z.; Zhang, C.; Cai, H.; Qv, W.; Zhang, S. A model simplification algorithm for 3D reconstruction. Remote Sens. 2022, 14, 4216.

- Ge, Y.; Xiao, X.; Guo, B.; Shao, Z.; Gong, J.; Li, D. A novel LOD rendering method with multi-level structure keeping mesh simplification and fast texture alignment for realistic 3D models. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 3457796.

- Li, G.; Li, J. An adaptive-size vector tile pyramid construction method considering spatial data distribution density characteristics. Comput. Geosci. 2024, 184, 105537.

- Abualdenien, J.; Borrmann, A. Levels of detail, development, definition, and information need: A critical literature review. J. Inf. Technol. Constr. 2022, 27, 363–392.

- Boswick, B.; Pankratz, Z.; Glowacki, M.; Lu, Y. Re-(De)fined Level of Detail for Urban Elements: Integrating Geometric and Attribute Data. Architecture 2024, 5, 1.

- Klapa, P. Standardisation in 3D building modelling: Terrestrial and mobile laser scanning level of detail. Adv. Sci. Technol. Res. J. 2025, 19, 238–251.

- Buckley, S.J.; Enge, H.D.; Carlsson, C.; Howell, J.A. Terrestrial laser scanning for use in virtual outcrop geology. Photogramm. Rec. 2010, 25, 225–239.

- Minisini, D.; Wang, M.; Bergman, S.C.; Aiken, C. Geological data extraction from lidar 3-D photorealistic models: A case study in an organic-rich mudstone, Eagle Ford Formation, Texas. Geosphere 2014, 10, 610–626.

- Cao, T.; Xiao, A.; Wu, L.; Mao, L. Automatic fracture detection based on Terrestrial Laser Scanning data: A new method and case study. Comput. Geosci. 2017, 106, 209–216.

- Becker, I.; Koehrer, B.; Waldvogel, M.; Jelinek, W.; Hilgers, C. Comparing fracture statistics from outcrop and reservoir data using conventional manual and t-LiDAR derived scanlines in Ca2 carbonates from the Southern Permian Basin, Germany. Mar. Pet. Geol. 2018, 95, 228–245.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Da Silva, R.M.; Veronez, M.R.; Gonzaga, L.; Tognoli, F.M.W.; De Souza, M.K.; Inocencio, L.C. 3-D Reconstruction of Digital Outcrop Model Based on Multiple View Images and Terrestrial Laser Scanning. In Proceedings of the Brazilian Symposium on GeoInformatics, São Paulo, Brazil, 29 November–2 December 2015; pp. 245–253.

- Nesbit, P.; Durkin, P.R.; Hugenholtz, C.H.; Hubbard, S.; Kucharczyk, M. 3-D stratigraphic mapping using a digital outcrop model derived from UAV images and structure-from-motion photogrammetry. Geosphere 2018, 14, 2469–2486.

- Devoto, S.; Macovaz, V.; Mantovani, M.; Soldati, M.; Furlani, S. Advantages of Using UAV Digital Photogrammetry in the Study of Slow-Moving Coastal Landslides. Remote Sens. 2020, 12, 3566.

- Perozzo, M.; Menegoni, N.; Foletti, M.; Poggi, E.; Benedetti, G.; Carretta, N.; Ferro, S.; Rivola, W.; Seno, S.; Giordan, D.; et al. Evaluation of an innovative, open-source and quantitative approach for the kinematic analysis of rock slopes based on UAV based Digital Outcrop Model: A case study from a railway tunnel portal (Finale Ligure, Italy). Eng. Geol. 2024, 340, 107670.

- Dong, Z.; Tang, P.; Chen, G.; Yin, S. Synergistic application of digital outcrop characterization techniques and deep learning algorithms in geological exploration. Sci. Rep. 2024, 14, 22948.

- Wang, C.; Yong, R.; Luo, Z.; Du, S.; Karakus, M.; Huang, C. A novel method for determining the three-dimensional roughness of rock joints based on profile slices. Rock Mech. Rock Eng. 2023, 56, 4303–4327.

- Betlem, P.; Birchall, T.; Lord, G.; Oldfield, S.; Nakken, L.; Ogata, K.; Senger, K. High resolution digital outcrop model of faults and fractures in caprock shales, Konusdalen West, central Spitsbergen. Earth Syst. Sci. Data Discuss. 2024, 16, 985–1006.

- De Castro, D.B.; Ducart, D.F. Creating a Methodology to Elaborate High-Resolution Digital Outcrop for Virtual Reality Models with Hyperspectral and LIDAR Data. In Proceedings of the International Conference on ArtsIT, Interactivity and Game Creation, Abu Dhabi, United Arab Emirates, 13–15 November 2024; Springer: Cham, Switzerland, 2024.

- Yong, R.; Song, J.; Wang, C.; Luo, Z.; Du, S. Determination of the minimum number of specimens required for laboratory testing of the shear strength of rock joints. Geomech. Geophys. Geo-Energy Geo-Resour. 2023, 9, 155.

- Wang, X.; Gao, F. Quantitatively deciphering paleostrain from digital outcrops model and its application in the eastern Tian Shan, China. Tectonics 2020, 39, e2019TC005999.

- Hoppe, H.; DeRose, T.; Duchamp, T.; McDonald, J.; Stuetzle, W. Mesh optimization. In Proceedings of the 20th Annual Conference on Computer Graphics and Interactive Techniques, Anaheim, CA, USA, 2–6 August 1993; pp. 19–26.

- Luebke, D.; Reddy, M.; Cohen, J.D.; Varshney, A.; Watson, B.; Huebner, R. Level of Detail for 3D Graphics; Elsevier: Amsterdam, The Netherlands, 2002.

- Balsa Rodríguez, M.; Gobbetti, E.; Iglesias Guitián, J.A.; Makhinya, M.; Marton, F.; Pajarola, R.; Suter, S.K. State-of-the-Art in Compressed GPU-Based Direct Volume Rendering. Comput. Graph. Forum 2015, 34, 13–37.

- Gröger, G.; Plümer, L. CityGML – Interoperable semantic 3D city models. ISPRS J. Photogramm. Remote Sens. 2012, 71, 12–33.

- Biljecki, F.; Ledoux, H.; Stoter, J. Error propagation in the computation of volumes in 3D city models with the level of detail. ISPRS Int. J. Geo-Inf. 2014, 3, 1155–1175.

- Löwner, M.-O.; Gröger, G.; Benner, J.; Biljecki, F.; Nagel, C. Proposal for a new LOD and multi-representation concept for CityGML. ISPRS Ann. Photogramm. Remote. Sens. Spat. Inf. Sci. 2016, IV-2/W1, 3–12.

- Hodgetts, D.; Gawthorpe, R.L.; Wilson, P.; Rarity, F. Integrating Digital and Traditional Field Techniques Using Virtual Reality Geological Studio (VRGS). In Proceedings of the 69th EAGE Conference and Exhibition incorporating SPE EUROPEC, London, UK, 11–14 June 2007; pp. 11–14.

- Buckley, S.J.; Ringdal, K.; Naumann, N.; Dolva, B.; Kurz, T.H.; Howell, J.A.; Dewez, T.J. LIME: Software for 3-D visualization, interpretation, and communication of virtual geoscience models. Geosphere 2019, 15, 222–235.

- Nesbit, P.R.; Boulding, A.D.; Hugenholtz, C.H.; Durkin, P.R.; Hubbard, S.M. Visualization and sharing of 3D digital outcrop models to promote open science. GSA Today 2020, 30, 4–10.

- Buckley, S.J.; Howell, J.A.; Naumann, N.; Lewis, C.; Chmielewska, M.; Ringdal, K.; Vanbiervliet, J.; Tong, B.; Mulelid-Tynes, O.S.; Foster, D.; et al. V3Geo: A cloud-based repository for virtual 3D models in geoscience. Geosci. Commun. 2022, 5, 67–82.

- Tian, Y.; Wu, J.; Chen, G.; Liu, G.; Zhang, X. Big Data-Driven 3D Visualization Analysis System for Promoting Regional-Scale Digital Geological Exploration. Appl. Sci. 2025, 15, 4003.

- Tayeb, J.; Ulusoy, Ö.; Wolfson, O. A Quadtree-Based Dynamic Attribute Indexing Method. Comput. J. 1998, 41, 185–200.

- Garland, M.; Heckbert, P.S. Surface simplification using quadric error metrics. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH’97), Los Angeles, CA, USA, 3–8 August 1997; pp. 209–216.

- Cignoni, P.; Callieri, M.; Corsini, M.; Dellepiane, M.; Ganovelli, F.; Ranzuglia, G. Meshlab: An open-source mesh processing tool. In Proceedings of the Eurographics Italian Chapter Conference, Salerno, Italy, 2–4 July 2008; Volume 2008, pp. 129–136.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Papageorgiou, A.; Platis, N. Triangular mesh simplification on the GPU. Vis. Comput. 2014, 31, 235–244.

| Model | Number of Vertices | Number of Triangular Facets | Number of Texture Images | Model Size (GB) |
|---|---|---|---|---|
| Model 1 | 592,473 | 1,145,853 | 103 | 1.16 |
| Model 2 | 648,698 | 1,244,590 | 146 | 1.74 |

| Model | Execution Time (s) | Memory Usage (MB) | CPU Usage (%) | LOD Model Size (GB) |
|---|---|---|---|---|
| Model 1 | 8,421 ± 12 | 2652.2 ± 42 | 9.6 ± 0.5 | 9.74 |
| Model 2 | 13,425 ± 26 | 3354.1 ± 47 | 12.1 ± 0.7 | 8.72 |

| Model | CPU Usage (%) | Memory Usage (MB) | Loading Time (s) | Display Frame Rate (FPS) |
|---|---|---|---|---|
| Model 1 (LOD) | 15 ± 1 | 187 ± 5 | 3.7 ± 0.1 | 57.7 ± 0.3 |
| Model 1 (single-body) | 21 ± 1 | 3504 ± 15 | 118.1 ± 2.5 | 6.8 ± 0.1 |
| Model 2 (LOD) | 13.7 ± 0.7 | 111 ± 4 | 4.1 ± 0.1 | 58.8 ± 0.3 |
| Model 2 (single-body) | 20.1 ± 0.8 | 11,609 ± 50 | 551.2 ± 10 | 1.2 ± 0.1 |
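
The gains in the table above can be summarized as ratios of the mean values (standard deviations ignored). A small helper for this arithmetic, taking (memory, loading time, frame rate) tuples for the LOD and single-body rows:

```python
def improvement_ratios(lod, single):
    """Return (memory reduction, loading speed-up, frame-rate gain) from mean values."""
    (mem_lod, load_lod, fps_lod), (mem_single, load_single, fps_single) = lod, single
    return mem_single / mem_lod, load_single / load_lod, fps_lod / fps_single

model1 = improvement_ratios((187, 3.7, 57.7), (3504, 118.1, 6.8))
model2 = improvement_ratios((111, 4.1, 58.8), (11609, 551.2, 1.2))
```

Rounded to one decimal, Model 1 uses about 18.7 times less memory, loads about 31.9 times faster, and renders about 8.5 times more frames per second with LOD; for Model 2 the factors are about 104.6, 134.4, and 49.0.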

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ao, J.; Liu, Y.; Liang, B.; Jing, R.; Shao, Y.; Li, S. Construction and Visualization of Levels of Detail for High-Resolution LiDAR-Derived Digital Outcrop Models. Remote Sens. 2025, 17, 3758. https://doi.org/10.3390/rs17223758


Chicago/Turabian Style: Ao, Jingcheng, Yuangang Liu, Bo Liang, Ran Jing, Yanlin Shao, and Shaohua Li. 2025. "Construction and Visualization of Levels of Detail for High-Resolution LiDAR-Derived Digital Outcrop Models" Remote Sensing 17, no. 22: 3758. https://doi.org/10.3390/rs17223758

APA Style: Ao, J., Liu, Y., Liang, B., Jing, R., Shao, Y., & Li, S. (2025). Construction and Visualization of Levels of Detail for High-Resolution LiDAR-Derived Digital Outcrop Models. Remote Sensing, 17(22), 3758. https://doi.org/10.3390/rs17223758