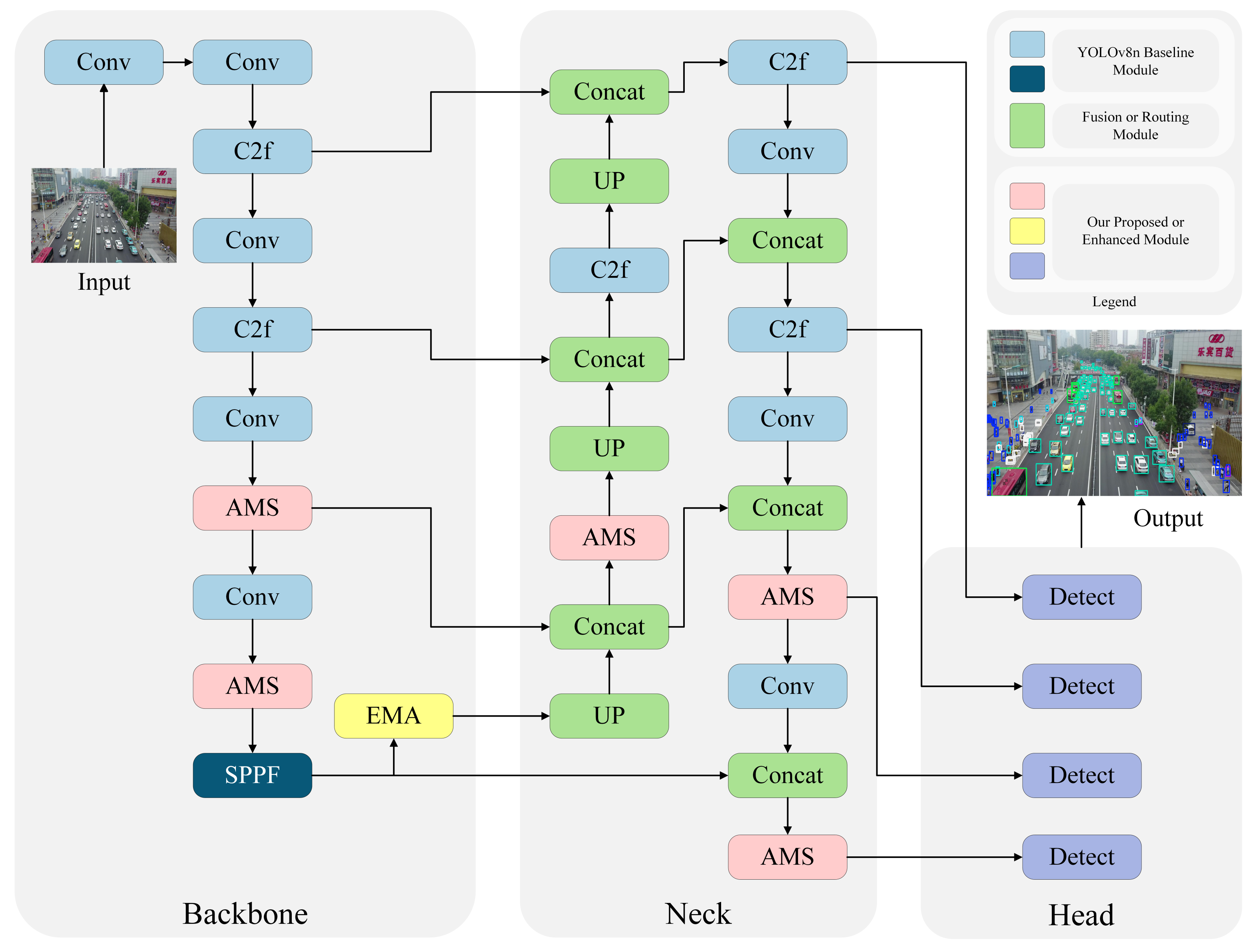

2.2.1. The AMS Module

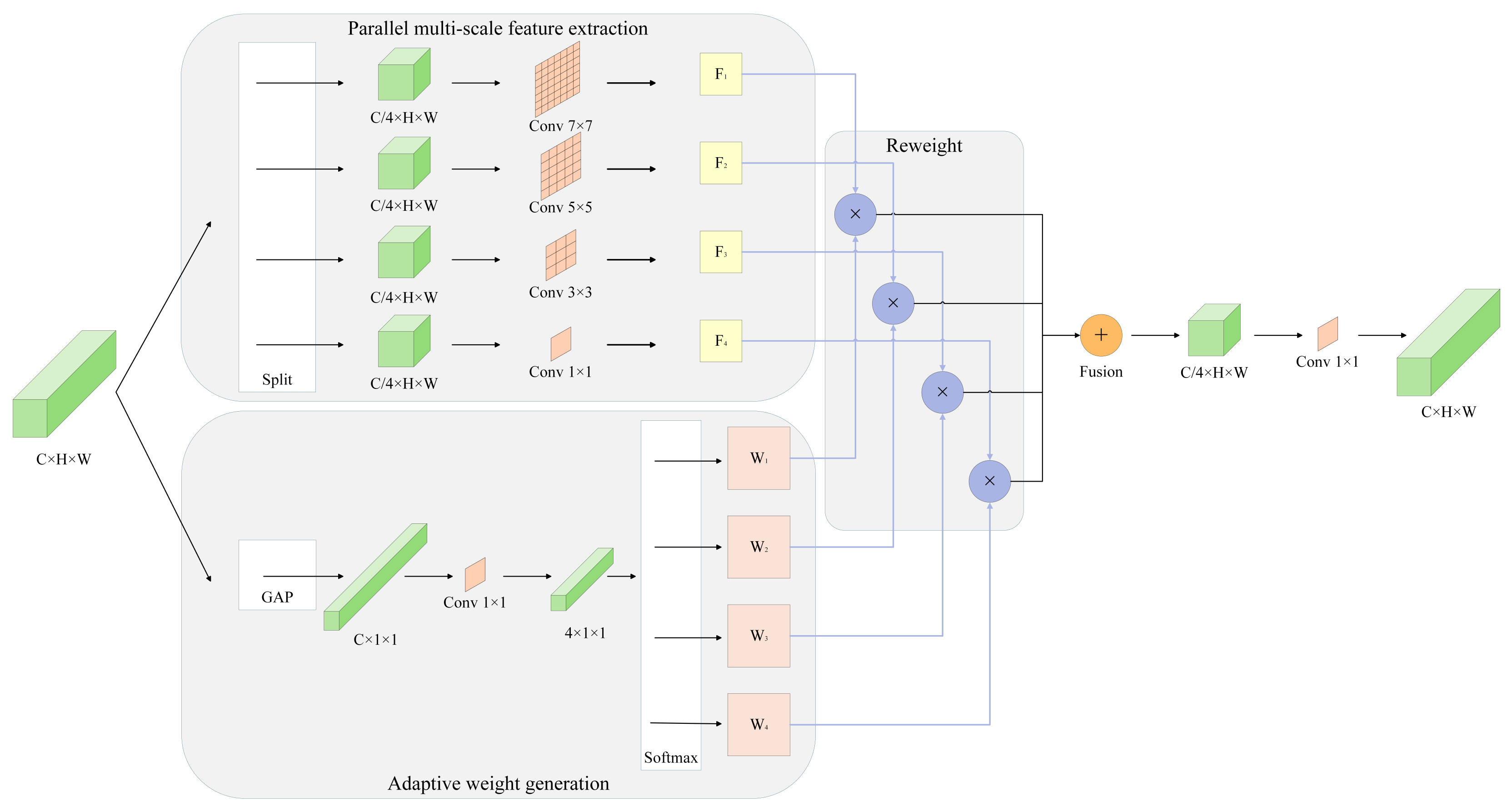

This section details the design of the proposed Adaptive Multi-Scale (AMS) convolution module, a novel component engineered for efficient and adaptive feature fusion. The detailed architecture of the AMS module is illustrated in Figure 2. The design is conceptually motivated by the principle of feature redundancy, as exploited by the lightweight network GhostNet [31]. The central tenet of GhostNet is to approximate the output of a standard convolution through a cheaper, two-stage process. It first employs a primary convolution to generate a set of "intrinsic" feature maps, which capture the core characteristics of the input. It then applies a series of lightweight linear operations to these intrinsic maps to produce supplementary "ghost" features. By concatenating the two feature sets, GhostNet substantially reduces parameter count and computational cost while maintaining a comparable representational capacity. Building on this principle of computational efficiency, Li Jin et al. introduced the Efficient Multi-Scale Convolution Pyramid (EMSCP) module [32].
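The saving behind GhostNet can be made concrete with a parameter count. The sketch below assumes the usual Ghost-module formulation, in which a primary k × k convolution produces c_out / s intrinsic maps and each intrinsic map yields s - 1 ghost maps through a cheap d × d depthwise filter. The channel count of 128, s = 2 and d = 3 are illustrative choices, not values taken from this work.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution mapping c_in -> c_out channels (bias ignored)."""
    return c_in * c_out * k * k


def ghost_module_params(c_in: int, c_out: int, k: int, s: int, d: int) -> int:
    """Weights of a Ghost-style module (bias ignored), under the assumptions above.

    The primary k x k convolution produces m = c_out // s intrinsic maps; each
    intrinsic map yields (s - 1) ghost maps via a cheap d x d depthwise filter,
    so m * s = c_out channels are output in total.
    """
    m = c_out // s
    primary = c_in * m * k * k      # ordinary convolution for the intrinsic maps
    cheap = m * (s - 1) * d * d     # depthwise "linear operations" for the ghost maps
    return primary + cheap


std = standard_conv_params(128, 128, 3)
ghost = ghost_module_params(128, 128, 3, s=2, d=3)
print(std, ghost, round(std / ghost, 2))  # 147456 74304 1.98
```

The ratio approaches s because the cheap depthwise term is tiny compared with the primary convolution.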

The EMSCP module is designed to efficiently fuse multi-scale feature information. Its core mechanism involves splitting the input feature map into four parallel branches along the channel dimension. Each branch is then processed by a convolutional kernel of a different size (1 × 1, 3 × 3, 5 × 5, and 7 × 7, respectively). This design aims to simultaneously capture diverse levels of information, from fine-grained local details to broad contextual information. Subsequently, the output feature maps from all branches are concatenated and passed through a final 1 × 1 convolution for information integration and channel dimension restoration. Compared to using a single large-kernel convolution, this architecture acquires rich multi-scale features at a lower computational cost.
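The cost claim in the last sentence can be checked with a quick parameter count. This is a sketch under stated assumptions: each of the four branches maps its C/4 input channels to C/4 output channels, biases are ignored, and the comparison baseline is a single C → C 7 × 7 convolution. Under these assumptions the block needs (C²/16)(1 + 9 + 25 + 49) + C² = 6.25 C² weights, versus 49 C² for the single large kernel.

```python
def emscp_params(c: int, kernels=(1, 3, 5, 7)) -> int:
    """Weights of an EMSCP-style block under the assumptions above (bias ignored)."""
    g = c // len(kernels)                             # channels per branch
    branches = sum(g * g * k * k for k in kernels)    # one g -> g convolution per branch
    fuse = c * c                                      # final 1 x 1 convolution, c -> c
    return branches + fuse


def single_conv_params(c: int, k: int) -> int:
    """Weights of one c -> c convolution with a k x k kernel (bias ignored)."""
    return c * c * k * k


c = 256  # illustrative channel count
print(emscp_params(c), single_conv_params(c, 7))  # 409600 3211264
```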

However, our analysis reveals a fundamental limitation of the EMSCP module: its input-agnostic, static feature fusion strategy. Because the branch outputs are simply concatenated and then mixed by a 1 × 1 convolution whose weights are fixed after training, each scale branch contributes in the same way regardless of the input. In practice, the importance of different scales is highly dynamic and content-dependent: scenes with small objects demand greater attention to fine-grained features (captured by small kernels), whereas those with large objects rely more on global context (captured by large kernels). EMSCP's static fusion mechanism cannot adapt to these variations in the input, yielding a sub-optimal representation in which critical scale-specific information is diluted by redundant features, which limits its performance in complex scenarios.

To overcome this limitation, we propose the Adaptive Multi-Scale (AMS) module, which introduces a novel Adaptive Scale Attention mechanism. This mechanism turns feature fusion from a passive, static aggregation into an active, content-dependent selection process. Specifically, a lightweight weight-learning network first applies Global Average Pooling (GAP) [33] to generate a global context descriptor. From this descriptor, the network dynamically generates an attention weight for each parallel scale branch. In this manner, the AMS module selectively enhances the most informative scale-specific features while suppressing the others, so that the fused representation is tailored to the input content, significantly improving the model's adaptability and accuracy.

While this mechanism is inspired by channel attention networks such as SENet, the Adaptive Scale Attention operates on a fundamentally different dimension. SENet recalibrates the importance of features along the channel dimension. In contrast, the AMS module operates on the scale dimension, addressing the distinct challenge of dynamically allocating weights among parallel multi-scale branches. This approach elevates the concept of attention from the channel level to a structural, scale-wise level, offering a new perspective on multi-scale representation learning.

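The process begins by splitting the input feature map $X \in \mathbb{R}^{C \times H \times W}$ evenly into $N$ sub-feature maps $\{X_1, X_2, \dots, X_N\}$, with $X_i \in \mathbb{R}^{(C/N) \times H \times W}$, along the channel dimension. Each sub-feature map is then fed into a separate branch with a specific kernel size for feature extraction, yielding a corresponding output feature map $F_i$. With $N = 4$, this operation is formulated as:

$$F_1 = \mathrm{Conv}_{1 \times 1}(X_1), \quad F_2 = \mathrm{Conv}_{3 \times 3}(X_2), \quad F_3 = \mathrm{Conv}_{5 \times 5}(X_3), \quad F_4 = \mathrm{Conv}_{7 \times 7}(X_4)$$

Here, $\mathrm{Conv}_{1 \times 1}$, $\mathrm{Conv}_{3 \times 3}$, $\mathrm{Conv}_{5 \times 5}$, and $\mathrm{Conv}_{7 \times 7}$ represent convolution operations with kernel sizes of 1 × 1, 3 × 3, 5 × 5, and 7 × 7, respectively.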
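The split-and-branch step can be sketched in NumPy for a single sample. Everything here is illustrative: `conv2d_same` and `multi_scale_branches` are hypothetical helpers, the convolutions use zero "same" padding so every $F_i$ keeps the H × W size, each branch preserves its C/N channels (an assumption), and random weights stand in for learned ones.

```python
import numpy as np


def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Naive stride-1 convolution (cross-correlation, as in CNN layers) with zero padding.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # 'same' padding keeps H x W
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # add the contribution of kernel tap (i, j) at every output position
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def multi_scale_branches(x: np.ndarray, kernels=(1, 3, 5, 7), seed: int = 0):
    """Split x (C, H, W) into len(kernels) channel groups; convolve each with its own kernel size."""
    rng = np.random.default_rng(seed)
    chunks = np.split(x, len(kernels), axis=0)   # X_1..X_N, each (C/N, H, W)
    feats = []
    for xi, k in zip(chunks, kernels):
        g = xi.shape[0]
        wi = 0.1 * rng.standard_normal((g, g, k, k))  # random stand-in for learned weights
        feats.append(conv2d_same(xi, wi))            # F_i, each (C/N, H, W)
    return feats


x = np.random.default_rng(1).standard_normal((16, 8, 8))
feats = multi_scale_branches(x)
print([f.shape for f in feats])  # [(4, 8, 8), (4, 8, 8), (4, 8, 8), (4, 8, 8)]
```

A naive loop over kernel taps is used for readability; a real model would use a framework convolution layer.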

The core innovation of the AMS module is its adaptive weight-learning network, which dynamically generates a set of weights $W = \{w_1, w_2, \dots, w_N\}$ based on the input feature map $X$. This process consists of two primary stages: adaptive weight generation and weighted feature fusion.

Adaptive Weight Generation

The generation of adaptive weights is a three-step process designed to convert the input feature map into a compact and informative set of weights for each scale branch:

Global Information Squeeze: The process begins by capturing a global context descriptor from the input feature map. We hypothesize that the spatial distribution of features correlates with object size: small objects produce localized, sharp activations, while large objects produce more dispersed activations. To capture this, we use Global Average Pooling (GAP) to squeeze the entire feature map $X$ into a single channel-wise descriptor vector $z \in \mathbb{R}^{C}$, whose $c$-th element is:

$$z_c = \mathrm{GAP}(X_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} X_c(i, j)$$

This vector $z$ encapsulates the global response intensity of each channel, serving as a compact summary of the scene's characteristics.

Weight Excitation: The global descriptor $z$ is then fed into a lightweight network that learns the mapping from global information to branch importance. This is implemented as a single 1 × 1 convolution, which, applied to the 1 × 1 spatial descriptor, acts as an efficient fully connected layer across channels, producing a raw weight vector (logits) $\hat{W} = [\hat{w}_1, \dots, \hat{w}_N] \in \mathbb{R}^{N}$:

$$\hat{W} = \mathrm{Conv}_{1 \times 1}(z)$$

The parameters of this convolutional layer are learned end-to-end with the rest of the network.

Weight Normalization: To make the weights form a probability distribution and to encourage competition among the branches, the Softmax function is applied to the raw weight vector $\hat{W}$. This normalizes the weights so that they sum to 1 and amplifies the importance of the most relevant scale(s):

$$w_i = \frac{\exp(\hat{w}_i)}{\sum_{j=1}^{N} \exp(\hat{w}_j)}, \quad i = 1, \dots, N$$
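The three steps (GAP, 1 × 1 excitation, Softmax) can be sketched for one sample as follows. This is a hypothetical helper, not the authors' code: `w_exc` and `b_exc` stand in for the learned 1 × 1 convolution, and the bias term is an assumption.

```python
import numpy as np


def adaptive_scale_weights(x: np.ndarray, w_exc: np.ndarray, b_exc: np.ndarray) -> np.ndarray:
    """Return softmax-normalised branch weights W (shape (N,)) for one input.

    x: (C, H, W) input feature map; w_exc: (N, C) and b_exc: (N,) play the role
    of the 1 x 1 excitation convolution (the bias term is an assumption).
    """
    z = x.mean(axis=(1, 2))           # Global Average Pooling: z_c, shape (C,)
    logits = w_exc @ z + b_exc        # 1 x 1 conv on a 1 x 1 map is a linear layer -> (N,)
    logits = logits - logits.max()    # shift for numerical stability (softmax is shift-invariant)
    e = np.exp(logits)
    return e / e.sum()                # w_i = exp(w_hat_i) / sum_j exp(w_hat_j)


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8, 8))
weights = adaptive_scale_weights(x, rng.standard_normal((4, 16)), np.zeros(4))
print(weights, weights.sum())
```

Note that the excitation layer outputs one logit per scale branch (N values), whereas an SE block outputs one weight per channel (C values).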

Weighted Feature Fusion

After obtaining the adaptive weights $W$ and the output features $F_i$ from each of the four scale branches, the AMS module performs a dynamic weighted fusion. Each feature map $F_i$ is scaled by its corresponding learned weight $w_i$. These weighted feature maps are then fused via summation to produce the aggregated feature map $F_{\text{fused}}$:

$$F_{\text{fused}} = \sum_{i=1}^{N} w_i \cdot F_i$$

Finally, a 1 × 1 convolution is applied to the fused features to enable cross-channel information interaction and produce the final output $Y$ of the AMS module:

$$Y = \mathrm{Conv}_{1 \times 1}(F_{\text{fused}})$$
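The fusion and projection steps amount to a weighted sum followed by a per-pixel linear map across channels. The helper below is a hypothetical sketch: it assumes all branch outputs share one shape, omits the bias of the final convolution, and uses only two constant stand-in branches so the result is easy to check by hand.

```python
import numpy as np


def ams_fuse(feats, weights, w_out):
    """Weighted-sum fusion followed by a 1 x 1 projection.

    feats: list of N arrays, each (C', H, W); weights: (N,) softmax output;
    w_out: (C_out, C') weights of the final 1 x 1 convolution (bias omitted).
    """
    fused = sum(w * f for w, f in zip(weights, feats))   # F_fused = sum_i w_i * F_i
    return np.einsum("oc,chw->ohw", w_out, fused)        # Y = Conv_1x1(F_fused)


f_small = np.ones((4, 8, 8))          # stand-in output of a small-kernel branch
f_large = 2.0 * np.ones((4, 8, 8))    # stand-in output of a large-kernel branch
y = ams_fuse([f_small, f_large], np.array([0.25, 0.75]), np.eye(4))
print(y.shape, y[0, 0, 0])            # (4, 8, 8) 1.75
```

With a one-hot weight vector the module reduces to a single branch; the Softmax lets it interpolate smoothly between branches instead.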

This process allows the AMS module to dynamically re-calibrate the contribution of each scale branch based on the input features, shifting from a static aggregation to an active, content-aware selection of information.

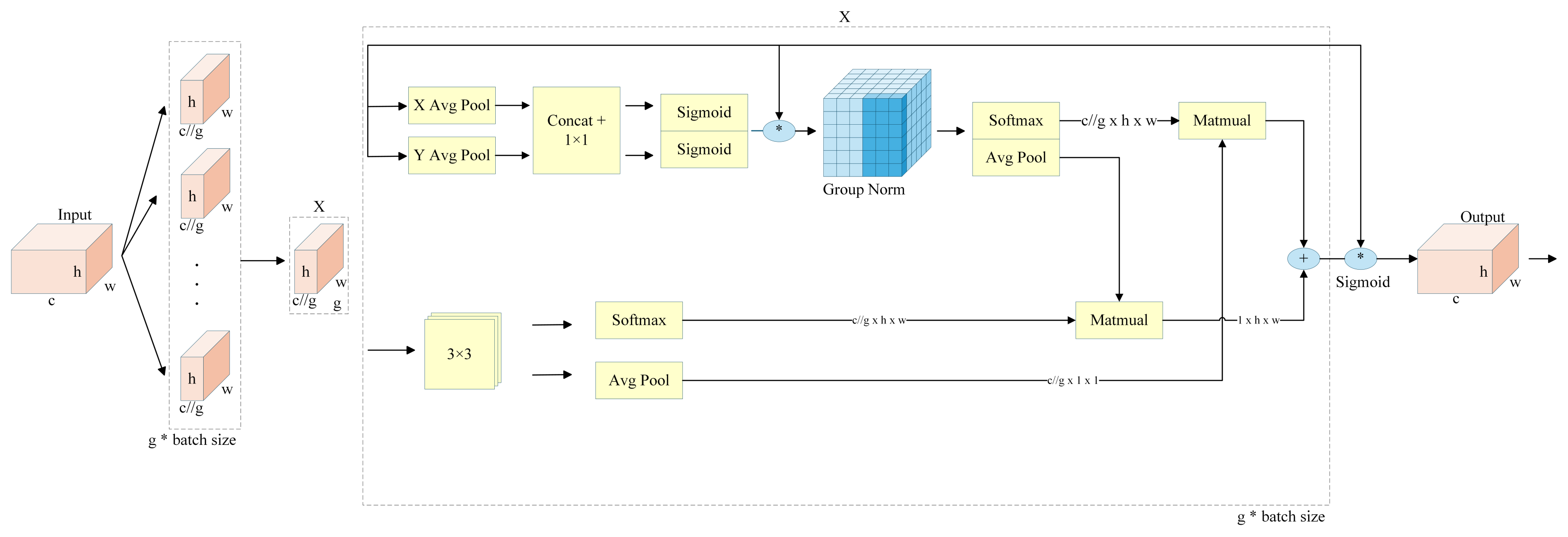

2.2.2. The EMA Mechanism

In complex visual perception tasks, the random movement of vehicles and pedestrians, frequent object occlusions, and backgrounds with features similar to targets all pose significant challenges. These multi-scale interfering factors can degrade a model’s recognition accuracy, leading to missed or false detections and compromising the safety of critical applications. To address these issues, we introduce the Efficient Multi-Scale Attention (EMA) mechanism.

Figure 3 shows the overall structure of the EMA mechanism.

Attention mechanisms were proposed to overcome the limitations of traditional Convolutional Neural Networks (CNNs) in complex scenarios. By learning to weight feature maps, attention modules enable a model to focus on salient target regions while suppressing irrelevant background information, enhancing its feature discrimination in a plug-and-play manner. Mainstream attention mechanisms fall into a few broad categories. The Squeeze-and-Excitation (SE) module models channel inter-dependencies but can suffer from information loss due to its dimensionality-reduction step. The CBAM module integrates both channel and spatial attention, showing the potential of cross-dimensional information interaction. More recently, the Coordinate Attention (CA) module [34] embedded positional information into channel attention by capturing long-range spatial dependencies through two 1D global pooling operations. However, these methods still have shortcomings in multi-scale feature fusion; for example, the limited receptive field of CA's 1 × 1 convolutions can hinder detailed global and cross-channel modeling.

To overcome these limitations, the Efficient Multi-Scale Attention (EMA) module was introduced. Its architecture is designed to capture rich multi-scale spatial dependencies while preserving channel information integrity. The core of EMA can be deconstructed into three main stages: (1) Channel Splitting and Reshaping for efficient feature representation, (2) a parallel multi-scale network to extract short- and long-range dependencies, and (3) a Cross-Spatial Learning mechanism to fuse these dependencies adaptively.

Channel Splitting and Reshaping

Unlike the SE module, which uses a dimensionality-reduction bottleneck that can lead to information loss, EMA retains complete channel information. Given an input feature map $X \in \mathbb{R}^{B \times C \times H \times W}$, the channel dimension $C$ is first split into $G$ groups. This operation is formulated as a tensor reshape:

$$X \in \mathbb{R}^{B \times C \times H \times W} \;\rightarrow\; X_g \in \mathbb{R}^{B \times G \times \frac{C}{G} \times H \times W}$$

To enable parallel processing and reduce computational overhead, the group dimension $G$ is then merged into the batch dimension $B$. This yields a reshaped tensor $X' \in \mathbb{R}^{(B \cdot G) \times \frac{C}{G} \times H \times W}$, which serves as the input to the subsequent parallel branches.
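Because the group axis sits directly after the batch axis, both reshapes are pure views of the same memory and lose no information. The NumPy sketch below illustrates this with illustrative sizes (B = 2, C = 32, G = 4); it shows the tensor bookkeeping only, not the EMA layers themselves.

```python
import numpy as np

B, C, H, W, G = 2, 32, 8, 8, 4          # illustrative sizes; C must be divisible by G
x = np.arange(B * C * H * W, dtype=np.float32).reshape(B, C, H, W)

xg = x.reshape(B, G, C // G, H, W)      # split channels into G groups
xb = xg.reshape(B * G, C // G, H, W)    # merge the group axis into the batch axis

# Row b * G + g of xb holds channels [g * C/G, (g + 1) * C/G) of sample b.
print(xb.shape)                         # (8, 8, 8, 8)
```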

Parallel Multi-Scale Feature Extraction

The reshaped tensor is fed into a dual-branch parallel sub-network. One branch employs a 3 × 3 convolution to capture local spatial context and short-range dependencies. The other branch uses two sequential 1 × 1 convolutions to model cross-channel correlations and long-range dependencies efficiently. This parallel design allows EMA to simultaneously perceive features at different scales and levels of abstraction.

Cross-Spatial Learning and Fusion

To adaptively fuse the information from the parallel branches, EMA employs a cross-spatial learning mechanism based on dot-product attention. This stage explicitly models pixel-level pairwise relationships to highlight global contextual information. Specifically, a Query (Q) is generated from the output of the 1 × 1 convolution branch (long-range context), while a Key (K) and Value (V) are generated from the output of the 3 × 3 convolution branch (local context).

After flattening the spatial dimensions, such that $Q, K, V \in \mathbb{R}^{HW \times d_k}$ for each of the $B \cdot G$ grouped samples, an attention map $A$ is computed by measuring the similarity between the query and the key:

$$A = \mathrm{Softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right)$$

where $d_k$ is the dimension of the key vectors. The resulting attention map $A \in \mathbb{R}^{HW \times HW}$ encodes the pairwise importance between every pixel in the long-range feature map and every pixel in the local feature map. This map then weights the values $V$ to produce an attended feature map:

$$O = A V$$

Then, the output tensor is reshaped back to the original dimensions $B \times C \times H \times W$.
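The cross-attention step can be sketched in NumPy for grouped tensors of shape (B·G, d, H, W). This is a minimal sketch under stated assumptions: the query is taken directly from the long-range branch and the key and value directly from the local branch (identity projections), and the sizes are illustrative.

```python
import numpy as np


def softmax(a: np.ndarray, axis: int = -1) -> np.ndarray:
    a = a - a.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def cross_spatial_attention(long_range: np.ndarray, local: np.ndarray):
    """Dot-product cross-attention between the two branches.

    long_range, local: (B*G, d, H, W). Q is taken from long_range, K and V from
    local, using identity projections (an assumption made for brevity).
    """
    bg, d, h, w = long_range.shape
    q = long_range.reshape(bg, d, h * w).transpose(0, 2, 1)   # (B*G, HW, d)
    k = local.reshape(bg, d, h * w).transpose(0, 2, 1)        # (B*G, HW, d)
    v = k                                                     # values share the local features
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d))     # A: (B*G, HW, HW)
    out = attn @ v                                            # O = A V: (B*G, HW, d)
    return out.transpose(0, 2, 1).reshape(bg, d, h, w), attn


B, G, d, H, W = 2, 4, 8, 6, 6
rng = np.random.default_rng(0)
o, A = cross_spatial_attention(rng.standard_normal((B * G, d, H, W)),
                               rng.standard_normal((B * G, d, H, W)))
y = o.reshape(B, G * d, H, W)   # back to B x C x H x W with C = G * d
print(A.shape, y.shape)          # (8, 36, 36) (2, 32, 6, 6)
```

Swapping `local` for `long_range` as the source of K and V would turn this into ordinary self-attention, which is the distinction drawn in the next paragraph.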

While EMA’s fusion mechanism uses a dot-product attention similar to standard Self-Attention (SA), it implements a form of Cross-Attention. In SA, the query, key, and value are derived from the same input tensor. In EMA, the query is derived from one branch (global context) and the key/value pair from another (local context). This design enables the module to explicitly model the relationships between long-range and short-range dependencies.

In our architecture, the EMA and AMS modules are highly complementary, addressing spatial and scale attention, respectively. EMA functions as a global spatial attention mechanism, modeling long-range dependencies to identify salient target regions. Subsequently, AMS performs fine-grained scale fusion, dynamically weighting parallel convolutional branches to select the optimal feature scale for the target. EMA first determines where to focus, and AMS then decides what scale to use, significantly enhancing the model’s detection performance in complex scenarios.

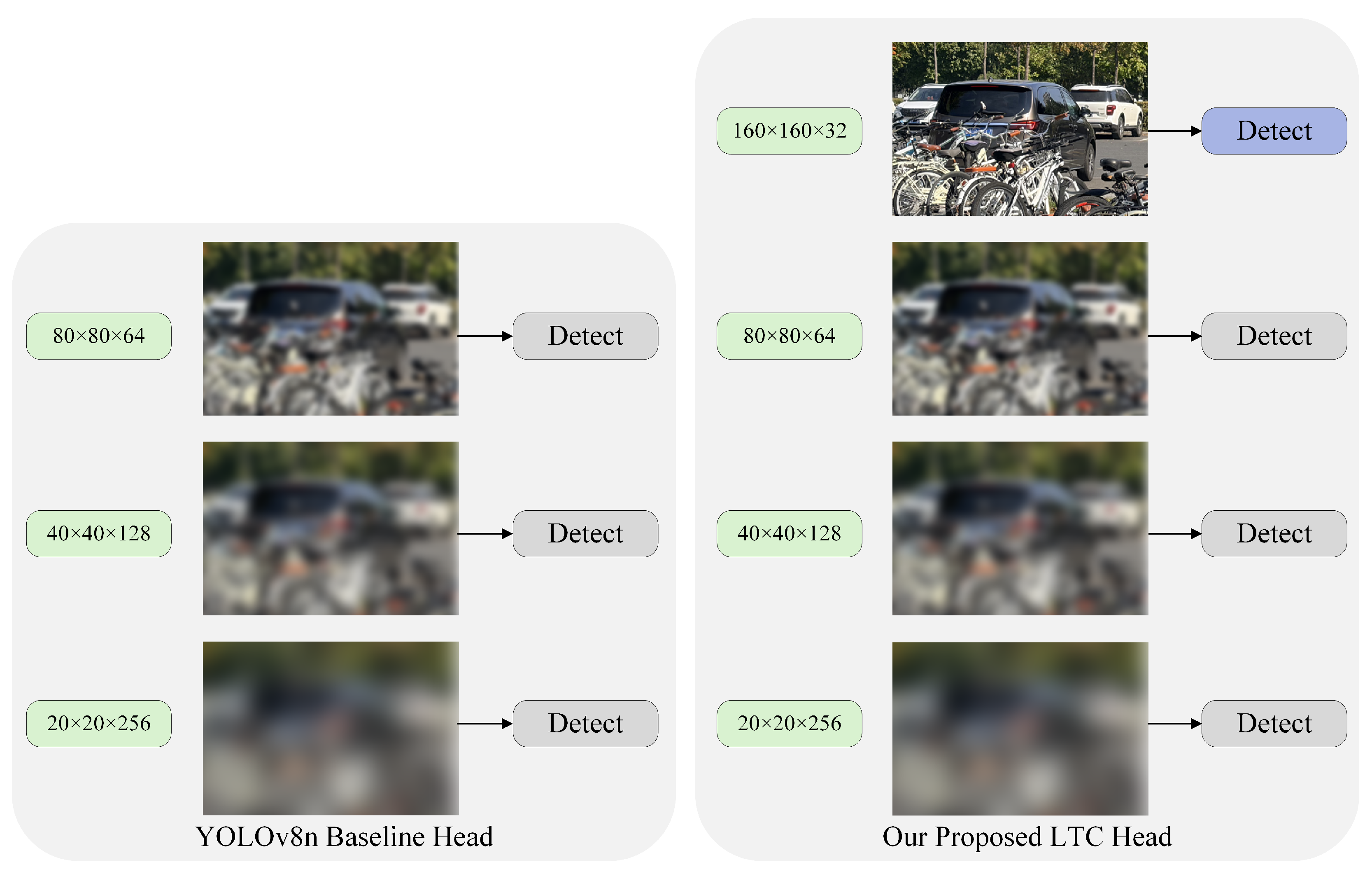

2.2.3. P2 Small Object Detection Head

Reliable detection of small objects is critical across multiple domains. Examples include tiny targets in remote sensing images, as well as distant vehicles and traffic lights in ground-level views. These objects are susceptible to feature degradation during the successive downsampling operations within the network backbone. To fully leverage the fine-grained features enhanced by the EMA and AMS modules and to counteract this information loss, we adapt the network's detection neck by incorporating a higher-resolution P2 feature layer (160 × 160).
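The extra density contributed by P2 can be counted directly. The sketch assumes a 640 × 640 input (consistent with the 160 × 160 P2 and 80 × 80 P3 sizes at strides 4 and 8) and that the P3, P4 and P5 levels of the YOLOv8n baseline are kept alongside the new P2 level; the helper name is hypothetical.

```python
def anchor_points(img_size: int = 640, strides=(8, 16, 32)):
    """Grid size per stride and total number of anchor points (one per grid cell)."""
    grids = {s: img_size // s for s in strides}
    return grids, sum(g * g for g in grids.values())


print(anchor_points())                          # ({8: 80, 16: 40, 32: 20}, 8400)
print(anchor_points(strides=(4, 8, 16, 32)))    # ({4: 160, 8: 80, 16: 40, 32: 20}, 34000)
```

Under these assumptions the P2 level alone adds 25,600 anchor points, roughly three times the 8,400 of the three baseline levels combined, which also indicates the extra computation the head incurs.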

Figure 4 provides a visual comparison of the detection heads of the YOLOv8n baseline and the LTC framework. Adding a P2 level follows established practice in detectors such as FPN and YOLOv5.

While this higher-resolution layer inherently aids in localizing small objects by utilizing shallow features with smaller receptive fields, our primary motivation stems from addressing a critical training instability in modern dynamic label assignment strategies. Dynamic assigners, such as the Task-Aligned Assigner used in YOLOv8, face “cold-start” and “high IoU sensitivity” challenges, particularly with small objects. In the initial training stages, when the model’s predictions are still inaccurate, even a minor offset in a predicted bounding box for a small target can cause its Intersection over Union (IoU) to drop precipitously below the matching threshold. Consequently, the assigner fails to find sufficient positive samples, leading to unstable gradients and inefficient convergence.
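The "high IoU sensitivity" of small objects follows from simple geometry. For a square box of side s shifted horizontally by dx pixels, IoU = (s - dx) / (s + dx), so the same 2-pixel error costs a small box far more overlap than a large one. The box sizes and offset below are illustrative.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def shifted_iou(size: float, dx: float) -> float:
    """IoU between a size x size ground-truth box and a copy shifted by dx pixels."""
    gt = (0.0, 0.0, size, size)
    pred = (dx, 0.0, size + dx, size)
    return iou(gt, pred)


for size in (8, 16, 64):
    print(size, round(shifted_iou(size, 2.0), 3))
# 8 0.6
# 16 0.778
# 64 0.939
```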

The introduction of the P2 detection head directly mitigates this issue. The denser anchor points and finer-grained features on the 160 × 160 map provide a more robust basis for matching. Even with slight prediction inaccuracies, there is a higher probability that a prediction will achieve sufficient IoU with a ground-truth object. This ensures a stable and high-quality stream of positive samples for the label assigner, especially in the crucial early phases of training. This stabilization accelerates model convergence and leads to superior final detection performance for small targets.
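One way to see the "more robust basis for matching" is to count how many anchor points a small object can draw on at each stride. The sketch assumes, as in common task-aligned assigner implementations, that an anchor point is a candidate for a ground-truth box only if its grid-cell centre lies strictly inside the box; the 12 × 12 box coordinates are hypothetical.

```python
import math


def centers_inside(box, stride: int, eps: float = 1e-9) -> int:
    """Count grid-cell centres at (i + 0.5) * stride lying strictly inside box = (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box

    def count(lo: float, hi: float) -> int:
        # candidate centres along one axis, up to just beyond the box edge
        return sum(1 for i in range(math.ceil(hi / stride) + 1)
                   if lo + eps < (i + 0.5) * stride < hi - eps)

    return count(x1, x2) * count(y1, y2)


box = (101, 101, 113, 113)  # a hypothetical 12 x 12 px object
print(centers_inside(box, 8), centers_inside(box, 4))  # 1 9
```

Halving the stride roughly quadruples the candidate pool for a given small box, which is what gives the assigner more positives to choose from early in training.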

As specified in our network architecture, the P2 feature map is generated by first upsampling the P3 feature layer (80 × 80) from the neck. This upsampled map is then fused via concatenation with the shallow C2 feature layer (160 × 160) from the backbone. The resulting feature map is processed by a final C2f block before being fed into the detection head.
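The tensor shapes along this path can be traced with a small NumPy sketch. It assumes nearest-neighbour 2× upsampling and uses hypothetical channel counts (128 for P3, 64 for C2); the C2f block that follows is omitted, and batch dimensions are dropped for brevity.

```python
import numpy as np


def nearest_upsample2x(x: np.ndarray) -> np.ndarray:
    """(C, H, W) -> (C, 2H, 2W) by repeating every pixel along both spatial axes."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


p3 = np.zeros((128, 80, 80))   # neck P3 feature map (hypothetical channel count)
c2 = np.zeros((64, 160, 160))  # backbone C2 feature map (hypothetical channel count)

p2_in = np.concatenate([nearest_upsample2x(p3), c2], axis=0)  # channel-wise concatenation
print(p2_in.shape)  # (192, 160, 160)
```

Concatenation keeps both the upsampled semantic features and the shallow spatial detail intact, leaving it to the subsequent C2f block to mix them.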