1. Introduction

Hyperspectral imaging (HSI) captures large-scale data comprising hundreds of contiguous spectral bands and integrates spatial information to form a data cube, enabling detailed land cover analysis [1]. This technology has been successfully applied across diverse domains, including environmental monitoring, geological exploration, precision agriculture, defense and security [2], and medical imaging [3]. In recent years, substantial research efforts have been dedicated to advancing HSI processing techniques [4,5,6]. Within this context, hyperspectral image classification (HSIC) has emerged as a key research direction, establishing a systematic mapping between image pixels and predefined land cover categories by leveraging both spectral and spatial features inherent in the data. Nevertheless, HSIC continues to face several challenges, primarily stemming from the high-dimensional nature of the data, significant inter-band correlation, severe information redundancy, and inherently limited spatial resolution.

Over the past few decades, traditional HSIC methods have partially mitigated issues such as band redundancy and large data volume through manually designed features. In early research, the Support Vector Machine (SVM) was widely adopted for classification with spectral–spatial features, and several improved variants were developed to enhance model stability and discriminative ability [7,8]. For example, multi-kernel SVM has been shown to substantially improve classification accuracy by incorporating both spatial context and spectral information [9]. Other techniques, including K-Nearest Neighbors (KNN) [10] and Random Forests (RFs) [11], have also been used effectively for class discrimination by exploiting inter-band correlations. Nevertheless, these conventional approaches generally depend on handcrafted feature engineering, which not only demands substantial domain expertise but also tends to generalize poorly to structurally complex HSI data.

In recent years, Convolutional Neural Networks (CNNs) have garnered significant attention and widespread adoption in HSIC. By leveraging mechanisms such as local connectivity, weight sharing, and hierarchical sparse representation, CNNs effectively extract discriminative joint spectral–spatial features, thereby reducing reliance on manually designed features. For instance, Hu et al. introduced CNNs into HSIC, processing spectral information via 1D convolutional operations and achieving promising results [12]. Zhao and Du applied 2D convolution to extract spatial features across multiple spectral dimensions, enabling accurate characterization of detailed contours [13]. To capture deeper joint spectral–spatial representations, Hamida et al. [14] employed 3D CNN to simultaneously model spectral and spatial information. Roy et al. [15] further proposed the HybridSN model, which integrates 2D-CNN and 3D-CNN architectures and demonstrates notable performance advantages.

However, conventional convolution operations are constrained by fixed kernel sizes, resulting in limited receptive fields and restricted capacity for modeling complex spatial structures. To address this limitation, He et al. [16] developed an end-to-end M3D-CNN that extracts multi-scale spectral and spatial features from HSI in parallel. Sun et al. [17] designed M2FNet, which combines multi-scale 3D–2D hybrid convolution with morphological enhancement modules to achieve effective fusion of heterogeneous data. Yang et al. [18] proposed a dual-branch network utilizing dilated convolution and diverse kernel sizes to extract multi-scale spectral–spatial features, further improving classification accuracy.
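To make the link between kernel size, dilation, and receptive field concrete, the following is a minimal sketch of the standard receptive-field arithmetic for stacked convolutions. The function names and the example layer configuration are illustrative assumptions and are not taken from any of the cited networks.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def stacked_receptive_field(layers):
    """Receptive field of a stack of conv layers along one axis.

    `layers` is a list of (kernel_size, dilation, stride) tuples
    (a hypothetical configuration format chosen for this sketch).
    """
    receptive_field = 1
    jump = 1  # distance in input pixels between adjacent outputs of the current layer
    for kernel_size, dilation, stride in layers:
        span = effective_kernel_size(kernel_size, dilation)
        receptive_field += (span - 1) * jump
        jump *= stride
    return receptive_field


# Three 3-tap layers: plain convolution versus dilations 1, 2, 4 (stride 1 throughout).
plain = [(3, 1, 1), (3, 1, 1), (3, 1, 1)]
dilated = [(3, 1, 1), (3, 2, 1), (3, 4, 1)]
print(stacked_receptive_field(plain), stacked_receptive_field(dilated))
```

With the same number of weights per layer, the dilated stack sees a wider context, which is one reason dilation and mixed kernel sizes are used for multi-scale feature extraction.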

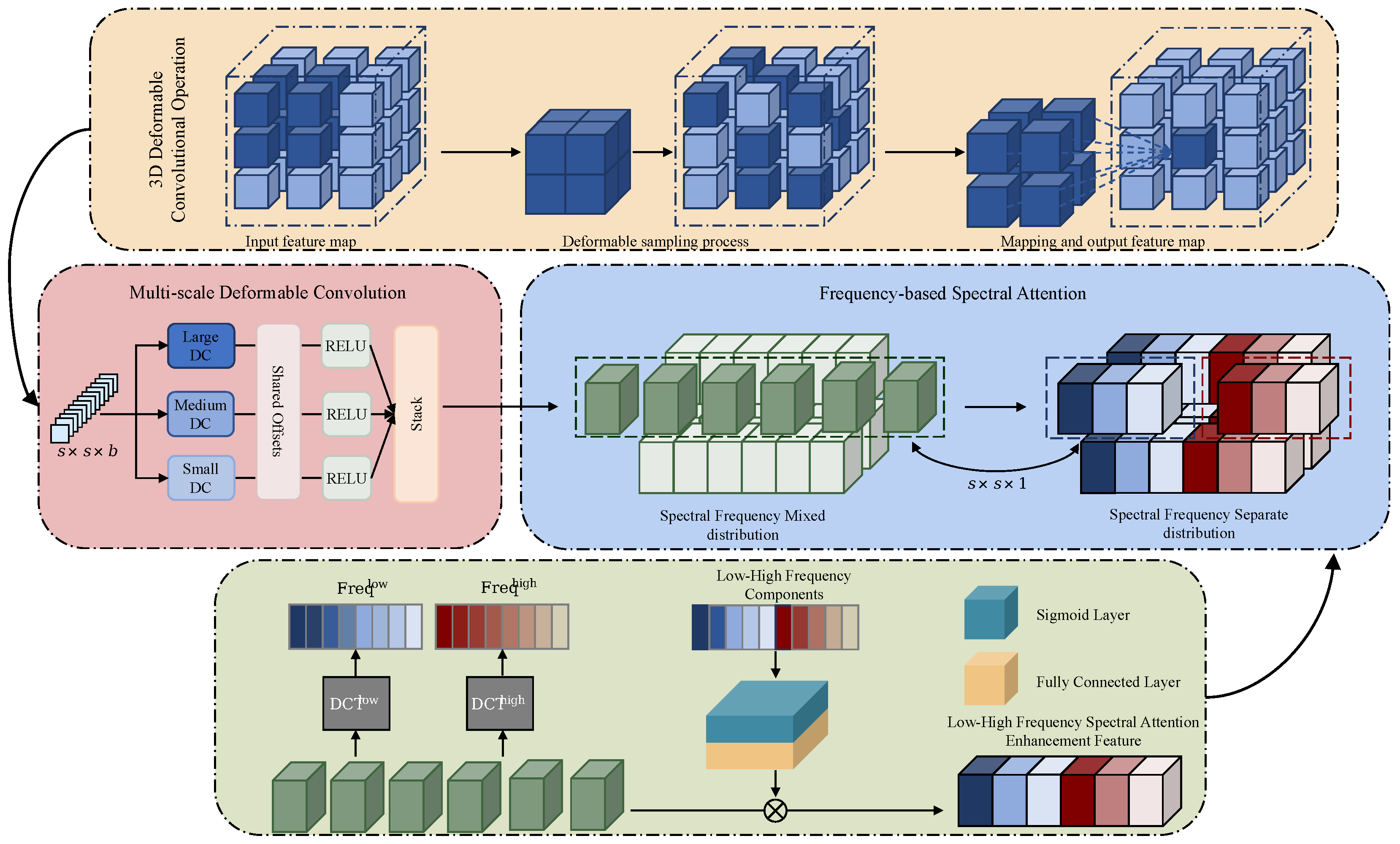

Despite these advances, static convolution-based methods exhibit inherent shortcomings: their fixed-weight parameters struggle to adapt to the complex and heterogeneous terrain distributions in HSI. In contrast to dynamic convolution, they fail to accurately capture subtle inter-class spectral variations, which ultimately limits feature discriminability.

Building on the remarkable success of the self-attention mechanism in Transformers [

19] for natural language processing, researchers have increasingly explored its potential in visual applications [

20]. Benefiting from its powerful capacity for modeling global dependencies, Transformer architectures have been introduced to HSIC tasks to capture global spectral interactions. Hong et al. [

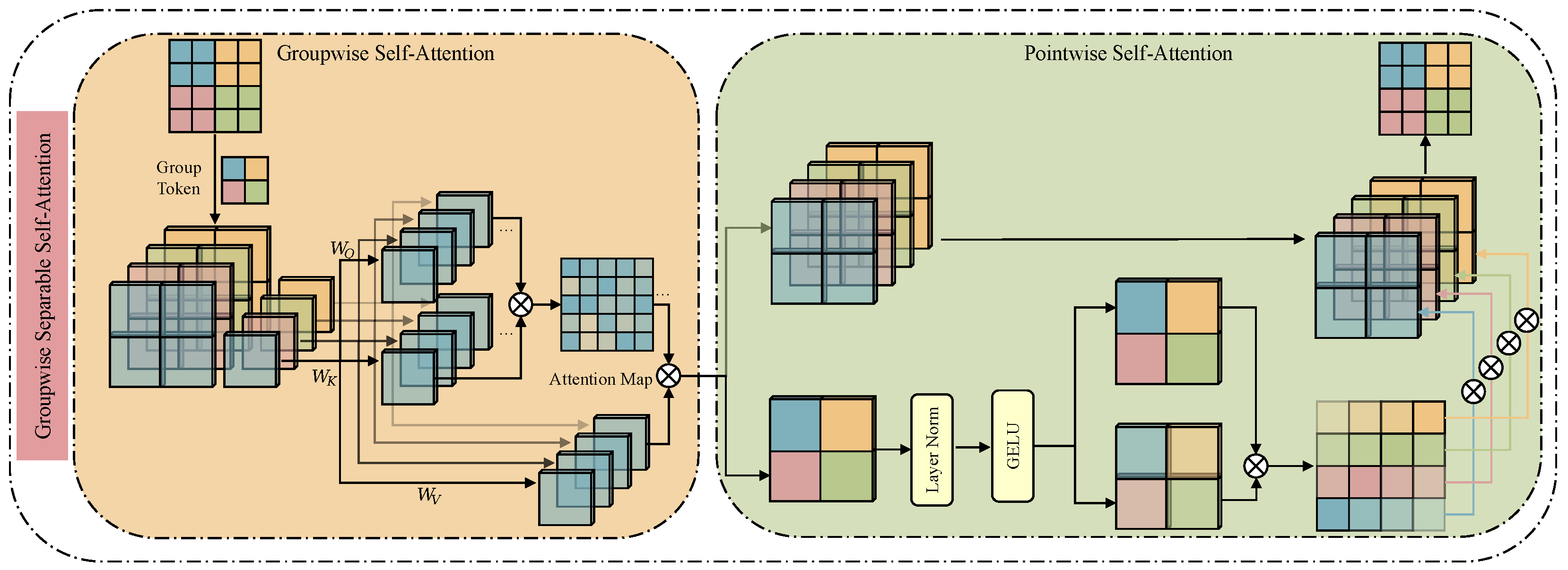

21] systematically evaluated the applicability of Transformers in HSIC and proposed SpectralFormer, which captures local contextual relationships between adjacent spectral bands via group-wise spectral embedding, and introduces a cross-layer adaptive fusion mechanism to alleviate the loss of shallow features in deep networks. Subsequently, He et al. [

22] introduced a dual-branch Transformer architecture: the spatial branch employs window and shifted window mechanisms to extract both local and global spatial features, while the spectral branch captures long-range dependencies across bands, achieving effective synergistic fusion of spectral–spatial information. Furthermore, the complementarity between CNNs and Transformers has attracted growing research interest. For instance, Wu et al. [

23] proposed SSTE-Former, which leverages the local feature extraction capability of CNNs alongside the global modeling capacity of Transformers. Zhao et al. [

24] designed CTFSN, a dual-branch network that integrates local and global features, while Yang et al. [

25] developed a parallel interactive framework named ITCNet for multi-level feature fusion. While CNN-Transformer hybrid models integrate local perception and global attention mechanisms for HSIC, they still exhibit notable limitations: the quadratic computational complexity of Transformers compromises spectral sequence integrity, while the fixed convolutional kernels of CNNs struggle to adapt to irregular land-cover boundaries, resulting in loss of fine details. The FreqMamba framework addresses this by incorporating a Mamba module, which employs a selective SSM to capture long-range dependencies across the full spectral bands with linear complexity, while dynamically focusing on discriminative features. By effectively combining local feature extraction with global contextual modeling, the framework enhances joint spectral–spatial representation capability for complex land-cover types, while significantly improving computational efficiency.
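The scaling argument above can be illustrated with rough operation counts. This is a back-of-the-envelope sketch only: the cost formulas are the usual asymptotic estimates for self-attention and a recurrent state space scan, and the function names, feature width, and state size are assumptions chosen for illustration rather than measurements of FreqMamba.

```python
def attention_pairwise_cost(seq_len: int, dim: int) -> int:
    """Rough multiply-add count for Q @ K^T plus the attention-weighted sum over V."""
    return 2 * seq_len * seq_len * dim


def ssm_scan_cost(seq_len: int, dim: int, state_size: int) -> int:
    """Rough multiply-add count for one recurrent pass (state update plus readout)."""
    return 2 * seq_len * dim * state_size


# Treat each spectral band as one token; dim and state_size are hypothetical.
for bands in (100, 200, 400):
    attn = attention_pairwise_cost(bands, dim=64)
    scan = ssm_scan_cost(bands, dim=64, state_size=16)
    print(bands, attn, scan, round(attn / scan, 2))
```

Doubling the number of bands quadruples the attention estimate but only doubles the scan estimate, which is the gap the linear-complexity claim refers to.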

Furthermore, frequency-domain transforms such as the Discrete Cosine Transform (DCT) have been utilized to enhance channel attention mechanisms. For instance, in image classification, DCT-based attention improves feature discriminability by compressing frequency-domain information [26]. However, these methods tend to be biased toward low-frequency information, potentially leading to the loss of high-frequency details in HSI, such as subtle spectral variations. To address this, the proposed FMDC module introduces a dual-frequency preservation strategy, as Equation (7) shows, which simultaneously captures low-frequency global trends and high-frequency local details.
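As a rough illustration of what separating low-frequency trends from high-frequency details means, the sketch below computes an unnormalized 1D DCT-II and splits its energy at a cutoff index. It is not the paper's Equation (7) or the FMDC module; the function names, the 1D setting, and the cutoff are assumptions made for this example.

```python
import math


def dct_ii(signal):
    """Unnormalized 1D DCT-II of a list of floats."""
    n = len(signal)
    return [
        sum(x * math.cos(math.pi * (i + 0.5) * k / n) for i, x in enumerate(signal))
        for k in range(n)
    ]


def dual_frequency_energy(signal, cutoff):
    """Split DCT energy into a low band (k < cutoff) and a high band (k >= cutoff)."""
    coeffs = dct_ii(signal)
    low = sum(c * c for c in coeffs[:cutoff])
    high = sum(c * c for c in coeffs[cutoff:])
    return low, high


smooth = [1.0, 1.0, 1.0, 1.0]        # flat response: energy sits in the DC term
oscillating = [1.0, -1.0, 1.0, -1.0]  # rapid variation: energy sits in high-index terms
print(dual_frequency_energy(smooth, cutoff=2))
print(dual_frequency_energy(oscillating, cutoff=2))
```

A descriptor built only from the low band would treat the oscillating signal as nearly empty, which is the bias that a dual-frequency strategy is meant to avoid.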

In recent years, the Mamba architecture [27], based on State Space Models (SSMs), has emerged as an efficient sequence modeling method. Owing to its selective mechanism and hardware-aware optimization, it achieves linear computational complexity with respect to sequence length while demonstrating performance comparable to Transformers in long-range modeling. HSI data are well suited to the Mamba architecture: the spectral dimension inherently constitutes a long-sequence signal with strong inter-band correlations, enabling the dynamic weighting mechanism of the SSM to adaptively focus on discriminative spectral bands. Furthermore, compared to the fixed forgetting mechanism of traditional RNNs, the selective SSM dynamically adjusts state transitions through input-dependent parameterization. This characteristic proves particularly advantageous for HSIC scenarios characterized by diverse land-cover categories and highly variable features. For instance, Huang et al. [28] developed a dual-branch spectral–spatial Mamba model that overcomes the quadratic complexity bottleneck with linear computational complexity, enabling efficient fusion of spectral–spatial features. Yang et al. [29] proposed HSIMamba, which leverages bidirectional SSMs to effectively extract both spectral and spatial features from hyperspectral data while maintaining high computational efficiency. Yao et al. [30] introduced SpectralMamba, a framework that tackles spectral variability, redundancy, and computational challenges from a sequence-modeling perspective. It integrates sequential scanning with gated spatial–spectral merging to encode latent spatial regularity and spectral characteristics, yielding robust discriminative representations. Considering the high dimensionality of hyperspectral data, He et al. [31] designed a 3D spectral–spatial Mamba (3DSS-Mamba) framework, which introduces a 3D selective scanning mechanism to perform pixel-level scanning along both spectral and spatial dimensions. Specifically, it constructs five distinct scanning paths to systematically analyze the impact of dimensional priority on feature extraction.
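The idea of input-dependent state transitions can be sketched with a toy scalar recurrence. This is a simplified illustration under stated assumptions, not the Mamba kernel or any of the cited models: the state is a single scalar, and the weights `w_delta`, `w_b`, and `w_c` as well as the function names are hypothetical.

```python
import math


def softplus(z: float) -> float:
    """Smooth positive mapping used here for the step size."""
    return math.log1p(math.exp(z))


def selective_scan(xs, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """One linear-time pass: h_t = a_bar_t * h_{t-1} + b_bar_t * x_t, y_t = c_t * h_t."""
    h = 0.0
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)  # input-dependent step size
        a_bar = math.exp(delta * a)    # decay factor in (0, 1) because a < 0
        b_bar = delta * (w_b * x)      # input-dependent input gate
        h = a_bar * h + b_bar * x      # state update
        ys.append((w_c * x) * h)       # input-dependent readout
    return ys


def bidirectional_scan(xs):
    """Sum of a forward pass and a backward pass realigned to the input order."""
    forward = selective_scan(xs)
    backward = selective_scan(xs[::-1])[::-1]
    return [f + b for f, b in zip(forward, backward)]


spectrum = [0.2, 0.5, 0.9, 0.5, 0.2]  # made-up reflectance values for one pixel
print(bidirectional_scan(spectrum))
```

Each element is visited once per direction, so the cost grows linearly with the number of bands, and the decay applied to the state changes with every input value instead of being fixed in advance.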

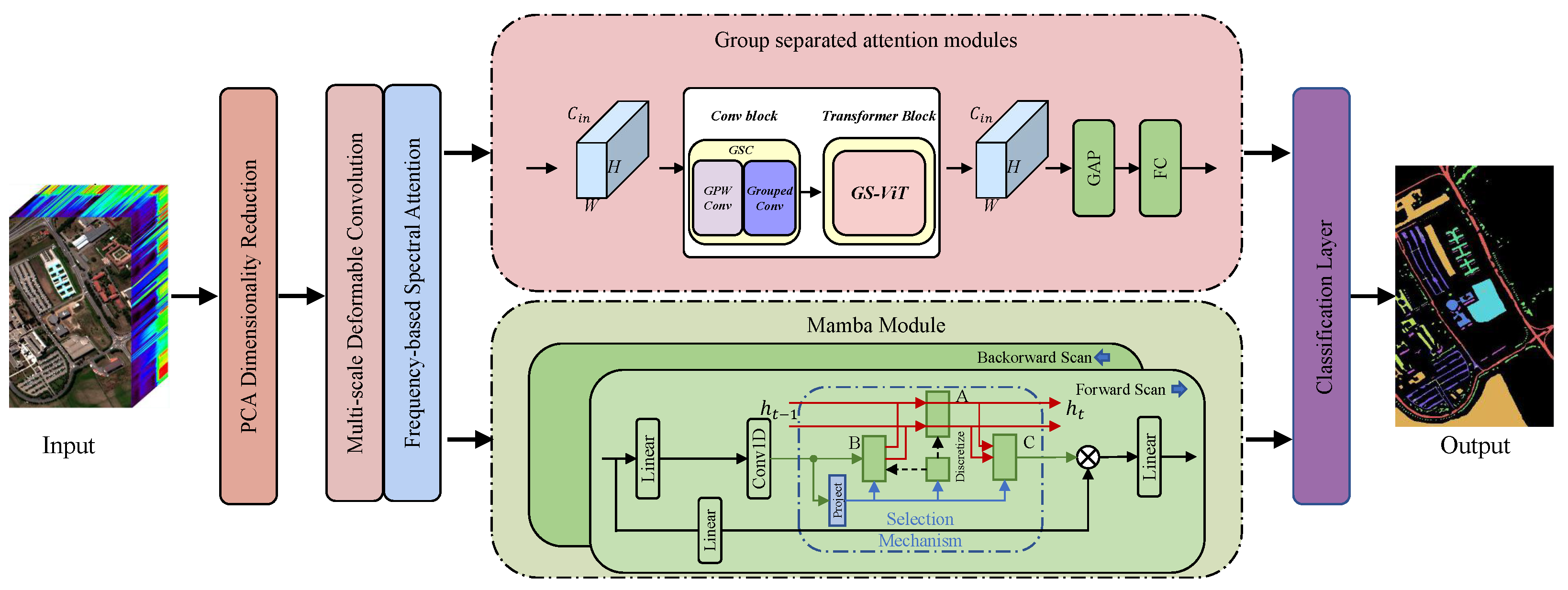

Inspired by the CNN-Transformer hybrid architecture, we propose FreqMamba, a novel hybrid architecture that integrates a CNN for local feature extraction, a customized self-attention mechanism for global context, and a Mamba-based SSM for efficient long-range dependency modeling. Our contributions are summarized as follows:

Innovative Frequency-based Multi-Scale Deformable Convolutional Feature Extraction Module (FMDC): The module combines dynamic convolution with a frequency-domain attention mechanism so that its parameters are learned dynamically. It can therefore adapt to irregular object contours and complex target shapes, which enhances the discriminative power of the extracted features.

Group-Separated Attention Module Combining Local and Global Features: The module uses a grouping operation to decompose global self-attention into multiple parallel, lightweight sub-processes, which greatly reduces the parameter count and computational cost. It works together with grouped convolution to enhance local detail extraction, improving the discriminative power of the model while keeping computation efficient.

Application of Efficient Mamba Architecture in HSIC: This study uses the SSM-based Mamba architecture to capture spectral and spatial dependencies efficiently. Through the proposed dual-branch Mamba structure with bidirectional scanning, the model can systematically characterize the dynamic attributes and sequential dependencies in the data while maintaining computational efficiency.

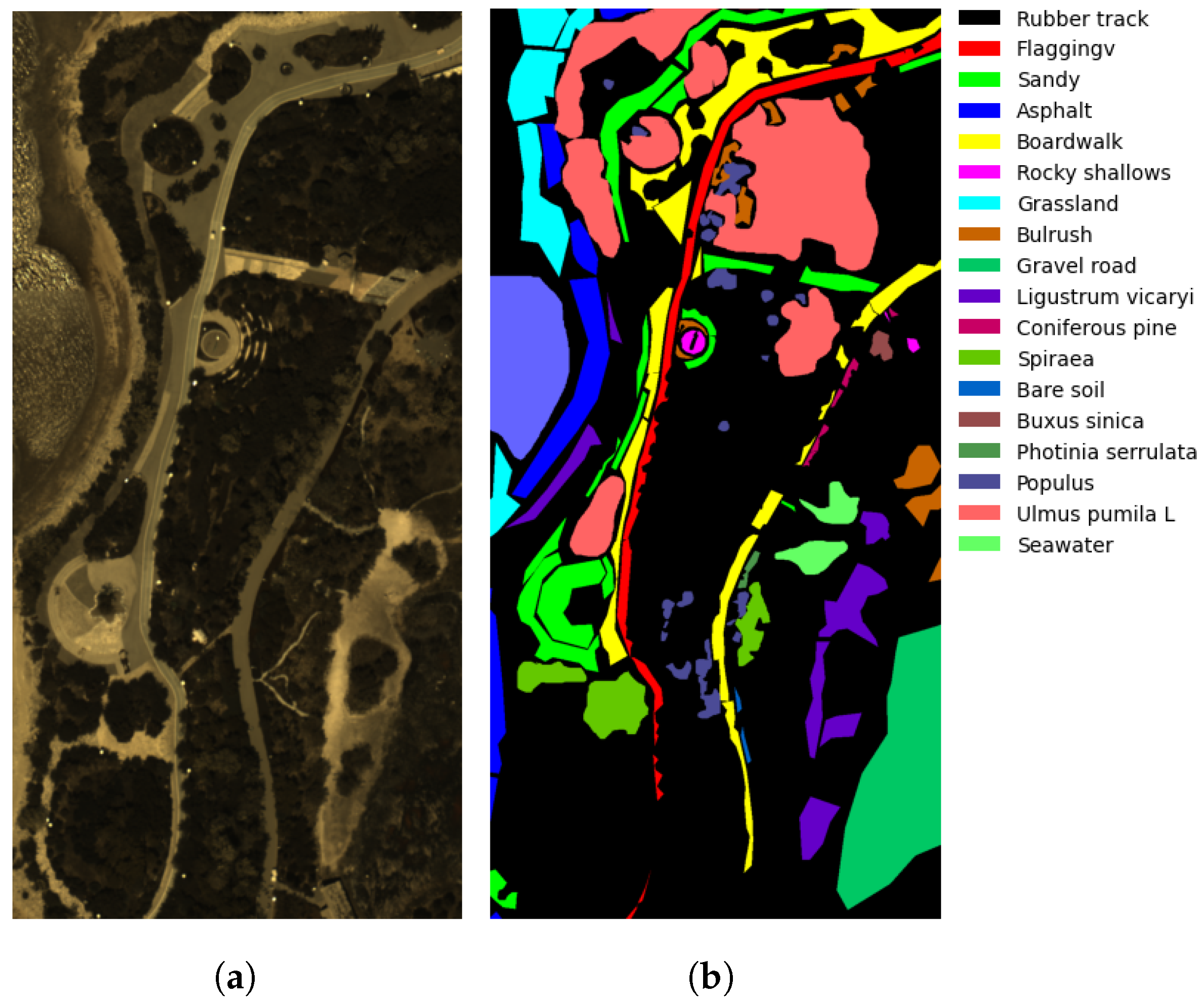

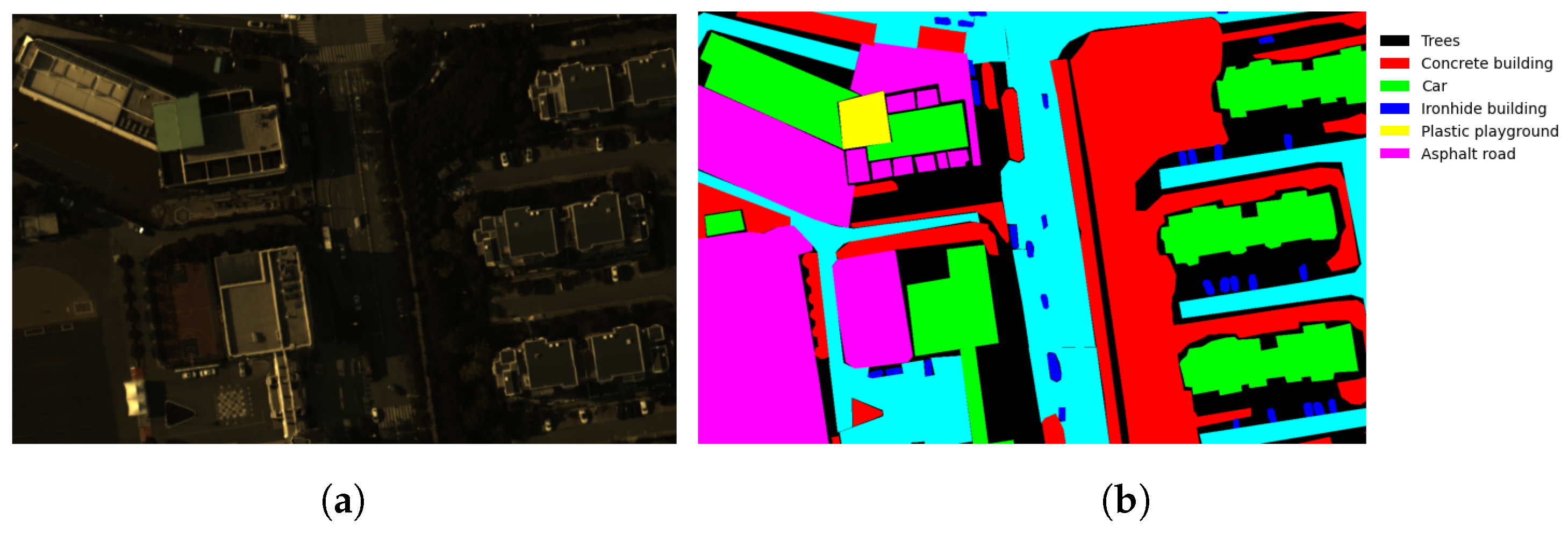

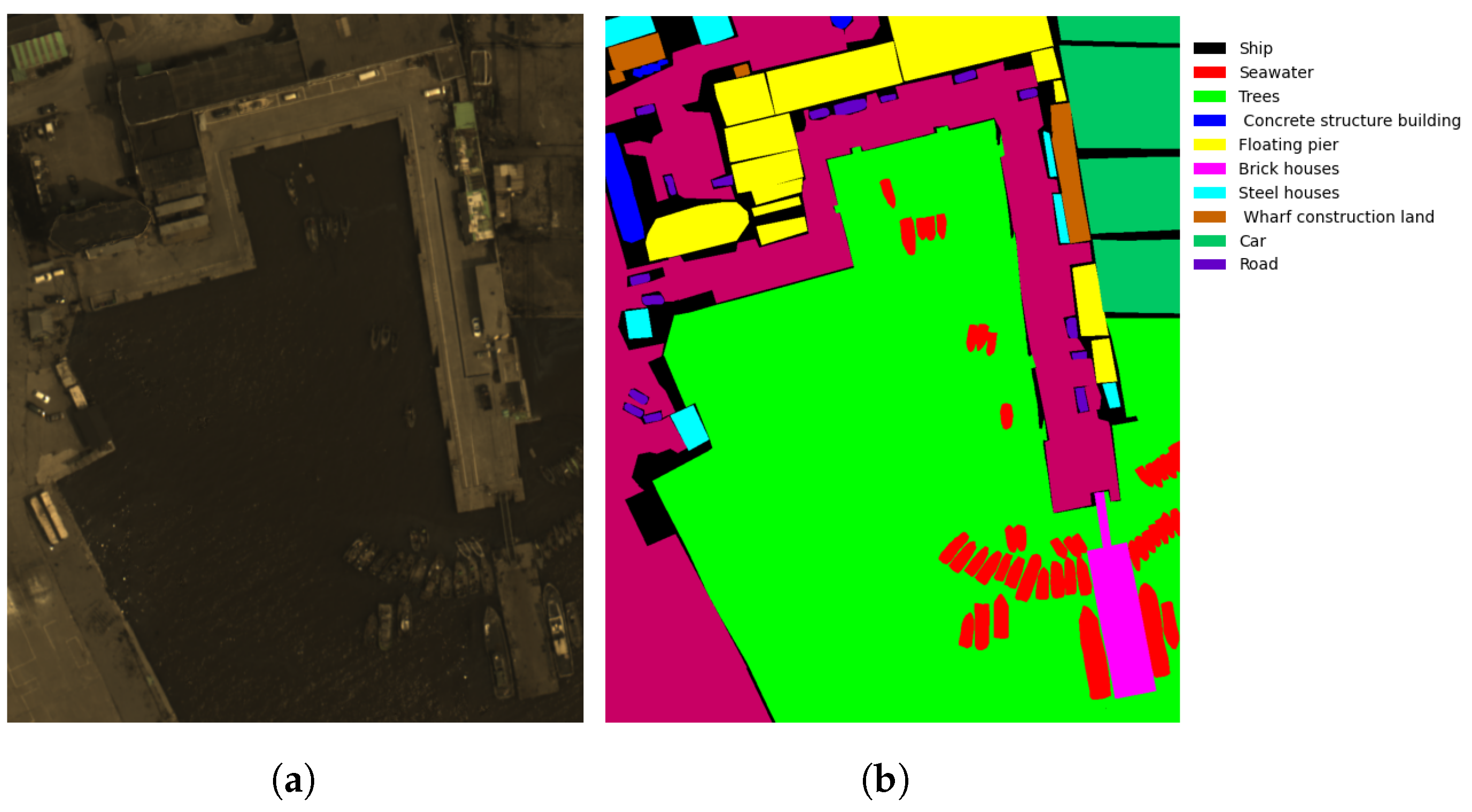

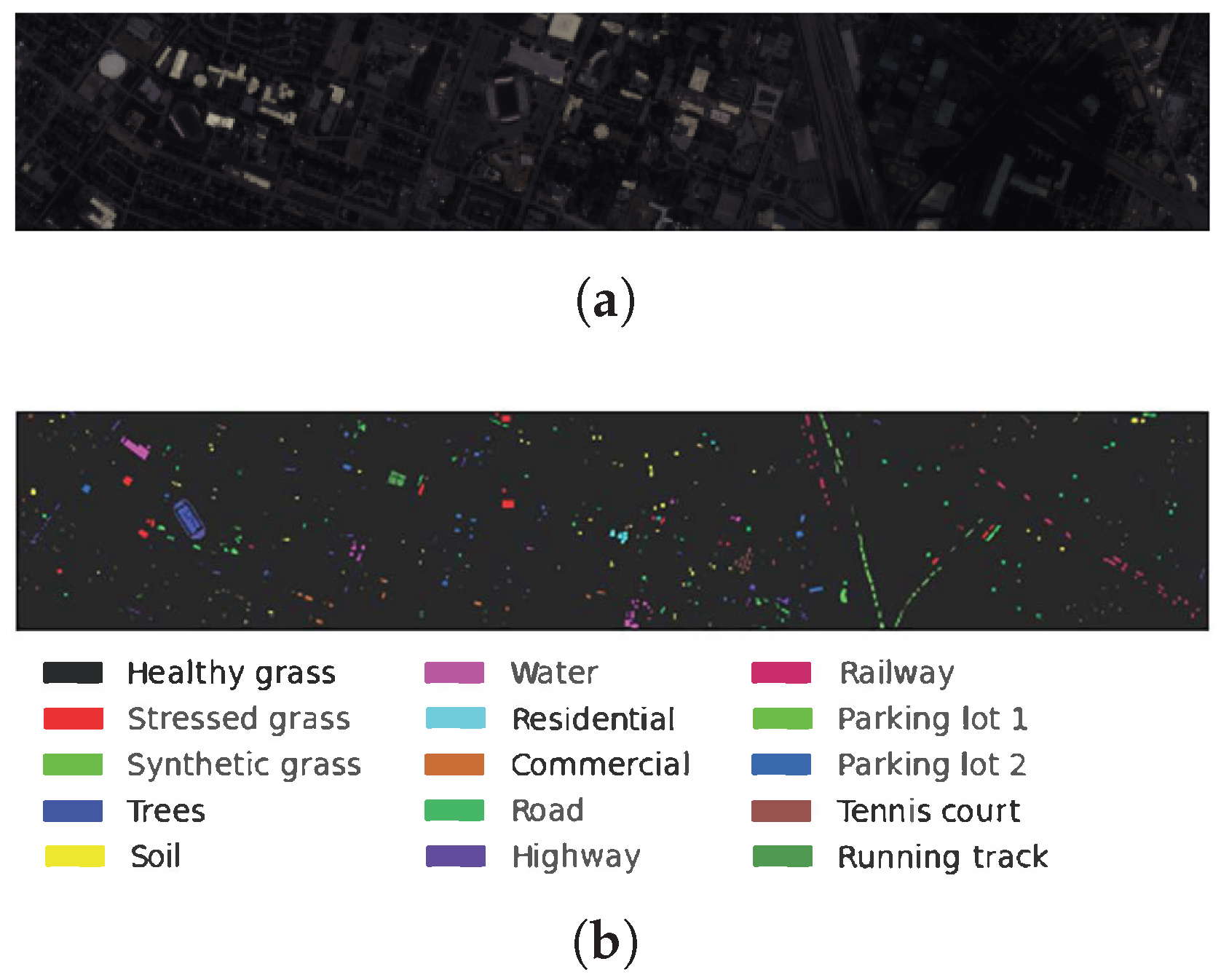

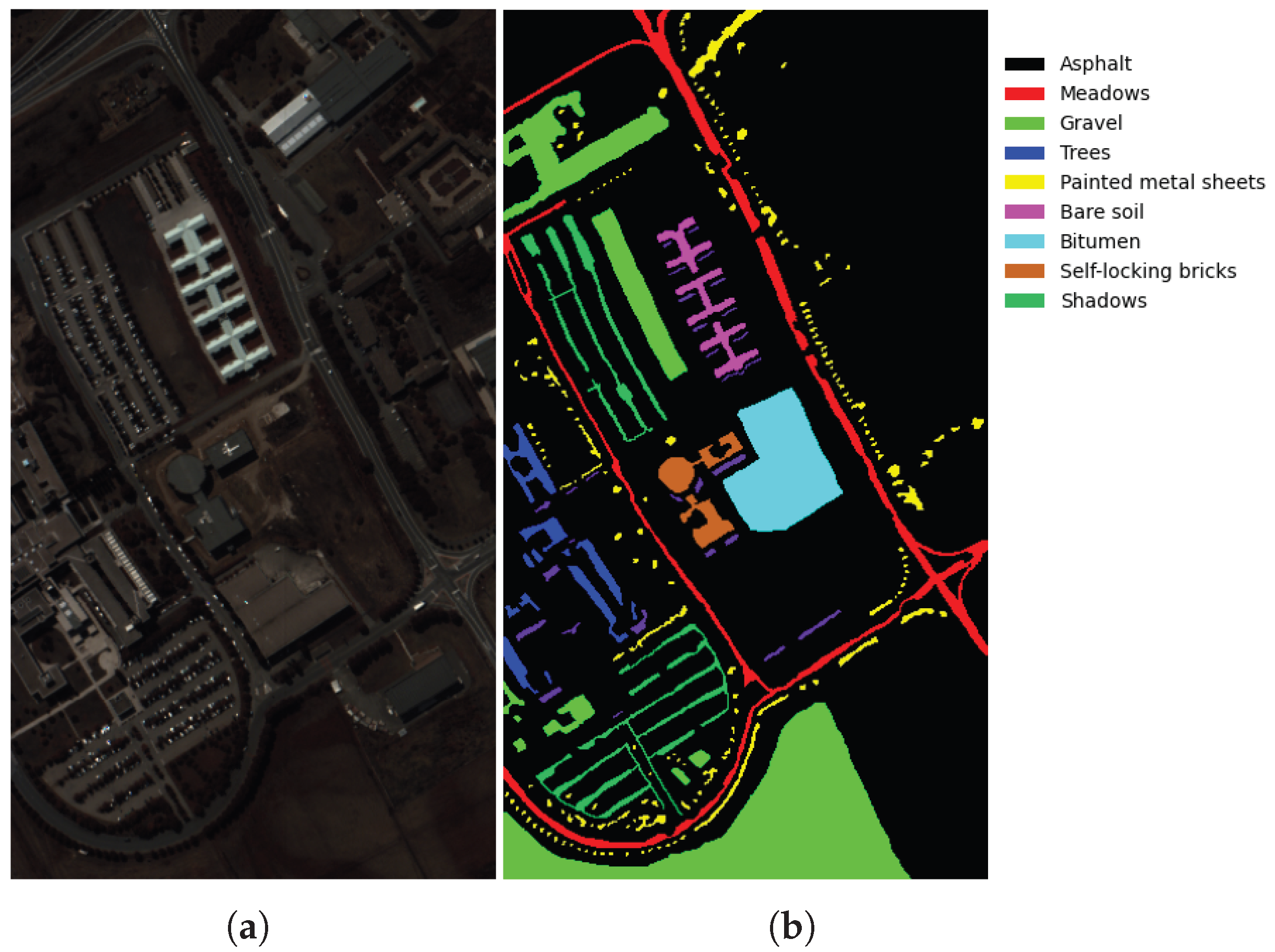

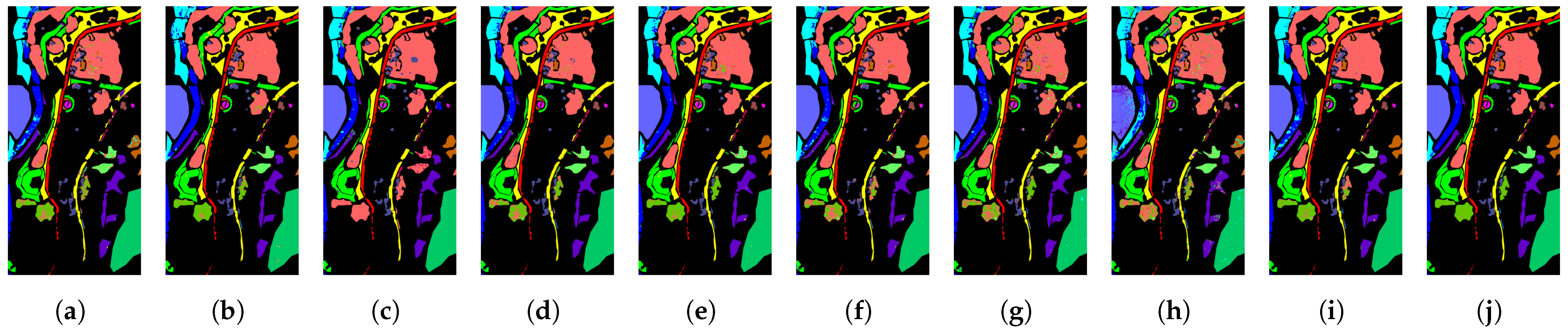

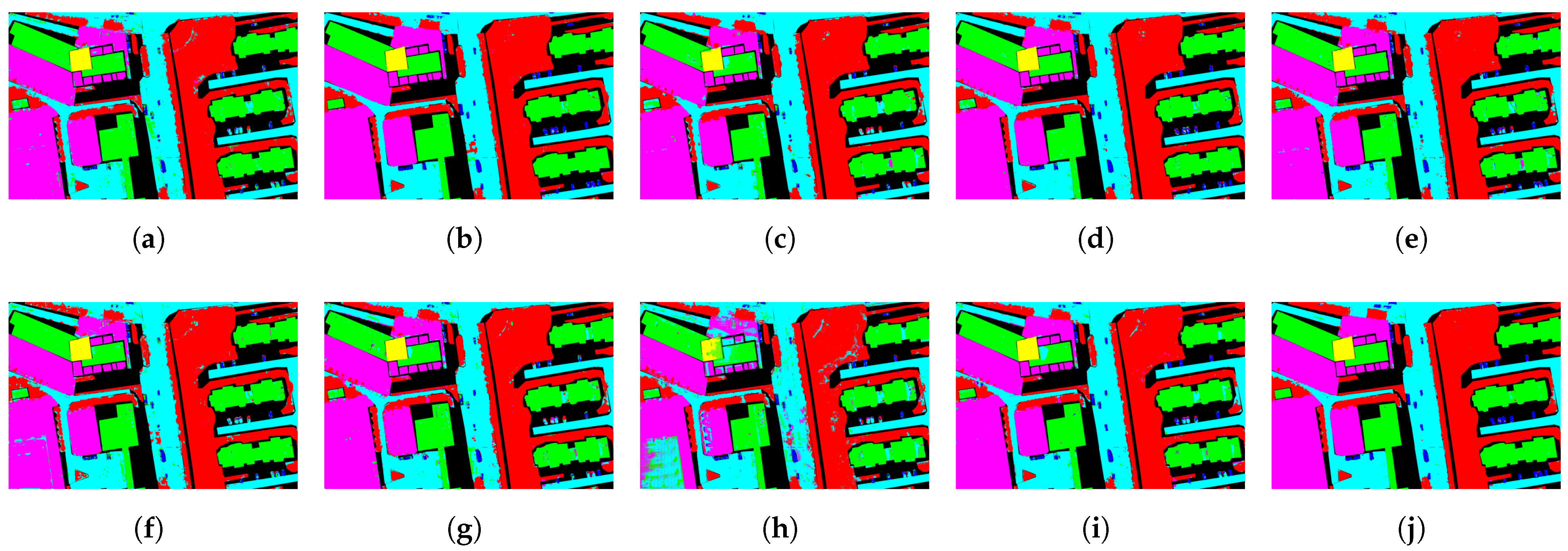

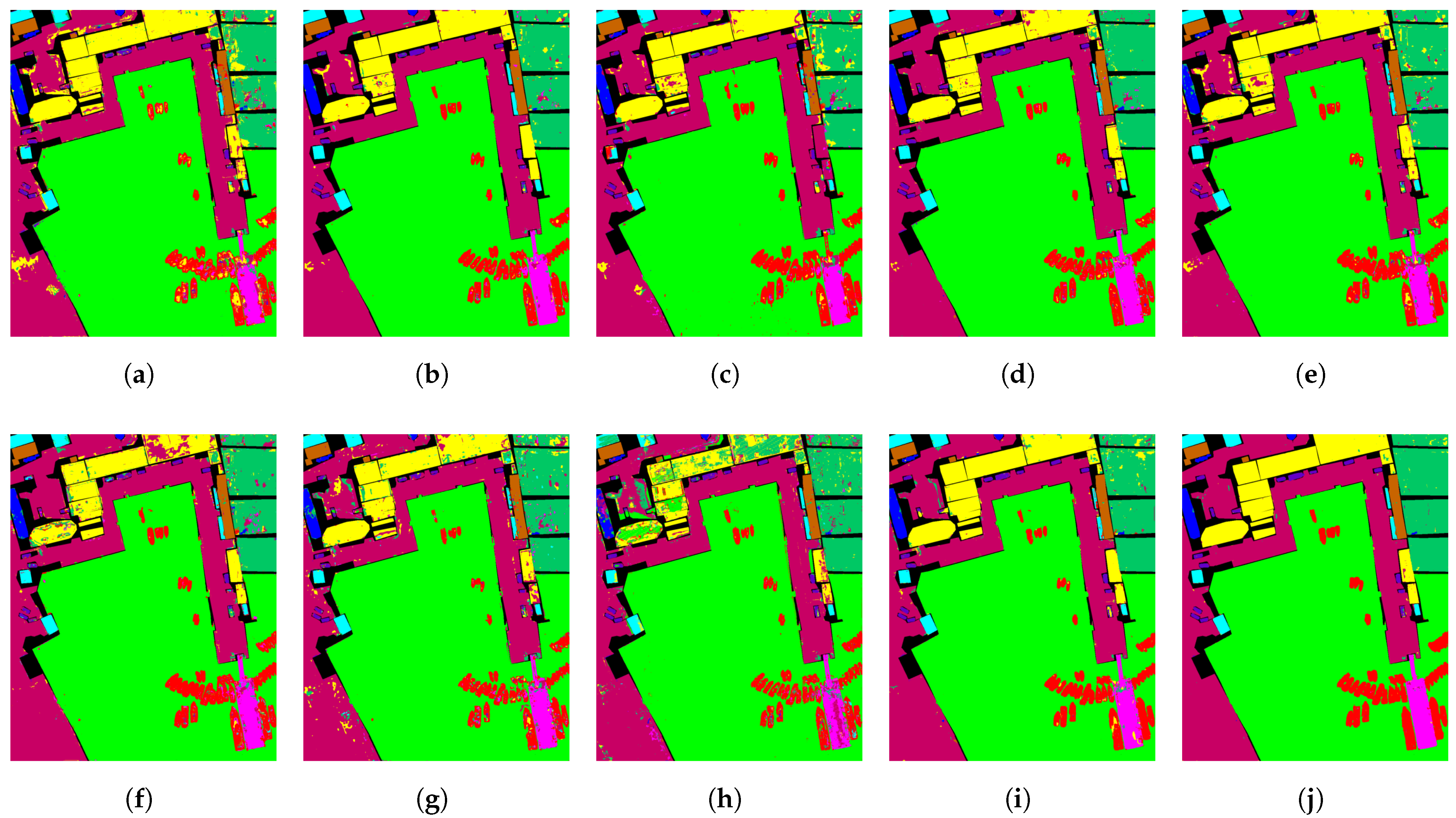

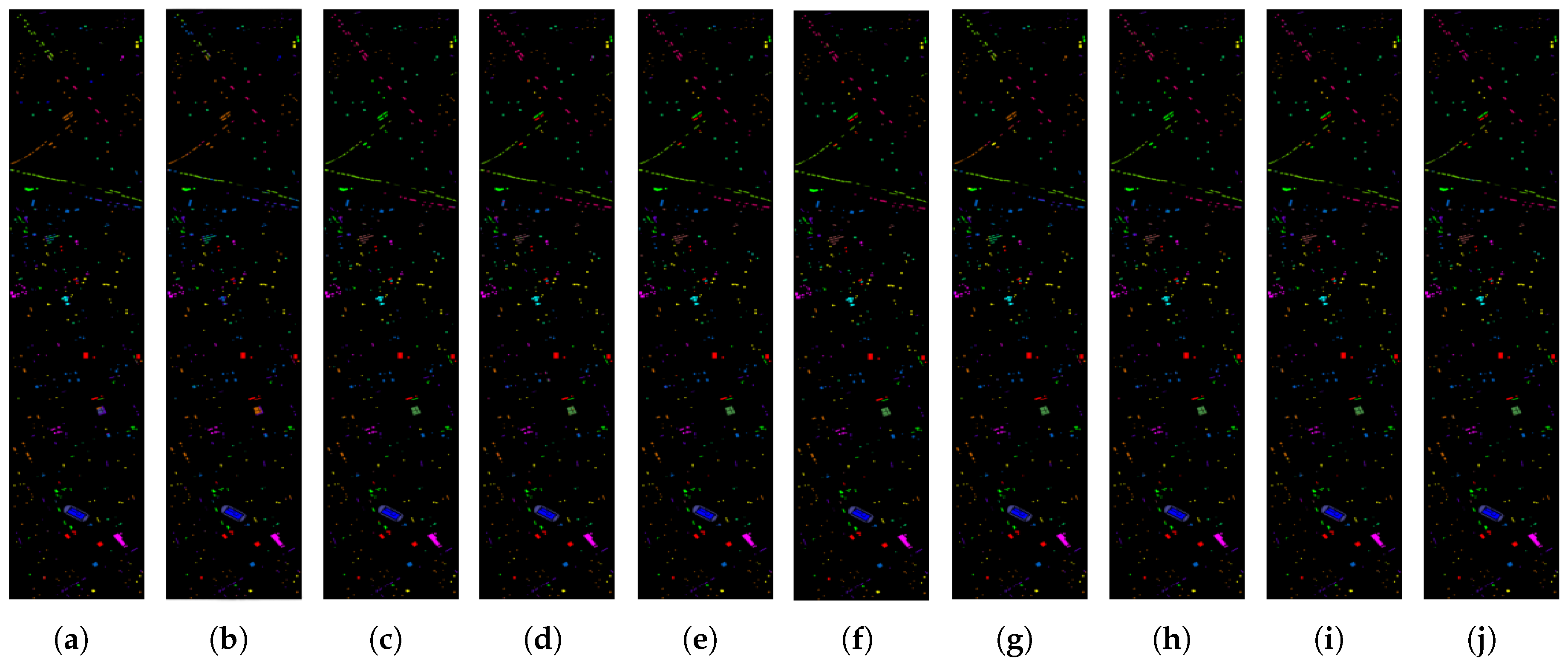

Exceptional Performance on Benchmark Datasets: The proposed method achieves the highest overall accuracy (OA), average accuracy (AA), and Kappa coefficient on benchmark datasets including QUH-Tangdaowan, QUH-Qingyun, and QUH-Pingan. These results demonstrate its effectiveness and robustness in HSIC tasks and its ability to generalize across different scenarios and datasets.
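For reference, the three metrics named above can be computed from a confusion matrix as in the following minimal sketch. It uses the standard definitions of OA, AA, and Cohen's Kappa; the function name, the row/column convention (rows are true classes, columns are predictions), and the example matrix are assumptions for illustration and do not reproduce any reported result.

```python
def classification_metrics(confusion):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    Assumed convention: confusion[i][j] counts samples of true class i
    predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    row_sums = [sum(confusion[i]) for i in range(n)]
    col_sums = [sum(confusion[r][c] for r in range(n)) for c in range(n)]

    oa = correct / total  # overall accuracy
    # Average accuracy: mean of per-class recall over classes that have samples.
    recalls = [confusion[i][i] / row_sums[i] for i in range(n) if row_sums[i] > 0]
    aa = sum(recalls) / len(recalls)
    # Kappa: agreement beyond what the class marginals would give by chance.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Made-up two-class example, not data from the paper.
oa, aa, kappa = classification_metrics([[8, 2], [1, 9]])
print(oa, aa, kappa)
```

OA weights every sample equally, AA weights every class equally, and Kappa discounts chance agreement, so the three can diverge noticeably on imbalanced land-cover datasets.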

This paper is organized as follows. Section 2 reviews related work. Section 3 presents the proposed framework in detail. Section 4 reports the experimental results on the hyperspectral benchmark datasets, and Section 5 provides the ablation analyses. Section 6 concludes the paper.

6. Conclusions

The proposed FreqMamba framework combines a linear-complexity Mamba module with deformable convolution, enabling effective joint modeling of long-range spectral dependencies and adaptive spatial features in HSI. This approach dynamically focuses on discriminative frequency bands and spatial details. It improves computational efficiency while overcoming the limitations of traditional CNN-Transformer hybrid models, demonstrating substantial advantages in both classification accuracy and robustness.

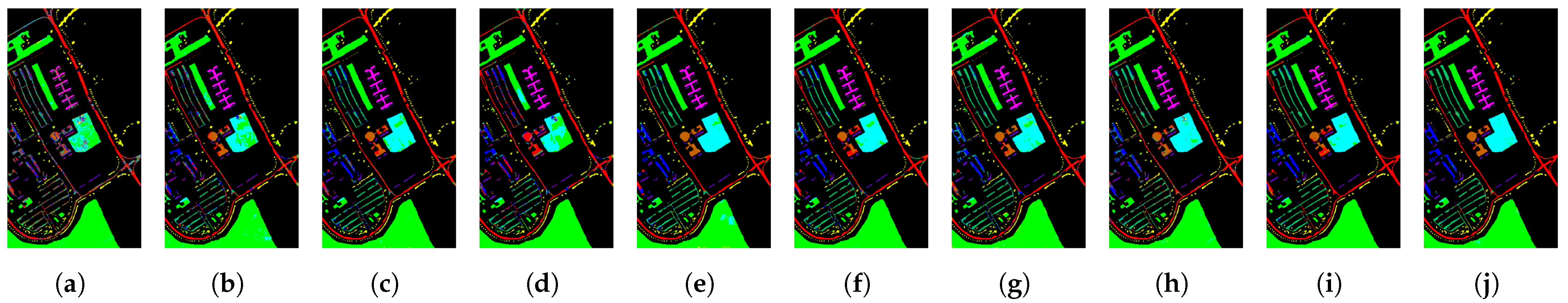

Experimental results on the QUH-Tangdaowan, QUH-Qingyun, QUH-Pingan, Houston2013, and PU benchmark datasets demonstrate FreqMamba’s superior performance. Our approach achieves the highest overall accuracy (OA), average accuracy (AA), and Kappa coefficient, outperforming state-of-the-art CNN-, Transformer-, and SSM-based methods. This confirms the robustness, generalization capability, and effectiveness of the framework in handling hyperspectral data with high inter-class similarity and significant intra-class variability.

Although FreqMamba has theoretical advantages in computational efficiency, the frequency-domain transformation and dynamic convolution operations in its multi-branch architecture place high demands on hardware parallelization support and still require dedicated operator optimization. In addition, the frequency-domain attention mechanism is sensitive to spectral perturbations caused by illumination changes, which may lead to feature distortion under extreme shadow or strong reflection conditions.

For future work, we will focus on developing adaptive group size mechanisms to reduce hyperparameter sensitivity, exploring lightweight designs for edge-device deployment, and extending the framework to tasks such as hyperspectral segmentation and change detection. These efforts aim to enhance practicality while maintaining FreqMamba’s efficiency and interpretability.