OptiFusionStack: A Physio-Spatial Stacking Framework for Shallow Water Bathymetry Integrating QAA-Derived Priors and Neighborhood Context

Highlights

- OptiFusionStack fuses inherent optical properties (IOPs) derived from the quasi-analytical algorithm (QAA) with multi-scale neighborhood features and ensemble learning, overcoming the limits of pixel-wise satellite-derived bathymetry (SDB).

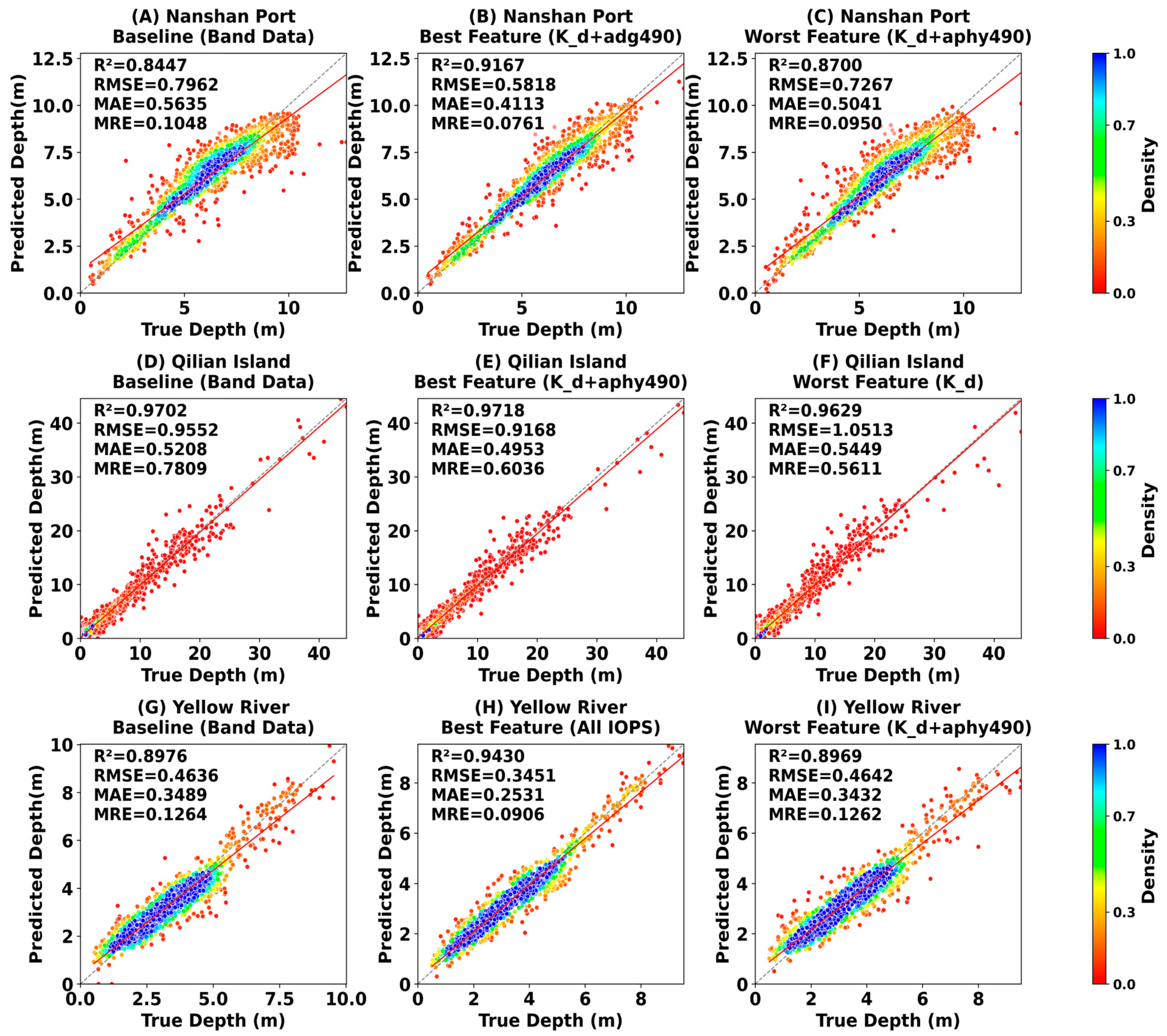

- The framework produces more accurate and more spatially coherent depth maps than pixel-wise models (e.g., R² up to 0.9167), validated across optically diverse sites.

- Incorporating spatial context is as critical as physical priors for reliable and interpretable SDB.

- The approach shows strong potential for operational coastal mapping in regions with limited or no in situ calibration data.

Abstract

1. Introduction

2. Materials and Methods

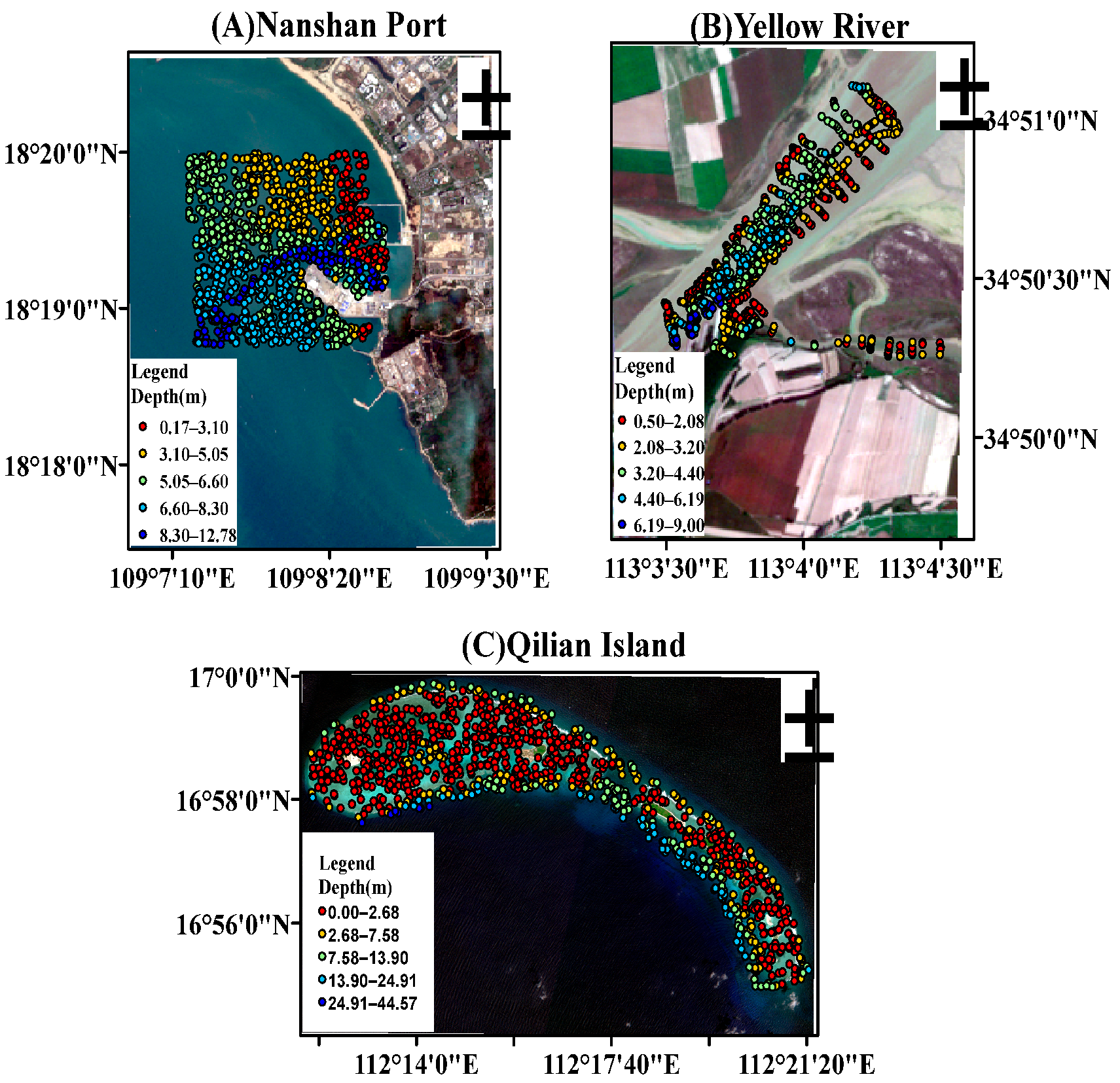

2.1. Study Areas and Datasets

2.1.1. Nanshan Port (Coastal Harbor)

2.1.2. Yellow River–Yiluo River Confluence (Optically Complex Inland River)

2.1.3. Qilian Islands (Clear Offshore Water)

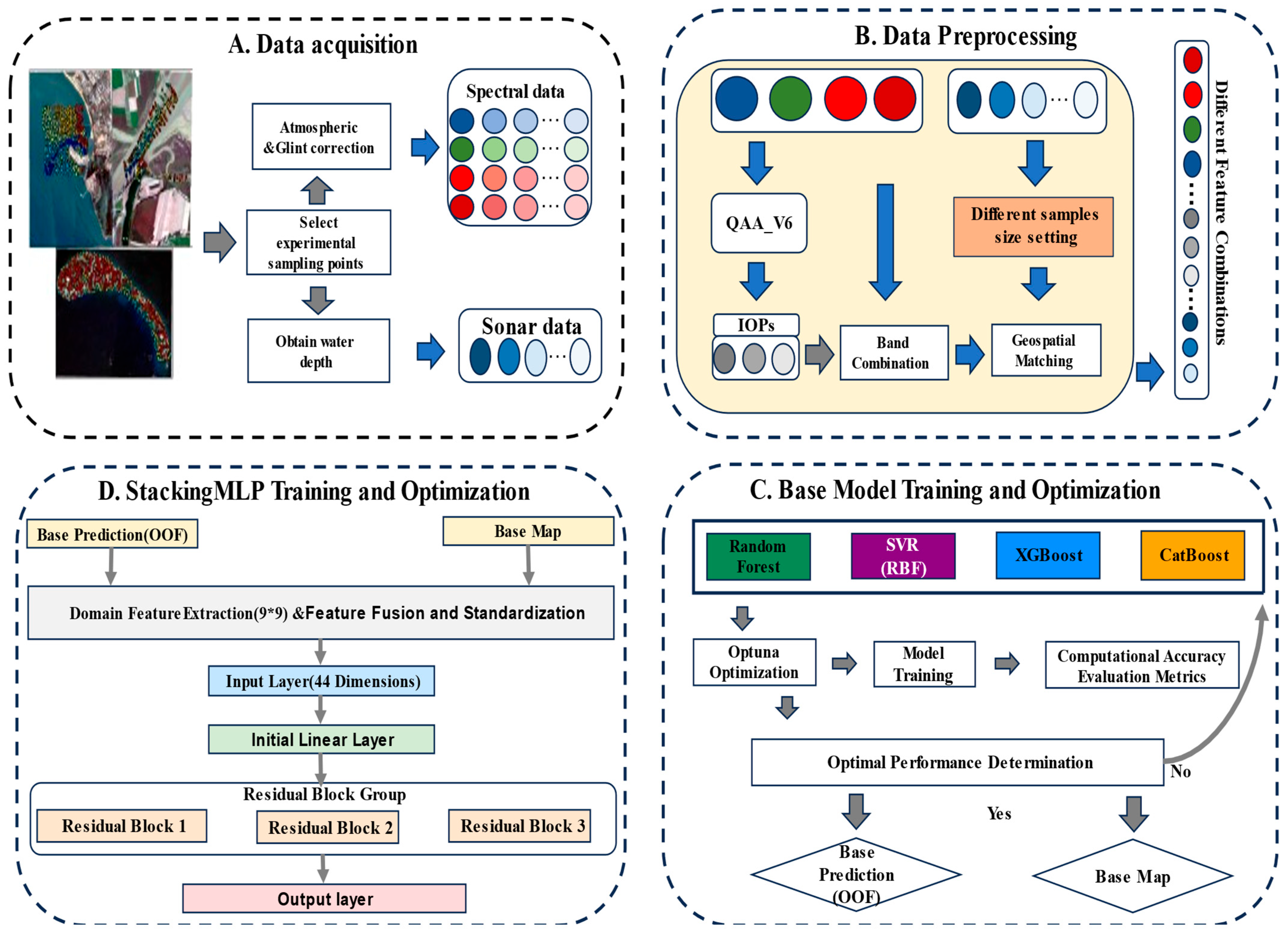

2.2. Overall Design of the OptiFusionStack Framework

2.3. Feature Engineering: A Two-Stage Physio-Spatial Approach

Stage 2: Multi-Scale Spatial Context Construction

2.4. The OptiFusionStack Modeling Framework

2.4.1. Level 1: Initial Prediction with Physics-Informed Base Learners

2.4.2. Level 2: Synergistic Spatial Fusion with a StackingMLP Meta-Learner

2.5. Accuracy Assessment Metrics

3. Results

3.1. Determination of Optimal Neighborhood Size

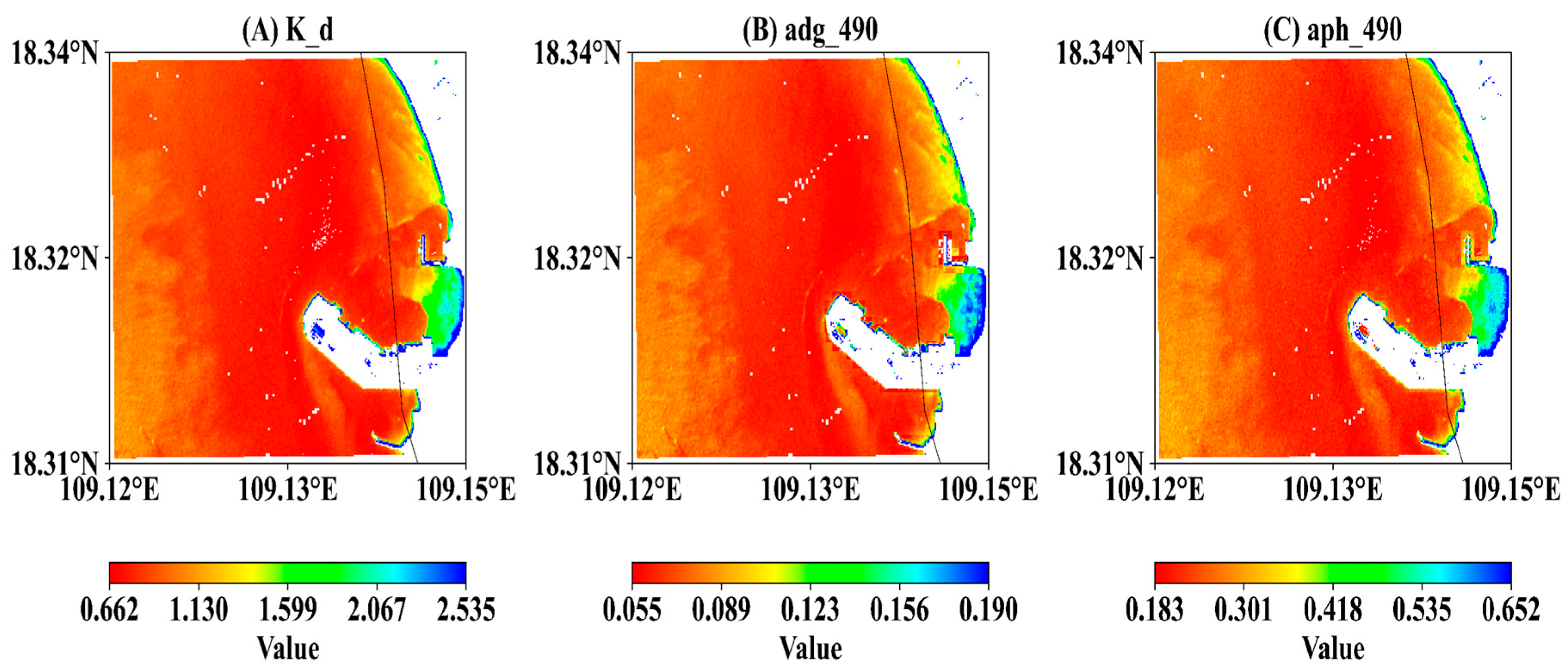

3.2. Retrieval and Correlation Analysis of IOPs

3.3. Performance Bottlenecks of Pixel-Wise Models

3.4. Performance of OptiFusionStack Framework

3.4.1. Global Performance Assessment and Optimal Feature Selection

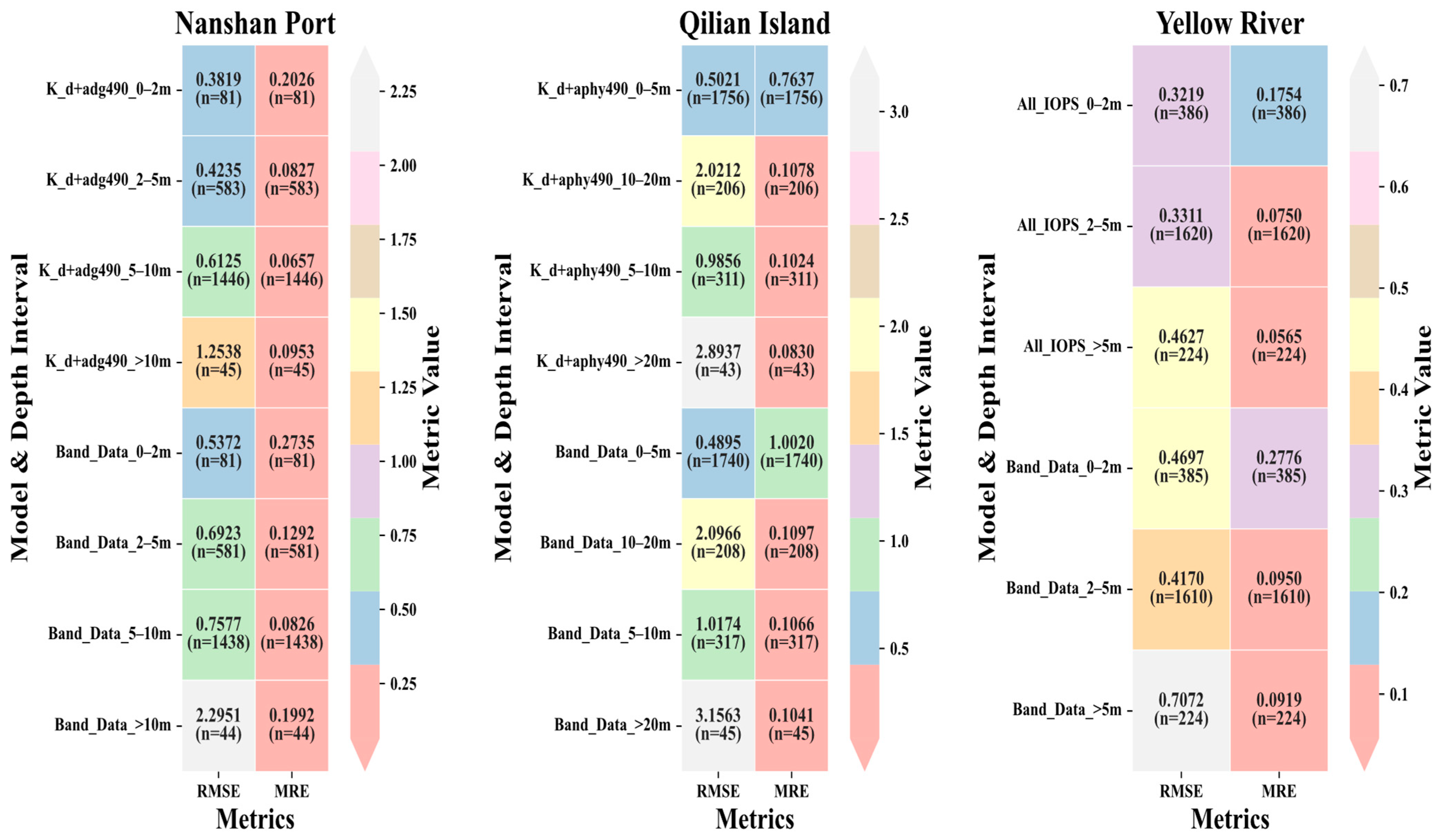

3.4.2. Analysis of Robustness Across Depths and Environments

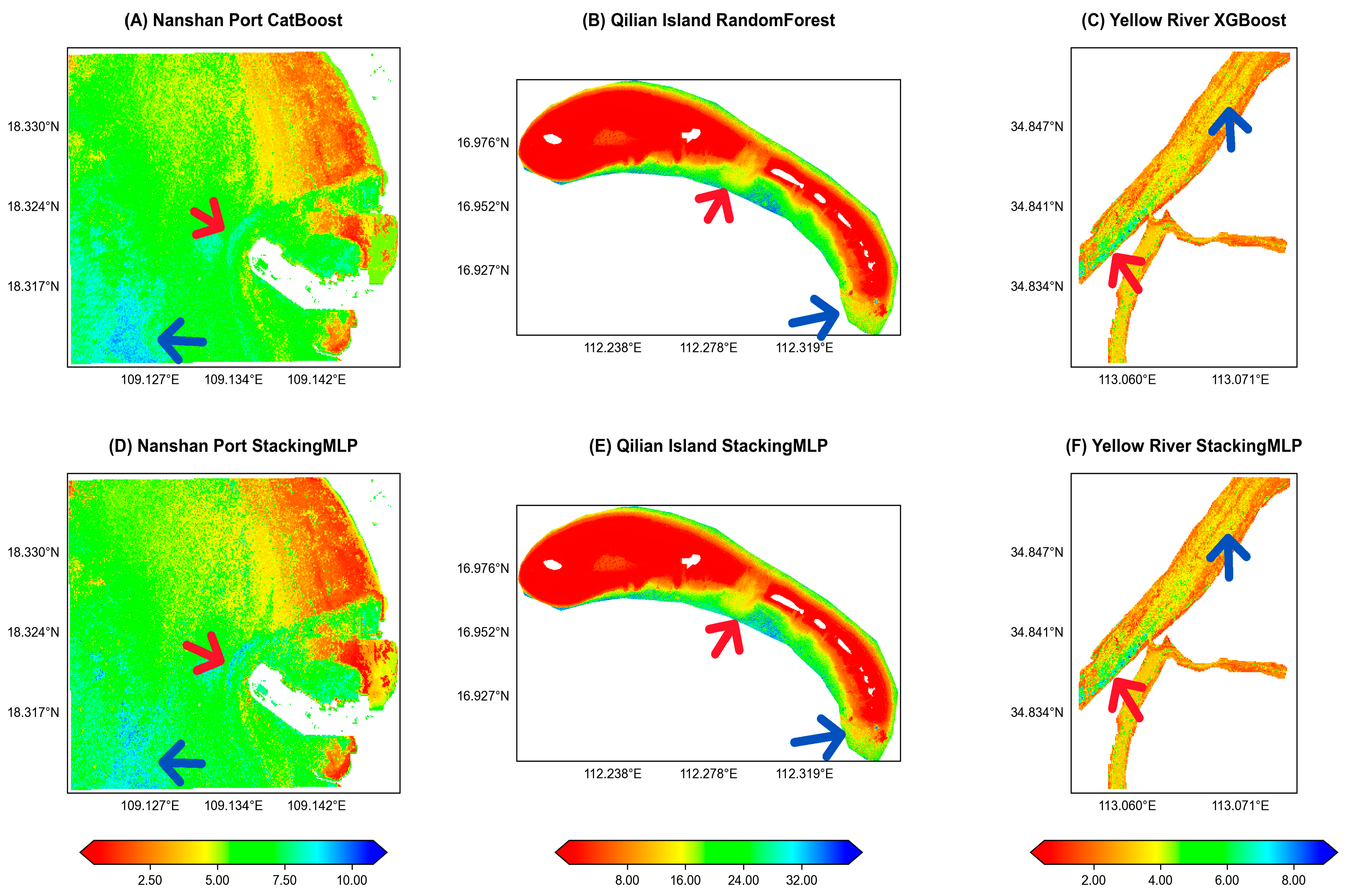

3.4.3. Superior Spatial Coherence and Artifact Suppression

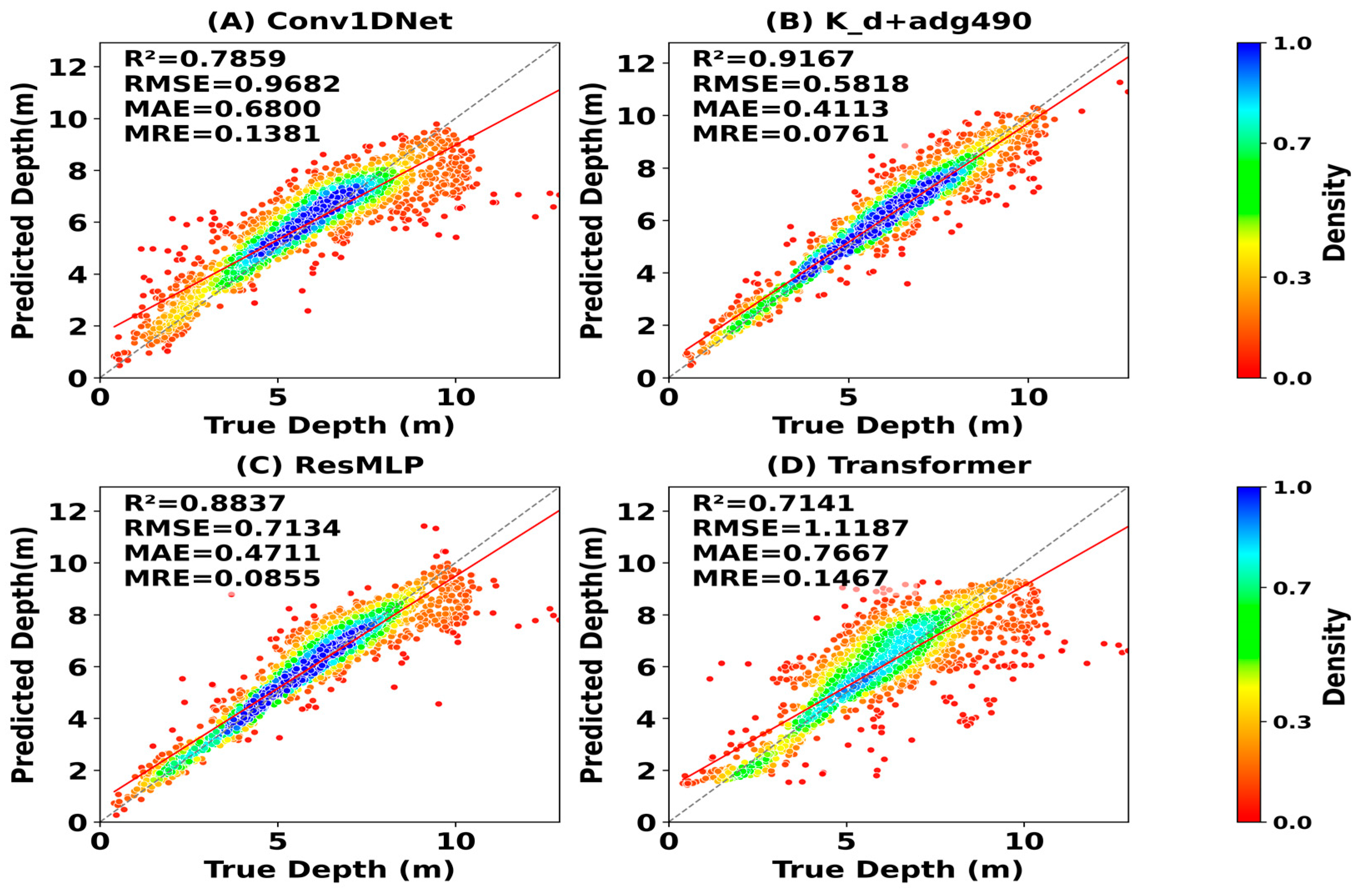

3.4.4. Architectural Superiority: A Benchmark Against Monolithic Deep Learning Models

4. Discussion

4.1. The Physio-Spatial Synergy: From Signal Entanglement to Semantic Decoupling

4.1.1. The Role of IOPs: Decoupling the Physical Signal

4.1.2. The Role of Spatial Context: Decoupling the Geographic Semantics

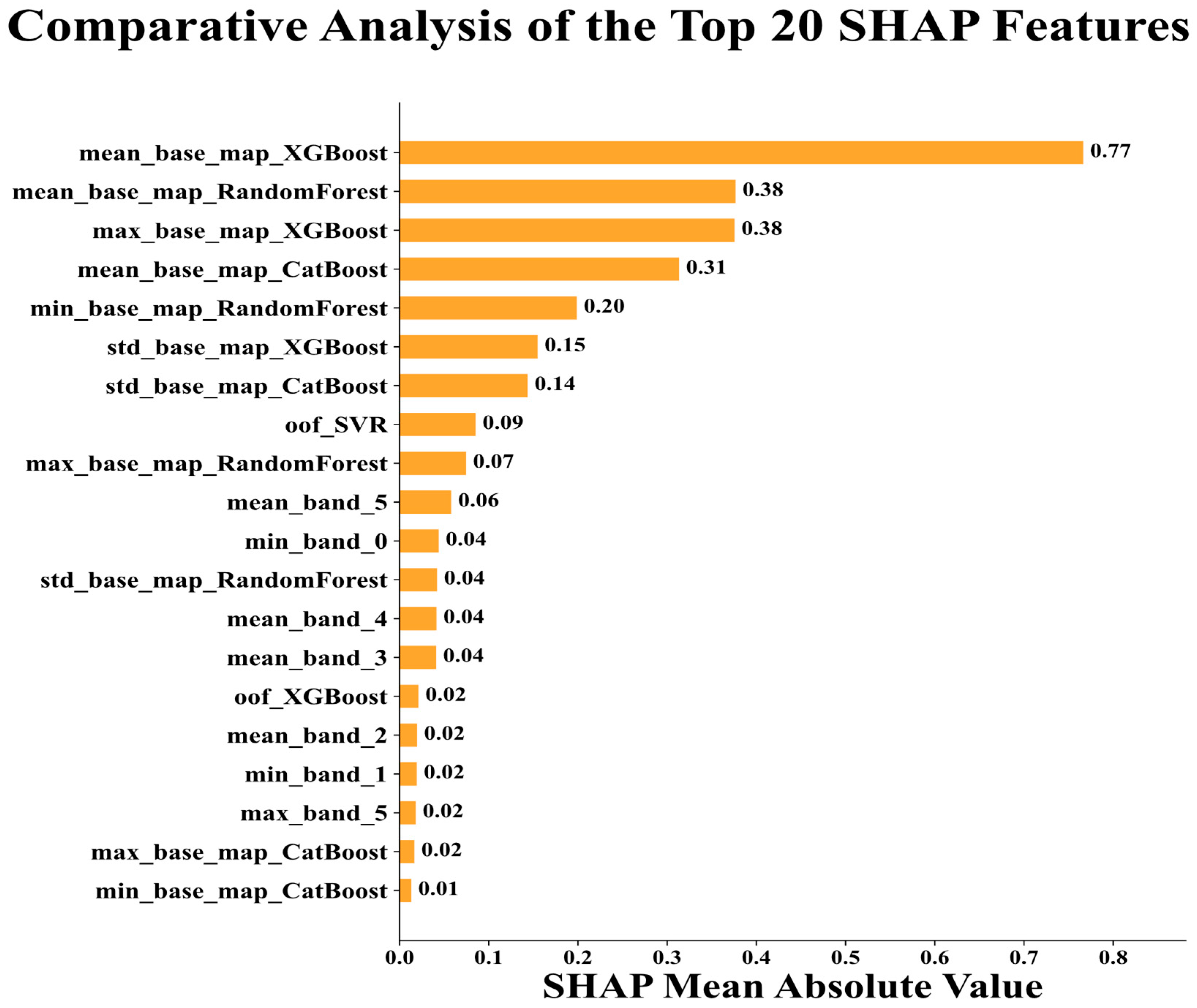

4.2. Advantages of the Stacking Framework: Architectural Intelligence and Information Prioritization

4.2.1. The Primacy of Spatial Context

4.2.2. The Complementary Roles of Predictive and Physical Features

4.3. Architectural Considerations: Comparison with Convolutional Neural Networks (CNNs)

4.4. Implications, Limitations, and Future Directions Under Uncalibrated Conditions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Caballero, I.; Stumpf, R.P. Retrieval of nearshore bathymetry from Sentinel-2A and 2B satellites in South Florida coastal waters. Estuar. Coast. Shelf Sci. 2019, 226, 106277.

2. Fang, S.; Wu, Z.; Wu, S.; Chen, Z.; Shen, W.; Mao, Z. Enhancing Water depth inversion accuracy in turbid coastal environments using random forest and coordinate attention mechanisms. Front. Mar. Sci. 2024, 11, 1471695.

3. Huang, W.; Zhao, J.; Ai, B.; Sun, S.; Yan, N. Bathymetry and benthic habitat mapping in shallow waters from Sentinel-2A imagery: A case study in Xisha islands, China. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

4. Hedley, J.D. Remote sensing of coral reefs for monitoring and management: A review. Remote Sens. 2016, 8, 118.

5. Hedley, J.D.; Harborne, A.R.; Mumby, P.J. Technical note: Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 2005, 26, 2107–2112.

6. Li, J.; Chu, S.; Hu, Q.; Qu, Z.; Zhang, J.; Cheng, L. A Slope Adaptive Bathymetric Method by Integrating ICESat-2 ATL03 Data with Sentinel-2 Images. Remote Sens. 2025, 17, 3019.

7. Kalybekova, A. A Review of Advancements and Applications of Satellite-Derived Bathymetry. Eng. Sci. 2025, 35, 1541.

8. Zhang, Y.; Liu, J.; Shen, W. A Review of Ensemble Learning Algorithms Used in Remote Sensing Applications. Appl. Sci. 2022, 12, 8654.

9. Wang, E.; Zhang, H.; Wang, J.; Cao, W.; Li, D. Simulation and Sensitivity Analysis of Remote Sensing Reflectance for Optically Shallow Water Bathymetry. Remote Sens. 2025, 17, 1384.

10. Pacheco, A.; Horta, J.; Loureiro, C.; Ferreira, Ó. Retrieval of nearshore bathymetry from Landsat 8 images: A tool for coastal monitoring in shallow waters. Remote Sens. Environ. 2015, 159, 102–116.

11. Xu, Y.; Cao, B.; Deng, R.; Cao, B.; Liu, H.; Li, J. Bathymetry over broad geographic areas using optical high-spatial-resolution satellite remote sensing without in-situ data. Int. J. Appl. Earth Obs. Geoinf. 2023, 119, 103308.

12. Zhang, X.; Chen, Y.; Le, Y.; Zhang, D.; Yan, Q.; Dong, Y.; Han, W.; Wang, L. Nearshore bathymetry based on ICESat-2 and multispectral images: Comparison between Sentinel-2, Landsat-8, and testing Gaofen-2. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2449–2462.

13. Zhong, J.; Sun, J.; Lai, Z.; Song, Y. Nearshore Bathymetry from ICESat-2 LiDAR and Sentinel-2 Imagery Datasets Using Deep Learning Approach. Remote Sens. 2022, 14, 4229.

14. Najar, M.A.; Benshila, R.; Bennioui, Y.E.; Thoumyre, G.; Almar, R.; Bergsma, E.W.J.; Delvit, J.-M.; Wilson, D.G. Coastal Bathymetry Estimation from Sentinel-2 Satellite Imagery: Comparing Deep Learning and Physics-Based Approaches. Remote Sens. 2022, 14, 1196.

15. Traganos, D.; Poursanidis, D.; Aggarwal, B.; Chrysoulakis, N.; Reinartz, P. Estimating Satellite-Derived Bathymetry (SDB) with the Google Earth Engine and Sentinel-2. Remote Sens. 2018, 10, 859.

16. He, C.; Jiang, Q.; Wang, P. An Improved Physics-Based Dual-Band Model for Satellite-Derived Bathymetry Using SuperDove Imagery. Remote Sens. 2024, 16, 3801.

17. Le, Y.; Sun, X.; Chen, Y.; Zhang, D.; Wu, L.; Liu, H.; Hu, M. High-accuracy shallow-water bathymetric method including reliability evaluation based on Sentinel-2 time-series images and ICESat-2 data. Front. Mar. Sci. 2024, 11, 1470859.

18. Lyzenga, D.R. Passive remote sensing techniques for mapping water depth and bottom features. Appl. Opt. 1978, 17, 379–383.

19. Sokoletsky, L.G.; Shen, F. Optical closure for remote-sensing reflectance based on accurate radiative transfer approximations: The case of the Changjiang (Yangtze) River Estuary and its adjacent coastal area, China. Int. J. Remote Sens. 2014, 35, 4193–4224.

20. Huang, E.; Chen, B.; Luo, K.; Chen, S. Effect of the One-to-Many Relationship between the Depth and Spectral Profile on Shallow Water Depth Inversion Based on Sentinel-2 Data. Remote Sens. 2024, 16, 1759.

21. Hsu, H.-J.; Huang, C.-Y.; Jasinski, M.; Li, Y.; Gao, H.; Yamanokuchi, T.; Wang, C.-G.; Chang, T.-M.; Ren, H.; Kuo, C.-Y.; et al. A semi-empirical scheme for bathymetric mapping in shallow water by ICESat-2 and Sentinel-2: A case study in the South China Sea. ISPRS J. Photogramm. Remote Sens. 2021, 178, 1–19.

22. Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient boosting with categorical features support. arXiv 2018, arXiv:1810.11363.

23. McKinna, L.I.; Fearns, P.R.; Weeks, S.J.; Werdell, P.J.; Reichstetter, M.; Franz, B.A.; Shea, D.M.; Feldman, G.C. A semianalytical ocean color inversion algorithm with explicit water column depth and substrate reflectance parameterization. J. Geophys. Res. Oceans 2015, 120, 1741–1770.

24. Lee, Z.; Carder, K.L.; Arnone, R.A. Deriving inherent optical properties from water color: A multiband quasi-analytical algorithm for optically deep waters. Appl. Opt. 2002, 41, 5755–5772.

25. Zhang, X.; Ma, Y.; Zhang, J. Shallow Water Bathymetry Based on Inherent Optical Properties Using High Spatial Resolution Multispectral Imagery. Remote Sens. 2020, 12, 3027.

26. Saeidi, V.; Seydi, S.T.; Kalantar, B.; Ueda, N.; Tajfirooz, B.; Shabani, F. Water depth estimation from Sentinel-2 imagery using advanced machine learning methods and explainable artificial intelligence. Geomat. Nat. Hazards Risk 2023, 14, 2225691.

27. Hochberg, E.J.; Atkinson, M.J.; Andréfouët, S. Spectral reflectance of coral reef bottom-types worldwide and implications for coral reef remote sensing. Remote Sens. Environ. 2003, 85, 159–173.

28. Huang, R.; Yu, K.; Wang, Y.; Wang, J.; Mu, L.; Wang, W. Bathymetry of the Coral Reefs of Weizhou Island Based on Multispectral Satellite Images. Remote Sens. 2017, 9, 750.

29. Wu, Z.; Mao, Z.; Shen, W.; Yuan, D.; Zhang, X.; Huang, H. Satellite-derived bathymetry based on machine learning models and an updated quasi-analytical algorithm approach. Opt. Express 2022, 30, 16773–16793.

30. Qiu, Z.F.; Wu, T.T.; Su, Y.Y. Retrieval of diffuse attenuation coefficient in the China seas from surface reflectance. Opt. Express 2013, 21, 15287–15297.

31. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

32. Rehm, E.; McCormick, N.J. Inherent optical property estimation in deep waters. Opt. Express 2011, 19, 24986–25005.

33. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

34. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

35. Kwon, J.; Shin, H.; Kim, D.; Lee, H.; Bouk, J.; Kim, J.; Kim, T. Estimation of shallow bathymetry using Sentinel-2 satellite data and random forest machine learning: A case study for Cheonsuman, Hallim, and Samcheok Coastal Seas. J. Appl. Remote Sens. 2024, 18, 014522.

36. Eugenio, F.; Marcello, J.; Mederos-Barrera, A.; Marqués, F. High-Resolution Satellite Bathymetry Mapping: Regression and Machine Learning-Based Approaches. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

37. Liang, Y.; Cheng, Z.; Du, Y.; Song, D.; You, Z. An improved method for water depth mapping in turbid waters based on a machine learning model. Estuar. Coast. Shelf Sci. 2024, 296, 108577.

38. Wu, Z.; Zhao, Y.; Wu, S.; Chen, H.; Song, C.; Mao, Z.; Shen, W. Satellite-Derived Bathymetry Using a Fast Feature Cascade Learning Model in Turbid Coastal Waters. J. Remote Sens. 2024, 4, 0272.

39. Cheng, J.; Chu, S.; Cheng, L. Advancing Shallow Water Bathymetry Estimation in Coral Reef Areas via Stacking Ensemble Machine Learning Approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 12511–12530.

40. Ye, M.; Yang, C.; Zhang, X.; Li, S.; Peng, X.; Li, Y.; Chen, T. Shallow Water Bathymetry Inversion Based on Machine Learning Using ICESat-2 and Sentinel-2 Data. Remote Sens. 2024, 16, 4603.

41. Shen, W.; Chen, M.; Wu, Z.; Wang, J. Shallow-Water Bathymetry Retrieval Based on an Improved Deep Learning Method Using GF-6 Multispectral Imagery in Nanshan Port Waters. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8550–8562.

42. Zhao, X.; Qi, C.; Zhu, J.; Su, D.; Yang, F.; Zhu, J. A satellite-derived bathymetry method combining depth invariant index and adaptive logarithmic ratio: A case study in the Xisha Islands without in-situ measurements. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104232.

43. Mumby, P.J.; Skirving, W.; Strong, A.E.; Hardy, J.T.; LeDrew, E.F.; Hochberg, E.J.; Stumpf, R.P.; David, L.T. Remote sensing of coral reefs and their physical environment. Mar. Pollut. Bull. 2004, 48, 219–228.

44. Wang, Q.; Zhang, X.; Wu, Z.; Han, C.; Zhang, L.; Xu, P.; Mao, Z.; Wang, Y.; Zhang, C. Machine Learning-Constrained Semi-Analysis Model for Efficient Bathymetric Mapping in Data-Scarce Coastal Waters. Remote Sens. 2025, 17, 3179.

45. Stumpf, R.P.; Holderied, K.; Sinclair, M. Determination of water depth with high-resolution satellite imagery over variable bottom types. Limnol. Oceanogr. 2003, 48, 547–556.

| Feature Category | Feature Name | Source | Center Wavelength (nm) |
|---|---|---|---|
| Spectral Features | | Sentinel-2B Band 2–Blue | 492.1 |
| Spectral Features | | Sentinel-2B Band 3–Green | 559.0 |
| Spectral Features | | Sentinel-2B Band 4–Red | 664.9 |
| Spectral Features | | Sentinel-2B Band 8–NIR | 832.8 |
| Physical Priors | | QAA Calculation | 490 |
| Physical Priors | | QAA Calculation | 490 |
| Physical Priors | | QAA Calculation | 490 |

| Index | Feature Type | Description | Dimensions |
|---|---|---|---|
| 1–4 | Point-wise Predictive | The out-of-fold (OOF) depth predictions from the four base models: Random Forest, XGBoost, SVR, and CatBoost. | 4 |
| 5 to 4 × i + 4 | Spatial Context (Spectral) | The mean, std, min, and max values of the i original spectral bands within a 9 × 9 neighborhood window centered on the sample point. | i bands × 4 stats = 4 × i |
| 4 × i + 5 to 4 × i + 20 | Spatial Context (Predictive) | The mean, std, min, and max values of the 4 base model prediction maps within a 9 × 9 neighborhood window centered on the sample point. | 4 maps × 4 stats = 16 |
| Total Dimensions | | | 20 + 4 × i |
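
The meta-learner feature layout in the table above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `neighborhood_stats` and `build_meta_features`, the reflect padding at image edges, and the array layouts (`bands` as i × H × W, `pred_maps` as 4 × H × W, `oof_preds` as n × 4) are hypothetical choices made for this example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def neighborhood_stats(image, window=9):
    """Per-pixel mean, std, min and max over a window x window neighborhood.

    image: 2-D array (H, W). Returns an array of shape (H, W, 4).
    Edge pixels use reflect padding (an assumption of this sketch).
    """
    if window % 2 != 1:
        raise ValueError("window must be odd")
    pad = window // 2
    padded = np.pad(np.asarray(image, dtype=float), pad, mode="reflect")
    # patches has shape (H, W, window, window): one patch per pixel
    patches = sliding_window_view(padded, (window, window))
    return np.stack(
        [
            patches.mean(axis=(2, 3)),
            patches.std(axis=(2, 3)),
            patches.min(axis=(2, 3)),
            patches.max(axis=(2, 3)),
        ],
        axis=-1,
    )


def build_meta_features(oof_preds, bands, pred_maps, rows, cols, window=9):
    """Assemble the (n, 20 + 4*i) feature matrix for n sample points.

    oof_preds: (n, 4) out-of-fold predictions of the four base models.
    bands:     (i, H, W) spectral band images.
    pred_maps: (4, H, W) base-model prediction maps.
    rows, cols: integer pixel indices of the n sample points.
    """
    band_stats = [neighborhood_stats(b, window)[rows, cols] for b in bands]
    pred_stats = [neighborhood_stats(p, window)[rows, cols] for p in pred_maps]
    # Column order follows the table: point-wise, spectral context, predictive context
    return np.hstack([np.asarray(oof_preds, dtype=float), *band_stats, *pred_stats])
```

With i = 4 spectral bands the matrix has 20 + 4 × 4 = 36 columns per sample. The windowed reduction here favors readability over speed; on full scenes a filter-based implementation would likely be preferable.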

| Neighborhood Window Size | R² | RMSE (m) | MAE (m) | MRE |
|---|---|---|---|---|
| 3 × 3 | 0.9136 | 0.6151 | 0.4449 | 0.0932 |
| 5 × 5 | 0.8933 | 0.6834 | 0.4850 | 0.0901 |
| 7 × 7 | 0.8780 | 0.7308 | 0.5214 | 0.0973 |
| 9 × 9 | 0.9167 | 0.5818 | 0.4113 | 0.1048 |
| 11 × 11 | 0.8802 | 0.7241 | 0.4968 | 0.0951 |
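
The four scores reported per window size can be computed as in the sketch below. The definitions are assumptions, since they are not spelled out here: R² is taken as the coefficient of determination, and MRE as the mean of the absolute error divided by the observed depth. The function name `bathymetry_metrics` is hypothetical.

```python
import numpy as np


def bathymetry_metrics(observed, predicted):
    """Return R2, RMSE, MAE and MRE for paired depth arrays (same units).

    Assumed definitions: R2 = 1 - SS_res / SS_tot; MRE = mean(|error| / |observed|).
    Observed depths must be non-zero for MRE to be defined.
    """
    obs = np.asarray(observed, dtype=float)
    pred = np.asarray(predicted, dtype=float)
    resid = pred - obs
    ss_res = np.sum(resid ** 2)
    ss_tot = np.sum((obs - obs.mean()) ** 2)
    return {
        "R2": float(1.0 - ss_res / ss_tot),
        "RMSE": float(np.sqrt(np.mean(resid ** 2))),
        "MAE": float(np.mean(np.abs(resid))),
        "MRE": float(np.mean(np.abs(resid) / np.abs(obs))),
    }
```

The metrics need not agree on a single best window: in the table, 9 × 9 has the best R², RMSE, and MAE, while 5 × 5 has the lowest MRE.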

| Study Area | Water Type | Range (m⁻¹) | Typical Literature Range (m⁻¹) | Reference(s) |
|---|---|---|---|---|
| Qilian Islands | Clear waters | 0.046–0.335 | 0.05–0.15 | Hochberg et al. (2003) [27] |
| Nanshan Port | Coastal waters | 0.662–2.535 | 0.03–10 | Qiu et al. (2013) [30] |
| Yellow River | Estuarine waters | 1.587–5.152 | 0.17–59 | Sokoletsky & Shen (2014) [19] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shen, W.; Liu, J.; Li, X.; Zhao, D.; Wu, Z.; Xu, Y. OptiFusionStack: A Physio-Spatial Stacking Framework for Shallow Water Bathymetry Integrating QAA-Derived Priors and Neighborhood Context. Remote Sens. 2025, 17, 3712. https://doi.org/10.3390/rs17223712
