Variational Gaussian Mixture Model for Tracking Multiple Extended Targets or Unresolvable Group Targets in Closely Spaced Scenarios

Highlights

- We find that performing secondary processing after clustering sensor measurements that exhibit a Gaussian mixture effectively separates the measurements in space, making it more suitable for the tracking of multiple targets in close proximity.

- Using the separated measurements together with an extent model improves shape estimation for targets and alleviates the state fusion commonly encountered in such scenarios.

- These findings advance our use of the variational Gaussian mixture model (VGMM) by integrating it with a random finite set (RFS) filtering framework to enable the robust state estimation of multiple targets with spatial extent.

- VGMM outputs can be integrated into extent models to mitigate state fusion when tracking closely spaced targets, thereby broadening the applicability of existing tracking methods to extended targets or unresolvable group targets in complex scenarios.

Abstract

1. Introduction

- We provide a solution for METT/MUGTT in challenging scenarios, particularly with closely spaced targets.

- The method models the target extent with the MEM, enabling accurate shape estimation with decoupled orientation and axis lengths.

- Built on the CPHD filtering framework, the method effectively handles target births and deaths.

- We propose an applicable VGMM-based measurement update that supplies soft responsibilities for probabilistic data association, mitigating state fusion among closely spaced targets.

2. Problem Formulation

- For a single target, the measurement sources generated on its surface are independent and identically distributed (i.i.d.).

- Each measurement source gives rise to exactly one measurement.

- For a single target, its measurement sources are uniformly distributed over its surface.

- The number of measurements generated by the n-th target at time k follows a Poisson distribution. For simplicity, the Poisson rate is assumed to be constant across time and targets.

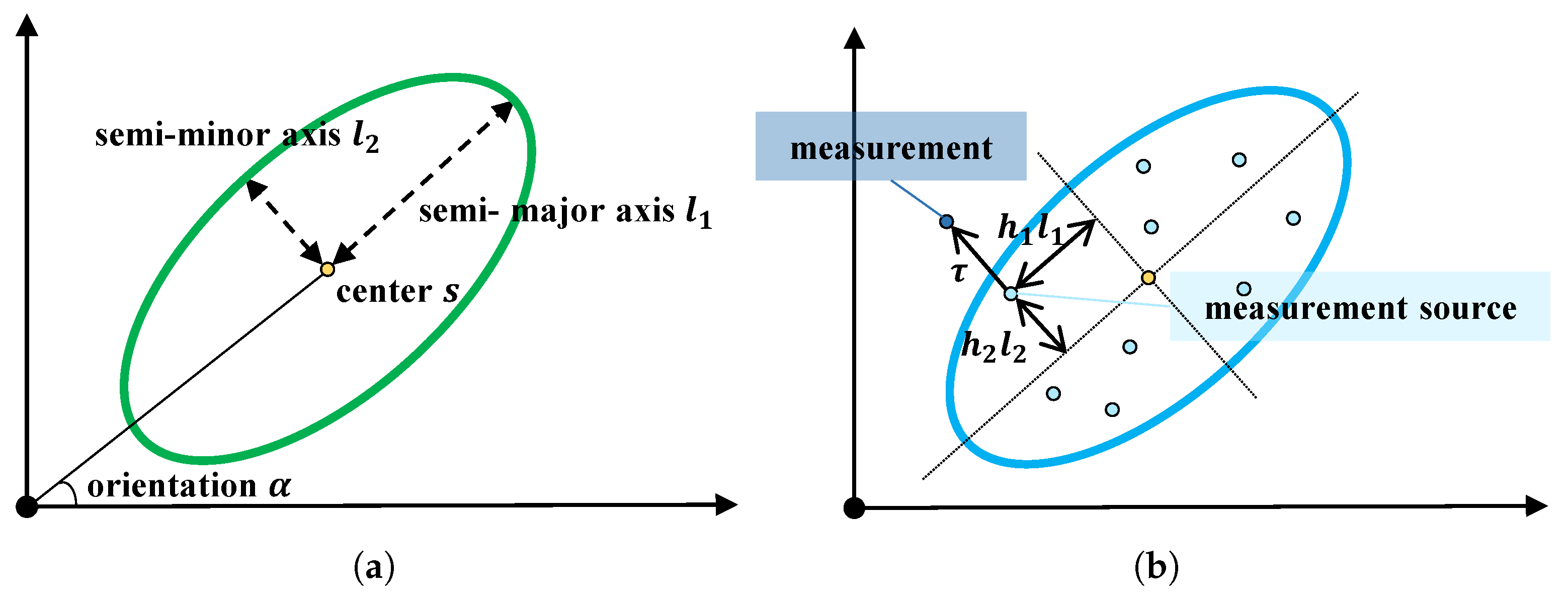

- A single target-generated measurement can be regarded as a 2D Cartesian position. In radar or LiDAR applications, the sensor reports the measurement in polar coordinates, consisting of the measured range and bearing. In this case, a coordinate transformation is used to obtain the Cartesian position measurement [28]: the first coordinate is the range times the cosine of the bearing, and the second is the range times the sine of the bearing. The conditional distribution of a measurement generated by the n-th target is then approximately Gaussian and is determined by the following quantities. The shape description matrix of the n-th target encodes its orientation and semi-axis lengths. The measurement matrix contains a zero-matrix block and, for simplicity, can be treated as a constant matrix. The covariance of the multiplicative error term describes the spread of the uniformly distributed measurement sources generated by the n-th target; it depends on a constant set by the assumed shape (4 for a uniform distribution on an elliptical surface, 3 on a rectangular surface [39]). The covariance matrix of the additive noise describes the deviation between a measurement source and its corresponding measurement; an explicit form of this covariance is assumed for the n-th target. An illustration of the single-target measurement model is shown in Figure 1b.
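The assumptions listed above can be illustrated with a minimal Python sketch that simulates one scan from a single elliptical target. It is not the authors' code: the function names, the Knuth-style Poisson sampler, and every numeric value are illustrative assumptions. The only modelling facts taken from the text are the Poisson measurement count, the uniform distribution of sources over the surface, and the additive Gaussian noise.

```python
import math
import random


def poisson(rate, rng):
    """Draw a Poisson count with Knuth's multiplication method (adequate for small rates)."""
    limit, k, prod = math.exp(-rate), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def sample_measurement(center, alpha, l1, l2, noise_std, rng):
    """One noisy measurement from a source drawn uniformly on an ellipse."""
    # Uniform point on the unit disc; the square root keeps the density flat in area.
    r, phi = math.sqrt(rng.random()), 2.0 * math.pi * rng.random()
    hx, hy = r * math.cos(phi), r * math.sin(phi)
    # Shape matrix = rotation(alpha) * diag(l1, l2): maps the disc onto the ellipse.
    sx = math.cos(alpha) * l1 * hx - math.sin(alpha) * l2 * hy
    sy = math.sin(alpha) * l1 * hx + math.cos(alpha) * l2 * hy
    # Additive sensor noise separates the source from its measurement.
    return (center[0] + sx + rng.gauss(0.0, noise_std),
            center[1] + sy + rng.gauss(0.0, noise_std))


def sample_scan(center, alpha, l1, l2, rate, noise_std, rng):
    """All measurements of one target in one scan; the count is Poisson distributed."""
    count = poisson(rate, rng)
    return [sample_measurement(center, alpha, l1, l2, noise_std, rng)
            for _ in range(count)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_scan((10.0, 5.0), 0.3, 4.0, 2.0, 15, 0.1, rng))
```

Because a uniform distribution on the unit disc has covariance equal to one quarter of the identity, the spread of the simulated sources along each semi-axis is the squared semi-axis length divided by 4, which is consistent with the constant 4 quoted above for elliptical surfaces.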

3. Time Update

4. Measurement Update with the VGMM

4.1. VGMM for MTT

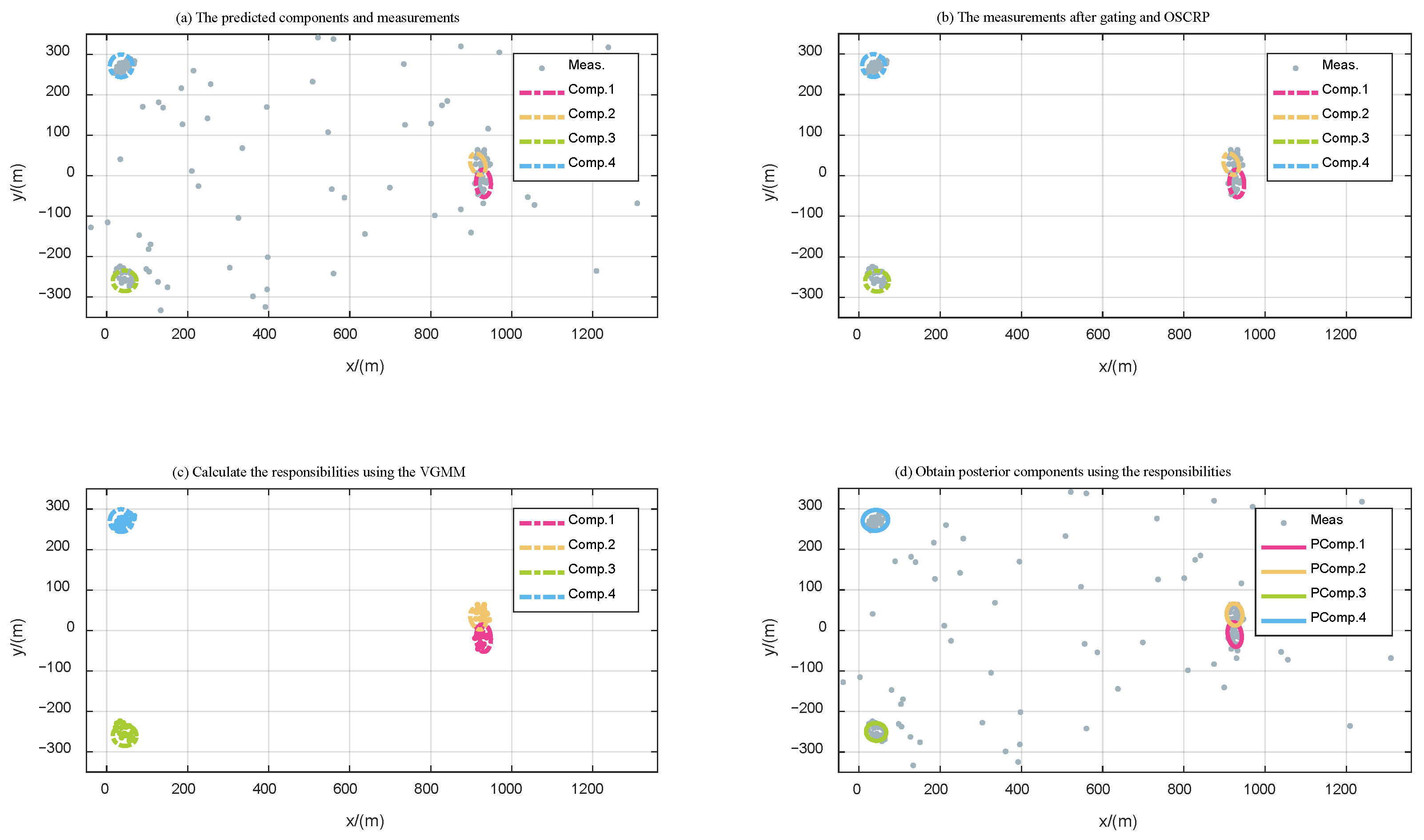

- The VGMM assumes that samples follow a Gaussian mixture, which is consistent with the multi-target measurement model shown in Equation (9). Here, we can treat the measurements as samples and the components of the PHD as Gaussian clusters for VGMM processing.

- The VGMM provides responsibility (see Equation (27)) to describe the relationship between each measurement and each Gaussian component, which can be used as a marginal association probability to update target states.

- The core of the VGMM is iterative variational inference, which does not strictly rely on prior information, such as the initial number of Gaussian clusters. This advantage of the VGMM makes it valuable for tracking applications with variable target numbers.
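The role of the responsibilities can be sketched in a few lines of Python. This is a simplified illustration, not Equation (27): in the VGMM the Gaussian terms are replaced by expectations under the variational posteriors, whereas the sketch below plugs in point estimates of the weights, means, and covariances. All names and values are hypothetical.

```python
import math


def gauss2d(z, mean, cov):
    """Density of a 2D Gaussian at z; cov is ((a, b), (b, d))."""
    (a, b), (_, d) = cov
    det = a * d - b * b
    dx, dy = z[0] - mean[0], z[1] - mean[1]
    # Quadratic form using the closed-form inverse of a symmetric 2x2 matrix.
    quad = (d * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * quad) / (2.0 * math.pi * math.sqrt(det))


def responsibilities(measurements, weights, means, covs):
    """Row i holds the normalized responsibility of every component for measurement i."""
    table = []
    for z in measurements:
        scores = [w * gauss2d(z, m, c) for w, m, c in zip(weights, means, covs)]
        total = sum(scores)
        if total == 0.0:
            # Measurement far from every component: fall back to a uniform row.
            table.append([1.0 / len(scores)] * len(scores))
        else:
            table.append([s / total for s in scores])
    return table


if __name__ == "__main__":
    identity = ((1.0, 0.0), (0.0, 1.0))
    rows = responsibilities([(0.0, 0.0), (1.5, 0.0), (3.0, 0.0)],
                            [0.5, 0.5],
                            [(0.0, 0.0), (3.0, 0.0)],
                            [identity, identity])
    for row in rows:
        print([round(r, 3) for r in row])
```

A measurement halfway between two equally weighted components is shared equally, while one at a component mean is assigned almost entirely to that component. This soft split is what allows the responsibilities to act as marginal association probabilities.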

4.2. Measurement Update

- C1: The measurement update performed with one measurement of a set is independent of the other measurements in that set.

- C2: The posterior state estimate obtained from the previous measurement serves as the prior for the subsequent measurement.

- C3: The order in which measurements are merged may influence the final update result, but this effect can be ignored.

- C4: For the same target, the measurement updates of the kinematic parameters and of the shape parameters are independent of each other.

- C5: Since the responsibility can be seen as an association probability, an individual measurement updates the target state following the probabilistic data association (PDA) algorithm [45].

- C6: For the kinematic state, the measurement update follows the standard Kalman PDA update. For the shape state, the pseudo-measurement corresponding to each measurement is used for the measurement update.
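Conditions C2, C5, and C6 can be illustrated with a minimal Python sketch of a responsibility-weighted sequential update. To keep it short, the sketch assumes a scalar state that is observed directly, which is a deliberate simplification and not the paper's Equations (53) to (57). The function names and numbers are hypothetical.

```python
def pda_update(x, P, z, R, beta):
    """One responsibility-weighted Kalman step for a scalar state observed directly.

    beta is the probability that measurement z belongs to this component.
    """
    S = P + R            # innovation variance
    K = P / S            # Kalman gain
    nu = z - x           # innovation
    x_new = x + beta * K * nu
    # Moment-matched mixture of "updated" (weight beta) and "not updated"
    # (weight 1 - beta) hypotheses, including the spread-of-means term.
    P_new = P - beta * K * P + beta * (1.0 - beta) * (K * nu) ** 2
    return x_new, P_new


def sequential_update(x, P, cell, R):
    """Process (measurement, responsibility) pairs one by one; each posterior is the next prior."""
    for z, beta in cell:
        x, P = pda_update(x, P, z, R, beta)
    return x, P


if __name__ == "__main__":
    print(sequential_update(0.0, 1.0, [(2.0, 0.9), (1.8, 0.7), (9.0, 0.05)], 1.0))
```

With a responsibility of 1 the step reduces to the ordinary Kalman update, and with a responsibility of 0 the state is left untouched, so a measurement that most likely belongs to a neighbouring target barely moves the estimate.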

Algorithm 1 A single Bayesian iteration of the MEM-CPHD-VGMM filter

Require: the prior PHD and prior cardinality distribution from the previous time step, the measurement set at time step k, the birth PHD, and the birth cardinality distribution.

4.3. One-Step Clutter Removal Process

5. Complexity Analysis

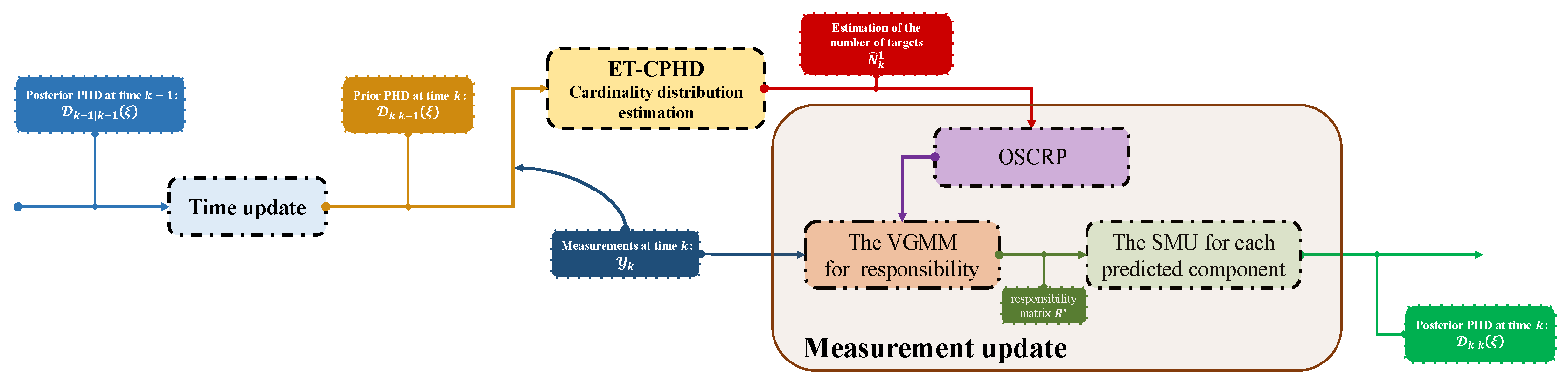

- Time update

- ET-CPHD-based cardinality distribution estimation

- OSCRP

- VGMM for responsibility estimation

- SMU for each predicted component

6. Experimental Results

- MEM combined with the JIPDA algorithm for MTT [34], referred to as the MEM-JIPDA filter;

- A widely used RMM-RFS-based method—here, we specifically select the CPHD-based implementation, referred to as the GIW-CPHD filter [17];

- The ET-CPHD filter [41] integrated with the MEM, referred to as the MEM-CPHD filter;

- A recent RFS-based PMB filter with the RMM, referred to as the GIW-PMB filter, implemented with reference to [47].

6.1. Metrics

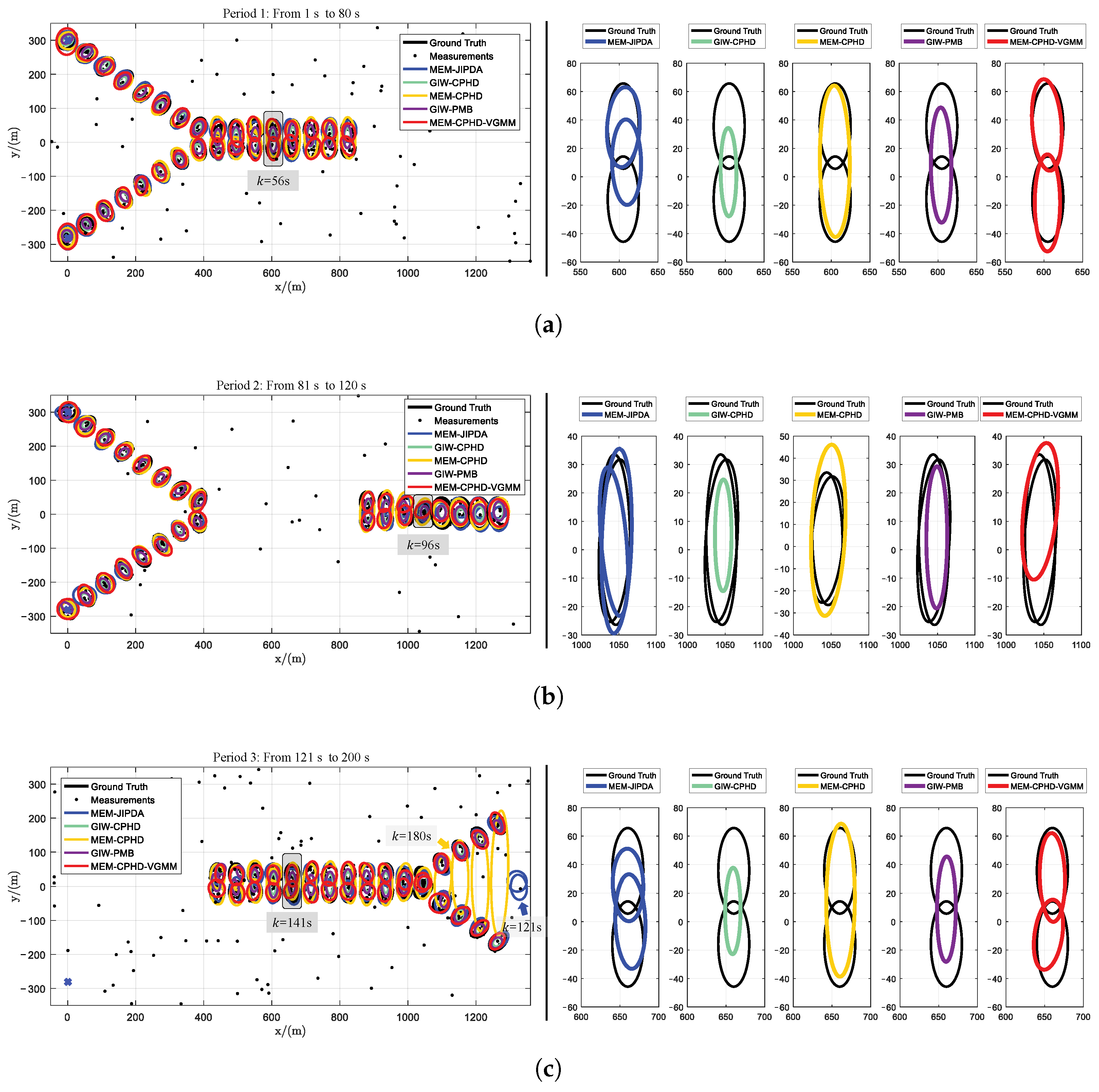

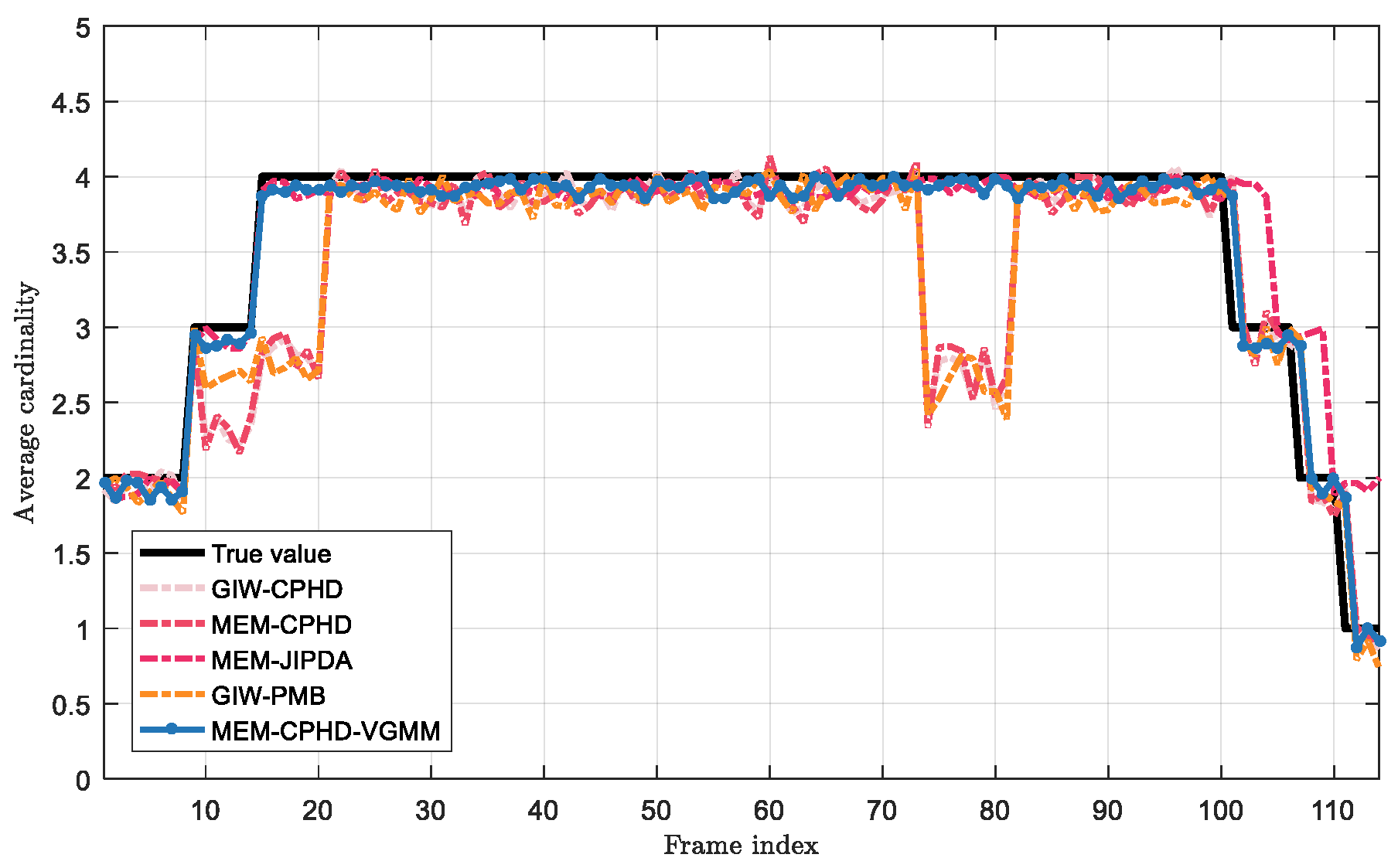

6.2. Experiment 1: Multi-Unresolvable Group Target Tracking

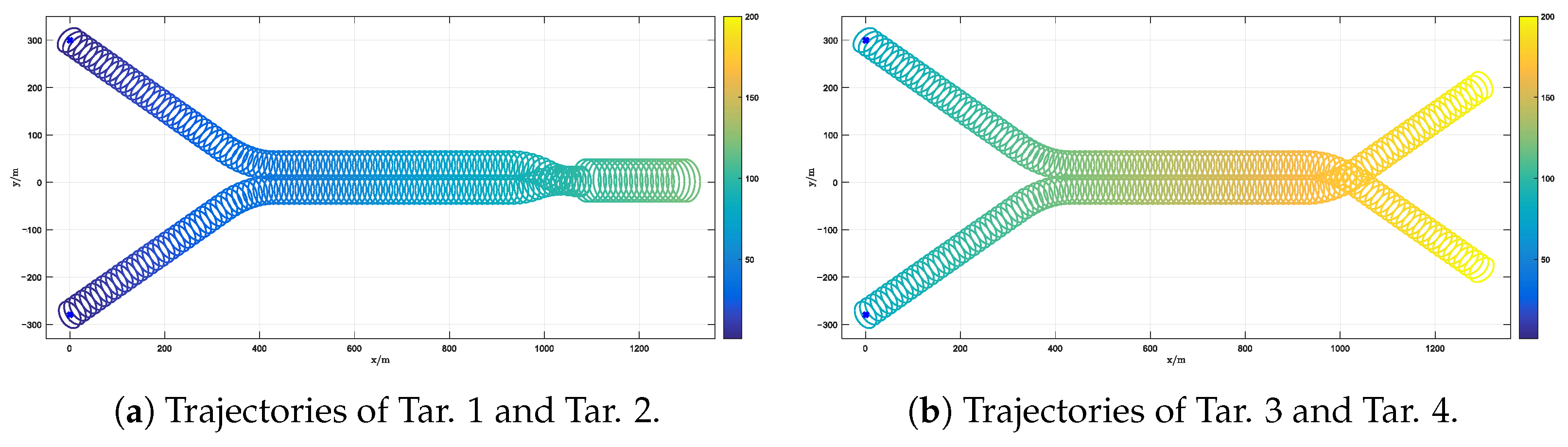

- All targets share a single state transition model with constant velocity dynamics. The shape of a target is assumed not to change during the time update, which fixes the state transition matrix and the corresponding process noise matrix.

- Target-generated measurements follow a non-homogeneous PPP with a preset Poisson parameter; for the merged target, this parameter is 15. Clutter is generated with its own Poisson parameter, which determines the measurement density of the scene. The measurement matrix and the covariance of the additive measurement noise are fixed as well.

- The detection probability and the survival probability of the targets are set to fixed values.

- There are two targets at the initial time. Their initial positions coincide with the two launch points, and each target is assigned a corresponding initial shape state. The birth components of each filter at every time step are consistent with the initial states of these two targets, and the probability mass function of the birth components in Equation (16) is set accordingly.

- For the GIW-CPHD filter, the shape states of each component cannot be specified explicitly, but its extent matrix can be calculated via Equation (65). The parameters of the inverse Wishart distribution of its birth components are chosen so that the extent of these birth components is consistent with that of the other filters.
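The constant-velocity time update mentioned in the first item of the list above can be sketched as follows. The matrices used in the experiments did not survive in this copy, so the state ordering [px, py, vx, vy], the sampling period `T`, the noise intensity `q`, and the white-noise-acceleration form of the process noise are all assumptions made for illustration.

```python
def mat_mul(A, B):
    """Plain-Python matrix product for lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def cv_predict(x, P, T, q):
    """Constant-velocity prediction for the state [px, py, vx, vy].

    T (sampling period) and q (process-noise intensity) are hypothetical
    parameters. The shape state would be carried over unchanged and is
    therefore not part of this function.
    """
    F = [[1.0, 0.0, T, 0.0],
         [0.0, 1.0, 0.0, T],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
    # Discretized white-noise-acceleration covariance, one 2x2 block per axis.
    a, b, c = q * T ** 4 / 4.0, q * T ** 3 / 2.0, q * T ** 2
    Q = [[a, 0.0, b, 0.0],
         [0.0, a, 0.0, b],
         [b, 0.0, c, 0.0],
         [0.0, b, 0.0, c]]
    x_pred = [sum(f * xi for f, xi in zip(row, x)) for row in F]
    FPFt = mat_mul(mat_mul(F, P), transpose(F))
    P_pred = [[FPFt[i][j] + Q[i][j] for j in range(4)] for i in range(4)]
    return x_pred, P_pred


if __name__ == "__main__":
    eye = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    print(cv_predict([24.0, 87.0, 0.0, 8.0], eye, 1.0, 0.1))
```

Keeping the shape state out of the prediction mirrors the stated assumption that the target shape does not change during the time update.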

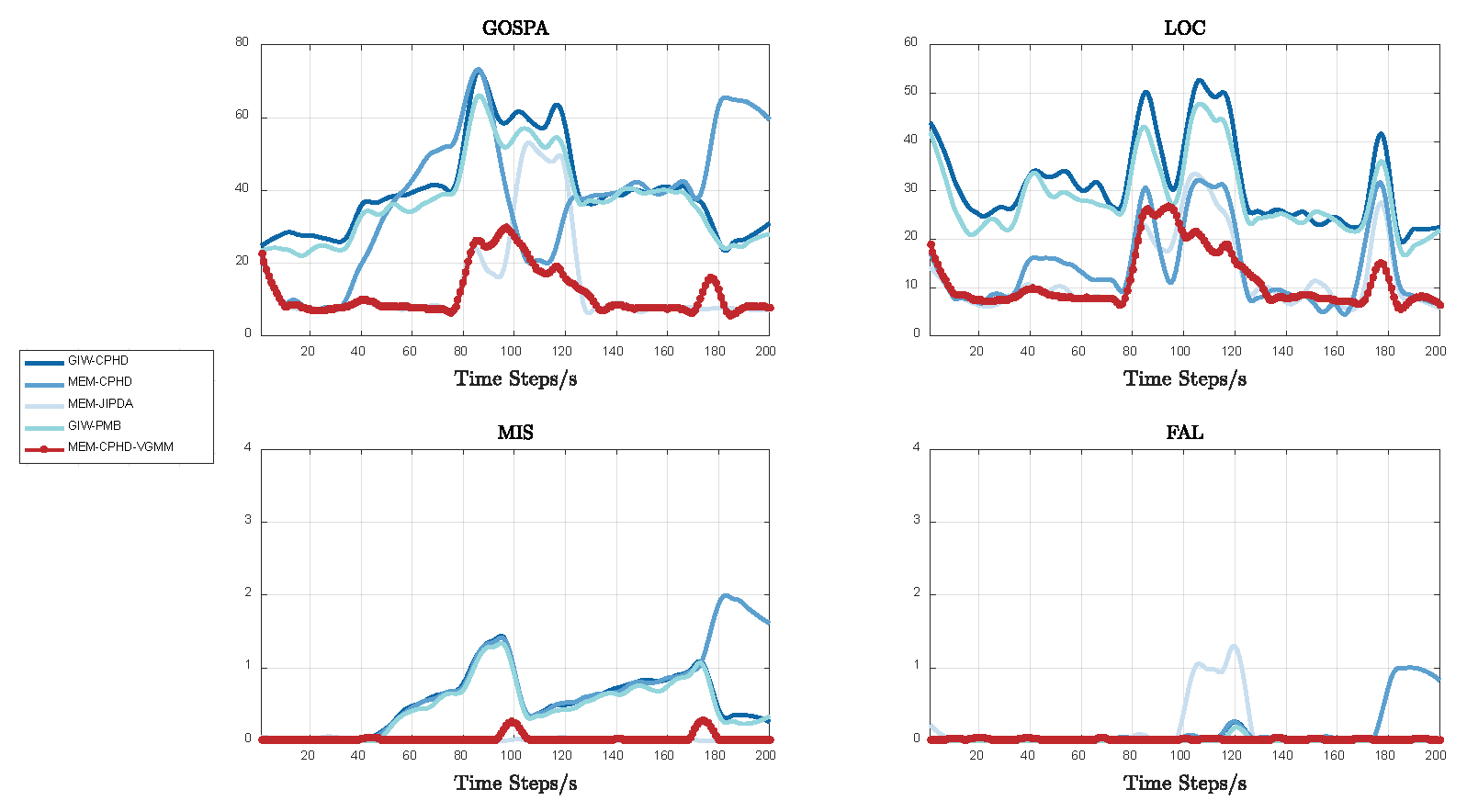

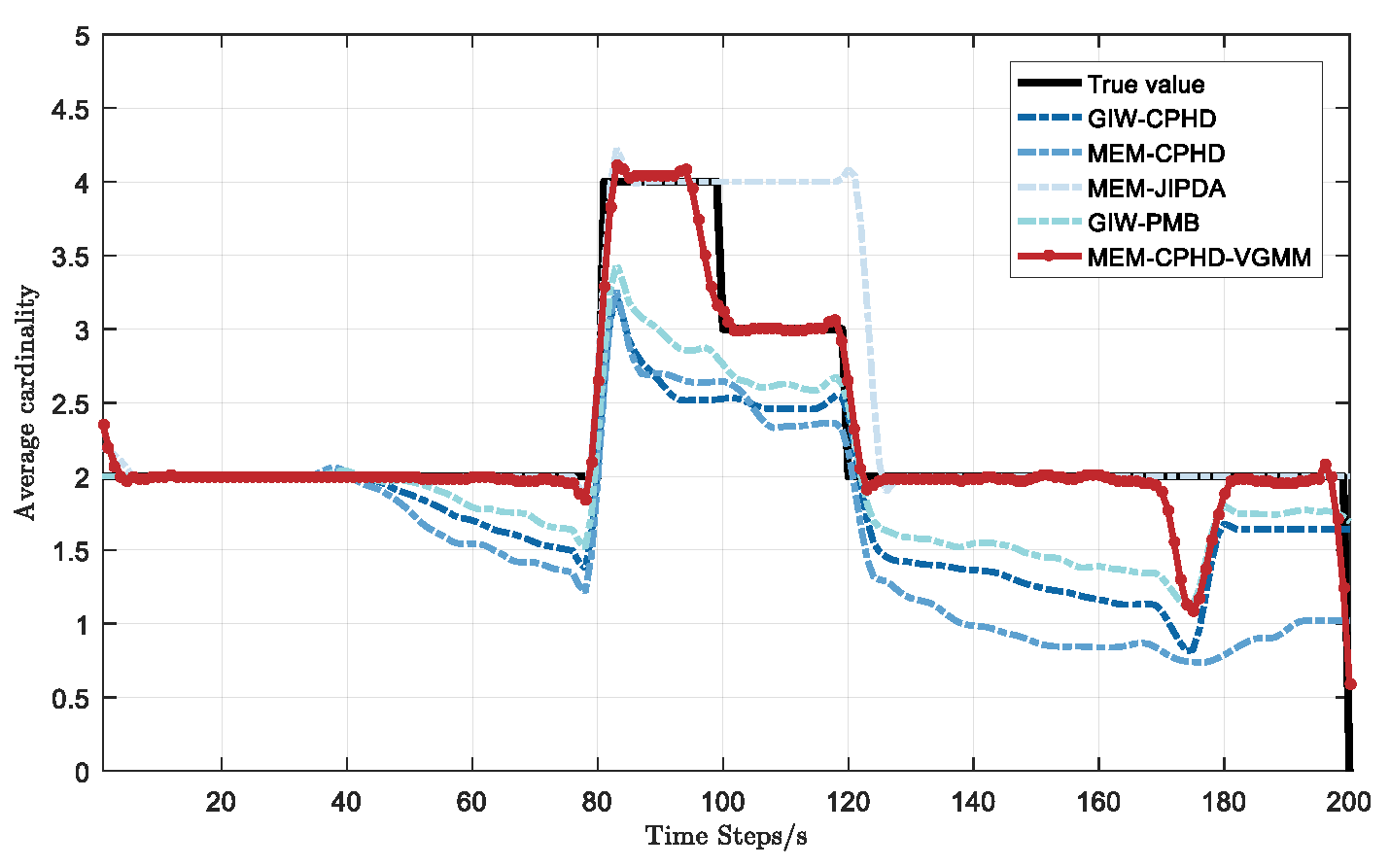

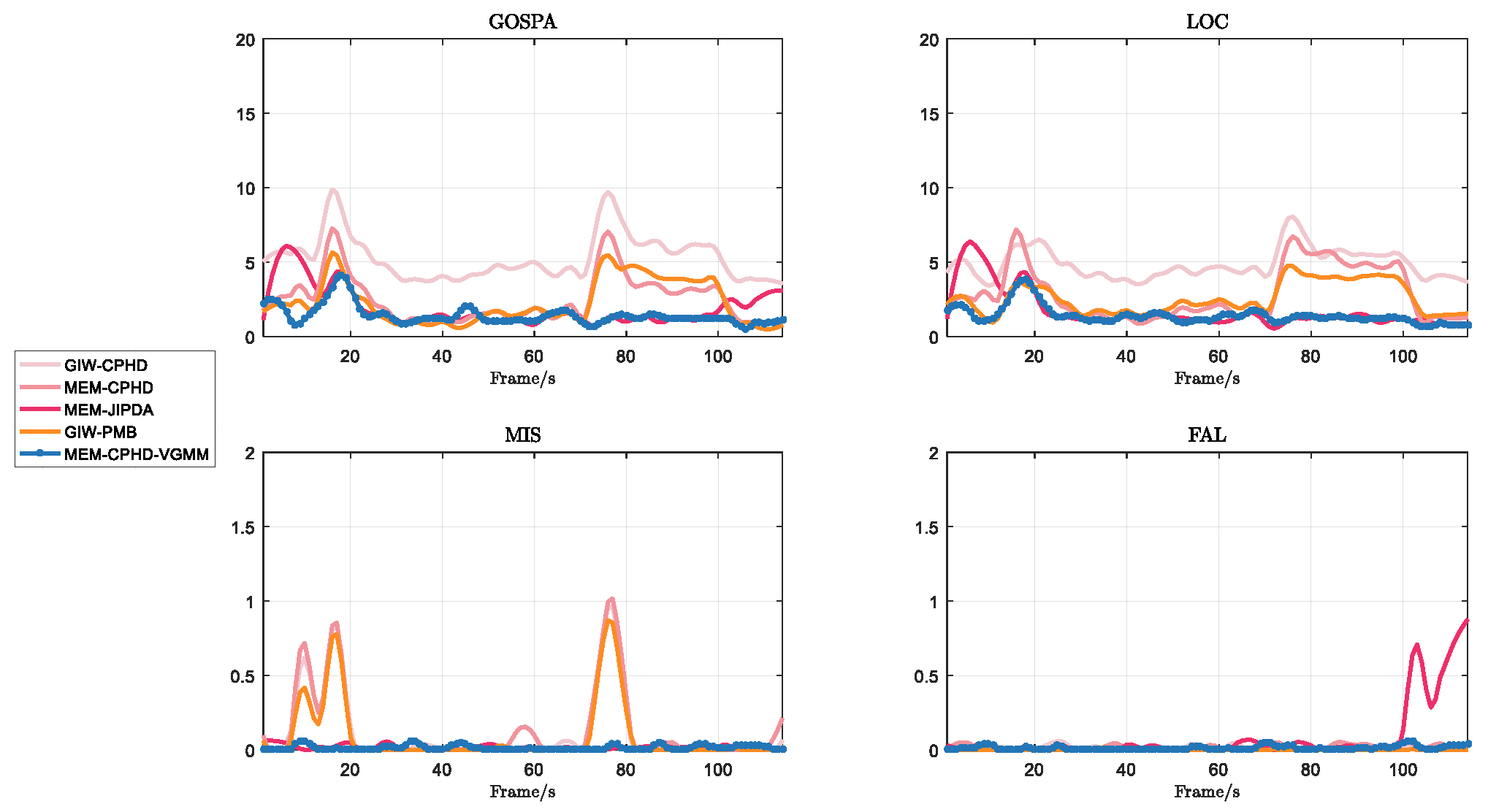

- GIW-CPHD has the worst shape estimation and therefore a significantly larger LOC than the other filters. GIW-PMB has the second-worst shape estimation; its LOC is lower than that of GIW-CPHD, but only marginally.

- MEM-CPHD estimates shape more accurately than GIW-CPHD and GIW-PMB, but all three filters suffer from state fusion for nearby targets. Their MIS therefore increases from 40 s to 100 s and from 120 s to 168 s, resulting in a larger GOSPA during these periods. Unlike the other two, MEM-CPHD continues to fuse estimates erroneously after the targets cross: after 178 s, the MIS values of GIW-CPHD and GIW-PMB return to normal, whereas the MIS of MEM-CPHD keeps rising and its FAL increases as well. As a result, MEM-CPHD has the largest GOSPA after 178 s.

- MEM-JIPDA has a stable MIS. However, because it is insensitive to target merging and disappearance, it retains redundant state estimates after the two targets merge, which produces a peak in its FAL after 100 s and increases its GOSPA.

- The proposed MEM-CPHD-VGMM performs best in terms of LOC, MIS, and FAL and therefore has the lowest GOSPA among all filters. However, when two targets remain in close proximity for a prolonged period, it still tends to treat them as one target, which produces small peaks in its MIS at around 95–105 s and 170–180 s and a slight increase in its GOSPA during these periods.
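The discussion above relies on GOSPA and on quantities labelled LOC, MIS, and FAL, which appear to be its localization, missed-target, and false-target parts. A minimal sketch of that decomposition is given below under stated assumptions: it uses a plain Euclidean distance between positions (an extent-aware distance would be needed for shape errors), sets the GOSPA parameter alpha to 2, and finds the assignment by brute force, which is only practical for a handful of targets. The cutoff `c` and exponent `p` are hypothetical values.

```python
import math
from itertools import permutations


def gospa(truth, est, c=10.0, p=2):
    """GOSPA (alpha = 2) with its decomposition.

    Returns (gospa_value, localization_cost, n_missed, n_false), where
    localization_cost is the sum of d**p over matched pairs.
    """
    n, m = len(truth), len(est)
    # Enumerate injective assignments of the smaller set into the larger one.
    small, large = (est, truth) if n > m else (truth, est)
    best = None
    for perm in permutations(range(len(large)), len(small)):
        loc, matched = 0.0, 0
        for i, j in enumerate(perm):
            d = math.dist(small[i], large[j])
            if d < c:  # pairs beyond the cutoff count as one missed plus one false target
                loc += d ** p
                matched += 1
        missed, false = n - matched, m - matched
        total = loc + (c ** p / 2.0) * (missed + false)
        if best is None or total < best[0]:
            best = (total, loc, missed, false)
    total, loc, missed, false = best
    return total ** (1.0 / p), loc, missed, false


if __name__ == "__main__":
    truth = [(0.0, 0.0), (10.0, 0.0)]
    est = [(0.0, 1.0), (50.0, 50.0)]
    print(gospa(truth, est, c=5.0, p=2))
```

State fusion shows up in this decomposition as a missed target: when two nearby targets are reported as one, one ground-truth object is left unassigned and the missed count rises by one.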

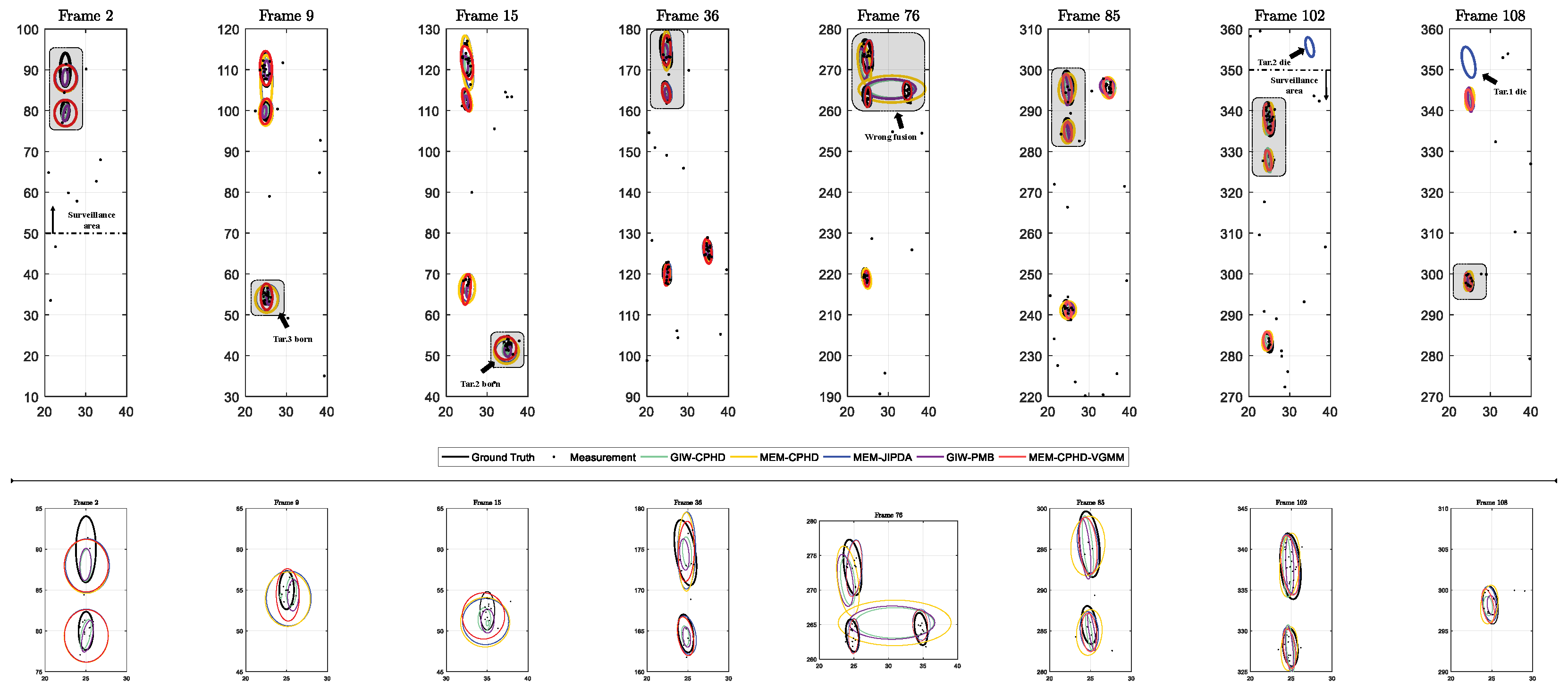

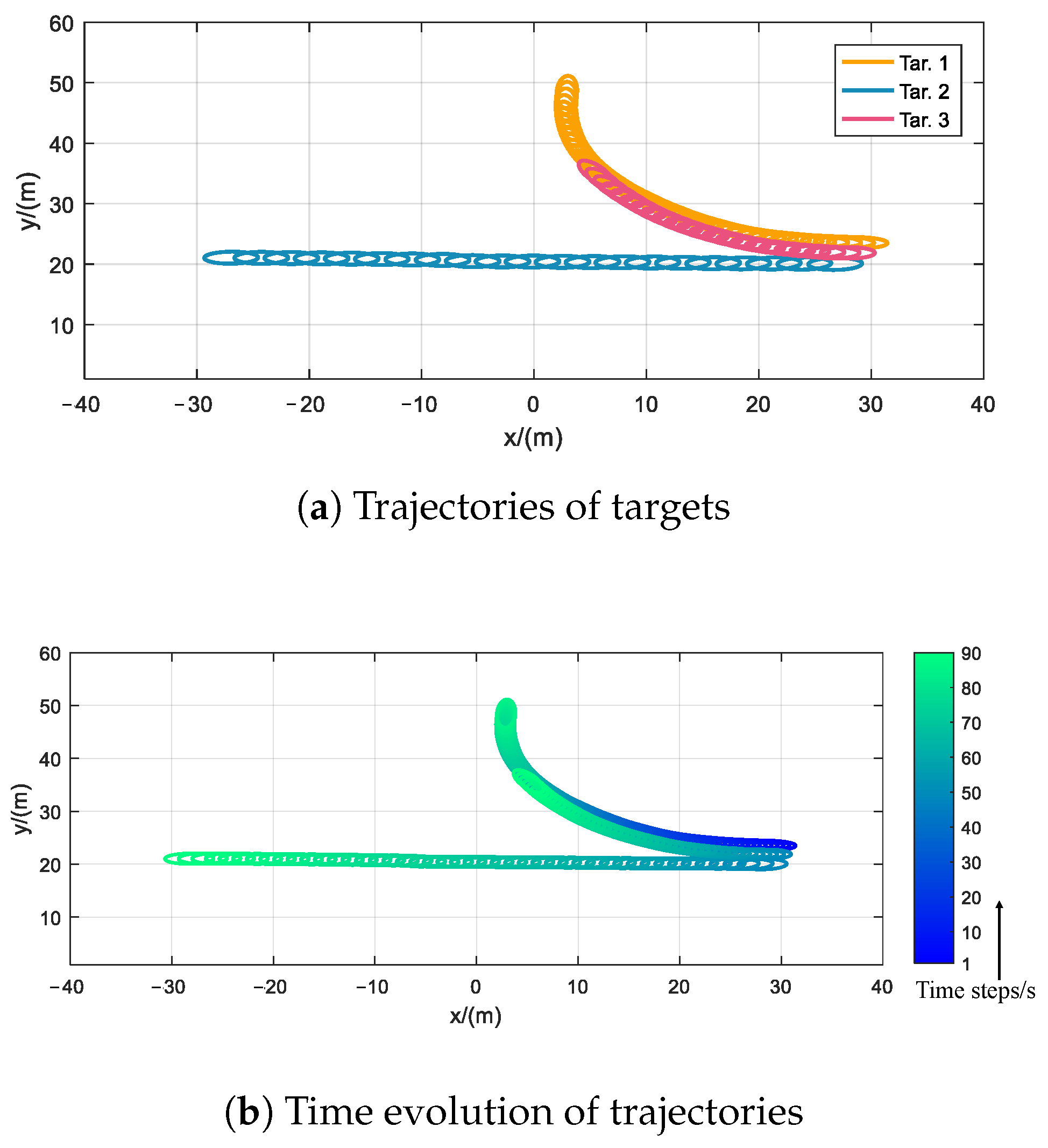

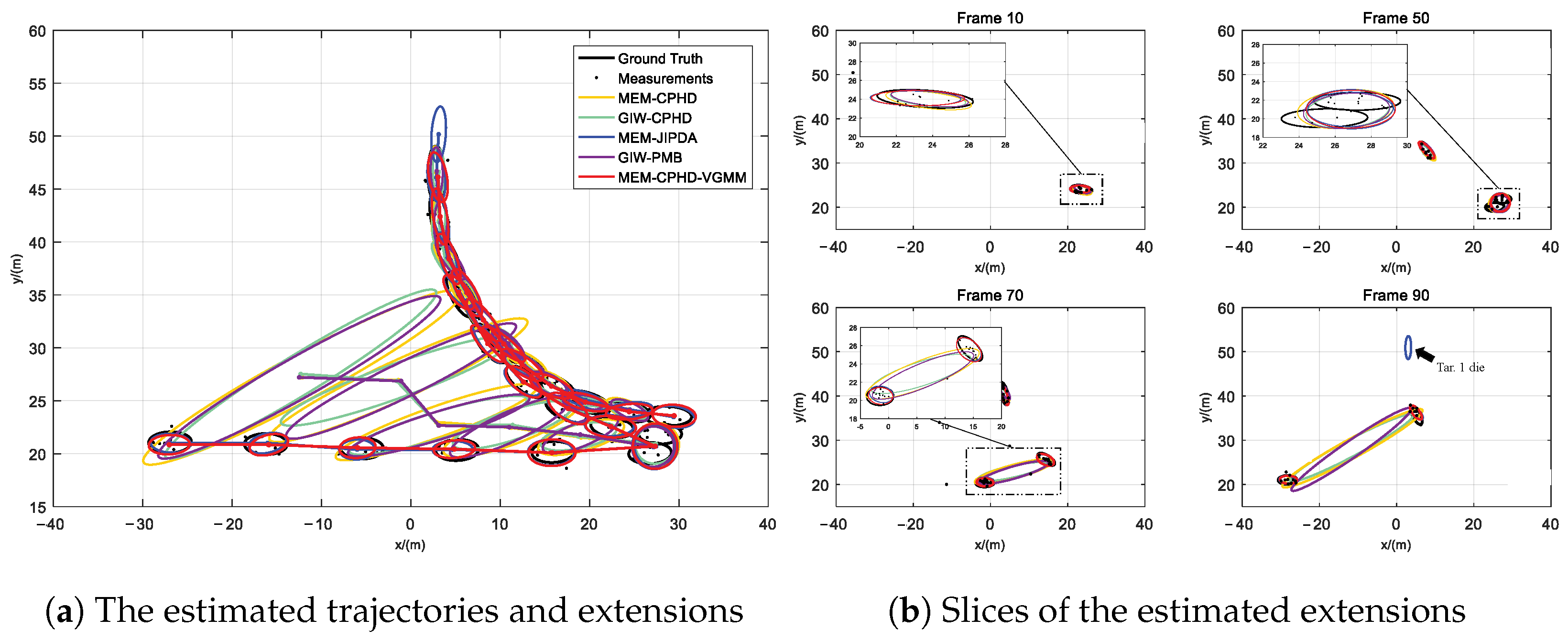

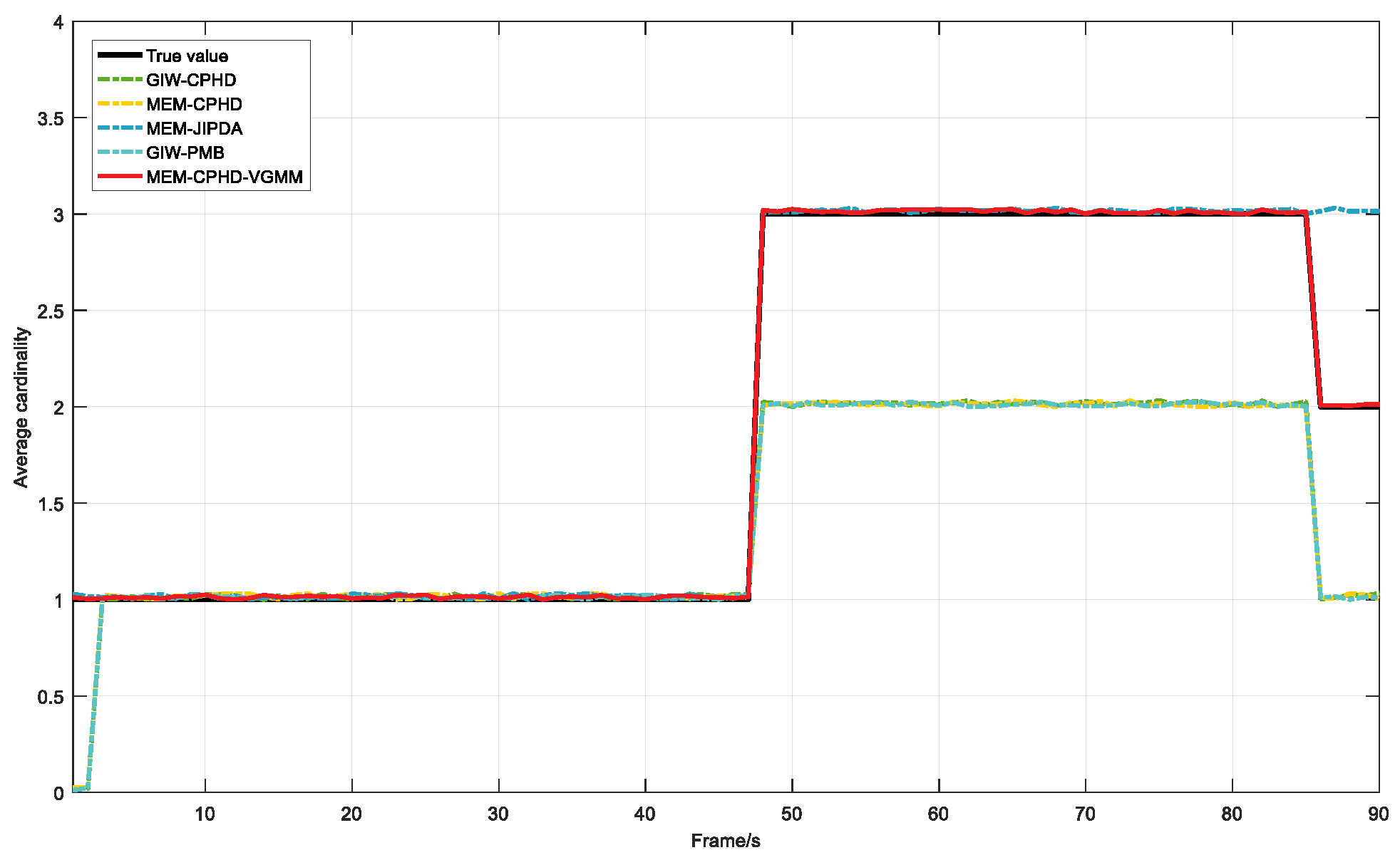

6.3. Experiment 2: Multi-Extended Target Tracking

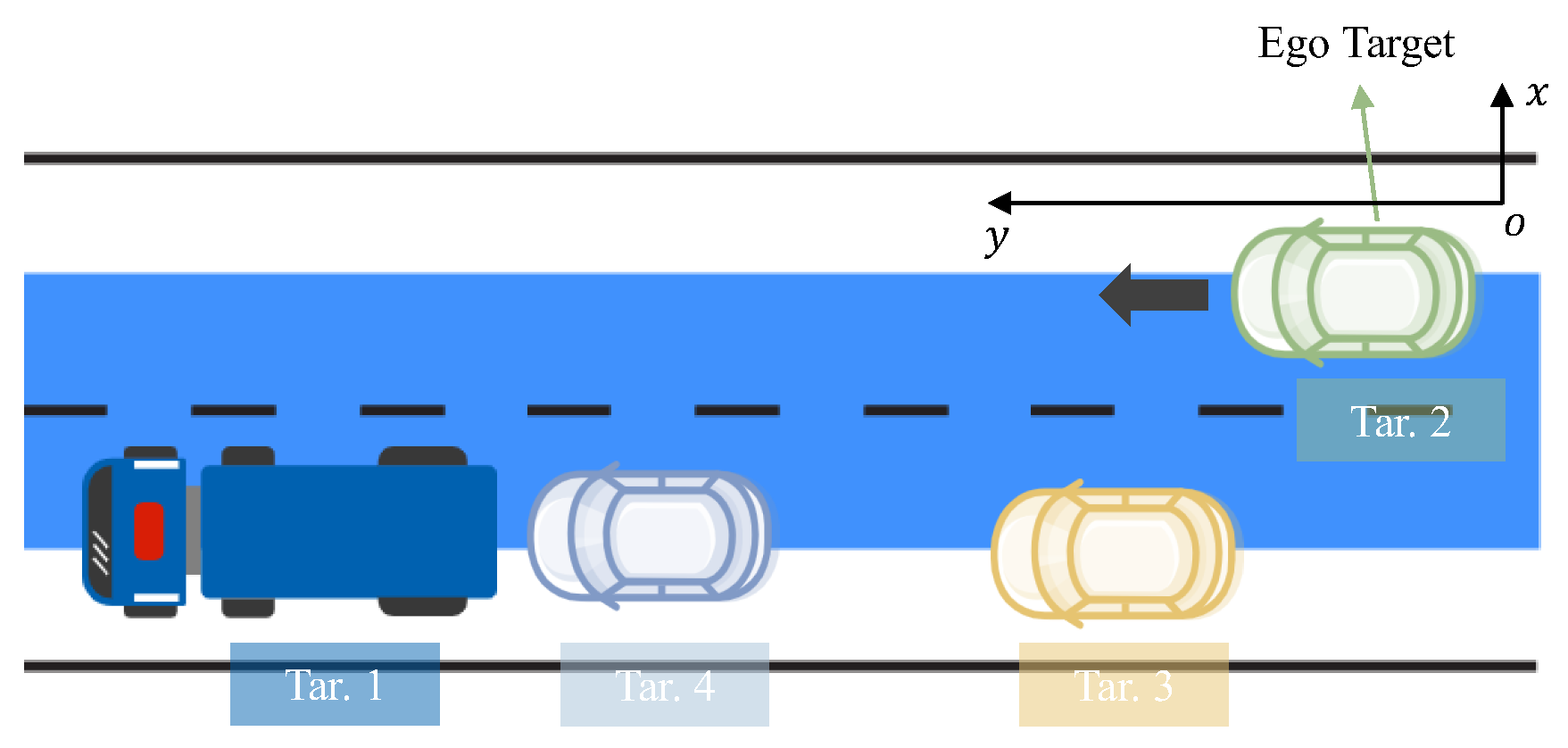

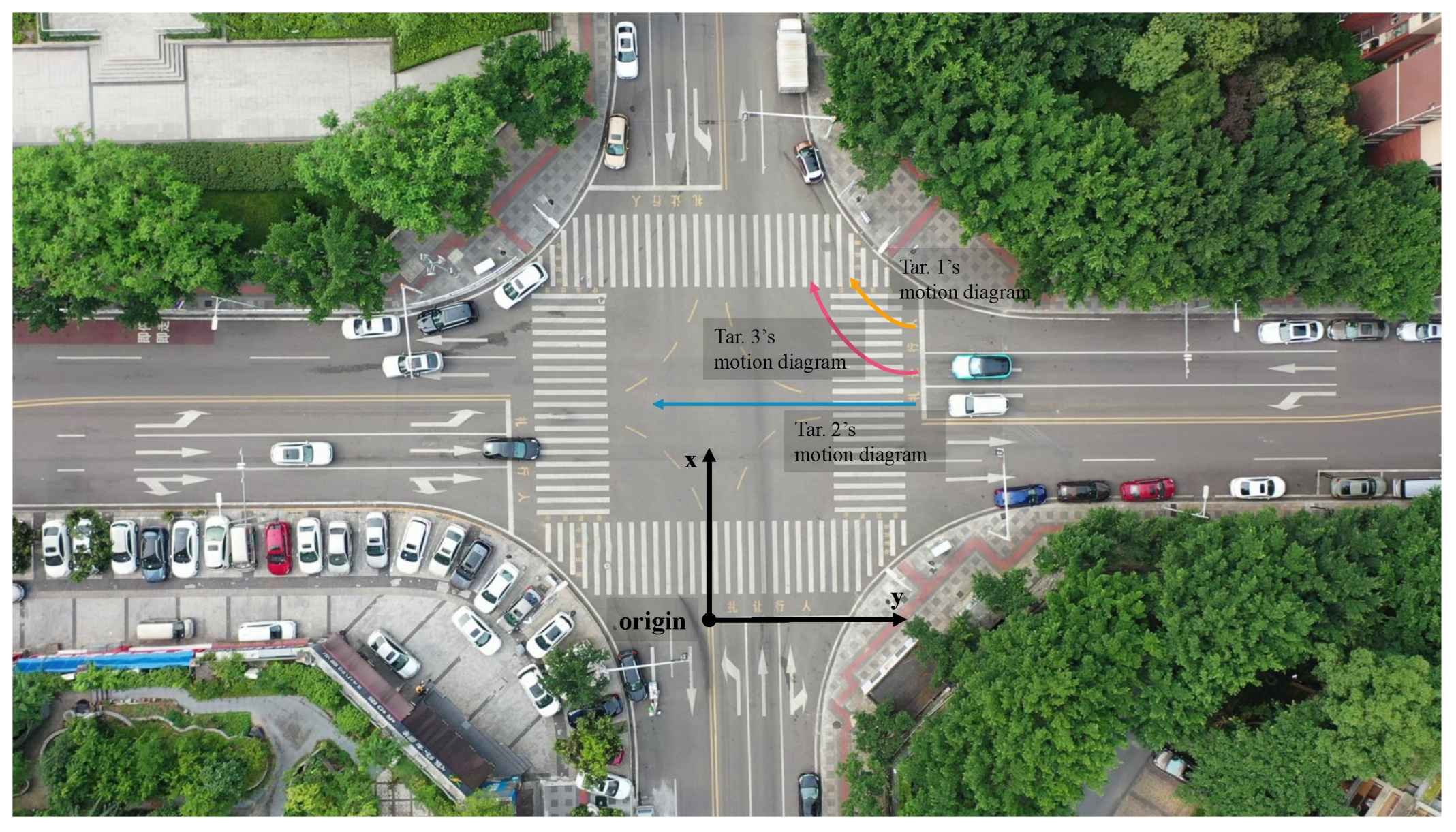

6.4. Experiment 3: Experiment with Real Data in the SIND Dataset

7. Discussion

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| METT | Multiple Extended Target Tracking |
| MUGTT | Multiple Unresolvable Group Target Tracking |
| RMM | Random Matrix Model |
| CPHD | Cardinalized Probability Hypothesis Density |
| SMU | Sequential Measurement Update |
| OSCRP | One-Step Clutter Removal Process |
| MTCE | Mean Target Count Error |

Appendix A. Notations and Corresponding Explanations


Appendix B. Proof of Theorem 1

Appendix C. Proof of Theorem 2

- The measurement and pseudo-measurement are in one-to-one correspondence.

- Equation (24) in [24] states that the pseudo-measurement is obtained via an uncorrelated transformation of the corresponding measurement, which means that the measurement and its pseudo-measurement are uncorrelated.
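The second point can be checked numerically with a short Python sketch. It assumes, following our reading of [24], that the pseudo-measurement stacks the second-order products of the measurement residual; the function names and sample size are illustrative. For a zero-mean Gaussian residual the third moment vanishes, so the residual and its square have zero covariance even though they are not independent.

```python
import random


def pseudo_measurement(residual):
    """Second-order terms of a 2D residual: (r1^2, r2^2, r1*r2)."""
    r1, r2 = residual
    return (r1 * r1, r2 * r2, r1 * r2)


def sample_cross_cov(n, std, rng):
    """Sample covariance between r1 and r1^2 for zero-mean Gaussian residuals."""
    rs = [rng.gauss(0.0, std) for _ in range(n)]
    sq = [pseudo_measurement((r, 0.0))[0] for r in rs]
    mean_r, mean_s = sum(rs) / n, sum(sq) / n
    return sum((r - mean_r) * (s - mean_s) for r, s in zip(rs, sq)) / n


if __name__ == "__main__":
    # The printed value should be close to zero.
    print(sample_cross_cov(20000, 1.0, random.Random(1)))
```

This is why the kinematic update driven by the measurement and the shape update driven by the pseudo-measurement can be treated separately, as condition C4 requires.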

Appendix D. Pseudo-Code

Algorithm A1 The VGMM process

Require: predicted PHD, measurement set, estimated number of targets from the cardinality distribution, maximum number of executions, maximum number of iterations.

- Initialize … = 0.
- while … or … do
  - Initialization: …, …, …, and ….
  - Iterations: for … do
    - Equation (42).
    - for … do (solve for auxiliary variables)
      - Equation (39), Equation (40).
    - end for
    - for … do
      - for … do (compute responsibilities)
        - Equation (41), Equation (28).
      - end for
      - Equation (27) (normalize responsibilities).
    - end for
    - for … do (update parameters of each variational distribution)
      - Equation (31), Equation (33), Equation (34).
      - Equation (35), Equation (36), Equation (30).
    - end for
  - end for
  - Obtain the final parameters: for … do (finalize the variational distribution parameters)
    - …, …, …, …, ….
  - end for
  - for … do
    - for … do (store the responsibilities)
    - end for
  - end for
- end while
- Output: ….

Algorithm A2 The measurement update of the MEM-CPHD-VGMM filter

Require: predicted PHD, measurement set, responsibilities for each measurement and each predicted component.

- Calculate the missed-detection part of the posterior PHD via ([17], Equation (41)).
- Calculate the detection part of the posterior PHD:
  - for … do
    - for each partition … do
      - for each measurement cell in … do
        - Update … via ([17], Equation (39)).
        - Update … and …:
          1. Initialize … and … via Equation (51).
          2. Initialize … and … via Equation (52).
          3. for … in the cell do (perform SMU for each posterior component)
             - Equation (53), Equation (54).
             - Equation (56), Equation (57).
             - end for
          4. Obtain … and … via Equations (58) and (59).
      - end for
    - end for
  - end for
- Calculate the posterior PHD via Equation (47).

References

- Granström, K.; Baum, M. A tutorial on multiple extended object tracking. TechRxiv 2022. preprint.

- Ding, G.; Liu, J.; Xia, Y.; Huang, T.; Zhu, B.; Sun, J. LiDAR point cloud-based multiple vehicle tracking with probabilistic measurement-region association. In Proceedings of the 27th International Conference on Information Fusion (FUSION), Venice, Italy, 7–11 July 2024; pp. 1–8.

- Liu, J.; Bai, L.; Xia, Y.; Huang, T.; Zhu, B.; Han, Q.-L. GNN-PMB: A simple but effective online 3D multi-object tracker without bells and whistles. IEEE Trans. Intell. Veh. 2023, 8, 1176–1189.

- Yao, X.; Qi, B.; Wang, P.; Di, R.; Zhang, W. Novel multi-target tracking based on Poisson multi-Bernoulli mixture filter for high-clutter maritime communications. In Proceedings of the 12th International Conference on Information Systems and Computing Technology (ISCTech), Xi’an, China, 8–11 November 2024; pp. 1–7.

- Yan, J.; Li, C.; Jiao, H.; Liu, H. Joint allocation of multi-aircraft radar transmit and maneuvering resource for multi-target tracking. In Proceedings of the 2024 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Zhuhai, China, 22–24 November 2024; pp. 1–5.

- Zhou, Z. Comprehensive discussion on remote sensing modeling and dynamic electromagnetic scattering for aircraft with speed brake deflection. Remote Sens. 2025, 17, 1706.

- Granström, K.; Baum, M.; Reuter, S. Extended object tracking: Introduction, overview and applications. J. Adv. Inf. Fusion 2017, 12, 139–174.

- Koch, W. Bayesian approach to extended object and cluster tracking using random matrices. IEEE Trans. Aerosp. Electron. Syst. 2008, 44, 1042–1059.

- Zhang, Y.; Chen, P.; Cao, Z.; Jia, Y. Random matrix-based group target tracking using nonlinear measurement. In Proceedings of the 5th IEEE International Conference on Electronics Technology (ICET), Chengdu, China, 13–16 May 2022; pp. 1224–1228.

- Bartlett, N.J.; Renton, C.; Wills, A.G. A closed-form prediction update for extended target tracking using random matrices. IEEE Trans. Signal Process. 2020, 68, 2404–2418.

- Granström, K.; Bramstång, J. Bayesian smoothing for the extended object random matrix model. IEEE Trans. Signal Process. 2019, 67, 3732–3742.

- Wieneke, M.; Koch, W. A PMHT approach for extended objects and object groups. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2349–2370.

- Schuster, M.; Reuter, J.; Wanielik, G. Probabilistic data association for tracking extended targets under clutter using random matrices. In Proceedings of the 18th International Conference on Information Fusion (FUSION), Washington, DC, USA, 6–9 July 2015; pp. 961–968.

- Wei, Y.; Lan, J.; Zhang, L. Multiple extended object tracking using PMHT with extension-dependent measurement numbers. In Proceedings of the 27th International Conference on Information Fusion (FUSION), Venice, Italy, 7–11 July 2024; pp. 1–8.

- Li, Y.; Shen, T.; Gao, L. Multisensor multiple extended objects tracking based on the message passing. IEEE Sens. J. 2024, 24, 16510–16528.

- Granström, K.; Orguner, U. A PHD filter for tracking multiple extended targets using random matrices. IEEE Trans. Signal Process. 2012, 60, 5657–5671.

- Lundquist, C.; Granström, K.; Orguner, U. An extended target CPHD filter and a Gamma Gaussian inverse Wishart implementation. IEEE J. Sel. Top. Signal Process. 2013, 7, 472–483.

- Granström, K.; Fatemi, M.; Svensson, L. Poisson multi-Bernoulli mixture conjugate prior for multiple extended target filtering. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 208–225.

- Xia, Y.; Granström, K.; Svensson, L.; Fatemi, M.; García-Fernández, Á.F.; Williams, J.L. Poisson multi-Bernoulli approximations for multiple extended object filtering. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 890–906.

- Wei, S.; García-Fernández, Á.F.; Yi, W. The trajectory PHD filter for coexisting point and extended target tracking. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 5669–5685.

- Xia, Y.; García-Fernández, Á.F.; Meyer, F.; Williams, J.L.; Granström, K.; Svensson, L. Trajectory PMB filters for extended object tracking using belief propagation. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 9312–9331.

- Zhu, S.; Liu, W.; Weng, C.; Cui, H. Multiple group targets tracking using the generalized labeled multi-Bernoulli filter. In Proceedings of the 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 4871–4876.

- García-Fernández, Á.F.; Svensson, L.; Williams, J.L.; Xia, Y.; Granström, K. Trajectory multi-Bernoulli filters for multi-target tracking based on sets of trajectories. In Proceedings of the 23rd International Conference on Information Fusion (FUSION), Pretoria, South Africa, 6–9 July 2020; pp. 1–8.

- Yang, S.; Baum, M. Tracking the orientation and axes lengths of an elliptical extended object. IEEE Trans. Signal Process. 2019, 67, 4720–4729.

- Tuncer, B.; Ozkan, E. Random matrix based extended target tracking with orientation: A new model and inference. IEEE Trans. Signal Process. 2021, 69, 1910–1923.

- Zhang, L.; Lan, J. Extended object tracking using aspect ratio. IEEE Trans. Signal Process. 2024. early access.

- Wen, Z.; Lan, J.; Zheng, L.; Zeng, T. Velocity-dependent orientation estimation using variance adaptation for extended object tracking. IEEE Signal Process. Lett. 2024, 31, 3109–3113.

- Wen, Z.; Zheng, L.; Zeng, T. Extended object tracking using an orientation vector based on constrained filtering. Remote Sens. 2025, 17, 1419.

- Yang, S.; Thormann, K.; Baum, M. Linear-time joint probabilistic data association for multiple extended object tracking. In Proceedings of the 10th IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, 8–11 July 2018; pp. 6–10.

- Fowdur, J.S.; Baum, M.; Heymann, F.; Banys, P. An overview of the PAKF-JPDA approach for elliptical multiple extended target tracking using high-resolution marine radar data. Remote Sens. 2023, 15, 2503.

- Yang, S.; Teich, F.; Baum, M. Network flow labeling for extended target tracking PHD filters. IEEE Trans. Ind. Inf. 2019, 15, 4164–4171.

- Ennaouri, M.; Ettahiri, I.; Zellou, A.; Doumi, K. Leveraging extravagant linguistic patterns to enhance fake review detection: A comparative study on clustering methods. In Proceedings of the 2024 International Conference on Electrical, Communication and Computer Engineering (ICECCE), Kuala Lumpur, Malaysia, 30–31 October 2024; pp. 1–5.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.-P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. 2017, 42, 1–21.

- Yang, S.; Wolf, L.M.; Baum, M. Marginal association probabilities for multiple extended objects without enumeration of measurement partitions. In Proceedings of the 23rd International Conference on Information Fusion (FUSION), Pretoria, South Africa, 6–9 July 2020; pp. 1–8.

- Cheng, Y.; Cao, Y.; Yeo, T.-S.; Yang, W.; Jie, F. Using fuzzy clustering technology to implementing multiple unresolvable group object tracking in a clutter environment. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 8839–8854.

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics); Springer: Berlin/Heidelberg, Germany, 2006.

- Scheel, A.; Dietmayer, K. Tracking multiple vehicles using a variational radar model. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3721–3736.

- Honer, J.; Kaulbersch, H. Bayesian extended target tracking with automotive radar using learned spatial distribution models. In Proceedings of the 2020 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Karlsruhe, Germany, 14–16 September 2020; pp. 316–322.

- Baum, M.; Hanebeck, U.D. Extended object tracking with random hypersurface models. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 149–159.

- Gilholm, K.; Salmond, D. Spatial distribution model for tracking extended objects. IEE Proc. Radar Sonar Navig. 2005, 152, 364–371.

- Orguner, U.; Lundquist, C.; Granström, K. Extended target tracking with a cardinalized probability hypothesis density filter. In Proceedings of the 14th International Conference on Information Fusion (FUSION), Chicago, IL, USA, 5–8 July 2011; pp. 1–8.

- Zhang, X.; Jiao, P.; Gao, M.; Li, T.; Wu, Y.; Wu, H.; Wu, H.; Zhao, Z. VGGM: Variational graph Gaussian mixture model for unsupervised change point detection in dynamic networks. IEEE Trans. Inf. Forensics Secur. 2024, 19, 4272–4284.

- An, Y.; Zhang, K.; Chai, Y.; Zhu, Z.; Liu, Q. Gaussian mixture variational-based transformer domain adaptation fault diagnosis method and its application in bearing fault diagnosis. IEEE Trans. Ind. Inf. 2024, 20, 615–625.

- Bishop, C.M. Chapter 10: Variational Inference and EM. In Pattern Recognition and Machine Learning: Solutions to the Exercises; Springer: Berlin/Heidelberg, Germany, 2006.

- Blackman, S.S.; Popoli, R.F. Design and Analysis of Modern Tracking Systems; Artech House: London, UK, 1999.

- Xue, X.; Wei, D.; Huang, S. A novel TPMBM filter for partly resolvable multitarget tracking. IEEE Sens. J. 2024, 24, 16629–16646.

- Chen, C.; Yang, J.; Liu, J. Adaptive Poisson multi-Bernoulli filter for multiple extended targets with Gamma and Beta estimator. Digit. Signal Process. 2025, 163, 105204.

- Yang, S.; Baum, M.; Granström, K. Metrics for performance evaluation of elliptic extended object tracking methods. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Baden-Baden, Germany, 19–21 September 2016; pp. 523–528.

- Rahmathullah, A.S.; García-Fernández, Á.F.; Svensson, L. Generalized optimal sub-pattern assignment metric. In Proceedings of the 20th International Conference on Information Fusion (FUSION), Xi’an, China, 10–13 July 2017; pp. 1–8.

- Xu, Y.; Shao, W.; Li, J.; Yang, K.; Wang, W.; Huang, H.; Lv, C.; Wang, H. SIND: A drone dataset at signalized intersection in China. In Proceedings of the 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 2471–2478.

- Li, G.; Kong, L.; Yi, W.; Li, X. Robust Poisson multi-Bernoulli mixture filter with unknown detection probability. IEEE Trans. Veh. Technol. 2021, 70, 886–899.

- Baerveldt, M.; López, M.E.; Brekke, E.F. Extended target PMBM tracker with a Gaussian process target model on LiDAR data. In Proceedings of the 26th International Conference on Information Fusion (FUSION), Charleston, SC, USA, 27–30 June 2023; pp. 1–8.

- Wang, L.; Zhan, R. Joint detection, tracking and classification of multiple maneuvering star-convex extended targets. IEEE Sens. J. 2023, 24, 5004–5024.

- Li, M.; Lan, J.; Li, X.R. Tracking of extended object using random triangle model. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 2926–2940.

- Yang, H.; Zhu, Y. B-spline model for extended object tracking based on sequential fusion. In Proceedings of the 6th International Conference on Intelligent Control, Measurement and Signal Processing (ICMSP), Xi’an, China, 29 November–1 December 2024; pp. 682–685.

- Cao, X.; Tian, Y.; Yang, J.; Li, W.; Yi, W. Trajectory PHD filter for extended traffic target tracking with interaction and constraint. In Proceedings of the 27th International Conference on Information Fusion (FUSION), Venice, Italy, 7–11 July 2024; pp. 1–8.

- Xiong, Y.; Cao, X.; Luo, Y.; Zhang, L.; Li, W. The extended target trajectory PHD filter combined with interacting multiple model. In Proceedings of the 28th International Conference on Information Fusion (FUSION), Rio de Janeiro, Brazil, 7–11 July 2025; pp. 1–7.

| Explanation | Default Value |
|---|---|
| Maximum number of executions for VGMM | 5 |
| Maximum number of iterations per execution | 5 |
| Initial parameters of j-th Gaussian distribution | |
| Initial parameters of j-th Dirichlet distribution | |
| Initial parameters of j-th Wishart distribution | |

| Time step | 1 s | 81 s | 101 s | 121 s | 176 s | 201 s |
|---|---|---|---|---|---|---|
| Number of targets in scene | 2 | 4 | 3 | 2 | 2 | 0 |
| Situation | Tar. 1 and Tar. 2 birth | Tar. 3 and Tar. 4 birth | Tar. 1 and Tar. 2 merge | Merged target dies | Tar. 3 and Tar. 4 cross | Tar. 3 and Tar. 4 die |

| | Experiment 1 | | | Experiment 2 | | |
|---|---|---|---|---|---|---|
| | Target Count = 4 | | | Target Count = 4 | | |
| Filter | | | MTCE ↓ | | | MTCE ↓ |
| GIW-CPHD | 0.512 | 0.018 | 0.530 | 0.108 | 0.004 | 0.112 |
| MEM-CPHD | 0.687 | 0.123 | 0.810 | 0.100 | 0.003 | 0.103 |
| MEM-JIPDA | 0.009 | 0.139 | 0.148 | 0.011 | 0.078 | 0.089 |
| GIW-PMB | 0.464 | 0.012 | 0.476 | 0.083 | 0.003 | 0.086 |
| MEM-CPHD-VGMM | 0.017 | 0.009 | 0.026 | 0.006 | 0.001 | 0.007 |

| Color | Type | Size | Motion Time | Initial State | Identifier |
|---|---|---|---|---|---|
| Dark blue | Truck | [8.10 × 2.50] | [1, 107] | [24, 87, 0, 8] | Tar. 1 |
| Light green | Saloon car | [4.80 × 1.80] | [15, 101] | [35, 50, 0, 10] | Tar. 2 |
| Orange | Saloon car | [4.80 × 1.80] | [9, 114] | [26, 50, 0, 10] | Tar. 3 |
| Light blue | Saloon car | [4.80 × 1.80] | [1, 111] | [25, 76, 0, 10] | Tar. 4 |

| | Experiment 1 (Target Count = 4) | | Experiment 2 (Target Count = 4) | |
|---|---|---|---|---|
| Filter | Single MC ↓ | Per Iteration Cycle ↓ | Single MC ↓ | Per Iteration Cycle ↓ |
| GIW-CPHD | 170.80 | 0.854 | 174.53 | 1.531 |
| MEM-CPHD | 193.42 | 0.967 | 190.84 | 1.674 |
| MEM-JIPDA | 256.61 | 1.283 | 230.05 | 2.018 |
| GIW-PMB | 184.76 | 0.924 | 185.77 | 1.630 |
| MEM-CPHD-VGMM | 275.96 | 1.376 | 241.34 | 2.117 |

| Experiment 1 | | | | | |
|---|---|---|---|---|---|
| Original parameters: … = 0.98, … = 1, … = 5, … | | | | | |
| Parameter change | GIW-CPHD | MEM-CPHD | MEM-JIPDA | GIW-PMB | MEM-CPHD-VGMM |
| No change | 39.901 | 36.193 | 34.185 | 37.198 | 33.036 |
| | 40.303 | 36.594 | 35.373 | 38.345 | 33.284 |
| | 41.824 | 39.202 | 36.642 | 39.112 | 35.634 |
| | 42.669 | 40.185 | 38.784 | 38.224 | 37.332 |
| | 43.225 | 42.185 | 40.105 | 41.875 | 39.717 |
| | 43.665 | 40.378 | 38.718 | 40.487 | 37.093 |
| | 49.556 | 47.098 | 43.137 | 46.512 | 42.538 |
| | 41.612 | 39.631 | 37.875 | 39.147 | 36.991 |
| Experiment 2 | | | | | |
| Original parameters: … = 0.98, … = 1, … = 5, … | | | | | |
| Parameter change | GIW-CPHD | MEM-CPHD | MEM-JIPDA | GIW-PMB | MEM-CPHD-VGMM |
| No change | 3.271 | 2.523 | 1.936 | 2.784 | 1.560 |
| | 4.055 | 2.669 | 2.619 | 3.015 | 1.577 |
| | 3.632 | 2.811 | 2.097 | 3.245 | 1.808 |
| | 3.752 | 3.210 | 2.614 | 2.832 | 2.108 |
| | 3.951 | 3.567 | 2.514 | 3.193 | 2.208 |
| | 3.864 | 2.951 | 2.597 | 3.047 | 2.348 |
| | 4.357 | 3.480 | 2.839 | 3.217 | 2.410 |
| | 3.745 | 3.160 | 2.621 | 2.979 | 2.181 |

| Experiment 3 | | | | | |
|---|---|---|---|---|---|
| Target Count = 3 | | | | | |
| | GIW-CPHD | MEM-CPHD | MEM-JIPDA | GIW-PMB | MEM-CPHD-VGMM |
| | 0.833 | 0.811 | 0.003 | 0.807 | 0.002 |
| | 0.331 | 0.324 | 0.055 | 0.326 | |
| MTCE ↓ | 1.164 | 1.135 | 0.058 | 1.133 | |

| Experiment 3 | | | | | |
|---|---|---|---|---|---|
| Target Count = 3; Maximum Measurement Density: … | | | | | |
| | GIW-CPHD | MEM-CPHD | MEM-JIPDA | GIW-PMB | MEM-CPHD-VGMM |
| Total runtime ↓ | 49.05 | 51.75 | 53.37 | 52.74 | 55.17 |
| Per iteration cycle ↓ | 0.545 | 0.575 | 0.593 | 0.586 | 0.613 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, Y.; Cao, Y.; Yeo, T.-S.; Zhang, Y.; Fu, J. Variational Gaussian Mixture Model for Tracking Multiple Extended Targets or Unresolvable Group Targets in Closely Spaced Scenarios. Remote Sens. 2025, 17, 3696. https://doi.org/10.3390/rs17223696
