1. Introduction

Digital elevation models (DEMs) are digital representations of variations in ground surface elevation. DEMs serve as a fundamental data source for spatial information analysis, which is crucial in fields such as hydrological analysis, urban planning, and landform evolution analysis [1,2,3]. While these applications are critical, their accuracy and reliability are often directly constrained by the resolution of the input DEM. High-resolution (HR) DEMs can capture finer topographic features [4], such as small gullies, steep riverbanks, subtle fault lines, and artificial structures. They are particularly important not only for providing more accurate topographic information for real-world 3D construction and land use optimization, but also for improving the quality of decision-making in disaster management and environmental protection, for example in flood monitoring, earthquake assessment, and landslide risk assessment [5,6,7].

Traditional methods for obtaining high-resolution DEMs mainly include light detection and ranging (LiDAR), aerial photogrammetry, and geodetic surveying, all of which have certain limitations, such as limited measurement range, sensitivity to harsh weather conditions, and excessive costs in manpower and material resources [8,9]. To overcome these limitations, interferometric synthetic aperture radar (InSAR) technology has recently been employed to generate large-extent, high-resolution DEMs owing to its all-weather, all-day capability and its immunity to clouds, rain, and fog [10,11]. However, the generated InSAR DEMs contain missing-data areas caused by factors such as shadowing, signal noise, and temporal phase variations in the imaged area [12]. Given the many challenges of acquiring high-resolution DEMs, an alternative is to exploit the large volume of low-resolution DEM data with global coverage, such as SRTM and ALOS World 3D. The past decade has witnessed significant advances in deep learning, which, building on its strong performance in image super-resolution (SR) tasks, has become a viable pathway for reconstructing seamless, high-resolution DEMs from low-resolution (LR) DEMs [13].

SR technology has its roots in digital image processing and computer vision. Its fundamental concept is to use algorithms to reconstruct HR images from one or several LR images [14]. Traditional image SR methods are predominantly based on interpolation or regularization, yet their effectiveness remains limited. Interpolation-based methods, such as inverse distance weighting, spline function interpolation, and bicubic convolution [15,16,17], offer computational simplicity and rapid processing, yet suffer from pronounced edge effects and over-smoothing [18]. Regularization-based methods constrain parameters during image reconstruction by incorporating prior knowledge, such as edge-directed priors and similarity redundancy [19,20], thereby effectively suppressing noise and overfitting. However, they produce ringing artifacts and are highly dependent on prior knowledge [21]. The widespread adoption of convolutional neural networks (CNNs) has greatly advanced deep learning-based SR methods, leading to unprecedented progress in reconstructing high-resolution images [22]. These methods leverage extensive training data to learn a complex nonlinear mapping from LR to HR images, and subsequently employ this mapping to predict high-resolution outputs. Their evolution has progressed from the pioneering Super-Resolution Convolutional Neural Network (SRCNN) [23], to models employing deeper network structures and efficient up-sampling strategies, such as the Very Deep Convolutional Network (VDSR) [24] and the Efficient Sub-Pixel Convolutional Neural Network (ESPCN) [25], and further to high-performance architectures with enhanced representation learning capabilities, such as the Enhanced Deep Residual Network (EDSR) [26] and the Residual Dense Network (RDN) [27]. To further enhance perceptual quality, Generative Adversarial Networks (GANs) [28] were introduced into the field, giving rise to models such as the Super-Resolution Generative Adversarial Network (SRGAN) [29] and the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) [30], which are capable of generating photo-realistic details.

Inspired by image SR, deep learning-based methods are increasingly applied to DEM SR reconstruction. Xu et al. trained a deep CNN on natural images to acquire gradient-based prior knowledge and then used transfer learning to reconstruct high-resolution DEMs [31]. Deng et al. developed a deep residual generative adversarial network for DEM SR, yielding more accurate reconstruction results while retaining more topographic features [32]. However, DEM data differ significantly from natural images in that each pixel represents a continuous elevation value rather than a discrete RGB color intensity, with a spatial distribution governed by physical processes such as hydrology and geomorphology. Therefore, directly transferring SR models designed for images to DEM data often results in issues such as excessive smoothing and texture distortion in the reconstructed DEMs [33]. To address these issues, a growing number of researchers have used raster terrain features, such as slope, and vector terrain features, such as ridge lines and river networks, in high-precision DEM reconstruction to strengthen the model’s sensitivity to terrain changes. Zhou et al. assessed the impact of incorporating different sets of terrain features into the loss function and achieved better elevation accuracy and terrain retention than other methods [34]. Jiang et al. incorporated slope and curvature losses into a high-resolution DEM reconstruction model, obtaining better reconstruction results in High Mountain Asia [35]. Gao and Yue investigated the integration of optical remote sensing images to assist DEM super-resolution, reconstructing DEMs with high-fidelity elevation and clearer topographic details [36]. Although some studies have incorporated terrain features or optical remote sensing images, most current methods still rely on a single data source, which constrains the models’ perception and reasoning capabilities. To overcome this bottleneck, integrating multi-source data, such as DEM data and SAR images, to provide complementary information has become an inevitable trend.

Furthermore, most models enhance performance by increasing network depth, which is accompanied by a sharp rise in computational complexity and substantial growth in model parameters, severely constraining training and deployment efficiency in practical scenarios. The Transformer module [37], initially developed for Natural Language Processing (NLP), helps address this issue. Through an efficient global self-attention mechanism, this module can capture long-range contextual dependencies at relatively shallow network depths, thereby avoiding the parameter redundancy associated with simply stacking convolutional layers [38,39]. Its outstanding performance has also led many scholars to apply it to the field of SR. Yang et al. proposed a Texture Transformer Network for Image Super-Resolution (TTSR), which restores textures at multiple scales, achieving significant improvements in both evaluation metrics and visual quality [40]. Liu et al. proposed an image Super-Resolution network based on the Global Dependency Transformer (GDTSR), enabling each pixel to establish global dependencies with the entire feature map, thereby effectively enhancing the model’s performance and accuracy [41]. Given its proven efficacy in image SR, adapting the Transformer module to DEM SR represents a meaningful direction for developing more precise and efficient reconstruction models.

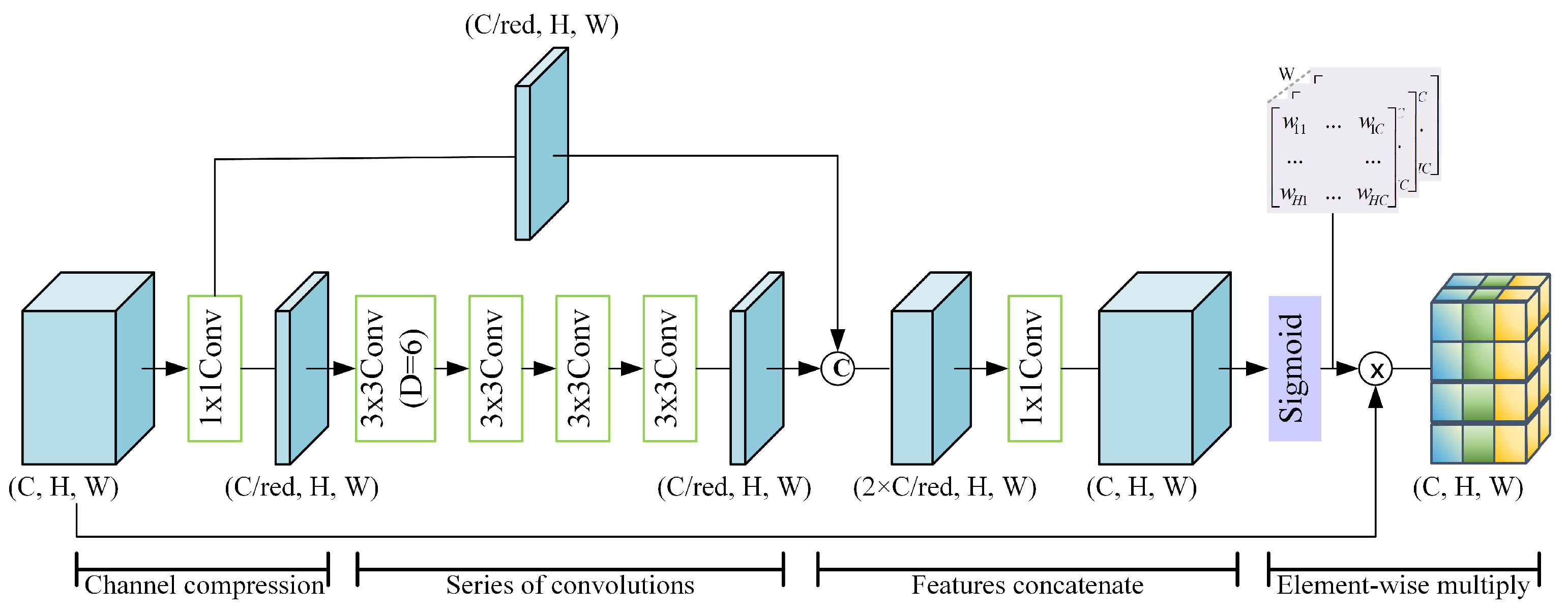

Therefore, this paper proposes a Transformer-based residual attention network combining SAR and terrain features for DEM super-resolution reconstruction (TRAN-ST). TRAN-ST consists mainly of a feature fusion module, a deep feature extraction module, and a loss function module. The feature fusion module optimizes the DEM reconstruction process and improves elevation estimation accuracy by fusing SAR features with DEM data as a joint input. The deep feature extraction module is the backbone of the network, combining a Transformer with residual attention to learn feature information more efficiently. Introducing terrain features as losses in the loss function module improves terrain reconstruction and retains more terrain information. The main contributions of this study are summarized as follows:

We propose a novel DEM SR reconstruction algorithm that introduces SAR data as a complementary source to DEMs. It overcomes the limitations of single-source dependency in traditional DEM super-resolution, which often leads to insufficient detail restoration and poor generalization. By leveraging the synergy between SAR’s all-weather penetration capability and elevation information, the proposed algorithm effectively produces seamless high-resolution DEMs with enhanced terrain fidelity.

We further incorporate both SAR features and terrain features into the network, significantly advancing the representation capability of the model. The introduced SAR features provide high-frequency spatial information that is often absent in low-resolution DEMs, while terrain feature constraints in the loss function enhance terrain consistency. Together, these features not only enhance the reconstruction of DEM details but also improve overall DEM accuracy.

Our algorithm combines a lightweight Transformer module with a residual feature aggregation structure to enhance global perception while aggregating local residual features to capture more comprehensive feature information, and it also effectively reduces the model’s redundant parameters.

4. Discussion

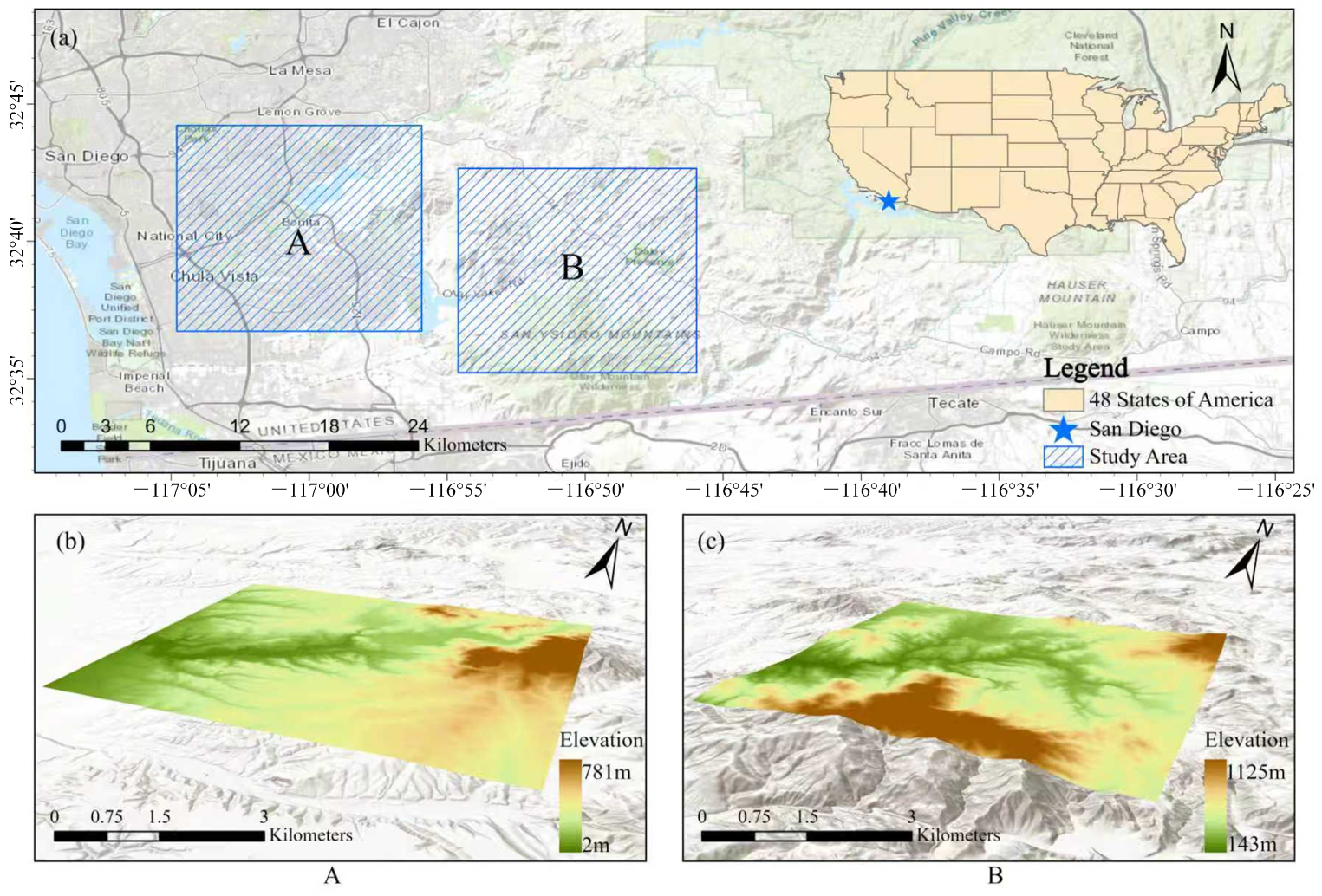

4.1. Enhancing DEM Reconstruction Accuracy with SAR Data

The study area’s 3 m resolution photogrammetric DEM, covering a local target area and downloaded from the United States Department of Agriculture (https://datagateway.nrcs.usda.gov/, accessed on 11 October 2024), was used to assess the performance of our SAR-integrated model against single-source reconstruction models, as shown in Figure 10. This comparison enables a more thorough evaluation of each model’s DEM reconstruction results, because the reconstruction accuracy in missing-data areas cannot be confirmed using the InSAR DEM.

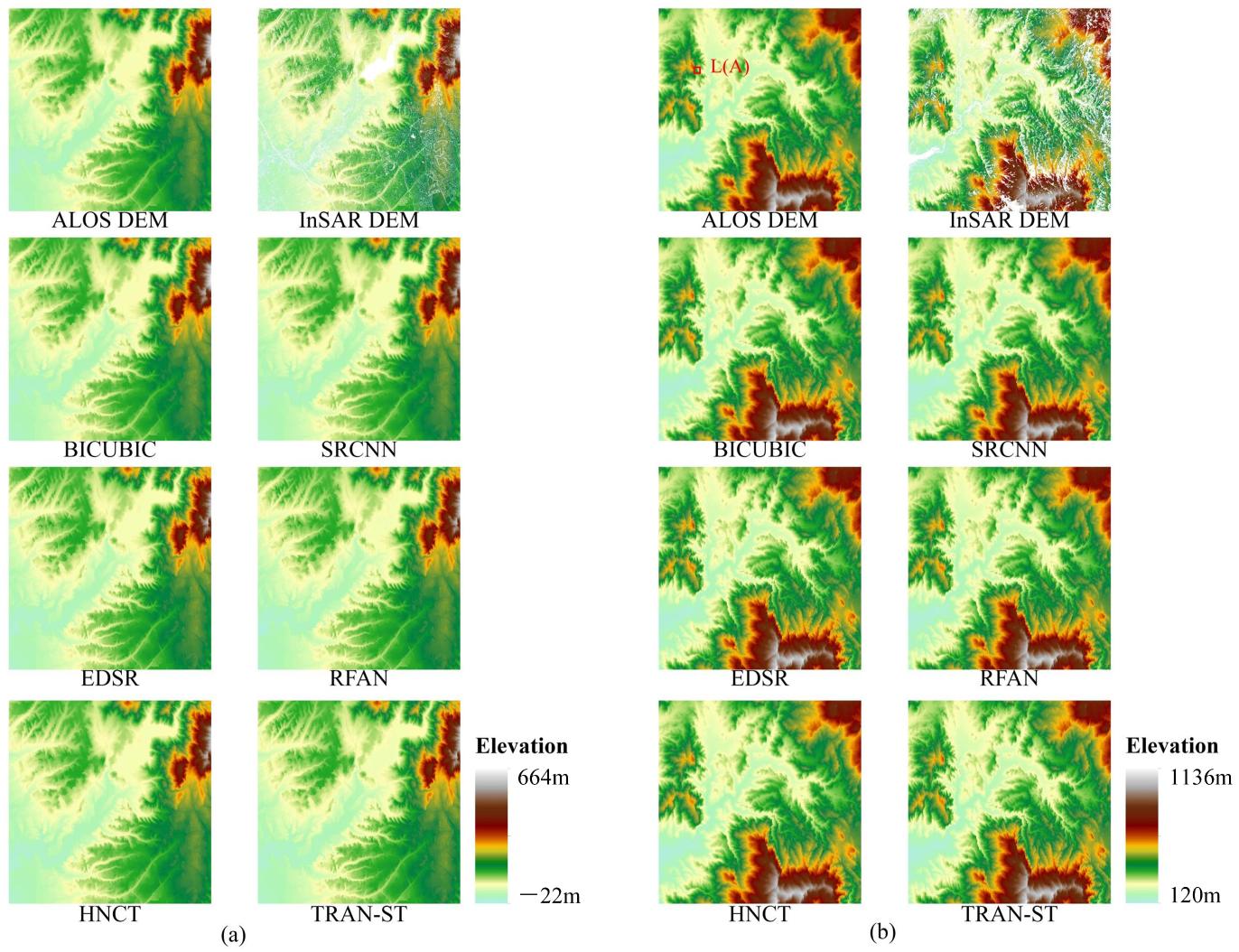

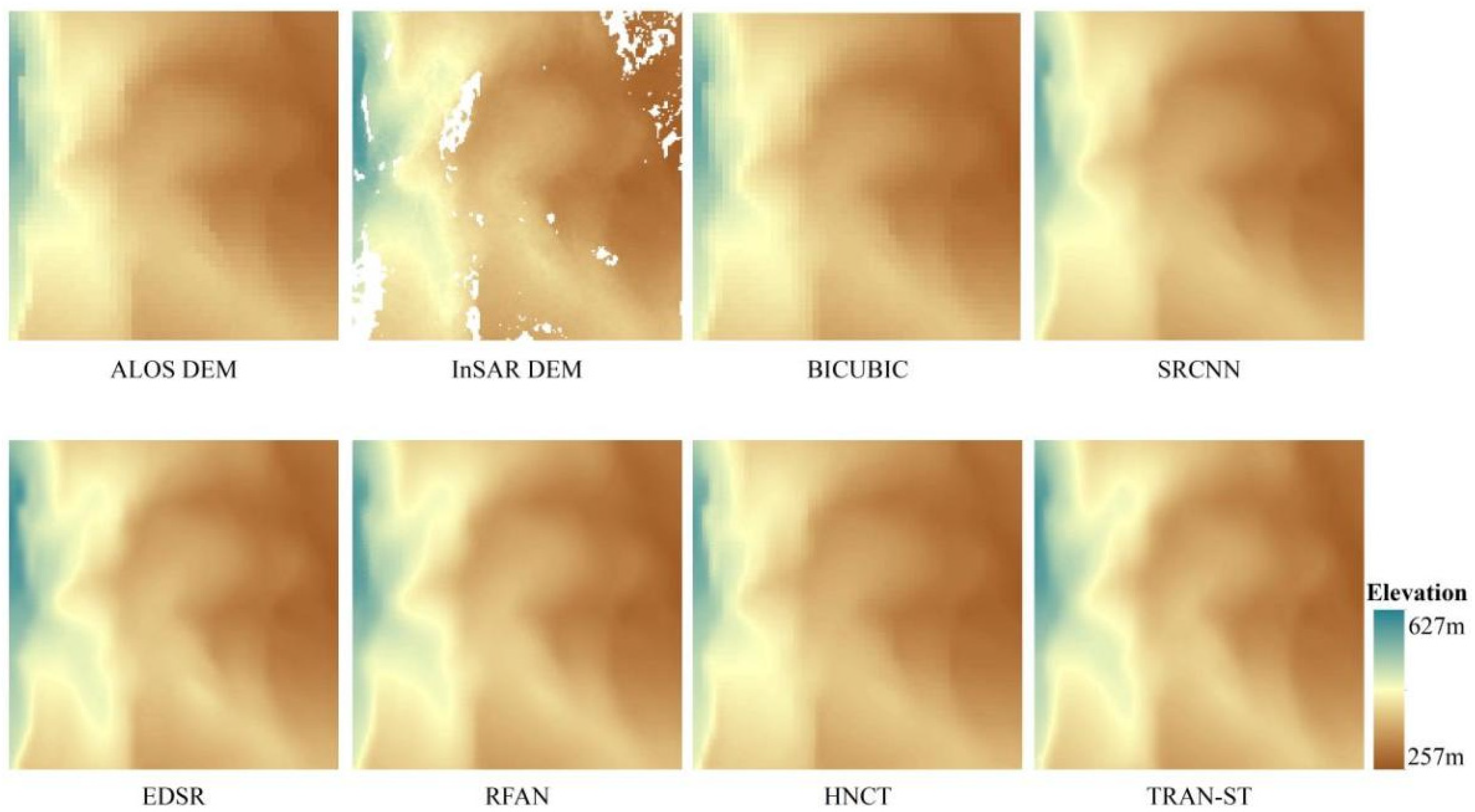

As shown in Table 4, the errors between the DEMs reconstructed by the different models and the photogrammetric DEM are quantitatively evaluated using the MAE, RMSE, and PSNR metrics. TRAN-ST achieves the best performance across all three metrics: a 0.04–21.54% reduction in MAE, a 1.35–7.72% reduction in RMSE, and a 0.34–2.02% improvement in PSNR compared to the baseline models. Owing to the elevation differences between the InSAR DEMs and the photogrammetric DEMs, the accuracy metrics are, as a whole, inferior to those obtained during training. Nevertheless, TRAN-ST still shows good reconstruction accuracy through the synergistic optimization of the multi-source feature fusion mechanism and the residual attention module, which also supports its good reconstruction performance in areas with missing DEM values. In summary, compared to SR models based on a single data source, our SR model incorporating SAR data achieves superior reconstruction results.
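The three metrics and the reported relative changes can be illustrated with a minimal sketch. It is not the evaluation code used in this study: the function names are hypothetical, the grids are toy values, and the PSNR peak is assumed here to be the elevation range of the reference DEM, which may differ from the definition adopted for Table 4.

```python
import math

def flatten(grid):
    """Flatten a 2-D list of elevations (m) into a 1-D list."""
    return [v for row in grid for v in row]

def mae(pred, ref):
    """Mean absolute elevation error."""
    p, r = flatten(pred), flatten(ref)
    return sum(abs(a - b) for a, b in zip(p, r)) / len(r)

def rmse(pred, ref):
    """Root mean square elevation error."""
    p, r = flatten(pred), flatten(ref)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, r)) / len(r))

def psnr(pred, ref):
    """PSNR in dB. Assumption: peak = elevation range of the reference DEM."""
    r = flatten(ref)
    peak = max(r) - min(r)
    if peak == 0:
        raise ValueError("reference DEM is flat; PSNR peak is undefined")
    err = rmse(pred, ref)
    return math.inf if err == 0 else 20 * math.log10(peak / err)

def relative_reduction(baseline, ours):
    """Percentage by which an error metric drops relative to a baseline."""
    return 100 * (baseline - ours) / baseline

# Toy 2 x 2 reference and reconstructed DEMs (illustrative values only).
ref = [[100.0, 102.0], [104.0, 110.0]]
pred = [[101.0, 102.0], [103.0, 112.0]]
print(mae(pred, ref), rmse(pred, ref), psnr(pred, ref))
print(relative_reduction(2.0, 1.5))  # hypothetical baseline MAE 2.0 m vs 1.5 m
```

Lower MAE and RMSE and higher PSNR indicate better agreement with the reference, which is why the first two are reported as reductions and the third as an improvement.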

To investigate the spatial heterogeneity of the reconstruction errors, the error distribution between the DEMs obtained from several SR reconstruction models and the photogrammetric DEM is quantitatively assessed, with region M(A) in Figure 10 serving as a representative example. The results are shown in Figure 11. The BICUBIC, SRCNN, EDSR, and HNCT models all exhibit a significant systematic negative bias, whereas RFAN and TRAN-ST align more closely with the ground truth values in negatively biased pixels. Further quantitative analysis of the error distribution across multiple intervals reveals a consistent advantage of TRAN-ST over RFAN. Specifically, 88.33% of the pixel errors of TRAN-ST fall within the [−4 m, 4 m] range, compared to 87.89% for RFAN. As the interval expands to [−6 m, 6 m], TRAN-ST still contains a higher share of errors (97.56% vs. 97.36%), indicating that its error distribution is more concentrated and that it produces fewer extreme outliers. TRAN-ST does produce localized overestimation when reconstructing high-elevation points, which is likely caused by the inherent resolution discrepancy between the DEM data and the SAR data during cross-modal feature alignment, but its overall accuracy is still better than that of the other models.
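The interval statistics quoted above amount to counting signed pixel errors inside a symmetric window. The following sketch shows the calculation under stated assumptions: the helper names and the error values are hypothetical and are not data from Figure 11.

```python
def signed_errors(pred, ref):
    """Per-pixel signed error (reconstructed minus reference), in metres."""
    return [p - r for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow)]

def share_within(errors, half_width):
    """Percentage of errors inside the closed interval [-half_width, half_width]."""
    inside = sum(1 for e in errors if -half_width <= e <= half_width)
    return 100.0 * inside / len(errors)

def mean_bias(errors):
    """Mean signed error; a negative value indicates systematic underestimation."""
    return sum(errors) / len(errors)

# Hypothetical signed errors (m) for eight pixels.
errors = [-7.0, -3.5, -1.0, 0.5, 2.0, 4.0, 5.5, -5.0]
print(share_within(errors, 4.0))  # share inside [-4 m, 4 m]
print(share_within(errors, 6.0))  # share inside [-6 m, 6 m]
print(mean_bias(errors))
```

A model whose share is higher for every window width has a more concentrated error distribution, and a mean bias close to zero indicates little systematic over- or underestimation.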

To evaluate the respective contributions of the intensity and coherence features to DEM reconstruction, systematic ablation experiments are designed. To ensure a fair evaluation, when a specific SAR feature is removed, its corresponding loss function is removed at the same time. The experimental results (Table 5) indicate that incorporating both intensity and coherence features enhances the elevation accuracy of the reconstructed DEMs, yielding improvements in the MAE, RMSE, and PSNR metrics compared to the model without SAR features. It should be noted that the model without SAR features, by learning solely from DEM data, is less sensitive to local elevation noise in slope calculations and therefore achieves a lower slope MAE. However, it underperforms the other models on all other metrics. Most importantly, the integrated model combining both intensity and coherence features achieves the best overall performance. Its improvement exceeds the simple sum of the individual contributions of each feature, demonstrating a synergistic enhancement effect between them. This indicates that the effective fusion of these two features enables the model to reconstruct a more accurate DEM.

Having confirmed that fusing SAR data significantly improves reconstruction accuracy, further ablation experiments are conducted to quantify the impact of different SAR feature fusion methods. These experiments compare, respectively, the sampling methods used to dimensionally align the SAR features with the DEMs and the effectiveness of the attention module used to fuse the SAR features.

SAR features are used as model inputs, together with the low-resolution DEM, to learn the InSAR DEM. Since the SAR features and the low-resolution DEM differ in scale, the shallow features extracted from both need to be dimensionally aligned by sampling prior to feature fusion. Two types of sampling are considered: down-sampling the SAR features (192 × 192) to the dimensions of the low-resolution DEM (48 × 48), and up-sampling the low-resolution DEM to the dimensions of the SAR features. In addition, three typical interpolation methods (nearest, bilinear, and bicubic) are compared to evaluate the impact of different up-sampling methods on reconstruction accuracy. As shown in Table 6, the model using the up-sampling approach substantially outperforms the down-sampling approach on all metrics, because up-sampling better preserves the spatial details and high-frequency information in the original SAR features. Although up-sampling low-resolution DEMs introduces interpolation errors, the subsequent convolutional neural network can still learn more plausible spatial distribution patterns. Conversely, down-sampling SAR features discards essential detail and leads to an irreversible loss of critical high-frequency information, such as topographic edges and textural characteristics. Additionally, a comparative analysis of interpolation methods for up-sampling the shallow DEM features was conducted, and the results clearly indicate that nearest interpolation is the optimal choice. This is because the objects sampled at this stage are the feature maps extracted from LR DEMs by the convolutional layer. The core objective is to spatially align these feature maps with the shallow feature maps derived from intensity and coherence data, rather than to introduce additional information or alter the feature distribution. In this process, nearest interpolation directly replicates pixel values and thereby avoids the feature smoothing and confusion that may arise from bilinear and bicubic interpolation, maximizing the preservation of the original features’ discriminative power.
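The two alignment options can be contrasted with a small sketch. It only illustrates the principle: the function names are hypothetical, the grids are synthetic, and block averaging is assumed as the down-sampling operator purely for illustration, since the operator used in the experiments is not specified here.

```python
def upsample_nearest(feature, scale):
    """Nearest-neighbour up-sampling: replicate each cell scale x scale times."""
    out = []
    for row in feature:
        wide = [v for v in row for _ in range(scale)]
        out.extend([list(wide) for _ in range(scale)])
    return out

def downsample_mean(feature, scale):
    """Block-average down-sampling (an assumed operator, for contrast only)."""
    h, w = len(feature), len(feature[0])
    return [
        [
            sum(feature[i + di][j + dj] for di in range(scale) for dj in range(scale))
            / scale ** 2
            for j in range(0, w, scale)
        ]
        for i in range(0, h, scale)
    ]

# A synthetic 48 x 48 LR feature map aligned to the 192 x 192 SAR grid (factor 4).
lr_feature = [[float(i * 48 + j) for j in range(48)] for i in range(48)]
aligned = upsample_nearest(lr_feature, 4)
print(len(aligned), len(aligned[0]))

# Replication introduces no new values, so the feature distribution is unchanged.
print(set(map(float, sum(aligned, []))) == set(sum(lr_feature, [])))

# A fine checkerboard texture vanishes entirely under 4 x 4 block averaging.
texture = [[float((i + j) % 2) for j in range(4)] for i in range(4)]
print(downsample_mean(texture, 4))
```

Nearest up-sampling only repeats existing feature values, whereas down-sampling collapses fine texture into a single averaged value that cannot be recovered afterwards.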

After dimensional alignment, feature fusion is performed on the shallow features extracted from the low-resolution DEM and the SAR features. Ablation experiments in which the channel attention (CA) module is removed from our model show that CA contributes to improved multi-source feature fusion. By aggregating spatial context through global pooling to generate channel attention weights, CA enables the network to strengthen its response to the channels that are critical for DEM reconstruction while suppressing redundant or conflicting features caused by modal discrepancies.
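The mechanism described above, global pooling followed by per-channel reweighting, can be sketched as follows. This is the widely used squeeze-and-excitation form of channel attention and is an assumption: the exact layer layout of the CA module in TRAN-ST is not given in this section, and the weights below are hypothetical hand-picked values rather than learned parameters.

```python
import math

def global_avg_pool(channels):
    """Squeeze each H x W channel into a single mean value."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in channels]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(channels, w_down, w_up):
    """Rescale each channel by a weight in (0, 1) derived from global context.

    w_down: hidden x C matrix (channel reduction, followed by ReLU).
    w_up:   C x hidden matrix (channel expansion, followed by sigmoid).
    """
    squeezed = global_avg_pool(channels)
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_down]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_up]
    scaled = [[[v * a for v in row] for row in ch] for ch, a in zip(channels, weights)]
    return scaled, weights

# Two fused 2 x 2 feature channels (e.g., one DEM-derived, one SAR-derived).
channels = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.5, 0.5], [0.5, 0.5]],
]
w_down = [[1.0, -1.0]]   # hypothetical weights: 2 channels -> 1 hidden unit
w_up = [[2.0], [-2.0]]   # hypothetical weights: 1 hidden unit -> 2 channels
scaled, weights = channel_attention(channels, w_down, w_up)
print(weights)
```

With these illustrative weights the first channel is emphasized and the second is attenuated, which is the behaviour that allows informative channels to dominate after fusion.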

4.2. Ensuring Topographic Consistency Through Terrain Features

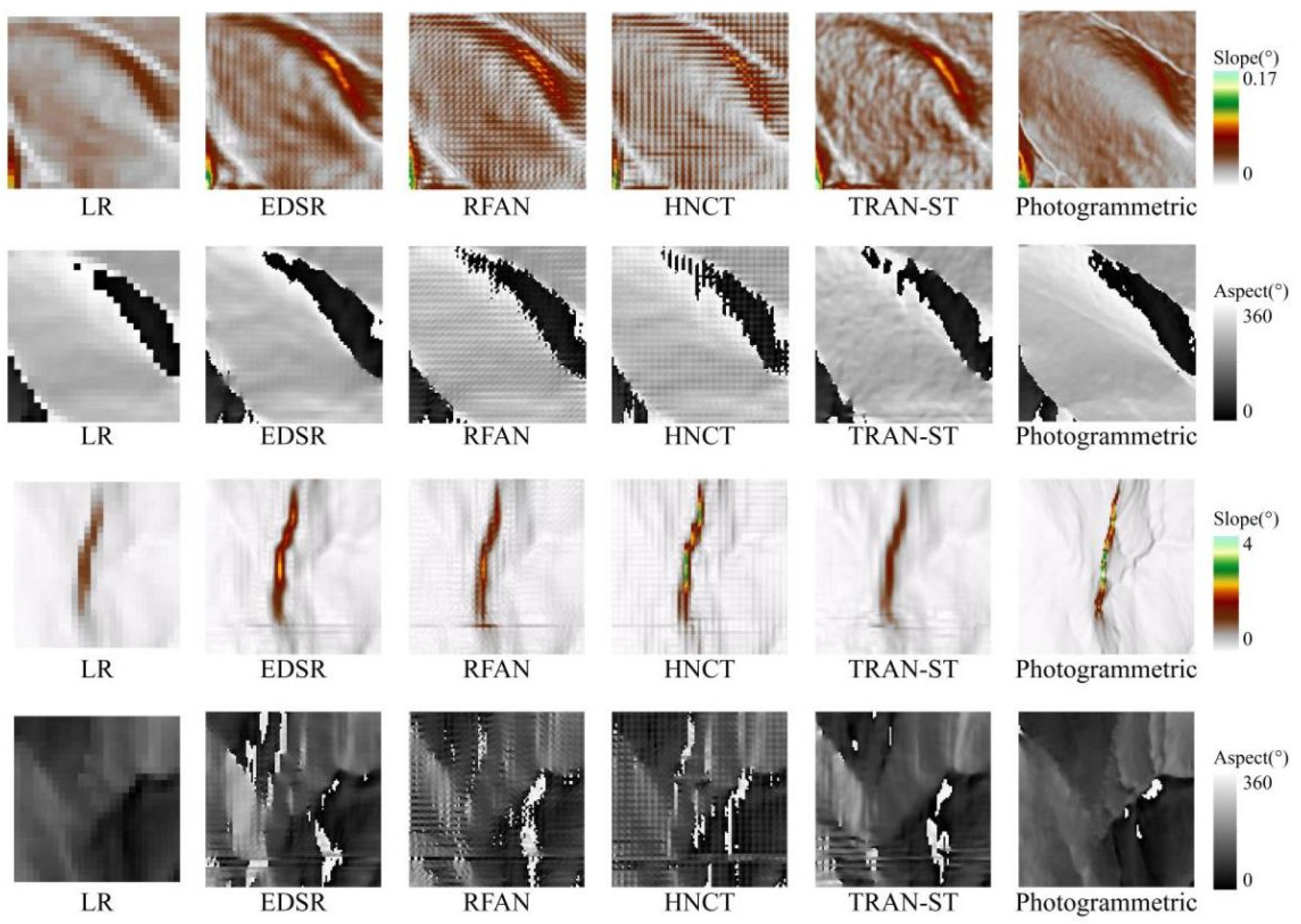

To validate the effectiveness of incorporating terrain features into the model, this study selects slope, aspect, and river network as representative terrain features, and conducts a multi-model comparative analysis of topographic consistency parameters, thereby quantifying the model’s capability in maintaining the spatial distribution of topographic elements.

Slope and aspect analyses were conducted for two representative geomorphic study areas, N(A) and N(B), as shown in Figure 10. Study area N(A) is characterized by relatively flat terrain with overall lower elevation values, while N(B) is located in a mountainous area with higher elevation values. A comparative accuracy assessment was performed using the MAE and RMSE metrics against the terrain features derived from the photogrammetric DEM. According to the visual and quantitative results in Figure 12 and Table 7, our model aligns more closely with the photogrammetric DEM in terms of slope across both gently undulating terrain and high-relief mountainous regions, and the integration of high-precision SAR features enriches the texture details of the output HR DEM. As for aspect, our model shows superior reconstruction performance in high-relief mountainous areas but is inferior to EDSR in flat terrain. This discrepancy may arise because the calculation of aspect in flat terrain is more sensitive to minor elevation fluctuations: small elevation changes of opposite sign at the neighboring cells used for aspect determination can reverse the computed direction. Alternatively, the photogrammetric DEMs may suffer from excessive smoothing, failing to preserve the fine-grained geomorphic details expected from high-resolution DEMs [42]. The impact of insufficiently precise validation data on the experimental results is also one of the limitations of this study.
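The sensitivity of aspect in flat terrain can be seen in a short numerical sketch. It uses simple central differences on a 3 × 3 window with a 3 m cell size, which is an assumption: the terrain-analysis algorithm used to derive slope and aspect in this study is not stated in this section, and the elevation values are synthetic.

```python
import math

def slope_aspect(z, i, j, cell):
    """Slope (deg) and aspect (deg clockwise from north, pointing downslope)
    at interior cell (i, j), using central differences; row 0 is north."""
    dz_dx = (z[i][j + 1] - z[i][j - 1]) / (2 * cell)  # eastward gradient
    dz_dy = (z[i - 1][j] - z[i + 1][j]) / (2 * cell)  # northward gradient
    slope = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    aspect = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect

def aspect_difference(a, b):
    """Smallest angular difference between two aspects, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

CELL = 3.0  # cell size in metres

# Nearly flat terrain: a +/- 1 cm change in one neighbour flips the aspect.
flat_up = [[100.0, 100.0, 100.0], [100.0, 100.0, 100.01], [100.0, 100.0, 100.0]]
flat_down = [[100.0, 100.0, 100.0], [100.0, 100.0, 99.99], [100.0, 100.0, 100.0]]
s1, a1 = slope_aspect(flat_up, 1, 1, CELL)
s2, a2 = slope_aspect(flat_down, 1, 1, CELL)
print(s1, a1, a2, aspect_difference(a1, a2))

# Steep terrain: the same 1 cm change barely moves the aspect.
steep = [[120.0, 120.0, 120.0], [100.0, 110.0, 120.0], [100.0, 100.0, 100.0]]
steep_noisy = [[120.0, 120.0, 120.0], [100.0, 110.0, 120.01], [100.0, 100.0, 100.0]]
_, a3 = slope_aspect(steep, 1, 1, CELL)
_, a4 = slope_aspect(steep_noisy, 1, 1, CELL)
print(a3, aspect_difference(a3, a4))
```

In the flat case the slope stays close to zero while the aspect reverses by about 180°, whereas in the steep case the same perturbation changes the aspect by a small fraction of a degree. This is consistent with aspect errors being inflated in flat areas even when elevation errors are small.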

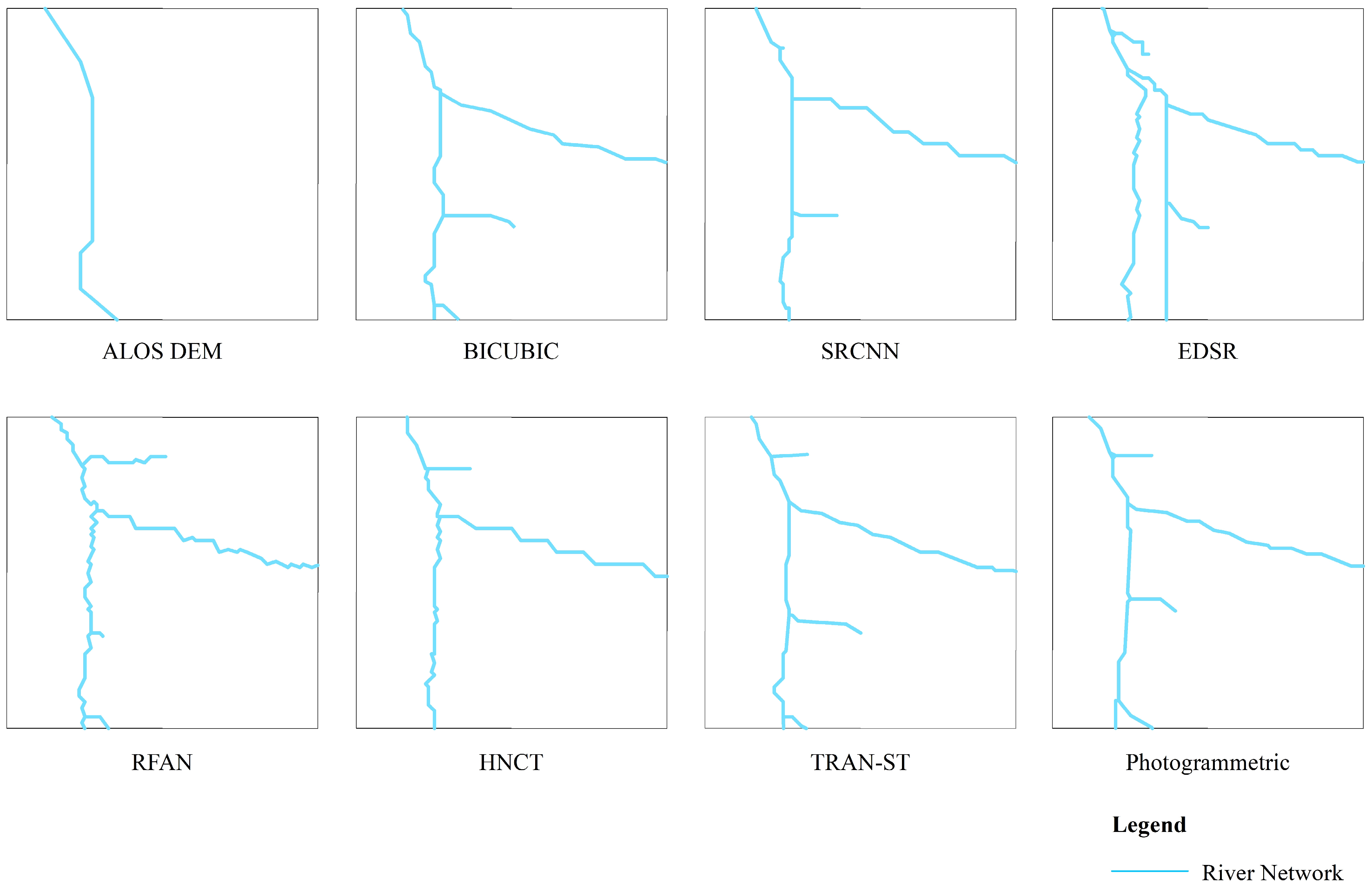

For the river network, the N(C) study area in Figure 10, where the river network is more densely distributed, is selected for comparison of the extraction results. As shown in Figure 13, compared to the other models, the river network extracted from the TRAN-ST DEM is generally consistent with that extracted from the photogrammetric DEM. Under unified hydrological extraction standards, TRAN-ST not only accurately reproduces the structure of the main river network but also enables more complete extraction of minor tributaries within the watershed. This confirms that TRAN-ST achieves superior performance in reconstructing hydrologically meaningful terrain structures.

4.3. Network Design for Enhanced Performance

The ablation experiments are compared for the major improvements in TRAB, the core module of this network. One is to validate the ESA blocks in TRAB by replacing all ESA blocks in the module with Spatial Attention (SA) blocks, and the second is to validate the STB by replacing the STB with a 3 × 3 convolutional layer. As shown in

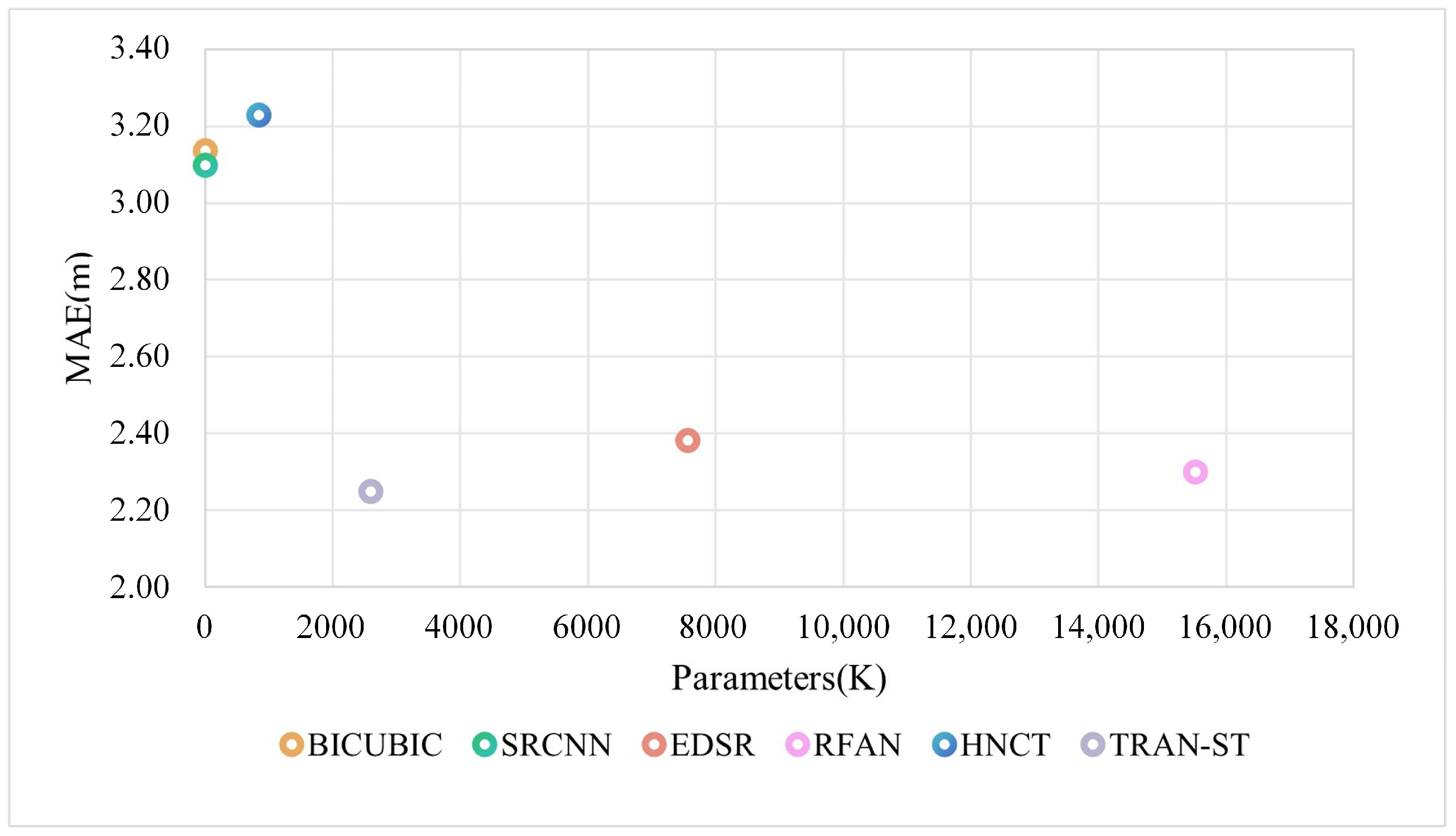

Table 8, compared with SA, the ESA block, as an improved spatial attention mechanism, effectively improves the model’s reconstruction accuracy. And STB improves reconstruction effect by improving the global perception ability, and it also substantially reduces the model’s parameters (

Figure 9).

Beyond the core module of TRAN-ST, the loss function is also crucial to the network, as it guides the model optimization process. To verify that the loss function plays a supervisory role in model training, ablation experiments are conducted on the terrain feature loss and the SAR feature loss while keeping the training datasets unchanged, and the results are shown in Table 9. The SAR feature loss includes an intensity loss and a coherence loss, and the terrain feature loss includes a slope loss and an aspect loss. Because the SAR data and the low-resolution DEM participate together in the DEM SR reconstruction task as multi-source inputs and differ significantly in numerical scale, elevation accuracy can decrease severely if the model is not constrained by the SAR feature loss. Therefore, this section does not compare the intensity and coherence losses in separate ablation experiments but discusses them as a whole. The quantitative results reveal that our model performs best on most metrics. Although

achieves optimal performance on the

metric, its MAE and RMSE values are 6.62% and 14.23% higher than those of TRAN-ST, respectively, confirming the critical role of SAR feature loss in improving topographic inversion accuracy. The ablation experiments further demonstrate that the synergistic optimization mechanism of slope and aspect loss effectively facilitates the recovery of terrain detail through joint constraints and improves the overall elevation reconstruction accuracy.
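The structure of such a loss ablation can be sketched as a weighted sum in which an ablated term simply receives zero weight. This is only one plausible form under stated assumptions: the actual loss formulation and weights of TRAN-ST are defined elsewhere in the paper, and every name and number below is hypothetical.

```python
def l1(a, b):
    """Mean absolute difference between two equally long sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def total_loss(terms, weights):
    """Weighted sum of loss terms; a term without a weight contributes nothing."""
    return sum(weights.get(name, 0.0) * value for name, value in terms.items())

# Hypothetical per-term losses for one training batch.
terms = {
    "elevation": l1([101.0, 99.0], [100.0, 100.0]),
    "slope": 0.5,
    "aspect": 2.0,
    "intensity": 0.2,
    "coherence": 0.1,
}

# Hypothetical weights for the full model and for two ablated variants.
full = {"elevation": 1.0, "slope": 0.1, "aspect": 0.1, "intensity": 0.1, "coherence": 0.1}
no_sar = {k: v for k, v in full.items() if k not in ("intensity", "coherence")}
no_terrain = {k: v for k, v in full.items() if k not in ("slope", "aspect")}

for name, w in (("full", full), ("w/o SAR loss", no_sar), ("w/o terrain loss", no_terrain)):
    print(name, round(total_loss(terms, w), 4))
```

Removing the intensity and coherence terms together mirrors the choice above to treat the SAR feature loss as a single unit in the ablation.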

4.4. Limitations and Future Enhancements

While demonstrating competitive performance, the proposed method still possesses several limitations. Firstly, the photogrammetric DEM used for validation lacks sufficient accuracy, restricting our ability to evaluate the sensitivity of SAR features to subtle elevation variations and fine-grained terrain textures. Secondly, current evaluation lacks a systematic analysis of reconstruction error distribution across specific complex terrains like steep slopes, gullies, and urban construction areas. Thirdly, although the model performs well in the selected localized study area, its ability to be generalized across diverse geomorphic types, such as alpine, desert, coastal and highly urbanized terrains, remains unverified.

Future research will explore three main directions to overcome these limitations. Firstly, using high-precision elevation data from LiDAR DEMs or ICESat-2 would strengthen the validation of subtle topographic details beyond what photogrammetric DEMs can provide, enabling more accurate quantification of elevation errors and topographic consistency. Secondly, the model’s performance across different terrain regions should be systematically investigated by constructing datasets with detailed topographic annotations, in order to precisely quantify error patterns and guide future model improvements. Thirdly, extensive experiments involving multi-regional datasets spanning a wider range of geomorphological settings, as well as multi-seasonal remote sensing data, should be conducted to evaluate the model’s transferability.

5. Conclusions

This paper proposes a TRAN-ST algorithm that integrates SAR features and terrain features for DEM super-resolution reconstruction. By jointly using SAR features and DEM data as model inputs and introducing terrain features as part of the loss function, the multi-source feature integration deep learning model demonstrates improved elevation accuracy and enhanced terrain feature preservation capabilities. Meanwhile, the model incorporates a TRAB module that combines the lightweight Transformer with the residual attention mechanism to enable cross-scale modeling of long-range dependencies while maintaining a focus on local detail features for more comprehensive capture of feature information. The experimental results indicate that TRAN-ST provides a significant improvement in elevation and terrain accuracy compared to interpolation and traditional deep learning models used for DEM super-resolution reconstruction. During the model training phase, TRAN-ST improves elevation and terrain accuracy by 30.34% and 13.48%, respectively; when photogrammetric DEM is used for validation, TRAN-ST improves elevation reconstruction accuracy by 21.54%. In addition, the introduction of the lightweight Transformer significantly reduces the model’s parameters, thus saving computational resources. All of the above validate TRAN-ST’s superiority and practical significance in DEM super-resolution reconstruction research.

However, this study still has some limitations. First, the photogrammetric DEM used in the current validation process lacks sufficient accuracy, making it difficult to fully verify the sensitivity of the SAR data to subtle elevation changes. Future studies should include LiDAR DEMs or ICESat-2 data to cross-validate terrain texture details beyond the capabilities of the photogrammetric DEM. Second, this study lacks a systematic analysis of reconstruction errors in specific complex terrains, and future work should construct datasets annotated with terrain categories to comprehensively understand the model’s performance boundaries. Third, although the method demonstrates efficient terrain reconstruction capability in the local study area, its generalizability to a wide range of complex geomorphological scenarios still needs to be verified using multi-regional and multi-seasonal remote sensing data. Such verification would provide key algorithmic validation for the technical path of reconstructing high-resolution DEMs based on the synergy of multi-source data.