Automated Detection of Embankment Piping and Leakage Hazards Using UAV Visible Light Imagery: A Frequency-Enhanced Deep Learning Approach for Flood Risk Prevention

Highlights

- EmbFreq-Net achieves 77.68% mAP@0.5 for embankment hazard detection, outperforming the baseline by 4.19 percentage points while reducing computational cost by 27.0% and parameters by 21.7%.

- Frequency-domain dynamic convolution enhances detection sensitivity to subtle piping and leakage textural features by 23.4% compared to conventional spatial convolution methods.

- Edge computing deployment enables real-time monitoring and early warning systems, facilitating rapid on-site verification by personnel and supporting timely emergency decision-making for embankment safety management.

- The 23.4% gain in sensitivity to subtle piping and leakage textures, combined with the lower computational cost, yields a more accurate and cost-effective embankment detection algorithm that is easier to adopt widely and better supports emergency decision-making.

Abstract

1. Introduction

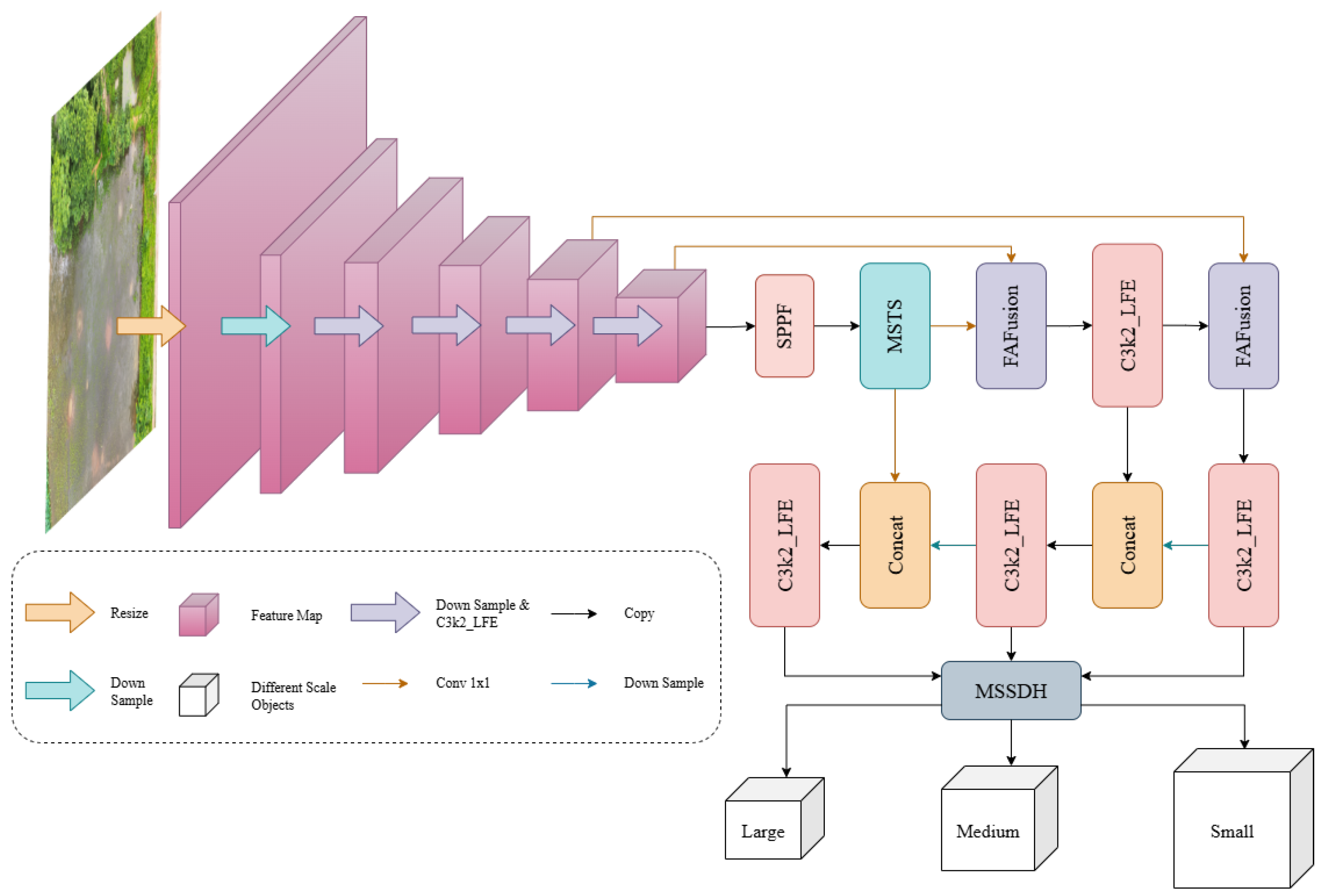

- An Integrated Architecture for Embankment Safety Applications: This study presents a lightweight detection architecture tailored to the inherent challenges of embankment inspection. The architecture comprises four core modules: the Local Frequency Enhancement Module (LFE Module), which forms a frequency-enhanced backbone designed to extract subtle hazard features; the Multi-Scale Intrinsic Saliency Block (MSIS-Block), a multi-scale attention module that captures spatial correlations and structural information across scales; the Multi-Scale Frequency Feature Pyramid Network (MFFPN), a frequency-aware feature fusion neck that preserves high-frequency details during multi-scale fusion; and the Multi-Scale Shared Detection Head (MSSDH), a scale-invariant shared detection head. Together, these modules form an end-to-end solution optimized for embankment hazard identification.

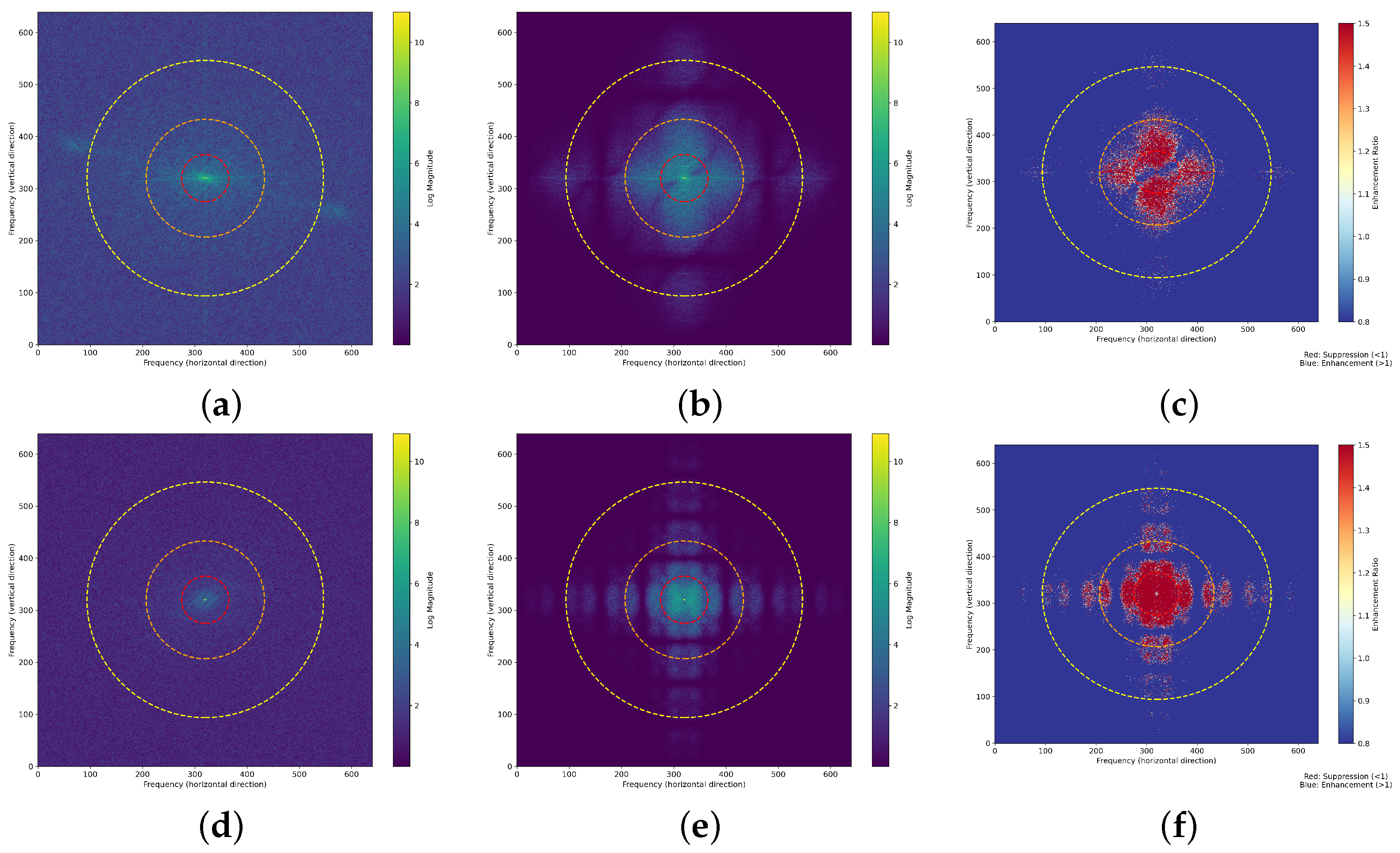

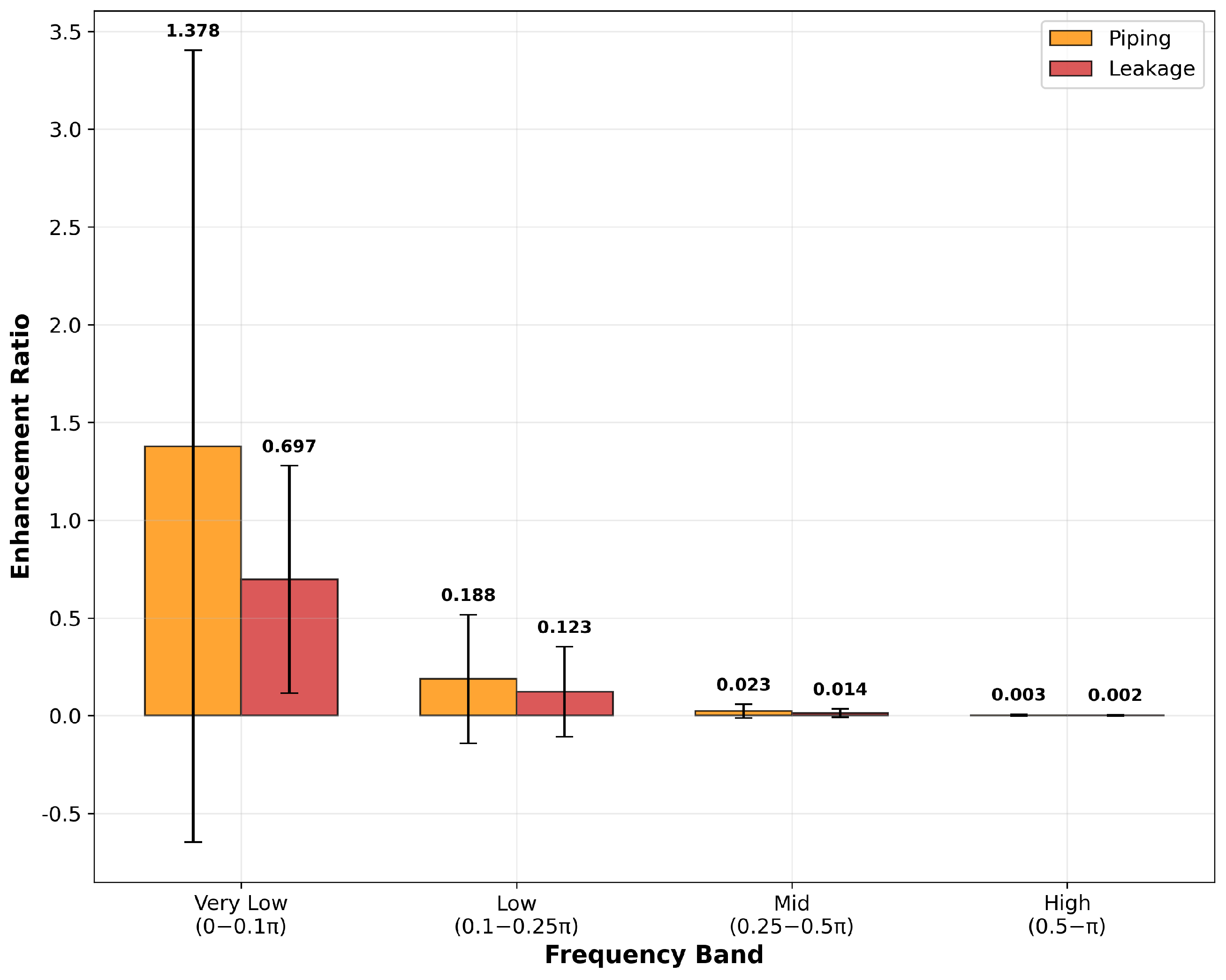

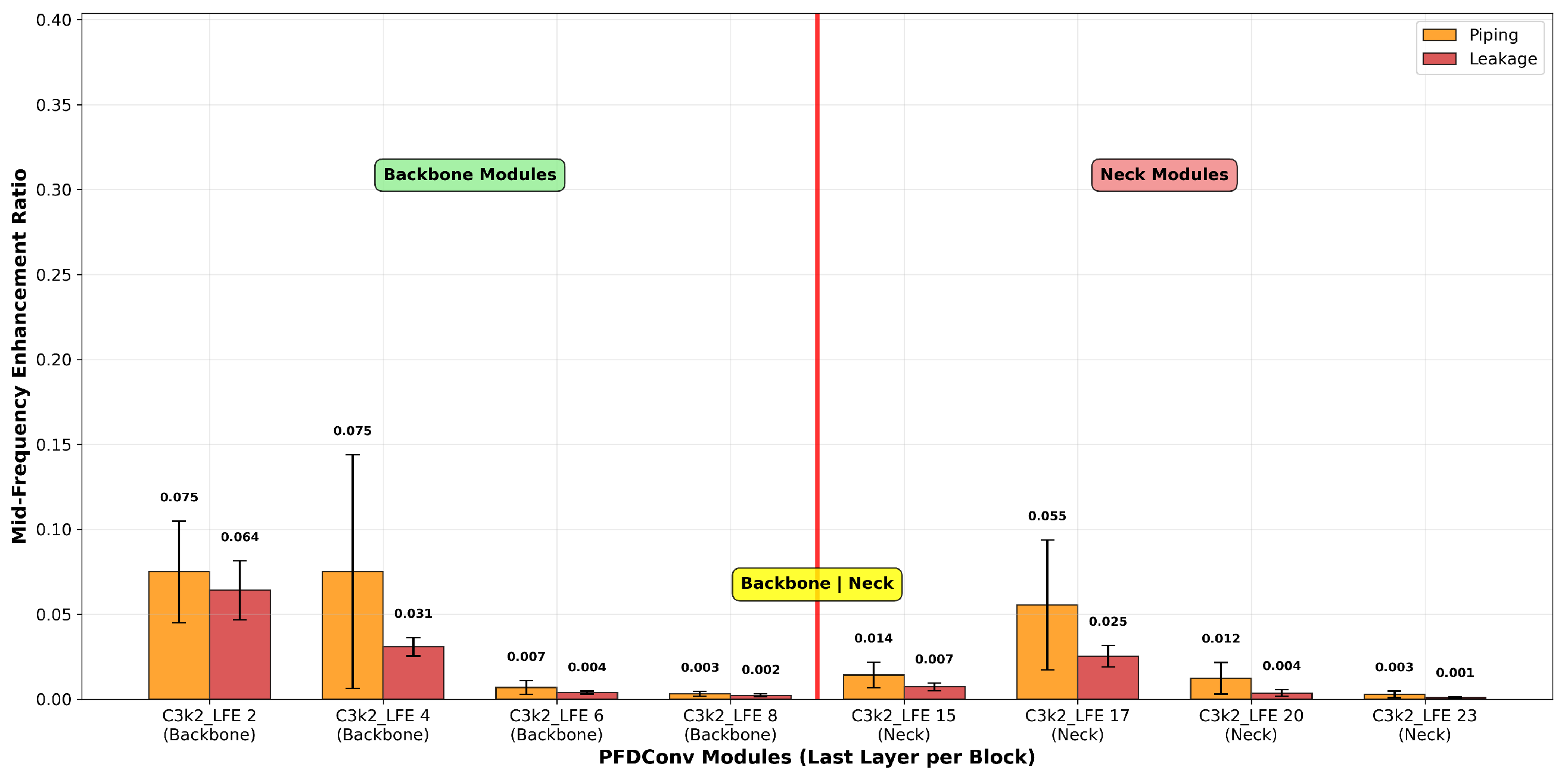

- Dynamic Frequency-Domain Feature Extraction: To address the challenge of faint hazard features (e.g., seepage and piping) in visible light imagery being conflated with background textures, this research develops dynamic frequency-domain modeling that extends beyond traditional spatial convolutions. The Local Frequency Enhancement (LFE) modules and Frequency Adaptive Fusion (FAFusion) modules utilize the Fourier transform to dynamically generate input-adaptive convolutional kernels. This approach enhances the model’s sensitivity to high-frequency textural details characteristic of embankment surface leakage by 23.4% compared to conventional spatial convolution methods. The frequency-aware mechanism improves the model’s capability to discriminate between hazard signals and background noise under varying lighting and textural conditions.

- Performance and Efficiency Improvements: Empirical evaluations on the constructed embankment hazard dataset show that EmbFreq-Net achieves an mAP50 of 77.68%, a 4.19 percentage point improvement over YOLOv11n (73.49%). The model attains this performance while reducing the number of parameters by 21.7% (from 2.58M to 2.02M) and the computational complexity by 27.0% (from 6.3 to 4.6 GFLOPs). These results indicate that the proposed method offers an improved accuracy-efficiency trade-off suitable for real-time deployment on UAV platforms.
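The headline figures mix two kinds of change: the mAP50 gain is an absolute difference in percentage points, while the parameter and GFLOPs savings are relative reductions. The short sketch below recomputes all three from the values quoted above; the helper name `relative_reduction` is illustrative only and does not come from the paper.

```python
def relative_reduction(before: float, after: float) -> float:
    """Return the relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


# Values quoted in the contribution summary above.
map50_baseline, map50_ours = 73.49, 77.68    # mAP50, in percent
params_baseline, params_ours = 2.58, 2.02    # parameters, in millions
gflops_baseline, gflops_ours = 6.3, 4.6      # computational cost, in GFLOPs

# Absolute gain in percentage points (not a relative change).
map50_gain_pp = map50_ours - map50_baseline

# Relative savings with respect to the baseline.
params_cut = relative_reduction(params_baseline, params_ours)
gflops_cut = relative_reduction(gflops_baseline, gflops_ours)

print(f"mAP50 gain: {map50_gain_pp:.2f} percentage points")
print(f"Parameter reduction: {params_cut:.1f}%")
print(f"GFLOPs reduction: {gflops_cut:.1f}%")
```

Running the sketch reproduces the reported 4.19 percentage points, 21.7%, and 27.0%.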

2. Related Works

- Limited utilization of visual features: Existing methods predominantly rely on infrared thermal imaging, and the visual features of leakage and piping in visible light images remain insufficiently studied.

- Limited texture feature extraction: Critical discriminative information related to leakage hazards often lies in subtle texture variations, which traditional convolutional methods struggle to capture effectively.

3. Methodology

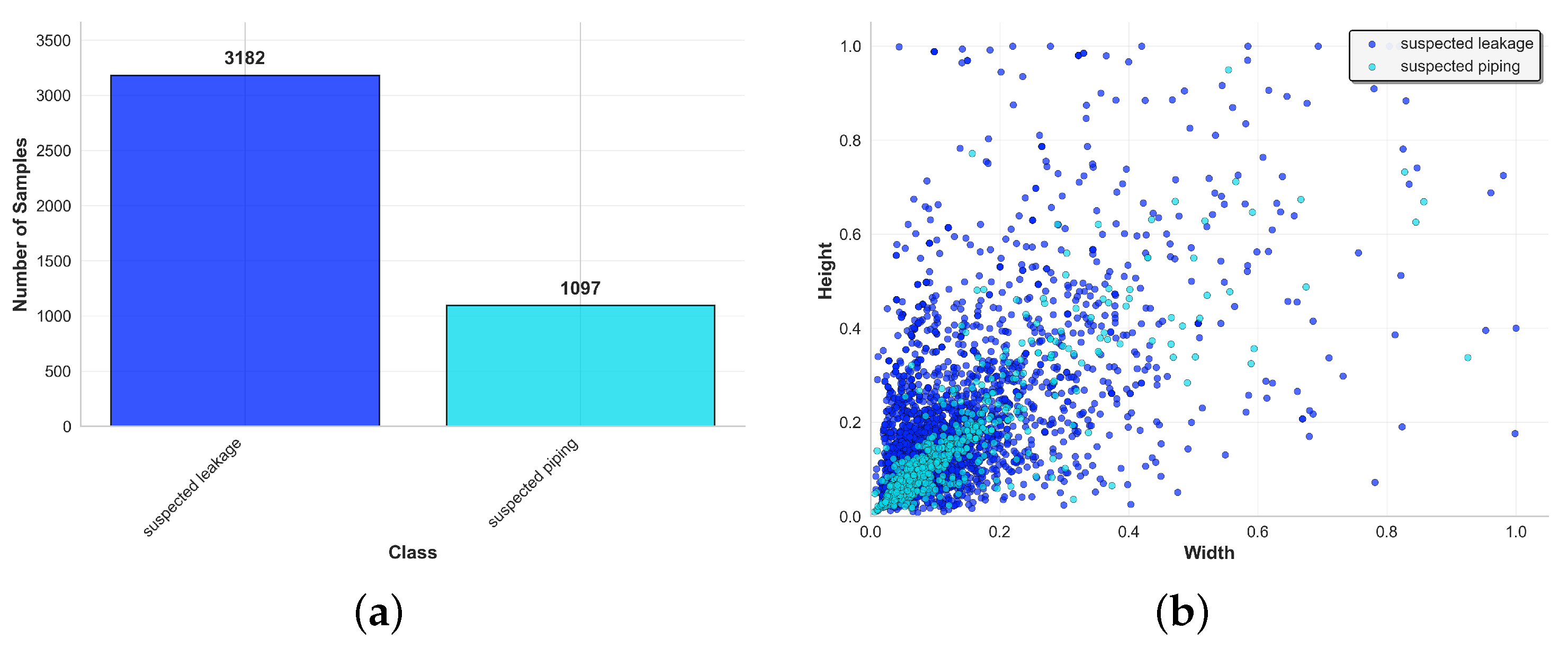

3.1. Dataset

3.2. Embankment-Frequency Network Architecture

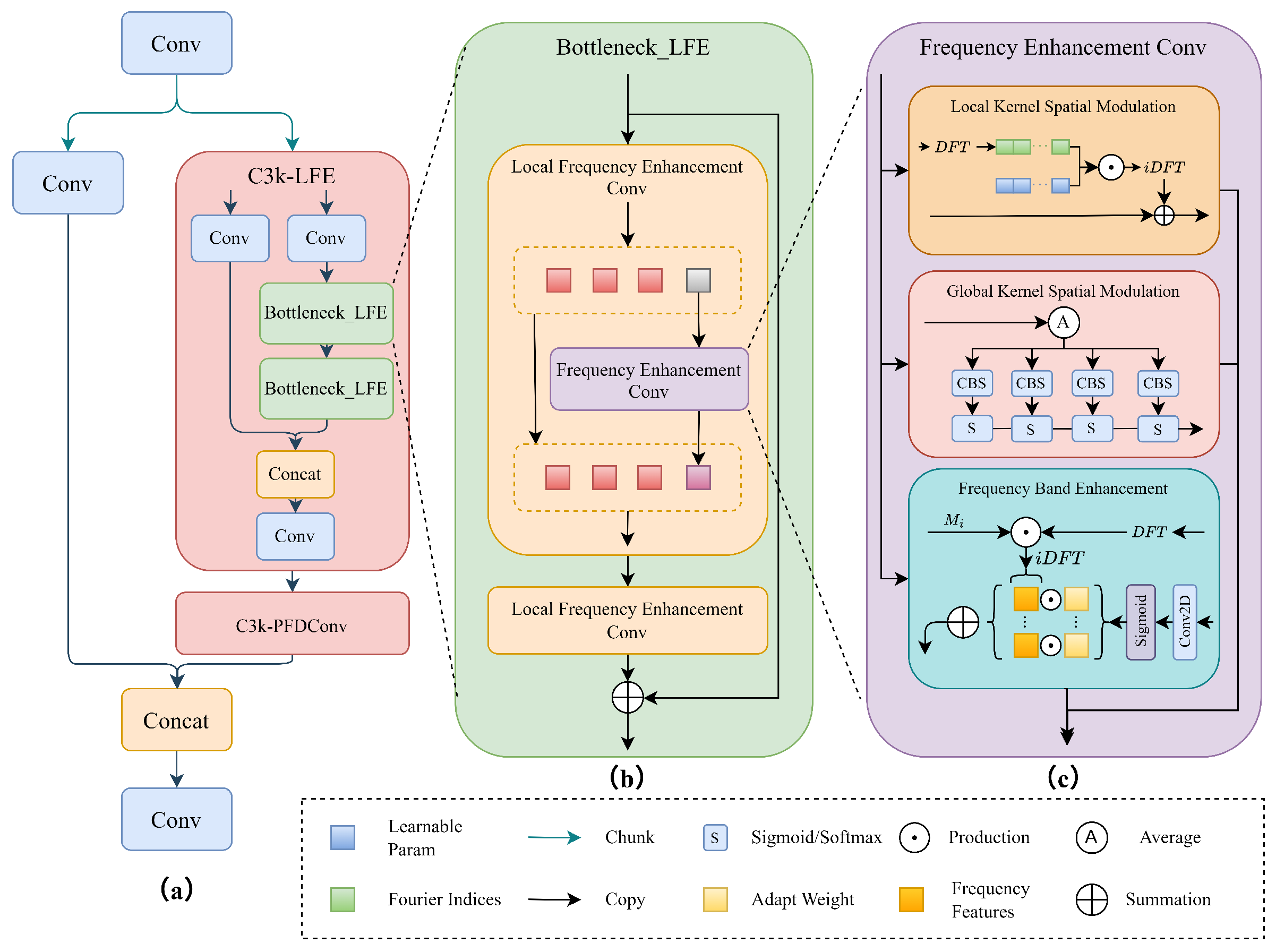

3.3. C3k2 with Local Frequency Enhancement

- Kernel Attention: This determines how base kernels from a predefined dictionary are combined. The dictionary of base kernels is defined in the frequency domain. The attention weights are computed by applying a softmax function to the output of a learned linear transformation, scaled by a temperature parameter, so that a sparse combination of base kernels is selected.

- Spatial, Channel, and Filter Attentions: These generate multiplicative masks that control the spatial focus (which parts of the kernel are emphasized), the importance of each input channel, and the contribution of each output filter (channel), respectively. Each mask is obtained through a separate linear projection followed by a sigmoid activation.
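The two attention types above can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the authors' implementation: the logits stand in for the output of the learned linear transformation, the base kernels are made-up complex coefficients standing in for a frequency-domain dictionary, and the names `softmax`, `sigmoid`, and `combine_kernels` are hypothetical helpers.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a lower temperature gives sparser weights."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Logistic function, used here to build multiplicative masks in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def combine_kernels(base_kernels, weights):
    """Weighted sum of base kernels, each a flat list of complex coefficients."""
    size = len(base_kernels[0])
    return [
        sum(w * kernel[i] for w, kernel in zip(weights, base_kernels))
        for i in range(size)
    ]


# Hypothetical logits, standing in for a learned linear projection's output.
logits = [2.0, 0.5, -1.0]

# Hypothetical dictionary of three base kernels with four coefficients each.
base_kernels = [
    [1 + 0j, 0 + 1j, 0 + 0j, 0 - 1j],
    [0 + 0j, 1 + 0j, 1 + 0j, 0 + 0j],
    [1 + 1j, 0 + 0j, 0 + 0j, 1 - 1j],
]

weights_soft = softmax(logits, temperature=1.0)   # spread over several kernels
weights_sharp = softmax(logits, temperature=0.1)  # nearly one-hot (sparse)
kernel = combine_kernels(base_kernels, weights_sharp)

# Sigmoid mask over three hypothetical channel scores.
channel_mask = [sigmoid(v) for v in (-2.0, 0.0, 2.0)]

print([round(w, 3) for w in weights_soft])
print([round(w, 3) for w in weights_sharp])
print([round(m, 3) for m in channel_mask])
```

Lowering the temperature concentrates the softmax weights on the highest-scoring base kernel, which is one way to obtain the sparse combination described above. The sigmoid masks stay strictly between 0 and 1, so they rescale the kernel rather than select from it.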

3.4. Multi-Scale Intrinsic Saliency Attention Block

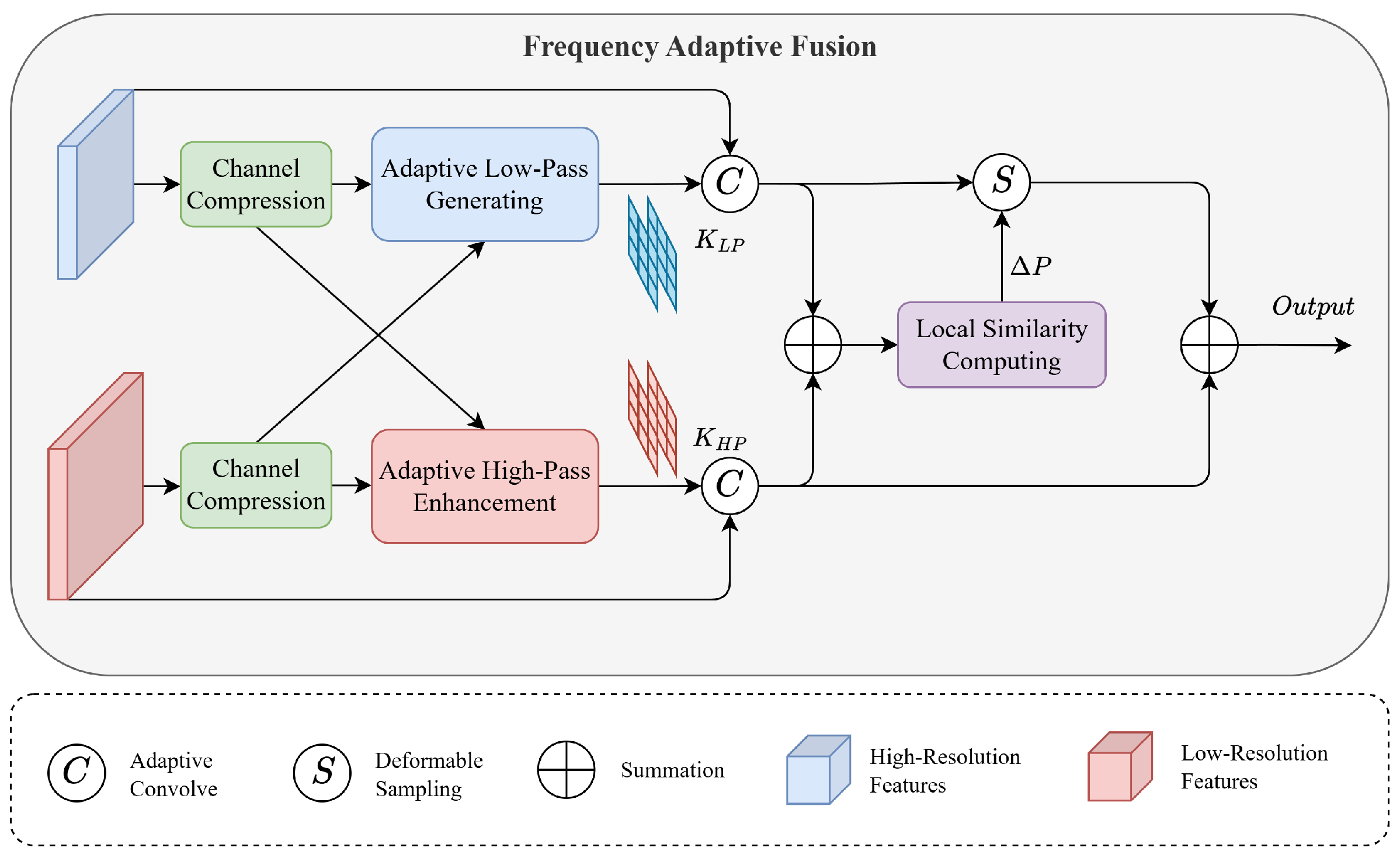

3.5. Multi-Scale Frequency Feature Pyramid Network

3.6. Multi-Scale Shared Detection Head

4. Experiments and Result Analysis

4.1. Experimental Environment and Hyperparameters

4.2. Evaluation Metrics

4.3. Comparison Experiments

4.3.1. Backbone Architecture Comparison

4.3.2. Neck Module Comparison

4.3.3. Comparison with State-of-the-Art Models

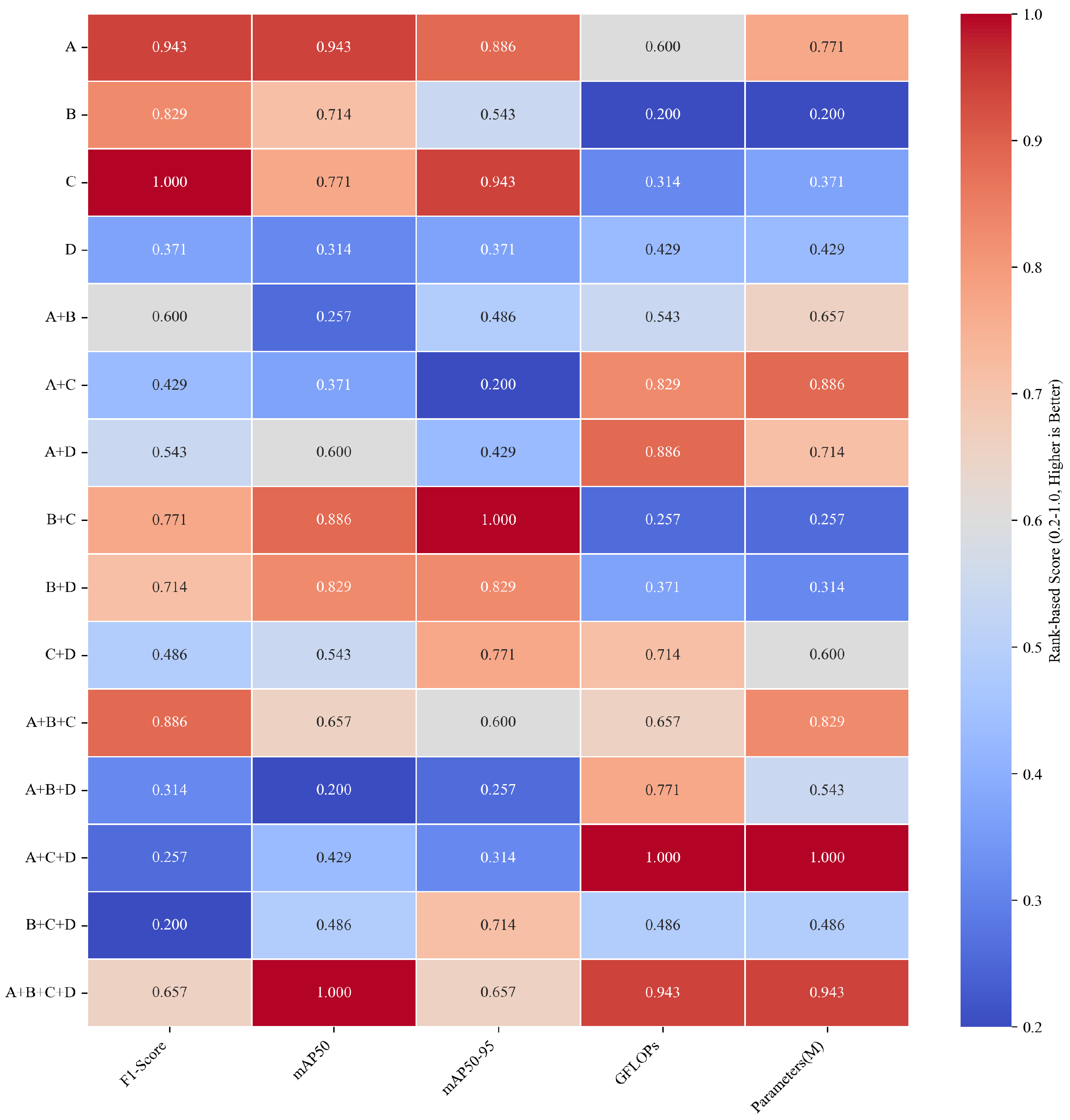

4.4. Ablation Experiment

4.4.1. Effectiveness of the C3k2-LFE

4.4.2. Effectiveness of the MSIS-Block

4.4.3. Effectiveness of the MFFPN

4.4.4. Effectiveness of the MSSDH

4.5. Hyperparameter Sensitivity Analysis

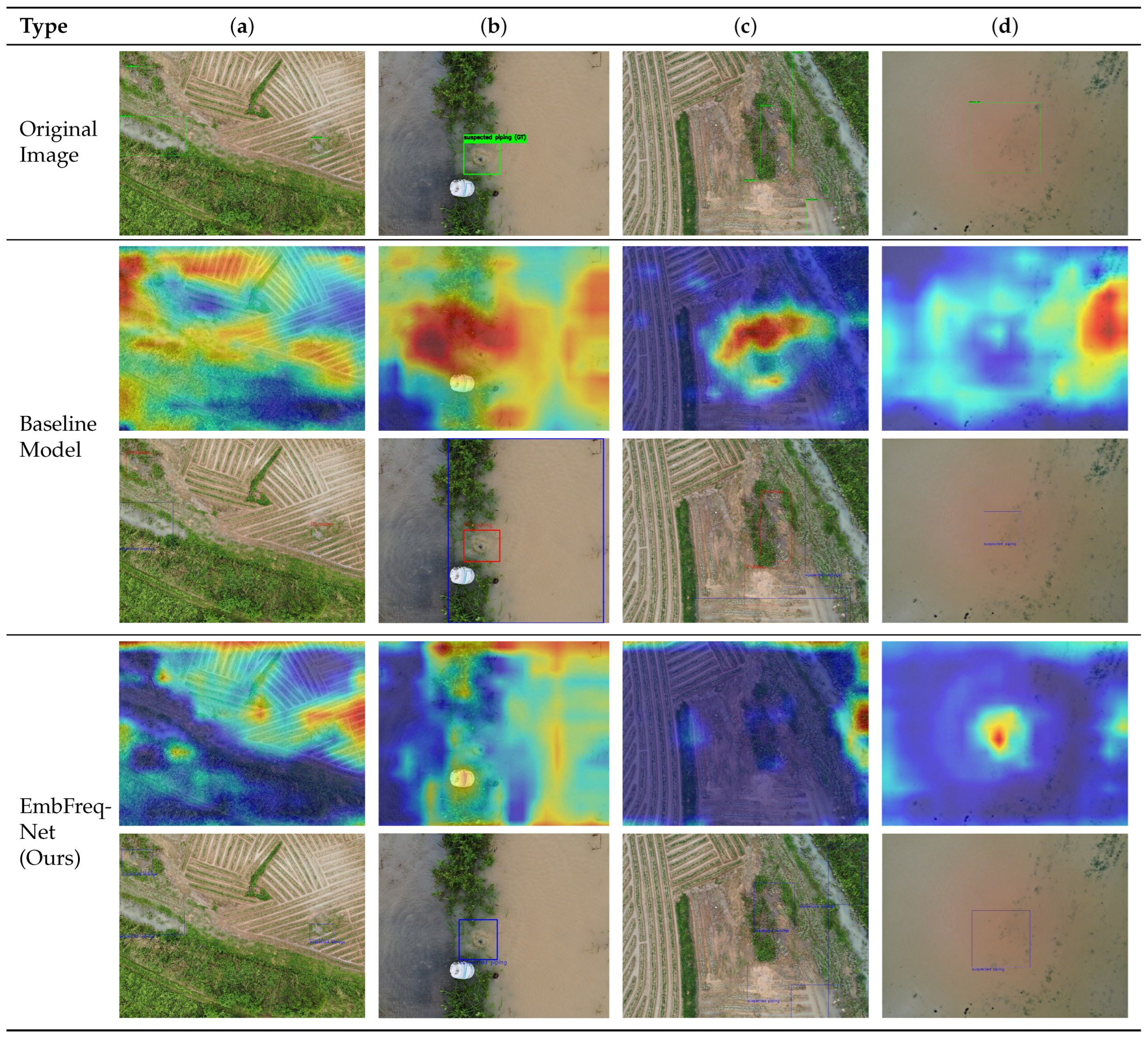

4.6. Interpretability Analysis

4.7. Visualized Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. ASCE/EWRI Task Committee on Dam/Levee Breaching. Earthen Embankment Breaching. J. Hydraul. Eng. 2011, 137, 1549–1564.

2. Zhong, Q.; Chen, S.; Fu, Z.; Shan, Y. New Empirical Model for Breaching of Earth-Rock Dams. Nat. Hazards Rev. 2020, 21, 06020002.

3. Wu, W. Simplified Physically Based Model of Earthen Embankment Breaching. J. Hydraul. Eng. 2013, 139, 837–851.

4. Foster, M.; Fell, R.; Spannagle, M. The Statistics of Embankment Dam Failures and Accidents. Can. Geotech. J. 2000, 37, 1000–1024.

5. Yu, G.; Li, C. Research Progress of Dike Leak Rescue Technology. Water 2023, 15, 903.

6. Hongen, L.; Guizhen, M.; Fang, W.; Wenjie, R.; Yongjun, H. Analysis of dam failure trend of China from 2000 to 2018 and improvement suggestions. Hydro-Sci. Eng. 2021, 5, 101–111.

7. Shekhar, S.; Ram, S.; Burman, A. Probabilistic Analysis of Piping in Habdat Earthen Embankment Using Monte Carlo and Subset Simulation: A Case Study. Indian Geotech. J. 2022, 52, 907–926.

8. Zhou, R.; Wen, Z.; Su, H. Automatic Recognition of Earth Rock Embankment Leakage Based on UAV Passive Infrared Thermography and Deep Learning. ISPRS J. Photogramm. Remote Sens. 2022, 191, 85–104.

9. Cardarelli, E.; Cercato, M.; De Donno, G. Characterization of an Earth-Filled Dam through the Combined Use of Electrical Resistivity Tomography, P- and SH-wave Seismic Tomography and Surface Wave Data. J. Appl. Geophys. 2014, 106, 87–95.

10. Zhou, Q.Y.; Shimada, J.; Sato, A. Three-dimensional Spatial and Temporal Monitoring of Soil Water Content Using Electrical Resistivity Tomography. Water Resour. Res. 2001, 37, 273–285.

11. Comina, C.; Vagnon, F.; Arato, A.; Fantini, F.; Naldi, M. A New Electric Streamer for the Characterization of River Embankments. Eng. Geol. 2020, 276, 105770.

12. Palacky, G.; Ritsema, I.; De Jong, S. Electromagnetic Prospecting for Groundwater in Precambrian Terrains in the Republic of Upper Volta. Geophys. Prospect. 1981, 29, 932–955.

13. Howard, A.Q.; Nabulsi, K. Transient electromagnetic response from a thin dyke in the earth. Radio Sci. 1984, 19, 267–274.

14. Cheng, L.; Zhang, A.; Cao, B.; Yang, J.; Hu, L.; Li, Y. An Experimental Study on Monitoring the Phreatic Line of an Embankment Dam Based on Temperature Detection by OFDR. Opt. Fiber Technol. 2021, 63, 102510.

15. Abdulameer, L.; Al Maimuri, N.; Nama, A.; Rashid, F.; Mohammed, H.; Al-Dujaili, A. Review of Artificial Intelligence Applications in Dams and Water Resources: Current Trends and Future Directions. J. Adv. Res. Fluid Mech. Therm. Sci. 2025, 128, 205–225.

16. Srinivas, M.; Akash, R.; Barkha, N.; Brunda, P.; Ravikumar, S. Smart Dam Automation Using Internet of Things, Image Processing and Deep Learning. In Proceedings of the 2nd International Conference on Intelligent and Sustainable Power and Energy Systems ISPES-Volume 1, Bangalore, India, 26–27 September 2024; pp. 164–170.

17. Li, Y.; Zhao, H.; Wei, Y.; Bao, T.; Li, T.; Wang, Q.; Wang, N.; Zhao, M. Vision-guided crack identification and size quantification framework for dam underwater concrete structures. Struct. Health Monit. 2024, 24, 2125–2148.

18. Li, R.; Wang, Z.; Sun, H.; Zhou, S.; Liu, Y.; Liu, J. Automatic Identification of Earth Rock Embankment Piping Hazards in Small and Medium Rivers Based on UAV Thermal Infrared and Visible Images. Remote Sens. 2023, 15, 4492.

19. Jiang, Y.; Cheng, C.; Deng, L. LGIFNet: An infrared and visible image fusion network with local-global frequency interaction. Nondestruct. Test. Eval. 2025, 0, 1–25.

20. Jing, H.; Bin, W.; Jiachen, H. Chlorophyll inversion in rice based on visible light images of different planting methods. PLoS ONE 2025, 20, e0319657.

21. Li, S.; Liu, Z.; Wang, W.; Li, Q. Adaptive Frequency Separation Enhancement Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5642613.

22. Liu, Y.; Tu, B.; Liu, B.; He, Y.; Li, J.; Plaza, A. Spatial Frequency Domain Transformation for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5634916.

23. Fu, J.; Yu, Y.; Wang, L. FSDENet: A Frequency and Spatial Domains-Based Detail Enhancement Network for Remote Sensing Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 19378–19392.

24. Gupta, A.K.; Mathur, P.; Mishra, S.; Malav, M. Comparative Analysis of YOLO, Faster-RCNN and RetinaNet Object Detection Models for Satellite Imagery Analysis. In Proceedings of the 2024 2nd International Conference on Cyber Physical Systems, Power Electronics and Electric Vehicles (ICPEEV), Hyderabad, India, 26–28 September 2024; pp. 1–6.

25. Li, N.; Wang, M.; Huang, H.; Li, B.; Yuan, B.; Xu, S. PAR-YOLO: A Precise and Real-Time YOLO Water Surface Garbage Detection Model. Earth Sci. Inform. 2025, 18, 135.

26. Lin, F.; Hou, T.; Jin, Q.; You, A. Improved YOLO Based Detection Algorithm for Floating Debris in Waterway. Entropy 2021, 23, 1111.

27. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

28. Yang, Z.; Guan, Q.; Zhao, K.; Yang, J.; Xu, X.; Long, H.; Tang, Y. Multi-Branch Auxiliary Fusion YOLO with Re-parameterization Heterogeneous Convolutional for accurate object detection. arXiv 2024, arXiv:2407.04381.

29. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

30. Zhang, Y.; Xiao, Y.; Zhang, Y.; Zhang, T. Video saliency prediction via single feature enhancement and temporal recurrence. Eng. Appl. Artif. Intell. 2025, 160, 111840.

31. Chi, L.; Jiang, B.; Mu, Y. Fast Fourier Convolution. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 4479–4488.

32. Lian, J.; Zhang, Y.; Li, H.; Hu, J.; Li, L. DFT-Net: A Bimodal Object Detection Algorithm for Complex Traffic Environments. In Proceedings of the 2024 IEEE 22nd International Conference on Industrial Informatics (INDIN), Beijing, China, 17–20 August 2024; pp. 1–6.

33. An, K.; Bao, W.; Huang, M.; Xiang, X. Frequency-Domain-Based Multispectral Pedestrian Detection Network. In Proceedings of the 2025 4th International Symposium on Computer Applications and Information Technology (ISCAIT), Xi’an, China, 21–23 March 2025; pp. 453–459.

34. Yu, Z.; Huang, H.; Chen, W.; Su, Y.; Liu, Y.; Wang, X. YOLO-FaceV2: A scale and occlusion aware face detector. Pattern Recognit. 2024, 155, 110714.

35. Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

36. Zhu, J.; Chen, X.; He, K.; LeCun, Y.; Liu, Z. Transformers without Normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10–17 June 2025; pp. 14901–14911.

37. Li, X.; Wang, W.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized Focal Loss V2: Learning Reliable Localization Quality Estimation for Dense Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021.

38. Ma, X.; Dai, X.; Bai, Y.; Wang, Y.; Fu, Y. Rewrite the Stars. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 5694–5703.

39. Cai, H.; Li, J.; Hu, M.; Gan, C.; Han, S. EfficientViT: Lightweight Multi-Scale Attention for High-Resolution Dense Prediction. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 17256–17267.

40. Chen, J.; Kao, S.H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

41. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

42. Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4: Universal Models for the Mobile Ecosystem. In Computer Vision – ECCV 2024; Springer Nature: Cham, Switzerland, 2024; pp. 78–96.

43. Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A Report on Real-Time Object Detection Design. arXiv 2023, arXiv:2211.15444.

44. Sun, Z.; Lin, M.; Sun, X.; Tan, Z.; Li, H.; Jin, R. MAE-DET: Revisiting Maximum Entropy Principle in Zero-Shot NAS for Efficient Object Detection. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; Volume 162, pp. 20810–20826.

45. Jiang, Y.; Tan, Z.; Wang, J.; Sun, X.; Lin, M.; Li, H. GiraffeDet: A Heavy-Neck Paradigm for Object Detection. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

46. Yang, G.; Lei, J.; Tian, H.; Feng, Z.; Liang, R. Asymptotic Feature Pyramid Network for Labeling Pixels and Regions. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7820–7829.

47. Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057.

48. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. In Proceedings of the Advances in Neural Information Processing Systems; Globerson, A., Mackey, L., Belgrave, D., Fan, A., Paquet, U., Tomczak, J., Zhang, C., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2024; Volume 37, pp. 107984–108011.

49. Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. RepViT: Revisiting Mobile CNN From ViT Perspective. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15909–15920.

50. Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision – ECCV 2024; Springer Nature: Basel, Switzerland, 2024; pp. 1–21.

51. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO, Version 8.3.0. Computer Software. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 27 October 2025).

52. Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RT-DETRv2: Improved Baseline with Bag-of-Freebies for Real-Time Detection Transformer. arXiv 2024, arXiv:2407.17140.

53. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

| Category | Method/Study | Year | Detection Technology | Platform | Target Hazards | Key Innovation |
|---|---|---|---|---|---|---|
| Traditional Methods | Manual Inspection | - | Visual observation | Ground-based | Leakage, Piping | Direct observation |
| Traditional Methods | Resistivity Detection [9] | 2014 | Geophysical | Ground-based | Subsurface anomalies | Non-invasive detection |
| Traditional Methods | Ground-Penetrating Radar [12] | 1981 | Electromagnetic | Ground-based | Subsurface structures | Deep penetration capability |
| Thermal-based Methods | Thermal Infrared Detection [18] | 2023 | Thermal imaging | UAV/Ground | Temperature anomalies | Temperature contrast detection |
| Thermal-based Methods | Automatic Recognition [8] | 2022 | Thermal + AI | UAV | Surface anomalies | Automated thermal processing |
| AI Infrastructure Monitoring | Smart Dam Automation [16] | 2024 | YOLOv5 + Deep Learning | Fixed sensors | Structural cracks | High precision crack detection |
| AI Infrastructure Monitoring | Vision-guided Inspection [17] | 2024 | Computer Vision | Underwater ROV | Underwater defects | 98.6% precision at 68 FPS |
| AI Infrastructure Monitoring | AI Applications Review [15] | 2025 | Comprehensive AI | Multi-platform | Various hazards | Systematic AI overview |
| Frequency-Domain Methods | Adaptive Frequency Enhancement [21] | 2025 | FFT + Deep Learning | - | Infrared small targets | Multi-frequency decomposition |
| Frequency-Domain Methods | Spatial-Frequency Transform [22] | 2025 | U-Net + Frequency Attention | - | Small targets | Self-attention mechanism |
| Frequency-Domain Methods | Frequency-Spatial Enhancement [23] | 2025 | FFT + Haar Wavelet | Remote sensing | Shadow/low-contrast areas | Dual-domain processing |
| YOLO-based Enhancements | PAR-YOLO [25] | 2025 | Ghost bottleneck + YOLO | Edge computing | General objects | Lightweight design |
| YOLO-based Enhancements | Improved YOLO [26] | 2021 | Attention + YOLO | - | General objects | Feature map attention |
| YOLO-based Enhancements | BiFPN Enhancement [27] | 2020 | Modified FPN-PANet | - | General objects | Reduced computational complexity |
| YOLO-based Enhancements | MAFPN [28] | 2024 | Multi-branch FPN | - | Small targets | P2 layer utilization |
| Our Approach | EmbFreq-Net | 2025 | Frequency + YOLO | UAV | Embankment hazards | Task-specific frequency enhancement |

| Location | Data Volume | Collection Time | Resolution (pixels) | Acquisition Equipment |
|---|---|---|---|---|
| Songhua River, Nong’an, Jilin | 125 | August 2024 | 6252 × 4168 | Zhixun AR10 |
| Baigou River, Zhuozhou, Hebei | 336 | August 2023 | 4056 × 3040 | DJI ZH20T |
| Fogang, Qingyuan, Guangdong | 64 | April 2024 | 4032 × 3024 | DJI H30T |
| Fogang, Qingyuan, Guangdong | 77 | June 2024 | 4056 × 3040 | DJI ZH20T |
| Fogang, Qingyuan, Guangdong | 149 | August 2024 | 4056 × 3040 | DJI ZH20T |
| Changping, Beijing | 53 | December 2024 | 4032 × 3024 | DJI M4T |
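The per-site volumes can be totaled to see how the dataset is distributed across locations. The sketch below sums the table's values. It assumes that the two rows without a location label (77 and 149) belong to the Fogang site, as the merged-cell layout suggests; the variable names are illustrative.

```python
from collections import defaultdict

# (location, data volume) pairs copied from the dataset table.
# Assumption: the rows listing 77 and 149 belong to Fogang (merged cell).
rows = [
    ("Songhua River, Nong’an, Jilin", 125),
    ("Baigou River, Zhuozhou, Hebei", 336),
    ("Fogang, Qingyuan, Guangdong", 64),
    ("Fogang, Qingyuan, Guangdong", 77),
    ("Fogang, Qingyuan, Guangdong", 149),
    ("Changping, Beijing", 53),
]

# Aggregate the volume collected at each location.
per_location = defaultdict(int)
for location, volume in rows:
    per_location[location] += volume

total = sum(per_location.values())

# Share of the whole dataset contributed by each location, in percent.
shares = {loc: vol / total * 100.0 for loc, vol in per_location.items()}

for loc, vol in per_location.items():
    print(f"{loc}: {vol} ({shares[loc]:.1f}%)")
print(f"Total: {total}")
```

Under that assumption the table sums to 804 items, with the Baigou River and Fogang sites together contributing most of the data.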

| Augment Type | Augment Name | Method Description | Probability (%) |
|---|---|---|---|
| Spatial-level | Affine | Rotation, scaling, translation | 50 |
| Spatial-level | BBoxSafeRandomCrop | Safe cropping preserving targets | 10 |
| Spatial-level | D4 | Eight-fold symmetry transforms | 10 |
| Spatial-level | ElasticTransform | Non-linear shape deformation | 10 |
| Spatial-level | HorizontalFlip | Left-right mirroring | 10 |
| Spatial-level | VerticalFlip | Up-down mirroring | 10 |
| Spatial-level | GridDistortion | Grid-based distortion | 10 |
| Spatial-level | Perspective | Viewpoint angle changes | 10 |
| Pixel-level | GaussNoise | Gaussian noise simulation | 10 |
| Pixel-level | ISONoise | Camera sensor noise | 10 |
| Pixel-level | ImageCompression | JPEG compression artifacts | 10 |
| Pixel-level | RandomBrightnessContrast | Lighting variations | 10 |
| Pixel-level | RandomFog | Fog weather simulation | 10 |
| Pixel-level | RandomRain | Rain weather simulation | 10 |
| Pixel-level | RandomSnow | Snow weather simulation | 10 |
| Pixel-level | RandomShadow | Shadow effects | 10 |
| Pixel-level | RandomSunFlare | Sun glare effects | 10 |
| Pixel-level | ToGray | Grayscale conversion | 10 |
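The listed probabilities determine how heavily each training image is augmented. The sketch below is a rough estimate that assumes every transform fires independently with its listed probability; the source does not state how the transforms are composed, so this is an assumption. It computes the expected number of transforms applied per image and the chance that an image passes through unchanged.

```python
import math

# Per-transform application probabilities from the augmentation table.
probabilities = {
    "Affine": 0.50,
    "BBoxSafeRandomCrop": 0.10,
    "D4": 0.10,
    "ElasticTransform": 0.10,
    "HorizontalFlip": 0.10,
    "VerticalFlip": 0.10,
    "GridDistortion": 0.10,
    "Perspective": 0.10,
    "GaussNoise": 0.10,
    "ISONoise": 0.10,
    "ImageCompression": 0.10,
    "RandomBrightnessContrast": 0.10,
    "RandomFog": 0.10,
    "RandomRain": 0.10,
    "RandomSnow": 0.10,
    "RandomShadow": 0.10,
    "RandomSunFlare": 0.10,
    "ToGray": 0.10,
}

# Assumption: transforms are applied independently of one another.
# Expected count is the sum of the individual probabilities.
expected_applied = sum(probabilities.values())

# Probability that none of the transforms fires for a given image.
p_unchanged = math.prod(1.0 - p for p in probabilities.values())

print(f"Transforms listed: {len(probabilities)}")
print(f"Expected transforms per image: {expected_applied:.2f}")
print(f"Probability an image is left unchanged: {p_unchanged:.3f}")
```

Under the independence assumption, an image receives about 2.2 transforms on average, and roughly 8% of images pass through without any augmentation.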

| Method | P (%) | R (%) | F1 | mAP50 (%) | mAP50–95 (%) | GFLOPs | Params (M) |
|---|---|---|---|---|---|---|---|
| YOLO11 | 75.40 | 73.28 | 0.7432 | 73.49 | 34.41 | 6.3 | 2.58 |
| EfficientViT | 79.07 | 72.29 | 0.7552 | 77.06 | 35.62 | 7.9 | 3.74 |
| FasterNet | 78.52 | 71.89 | 0.7498 | 74.05 | 34.64 | 9.2 | 3.90 |
| MobileNet | 75.62 | 67.25 | 0.7104 | 73.18 | 33.49 | 21.0 | 5.43 |
| StarNet | 82.79 | 70.37 | 0.7607 | 76.47 | 35.43 | 5.0 | 1.94 |
| FFC | 82.32 | 72.22 | 0.7694 | 77.03 | 35.23 | 6.1 | 2.47 |
| C3k2-LFE | 79.80 | 75.50 | 0.7759 | 77.45 | 35.96 | 5.4 | 2.19 |

| Method | P (%) | R (%) | F1 | mAP50 (%) | mAP50–95 (%) | GFLOPs | Params (M) |
|---|---|---|---|---|---|---|---|
| BiFPN | 76.42 | 71.19 | 0.7371 | 73.57 | 33.55 | 6.3 | 1.92 |
| MAFPN | 74.73 | 70.64 | 0.7260 | 72.52 | 32.97 | 7.1 | 2.70 |
| RepGFPN | 83.22 | 73.85 | 0.7819 | 76.48 | 35.13 | 8.2 | 3.66 |
| AFPN | 80.44 | 69.36 | 0.7449 | 74.16 | 33.79 | 8.8 | 2.65 |
| ASF | 81.14 | 71.89 | 0.7622 | 76.82 | 36.18 | 7.1 | 2.67 |
| MFFPN | 78.10 | 78.30 | 0.7819 | 77.13 | 35.96 | 6.1 | 2.47 |

| Method | P (%) | R (%) | F1 | mAP50 (%) | mAP50–95 (%) | GFLOPs | Params (M) |
|---|---|---|---|---|---|---|---|
| YOLO11 | 75.40 | 73.28 | 0.7432 | 73.49 | 34.41 | 6.3 | 2.58 |
| YOLOv10 | 82.02 | 68.43 | 0.7461 | 75.07 | 34.07 | 8.2 | 2.70 |
| YOLOv9 | 83.03 | 65.95 | 0.7349 | 73.50 | 32.59 | 6.4 | 1.73 |
| YOLOv8 | 78.00 | 73.14 | 0.7549 | 75.72 | 34.61 | 6.8 | 2.68 |
| DETR-l | 71.34 | 67.74 | 0.6932 | 69.10 | 29.62 | 103.4 | 31.99 |
| DETR-R50 | 74.57 | 68.17 | 0.7115 | 70.88 | 32.24 | 125.6 | 41.94 |
| RepViT | 80.14 | 71.68 | 0.7566 | 76.18 | 36.55 | 17.0 | 6.43 |
| EmbFreq-Net | 76.52 | 74.59 | 0.7554 | 77.68 | 35.25 | 4.6 | 2.02 |
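In these comparison tables, precision and recall are given in percent while F1 is given as a fraction. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall. The metric definitions are not reproduced in this section, so this is an assumption. It recomputes F1 for two rows of the table above; the function name `f1_from_percent` is illustrative.

```python
def f1_from_percent(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, returned as a fraction in [0, 1]."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2.0 * p * r / (p + r)


# (precision %, recall %) pairs copied from the comparison table.
rows = {
    "YOLO11": (75.40, 73.28),
    "EmbFreq-Net": (76.52, 74.59),
}

# Round to four decimals to match the precision used in the table.
f1_scores = {
    name: round(f1_from_percent(p, r), 4) for name, (p, r) in rows.items()
}

for name, score in f1_scores.items():
    print(f"{name}: F1 = {score:.4f}")
```

Both recomputed values (0.7432 and 0.7554) agree with the F1 column, which supports reading that column as a fraction rather than a percentage.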

| Configuration | F1-Score | mAP50 (%) | mAP50–95 (%) | GFLOPs | Parameters (M) |
|---|---|---|---|---|---|
| A (LFE Module) | 0.7759 | 77.45 | 35.96 | 5.4 | 2.19 |
| B (MSIS-Block) | 0.7682 | 76.95 | 34.89 | 6.4 | 2.66 |
| C (MFFPN) | 0.7819 | 77.13 | 35.96 | 6.1 | 2.47 |
| D (MSSDH) | 0.7391 | 74.23 | 33.98 | 5.6 | 2.42 |
| A + B | 0.7547 | 74.17 | 34.64 | 5.4 | 2.27 |
| A + C | 0.7474 | 74.63 | 32.92 | 5.2 | 2.10 |
| A + D | 0.7515 | 76.60 | 34.48 | 5.1 | 2.24 |
| B + C | 0.7626 | 77.37 | 36.58 | 6.1 | 2.55 |
| B + D | 0.7598 | 77.28 | 35.73 | 5.7 | 2.50 |
| C + D | 0.7480 | 76.29 | 35.63 | 5.3 | 2.29 |
| A + B + C | 0.7697 | 76.71 | 35.08 | 5.3 | 2.18 |
| A + B + D | 0.7350 | 74.11 | 33.56 | 5.2 | 2.32 |
| A + C + D | 0.7346 | 75.01 | 33.57 | 4.5 | 1.94 |
| B + C + D | 0.7226 | 75.66 | 35.46 | 5.4 | 2.39 |
| A + B + C + D (Full Model) | 0.7554 | 77.68 | 35.25 | 4.6 | 2.02 |

| Hyperparameter | Value | P (%) | R (%) | F1 | mAP50 (%) | mAP50–95 (%) |
|---|---|---|---|---|---|---|
| Compressed Channels | 16 (Default) | 76.52 | 74.59 | 0.7554 | 77.68 | 35.25 |
| Compressed Channels | 32 | 78.53 | 68.96 | 0.7344 | 76.44 | 34.78 |
| Compressed Channels | 64 | 77.74 | 68.19 | 0.7264 | 75.74 | 34.94 |
| Compressed Channels | 128 | 73.29 | 71.50 | 0.7238 | 74.96 | 36.31 |
| Compressed Channels | 256 | 75.23 | 71.68 | 0.7341 | 75.73 | 35.68 |
| Kernel Number | 4 | 76.12 | 68.24 | 0.7195 | 76.89 | 36.06 |
| Kernel Number | 8 | 82.58 | 66.90 | 0.7387 | 76.70 | 35.80 |
| Kernel Number | 16 (Default) | 76.52 | 74.59 | 0.7554 | 77.68 | 35.25 |
| Kernel Number | 32 | 75.41 | 72.95 | 0.7407 | 75.96 | 34.39 |
| Kernel Number | 64 | 78.70 | 72.82 | 0.7564 | 77.83 | 36.58 |
| Attention Heads | 2 (Default) | 76.52 | 74.59 | 0.7554 | 77.68 | 35.25 |
| Attention Heads | 4 | 79.00 | 70.78 | 0.7462 | 75.28 | 35.27 |
| Attention Heads | 8 | 78.75 | 73.08 | 0.7576 | 78.39 | 37.87 |
| Attention Heads | 16 | 78.24 | 71.89 | 0.7493 | 75.49 | 35.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, J.; Wang, Z.; Li, R.; Zhao, R.; Zhang, Q. Automated Detection of Embankment Piping and Leakage Hazards Using UAV Visible Light Imagery: A Frequency-Enhanced Deep Learning Approach for Flood Risk Prevention. Remote Sens. 2025, 17, 3602. https://doi.org/10.3390/rs17213602