Evaluating the Impact of Different Spatial Resolutions of UAV Imagery on Mapping Tidal Marsh Vegetation Using Multiple Plots of Different Complexity

Highlights

- Vegetation classification accuracy varied with the spatial resolution of the imagery.

- Vegetation complexity affected classification accuracy.

- Tidal marshes with different vegetation complexities should be mapped using images of different spatial resolutions.

- UAV data with 5 cm resolution are recommended for tidal marsh vegetation classification in the Yellow River Delta or in regions of similar vegetation complexity.

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Image Acquisition and Processing

2.3. Selection of Multiple Plots of Different Vegetation Complexity

2.4. Vegetation Community Classification and Accuracy Assessment

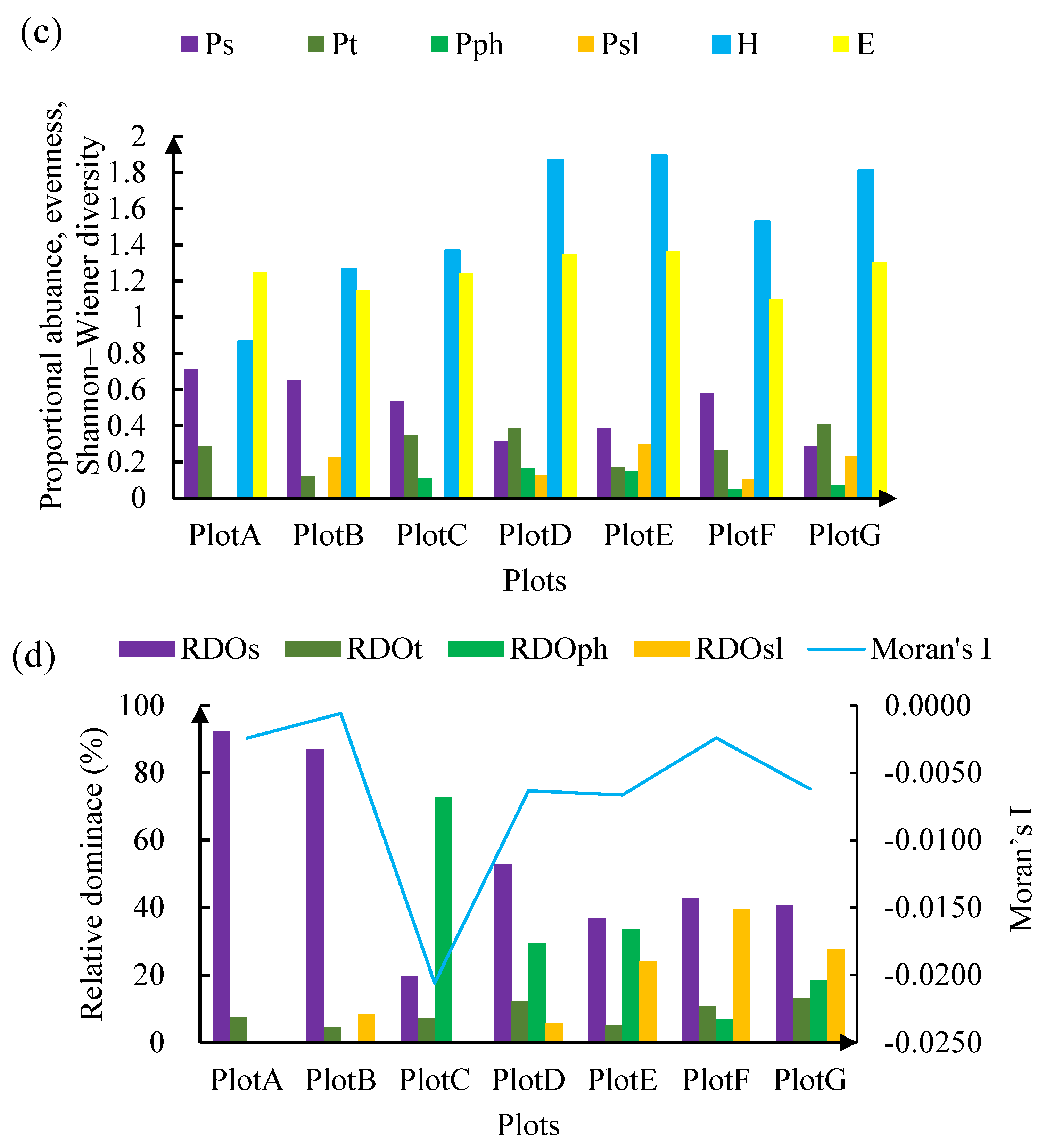

2.5. Analysis of Vegetation Composition and Structure

2.6. Statistical Test

3. Results

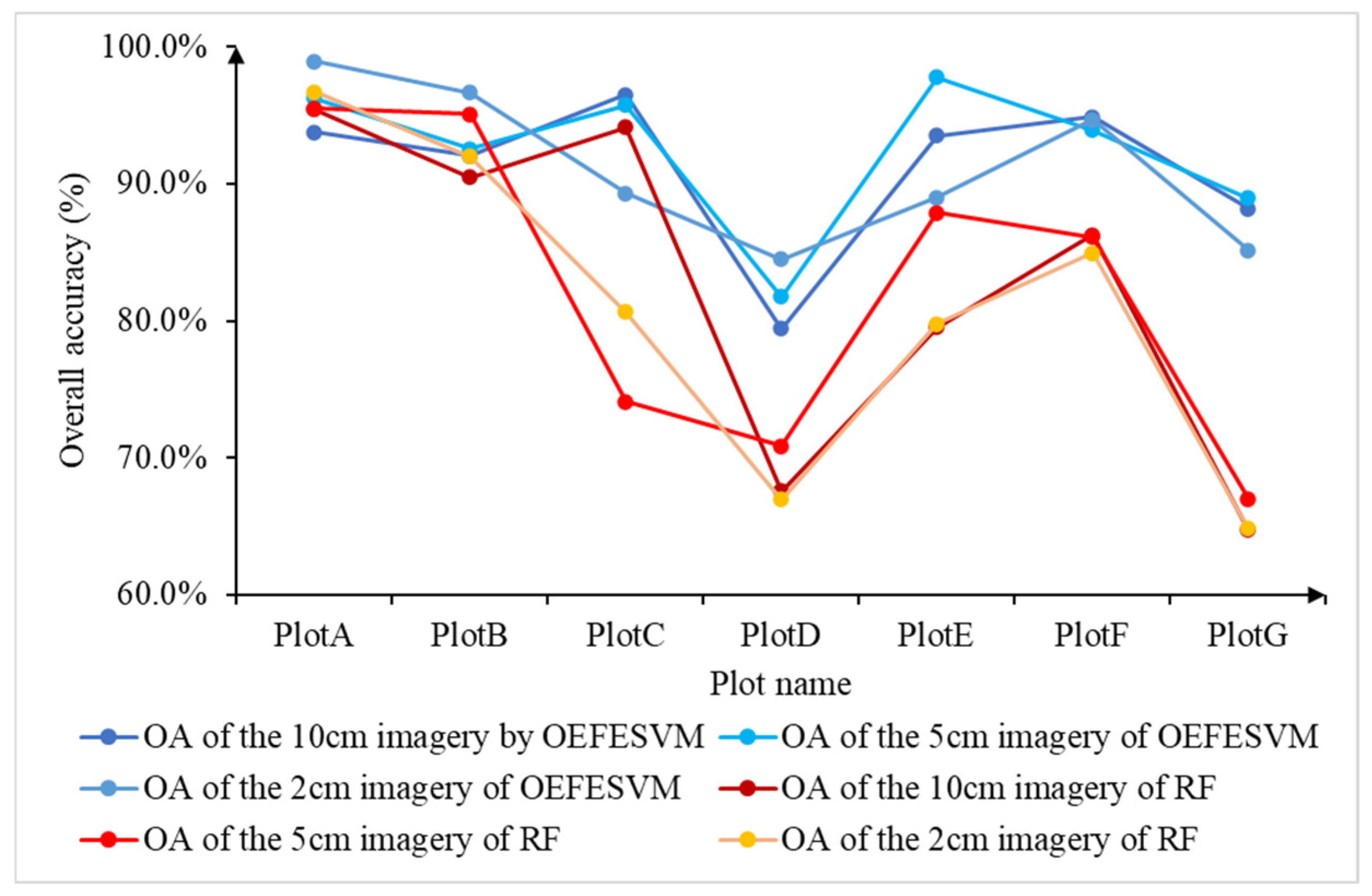

3.1. Quantitative Comparison of Classification Overall Accuracy

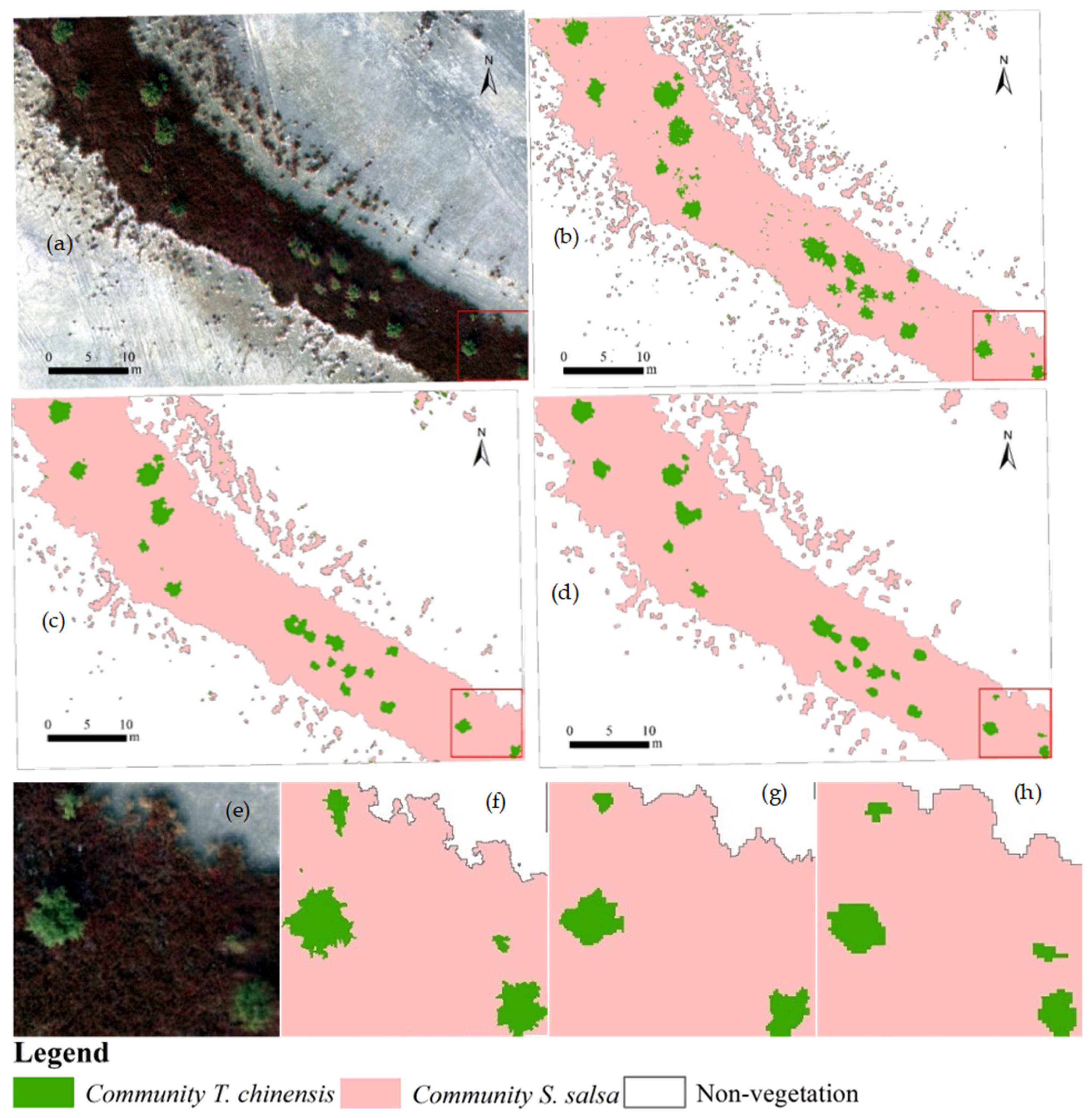

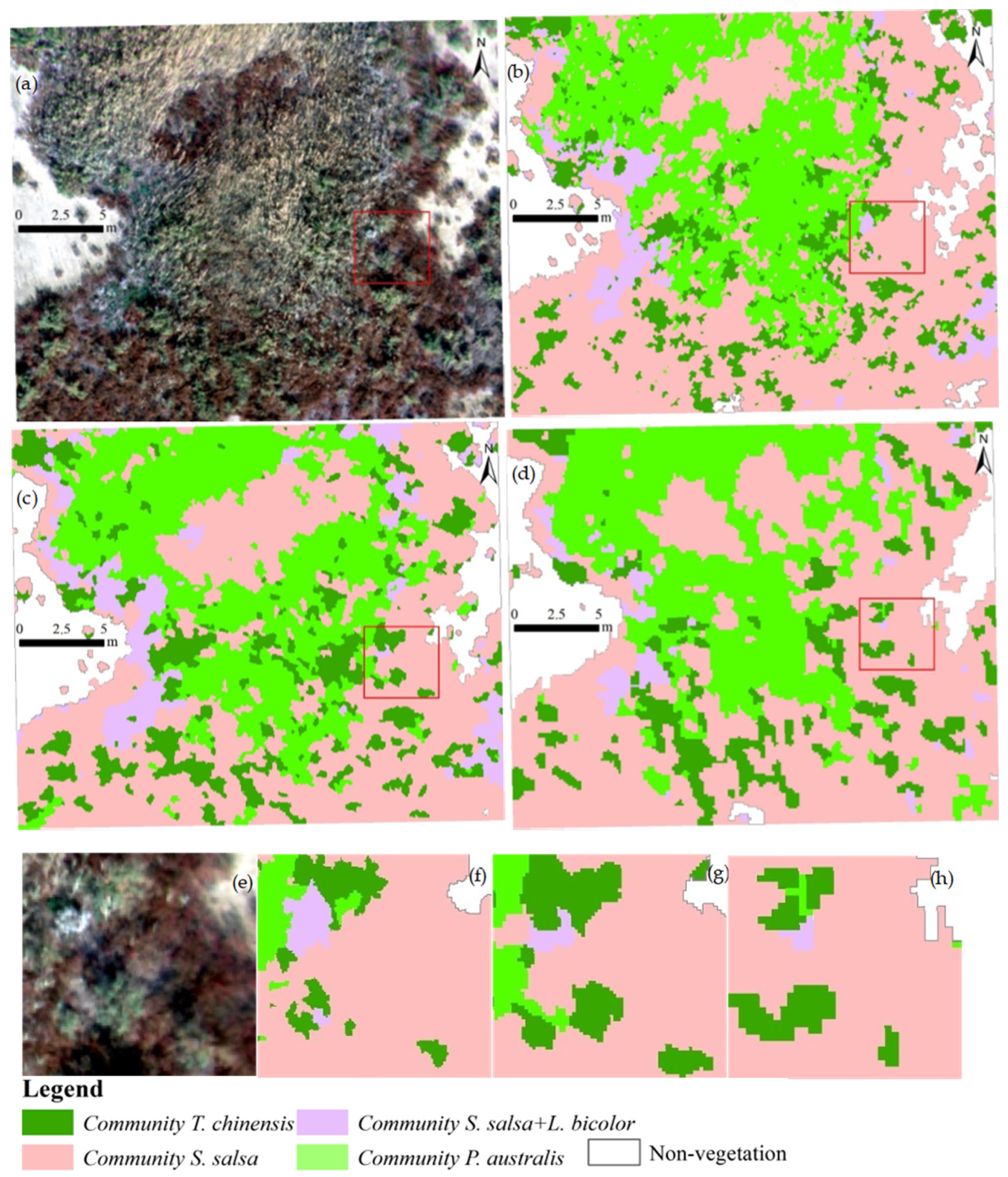

3.2. Qualitative Comparison of Classification Accuracy

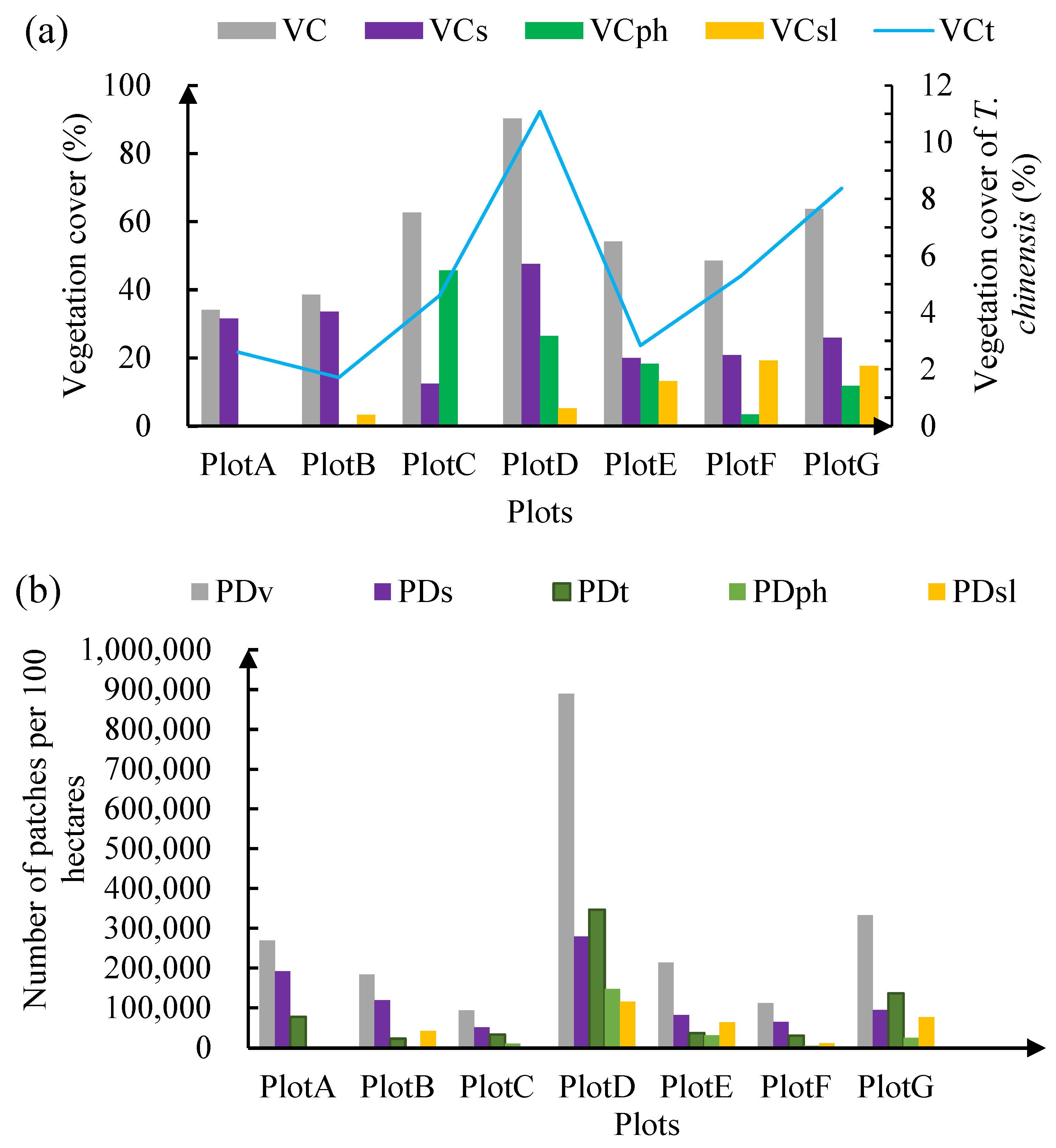

3.3. Vegetation Structure of the Seven Plots

4. Discussion

4.1. Vegetation Characteristics and Classification Accuracy

4.2. Statistical Metrics of Vegetation Complexity

4.3. Prospects

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. The Vegetation Community Classification Accuracy for Seven Plots in the Study Area

| Plot Name | Spatial Resolution (cm) | OA (%) | Kappa | PA/UA | S. salsa (%) | T. chinensis (%) | P. australis (%) | S. salsa + L. bicolor (%) | Non-Vegetation (%) |
|---|---|---|---|---|---|---|---|---|---|
| PlotA | 10 | 93.75 | 0.8972 | PA | 99.96 | 66.76 | | | 100.0 |
| | | | | UA | 83.19 | 100.0 | | | 99.97 |
| | 5 | 96.23 | 0.9378 | PA | 99.88 | 79.47 | | | 100.0 |
| | | | | UA | 89.03 | 100.0 | | | 99.93 |
| | 2 | 98.94 | 0.9824 | PA | 100.0 | 93.97 | | | 100.0 |
| | | | | UA | 96.64 | 100.0 | | | 100.0 |
| PlotB | 10 | 92.01 | 0.8731 | PA | 98.18 | 66.41 | | 76.41 | 99.90 |
| | | | | UA | 78.09 | 99.12 | | 89.67 | 97.83 |
| | 5 | 92.54 | 0.8796 | PA | 80.27 | 82.09 | | 90.57 | 99.98 |
| | | | | UA | 85.81 | 99.50 | | 69.65 | 98.92 |
| | 2 | 96.63 | 0.9440 | PA | 99.65 | 82.07 | | 89.88 | 100.0 |
| | | | | UA | 86.35 | 99.93 | | 98.90 | 99.78 |
| PlotC | 10 | 96.49 | 0.9240 | PA | 72.54 | 74.57 | 97.68 | | 99.97 |
| | | | | UA | 80.31 | 67.64 | 97.84 | | 99.30 |
| | 5 | 95.73 | 0.9087 | PA | 89.16 | 93.00 | 94.76 | | 99.96 |
| | | | | UA | 51.53 | 72.53 | 99.31 | | 99.86 |
| | 2 | 89.32 | 0.7920 | PA | 98.49 | 99.27 | 84.96 | | 99.96 |
| | | | | UA | 26.93 | 41.00 | 99.97 | | 100.0 |
| PlotD | 10 | 79.43 | 0.6876 | PA | 96.55 | 76.01 | 76.37 | 42.58 | 99.88 |
| | | | | UA | 46.70 | 72.64 | 97.67 | 73.05 | 99.88 |
| | 5 | 81.76 | 0.7152 | PA | 98.91 | 90.27 | 74.37 | 79.39 | 100.0 |
| | | | | UA | 54.08 | 49.27 | 99.21 | 77.49 | 99.61 |
| | 2 | 84.48 | 0.7528 | PA | 97.73 | 92.71 | 77.60 | 91.87 | 99.73 |
| | | | | UA | 56.62 | 50.71 | 99.29 | 90.78 | 99.93 |
| PlotE | 10 | 93.49 | 0.9104 | PA | 95.54 | 94.83 | 88.05 | 95.74 | 99.81 |
| | | | | UA | 88.89 | 42.38 | 98.22 | 98.07 | 99.30 |
| | 5 | 97.74 | 0.9681 | PA | 98.82 | 91.14 | 96.77 | 96.84 | 99.96 |
| | | | | UA | 98.75 | 62.62 | 99.00 | 98.80 | 99.71 |
| | 2 | 88.98 | 0.8459 | PA | 57.24 | 98.84 | 90.53 | 97.11 | 99.84 |
| | | | | UA | 73.04 | 60.94 | 99.89 | 67.19 | 99.99 |
| PlotF | 10 | 94.85 | 0.9253 | PA | 90.55 | 91.59 | 83.81 | 94.49 | 99.19 |
| | | | | UA | 78.40 | 92.70 | 99.73 | 94.27 | 98.59 |
| | 5 | 93.90 | 0.9114 | PA | 93.94 | 95.87 | 62.02 | 98.99 | 100.0 |
| | | | | UA | 86.05 | 76.46 | 99.88 | 91.96 | 99.40 |
| | 2 | 94.66 | 0.9214 | PA | 98.95 | 98.57 | 64.81 | 97.23 | 100.00 |
| | | | | UA | 77.89 | 85.45 | 99.78 | 92.96 | 99.94 |
| PlotG | 10 | 88.16 | 0.8315 | PA | 76.86 | 76.93 | 86.02 | 91.84 | 100.0 |
| | | | | UA | 55.18 | 59.24 | 97.83 | 88.63 | 98.73 |
| | 5 | 88.94 | 0.8408 | PA | 88.10 | 75.16 | 85.72 | 94.17 | 100.0 |
| | | | | UA | 47.57 | 74.73 | 98.19 | 83.28 | 99.77 |
| | 2 | 85.15 | 0.7896 | PA | 92.14 | 87.57 | 75.19 | 98.29 | 99.99 |
| | | | | UA | 51.91 | 55.94 | 99.67 | 73.91 | 99.93 |

| Plot Name | Spatial Resolution (cm) | OA (%) | Kappa | PA/UA | S. salsa (%) | T. chinensis (%) | P. australis (%) | S. salsa + L. bicolor (%) | Non-Vegetation (%) |
|---|---|---|---|---|---|---|---|---|---|
| PlotA | 10 | 95.45 | 0.9254 | PA | 99.80 | 76.09 | | | 100.0 |
| | | | | UA | 87.35 | 99.82 | | | 99.87 |
| | 5 | 95.45 | 0.8248 | PA | 99.93 | 75.10 | | | 100.0 |
| | | | | UA | 87.05 | 100.0 | | | 99.92 |
| | 2 | 96.67 | 0.945 | PA | 96.41 | 87.36 | | | 99.99 |
| | | | | UA | 92.98 | 93.37 | | | 99.98 |
| PlotB | 10 | 90.46 | 0.8488 | PA | 97.95 | 59.20 | | 72.92 | 99.81 |
| | | | | UA | 77.82 | 97.23 | | 77.26 | 97.99 |
| | 5 | 95.05 | 0.9197 | PA | 98.54 | 79.10 | | 86.07 | 99.38 |
| | | | | UA | 86.65 | 98.04 | | 89.99 | 98.83 |
| | 2 | 91.98 | 0.8659 | PA | 99.45 | 71.08 | | 57.10 | 100.0 |
| | | | | UA | 74.25 | 94.33 | | 91.56 | 99.86 |
| PlotC | 10 | 94.12 | 0.8753 | PA | 62.32 | 76.71 | 94.78 | | 99.82 |
| | | | | UA | 44.08 | 76.71 | 97.14 | | 99.53 |
| | 5 | 74.11 | 0.5779 | PA | 85.36 | 86.54 | 64.25 | | 99.92 |
| | | | | UA | 12.85 | 28.55 | 98.29 | | 99.71 |
| | 2 | 80.63 | 0.6398 | PA | 42.71 | 69.31 | 98.13 | | 76.44 |
| | | | | UA | 8.76 | 29.38 | 95.42 | | 96.00 |
| PlotD | 10 | 67.55 | 0.5767 | PA | 87.55 | 78.83 | 49.98 | 46.07 | 100.0 |
| | | | | UA | 56.41 | 36.10 | 82.22 | 79.76 | 99.30 |
| | 5 | 70.88 | 0.6162 | PA | 93.51 | 83.00 | 52.68 | 62.69 | 100.0 |
| | | | | UA | 60.92 | 32.82 | 92.47 | 61.78 | 98.60 |
| | 2 | 66.94 | 0.5697 | PA | 97.26 | 69.51 | 44.53 | 58.63 | 99.82 |
| | | | | UA | 50.06 | 41.04 | 92.74 | 56.89 | 93.20 |
| PlotE | 10 | 79.50 | 0.7316 | PA | 92.52 | 90.92 | 58.22 | 90.25 | 99.61 |
| | | | | UA | 58.33 | 24.00 | 93.77 | 94.01 | 99.67 |
| | 5 | 87.86 | 0.8318 | PA | 85.34 | 77.90 | 82.80 | 86.75 | 99.85 |
| | | | | UA | 72.34 | 40.13 | 92.63 | 91.00 | 99.36 |
| | 2 | 79.79 | 0.7234 | PA | 79.27 | 80.84 | 70.95 | 72.50 | 99.50 |
| | | | | UA | 48.28 | 36.74 | 89.75 | 92.28 | 99.87 |
| PlotF | 10 | 86.24 | 0.8004 | PA | 87.71 | 90.34 | 10.89 | 93.86 | 100.00 |
| | | | | UA | 53.20 | 67.71 | 88.64 | 96.81 | 98.60 |
| | 5 | 86.11 | 0.7992 | PA | 91.69 | 93.07 | 12.24 | 96.21 | 99.42 |
| | | | | UA | 49.78 | 73.44 | 81.36 | 93.40 | 99.06 |
| | 2 | 84.96 | 0.7801 | PA | 79.80 | 88.65 | 25.42 | 87.48 | 99.71 |
| | | | | UA | 42.11 | 74.77 | 72.31 | 90.64 | 99.85 |
| PlotG | 10 | 64.73 | 0.5648 | PA | 79.87 | 70.16 | 34.91 | 88.76 | 100.0 |
| | | | | UA | 31.00 | 27.96 | 95.06 | 84.21 | 98.41 |
| | 5 | 67.01 | 0.5887 | PA | 88.45 | 68.30 | 39.22 | 88.23 | 99.98 |
| | | | | UA | 27.00 | 32.69 | 91.69 | 84.23 | 99.82 |
| | 2 | 64.85 | 0.5664 | PA | 84.27 | 70.91 | 28.03 | 90.88 | 97.91 |
| | | | | UA | 25.35 | 39.07 | 85.47 | 70.94 | 99.80 |
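The OA, Kappa, PA, and UA columns in the Appendix A tables are standard confusion-matrix statistics. The following is a minimal sketch of how such values are conventionally derived, assuming PA and UA denote producer's and user's accuracy; the function name `accuracy_metrics` and the 3-class matrix are hypothetical illustrations, not data or code from the study.

```python
import numpy as np

def accuracy_metrics(cm):
    """Return (OA, Kappa, PA, UA) as fractions from a confusion matrix.

    Assumed layout: cm[i, j] counts samples of reference class i (rows)
    that were mapped to class j (columns).
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    pa = diag / cm.sum(axis=1)  # producer's accuracy per reference class
    ua = diag / cm.sum(axis=0)  # user's accuracy per mapped class
    oa = diag.sum() / total     # overall accuracy
    # Agreement expected by chance, from the row and column marginals.
    pe = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / total**2
    kappa = (oa - pe) / (1.0 - pe)
    return oa, kappa, pa, ua

# Hypothetical confusion matrix for three classes (not from the paper).
cm = [[50, 5, 0],
      [10, 30, 0],
      [0, 0, 105]]
oa, kappa, pa, ua = accuracy_metrics(cm)
print(f"OA = {oa:.2%}, Kappa = {kappa:.4f}")
print("PA:", np.round(pa * 100, 2))
print("UA:", np.round(ua * 100, 2))
```

Multiplying OA, PA, and UA by 100 gives percentages in the same form as the tables, while Kappa stays on its native scale.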

References

1. Li, H.; Yang, S.L. Trapping effect of tidal marsh vegetation on suspended sediment, Yangtze Delta. J. Coast. Res. 2009, 25, 915–924.

2. Van Belzen, J.; Van de Koppel, J.; Kirwan, M.L.; Van der Wal, D.; Herman, P.M.J.; Dakos, V.; Kefi, S.; Scheffer, M.; Guntenspergen, G.R.; Bouma, T.J. Vegetation recovery in tidal marshes reveals critical slowing down under increased inundation. Nat. Commun. 2017, 8, 15811.

3. Correll, M.D.; Hantson, W.; Hodgman, T.P.; Cline, B.B.; Elphik, C.S.; Shrive, W.G.; Tymkiw, E.L.; Olsen, B.J. Fine-scale mapping of coastal plant communities in the northeastern USA. Wetlands 2019, 39, 17–28.

4. Gedan, K.B.; Kirwan, M.L.; Wolanski, E.; Barbier, E.B.; Silliman, B.R. The present and future role of coastal wetland vegetation in protecting shorelines: Answering recent challenges to the paradigm. Clim. Change 2011, 106, 7–29.

5. Duarte, C.M.; Losada, I.J.; Hendriks, I.E.; Mazarrasa, I.; Marba, N. The role of coastal plant communities for climate change mitigation and adaption. Nat. Clim. Change 2013, 3, 961–969.

6. Kearney, W.S.; Fagherazzi, S. Salt marsh vegetation promotes efficient tidal channel networks. Nat. Commun. 2016, 7, 12287.

7. Van Zelst, V.T.M.; Dijkstra, J.T.; Van Wesenbeeck, B.K.; Eilander, D.; Morris, E.P.; Winsemius, H.C.; Ward, P.J.; De Vries, M.B. Cutting the costs of coastal protection by integrating vegetation in flood defences. Nat. Commun. 2021, 12, 6533.

8. Villoslada, M.; Bergamo, T.F.; Ward, R.D.; Burnside, N.G.; Joyce, C.B.; Bunce, R.G.H. Fine scale plant community assessment in coastal meadows using UAV based multispectral data. Ecol. Indic. 2020, 111, 105979.

9. Worthington, T.A.; Spalding, M.; Landis, E.; Maxwell, T.L.; Navarro, A.; Smart, L.S.; Murray, N.J. The distribution of global tidal marshes from Earth observation data. Glob. Ecol. Biogeogr. 2024, 33, e13852.

10. Chen, Z.Z.; Chen, J.J.; Yue, Y.M.; Lan, Y.P.; Ling, M.; Li, X.H.; You, H.T.; Han, X.W.; Zhou, G.Q. Tradeoffs among multi-source remote sensing images, spatial resolution, and accuracy for the classification of wetland plant species and surface objects based on the MRS_DeepLabV3+ model. Ecol. Inform. 2024, 81, 102594.

11. Higinbotham, C.B.; Alber, M.; Chalmers, A.G. Analysis of tidal marsh vegetation patterns in two Georgia estuaries using aerial photography and GIS. Estuaries 2004, 27, 670–683.

12. Rajakumari, S.; Mahesh, R.; Sarunjith, K.J.; Ramesh, R. Building spectral catalogue for salt marsh vegetation, hyperspectral and multispectral remote sensing. Reg. Stud. Mar. Sci. 2022, 53, 102435.

13. Wu, Z.; Zhao, S.; Zhang, X.; Sun, P.; Wang, L. Studies on interrelation between salt vegetation and soil salinity in the Yellow River Delta. Chin. J. Plant Ecol. 1994, 18, 184–193, (In Chinese with English abstract).

14. Li, H.; Liu, Q.S.; Huang, C.; Zhang, X.; Wang, S.X.; Wu, W.; Shi, L. Variation in vegetation composition and structure across mudflat areas in the Yellow River Delta, China. Remote Sens. 2024, 16, 3495.

15. Dronova, I.; Kislik, C.; Dinh, Z.; Kelly, M. A review of unoccupied aerial vehicle use in wetland applications: Emerging opportunities in approach, technology, and data. Drones 2021, 5, 45.

16. Huang, Y.F.; Lu, C.Y.; Jia, M.M.; Wang, Z.L.; Su, Y.; Su, Y.L. Plant species classification of coastal wetlands based on UAV images and object-oriented deep learning. Biodivers. Sci. 2023, 31, 22411, (In Chinese with English abstract).

17. Fassnacht, E.E.; Latifi, H.; Sterenczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

18. Belluco, E.; Camuffo, M.; Ferrari, S.; Modenese, L.; Silvestri, S.; Marani, A.; Marani, M. Mapping salt-marsh vegetation by multispectral and hyperspectral remote sensing. Remote Sens. Environ. 2006, 105, 54–67.

19. Rasanen, A.; Virtanen, T. Data and resolution requirements in mapping vegetation in spatially heterogeneous landscapes. Remote Sens. Environ. 2019, 230, 111207.

20. Kolarik, N.E.; Gaughan, A.E.; Stevens, F.R.; Pricope, N.G.; Woodward, K.; Cassidy, L.; Salerno, J.; Hartter, J. A multi-plot assessment of vegetation structure using a micro-unmanned aerial system (UAS) in a semi-arid savanna environment. ISPRS J. Photogramm. Remote Sens. 2020, 164, 84–96.

21. Mullerova, J.; Gago, X.; Bucas, M.; Company, J.; Estrany, J.; Fortesa, J.; Manfreda, S.; Michez, A.; Mokros, M.; Pauluse, G.; et al. Characterizing vegetation complexity with unmanned aerial systems (UAV)-a framework and synthesis. Ecol. Indic. 2021, 131, 108156.

22. Avtar, R.; Suab, S.A.; Syukur, M.S.; Korom, A.; Umarhadi, D.A.; Yunus, A.P. Assessing the influence of UAV altitude on extracted biophysical parameters of young oil palm. Remote Sens. 2020, 12, 3030.

23. Avola, D.; Cinque, L.; Fagioli, A.; Foresti, G.L.; Pannone, D.; Piciarelli, C. Automatic estimation of optimal UAV flight parameters for real-time wide areas monitoring. Multimed. Tools Appl. 2021, 80, 25009–25031.

24. Mesas-Carrascosa, F.J.; Garcia, M.D.N.; Larriva, J.E.M.D.; Garcia-Ferrer, A. An analysis of the influence of flight parameters in the generation of unmanned aerial vehicle (UAV) orthomosaicks to survey archaeological areas. Sensors 2016, 16, 1838.

25. Cui, B.; Yang, Q.; Yang, Z.; Zhang, K. Evaluating the ecological performance of wetland restoration in the Yellow River Delta, China. Ecol. Eng. 2009, 35, 1090–1103.

26. Wang, H.; Gao, J.; Ren, L.; Kong, Y.; Li, H.; Li, L. Assessment of the red-crowned crane habitat in the Yellow River Delta Nature Reserve, East China. Reg. Environ. Change 2013, 13, 115–123.

27. Li, S.; Cui, B.; Xie, T.; Zhang, K. Diversity pattern of macrobenthos associated with different stages of wetland restoration in the Yellow River Delta. Wetlands 2016, 36, S57–S67.

28. Liu, Q.S.; Huang, C.; Gao, X.; Li, H.; Liu, G.H. Size distribution of the quasi-circular vegetation patches in the Yellow River Delta, China. Ecol. Inform. 2022, 71, 101807.

29. Bai, X.H.; Yang, C.Z.; Fang, L.; Chen, J.Y.; Wang, X.F.; Gao, N.; Zheng, P.M.; Wang, G.Q.; Wang, Q.; Ren, S.L. Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning. Drones 2025, 9, 235.

30. Li, H.; Wang, P.; Huang, C. Comparison of deep learning methods for detecting and counting sorghum heads in UAV Imagery. Remote Sens. 2022, 14, 3143.

31. Zhu, H.L.; Huang, Y.W.; An, Z.K.; Zhang, H.; Han, Y.Y.; Zhao, Z.H.; Li, F.F.; Zhang, C.; Hou, C.C. Assessing radiometric calibration methods for multispectral UAV imagery and the influence of illumination, flight altitude and flight time on reflectance, vegetation index and inversion of winter wheat AGB and LAI. Comput. Electron. Agric. 2024, 219, 108821.

32. Liu, Q.S.; Huang, C.; Liu, G.H.; Yu, B.W. Comparison of CBERS-04, GF-1, and GF-2 satellite panchromatic images for mapping quasi-circular vegetation patches in the Yellow River Delta, China. Sensors 2018, 18, 2733.

33. Shi, L.; Liu, Q.S.; Huang, C.; Li, H.; Liu, G.H. Comparing pixel-based random forest and the object-based support vector machine approaches to map the quasi-circular vegetation patches using individual seasonal fused GF-1 imagery. IEEE Access 2020, 8, 228955–228966.

34. Liu, Q.S.; Song, H.W.; Liu, G.H.; Huang, C.; Li, H. Evaluating the Potential of Multi-Seasonal CBERS-04 Imagery for Mapping the Quasi-Circular Vegetation Patches in the Yellow River Delta Using Random Forest. Remote Sens. 2019, 11, 1216.

35. Yeo, S.; Lafon, V.; Alard, D.; Curti, C.; Dehouck, A.; Benot, M.L. Classification and mapping of saltmarsh vegetation combining multispectral images with field data. Estuar. Coast. Shelf Sci. 2020, 236, 106643.

36. Game, M.; Carrel, J.E.; Hotrabhavandra, T. Patch dynamics of plant succession on abandoned surface coal mines: A case history approach. J. Ecol. 1982, 70, 707–720.

37. Giriraj, A.; Murthy, M.S.R.; Ramesh, B.R. Vegetation composition, structure and patterns of diversity: A case study from the tropical wet evergreen forests of the western Ghats, India. Edinb. J. Bot. 2008, 65, 1–22.

38. DeMeo, T.E.; Manning, M.M.; Rowland, M.M.; Vojta, C.D.; McKelvey, K.S.; Brewer, C.K.; Kennedy, R.S.H.; Maus, P.A.; Schulz, B.; Westfall, J.A.; et al. Monitoring vegetation composition and structure as habitat attributes. In A Technical Guide for Monitoring Wildlife Habitat; Gen. Tech. Rep. WO-89; Rowland, M.M., Vojta, C.D., Eds.; Department of Agriculture, Forest Service: Washington, DC, USA, 2013; pp. 4-1–4-63.

39. Meloni, F.; Nakamura, G.M.; Granzotti, C.R.F.; Martinez, A.S. Vegetation cover reveals the phase diagram of patch patterns in drylands. Phys. A 2019, 534, 122048.

40. Taddeo, S.; Dronova, I.; Depsky, N. Spectral vegetation indices of wetland greenness: Response to vegetation structure, composition, and spatial distribution. Remote Sens. Environ. 2019, 234, 111467.

41. Sanou, L.; Brama, O.; Jonas, K.; Mipro, H.; Adjima, T. Composition, diversity, and structure of woody vegetation along a disturbance gradient in the forest corridor of the Boucle du Mouhoun, Burkina Faso. Plant Ecol. Divers. 2021, 13, 305–317.

42. He, W.; Li, L.; Gao, X. Geocomplexity statistical indicator to enhance multiclass semantic segmentation of remotely sensed data with less sampling bias. Remote Sens. 2024, 16, 1987.

43. Jonckheere, A.R. A distribution-free K-sample test against ordered alternatives. Biometrika 1954, 41, 133–145.

44. Robinson, J.M.; Harrison, P.A.; Mavoa, S.; Breed, M.F. Existing and emerging uses of drones in restoration ecology. Methods Ecol. Evol. 2022, 13, 1899–1911.

45. Griffith, J.A.; McKellip, R.D.; Morisette, J.T. Comparison of multiple sensors for identification and mapping of tamarisk in Western Colorado: Preliminary findings. In Proceedings of the ASPRS 2005 Annual Conference on Geospatial Goes Global: From Your Neighborhood to the Whole Planet, Baltimore, MD, USA, 7–11 March 2005.

46. Alvarez-Vanhard, E.; Houet, T.; Mony, C.; Lecoq, L.; Corpetti, T. Can UAVs fill the gap between in situ surveys and satellites for habitat mapping? Remote Sens. Environ. 2020, 243, 111780.

47. Roth, K.L.; Roberts, D.A.; Dennison, P.E.; Peterson, S.H.; Alonzo, M. The impact of spatial resolution on the classification of plant species and functional types within imaging spectrometer data. Remote Sens. Environ. 2015, 171, 45–57.

48. Neyns, R.; Canters, F. Mapping of urban vegetation with high-resolution remote sensing: A Review. Remote Sens. 2022, 14, 1031.

49. Hu, Z.W.; Chu, Y.Q.; Zhang, Y.H.; Zheng, X.Y.; Wang, J.Z.; Xu, W.M.; Wang, J.; Wu, G.F. Scale matters: How spatial resolution impacts remote sensing based urban green space mapping? Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104178.

50. Taylor, S.; Kumar, L.; Reid, N. Accuracy comparison of Quickbird, Landsat TM and SPOT 5 imagery for Lantana camara mapping. J. Spat. Sci. 2011, 56, 241–252.

51. Duncan, J.M.A.; Boruff, B. Monitoring spatial patterns of urban vegetation: A comparison of contemporary high-resolution datasets. Landsc. Urban Plan. 2023, 233, 104671.

52. Liu, Y.X.; Zhang, Y.H.; Zhang, X.; Che, C.G.; Huang, C.; Li, H.; Peng, Y.; Li, Z.S.; Liu, Q.S. Fine-Scale Classification of Dominant Vegetation Communities in Coastal Wetlands Using Color-Enhanced Aerial Images. Remote Sens. 2025, 17, 2848.

53. Mardanisamani, S.; Eramian, M. Segmentation of vegetation and microplots in aerial agriculture images: A survey. Plant Phenome J. 2022, 5, e20042.

54. Curcio, A.C.; Barbero, L.; Peralta, G. UAV-Hyperspectral Imaging to Estimate Species Distribution in Salt Marshes: A Case Study in the Cadiz Bay (SW Spain). Remote Sens. 2023, 15, 1419.

| Vegetation Composition | Plot Name (Area/m²) | Spatial Resolution (cm) | Training Data (Polygon) | Validation Data (Polygon) | Segmentation (Scale Level) | Segmentation (Merge Level) |
|---|---|---|---|---|---|---|
| S. salsa and T. chinensis | PlotA (1614.14) | 10 | 177 | 27 | 25 | 85 |
| | | 5 | 564 | 27 | 25 | 85 |
| | | 2 | 1433 | 27 | 25 | 85 |
| S. salsa, S. salsa + L. bicolor, and T. chinensis | PlotB (2794.68) | 10 | 288 | 141 | 25 | 85 |
| | | 5 | 312 | 141 | 25 | 95 |
| | | 2 | 126 | 141 | 25 | 95 |
| S. salsa, P. australis, and T. chinensis | PlotC (654.82) | 10 | 179 | 53 | 25 | 80 |
| | | 5 | 183 | 53 | 15 | 90 |
| | | 2 | 80 | 53 | 25 | 90 |
| S. salsa, S. salsa + L. bicolor, P. australis, and T. chinensis | PlotD (366.84) | 10 | 132 | 68 | 20 | 65 |
| | | 5 | 159 | 68 | 25 | 85 |
| | | 2 | 212 | 68 | 25 | 95 |
| | PlotE (1640.94) | 10 | 464 | 95 | 20 | 65 |
| | | 5 | 483 | 95 | 25 | 85 |
| | | 2 | 577 | 95 | 25 | 95 |
| | PlotF (1591.04) | 10 | 545 | 111 | 20 | 65 |
| | | 5 | 556 | 111 | 25 | 85 |
| | | 2 | 368 | 111 | 25 | 95 |
| | PlotG (4195.76) | 10 | 680 | 233 | 20 | 65 |
| | | 5 | 855 | 233 | 25 | 85 |
| | | 2 | 539 | 233 | 25 | 95 |

| Parameters | Formulation | Description |
|---|---|---|
| Vegetation cover | $C_i = \dfrac{A_i}{A}$ | Vegetation cover ($C_i$) of the $i$th community equals the total area of $i$th community patches ($A_i$), divided by the total area ($A$) in a given plot |
| Patch density | $PD_i = \dfrac{n_i}{A} \times 10{,}000 \times 100$ | Patch density ($PD_i$) of the $i$th community equals the total number of $i$th community patches ($n_i$), divided by the total area ($A$), and multiplied by 10,000 and 100 (to convert to 100 hectares) in a given plot [37] |
| Relative dominance | $RD_i = \dfrac{A_i}{A_v}$ | Relative dominance ($RD_i$) of the $i$th community equals the total area of $i$th community patches ($A_i$), divided by the total vegetation area ($A_v$) in a given plot [39] |
| Proportional abundance | $P_i = \dfrac{n_i}{N}$ | Proportional abundance ($P_i$) of the $i$th community equals the total number of $i$th community patches ($n_i$), divided by the total number of vegetation patches ($N$) in a given plot |
| Shannon–Wiener diversity index | $H' = -\sum_{i=1}^{S} P_i \ln P_i$ | Shannon–Wiener diversity index ($H'$), a measure of the total community diversity in a given plot |
| Evenness index | $E = \dfrac{H'}{H'_{\max}}$ | Evenness index ($E$), a measure of the evenness of all communities in a given plot; $H'_{\max} = \ln S$, and $S$ is the total number of community types in a given plot |
| Moran's I | / | Moran's I measures the spatial pattern and spatial structure in a given plot |
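The descriptions in the table above translate directly into a few lines of arithmetic. The sketch below is an illustration under stated assumptions rather than the authors' implementation: cover and dominance are returned as fractions, the Shannon–Wiener index is computed from the patch-count proportions with a natural logarithm, and the function name `structure_metrics` and the patch list are hypothetical.

```python
import math
from collections import defaultdict

def structure_metrics(patches, plot_area_m2):
    """Per-community and plot-level structure metrics for one plot.

    patches: list of (community_name, patch_area_m2) tuples (hypothetical input).
    """
    area = defaultdict(float)
    count = defaultdict(int)
    for name, patch_area in patches:
        area[name] += patch_area
        count[name] += 1
    veg_area = sum(area.values())    # total vegetation area in the plot
    n_patches = sum(count.values())  # total number of vegetation patches
    per_community = {}
    for name in area:
        per_community[name] = {
            "cover": area[name] / plot_area_m2,
            # patches per m2, multiplied by 10,000 and 100 as described above
            "patch_density": count[name] / plot_area_m2 * 10_000 * 100,
            "relative_dominance": area[name] / veg_area,
            "proportional_abundance": count[name] / n_patches,
        }
    # Assumption: diversity uses the patch-count proportions and natural log.
    p = [m["proportional_abundance"] for m in per_community.values()]
    shannon = -sum(pi * math.log(pi) for pi in p)
    n_types = len(p)
    evenness = shannon / math.log(n_types) if n_types > 1 else 0.0
    return per_community, shannon, evenness

# Hypothetical plot: two communities with two patches each, 200 m2 in total.
patches = [("S. salsa", 10.0), ("S. salsa", 30.0),
           ("T. chinensis", 60.0), ("T. chinensis", 20.0)]
per_community, shannon, evenness = structure_metrics(patches, plot_area_m2=200.0)
print(per_community["S. salsa"], round(shannon, 4), round(evenness, 4))
```

With equal patch counts in both communities, the evenness index reaches its maximum of 1, which is a quick sanity check on the normalization by the logarithm of the number of community types.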

| Vegetation Community | Spatial Resolution (cm) | Max. PA (%) | Min. PA (%) | Mean PA (%) | PA Difference (%) | Max. UA (%) | Min. UA (%) | Mean UA (%) | UA Difference (%) |
|---|---|---|---|---|---|---|---|---|---|
| S. salsa | 10 | 99.96 | 72.54 | 90.03 | 27.42 | 88.89 | 46.70 | 72.96 | 42.19 |
| | 5 | 99.88 | 80.27 | 92.73 | 19.61 | 98.75 | 47.57 | 73.26 | 51.18 |
| | 2 | 100.0 | 57.24 | 92.03 | 42.76 | 96.64 | 26.93 | 67.05 | 69.71 |
| T. chinensis | 10 | 94.83 | 66.41 | 78.16 | 28.42 | 100.0 | 42.38 | 76.25 | 57.62 |
| | 5 | 95.87 | 75.16 | 86.71 | 20.71 | 100.0 | 49.27 | 76.44 | 50.73 |
| | 2 | 99.27 | 82.07 | 93.29 | 17.20 | 100.0 | 41.00 | 70.57 | 59.00 |
| P. australis | 10 | 97.68 | 76.37 | 86.39 | 21.31 | 99.73 | 97.67 | 98.26 | 2.06 |
| | 5 | 96.77 | 62.02 | 82.73 | 34.75 | 99.88 | 98.19 | 99.12 | 1.69 |
| | 2 | 90.53 | 64.81 | 78.62 | 25.72 | 99.97 | 99.29 | 99.72 | 0.68 |
| S. salsa + L. bicolor | 10 | 95.74 | 42.58 | 80.21 | 53.16 | 98.07 | 73.05 | 88.74 | 25.02 |
| | 5 | 98.99 | 79.39 | 91.99 | 19.60 | 98.80 | 69.65 | 84.24 | 29.15 |
| | 2 | 98.29 | 89.88 | 94.88 | 8.41 | 98.90 | 67.19 | 84.75 | 31.71 |
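Each row of the summary table above condenses one community's accuracies across the plots in which it occurs. As a small worked check, the sketch below recomputes the S. salsa 10 cm PA statistics from the seven PA values listed for PlotA to PlotG in the first Appendix A table; the helper name `summarize` is a hypothetical choice for illustration.

```python
# PA (%) of S. salsa at 10 cm for PlotA..PlotG, copied from the first Appendix A table.
pa_s_salsa_10cm = [99.96, 98.18, 72.54, 96.55, 95.54, 90.55, 76.86]

def summarize(values):
    """Max, min, mean, and max-minus-min difference, rounded to two decimals."""
    highest, lowest = max(values), min(values)
    return {
        "max": highest,
        "min": lowest,
        "mean": round(sum(values) / len(values), 2),
        "difference": round(highest - lowest, 2),
    }

summary = summarize(pa_s_salsa_10cm)
print(summary)
```

The result reproduces the tabulated 99.96, 72.54, 90.03, and 27.42 for S. salsa at 10 cm, and the same helper can be applied to any other community, resolution, or UA column.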

| Spatial Resolution | Flight Altitude | Flight Time | Number of Battery Packs | Number of Images Taken | Data Volume |
|---|---|---|---|---|---|
| 10 cm | 188.2 m | 1 h 20 min 19 s | 6 | 480 | 9.33 GB |
| 5 cm | 94.9 m | 3 h 13 min 18 s | 13 | 1953 | 37.95 GB |
| 2 cm | 188.2 m | 12 h 13 min 46 s | 46 | 12,404 | 241.05 GB |
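The acquisition cost of finer resolution can be read off the table above by expressing each flight relative to the 10 cm flight. The sketch below does only that arithmetic on the tabulated flight time, image count, and data volume; the dictionary layout and variable names are hypothetical. For orientation, if the ground footprint of a single image scales with the square of the pixel size, image counts would be expected to grow by roughly 4 and 25 times at 5 cm and 2 cm.

```python
from datetime import timedelta

# Values copied from the flight table above, keyed by spatial resolution in cm.
flights = {
    10: {"time": timedelta(hours=1, minutes=20, seconds=19), "images": 480, "data_gb": 9.33},
    5: {"time": timedelta(hours=3, minutes=13, seconds=18), "images": 1953, "data_gb": 37.95},
    2: {"time": timedelta(hours=12, minutes=13, seconds=46), "images": 12404, "data_gb": 241.05},
}

base = flights[10]
ratios = {
    res: {
        "time": f["time"] / base["time"],  # timedelta / timedelta gives a float
        "images": f["images"] / base["images"],
        "data": f["data_gb"] / base["data_gb"],
    }
    for res, f in flights.items()
}

for res in (10, 5, 2):
    r = ratios[res]
    print(f"{res:>2} cm: time x{r['time']:.2f}, images x{r['images']:.2f}, data x{r['data']:.2f}")
```

Image count and data volume track the inverse-square expectation closely, while flight time grows more slowly, by a factor of about 2.4 at 5 cm and about 9.1 at 2 cm.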

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Q.; Huang, C.; Zhang, X.; Li, H.; Peng, Y.; Wang, S.; Gao, L.; Li, Z. Evaluating the Impact of Different Spatial Resolutions of UAV Imagery on Mapping Tidal Marsh Vegetation Using Multiple Plots of Different Complexity. Remote Sens. 2025, 17, 3598. https://doi.org/10.3390/rs17213598
