Author Contributions

Conceptualization, J.S. and H.N.; methodology, H.N. and D.L.; software, H.N.; validation, H.N. and X.Z. (Xin Zhou); formal analysis, H.N. and X.Z. (Xin Zhang); investigation, H.N. and J.C.; data curation, H.N. and W.L.; writing—original draft preparation, H.N.; writing—review and editing, H.N. and S.L.; visualization, H.N. and X.Z. (Xin Zhou); supervision, J.S. All authors have read and agreed to the published version of the manuscript.

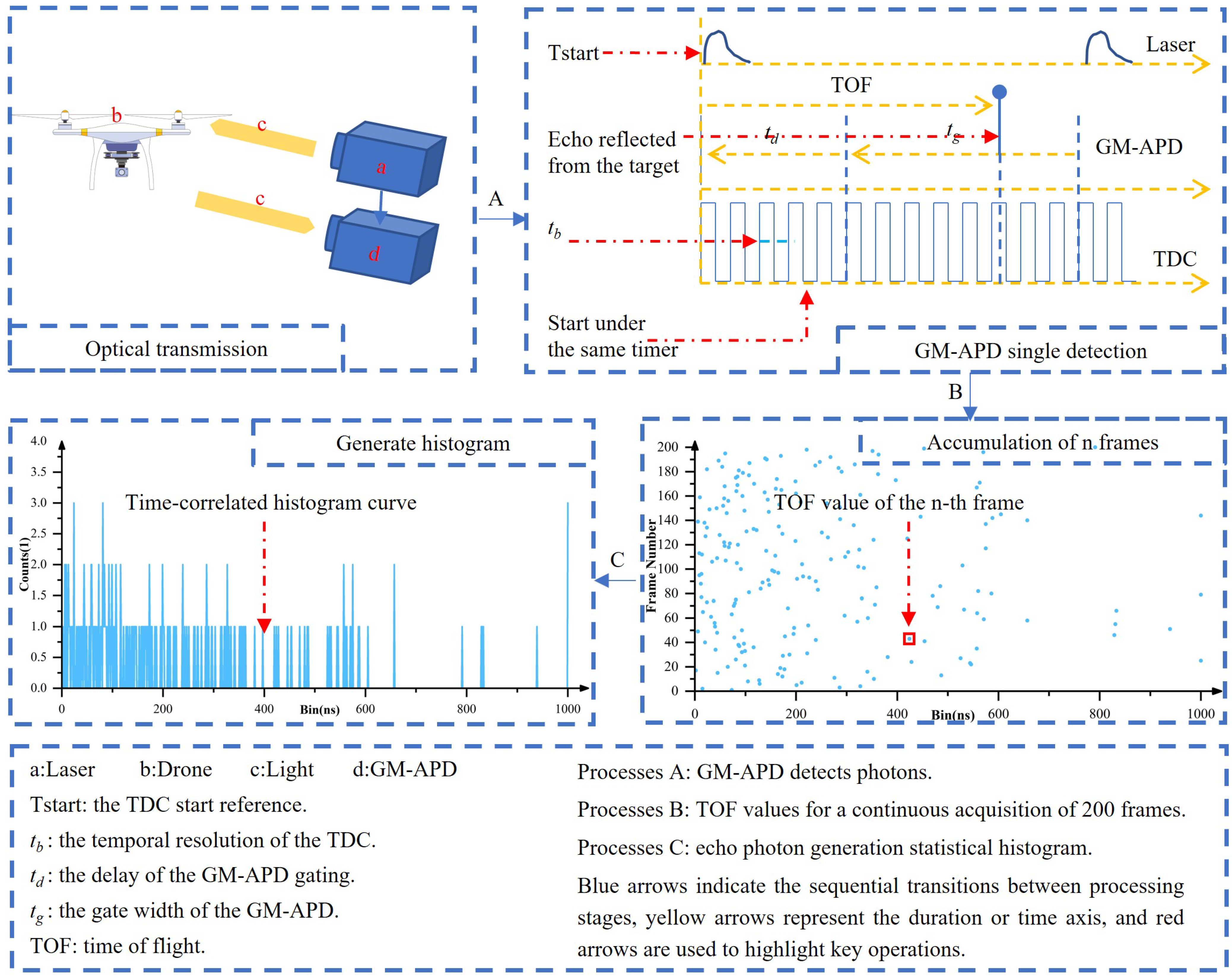

Figure 1.

GM-APD LiDAR echo signal acquisition.

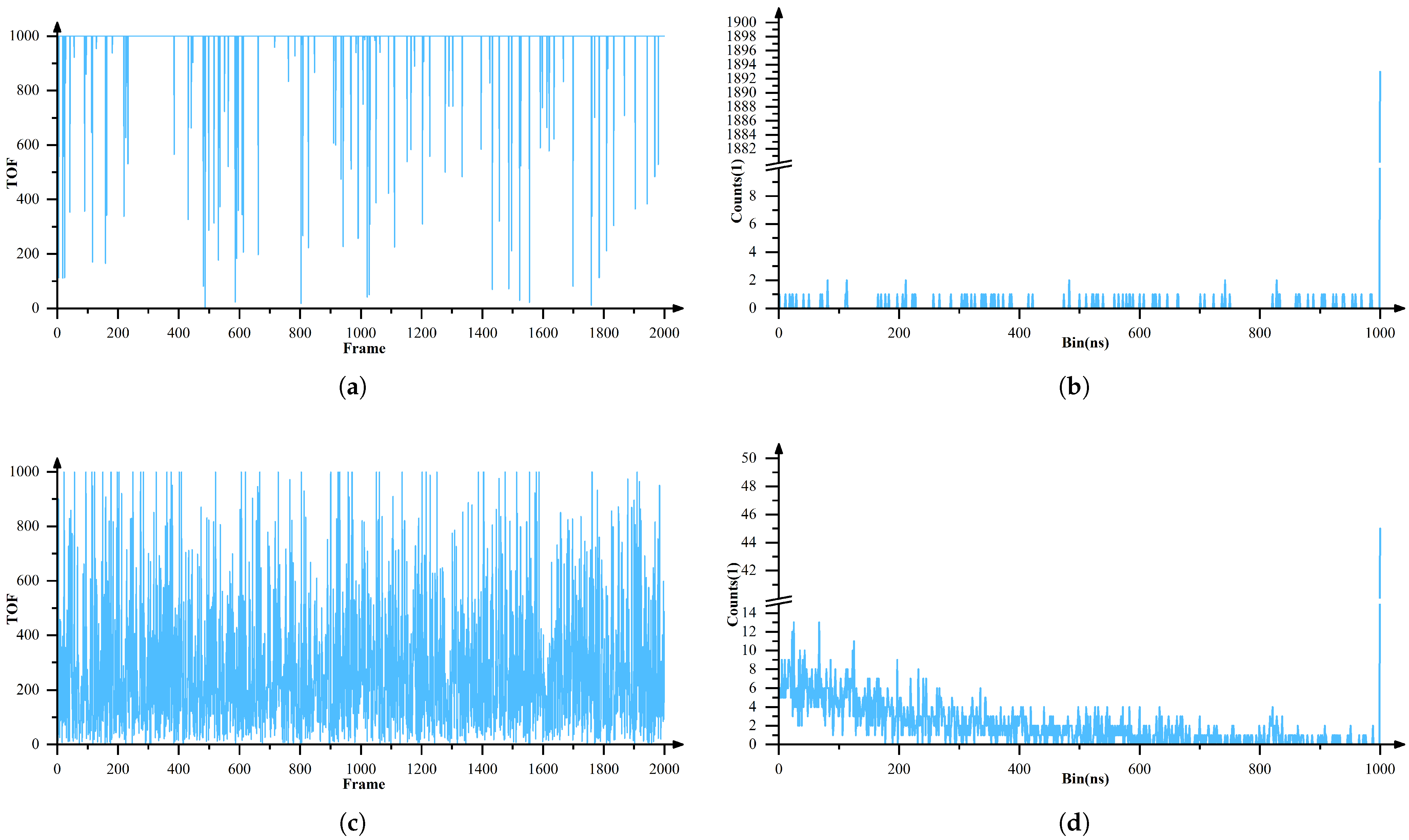

Figure 2.

Illustration of target and background noise echo data. (a) Raw data of 2000 frames of background noise echoes. (b) Statistical histogram of background noise echoes. (c) Raw data of 2000 frames of target echoes. (d) Statistical histogram of target echoes.

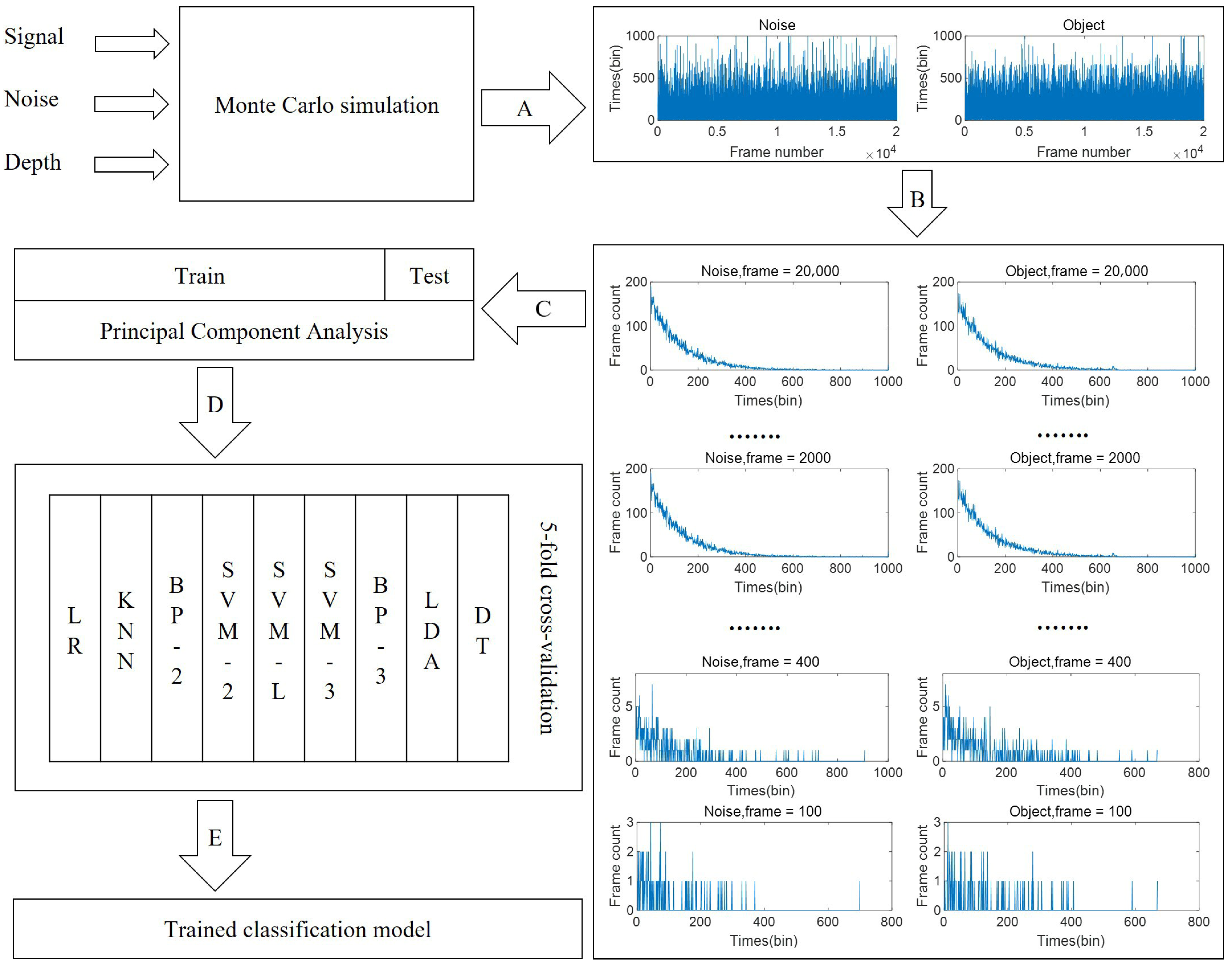

Figure 3.

Training framework diagram for GM-APD signal.

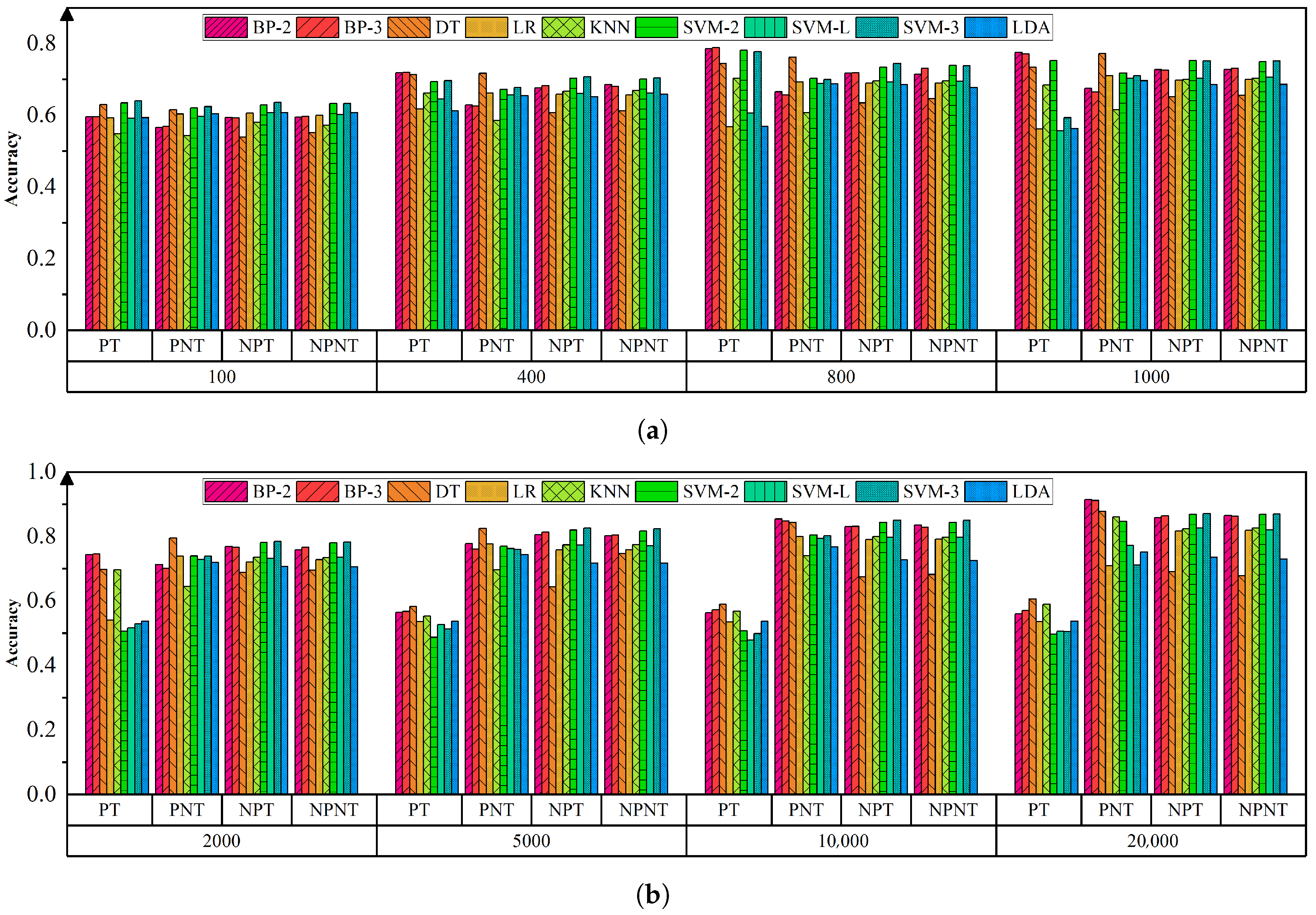

Figure 4.

Training accuracy for models with different feature processing methods at different SFNs. (a) SFN is 100, 400, 800, 1000. (b) SFN is 2000, 5000, 10,000, 20,000.

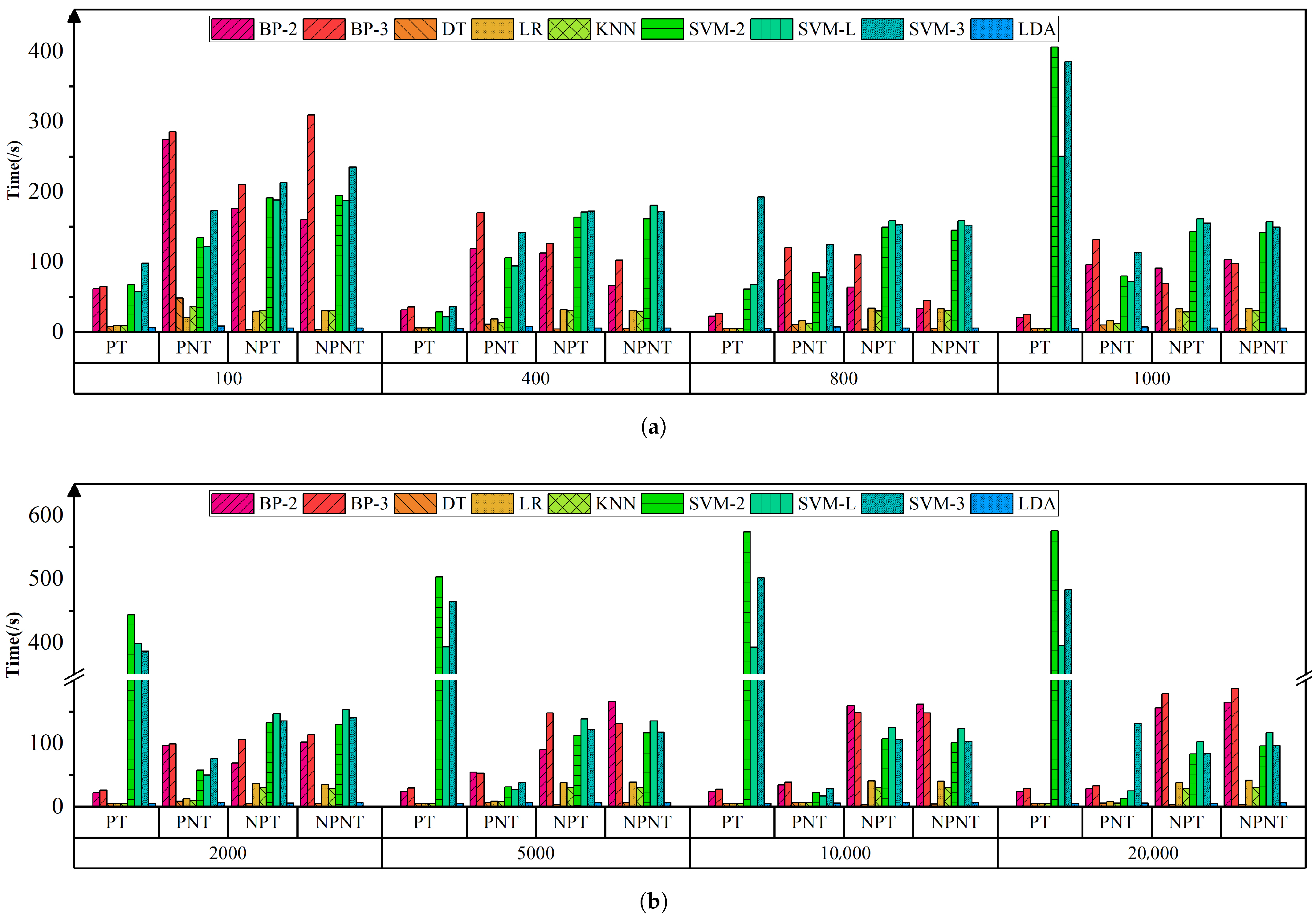

Figure 5.

Training time for ML models with different feature processing methods at different SFNs. (a) SFN is 100, 400, 800, 1000. (b) SFN is 2000, 5000, 10,000, 20,000.

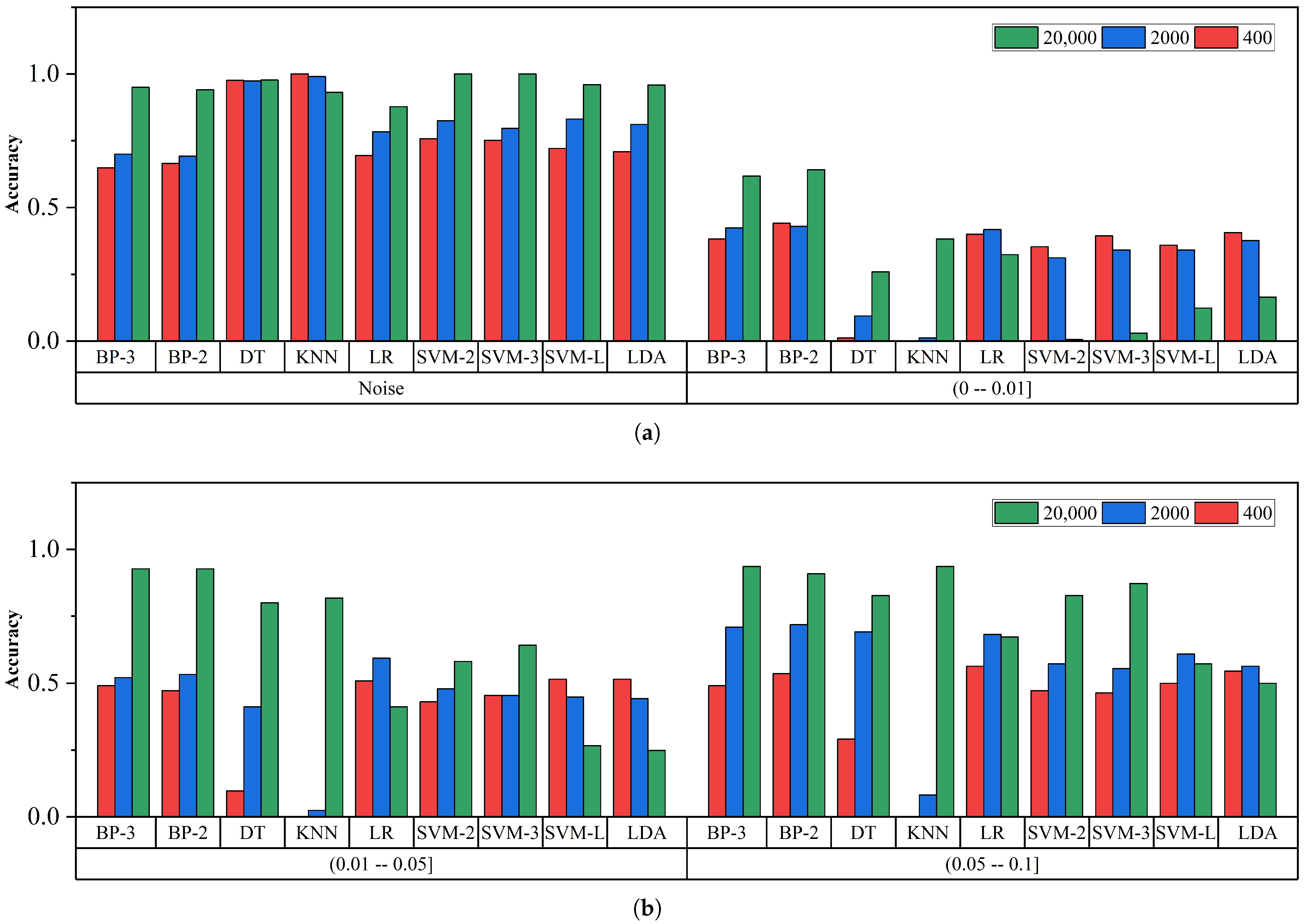

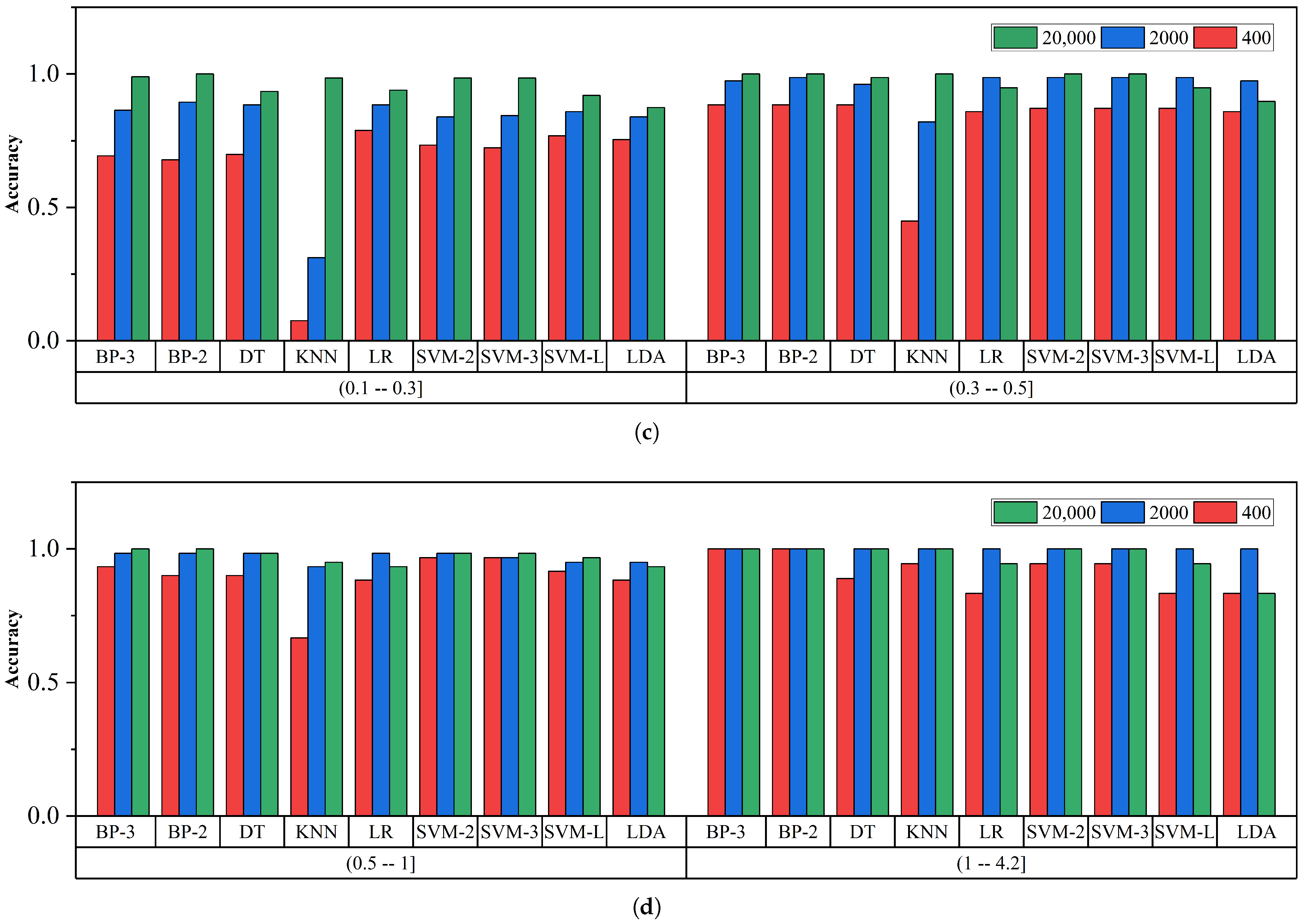

Figure 6.

Accuracy for different models at different ESNRs. (a) ESNR distribution in noise, (0.00–0.01]. (b) ESNR distribution in (0.01–0.05], (0.05–0.10]. (c) ESNR distribution in (0.10–0.30], (0.30–0.50]. (d) ESNR distribution in (0.50–1.00], (1.00–4.20].

Figure 7.

Line chart illustrating the classification accuracy of different models under varying ESNR conditions. (a) Changes in classification accuracy of different models under SFN = 400. (b) Changes in classification accuracy of different models under SFN = 2000. (c) Changes in classification accuracy of different models under SFN = 20,000.

Figure 8.

Analysis of model stability under different SFNs.

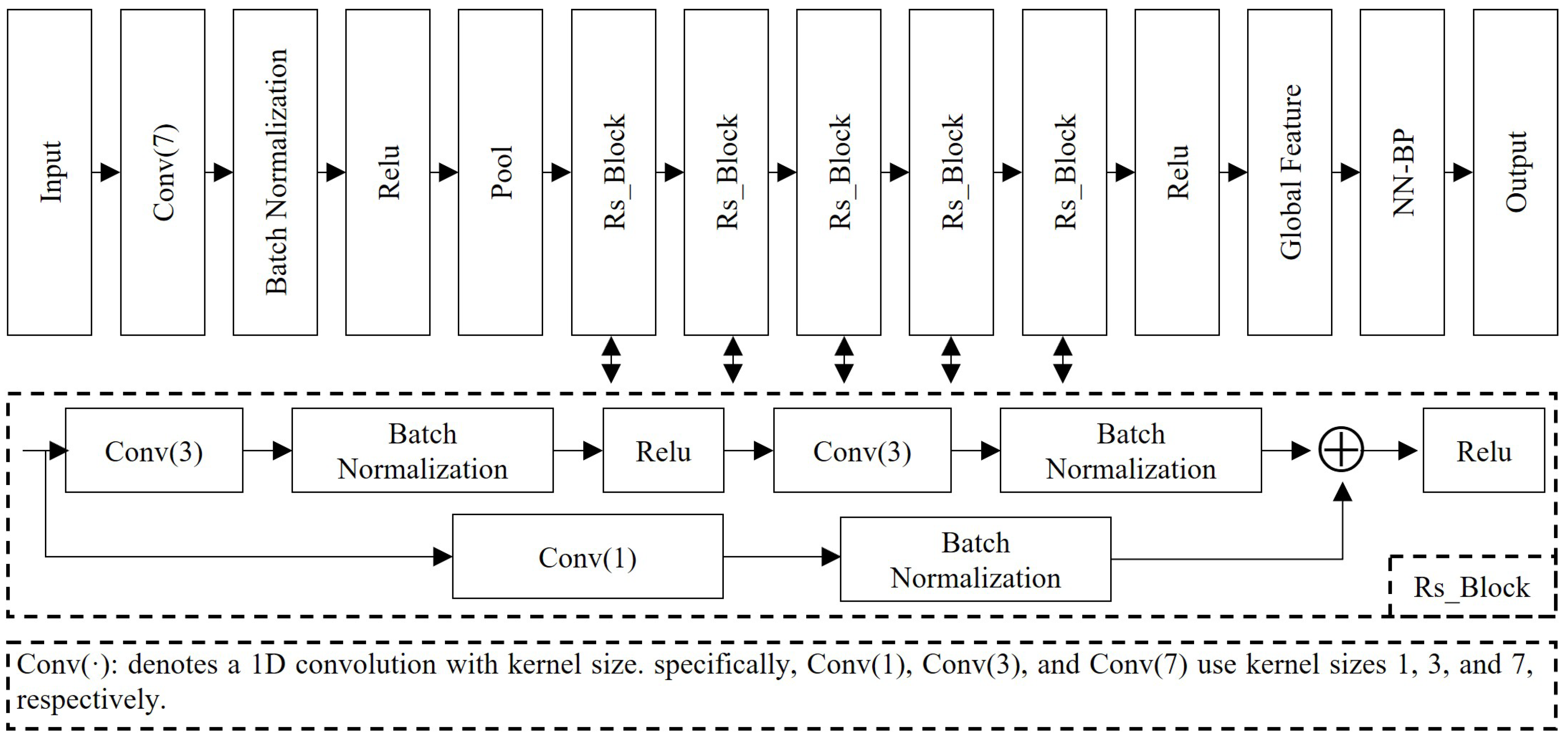

Figure 9.

Schematic of the NN-BP-based ResNet architecture.

Table 1.

Dimensionality of features before and after PCA under different SFNs.

| Frame Count | 100 | 400 | 800 | 1000 | 2000 | 5000 | 10,000 | 20,000 |
|---|---|---|---|---|---|---|---|---|
| | 370 | 122 | 17 | 2 | 1 | 1 | 1 | 1 |
| | 657 | 596 | 530 | 503 | 400 | 243 | 126 | 63 |

Table 2.

Simulation parameters for target and noise signal generation.

| Parameter Category | Parameter Name | Distribution/Range | Description |
|---|---|---|---|
| Target pixels | Noise photons | Uniform [0.01, 1.01] | Simulated background-noise photon count |
| Target pixels | Laser photons | Uniform [0.01, 10.01] | Simulated laser-echo photon count |
| Target pixels | Target location | Uniform [1, 900] | Time/space index of the target |
| Target pixels | Temporal profile of laser pulse | See Equation (1) | Temporal distribution of the laser pulse |
| Target pixels | Laser pulse width | Fixed at 20 bins | Constant pulse width |
| Noise pixels | Noise photons | Uniform [0.01, 1.01] | Simulated pure-noise pixel photon count |
| GM-APD parameters | Dark count rate | Fixed at 0.01 | Dark count rate within the time-gating window |
| GM-APD parameters | Gate width | 1 μs | Gate width in the synchronous mode of GM-APD |
| GM-APD parameters | | 1 ns | Time resolution within the GM-APD gate |
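
As a rough illustration of how the ranges in Table 2 could be drawn, the sketch below samples one parameter set for a target pixel and one for a noise pixel. The function names, the dictionary keys, and the treatment of the target location as an integer bin index are assumptions made for this example. The pulse shape of Equation (1) is not reproduced here, so no waveform is generated.

```python
import random

# Values taken from Table 2.
N_BINS = 1000             # 1 us gate divided into 1 ns bins
PULSE_WIDTH_BINS = 20     # fixed laser pulse width
DARK_COUNT_RATE = 0.01    # fixed dark count rate within the gate


def sample_target_pixel(rng):
    """Draw one hypothetical parameter set for a target pixel."""
    return {
        "noise_photons": rng.uniform(0.01, 1.01),
        "laser_photons": rng.uniform(0.01, 10.01),
        # Assumption: the target location is an integer bin index.
        "target_location": rng.randint(1, 900),
        "pulse_width_bins": PULSE_WIDTH_BINS,
        "dark_count_rate": DARK_COUNT_RATE,
    }


def sample_noise_pixel(rng):
    """Draw one hypothetical parameter set for a pure-noise pixel."""
    return {
        "noise_photons": rng.uniform(0.01, 1.01),
        "dark_count_rate": DARK_COUNT_RATE,
    }


rng = random.Random(0)  # fixed seed so the draw is repeatable
target = sample_target_pixel(rng)
noise = sample_noise_pixel(rng)
```

With a pulse width of 20 bins and a latest start index of 900, the sampled pulse stays well inside the 1000-bin gate.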

Table 3.

Illustration of the confusion matrix.

| | Positive (Target) | Negative (Background) |
|---|---|---|
| Predicted positive | | |
| Predicted negative | | |
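
The cells of Table 3 are empty in this copy. By the usual convention they hold TP (predicted positive, actually target), FP (predicted positive, actually background), FN, and TN. Under that assumption, the sketch below derives the metric names used in the results tables from the four counts. The function name and the example counts are illustrative and are not taken from the paper. FNR is computed with the textbook definition FN/(FN + TP); the paper's own definition is not visible in this section and may differ.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    acc = (tp + tn) / total
    pre = tp / (tp + fp) if (tp + fp) else 0.0
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0  # textbook definition
    f1 = 2 * pre * rec / (pre + rec) if (pre + rec) else 0.0
    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (acc - p_e) / (1 - p_e) if p_e != 1 else 0.0
    return {"acc": acc, "pre": pre, "rec": rec, "fpr": fpr,
            "fnr": fnr, "f1": f1, "kappa": kappa}


# Illustrative counts: a very conservative classifier on a balanced set.
m = binary_metrics(tp=48, fp=0, fn=752, tn=800)
```

For these counts the classifier never raises a false alarm (precision 1, FPR 0) yet recalls only 6% of targets, which is why accuracy and kappa stay close to chance.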

Table 4.

Sparsity of data at different SFNs.

| Frame Count | 100 | 400 | 800 | 1000 | 2000 | 5000 | 10,000 | 20,000 |
|---|---|---|---|---|---|---|---|---|
| ADNZE | 0.0791 | 0.2270 | 0.3415 | 0.3823 | 0.5137 | 0.6752 | 0.7711 | 0.8409 |

Table 5.

Models with the highest training accuracy and testing accuracy at different SFNs.

| Frame Count | 100 | 400 | 800 | 1000 | 2000 | 5000 | 10,000 | 20,000 |
|---|---|---|---|---|---|---|---|---|
| Train Max | SVM-3 | DT-2 | DT-2 | DT-2 | DT-2 | NN-BP-2 | NN-BP-2 | NN-BP-2 |
| Acc | 0.6241 | 0.7171 | 0.7621 | 0.7715 | 0.7947 | 0.8247 | 0.8540 | 0.9137 |
| Train Min | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | LR-4 |
| Acc | 0.5433 | 0.5852 | 0.6070 | 0.6156 | 0.6453 | 0.6962 | 0.7401 | 0.7091 |
| Test Max | SVM-3 | SVM-3 | SVM-3 | DT-2 | DT-2 | DT-2 | NN-BP-2 | NN-BP-3 |
| Acc | 0.6219 | 0.6756 | 0.7194 | 0.7662 | 0.7919 | 0.8056 | 0.8681 | 0.9213 |
| Test Min | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | KNN-4 | LDA-4 |
| Acc | 0.5300 | 0.5669 | 0.5944 | 0.5981 | 0.6294 | 0.6319 | 0.7281 | 0.7538 |

Table 6.

Corresponding metrics for the test data at lower SFNs (100–1000).

| Frame Count | Model | Acc | Pre | Rec | FPR | FNR | F1 | Kappa |
|---|---|---|---|---|---|---|---|---|
| 100 | NN-BP-3 | 0.5575 | 0.5523 | 0.6075 | 0.4925 | 0.4361 | 0.5786 | 0.1150 |
| 100 | NN-BP-2 | 0.5618 | 0.5613 | 0.5663 | 0.4425 | 0.4376 | 0.5638 | 0.1238 |
| 100 | DT | 0.5998 | 0.8272 | 0.2513 | 0.0525 | 0.4414 | 0.3854 | 0.1988 |
| 100 | KNN | 0.53 | 1 | 0.06 | 0 | 0.4845 | 0.1132 | 0.06 |
| 100 | LR | 0.6094 | 0.6122 | 0.6013 | 0.3825 | 0.3924 | 0.6062 | 0.2188 |
| 100 | SVM-2 | 0.6131 | 0.6238 | 0.57 | 0.3438 | 0.3959 | 0.5957 | 0.2263 |
| 100 | SVM-3 | 0.6219 | 0.633 | 0.58 | 0.3363 | 0.3875 | 0.6053 | 0.2438 |
| 100 | SVM-L | 0.6025 | 0.6085 | 0.575 | 0.37 | 0.4028 | 0.5913 | 0.205 |
| 100 | LDA | 0.6088 | 0.6107 | 0.6 | 0.3825 | 0.3931 | 0.6053 | 0.2175 |
| 400 | NN-BP-3 | 0.625 | 0.6312 | 0.6013 | 0.3513 | 0.3807 | 0.6159 | 0.25 |
| 400 | NN-BP-2 | 0.6375 | 0.6455 | 0.61 | 0.335 | 0.3697 | 0.6272 | 0.275 |
| 400 | DT | 0.6931 | 0.9452 | 0.41 | 0.0238 | 0.3767 | 0.5719 | 0.3863 |
| 400 | KNN | 0.5669 | 1 | 0.1338 | 0 | 0.4642 | 0.2359 | 0.1338 |
| 400 | LR | 0.6638 | 0.6747 | 0.6325 | 0.305 | 0.3459 | 0.6529 | 0.3275 |
| 400 | SVM-2 | 0.6738 | 0.7087 | 0.59 | 0.2425 | 0.3512 | 0.6439 | 0.3475 |
| 400 | SVM-3 | 0.6756 | 0.7069 | 0.6 | 0.2488 | 0.3474 | 0.6491 | 0.3513 |
| 400 | SVM-L | 0.6681 | 0.6881 | 0.615 | 0.2788 | 0.348 | 0.6495 | 0.3363 |
| 400 | LDA | 0.6662 | 0.6817 | 0.6238 | 0.2913 | 0.3468 | 0.6514 | 0.3325 |
| 800 | NN-BP-3 | 0.6525 | 0.6506 | 0.6588 | 0.3538 | 0.3456 | 0.6547 | 0.305 |
| 800 | NN-BP-2 | 0.6769 | 0.6724 | 0.69 | 0.3363 | 0.3184 | 0.6811 | 0.3538 |
| 800 | DT | 0.7563 | 0.9702 | 0.5288 | 0.0163 | 0.3239 | 0.6845 | 0.5125 |
| 800 | KNN | 0.5944 | 1 | 0.1888 | 0 | 0.4479 | 0.3176 | 0.1888 |
| 800 | LR | 0.7181 | 0.733 | 0.6863 | 0.25 | 0.2949 | 0.7088 | 0.4363 |
| 800 | SVM-2 | 0.7187 | 0.7589 | 0.6413 | 0.2038 | 0.3106 | 0.6951 | 0.4375 |
| 800 | SVM-3 | 0.7194 | 0.7489 | 0.66 | 0.2213 | 0.3039 | 0.7017 | 0.4388 |
| 800 | SVM-L | 0.7119 | 0.7432 | 0.6475 | 0.2238 | 0.3123 | 0.6921 | 0.4238 |
| 800 | LDA | 0.7025 | 0.7225 | 0.6575 | 0.2525 | 0.3142 | 0.6885 | 0.405 |
| 1000 | NN-BP-3 | 0.675 | 0.6724 | 0.6825 | 0.3325 | 0.3223 | 0.6774 | 0.35 |
| 1000 | NN-BP-2 | 0.6613 | 0.6633 | 0.655 | 0.3325 | 0.3407 | 0.6591 | 0.3225 |
| 1000 | DT | 0.7663 | 0.9494 | 0.5625 | 0.03 | 0.3108 | 0.7064 | 0.5325 |
| 1000 | KNN | 0.5981 | 0.9937 | 0.1975 | 0.0013 | 0.4455 | 0.3295 | 0.1963 |
| 1000 | LR | 0.7138 | 0.728 | 0.6825 | 0.255 | 0.2988 | 0.7045 | 0.4275 |
| 1000 | SVM-2 | 0.715 | 0.7567 | 0.6338 | 0.2038 | 0.3151 | 0.6898 | 0.43 |
| 1000 | SVM-3 | 0.7056 | 0.7031 | 0.6525 | 0.2413 | 0.3141 | 0.6891 | 0.4113 |
| 1000 | SVM-L | 0.715 | 0.7457 | 0.6525 | 0.2225 | 0.3089 | 0.696 | 0.43 |
| 1000 | LDA | 0.7031 | 0.7254 | 0.6538 | 0.2475 | 0.3151 | 0.6877 | 0.4063 |

Table 7.

Corresponding metrics for the test data at higher SFNs (2000–20,000).

| Frame Count | Model | Acc | Pre | Rec | FPR | FNR | F1 | Kappa |
|---|---|---|---|---|---|---|---|---|
| 2000 | NN-BP-3 | 0.7006 | 0.7004 | 0.7013 | 0.3 | 0.2991 | 0.7008 | 0.4013 |
| 2000 | NN-BP-2 | 0.7038 | 0.6993 | 0.7150 | 0.3075 | 0.2916 | 0.707 | 0.4075 |
| 2000 | DT | 0.7919 | 0.9587 | 0.61 | 0.0263 | 0.286 | 0.7456 | 0.5838 |
| 2000 | KNN | 0.6294 | 0.9641 | 0.2688 | 0.01 | 0.4248 | 0.4203 | 0.2588 |
| 2000 | LR | 0.7506 | 0.7684 | 0.7175 | 0.2163 | 0.2649 | 0.7421 | 0.5013 |
| 2000 | SVM-2 | 0.735 | 0.7866 | 0.645 | 0.175 | 0.3008 | 0.7088 | 0.47 |
| 2000 | SVM-3 | 0.72 | 0.7596 | 0.6438 | 0.2038 | 0.3091 | 0.6969 | 0.44 |
| 2000 | SVM-L | 0.7419 | 0.7945 | 0.6525 | 0.1688 | 0.2948 | 0.7165 | 0.4838 |
| 2000 | LDA | 0.7288 | 0.774 | 0.6463 | 0.1888 | 0.3036 | 0.7044 | 0.4575 |
| 5000 | NN-BP-3 | 0.7844 | 0.792 | 0.7713 | 0.2025 | 0.2229 | 0.7815 | 0.5688 |
| 5000 | NN-BP-2 | 0.7931 | 0.795 | 0.79 | 0.2038 | 0.2087 | 0.7925 | 0.5863 |
| 5000 | DT | 0.8056 | 0.9358 | 0.6563 | 0.045 | 0.2647 | 0.7715 | 0.6113 |
| 5000 | KNN | 0.6819 | 0.9619 | 0.3788 | 0.015 | 0.3868 | 0.5435 | 0.3638 |
| 5000 | LR | 0.7925 | 0.8154 | 0.7563 | 0.1713 | 0.2273 | 0.7847 | 0.585 |
| 5000 | SVM-2 | 0.7619 | 0.8006 | 0.6975 | 0.1738 | 0.268 | 0.7455 | 0.5238 |
| 5000 | SVM-3 | 0.7438 | 0.7671 | 0.7 | 0.2125 | 0.2759 | 0.732 | 0.4875 |
| 5000 | SVM-L | 0.7838 | 0.8834 | 0.6538 | 0.0863 | 0.2748 | 0.7514 | 0.5675 |
| 5000 | LDA | 0.7556 | 0.819 | 0.6563 | 0.145 | 0.2868 | 0.7287 | 0.5113 |
| 10,000 | NN-BP-3 | 0.8494 | 0.8625 | 0.8313 | 0.1325 | 0.1628 | 0.8466 | 0.6988 |
| 10,000 | NN-BP-2 | 0.8681 | 0.887 | 0.8438 | 0.1075 | 0.149 | 0.8648 | 0.7363 |
| 10,000 | DT | 0.8444 | 0.9599 | 0.7188 | 0.03 | 0.2248 | 0.822 | 0.6888 |
| 10,000 | KNN | 0.7281 | 0.8585 | 0.5463 | 0.09 | 0.3327 | 0.6677 | 0.4563 |
| 10,000 | LR | 0.8031 | 0.8392 | 0.75 | 0.1438 | 0.226 | 0.7921 | 0.6063 |
| 10,000 | SVM-2 | 0.8125 | 0.9098 | 0.6938 | 0.0688 | 0.2475 | 0.7872 | 0.625 |
| 10,000 | SVM-3 | 0.8125 | 0.8776 | 0.7263 | 0.1013 | 0.2335 | 0.7948 | 0.625 |
| 10,000 | SVM-L | 0.8006 | 0.9168 | 0.6613 | 0.06 | 0.2649 | 0.7683 | 0.6013 |
| 10,000 | LDA | 0.7781 | 0.887 | 0.6375 | 0.0813 | 0.2829 | 0.7418 | 0.5563 |
| 20,000 | NN-BP-3 | 0.9213 | 0.947 | 0.8925 | 0.050 | 0.1017 | 0.9189 | 0.8425 |
| 20,000 | NN-BP-2 | 0.9188 | 0.9385 | 0.8963 | 0.0588 | 0.0993 | 0.9169 | 0.8375 |
| 20,000 | DT | 0.8681 | 0.9712 | 0.7588 | 0.0225 | 0.1979 | 0.8519 | 0.7363 |
| 20,000 | KNN | 0.8731 | 0.9222 | 0.815 | 0.0688 | 0.1657 | 0.8653 | 0.7463 |
| 20,000 | LR | 0.7706 | 0.8442 | 0.6638 | 0.1225 | 0.277 | 0.7432 | 0.5413 |
| 20,000 | SVM-2 | 0.8369 | 1.0000 | 0.6738 | 0 | 0.246 | 0.8051 | 0.6738 |
| 20,000 | SVM-3 | 0.8488 | 1.0000 | 0.6975 | 0 | 0.2322 | 0.8218 | 0.6975 |
| 20,000 | SVM-L | 0.7675 | 0.935 | 0.575 | 0.04 | 0.3069 | 0.7121 | 0.535 |
| 20,000 | LDA | 0.7538 | 0.9301 | 0.5488 | 0.0413 | 0.32 | 0.6903 | 0.5075 |

Table 8.

Mean ± 95% CI per model (F1/Kappa).

| Model | Mean (F1) | Mean (Kappa) | SD (F1) | SD (Kappa) | Lower 95% CI (F1) | Lower 95% CI (Kappa) | Upper 95% CI (F1) | Upper 95% CI (Kappa) |
|---|---|---|---|---|---|---|---|---|
| NN-BP-3 | 0.7218 | 0.4414 | 0.1175 | 0.2435 | 0.6236 | 0.2378 | 0.8200 | 0.6450 |
| NN-BP-2 | 0.7266 | 0.4553 | 0.1213 | 0.2434 | 0.6251 | 0.2518 | 0.8280 | 0.6589 |
| DT | 0.6924 | 0.5313 | 0.1514 | 0.1723 | 0.5659 | 0.3872 | 0.8189 | 0.6754 |
| KNN | 0.4366 | 0.3005 | 0.2448 | 0.2196 | 0.2320 | 0.1169 | 0.6413 | 0.4841 |
| LR | 0.7168 | 0.4555 | 0.0634 | 0.1323 | 0.6638 | 0.3449 | 0.7698 | 0.5661 |
| SVM-2 | 0.7089 | 0.4667 | 0.0700 | 0.1441 | 0.6503 | 0.3462 | 0.7674 | 0.5872 |
| SVM-3 | 0.7113 | 0.4619 | 0.0712 | 0.1445 | 0.6518 | 0.3411 | 0.7709 | 0.5827 |
| SVM-L | 0.6971 | 0.4478 | 0.0562 | 0.1304 | 0.6502 | 0.3388 | 0.7441 | 0.5569 |
| LDA | 0.6873 | 0.4242 | 0.0431 | 0.1101 | 0.6512 | 0.3322 | 0.7233 | 0.5163 |
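
A short sketch of how a row of Table 8 might be obtained is given below. It assumes the interval is a two-sided Student-t interval over the eight SFN levels (7 degrees of freedom), which is an inference rather than something stated in this section. The input values are the NN-BP-3 F1 scores listed in Tables 6 and 7, and the helper name is hypothetical.

```python
import math
import statistics

# Two-sided 95% Student-t critical value for 7 degrees of freedom.
T_CRIT_DF7 = 2.3646


def mean_ci(values, t_crit):
    """Return (mean, sample SD, lower bound, upper bound)."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)          # sample SD (n - 1 denominator)
    half_width = t_crit * sd / math.sqrt(n)
    return mean, sd, mean - half_width, mean + half_width


# NN-BP-3 F1 at SFN = 100, 400, 800, 1000, 2000, 5000, 10,000, 20,000.
f1_nn_bp_3 = [0.5786, 0.6159, 0.6547, 0.6774, 0.7008, 0.7815, 0.8466, 0.9189]
mean, sd, lower, upper = mean_ci(f1_nn_bp_3, T_CRIT_DF7)
```

Under these assumptions the result agrees with the NN-BP-3 F1 entries of Table 8 to about four decimals, which suggests, but does not prove, that the authors used this construction.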

Table 9.

Friedman test summary (F1/Kappa).

| | Chi-Square (F1) | Chi-Square (Kappa) | p-Value (F1) | p-Value (Kappa) | Kendall’s W (F1) | Kendall’s W (Kappa) | N (SFN Levels) | k (Models) |
|---|---|---|---|---|---|---|---|---|
| Value | 18.83 | 24.14 | 0.0158 | 0.00217 | 0.29 | 0.38 | 8 | 9 |
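
The relation between the Friedman statistic and Kendall’s W in Table 9 can be illustrated with the minimal sketch below. It uses the textbook Friedman formula without tie correction, so it is not necessarily the exact procedure the authors ran. The function names are chosen for this example.

```python
def friedman_chi2(rank_rows):
    """Friedman chi-square from per-block ranks (no tie correction).

    rank_rows[i][j] is the rank of treatment j within block i.
    """
    n = len(rank_rows)        # number of blocks (here: SFN levels)
    k = len(rank_rows[0])     # number of treatments (here: models)
    rank_sums = [sum(row[j] for row in rank_rows) for j in range(k)]
    sum_sq = sum(r * r for r in rank_sums)
    return 12.0 / (n * k * (k + 1)) * sum_sq - 3.0 * n * (k + 1)


def kendalls_w(chi2, n, k):
    """Kendall's coefficient of concordance from the Friedman statistic."""
    return chi2 / (n * (k - 1))


# Table 9 values: N = 8 SFN levels, k = 9 models.
w_f1 = kendalls_w(18.83, n=8, k=9)
w_kappa = kendalls_w(24.14, n=8, k=9)
```

With N = 8 and k = 9 the divisor is 64, so the reported chi-square values map to W of roughly 0.29 and 0.38, in line with the table.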

Table 10.

Pairwise Wilcoxon—all model pairs (F1/Kappa).

| Model A | Model B | Mean_Diff (F1) | Mean_Diff (Kappa) | Median_Diff (F1) | Median_Diff (Kappa) | (F1) | (Kappa) | (F1) | (Kappa) | Effect_Size_r (F1) | Effect_Size_r (Kappa) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DT | KNN | 0.2558 | 0.2308 | 0.2988 | 0.2500 | 0.0156 | 0.0156 | 0.4531 | 0.4219 | 0.855 | 0.855 |
| DT | LDA | 0.0051 | 0.1070 | 0.0300 | 0.1168 | 0.6406 | 0.0156 | 1 | 0.4688 | 0.165 | 0.855 |
| DT | LR | −0.0244 | 0.0758 | −0.0056 | 0.0793 | 0.7422 | 0.0156 | 1 | 0.4375 | −0.116 | 0.855 |
| DT | SVM-2 | −0.0165 | 0.0645 | 0.0213 | 0.0694 | 0.8438 | 0.0156 | 1 | 0.5156 | 0.070 | 0.855 |
| DT | SVM-3 | −0.0189 | 0.0694 | 0.0222 | 0.0687 | 0.8438 | 0.0391 | 1 | 0.9375 | 0.070 | 0.730 |
| DT | SVM-L | −0.0047 | 0.0834 | 0.0153 | 0.0881 | 0.7422 | 0.0156 | 1 | 0.4531 | 0.116 | 0.855 |
| KNN | LDA | −0.2506 | −0.1237 | −0.3211 | −0.1781 | 0.0234 | 0.1953 | 0.6562 | 1 | −0.801 | −0.458 |
| KNN | LR | −0.2802 | −0.1550 | −0.3484 | −0.2074 | 0.0156 | 0.0547 | 0.4688 | 1 | −0.855 | −0.679 |
| KNN | SVM-2 | −0.2723 | −0.1662 | −0.3244 | −0.1900 | 0.0156 | 0.0156 | 0.4844 | 0.4844 | −0.855 | −0.855 |
| KNN | SVM-3 | −0.2747 | −0.1614 | −0.3181 | −0.1825 | 0.0156 | 0.0156 | 0.5 | 0.5 | −0.855 | −0.855 |
| KNN | SVM-L | −0.2605 | −0.1473 | −0.3313 | −0.2031 | 0.0234 | 0.0781 | 0.6328 | 1 | −0.801 | −0.623 |
| LR | LDA | 0.0295 | 0.0313 | 0.0290 | 0.0326 | 0.0078 | 0.0234 | 0.2734 | 0.6094 | 0.940 | 0.801 |
| LR | SVM-2 | 0.0079 | −0.0112 | 0.0121 | −0.0050 | 0.1953 | 0.5469 | 1 | 1 | 0.458 | −0.213 |
| LR | SVM-3 | 0.0055 | −0.0064 | 0.0055 | −0.0106 | 0.3125 | 0.7422 | 1 | 1 | 0.357 | −0.116 |
| LR | SVM-L | 0.0197 | 0.0077 | 0.0202 | 0.0094 | 0.0078 | 0.0781 | 0.2656 | 1 | 0.940 | 0.623 |
| NN-BP-2 | DT | 0.0342 | −0.0759 | 0.0319 | −0.0931 | 0.25 | 0.1094 | 1 | 1 | 0.407 | −0.566 |
| NN-BP-2 | KNN | 0.2899 | 0.1548 | 0.3081 | 0.1450 | 0.0078 | 0.0078 | 0.2578 | 0.2656 | 0.940 | 0.940 |
| NN-BP-2 | LDA | 0.0393 | 0.0311 | −0.0024 | −0.0506 | 0.6406 | 0.9453 | 1 | 1 | −0.165 | −0.024 |
| NN-BP-2 | LR | 0.0097 | −0.0002 | −0.0267 | −0.0675 | 0.8438 | 0.8438 | 1 | 1 | −0.070 | −0.070 |
| NN-BP-2 | SVM-2 | 0.0177 | −0.0114 | −0.0079 | −0.0675 | 0.7422 | 0.9453 | 1 | 1 | −0.116 | −0.024 |
| NN-BP-2 | SVM-3 | 0.0152 | −0.0066 | −0.0052 | −0.0544 | 0.6406 | 0.9453 | 1 | 1 | −0.165 | −0.024 |
| NN-BP-2 | SVM-L | 0.0294 | 0.0075 | −0.0103 | −0.0656 | 0.7422 | 0.8438 | 1 | 1 | −0.116 | −0.070 |
| NN-BP-3 | DT | 0.0294 | −0.0899 | 0.0173 | −0.1100 | 0.5469 | 0.0781 | 1 | 1 | 0.213 | −0.623 |
| NN-BP-3 | KNN | 0.2852 | 0.1409 | 0.3088 | 0.1294 | 0.0078 | 0.0078 | 0.2812 | 0.2734 | 0.940 | 0.940 |
| NN-BP-3 | LDA | 0.0345 | 0.0172 | −0.0070 | −0.0563 | 0.7422 | 1 | 1 | 1 | −0.116 | −0.000 |
| NN-BP-3 | LR | 0.0050 | −0.0141 | −0.0273 | −0.0775 | 0.7422 | 0.4609 | 1 | 1 | −0.116 | −0.261 |
| NN-BP-3 | NN-BP-2 | −0.0048 | −0.0139 | −0.0086 | −0.0132 | 0.5469 | 0.1484 | 1 | 1 | −0.213 | −0.511 |
| NN-BP-3 | SVM-2 | 0.0129 | −0.0253 | −0.0102 | −0.0743 | 0.8438 | 0.4609 | 1 | 1 | −0.070 | −0.261 |
| NN-BP-3 | SVM-3 | 0.0105 | −0.0205 | −0.0039 | −0.0500 | 0.6406 | 0.7422 | 1 | 1 | −0.165 | −0.116 |
| NN-BP-3 | SVM-L | 0.0247 | −0.0064 | −0.0142 | −0.0813 | 0.9453 | 0.7422 | 1 | 1 | −0.024 | −0.116 |
| SVM-2 | LDA | 0.0216 | 0.0425 | 0.0055 | 0.0193 | 0.25 | 0.0078 | 1 | 0.2812 | 0.407 | 0.940 |
| SVM-2 | SVM-3 | −0.0024 | 0.0048 | −0.0059 | −0.0007 | 0.6406 | 0.7263 | 1 | 1 | −0.165 | −0.124 |
| SVM-2 | SVM-L | 0.0117 | 0.0189 | −0.0013 | 0.0124 | 1 | 0.3627 | 1 | 1 | −0.000 | 0.322 |
| SVM-3 | LDA | 0.0241 | 0.0377 | 0.0024 | 0.0225 | 0.1834 | 0.1094 | 1 | 1 | 0.470 | 0.566 |
| SVM-3 | SVM-L | 0.0142 | 0.0141 | 0.0046 | 0.0150 | 0.6406 | 0.8438 | 1 | 1 | 0.165 | 0.070 |
| SVM-L | LDA | 0.0099 | 0.0236 | 0.0102 | 0.0250 | 0.1094 | 0.0234 | 1 | 0.5859 | 0.566 | 0.801 |

Table 11.

Distribution of ESNRs in the test data (calculated at SFN = 20,000).

| ESNR | Number | Percent (%) |
|---|---|---|
| (0.00–0.01] | 170 | 21.25 |
| (0.01–0.05] | 165 | 20.625 |
| (0.05–0.10] | 110 | 13.75 |
| (0.10–0.30] | 199 | 24.875 |
| (0.30–0.50] | 78 | 9.75 |
| (0.50–1.00] | 60 | 7.5 |
| (1.00–4.20] | 18 | 2.25 |
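
The right-closed ESNR intervals of Table 11 can be assigned with a binary search over the upper edges, as in the sketch below. The function names are assumptions for this example, and only the tabulated counts are used, since the per-sample ESNR values are not part of this section.

```python
from bisect import bisect_left

# Upper edges of the right-closed intervals in Table 11.
UPPER_EDGES = [0.01, 0.05, 0.10, 0.30, 0.50, 1.00, 4.20]
LABELS = ["(0.00-0.01]", "(0.01-0.05]", "(0.05-0.10]", "(0.10-0.30]",
          "(0.30-0.50]", "(0.50-1.00]", "(1.00-4.20]"]


def esnr_bin(esnr):
    """Return the label of the interval (lo, hi] that contains esnr."""
    if not 0.0 < esnr <= UPPER_EDGES[-1]:
        raise ValueError("ESNR outside the tabulated range")
    # bisect_left places a value equal to an edge in the bin that edge closes.
    return LABELS[bisect_left(UPPER_EDGES, esnr)]


def percentages(counts):
    """Convert per-bin counts to percentages of the total."""
    total = sum(counts)
    return [100.0 * c / total for c in counts]


table_counts = [170, 165, 110, 199, 78, 60, 18]
table_percent = percentages(table_counts)
```

The counts sum to 800, so each percentage in the table is the count divided by 8.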

Table 12.

Performance comparison at different accumulation frame counts on GM-APD echoes.

| Frame Count | Model | Acc | Pre | Rec | FPR | FNR | F1 | Kappa |
|---|---|---|---|---|---|---|---|---|
| 400 | DT | 0.6931 | 0.9452 | 0.4100 | 0.0238 | 0.3767 | 0.5719 | 0.3863 |
| 400 | ResNet | 0.7800 | 0.8425 | 0.6887 | 0.1288 | 0.3113 | 0.7579 | 0.5600 |
| 2000 | DT | 0.7919 | 0.9587 | 0.6100 | 0.0263 | 0.2860 | 0.7456 | 0.5838 |
| 2000 | ResNet | 0.8644 | 0.9664 | 0.7550 | 0.0262 | 0.2450 | 0.8477 | 0.7288 |
| 20,000 | NN-BP-3 | 0.9213 | 0.9470 | 0.8925 | 0.0500 | 0.1017 | 0.9189 | 0.8425 |
| 20,000 | ResNet | 0.9444 | 0.9696 | 0.9175 | 0.0288 | 0.0825 | 0.9428 | 0.8887 |

Table 13.

Run time and model size comparison at different accumulation frame counts.

| Frame Count | Model | Algorithm Time (s) | Avg. Latency (ms) | Rate (Hz) | Params (MB) |
|---|---|---|---|---|---|
| 400 | NN-BP-3 | 0.8536 | 0.2084 | 1.1715 | 2.4100 |
| 400 | NN-BP-2 | 0.2746 | 0.0670 | 3.6417 | 4.8200 |
| 400 | DT | 0.4014 | 0.0980 | 2.4913 | 2.3900 |
| 400 | KNN | 3.9585 | 0.9664 | 0.2526 | 36.8400 |
| 400 | LR | 0.5453 | 0.1331 | 1.8339 | 2.3880 |
| 400 | SVM-2 | 4.2910 | 1.0474 | 0.2331 | 29.7200 |
| 400 | SVM-3 | 4.6212 | 1.1282 | 0.2164 | 31.9170 |
| 400 | SVM-L | 3.7983 | 0.9273 | 0.2633 | 27.9180 |
| 400 | LDA | 0.3543 | 0.0865 | 2.8225 | 3.8140 |
| 400 | ResNet | 6.5561 | 1.6006 | 0.1525 | 0.3800 |
| 2000 | NN-BP-3 | 0.2167 | 0.0529 | 4.6147 | 1.6190 |
| 2000 | NN-BP-2 | 0.1942 | 0.0474 | 5.1493 | 1.6190 |
| 2000 | DT | 0.1323 | 0.0332 | 7.5586 | 1.6070 |
| 2000 | KNN | 2.7283 | 0.6661 | 0.3665 | 24.7610 |
| 2000 | LR | 0.1712 | 0.0418 | 5.8411 | 1.6040 |
| 2000 | SVM-2 | 1.6910 | 0.4129 | 0.5914 | 17.1840 |
| 2000 | SVM-3 | 2.0473 | 0.4998 | 0.4884 | 18.6390 |
| 2000 | SVM-L | 1.7719 | 0.4326 | 0.5644 | 16.4310 |
| 2000 | LDA | 0.1947 | 0.0475 | 5.1361 | 2.2470 |
| 2000 | ResNet | 6.9294 | 1.6918 | 0.1443 | 0.3800 |
| 20,000 | NN-BP-3 | 0.1444 | 0.0352 | 6.9252 | 0.2588 |
| 20,000 | NN-BP-2 | 0.1032 | 0.0252 | 9.6899 | 0.2588 |
| 20,000 | DT | 0.1312 | 0.0320 | 7.6220 | 0.2600 |
| 20,000 | KNN | 0.4405 | 0.1076 | 2.2701 | 4.0000 |
| 20,000 | LR | 0.1711 | 0.0418 | 5.8445 | 0.2560 |
| 20,000 | SVM-2 | 0.3407 | 0.0832 | 2.9351 | 1.7530 |
| 20,000 | SVM-3 | 0.3049 | 0.0735 | 3.2312 | 1.5290 |
| 20,000 | SVM-L | 0.3491 | 0.0852 | 2.8645 | 2.3970 |
| 20,000 | LDA | 0.1241 | 0.0303 | 8.0580 | 0.2274 |
| 20,000 | ResNet | 7.1379 | 1.7426 | 0.1401 | 0.3800 |
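
The timing columns of Table 13 appear to be related by simple arithmetic, sketched below. The sample count of 4096 is inferred from the ratio of algorithm time to average latency and is not stated in this section. It is therefore an assumption, as is the function name.

```python
# Assumption: number of classified samples per timed run, inferred from
# (algorithm time) / (average latency) in Table 13.
ASSUMED_SAMPLES_PER_RUN = 4096


def timing_summary(total_time_s, n_samples=ASSUMED_SAMPLES_PER_RUN):
    """Return (average latency per sample in ms, run rate in Hz)."""
    latency_ms = 1000.0 * total_time_s / n_samples
    rate_hz = 1.0 / total_time_s
    return latency_ms, rate_hz


# NN-BP-3 at a frame count of 400: algorithm time 0.8536 s.
latency_ms, rate_hz = timing_summary(0.8536)
```

Read this way, the rate column counts complete runs per second rather than individual classifications, which explains why ResNet, at about 7 s per run, stays below 0.2 Hz at every frame count.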