1. Introduction

Object detection is a fundamental task in remote sensing image processing. Object detection algorithms can accurately localize and identify aircraft, vehicles, and ships, which plays an essential role in military intelligence analysis and operational decision-making. However, remote sensing images, typically acquired from high altitudes, contain objects with substantial scale variations, and these objects are often small and densely arranged. Combined with other factors such as weather and sensor parameters, this makes separating the objects of interest from their surroundings a significant challenge. Furthermore, deployment in resource-constrained environments such as onboard satellites, unmanned aerial vehicles (UAVs), and embedded platforms [1] imposes stringent requirements on computational efficiency and power consumption.

The key strategy to tackle the challenges mentioned above is to build efficient multiscale feature representations. The Feature Pyramid Network (FPN) and its variants have become standard structures for this purpose. However, the performance of FPN largely depends on the quality of its built-in sampling operations—specifically, upsampling and downsampling. These operations are responsible for transferring and combining features across different levels of the pyramid. Therefore, the quality of sampling methods directly impacts the completeness and semantic richness of multiscale features, as well as the final object detection performance.

In remote sensing image analysis, upsampling and downsampling operations serve as fundamental components for multiscale feature representation. Upsampling enhances spatial resolution to recover fine details and facilitates the fusion of low-level spatial information with high-level semantics, thereby constructing feature representations that are rich in both spatial and semantic content. However, traditional interpolation-based upsampling methods often fail to adequately capture semantic context within feature maps, leading to the loss of fine-grained details that are critical for detecting small objects in remote sensing imagery. To mitigate this, learnable upsampling operators have been developed, which incorporate trainable parameters to enhance representational flexibility. Representative dynamic upsampling approaches, such as CARAFE [2], A2U [3], DLU [4], FADE [5], SAPA [6], DIP [7], and DySample [8], perform instance-specific processing in a data-driven manner by adaptively adjusting upsampling strategies based on input content. Conversely, downsampling reduces spatial resolution to alleviate computational complexity and is commonly implemented via max pooling or strided convolution, which aggregate local features into higher-level abstractions. Nevertheless, such fixed operations tend not to preserve fine-grained details adequately, often resulting in feature blurring or irreversible information loss. This issue is particularly critical in remote sensing, where small and structurally complex targets demand rich detail for accurate recognition. Recent efforts have introduced content-aware downsampling strategies to address this limitation. For instance, CARAFE++ [9] integrates a complementary content-aware downsampling operation, unifying both upsampling and downsampling within a single framework. Similarly, modules such as the Adaptive Downsampling Module (ADM) [10], Robust Feature Downsampling (RFD) [11], and Content-aware Pooling and Downsampling Module (CPDM) [12] aim to preserve essential details during resolution reduction.

Although advanced sampling operators demonstrate improved adaptability and performance, they exhibit systematic limitations that hinder practical deployment in remote sensing applications. Current methods typically specialize in either upsampling or downsampling, lacking a unified framework for efficient bidirectional resampling. Static approaches fail to preserve critical details across diverse remote sensing scenarios [13], while non-unified architectures require separate modules for each sampling direction, increasing structural complexity [14]. Moreover, dynamically parameterized operators often introduce substantial computational cost and parameter overhead in pursuit of feature preservation, rendering them unsuitable for resource-constrained environments where efficiency is paramount [13]. For instance, while CARAFE++ supports bidirectional operations, its parameter burden remains considerable; DLU has made progress in lightweight design but relies on learnable offset prediction and has been neither extended to downsampling nor thoroughly evaluated in remote sensing contexts. This architectural separation and these methodological limitations create a fundamental trade-off in which reducing model size or improving inference speed typically comes at the expense of detection accuracy. Consequently, a unified framework that delivers high-performance bidirectional dynamic sampling under strict lightweight constraints remains unavailable, which significantly impedes deployment in real-time remote sensing applications and edge computing systems with severely limited computational resources [14].

To achieve a better balance between detection accuracy and computational complexity, we propose a novel resampling framework named Lurker, which builds upon DLU. Lurker extends the principles of DLU to both upsampling and downsampling while eliminating the need for learnable guidance offsets. This is achieved by constructing a compact source kernel space and generating the target kernels for both upsampling and downsampling via bilinear interpolation. Consequently, Lurker not only reduces the parameter count and computational overhead but also improves the mean Average Precision (mAP), thereby achieving a more favorable overall performance trade-off.
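To make the kernel-generation idea more concrete, the NumPy sketch below shows one way a compact source kernel space could be turned into target kernels for either 2× upsampling or 2× downsampling by bilinear interpolation, followed by CARAFE-style reassembly. It is an illustrative approximation under our own assumptions, not the Lurker implementation described in Section 3: we assume the source kernel space is a map of k²-channel kernel logits at the input resolution (in practice produced by some encoder; here random numbers stand in for it), that interpolated kernels are softmax-normalized over the k × k window, and that each output location reassembles the k × k neighborhood of its corresponding source location with zero padding at the borders. The function names `resize_bilinear`, `reassemble`, and `lurker_like_resample` are hypothetical.

```python
import numpy as np


def resize_bilinear(x, out_h, out_w):
    """Bilinearly resize a (C, H, W) array using half-pixel centres and edge clamping."""
    _, h, w = x.shape
    ys = np.clip((np.arange(out_h) + 0.5) * h / out_h - 0.5, 0, h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * w / out_w - 0.5, 0, w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bottom = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy


def softmax_over_window(logits):
    """Normalise kernel logits over the k*k axis (axis 0) so each kernel sums to 1."""
    z = logits - logits.max(axis=0, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=0, keepdims=True)


def reassemble(feat, kernels, k):
    """Weighted sum of the k x k source neighbourhood for every output location."""
    c, h, w = feat.shape
    _, out_h, out_w = kernels.shape
    p = k // 2
    padded = np.pad(feat, ((0, 0), (p, p), (p, p)))  # zero padding at the borders
    out = np.empty((c, out_h, out_w))
    for y in range(out_h):
        sy = y * h // out_h  # source row: y // 2 when upsampling 2x, 2 * y when downsampling 2x
        for x in range(out_w):
            sx = x * w // out_w
            patch = padded[:, sy:sy + k, sx:sx + k].reshape(c, k * k)
            out[:, y, x] = patch @ kernels[:, y, x]
    return out


def lurker_like_resample(feat, source_logits, out_h, out_w, k=3):
    """Hypothetical sketch: interpolate compact (k*k, H, W) kernel logits to the
    target resolution, normalise them, and reassemble the input features."""
    kernels = softmax_over_window(resize_bilinear(source_logits, out_h, out_w))
    return reassemble(feat, kernels, k)


rng = np.random.default_rng(0)
feat = rng.standard_normal((4, 8, 8))
source_logits = rng.standard_normal((9, 8, 8))            # stand-in for an encoder output
up = lurker_like_resample(feat, source_logits, 16, 16)    # 2x upsampling
down = lurker_like_resample(feat, source_logits, 4, 4)    # 2x downsampling
print(up.shape, down.shape)  # (4, 16, 16) (4, 4, 4)
```

The point of the sketch is that the same k²-channel source map serves both directions: only the target resolution passed to the interpolation changes, and no per-pixel offsets are predicted.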

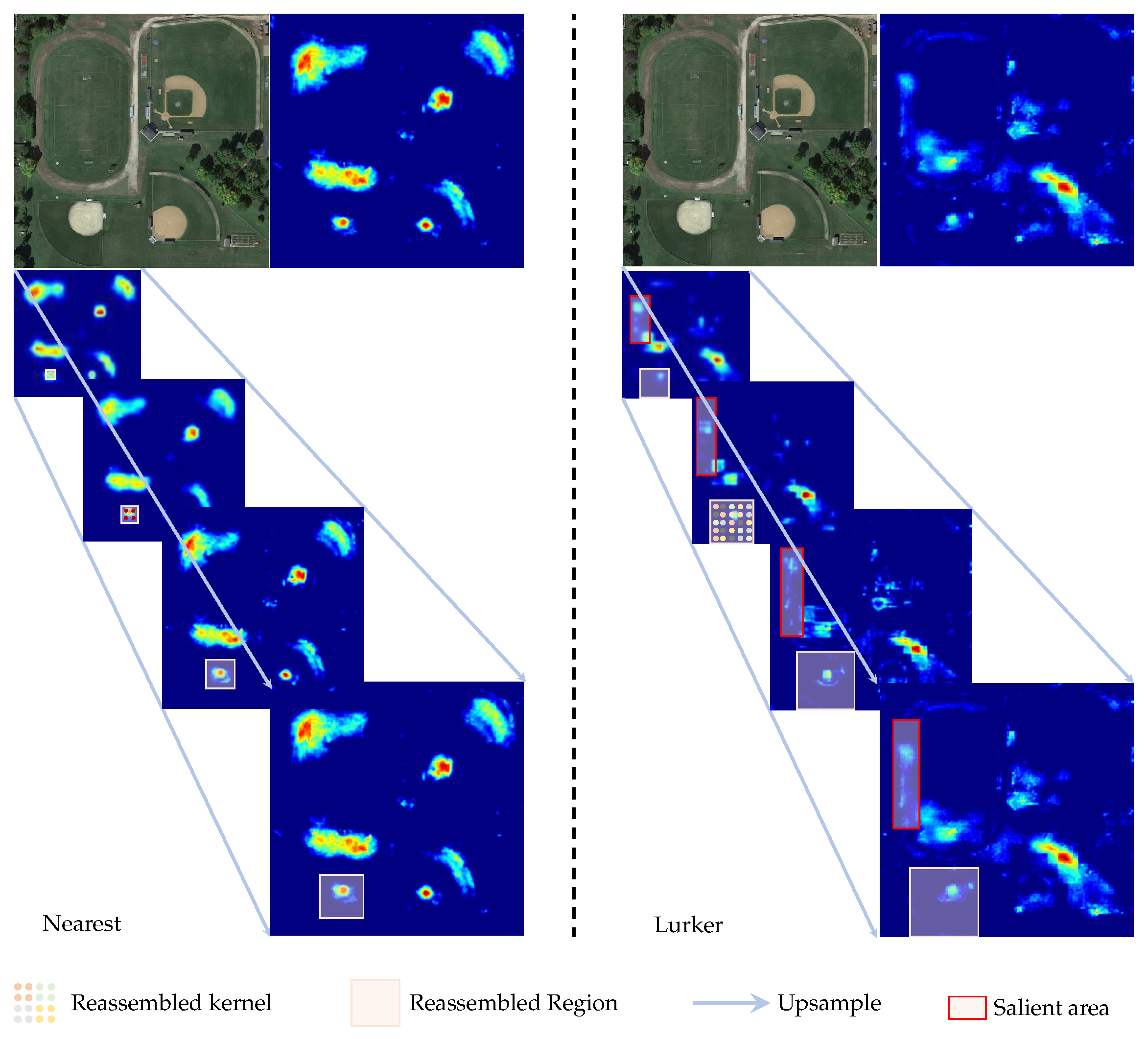

Figure 1 illustrates the working mechanism of Lurker in an upsampling case. We visualize the feature maps in the top-down pathway of the feature pyramid network (FPN) [15] and compare Lurker with the nearest-neighbor interpolation baseline. After upsampling with Lurker, a feature map more accurately represents the informational characteristics of objects, enabling the model to achieve better remote sensing object detection results.

The main contributions of our work are summarized as follows:

1. Lightweight and Unified Dynamic Resampling Kernel: To improve parameter efficiency, we propose Lurker, which extends the DLU principle to perform both upsampling and downsampling using a compact source kernel space and bilinear interpolation. In contrast to DLU, our method removes the need for learnable guidance offsets and a channel compressor, yet maintains competitive performance with significantly fewer parameters.

2. Unified Bidirectional Resampling Framework: To resolve architectural inconsistency across resampling operations, we design a unified bidirectional resampling framework based on consistent design principles. Unlike existing methods that employ separate designs for each resampling direction, our framework ensures architectural coherence and promotes more effective multiscale feature learning, which is critical for remote sensing object detection.

3. Comprehensive Experimental Validation: To fill the gap in remote sensing validation, we conduct extensive experiments on two authoritative benchmarks, comparing Lurker against several notable resampling modules on the challenging DIOR [16] and DOTA [17] datasets. The results confirm that our approach consistently outperforms baseline methods while requiring considerably fewer parameters than existing learnable resampling techniques.

The remainder of this paper is organized as follows. Section 2 reviews related work on feature upsampling and downsampling operators in remote sensing. Section 3 presents our proposed methodology, including an overview of the Lurker framework and detailed descriptions of the kernel generation and dynamic reassembly modules. Section 4 provides comprehensive experiments and discussions, covering dataset descriptions, implementation details, evaluation metrics, result analysis with both qualitative and quantitative comparisons, effectiveness analysis, and ablation studies. Finally, Section 5 concludes the paper with a summary of our main findings and contributions.

2. Related Work

In remote sensing imagery, objects often exhibit a wide range of sizes, which requires multiscale feature representations to detect them effectively. To address this challenge, many modern detectors use the Feature Pyramid Network (FPN) [15] as the neck structure, which constructs multiscale features to represent objects of varying sizes.

2.1. Feature Upsampling Operators in Remote Sensing

Within the FPN architecture, the upsampling operation plays a fundamental role: nearest-neighbor interpolation is typically employed to upsample feature maps from coarser to finer resolutions. Traditional interpolation methods, such as nearest-neighbor and bilinear interpolation, upsample feature maps according to predetermined rules. However, these methods often fail to capture semantic information when processing small objects and tend to lose critical fine-grained details [18].

To overcome these limitations, several learnable upsampling operators have been proposed. These approaches incorporate trainable parameters, often based on convolution, to improve model performance. For instance, SAPNet [19] employs deconvolution to upsample high-level features, enhancing detection performance for small objects. Deconvolution [20] (transposed convolution) achieves feature upsampling by applying the transpose of a standard convolution, spreading each input value over a larger output region. In Info-FPN [21], pixel shuffle upsampling is proposed for multiscale feature fusion. Pixel Shuffle [22] reduces the number of channels but redistributes this information to the spatial dimension, so no information is lost. Despite these advancements, these methods still have inherent limitations: deconvolution relies on kernels that are fixed after training, and Pixel Shuffle is constrained by its predefined channel-to-space transformation rule. As a result, these approaches struggle with the unique challenges posed by remote sensing images, such as complex backgrounds, varying target scales, and densely arranged objects.
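The channel-to-space rule of pixel shuffle can be written as a single reshape and transpose. The NumPy sketch below follows the index ordering used by PyTorch's `torch.nn.PixelShuffle` (input `(C·r², H, W)`, output `(C, H·r, W·r)`), with the batch dimension dropped for brevity; the function name `pixel_shuffle` is our own.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r) without changing any value."""
    c_rr, h, w = x.shape
    c = c_rr // (r * r)
    # split channels into (C, r, r), then interleave the two r-axes with H and W
    return x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(c, h * r, w * r)


x = np.arange(8).reshape(8, 1, 1)   # C = 2, r = 2, H = W = 1
print(pixel_shuffle(x, 2))
# [[[0 1]
#   [2 3]]
#
#  [[4 5]
#   [6 7]]]
```

Each group of r² = 4 channels becomes one 2 × 2 spatial block. Because the operation only permutes entries, it is exactly invertible, which is the sense in which no information is lost; any learning happens in the convolution that precedes the shuffle, as in sub-pixel convolution.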

In response to these limitations, research has increasingly shifted toward dynamic upsampling operators that adapt to input content. Kernel-based methods in this class generate adaptive convolution kernels and have shown considerable promise in addressing the complex challenges of remote sensing imagery. CARAFE [2] is a prominent example, employing a subnetwork to produce dynamic convolution kernels for content-aware feature reassembly. Its integration into detection frameworks improves feature representation for small targets through larger receptive fields and instance-specific processing. Building upon this kernel generation paradigm, several specialized variants address particular challenges in remote sensing imagery. CAFUS [23] introduces a feature modification kernel that refines interpolated outputs to better preserve semantic information. CAU [24] adaptively generates upsampling kernels according to contextual information, which is particularly valuable for recovering detailed object boundaries in high-resolution scenes. More recently, DLU [4] was proposed to reduce the parameter count while maintaining performance through a compact source kernel space and learnable guided offsets. However, this approach still requires an additional offset predictor that increases computational complexity, and it has not yet been widely adopted in remote sensing applications compared to earlier methods.
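A minimal NumPy sketch of the kernel-based paradigm, in the spirit of CARAFE, is given below. It is a simplification under our own assumptions: the kernel prediction subnetwork is reduced to a single hypothetical 1 × 1 linear map (`weight`) with no channel compressor or larger encoder, and borders are zero-padded. It shows why such upsamplers carry a predictor with σ²k² output channels for scale σ and reassembly size k, which is the kind of cost that compact designs such as DLU aim to reduce. The function names are ours.

```python
import numpy as np


def predict_kernels(feat, weight, scale=2, k=3):
    """Predict one normalised k*k kernel per output pixel.

    weight: (scale*scale*k*k, C) matrix acting as a hypothetical 1x1 conv encoder.
    """
    _, h, w = feat.shape
    logits = np.einsum("oc,chw->ohw", weight, feat)            # (s*s*k*k, H, W)
    # channel-to-space: each source pixel yields the kernels of its s x s output pixels
    logits = logits.reshape(k * k, scale, scale, h, w)
    logits = logits.transpose(0, 3, 1, 4, 2).reshape(k * k, h * scale, w * scale)
    logits -= logits.max(axis=0, keepdims=True)
    e = np.exp(logits)
    return e / e.sum(axis=0, keepdims=True)                     # softmax over k*k


def carafe_like_upsample(feat, kernels, scale=2, k=3):
    """Reassemble the k x k neighbourhood of source pixel (y // scale, x // scale)."""
    c, h, w = feat.shape
    p = k // 2
    padded = np.pad(feat, ((0, 0), (p, p), (p, p)))
    out = np.empty((c, h * scale, w * scale))
    for y in range(h * scale):
        for x in range(w * scale):
            sy, sx = y // scale, x // scale
            patch = padded[:, sy:sy + k, sx:sx + k].reshape(c, k * k)
            out[:, y, x] = patch @ kernels[:, y, x]
    return out


rng = np.random.default_rng(0)
feat = rng.standard_normal((16, 5, 5))
weight = 0.1 * rng.standard_normal((2 * 2 * 3 * 3, 16))   # 36 predictor output channels
kernels = predict_kernels(feat, weight)
up = carafe_like_upsample(feat, kernels)
print(kernels.shape, up.shape)  # (9, 10, 10) (16, 10, 10)
```

Because every kernel sums to 1, a spatially constant input is reproduced unchanged away from the zero-padded borders; the content dependence enters only through how the weights are distributed within each window.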

In contrast to kernel-based methods, the pixel displacement paradigm achieves upsampling through spatial transformation rather than convolution. DySample [8] exemplifies this approach: from a point-sampling perspective, it splits single points into multiple locations, creating sharper edges through precise semantic clustering. Its integration into networks such as ADD-YOLO and 4SDC-YOLOv8 has proved particularly effective for enhancing small target detection in remote sensing imagery. Following similar principles, the Spatial-Guided Feature Upsampler (SGFU) [25] dynamically computes sampling positions by incorporating both higher-level and lower-level features, with specialized offset prediction improving geometric accuracy. Similarly, the Guided Upsampling Module (GU) [26] employs a guidance table of offset vectors to direct sampling toward correct semantic categories. Another notable approach, the Flow Guided Upsampling Module (FGUM) [27], addresses feature shift by constructing flow fields that enable shallow features to guide the upsampling of deep features through grid sampling. Together, these pixel displacement methods offer complementary advantages for remote sensing applications where precise spatial alignment and edge preservation are critical.
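The point-sampling view behind DySample-style upsamplers can be sketched as bilinear sampling at a regular grid plus per-point offsets. In the NumPy sketch below the offsets are supplied directly; in DySample they are predicted from the input features, and the actual operator relies on PyTorch's `grid_sample`. The coordinate convention (half-pixel centers, edge clamping) and the function names are our assumptions. With zero offsets the operation reduces to ordinary bilinear upsampling; non-zero offsets move each output point toward a different source location.

```python
import numpy as np


def bilinear_sample(feat, ys, xs):
    """Sample a (C, H, W) array at fractional coordinates ys, xs (same shape)."""
    _, h, w = feat.shape
    ys, xs = np.clip(ys, 0, h - 1), np.clip(xs, 0, w - 1)   # clamp to the border
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = ys - y0, xs - x0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def point_sample_upsample(feat, offsets, scale=2):
    """offsets: (2, H*scale, W*scale) displacements (dy, dx) in source-pixel units."""
    _, h, w = feat.shape
    oy, ox = np.meshgrid(np.arange(h * scale), np.arange(w * scale), indexing="ij")
    base_y = (oy + 0.5) / scale - 0.5   # where each output pixel centre falls in the source
    base_x = (ox + 0.5) / scale - 0.5
    return bilinear_sample(feat, base_y + offsets[0], base_x + offsets[1])


feat = np.array([[[0.0, 2.0]]])                      # C = 1, H = 1, W = 2
out = point_sample_upsample(feat, np.zeros((2, 2, 4)))
print(out[0, 0].tolist())  # [0.0, 0.5, 1.5, 2.0]
```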

The feature rearrangement paradigm represents another important approach to resolution enhancement in remote sensing; it operates through spatial reorganization of existing features rather than kernel generation or pixel displacement. A foundational method in this category is Sub-Pixel Conv, which employs periodic shuffling to efficiently increase feature resolution. Building upon this concept, SP-Conv extends the rearrangement strategy with more sophisticated spatial processing. Further advancing this paradigm, Local Relationship Upsampling (LRU) [28] calculates similarity relationships between high-level feature points and their corresponding low-level regions to enhance point-to-region integration. These rearrangement-based methods offer computationally efficient alternatives for remote sensing applications, particularly in scenarios that demand rapid processing while maintaining adequate feature representation.

2.2. Feature Downsampling Operators in Remote Sensing

Downsampling is a critical operation in deep neural networks for expanding receptive fields, reducing computational costs, and aggregating features. In remote sensing object detection, however, conventional downsampling approaches often incur substantial information loss that adversely affects performance, particularly for small objects. Traditional methods, including pooling operations and strided convolution, are computationally efficient but frequently sacrifice essential spatial information.
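The information loss described above is easy to see on a toy example in which a single bright pixel stands in for a small object. The NumPy sketch below (helper names are ours) compares 2 × 2 average pooling, 2 × 2 max pooling, and the sampling pattern of a 1 × 1 convolution with stride 2, which simply keeps every other pixel.

```python
import numpy as np


def pool2x2(x, reduce):
    """Non-overlapping 2x2 pooling of an (H, W) array with H, W even."""
    h, w = x.shape
    return reduce(x.reshape(h // 2, 2, w // 2, 2), axis=(1, 3))


def stride2_subsample(x):
    """Positions kept by a 1x1 convolution with stride 2 (the weight only rescales them)."""
    return x[0::2, 0::2]


img = np.zeros((4, 4))
img[1, 1] = 1.0                        # a one-pixel "small object"
print(pool2x2(img, np.mean)[0, 0])     # 0.25: the response is diluted
print(pool2x2(img, np.max)[0, 0])      # 1.0: kept, but its position inside the window is lost
print(stride2_subsample(img).max())    # 0.0: the object disappears entirely
```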

To mitigate these limitations, recent research has developed hybrid multi-path pooling mechanisms that better preserve critical information. Several works employ dual-branch architectures to combine complementary downsampling strategies. The Enhanced Effective Channel Attention in ABNet [29] integrates both average and max pooling to generate enriched channel attention maps. Similarly, the Haar wavelet-based downsampling and max pooling (HWD-MP) [30] module preserves complete information while providing diverse feature representations. The Efficient Downsample Module (EDown) [31] combines max pooling with depthwise separable convolution, using batch normalization to maintain feature continuity and computational efficiency.
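Haar wavelet downsampling, the component that lets HWD-MP preserve complete information, can be sketched as follows. Each 2 × 2 block is mapped to one low-frequency and three high-frequency coefficients stacked along the channel axis, so spatial resolution halves while the channel count grows fourfold, and the transform remains exactly invertible. This NumPy version uses the orthonormal 2-D Haar basis; subband ordering and naming conventions differ across implementations, the function names are ours, and the max-pooling branch of HWD-MP is not shown.

```python
import numpy as np


def haar_down(x):
    """(C, H, W) -> (4C, H/2, W/2) orthonormal Haar transform of each 2x2 block."""
    a, b = x[:, 0::2, 0::2], x[:, 0::2, 1::2]
    c, d = x[:, 1::2, 0::2], x[:, 1::2, 1::2]
    low = (a + b + c + d) / 2           # local average (scaled)
    horiz = (a + b - c - d) / 2         # top row minus bottom row
    vert = (a - b + c - d) / 2          # left column minus right column
    diag = (a - b - c + d) / 2          # diagonal detail
    return np.concatenate([low, horiz, vert, diag], axis=0)


def haar_up(y):
    """Exact inverse of haar_down."""
    low, horiz, vert, diag = np.split(y, 4, axis=0)
    ch, h, w = low.shape
    x = np.empty((ch, 2 * h, 2 * w))
    x[:, 0::2, 0::2] = (low + horiz + vert + diag) / 2
    x[:, 0::2, 1::2] = (low + horiz - vert - diag) / 2
    x[:, 1::2, 0::2] = (low - horiz + vert - diag) / 2
    x[:, 1::2, 1::2] = (low - horiz - vert + diag) / 2
    return x


x = np.random.default_rng(0).standard_normal((3, 8, 8))
y = haar_down(x)
print(y.shape)                          # (12, 4, 4)
print(np.allclose(haar_up(y), x))       # True: nothing is discarded
```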

Beyond conventional downsampling techniques, several advanced content-aware approaches have been designed specifically for remote sensing challenges. These methods can be broadly categorized by their underlying mechanisms. One line of research focuses on dynamic kernel generation, exemplified by CARAFE++ [9], which extends the content-aware paradigm to both upsampling and downsampling through adaptive kernels within large receptive fields, although its application in remote sensing remains limited. Another direction employs adaptive weighting strategies, as seen in the Adaptive Downsampling Module (ADM) [10], which enhances tiny object detection through local detail preservation, and the Content-Aware Downsampling Module (CADM) [12], which implements a three-stage process of channel expansion, content-aware weight prediction, and feature aggregation to maintain small object information. Further advancing this concept, the Scale-Enhanced Detection Head combines adaptive downsampling with multi-scale feature enhancement without increasing parameters. A distinct approach explores multi-branch feature integration through the Robust Feature Downsampling module (RFD) [11], which combines features from different downsampling techniques to create complementary feature sets that overcome the limitations of single-method approaches. Collectively, these methods reflect the growing sophistication of efforts to preserve information during downsampling in remote sensing applications.
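Many of the adaptive-weighting downsamplers above share a common core: each output value is a content-dependent weighted average of its input window. The NumPy sketch below shows that core with softmax weights over each 2 × 2 window, similar in spirit to the local importance-based pooling of LIP [32]. The importance scores are passed in directly here (an assumption for illustration); in a real module they would be predicted by a small learned subnetwork. Uniform scores recover average pooling, and sharply peaked scores approach max pooling, so such operators can move between the two fixed behaviors depending on content. The function name is ours.

```python
import numpy as np


def content_aware_pool(feat, scores, k=2):
    """Softmax-weighted k x k pooling with stride k.

    feat: (C, H, W); scores: (H, W) importance logits; H and W divisible by k.
    """
    c, h, w = feat.shape
    # gather every k x k window into a trailing axis of length k*k
    f = feat.reshape(c, h // k, k, w // k, k).transpose(0, 1, 3, 2, 4)
    f = f.reshape(c, h // k, w // k, k * k)
    s = scores.reshape(h // k, k, w // k, k).transpose(0, 2, 1, 3)
    s = s.reshape(h // k, w // k, k * k)
    s = s - s.max(axis=-1, keepdims=True)
    wts = np.exp(s) / np.exp(s).sum(axis=-1, keepdims=True)   # weights sum to 1 per window
    return (f * wts).sum(axis=-1)


rng = np.random.default_rng(0)
feat = rng.random((1, 4, 4))
avg_like = content_aware_pool(feat, np.zeros((4, 4)))        # uniform scores
max_like = content_aware_pool(feat, 200.0 * feat[0])         # scores peaked at each window maximum
```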

Despite these advancements, a fundamental challenge for resampling methods in remote sensing object detection (RSOD) lies in balancing lightweight design against effective information retention. Traditional approaches often rely on complex module structures or additional operator parameters to preserve useful information while filtering out background interference. To address this issue, we propose a learned, unified resampling kernel that extends the DLU framework. Our kernel adds downsampling while eliminating the need for learnable guidance offsets and channel compression, thereby achieving efficient and effective sampling within a unified architecture. Unlike existing dynamic sampling operators such as DySample, which support only upsampling, our framework achieves content-aware upsampling and downsampling in a unified structure.

4. Experiment and Discussion

In this section, we conduct extensive experiments on two widely used remote sensing object detection benchmarks: DIOR [16] and DOTA [17]. To establish a comprehensive and impartial comparison, we integrate the proposed Lurker module into the FPN structure for upsampling and evaluate it against established methods, including nearest-neighbor interpolation, CARAFE++ [9], DySample [8], and DLU [4]. Similarly, for downsampling within the ResNet50 backbone, we compare Lurker against methods with low parameter overhead, including average pooling, max pooling, CARAFE++ [9], and strided convolution. Methods such as RFD [11] and LIP [32] are excluded from the comparison because they introduce approximately 1 to 2 million additional parameters; this keeps the analysis focused on computationally efficient downsampling strategies. All compared modules were carefully re-implemented and evaluated under identical experimental settings to ensure a fair comparison.

4.1. Datasets

The proposed resampling module is evaluated on two established remote sensing object detection benchmarks, the DIOR and DOTA datasets, both employing horizontal bounding boxes for object annotation. These datasets consist of optical satellite imagery acquired in the visible spectrum with three-channel RGB color components. The spatial resolution varies across images in each dataset, reflecting realistic acquisition conditions and presenting significant scale-related challenges for detection algorithms.

4.1.1. DIOR Dataset

DIOR is a large-scale benchmark for optical remote sensing object detection, containing 23,463 images of 800 × 800 pixels. The dataset is divided into 11,725 training and 11,738 testing images. The imagery exhibits diverse spatial resolutions ranging from 0.5 to 30 m, introducing substantial scale variations. It also displays natural diversity in illumination, atmospheric conditions, and sensor noise, representing realistic operational environments. Built from Google Earth imagery and expertly annotated, DIOR includes 192,472 object instances spanning 20 common geographic categories, including Airplane, Airport, Bridge, Harbor, Ship, Stadium, and Windmill.

4.1.2. DOTA Dataset

The DOTA dataset is a pivotal benchmark for aerial image object detection, distinguished by its rigorous annotation standards and complex scene composition. It comprises 2806 high-resolution images divided into 1403 for training, 468 for validation, and 935 for testing. The original image sizes vary considerably, ranging from about 800 × 800 to 4000 × 4000 pixels. To facilitate model processing, all images were cropped into fixed-size patches with a 200-pixel overlap between adjacent patches. The dataset's spatial resolution ranges from 0.3 to 4.5 m [33], making it one of the highest-resolution aerial imagery collections publicly available. It encompasses 15 object categories and introduces challenging real-world conditions, notably object densities of up to 2000 instances per image and extreme scale variations. These characteristics make DOTA a robust testbed for evaluating detection algorithms under demanding operational conditions.
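The overlapping crop described above amounts to computing window start positions along each image axis. The Python sketch below assumes one common convention, in which the stride equals the patch size minus the overlap and the final window is shifted back so that it ends exactly at the image border. The patch size is left as a parameter, the numbers in the example are illustrative only (not the settings used in this work), and `crop_starts` and `crop_boxes` are hypothetical helpers.

```python
def crop_starts(length, patch, overlap):
    """Start offsets of overlapping windows covering [0, length) along one axis."""
    if length <= patch:
        return [0]                      # the whole axis fits in a single window
    stride = patch - overlap
    starts = list(range(0, length - patch, stride))
    starts.append(length - patch)       # last window flush with the border
    return starts


def crop_boxes(width, height, patch, overlap):
    """(x0, y0, x1, y1) boxes of all patches for one image."""
    return [(x, y, min(x + patch, width), min(y + patch, height))
            for y in crop_starts(height, patch, overlap)
            for x in crop_starts(width, patch, overlap)]


print(crop_starts(2000, 800, 200))  # [0, 600, 1200]
```

Shifting the last window back, rather than padding past the border, keeps every patch full-size at the cost of a larger overlap for the final pair of windows.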

4.2. Implementation Details

All experiments were run on a high-performance workstation equipped with an Intel(R) Xeon(R) Gold 6226R CPU @ 2.90GHz, 256 GB DDR4 RAM (3000 MHz), and an NVIDIA RTX A6000 GPU (48 GB VRAM). We implemented the proposed method and the compared approaches in the mmdetection framework with PyTorch 2.0.0, using ResNet-50 as the backbone in all experiments. Experiments were conducted on two publicly available remote sensing datasets, DIOR and DOTA, both comprising satellite imagery. Random horizontal flipping served as the primary data augmentation technique during training. To handle the dense target distributions characteristic of aerial imagery, we adjusted two testing parameters: nms_pre was raised from 1000 to 2000, and max_per_img from 100 to 2000. These adjustments, consistent with the default configurations in mmrotate, are necessary to handle the high target density and prevalence of small objects in aerial imagery.
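In mmdetection's Faster R-CNN configuration, `nms_pre` belongs to the RPN test settings and `max_per_img` to the R-CNN test settings, so the two changes above could be expressed as the config override sketched below. This assumes the fragment is merged into an inherited Faster R-CNN config through mmdetection's `_base_` inheritance (not shown); only the two keys mentioned in the text are changed.

```python
# Hypothetical override merged on top of an inherited Faster R-CNN config.
model = dict(
    test_cfg=dict(
        rpn=dict(nms_pre=2000),        # candidate boxes kept before RPN NMS (was 1000)
        rcnn=dict(max_per_img=2000),   # final detections kept per image (was 100)
    )
)
```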

Faster R-CNN served as the baseline detection model, optimized using stochastic gradient descent (SGD) [34] with a mini-batch size of 8, a momentum of 0.9, and a fixed weight decay. A random seed of 2025 was used for all experiments to ensure reproducibility. For the upsampling experiments, a 12-epoch schedule (1x) was used with an initial learning rate of 0.01, reduced by a factor of 10 at epochs 8 and 11. The downsampling experiments used a 24-epoch schedule (2x) with the same initial learning rate of 0.01, likewise reduced 10-fold at epochs 16 and 22.
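The two step schedules can be summarized as a function of the 0-indexed epoch. The sketch below assumes the decay takes effect at the start of each listed epoch index, as with a multi-step scheduler such as PyTorch's `torch.optim.lr_scheduler.MultiStepLR` stepped once per epoch; any learning-rate warm-up is not described in the text and is omitted. `step_lr` is a hypothetical helper.

```python
from bisect import bisect_right


def step_lr(epoch, base_lr=0.01, milestones=(8, 11), gamma=0.1):
    """Learning rate used in 0-indexed `epoch` under a multi-step decay."""
    return base_lr * gamma ** bisect_right(milestones, epoch)


schedule_1x = [step_lr(e) for e in range(12)]                         # upsampling runs
schedule_2x = [step_lr(e, milestones=(16, 22)) for e in range(24)]    # downsampling runs
```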

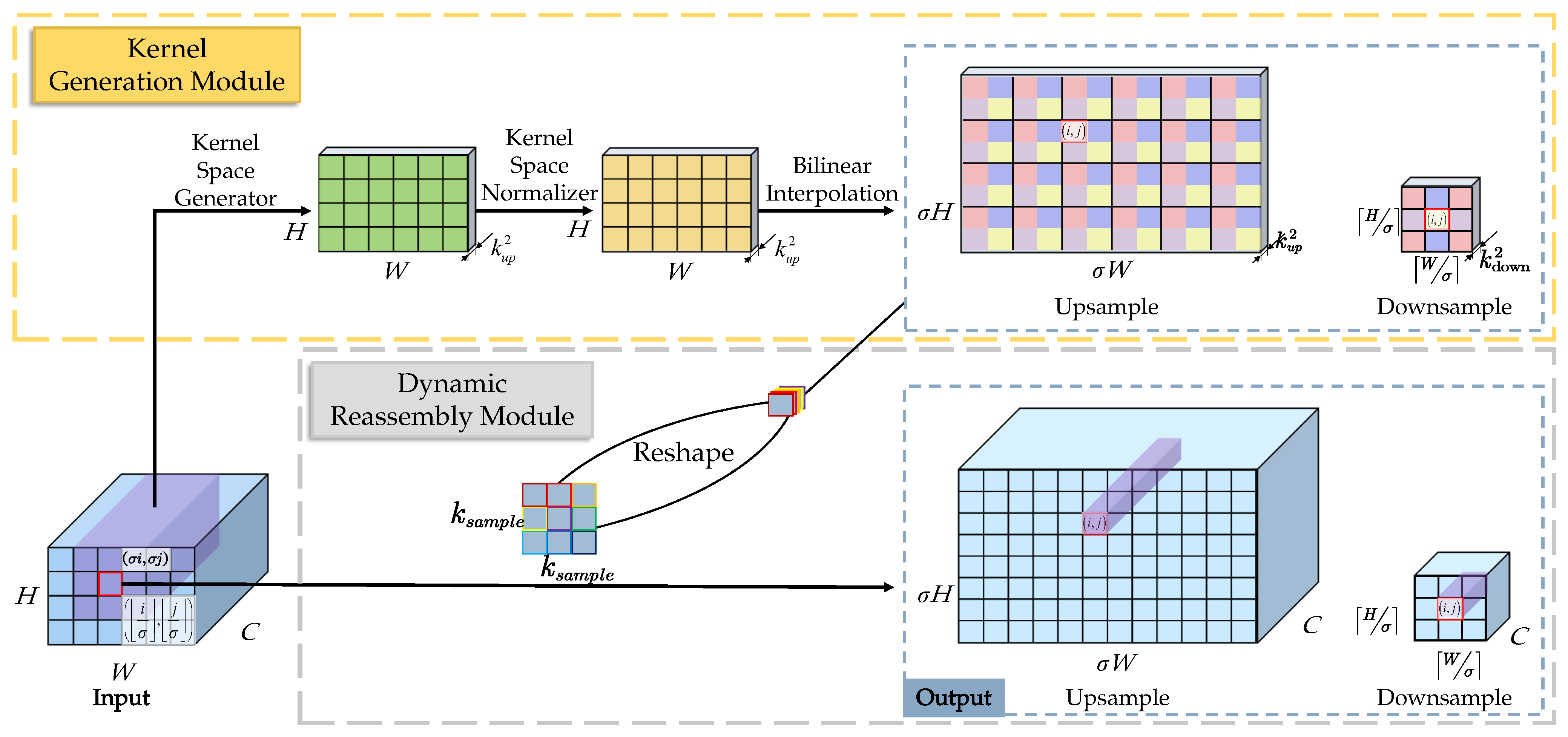

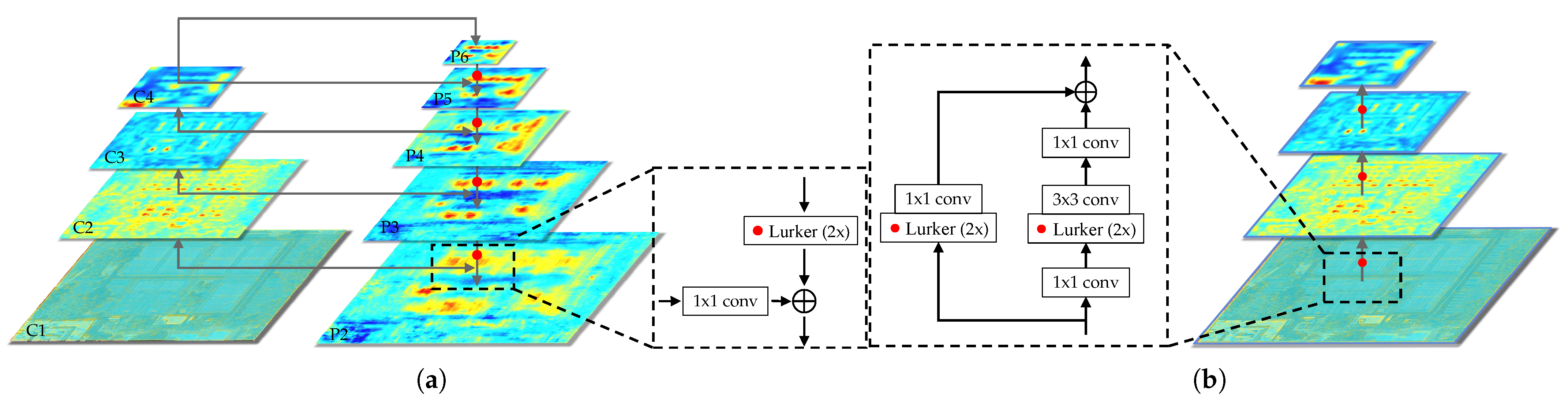

The Lurker resampling operator was integrated into both the backbone and the neck of the detection network. In the ResNet50 backbone, all standard stride-2 convolutions inside Bottleneck blocks were replaced by Lurker modules (Figure 3a), each followed by a stride-1 convolution to improve feature extraction. Correspondingly, within the downsampling layers, the conventional stride-2 convolution was replaced with the Lurker operator followed by a stride-1 convolution. In the Feature Pyramid Network (FPN) [15] neck, nearest-neighbor interpolation was replaced by the Lurker module (Figure 3b), which performs 2× upsampling via a learnable kernel. This kernel-based method adaptively produces resampling patterns that reconstruct features while preserving spatial details, without introducing additional convolutional layers.

4.3. Evaluation Metrics

To comprehensively evaluate object detection performance, we employ mean Average Precision (mAP) and its variants, which are standard metrics in remote sensing detection tasks. The evaluation begins with two fundamental metrics: precision and recall. Precision (P), which measures the reliability of the detected objects, is defined as the ratio of true positives to all positive detections:

$$P = \frac{TP}{TP + FP}$$

Recall (R), which measures the ability to find all relevant objects, is defined as the ratio of true positives to all ground-truth objects:

$$R = \frac{TP}{TP + FN}$$

Here $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives, respectively. These detections are determined using the Intersection over Union (IoU), which measures the spatial overlap between predicted bounding boxes and ground-truth annotations. The IoU is the ratio of the intersection area to the union area of the predicted and ground-truth bounding boxes:

$$\mathrm{IoU} = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$

where $B_p$ represents the predicted bounding box and $B_{gt}$ represents the ground-truth bounding box. A detection is considered a true positive when its IoU exceeds a predefined threshold. The precision-recall (PR) curve is then plotted by varying the detection confidence threshold. The Average Precision (AP) for a single class is computed as the area under this PR curve:

$$AP = \int_0^1 P(R)\,dR$$

In practice, this is typically approximated by a discrete summation over a set of equally spaced recall levels. In our evaluation, we report both $AP_{50}$ and $AP_{75}$, where $AP_{50}$ uses an IoU threshold of 0.5, providing a more lenient evaluation suitable for general object detection, while $AP_{75}$ uses a stricter IoU threshold of 0.75, demanding more precise localization. The overall mean Average Precision (mAP) is finally obtained by averaging the AP values across all object categories:

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $N$ is the total number of classes and $AP_i$ is the AP of the $i$-th class. The overall mAP serves as our primary accuracy indicator, as it is the most widely adopted metric. It is complemented by the scale-specific variants $AP_S$ for small objects (pixel area less than $32^2$), $AP_M$ for medium objects (pixel area between $32^2$ and $96^2$), and $AP_L$ for large objects (pixel area greater than $96^2$), which provide detailed performance insights across object sizes.
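The single-class AP computation described above can be sketched in NumPy as follows. Assumptions: boxes are axis-aligned `(x1, y1, x2, y2)` tuples; detections are matched greedily, in descending score order, to the best still-unmatched ground truth whose IoU is at or above the threshold (as COCO-style evaluators do); precision is replaced by its monotone envelope; and AP is averaged over 101 equally spaced recall levels, as in the COCO protocol. The code illustrates the definitions and is not the mmdetection evaluator; function names are ours.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, scores, gts, iou_thr=0.5, n_points=101):
    """AP for one class: greedy matching, precision envelope, n-point recall sampling."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    matched = [False] * len(gts)
    tp = np.zeros(len(dets))
    for rank, i in enumerate(order):
        best_iou, best_j = iou_thr, -1
        for j, g in enumerate(gts):
            o = iou(dets[i], g)
            if not matched[j] and o >= best_iou:
                best_iou, best_j = o, j
        if best_j >= 0:
            matched[best_j] = True
            tp[rank] = 1.0                               # otherwise it is a false positive
    tp_cum = np.cumsum(tp)
    recall = tp_cum / max(len(gts), 1)
    precision = tp_cum / np.arange(1, len(dets) + 1)     # TP / (TP + FP) at each rank
    for r in range(len(precision) - 2, -1, -1):          # monotone precision envelope
        precision[r] = max(precision[r], precision[r + 1])
    ap = 0.0
    for level in np.linspace(0.0, 1.0, n_points):
        idx = np.searchsorted(recall, level, side="left")  # first rank reaching this recall
        ap += precision[idx] if idx < len(precision) else 0.0
    return ap / n_points


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0, 0, 10, 10), (50, 50, 60, 60), (20, 20, 30, 30)]
ap_example = average_precision(dets, [0.9, 0.8, 0.7], gts)
print(ap_example)
```

Here the false positive ranked second lowers precision for recall levels above 0.5, so the result falls between 0.83 and 0.84; mAP is then the mean of such per-class values.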

Model efficiency is evaluated through architectural lightweightness, measured by the parameter count (#Params) of the resampling module, and inference speed, quantified in frames per second (FPS) on the test platform. Additionally, we report computational complexity metrics: floating point operations (FLOPs) to measure computational requirements, and GPU memory usage (GPU RAM) during inference to assess practical deployment constraints. The FLOPs for a convolutional layer can be calculated as [35]:

$$\mathrm{FLOPs} = H \times W \times C_{in} \times C_{out} \times K_h \times K_w$$

where $H$ and $W$ are the output feature map dimensions, $C_{in}$ and $C_{out}$ are the input and output channels, and $K_h$ and $K_w$ are the kernel dimensions. The total FLOPs of the network is the sum over all layers. GPU memory usage is measured as the peak memory consumption during inference on a single image batch. Note that #Params refers to the total parameters of the compared resampling method when it replaces either all downsampling modules in the ResNet50 backbone or all upsampling modules in the FPN. Together, these metrics form a rigorous framework covering accuracy, efficiency, and deployability.
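As a worked instance of the convolution FLOPs count, taken here as H × W × C_in × C_out × K_h × K_w (one multiply-accumulate per kernel weight per output position, bias ignored), the short Python sketch below evaluates FLOPs and parameters for a single convolution. The layer shape is purely illustrative and not taken from the networks evaluated here; note that some tools report twice this number by counting multiplications and additions separately.

```python
def conv_flops(h_out, w_out, c_in, c_out, k_h, k_w):
    """Multiply-accumulate count of one convolution: H * W * C_in * C_out * K_h * K_w."""
    return h_out * w_out * c_in * c_out * k_h * k_w


def conv_params(c_in, c_out, k_h, k_w, bias=True):
    """Learnable weights (plus optional bias) of one convolution layer."""
    return c_in * c_out * k_h * k_w + (c_out if bias else 0)


# illustrative layer: 3x3 kernel, 256 -> 256 channels, 100 x 100 output map
flops = conv_flops(100, 100, 256, 256, 3, 3)
params = conv_params(256, 256, 3, 3)
print(f"{flops / 1e9:.2f} GFLOPs, {params} parameters")  # 5.90 GFLOPs, 590080 parameters
```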

4.4. Results and Analysis

4.4.1. Results and Analysis of Upsampling

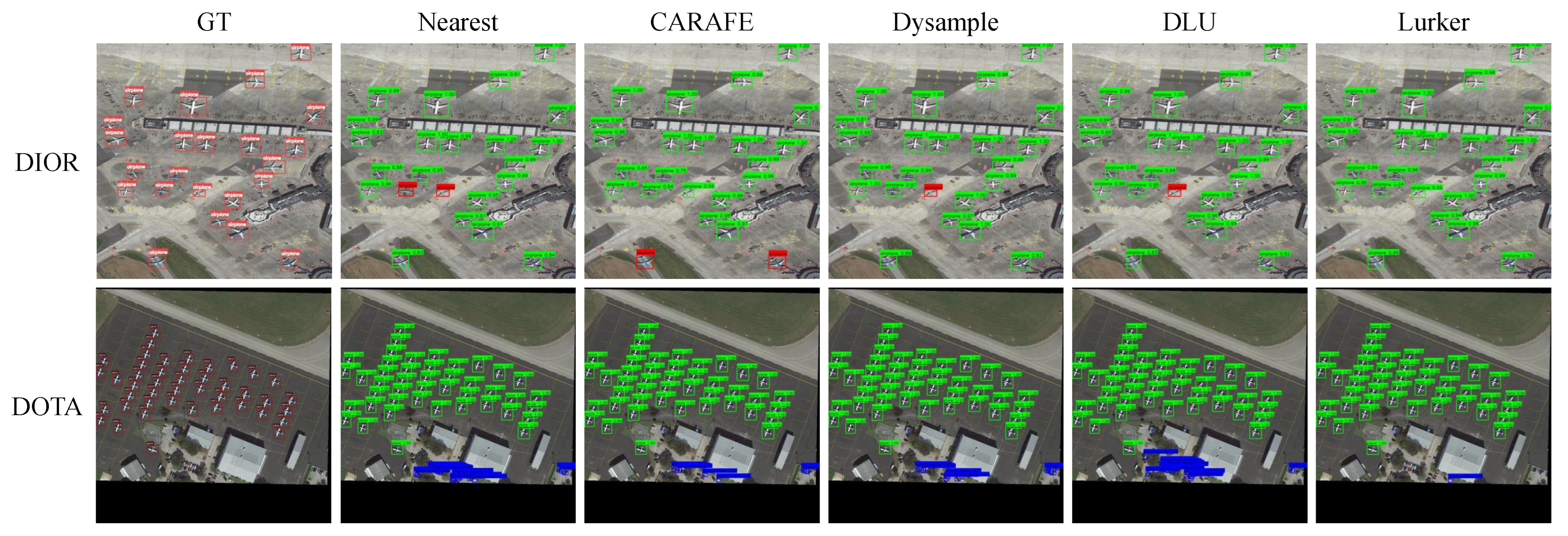

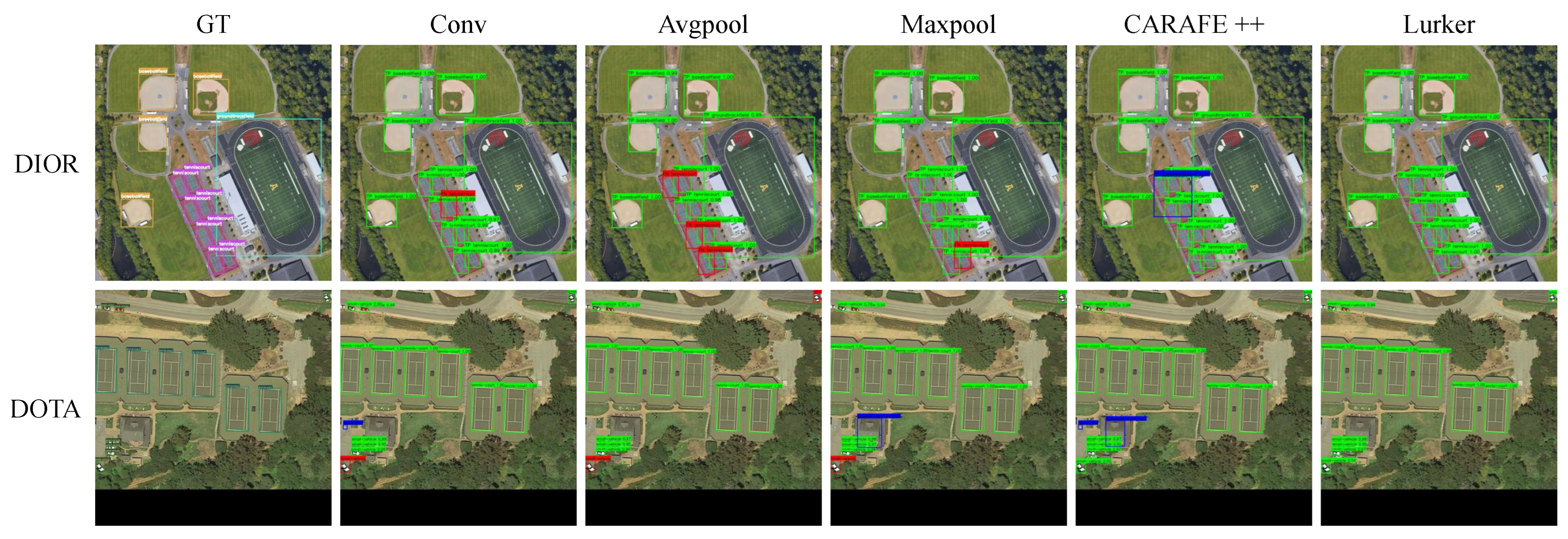

Figure 4 and Table 1 present comprehensive qualitative and quantitative comparisons of upsampling methods on the DIOR and DOTA datasets, evaluating detection performance, inference speed, model complexity, and computational efficiency.

The qualitative visualization in Figure 4 compares detection results using nearest-neighbor interpolation, CARAFE, DySample, DLU, and our proposed Lurker method. On the DIOR dataset (first row), our method detects all targets while the other approaches miss some, particularly when objects are difficult to distinguish from cluttered backgrounds. On the DOTA dataset (second row), all baseline methods produce multiple false alarms when processing small objects embedded in visually similar backgrounds, whereas Lurker produces only one false positive. These observations suggest that Lurker is better at distinguishing small targets within complex scenes.

Quantitatively, Table 1 shows that Lurker achieves a favorable balance between performance and efficiency across multiple metrics. On the DIOR dataset, Lurker attains a competitive mAP of 43.9 while achieving the highest inference speed among learnable upsamplers, 70.7 FPS, a 56% speed improvement over CARAFE. Crucially, Lurker does so with only 149.29 GFLOPs and 1758 MB of GPU RAM, the lowest computational complexity and memory footprint among all learnable methods.

On the more challenging DOTA dataset, Lurker performs strongly on small objects with an $AP_S$ of 27.6 (second-highest) and ranks second on medium objects with an $AP_M$ of 46.1, while maintaining the highest inference speed of 57.2 FPS. Notably, Lurker achieves these results with only 226.54 GFLOPs and 2312 MB of GPU RAM. This combination of low computational cost, modest memory usage, and competitive detection performance underscores Lurker's practical advantage for real-world applications where computational resources are constrained.

The strong performance on small and medium objects, combined with substantial efficiency gains across all metrics (FPS, GFLOPs, GPU RAM, and parameters), validates Lurker’s design approach of replacing complex learnable components with efficient bilinear interpolation while preserving effective feature representation. This efficiency-performance trade-off makes Lurker particularly suitable for deployment in resource-constrained environments typical of remote sensing applications.

4.4.2. Results and Analysis of Downsampling

Figure 5 and Table 2 present comprehensive qualitative and quantitative comparisons of downsampling methods on the DIOR and DOTA datasets, demonstrating Lurker's balance between detection accuracy and computational efficiency.

The qualitative visualization in Figure 5 compares detection results using strided convolution, average pooling, max pooling, CARAFE++, and our Lurker method. On the DIOR dataset (first row), the traditional methods (average pooling, max pooling, and strided convolution) show noticeable missed detections when handling dense, similar targets. While the learnable CARAFE++ method introduces false alarms in this scenario, our approach detects all targets in this example without errors. On the DOTA dataset (second row), all four comparison methods exhibit missed detections or false alarms when processing small targets against complex backgrounds, whereas our method identifies all targets without such errors. These visual comparisons illustrate Lurker's capability to detect small targets and distinguish them from challenging backgrounds.

Quantitatively, Table 2 demonstrates Lurker's efficiency advantages across multiple metrics. On the DIOR dataset, Lurker delivers competitive performance with an mAP of 41.1 while achieving a processing speed of 69.7 FPS. This frame rate substantially surpasses that of strided convolution at 43.2 FPS and CARAFE++ at 54.6 FPS. Importantly, Lurker attains these results with only 148.35 GFLOPs and 1818 MB of GPU RAM, showing superior efficiency compared to other learnable methods. This efficiency advantage is further emphasized by Lurker's minimal parameter count of 24.85K, an 82 percent reduction relative to CARAFE++.

Lurker's advantages are more pronounced on the complex DOTA dataset, where it achieves the best mAP among the compared methods, 38.7, surpassing all other methods, including CARAFE++ (38.2). Scale-specific analysis shows particularly strong performance on large objects, with an $AP_L$ of 47.9 compared to 46.5 for CARAFE++, which we attribute to Lurker's content-aware dynamic kernels that adaptively aggregate features over semantically meaningful receptive fields. Lurker also maintains the highest inference speed of 51.2 FPS while using only 225.11 GFLOPs and 2188 MB of GPU RAM, compared with 226.42 GFLOPs and 4358 MB of GPU RAM for CARAFE++. This roughly 50% reduction in memory usage, combined with slightly lower computational complexity and higher accuracy, underscores Lurker's practical advantages for processing large-scale remote sensing imagery.

The combination of leading accuracy, high speed, minimal parameters, and low computational footprint validates Lurker’s design principle: a simple yet effective dynamic kernel generation mechanism, based on bilinear interpolation from a compact source space, enables superior feature resampling for remote sensing object detection. Its consistent performance across all object scales and computational metrics confirms its effectiveness in handling the multiscale challenges inherent in remote sensing imagery while maintaining exceptional efficiency.
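To make the design principle above concrete, the following pure-Python sketch shows one possible reading of "dynamic kernel generation based on bilinear interpolation from a compact source space". It is not the authors' implementation. Assumptions (all hypothetical): a 1×1 encoder, `enc_weights`, predicts k² kernel logits per pixel at the source resolution only; for each target pixel these logit maps are bilinearly interpolated, softmax-normalized into a k×k kernel, and used to reassemble the k×k neighborhood of the covering source pixel. Replicate padding and the half-pixel coordinate convention are also assumptions. Because the target grid size is a free parameter, the same routine covers both upsampling and downsampling.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a flat list."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear(grid, y, x):
    """Bilinearly sample the 2-D list `grid` at fractional (y, x), clamped to the border."""
    h, w = len(grid), len(grid[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)  # floor, since y and x are non-negative here
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
    bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def resample(feat, enc_weights, out_h, out_w, k=3):
    """Content-aware resampling of `feat` (C maps of H x W) to out_h x out_w.

    enc_weights holds k*k rows of C weights: a hypothetical 1x1 encoder that
    predicts one kernel logit per tap at the *source* resolution only.
    """
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    r = k // 2
    # 1) Compact source space: one H x W logit map per kernel tap.
    logit_maps = [
        [[sum(wt[ch] * feat[ch][i][j] for ch in range(c)) for j in range(w)]
         for i in range(h)]
        for wt in enc_weights
    ]
    sy, sx = h / out_h, w / out_w
    out = [[[0.0] * out_w for _ in range(out_h)] for _ in range(c)]
    for oy in range(out_h):
        for ox in range(out_w):
            # Target pixel centre expressed in continuous source coordinates.
            cy, cx = (oy + 0.5) * sy, (ox + 0.5) * sx
            # 2) Bilinearly interpolate every tap's logit, then normalise to sum 1.
            kernel = softmax([bilinear(m, cy - 0.5, cx - 0.5) for m in logit_maps])
            # 3) Reassemble the k x k neighbourhood around the covering source pixel.
            ci, cj = min(int(cy), h - 1), min(int(cx), w - 1)
            for ch in range(c):
                acc = 0.0
                for t, wgt in enumerate(kernel):
                    ii = min(max(ci + t // k - r, 0), h - 1)  # replicate padding
                    jj = min(max(cj + t % k - r, 0), w - 1)
                    acc += wgt * feat[ch][ii][jj]
                out[ch][oy][ox] = acc
    return out


# Usage: one channel, 2x2 -> 4x4 (upsampling) with a zero encoder (uniform kernels).
up = resample([[[1.0, 2.0], [3.0, 4.0]]], [[0.0] for _ in range(9)], 4, 4)
```

Calling `resample(feat, w, 2 * H, 2 * W)` upsamples and `resample(feat, w, H // 2, W // 2)` downsamples through the same code path. Since only k² logits are predicted per source pixel and then interpolated, the encoder cost in this sketch does not grow with the output resolution, which is one way a compact source space could keep such an operator lightweight.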

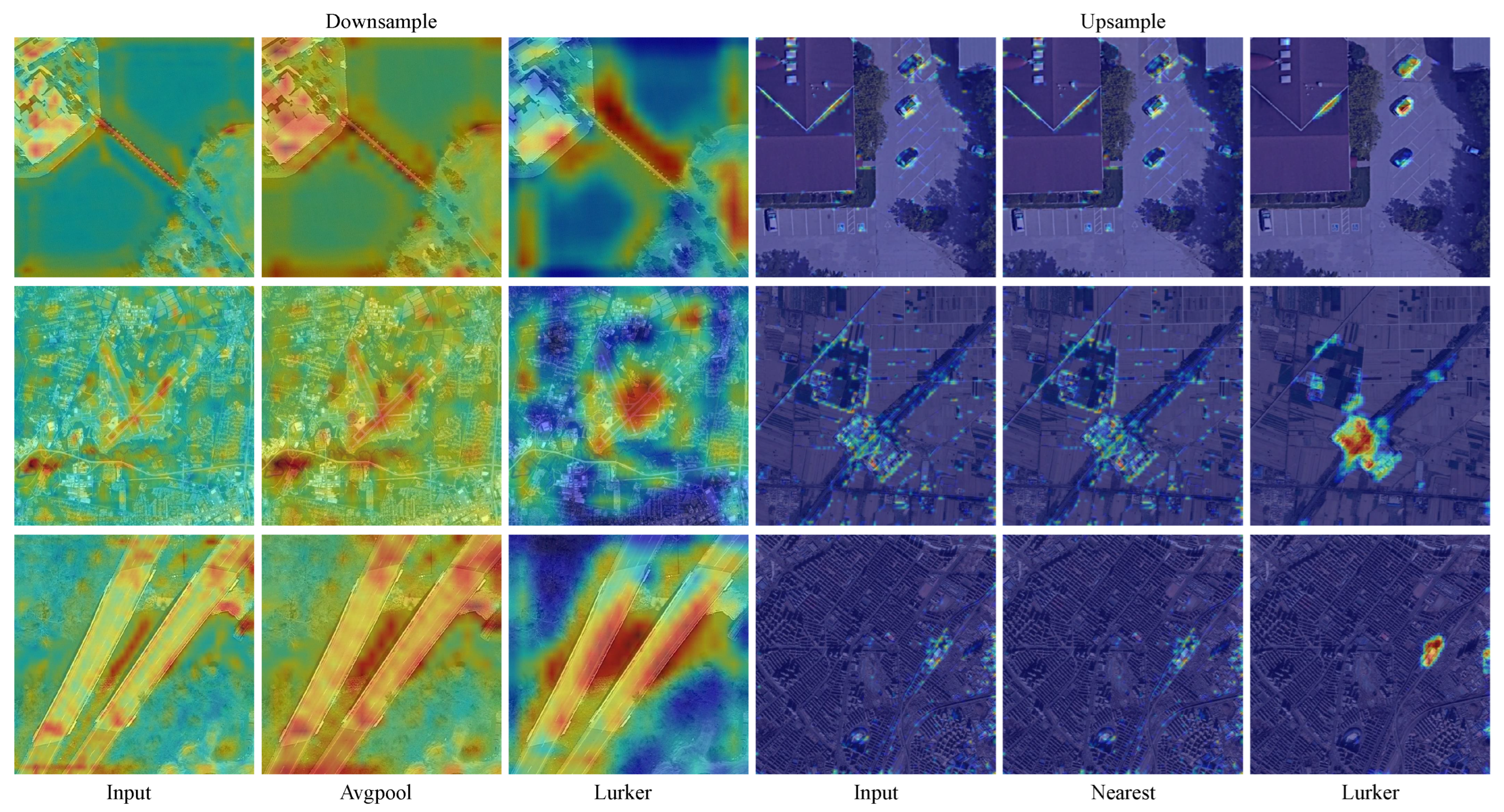

4.4.3. Visualization of the Lurker Mechanism

To intuitively illustrate how the proposed Lurker module operates, we present comparative visualizations of feature representations in Figure 6. The left three columns show downsampling results from ResNet50’s final residual layer, and the right three columns show upsampling results from the P2 level of the FPN. Specifically, columns 1–3 compare the original ResNet50 features, the average pooling output, and the Lurker downsampling result. Lurker concentrates attention more strongly on target regions; in the first row, for example, it focuses more clearly on the dam structures than average pooling does. Similarly, columns 4–6 present the original P2 features, the nearest-neighbor interpolation result, and the Lurker upsampling output. These comparisons indicate that Lurker captures higher-level semantic information during upsampling, yielding more precise target localization and stronger background suppression. For instance, in the second row, Lurker accurately localizes expressway service areas while markedly reducing background interference compared with nearest-neighbor interpolation.

4.5. Ablation Study

This subsection presents a comprehensive ablation study to validate the effectiveness of the proposed Lurker method. The study has two parts: module-quantity analysis and hyperparameter sensitivity evaluation. All ablated models are assessed by their absolute performance in terms of mAP, $\mathrm{AP}_{50}$, and $\mathrm{AP}_{75}$, as well as their improvement over the baseline. Here, $\mathrm{AP}_{50}$ and $\mathrm{AP}_{75}$ denote the mean average precision computed at IoU thresholds of 0.5 and 0.75, respectively. We first investigate the effect of progressively inserting Lurker modules into the FPN and ResNet50 architectures. We then analyze the sensitivity of key hyperparameters, including the encoder kernel size and the receptive field settings for upsampling and downsampling. Together, these experiments substantiate the design rationale of Lurker and its robustness in handling multiscale objects in remote sensing imagery.
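The difference between the two IoU thresholds can be shown with a small example. The sketch below assumes horizontal boxes in `(x1, y1, x2, y2)` form and only illustrates the per-detection threshold test; full AP computation additionally ranks detections by confidence, matches each ground truth at most once, and integrates precision over recall. The helper names are illustrative.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, gt, thr):
    """A detection counts as a true positive at threshold `thr` if IoU >= thr."""
    return iou(pred, gt) >= thr


gt = (0, 0, 10, 10)
pred = (2, 0, 12, 10)  # same size as gt, shifted 2 px horizontally
print(round(iou(pred, gt), 3), is_match(pred, gt, 0.5), is_match(pred, gt, 0.75))
```

Here the overlap is 80 of a 120-pixel union (IoU ≈ 0.667), so the same slightly misaligned box counts as correct for $\mathrm{AP}_{50}$ but as a false positive for $\mathrm{AP}_{75}$; $\mathrm{AP}_{75}$ therefore rewards tighter localization.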

Table 3 systematically evaluates the impact of progressively replacing nearest-neighbor interpolation with Lurker modules throughout the FPN architecture. The baseline configuration without Lurker modules reaches 43.2 mAP, 70.6 $\mathrm{AP}_{50}$, and 45.7 $\mathrm{AP}_{75}$. The results show a clear positive trend between the number of Lurker modules and performance: a single module changes performance only marginally, whereas additional modules yield consistent gains across all metrics. The four-module configuration performs best, raising mAP by 0.7 points to 43.9 and $\mathrm{AP}_{50}$ by 1.2 points to 71.8, with $\mathrm{AP}_{75}$ reaching 46.6, 0.9 points above the baseline. These progressive improvements confirm Lurker’s effectiveness in improving multiscale feature representation for remote sensing object detection, with complete replacement delivering the largest benefit.

Table 4 presents a systematic ablation study evaluating the impact of progressively replacing standard downsampling operations with Lurker modules throughout the ResNet50 architecture. The baseline configuration without Lurker modules reaches 39.5 mAP, 63.5 $\mathrm{AP}_{50}$, and 43.0 $\mathrm{AP}_{75}$. Replacing only the max-pooling layer with a single Lurker module already improves performance, with a 1.2-point gain in mAP and a 2.9-point gain in $\mathrm{AP}_{50}$. The two-module configuration shows a slight performance dip, but the three-module setup recovers, with gains of 0.7 points in mAP and 2.5 points in $\mathrm{AP}_{50}$. Replacing all four downsampling operations yields the largest gains: 1.6 points in mAP, 3.8 points in $\mathrm{AP}_{50}$, and 0.3 points in $\mathrm{AP}_{75}$. These results demonstrate Lurker’s capacity to enhance feature representation throughout the network, with complete replacement giving the most substantial benefit. The consistent improvement in $\mathrm{AP}_{50}$ across all configurations highlights Lurker’s particular effectiveness at the standard IoU threshold of 0.5, which makes it well suited to practical object detection in remote sensing imagery.

Table 5 and Table 6 present ablation studies on the key hyperparameters of the Lurker module, namely the encoder kernel size and the receptive field (kernel size) for upsampling and downsampling. For upsampling, an encoder kernel size of 3 with an upsampling kernel size of 3 achieves the best performance, 44.2 mAP. We nevertheless select an encoder kernel size of 1 with an upsampling kernel size of 5, which attains a competitive 43.9 mAP. This choice is driven by practical deployment needs: the small drop of 0.3 mAP is offset by a substantial reduction in parameter count and computational overhead, consistent with Lurker’s emphasis on an extremely lightweight design. For downsampling, the highest performance, 41.1 mAP, is achieved with encoder and downsampling kernel sizes of 5 and 7, respectively. We instead adopt the more efficient setting of 1 and 3, which yields 40.9 mAP, a marginal decrease of 0.2 mAP. This configuration significantly reduces computational complexity, an important advantage given how frequently downsampling is applied in the backbone network. These choices reflect Lurker’s core design philosophy of maintaining high detection accuracy while minimizing computational cost, and the results show that the method balances performance and efficiency in a way that suits resource-constrained remote sensing applications.
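To see why smaller encoder kernels save parameters so sharply, consider a hypothetical encoder layout: a single k_enc × k_enc convolution with bias that maps C input channels to k² kernel logits per source position, where k is the resampling kernel size. The section does not state Lurker's exact encoder structure or channel count, so both the layout and C = 64 below are illustrative assumptions, and the counts are not the parameter figures reported in Table 2.

```python
def encoder_params(c_in, k_enc, k_kernel, bias=True):
    """Parameters of a hypothetical k_enc x k_enc conv predicting k_kernel**2 logits.

    Assumed layout (not confirmed by the paper): c_in input channels,
    k_kernel**2 output channels, one optional bias per output channel.
    """
    out_ch = k_kernel ** 2
    return c_in * out_ch * k_enc ** 2 + (out_ch if bias else 0)


C = 64  # illustrative channel count, not taken from the paper
settings = {
    "up, best (3, 3)": (3, 3),
    "up, chosen (1, 5)": (1, 5),
    "down, best (5, 7)": (5, 7),
    "down, chosen (1, 3)": (1, 3),
}
for label, (k_enc, k) in settings.items():
    print(f"{label}: {encoder_params(C, k_enc, k)} parameters")
```

Under these assumptions the chosen upsampling setting needs about 3.2× fewer encoder weights than the best-accuracy one (1625 vs. 5193), and the chosen downsampling setting needs more than a hundred times fewer (585 vs. 78449), because the count scales with both k_enc² and k². This illustrates why trading 0.2 to 0.3 mAP for the smaller kernels can be worthwhile, especially for downsampling operators that are repeated throughout the backbone.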

4.6. Discussion

In this section, we first position the proposed framework relative to existing research on dynamic kernel learning and remote sensing feature resampling. We then discuss the limitations of Lurker and, finally, outline directions for future research. Current approaches in remote sensing can be grouped into three main categories: kernel-based generation (for example, CAFUS [23], CAU [24], ADM [10], CADM [12], EDown [31], ScDown [36], CARAFE [2], and DLU [4]), pixel-displacement methods (such as SGFU [25], DySample [8], FGUM [27], and GU [26]), and feature-rearrangement techniques (including SP-Conv [37], LRU [28], and Sub-Pixel Conv). While these approaches are promising, each category has distinct limitations, which we analyze below.

Kernel-based methods: These methods typically implement content-aware filtering but often require substantial computational resources. This is evident in CARAFE’s large kernel prediction, the multi-stage architectures of CAU and CAFUS, and the channel-spatial attention mechanisms of ADM and CADM, all of which introduce considerable parameters and complexity. EDown and ScDown pursue more efficient designs, but they usually sacrifice kernel adaptability or dynamic range to obtain these efficiency gains.

Pixel-displacement methods: Methods such as DySample, GU, and SGFU achieve operational efficiency by sampling from a small, fixed local neighborhood. This inherently limits their capacity to incorporate wider contextual information, frequently causing loss of fine details and boundary artifacts in complex remote sensing imagery.

Feature-rearrangement methods: Techniques such as Sub-Pixel Conv and LRU are limited by their dependence on static transformations applied uniformly regardless of content. Because they cannot adapt to local semantic variations, they struggle to handle the high heterogeneity of geospatial imagery.

As a representative of the kernel-based generation paradigm, Lurker retains the essential concept of dynamic kernel generation in a significantly simplified structure, making it the most lightweight operator in its category while preserving competitive accuracy. In contrast to pixel-displacement methods, Lurker overcomes the limitation of constrained receptive fields by employing content-adaptive kernels with configurable sizes, which allows it to capture the multiscale contextual information that is crucial for interpreting complex geospatial scenes. Unlike feature-rearrangement techniques, which apply uniform transformations irrespective of local content and therefore lack flexibility, Lurker employs a dynamic sampling mechanism that adapts to local semantic variations. This capability is particularly important given the high heterogeneity of remote sensing imagery. By integrating these strengths, Lurker shows that effective resampling in remote sensing must balance semantic awareness against operational efficiency, suggesting a direction for future designs that achieve robust performance without excessive complexity.

Despite these advantages, several limitations warrant attention. The main difficulty arises when Lurker is incorporated as a downsampling operator into pre-trained models: the structural changes break weight compatibility and hinder effective use of pre-trained initialization. This limitation is reflected in the experimental results, where downsampling configurations consistently perform worse than upsampling configurations on both datasets, with a particular impact on small and medium object detection. Moreover, although the qualitative assessment illustrates how Lurker focuses on target regions, it relies on a limited set of scenes that cannot represent performance across varied environmental conditions and sensor types; generalizability to broader operational settings therefore remains only partially confirmed. Finally, the evaluation uses original-resolution images and does not consider image compression, leaving practical deployment concerns partially unresolved given that compression artifacts are common in real-world remote sensing applications.

To address these challenges, future research will concentrate on three directions. First, we will develop weight-adaptation methods that resolve the compatibility problems between Lurker and pre-trained architectures, keeping output distributions consistent while preserving learned representations. Second, we will examine how image compression affects Lurker’s performance and investigate its integration into complete preprocessing pipelines to reduce the information loss caused by standard compression techniques. Finally, we will extend validation across diverse geographical settings, sensor properties, and operational scenarios to evaluate generalization and robustness more fully. Through these investigations, we aim to improve Lurker’s practical value and reliability for real-world remote sensing deployments.

5. Conclusions

This paper presents Lurker, a learned and unified resampling framework that addresses critical challenges in remote sensing object detection. Its core contribution is a lightweight content-aware kernel generation mechanism that handles both upsampling and downsampling within a single architectural paradigm. Systematic evaluation on the challenging DIOR and DOTA datasets shows that Lurker achieves detection accuracy comparable or superior to state-of-the-art methods while keeping parameter overhead exceptionally low: it reduces parameters by approximately 90% compared with CARAFE and 82% compared with CARAFE++, setting a new reference point for efficiency among learnable resampling operators. The ablation studies support Lurker’s design principles, confirming the effectiveness of its three-component architecture and the robustness of its hyperparameter selections. Our analysis indicates that combining compact source kernel generation with efficient bilinear interpolation enables strong feature representation at low computational complexity. The method is particularly effective at handling the multiscale challenges inherent in remote sensing imagery, showing consistent performance improvements across small, medium, and large object categories. While Lurker represents a significant advance in efficient resampling for remote sensing applications, our discussion has identified important limitations that warrant future investigation. In particular, the framework cannot yet fully exploit pre-trained weights when integrated as a downsampling operator, which leaves room for further refinement. In addition, studying the effects of image compression and validating the method across broader environmental conditions will be crucial for practical deployment.
Despite these challenges, Lurker establishes a solid foundation for future research in lightweight dynamic resampling, offering a compelling solution for resource-constrained remote sensing applications where the balance between accuracy and efficiency is paramount.