1. Introduction

In recent years, the progressive maturation of precision sensors, advanced batteries, autonomous navigation systems, and computer vision technologies has enabled unmanned aerial vehicles (UAVs) to achieve widespread adoption across a diverse range of application scenarios, including geospatial mapping [1], parcel delivery [2], public security patrols [3], emergency response [4], and agricultural monitoring [5]. Owing to their low deployment cost, broad observational coverage, and agile task scheduling, UAVs have demonstrated significant practical value in reducing manpower demands, accelerating operational workflows, and enhancing task safety. However, small object detection, which plays a vital role in advancing UAV intelligence, is still at a relatively immature stage [6,7]. This technical lag has led to unsatisfactory detection accuracy in complex UAV aerial scenarios, thereby posing a major constraint on the further adoption and evolution of UAVs in various industries. Although the advent of deep neural networks, particularly convolutional architectures, has markedly advanced object detection capabilities [8,9,10,11], existing algorithms still fail to meet the precision and robustness demands of UAV applications. The main challenges are as follows. First, UAV imagery often contains numerous small objects with subtle visual cues, making it difficult for conventional detection frameworks to extract sufficiently discriminative features, which in turn degrades detection accuracy. Second, the wide coverage and dynamic viewpoints inherent to aerial imagery introduce complex terrain textures and varying lighting conditions, both of which can significantly hinder the detection process and compromise the reliability of the results.

To address the above challenges, researchers have conducted extensive studies on enhancing the representation of small objects, among which multi-scale feature fusion has become a widely adopted approach for improving detection performance [12,13]. Although existing multi-scale fusion methods enhance small object detection by aggregating semantic and spatial information across different feature levels [14,15], they still suffer from several limitations. These methods typically rely on direct concatenation or a simple weighted summation of multi-scale feature maps. While such operations help alleviate the problem of insufficient feature expression, they lack explicit modeling of global semantic context, making it difficult to capture the implicit relationships between small objects and their surrounding environments. As a result, these methods are often inadequate in characterizing object–scene interactions, which compromises detection accuracy and robustness. In contrast, the proposed Scene Interaction Modeling Module (SIMM) introduces a simplified self-attention mechanism over deep features to generate compact scene embeddings, which are then combined with spatial descriptors obtained via the channel-wise pooling of shallow features. By performing interactive modeling between these two components, the SIMM explicitly incorporates scene-level priors into the fusion process. This enables the network to focus on the global contextual relationships between small objects and the scene, guiding the selective enhancement or suppression of shallow spatial responses under semantic supervision. Consequently, the SIMM significantly improves the representational distinctiveness and discriminative power of small object features, achieving more accurate localization and more reliable cross-scale alignment, particularly in complex background conditions.

Studies have shown that the contextual information surrounding small objects can provide valuable cues for object detection, and effectively leveraging this information helps improve detection performance [16,17]. Existing contextual information-based methods typically introduce fixed receptive fields to capture local or global context [18,19,20]. However, this fixed design has notable limitations: it fails to accommodate the varying contextual needs of small objects with different characteristics, and it may also introduce redundant background information that weakens the discriminability of object features. This issue is particularly pronounced in UAV aerial imagery, where small objects vary significantly in categories, textures, and fine-grained details. To address this problem, we propose the Dynamic Context Modeling Module (DCMM), which constructs two receptive field branches and dynamically generates their weights based on the input features. By selectively combining the responses of these branches in the spatial dimension, the DCMM enables adaptive context modeling. This design breaks through the limitations of fixed receptive fields, allowing the network to flexibly adjust the contextual information needed for different small objects. As a result, it facilitates feature enhancement in an object-specific manner and effectively mitigates the problem of missed detections caused by the improper use of contextual information.

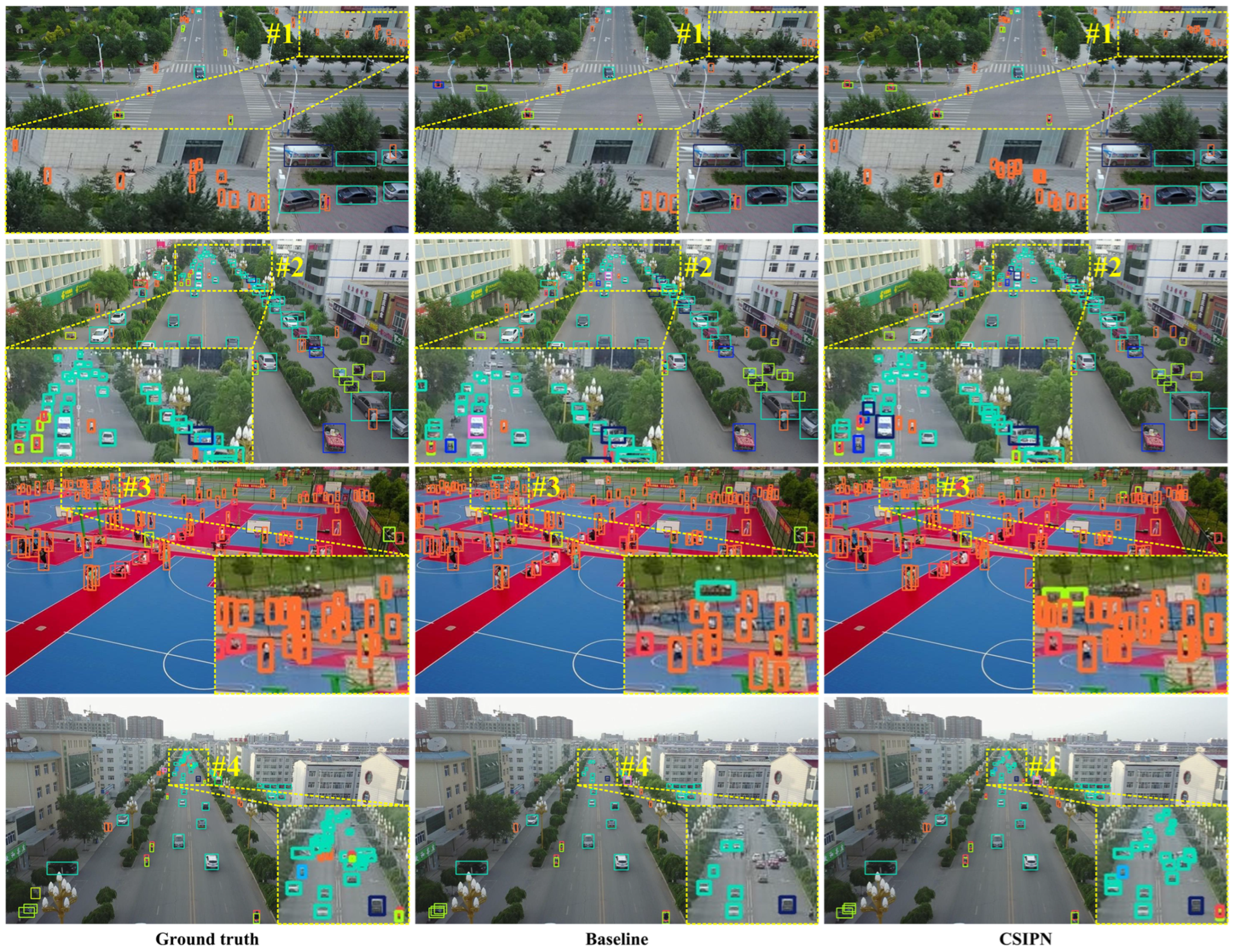

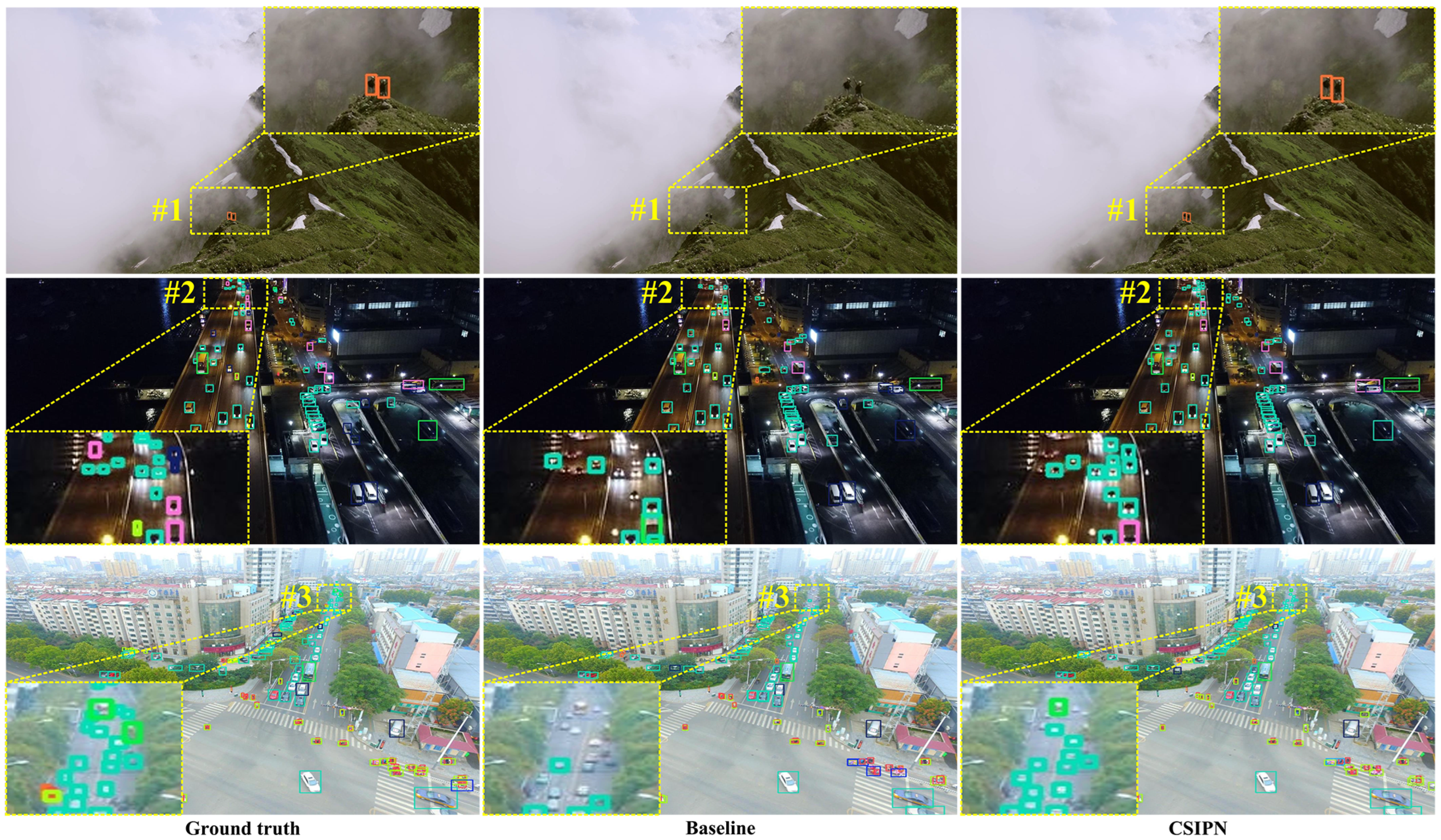

To comprehensively enhance the representation of small objects from the perspectives of scene understanding, context modeling, and semantic fusion, we propose a novel detector tailored for UAV aerial imagery, which is named the Context–Semantic Interaction Perception Network (CSIPN). This network comprises three key modules: the SIMM, the DCMM, and the Semantic-Context Dynamic Fusion Module (SCDFM). Specifically, in the scene interaction modeling stage, the SIMM constructs a simplified self-attention mechanism on deep features to generate compact scene embedding vectors while applying channel-wise pooling operations to shallow features to obtain spatial descriptors. These two representations are then jointly modeled to explicitly capture the semantic correlations between small objects and the global scene, enabling the selective enhancement or suppression of shallow spatial responses under the guidance of global semantic priors. This process improves the discriminability and saliency of small object representations. In the context modeling stage, the DCMM builds context modeling branches with diverse receptive fields and dynamically assigns weights based on input features, enabling the selective integration and enhancement of contextual information. By overcoming the limitations of fixed receptive fields, the DCMM strengthens the network’s ability to adaptively capture the contextual cues needed by different small objects, thereby improving detection recall. In the semantic-context dynamic fusion stage, the SCDFM adaptively integrates the deep semantic features extracted from the backbone with the context-enhanced representations generated by the DCMM, supplementing and balancing semantic and contextual cues to facilitate comprehensive feature interactions and refine small object representations.

Most existing UAV aerial datasets primarily focus on urban traffic scenarios, leading to deep learning-based methods that often suffer from poor generalization and limited robustness in real-world applications. To address this issue, we construct a new UAV-based object detection dataset named WildDrone, targeting diverse and unstructured wild environments, with the aim of enhancing model adaptability and practical performance in complex scenes. Comprehensive evaluations are performed on the VisDrone-DET [21], TinyPerson [22], WAID [23], and WildDrone datasets. Experimental results show that the CSIPN surpasses current state-of-the-art detectors in both detection accuracy and robustness.

The main contributions of this work are summarized as follows:

- A novel Context–Semantic Interaction Perception Network (CSIPN) is proposed, which integrates scene understanding, dynamic context modeling, and semantic fusion to effectively improve the detection performance of small objects in UAV aerial imagery.

- A Scene Interaction Modeling Module (SIMM) is designed to explicitly capture the relationships between small objects and the global scene. Shallow spatial responses are guided to be selectively enhanced or suppressed under global semantic priors, thereby strengthening semantic perception and discriminative modeling in complex backgrounds.

- A Dynamic Context Modeling Module (DCMM) is presented, in which multi-receptive-field branches are employed with dynamically assigned weights. This enables the adaptive selection and integration of contextual information across different scales, facilitating the dynamic compensation of missing appearance cues for small objects and improving detection completeness and robustness.

- A Semantic-Context Dynamic Fusion Module (SCDFM) is proposed, which adaptively integrates the deepest semantic information with the shallow contextual information, effectively leveraging their complementary relationship to enhance the representations of small objects.

- To improve the generalization and practical applicability of detection models in complex environments, a new UAV-based dataset named WildDrone is constructed, which focuses on diverse and unstructured wild scenes.

- Extensive experiments on UAV aerial datasets including VisDrone-DET, TinyPerson, WAID, and WildDrone validate the superior performance of the proposed method in small object detection tasks.

3. Methods

3.1. Overview of CSIPN

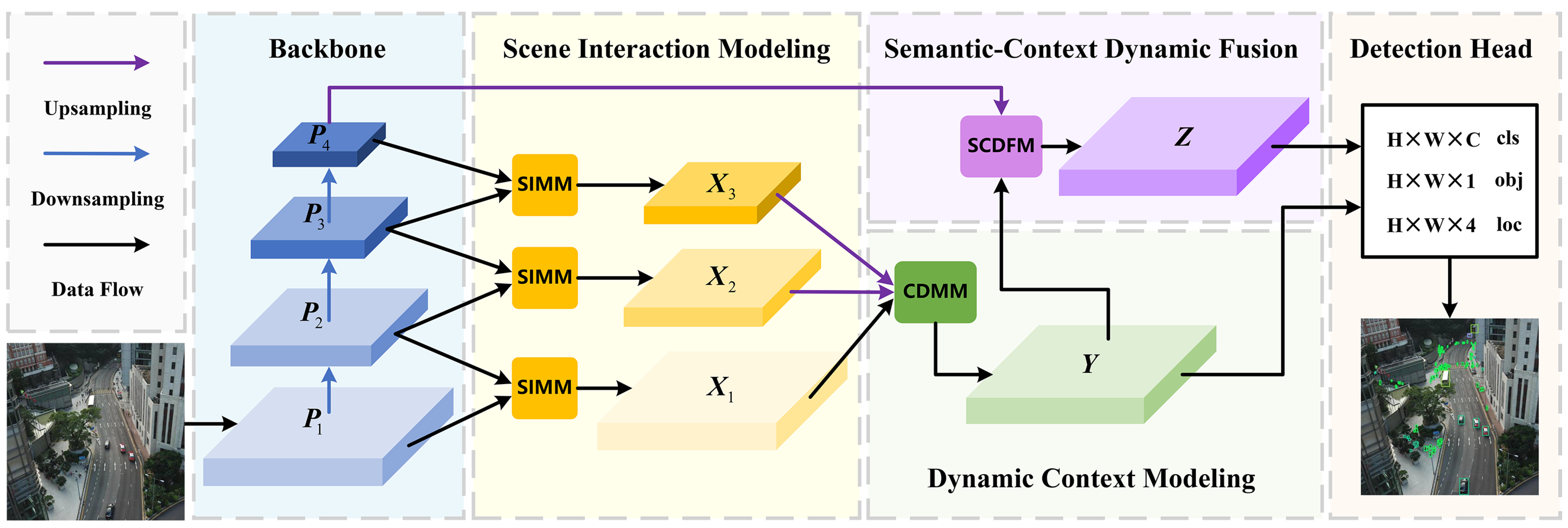

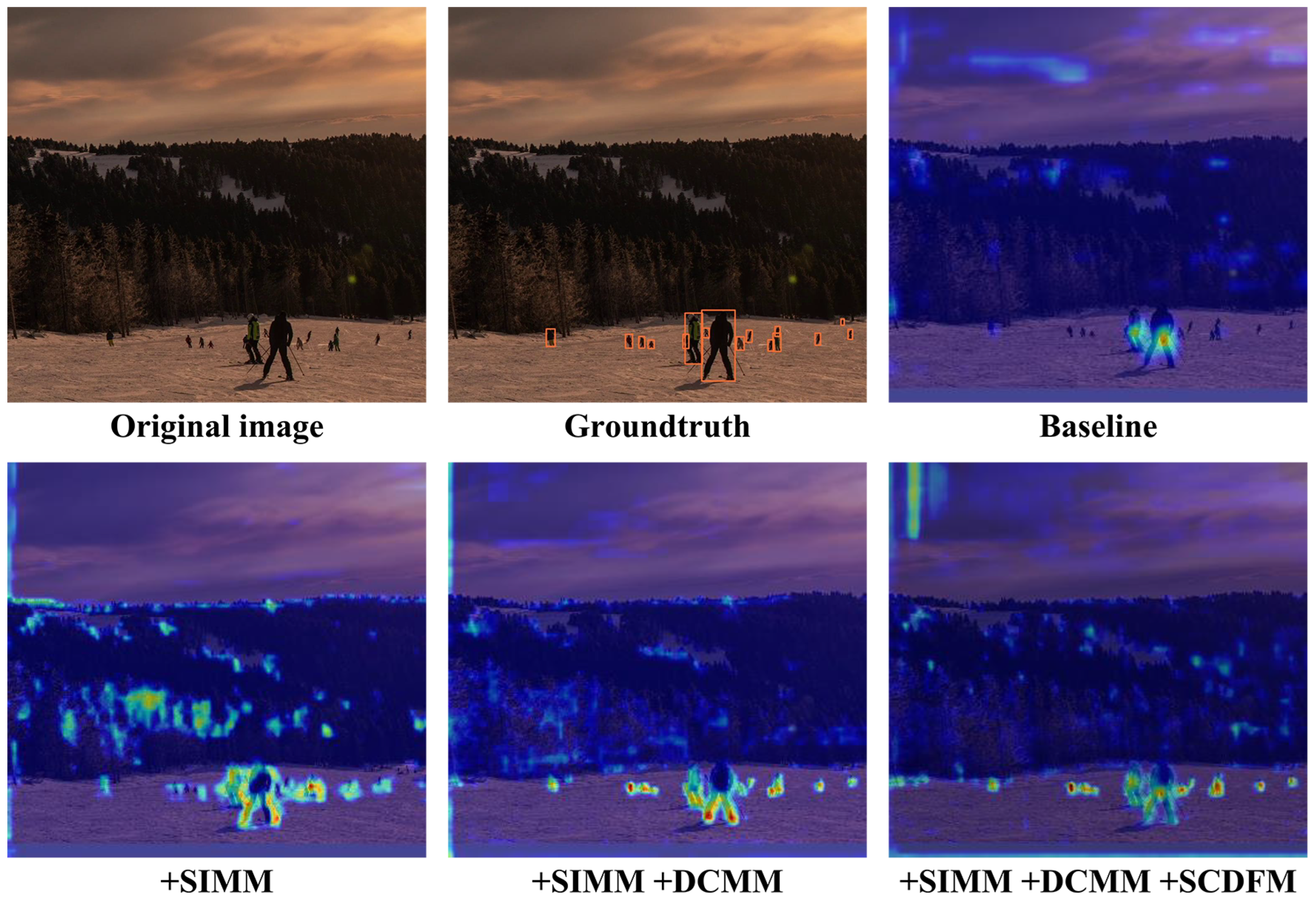

The overall architecture of the CSIPN is illustrated in Figure 1. The network consists of five stages: backbone feature extraction, scene interaction modeling, dynamic context modeling, semantic-context fusion, and prediction. In the backbone feature extraction stage, the network extracts fundamental features from the input image, generating multi-level feature representations for subsequent modeling. These features are denoted as $F_i \in \mathbb{R}^{C_i \times H_i \times W_i}$, where $C_i$, $H_i$, and $W_i$ represent the number of channels, height, and width of the feature map at level $i$, respectively.

In the scene interaction modeling stage, the network employs the SIMM to establish the relationships between small objects and the global scene. This module leverages deep semantic cues to guide the spatial responses of shallow features, thereby achieving implicit semantic alignment and providing semantic priors for subsequent context modeling.

In the dynamic context modeling stage, the DCMM dynamically aggregates contextual information from multiple receptive field branches under the guidance of the semantic priors provided by the SIMM. Through multi-branch weighting and spatially selective fusion, the DCMM adaptively captures contextual dependencies suited to different objects, effectively enhancing the discriminative power of foreground features and alleviating missed detections in UAV aerial imagery.

In the semantic-context fusion stage, the SCDFM dynamically fuses the deep semantic features extracted by the backbone with the context-enhanced representations generated by the DCMM. This module adaptively selects and integrates complementary information from different features, ensuring that the fused representations are semantically consistent and mutually complementary.

Finally, in the prediction stage, the features produced by the DCMM and SCDFM are fed into the detection heads to generate the final detection results. Overall, the CSIPN establishes a progressive and collaborative feature modeling framework: the SIMM provides semantic guidance while achieving cross-scale fusion; under this guidance, the DCMM performs dynamic context modeling; and the SCDFM further builds a dynamic complementarity between semantics and context, thereby enhancing the overall discriminability and robustness for small object detection.

3.2. Scene Interaction Modeling Module (SIMM)

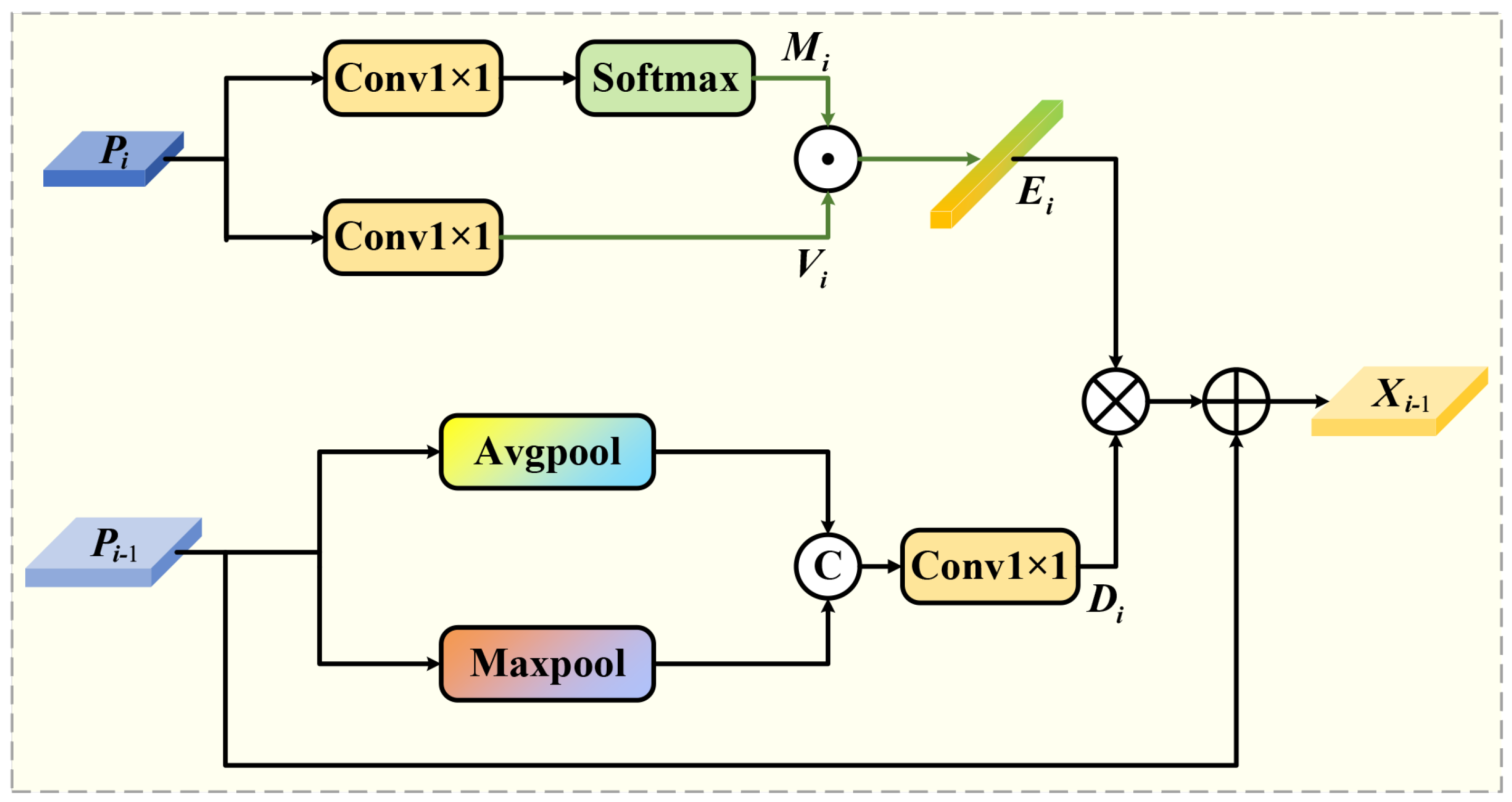

Unlike conventional Transformer-based global attention mechanisms that rely on full self-attention computation, the SIMM introduces a lightweight and semantically guided interaction strategy that efficiently captures global contextual dependencies while selectively enhancing shallow spatial details, thereby improving the saliency and discriminability of small object representations. Specifically, the SIMM approximates global attention through a series of convolutional and reshaping operations to generate a compact global scene embedding vector without computing the full $N \times N$ attention matrix, where $N$ denotes the total number of pixels in the input feature map. This design significantly reduces computational complexity while maintaining the capability to capture long-range dependencies. More importantly, by integrating this global semantic prior with shallow spatial responses, the SIMM explicitly models the interaction between global semantics and fine-grained spatial information, which distinguishes it from existing global attention modules.

To further mitigate the semantic inconsistency between deep and shallow features during multi-scale fusion, the SIMM incorporates a guided interaction mechanism to achieve implicit semantic alignment. The scene embedding generated from deep features acts as a semantic prior to modulate the spatial responses of shallow features. This semantic guidance selectively enhances the spatial details associated with small objects while suppressing irrelevant background interference. As a result, the shallow spatial responses are filtered and refined under the guidance of global semantics, achieving alignment with the global scene information extracted from deep features. Consequently, the fused features across different scales preserve both spatial coherence and semantic consistency, leading to more robust and discriminative multi-scale representations that enhance small object detection performance. The detailed structure of this module is shown in Figure 2.

We first design a lightweight self-attention mechanism to perform global relation modeling on the deep feature $F_d$, which contains richer semantic information, aiming to capture long-range dependencies between pixels. Specifically, we approximate the similarity between the Query and Key matrices through convolution operations and feature dimension reshaping, which significantly reduces computational overhead while maintaining effective modeling capability. The detailed process is as follows:

$$A = \mathrm{Softmax}\left(\mathcal{R}\left(\mathrm{Conv}_{\mathrm{SiLU}}(F_d)\right)\right)$$

where $A$ denotes the similarity matrix, $\mathcal{R}(\cdot)$ denotes the reshape operation, $\mathrm{Softmax}(\cdot)$ denotes the Softmax function, and $\mathrm{Conv}_{\mathrm{SiLU}}(\cdot)$ denotes a convolution layer with a SiLU activation function. The value matrix $V$ is generated as follows:

Subsequently, we integrate the similarity matrix $A$ and the value matrix $V$ to construct a one-dimensional scene embedding representation $E$, which captures the semantic correlation between foreground small objects and the scene, and it is expressed as follows:

$$E = A \odot V$$

where ⊙ denotes the function used to estimate the similarity between features. To accelerate computation, we efficiently implement this process using matrix multiplication.
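The embedding step described above can be illustrated with a small NumPy sketch. It is only an approximation under stated assumptions: the two projections are modeled as 1×1 convolutions (per-pixel linear maps), SiLU is applied to the attention logits only, and the names `scene_embedding`, `w_attn`, and `w_val` are hypothetical rather than taken from the authors' implementation. The point it shows is that one attention weight per pixel suffices, so no $N \times N$ matrix is ever formed.

```python
import numpy as np

def silu(x):
    # SiLU activation: x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def softmax(x):
    # Numerically stable softmax over a 1-D array
    e = np.exp(x - x.max())
    return e / e.sum()

def scene_embedding(deep_feat, w_attn, w_val):
    """Pool a (C, H, W) deep feature into a C-dimensional scene embedding.

    w_attn: (C,)   assumed 1x1 conv producing one attention logit per pixel
    w_val:  (C, C) assumed 1x1 conv producing the value matrix
    """
    c, h, w = deep_feat.shape
    flat = deep_feat.reshape(c, h * w)   # reshape to (C, N), N = H * W
    logits = silu(w_attn @ flat)         # (N,) one logit per pixel
    attn = softmax(logits)               # (N,) weights that sum to 1
    values = w_val @ flat                # (C, N) value matrix
    return values @ attn                 # (C,) a single matrix-vector product
```

With all-zero attention weights the softmax becomes uniform, so the embedding reduces to plain global average pooling; learned weights instead emphasize the pixels that matter for the scene.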

To comprehensively capture the spatial fine-grained information of small objects, channel-wise max pooling and channel-wise average pooling are applied to the shallow feature $F_s$, followed by a convolution layer that fuses these two types of spatial descriptors to obtain the spatial detail response $P$:

$$P = \mathrm{Conv}\left(\mathrm{Concat}\left(\mathrm{AvgPool}(F_s), \mathrm{MaxPool}(F_s)\right)\right)$$

where $\mathrm{Concat}(\cdot)$ denotes channel-wise concatenation, $\mathrm{AvgPool}(\cdot)$ represents channel-wise average pooling, and $\mathrm{MaxPool}(\cdot)$ indicates channel-wise max pooling.

To selectively enhance or suppress shallow spatial responses under the guidance of global semantic priors and thereby improve the saliency and discriminability of small objects, the one-dimensional scene embedding $E$ is applied to weight the spatial detail response $P$. A residual structure is then applied to ensure training stability, resulting in the final scene interaction feature, which is expressed as

where ⊕ denotes element-wise addition and ⊗ denotes broadcast element-wise multiplication.
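As a rough illustration of the modulation step, the NumPy sketch below pools a shallow feature along the channel axis, fuses the two resulting maps, weights the result by a scene embedding through broadcasting, and adds a residual. Several details are assumptions made only for this sketch: the fusing convolution is reduced to a 1×1 mix with hypothetical weights `w_fuse`, the embedding is assumed to have as many entries as the shallow feature has channels, and the residual branch is assumed to be the shallow feature itself.

```python
import numpy as np

def spatial_detail_response(shallow, w_fuse):
    """Channel-wise average and max pooling of a (C, H, W) feature,
    fused into one (H, W) map by an assumed 1x1 convolution."""
    avg_map = shallow.mean(axis=0)               # (H, W)
    max_map = shallow.max(axis=0)                # (H, W)
    stacked = np.stack([avg_map, max_map])       # (2, H, W) channel-wise concat
    return np.tensordot(w_fuse, stacked, axes=1) # (H, W)

def scene_interaction(shallow, embedding, w_fuse):
    """Weight the spatial response by the scene embedding, then add a residual.
    Assumption: the residual branch is the shallow feature itself."""
    resp = spatial_detail_response(shallow, w_fuse)      # (H, W)
    modulated = embedding[:, None, None] * resp[None]    # broadcast to (C, H, W)
    return modulated + shallow                           # element-wise addition
```

An embedding entry near zero leaves the corresponding channel unchanged apart from the residual, while larger entries amplify the pooled spatial response in that channel.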

3.3. Dynamic Context Modeling Module (DCMM)

Due to the lack of distinctive appearance features in small objects, utilizing contextual information can be beneficial for detection to a certain extent. However, the excessive supplementation of contextual information may introduce a significant amount of irrelevant background and noise, reducing the detector’s discriminative ability and increasing the false detection rate. Considering the diversity of object types and scales in UAV aerial images, the amount of contextual information required for detecting different objects also varies.

To address this, the DCMM is designed. It consists of two receptive field branches, whose weights (i.e., spatial selection masks) are dynamically determined based on the input. It is worth noting that the DCMM does not require any additional loss function to guide the learning of dynamic weights. Specifically, the dynamic weights depend both on the convolutional parameters learned during training and the characteristics of the input feature maps. The learned convolutional parameters define the rules for weight generation, while the input feature maps provide the information necessary to instantiate these rules. During training, the gradients from the detection loss are backpropagated through the DCMM, automatically optimizing the convolutional parameters that generate the spatial selection masks. During inference, these learned convolutional parameters interact with the input feature maps to dynamically generate the weights of the receptive field branches.

By selectively combining these receptive field branches in the spatial dimension, the DCMM overcomes the limitation of fixed receptive fields and enables the dynamic selection of contextual information tailored to different small objects. This allows the DCMM to adaptively supplement the contextual cues needed for detecting various small objects, enhancing distinct foreground features and effectively alleviating the issue of severe missed detections in UAV aerial imagery.

As shown in Figure 3, the input to the DCMM consists of $X_1$, $X_2$, and $X_3$. First, $X_3$ is upsampled by a factor of 4 and $X_2$ is upsampled by a factor of 2. Then, the upsampled $X_3$, upsampled $X_2$, and $X_1$ are concatenated along the channel dimension and passed through a convolution layer to adjust the number of channels, resulting in $X$:

$$X = \mathrm{Conv}\left(\mathrm{Concat}\left(\mathrm{Up}_4(X_3), \mathrm{Up}_2(X_2), X_1\right)\right)$$

where $\mathrm{Up}_n(\cdot)$ denotes $n$-times upsampling using the nearest-neighbor interpolation method.
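The input preparation described above (nearest-neighbor upsampling, channel-wise concatenation, and a channel-adjusting convolution) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: the channel-adjusting layer is assumed to be a 1×1 convolution, and the names `upsample_nearest`, `fuse_scales`, and `w_proj` are hypothetical.

```python
import numpy as np

def upsample_nearest(x, n):
    """n-times nearest-neighbor upsampling of a (C, H, W) feature map."""
    return x.repeat(n, axis=1).repeat(n, axis=2)

def fuse_scales(fine, mid, coarse, w_proj):
    """Bring three scales to the finest resolution, concatenate along the
    channel axis, and project with an assumed 1x1 convolution.

    fine: (C1, H, W), mid: (C2, H/2, W/2), coarse: (C3, H/4, W/4)
    w_proj: (C_out, C3 + C2 + C1) hypothetical projection weights
    """
    stacked = np.concatenate(
        [upsample_nearest(coarse, 4), upsample_nearest(mid, 2), fine], axis=0
    )
    # A 1x1 convolution is a per-pixel linear map over the channel axis
    return np.einsum("oc,chw->ohw", w_proj, stacked)
```

Nearest-neighbor interpolation simply replicates each value into an $n \times n$ block, so it adds no learnable parameters before the fusion convolution.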

Then, the fused feature $X$ passes through two receptive field branches, producing outputs $U_1$ and $U_2$:

The outputs from the different receptive field branches, $U_1$ and $U_2$, are concatenated to form $U$:

$$U = \mathrm{Concat}(U_1, U_2)$$

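Next, channel-wise max pooling and channel-wise average pooling are applied to $U$ to extract the spatial relationship:

$$SA_{\max} = \mathrm{MaxPool}(U), \quad SA_{\mathrm{avg}} = \mathrm{AvgPool}(U)$$

where $SA_{\max}$ and $SA_{\mathrm{avg}}$ denote spatial feature descriptors obtained through max pooling and average pooling, respectively. To achieve information interaction between different spatial feature descriptors, $SA_{\max}$ and $SA_{\mathrm{avg}}$ are concatenated and passed through a convolution layer with a SiLU activation function, producing the spatial selection mask $M$:

$$M = \mathrm{Conv}_{\mathrm{SiLU}}\left(\mathrm{Concat}\left(SA_{\max}, SA_{\mathrm{avg}}\right)\right)$$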

The features from different receptive field branches are then weighted by their corresponding spatial selection masks and fused through a convolution layer with a SiLU activation function to obtain the attention feature $S$:

Finally, the output $Y$ of the DCMM is the element-wise product of the fused feature $X$ and the attention feature $S$, which is expressed as shown below:

$$Y = X \otimes S$$
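To make the spatial selection mechanism more concrete, the NumPy sketch below walks through the steps described in this subsection: concatenating two branch outputs, pooling along the channel axis, generating two spatial selection masks, weighting the branches, and gating the input. It is a simplified sketch under assumptions: both convolutions are reduced to 1×1 layers with hypothetical weights `w_mask` and `w_out`, the weighted branches are assumed to be combined by summation, and the two branch outputs are taken as given rather than computed with specific kernels.

```python
import numpy as np

def silu(x):
    # SiLU activation: x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def spatial_selection(x, u1, u2, w_mask, w_out):
    """Dynamically weight two receptive field branches per spatial location.

    x, u1, u2: (C, H, W) fused input and the two branch outputs
    w_mask: (2, 2) assumed 1x1 conv mapping two pooled maps to two masks
    w_out:  (C, C) assumed 1x1 conv applied after the weighted combination
    """
    u = np.concatenate([u1, u2], axis=0)                      # (2C, H, W)
    pooled = np.stack([u.max(axis=0), u.mean(axis=0)])        # (2, H, W)
    masks = silu(np.einsum("oc,chw->ohw", w_mask, pooled))    # one mask per branch
    mixed = masks[0] * u1 + masks[1] * u2                     # assumed: summation
    attn = silu(np.einsum("oc,chw->ohw", w_out, mixed))       # attention feature
    return x * attn                                           # element-wise product
```

Because the masks are computed from the pooled branch responses, the same learned weights yield different branch mixtures for different inputs, and gradients from the detection loss reach `w_mask` and `w_out` without any auxiliary loss.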

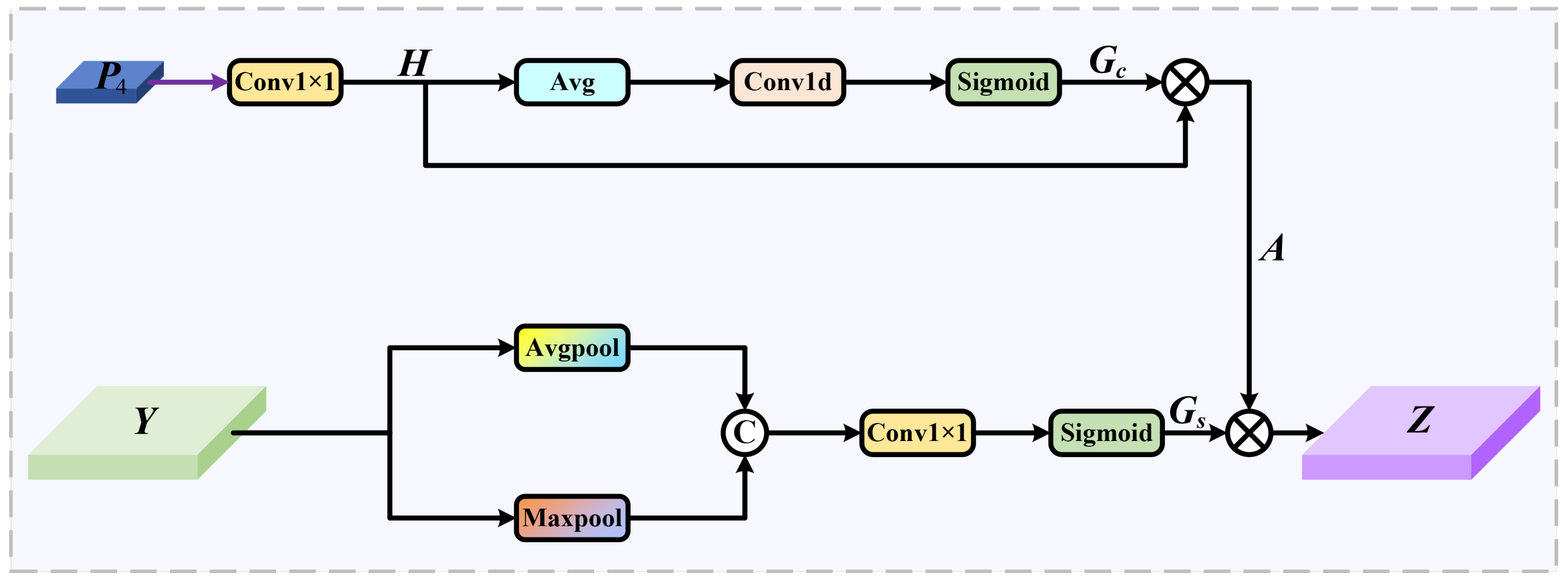

3.4. Semantic-Context Dynamic Fusion Module (SCDFM)

Unlike the SIMM and DCMM, which focus on semantic-guided scene interaction and contextual modeling, respectively, the SCDFM serves as a complementary fusion module that dynamically supplements semantic cues beneficial for small-object classification. Rather than performing explicit geometric alignment, the SCDFM concentrates on ensuring semantic compatibility between the modulated contextual information from the DCMM and the deep semantic representations extracted from the backbone. To this end, the SCDFM adaptively adjusts the fusion ratios of multi-scale features through channel-wise and spatial-wise attention, which is guided by deep semantics. This design allows the network to reconcile high-level semantic information with shallow contextual cues, thereby enhancing the discriminability of small-object features.

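As shown in Figure 4, to ensure dimensional compatibility with shallow features, the deepest backbone feature $F_{\mathrm{top}}$ is upsampled by a factor of 8 and then passed through a convolution layer to obtain $H$:

$$H = \mathrm{Conv}\left(\mathrm{Up}_8(F_{\mathrm{top}})\right)$$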

To effectively extract key semantic information from deep features, global average pooling is applied to capture the overall distribution characteristics. The resulting tensor is then reshaped, which is followed by a one-dimensional convolution to perform nonlinear feature mapping. Finally, a Sigmoid activation function is used to generate the global channel descriptor $w$:

$$w = \sigma\left(\mathrm{Conv1D}\left(\mathrm{GAP}(H)\right)\right)$$

where $\sigma(\cdot)$ denotes the Sigmoid activation function, $\mathrm{Conv1D}(\cdot)$ represents a one-dimensional convolution layer with a kernel size of 3, and $\mathrm{GAP}(\cdot)$ indicates global average pooling. Then, $w$ guides the selection of semantic information by performing a broadcast element-wise multiplication with $H$, resulting in the attention-enhanced feature $\tilde{H}$:

$$\tilde{H} = w \otimes H$$
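The channel descriptor described above (global average pooling, a one-dimensional convolution with a kernel size of 3, and a Sigmoid) can be sketched in NumPy as follows. The sketch assumes zero padding so that the descriptor keeps one entry per channel, and the function names and the `kernel` argument are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_descriptor(h, kernel):
    """Global channel descriptor of a (C, H, W) feature.

    kernel: (3,) weights of the 1-D convolution across neighboring channels
    """
    pooled = h.mean(axis=(1, 2))                       # global average pooling -> (C,)
    padded = np.pad(pooled, 1)                         # assumed zero padding -> (C + 2,)
    conv = np.correlate(padded, kernel, mode="valid")  # 1-D conv across channels -> (C,)
    return sigmoid(conv)                               # weights in (0, 1)

def channel_reweight(h, kernel):
    """Broadcast the descriptor over the spatial dimensions of the feature."""
    w = channel_descriptor(h, kernel)
    return w[:, None, None] * h
```

A kernel of size 3 lets each channel weight depend only on that channel and its two neighbors, which keeps the parameter count at three regardless of the number of channels.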

To establish a feature selection mechanism along the spatial dimension, it is necessary to model the spatial contextual relationships of $Y$. Specifically, average pooling and max pooling are applied along the channel dimension to capture different statistical characteristics. The resulting feature is then transformed by a convolution layer and passed through a Sigmoid function to generate the global spatial attention map $m$:

$$m = \sigma\left(\mathrm{Conv}\left(\mathrm{Concat}\left(\mathrm{AvgPool}(Y), \mathrm{MaxPool}(Y)\right)\right)\right)$$

Finally, the spatial descriptor is used to guide spatially selective feature fusion, thereby highlighting key regions of small objects and producing the optimized output feature:

6. Conclusions

To address the challenges of small object detection in UAV aerial scenarios, we propose a novel CSIPN, which significantly improves detection performance through scene interaction modeling, dynamic context modeling, and dynamic feature fusion. In the scene interaction modeling stage, the SIMM employs a lightweight self-attention mechanism to generate a global semantic embedding and interact with shallow spatial descriptors, thereby enabling object–scene semantic interaction and achieving cross-scale alignment during feature fusion. In the dynamic context modeling stage, the DCMM adaptively models contextual information through two dynamically weighted receptive field branches, effectively supplementing the contextual cues required for detecting different small objects. In the semantic-context fusion stage, the SCDFM uses a dual channel-spatial weighting strategy to adaptively fuse deep semantic information with shallow contextual details, further optimizing the feature representation of small objects. Experiments on the TinyPerson dataset, the WAID dataset, the VisDrone-DET dataset, and our self-built WildDrone dataset show that the CSIPN achieves mAPs of 37.2%, 93.4%, 50.8%, and 48.3%, respectively, significantly outperforming existing state-of-the-art methods with only 25.3M parameters. Moreover, the CSIPN exhibits excellent robustness in challenging scenarios such as complex background occlusions and dense small object distributions, demonstrating its superiority in real-world applications. In the future, we will explore more efficient context modeling and lightweight feature fusion strategies to better support real-time deployment on resource-constrained UAV platforms.