Filter-Wise Mask Pruning and FPGA Acceleration for Object Classification and Detection

Highlights

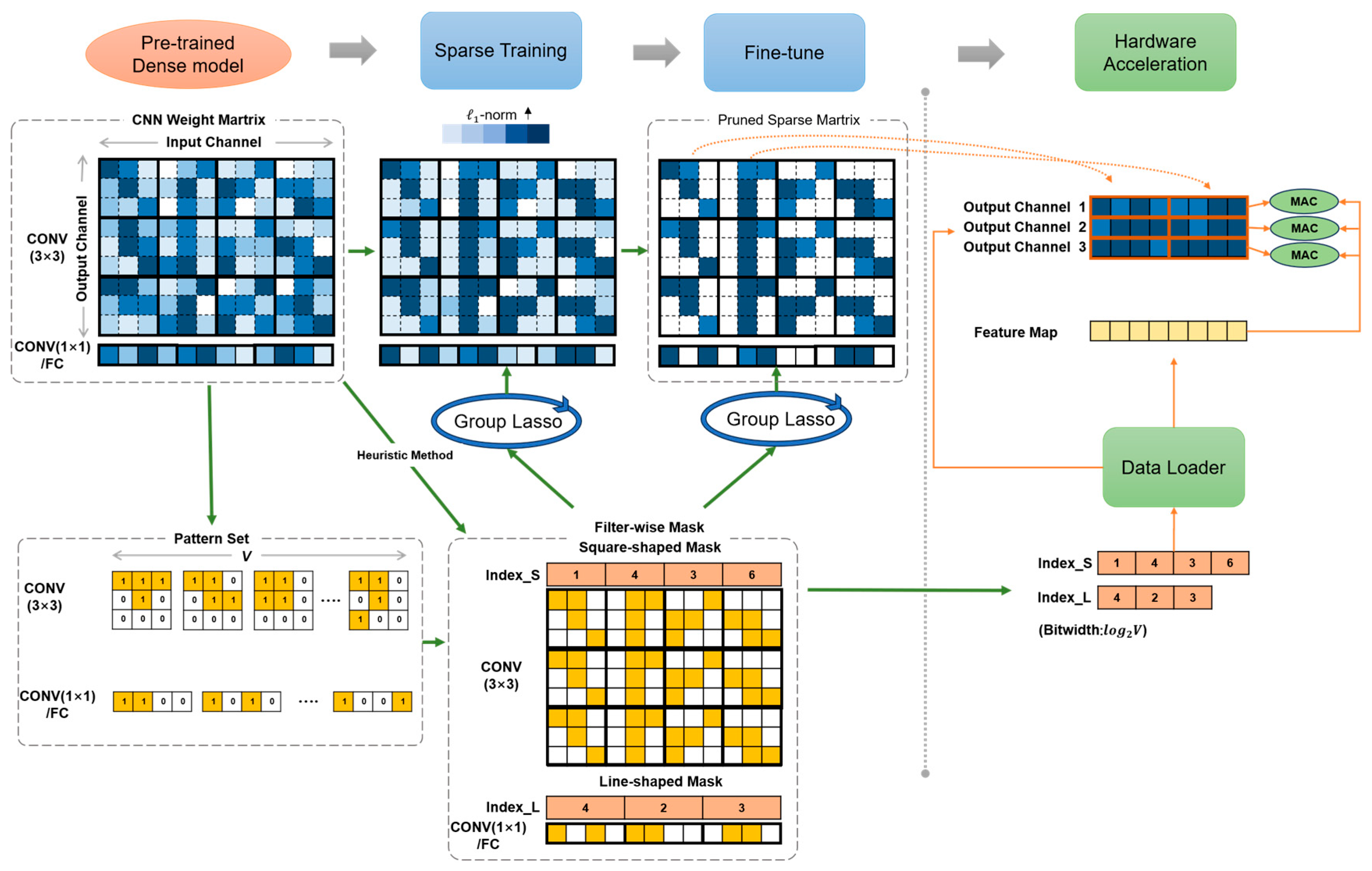

- A novel filter-wise mask pruning (FMP) approach is proposed that combines the benefits of unstructured and structured pruning. The newly introduced structural constraint on the filter dimension adds regularity, yielding more hardware-friendly and better-performing models.

- An FMP-based acceleration architecture is proposed for real-time processing. The computation-parallelism and memory-access strategies are optimized specifically to improve workload balance and throughput.

- The proposed pruning method is validated on both classification and detection networks. The pruning rate reaches 75.1% for VGG-16 and 84.6% for ResNet-50 without loss of accuracy. The pruned YOLOv5s reaches a pruning rate of 53.43% with a slight mAP degradation of 0.6%.

- The proposed acceleration architecture is implemented on an FPGA to evaluate its practical execution performance. The throughput reaches up to 809.46 GOPS. The pruned networks achieve speedups of 2.23× and 4.4× at compression rates of 2.25× and 4.5×, respectively, effectively converting model compression into execution speedup.

Abstract

1. Introduction

- To compress models efficiently, we propose a novel filter-wise mask pruning (FMP) approach that combines the benefits of unstructured and structured pruning. In addition to fine-grained pattern pruning, the newly introduced structural constraint on the filter dimension adds regularity, yielding more hardware-friendly and better-performing models.

- We further propose an FMP-based acceleration architecture for real-time processing. For efficient streaming computation, the computation-parallelism and memory-access strategies are optimized specifically to improve workload balance and throughput, so that weight pruning translates fully into hardware performance gains.

- We conduct extensive experiments on various remote sensing tasks, using VGG-16 and ResNet-50 for object classification and YOLOv5 for object detection, and provide benchmarks and practical techniques for sparse network generation. The proposed acceleration architecture is implemented on an FPGA, where the pruned networks run up to 4.4× faster than the dense network.

2. Materials and Methods

2.1. Filter-Wise Mask Pruning

2.1.1. Design of Filter-Wise Mask

| Algorithm 1: Filter-wise mask pattern set generation |
|---|
| Input: Weight matrix of a pre-trained CNN model , number of patterns V, number of non-zeros in kernel ; |
| Initialization: Predefined pattern set ; |
| Output: Filter-wise sparsity mask ; |
| 1: ; |
| 2: ; |
| 3: for l from 1 to L do |
| 4: for do |
| 5: for do |
| 6: Obtain -norm according to Equation (2); |
| 7: Sort -norm and obtain the pattern index with the largest magnitude; |
| 8: end |
| 9: end |
| 10: for c from 1 to do |
| 11: Count and obtain the most common pattern index ; |
| 12: end |
| 13: Form with . |
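The selection loop of Algorithm 1 can be illustrated with a minimal Python sketch. It assumes that each kernel is a flattened list of weights, that each candidate pattern is a tuple of kept positions, and that a pattern is scored by the squared L2 norm of the weights it keeps. The norm type of Equation (2) is not recoverable from the listing, so the L2 choice, the function names, and the toy data are all assumptions made for illustration.

```python
from collections import Counter


def pattern_score(kernel, pattern):
    """Squared L2 norm of the weights a pattern keeps (norm type is an assumption)."""
    return sum(kernel[i] ** 2 for i in pattern)


def best_pattern_index(kernel, patterns):
    """Index of the candidate pattern that preserves the most weight magnitude."""
    scores = [pattern_score(kernel, p) for p in patterns]
    return max(range(len(patterns)), key=scores.__getitem__)


def filter_wise_pattern_indices(layer, patterns):
    """Pick one pattern per filter: the most common best pattern among its kernels.

    layer[f][c] is the flattened kernel of filter f and input channel c.
    """
    chosen = []
    for filt in layer:
        votes = Counter(best_pattern_index(kernel, patterns) for kernel in filt)
        chosen.append(votes.most_common(1)[0][0])
    return chosen


def apply_mask(kernel, pattern):
    """Zero every weight outside the pattern."""
    keep = set(pattern)
    return [w if i in keep else 0.0 for i, w in enumerate(kernel)]


# Toy example: 2x2 kernels flattened to length 4, two hypothetical patterns.
patterns = [(0, 1), (2, 3)]
layer = [
    [[1.0, 1.0, 0.1, 0.0], [2.0, 2.0, 0.0, 0.3], [0.0, 0.2, 3.0, 3.0]],
    [[0.0, 0.1, 1.0, 1.0], [0.2, 0.0, 1.0, 1.0], [5.0, 5.0, 0.0, 0.0]],
]
indices = filter_wise_pattern_indices(layer, patterns)
masked = [
    [apply_mask(kernel, patterns[idx]) for kernel in filt]
    for filt, idx in zip(layer, indices)
]
```

Because every kernel in a filter shares one pattern, a kernel whose own best pattern lost the vote (the third kernel of each toy filter) is masked with the majority pattern. That shared pattern is the regularity the filter-wise constraint is meant to provide.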

2.1.2. Weight Sparsification with Filter-Wise Mask

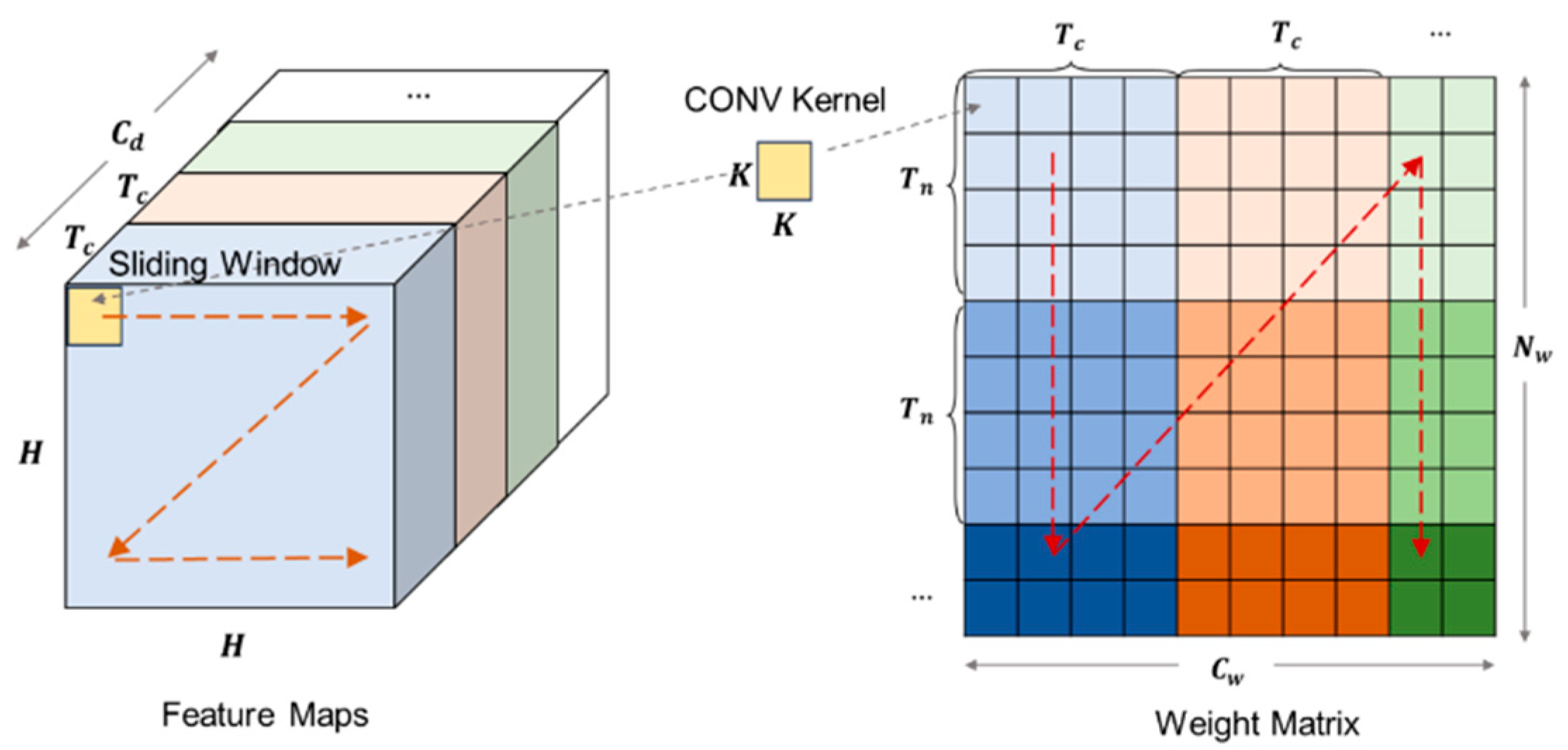

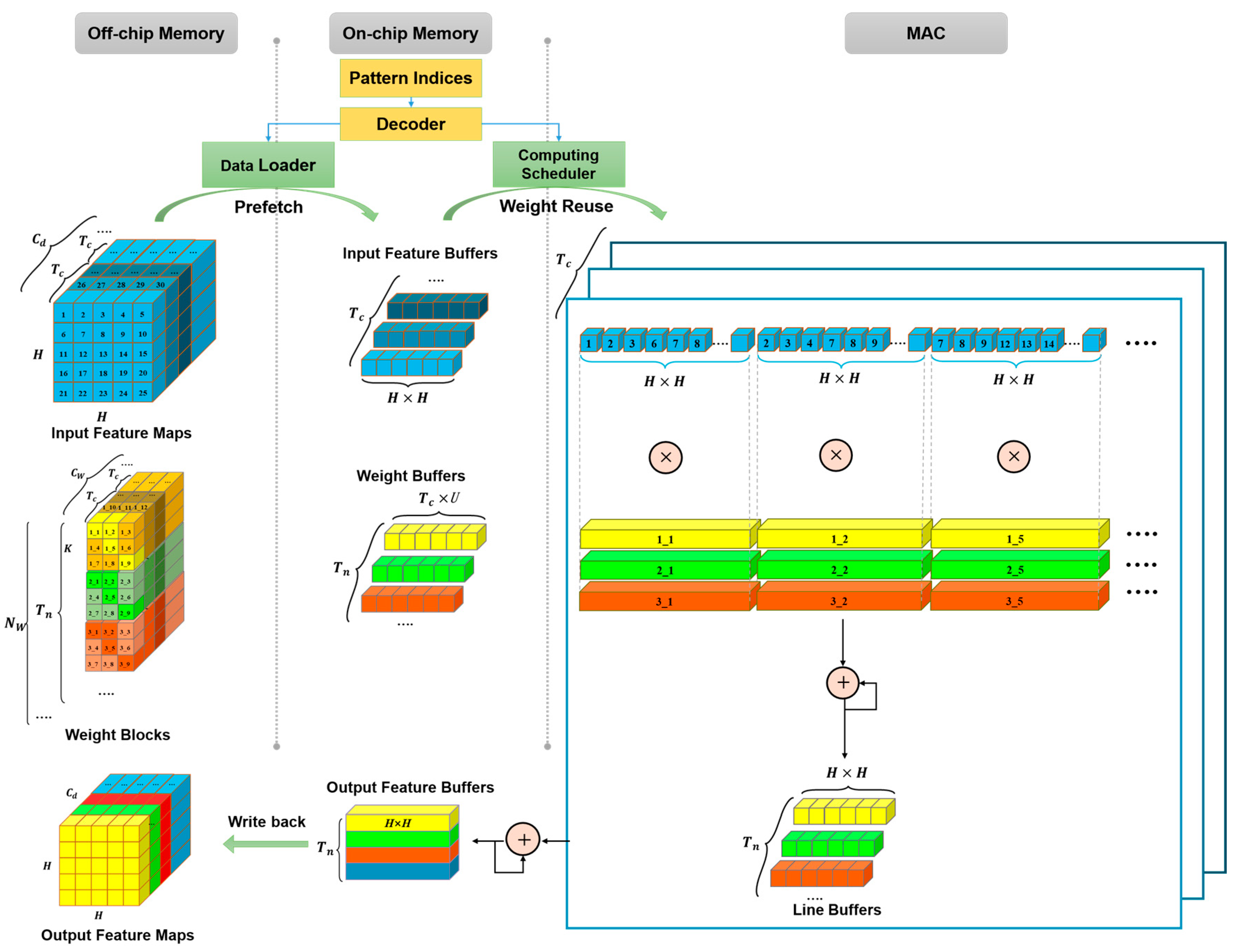

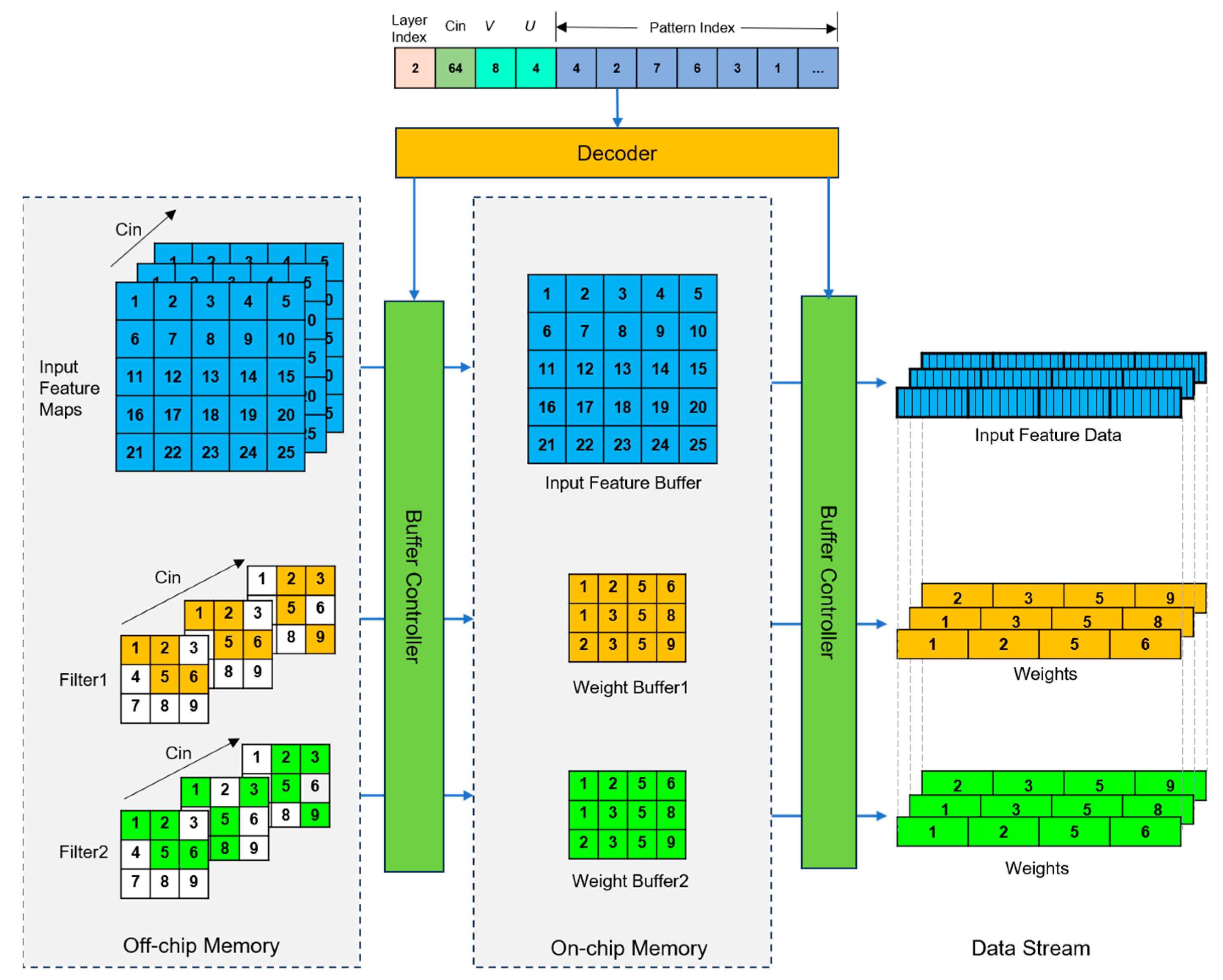

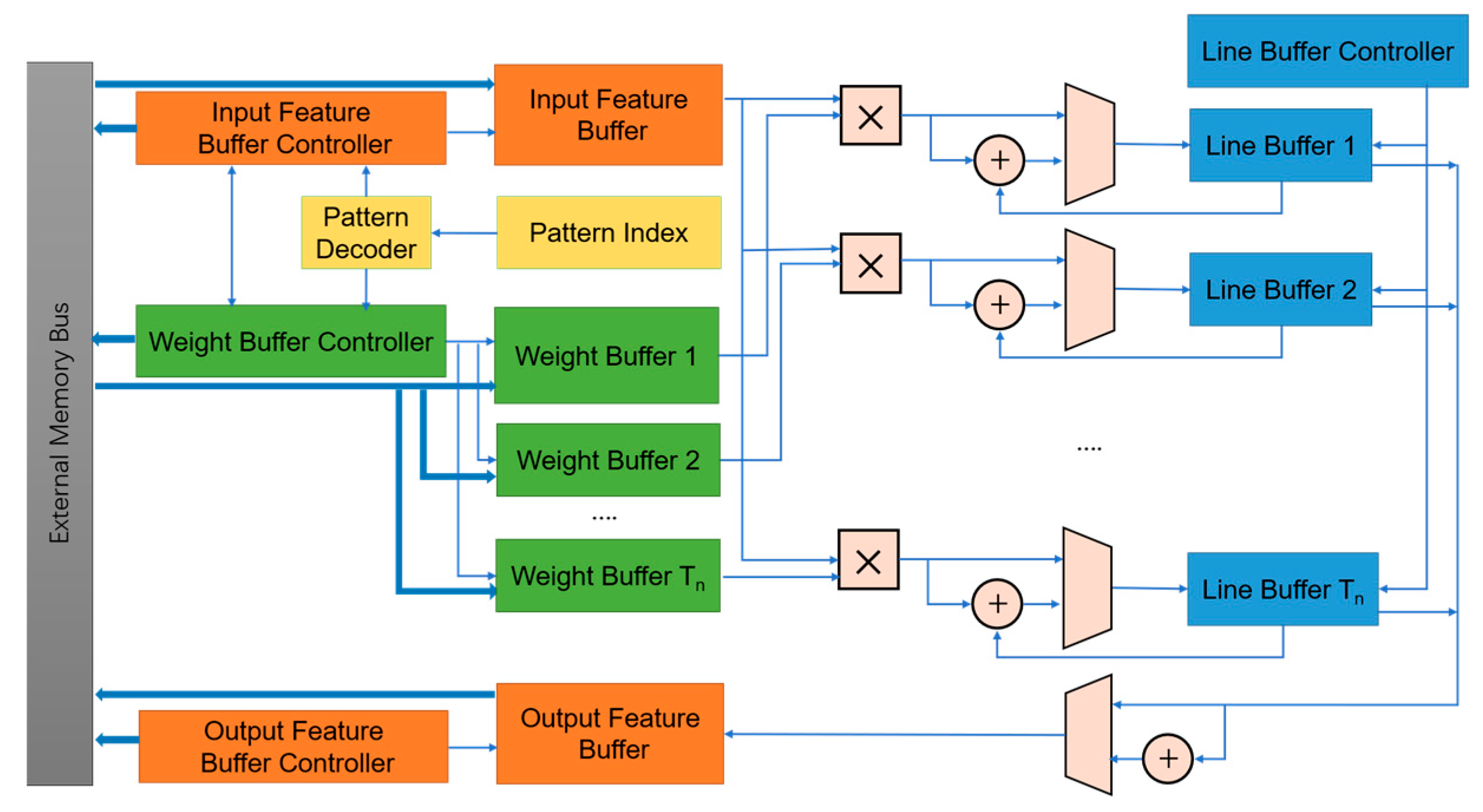

2.2. Acceleration Architecture with FMP

2.2.1. Data-Path Optimization for Streaming Computation

2.2.2. FMP-Based Convolutional Processing

2.2.3. Overview of Acceleration Architecture

3. Results

3.1. Implementation Settings

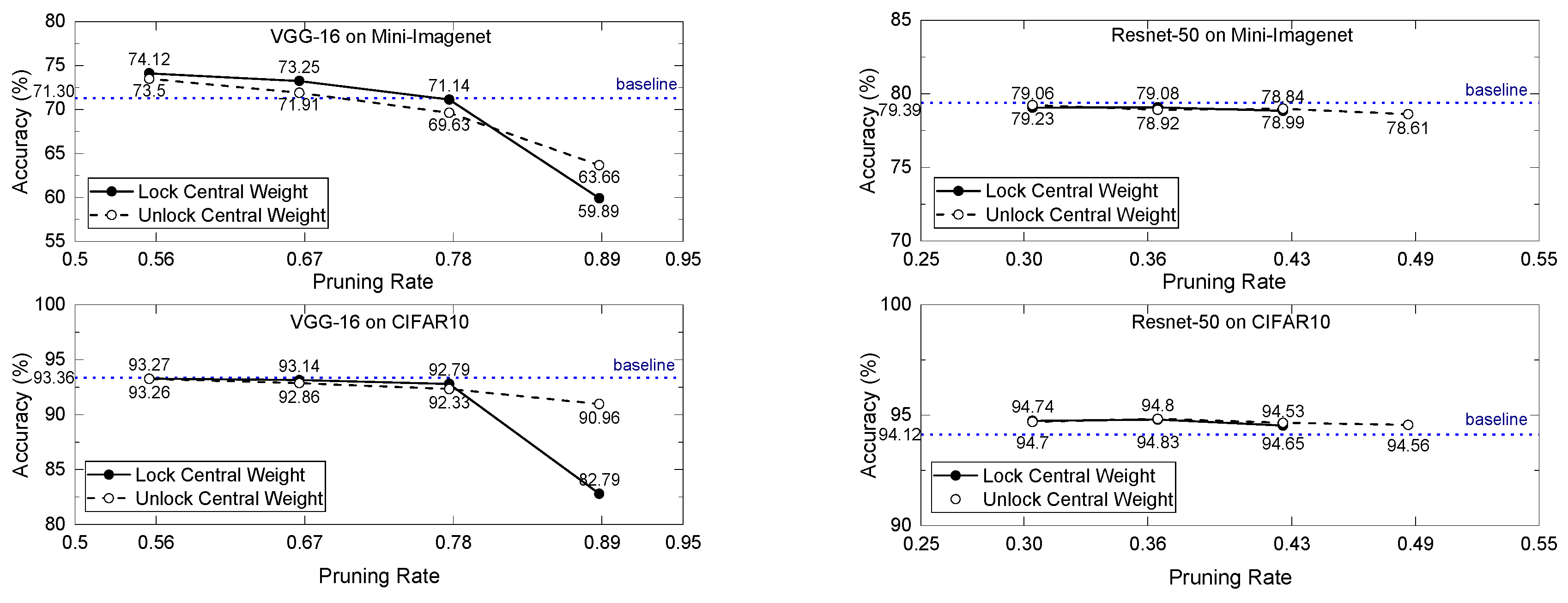

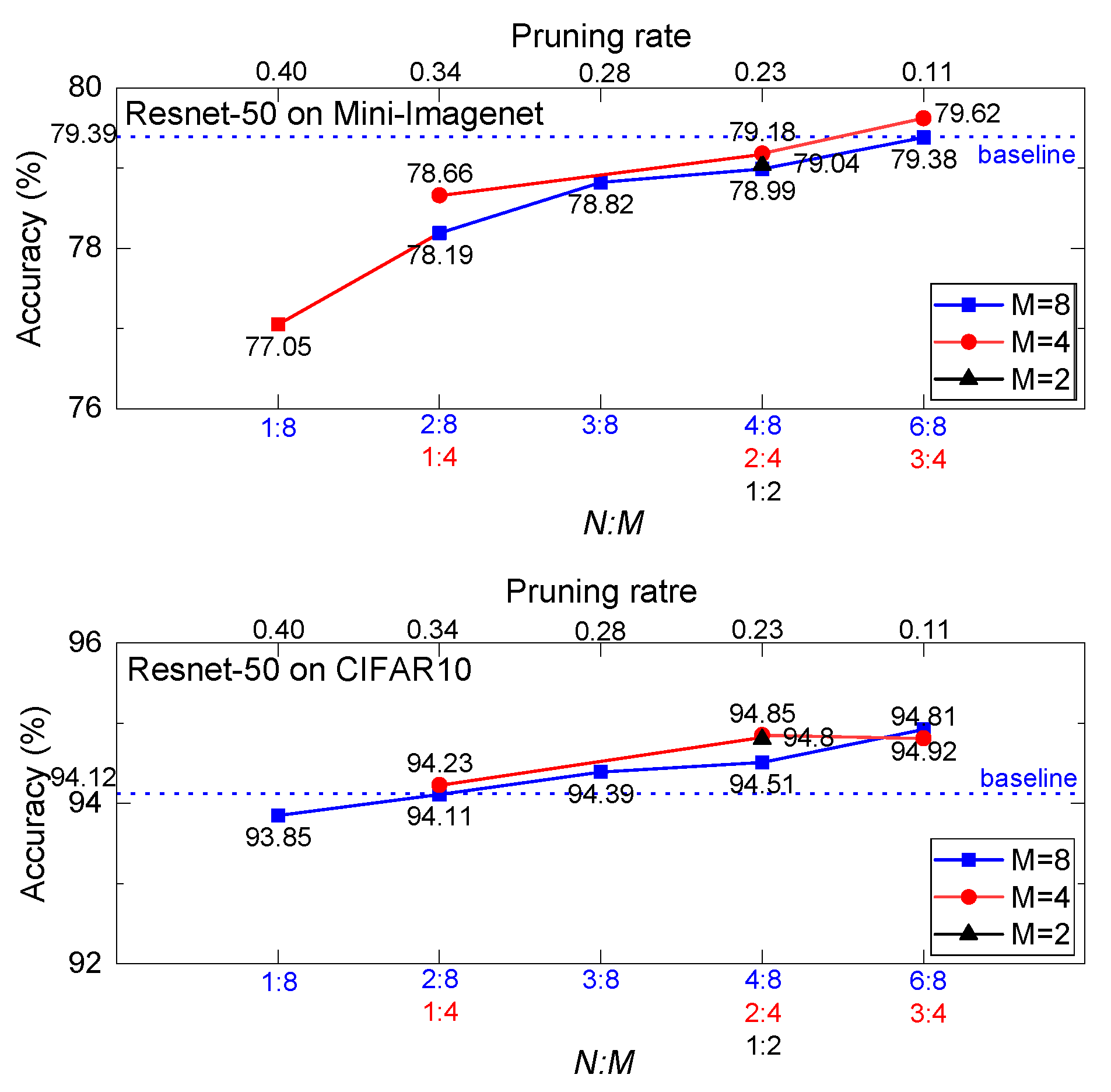

3.2. Filter-Wise Mask Pruning on Classification Networks

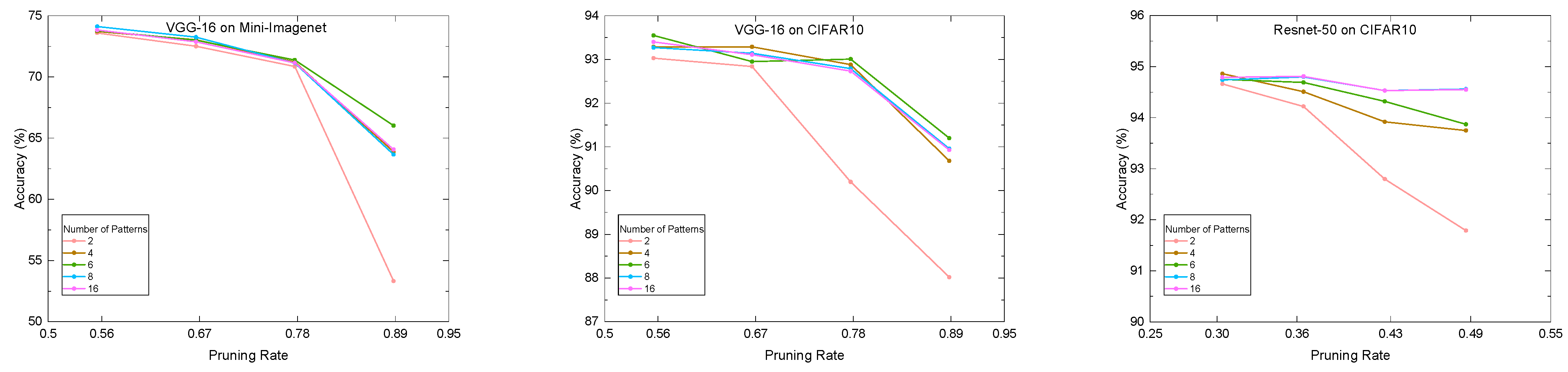

3.3. Evaluation of Pruning Patterns

3.4. Filter-Wise Mask Pruning on YOLOv5

3.5. Performance of FMP-Based Accelerator

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yang, G.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Wang, J. Algorithm/Hardware Codesign for Real-Time On-Satellite CNN-Based Ship Detection in SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

2. Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. Swin-Transformer-Enabled YOLOv5 with Attention Mechanism for Small Object Detection on Satellite Images. Remote Sens. 2022, 14, 2861.

3. Han, D.; Lee, S.B.; Song, M.; Cho, J.S. Change Detection in Unmanned Aerial Vehicle Images for Progress Monitoring of Road Construction. Buildings 2021, 11, 150.

4. Vanhoy, G.; Lichtman, M.; Hoare, R.R.; Brevik, C. Rapid Prototyping Framework for Intelligent Arrays with Heterogeneous Computing. In Proceedings of the 2022 IEEE International Symposium on Phased Array Systems & Technology (PAST), Waltham, MA, USA, 11–14 October 2022; pp. 1–6.

5. Li, W.; Liu, J.; Mei, H. Lightweight convolutional neural network for aircraft small target real-time detection in Airport videos in complex scenes. Sci. Rep. 2022, 12, 14474.

6. Wu, Z.; Zhang, C.; Gu, X.; Duporge, I.; Hughey, L.F.; Stabach, J.A.; Skidmore, A.K.; Hopcraft, J.G.C.; Lee, S.J.; Atkinson, P.M.; et al. Deep learning enables satellite-based monitoring of large populations of terrestrial mammals across heterogeneous landscape. Nat. Commun. 2023, 14, 3072.

7. Golcarenarenji, G.; Martinez-Alpiste, I.; Wang, Q.; Alcaraz-Calero, J.M. Efficient Real-Time Human Detection Using Unmanned Aerial Vehicles Optical Imagery. Int. J. Remote Sens. 2021, 42, 2440–2462.

8. Sun, Y.; Zheng, L.; Wang, Q.; Ye, X.; Huang, Y.; Yao, P. Accelerating Sparse Deep Neural Network Inference Using GPU Tensor Cores. In Proceedings of the 2022 IEEE High Performance Extreme Computing Conference (HPEC), Waltham, MA, USA, 19–23 September 2022; pp. 1–7.

9. Lin, D.-L.; Huang, T.-W. Accelerating Large Sparse Neural Network Inference Using GPU Task Graph Parallelism. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 3041–3052.

10. Li, Z.; Yuan, G.; Niu, W.; Zhao, P.; Li, Y.; Cai, Y. NPAS: A Compiler-aware Framework of Unified Network Pruning and Architecture Search for Beyond Real-Time Mobile Acceleration. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14250–14261.

11. Sui, X.; Lv, Q.; Zhi, L.; Zhu, B.; Yang, Y.; Zhang, Y.; Tan, Z. A Hardware-Friendly High-Precision CNN Pruning Method and Its FPGA Implementation. Sensors 2023, 23, 824.

12. Zhang, J.; Cheng, L.; Li, Y.; He, G.; Xu, N. A Low-Latency FPGA Implementation for Real-Time Object Detection. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Republic of Korea, 22–28 May 2021; pp. 1–5.

13. Li, H.; Yue, X.; Wang, Z.; Chai, Z.; Wang, W.; Hiroyuki, T.; Lin, M. Optimizing the Deep Neural Networks by Layer-Wise Refined Pruning and the Acceleration on FPGA. Comput. Intell. Neurosci. 2022, 2022, 8039281.

14. Eckert, C.; Wang, X.; Wang, J.; Subramaniyan, A.; Iyer, R.; Sylvester, D.; Blaauw, D.; Das, R. Neural cache: Bit-serial in-cache acceleration of deep neural networks. In Proceedings of the 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; IEEE Press: New York, NY, USA, 2018; pp. 383–396.

15. Hegde, K.; Yu, J.; Agrawal, R.; Yan, M.; Pellauer, M.; Fletcher, C.W. Ucnn: Exploiting computational reuse in deep neural networks via weight repetition. In Proceedings of the 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; IEEE Press: New York, NY, USA, 2018; pp. 674–687.

16. Jain, A.; Phanishayee, A.; Mars, J.; Tang, L.; Pekhimenko, G. Gist: Efficient data encoding for deep neural network training. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; IEEE: New York, NY, USA, 2018; pp. 776–789.

17. Huang, K.; Li, B.; Chen, S.; Claesen, L.; Xi, W.; Chen, J. Structured Term Pruning for Computational Efficient Neural Networks Inference. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2023, 42, 190–203.

18. Chen, S.; Huang, K.; Xiong, D.; Li, B.; Claesen, L. Fine-Grained Channel Pruning for Deep Residual Neural Networks. In Artificial Neural Networks and Machine Learning—ICANN 2020; Farkaš, I., Masulli, P., Wermter, S., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12397.

19. Rhu, M.; O’Connor, M.; Chatterjee, N.; Pool, J.; Kwon, Y.; Keckler, S. Compressing DMA Engine: Leveraging Activation Sparsity for Training Deep Neural Networks. In Proceedings of the 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), Vienna, Austria, 24–28 February 2018; IEEE: New York, NY, USA, 2018; pp. 331–344.

20. Akhlaghi, V.; Yazdanbakhsh, A.; Samadi, K.; Gupta, R.K.; Esmaeilzadeh, H. Snapea: Predictive early activation for reducing computation in deep convolutional neural networks. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 2–6 June 2018; IEEE: New York, NY, USA, 2018; pp. 662–673.

21. Li, C.; Wang, G.; Wang, B.; Ling, X.; Li, Z.; Chang, X. Dynamic Slimmable Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Virtual, 19–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 8607–8617.

22. Dai, X.; Yin, H.; Jha, N.K. NeST: A Neural Network Synthesis Tool Based on a Grow-and-Prune Paradigm. IEEE Trans. Comput. 2019, 68, 1487–1497.

23. He, Y.; Zhang, X.; Sun, J. Channel Pruning for Accelerating Very Deep Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 1398–1406.

24. Guo, Y.; Yao, A.; Chen, Y. Dynamic network surgery for efficient dnns. In Advances in Neural Information Processing Systems; Curran Associates: New York, NY, USA, 2016; pp. 1379–1387.

25. Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; pp. 1135–1143.

26. Mao, H.; Han, S.; Pool, J.; Li, W.; Liu, X.; Wang, Y.; Dally, W.J. Exploring the granularity of sparsity in convolutional neural networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 1927–1934.

27. Buluç, A.; Fineman, J.T.; Frigo, M.; Gilbert, J.R.; Leiserson, C.E. Parallel sparse matrix-vector and matrix-transpose-vector multiplication using compressed sparse blocks. In Proceedings of the Twenty-First Annual Symposium on Parallelism in Algorithms and Architectures, Calgary, AB, Canada, 11–13 August 2009; ACM: New York, NY, USA, 2009; pp. 233–244.

28. Anwar, S.; Hwang, K.; Sung, W. Structured pruning of deep convolutional neural networks. ACM J. Emerg. Technol. Comput. Syst. 2017, 13, 1–18.

29. Renda, A.; Frankle, J.; Carbin, M. Comparing rewinding and fine-tuning in neural network pruning. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 30 April 2020.

30. Ma, X.; Guo, F.M.; Niu, W.; Lin, X.; Tang, J.; Ma, K.; Ren, B.; Wang, Y. PCONV: The Missing but Desirable Sparsity in DNN Weight Pruning for Real-time Execution on Mobile Devices. arXiv 2019, arXiv:1909.05073.

31. Niu, W.; Ma, X.; Lin, S.; Wang, S.; Qian, X.; Lin, X.; Wang, Y.; Ren, B. PatDNN: Achieving Real-Time DNN Execution on Mobile Devices with Pattern-based Weight Pruning. In Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 907–922.

32. Tan, Z.; Song, J.; Ma, X.; Tan, S.; Chen, H.; Miao, Y. PCNN: Pattern-based Fine-Grained Regular Pruning Towards Optimizing CNN Accelerators. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; pp. 1–6.

33. Xu, X.; Zhang, X.; Zhang, T. Lite-YOLOv5: A lightweight deep learning detector for on-board ship detection in large-scene Sentinel-1 SAR images. Remote Sens. 2022, 14, 1018.

34. Chen, B.; Zhang, R.; Tan, Y.; Li, P. LE-YOLO: A Novel Lightweight Object Detection Method. In Proceedings of the 2023 3rd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI), Wuhan, China, 15–17 December 2023; pp. 477–480.

35. Ma, X.; Ji, K.; Xiong, B.; Zhang, L.; Feng, S.; Kuang, G. Light-YOLOv4: An edge-device oriented target detection method for remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10808–10820.

36. Yu, H.; Lee, S.; Yeo, B.; Han, J.; Park, E.; Pack, S. Towards a Lightweight Object Detection through Model Pruning Approaches. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 11–13 October 2023; pp. 875–880.

37. Zhou, A.; Ma, Y.; Zhu, J.; Liu, J.; Zhang, Z.; Yuan, K.; Sun, W.; Li, H. Learning N:M Fine-grained Structured Sparse Neural Networks from Scratch. arXiv 2021, arXiv:2102.04010.

38. Mishra, A.K.; Latorre, J.A.; Pool, J.; Stosic, D.; Stosic, D.; Venkatesh, G.; Yu, C.; Micikevicius, P. Accelerating Sparse Deep Neural Networks. arXiv 2021, arXiv:2104.08378.

39. Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning convolutional neural networks for resource efficient transfer learning. arXiv 2016, arXiv:1611.06440.

40. Lee, N.; Ajanthan, T.; Torr, P. SNIP: Single-Shot Network Pruning Based on Connection Sensitivity. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

41. Molchanov, P.; Mallya, A.; Tyree, S.; Frosio, I.; Kautz, J. Importance estimation for neural network pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 11264–11272.

42. Mocanu, D.C.; Lu, Y.; Pechenizkiy, M. Do We Actually Need Dense Over-Parameterization? In-Time Over-Parameterization in Sparse Training. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021.

43. Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning efficient convolutional networks through network slimming. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2736–2744.

44. Yang, T.-J.; Chen, Y.-H.; Sze, V. Designing energy-efficient convolutional neural networks using energy-aware pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6071–6079.

45. Yu, J.; Lukefahr, A.; Palframan, D.; Dasika, G.; Das, R.; Mahlke, S. Scalpel: Customizing dnn pruning to the underlying hardware parallelism. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; IEEE: New York, NY, USA, 2017; pp. 548–560.

46. Sun, F.; Wang, C.; Gong, L.; Xu, C.; Zhang, Y.; Lu, Y. A high-performance accelerator for large-scale convolutional neural networks. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Guangzhou, China, 12–15 December 2017; pp. 622–629.

47. Yan, Z.; Xing, P.; Wang, Y.; Tian, Y. Prune it yourself: Automated pruning by multiple level sensitivity. In Proceedings of the IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Shenzhen, China, 6–8 August 2020; pp. 73–78.

48. Chen, R.; Yuan, S.; Wang, S.; Li, Z.; Xing, M.; Feng, Z. Model selection—Knowledge distillation framework for model compression. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Orlando, FL, USA, 5–7 December 2021.

49. Chen, C.; Gan, Y.; Han, Z.; Gao, H.; Li, A. An Improved YOLOv5 Detection Algorithm with Pruning and OpenVINO Quantization. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; pp. 4691–4696.

50. Sun, X.; Liu, Z.; Zhao, J.; Zhu, J.; Zheng, Z.; Ji, Z. Research on Multi-target Detection of Lightweight Substation Based on YOLOv5. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Zhuhai, China, 19–21 April 2024; pp. 1337–1341.

51. Wang, P.; Yu, Z.; Zhu, Z. Channel Pruning-Based Lightweight YOLOv5 for Pedestrian Object Detection. In Proceedings of the 2023 5th International Symposium on Robotics & Intelligent Manufacturing Technology (ISRIMT), Changzhou, China, 22–24 September 2023; pp. 229–232.

52. Yi, Q.; Sun, H.; Fujita, M. FPGA Based Accelerator for Neural Networks Computation with Flexible Pipelining. arXiv 2021, arXiv:2112.15443v1.

53. Lian, X.; Liu, Z.; Song, Z.; Dai, J.; Zhou, W.; Ji, X. High-performance FPGA-based CNN accelerator with block-floating-point arithmetic. IEEE Trans. Very Large Scale Integr. Syst. 2019, 27, 1874–1885.

54. Kim, D.; Jeong, S.; Kim, J.-Y. Agamotto: A performance optimization framework for CNN accelerator with row stationary dataflow. IEEE Trans. Circuits Syst. I Regul. Pap. 2023, 70, 2487–2496.

55. Yang, C.; Yang, Y.; Meng, Y.; Huo, K.; Xiang, S.; Wang, J. Flexible and efficient convolutional acceleration on unified hardware using the two-stage splitting method and layer-adaptive allocation of 1-D/2-D winograd units. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2024, 43, 919–932.

56. Natsuaki, R.; Ohki, M.; Nagai, H.; Motohka, T.; Tadono, T.; Shimada, M.; Suzuki, S. Performance of ALOS-2 PALSAR-2 for disaster response. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2434–2437.

57. Lei, J.; Yang, G.; Xie, W.; Li, Y.; Jia, X. A low-complexity hyperspectral anomaly detection algorithm and its FPGA implementation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 907–921.

58. Liu, Z.; Xu, J.; Peng, X.; Xiong, R. Frequency-domain dynamic pruning for convolutional neural networks. Adv. Neural Inf. Process. Syst. 2018, 31, 1049–1059.

59. Frankle, J.; Carbin, M. The lottery ticket hypothesis: Finding sparse, trainable neural networks. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

| Datasets | Training Images | Validation Images | Testing Images | Classes |
|---|---|---|---|---|
| CIFAR-10 | 45,000 | 5000 | 10,000 | 10 |
| Mini-ImageNet | 38,400 | 9600 | 12,000 | 100 |
| Self-established livestock dataset | 344 (8014 instances) | 39 (1751 instances) | 86 (3136 instances) | 3 |

| Models | Sparse Training Epochs | Fine-Tune Epochs | Learning Rate | Weight Decay | Momentum |
|---|---|---|---|---|---|
| VGG-16/ResNet-50 on CIFAR-10 | 45 | 35 | CosineAnnealingLR (lr: 0.01) | 0.0005 | 0.9 |
| VGG-16/ResNet-50 on Mini-ImageNet | 36 | 24 | CosineAnnealingLR (lr: 0.01) | 0.0005 | 0.9 |
| YOLOv5 | 60 | 80 | OneCycleLR (lr0: 0.01, lrf: 0.1) | 0.0005 | 0.937 |

| Methods | Top-1 Acc (%) | Relative Acc (%) | Params (M) | FLOPs (G) | Compression Rate | Pruning Rate (%) |
|---|---|---|---|---|---|---|
| baseline | 94.12 | - | 20.68 | 1.19 | - | - |
| SqMk | 95.00 | +0.88 | 14.39 | 0.83 | 1.44× | 30.41 |
| filter-SqMk | 94.74 | +0.62 | 14.39 | 0.83 | 1.44× | 30.41 |
| filter-SqMk + LnMk | 94.68 | +0.56 | 9.71 | 0.56 | 2.13× | 53.04 |
| filter-SqMk + filter-LnMk (FMP) | 94.34 | +0.22 | 9.71 | 0.56 | 2.13× | 53.04 |
| FMP + kernel pruning-A | 94.28 | +0.16 | 3.00 | 0.17 | 6.88× | 85.47 |
| FMP + kernel pruning-B | 94.11 | −0.01 | 1.44 | 0.08 | 14.37× | 93.04 |
| FMP + kernel pruning-L | 93.13 | −0.99 | 0.69 | 0.04 | 29.89× | 96.65 |
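The compression-rate and pruning-rate columns are two views of the same ratio. The short sketch below recomputes both from the Params (M) column, assuming the rates are defined from parameter counts; the function names are illustrative and not taken from the paper. The results agree with the table up to the rounding of the reported parameter counts.

```python
def compression_rate(dense_params: float, pruned_params: float) -> float:
    """Ratio of dense to remaining parameters (2.0 means half the weights remain)."""
    return dense_params / pruned_params


def pruning_rate_percent(dense_params: float, pruned_params: float) -> float:
    """Share of parameters removed, in percent."""
    return 100.0 * (1.0 - pruned_params / dense_params)


BASELINE_PARAMS_M = 20.68  # "baseline" row, Params (M)
rows = {"SqMk": 14.39, "filter-SqMk + filter-LnMk (FMP)": 9.71}

results = {
    name: (
        compression_rate(BASELINE_PARAMS_M, params),
        pruning_rate_percent(BASELINE_PARAMS_M, params),
    )
    for name, params in rows.items()
}
for name, (cr, pr) in results.items():
    print(f"{name}: {cr:.2f}x compression, {pr:.2f}% pruned")
```

Equivalently, the pruning rate equals 100 × (1 − 1/compression rate), which is why a 2.13× compression corresponds to roughly 53% of the weights being removed.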

| Methods | Top-1 Acc (%) | Top-5 Acc (%) | Relative Top-1 Acc (%) | Relative Top-5 Acc (%) | Params (M) | FLOPs (G) | Compression Rate | Pruning Rate (%) |
|---|---|---|---|---|---|---|---|---|
| baseline | 79.39 | 93.06 | - | - | 20.68 | 3.75 | - | - |
| SqMk | 79.59 | 93.84 | +0.20 | +0.78 | 14.39 | 2.61 | 1.44× | 30.40 |
| filter-SqMk | 79.38 | 93.95 | −0.01 | +0.89 | 14.39 | 2.61 | 1.44× | 30.40 |
| filter-SqMk + LnMk | 78.87 | 93.64 | −0.52 | +0.58 | 9.72 | 1.76 | 2.13× | 53.02 |
| filter-SqMk + filter-LnMk (FMP) | 78.75 | 93.47 | −0.64 | +0.41 | 9.72 | 1.76 | 2.13× | 53.02 |
| FMP + kernel pruning-A | 78.42 | 93.33 | −0.97 | +0.27 | 5.32 | 0.96 | 3.89× | 74.29 |
| FMP + kernel pruning-L | 77.40 | 93.48 | −1.99 | +0.42 | 2.79 | 0.50 | 7.42× | 86.52 |

| Networks | Methods | Baseline Top-1 Acc (%) | Pruned Top-1 Acc (%) | Relative Top-1 Acc (%) | FLOPs Pruning Rate (%) | Params Pruning Rate (%) |
|---|---|---|---|---|---|---|
| VGG-16 | PCNN [32] | 93.54 | 93.58 | +0.04 | 66.7 | 66.7 |
| VGG-16 | KRP [11] | 92.76 | 92.54 | −0.22 | 70.0 | 70.0 |
| VGG-16 | ITOP [42] | 93.29 | 93.10 | −0.19 | 80.0 | 80.0 |
| VGG-16 | Ours-FMP | 93.42 | 93.46 | +0.04 | 75.1 | 75.1 |
| VGG-16 | Ours-FMP + kernel pruning | 93.42 | 93.26 | −0.16 | 79.5 | 79.5 |
| ResNet-50 | Prune it yourself [47] | 92.85 | 92.69 | −0.16 | 34.2 | 41.5 |
| ResNet-50 | MS-KD [48] | 95.36 | 95.12 | −0.24 | 84.1 | 84.0 |
| ResNet-50 | Layer-wise refined pruning [13] | 93.60 | 92.78 | −0.82 | 84.3 | 86.3 |
| ResNet-50 | Ours-FMP | 94.12 | 94.19 | +0.07 | 84.6 | 84.6 |
| ResNet-50 | Ours-FMP + kernel pruning | 94.12 | 94.28 | +0.16 | 85.5 | 85.5 |

| Models | Total Params (M) | Params of 3 × 3 Kernels (M) | Params of 1 × 1 Kernels (M) |
|---|---|---|---|
| YOLOv5s | 7.01 | 4.51 (64.4%) | 2.49 (35.5%) |
| YOLOv5m | 20.85 | 14.73 (70.7%) | 6.10 (29.3%) |
| YOLOv5l | 46.11 | 34.34 (74.5%) | 11.75 (25.5%) |

| Models | Methods | mAP (%) | Relative mAP (%) | Params (M) | FLOPs (G) | Compression Rate | Pruning Rate (%) |
|---|---|---|---|---|---|---|---|
| YOLOv5s | baseline | 87.1 | - | 7.01 | 16.00 | - | - |
| YOLOv5s | FMP | 86.5 | −0.6 | 3.26 | 7.45 | 2.15× | 53.43 |
| YOLOv5m | baseline | 88.6 | - | 20.85 | 48.20 | - | - |
| YOLOv5m | FMP | 91.4 | +2.8 | 9.68 | 22.38 | 2.15× | 53.57 |
| YOLOv5m | FMP-L | 86.2 | −2.4 | 4.57 | 10.56 | 4.56× | 78.09 |
| YOLOv5l | baseline | 91.6 | - | 46.11 | 108.30 | - | - |
| YOLOv5l | FMP | 93.4 | +1.8 | 21.27 | 49.97 | 2.17× | 53.86 |
| YOLOv5l | FMP-L | 89.6 | −2.0 | 5.44 | 12.77 | 8.48× | 88.21 |

| Models | Methods | mAP (%) Baseline/Pruned | Relative mAP (%) | Params (M) | FLOPs (G) | Compression Rate |
|---|---|---|---|---|---|---|
| YOLOv5s | [49] | 96.8/97.5 | +0.7 | 4.45 | - | 1.57× |
| YOLOv5s | Ours | 87.1/88.8 | +1.7 | 4.52 | 10.32 | 1.55× |
| YOLOv5s | [50] | 91.5/91.0 | −0.5 | - | 18.2 | 1.95× |
| YOLOv5s | Ours | 87.1/86.5 | −0.6 | 3.26 | 7.45 | 2.15× |
| YOLOv5m | [51] | 81.6/80.6 | −1.0 | 8.9 | 23.4 | 2.38× |
| YOLOv5m | Ours | 88.6/90.2 | +1.6 | 8.34 | 19.27 | 2.50× |

| Layers | Feature Map Size | Dense Network: Operations (MOPs) | Dense Network: Execution Time (ms) | Dense Network: Throughput (GOPS) | Pruned Network (2.25× compression): Operations (MOPs) | Pruned Network (2.25× compression): Execution Time (ms) | Pruned Network (2.25× compression): Throughput (GOPS) | Pruned Network (4.5× compression): Operations (MOPs) | Pruned Network (4.5× compression): Execution Time (ms) | Pruned Network (4.5× compression): Throughput (GOPS) |
|---|---|---|---|---|---|---|---|---|---|---|
| block1_conv1 | (224,224,3) | 89.92 | 2.272 | 39.57 | 39.96 | 1.012 | 39.47 | 19.98 | 0.508 | 39.30 |
| block1_conv2 | (224,224,64) | 1852.90 | 6.817 | 271.79 | 823.51 | 3.037 | 271.12 | 411.76 | 1.525 | 269.92 |
| block2_conv1 | (112,112,64) | 926.45 | 1.715 | 540.11 | 411.76 | 0.766 | 537.47 | 205.88 | 0.386 | 532.79 |
| block2_conv2 | (112,112,128) | 1851.29 | 2.287 | 809.46 | 822.80 | 1.021 | 805.51 | 411.40 | 0.515 | 798.49 |
| block3_conv1 | (56,56,128) | 925.65 | 1.155 | 801.20 | 411.40 | 0.517 | 795.03 | 205.70 | 0.262 | 784.81 |
| block3_conv2 | (56,56,256) | 1850.49 | 2.311 | 800.86 | 822.44 | 1.035 | 794.69 | 411.22 | 0.524 | 784.47 |
| block3_conv3 | (56,56,256) | 1850.49 | 2.311 | 800.86 | 822.44 | 1.035 | 794.69 | 411.22 | 0.524 | 784.47 |
| block4_conv1 | (28,28,256) | 925.25 | 1.181 | 783.76 | 411.22 | 0.531 | 774.54 | 205.61 | 0.271 | 758.48 |
| block4_conv2 | (28,28,512) | 1850.09 | 2.361 | 783.59 | 822.26 | 1.062 | 774.37 | 411.13 | 0.542 | 758.32 |
| block4_conv3 | (28,28,512) | 1850.09 | 2.361 | 783.59 | 822.26 | 1.062 | 774.37 | 411.13 | 0.542 | 758.32 |
| block5_conv1 | (14,14,512) | 462.52 | 0.616 | 750.75 | 205.57 | 0.280 | 733.95 | 102.78 | 0.146 | 705.54 |
| block5_conv2 | (14,14,512) | 462.52 | 0.616 | 750.75 | 205.57 | 0.280 | 733.95 | 102.78 | 0.146 | 705.54 |
| block5_conv3 | (14,14,512) | 462.52 | 0.616 | 750.75 | 205.57 | 0.280 | 733.95 | 102.78 | 0.146 | 705.54 |
| FC1 | (4096) | 102.76 | 0.157 | 655.36 | 51.38 | 0.078 | 655.36 | 25.69 | 0.039 | 655.36 |
| FC2 | (4096) | 16.78 | 0.026 | 655.36 | 8.39 | 0.013 | 655.36 | 4.19 | 0.006 | 655.36 |
| FC3 | (4096) | 0.41 | 0.001 | 512.00 | 0.20 | 0.000 | 512.00 | 0.10 | 0.000 | 512.00 |
| Total | - | 15,480.13 | 26.803 | 577.55 | 6886.72 | 12.010 | 573.36 | 3443.36 | 6.084 | 565.94 |
| Speedup | - | | - | | | 2.23× | | | 4.4× | |
| Compression Rate | - | - | | | 2.25× | | | 4.5× | | |
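The summary rows can be cross-checked from the totals. The sketch below recomputes throughput, speedup, and compression from the "Total" row; the dictionary keys and the helper name are labels chosen here for illustration. Small differences from the reported throughput are expected because the execution times are rounded to three decimals.

```python
def throughput_gops(operations_mops: float, time_ms: float) -> float:
    """MOPs per millisecond is numerically equal to GOPS (1e6 ops / 1e-3 s)."""
    return operations_mops / time_ms


# (total operations in MOPs, total execution time in ms) from the "Total" row
totals = {
    "dense": (15480.13, 26.803),
    "pruned_2.25x": (6886.72, 12.010),
    "pruned_4.5x": (3443.36, 6.084),
}

dense_ops, dense_ms = totals["dense"]
summary = {}
for name, (ops, ms) in totals.items():
    summary[name] = {
        "throughput_gops": throughput_gops(ops, ms),
        "speedup": dense_ms / ms,        # execution-time ratio vs. dense
        "compression": dense_ops / ops,  # operation-count ratio vs. dense
    }

for name, stats in summary.items():
    print(name, {key: round(value, 2) for key, value in stats.items()})
```

The ratio of speedup to compression is about 0.99 for the 2.25× network and about 0.98 for the 4.5× network, so nearly all of the removed operations turn into saved execution time.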

| Resources | Available | Dense Network | Pruned Network (2.25× compression) | Pruned Network (4.5× compression) |
|---|---|---|---|---|
| LUTs | 341.3 K | 279.0 K (81.76%) | 276.6 K (81.05%) | 276.1 K (80.90%) |
| FFs | 682.6 K | 279.4 K (40.94%) | 279.0 K (40.88%) | 278.9 K (40.86%) |
| LUTRAMs | 184.3 K | 2304 (1.25%) | 2304 (1.25%) | 2304 (1.25%) |
| BRAMs (36 Kb) | 744 | 416 (55.91%) | 416 (55.91%) | 416 (55.91%) |
| DSPs | 3528 | 785 (22.25%) | 785 (22.25%) | 785 (22.25%) |

| | [53] (X. Lian, 2019) | [52] (Q. Yi, 2021) | [54] (D. Kim, 2023) | [55] (C. Yang, 2024) | Ours |
|---|---|---|---|---|---|
| FPGA | XC7VX690T | XC7Z045 | XCVU9P | XCVU9P | XCZU15EG |
| Frequency (MHz) | 200 | 200 | 200 | 430 | 200 |
| DSPs | 1027 (29%) | 900 (98%) | 2286 (33%) | 576 (8%) | 785 (22%) |
| LUTs | 231.8 K (54%) | 118.0 K (54%) | 814 K (69%) | 93 K (8%) | 279.0 K (82%) |
| FFs | 141 K (16%) | 148.6 K (34%) | 795 K (34%) | NA | 279.4 K (41%) |
| BRAMs | 913 (62%) | 403 (74%) | 1663 (77%) | 336 (16%) | 416 (56%) |
| Throughput (GOPS) | 760.83 | 706 | 402 | 711 | 809.46 |
| Performance Efficiency (GOPS/DSP) | 0.74 | 0.78 | 0.18 | 1.23 | 1.03 |
| Power (W) | 9.18 | 7.2 | NA | 37.6 | 8.4 |
| Power Efficiency (GOPS/W) | 82.88 | 98.06 | NA | 18.9 | 96.36 |
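The two efficiency rows are derived quantities: throughput divided by the DSP count and by the power draw. A small sketch, using only designs for which the table reports all three inputs, reproduces them; the dictionary layout is an illustrative choice.

```python
# (throughput in GOPS, DSPs used, power in W) copied from the comparison table
designs = {
    "[53]": (760.83, 1027, 9.18),
    "[52]": (706.0, 900, 7.2),
    "[55]": (711.0, 576, 37.6),
    "Ours": (809.46, 785, 8.4),
}

efficiency = {
    name: {
        "gops_per_dsp": gops / dsps,
        "gops_per_watt": gops / watts,
    }
    for name, (gops, dsps, watts) in designs.items()
}

for name, stats in efficiency.items():
    print(f"{name}: {stats['gops_per_dsp']:.2f} GOPS/DSP, {stats['gops_per_watt']:.2f} GOPS/W")
```

Normalizing by DSPs and watts makes designs on different devices and clock frequencies easier to compare than raw throughput alone.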

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, W.; Mei, S.; Hu, J.; Ma, L.; Hao, S.; Lv, Z. Filter-Wise Mask Pruning and FPGA Acceleration for Object Classification and Detection. Remote Sens. 2025, 17, 3582. https://doi.org/10.3390/rs17213582
