1. Introduction

In recent years, with the increasing use and popularity of drones in both civilian and military domains, drones have been widely applied to detecting, recognizing, and tracking ground targets. The images and videos captured by drones differ significantly from those taken from a human eye-level perspective. Drone imagery typically features a bird’s-eye view with variable angles and heights [1]. This results in challenges such as uneven target distribution, small target proportions, overly expansive scenes, complex backgrounds, and susceptibility to weather conditions [2]. Consequently, object detection in drone-captured imagery presents significant technical challenges, which manifest in three critical dimensions inherent to aerial platforms. The foremost challenge stems from deficient feature representation: targets occupying limited pixel areas exhibit minimal texture and shape information, and their features degrade progressively through deep network hierarchies. Second, complex operational environments introduce substantial background clutter, where semantically irrelevant elements create high noise-to-signal ratios that impede reliable target–background differentiation. Third, stringent operational constraints require a careful balance between detection accuracy and computational efficiency: models must maintain real-time processing while operating within the strict power and memory budgets typical of embedded aerial platforms. Together, these interconnected challenges define the unique problem space of UAV-based detection systems and demand specialized architectural considerations beyond conventional computer vision approaches.

With the rapid advancement of deep learning-based object detection algorithms, numerous methods have emerged, which can be categorized into two types: single-stage detection and two-stage detection. Single-stage detection algorithms predict the location and category of targets directly from the feature maps of images, without generating candidate regions firstly. The core idea simplifies the object detection problem into a dense regression and classification task. This approach has clear advantages and disadvantages: it is fast and simple, but it may have slightly lower detection accuracy, especially in complex scenes with small targets. Classic single-stage algorithms include the You Only Look Once (YOLO) series [

3] and Single Shot Multibox Detector (SSD) [

4]. The YOLO series has evolved rapidly, dividing the input image into a

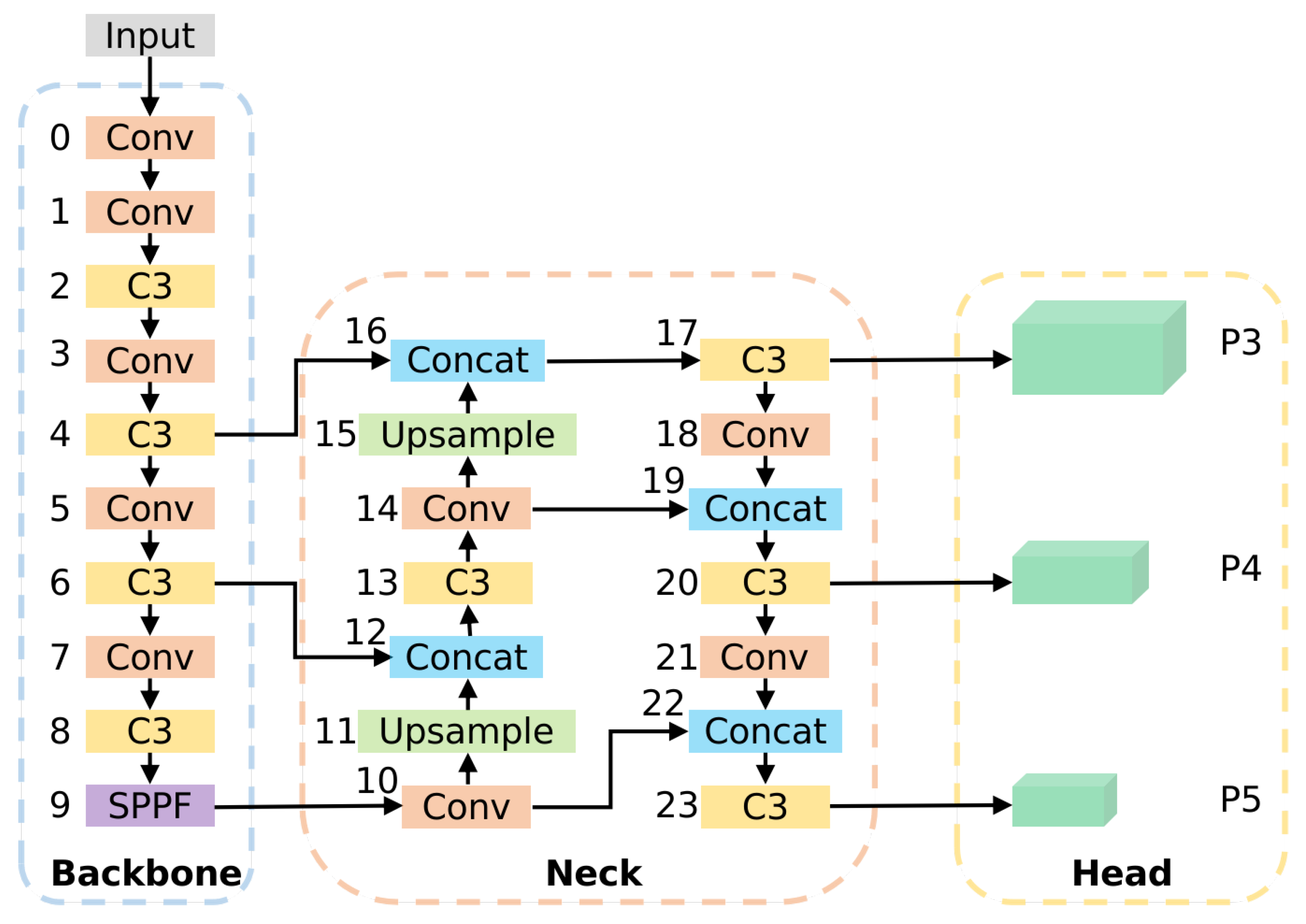

grid, each grid being responsible for detecting targets. The YOLO family has become one of the leading frameworks for drone detection due to its excellent balance between speed and accuracy. In recent years, research has focused on architectural evolution. YOLOv5 and YOLOv7 [

5] further optimized gradient flow and computational efficiency by introducing the more efficient CSPNet and ELAN structures. Subsequently, YOLOv8, YOLOv9 [

6], and YOLOv10 [

7] continued to improve multi-scale detection performance by introducing anchor-free designs, more advanced feature fusion networks and programmable model scaling strategies. However, these general improvements do not specifically address the sparse features and complex backgrounds of extremely small objects in drone imagery. While they provide strong baseline models, their feature extraction and fusion mechanisms still have room for improvement when directly addressing the specific challenges of drones. The latest YOLO algorithm is YOLOv13 [

8], while YOLOv5 is used mainly in industrial applications. Therefore, this research focuses on the YOLOv5 series for future industrial applications and experiments.
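As a minimal sketch of the grid assignment described above, assuming YOLOv1-style single-cell responsibility, the responsible cell and the center offsets within it can be computed as follows. YOLOv5 additionally assigns each target to neighboring cells and to several anchors, which this sketch omits; the function names and the 448-pixel, $S = 7$ example are illustrative assumptions.

```python
def responsible_cell(cx: float, cy: float, img_w: int, img_h: int, s: int) -> tuple[int, int]:
    """Return (row, col) of the S x S grid cell containing the box center (cx, cy), in pixels."""
    col = min(int(cx * s / img_w), s - 1)  # clamp centers lying exactly on the right edge
    row = min(int(cy * s / img_h), s - 1)  # clamp centers lying exactly on the bottom edge
    return row, col


def cell_offsets(cx: float, cy: float, img_w: int, img_h: int, s: int) -> tuple[float, float]:
    """Center position relative to the responsible cell's top-left corner, in cell units."""
    row, col = responsible_cell(cx, cy, img_w, img_h, s)
    return cx * s / img_w - col, cy * s / img_h - row


# Example: a 448 x 448 image split into a 7 x 7 grid (64-pixel cells).
print(responsible_cell(200, 100, 448, 448, 7))  # (1, 3)
print(cell_offsets(200, 100, 448, 448, 7))      # (0.125, 0.5625)
```

The offsets, rather than absolute coordinates, are what a grid-based head regresses, which keeps the regression targets in a small, bounded range.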

Two-stage detection algorithms involve two phases: the first phase generates candidate regions, and the second phase performs precise classification and bounding box regression for those regions. This method typically achieves higher detection accuracy than single-stage methods, because it first identifies potential target areas before performing detailed processing. Its advantages are high accuracy and flexibility, while its drawbacks are high computational requirements, slower speed, and increased model complexity. Classic two-stage detection algorithms include R-CNN, Fast R-CNN, and Faster R-CNN [9].

To address the unsatisfactory performance of small target detection from a drone’s perspective, Zhan et al. [10] introduced additional detection layers into the YOLOv5 model, which improved small target detection accuracy but also increased model complexity and slowed detection. Lim et al. [11] proposed a small target detection algorithm that combines context and attention mechanisms to focus on small objects in images. Although it improved small target detection to some extent, its accuracy still needs improvement for drone imagery. Liu et al. [12] designed a multibranch parallel pyramid network incorporating a supervised spatial attention module to reduce background noise interference and focus on target information. Despite improvements in small target detection, its accuracy remains relatively low. Feng et al. [13] combined the SCAM and SC-AFF modules in YOLOv5s and introduced a transformer module into the backbone network to enhance the extraction of small target features while maintaining a detection speed of 46 frames per second. Qiu et al. [14] added a lightweight channel attention mechanism to YOLOv5n, enhancing the network’s ability to extract effective information from feature maps. They also introduced an adaptive spatial feature fusion module and used the EIoU loss function to accelerate convergence, ultimately improving detection accuracy by 6.1 percentage points. Liu et al. [15] incorporated a channel-spatial attention mechanism into YOLOv5 to improve the extraction of target features and adopted an improved loss function for bounding box regression, increasing accuracy by 6.4%. Wang et al. [16] proposed SDS-YOLO, a lightweight drone aerial target detection algorithm based on YOLOv5, which adjusted the detection layers and receptive field structure and established multi-scale detection information dependencies between shallow and deep semantic layers, further strengthening the shallow network’s weights and improving small target detection performance. Di et al. [17] proposed a lightweight, high-precision detector based on YOLOv8s that addresses small target detection challenges in UAV imagery, including scale variation, target density, and inter-class similarity, through architectural refinements featuring Double Separation Convolution (DSC), a cross-level SPPL module, DyHead for adaptive feature fusion, and a unified WIPIoU loss, significantly enhancing accuracy while reducing computational complexity.

To enhance the model’s ability to focus on key information, attention mechanisms have been widely integrated into detection networks. SENet [18] adaptively recalibrates channel responses, and CBAM [19] extends this with an additional spatial attention step; both have become fundamental modules for improving the representational capability of convolutional neural networks. Recent research, such as SKNet [20], ECANet [21], and coordinate attention, has further explored more efficient or fine-grained feature recalibration strategies. In the field of drone detection, many works (e.g., refs. [11,14,15]) have embedded various attention modules into different stages of YOLO to improve small object detection performance. Although these methods have achieved some success, most of them use attention modules in isolation or sequentially, failing to fully exploit the synergy between channel and spatial attention, and they lack a unified attention architecture designed specifically for small object detection in complex backgrounds.

Beyond the aforementioned detection methodologies, the field has advanced significantly through the emergence of vision transformer (ViT) and other transformer-based architectures, exemplified by models such as DETR [22] and Swin Transformer [23]. These approaches reconceptualize image processing by representing an image as a sequence of tokens (for ViTs, non-overlapping patches) and leveraging self-attention in transformer encoders and decoders to capture long-range dependencies and perform end-to-end object detection. While ViTs offer compelling advantages, most notably the elimination of hand-crafted components such as anchor boxes and region proposal networks, which streamlines the overall architecture, their computational intensity presents substantial deployment challenges. Specifically, the quadratic complexity of self-attention with respect to the number of tokens, coupled with the large memory footprint required for high-resolution feature maps, renders standard ViT variants prohibitively resource-intensive for platforms with stringent hardware constraints, such as UAVs. In contrast, single-stage detectors (e.g., YOLO and SSD) offer a better balance between detection accuracy and computational efficiency. Their streamlined architecture, which performs dense predictions in a single pass over the feature maps, achieves favorable inference speeds with fewer parameters. This efficiency profile makes single-stage detectors a pragmatic choice for real-time object detection on computationally limited UAV platforms, where processing latency and power consumption are critical operational parameters. Yan et al. [24] presented an enhanced YOLOv10-based detection network for UAV imagery that incorporates adaptive convolution, multi-scale feature aggregation, and an improved bounding box loss to boost small target detection accuracy and robustness in complex, dense scenes.
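The quadratic cost of self-attention can be made concrete with a back-of-the-envelope count. The sketch below is illustrative only: it assumes a plain global self-attention layer over square patches (the patch size 16 and embedding width 256 are hypothetical values), counts only the $QK^\top$ product and the attention-weighted sum of $V$, and ignores projections and MLPs. Windowed designs such as Swin Transformer restrict attention to local windows precisely to avoid this growth.

```python
def attention_cost(height: int, width: int, patch: int = 16, dim: int = 256) -> tuple[int, int]:
    """Token count and multiply-accumulates (MACs) of one global self-attention layer."""
    n_tokens = (height // patch) * (width // patch)
    # Q @ K^T costs N*N*d MACs; weighting V by the N x N attention map costs another N*N*d.
    macs = 2 * n_tokens * n_tokens * dim
    return n_tokens, macs


for side in (640, 1280):
    tokens, macs = attention_cost(side, side)
    print(f"{side}x{side}: {tokens} tokens, {macs / 1e9:.1f} GMACs per attention layer")
```

Doubling the image side length quadruples the token count and therefore multiplies the attention cost by sixteen, which is why high-resolution aerial inputs are particularly expensive for global attention.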

Object detection in UAV-acquired aerial imagery is further complicated by two interconnected challenges: intricate background clutter and significant inter-class similarity. UAV platforms cover broad areas with heterogeneous backgrounds, which often introduces substantial environmental noise, with non-target elements competing for the model’s attention. Compounding this issue is the frequent morphological and chromatic resemblance among distinct object categories (e.g., vehicles, infrastructure, or natural features), which is particularly pronounced under suboptimal imaging conditions. The combination of intra-class variability and inter-class similarity produces ambiguous feature representations in latent space, substantially impeding robust class discrimination. The challenge escalates for small targets, where limited pixel resolution reduces the available discriminative features and thereby amplifies misclassification risk. To mitigate these limitations, recent methods have enhanced the attention mechanisms within established detection frameworks. Xiong et al. [25] refined the spatial attention module in YOLOv5, dynamically reweighting feature responses to amplify salient small target signatures while adaptively suppressing irrelevant background activations. Zhang et al. [26] incorporated Bi-level Routing Attention (BRA) into YOLOv10’s feature extraction stage, employing a two-level routing mechanism to establish sparse feature interactions that preserve critical foreground details while attenuating background interference through contextual filtering. Weng et al. [27] addressed the accuracy–efficiency trade-off in UAV infrared object detection by integrating ShuffleNetV2 with a Multi-Scale Dilated Attention (MSDA) module for adaptive feature extraction, designing DRFAConvP convolutions for efficient multi-scale fusion, and employing a Wise-IoU loss with dynamic gradient allocation, achieving strong performance under computational constraints.

Object detection in UAV aerial imagery is also frequently challenged by fuzzy object boundaries and severe occlusion. These limitations stem primarily from the distinctive operational context: the high-altitude oblique perspective often induces atmospheric interference and resolution constraints, degrading image quality so that object edges become indistinct, which complicates precise bounding box localization. Meanwhile, dense urban or crowded environments present pervasive occlusion, in which objects obscure one another. Under such conditions, critical targets may be only minimally visible, substantially reducing the discriminative information available to the model and impeding reliable identification and localization from fragmented visual cues. To address these challenges, recent research has focused on architectural enhancements to established detection frameworks. Wang et al. [28] refined the RT-DETR architecture by integrating the HiLo attention mechanism with an intra-scale feature interaction module in its hybrid encoder; this integration improves the model’s capacity to discern and prioritize densely packed targets amid clutter, reducing both the missed detection rate (MDR) and the false detection rate (FDR). Similarly, Chang et al. [29] augmented YOLOv5s with a coordinate attention mechanism placed after convolutional operations. This modification enhances the model’s sensitivity to spatially correlated features and channel dependencies, significantly boosting detection accuracy for small, low-contrast targets that are particularly susceptible to the image blurring prevalent in UAV-captured imagery. Qu et al. [30] proposed a small object detection algorithm for UAV imagery that integrates slicing convolution, cross-scale feature fusion, and an adaptive detection head to enhance feature retention and localization accuracy while reducing model complexity in complex, dense scenes.

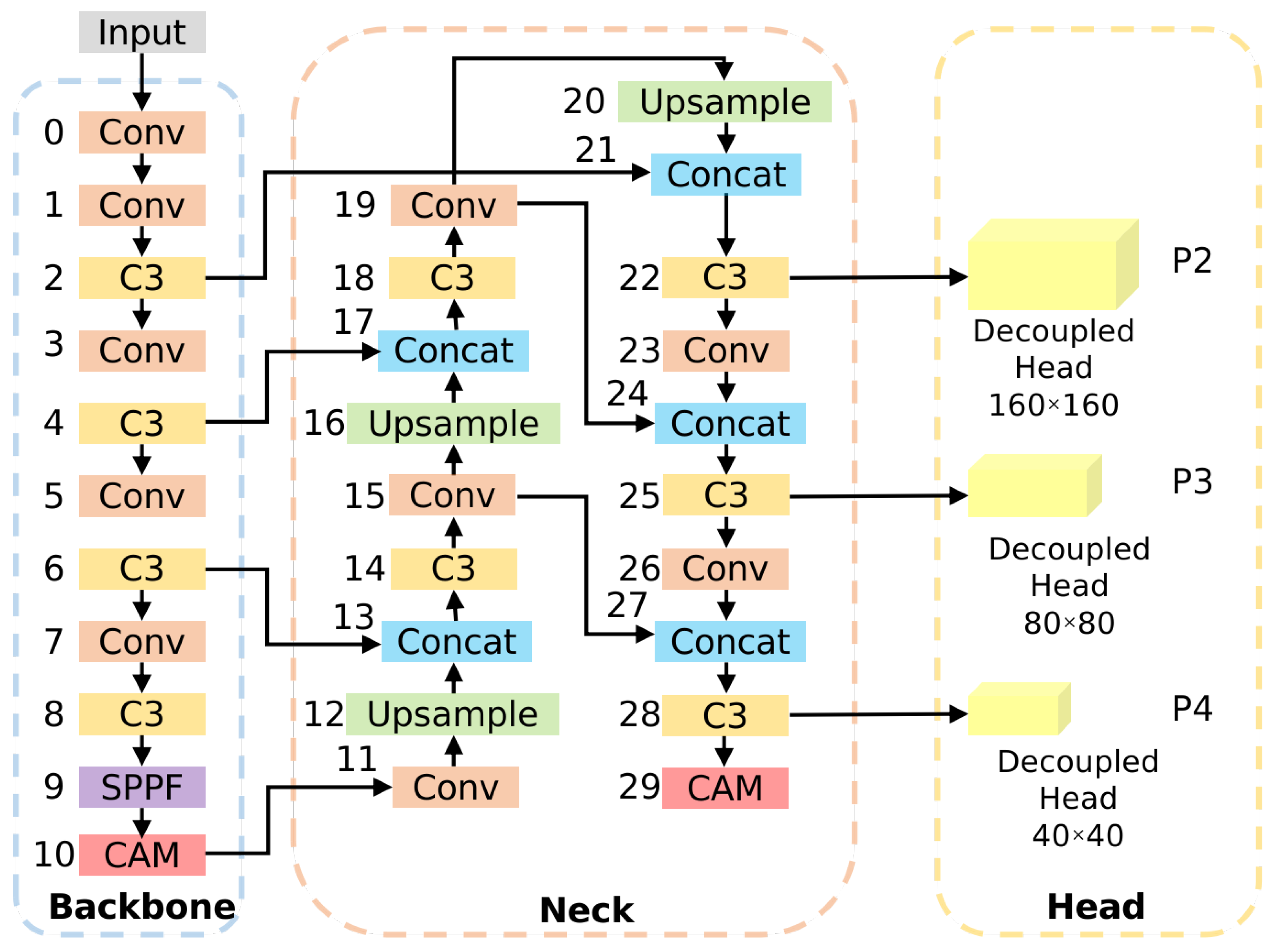

In summary, a clear research gap remains: how to systematically address the three core challenges of small object detection in drone imagery (weak features, cluttered backgrounds, and difficult regression) through collaborative architectural innovations within an extremely lightweight baseline model such as YOLOv5n, rather than by simply stacking modules or relying on larger models. YOLO-CAM, proposed in this paper, aims to fill this gap. Compared with existing work, our novelty lies in three aspects. First, this paper proposes a parallel-fusion CAM, rather than simple sequential stacking, to achieve more efficient feature calibration. Second, this paper restructures the detection head by both removing and adding detection layers, expanding the detection capability for small objects while reducing the total number of parameters. Third, this paper designs the inner-Focal-EIoU loss to specifically address the weak gradients and poor quality of small object regression. This paper aims to establish a better accuracy–efficiency balance, providing a practical, high-performance detection solution for resource-constrained drone platforms. The contributions of this paper are as follows:

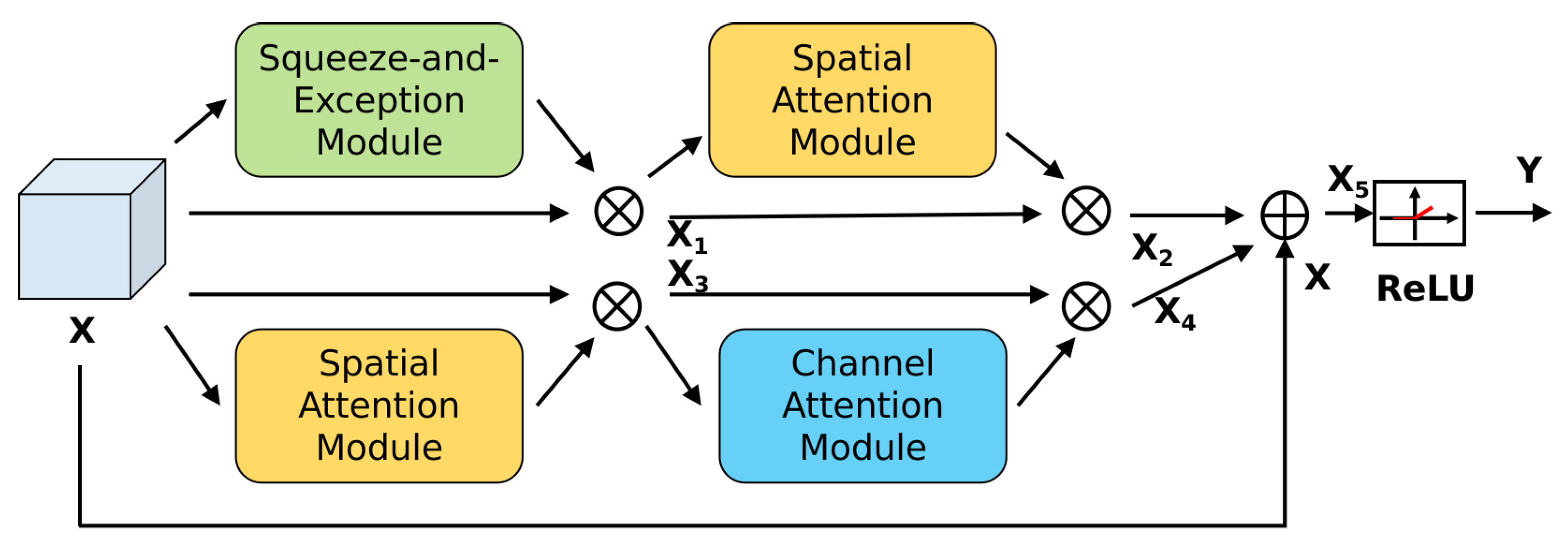

A novel combined attention mechanism (CAM) is proposed to achieve efficient feature calibration across dimensions. Unlike the commonly used methods of sequentially stacking or using attention modules separately, CAM adopts an innovative parallel fusion strategy to synergistically integrate channel attention (SE), spatial attention (SA), and improved channel attention (CA). This design enables the model to simultaneously and efficiently model inter-channel dependencies and critical spatial contextual information, significantly enhancing the feature representation of small objects in complex backgrounds. At the same time, due to its parameter-efficient nature, the computational overhead is minimal.

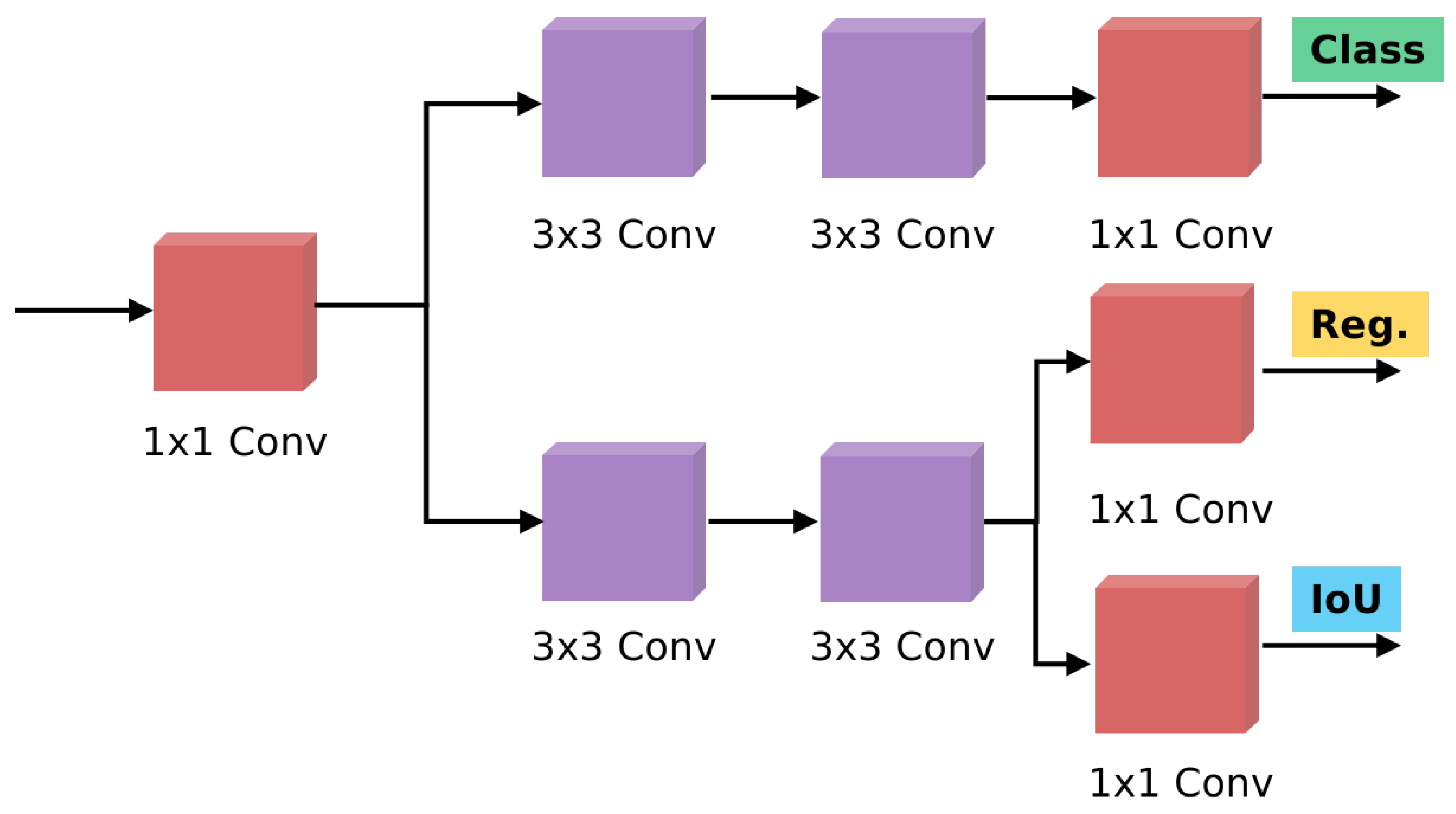

We designed a structural optimization scheme for detection heads tailored to the drone’s perspective, achieving a synergistic improvement in accuracy and efficiency. Rather than simply increasing the number of detection heads, we implemented a targeted architectural reorganization based on the distribution of object scales in drone imagery. We introduced a high-resolution P2 detection head to capture the fine features of tiny objects (down to pixels), while simultaneously removing the redundant P5 detection head for low-altitude targets. This "both increasing and decreasing" strategy not only expanded the detection capability for extremely small objects but also reduced the total number of model parameters by approximately 30%, demonstrating significant system-level optimization advantages.

The inner-Focal-EIoU loss function is introduced to specifically optimize the difficulties of small object regression. This loss function combines the auxiliary boundary concept of Inner-IoU with the dynamic focusing mechanism of Focal-EIoU. It calculates IoU using auxiliary boundaries, enhancing the model’s robustness to slight shifts in the bounding box. Furthermore, by dynamically adjusting the weights of difficult and easy examples, it prioritizes the regression process for low-quality examples (such as occluded and blurred small objects), effectively improving localization accuracy and model convergence speed.
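The effect of the detection head restructuring (adding P2 and removing P5) on the prediction grids can be sketched as follows. This assumes a 640 × 640 input and three anchors per cell, which are YOLOv5 defaults rather than values stated here, and the conventional strides of 4, 8, 16, and 32 for P2 to P5; the function name is hypothetical.

```python
def head_grid(input_size: int, strides: dict[str, int], anchors_per_cell: int = 3) -> dict[str, tuple[int, int]]:
    """Grid side length and number of anchor-level predictions per detection level (square input)."""
    return {
        level: (input_size // stride, (input_size // stride) ** 2 * anchors_per_cell)
        for level, stride in strides.items()
    }


BASELINE = {"P3": 8, "P4": 16, "P5": 32}   # YOLOv5 default detection levels
MODIFIED = {"P2": 4, "P3": 8, "P4": 16}    # P2 added, P5 removed

for name, strides in (("baseline", BASELINE), ("modified", MODIFIED)):
    grids = head_grid(640, strides)  # 640 x 640 input is an assumption
    total = sum(n for _, n in grids.values())
    print(name, grids, "total predictions:", total)
```

Under these assumptions the total number of predictions grows from 25,200 to 100,800, and each P2 cell covers only a 4 × 4 pixel patch. Because a convolution’s parameter count depends on its channels rather than on the spatial size of its feature map, dropping the deep P5 branch can lower the parameter count while the large P2 map raises the computation, which may help explain why parameters and GFLOPs move in opposite directions.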

By collaboratively designing these components, we constructed an extremely lightweight object detection model, YOLO-CAM. Comprehensive experiments on the challenging VisDrone2019 dataset demonstrate that our approach achieves an mAP50 of 31.0%, 7.5 percentage points higher than the original YOLOv5n, with only 1.65M parameters and a real-time speed of 128 FPS. This work provides a cost-effective solution for high-precision, real-time visual perception on computationally constrained drone platforms.

The remainder of this paper is organized as follows:

Section 2 presents the improved model proposed for small object detection in UAV images, detailing the model architecture and operational principles of related modules.

Section 3 outlines the experimental environment and parameter configurations, followed by test results on the VisDrone2019 dataset, including ablation studies, comparative evaluations, visualization experiments, and generalization experiments designed to validate the effectiveness of the proposed method.

Section 4 discusses potential directions for future research.

Section 5 concludes this paper.

3. Results

3.1. Datasets

This investigation employs the extensively validated VisDrone2019 benchmark dataset [43], a large-scale aerial imagery collection captured across diverse urban environments in 14 Chinese cities under variable illumination and weather conditions. Comprising 288 video sequences (totaling 261,908 annotated frames) and 10,209 high-resolution static images, it is one of the most comprehensive publicly available UAV-oriented detection datasets. Following the established evaluation protocol, we partition the static images into three subsets: 6471 training images for model optimization, 548 validation images for hyperparameter tuning, and 1610 test images for performance evaluation.

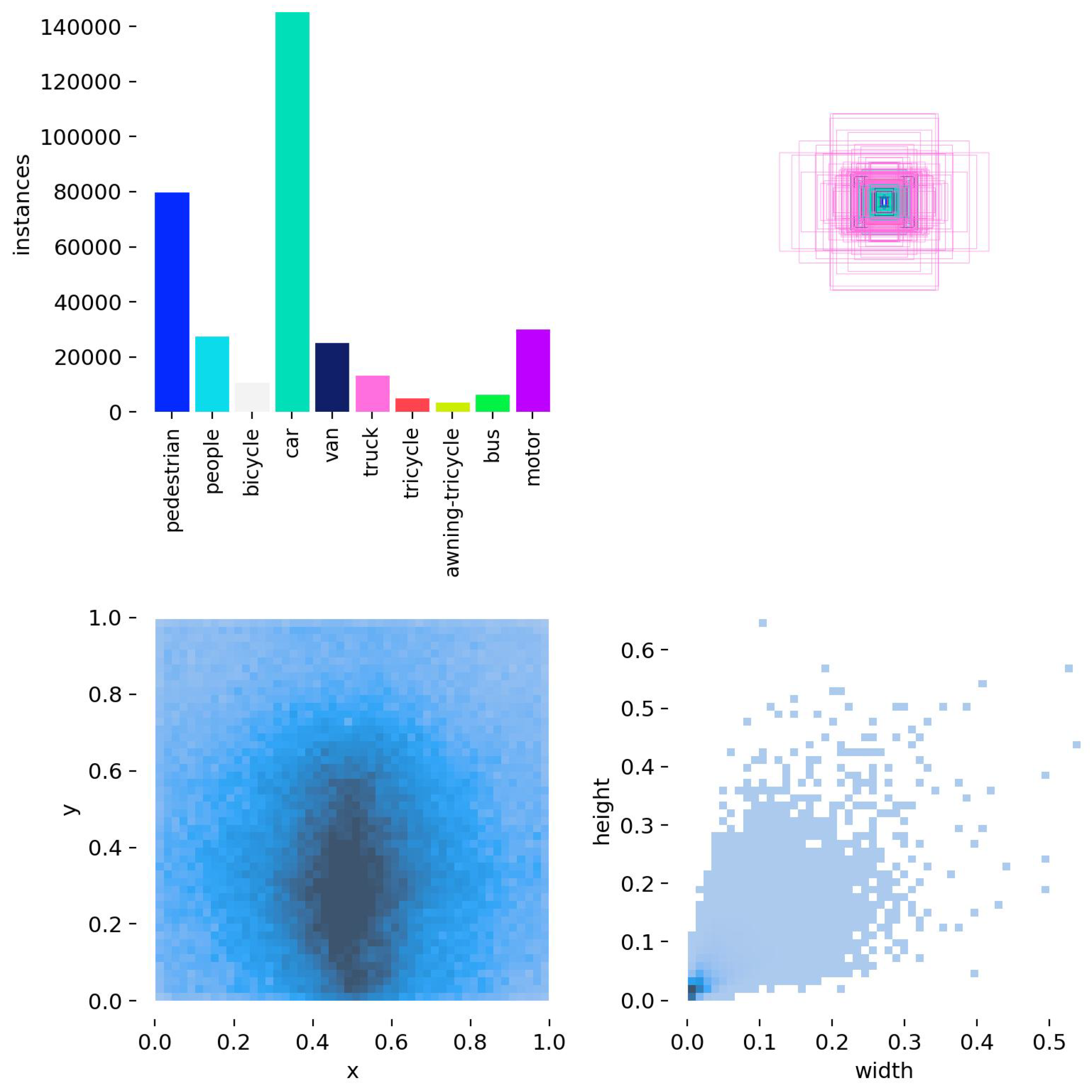

As illustrated in Figure 5, the dataset encompasses heterogeneous aerial perspectives, including nadir, oblique, and low-altitude viewpoints at altitudes ranging from 5 to 200 m, ensuring significant operational diversity. Ten critical urban object categories are exhaustively annotated, encompassing pedestrians, cyclists (bicycle/tricycle), motorized transport (car/bus/truck/van/motorcycle), and specialized vehicle types (covered tricycle), with instance-level bounding boxes exhibiting realistic occlusion patterns (26–83% occlusion ratios across categories). The 2.6 million annotations provide high label density (an average of 42.7 objects per image) and capture complex urban interactions.

Figure 6 further quantifies two critical characteristics: the categorical distribution reveals substantial class imbalance (pedestrians constitute 42.7% of instances versus 1.3% for buses), while object size profiling confirms the dataset’s small-target dominance (71.3% of objects occupy … pixels). This carefully curated dataset provides a rigorous testbed for evaluating aerial detection robustness against scale variation, occlusion complexity, and environmental heterogeneity.
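A size profile like the one in Figure 6 can be computed from the annotations with simple area-based bucketing. This sketch assumes COCO-style thresholds (box area below 32 × 32 pixels is small, below 96 × 96 is medium) and uses box area as a stand-in for object size; the threshold behind the 71.3% figure may differ, and the function name is hypothetical.

```python
def size_distribution(boxes_wh, small=32 ** 2, medium=96 ** 2):
    """Fraction of boxes in COCO-style size buckets, using box area (w * h) in pixels."""
    counts = {"small": 0, "medium": 0, "large": 0}
    for w, h in boxes_wh:
        area = w * h
        if area < small:
            counts["small"] += 1
        elif area < medium:
            counts["medium"] += 1
        else:
            counts["large"] += 1
    total = max(len(boxes_wh), 1)  # avoid division by zero for an empty list
    return {bucket: n / total for bucket, n in counts.items()}


print(size_distribution([(10, 12), (20, 20), (40, 50), (120, 100)]))
# {'small': 0.5, 'medium': 0.25, 'large': 0.25}
```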

3.2. Experimental Environment

The experiments were conducted on a machine with an Intel i9-14900KF processor, 128 GB of RAM, and an Nvidia RTX 4090 GPU with 24 GB of VRAM, running Ubuntu 22.04 with Python 3.10, PyTorch 2.1.2, and CUDA 12.1. The training hyperparameters follow common settings in the YOLOv5 community to ensure a fair comparison with the baseline model, as listed in Table 1.

3.3. Evaluation Metrics

The evaluation metrics used in this study include mAP50, mAP75, mAP50:95, Params, GFLOPs, and FPS. The mean Average Precision (mAP) is the average precision across all object categories: the Average Precision (AP) is computed for each category, and these AP values are then averaged. AP itself is the area under the precision–recall (P-R) curve, where precision (P) and recall (R) are defined as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

Here, True Positive (TP) refers to the number of correctly predicted positive samples, False Positive (FP) refers to the number of incorrectly predicted positive samples, and False Negative (FN) refers to the number of positive samples incorrectly predicted as negative.
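To make the TP, FP, and FN counts concrete, the sketch below matches detections of one class in one image greedily in descending score order at a fixed IoU threshold. It is a simplified assumption of how matching works: COCO’s official evaluator follows the same idea but also handles crowd regions and per-category bookkeeping. The box format (x1, y1, x2, y2) and the function names are illustrative.

```python
def iou_xyxy(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, gts, iou_thr=0.5):
    """Greedy matching for one class in one image; preds are (score, box), gts are boxes."""
    matched = [False] * len(gts)
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if not matched[j]:
                iou = iou_xyxy(box, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[best_j] = True   # each ground truth can be matched only once
            tp += 1
        else:
            fp += 1                  # no unmatched ground truth overlaps enough
    fn = matched.count(False)        # ground truths never matched are misses
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.7, (50, 50, 60, 60))]
counts = match_detections(preds, gts)
print(counts, precision_recall(*counts))  # (1, 2, 1) (0.3333333333333333, 0.5)
```

In this example the second detection is a near-duplicate of an already matched object, so it counts as a false positive, and the unmatched second object counts as a false negative.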

AP is defined as follows:

$$AP = \int_{0}^{1} P(R)\, dR$$

The mean Average Precision (mAP) averages the AP over all N classes, providing a single metric for evaluating overall model performance. mAP is defined as follows:

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

The evaluation framework uses established object detection metrics at several IoU thresholds. The mAP50 metric is the mean average precision at a permissive Intersection-over-Union (IoU) threshold of 0.5, emphasizing recall performance in localization-tolerant scenarios. Conversely, mAP75 applies a strict IoU threshold of 0.75 and therefore reflects precise bounding box regression capability. The comprehensive mAP50:95 metric averages mAP over ten IoU thresholds (from 0.50 to 0.95 in 0.05 increments), providing a holistic assessment of detection consistency across progressively stricter localization requirements.
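The AP integral and the mAP50:95 averaging can be sketched as follows. This assumes all-point interpolation, in which the precision envelope is made monotonically non-increasing before integrating; COCO’s official evaluation instead samples 101 recall points, so the two can differ slightly. The inputs are the cumulative precision and recall values obtained by sweeping the score threshold over ranked detections, and the function names are illustrative.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: each point takes the best precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the step curve; intervals where recall does not change contribute zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def iou_thresholds():
    """The ten IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP50:95."""
    return [round(0.50 + 0.05 * k, 2) for k in range(10)]


def mean_over(values):
    """mAP over classes, or mAP50:95 over the per-threshold mAP values."""
    return sum(values) / len(values)


# Ranked detections TP, FP, TP against two ground-truth objects.
recalls = [0.5, 0.5, 1.0]
precisions = [1.0, 0.5, 2 / 3]
print(average_precision(recalls, precisions))
```

Here the envelope lifts the precision at recall 0.5 back to 2/3 for the second half of the curve, giving an AP of 0.5 + 0.5 × 2/3 = 5/6. mAP50:95 is then the mean of the mAP values computed at each threshold in `iou_thresholds()`.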

Beyond detection quality, computational efficiency is characterized along three dimensions: parameter count (Params) indicates architectural complexity and memory footprint; giga floating-point operations (GFLOPs) measure the theoretical computational cost of inference; and frames per second (FPS) quantifies real-time deployment capability on the target hardware. For operational UAV systems, a sustained rate above 24 FPS is the empirical real-time threshold, corresponding to the limit of human perception of visual continuity. Crucially, input resolution directly affects processing latency: for convolutional layers, computational load grows linearly with the number of input pixels, that is, quadratically with image side length, so higher resolutions reduce FPS. To ensure standardized comparison, all efficiency metrics reported herein were measured at the conventional resolution under identical hardware configurations and software environments, eliminating confounding variables in performance benchmarking.
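The resolution dependence can be seen from the cost of a single convolution. This sketch counts one multiply-add as two FLOPs and ignores bias terms; some profiling tools report multiply-accumulates (MACs) instead, which differ by a factor of two, so GFLOPs figures should only be compared under one convention. The layer sizes below are hypothetical.

```python
def conv2d_cost(h_in: int, w_in: int, c_in: int, c_out: int, k: int, stride: int = 1) -> tuple[int, int]:
    """Weight count and FLOPs (2 per multiply-add, bias ignored) of one Conv2d layer."""
    padding = k // 2  # "same"-style padding for odd kernel sizes
    h_out = (h_in + 2 * padding - k) // stride + 1
    w_out = (w_in + 2 * padding - k) // stride + 1
    params = c_in * c_out * k * k        # independent of the input resolution
    flops = 2 * h_out * w_out * params   # every weight is applied once per output pixel
    return params, flops


for side in (320, 640):
    params, flops = conv2d_cost(side, side, c_in=16, c_out=32, k=3)
    print(f"{side}x{side}: params={params}, GFLOPs={flops / 1e9:.3f}")
```

Doubling the side length leaves the parameter count unchanged but multiplies the FLOPs by four, which is why Params and GFLOPs must be reported separately.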

3.4. Ablation Experiments

To rigorously evaluate the individual and collective contributions of the proposed architectural refinements to small object detection, a systematic ablation study was conducted on the VisDrone2019 benchmark dataset. This controlled experiment assesses four innovations integrated into the YOLOv5n baseline: (1) the Combined Attention Mechanism (CAM) module for cross-dimensional feature recalibration; (2) decoupled prediction heads to resolve task-specific optimization conflicts; (3) dual specialized detection pathways for small and micro targets (32px and 16px, respectively); and (4) replacement of the conventional CIoU loss with the gradient-aware inner-Focal-EIoU formulation. The quantitative outcomes in Table 2 use check marks (✓) to indicate which components are active in each progressively augmented configuration. All trials were executed on identical hardware and in identical containerized software environments to eliminate confounding variables.

The ablation results reveal distinct mechanistic contributions from each component. The CAM module independently increases mAP50 from 23.5% to 27.4%, representing the most significant individual improvement. This enhancement stems from CAM’s dual-path attention design, which combines channel-wise and spatial attention mechanisms. The channel attention component adaptively recalibrates feature responses by suppressing noisy channels and amplifying discriminative ones, while the spatial attention module prioritizes critical regions by generating spatial weight maps that highlight small targets amidst complex backgrounds. Despite a moderate increase in GFLOPs (from 4.2 to 6.6) due to additional attention computations, the model’s parameter count decreases by 0.156 million, indicating efficient feature enhancement without significant structural expansion. Consequently, CAM effectively suppresses background clutter and enhances the visibility of small objects, which are often submerged in heterogeneous aerial imagery.
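A minimal NumPy sketch of the parallel fusion idea follows, under several stated assumptions: the three branches (SE-style channel attention, CBAM-style spatial attention, and a coordinate-attention-style branch standing in for the improved channel attention) all receive the same input, and their outputs are averaged. The exact branch designs and fusion rule of CAM may differ; here the spatial branch uses two scalar weights in place of a convolution, the coordinate branch has no learned layers, and all names and sizes are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def se_branch(x, w1, w2):
    """SE-style channel attention: global average pool -> FC -> ReLU -> FC -> sigmoid."""
    squeezed = x.mean(axis=(1, 2))                           # (C,)
    weights = sigmoid(w2 @ np.maximum(w1 @ squeezed, 0.0))   # (C,)
    return x * weights[:, None, None]


def spatial_branch(x, a, b):
    """CBAM-style spatial attention; two scalar weights replace the usual 7x7 conv (simplification)."""
    mask = sigmoid(a * x.mean(axis=0) + b * x.max(axis=0))  # (H, W)
    return x * mask[None, :, :]


def coord_branch(x):
    """Coordinate-attention-style branch: direction-aware pooling along H and W, no learned layers."""
    along_h = sigmoid(x.mean(axis=2))  # (C, H), pooled over width
    along_w = sigmoid(x.mean(axis=1))  # (C, W), pooled over height
    return x * along_h[:, :, None] * along_w[:, None, :]


def cam_parallel(x, params):
    """Hypothetical parallel fusion: all branches see the same input and their outputs are averaged."""
    outputs = [
        se_branch(x, params["w1"], params["w2"]),
        spatial_branch(x, params["a"], params["b"]),
        coord_branch(x),
    ]
    return sum(outputs) / len(outputs)


rng = np.random.default_rng(0)
C, H, W, R = 16, 8, 8, 4  # channels, height, width, SE reduction ratio (all hypothetical)
params = {
    "w1": rng.standard_normal((C // R, C)) * 0.1,
    "w2": rng.standard_normal((C, C // R)) * 0.1,
    "a": 1.0,
    "b": 1.0,
}
x = rng.standard_normal((C, H, W))
y = cam_parallel(x, params)
print(y.shape)  # (16, 8, 8)
```

Because every branch multiplies the input by weights in (0, 1), the fused output keeps the sign of each activation and never exceeds it in magnitude; learning which channels and locations to shrink is what allows such a module to suppress background responses. A sequential design would instead feed the output of one branch into the next.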

The decoupled head contributes a 1.1% improvement in mAP50 by resolving the inherent conflict between classification and localization. In conventional coupled heads, a shared feature map serves both tasks despite their divergent requirements: classification benefits from translation-invariant features, whereas localization demands translation-covariant representations, so the two objectives produce conflicting gradients. By splitting these tasks into dedicated branches (fully connected layers for classification and convolutional layers for regression), the model reduces this gradient conflict, converges more stably, and learns more specialized features. The benefit is largest for small objects, where precise localization is most difficult, and it yields higher precision in both object categorization and bounding box regression. The accompanying parameter increase to 1.846M reflects the additional branch structures but is justified by the improved task-specific feature learning.
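The difference between a coupled and a decoupled head can be sketched with 1 × 1 convolutions (a 1 × 1 convolution is a fully connected layer applied at every spatial location). This is an illustrative, YOLOX-style layout under assumptions: ten classes as in VisDrone, three anchors per cell, a 32-channel hidden stem in each branch, and random weights. It is not the paper’s exact head, and all names are hypothetical.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """1x1 convolution (a per-pixel fully connected layer): x is (C_in, H, W), weight (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def coupled_head(x, weight, bias, num_anchors, num_classes):
    """Coupled (YOLOv5-style) head: one layer predicts box, objectness, and class scores together."""
    out = conv1x1(x, weight, bias)  # (A * (5 + K), H, W)
    return out.reshape(num_anchors, 5 + num_classes, *x.shape[1:])


def decoupled_head(x, params, num_anchors, num_classes):
    """Decoupled head: a classification branch and a separate box/objectness branch."""
    h, w = x.shape[1:]
    cls_feat = np.maximum(conv1x1(x, *params["cls_stem"]), 0.0)  # classification-specific features
    reg_feat = np.maximum(conv1x1(x, *params["reg_stem"]), 0.0)  # localization-specific features
    cls = conv1x1(cls_feat, *params["cls_out"]).reshape(num_anchors, num_classes, h, w)
    box = conv1x1(reg_feat, *params["box_out"]).reshape(num_anchors, 4, h, w)
    obj = conv1x1(reg_feat, *params["obj_out"]).reshape(num_anchors, 1, h, w)
    return cls, box, obj


rng = np.random.default_rng(0)
C, H, W, A, K, HIDDEN = 64, 20, 20, 3, 10, 32  # K = 10 VisDrone classes; other sizes hypothetical


def layer(c_out, c_in):
    """Random weights and zero bias for a hypothetical 1x1 layer."""
    return rng.standard_normal((c_out, c_in)) * 0.01, np.zeros(c_out)


x = rng.standard_normal((C, H, W))
coupled = coupled_head(x, *layer(A * (5 + K), C), A, K)
params = {
    "cls_stem": layer(HIDDEN, C), "reg_stem": layer(HIDDEN, C),
    "cls_out": layer(A * K, HIDDEN), "box_out": layer(A * 4, HIDDEN), "obj_out": layer(A, HIDDEN),
}
cls, box, obj = decoupled_head(x, params, A, K)
print(coupled.shape, cls.shape, box.shape, obj.shape)
```

In the coupled version every output channel reads the same features; in the decoupled version the classification and box branches compute their own hidden features, which is the separation credited with reducing gradient conflict, at the price of the extra stem parameters.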

The inner-Focal-EIoU loss function independently boosts mAP50 by 0.9%, and the measured inference speed rose by 32 FPS in this configuration. Because the loss is computed only during training and adds no operations at inference time, this speed difference cannot stem from the loss computation itself; it may instead reflect measurement variation or differences in post-processing (e.g., non-maximum suppression) workload between the trained models. The loss incorporates an auxiliary bounding box mechanism (Inner-IoU) to improve generalization, which matters particularly for small targets, where slight label offsets can drastically reduce IoU. The Focal component dynamically scales the loss according to IoU values, emphasizing hard examples and accelerating convergence. By decoupling dimensional optimization from positional regression, inner-Focal-EIoU makes bounding box regression more robust under the occlusion and scale variation common in UAV imagery; in particular, it mitigates regression instability under occlusion and reduces localization variance, a critical advance for drone-based perception, where partial target visibility is endemic. This empirical decomposition establishes both the necessity and the integration strategy of each proposed modification for addressing the limitations of conventional detectors in aerial small object detection.
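One possible way to combine the three ingredients is sketched below for a single box pair in (cx, cy, w, h) format. It follows the published Inner-IoU formulation (auxiliary boxes share the original centers and are scaled by a ratio, and the IoU term of EIoU is swapped for the inner IoU) and the Focal-EIoU weighting $\mathrm{IoU}^{\gamma} \cdot \mathcal{L}$. The ratio 0.8 and $\gamma = 0.5$ are assumed values, and the paper’s exact combination may differ. Note that in the original Focal-EIoU the $\mathrm{IoU}^{\gamma}$ factor grows with IoU, so the weighting has to be chosen accordingly if hard examples are to be emphasized.

```python
def to_corners(box):
    """(cx, cy, w, h) -> (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou_corners(a, b):
    """Plain IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def inner_focal_eiou(pred, gt, ratio=0.8, gamma=0.5, eps=1e-9):
    """Hypothetical inner-Focal-EIoU for one box pair in (cx, cy, w, h) format."""
    p, g = to_corners(pred), to_corners(gt)
    iou = iou_corners(p, g)

    # Inner-IoU: auxiliary boxes keep the centers but scale width and height by `ratio`.
    p_inner = to_corners((pred[0], pred[1], pred[2] * ratio, pred[3] * ratio))
    g_inner = to_corners((gt[0], gt[1], gt[2] * ratio, gt[3] * ratio))
    inner_iou = iou_corners(p_inner, g_inner)

    # EIoU penalties, normalized by the smallest enclosing box (width cw, height ch).
    cw = max(p[2], g[2]) - min(p[0], g[0])
    ch = max(p[3], g[3]) - min(p[1], g[1])
    center = ((pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2) / (cw ** 2 + ch ** 2 + eps)
    width = (pred[2] - gt[2]) ** 2 / (cw ** 2 + eps)
    height = (pred[3] - gt[3]) ** 2 / (ch ** 2 + eps)
    eiou = 1.0 - iou + center + width + height

    # Inner-EIoU: replace the IoU term of EIoU with the inner IoU.
    inner_eiou = eiou + iou - inner_iou
    # Focal weighting as in Focal-EIoU: multiply by IoU ** gamma.
    return iou ** gamma * inner_eiou


print(inner_focal_eiou((0.5, 0.5, 0.2, 0.2), (0.5, 0.5, 0.2, 0.2)))   # perfect match
print(inner_focal_eiou((0.5, 0.5, 0.2, 0.2), (0.55, 0.5, 0.2, 0.2)))  # shifted prediction
```

With a ratio below 1, the auxiliary boxes overlap less than the originals for a shifted prediction (an inner IoU of about 0.52 versus an IoU of 0.6 here), which strengthens the penalty on small offsets; setting `ratio=1.0` and `gamma=0.0` recovers plain EIoU.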

The combination of all components (YOLO-CAM) yields a non-linear performance gain: mAP50 improves by 7.5 percentage points over the baseline, exceeding the sum of the individual improvements (3.9 + 1.1 + 0.9 = 5.9 points). This synergy arises from the complementary nature of the enhancements. The CAM-enhanced features provide a richer representation for the decoupled head to exploit, allowing more accurate classification and regression. Simultaneously, the inner-Focal-EIoU loss optimizes bounding box regression on these improved features, further refining localization accuracy. Specifically, the decoupled head leverages the spatially refined features from CAM to reduce task interference, while the loss function ensures stable gradient propagation even for low-IoU samples. This tripartite collaboration results in a coherent system in which each component amplifies the others’ strengths, leading to superior detection performance, especially for small, occluded, or cluttered targets. The integrated approach thus addresses the core challenges of aerial detection (background clutter, task conflict, and regression instability) in a cohesive manner.

The efficiency metrics demonstrate a favorable trade-off between accuracy and computational cost. The parameter count decreases from 1.773M to 1.648M in the full YOLO-CAM model, primarily due to the removal of the P5 detection head, which was found to be redundant for typical UAV operating altitudes. Although the FPS decreases from 261 to 128, this reduction is justified by the substantial accuracy gains. The final speed of 128 FPS far exceeds the real-time threshold of 24 FPS, ensuring practical deployment on resource-constrained UAV platforms. The GFLOPs increase slightly from 4.2 to 6.7, reflecting the added complexity of CAM and the decoupled head, but the model remains lightweight and efficient.
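Throughput figures such as 128 FPS depend on how they are measured. A minimal timing sketch follows, assuming a hypothetical `infer` callable that processes one input and returns only when the result is ready (for a GPU model this means synchronizing the device, for example with `torch.cuda.synchronize()`, before returning). Warm-up calls are excluded from the timing, and FPS is converted to per-frame latency for comparison with the 24 FPS threshold.

```python
import time


def measure_fps(infer, sample, warmup=10, runs=100):
    """Average single-stream throughput of `infer` over `runs` calls, after `warmup` untimed calls."""
    for _ in range(warmup):
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    return runs / (time.perf_counter() - start)


def latency_ms(fps):
    """Per-frame latency in milliseconds for a given throughput."""
    return 1000.0 / fps


def dummy_infer(values):
    # Stand-in workload; a real measurement would call the detector here.
    return sum(v * v for v in values)


fps = measure_fps(dummy_infer, list(range(10_000)))
print(f"measured: {fps:.0f} FPS ({latency_ms(fps):.2f} ms/frame)")
print(f"128 FPS -> {latency_ms(128):.2f} ms/frame; 24 FPS -> {latency_ms(24):.2f} ms/frame")
```

At 128 FPS each frame takes about 7.8 ms, leaving a large margin under the roughly 41.7 ms per-frame budget implied by 24 FPS; whether pre- and post-processing are inside the timed call should be stated alongside any reported figure.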

The ablation study outcomes presented in Table 2 quantify each architectural enhancement’s contribution to model performance. The CAM module raises mAP50 by 3.9%, mAP75 by 2.1%, and mAP50:95 by 2.2% while reducing the parameter count by 0.156M, at the cost of a 118 FPS drop in inference throughput; the feature recalibration is therefore parameter-efficient but not computationally free. The subsequent detection head restructuring yields further gains of 1.1% in mAP50, 0.9% in mAP75, and 0.7% in mAP50:95, with a modest parameter increase from 1.773M to 1.846M. Adopting the inner-Focal-EIoU loss independently boosts mAP50 by 0.9%, mAP75 by 0.8%, and mAP50:95 by 0.7%, confirming its role in localization refinement, while the measured inference speed rose by 32 FPS. Cumulatively, the integrated modifications improve mAP50 by 7.5%, mAP75 by 4.9%, and mAP50:95 by 4.6% over the baseline while reducing parameters by 0.125M, yielding a favorable accuracy–efficiency balance for UAV-oriented detection.
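The headline numbers can be cross-checked directly. The short calculation below uses only figures reported in this section and makes explicit that the 7.5% gain is an absolute difference in percentage points, which corresponds to roughly a 32% relative improvement over the baseline mAP50 of 23.5%, while throughput is roughly halved.

```python
baseline = {"map50": 23.5, "params_m": 1.773, "fps": 261}
yolo_cam = {"map50": 31.0, "params_m": 1.648, "fps": 128}

abs_gain = yolo_cam["map50"] - baseline["map50"]          # percentage points
rel_gain = 100 * abs_gain / baseline["map50"]             # percent of the baseline mAP50
param_cut = baseline["params_m"] - yolo_cam["params_m"]   # millions of parameters
fps_ratio = yolo_cam["fps"] / baseline["fps"]             # fraction of baseline throughput kept
single_gains = 3.9 + 1.1 + 0.9                            # CAM, head, and loss gains reported above

print(f"mAP50: +{abs_gain:.1f} pp ({rel_gain:.1f}% relative)")
print(f"params: -{param_cut:.3f}M, throughput kept: {fps_ratio:.2f}, single-component sum: {single_gains:.1f} pp")
```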

3.5. Comparison Experiments

To rigorously evaluate the performance of the proposed method, comprehensive comparative experiments were conducted against state-of-the-art object detection architectures on the challenging VisDrone2019 benchmark dataset. This large-scale aerial imagery corpus, characterized by significant scale variation, dense target distribution, and complex urban backgrounds, serves as an authoritative testbed for UAV-oriented detection systems. All evaluated models underwent standardized preprocessing, with input images uniformly resized to the same resolution, followed by identical training protocols, including data augmentation strategies and optimization parameters. During testing, each algorithm was assessed under identical conditions on the same dedicated hardware platform with consistent software dependencies to eliminate confounding factors. Quantitative results were compiled across multiple performance dimensions, including precision-recall metrics, computational efficiency indicators, and small object detection efficacy, and are presented in Table 3. This stringent evaluation framework ensures methodological reproducibility and provides insight into architectural advantages under the real-world operational constraints of drone-based surveillance, traffic monitoring, and infrastructure inspection, where model robustness against environmental degradation is paramount.

Comprehensive comparative analysis on the VisDrone2019 benchmark substantiates the efficacy of the proposed algorithm relative to contemporary state-of-the-art detectors. The proposed architecture achieves a 7.5% absolute improvement in mAP50 over the YOLOv5n baseline and an 11.1% gain over RetinaNet. The 3.5% mAP50 advantage over YOLOv5s appears marginal. However, it comes together with a large parameter reduction, 90% lower computational complexity (GFLOPs), and a smaller memory footprint, so the efficiency gains are obtained without sacrificing detection accuracy. These characteristics reflect the algorithm’s specialization for UAV-based perception, which rests on three advantages:

(1) enhanced small-target discriminability through multi-scale feature fusion, evidenced by higher recall for sub-32px objects;

(2) stronger contextual modeling through hybrid attention, which exploits spatial-channel dependencies in complex aerial scenes; and

(3) a computational footprint suited to embedded hardware, with throughput exceeding real-time thresholds while detection remains robust under illumination variation and occlusion.

Together, these refinements extract richer features from the limited visual cues of low-altitude imagery and shift the accuracy–efficiency trade-off favorably for drone-based visual perception systems.
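Recall for sub-32px objects is typically measured by bucketing ground-truth boxes by area. The sketch below assumes the COCO convention: a box is small if its area is below 32², medium if below 96², and large otherwise. It also uses simplified greedy matching. Predictions are processed in descending confidence order, and each one claims the unmatched ground truth with the highest IoU at or above 0.5. The helper names are hypothetical, and the matching omits parts of full COCO evaluation such as crowd regions and per-class separation.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) in pixels."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def size_bucket(box):
    """COCO-style size class from box area: small < 32^2 <= medium < 96^2 <= large."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def recall_by_size(gts, preds, iou_thr=0.5):
    """Recall per size bucket.

    gts:   list of ground-truth boxes (x1, y1, x2, y2)
    preds: list of (score, box) pairs for the same image and class
    """
    matched = set()
    # Most confident predictions claim their best-overlapping free ground truth first.
    for _score, p in sorted(preds, key=lambda sp: sp[0], reverse=True):
        best, best_iou = None, iou_thr
        for idx, g in enumerate(gts):
            if idx in matched:
                continue
            overlap = box_iou(g, p)
            if overlap >= best_iou:
                best, best_iou = idx, overlap
        if best is not None:
            matched.add(best)

    # Count ground truths and matched ground truths per size bucket.
    totals, hits = {}, {}
    for idx, g in enumerate(gts):
        bucket = size_bucket(g)
        totals[bucket] = totals.get(bucket, 0) + 1
        hits[bucket] = hits.get(bucket, 0) + int(idx in matched)
    return {b: hits[b] / totals[b] for b in totals}
```

A detector that misses one of two tiny objects but finds a large one would report a small-object recall of 0.5 and a large-object recall of 1.0. This bucketed view is what separates small-target gains from overall recall.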

In this comparative analysis, it is important to note that YOLO-CAM and the recently proposed SD-YOLO [44] and SL-YOLO [45] represent two distinct design paradigms for drone object detection.

SD-YOLO and SL-YOLO are both built on the YOLOv8s architecture. They achieve significant gains in detection accuracy by introducing complex feature enhancement modules, establishing state-of-the-art performance among small-scale detectors.

YOLO-CAM is instead built on the extremely lightweight YOLOv5n architecture. By combining an attention mechanism, structured detection head optimization, and a dedicated loss function, it achieves 31.0% mAP50 with only 1.65M parameters at 128 FPS, setting a new performance benchmark for nano-scale detectors.

This comparison illustrates an important design trade-off. In resource-constrained deployments, YOLO-CAM’s system-level lightweight co-design offers a favorable accuracy–efficiency balance for micro-UAV platforms with strict limits on computing power, energy consumption, and storage. Models built on larger baselines are better suited to applications that are less sensitive to computing resources but demand maximum accuracy. This distinction provides a clear basis for selecting detectors for UAV platforms with different application requirements.

3.6. Visualization and Comparative Analysis

To assess the classification performance of the trained detection model, we employ the confusion matrix shown in Figure 7, which gives per-class prediction accuracy and error distribution. The model achieves high recall and precision for prevalent classes such as people and car. Performance is lower for classes with fewer instances and for visually similar classes. For example, awning-tricycle is occasionally misclassified as tricycle because of their morphological resemblance. This analysis indicates that the overall mAP is limited primarily by the long-tail class distribution of the dataset and by inter-class similarity, rather than by a systematic failure across all classes.
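Per-class precision and recall follow directly from the columns and rows of a confusion matrix. The sketch below is a simplified illustration with hypothetical helper names that counts (true, predicted) label pairs for matched detections only. Detection confusion matrices commonly also include a background class for missed and spurious detections, which is omitted here. The toy labels mimic the awning-tricycle/tricycle confusion described above and are not data from this study.

```python
from collections import Counter


def confusion_matrix(pairs, classes):
    """Rows are ground-truth classes, columns are predicted classes.

    pairs: (true_label, predicted_label) for each matched detection.
    """
    counts = Counter(pairs)
    return [[counts[(t, p)] for p in classes] for t in classes]


def per_class_precision_recall(matrix, classes):
    """Precision is read from each column, recall from each row."""
    stats = {}
    for k, name in enumerate(classes):
        tp = matrix[k][k]
        true_total = sum(matrix[k])                 # instances whose true class is `name`
        pred_total = sum(row[k] for row in matrix)  # detections labeled `name`
        precision = tp / pred_total if pred_total else 0.0
        recall = tp / true_total if true_total else 0.0
        stats[name] = (precision, recall)
    return stats


# Toy labels (not from the paper): two awning-tricycles are mistaken for tricycles.
classes = ["tricycle", "awning-tricycle", "car"]
pairs = ([("car", "car")] * 5 + [("tricycle", "tricycle")] * 3
         + [("awning-tricycle", "tricycle")] * 2
         + [("awning-tricycle", "awning-tricycle")] * 2)
matrix = confusion_matrix(pairs, classes)
stats = per_class_precision_recall(matrix, classes)
```

In this toy case, tricycle keeps a recall of 1.0, but its column absorbs two awning-tricycles, so its precision falls to 0.6. Awning-tricycle keeps a precision of 1.0, but its recall drops to 0.5. This is the asymmetric pattern that morphological resemblance produces.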

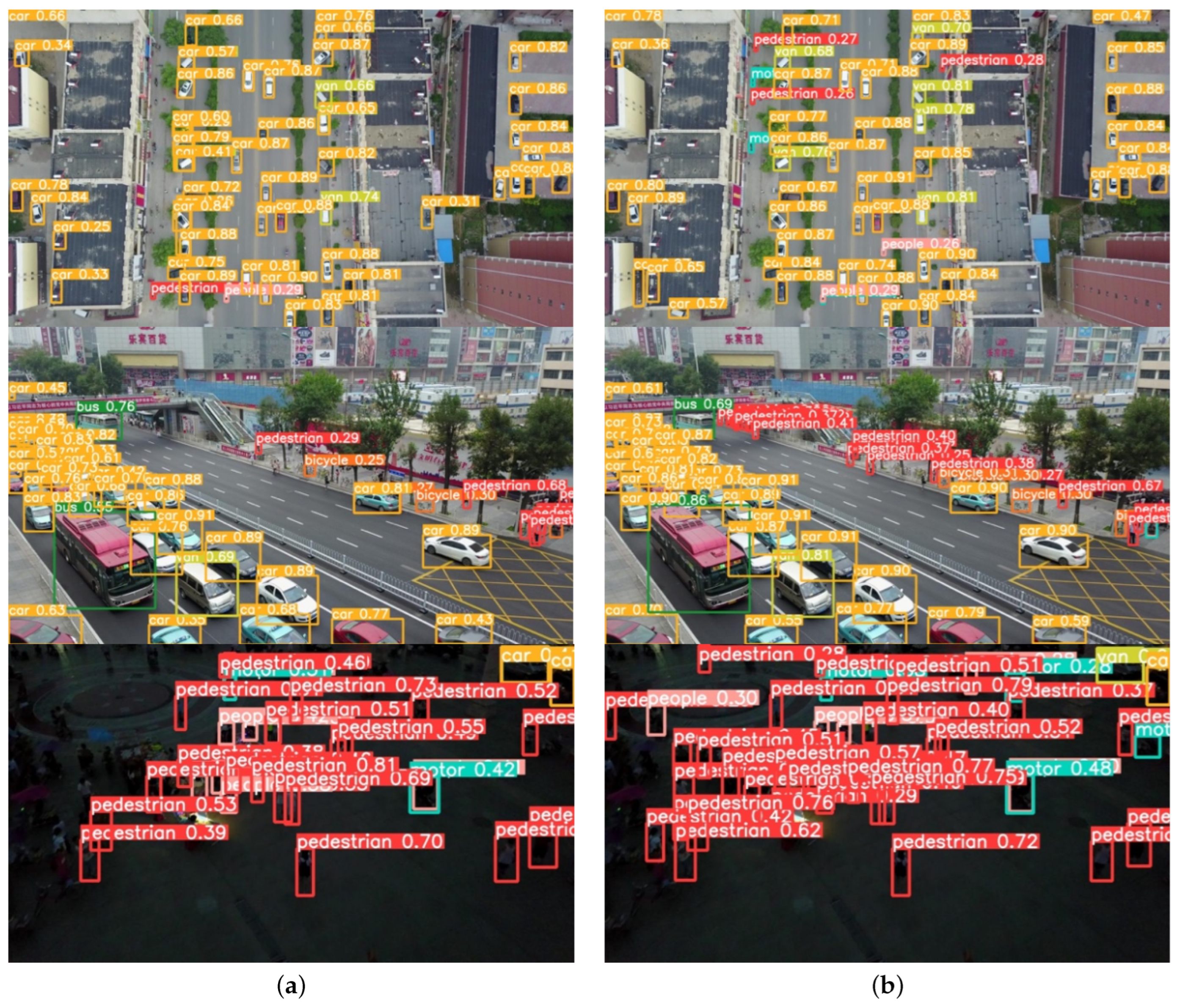

To validate the proposed enhancements visually, we examined representative samples from the VisDrone2019 test set. The selected detection outcomes in Figure 8 provide qualitative evidence of the model’s perceptual capabilities across challenging aerial scenarios, along three dimensions:

(1) improved small-target recognition, with consistent detection of sub-20px pedestrians and vehicles amid complex urban textures;

(2) greater occlusion robustness, with accurate localization of partially obscured objects in high-density traffic scenes and precise bounding box regression despite 60–80% occlusion ratios; and

(3) stronger false positive suppression in cluttered backgrounds, eliminating spurious detections from architectural patterns and shadow artifacts that frequently mislead baseline models.

The comparison further shows that detection remains continuous across scale transitions, from low-altitude close-ups to high-altitude panoramas, while maintaining temporal coherence in object trajectory prediction. This visual evidence complements the quantitative metrics by showing operational advantages under real-world conditions, where environmental complexity, target density, and imaging limitations jointly challenge conventional detectors. It thereby supports the solution’s practical viability for UAV applications including infrastructure inspection, emergency response, and precision surveillance.

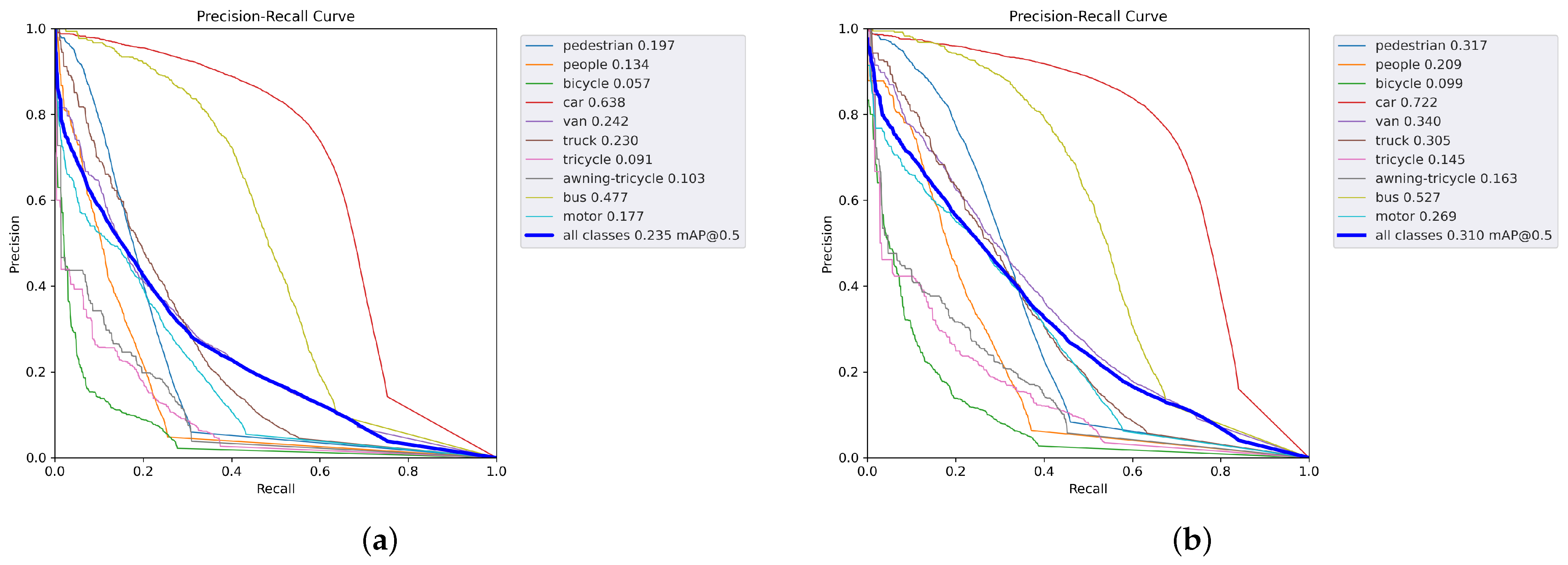

The Precision-Recall (PR) curve is a key diagnostic tool for evaluating classification performance, particularly under severe class imbalance, where target instances are rare relative to negative instances. It depicts the trade-off between two quantities:

- precision, the fraction of positive predictions that are true positives, TP/(TP+FP);
- recall, the fraction of genuine positive instances that the model finds, TP/(TP+FN).

The two metrics typically move inversely: lowering the confidence threshold raises recall but tends to reduce precision, and vice versa. Because neither metric involves true negatives, the PR curve focuses on the positive class and avoids the bias that abundant negative samples introduce in imbalanced settings. The area under the PR curve (AUC-PR), computed per class as average precision (AP) in object detection, summarizes performance across all decision thresholds in a single scalar.

As shown in Figure 9, the proposed algorithm outperforms the baseline across all object categories, with the most notable gain in pedestrian detection, where the PR-based score rises from 19.7% to 31.7%. This 60.9% relative improvement (12.0 percentage points on a 19.7% baseline) underscores the architecture’s enhanced capability in small-object discrimination and dense-scene analysis, which we attribute to its refined feature extraction and contextual modeling. The simultaneous rise in precision and recall indicates fewer false negatives and fewer false positives, which is critical for applications demanding high-fidelity detection under operational constraints. These advances set a new performance benchmark for UAV-based visual perception systems, where reliably identifying targets only a few pixels in size in cluttered environments is paramount for precision surveillance, automated inspection, and critical infrastructure monitoring.
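The link between a PR curve and a per-class AP score can be sketched as follows. Detections are ranked by confidence, and cumulative precision and recall are computed after each one. The area under the monotone precision envelope is then summed, using the all-point interpolation of PASCAL VOC 2010 and later. COCO-style evaluators instead sample 101 recall points, so values can differ slightly. The function name and inputs are hypothetical, and matching detections to ground truth (the `is_tp` flags) is assumed to have been done beforehand.

```python
def average_precision(scores, is_tp, num_gt):
    """Area under the precision-recall curve for one class (all-point interpolation).

    scores: confidence of each detection
    is_tp:  True if that detection was matched to a ground-truth object
    num_gt: number of ground-truth objects of the class (must be positive)
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall, precision = [], []
    for i in order:  # lower the confidence threshold one detection at a time
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))

    # Pad the curve, then make precision non-increasing from right to left.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])

    # Sum rectangle areas wherever recall increases.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))
```

For example, three detections ranked TP, FP, TP against two ground-truth objects give an AP of 5/6 ≈ 0.833. mAP50 is this quantity averaged over classes, with matches counted at IoU ≥ 0.5.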

The qualitative comparison presented in Figure 7 further supports the proposed algorithm’s detection efficacy in operationally challenging scenarios. Relative to the YOLOv5n baseline, the architecture performs markedly better in high-density small-target environments, which we attribute to its enhanced multi-scale feature fusion and contextual modeling. It is also robust in degraded imaging conditions. In low-illumination nighttime scenes (Figure 7, third row), it maintains detection of low-contrast pedestrian targets that the baseline misses, achieving higher recall despite photon-limited noise. These results illustrate three advances:

- better spatial discernment in clustered object distributions through occlusion-robust attention;
- more discriminative features for sub-32px targets through hierarchical resolution preservation;
- improved tolerance to illumination artifacts without compromising inference speed.

The consistent performance differential across diverse environmental contexts, from urban congestion to nocturnal operations, confirms the architecture’s advantages in real-world UAV applications, where conventional detectors show limited small-target sensitivity and environmental adaptability. This positions it as a practical solution for precision surveillance, infrastructure inspection, and emergency response systems that require reliable perception under challenging conditions.

Complementary qualitative validation on sampled VisDrone2019 test cases corroborates these quantitative gains. The visualizations show three operational improvements: (1) better small-target discriminability in high-density urban environments, with fewer occlusion-induced misses; (2) consistent detection across scale transitions, from low-altitude close-ups to panoramic views; and (3) stronger false positive suppression in cluttered backgrounds, particularly for the vegetation and shadow artifacts that frequently degrade baseline performance. Together, these results show that the solution addresses key limitations of conventional drone-based detection systems, making it well suited to real-world applications that require robust perception under size, weight, and power constraints.

3.7. Generalization Experiments

To evaluate the generalization capability and robustness of the proposed YOLO-CAM framework for object detection in unmanned aerial imagery, we conducted additional validation on the Aerial Dataset of Floating Objects (AFO) [46]. This dataset targets marine search and rescue through deep learning-based detection of objects on water surfaces. It comprises 3647 images drawn from 50 drone-captured video sequences, with over 40,000 annotated instances of persons and floating objects. Small-scale targets are prevalent, and their limited pixel footprint and low contrast against the water background make them difficult to detect. The dataset is partitioned into training (67.4% of objects), validation (13.48%), and test (19.12%) subsets. The test set is drawn from nine held-out videos to prevent data leakage and provide an objective measure of generalization. Both the baseline YOLOv5n and the proposed YOLO-CAM were fully retrained and evaluated under identical settings, and the quantitative results are compared in Table 4.

The proposed YOLO-CAM model outperforms the YOLOv5n baseline on the AFO dataset across all evaluation metrics. It achieves mAP50, mAP75, and mAP50:95 scores of 28.8%, 15.5%, and 12.5%, respectively, compared with 23.6%, 14.5%, and 11.6% for YOLOv5n. The gain is largest at the lenient IoU threshold (5.2 percentage points in mAP50) and smaller for the stricter metrics (1.0 and 0.9 points). These improvements indicate that the model generalizes beyond VisDrone2019 to a different data distribution and handles characteristic aerial imaging challenges such as small target size, partial occlusion, and dense object clustering. The qualitative comparison in Figure 10 corroborates these findings, showing clearer detections and fewer false negatives on sample images from the AFO dataset and visually confirming the practical advantage of the proposed model.
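The AFO results can be expressed both as absolute gains in percentage points and as gains relative to the baseline. The distinction matters because a 1.0-point gain on a 14.5% baseline is modest in absolute terms but about 7% in relative terms. The snippet below recomputes both from the values reported above and adds no new measurements. The dictionary layout and the `gains` helper are illustrative assumptions.

```python
# mAP values (%) reported for the AFO test set in the text above.
baseline = {"mAP50": 23.6, "mAP75": 14.5, "mAP50:95": 11.6}   # YOLOv5n
proposed = {"mAP50": 28.8, "mAP75": 15.5, "mAP50:95": 12.5}   # YOLO-CAM


def gains(base, new):
    """Return {metric: (absolute gain in points, relative gain in %)}, rounded to 0.1."""
    result = {}
    for metric, b in base.items():
        delta = new[metric] - b
        result[metric] = (round(delta, 1), round(100.0 * delta / b, 1))
    return result


for metric, (abs_gain, rel_gain) in gains(baseline, proposed).items():
    print(f"{metric}: +{abs_gain} points ({rel_gain}% relative)")
```

This prints gains of 5.2, 1.0, and 0.9 points, corresponding to 22.0%, 6.9%, and 7.8% relative improvements. The same formula gives the 60.9% relative improvement quoted for pedestrians on VisDrone2019 (12.0 points on a 19.7% baseline).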

5. Conclusions

This study introduces YOLO-CAM, an enhanced object detection framework based on YOLOv5n and optimized for small target recognition in UAV imagery. To achieve high detection accuracy under strict computational constraints, a novel CAM is integrated into the feature pyramid network. It performs channel and spatial feature recalibration in parallel, sharpening the focus on minute objects in cluttered environments. The detection head is restructured by adding a high-resolution P2 prediction layer capable of detecting objects as small as pixels and by removing the P5 head, which is redundant in low-altitude scenarios. This extends detection coverage while reducing the parameter count. Furthermore, the conventional CIoU loss is replaced with the inner-Focal-EIoU loss, which improves gradient behavior and convergence stability for small target localization through auxiliary boundary computation and dynamic sample weighting. On the VisDrone2019 dataset, YOLO-CAM attains an mAP50 of 31.0%, a 7.5% gain over YOLOv5n, while sustaining real-time inference at 128 FPS. Comparative assessments confirm its superior balance between accuracy and efficiency relative to contemporary detectors, underscoring its practical suitability for UAV-based visual perception tasks.
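The loss can be sketched in plain Python for a single box pair. The sketch assumes the published definitions of three components:

- EIoU: 1 − IoU plus center-distance, width, and height penalties, each normalized by the smallest enclosing box.
- Focal-EIoU: EIoU weighted by IoU^γ.
- Inner-IoU: IoU computed on auxiliary boxes rescaled about each box center by a ratio.

The components are combined as L = IoU^γ · (L_EIoU + IoU − IoU_inner), following the pattern Inner-IoU proposes for extending existing losses. That composition, the function name `inner_focal_eiou`, and the defaults `ratio=0.8` and `gamma=0.5` are assumptions rather than the authors’ implementation or settings.

```python
def inner_focal_eiou(pred, gt, ratio=0.8, gamma=0.5, eps=1e-9):
    """Sketch of an inner-Focal-EIoU loss for one box pair (hypothetical helper).

    Boxes are (cx, cy, w, h). `ratio` scales the auxiliary (inner) boxes about
    their centers; `gamma` is the focal exponent. Both defaults are assumptions.
    """
    def corners(box, scale=1.0):
        cx, cy, w, h = box
        return (cx - w * scale / 2, cy - h * scale / 2,
                cx + w * scale / 2, cy + h * scale / 2)

    def iou(a, b):
        iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
        ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
        inter = iw * ih
        union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
        return inter / (union + eps)

    p, g = corners(pred), corners(gt)
    plain_iou = iou(p, g)
    # Auxiliary boxes: same centers, width and height scaled by `ratio`.
    inner_iou = iou(corners(pred, ratio), corners(gt, ratio))

    # EIoU penalties, normalized by the smallest box enclosing both originals.
    cw = max(p[2], g[2]) - min(p[0], g[0])
    ch = max(p[3], g[3]) - min(p[1], g[1])
    center = ((pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2) / (cw ** 2 + ch ** 2 + eps)
    width = (pred[2] - gt[2]) ** 2 / (cw ** 2 + eps)
    height = (pred[3] - gt[3]) ** 2 / (ch ** 2 + eps)
    eiou = 1.0 - plain_iou + center + width + height

    # Inner-IoU variant: swap the IoU term of EIoU for the inner IoU.
    inner_eiou = eiou + plain_iou - inner_iou
    # Focal weighting: down-weight low-overlap (low-quality) pairs by IoU**gamma.
    return (plain_iou ** gamma) * inner_eiou
```

With `ratio=1.0`, the inner boxes coincide with the originals and the expression reduces to Focal-EIoU. The Inner-IoU authors suggest smaller auxiliary boxes (ratio below 1) for high-IoU samples and larger ones for low-IoU samples.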

Despite YOLO-CAM’s strong performance across diverse operational scenarios, its robustness under degraded imaging conditions, such as pronounced motion blur and extremely low illumination, remains an area for further improvement. In addition, the model has been validated only on visible-spectrum datasets. Its generalizability to other imaging modalities, including infrared and multispectral data, remains unverified, which may limit its applicability in specialized domains such as nighttime surveillance or multisource reconnaissance. Although the model is architecturally lightweight, its practical efficiency on resource-constrained embedded drone processors warrants further empirical validation and potential refinement through compression techniques such as quantization and structured pruning.

Future research will focus on deploying and evaluating the model on embedded aerial platforms under dynamic flight conditions. We also intend to combine digital twin frameworks with synthetic data generation to improve model stability in edge-case environments. Another important direction is incorporating multimodal fusion mechanisms to strengthen environmental adaptability and all-weather reliability.

In conclusion, YOLO-CAM offers a computationally efficient and pragmatically viable detection solution for UAV platforms operating under stringent resource constraints. We posit that this system-level co-design methodology will provide a valuable reference for future developments in lightweight vision models tailored for aerial robotics and mobile perception systems.