IAASNet: Ill-Posed-Aware Aggregated Stereo Matching Network for Cross-Orbit Optical Satellite Images

Highlights

- An ill-posed-aware stereo matching framework integrates monocular depth estimation with adaptive geometry fusion to improve disparity estimation in ill-posed regions of cross-orbit images.

- An enhanced mask augmentation strategy improves robustness to occlusions, weak textures, and imaging challenges in cross-orbit satellite conditions.

- The method achieves a D1 error of 5.38% and an EPE of 0.958 px on the corrected US3D dataset, with the largest gains in ill-posed regions (D1 of 21.76% versus 24.10% for MonSter).

- The approach generalizes better, enabling more reliable cross-orbit remote sensing applications.

Abstract

1. Introduction

- We construct an ill-posed-aware aggregation network that incorporates monocular depth. Ill-posed regions are identified via left-right consistency masks and used as constraints to generate ill-posed-aware features. By adaptively weighting and aggregating the aware and geometric features, the network improves disparity estimation accuracy.

- We propose an enhanced mask augmentation (EMA) training strategy for remote sensing imagery that combines random erasing with key-point mask augmentation to improve the robustness and generalization of the model in complex scenarios.

- Our method achieves state-of-the-art performance on the US3D cross-orbit satellite stereo matching dataset, with the largest improvements in ill-posed regions.

2. Related Work

2.1. Deep Learning for Stereo Matching

2.2. Disparity Optimization in Ill-Posed Regions

3. Materials and Methods

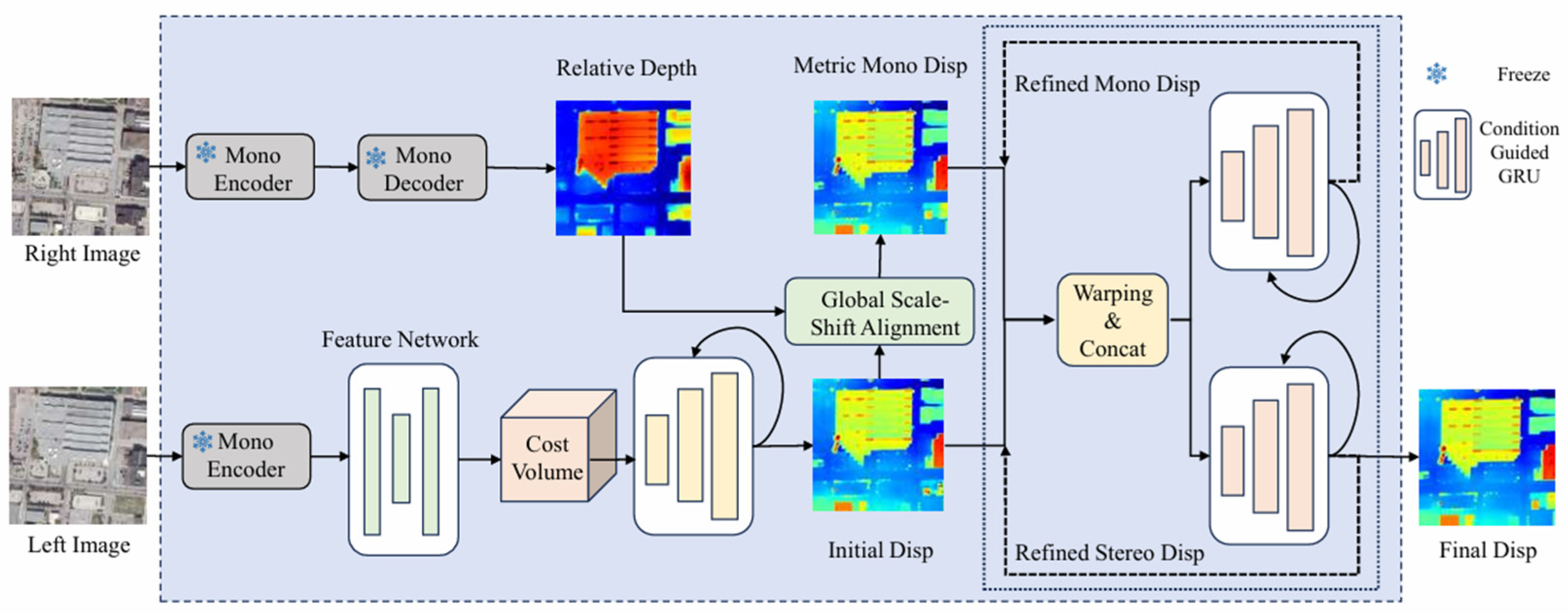

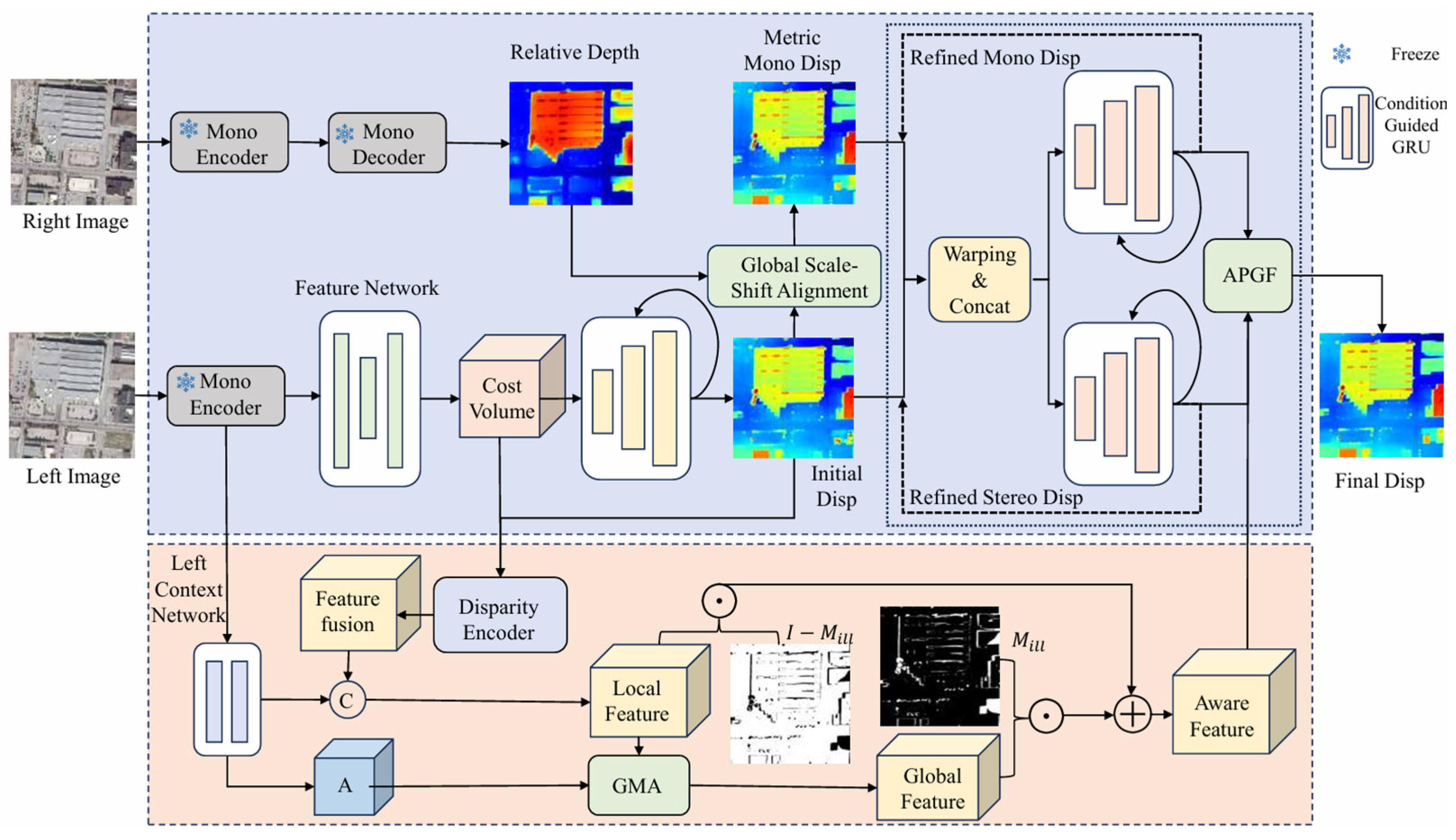

3.1. Overall Framework

3.2. Marry Monodepth to Stereo Matching

3.2.1. Monocular and Stereo Branches

3.2.2. Mutual Refinement

3.3. Ill-Posed-Aware Aggregated Satellite Stereo Matching Network

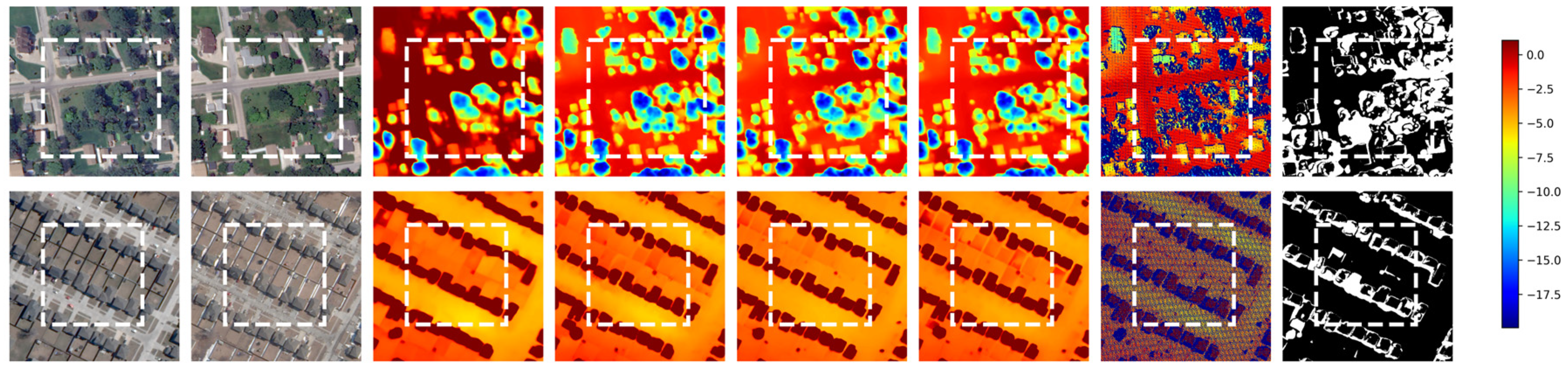

3.3.1. Ill-Posed Region Estimation

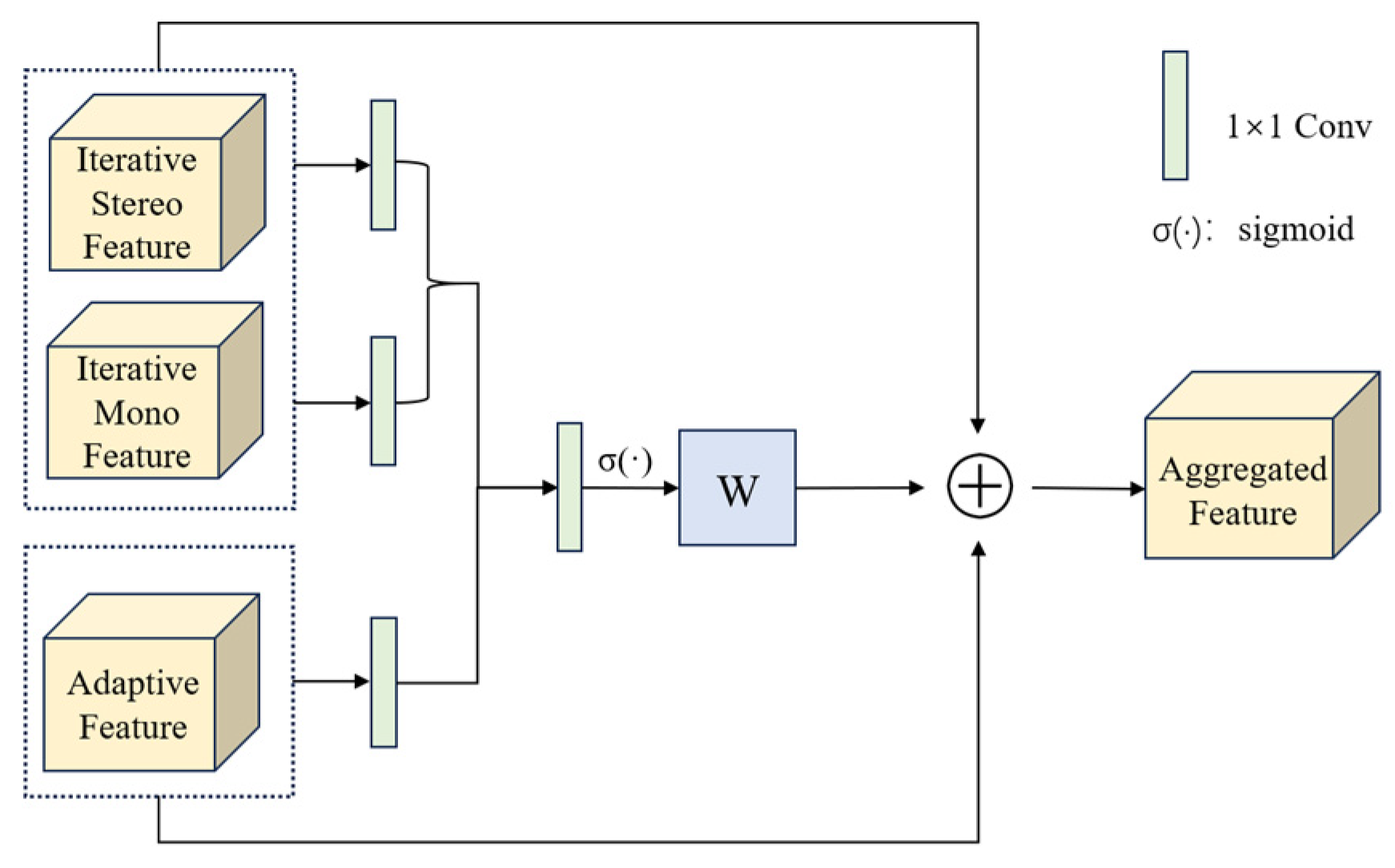
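The contribution list states that ill-posed regions are identified via left-right consistency masks. As a rough illustration only, the sketch below flags pixels whose left and right disparities disagree. The function name `ill_posed_mask`, the sign convention `x_right = x_left - d_left`, the nearest-pixel lookup, and the 1 px threshold are all assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def ill_posed_mask(disp_left, disp_right, threshold=1.0):
    """Return a boolean (H, W) mask that is True where the left-right check fails.

    Assumed convention: a left pixel at column x matches the right pixel at
    column x - disp_left. The threshold is illustrative, not from the paper.
    """
    h, w = disp_left.shape
    xs = np.tile(np.arange(w, dtype=np.float64), (h, 1))
    # Column in the right image that each left pixel maps to (nearest pixel).
    x_right = np.rint(xs - disp_left).astype(np.int64)
    in_bounds = (x_right >= 0) & (x_right < w)
    x_clipped = np.clip(x_right, 0, w - 1)
    # Disparity that the right view reports at the matched column.
    disp_back = np.take_along_axis(disp_right, x_clipped, axis=1)
    inconsistent = np.abs(disp_left - disp_back) > threshold
    # Pixels that map outside the image cannot be verified, so flag them too.
    return inconsistent | ~in_bounds
```

Because the lookup subtracts a signed disparity, the same code also handles the negative disparities that can occur in cross-orbit pairs, under the stated convention.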

3.3.2. Ill-Posed-Guided Adaptive Aware Geometry Fusion
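The contribution list describes the fusion step only as adaptively weighting and aggregating aware and geometric features. One generic way to realize such a weighting is a per-pixel gate that forms a convex combination of the two feature maps. The sketch below shows that idea under the assumption that a gate map is already available; `gated_fusion` and `gate_logits` are hypothetical names, and the network's actual fusion module may differ.

```python
import numpy as np

def gated_fusion(aware, geometry, gate_logits):
    """Blend two (C, H, W) feature maps with a per-pixel weight in (0, 1).

    gate_logits has shape (H, W) or (1, H, W); in a real network it would be
    predicted by a learned layer, which is outside the scope of this sketch.
    """
    weight = 1.0 / (1.0 + np.exp(-gate_logits))  # sigmoid
    # Weight near 1 favors the aware features, near 0 the geometric ones.
    return weight * aware + (1.0 - weight) * geometry
```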

3.4. Data Augmentation and Training

3.4.1. Enhanced Mask Augmentation
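EMA is described as integrating random erasing with key-point mask augmentation, and the ablation table varies a number range, a size range, and an erasing rate. A minimal sketch of the random-erasing half is given below. It assumes that "Number" is the count of rectangles, "Size" is the side length in pixels, and "Erasing Rate" is the probability of applying the augmentation; it also assumes a mean-value fill. None of these interpretations is confirmed by this excerpt, and the key-point mask part is not sketched because its details are not given here.

```python
import numpy as np

def random_erase(image, rng, number=(1, 5), size=(100, 200), rate=1.0):
    """Return a copy of `image` (H, W) or (H, W, C) with random rectangles erased.

    number: inclusive range for how many rectangles to erase (assumed meaning).
    size:   inclusive range for rectangle height and width in pixels (assumed).
    rate:   probability of applying the augmentation at all (assumed).
    """
    out = image.copy()
    if rng.random() >= rate:
        return out  # augmentation skipped for this sample
    h, w = out.shape[:2]
    fill = out.mean(axis=(0, 1))  # per-channel mean as the fill value
    count = int(rng.integers(number[0], number[1] + 1))
    for _ in range(count):
        eh = min(int(rng.integers(size[0], size[1] + 1)), h)
        ew = min(int(rng.integers(size[0], size[1] + 1)), w)
        y0 = int(rng.integers(0, h - eh + 1))
        x0 = int(rng.integers(0, w - ew + 1))
        out[y0:y0 + eh, x0:x0 + ew] = fill
    return out
```

The defaults mirror the last EMA configuration in the ablation table ([1, 5], [100, 200], 1), read under the assumptions above.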

3.4.2. Loss Function

4. Results

4.1. Experiment Setting

4.1.1. Datasets

4.1.2. Evaluation Metrics
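The result tables report D1 (in percent) and EPE (in pixels). As a hedged illustration, the sketch below computes EPE as the mean absolute disparity error over valid pixels and D1 as the percentage of valid pixels whose error exceeds a threshold. The 3 px threshold is a common convention and is an assumption here, since the exact definition is not stated in this excerpt; `epe_and_d1` is a hypothetical helper name, and `valid` is assumed to contain at least one pixel.

```python
import numpy as np

def epe_and_d1(pred, gt, valid, d1_threshold=3.0):
    """Return (EPE in px, D1 in %) over the pixels selected by `valid`.

    pred, gt: (H, W) disparity maps; valid: boolean (H, W) mask.
    d1_threshold is an assumed outlier threshold in pixels.
    """
    err = np.abs(pred - gt)[valid]
    epe = float(err.mean())
    d1 = float((err > d1_threshold).mean() * 100.0)
    return epe, d1
```

Passing a region mask as `valid` (for example, the valid pixels intersected with an ill-posed mask) gives per-region numbers in the style of the All, Well-Posed, and Ill-Posed columns.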

4.1.3. Implementation Details

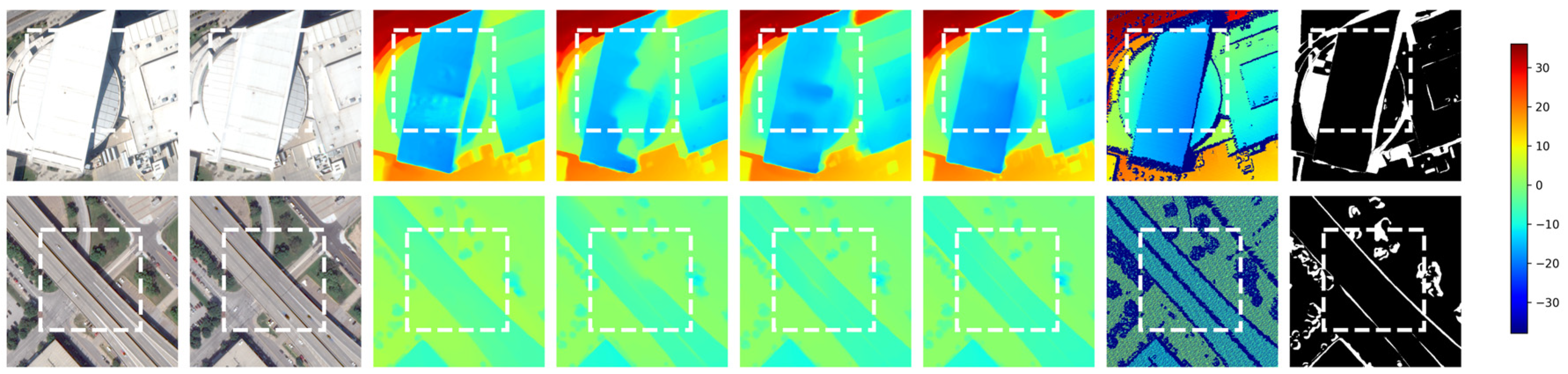

4.2. Results and Comparisons

5. Discussion

5.1. Ablation Experiment

5.2. Efficiency Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Stucker, C.; Schindler, K. ResDepth: A Deep Residual Prior for 3D Reconstruction from High-Resolution Satellite Images. ISPRS J. Photogramm. Remote Sens. 2022, 183, 560–580.

2. Ji, S.; Liu, J.; Lu, M. CNN-Based Dense Image Matching for Aerial Remote Sensing Images. Photogramm. Eng. Remote Sens. 2019, 85, 415–424.

3. Jiang, S.; Jiang, W.; Wang, L. Unmanned Aerial Vehicle-Based Photogrammetric 3D Mapping: A Survey of Techniques, Applications, and Challenges. IEEE Geosci. Remote Sens. Mag. 2022, 10, 135–171.

4. He, S.; Li, S.; Jiang, S.; Jiang, W. HMSM-Net: Hierarchical Multi-Scale Matching Network for Disparity Estimation of High-Resolution Satellite Stereo Images. ISPRS J. Photogramm. Remote Sens. 2022, 188, 314–330.

5. He, S.; Zhou, R.; Li, S.; Jiang, S.; Jiang, W. Disparity Estimation of High-Resolution Remote Sensing Images with Dual-Scale Matching Network. Remote Sens. 2021, 13, 5050.

6. Khamis, S.; Fanello, S.; Rhemann, C.; Kowdle, A.; Valentin, J.; Izadi, S. StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 573–590.

7. Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-Local Networks Meet Squeeze-Excitation Networks and Beyond. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 1971–1980.

8. Gu, X.; Fan, Z.; Zhu, S.; Dai, Z.; Tan, F.; Tan, P. Cascade Cost Volume for High-Resolution Multi-View Stereo and Stereo Matching. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2492–2501.

9. Wang, T.; Ma, C.; Su, H.; Wang, W. CSPN: Multi-Scale Cascade Spatial Pyramid Network for Object Detection. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 1490–1494.

10. Shen, Z.; Dai, Y.; Rao, Z. CFNet: Cascade and Fused Cost Volume for Robust Stereo Matching. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13906–13915.

11. Guo, X.; Yang, K.; Yang, W.; Wang, X.; Li, H. Group-Wise Correlation Stereo Network. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3268–3277.

12. Chang, J.-R.; Chen, Y.-S. Pyramid Stereo Matching Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5410–5418.

13. Zhang, F.; Prisacariu, V.; Yang, R.; Torr, P.H.S. GA-Net: Guided Aggregation Net for End-To-End Stereo Matching. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 185–194.

14. Xu, G.; Wang, X.; Ding, X.; Yang, X. Iterative Geometry Encoding Volume for Stereo Matching. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21919–21928.

15. Jeong, W.; Park, S.-Y. UGC-Net: Uncertainty-Guided Cost Volume Optimization with Contextual Features for Satellite Stereo Matching. Remote Sens. 2025, 17, 1772.

16. Kim, J.; Cho, S.; Chung, M.; Kim, Y. Improving Disparity Consistency with Self-Refined Cost Volumes for Deep Learning-Based Satellite Stereo Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 9262–9278.

17. Cheng, J.; Yin, W.; Wang, K.; Chen, X.; Wang, S.; Yang, X. Adaptive Fusion of Single-View and Multi-View Depth for Autonomous Driving. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 10138–10147.

18. Li, K.; Wang, L.; Zhang, Y.; Xue, K.; Zhou, S.; Guo, Y. LoS: Local Structure-Guided Stereo Matching. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 19746–19756.

19. Yang, G.; Zhao, H.; Shi, J.; Deng, Z.; Jia, J. SegStereo: Exploiting Semantic Information for Disparity Estimation. In Computer Vision – ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 660–676. ISBN 978-3-030-01233-5.

20. Guo, W.; Li, Z.; Yang, Y.; Wang, Z.; Taylor, R.H.; Unberath, M.; Yuille, A.; Li, Y. Context-Enhanced Stereo Transformer. In Proceedings of the Computer Vision – ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 263–279.

21. EdgeStereo: An Effective Multi-Task Learning Network for Stereo Matching and Edge Detection. Int. J. Comput. Vis. Available online: https://link.springer.com/article/10.1007/s11263-019-01287-w (accessed on 1 September 2025).

22. Liao, P.; Zhang, X.; Chen, G.; Wang, T.; Li, X.; Yang, H.; Zhou, W.; He, C.; Wang, Q. S2Net: A Multitask Learning Network for Semantic Stereo of Satellite Image Pairs. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

23. Yang, Q.; Chen, G.; Tan, X.; Wang, T.; Wang, J.; Zhang, X. S3Net: Innovating Stereo Matching and Semantic Segmentation with a Single-Branch Semantic Stereo Network in Satellite Epipolar Imagery. In Proceedings of the IGARSS 2024 - 2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 8737–8740.

24. Wu, Z.; Wu, X.; Zhang, X.; Wang, S.; Ju, L. Semantic Stereo Matching with Pyramid Cost Volumes. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7484–7493.

25. Heo, Y.S.; Lee, K.M.; Lee, S.U. Joint Depth Map and Color Consistency Estimation for Stereo Images with Different Illuminations and Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1094–1106.

26. Liang, X.; Jung, C. Deep Cross Spectral Stereo Matching Using Multi-Spectral Image Fusion. IEEE Robot. Autom. Lett. 2022, 7, 5373–5380.

27. Li, Z.; Liu, X.; Drenkow, N.; Ding, A.; Creighton, F.X.; Taylor, R.H.; Unberath, M. Revisiting Stereo Depth Estimation from a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6197–6206.

28. Liu, Z.; Li, Y.; Okutomi, M. Global Occlusion-Aware Transformer for Robust Stereo Matching. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 3523–3532.

29. Learning Stereo from Single Images. SpringerLink. Available online: https://link.springer.com/chapter/10.1007/978-3-030-58452-8_42 (accessed on 1 September 2025).

30. Muresan, M.P.; Raul, M.; Nedevschi, S.; Danescu, R. Stereo and Mono Depth Estimation Fusion for an Improved and Fault Tolerant 3D Reconstruction. In Proceedings of the 2021 IEEE 17th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 28–30 October 2021; pp. 233–240.

31. Zhang, C.; Meng, G.; Su, B.; Xiang, S.; Pan, C. Monocular Contextual Constraint for Stereo Matching with Adaptive Weights Assignment. Image Vis. Comput. 2022, 121, 104424.

32. Jiang, J.; Liao, X.; Yang, F.; Cheung, K.; Wang, X.; Zhao, Y. Leveraging Monocular Depth and Feature Fusion for Generalized Stereo Matching. In Proceedings of the 2025 IEEE 6th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Shenzhen, China, 11–13 April 2025; pp. 1–5.

33. Wen, B.; Trepte, M.; Aribido, J.; Kautz, J.; Gallo, O.; Birchfield, S. FoundationStereo: Zero-Shot Stereo Matching. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 5249–5260.

34. Yang, L.; Kang, B.; Huang, Z.; Zhao, Z.; Xu, X.; Feng, J.; Zhao, H. Depth Anything V2. Adv. Neural Inf. Process. Syst. 2024, 37, 21875–21911.

35. Jiang, H.; Lou, Z.; Ding, L.; Xu, R.; Tan, M.; Jiang, W.; Huang, R. DEFOM-Stereo: Depth Foundation Model Based Stereo Matching. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 21857–21867.

36. Cheng, J.; Liu, L.; Xu, G.; Wang, X.; Zhang, Z.; Deng, Y.; Zang, J.; Chen, Y.; Cai, Z.; Yang, X. MonSter: Marry Monodepth to Stereo Unleashes Power. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 6273–6282.

37. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning Robust Visual Features without Supervision. arXiv 2024, arXiv:2304.07193.

38. Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision Transformers for Dense Prediction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 12179–12188.

39. Hirschmuller, H.; Scharstein, D. Evaluation of Stereo Matching Costs on Images with Radiometric Differences. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 1582–1599.

40. Zitnick, C.L.; Kanade, T. A Cooperative Algorithm for Stereo Matching and Occlusion Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 675–684.

41. Kanade, T.; Okutomi, M. A Stereo Matching Algorithm with an Adaptive Window: Theory and Experiment. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 920–932.

42. Jiang, S.; Campbell, D.; Lu, Y.; Li, H.; Hartley, R. Learning To Estimate Hidden Motions with Global Motion Aggregation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9772–9781.

43. Lipson, L.; Teed, Z.; Deng, J. RAFT-Stereo: Multilevel Recurrent Field Transforms for Stereo Matching. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 218–227.

44. Bosch, M.; Foster, K.; Christie, G.; Wang, S.; Hager, G.D.; Brown, M. Semantic Stereo for Incidental Satellite Images. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1524–1532.

45. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

46. MobileStereoNet: Towards Lightweight Deep Networks for Stereo Matching. WACV 2022 Open Access Repository. Available online: https://openaccess.thecvf.com/content/WACV2022/html/Shamsafar_MobileStereoNet_Towards_Lightweight_Deep_Networks_for_Stereo_Matching_WACV_2022_paper.html (accessed on 1 September 2025).

47. Guo, X.; Zhang, C.; Lu, J.; Duan, Y.; Wang, Y.; Yang, T.; Zhu, Z.; Chen, L. OpenStereo: A Comprehensive Benchmark for Stereo Matching and Strong Baseline. arXiv 2024, arXiv:2312.00343.

| Model | All D1 (%) | All EPE (px) | Well-Posed D1 (%) | Well-Posed EPE (px) | Ill-Posed D1 (%) | Ill-Posed EPE (px) |
|---|---|---|---|---|---|---|
| PSMNet [12] | 6.61 | 1.1303 | 4.59 | 0.9717 | 27.51 | 2.7923 |
| CFNet [10] | 6.15 | 1.0465 | 4.18 | 0.8953 | 26.17 | 2.6048 |
| GwcNet [11] | 6.22 | 1.0678 | 4.27 | 0.9155 | 26.18 | 2.6500 |
| MSNet3D [46] | 6.56 | 1.1082 | 4.56 | 0.9549 | 27.06 | 2.7243 |
| StereoBase [47] | 6.08 | 1.0268 | 4.15 | 0.8761 | 25.82 | 2.5884 |
| IGEV [14] | 5.60 | 0.9754 | 3.75 | 0.8357 | 24.29 | 2.4215 |
| DEFOM-Stereo [35] | 5.59 | 0.9950 | 3.85 | 0.8612 | 23.24 | 2.3639 |
| MonSter [36] | 5.50 | 0.9696 | 3.86 | 0.8454 | 24.10 | 2.4074 |
| IAASNet (ours) | 5.38 | 0.9582 | 3.75 | 0.8355 | 21.76 | 2.2199 |

| Scene | Metric | PSMNet | CFNet | GwcNet | MSNet3D | StereoBase | IGEV | DEFOM | MonSter | IAASNet |
|---|---|---|---|---|---|---|---|---|---|---|
| OMA 132_042_026 | D1 (%) | 6.59 | 6.26 | 6.03 | 6.04 | 6.14 | 7.62 | 8.11 | 6.08 | 5.72 |
| | EPE (px) | 1.1368 | 1.0541 | 1.0311 | 1.0358 | 0.9797 | 1.4265 | 1.1481 | 0.9701 | 0.9444 |
| OMA 212_007_041 | D1 (%) | 3.70 | 1.72 | 0.52 | 2.58 | 0.40 | 1.32 | 1.99 | 0.69 | 0.58 |
| | EPE (px) | 0.9441 | 0.8199 | 0.8335 | 0.8389 | 0.6386 | 1.4062 | 0.7162 | 0.6325 | 0.6234 |
| OMA 315_036_030 | D1 (%) | 8.24 | 8.27 | 7.76 | 7.83 | 7.51 | 7.80 | 11.41 | 6.43 | 6.18 |
| | EPE (px) | 1.1174 | 1.0837 | 1.0361 | 1.1336 | 1.0031 | 1.4551 | 1.5278 | 0.9723 | 0.9683 |
| OMA 383_001_027 | D1 (%) | 2.49 | 2.04 | 2.69 | 5.22 | 1.92 | 7.18 | 1.43 | 1.50 | 1.15 |
| | EPE (px) | 0.7929 | 0.7316 | 0.7928 | 0.9451 | 0.7041 | 1.8048 | 0.6814 | 0.7408 | 0.6581 |

| Scene | Class | Metric | IGEV | DEFOM | MonSter | IAASNet |
|---|---|---|---|---|---|---|
| OMA 251_008_004 | All | D1 (%) | 7.22 | 6.24 | 5.57 | 2.99 |
| | | EPE (px) | 1.1877 | 1.1727 | 0.9856 | 0.8074 |
| | Ill-posed | D1 (%) | 41.40 | 25.71 | 26.36 | 18.85 |
| | | EPE (px) | 4.1619 | 2.6631 | 2.5143 | 2.1047 |
| OMA 247_035_001 | All | D1 (%) | 11.42 | 3.97 | 2.31 | 2.30 |
| | | EPE (px) | 2.0244 | 0.7883 | 0.7815 | 0.7719 |
| | Ill-posed | D1 (%) | 36.77 | 16.70 | 14.30 | 13.63 |
| | | EPE (px) | 2.7584 | 1.6008 | 1.5981 | 1.5594 |

| Scene | Class | Metric | IGEV | DEFOM | MonSter | IAASNet |
|---|---|---|---|---|---|---|
| OMA 212_008_006 | All | D1 (%) | 2.47 | 1.49 | 1.23 | 1.06 |
| | | EPE (px) | 0.7309 | 0.6731 | 0.6440 | 0.6469 |
| | Ill-posed | D1 (%) | 13.19 | 12.06 | 10.28 | 9.18 |
| | | EPE (px) | 1.3536 | 1.4620 | 1.1651 | 1.2591 |
| OMA 225_027_021 | All | D1 (%) | 3.22 | 2.96 | 2.64 | 2.41 |
| | | EPE (px) | 1.4675 | 0.7672 | 0.6873 | 0.7110 |
| | Ill-posed | D1 (%) | 18.85 | 18.90 | 18.77 | 17.52 |
| | | EPE (px) | 1.7884 | 1.7641 | 1.7689 | 1.7221 |
| OMA 281_006_027 | All | D1 (%) | 5.26 | 1.80 | 1.88 | 1.68 |
| | | EPE (px) | 1.5189 | 0.6675 | 0.6880 | 0.6365 |
| | Ill-posed | D1 (%) | 25.53 | 16.58 | 18.69 | 16.23 |
| | | EPE (px) | 2.4792 | 1.9656 | 2.1995 | 1.9362 |
| OMA 288_008_006 | All | D1 (%) | 16.36 | 15.33 | 12.75 | 11.72 |
| | | EPE (px) | 1.9682 | 2.0384 | 1.7578 | 1.6750 |
| | Ill-posed | D1 (%) | 42.30 | 41.37 | 35.69 | 31.05 |
| | | EPE (px) | 4.1575 | 3.8776 | 3.5824 | 3.2646 |

| Scene | Class | Metric | IGEV | DEFOM | MonSter | IAASNet |
|---|---|---|---|---|---|---|
| OMA 132_002_034 | All | D1 (%) | 34.83 | 8.23 | 8.25 | 7.94 |
| | | EPE (px) | 2.8886 | 1.1081 | 1.1341 | 1.0991 |
| | Ill-posed | D1 (%) | 48.08 | 21.85 | 20.93 | 20.40 |
| | | EPE (px) | 3.4058 | 2.0110 | 1.9844 | 1.9365 |
| OMA 391_025_019 | All | D1 (%) | 12.50 | 11.43 | 12.04 | 10.88 |
| | | EPE (px) | 1.5640 | 1.4413 | 1.4411 | 1.3879 |
| | Ill-posed | D1 (%) | 32.99 | 28.71 | 28.14 | 23.34 |
| | | EPE (px) | 3.2079 | 2.8541 | 2.6949 | 2.4983 |

| Scene | Class | Metric | IGEV | DEFOM | MonSter | IAASNet |
|---|---|---|---|---|---|---|
| OMA 244_003_036 | All | D1 (%) | 10.72 | 9.13 | 9.04 | 8.21 |
| | | EPE (px) | 1.6474 | 1.3414 | 1.3023 | 1.2453 |
| | Ill-posed | D1 (%) | 56.66 | 45.64 | 43.86 | 40.42 |
| | | EPE (px) | 6.0267 | 4.5189 | 4.0973 | 3.7137 |
| OMA 172_027_019 | All | D1 (%) | 13.30 | 6.07 | 6.59 | 6.03 |
| | | EPE (px) | 1.9438 | 0.9737 | 1.0043 | 0.9708 |
| | Ill-posed | D1 (%) | 43.91 | 22.43 | 23.00 | 20.39 |
| | | EPE (px) | 3.4219 | 2.1047 | 2.1381 | 2.0072 |

| Model | EMA | IAGF | All D1 (%) | All EPE (px) | Well-Posed D1 (%) | Well-Posed EPE (px) | Ill-Posed D1 (%) | Ill-Posed EPE (px) |
|---|---|---|---|---|---|---|---|---|
| IGEV | | | 5.60 | 0.9753 | 3.79 | 0.8357 | 24.29 | 2.4215 |
| IGEV + EMA | √ | | 5.56 | 0.9713 | 3.86 | 0.8454 | 24.10 | 2.4074 |
| DEFOM | | | 5.59 | 0.9950 | 3.85 | 0.8612 | 23.24 | 2.3639 |
| DEFOM + IAGF | | √ | 5.47 | 0.9687 | 3.72 | 0.8327 | 23.13 | 2.3523 |
| MonSter | | | 5.50 | 0.9696 | 3.86 | 0.8454 | 24.10 | 2.4074 |
| MonSter + EMA | √ | | 5.47 | 0.9685 | 3.82 | 0.8442 | 22.04 | 2.2517 |
| MonSter + SRU | | | 5.46 | 0.9674 | 3.84 | 0.8391 | 21.90 | 2.2352 |
| MonSter + IAGF | | √ | 5.44 | 0.9611 | 3.82 | 0.8378 | 21.77 | 2.2202 |

| Model | Number | Size | Erasing Rate | All D1 (%) | All EPE (px) | Ill-Posed D1 (%) | Ill-Posed EPE (px) |
|---|---|---|---|---|---|---|---|
| MonSter | | | | 5.50 | 0.9696 | 24.10 | 2.4074 |
| MonSter + EMA(1) | [1, 3] | [50, 100] | 0.5 | 5.49 | 0.9689 | 23.53 | 2.3351 |
| MonSter + EMA(2) | [1, 5] | [50, 100] | 0.5 | 5.49 | 0.9686 | 23.02 | 2.3151 |
| MonSter + EMA(3) | [1, 5] | [100, 200] | 0.5 | 5.49 | 0.9679 | 22.31 | 2.2752 |
| MonSter + EMA(4) | [1, 5] | [100, 200] | 1 | 5.47 | 0.9685 | 22.04 | 2.2517 |

| Model | D1 (%) | EPE (px) | Iteration Number | Total Parameters (M) | Run-Time (s) |
|---|---|---|---|---|---|
| MonSter | 5.50 | 0.9696 | 32 | 388.69 | 0.65 |
| Ours-8 | 5.42 | 0.9666 | 8 | 388.89 | 0.66 |
| Ours-16 | 5.39 | 0.9593 | 16 | 388.89 | 0.72 |
| Ours | 5.38 | 0.9582 | 32 | 388.89 | 1.42 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, J.; Sun, H.; Wang, T. IAASNet: Ill-Posed-Aware Aggregated Stereo Matching Network for Cross-Orbit Optical Satellite Images. Remote Sens. 2025, 17, 3528. https://doi.org/10.3390/rs17213528
