In this section, we first study the performance gain brought by complex-valued operations and evaluate how LAM improves segmentation results by comparing it with other attention mechanisms. Then, semantic segmentation experiments are conducted on three PolSAR datasets, and the performance of LAM-CV-BiSeNetV2 is compared with that of five real-valued (RV) networks, namely FCN [54], U-Net [55], DeepLabV3+ [56], ICNet [49], and the original BiSeNetV2, to verify the advantages of LAM-CV-BiSeNetV2. Among them, FCN, U-Net, and DeepLabV3+ follow an encoder–decoder structure, whereas ICNet uses a multi-scale cascade structure.

4.1. Evaluation of LAM

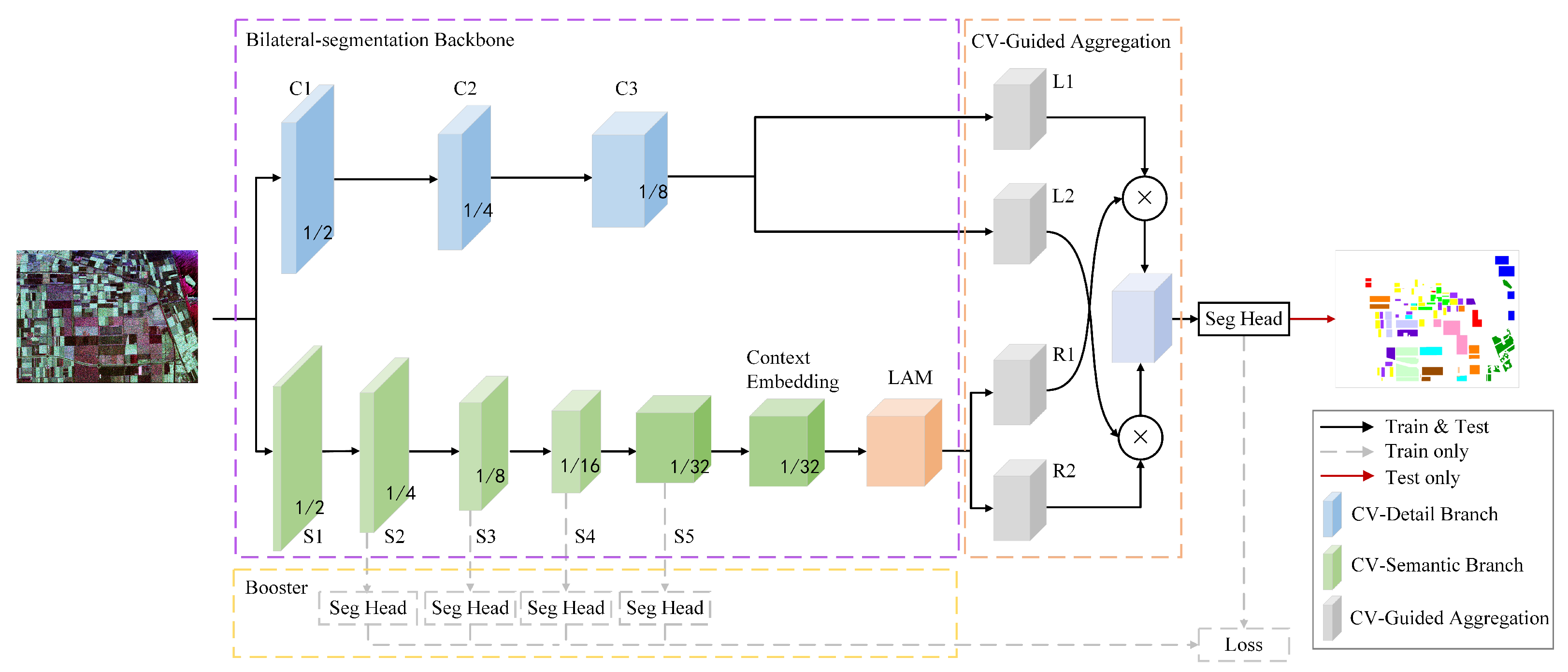

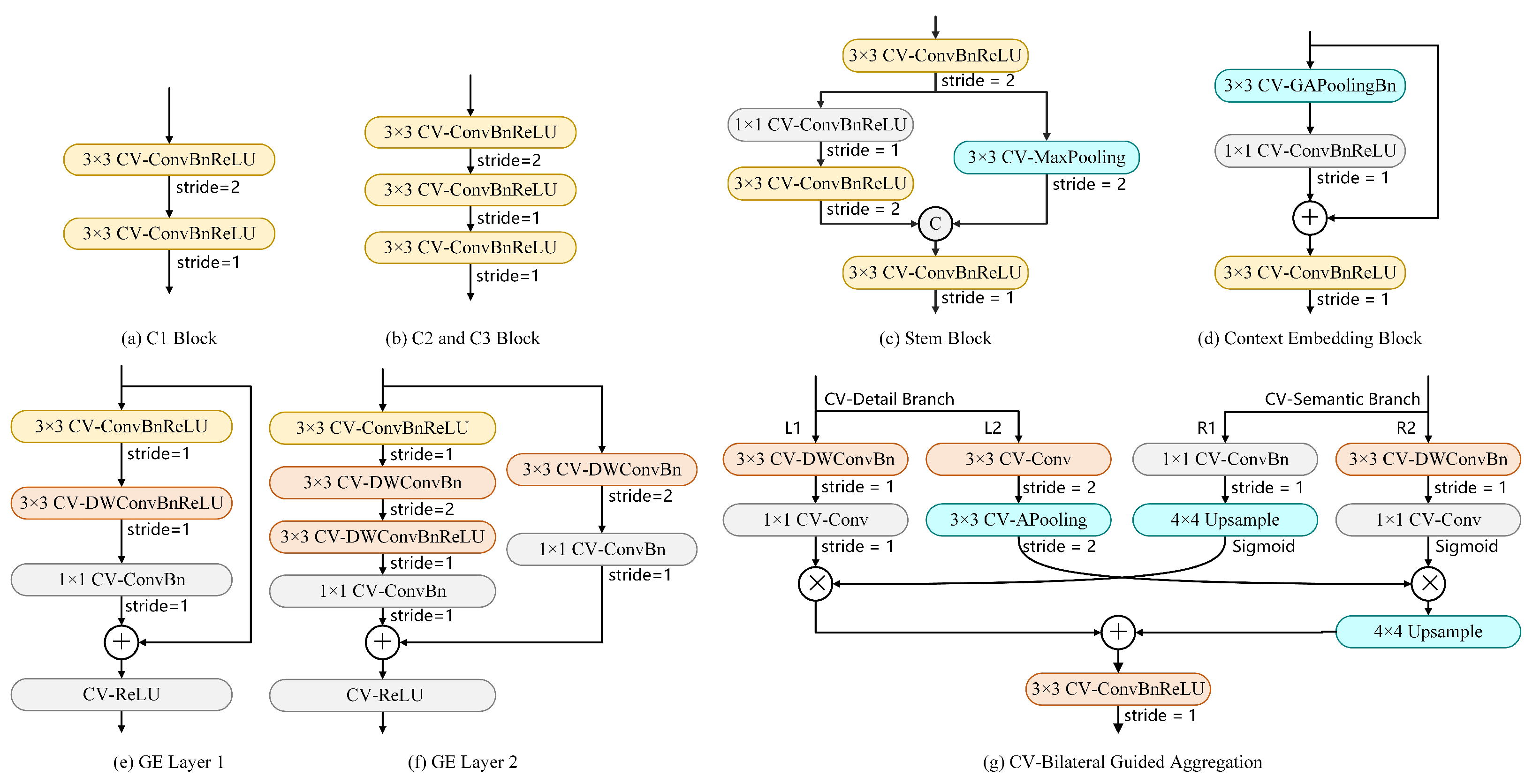

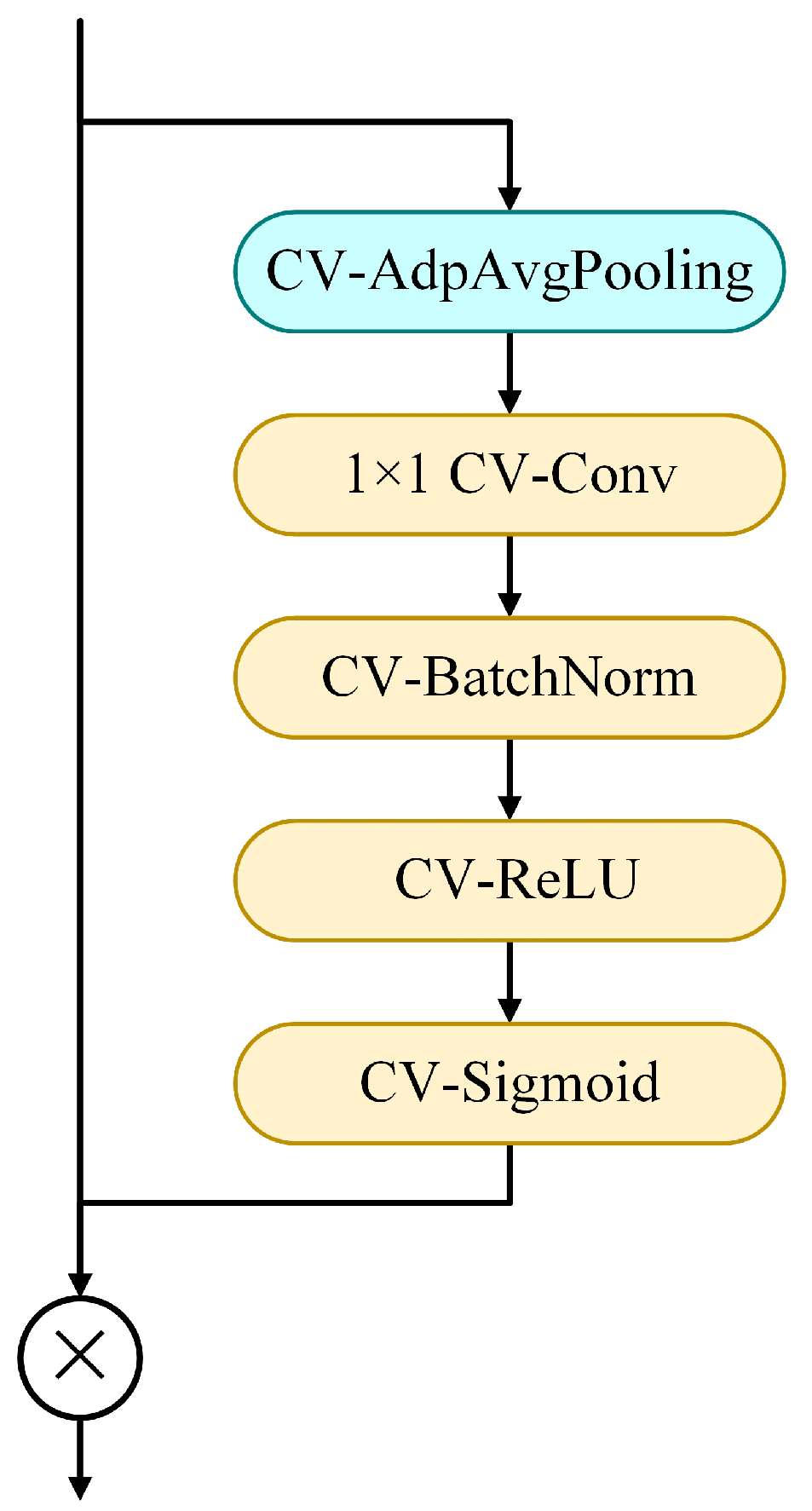

In our method, a lightweight attention module (LAM) is designed to optimize the extraction of semantic details and features; it is placed at the end of the CV-Semantic Branch. To verify how LAM improves segmentation performance, comparative experiments are carried out on three PolSAR datasets for BiSeNetV2, CV-BiSeNetV2, and LAM-CV-BiSeNetV2. Furthermore, to demonstrate the effectiveness of LAM relative to other attention modules, CBAM [43], SEAM [57], and Self-Attention [58] are placed at the same position in the network, and the same experiments are conducted.

CBAM is a classic attention module that combines channel attention and spatial attention and is often used to optimize semantic segmentation. SEAM uses equivariance and attention mechanisms to improve the performance of semantic segmentation models and is applicable under both supervised and weakly supervised conditions. The self-attention mechanism enables the model to attend to global information rather than only local neighborhoods during feature extraction. Compared with these classic attention mechanisms, LAM captures global information, further refines features, and fuses them with the features of the previous stage to achieve better segmentation results.
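To make the contrast between global and local context concrete, the following is a minimal NumPy sketch of generic spatial self-attention in the spirit of [58]. It is not the LAM design and not the exact module used in these experiments; the function name, the key dimension `d_k`, the random projection weights (stand-ins for learned ones), and the residual connection are illustrative assumptions.

```python
import numpy as np

def spatial_self_attention(x: np.ndarray, d_k: int = 8, seed: int = 0):
    """Generic self-attention over all H*W positions of a (C, H, W) feature map.

    Returns the output map (same shape as x) and the (N, N) attention matrix,
    where N = H*W. Projection weights are random stand-ins for learned ones.
    """
    c, h, w = x.shape
    rng = np.random.default_rng(seed)
    w_q = rng.standard_normal((d_k, c)) / np.sqrt(c)  # query projection (hypothetical)
    w_k = rng.standard_normal((d_k, c)) / np.sqrt(c)  # key projection (hypothetical)
    w_v = rng.standard_normal((c, c)) / np.sqrt(c)    # value projection (hypothetical)

    tokens = x.reshape(c, h * w)       # (C, N): one column per pixel
    q = w_q @ tokens                   # (d_k, N)
    k = w_k @ tokens                   # (d_k, N)
    v = w_v @ tokens                   # (C, N)

    scores = (q.T @ k) / np.sqrt(d_k)  # (N, N): similarity of every pixel to every pixel
    scores -= scores.max(axis=1, keepdims=True)  # stabilise the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)      # each row sums to 1

    # Output pixel i is a weighted sum of the values at ALL positions j,
    # so its receptive field is the whole image rather than a local window.
    out = v @ attn.T                   # (C, N)
    return x + out.reshape(c, h, w), attn  # residual connection (assumption)
```

The (N, N) attention matrix is what gives self-attention its global view, and it is also why its cost grows quadratically with image size.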

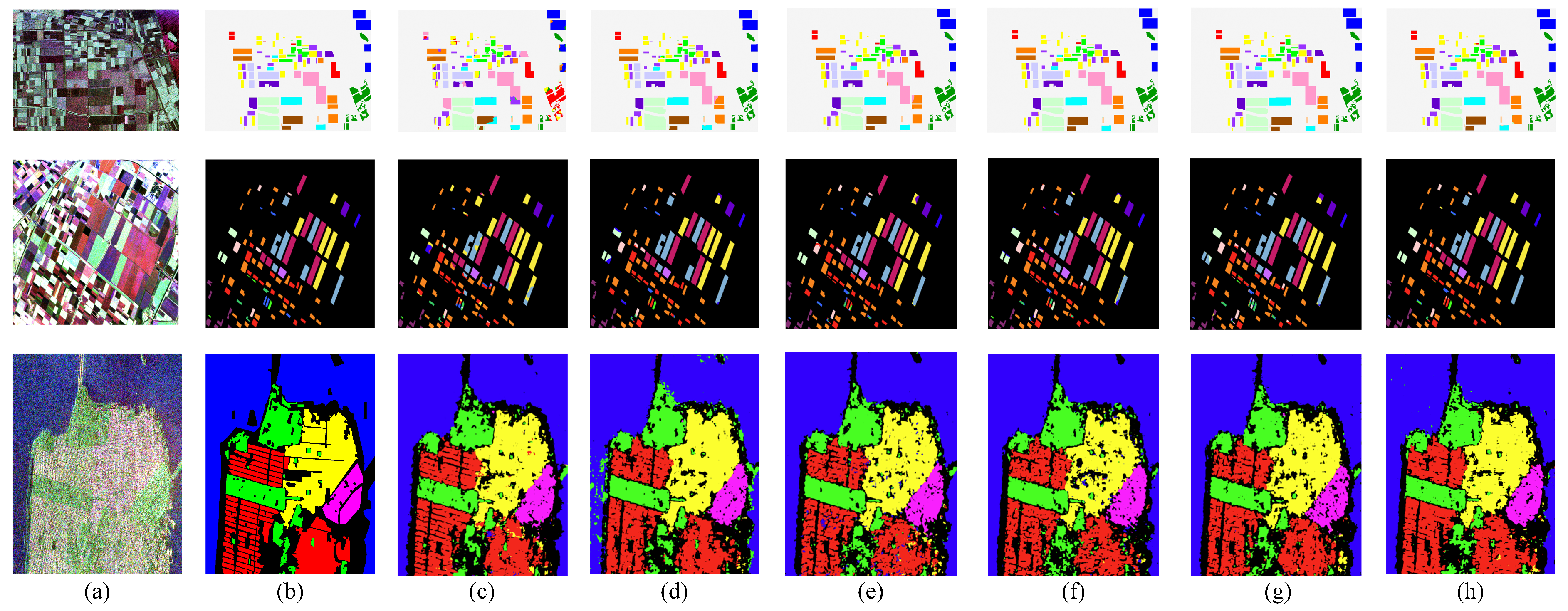

The MPA, MIoU, FWIoU, and OA obtained by BiSeNetV2, CV-BiSeNetV2, CBAM-CV-BiSeNetV2, SEAM-CV-BiSeNetV2, Self Attention-CV-BiSeNetV2, and LAM-CV-BiSeNetV2 on three datasets are shown in

Table 2. CV-BiSeNetV2 represents the complex-valued BiSeNetV2 network without any attention mechanism. The frames per second (FPS) of CV-BiSeNetV2 with different attention mechanisms are shown in

Table 3. The segmentation results of all experiments are shown in

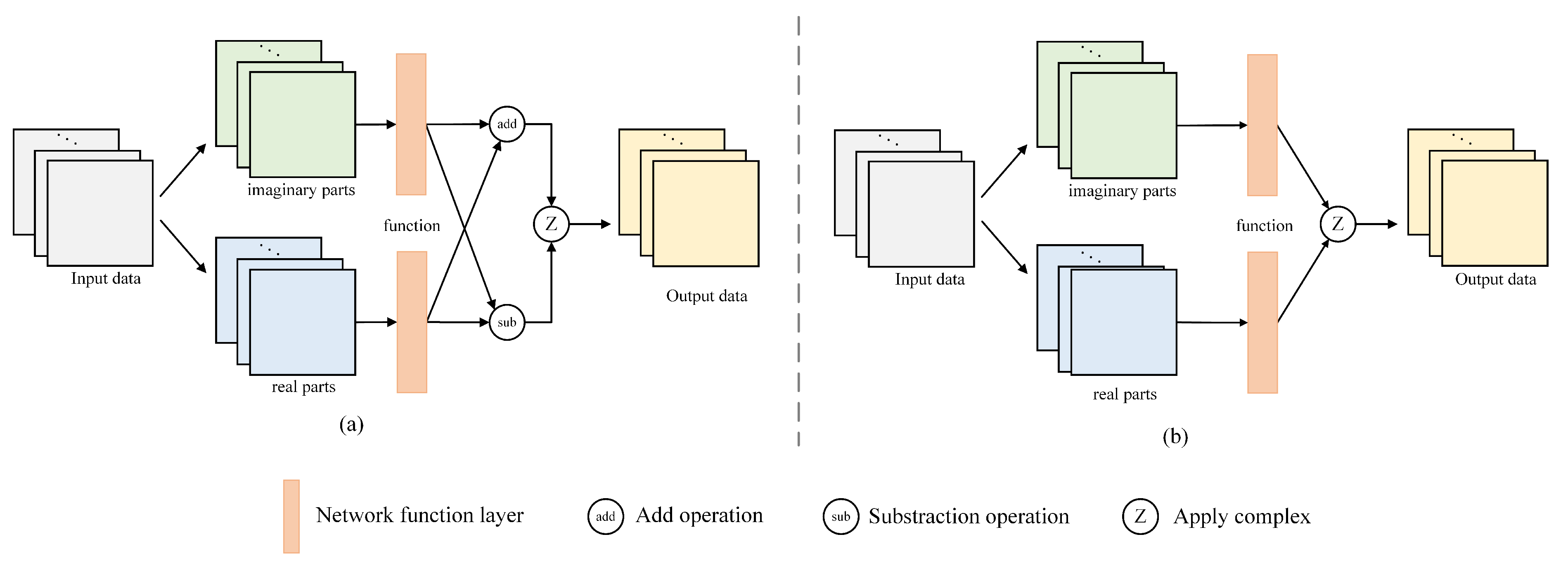

Figure 8a–h. Based on the above experiments, complex-valued calculation makes it utilize the phase information in polarimetric SAR data fully and extract more polarization features, thereby achieving better classification results.
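The four metrics are not defined in this section. The sketch below uses the standard confusion-matrix definitions common in semantic segmentation (OA = correct pixels / all pixels; MPA = mean per-class pixel accuracy; MIoU = mean per-class intersection over union; FWIoU = IoU weighted by ground-truth class frequency), which we assume match those behind Table 2. The function names are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the ground-truth class, columns the predicted class."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    idx = num_classes * y_true + y_pred  # unique bin for each (true, pred) pair
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def segmentation_metrics(cm):
    cm = cm.astype(np.float64)
    tp = np.diag(cm)          # correctly classified pixels per class
    gt = cm.sum(axis=1)       # pixels per ground-truth class
    pred = cm.sum(axis=0)     # pixels per predicted class
    total = cm.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        pa = tp / gt                   # per-class pixel accuracy
        iou = tp / (gt + pred - tp)    # per-class intersection over union
    freq = gt / total                  # ground-truth class frequency
    return {
        "OA": tp.sum() / total,
        "MPA": np.nanmean(pa),         # classes absent from the ground truth are skipped
        "MIoU": np.nanmean(iou),
        "FWIoU": np.nansum(freq * iou),
    }
```

For example, with `y_true = [0, 0, 1, 1]` and `y_pred = [0, 1, 1, 1]`, OA and MPA are both 0.75, while MIoU and FWIoU are both 7/12 (about 0.583). Multiply by 100 to obtain percentages as reported in the tables.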

It can be observed from Table 2 that performance improves successively from BiSeNetV2 to CV-BiSeNetV2 to LAM-CV-BiSeNetV2. Compared with BiSeNetV2, CV-BiSeNetV2 improves MPA by 18.34%, MIoU by 26.94%, FWIoU by 27.38%, and OA by 17.96% on Flevoland Dataset 1. On Flevoland Dataset 2, CV-BiSeNetV2 increases MPA by 5.23%, MIoU by 6.2%, FWIoU by 12.58%, and OA by 7.22%. On the San Francisco Dataset, it increases MPA by 2.87%, MIoU by 1.8%, FWIoU by 0.51%, and OA by 0.66%. Overall, complex-valued operations significantly improve network performance.

Among the attention mechanisms added to CV-BiSeNetV2, LAM yields the largest improvement. On Flevoland Dataset 1, LAM improves the MPA of CV-BiSeNetV2 by 1.03%, MIoU by 2.91%, FWIoU by 2.33%, and OA by 1.22%. On Flevoland Dataset 2, LAM increases MPA by 2.53%, MIoU by 7.36%, FWIoU by 3.2%, and OA by 1.99%. On the San Francisco Dataset, adding LAM increases MPA by 1.22%, MIoU by 2.37%, FWIoU by 2.45%, and OA by 1.76%. The other modules behave inconsistently: on Flevoland Dataset 1, CBAM, SEAM, and Self Attention all improve the performance of CV-BiSeNetV2, whereas on Flevoland Dataset 2, SEAM and Self Attention degrade it, and on the San Francisco Dataset, SEAM degrades it. This indicates that these attention modules have an unstable effect on network performance. LAM, however, improves performance in all experiments, which suggests that it adapts well to the network and behaves stably.

FPS is the reciprocal of the time required for one model inference, so a higher FPS indicates faster inference. In the experiment, FPS was calculated by running inference on each complete PolSAR image 100 times.
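A minimal sketch of this timing procedure is shown below, assuming `infer` is any callable that runs the model on one complete image. The function name and the warm-up runs are our assumptions; the section does not say whether warm-up iterations were excluded.

```python
import time
from typing import Any, Callable

def measure_fps(infer: Callable[[Any], Any], image: Any,
                runs: int = 100, warmup: int = 5) -> float:
    """Average inference speed, in frames (full images) per second.

    `infer` is any callable that runs the model on one complete image.
    """
    for _ in range(warmup):       # discard one-off start-up costs (assumption)
        infer(image)
    start = time.perf_counter()
    for _ in range(runs):         # 100 timed runs, matching the setup described above
        infer(image)
    elapsed = time.perf_counter() - start
    return runs / elapsed         # FPS = 1 / (mean seconds per inference)
```

On a GPU, kernels run asynchronously, so the timer should only be read after the device has finished (in PyTorch, for example, by calling `torch.cuda.synchronize()` before each `time.perf_counter()` reading).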

Table 3 shows that LAM-CV-BiSeNetV2 has the highest FPS on Flevoland Dataset 1 and Flevoland Dataset 2, and on the San Francisco Dataset its FPS is close to that of Self Attention-CV-BiSeNetV2, which is the fastest there. In general, LAM is more lightweight than the other attention mechanisms.

Overall, the CV operation significantly improves the classification accuracy, LAM further improves the performance of the model in a relatively lightweight manner, and LAM-CV-BiSeNetV2 has the best classification performance.

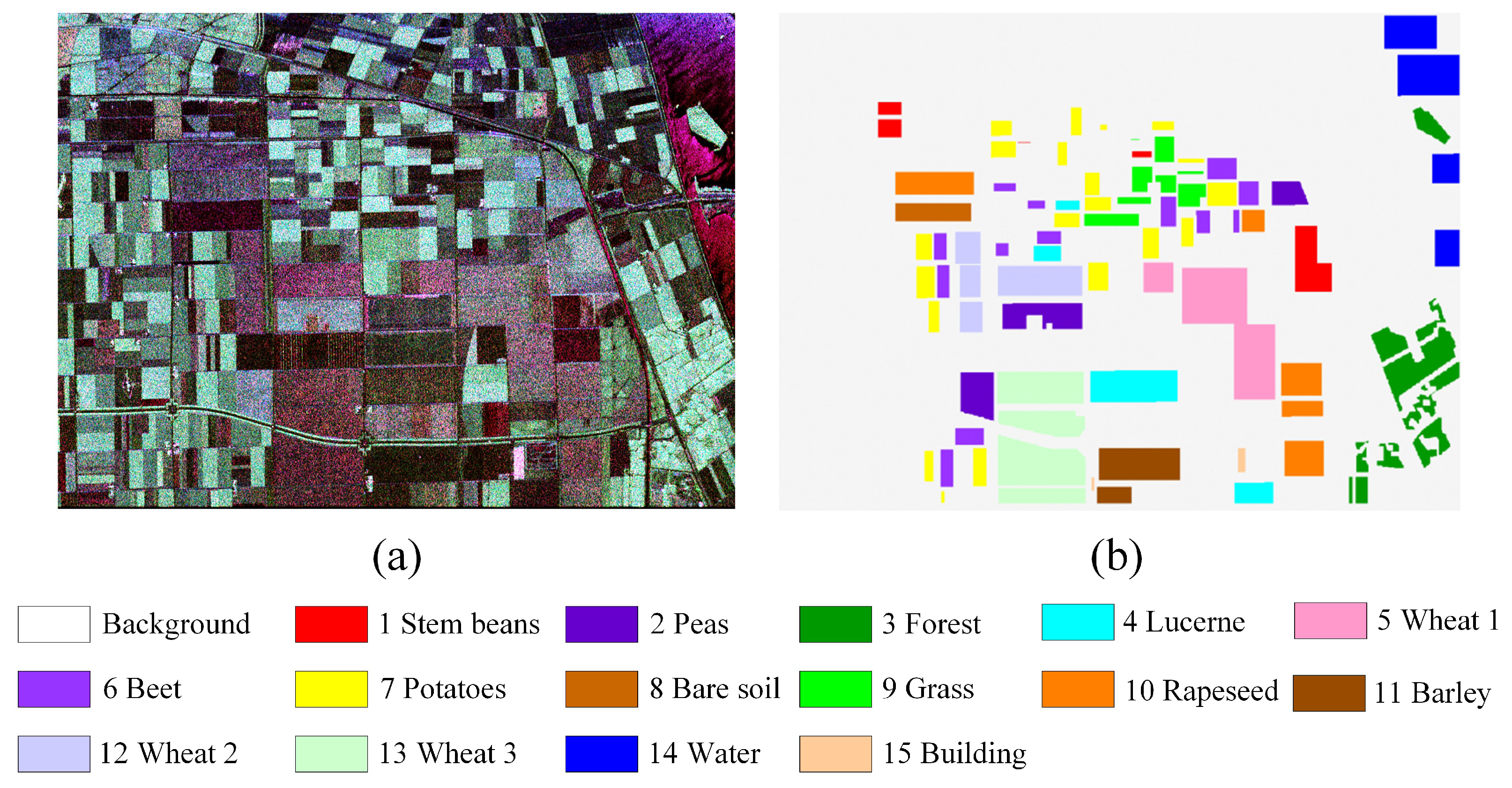

4.2. Experiment on Flevoland Dataset 1

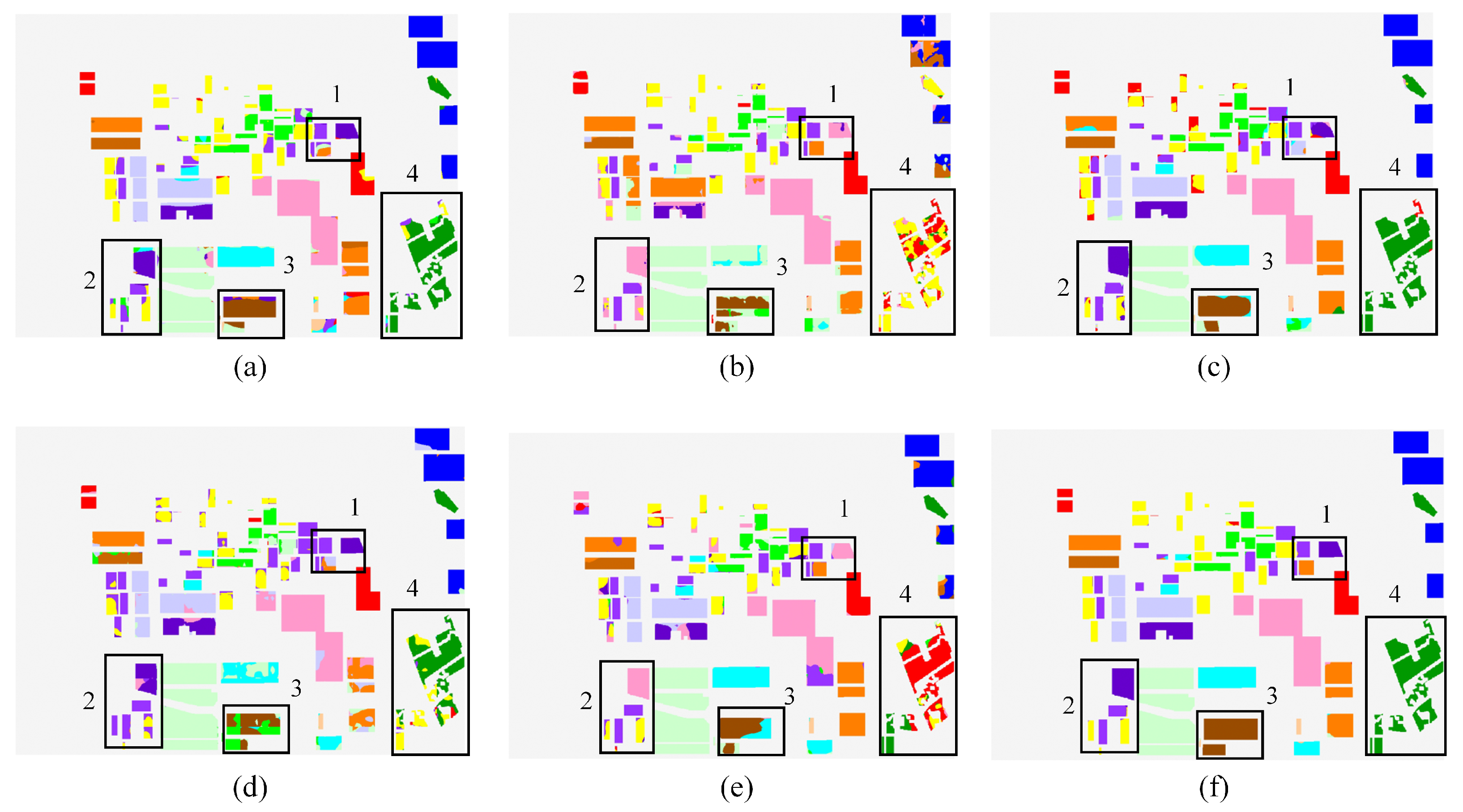

For Flevoland Dataset 1, the semantic segmentation results of LAM-CV-BiSeNetV2 and the other RV networks are presented in Figure 9a–f. In each result map, four regions are marked by black boxes.

The region framed by black box 1 contains peas, beets, and rapeseed, three categories that are relatively balanced in the samples. FCN misclassifies some rapeseed as beets; U-Net and BiSeNetV2 misclassify peas as wheat 1; DeepLabV3+ and ICNet confuse rapeseed with wheat 2. These errors arise mainly from the similar polarimetric backscattering characteristics of these crops. The region framed by black box 2 contains peas, beets, and potatoes, which are also relatively balanced in the samples. FCN confuses parts of the peas and beets with other categories; U-Net misclassifies peas and potatoes as wheat 1; DeepLabV3+ and ICNet confuse peas with wheat 1 and potatoes with beets; BiSeNetV2 misclassifies peas as wheat 1. Such misclassifications often occur in areas with blurred field boundaries. In the barley region framed by black box 3, FCN partially confuses barley with wheat 3; U-Net partially misclassifies it as grass and wheat 3; ICNet partially misclassifies it as grass; and DeepLabV3+ and BiSeNetV2 partially confuse it with wheat 3 and lucerne. This confusion stems from similar scattering intensities. In the forest region framed by black box 4, FCN and DeepLabV3+ confuse a small part with other categories, whereas U-Net, ICNet, and BiSeNetV2 misclassify a considerable part as stem beans and potatoes. This is mainly due to similar surface roughness and volume scattering patterns. Because barley and forest are under-represented and more spatially clustered in the dataset, they are harder for the models to generalize to.

In contrast, in these marked regions, LAM-CV-BiSeNetV2 captures the phase coupling between the real and imaginary components, while the attention mechanism highlights crop boundaries, effectively separating visually and physically similar classes. Its performance is much better than that of the other networks.
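The "phase coupling" mentioned above comes from the algebra of complex multiplication: $(w_r + i w_i)(x_r + i x_i) = (w_r x_r - w_i x_i) + i(w_r x_i + w_i x_r)$, so every output mixes the real and imaginary inputs through fixed cross terms. The sketch below illustrates this with a 1-D complex convolution built from four real convolutions. It is a generic illustration under our own assumptions (1-D signals, a hypothetical function name), not the 2-D layers of CV-BiSeNetV2, which are defined elsewhere in the paper.

```python
import numpy as np

def complex_conv1d(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Complex convolution expressed with four real convolutions.

    (w_r + i w_i) * (x_r + i x_i) = (w_r*x_r - w_i*x_i) + i (w_r*x_i + w_i*x_r)
    The cross terms w_i*x_i and w_i*x_r tie the real and imaginary parts together.
    """
    xr, xi = x.real, x.imag
    wr, wi = w.real, w.imag
    real = np.convolve(xr, wr) - np.convolve(xi, wi)
    imag = np.convolve(xi, wr) + np.convolve(xr, wi)
    return real + 1j * imag
```

The result equals `np.convolve(x, w)` on the complex arrays directly. Because each complex weight acts as a rotation plus a scaling, a phase shift in the input propagates predictably to the output, a structure that an RV network treating the real and imaginary parts as unrelated channels is not constrained to preserve.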

In addition, the segmentation performance of the different methods is presented in Table 4 and Table 5. Table 4 shows the classification accuracy obtained by FCN, U-Net, DeepLabV3+, ICNet, BiSeNetV2, and LAM-CV-BiSeNetV2 for each category of Flevoland Dataset 1; our method achieves the highest accuracy in every category except stem beans. Table 5 presents the MPA, MIoU, FWIoU, OA, and Kappa obtained by these networks on Flevoland Dataset 1, and our method achieves the highest value on all five metrics. Notably, although FCN and ICNet outperform the original BiSeNetV2, LAM optimization and complex-valued computation allow LAM-CV-BiSeNetV2 to surpass both.
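Kappa is reported alongside the other metrics but is not defined in this section. The sketch below uses the standard Cohen's kappa, $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_o$ is the observed agreement (equal to OA) and $p_e$ is the agreement expected by chance from the row and column totals of the confusion matrix; we assume this is the definition used in the tables. The function name is hypothetical.

```python
import numpy as np

def cohen_kappa(cm) -> float:
    """Cohen's kappa from a confusion matrix (rows: ground truth, cols: prediction)."""
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    p_o = np.trace(cm) / total                                # observed agreement (= OA)
    p_e = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / total**2  # chance agreement
    return float((p_o - p_e) / (1.0 - p_e))                   # undefined if p_e == 1
```

For the confusion matrix `[[1, 1], [0, 2]]`, $p_o = 0.75$ and $p_e = 0.5$, giving $\kappa = 0.5$. Unlike OA, kappa discounts agreement that a classifier would reach by chance given the class proportions.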

According to the segmentation results in Figure 9, Table 4, and Table 5, LAM-CV-BiSeNetV2 achieves the best classification performance in this semantic segmentation experiment.

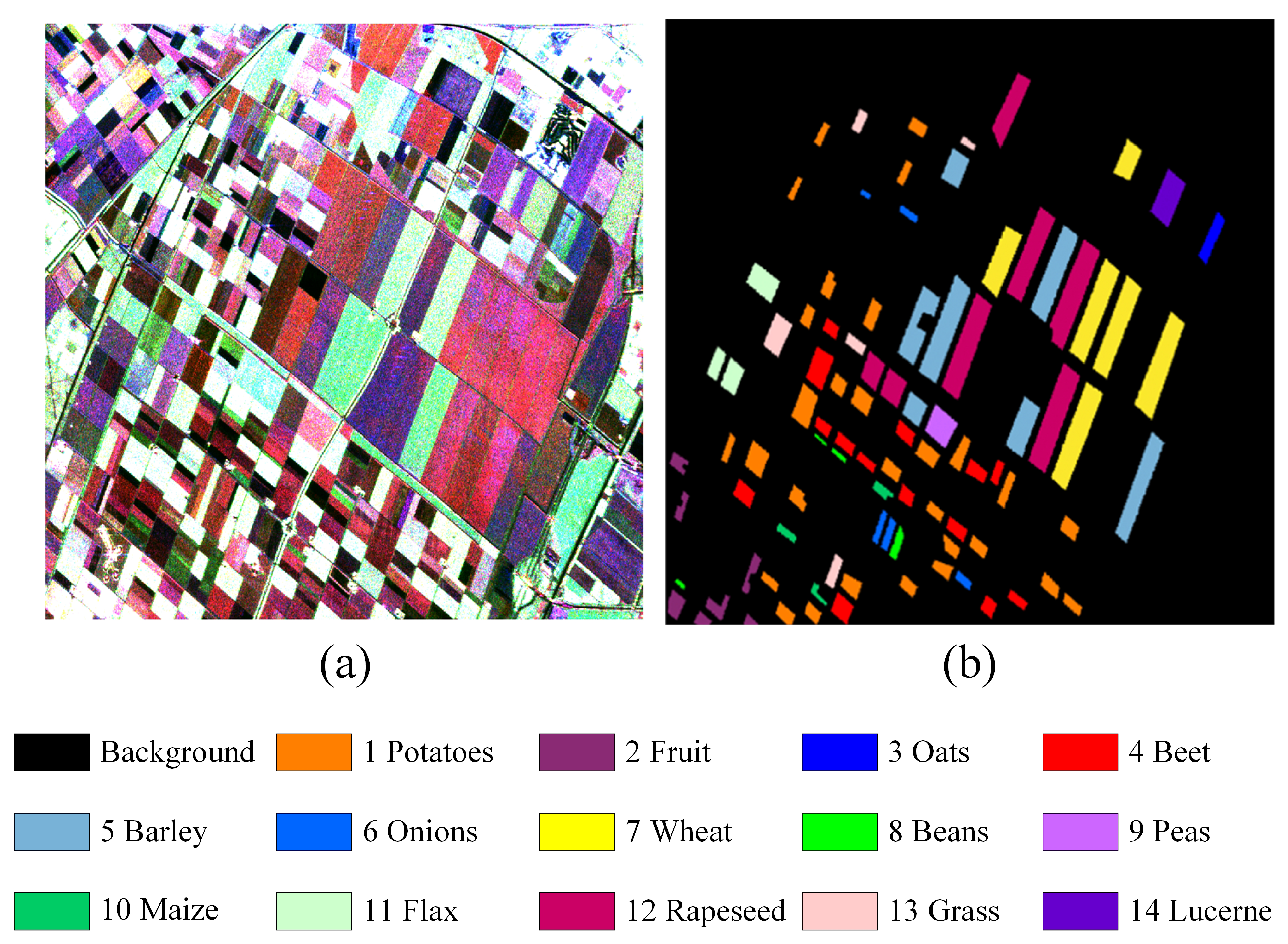

4.3. Experiment on Flevoland Dataset 2

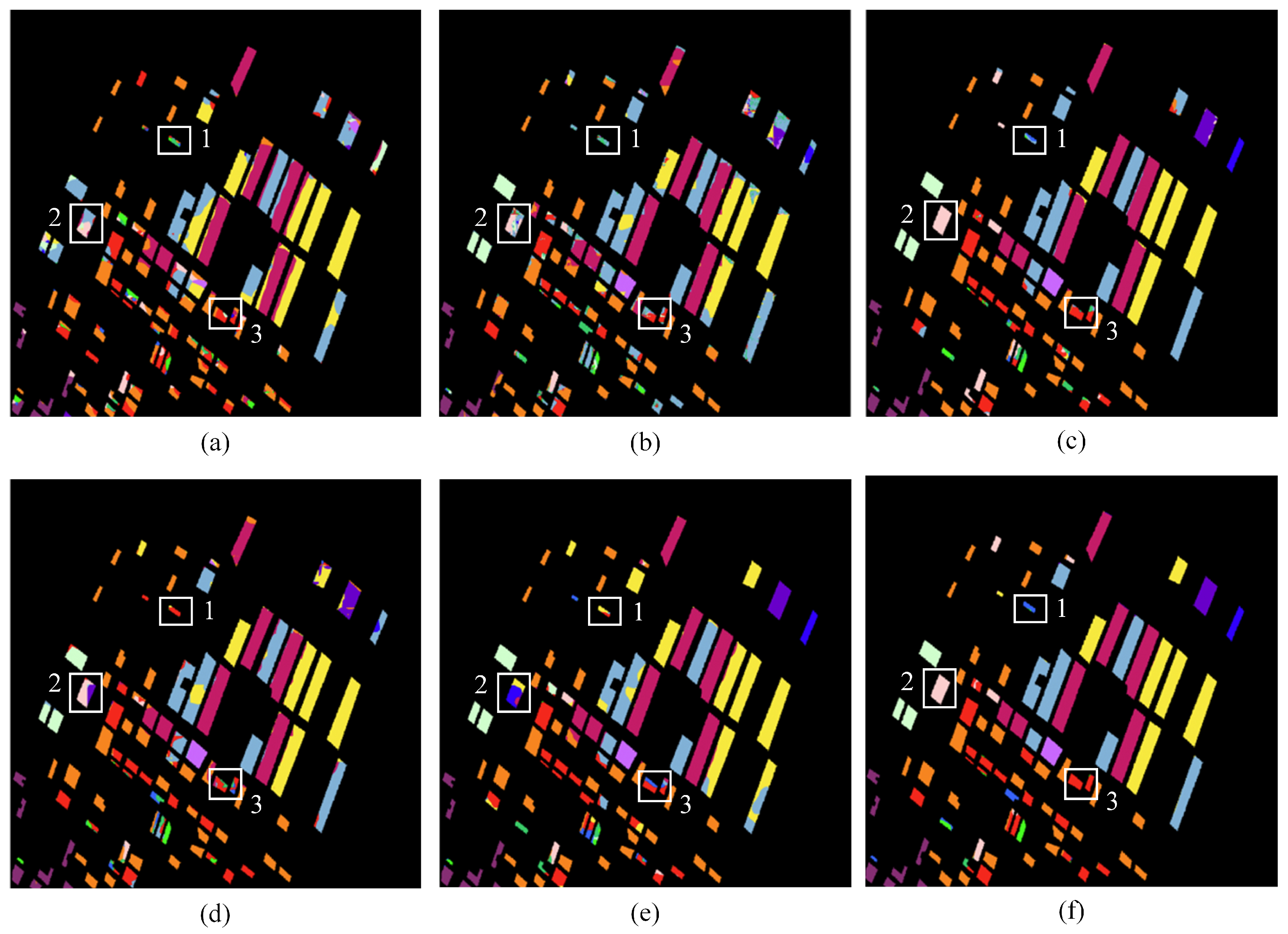

For Flevoland Dataset 2, the semantic segmentation results of LAM-CV-BiSeNetV2 and the other RV networks are presented in Figure 10a–f. In each result map, three regions are marked by white boxes.

In the region framed by white box 1, FCN, U-Net, and DeepLabV3+ confuse onions with other crop types, and ICNet and BiSeNetV2 misclassify them as beet and wheat. These errors are mainly due to the high similarity in backscattering responses among these classes and the limited discriminative power of real-valued features. In the region framed by white box 2, FCN and U-Net partially misclassify grass as other categories; ICNet partially misclassifies it as lucerne; BiSeNetV2 completely misclassifies it as wheat, oats, and beets; only DeepLabV3+ and LAM-CV-BiSeNetV2 classify it correctly. This suggests that the other models struggle with weak backscattering and irregular spatial boundaries. Furthermore, onions and grass account for a relatively small proportion of the samples in the dataset, which makes them more difficult to classify accurately. In the region framed by white box 3, all five real-valued networks partially misclassify beet, which can be attributed to the similar polarimetric signatures of beet and neighboring crops.

LAM-CV-BiSeNetV2 achieves accurate classification because the combination of complex-valued feature encoding and lightweight attention allows better separation of crops with similar scattering characteristics.

The classification accuracy of FCN, U-Net, DeepLabV3+, ICNet, BiSeNetV2, and LAM-CV-BiSeNetV2 for the 14 categories of Flevoland Dataset 2 is shown in Table 6. Our method achieves the best classification results in 11 of the 14 categories. For the other three categories, BiSeNetV2, ICNet, and U-Net achieve the highest accuracy for onions (29.2%), beans (68.58%), and maize (67.75%), respectively. Onions and maize have far fewer samples than the other categories, which makes it more difficult to obtain satisfactory classification results through training.

Furthermore, the evaluation metrics of the different methods are shown in Table 7. Our method achieves the highest MPA (85.04%), MIoU (81.83%), FWIoU (95.11%), OA (97.13%), and Kappa (96.61), outperforming FCN, U-Net, DeepLabV3+, ICNet, and BiSeNetV2 on every metric.

These results show that LAM-CV-BiSeNetV2 achieves the best classification performance compared with the other five network models in this experiment.

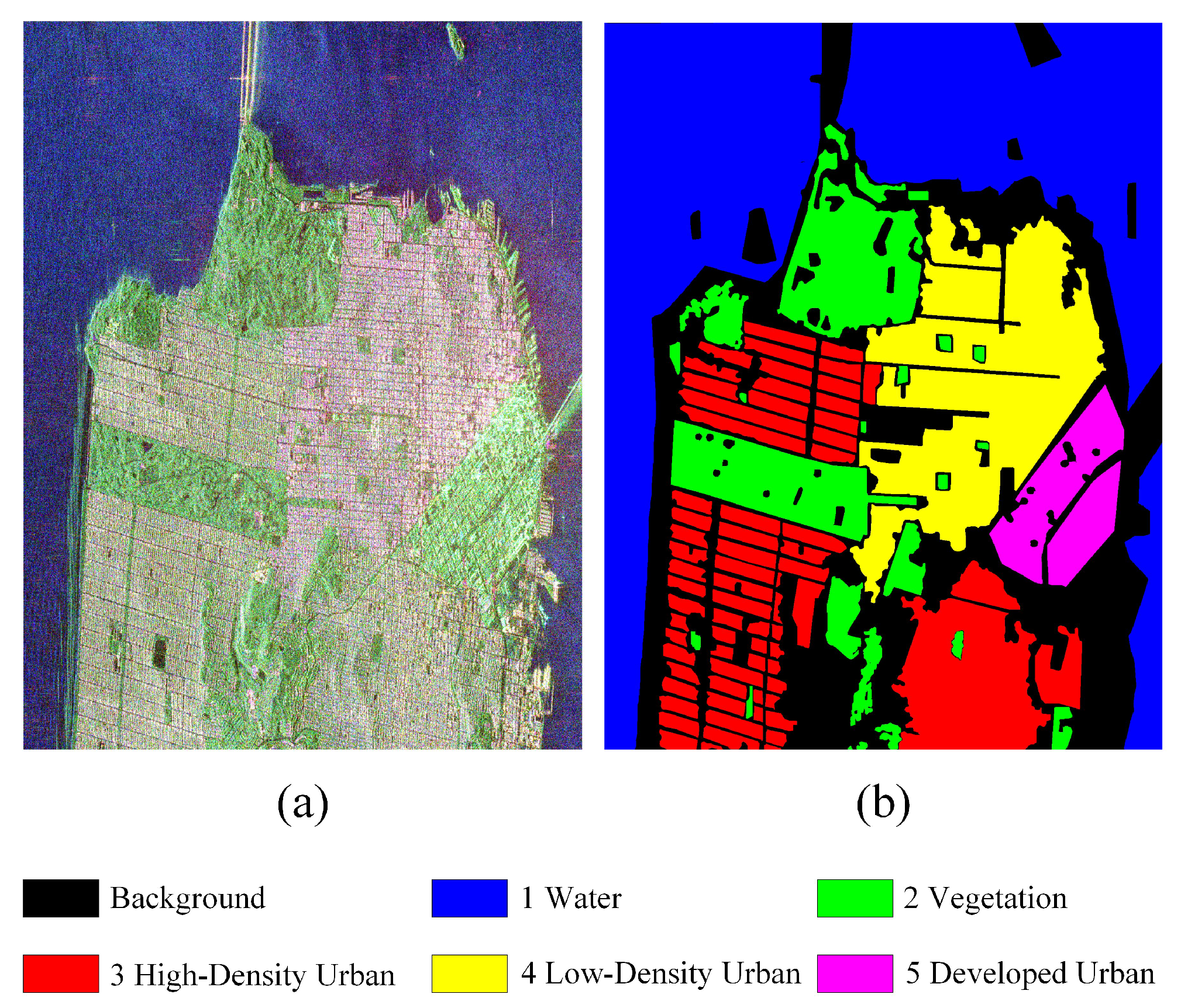

4.4. Experiment on San Francisco Dataset

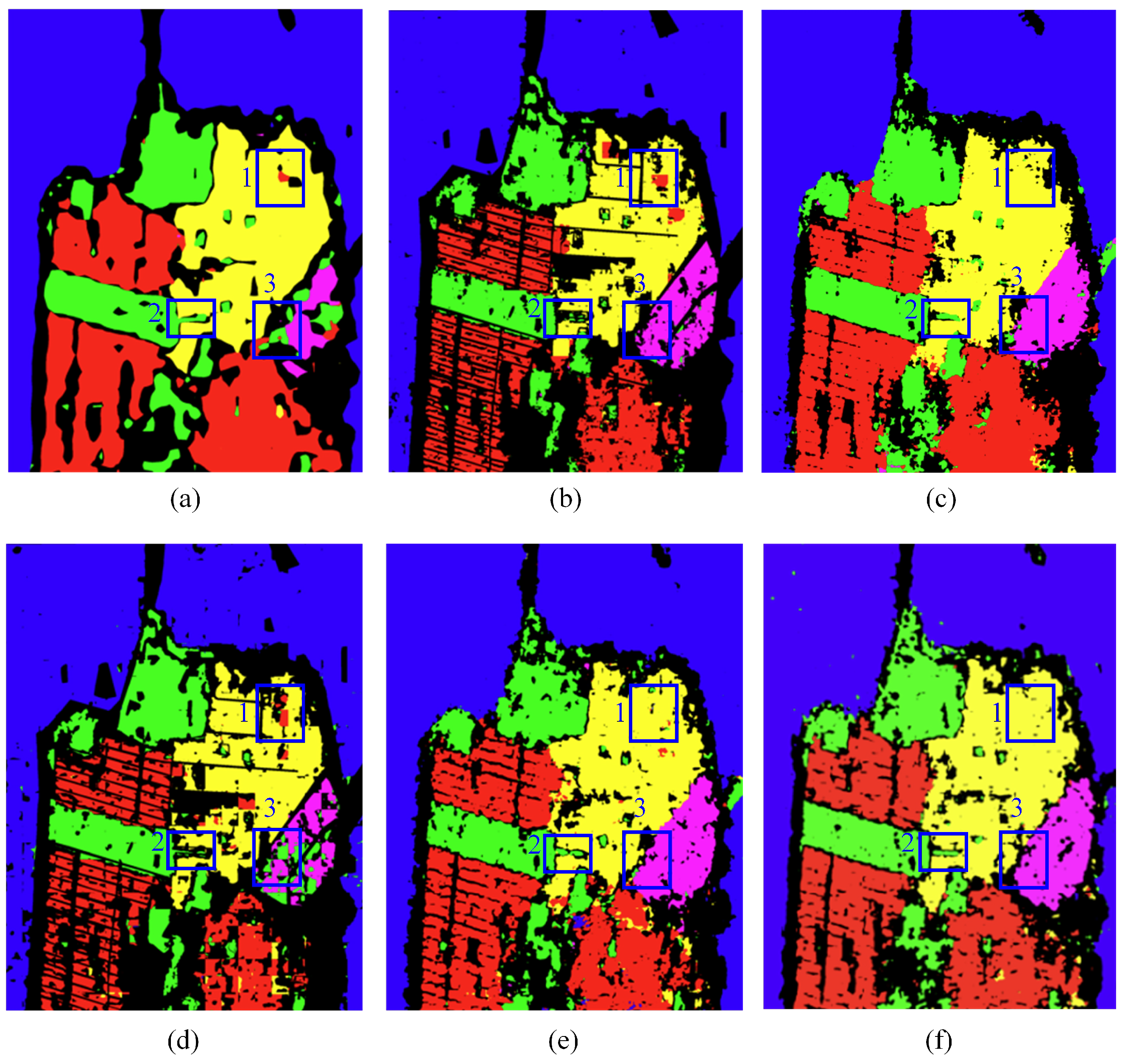

For the San Francisco Dataset, the semantic segmentation results of LAM-CV-BiSeNetV2 and the other RV networks are presented in Figure 11a–f. This dataset has a relatively balanced category distribution, which allows a more direct and fair evaluation of each model's classification capability. In each result map, three regions are marked by blue boxes.

In the region framed by blue box 1, FCN, U-Net, and ICNet partially misclassify low-density urban as high-density urban; BiSeNetV2 partially misclassifies it as high-density urban and vegetation; and DeepLabV3+ partially misclassifies it as background. Compared with these networks, LAM-CV-BiSeNetV2 clearly achieves better classification results. These misclassifications mainly arise from the similar polarimetric responses of urban structures with different densities and the limited ability of real-valued networks to capture subtle scattering variations within heterogeneous urban areas. In the region framed by blue box 2, FCN, U-Net, DeepLabV3+, ICNet, and BiSeNetV2 all partially misclassify vegetation. In blue box 3, which contains developed urban areas, networks other than U-Net and LAM-CV-BiSeNetV2 misclassify some developed urban areas as vegetation, whereas these two perform better. These errors are mainly due to the weak and spatially discontinuous scattering signals of vegetation in polarimetric SAR imagery.

In comparison, LAM-CV-BiSeNetV2 accurately distinguishes low-density from high-density urban regions and identifies the vegetation and developed urban areas.

The classification accuracy of FCN, U-Net, DeepLabV3+, ICNet, BiSeNetV2, and LAM-CV-BiSeNetV2 for each category of the San Francisco Dataset is shown in Table 8. Unlike Flevoland Dataset 1 and Flevoland Dataset 2, the San Francisco Dataset has a background area comparable in size to the other categories, so background is also reported as a category. Among these six categories, LAM-CV-BiSeNetV2 achieves the highest classification accuracy for water, low-density urban, and developed urban. For the other three categories, BiSeNetV2, ICNet, and DeepLabV3+ achieve the best results for background, vegetation, and high-density urban, respectively.
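The text does not state exactly how background pixels are handled when it is not reported as a category. One common convention, shown here purely as an assumption, is to drop pixels with a designated ground-truth label before building the confusion matrix, so they affect no metric. The function name and the choice of label `0` for background in the example are hypothetical.

```python
import numpy as np

def confusion_matrix_masked(y_true, y_pred, num_classes, ignore_label=None):
    """Confusion matrix (rows: ground truth, cols: prediction).

    Pixels whose ground truth equals `ignore_label` are dropped before counting,
    so they affect no metric; with ignore_label=None, background is scored as an
    ordinary class.
    """
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    if ignore_label is not None:
        keep = y_true != ignore_label     # mask out background/unlabeled pixels
        y_true, y_pred = y_true[keep], y_pred[keep]
    idx = num_classes * y_true + y_pred   # unique bin for each (true, pred) pair
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)
```

Passing `ignore_label=None` corresponds to scoring background as an ordinary class, as in Table 8; passing the background label excludes it, which changes MPA and MIoU because the number of averaged classes changes.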

The evaluation metrics of the different methods are shown in Table 9. LAM-CV-BiSeNetV2 achieves the highest MPA, MIoU, FWIoU, OA, and Kappa, reaching 83.56%, 70.08%, 70.98%, 82.24%, and 76.39%, respectively, which demonstrates that our method has the best segmentation performance.