Improving the Universal Performance of Land Cover Semantic Segmentation Through Training Data Refinement and Multi-Dataset Fusion via Redundant Models

Abstract

1. Introduction

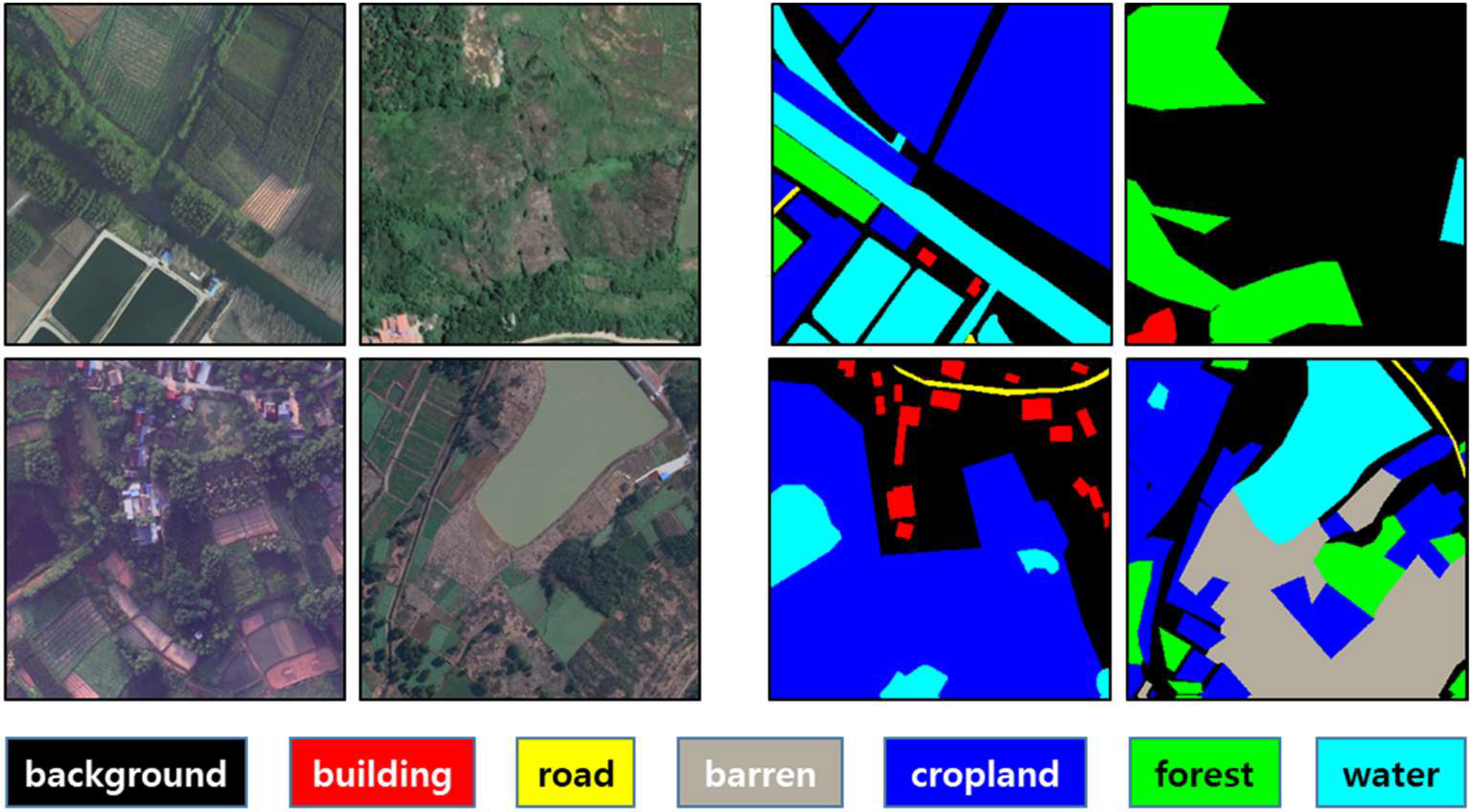

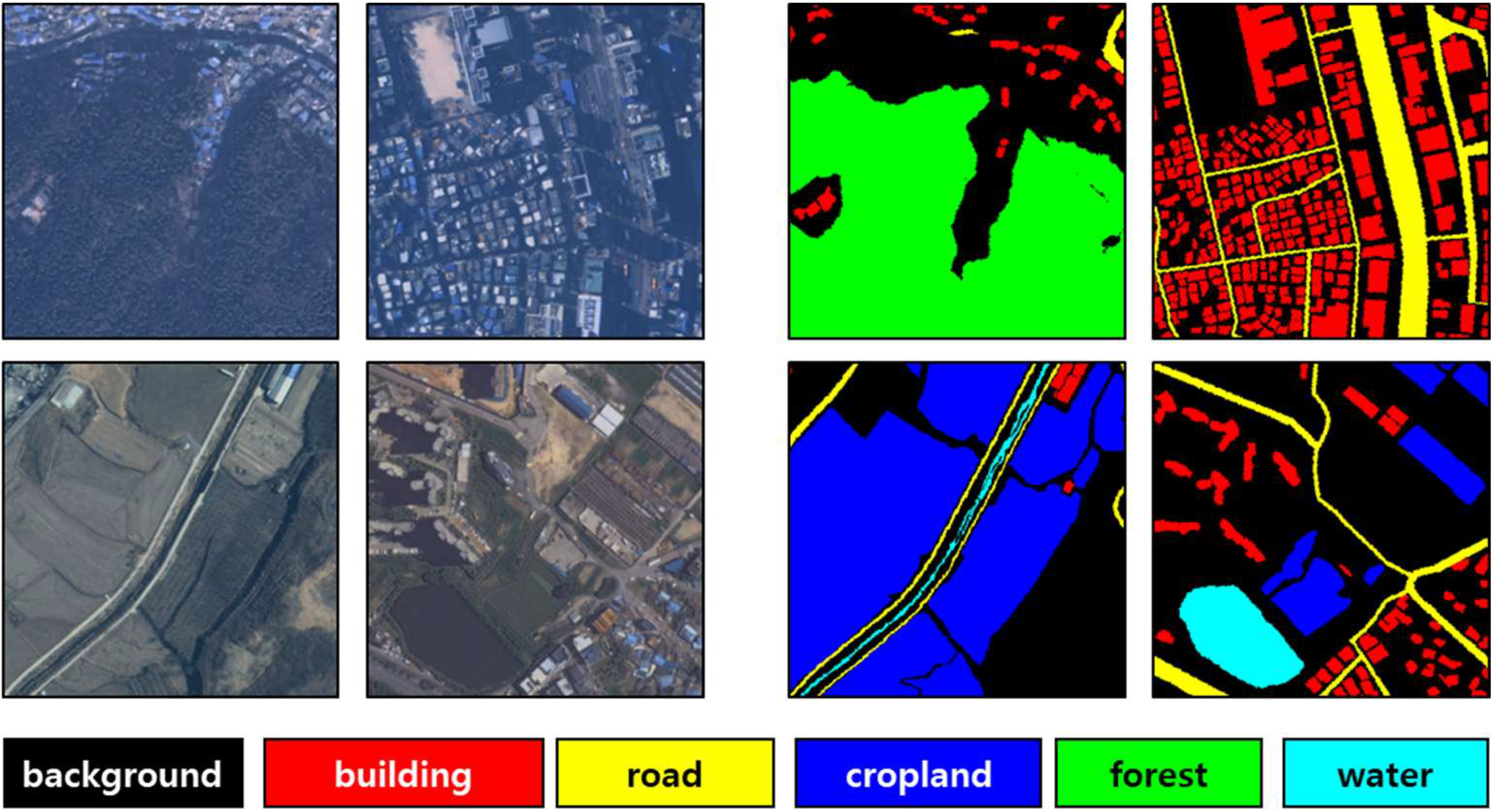

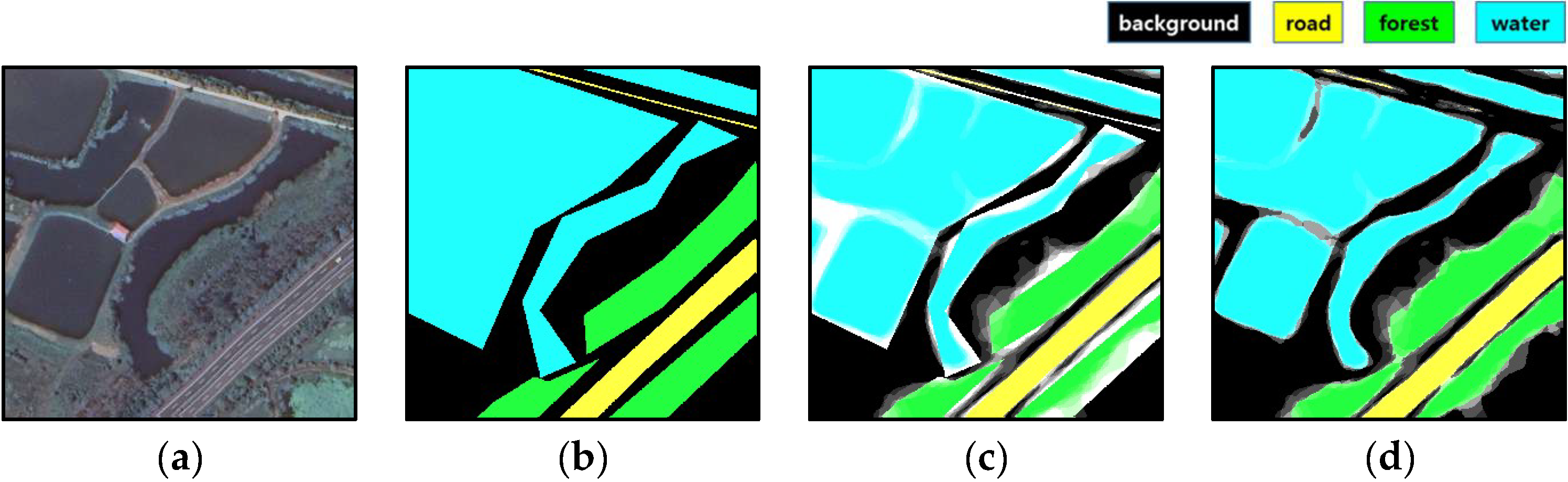

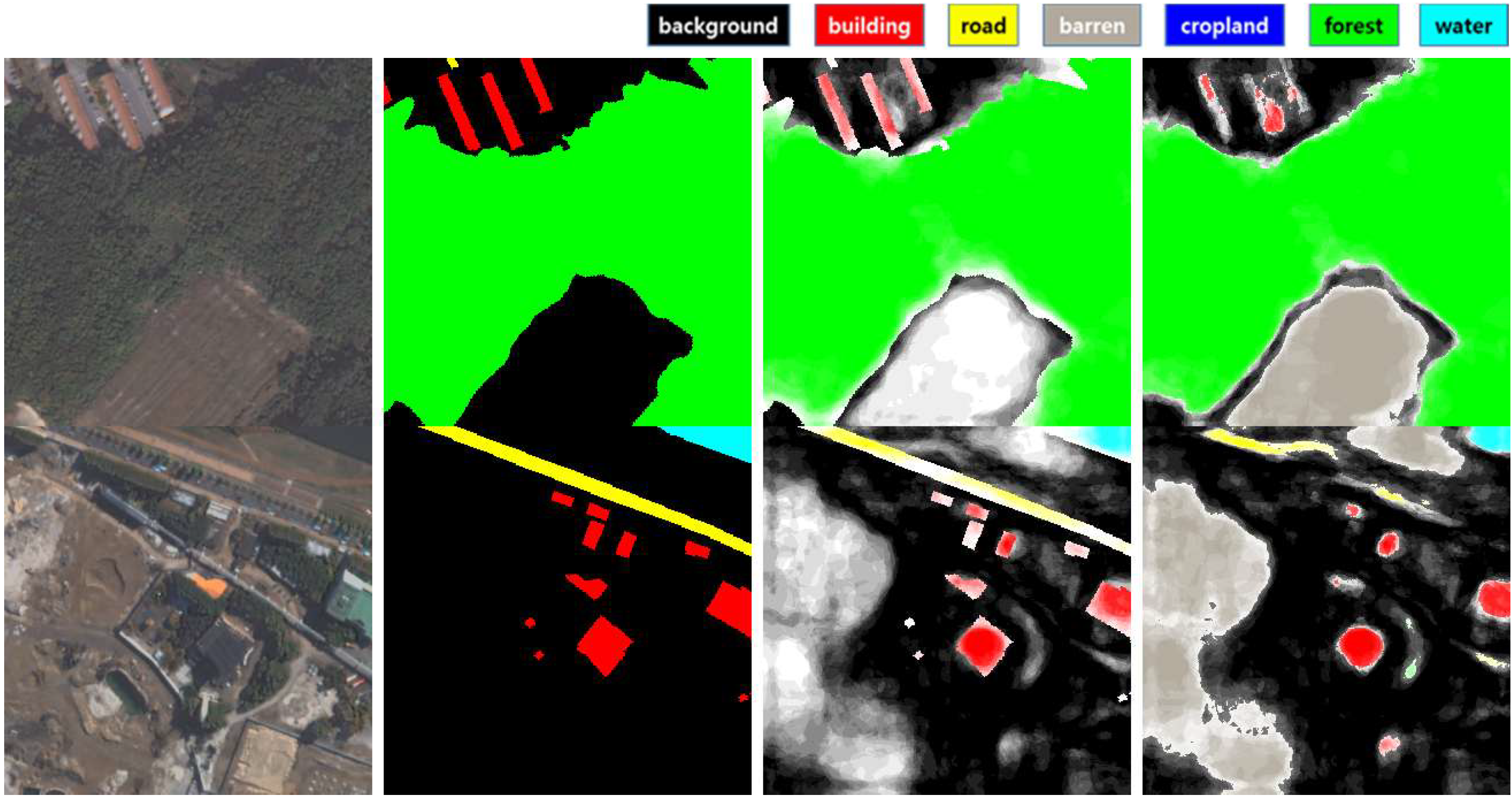

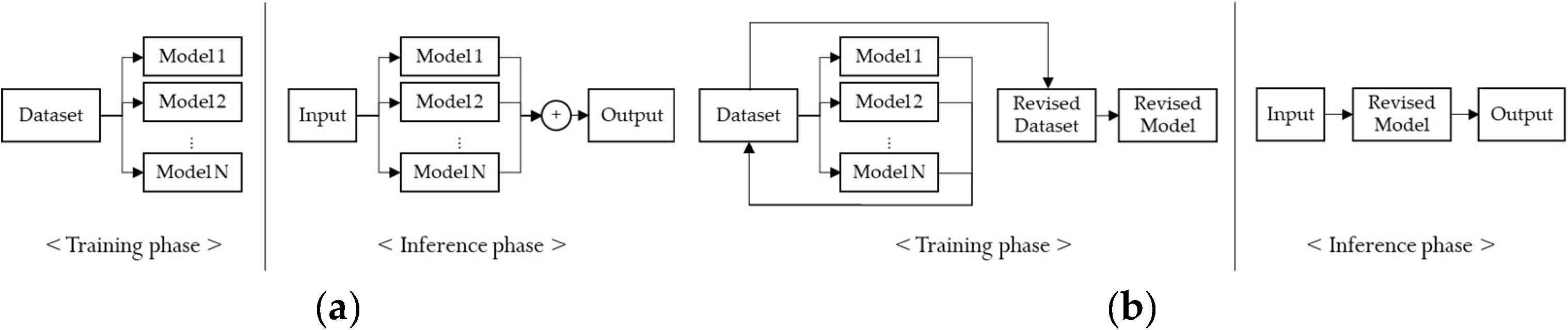

2. Materials

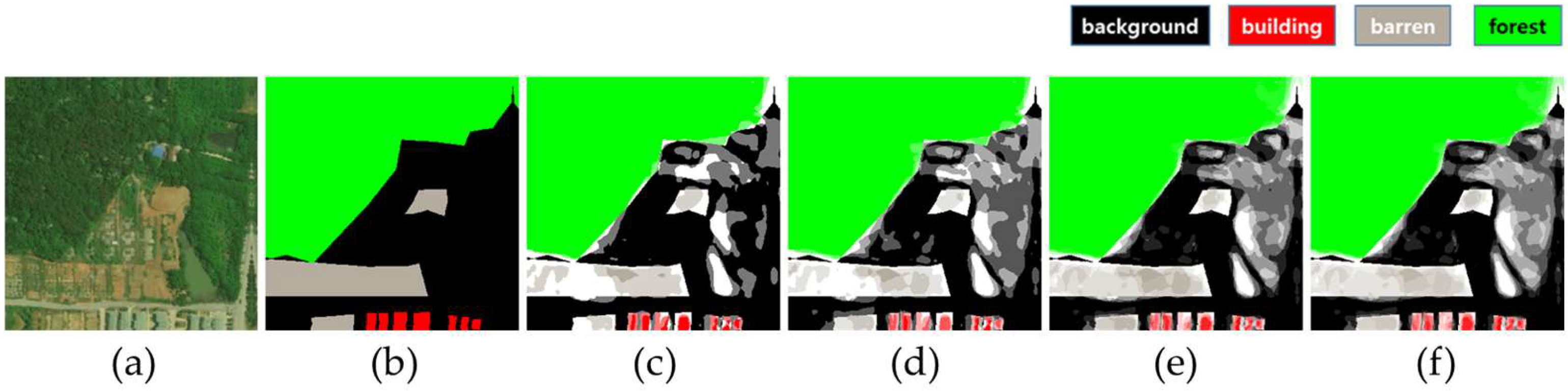

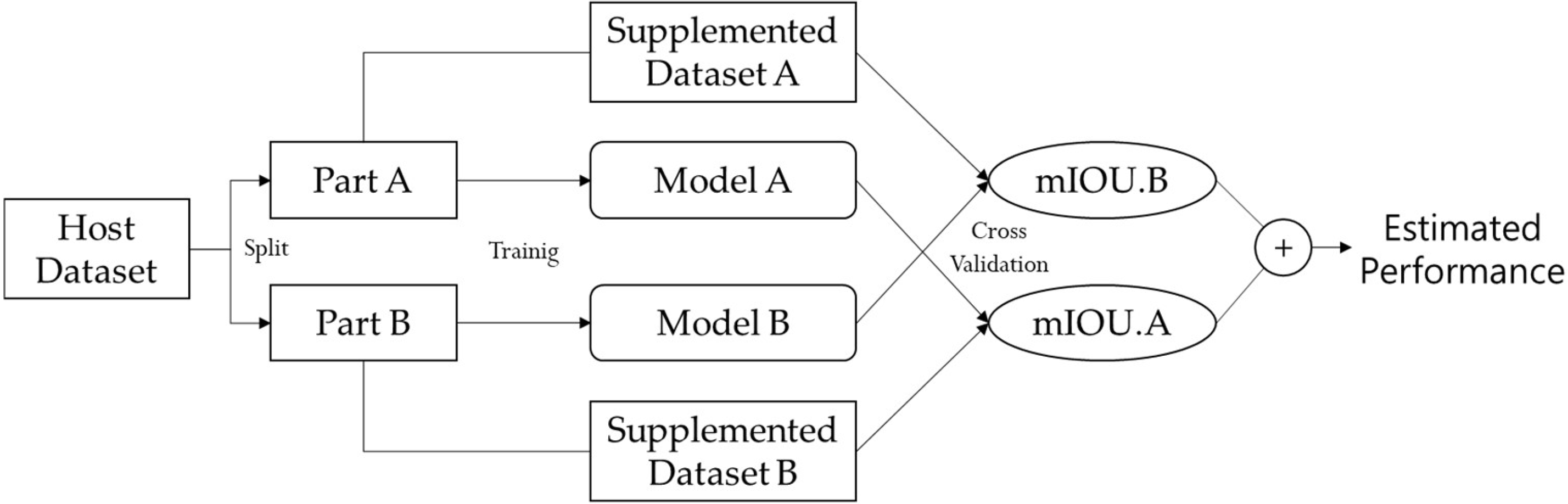

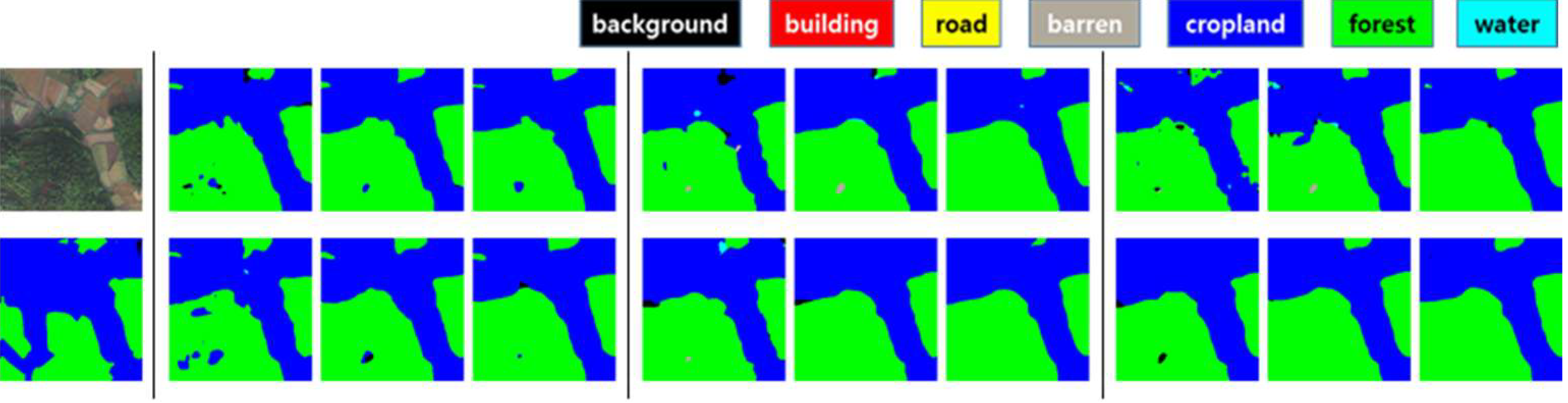

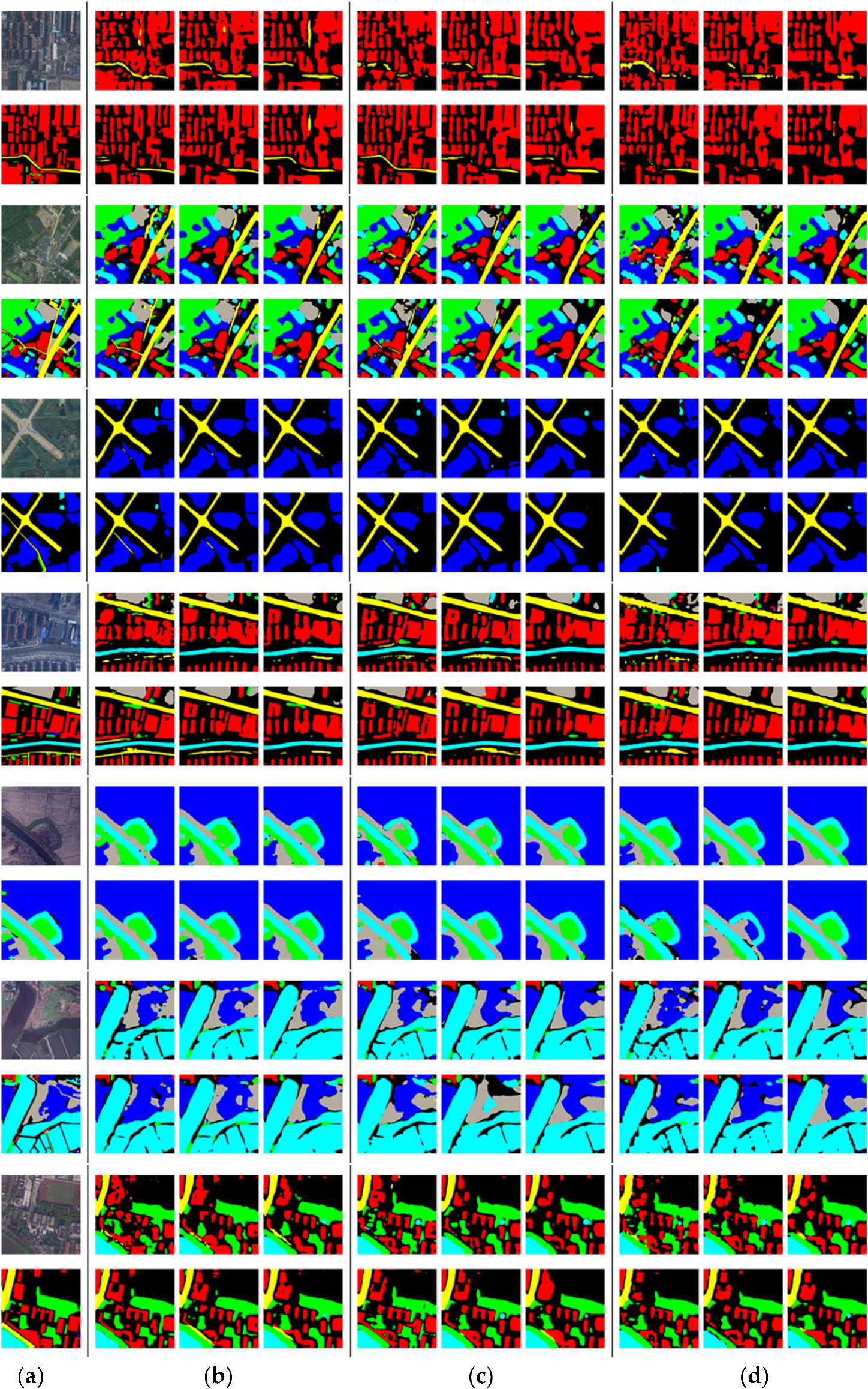

3. Methods

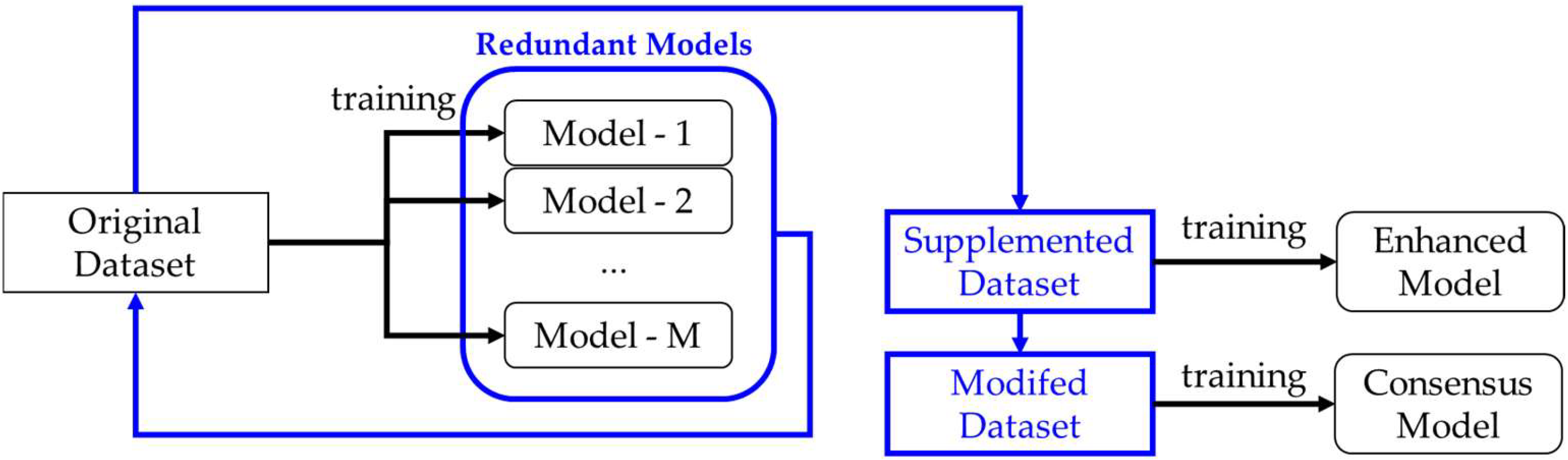

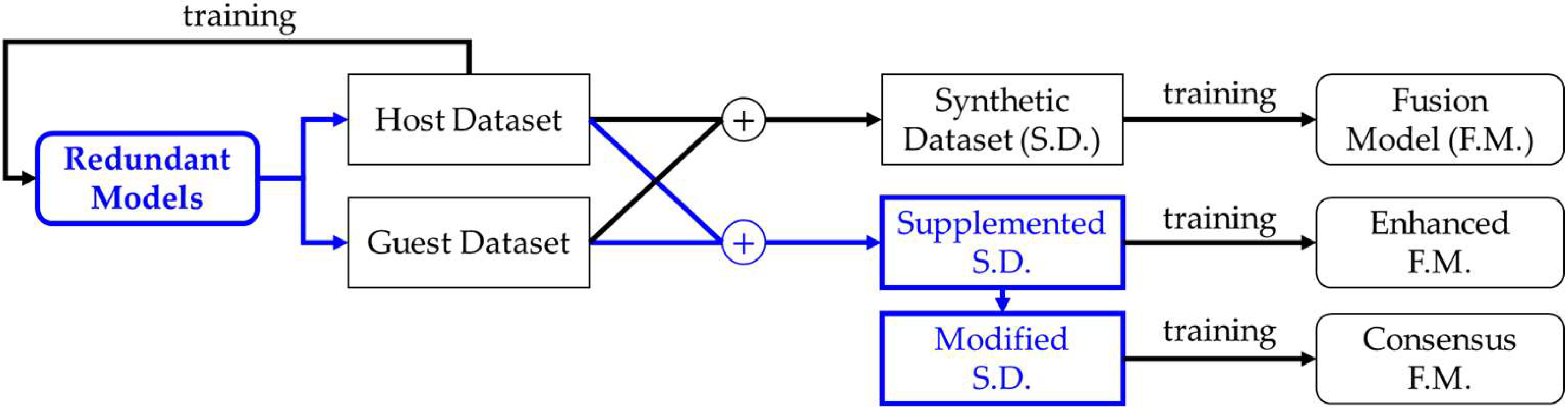

3.1. Overview of the Proposed Method

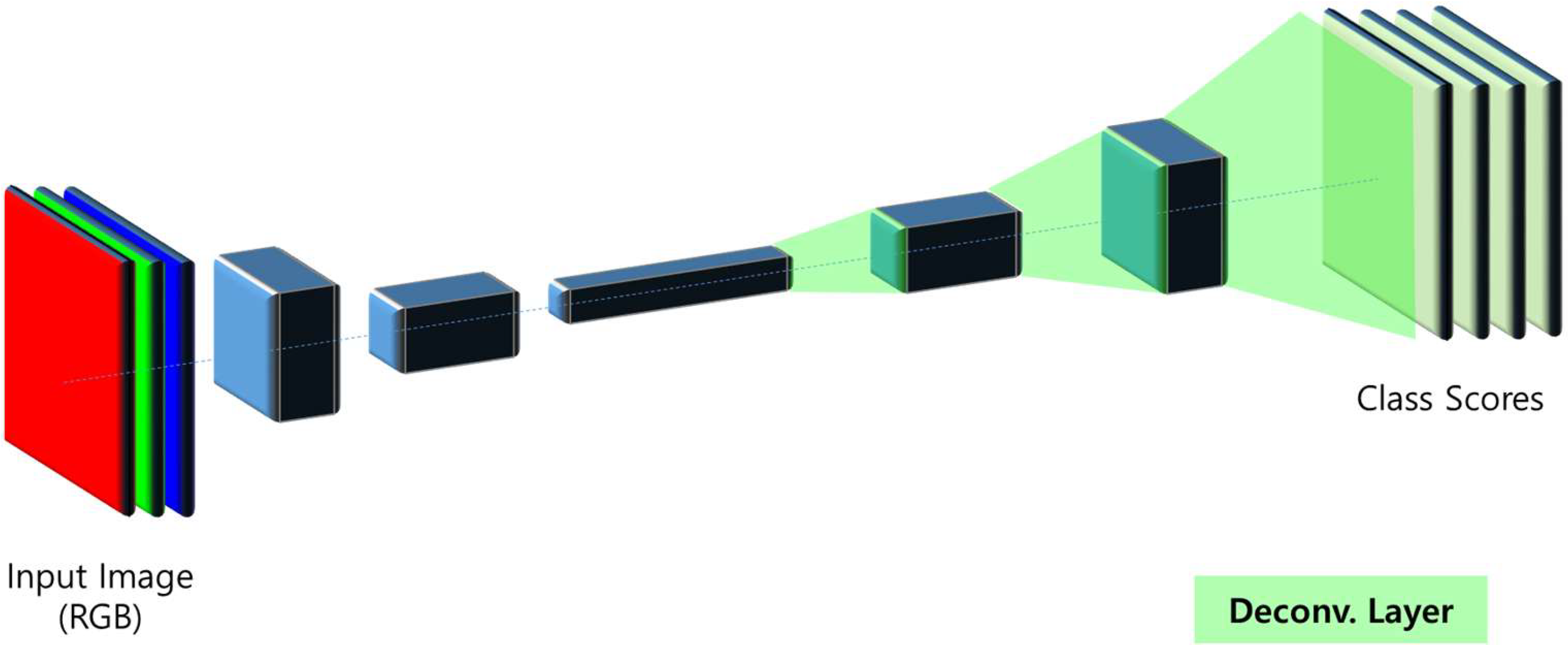

3.2. Segmentation Networks

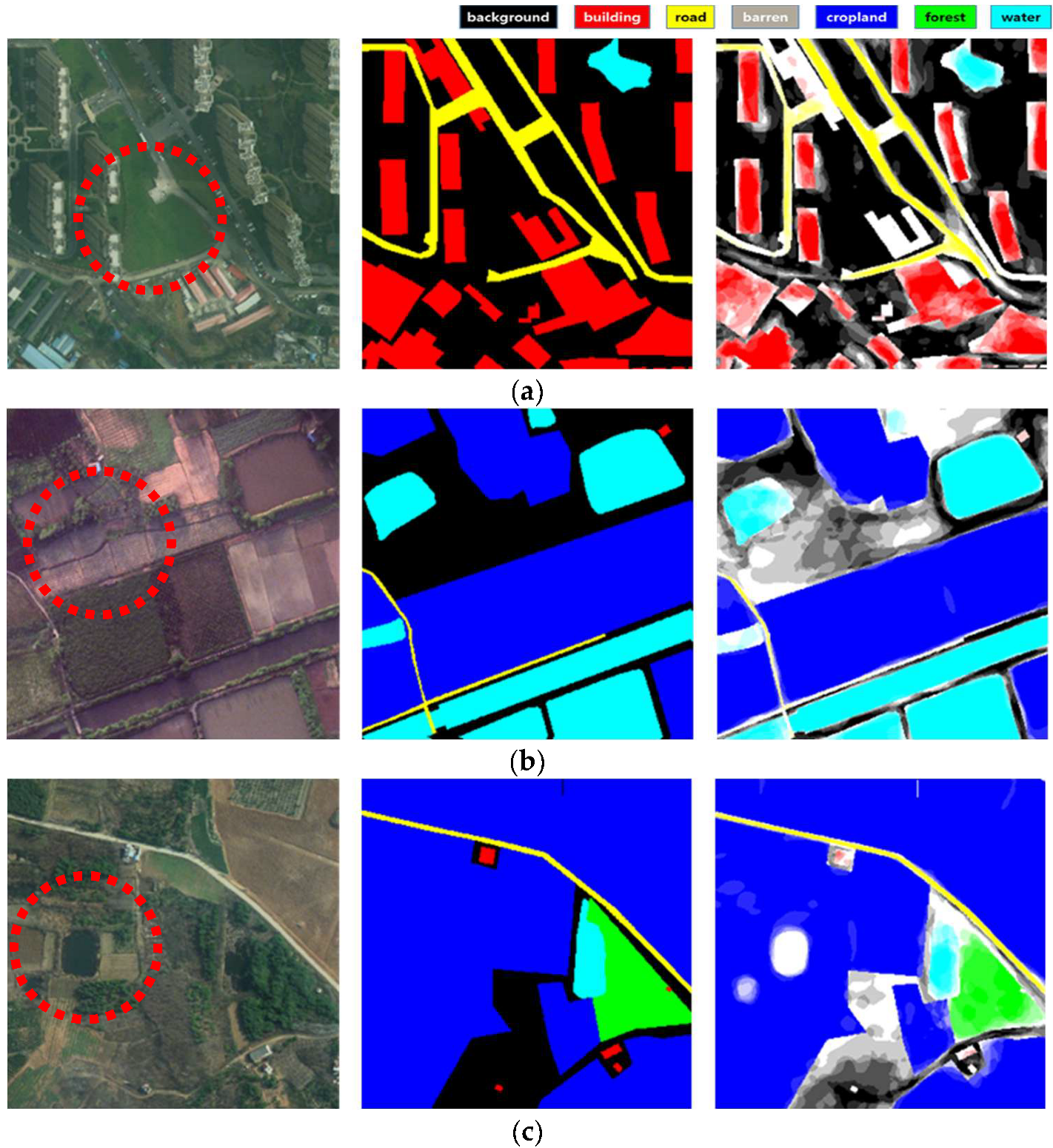

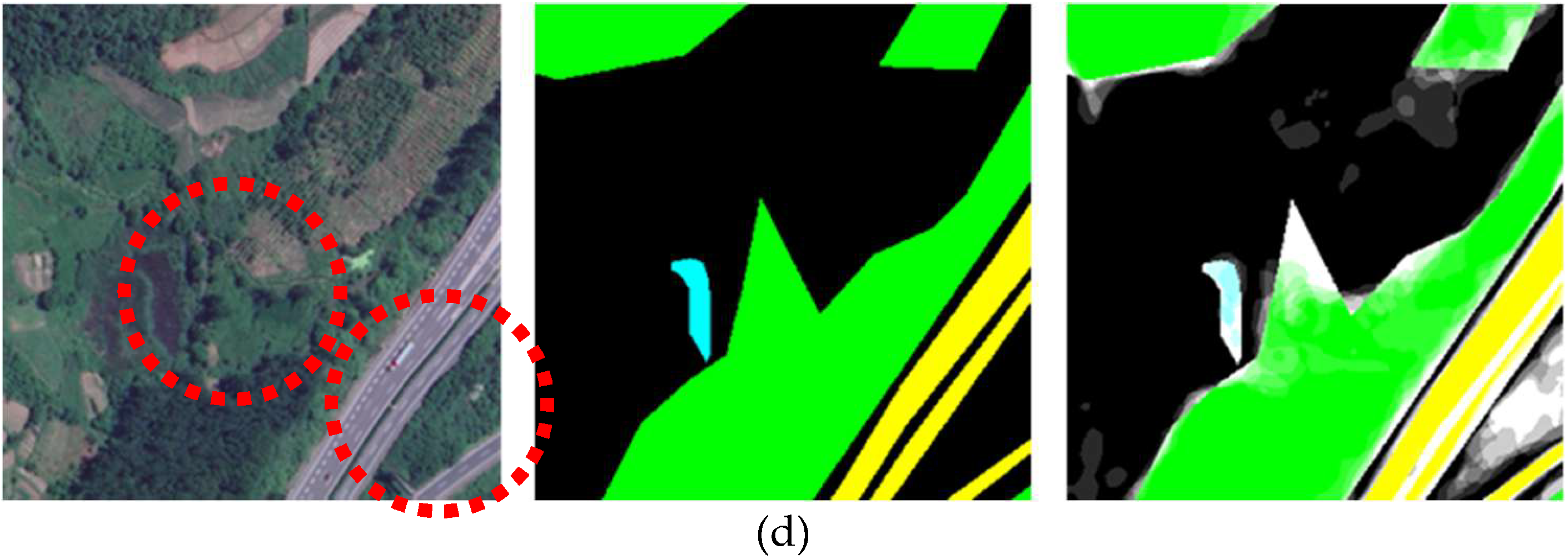

3.3. Creation of the Supplemented Mask and the Modified Mask Using Redundant Models

3.4. Assessment of Universal Performance

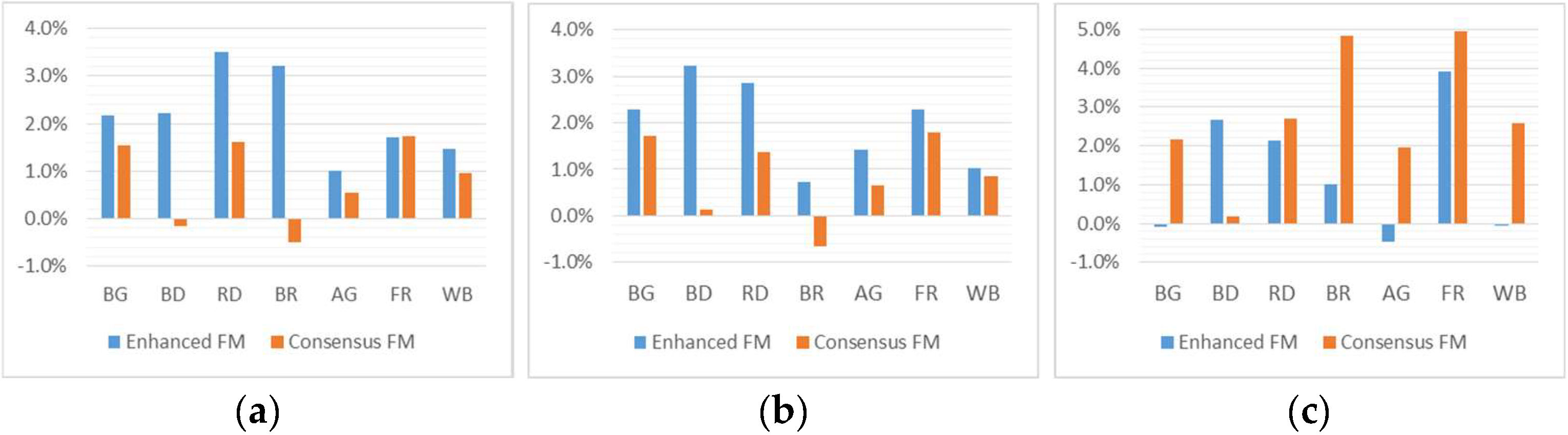

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| GT | ground truth |
| GSD | ground sample distance |
| IGN | French National Institute of Geographic and Forest Information |
| FLAIR | French Land cover from Aerospace ImageRy |
| LoveDA | Land-Cover Dataset for Domain Adaptive Semantic Segmentation |
| FCN | fully convolutional network |
| SD | synthetic dataset |
| FM | fusion model |
| CNN | convolutional neural network |


| Network Structure | Simple Network | U-Net | DeepLabV3 |
|---|---|---|---|
| Number of trainable parameters | 38,021,511 | 41,694,574 | 39,759,047 |
| Percentage difference with respect to the simple network | - | +9.7% | +4.6% |
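The percentage row of the parameter table follows directly from the parameter counts. The minimal Python sketch below assumes that each difference is taken relative to the simple network's count, which reproduces the reported +9.7% and +4.6%; the names `PARAM_COUNTS`, `percent_difference`, and `difference_table` are hypothetical helpers for illustration only.

```python
# Trainable-parameter counts copied from the parameter table.
PARAM_COUNTS = {
    "Simple network": 38_021_511,
    "U-Net": 41_694_574,
    "DeepLabV3": 39_759_047,
}


def percent_difference(count, reference):
    """Relative size of `count` versus `reference`, in percent."""
    return (count - reference) / reference * 100.0


def difference_table(counts, reference_name):
    """Percentage difference of every other network from the reference network,
    rounded to one decimal place as in the table (assumed convention)."""
    ref = counts[reference_name]
    return {
        name: round(percent_difference(n, ref), 1)
        for name, n in counts.items()
        if name != reference_name
    }


if __name__ == "__main__":
    for name, pct in difference_table(PARAM_COUNTS, "Simple network").items():
        print(f"{name}: {pct:+.1f}%")
```

Because the three networks differ by less than 10% in size, comparisons between them in the results tables are not dominated by model capacity alone.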

| Network Structure | Model Type (Dataset) | Model Type (Variant) | mIoU Part A (%) | mIoU Part B (%) | mIoU Average (%) | Improvement (%) |
|---|---|---|---|---|---|---|
| Simple network | Single Dataset | Raw | 70.48 | 69.84 | 70.16 | - |
| Simple network | Single Dataset | Enhanced | 73.69 | 72.29 | 72.99 | +2.83 |
| Simple network | Single Dataset | Consensus | 74.37 | 72.88 | 73.62 | +3.46 |
| Simple network | Multi-Dataset | Raw | 74.30 | 73.10 | 73.70 | +3.54 |
| Simple network | Multi-Dataset | Enhanced | 76.51 | 75.27 | 75.89 | +5.73 |
| Simple network | Multi-Dataset | Consensus | 74.89 | 74.15 | 74.52 | +4.36 |
| U-Net | Single Dataset | Raw | 72.14 | 71.28 | 71.71 | - |
| U-Net | Single Dataset | Enhanced | 74.45 | 73.38 | 73.92 | +2.21 |
| U-Net | Single Dataset | Consensus | 74.13 | 73.40 | 73.76 | +2.05 |
| U-Net | Multi-Dataset | Raw | 73.96 | 72.68 | 73.32 | +1.61 |
| U-Net | Multi-Dataset | Enhanced | 75.97 | 74.62 | 75.29 | +3.58 |
| U-Net | Multi-Dataset | Consensus | 74.70 | 73.61 | 74.16 | +2.45 |
| DeepLabV3 | Single Dataset | Raw | 72.09 | 70.86 | 71.48 | - |
| DeepLabV3 | Single Dataset | Enhanced | 74.51 | 73.30 | 73.91 | +2.43 |
| DeepLabV3 | Single Dataset | Consensus | 73.79 | 73.70 | 73.75 | +2.27 |
| DeepLabV3 | Multi-Dataset | Raw | 71.05 | 70.88 | 70.96 | −0.51 |
| DeepLabV3 | Multi-Dataset | Enhanced | 72.89 | 71.64 | 72.26 | +0.79 |
| DeepLabV3 | Multi-Dataset | Consensus | 74.31 | 73.15 | 73.73 | +2.25 |
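The Average and Improvement columns of the mIoU table can be recomputed from the Part A and Part B values. The sketch below assumes, from the arithmetic rather than from any stated definition, that Average is the mean of the two parts and that Improvement is the difference in percentage points from the same network's single-dataset Raw model. Since the published figures were presumably rounded from unrounded scores, recomputed values may differ from the table by about 0.01. The dictionary and function names are illustrative, not taken from the authors' code.

```python
# (Part A, Part B) mIoU in percent, copied from the mIoU table.
PART_MIOU = {
    ("Simple network", "Single Dataset", "Raw"): (70.48, 69.84),
    ("Simple network", "Single Dataset", "Enhanced"): (73.69, 72.29),
    ("Simple network", "Single Dataset", "Consensus"): (74.37, 72.88),
    ("Simple network", "Multi-Dataset", "Raw"): (74.30, 73.10),
    ("Simple network", "Multi-Dataset", "Enhanced"): (76.51, 75.27),
    ("Simple network", "Multi-Dataset", "Consensus"): (74.89, 74.15),
    ("U-Net", "Single Dataset", "Raw"): (72.14, 71.28),
    ("U-Net", "Single Dataset", "Enhanced"): (74.45, 73.38),
    ("U-Net", "Single Dataset", "Consensus"): (74.13, 73.40),
    ("U-Net", "Multi-Dataset", "Raw"): (73.96, 72.68),
    ("U-Net", "Multi-Dataset", "Enhanced"): (75.97, 74.62),
    ("U-Net", "Multi-Dataset", "Consensus"): (74.70, 73.61),
    ("DeepLabV3", "Single Dataset", "Raw"): (72.09, 70.86),
    ("DeepLabV3", "Single Dataset", "Enhanced"): (74.51, 73.30),
    ("DeepLabV3", "Single Dataset", "Consensus"): (73.79, 73.70),
    ("DeepLabV3", "Multi-Dataset", "Raw"): (71.05, 70.88),
    ("DeepLabV3", "Multi-Dataset", "Enhanced"): (72.89, 71.64),
    ("DeepLabV3", "Multi-Dataset", "Consensus"): (74.31, 73.15),
}


def average_miou(key):
    """Mean of the Part A and Part B mIoU for one (network, dataset, variant) row."""
    part_a, part_b = PART_MIOU[key]
    return (part_a + part_b) / 2


def improvement(key):
    """Assumed definition: percentage-point gain over the same network's
    single-dataset Raw model (left unrounded here)."""
    network = key[0]
    baseline = average_miou((network, "Single Dataset", "Raw"))
    return average_miou(key) - baseline


if __name__ == "__main__":
    for key in PART_MIOU:
        label = " / ".join(key)
        print(f"{label:45s} avg={average_miou(key):6.2f} improvement={improvement(key):+.2f}")
```

Under this reading, for example, the multi-dataset Enhanced simple network gains 75.89 - 70.16 = 5.73 points, and the multi-dataset Raw DeepLabV3 model is the only configuration that falls below its baseline.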

IoU of each class (%):

| Network Structure | Model Type (Dataset) | Model Type (Variant) | Background | Building | Road | Barren | Agricultural | Forest | Water Body |
|---|---|---|---|---|---|---|---|---|---|
| Simple network | Single Dataset | Raw | 67.08 | 62.36 | 73.75 | 51.85 | 79.27 | 73.64 | 83.18 |
| Simple network | Single Dataset | Enhanced | 70.86 | 68.05 | 77.00 | 52.97 | 80.71 | 76.11 | 85.24 |
| Simple network | Single Dataset | Consensus | 71.39 | 69.43 | 76.99 | 54.57 | 80.91 | 76.58 | 85.49 |
| Simple network | Multi-Dataset | Raw | 70.79 | 71.84 | 77.66 | 54.35 | 80.63 | 75.80 | 84.83 |
| Simple network | Multi-Dataset | Enhanced | 72.95 | 74.07 | 81.17 | 57.56 | 81.65 | 77.52 | 86.30 |
| Simple network | Multi-Dataset | Consensus | 72.33 | 71.69 | 79.26 | 53.85 | 81.17 | 77.54 | 85.79 |
| U-Net | Single Dataset | Raw | 68.55 | 68.41 | 75.61 | 52.27 | 79.30 | 73.74 | 84.07 |
| U-Net | Single Dataset | Enhanced | 71.56 | 71.58 | 78.26 | 54.42 | 80.13 | 75.86 | 85.61 |
| U-Net | Single Dataset | Consensus | 71.59 | 70.88 | 76.68 | 54.93 | 80.46 | 76.18 | 85.60 |
| U-Net | Multi-Dataset | Raw | 70.09 | 72.25 | 77.18 | 54.62 | 79.39 | 74.96 | 84.75 |
| U-Net | Multi-Dataset | Enhanced | 72.36 | 75.48 | 80.03 | 55.36 | 80.81 | 77.26 | 85.77 |
| U-Net | Multi-Dataset | Consensus | 71.80 | 72.38 | 78.54 | 53.97 | 80.06 | 76.75 | 85.59 |
| DeepLabV3 | Single Dataset | Raw | 68.79 | 68.02 | 73.12 | 52.61 | 79.45 | 74.35 | 83.97 |
| DeepLabV3 | Single Dataset | Enhanced | 71.69 | 71.46 | 76.10 | 55.68 | 80.76 | 76.24 | 85.42 |
| DeepLabV3 | Single Dataset | Consensus | 71.67 | 70.83 | 75.29 | 54.91 | 81.10 | 76.90 | 85.53 |
| DeepLabV3 | Multi-Dataset | Raw | 69.22 | 71.32 | 73.85 | 49.53 | 78.33 | 71.89 | 82.60 |
| DeepLabV3 | Multi-Dataset | Enhanced | 69.12 | 73.99 | 75.99 | 50.54 | 77.87 | 75.80 | 82.55 |
| DeepLabV3 | Multi-Dataset | Consensus | 71.37 | 71.51 | 76.54 | 54.37 | 80.28 | 76.83 | 85.19 |
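Per-class IoU and mIoU are normally computed from a pixel confusion matrix as IoU_c = TP_c / (TP_c + FP_c + FN_c), with mIoU the unweighted mean over classes. The sketch below follows that standard definition; it is an assumption, not the authors' stated evaluation code. The assumption is at least consistent with the per-class table: the seven class IoUs of the single-dataset Raw simple network average to 70.16, matching the Average column of the mIoU table. The helper names and the toy confusion matrix are hypothetical.

```python
import math

# Class order used in the per-class IoU table.
CLASSES = ["Background", "Building", "Road", "Barren",
           "Agricultural", "Forest", "Water Body"]

# Per-class IoU (%) of the single-dataset Raw simple network, copied from the table.
SIMPLE_SINGLE_RAW = [67.08, 62.36, 73.75, 51.85, 79.27, 73.64, 83.18]


def per_class_iou(confusion):
    """Return IoU_c = TP / (TP + FP + FN) for each class of a square confusion matrix.

    confusion[i][j] counts pixels whose ground-truth class is i and whose
    predicted class is j. A class absent from both ground truth and
    prediction has an empty union and yields NaN.
    """
    ious = []
    for c in range(len(confusion)):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                  # labelled c, predicted as another class
        fp = sum(row[c] for row in confusion) - tp   # predicted c, labelled as another class
        denom = tp + fp + fn
        ious.append(tp / denom if denom else math.nan)
    return ious


def mean_iou(ious):
    """Unweighted mean over classes, skipping NaN entries."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid)


if __name__ == "__main__":
    print(dict(zip(CLASSES, SIMPLE_SINGLE_RAW)))
    print(f"mIoU = {mean_iou(SIMPLE_SINGLE_RAW):.2f}")  # prints mIoU = 70.16
    toy = [[50, 10],
           [5, 35]]
    print(per_class_iou(toy))  # class 0: 50 / 65, class 1: 35 / 50
```

Because mIoU weights every class equally, the comparatively low Barren IoU pulls the mean down more than its pixel share alone would suggest.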

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, J.Y.; Oh, K.-Y.; Lee, K.-J. Improving the Universal Performance of Land Cover Semantic Segmentation Through Training Data Refinement and Multi-Dataset Fusion via Redundant Models. Remote Sens. 2025, 17, 2669. https://doi.org/10.3390/rs17152669


Chicago/Turabian style: Chang, Jae Young, Kwan-Young Oh, and Kwang-Jae Lee. 2025. "Improving the Universal Performance of Land Cover Semantic Segmentation Through Training Data Refinement and Multi-Dataset Fusion via Redundant Models" Remote Sensing 17, no. 15: 2669. https://doi.org/10.3390/rs17152669
