1. Introduction

With the continuous development of China’s economy, the pace of road infrastructure construction has accelerated significantly [1]. As essential transportation facilities, roads play a vital role in promoting economic development [2]. However, road cracks are one of the primary problems affecting both the safety and the service life of roads. Road cracks take various forms, including vertical, transverse, and oblique cracks [3], and are predominantly caused by a combination of factors such as construction practices, temperature variations, and changes in vehicle loading. If early cracks are not repaired promptly, they may progress into severe damage that degrades the road’s appearance and driving comfort and poses a direct threat to traffic safety. In recent years, road cracks have caused vehicle damage and even traffic accidents, particularly in older urban areas and on national highways, where cracks are frequent. Consequently, road cracking has become a significant constraint on the safe operation of pavement infrastructure.

Traditional road crack detection relies primarily on manual inspection, such as visual assessment or handheld equipment. These approaches are labor-intensive and inefficient, and their results depend on the operator’s experience and subjective judgment [4]. Consequently, inspection results can carry significant uncertainty and a risk of missed defects. These methods also scale poorly to large road networks in terms of time and labor cost, making it difficult to deliver the high-frequency, high-precision monitoring that the maintenance of modern transportation facilities requires. Although some developed regions have adopted advanced technologies such as infrared radar, three-dimensional laser scanning vehicles, and high-precision inspection vehicles, these systems tend to be expensive and complex to operate, and they require a high level of expertise to maintain. These requirements restrict their adoption in small and medium-sized cities and less developed areas. There is therefore an urgent need for an automated road crack detection scheme that is efficient, intelligent, cost-effective, and scalable. Such a system would enable rapid sensing of road conditions and timely intervention, supporting the sustainable development of intelligent transportation and road health management.

With the rapid advancement of deep learning and computer vision, crack detection methods based on visual perception have become a major research focus. Because such methods can be deployed on flexible platforms such as unmanned aerial vehicles (UAVs) and mobile terminals, they open new avenues for the intelligent identification and large-scale monitoring of road defects.

In recent years, with the advancement of deep learning, object detection algorithms have become the dominant approach to crack detection. These algorithms fall into two categories: two-stage detection methods (such as R-CNN [5], Faster R-CNN [6], and Mask R-CNN [7]) and single-stage detection methods (including YOLO [8], SSD [9], and RetinaNet [10]). Two-stage algorithms first generate candidate regions (proposals) from the image and then classify and refine these candidates in a second stage. Convolutional neural networks (CNNs), which serve as the feature extractors in these detectors, are widely recognized for their strong feature extraction capabilities. Recent studies have applied these algorithms in various ways: Wang et al. [11] developed a crack recognition model based on Mask R-CNN to achieve pixel-level segmentation; Li et al. [12] introduced the SENet attention mechanism to optimize Faster R-CNN, enhancing its feature expression across different levels; Kortmann et al. [13] applied Faster R-CNN to recognize multinational, multi-type road damage, verifying its cross-regional adaptability; Xu et al. [14] compared the performance of Faster R-CNN and Mask R-CNN under small-sample conditions and proposed a joint training strategy; Balcı et al. [15] achieved high accuracy in road crack detection by combining data augmentation with Faster R-CNN, attaining a mean average precision (mAP) of 93.2%; Gan et al. fused Faster R-CNN with a BIM model to enable 3D visual modeling of cracks on the underside of bridges; and Lv et al. [16] designed an optimized CNN model that identifies vertical, transverse, and reticular cracks with a recognition accuracy of up to 99%.

In contrast, single-stage algorithms predict object locations and categories directly from the entire image in a single pass, which makes them fast, although they may be slightly less accurate. Nevertheless, single-stage methods perform impressively in crack detection on visible images, particularly the YOLO series, which is widely used in practical engineering owing to its end-to-end architecture and high efficiency. The YOLO series continues to evolve, and current research on YOLO-based road crack detection focuses on improving accuracy, developing lightweight structures, and increasing robustness in complex scenarios.

In crack detection research, the YOLO series has become the predominant framework owing to its efficient end-to-end architecture and real-time performance. For instance, Zhou et al. [17] improved accuracy and speed on the RDD2022 dataset by integrating SENet channel attention, K-means anchor optimization, and SimSPPF. Similarly, Zhen et al. [18] improved the detection of small cracks against complex backgrounds through denoising and anchor optimization. Du et al. [4] proposed BV-YOLOv5S, which combines BiFPN and Varifocal Loss for robust multi-class pavement defect detection. Karimi et al. [19] applied YOLOv5 to identify cracks in various materials in historical buildings, demonstrating its strong adaptability across materials. For small cracks, Li et al. [20] developed CrackTinyNet, based on YOLOv7, which incorporates BiFormer, NWD loss, and SPD-Conv and achieves high accuracy on both public datasets and real-vehicle tests. Regarding YOLOv8, Wen et al. [21], Zhang et al. [22], and Yang et al. [23] improved accuracy, inference speed, and deployability through detection-layer optimization, lightweight convolutions, and global attention mechanisms, respectively. Manjusha et al. [24] confirmed the overall superiority of YOLOv8 through comparative analysis. Recent YOLO-based variants include Rural-YOLO [25], which uses attention and feature enhancement modules to improve pavement distress detection accuracy while enabling lightweight deployment, and YOLO11-BD [26], which reduces computation while improving accuracy. Fusion architectures such as YOLO-MSD [27], PC3D-YOLO [28], ML-YOLO [29], RSG-YOLO [30], and EMG-YOLO [31] have been applied effectively to industrial surface defects, track panel cracks, rail cracks, and multi-type defect detection.

Related YOLO improvements outside crack detection address attention, multimodal input, and small objects. Zhou et al. [32] proposed an improved YOLOv5 network integrating convolutional block attention modules (CBAM) and residual structures to enhance insulator and defect detection in UAV images, achieving notable gains in precision and robustness. Chen et al. [33] introduced DEYOLO, a dual-feature-enhancement framework based on YOLOv8 that incorporates semantic-spatial cross-modality modules (DECA and DEPA) and a bi-directional decoupled focus mechanism, significantly improving RGB-IR object detection under poor illumination. Similarly, Xu et al. [34] presented an improved YOLOv5s that embeds a shallow feature extraction layer and window self-attention modules into the Path Aggregation Network, effectively addressing small-object detection in remote sensing images with high accuracy and real-time performance on the DIOR and RSOD datasets.

In addition to the continuous optimization of the YOLO series, emerging network architectures offer new solutions for crack detection. The Transformer architecture notably strengthens global feature modeling. Chen et al. [35] proposed iSwin-Unet, which integrates skip attention and residual Swin Transformer blocks into Swin-Unet, achieving significant performance gains across multiple public datasets. Saberironaghi et al. [36] introduced DepthCrackNet, which incorporates spatial and depth enhancement modules to maintain high accuracy and computational efficiency when detecting fine cracks against complex backgrounds. Wang et al. [37] developed CGTr-Net, which fuses CNNs with Gated Axial-Transformers together with feature fusion and pseudo-labeling strategies, and performs robustly on thin and discontinuous cracks. Zhang et al. [38] applied generative diffusion models to crack detection in the CrackDiff framework, where a multi-task UNet simultaneously predicts masks and noise, improving the integrity and continuity of crack boundaries. In remote sensing road extraction, Hui et al. [39] used the Swin Transformer to fuse global spatial features with Fourier frequency-domain features, separating and enhancing high- and low-frequency information to improve road–background separability and boundary continuity; this approach achieved IoU scores of 72.54% (HF), 55.35% (MS), and 71.87% (DeepGlobe). Guan et al. [40] proposed Swin-FSNet for unpaved roads, which uses the Swin Transformer as the encoder backbone together with a discrete wavelet-based frequency-aware decomposition module (WBFD) to extract direction-sensitive features. A hybrid dynamic serpentine convolution module (HyDS-B) in the decoder adaptively models curved and bifurcated roads; the model achieves a road IoU of 81.76% on a self-collected UAV dataset and 71.97% on DeepGlobe, with notable structural preservation.

In dual-modal and integrated approaches, researchers combine diverse features and sensing data to improve detection adaptability and utility. Zhang et al. [41] integrated an enhanced YOLOv5s with U-Net++, using binocular vision ranging and edge detection to achieve precise crack localization and width quantification, thereby improving measurement accuracy in complex scenes. For pixel-level road crack detection, Wang et al. [42] proposed GGMNet, which incorporates three key components: (1) Global Context Residual Blocks (GC-Resblocks) to suppress background noise, (2) Graph Pyramid Pooling Modules (GPPMs) for multiscale feature aggregation and long-range dependency capture, and (3) Multiscale Feature Fusion (MFF) modules to minimize missed detections. This framework demonstrated high accuracy and robustness on the DeepCrack, CrackTree260, and Aerial Track Detection datasets, offering insights for refining linear feature extraction.

Traditional machine learning techniques also remain relevant in specialized applications. Shi et al. [43] developed CrackForest, which combines integral channel features with random structured forests to improve noise immunity and retain structural information. Gavilán et al. [44] significantly reduced false positives through pavement classification and feature screening in their adaptive detection system. Oliveira et al. [45] created CrackIT, which integrates unsupervised clustering with crack characterization for effective thin-crack detection and width grading.

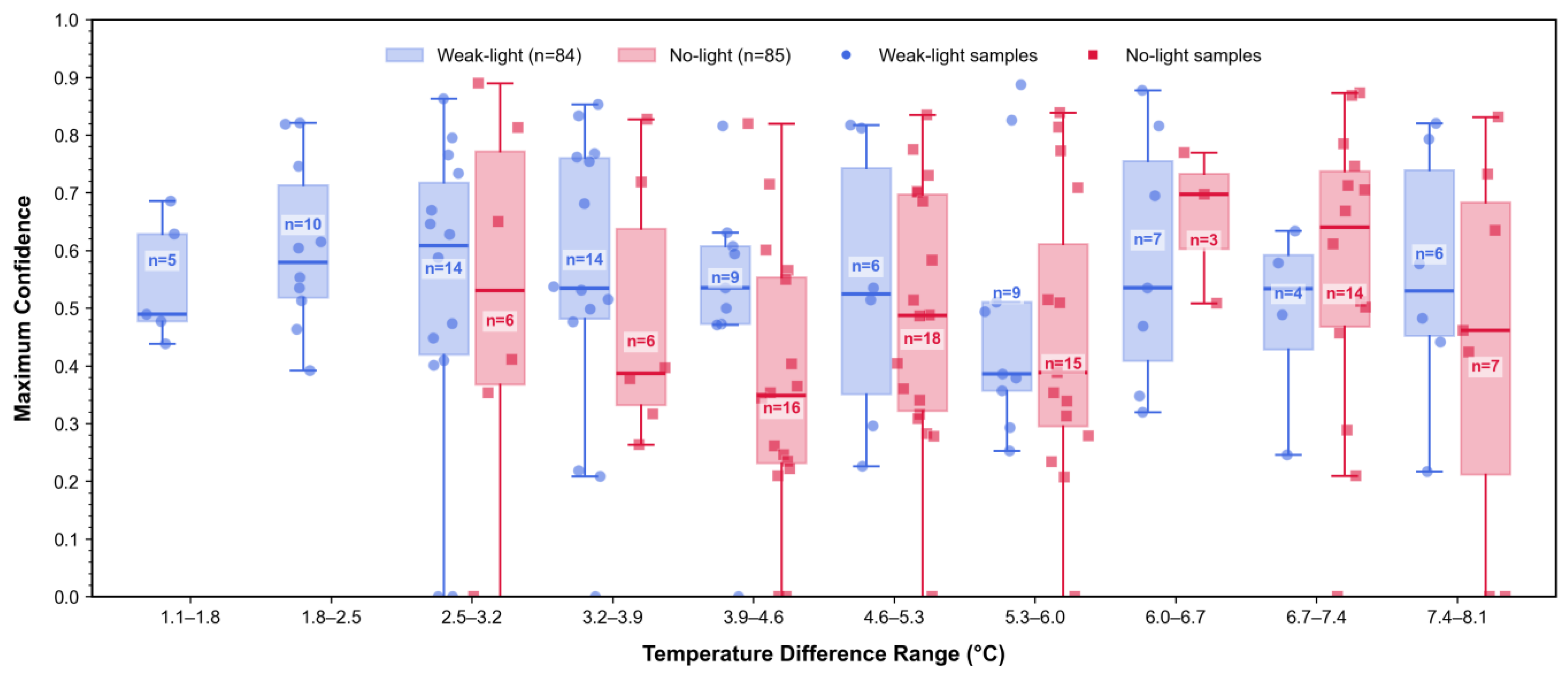

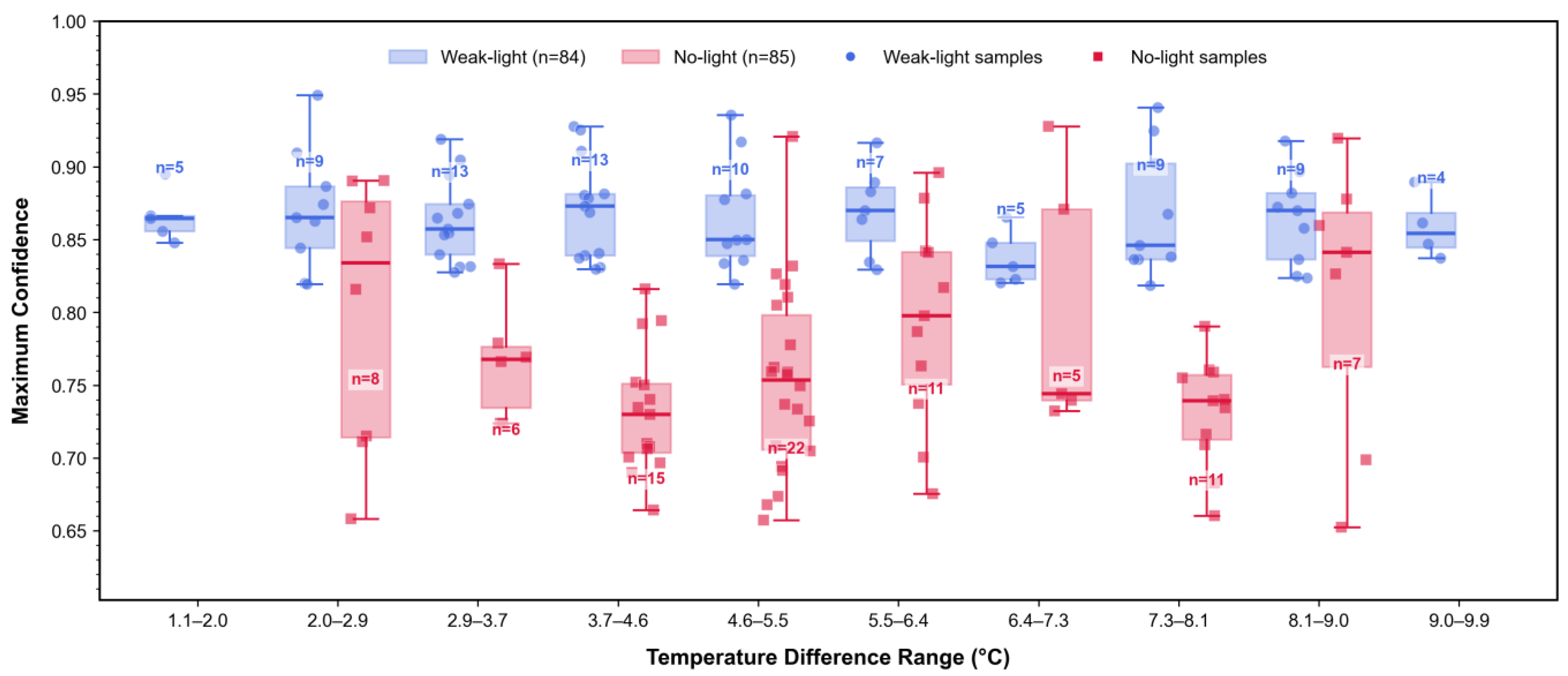

Current research has significantly advanced crack detection through targeted improvements to YOLO series models, including structural optimization, attention mechanisms, image enhancement, lightweight design, and multimodal fusion, substantially improving both accuracy and practicality. However, model performance degrades markedly in weak- or no-light conditions, where visible-light images lose texture information and exhibit blurred boundaries, limiting crack perception and localization. Developing dual-modal detection models that remain robust in such environments is therefore a critical research direction. To address weak-light degradation, thermal infrared imagery serves as a complementary modality. Unlike visible-light imaging, which depends on ambient illumination, thermal imaging captures temperature differences on the target surface, enabling stable imaging at night or in darkness. Road cracks appear as regions of high thermal contrast in infrared images, which supports robust perception. The two modalities are strongly complementary: visible images provide texture and edge details, while infrared images convey surface thermal characteristics. Consequently, dual-modal fusion has emerged as a key approach to weak-light crack detection; it improves feature completeness and model generalization, which is particularly valuable for temperature-sensitive defects such as road cracks.

Meanwhile, current fusion methods (e.g., feature concatenation, weighted fusion) often fail to capture deep inter-modal semantic relationships, suffering from shallow integration and inadequate spatial dependency modeling [

31]. Transformers have recently gained prominence in vision tasks due to their superior long-range dependency modeling. Their self-attention mechanism dynamically focuses on globally relevant features, effectively addressing dual-modal alignment challenges [

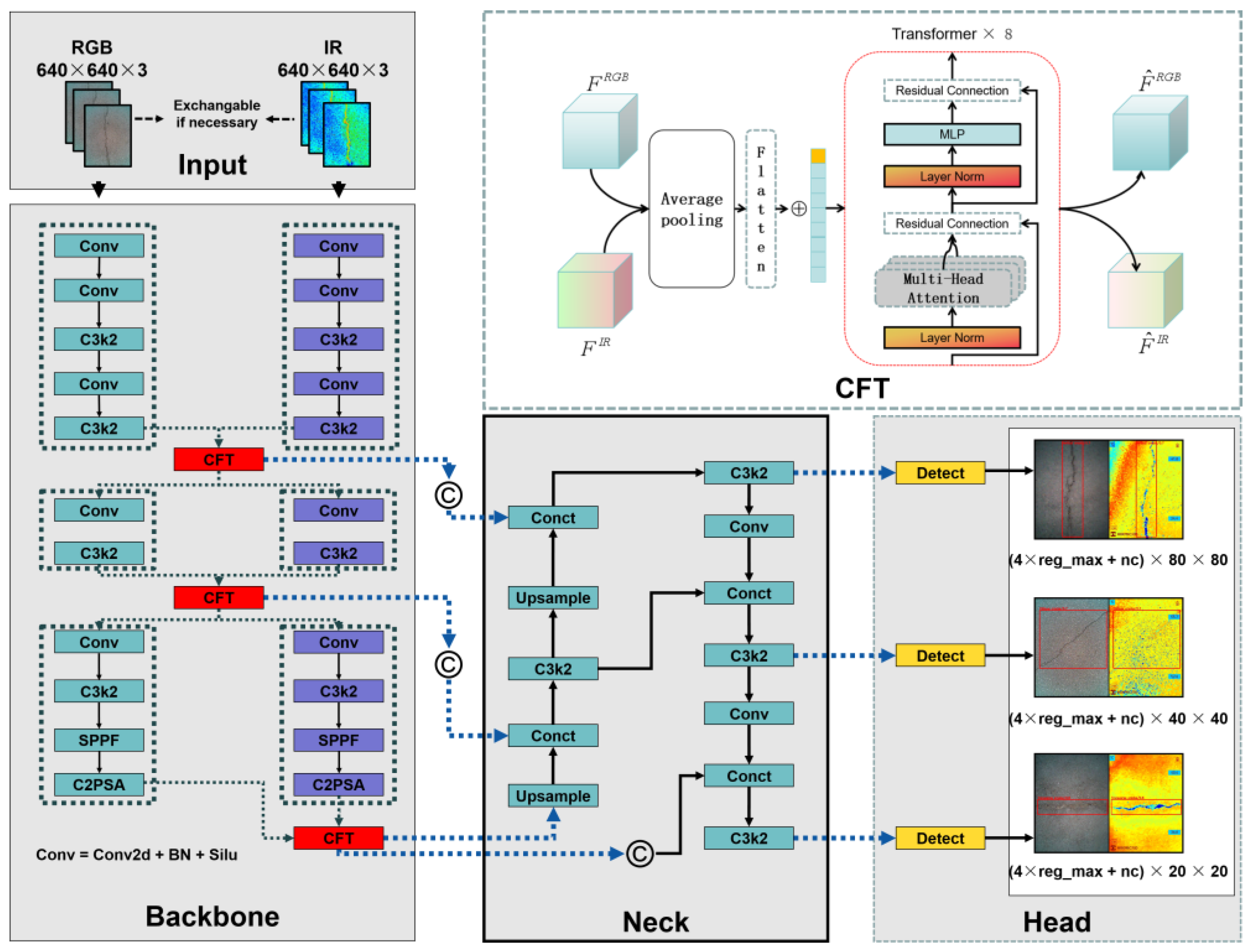

4]. Motivated by these advances, we propose the Cross-Modality Fusion Transformer (CFT) module. This dual-branch structure concurrently models intra- and inter-modal feature relationships via self-attention, enabling deep fusion and semantic alignment to significantly enhance crack perception in complex scenes. Integrated into YOLOv5 for infrared–visible detection, CFT substantially outperforms conventional fusion methods across multiple metrics, particularly in weak-light scenarios [

35].
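The joint self-attention idea behind CFT can be illustrated with a minimal, dependency-free sketch: RGB and IR feature tokens are concatenated into one sequence, attended over jointly, split back, and added residually. It assumes a single attention head, random placeholder projection weights (`wq`, `wk`, `wv`), and tiny hypothetical token counts; the CFT modules described in this paper also involve multi-head attention and feature-update layers that are not reproduced here, so this illustrates the mechanism rather than the authors' implementation.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


def cross_modal_fusion(rgb_tokens, ir_tokens, wq, wk, wv):
    """Attend over the concatenated RGB + IR sequence, then split and add residually.

    Every token attends to every other token, so the attention matrix contains
    intra-modal blocks (RGB->RGB, IR->IR) and inter-modal blocks (RGB->IR, IR->RGB).
    """
    n_rgb = len(rgb_tokens)
    attended, weights = self_attention(rgb_tokens + ir_tokens, wq, wk, wv)
    rgb_out = [[a + b for a, b in zip(x, y)] for x, y in zip(rgb_tokens, attended[:n_rgb])]
    ir_out = [[a + b for a, b in zip(x, y)] for x, y in zip(ir_tokens, attended[n_rgb:])]
    return rgb_out, ir_out, weights


def rand_matrix(rows, cols, rng):
    """Random placeholder matrix (hypothetical weights/features)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Hypothetical sizes: 3 flattened spatial positions per modality, 4 channels each.
rng = random.Random(0)
channels = 4
rgb = rand_matrix(3, channels, rng)
ir = rand_matrix(3, channels, rng)
wq, wk, wv = (rand_matrix(channels, channels, rng) for _ in range(3))
rgb_fused, ir_fused, attn = cross_modal_fusion(rgb, ir, wq, wk, wv)
print(len(attn), len(attn[0]))  # 6 6: joint attention over all RGB and IR tokens
```

Concatenating the two token sequences before one self-attention step is what lets a single operation cover both intra- and inter-modal relations; a pure cross-attention design would instead draw queries from one modality and keys/values from the other.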

Building on this foundation, we present a dual-modal road crack detection method built on the stable YOLOv11 release with embedded CFT modules. Our approach uses dual-branch infrared–visible feature extraction and places CFT within the backbone for dynamic cross-modal interaction. To validate its performance, we compiled a dual-modal road crack dataset covering weak- and no-light conditions and conducted comparative experiments and visualization analyses.

3. Results

3.1. Uni-Modal Experimental Results under Three Lighting Scenarios

To systematically analyze how lighting conditions affect unimodal visible-light object detection, this study designs twelve experiments that combine four models (YOLOv5-RGB, YOLOv8-RGB, YOLOv11-RGB, and YOLOv13-RGB) with three lighting scenarios (natural light, weak light, and no light). A comprehensive comparison of performance metrics reveals how lighting variation influences crack detection results, thereby validating the need to introduce the infrared modality.

Under natural light, with sufficient daytime illumination, all four models performed strongly in crack detection and achieved their best results of the three scenarios, as illustrated in Figure 4. YOLOv11-RGB performed stably, achieving a mean Average Precision (mAP) of 92.2% at an IoU threshold of 0.5 (mAP@0.5), precision of 94.8%, recall of 89.2%, and an mAP of 56.0% averaged over IoU thresholds from 0.5 to 0.95 (mAP@0.5:0.95). As the baseline for our enhanced model, YOLOv11-RGB provides a reliable reference point for the subsequent dual-modal fusion models. In comparison, YOLOv5-RGB and YOLOv8-RGB attained mAP@0.5 of 93.7% and 94.2% and mAP@0.5:0.95 of 66.5% and 63.7%, respectively, slightly surpassing YOLOv11-RGB in mAP@0.5 and more clearly in mAP@0.5:0.95. YOLOv13-RGB was also competitive, with an mAP@0.5 of 93.9%, mAP@0.5:0.95 of 63.6%, precision of 94.0%, and recall of 91.0%. Nevertheless, recall and overall robustness differed only minimally across the models. Collectively, mainstream unimodal models provide high detection accuracy under ideal lighting, particularly when crack textures are clearly defined.
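Because mAP@0.5:0.95 averages over ten increasingly strict IoU thresholds, thin cracks are penalized heavily for small localization errors, which helps explain the large gap between the two mAP figures above. A minimal sketch of box IoU and the threshold averaging follows; the box coordinates and per-threshold AP values are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds 0.50, 0.55, ..., 0.95 used by mAP@0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_by_threshold):
    """Average the AP (or mAP) values obtained at each of the ten IoU thresholds."""
    return sum(ap_by_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


# Hypothetical thin crack: ground truth 100 x 5 px, prediction shifted down by 1 px.
gt, pred = (0, 0, 100, 5), (0, 1, 100, 6)
iou = box_iou(gt, pred)  # 400 / 600
passed = sum(iou >= t for t in IOU_THRESHOLDS)
print(round(iou, 3), passed)  # 0.667 4: a match at only 4 of the 10 thresholds

# Hypothetical per-threshold AP values that fall as the threshold tightens.
ap_values = {t: 0.92 - 0.07 * i for i, t in enumerate(IOU_THRESHOLDS)}
print(round(map_50_95(ap_values), 3))  # 0.605
```

A one-pixel offset on a five-pixel-tall box is negligible at IoU 0.5 but fails six of the ten thresholds, so narrow, elongated targets such as cracks tend to score far lower on mAP@0.5:0.95 than on mAP@0.5.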

Under weak light, some visible-light information remains and crack textures are still discernible, but detection performance declines because the overall reduction in light intensity lowers image contrast and detail clarity. For instance, the mAP@0.5 of YOLOv5-RGB decreased from 93.7% to 90.3%, while its mAP@0.5:0.95 fell from 66.5% to 51.4%. Similarly, the mAP@0.5:0.95 of YOLOv11-RGB decreased by 14.3 percentage points. YOLOv13-RGB also degraded, with mAP@0.5 dropping to 83.5%, mAP@0.5:0.95 to 42.7%, precision to 88.6%, and recall to 76.6%. Although this degradation is less severe than in no-light environments, the findings indicate that reduced light intensity significantly impairs the models’ perceptual capabilities: image information under weak light is insufficient to support detection performance comparable to that under normal lighting. Conventional visible-light models therefore show some adaptability in low-light scenarios, but their detection efficacy remains constrained by light levels, making robust and stable recognition difficult to achieve.

Under no-light conditions, models that rely solely on visible light collapse severely. All four models show significant declines: the mAP@0.5 of YOLOv5-RGB drops to 77.6% (from 90.3% under weak light), with an mAP@0.5:0.95 of 40.4%; YOLOv8-RGB achieves 70.4% and 36.1% for mAP@0.5 and mAP@0.5:0.95, respectively; and YOLOv11-RGB declines to 67.5% and 33.3%. YOLOv13-RGB performed similarly poorly, with an mAP@0.5 of 60.6%, mAP@0.5:0.95 of 27.8%, precision of 60.1%, and recall of 59.0%. This collapse confirms that, in darkness, visible images retain almost no texture or structural information; the resulting feature deficiency substantially reduces detection accuracy and recall while increasing missed and false detections. Overall, the twelve experiments reveal a stratified effect of illumination: stable, high-accuracy detection under natural light; moderate degradation with basic recognition under weak light; and severe failure under no light, exposing a fundamental limitation of unimodal input.
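The absolute and relative size of these illumination-induced drops can be computed directly from the reported values. The sketch below uses only numbers stated in this section (YOLOv5-RGB and YOLOv13-RGB under natural, weak, and no light); the helper name `degradation` is illustrative.

```python
# mAP values (%) reported in this section, ordered: natural, weak, no light.
REPORTED = {
    "YOLOv5-RGB": {"mAP@0.5": (93.7, 90.3, 77.6), "mAP@0.5:0.95": (66.5, 51.4, 40.4)},
    "YOLOv13-RGB": {"mAP@0.5": (93.9, 83.5, 60.6), "mAP@0.5:0.95": (63.6, 42.7, 27.8)},
}


def degradation(values):
    """Drop from natural light for each darker scenario.

    Returns (absolute drop in percentage points, relative drop in percent) pairs.
    """
    base = values[0]
    return [(round(base - v, 1), round(100 * (base - v) / base, 1)) for v in values[1:]]


for model, metrics in REPORTED.items():
    for metric, values in metrics.items():
        print(model, metric, degradation(values))
# YOLOv5-RGB mAP@0.5 [(3.4, 3.6), (16.1, 17.2)]
# YOLOv5-RGB mAP@0.5:0.95 [(15.1, 22.7), (26.1, 39.2)]
# YOLOv13-RGB mAP@0.5 [(10.4, 11.1), (33.3, 35.5)]
# YOLOv13-RGB mAP@0.5:0.95 [(20.9, 32.9), (35.8, 56.3)]
```

In both models and both darker scenarios, the relative loss in mAP@0.5:0.95 exceeds that in mAP@0.5, consistent with the view that reduced illumination hurts precise localization more than coarse detection.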

3.2. Comparative Experiments of Our Dual-Modal Model with Uni-Modal and Dual-Modal Baselines

Building on the performance analysis of the twelve unimodal experiments under diverse lighting conditions, this study extends the comparison to dual-modal fusion methods in weak-light and no-light environments. Beyond unimodal baselines, our benchmark includes three representative state-of-the-art approaches: (1) the YOLOv11-RGBT framework (Wan et al. [46]), implemented with three lightweight variants (YOLOv8n, YOLOv10n, and YOLOv11n) that all fuse RGB and IR inputs at the mid-level P3 feature stage; (2) the YOLOv5-CFT method (Qingyun et al. [47]), deployed at multiple capacity scales (s, m, l) with embedded Cross-Modality Fusion Transformer modules to enhance global feature interaction; and (3) the recently proposed C²Former model (Yuan and Wei [48]), which introduces calibrated and complementary cross-attention mechanisms to mitigate modality misalignment and imprecise fusion. This multi-path evaluation enables a fair and systematic comparison across heterogeneous strategies and provides insight into how fusion design, architectural configuration, and computational efficiency affect dual-modal detection.

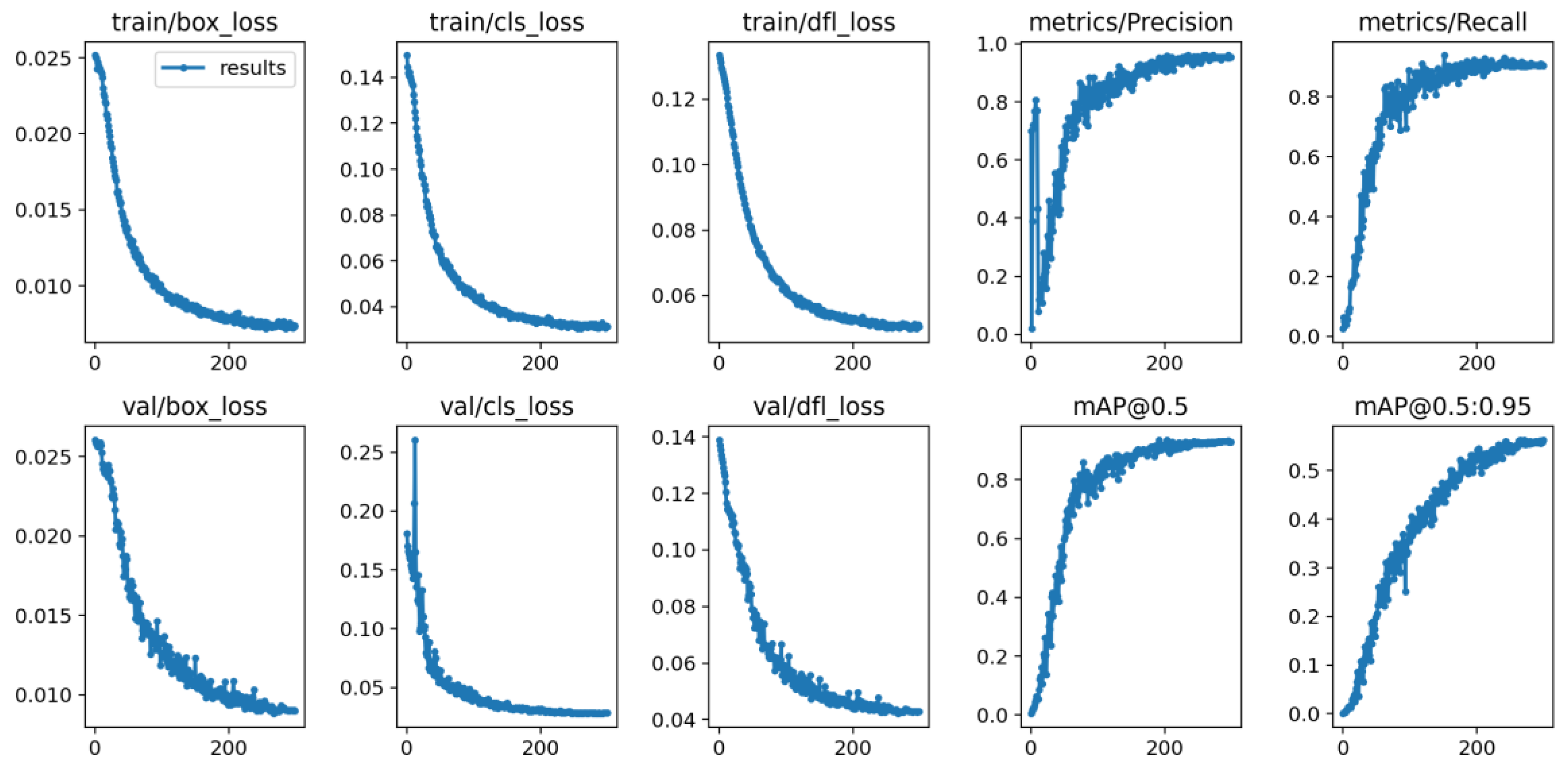

The experimental results confirm that the proposed YOLOv11-DCFNet (ours-n) achieves the most favorable balance between accuracy and efficiency while remaining robust under degraded lighting. As shown in Table 3, YOLOv11-DCFNet attains a precision of 95.3%, recall of 90.5%, mAP@0.5 of 92.9%, and mAP@0.5:0.95 of 56.3%. It substantially surpasses the YOLOv5-CFT variants (at most 74.4% mAP@0.5 and 32.0% mAP@0.5:0.95) and outperforms the YOLOv11-RGBT family, whose best-performing variant, YOLOv11(n), reaches only 89.4% mAP@0.5 with a lower recall (82.9%). C²Former achieves a higher recall (95.6%) and a competitive mAP@0.5:0.95 (54.6%), but its training cost is prohibitively high (18.6 h, nearly 10× that of DCFNet). YOLOv11-DCFNet therefore offers a superior trade-off between accuracy and efficiency, delivering greater stability and real-world deployability in extreme low-illumination scenarios.

3.3. Ablation Experiments

To systematically evaluate dual-modal fusion strategies for road crack detection in weak- and no-light environments, this study constructs five progressively structured ablation models on the YOLOv11 backbone, one per fusion strategy, as listed in Table 4. The baseline model, YOLOv11-RGB, uses only RGB inputs. YOLOv11-EF implements early fusion through element-wise summation at P3, P4, and P5, while YOLOv11-ADD applies weighted fusion at the same positions. YOLOv11-T integrates three CFT modules, which combine multi-head attention and feature updates, at the P2, P3, and P4 scales with minimal changes to the backbone. In contrast, YOLOv11-DCFNet reconfigures the backbone with deeply integrated CFT modules at P3, P4, and P5 and establishes cross-stage propagation. The progression thus evolves from arithmetic feature fusion to architecturally embedded synergy.
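The simpler fusion operators named in this ablation (channel concatenation, element-wise summation, and weighted fusion) can be sketched on tiny feature maps represented as lists of channels. The exact weighting used by YOLOv11-ADD is not specified in the text, so the weighted variant below assumes BiFPN-style fast normalized fusion with non-negative scalar weights; all feature values are hypothetical.

```python
def concat_fusion(rgb, ir):
    """Channel concatenation: the output has C_rgb + C_ir channels."""
    return [list(c) for c in rgb] + [list(c) for c in ir]


def sum_fusion(rgb, ir):
    """Element-wise summation of two feature maps with identical shapes."""
    if len(rgb) != len(ir):
        raise ValueError("channel counts must match")
    return [[a + b for a, b in zip(cr, ci)] for cr, ci in zip(rgb, ir)]


def weighted_fusion(rgb, ir, w_rgb, w_ir, eps=1e-4):
    """Fast normalized fusion (assumed): clamp weights to >= 0, normalize, blend."""
    w_rgb, w_ir = max(w_rgb, 0.0), max(w_ir, 0.0)
    total = w_rgb + w_ir + eps
    return [[(w_rgb * a + w_ir * b) / total for a, b in zip(cr, ci)]
            for cr, ci in zip(rgb, ir)]


# Hypothetical 2-channel feature maps, each channel flattened to 2 pixels.
rgb_feat = [[1.0, 2.0], [3.0, 4.0]]
ir_feat = [[10.0, 20.0], [30.0, 40.0]]
print(len(concat_fusion(rgb_feat, ir_feat)))  # 4 channels
print(sum_fusion(rgb_feat, ir_feat))  # [[11.0, 22.0], [33.0, 44.0]]
print(weighted_fusion(rgb_feat, ir_feat, 1.0, 3.0, eps=0.0))  # [[7.75, 15.5], [23.25, 31.0]]
```

None of these operators models dependencies between spatial positions: each output value depends only on the inputs at the same location, which is the limitation that attention-based fusion such as CFT is meant to address.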

The results in Table 4 show that YOLOv11-RGB hits a significant performance bottleneck in low-light conditions, with a precision of 76.2%, recall of 72.3%, and mAP@0.5:0.95 of only 38.0%. This limitation underscores the difficulty of detecting cracks from unimodal input under reduced illumination. In contrast, YOLOv11-EF performs early fusion by combining RGB and infrared (IR) images at the input stage, which substantially improves precision (85.7%) and recall (85.1%). Nevertheless, its mAP@0.5:0.95 declines slightly to 36.3%, suggesting that simple channel concatenation helps detect targets but remains inadequate for precise localization and complex semantic interpretation.

The YOLOv11-ADD model achieves a better balance between computational overhead and semantic interaction through element-wise additive fusion of mid-level RGB and IR features, which improves the quality of the inter-modal representation. Its precision rises to 90.1%, its mAP@0.5 of 84.4% remains comparable to that of YOLOv11-EF, and its mAP@0.5:0.95 increases slightly to 36.9%, indicating stronger fusion capability. The YOLOv11-T model uses a two-branch input structure that extracts RGB and IR features separately and incorporates three CFT modules at the P2, P3, and P4 scales, using multi-head attention to promote deeper semantic interaction between modalities. Its mAP@0.5:0.95 of 35.2% is slightly lower than those of the ADD (36.9%) and EF (36.3%) models, indicating that inserting Transformer-based fusion at a few shallow positions does not by itself translate richer semantic interaction into better localization.

YOLOv11-DCFNet, the primary improved model in this study, differs from the architectures above, which limit fusion to the input level (YOLOv11-EF), mid-level weighting (YOLOv11-ADD), or shallow attention guidance (YOLOv11-T). Instead, it adopts a dual-branch structure with CFT modules deeply embedded at P3, P4, and P5 and introduces cross-stage propagation. This design strengthens inter-modal interaction and feature complementarity and yields the best performance of all models: a precision of 95.3%, recall of 90.5%, mAP@0.5 of 92.9%, and mAP@0.5:0.95 of 56.3%, corresponding to improvements of 19.1, 18.2, 16.3, and 18.3 percentage points, respectively, over the baseline. These findings show that infrared–visible fusion under weak- or no-light conditions significantly improves detection accuracy and robustness, validating the effectiveness of the proposed method.
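Gains between two percentages can be stated either in percentage points or as relative percentages, and the two differ substantially here. The sketch below recomputes both from the precision, recall, and mAP@0.5:0.95 values reported for YOLOv11-RGB and YOLOv11-DCFNet in this section; the baseline mAP@0.5 is not stated explicitly in the text, so that metric is left out. The helper name `gains` is illustrative.

```python
# Values (%) reported for the ablation baseline and the proposed model.
BASELINE = {"Precision": 76.2, "Recall": 72.3, "mAP@0.5:0.95": 38.0}  # YOLOv11-RGB
DCFNET = {"Precision": 95.3, "Recall": 90.5, "mAP@0.5:0.95": 56.3}  # YOLOv11-DCFNet


def gains(base, new):
    """Per metric: (absolute gain in percentage points, relative gain in percent)."""
    return {
        k: (round(new[k] - base[k], 1), round(100 * (new[k] - base[k]) / base[k], 1))
        for k in base
    }


print(gains(BASELINE, DCFNET))
# {'Precision': (19.1, 25.1), 'Recall': (18.2, 25.2), 'mAP@0.5:0.95': (18.3, 48.2)}
```

Because the baseline mAP@0.5:0.95 is low, its relative gain (about 48%) is much larger than its percentage-point gain, so the unit should always be stated when reporting improvements.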

To assess how module combinations affect complexity and efficiency, we evaluate the number of parameters (Params), computational load (GFLOPs), and inference speed (FPS) of the five models, as shown in Table 5. The single-branch YOLOv11-RGB baseline is the most efficient, with the fewest parameters (2.62 M), the lowest computation (3.31 GFLOPs), and the highest frame rate (114.79 FPS), reflecting its lightweight design. However, without dual-modal fusion, its feature representation in complex scenes is limited.

YOLOv11-EF, which implements early fusion through element-wise summation at P3, P4, and P5, increases the parameter count to 3.99 M and computation to 4.87 GFLOPs (Table 5) while maintaining 82.61 FPS, confirming that the additional information is obtained efficiently. YOLOv11-ADD, which applies weighted fusion at the same positions (P3, P4, P5) within a dual-branch structure, has nearly identical complexity (3.96 M parameters and 4.78 GFLOPs) and a higher speed of 88.22 FPS. Conversely, YOLOv11-T incorporates three CFT modules at the P2, P3, and P4 scales, which strengthens inter-modal semantic interaction but increases parameters to 13.95 M and computation to 6.13 GFLOPs, reducing speed to 37.34 FPS, only slightly above the 30 FPS real-time threshold. This result reveals scalability constraints for resource-limited deployment.

Finally, YOLOv11-DCFNet reconfigures the backbone with deeply integrated CFT modules at stages P3, P4, and P5 and establishes cross-stage propagation. Compared with YOLOv11-T, it reduces parameters to 10.36 M and computation to 5.49 GFLOPs while increasing inference speed by 21% to 45.30 FPS, showing that fusion depth and computation can be controlled effectively. YOLOv11-DCFNet thus strikes a favorable balance among dual-modal feature enhancement, detection precision, and deployable inference speed, supporting its practical viability for real-world applications.
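The efficiency comparisons above can be reproduced from the Table 5 figures quoted in the text. The sketch converts FPS to per-frame latency and recomputes the changes from YOLOv11-T to YOLOv11-DCFNet; it assumes FPS was measured under identical hardware and batch settings for all models, which the text does not state.

```python
# (Params in M, GFLOPs, FPS) as reported for the five ablation models.
MODELS = {
    "YOLOv11-RGB": (2.62, 3.31, 114.79),
    "YOLOv11-EF": (3.99, 4.87, 82.61),
    "YOLOv11-ADD": (3.96, 4.78, 88.22),
    "YOLOv11-T": (13.95, 6.13, 37.34),
    "YOLOv11-DCFNet": (10.36, 5.49, 45.30),
}
REAL_TIME_FPS = 30.0  # real-time threshold used in the text


def latency_ms(fps):
    """Per-frame latency implied by a throughput figure."""
    return 1000.0 / fps


def relative_change(old, new):
    """Signed change from old to new, in percent."""
    return 100.0 * (new - old) / old


t_params, t_flops, t_fps = MODELS["YOLOv11-T"]
d_params, d_flops, d_fps = MODELS["YOLOv11-DCFNet"]
print(f"params {relative_change(t_params, d_params):+.1f}%")  # params -25.7%
print(f"GFLOPs {relative_change(t_flops, d_flops):+.1f}%")  # GFLOPs -10.4%
print(f"FPS    {relative_change(t_fps, d_fps):+.1f}%")  # FPS    +21.3%
for name, (_, _, fps) in MODELS.items():
    print(f"{name:15s} {latency_ms(fps):6.2f} ms  real-time: {fps >= REAL_TIME_FPS}")
```

The recomputed speed gain (about 21.3%) matches the 21% quoted above; at 45.30 FPS each frame takes roughly 22 ms, within the 33.3 ms budget implied by 30 FPS.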

3.4. Visual Interpretability Experiment

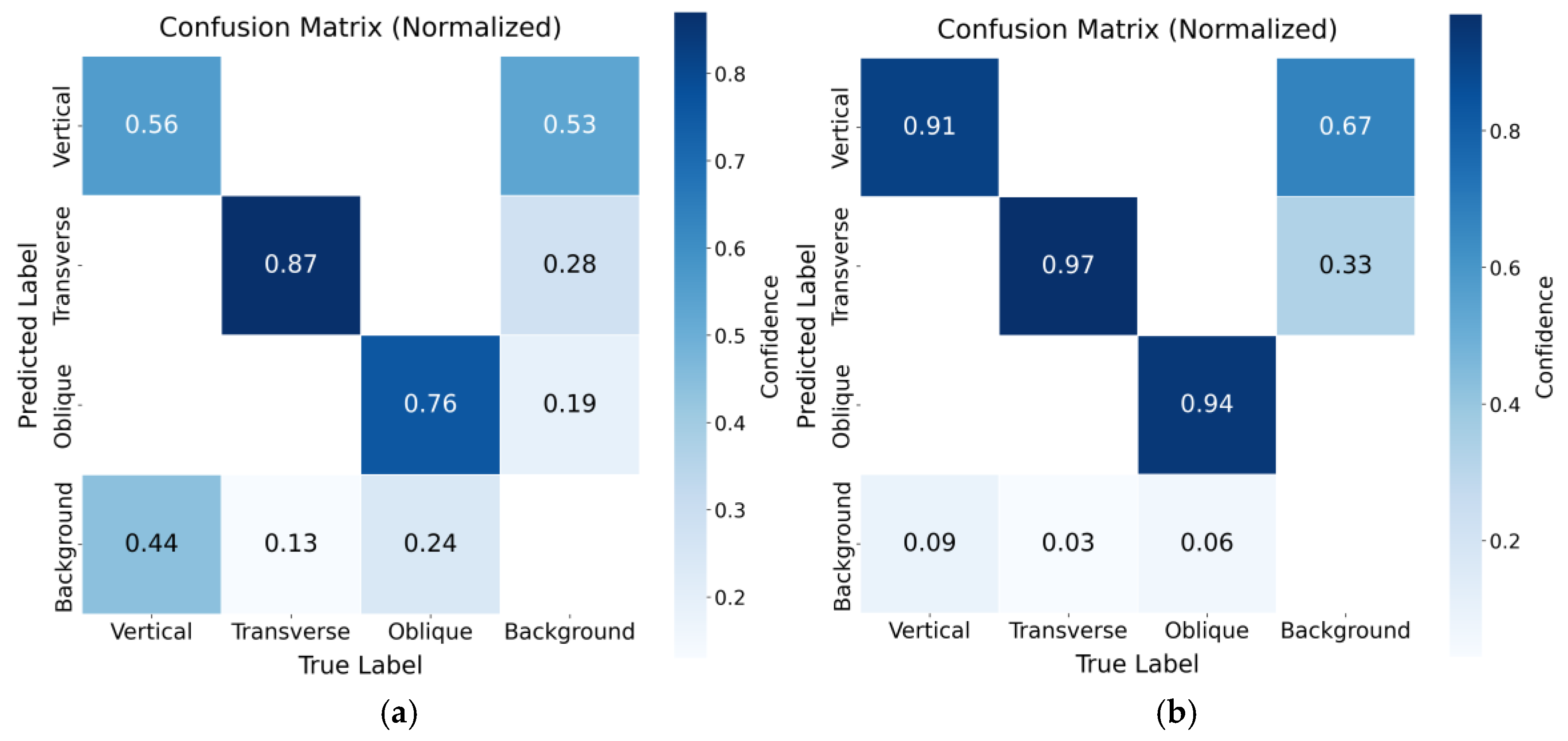

Deep learning models are often regarded as black boxes: despite strong performance on many tasks, their decisions are hard to interpret. Interpretability is particularly important in safety-critical domains such as autonomous driving. We examined the behavior of YOLOv11-RGB (unimodal) and YOLOv11-DCFNet (bimodal, with CFT modules) using confusion matrices (see Figure 5). The unimodal model (Figure 5a) shows low diagonal values and substantial off-diagonal confusion (e.g., 0.56 confusion between ‘Vertical cracks’ and ‘Background’), indicating significant misclassification, particularly between crack types and the background. In contrast, the bimodal model (Figure 5b) shows stronger diagonal dominance (0.89 for Vertical, 0.97 for Transverse, and 0.94 for Oblique cracks), with substantially less cross-category confusion and fewer background false alarms. This suggests that infrared thermography compensates for the loss of RGB detail in low-light or complex environments, while the CFT module improves cross-modal context modeling, yielding clearer target representations and more robust detection.
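Diagonal entries such as 0.89 or 0.97 are per-class rates obtained by normalizing raw counts. The sketch below normalizes a confusion matrix by true-class rows; the counts are hypothetical, chosen only so that the crack-class diagonal matches the rates quoted for the bimodal model, and some plotting tools transpose this layout (true class on columns).

```python
CLASSES = ["Vertical", "Transverse", "Oblique", "Background"]


def normalize_rows(counts):
    """Divide each row (one true class) by its total, giving per-class rates."""
    rates = []
    for row in counts:
        total = sum(row)
        rates.append([c / total if total else 0.0 for c in row])
    return rates


# Hypothetical counts, not the paper's data: rows = true class, columns = predicted.
# The last column counts crack instances missed (predicted as background); the last
# row counts background regions falsely detected as cracks.
counts = [
    [89, 3, 2, 6],
    [1, 97, 1, 1],
    [2, 2, 94, 2],
    [5, 2, 3, 0],
]
rates = normalize_rows(counts)
for name, row in zip(CLASSES, rates):
    print(f"{name:10s}", " ".join(f"{r:.2f}" for r in row))
```

Row normalization makes each diagonal entry the recall of that class, so a strong diagonal means few cracks are missed or mislabeled, independent of how many instances each class has.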

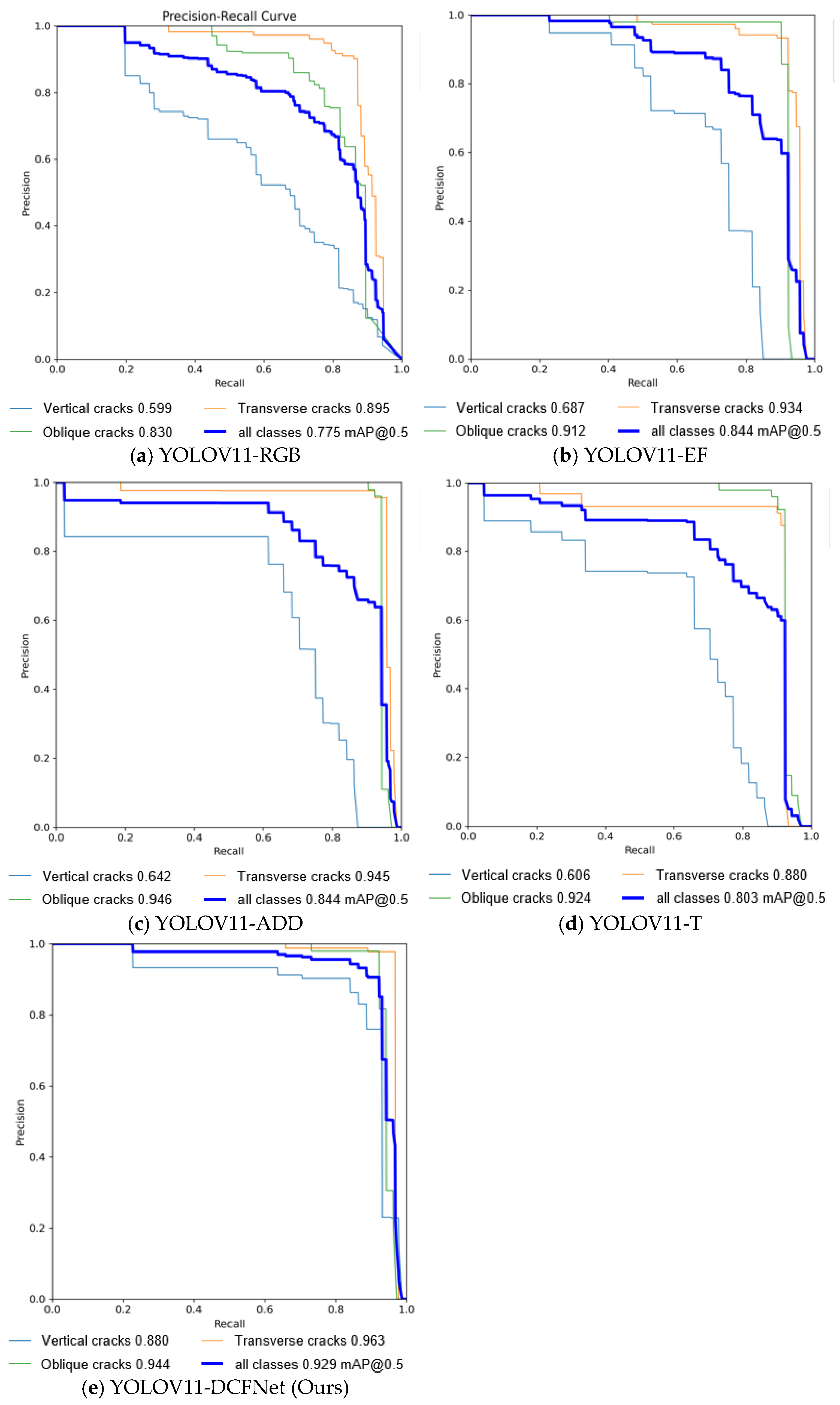

The Precision–Recall (PR) curves (see Figure 6) also reveal significant differences among the models. The baseline YOLOv11-RGB shows a marked drop in precision at recall values above 0.6, particularly for vertical cracks. In contrast, the curves of YOLOv11-EF and YOLOv11-ADD are shifted upward, indicating higher precision at medium-to-high recall (0.6–0.9) and supporting the effectiveness of early fusion and feature weighting. Specifically, YOLOv11-ADD performs especially well on transverse and oblique cracks, while YOLOv11-EF improves recall for vertical cracks. Although YOLOv11-T slightly surpasses the baseline at low-to-medium recall, its curve fluctuates and declines sharply beyond a recall of 0.8, suggesting instability caused by local module replacement. YOLOv11-DCFNet outperforms all other models, maintaining near-perfect precision up to a recall of about 0.9 before declining gradually, and performs best across all crack types, indicating that the CFT module enables effective detection through cross-modal and cross-layer fusion. Overall, DCFNet leads, EF and ADD provide substantial improvements with only modest added complexity, YOLOv11-T offers limited gains, and the baseline degrades most at high recall. These findings confirm the efficacy of YOLOv11-DCFNet for detecting fine cracks in low-light conditions.
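Each PR curve summarizes into an average precision (AP) value, the area under its precision envelope. The sketch below uses all-point interpolation as in PASCAL VOC 2010 and later; COCO-style evaluators instead sample the envelope at 101 recall points, which gives similar values. The two curves are hypothetical shapes mimicking the behaviors described above: one collapsing after recall 0.6 and one holding near-perfect precision until about 0.9.

```python
def average_precision(recalls, precisions):
    """Area under a PR curve using the all-point interpolated precision envelope.

    `recalls` must be ascending, with `precisions` aligned to them.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Envelope: precision at each recall becomes the max precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas over each recall step.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Hypothetical curves, not measured data.
collapsing = average_precision([0.2, 0.4, 0.6, 0.8], [1.0, 1.0, 0.8, 0.5])
sustained = average_precision([0.3, 0.6, 0.9, 0.95], [1.0, 0.99, 0.98, 0.7])
print(round(collapsing, 3), round(sustained, 3))  # 0.66 0.926
```

Because AP integrates over the whole recall range, a curve that stays high until recall 0.9 gains far more area than one that is perfect only at low recall, which is why behavior at high recall dominates the differences discussed above.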

3.5. Visualization Comparison

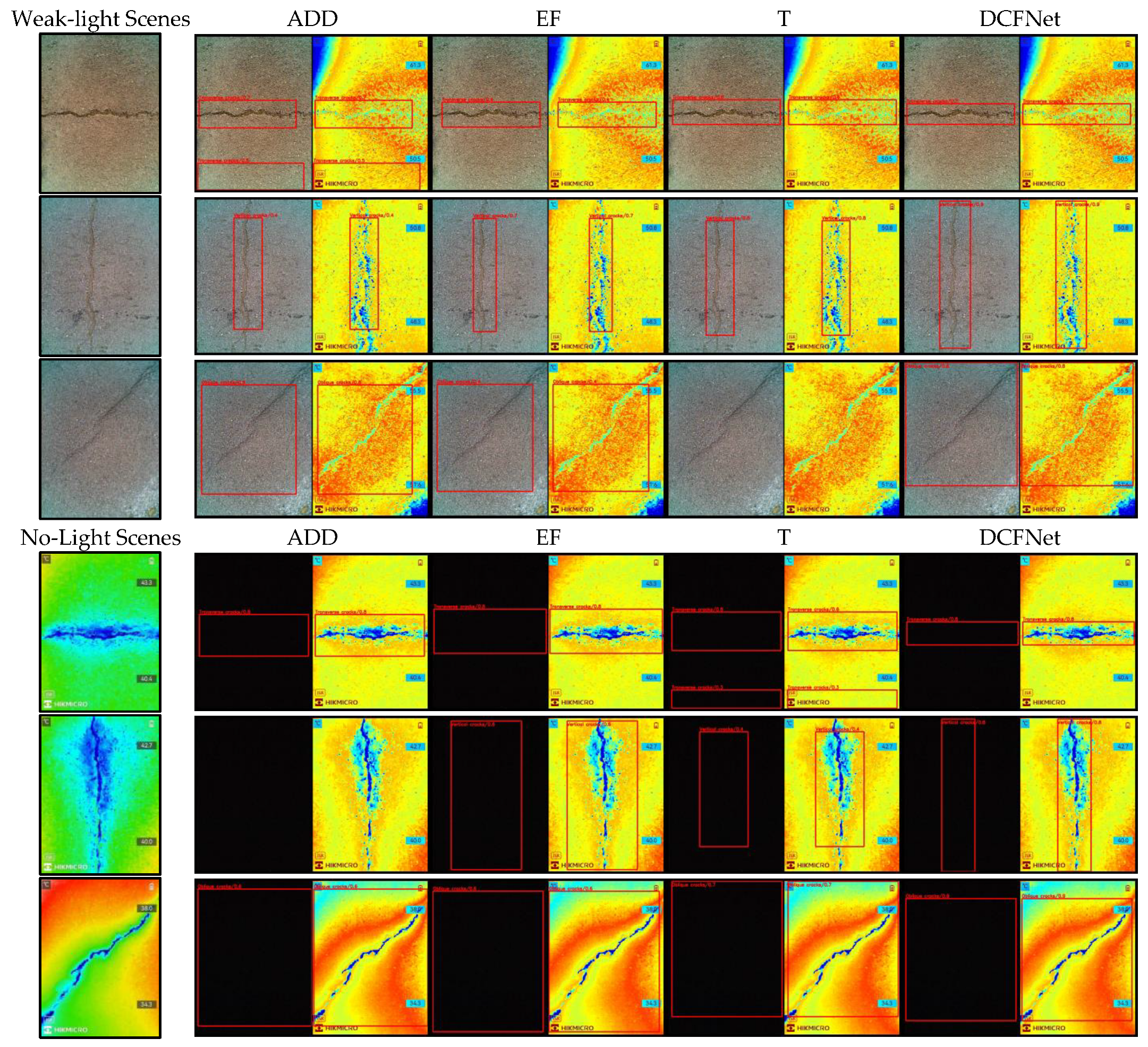

Grad-CAM heatmaps (see Figure 7) provide visual verification of YOLOv11-DCFNet’s detection efficacy. The heatmaps show where the model attends, with darker red indicating regions of higher focus. The original YOLOv11 struggles in low-light or nighttime conditions and is often distracted by road textures or high-contrast non-target areas in complex backgrounds, leading to inaccurate crack localization, blurred boundaries, and missed detections. In contrast, YOLOv11-DCFNet’s attention is more stable. By fusing RGB (texture details) and IR (thermal differences), it produces concentrated responses that precisely highlight crack structures while preserving texture information. This cross-modal fusion significantly improves target localization in low-light and dark environments.
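Grad-CAM weights each channel of a chosen layer by the spatially averaged gradient of the target score and keeps the positive part of the weighted sum. A minimal sketch follows; in practice the activations and gradients come from a forward and backward pass through the detector, whereas here they are small hypothetical arrays, and the layer and detection score used for Figure 7 are not specified in this section.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from one layer's activations A^k and gradients dy/dA^k.

    Both arguments are lists of K channel maps, each an H x W list of lists.
    Returns an H x W map scaled to [0, 1] for display.
    """
    # alpha_k: global-average-pooled gradient of channel k.
    alphas = [sum(map(sum, g)) / (len(g) * len(g[0])) for g in gradients]
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for alpha, a in zip(alphas, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * a[i][j]
    # ReLU keeps only regions whose activation increases the target score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Hypothetical 2-channel, 2 x 3 layer: channel 0 responds to a vertical crack,
# channel 1 to surrounding road texture that lowers the crack score.
acts = [
    [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]],
    [[1.0, 0.0, 1.0], [1.0, 0.0, 1.0]],
]
grads = [
    [[0.5] * 3, [0.5] * 3],
    [[-0.2] * 3, [-0.2] * 3],
]
heatmap = grad_cam(acts, grads)
print(heatmap)  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

The texture channel receives a negative weight, so after the ReLU only the crack column remains in the heatmap, mirroring the concentrated responses described for the fused model.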

To validate the stability of YOLOv11-DCFNet, we compared models with different fusion modules (ADD, EF, T, and DCFNet) under both weak-light and no-light conditions. YOLOv11-DCFNet performs best in all environments, showing superior robustness and generalization in weak- and no-light scenarios (see Figure 8). Under weak light, DCFNet extracts subtle crack textures better than the ADD and EF models. In complete darkness, its CFT modules exploit infrared thermal radiation to compensate for the loss of RGB texture, improving the completeness of crack identification. Overall, YOLOv11-DCFNet maintains stable detection under both lighting extremes, clearly surpassing conventional fusion methods and unimodal models. The approach improves crack detection accuracy and completeness and supports reliable operation in challenging low-visibility environments.

5. Conclusions

In this study, we propose YOLOv11-DCFNet, an infrared–visible dual-modal fusion method for crack detection, to address the performance degradation of conventional RGB-based models in low-light and no-light environments. The core innovation is the Cross-Modality Fusion Transformer (CFT) module, which enables deep interaction between infrared (IR) and visible (RGB) features across multiple layers. By establishing robust correlations between global semantics and local details, YOLOv11-DCFNet compensates for the limitations of unimodal inputs under extreme lighting, enhances the perception of low-contrast crack boundaries and fine structures, and achieves high detection precision and robustness. Through hierarchical feature interaction, the model exploits thermal radiation cues from infrared images and texture details from visible images while suppressing redundant information, improving accuracy and stability while retaining real-time inference speed. Experimental results show that YOLOv11-DCFNet outperforms unimodal detectors and conventional fusion strategies and maintains strong detection integrity under low light, no light, background clutter, and target degradation.

Future research can further improve the utility and generalization of YOLOv11-DCFNet. At the data level, including a wider range of conditions, such as heavy rain, fog, snow reflection, and various road materials, will improve cross-geographic and cross-seasonal adaptability. Preprocessing strategies, including weak-light enhancement, defogging, and heat-source suppression, can mitigate infrared degradation caused by high temperatures or strong thermal interference. At the model level, integrating attention optimization and multi-scale context mechanisms may enhance feature expressiveness while reducing computational overhead, facilitating deployment on mobile and embedded devices. Furthermore, combining active learning and semi-supervised approaches can refine the model adaptively while reducing annotation costs. Although YOLOv11-DCFNet already achieves significant gains, continued innovation in dual-modal detection will support more accurate, robust, and efficient crack monitoring systems for complex real-world environments.