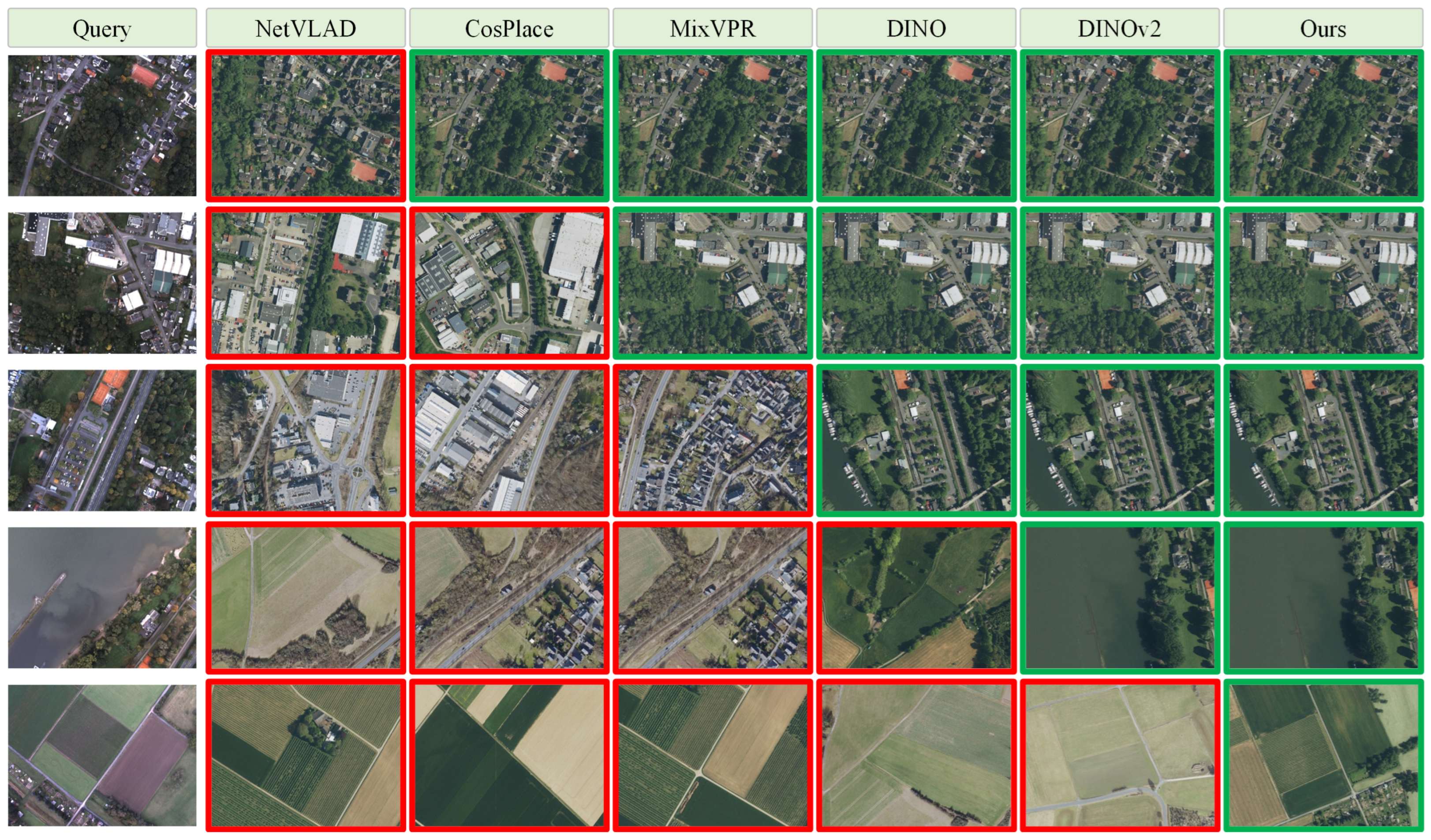

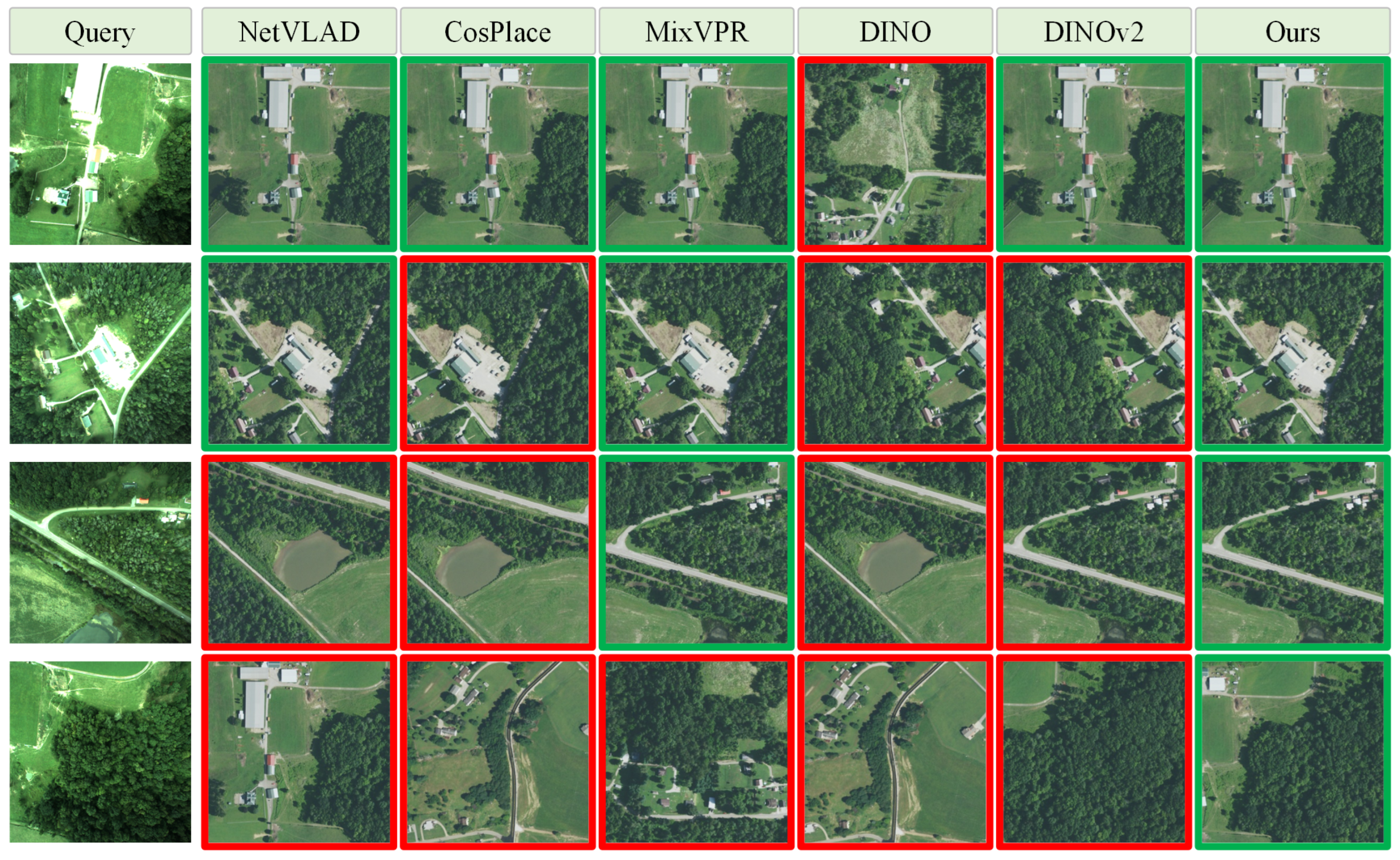

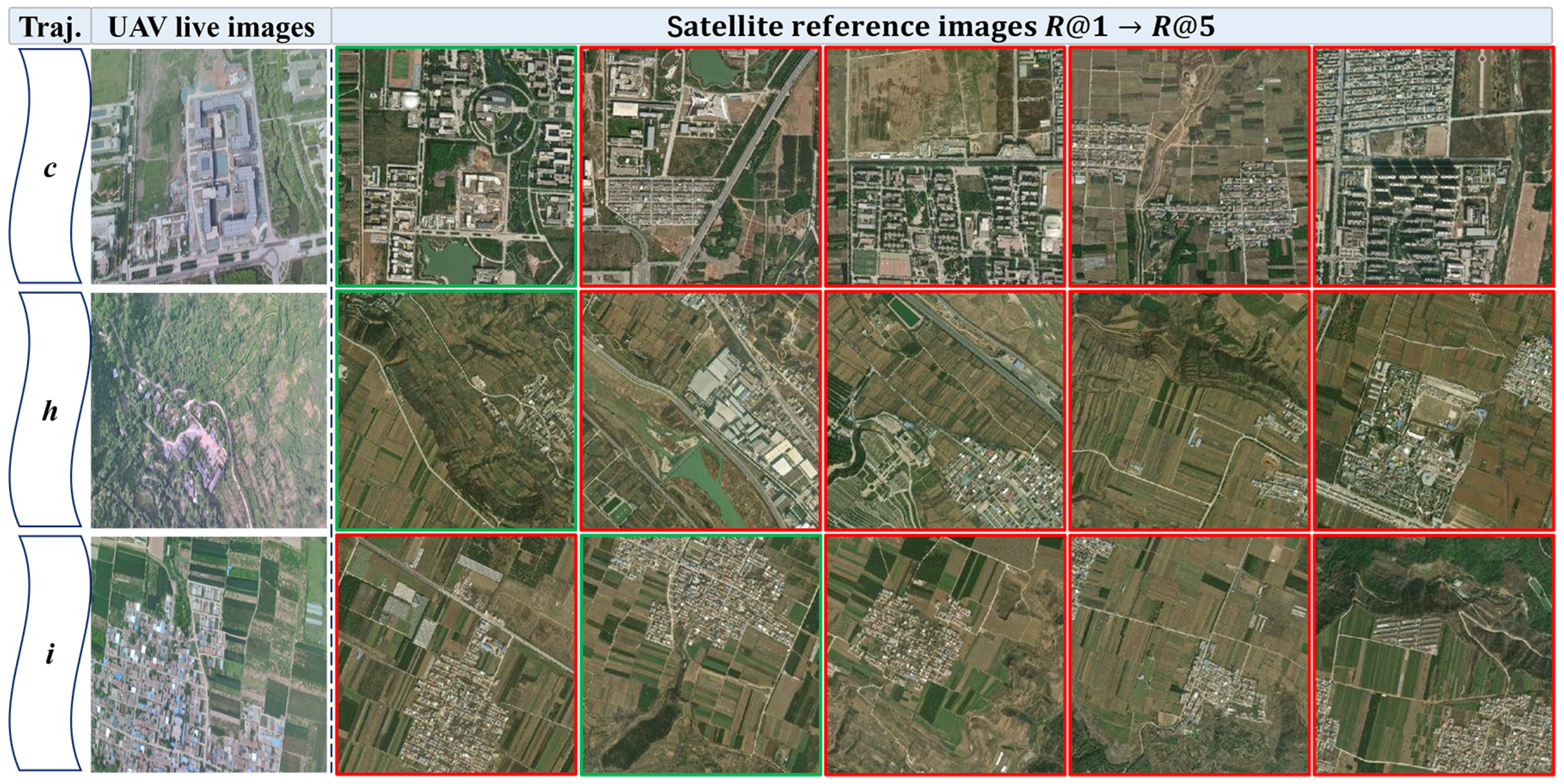

Image retrieval-based localization methods are suitable for rapidly selecting reference images similar to the real-time image in large-scale scenes. However, in continuous frame localization, these methods lack temporal and spatial constraints and thus cannot provide precise positional information. In contrast, image registration-based localization methods can achieve pixel-level accurate alignment, but they require initial position information as a prerequisite—something image retrieval-based methods can conveniently provide.

3.1. Image Retrieval Algorithm Based on Visual Backbone Model

Although image retrieval-based localization methods can quickly retrieve the approximate location of the UAV in large-scale scenes, there are still several issues with these methods.

First, the feature generalization ability is weak. Current supervised learning algorithms have achieved good results on publicly available UAV visual localization datasets. However, UAVs face various complex environmental conditions during actual flight, and models trained on a single dataset often fail to generalize, adapting poorly to complex, open environments. Second, feature representation is difficult because prior knowledge is lacking. The characteristics of the ground environment are influenced by various factors such as lighting, climate, season, and terrain, leading to significant differences in features across different scenes. Additionally, labeled datasets for UAV visual localization are scarce, and the imbalance in the data distribution further complicates the task of feature representation.

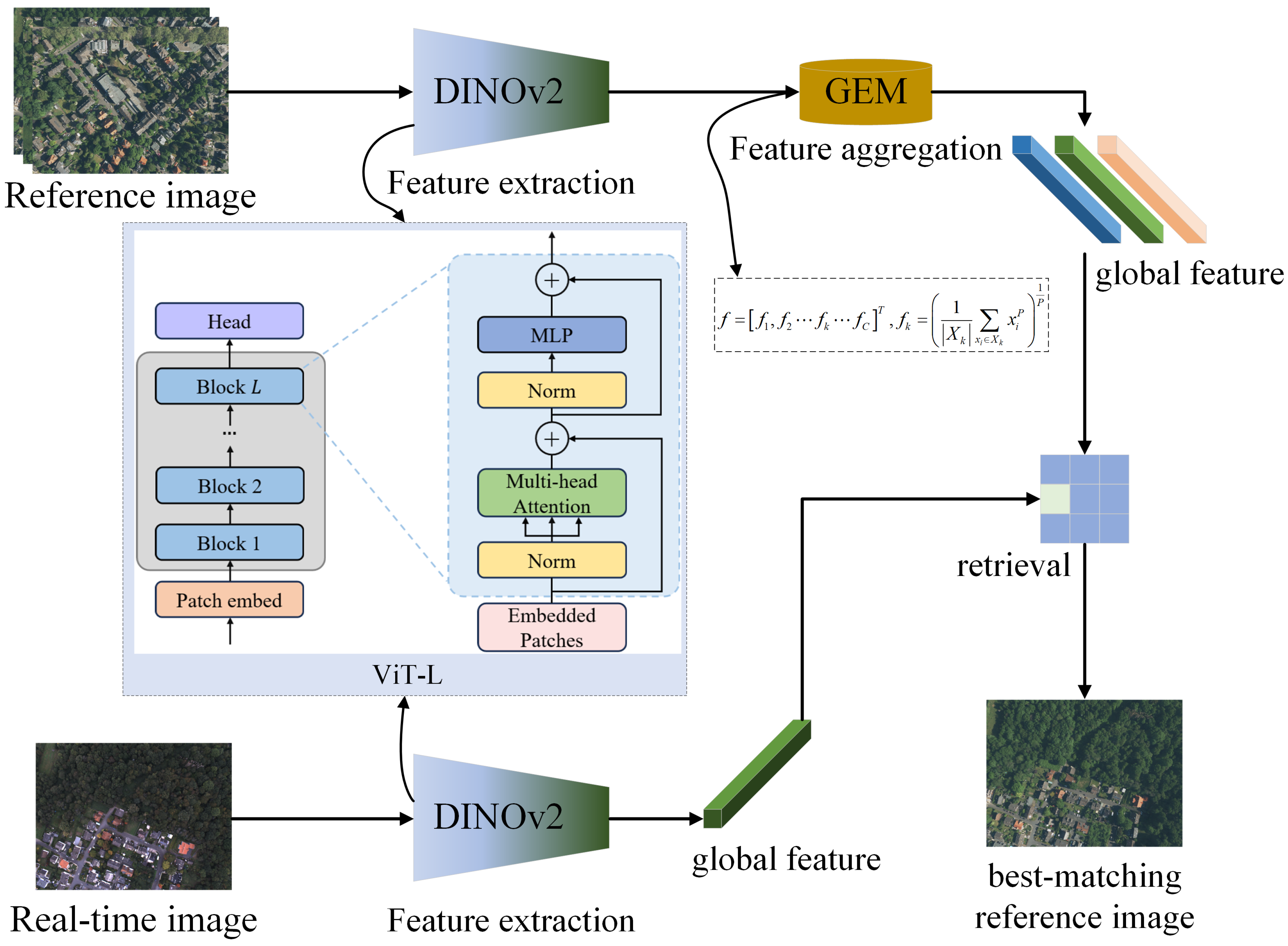

To address these issues, this paper proposes an image retrieval algorithm based on a visual backbone model. The model first extracts generic features using a visual backbone, fully utilizing the spatial information from shallow features and the semantic information from deep features to improve the model’s feature extraction capability. Based on this, a generalized mean pooling method is used to further aggregate the extracted features, enhancing the global feature representation. The overall workflow of the method is shown in Figure 2, consisting mainly of a backbone network and a feature aggregation layer. The backbone network extracts global features from both the real-time image of the UAV and the reference satellite image, while the feature aggregation layer aggregates the extracted features to improve the global expression ability of the feature vectors.

The specific process is divided into two steps.

Offline processing phase: There exists a reference image library. For each image in the library, global features are extracted using the image retrieval model.

Online processing phase: A query image Q is used as a single complete frame and fed directly into the model. Prior to entering the network, the image is uniformly resized to match the input resolution required by the backbone, after which its global feature representation is extracted. The similarity between the feature of Q and the feature of each reference image is then computed, and the best-matching reference image is taken as the retrieval result for the query image Q.
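The two phases can be illustrated with a minimal, dependency-free sketch. It assumes that local features are already available as lists of vectors, that aggregation uses generalized mean (GeM) pooling with an exponent `p`, and that the comparison is cosine similarity (so the best match is the maximum; with a distance measure it would be the minimum). The function names and the default `p = 3` are illustrative assumptions, not values taken from this paper.

```python
import math

def gem_pool(local_features, p=3.0, eps=1e-6):
    """Generalized mean pooling over N local descriptors of C channels each.

    p = 1 reduces to average pooling; large p approaches max pooling.
    """
    n = len(local_features)
    channels = len(local_features[0])
    pooled = []
    for c in range(channels):
        # Clamp to eps so the fractional power is defined for non-positive values.
        mean_pow = sum(max(vec[c], eps) ** p for vec in local_features) / n
        pooled.append(mean_pow ** (1.0 / p))
    return pooled

def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]

def retrieve(query_feature, reference_features):
    """Return (index, scores) of the reference with the highest cosine similarity."""
    q = l2_normalize(query_feature)
    scores = [sum(a * b for a, b in zip(q, l2_normalize(r)))
              for r in reference_features]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores
```

In practice the reference features would be computed once offline and cached, so that only the query feature is extracted at flight time.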

This paper adopts the DINOv2 model as the visual backbone. DINOv2 is based on a Vision Transformer (ViT) architecture and leverages a self-supervised distillation strategy. It has demonstrated strong generalization capabilities in various downstream tasks such as instance retrieval, semantic segmentation, and depth estimation, particularly excelling in zero-shot scenarios. Since training large-scale visual models is extremely costly, this work does not perform additional training on DINOv2 but directly employs its publicly available pre-trained weights. The focus of our study lies in selecting appropriate layers and components from the pre-trained model for feature extraction, and further enhancing retrieval performance through the integration of unsupervised feature aggregation methods. Details regarding the choice of DINOv2, network layers, and feature selection are provided in Section 4.2.1 “Backbone Network Architecture Design”. Furthermore, this work employs unsupervised feature aggregation methods, which construct global image representations by aggregating local features without requiring manual annotations. In Section 4.2.2 “Feature Aggregation Experimental Design”, we compare four aggregation methods (GAP, GMP, GeM, and VLAD) and identify the most effective one for our task.

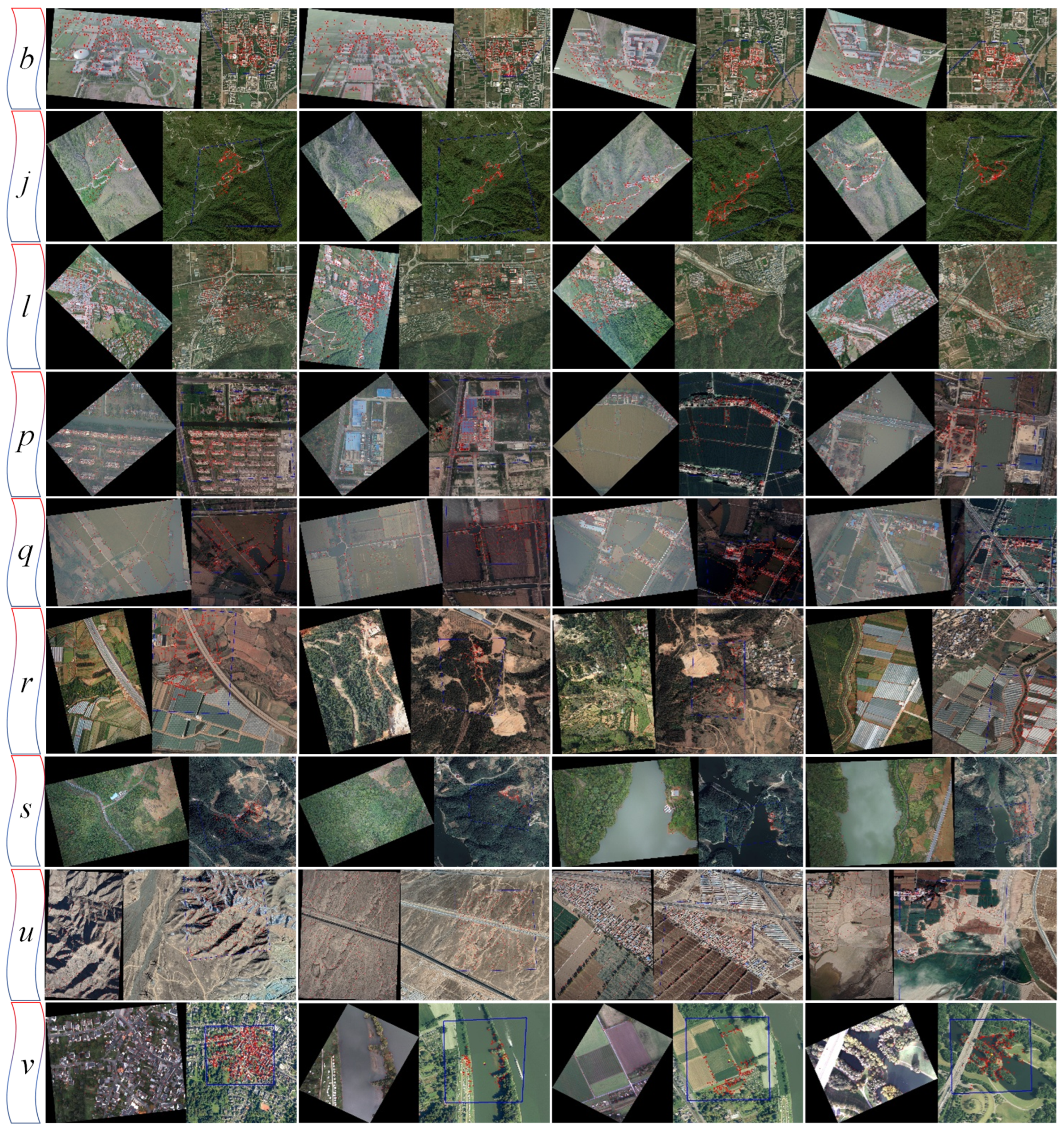

3.2. Image Registration Algorithm Based on Cycle-Consistency Matching

The performance of image registration directly impacts the accuracy of subsequent visual localization. Most existing image registration algorithms are designed for images captured from ground-based platforms. However, in UAV-based visual localization tasks, it is necessary to match real-time UAV-captured images with satellite reference images. The differences between the two image sources are mainly reflected in the following two aspects.

First, scale discrepancy: Due to the significant altitude difference between satellites and UAVs during image acquisition, the captured images exhibit notable scale variations. This imposes a higher demand on the scale-invariance capability of the image registration algorithm. Second, viewpoint discrepancy: Satellite images are typically captured from a near-vertical (approximately 90°) viewpoint, whereas UAV images are captured from oblique angles, adjustable according to specific mission requirements. This necessitates improved viewpoint consistency in the registration algorithm.

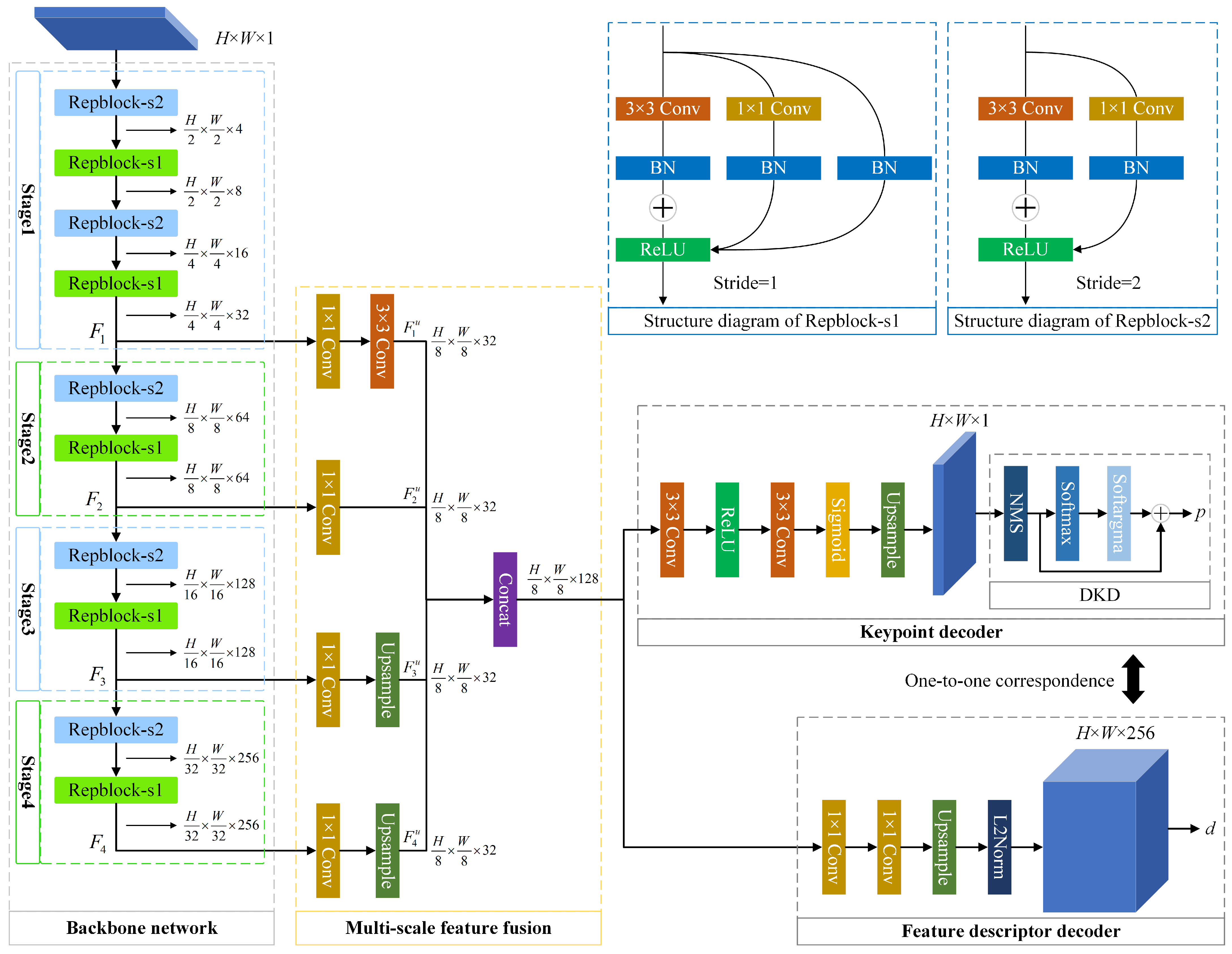

To address these challenges, we propose an image registration algorithm based on cycle-consistency matching. First, we introduce a loss function based on cycle-consistency matching and reprojection error, which optimizes the training process by encouraging the model to learn geometrically and structurally consistent features, thereby improving accuracy. On this basis, we design a multi-scale feature fusion module to enhance the model’s capability to extract features at different scales. Additionally, a structural re-parameterization method is integrated into the image registration algorithm: multiple branches are used during training, while a single branch is employed during inference. This approach improves inference speed while maintaining accuracy. The overall architecture of SuperPoint is modified, with most network parameters shared between the keypoint detection and descriptor extraction tasks, which reduces overfitting through shared feature representations. In the shared encoder for feature extraction, structural re-parameterization is used to accelerate inference, and a feature fusion module integrates features from multiple scales.

In the keypoint decoder, a differentiable keypoint detection module [46] is incorporated, which allows sub-pixel keypoint locations to be directly optimized via backpropagation. For the loss function, we design a training objective based on cycle-consistency matching and reprojection error to further optimize the model training. The overall architecture is illustrated in Figure 3.

3.2.1. Backbone Network

The encoder of SuperPoint adopts a VGG-style [47] architecture, consisting of multiple stacked 3 × 3 convolutional layers. The 3 × 3 convolutions are well supported and highly optimized on both CPUs and GPUs [48], and their theoretical computational density is approximately four times that of other convolution operations [49]. With the emergence of multi-branch architectures such as Inception and ResNet, the accuracy of deep models has been further improved. However, these multi-branch structures inevitably introduce additional computational complexity. To leverage the benefits of multi-branch architectures while retaining the simplicity and efficiency of the VGG network, this work incorporates a structural re-parameterization approach [50], which decouples the training architecture from the inference architecture. Specifically, multiple branches are employed during training to enhance representational capacity, while a single-branch equivalent is used during inference to maintain accuracy with significantly reduced computational overhead.

In the RepVGG framework, two modules are used during training: a stride-1 module and a stride-2 module for downsampling, referred to as Repblock-s1 and Repblock-s2, respectively, as illustrated in Figure 3. After training, a simple algebraic transformation is applied. In this process, the identity mapping branch can be regarded as an equivalent 1 × 1 convolution, which can further be reformulated as a degenerated 3 × 3 convolution. This enables the learned parameters to be consolidated into a single 3 × 3 convolutional layer for inference. The encoder is organized into four stages comprising a total of ten Repblocks, with ReLU serving as the activation function. Each stage outputs one feature map, giving four feature maps at different scales.
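The branch-merging step can be checked numerically with a small sketch. It is deliberately simplified: a single channel, stride 1, and no batch normalization (in RepVGG the batch-normalization statistics of each branch are folded into the kernels before merging). The function names are illustrative assumptions.

```python
def conv3x3(image, kernel):
    """Stride-1, zero-padded 3x3 cross-correlation on a 2D list of floats."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy + 1][dx + 1] * image[yy][xx]
            out[y][x] = acc
    return out

def multi_branch(image, k3, k1):
    """Training-time form: 3x3 branch + 1x1 branch (scalar k1) + identity branch."""
    y3 = conv3x3(image, k3)
    return [[y3[r][c] + k1 * image[r][c] + image[r][c]
             for c in range(len(image[0]))]
            for r in range(len(image))]

def fuse_branches(k3, k1):
    """Inference-time form: one 3x3 kernel equivalent to the three branches."""
    fused = [row[:] for row in k3]
    # The 1x1 and identity branches only touch the centre tap of a 3x3 kernel.
    fused[1][1] += k1 + 1.0
    return fused
```

Running `conv3x3(image, fuse_branches(k3, k1))` reproduces `multi_branch(image, k3, k1)` up to floating-point rounding, which is why the single-branch network loses no accuracy at inference.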

3.2.2. Multi-Scale Feature Fusion

SuperPoint utilizes single-scale features for feature extraction and does not incorporate multi-scale representations, which limits its scale invariance. To address this limitation, this work introduces a feature pyramid structure to enable multi-scale feature fusion, thereby enhancing the model’s robustness to scale variations. In the field of deep learning, integrating multi-scale features through feature pyramids has proven effective for improving network accuracy, as demonstrated by models such as FPN [51]. Recent image matching approaches [52, 53] have also leveraged hierarchical features to enhance feature representation capabilities. The feature fusion strategy adopted in this work is similar to that in [52], which has shown strong performance in matching tasks.

This module fuses the four multi-scale feature maps produced by the encoder stages. Each feature map is first processed by a convolution layer to adjust its channel dimension. Subsequently, all feature maps are upsampled to a unified spatial resolution.

Finally, by concatenating these rescaled features, both the spatial localization information from shallow layers and the semantic context from deeper layers are fully utilized. The resulting fused feature map is the channel-wise concatenation of the four processed feature maps.
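The upsample-and-concatenate step can be sketched as follows. The sketch assumes nearest-neighbor upsampling and integer scale factors, and it omits the channel-adjusting convolution; the interpolation mode actually used in this work is not stated here, so these are illustrative choices only.

```python
def upsample_nearest(fmap, factor):
    """fmap is [C][H][W]; each pixel is repeated factor x factor times."""
    return [
        [[row[x // factor] for x in range(len(row) * factor)]
         for row in channel for _ in range(factor)]
        for channel in fmap
    ]

def fuse_multiscale(fmaps):
    """Upsample every map to the height of the first (largest) map,
    then concatenate all maps along the channel axis."""
    target_h = len(fmaps[0][0])
    fused = []
    for fmap in fmaps:
        factor = target_h // len(fmap[0])
        fused.extend(upsample_nearest(fmap, factor))
    return fused
```

The fused map has as many channels as the inputs combined, so shallow (high-resolution) and deep (low-resolution) information sit side by side at every pixel.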

3.2.3. Keypoint Decoder

The keypoint decoder takes a feature map as input and outputs a score map. This component consists of two convolutional layers, with the last layer using a sigmoid activation function to constrain the output values within the range (0, 1), representing the probability of each pixel being a keypoint.

A commonly used approach for obtaining keypoint locations is to apply Non-Maximum Suppression (NMS) to eliminate duplicate detections [54, 55, 56]. However, this method suffers from a key limitation: the extracted keypoint positions are decoupled from the probability map output by the network, making it impossible to backpropagate gradients during training. To address this issue, we adopt the differentiable keypoint detection (DKD) module proposed in [46], which enables the network to learn keypoints at sub-pixel accuracy. By integrating keypoint detection with probability prediction into a unified task, the network can directly output both keypoint locations and their corresponding confidence scores.

Concretely, NMS is first applied within each local window to obtain the location of the maximum response over the entire image, and the subsequent steps are performed as follows. The probability values within the local window around this maximum are normalized using a temperature coefficient, which controls the smoothness of the output. The softmax function is then applied to the normalized values. Subsequently, a softargmax operation is performed to estimate the expected keypoint location within the local window. Finally, the sub-pixel keypoint location in the full-resolution image is computed by combining this local estimate with the location of the window maximum.
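A minimal sketch of this refinement is given below. It assumes that the scores are shifted by the window maximum before temperature scaling and that the final location is the integer NMS location plus the softargmax offset; the window radius and temperature defaults are illustrative assumptions rather than values from this paper.

```python
import math

def soft_refine(score_map, u, v, radius=1, temperature=0.1):
    """Refine an integer keypoint (u = column, v = row) to sub-pixel accuracy.

    (u, v) is assumed to be a local maximum already selected by NMS.
    """
    h, w = len(score_map), len(score_map[0])
    s_max = score_map[v][u]
    weights, offsets = [], []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = v + dy, u + dx
            if 0 <= y < h and 0 <= x < w:
                # Temperature-scaled softmax numerator, shifted for stability.
                weights.append(math.exp((score_map[y][x] - s_max) / temperature))
                offsets.append((dx, dy))
    total = sum(weights)
    # Softargmax: expected offset under the softmax distribution.
    off_x = sum(wt * dx for wt, (dx, _) in zip(weights, offsets)) / total
    off_y = sum(wt * dy for wt, (_, dy) in zip(weights, offsets)) / total
    return u + off_x, v + off_y
```

Because every operation is differentiable with respect to the scores, gradients of a location-based loss can flow back into the score map, which is the property that plain NMS lacks.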

3.2.4. Feature Descriptor Decoder

The feature descriptor decoder takes a feature map as input and outputs a feature descriptor map with D channels, where D is the dimension of the descriptor. This component consists of two convolutional layers with 128 and 256 channels, respectively, without any activation functions. Before producing the final output, an L2 normalization operation is applied. Each position in the output feature map corresponds to a descriptor, which aligns one-to-one with the score map produced by the keypoint decoder.

3.2.5. Loss Function

During the network training process, a multi-task loss function is designed, which consists of three components: keypoint detection loss, descriptor loss, and reprojection loss.

In SuperPoint, the keypoint detection task is formulated as a multi-class classification problem, where only one keypoint is allowed within each 8 × 8 cell. As a result, the maximum number of SuperPoint keypoints is limited to one per cell, that is, 1/64 of the number of pixels. In this work, we adopt the approach introduced in SiLK [57], which considers each pixel as a potential keypoint candidate and redesigns the loss function accordingly.

Consider a pair of images. Each image has a descriptor at every pixel, together with a predicted keypoint probability, and a similarity score is defined between every descriptor of the first image and every descriptor of the second.

The ground-truth correspondences pair the i-th point in the first image with the j-th point in the second image, giving a total of M correspondences, each associated with a pair of corresponding keypoints. The binary variable y indicates whether the descriptors are successfully matched. We determine correct matches using the simplest mutual nearest neighbor strategy: y is the indicator function 1[·] of the condition that the current correspondence is mutually the most similar, meaning that its similarity score is the maximum along both its row and its column in the similarity matrix. If so, it is considered a successful match. Otherwise, it is regarded as a mismatch.
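The mutual nearest neighbor test can be sketched directly on a similarity matrix. The layout `sim[i][j]` (rows index descriptors of the first image, columns those of the second) is an assumed convention for illustration.

```python
def mutual_nearest_neighbors(sim):
    """Return the (i, j) pairs whose similarity is the maximum of both
    row i and column j of the similarity matrix."""
    n_rows, n_cols = len(sim), len(sim[0])
    best_col = [max(range(n_cols), key=lambda j: sim[i][j]) for i in range(n_rows)]
    best_row = [max(range(n_rows), key=lambda i: sim[i][j]) for j in range(n_cols)]
    return [(i, j) for i, j in enumerate(best_col) if best_row[j] == i]
```

A pair is kept only when each descriptor selects the other, so a descriptor that is the second-best choice of its partner is rejected even if its similarity is high.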

To further enhance match quality, we employ cycle-consistent matching (CCM), which enforces the idea that two matched features are the closest to each other in the descriptor space. This constraint helps reduce the number of ambiguous or incorrect matches while increasing the proportion of correct ones. The probability of a match being valid is defined in terms of two directional quantities: the matching probability from a descriptor in the first image to a descriptor in the second, and the matching probability in the reverse direction.

The computation follows a scheme similar to the InfoNCE loss commonly used in self-supervised learning [58]. The temperature coefficient is used to modulate the focus on hard negative samples [59]. The similarity matrix is computed using the standard cosine similarity function, which is based on the dot product ⟨·, ·⟩ of the descriptors. The descriptor loss is computed over all matched descriptor pairs.
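One common way to realize such a cycle-consistent probability, used for example in SiLK-style training, is to multiply a row-wise softmax by a column-wise softmax of the temperature-scaled similarity matrix and to take the negative log-likelihood over the matched pairs. The sketch below follows that form as an assumption; the exact expressions of this paper are not reproduced, and the temperature default is illustrative.

```python
import math

def softmax(values, tau):
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp((v - m) / tau) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def cycle_consistent_prob(sim, tau=0.1):
    """P[i][j] = P(i -> j) * P(j -> i) from a similarity matrix sim[i][j]."""
    n_rows, n_cols = len(sim), len(sim[0])
    forward = [softmax(row, tau) for row in sim]  # each row sums to 1
    backward = [softmax([sim[i][j] for i in range(n_rows)], tau)
                for j in range(n_cols)]           # each column sums to 1
    return [[forward[i][j] * backward[j][i] for j in range(n_cols)]
            for i in range(n_rows)]

def descriptor_loss(sim, matches, tau=0.1):
    """Mean negative log-probability over the ground-truth matched pairs."""
    prob = cycle_consistent_prob(sim, tau)
    return -sum(math.log(prob[i][j]) for i, j in matches) / len(matches)
```

A smaller temperature sharpens both softmax distributions, which increases the penalty on hard negatives whose similarity is close to that of the true match.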

The correspondence between descriptors is indicated by the variable y, allowing the use of a standard cross-entropy loss to supervise keypoint detection, with y serving as the target for the predicted keypoint probability.

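In addition, keypoint positions are further refined by projecting them from the first image to the second image using the homography matrix between the two images. For each transformed keypoint, we determine whether it matches its corresponding keypoint in the second image based on a threshold thresh defined in the ground truth, and the reprojection error is computed from the distances between the transformed keypoints and their correspondences. Similarly, keypoints in the second image can be projected back to the first image, and the final reprojection loss accounts for the errors in both directions.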
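The reprojection term can be sketched as below. The sketch assumes that the error is the mean Euclidean distance over pairs that fall within the threshold and that the two directions are averaged with equal weight; both choices, together with the function names and the default threshold, are illustrative assumptions.

```python
import math

def project(H, point):
    """Apply a 3x3 homography (nested lists) to a 2D point."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def reprojection_error(H, src, dst, thresh):
    """Mean distance between projected src points and dst points,
    counting only pairs whose distance is within thresh."""
    errors = []
    for p, q in zip(src, dst):
        px, py = project(H, p)
        d = math.hypot(px - q[0], py - q[1])
        if d <= thresh:
            errors.append(d)
    return sum(errors) / len(errors) if errors else 0.0

def symmetric_reprojection_loss(H_ab, H_ba, pts_a, pts_b, thresh=3.0):
    """Average of the forward (a -> b) and backward (b -> a) errors."""
    return 0.5 * (reprojection_error(H_ab, pts_a, pts_b, thresh)
                  + reprojection_error(H_ba, pts_b, pts_a, thresh))
```

Here `H_ba` is expected to be the inverse of `H_ab`; passing it explicitly keeps the sketch free of a matrix-inversion routine.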

The total loss is then defined as the weighted sum of the three components, where three weighting factors balance the contributions of the descriptor loss, keypoint detection loss, and reprojection loss, respectively.

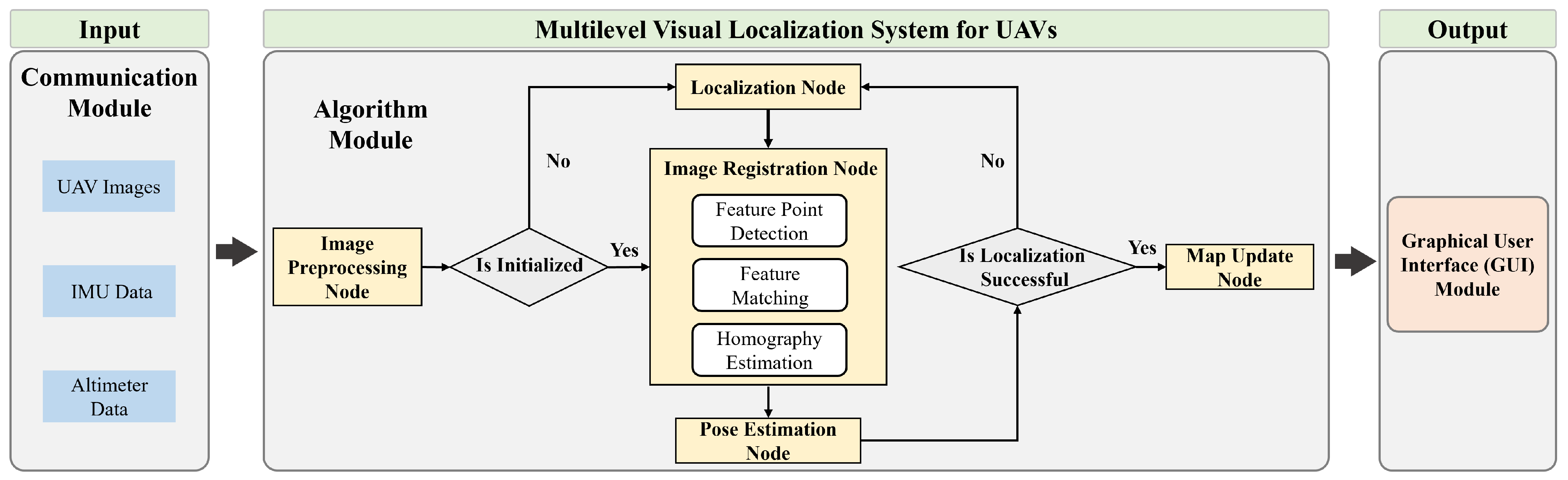

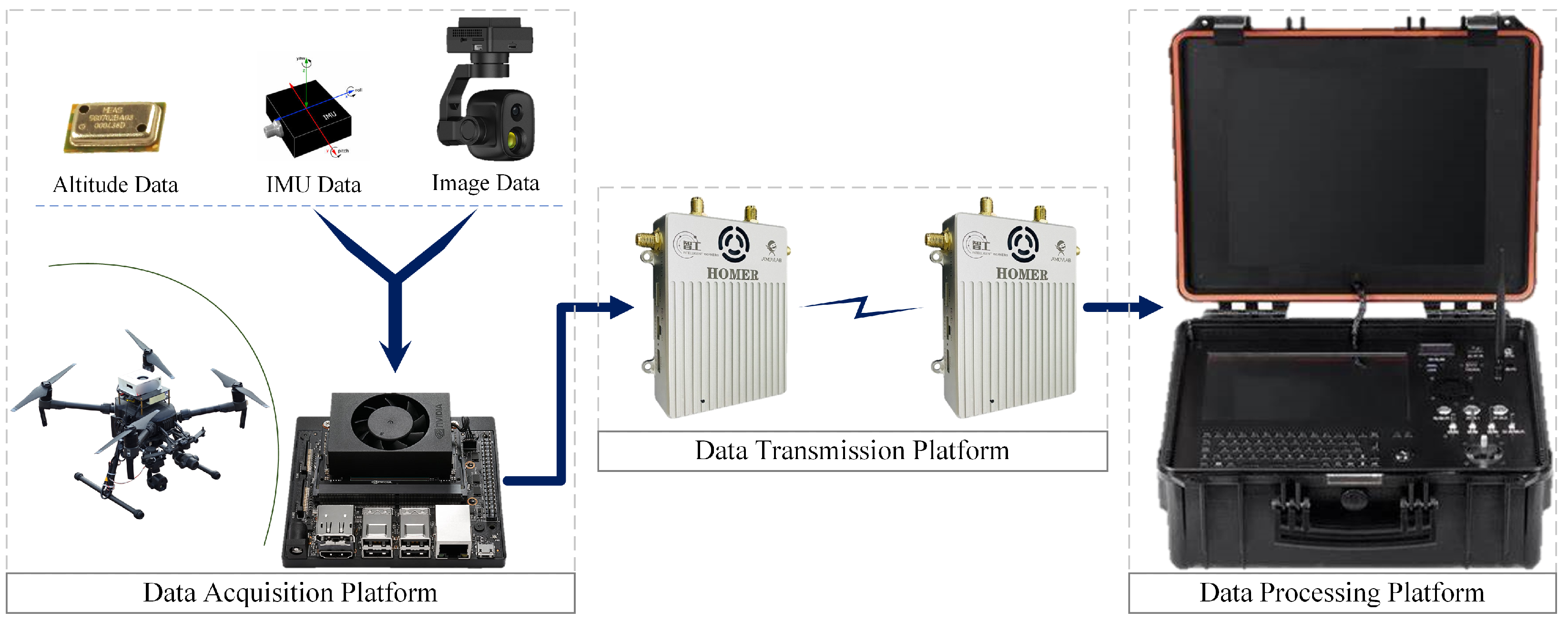

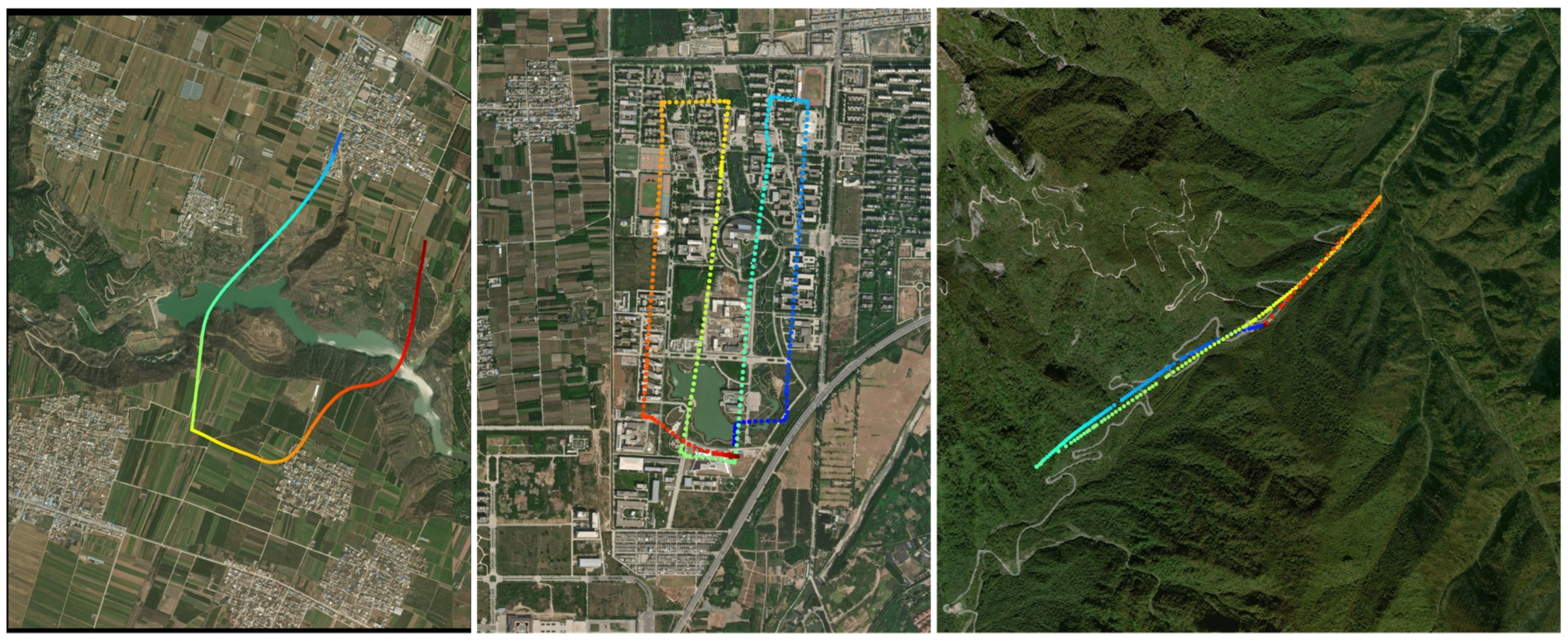

3.3. System Design and Implementation

The entire software system is developed based on the Robot Operating System (ROS). Communication between nodes is achieved via custom-defined message types. The overall system architecture is illustrated in Figure 4. Leveraging the ROS sensor data management framework, the system can acquire sensor data in real time and distribute it to relevant algorithmic modules through inter-node communication. This design allows each component in the system to focus on its specific function, effectively decoupling hardware from algorithmic logic.

The localization algorithm module serves as the core component of the entire system and is primarily composed of five submodules: the Image Preprocessing Node, Map Update Node, Localization Node, Image Registration Node, and Pose Estimation Node. The main functionalities of each node are described as follows.

The Image Preprocessing Node receives real-time UAV imagery and IMU data as inputs, and outputs the preprocessed images. In this module, a real-time image correction strategy based on IMU prior information is proposed.

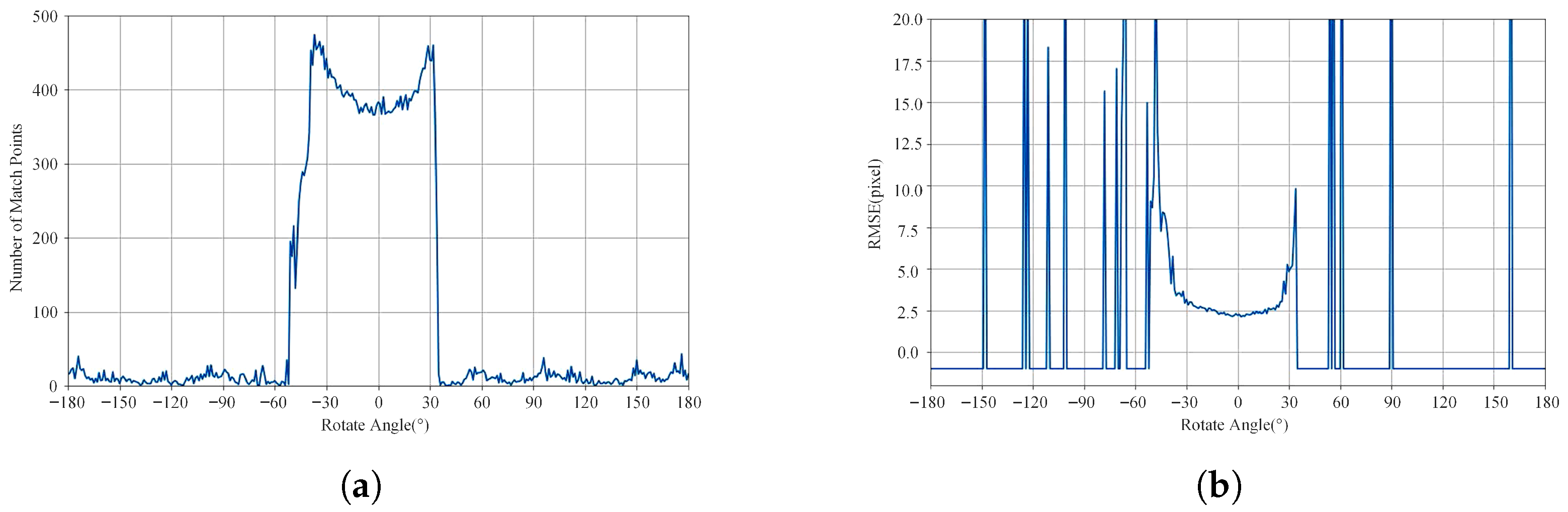

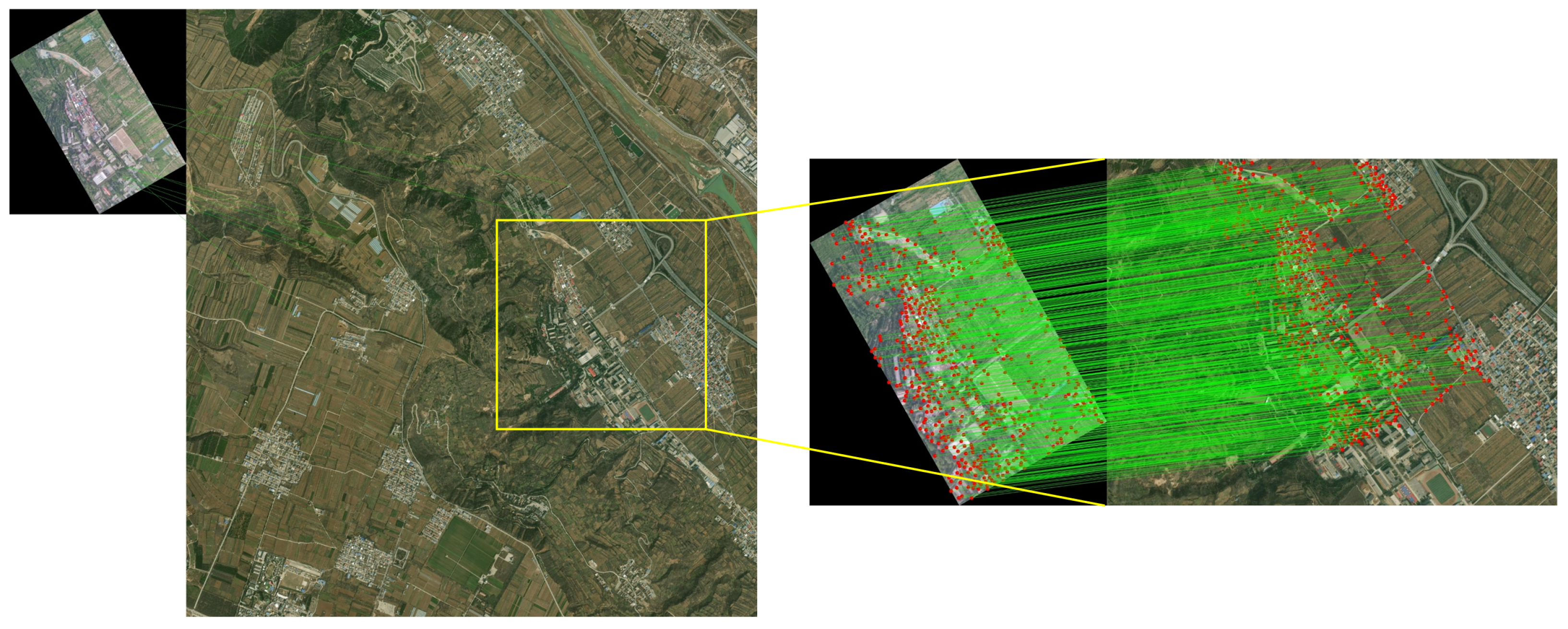

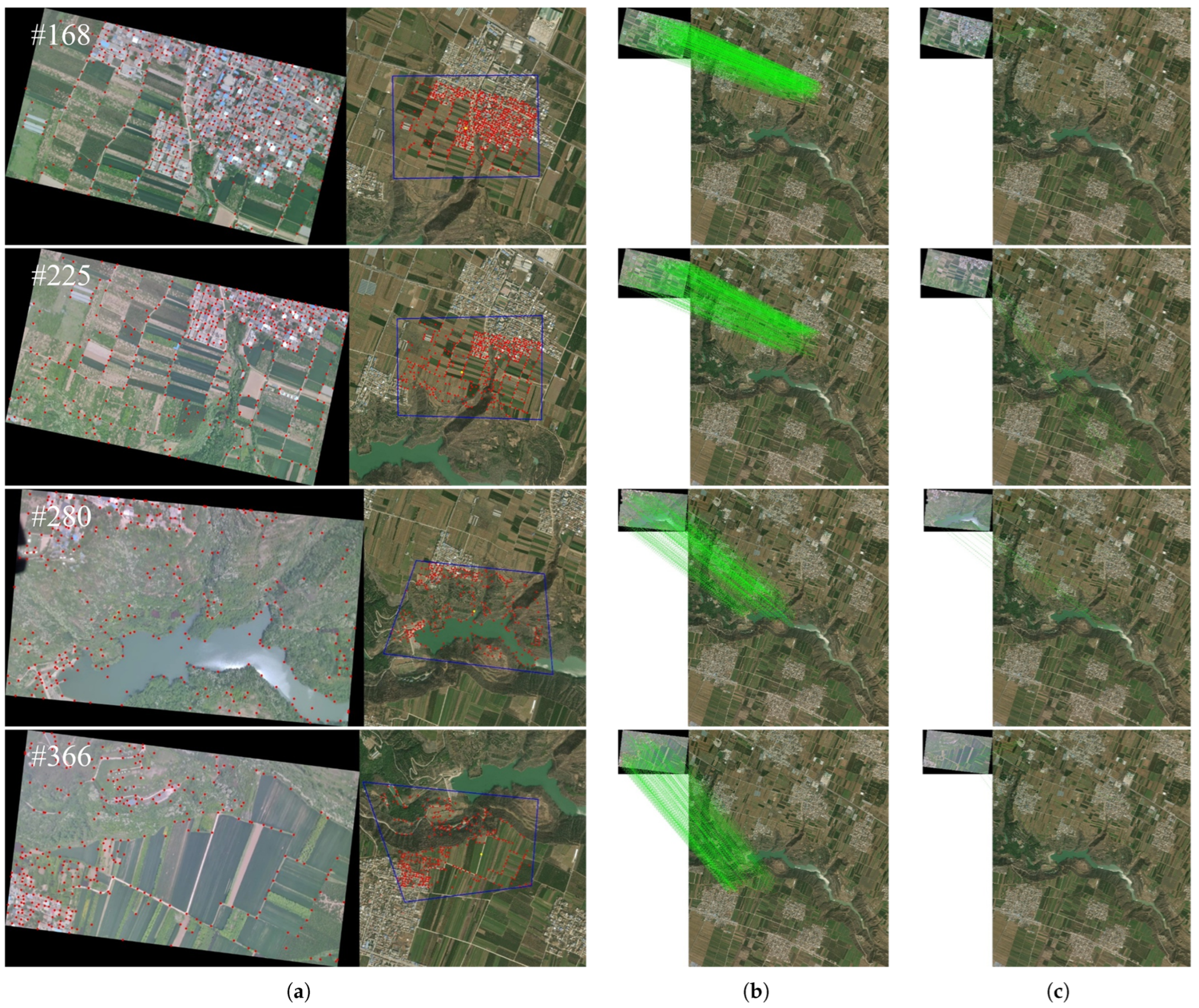

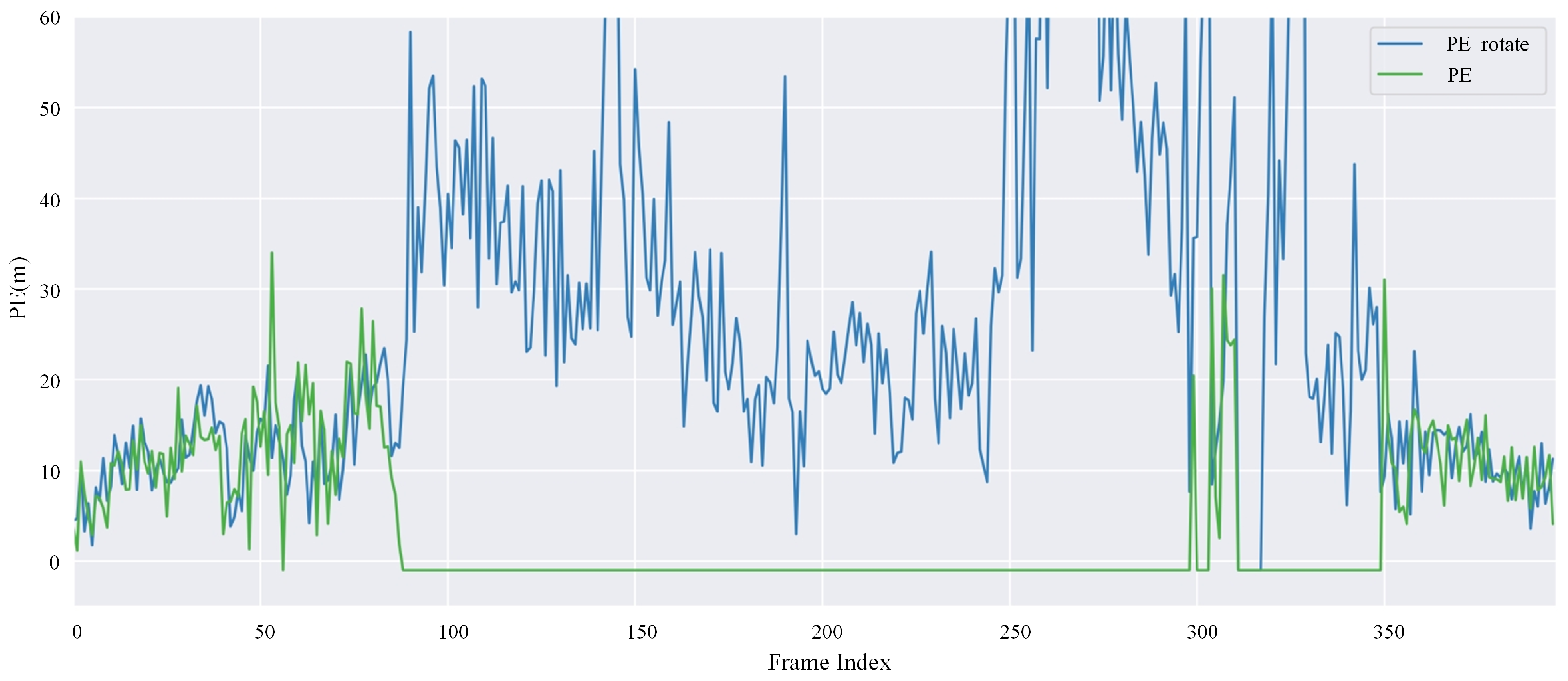

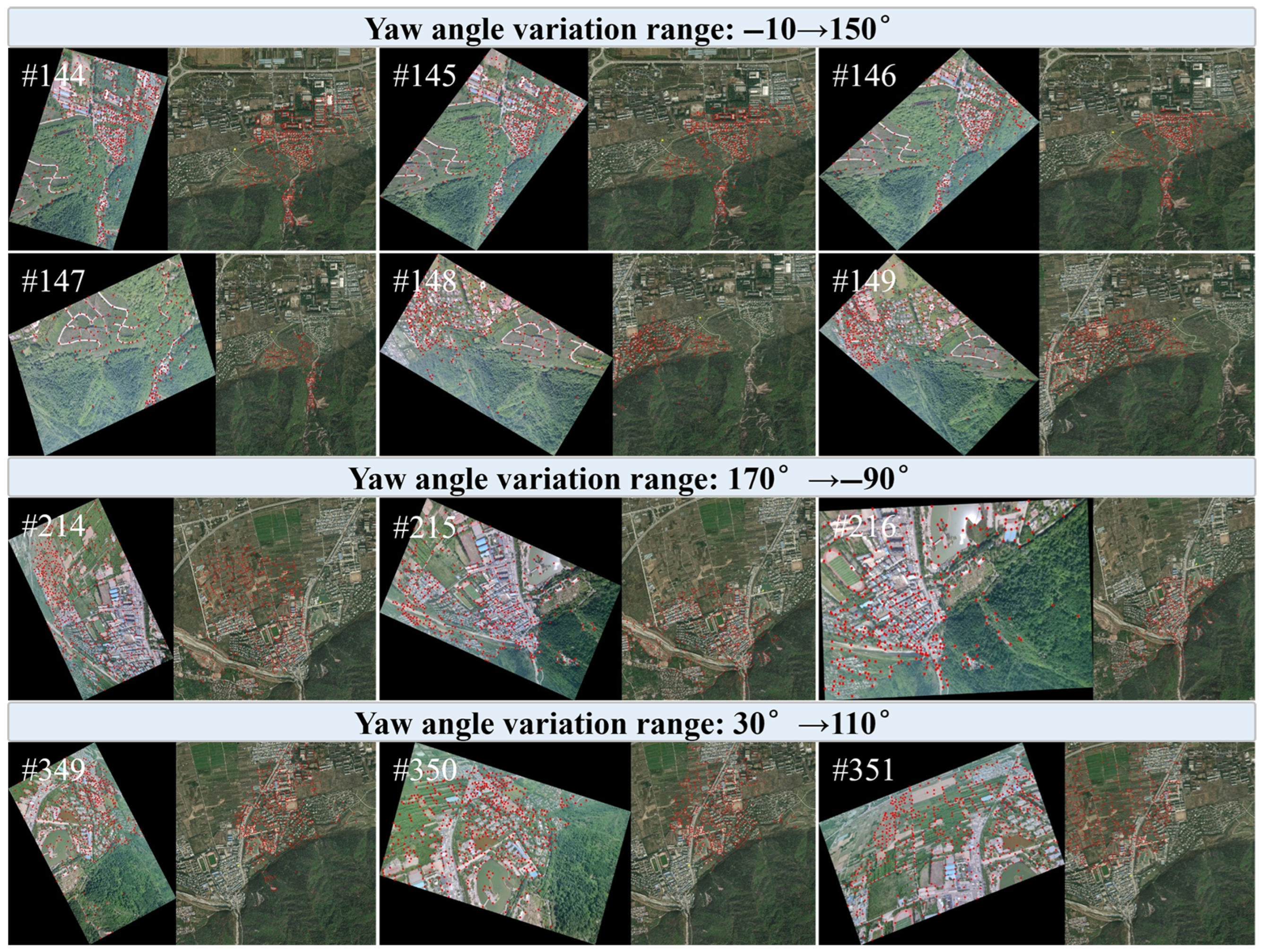

During preprocessing, the image data and IMU data are first temporally synchronized. This is achieved at the software level using functions such as TimeSynchronizer provided by the ROS framework. Image distortion correction is then performed. On top of this, a real-time image correction strategy utilizing IMU priors is applied to align the input image with the reference map. Because the yaw angle of the UAV varies during flight, a rotational discrepancy often exists between the real-time image and the reference image, and this discrepancy has a significant impact on image registration accuracy. Although the proposed registration algorithm exhibits a certain degree of robustness to rotation, experimental results indicate that this robustness is limited. As shown in Figure 5, the registration success rate remains relatively high while the rotation angle stays within a limited range. Once the angular difference exceeds this range, the number of matched points decreases rapidly and the error increases substantially. In particular, under large yaw changes of the UAV, effective registration between real-time and reference images becomes nearly impossible (see Figure 5), ultimately leading to localization failure. Considering that low-altitude UAVs often experience unpredictable yaw variations due to mission requirements, relying solely on image registration is insufficient to ensure system robustness.

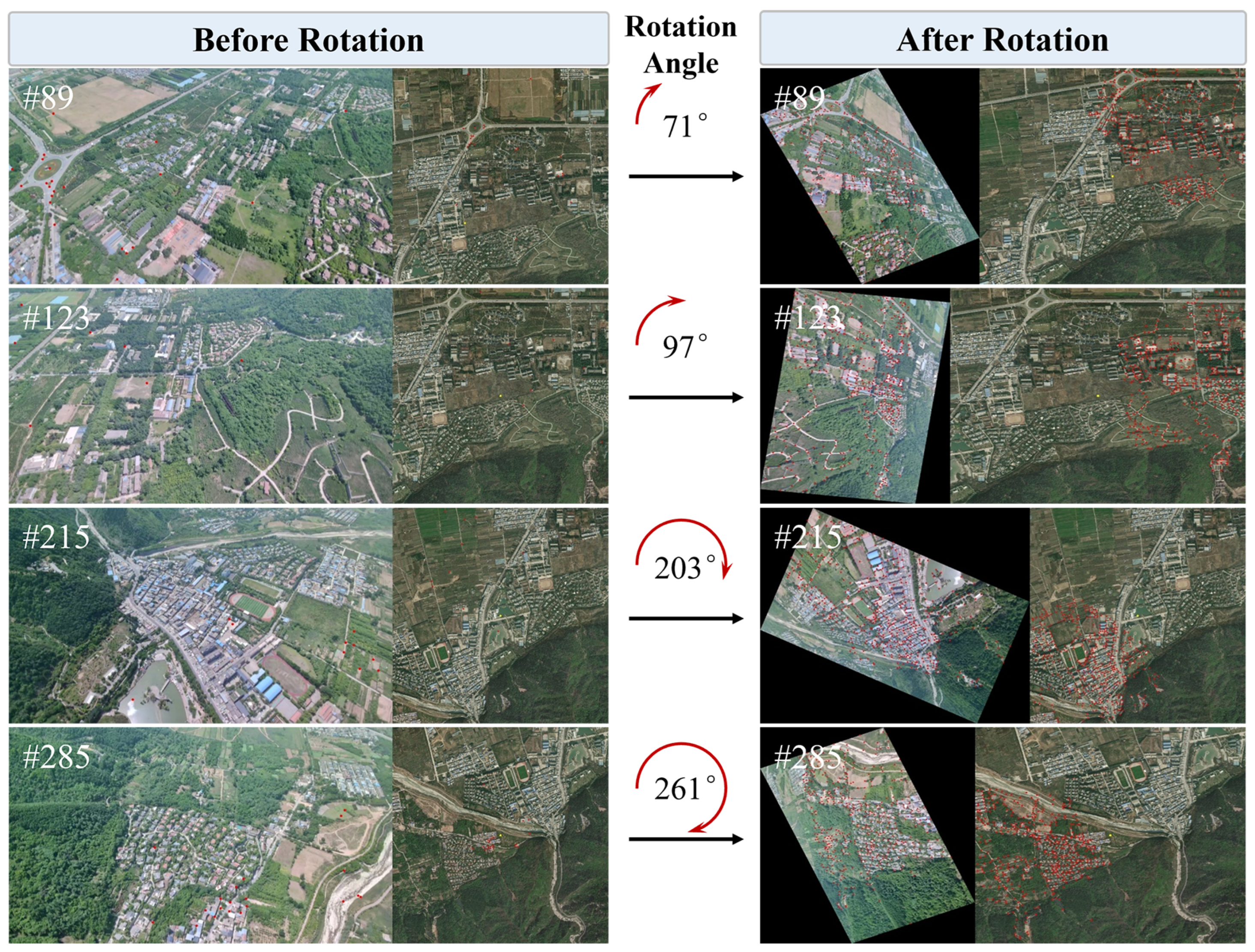

To address this, we propose a correction approach that computes the rotation matrix from the IMU data of the UAV to transform the camera coordinate system into that of the reference map. This matrix is decomposed to obtain the yaw angle, which is then used to rotate the real-time image, minimizing the rotational mismatch between it and the reference.
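The yaw extraction can be illustrated with a short sketch. It assumes a Z-Y-X (yaw, pitch, roll) Euler convention, under which the yaw is recovered from the first column of the rotation matrix; the convention actually used depends on the IMU and frame definitions, which are not specified here.

```python
import math

def yaw_from_rotation(R):
    """Yaw in radians, assuming R = Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Under this convention R[0][0] = cos(yaw)cos(pitch) and
    R[1][0] = sin(yaw)cos(pitch), so atan2 recovers the yaw
    whenever the pitch is not +/-90 degrees.
    """
    return math.atan2(R[1][0], R[0][0])
```

The real-time image would then be rotated by the negative of this angle so that its orientation agrees with the north-aligned reference map.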

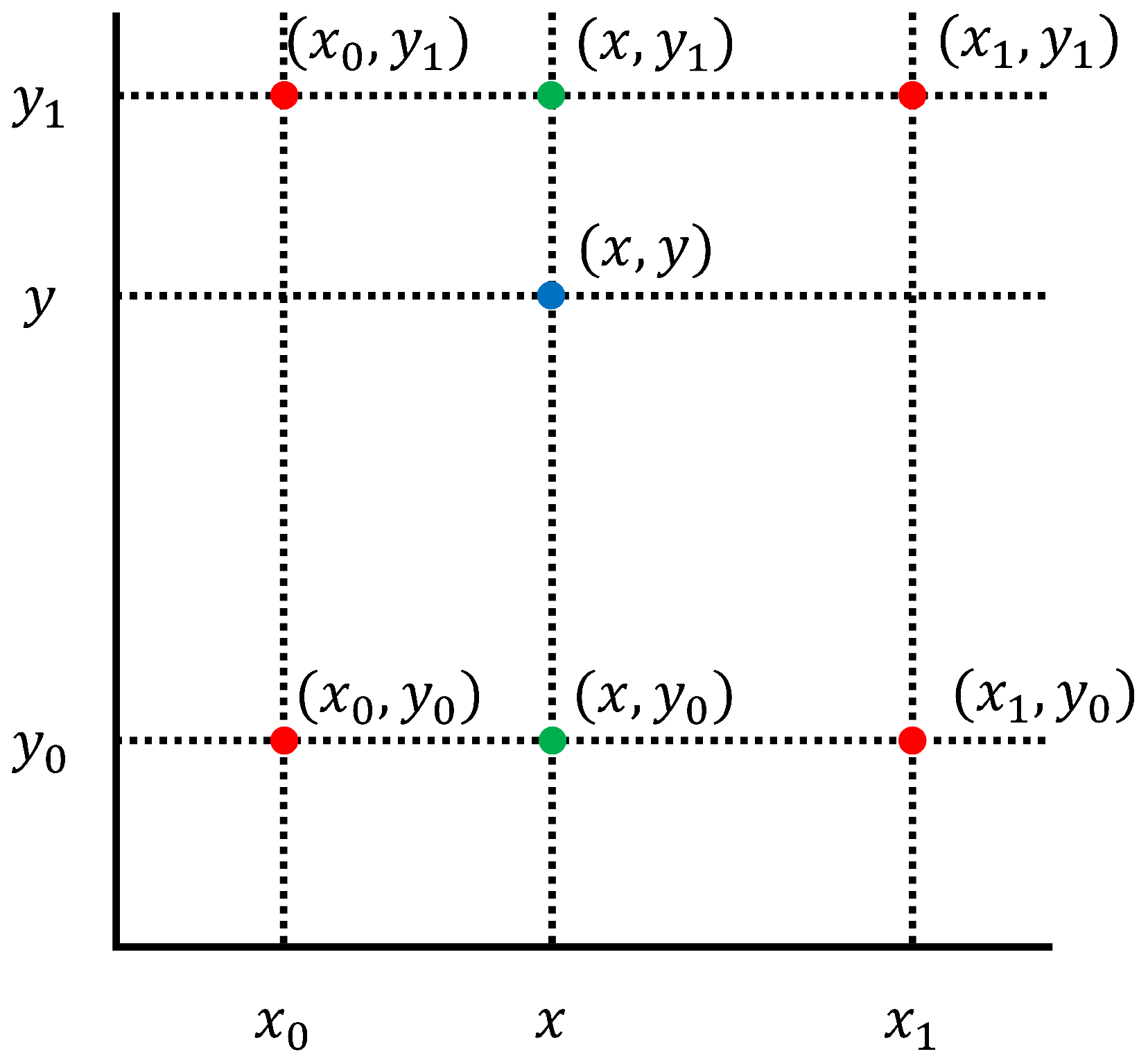

However, because image pixels lie on an integer grid, rotating the image maps them to non-integer coordinates, and naive rounding results in distortion. To mitigate this issue and preserve image quality, bilinear interpolation [60] is employed to resample the rotated image. As illustrated in Figure 6, bilinear interpolation first performs interpolation along the x-axis, followed by interpolation along the y-axis, ultimately producing a smooth and accurate estimate of pixel values at non-integer coordinates.
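The two-pass interpolation can be written in a few lines. The sketch below samples a single-channel image stored as a nested list and assumes the query coordinate lies inside the image; the function name is illustrative.

```python
import math

def bilinear_sample(image, x, y):
    """Sample image[row][col] at a non-integer coordinate (x = col, y = row)."""
    h, w = len(image), len(image[0])
    x0 = min(max(int(math.floor(x)), 0), w - 1)
    y0 = min(max(int(math.floor(y)), 0), h - 1)
    x1 = min(x0 + 1, w - 1)
    y1 = min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    # First interpolate along x on the two neighbouring rows ...
    top = image[y0][x0] * (1.0 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1.0 - fx) + image[y1][x1] * fx
    # ... then interpolate the two results along y.
    return top * (1.0 - fy) + bottom * fy
```

For example, sampling the 2 × 2 image `[[0, 10], [20, 30]]` at its center `(0.5, 0.5)` gives 15, the average of the four pixels.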

When an image is rotated, part of its content may be cropped if the rotated image exceeds the original image boundaries, leading to information loss. To address this issue, the display bounding box must be recalculated and adjusted during the rotation process to ensure that the rotated image can be fully preserved. As shown in Figure 7, the dashed yellow box denotes the region containing the valid information to be retained, while the red bounding box represents the actual accessible image boundary. After the UAV rotates, if the bounding box size is not adjusted, part of the information within the dashed yellow box will be cropped (middle image). By enlarging the bounding box, all information within the dashed yellow box can be completely preserved (right image). This process enables lossless rotation, ensuring that all image information is retained and reducing matching errors in subsequent registration caused by viewpoint inconsistencies.
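The enlarged bounding box follows from the extents of a rotated rectangle. The sketch below computes the smallest axis-aligned canvas that contains a `width` × `height` image rotated about its center; the small tolerance guards against floating-point noise at exact multiples of 90°, and the function name is illustrative.

```python
import math

def rotated_canvas_size(width, height, angle_deg):
    """Smallest axis-aligned (width, height) that contains the rotated image."""
    a = math.radians(angle_deg)
    c, s = abs(math.cos(a)), abs(math.sin(a))
    new_w = math.ceil(width * c + height * s - 1e-9)
    new_h = math.ceil(width * s + height * c - 1e-9)
    return new_w, new_h
```

A 100 × 100 image rotated by 45° therefore needs a 142 × 142 canvas, while a rotation by 90° merely swaps the width and height.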

The Localization Node is built upon the image retrieval algorithm based on a visual backbone model proposed in this work. Leveraging this algorithm, the system searches for reference sub-images within the reference map database. The input to this node is the real-time image of the UAV, and the output is the retrieved satellite reference sub-image along with its corresponding tile index. The Image Registration Node adopts the cycle-consistent matching-based image registration algorithm developed in this paper, which aligns the real-time image with the retrieved reference sub-image. The inputs are the output from the Map Update Node at time t − 1 and the output from the Image Preprocessing Node at time t. The outputs are the matched feature point pairs and the estimated homography transformation matrix.

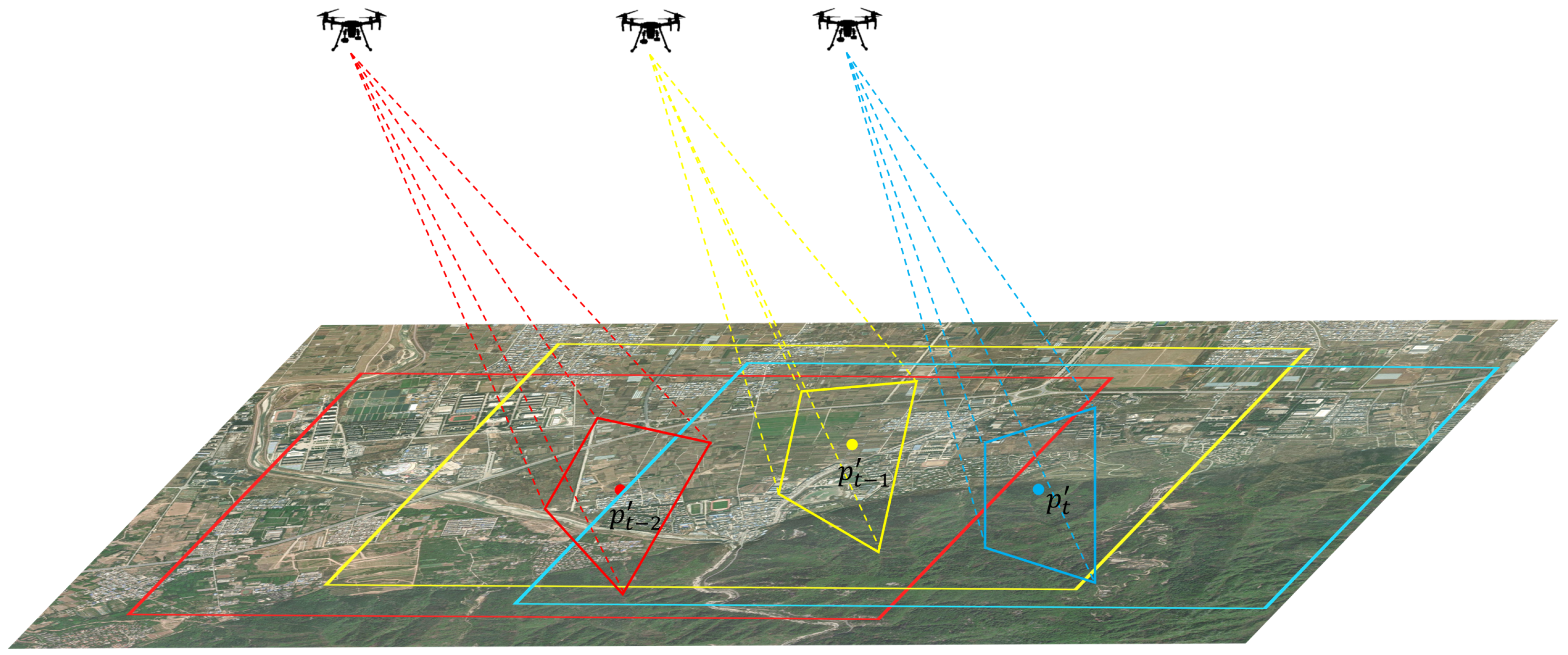

In the Map Update Node, we propose a sliding window-based reference map update strategy. Absolute visual localization requires matching UAV-captured real-time images with pre-stored satellite reference images to achieve precise localization. However, directly matching real-time UAV imagery with the full reference map poses two major challenges:

- (1) The satellite reference map typically covers a vast area, leading to excessive memory consumption. Loading the entire map into memory would impose a heavy burden on system resources.

- (2) The real-time image of the UAV occupies only a small portion of the satellite reference image, resulting in a considerable scale difference between the two. Direct image registration under such conditions often fails, as illustrated in Figure 8.

Therefore, the global absolute localization problem can be transformed into a constrained local localization problem under the guidance of prior information—specifically, how to determine the appropriate reference sub-image using prior knowledge and perform accurate UAV localization within that subregion.

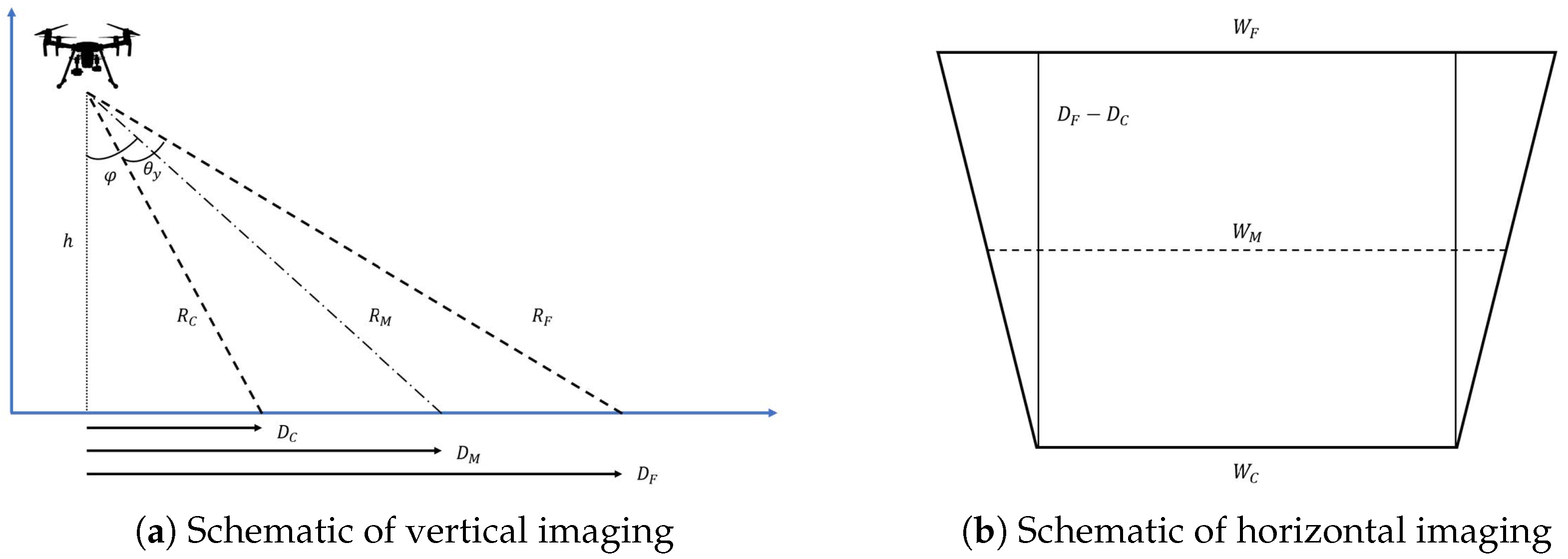

Given that UAV flight is continuous in both spatial and temporal domains, there is typically a significant overlap between the real-time image captured at the current moment and that from the previous timestamp. Traditional visual localization methods often assume a nadir-view camera setup, where each pixel in the image is directly associated with a specific ground location. Under this assumption, the reference sub-image for the current frame can be roughly inferred from the last known position of the UAV. However, in this work, we take into full account the impact of the camera pitch angle on localization accuracy in order to enhance system robustness. This consideration means that reference map updates cannot rely solely on the previous localization result. Instead, the system must precisely determine the satellite reference sub-image based on the actual field of view (FOV) presented in the real-time image of the UAV.

When the camera’s optical axis is not perpendicular to the ground, the imaging range in both the vertical and horizontal directions can be calculated according to the camera’s pitch angle [61]. Figure 9 illustrates the geometry, including the horizontal and vertical field of view (FOV) angles of the camera in the x and y directions, respectively. The vertical (ground-normal) imaging extent is computed from these quantities.

The projected range in the x-direction (i.e., the horizontal direction in the camera’s field of view) varies with the distance in the y-direction. Therefore, three corresponding values of the x-direction range are derived.

When the upper edge of the vertical field of view reaches the horizontal, the top of the field of view will be above the horizon. For fields of view above the horizon, the above calculations are no longer valid.
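The geometry can be illustrated with a sketch under explicit assumptions: flat ground, an ideal pinhole camera with zero roll, flight height `height` above the ground, and a pitch angle measured from the nadir direction (0° looks straight down). The symbols and the returned keys are hypothetical and do not reproduce the notation of Figure 9.

```python
import math

def ground_footprint(height, pitch_deg, fov_x_deg, fov_y_deg):
    """Ground footprint of a pitched camera over flat terrain.

    Returns the near/far ground distances along y and the footprint
    width along x at the near edge, the optical axis, and the far edge.
    """
    pitch = math.radians(pitch_deg)
    half_x = math.radians(fov_x_deg) / 2.0
    half_y = math.radians(fov_y_deg) / 2.0
    if pitch + half_y >= math.pi / 2.0:
        raise ValueError("top of the field of view is at or above the horizon")

    def width_at(beta):
        # beta is the ray angle relative to the optical axis in the y-z plane.
        return 2.0 * height * math.tan(half_x) * math.cos(beta) / math.cos(pitch + beta)

    near = height * math.tan(pitch - half_y)
    far = height * math.tan(pitch + half_y)
    return {
        "y_near": near, "y_far": far, "y_extent": far - near,
        "x_near": width_at(-half_y),
        "x_center": width_at(0.0),
        "x_far": width_at(half_y),
    }
```

For a nadir view the footprint reduces to a rectangle, and as the pitch grows the far edge widens and recedes, which is why the trapezoid in the reference image becomes increasingly elongated.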

Based on the previous analysis, the maximum distance at which the camera’s field of view projects onto the ground can be determined. By considering the map’s spatial resolution, the theoretical maximum pixel area corresponding to the current frame can then be calculated from the spatial resolution of the map, the quantity defined in Equation (18), and a hyperparameter that controls the size of the updated map. The purpose of this hyperparameter is to mitigate drastic changes in the field of view of the UAV over short time intervals, which could otherwise lead to a failure in reference sub-image coverage. In this paper, it is empirically set to 2.

Based on the above calculations, we propose a sliding-window-based reference sub-image update strategy. Given the image center coordinate and the homography transformation matrix estimated at time t − 1, the corresponding coordinate of the image center on the map is computed by applying the homography to the image center. Taking this map coordinate as the center and the pixel extent calculated above as the side length of a square, we define the region from which the satellite reference sub-image is selected at time t. The detailed selection process is illustrated in Figure 10.
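The window update can be sketched as follows. The helper `window_side` is hypothetical: it assumes that the side length is the maximum ground distance divided by the map resolution and scaled by the hyperparameter (set to 2 in this paper), which may differ from the exact expression used. Clamping the window to the map bounds is likewise an added assumption.

```python
import math

def project_point(H, point):
    """Apply a 3x3 homography (nested lists) to a 2D point."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def window_side(max_ground_distance_m, map_resolution_m_per_px, k=2.0):
    """Hypothetical side length in pixels: k * distance / resolution."""
    return math.ceil(k * max_ground_distance_m / map_resolution_m_per_px)

def reference_window(H_prev, image_center, side, map_width, map_height):
    """Square crop (left, top, right, bottom) on the reference map for time t,
    centred on the image centre projected with the homography from time t - 1."""
    cx, cy = project_point(H_prev, image_center)
    side = int(min(side, map_width, map_height))
    left = int(round(cx - side / 2.0))
    top = int(round(cy - side / 2.0))
    # Shift the window back inside the map if it crosses a border.
    left = max(0, min(left, map_width - side))
    top = max(0, min(top, map_height - side))
    return left, top, left + side, top + side
```

Only the cropped window needs to be held in memory and passed to registration, which addresses both the memory and the scale-difference problems described above.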

The yellow trapezoid indicates the field of view of the real-time image of the UAV at a given time step, projected onto the satellite reference image. The yellow dot represents the coordinate on the reference map corresponding to the center of the UAV image at that time step. The yellow rectangle denotes the search area for the reference sub-image computed at that time step. The red and blue elements follow the same representation logic for the other time steps. In general, the reference sub-image search area computed at time t − 1 is used as the reference sub-image for time t, forming a progressive relationship across frames. Because the time interval is short, the motion of the UAV from t − 1 to t is negligible, which ensures that the reference sub-image fully covers the field of view of the real-time image at time t.

It should be noted that when the estimate from the previous frame contains errors, the selection range of the reference sub-image may shift, thereby affecting the accuracy of subsequent matching. To address this issue, the system first falls back to a coarse localization mode. Although the accuracy is reduced, localization continuity can still be maintained. In addition, by incorporating prior information from the IMU and altimeter, further inference and correction can be performed to enhance the robustness of the system. In extreme cases where coarse localization also fails, the error exceeds the designed capability of the system; handling such cases is left for future research.

In summary, the input to the Map Update Node includes the homography matrix estimated at time t − 1 and altimeter data. The output is the reference sub-image at time t.

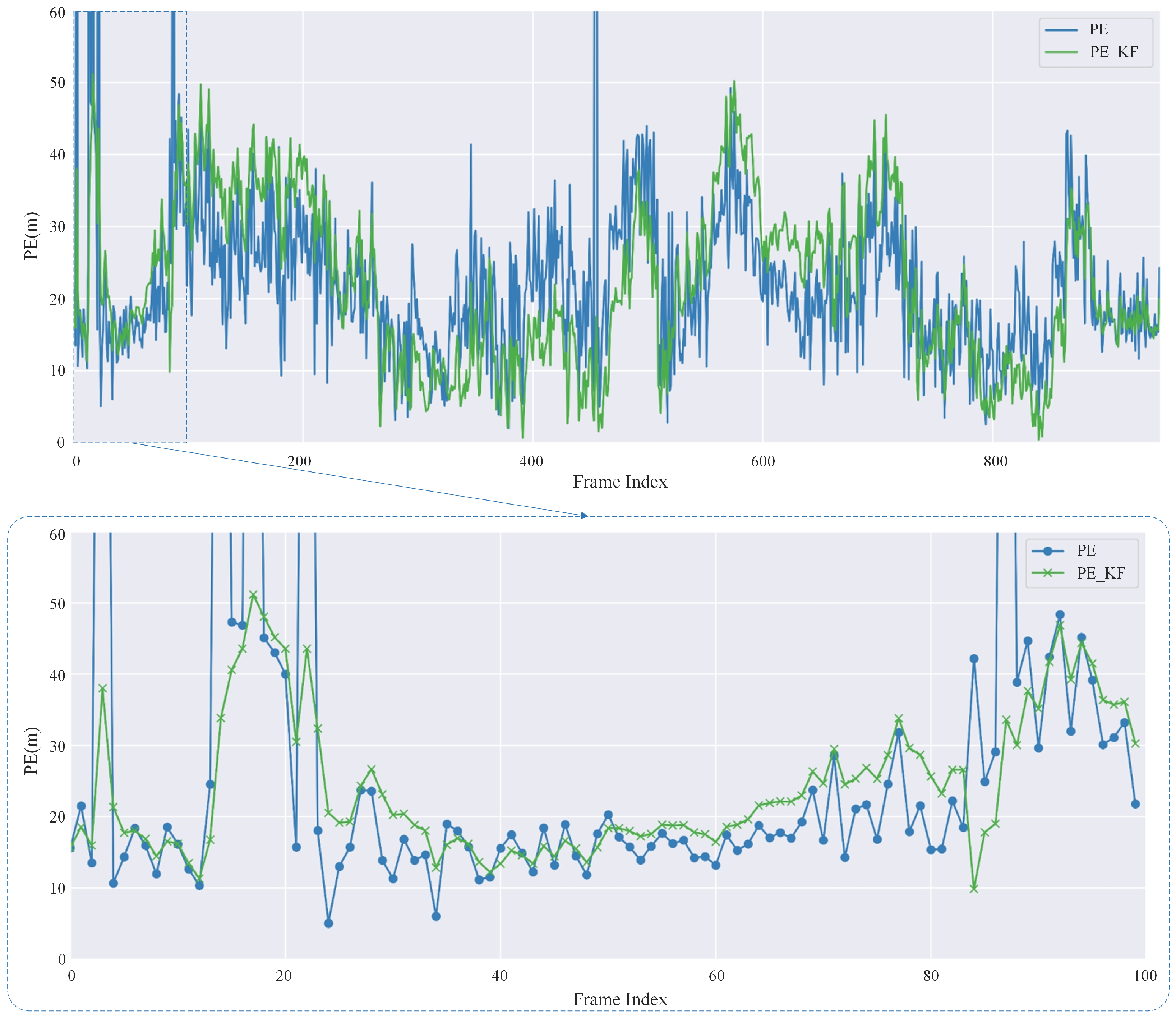

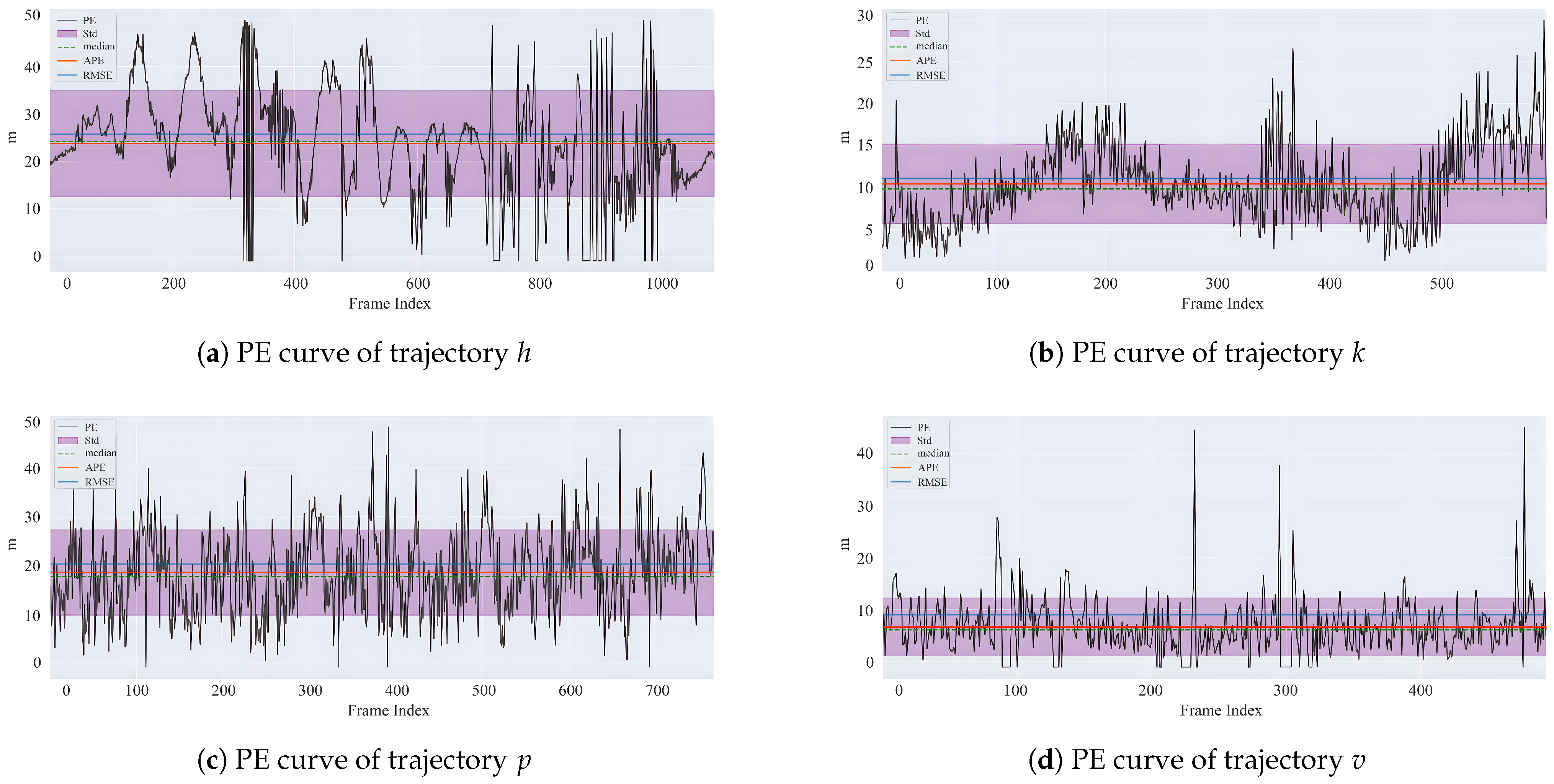

The pose estimation node takes the output from the image registration node as input, computes the pose of the UAV using the Perspective-n-Point (PnP) algorithm, and applies Kalman filtering for refinement. The final output is the estimated pose of the UAV. It should be noted that since the satellite reference map used in this paper is a two-dimensional planar map without elevation information, it is assumed that the height of all points is zero when constructing the 3D spatial points required for the PnP algorithm. Although this simplification neglects terrain variations, it is sufficient for pose estimation in the experimental environment. In addition, the PnP algorithm also relies on camera intrinsic parameters. For the VisLoc dataset used in this paper, we contacted the dataset authors to obtain the camera intrinsics.