UAV-Based Wellsite Reclamation Monitoring Using Transformer-Based Deep Learning on Multi-Seasonal LiDAR and Multispectral Data

Abstract

Highlights

- UAV-LiDAR achieved high accuracy in tree detection and height estimation (R2 = 0.95; RMSE = 0.40 m), while integrating multispectral data improved species classification (mIoU up to 0.93 in spring).

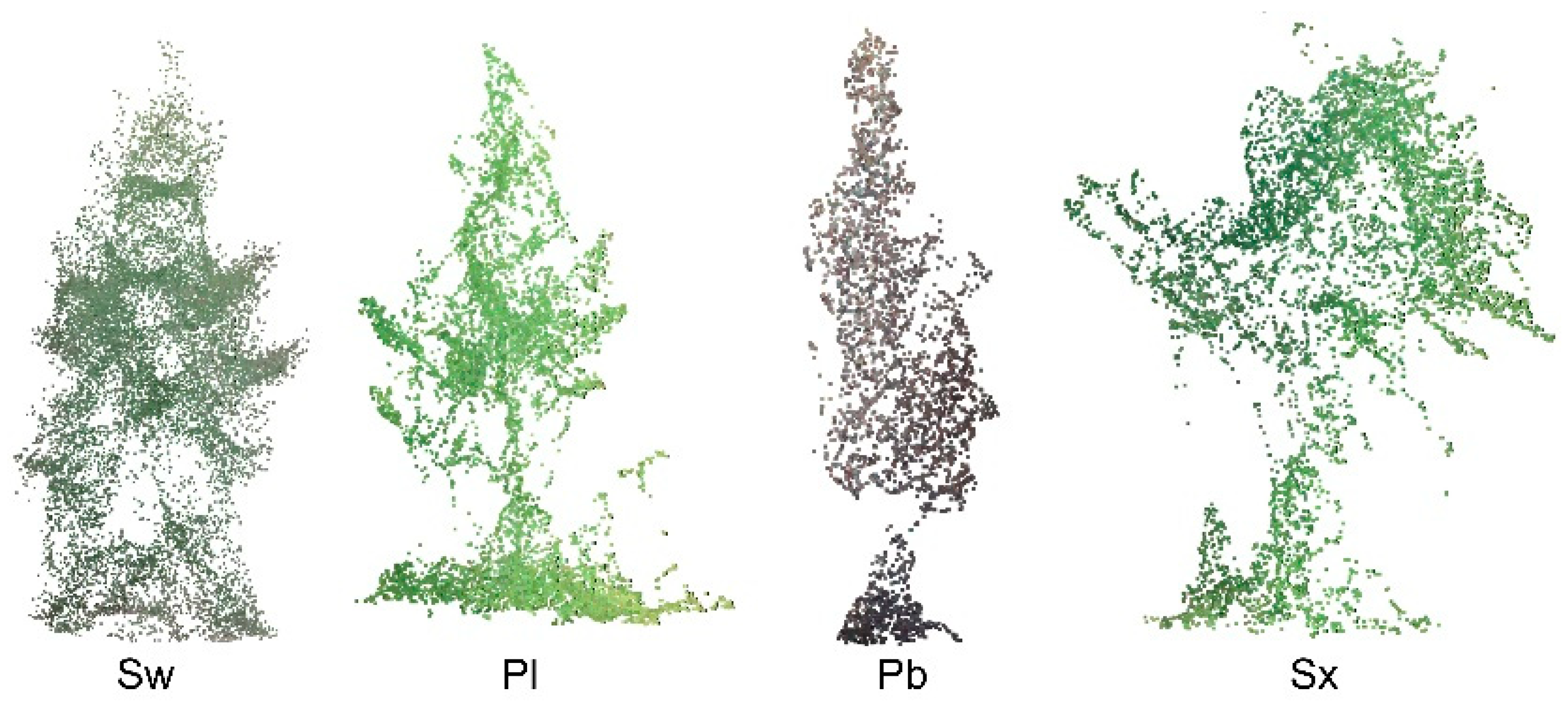

- Coniferous species were classified more accurately than deciduous species, though performance declined for shorter (<2 m) and multi-stemmed species.

- Combining LiDAR and multispectral data provides a scalable, repeatable method for monitoring forest recovery on reclaimed wellsites.

- TreeAIBox plugin enables broader research and operational use, advancing post-disturbance vegetation assessment.
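The height metrics quoted in the first highlight (R² and RMSE) are computed from paired LiDAR-derived and field-measured heights of matched reference trees. The following is a minimal sketch, not the authors' code. It assumes the trees are already paired and uses R² = 1 − SS_res/SS_tot with the field height as the observed value. The source does not state which R² definition was used, and the squared Pearson correlation from a fitted regression line can give a different value. The function name `height_agreement` is hypothetical.

```python
import math


def height_agreement(lidar_heights, field_heights):
    """Return (r2, rmse) of LiDAR-derived heights against field-measured heights.

    Hypothetical helper. Assumes both sequences are in metres and already
    paired tree by tree. r2 uses 1 - SS_res / SS_tot (an assumed definition).
    """
    if len(lidar_heights) != len(field_heights) or len(field_heights) < 2:
        raise ValueError("need two equal-length sequences of at least 2 trees")
    n = len(field_heights)
    mean_obs = sum(field_heights) / n
    # Residual sum of squares: field (observed) minus LiDAR (estimated).
    ss_res = sum((f - l) ** 2 for l, f in zip(lidar_heights, field_heights))
    # Total sum of squares around the mean field height.
    ss_tot = sum((f - mean_obs) ** 2 for f in field_heights)
    if ss_tot == 0:
        raise ValueError("field heights have no variance; R2 is undefined")
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    return r2, rmse


# Illustrative values only, not data from the study.
r2, rmse = height_agreement([2.1, 2.9, 4.2, 4.8], [2.0, 3.0, 4.0, 5.0])
print(f"R2 = {r2:.2f}, RMSE = {rmse:.2f} m")
```

RMSE is reported in the same unit as the heights (metres), whereas R² is unitless, which is why the two are usually reported together.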

1. Introduction

- (1)

- Evaluate the effect of LiDAR and MS data fusion on model accuracy for individual tree segmentation and classification.

- (2)

- Validate LiDAR-derived height estimates and detection accuracy against field-measured reference trees.

- (3)

- Identify and analyze sources of detection and classification errors in LiDAR-based models.

- (4)

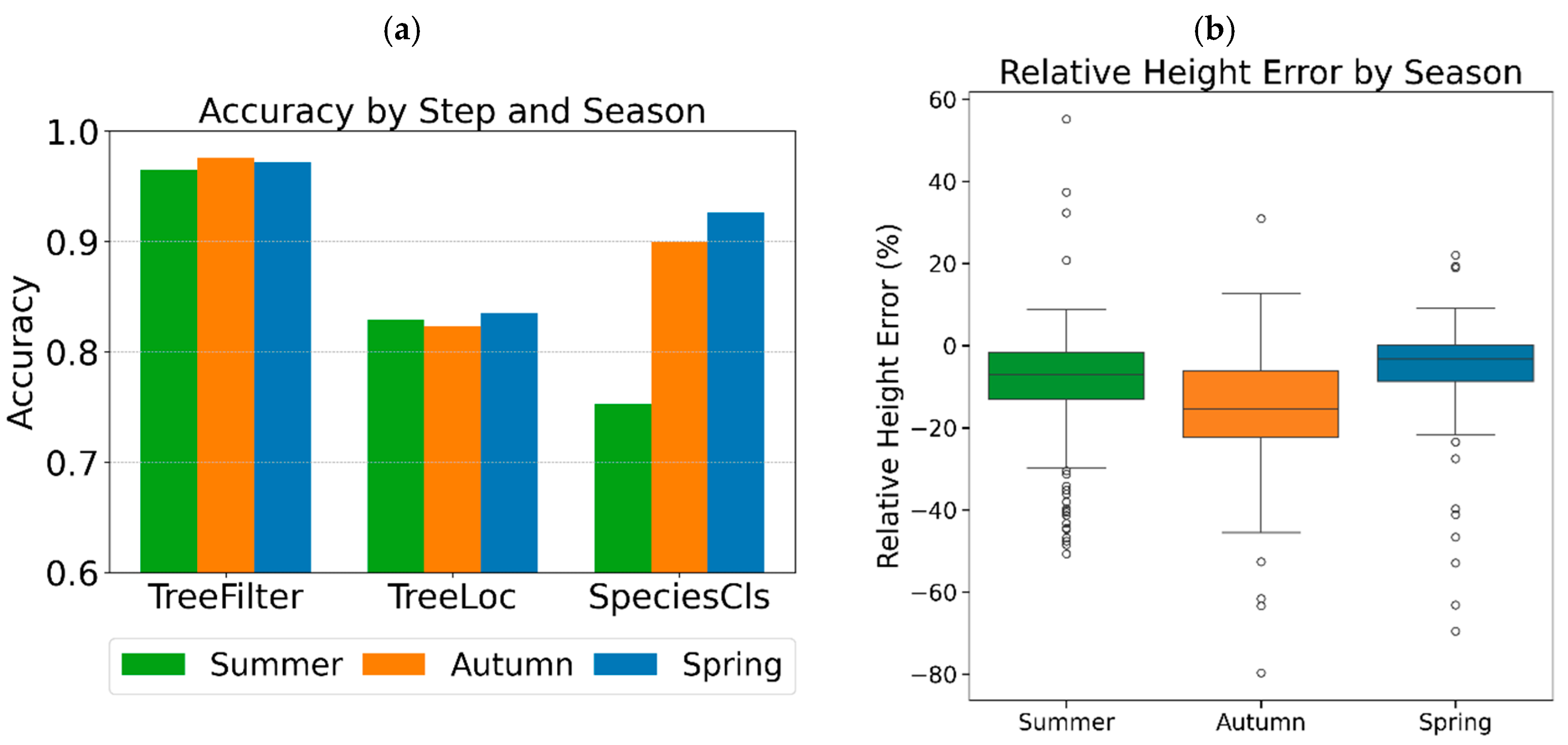

- Assess the repeatability and consistency of the proposed methodology using LiDAR data collected across three seasons (summer, autumn, and spring).
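Objective (1) depends on attaching multispectral (or RGB) values to individual LiDAR points. The fusion procedure itself is not recoverable from this text, so the following is only a minimal sketch of one common approach, assumed rather than taken from the paper: each point receives the band values of the co-registered MS pixel it falls in, using a north-up raster described by its upper-left corner and pixel size. The function `attach_band_values` and its parameters are hypothetical; a real pipeline would read the geotransform from the raster file and resolve co-registration beforehand.

```python
import numpy as np


def attach_band_values(xyz, bands, x_origin, y_origin, pixel_size, nodata=np.nan):
    """Append per-point spectral values by nearest-pixel lookup (hypothetical helper).

    xyz: (N, 3) point coordinates in the raster's coordinate system.
    bands: (B, rows, cols) co-registered spectral bands.
    x_origin, y_origin: map coordinates of the raster's upper-left corner;
        a north-up raster without rotation is assumed.
    pixel_size: ground sample distance in map units.
    Returns an (N, 3 + B) array: x, y, z, then one column per band.
    """
    xyz = np.asarray(xyz, dtype=float)
    bands = np.asarray(bands, dtype=float)
    n_bands, rows, cols = bands.shape
    # Columns increase eastward from the left edge; rows increase southward from the top.
    col = np.floor((xyz[:, 0] - x_origin) / pixel_size).astype(int)
    row = np.floor((y_origin - xyz[:, 1]) / pixel_size).astype(int)
    inside = (row >= 0) & (row < rows) & (col >= 0) & (col < cols)
    # Points outside the raster keep the nodata value.
    values = np.full((xyz.shape[0], n_bands), nodata, dtype=float)
    # Advanced indexing returns shape (B, K); transpose to (K, B).
    values[inside] = bands[:, row[inside], col[inside]].T
    return np.hstack([xyz, values])


# Illustrative two-band, 2 x 2 pixel raster with 1 m pixels.
demo_bands = [[[1, 2], [3, 4]], [[10, 20], [30, 40]]]
fused = attach_band_values([[0.5, 1.5, 5.0], [1.5, 0.5, 3.0]], demo_bands, 0.0, 2.0, 1.0)
print(fused)
```

Nearest-pixel lookup keeps the original spectral values unchanged; bilinear interpolation is a common alternative when crowns span only a few pixels.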

2. Materials and Methods

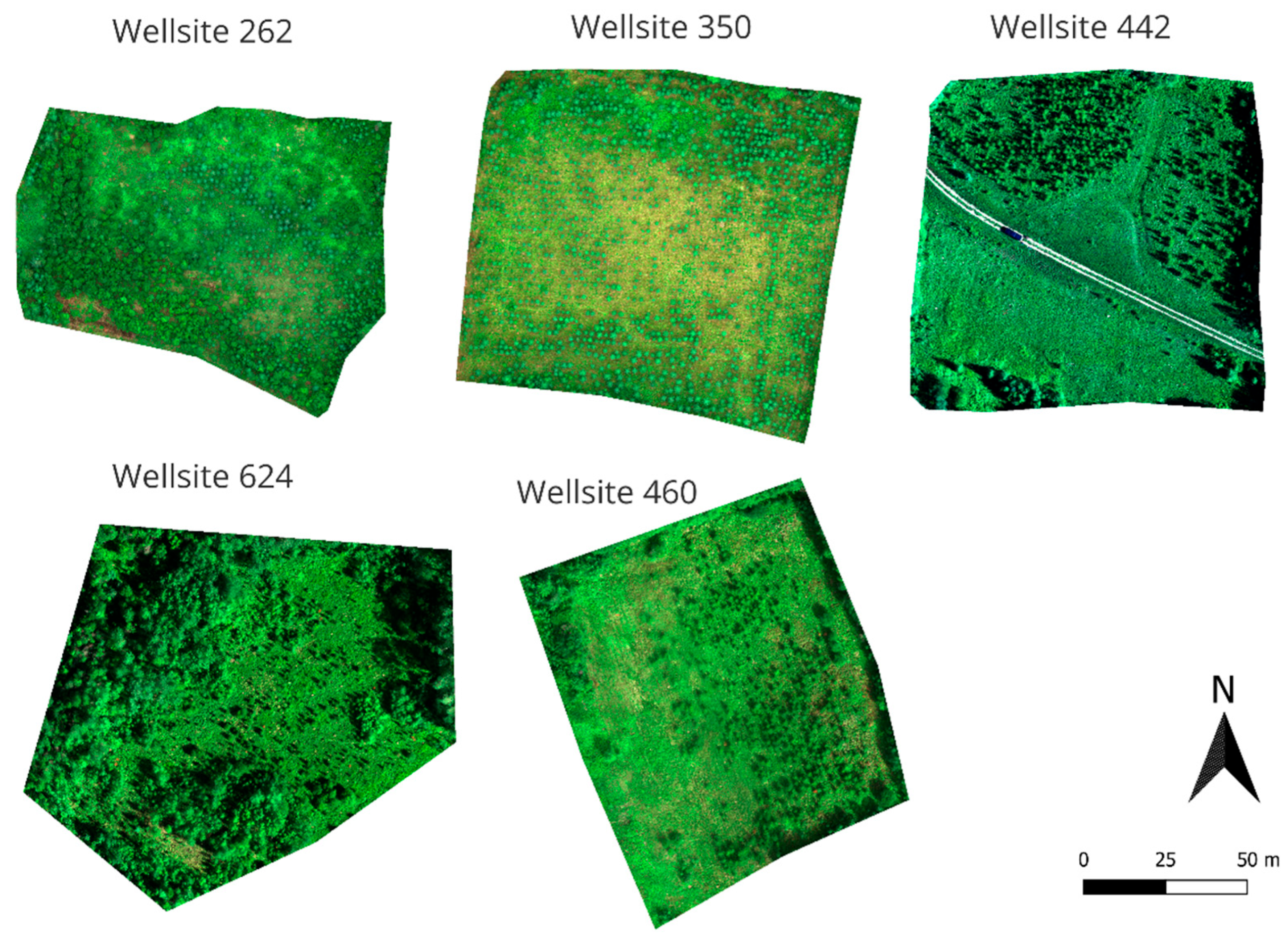

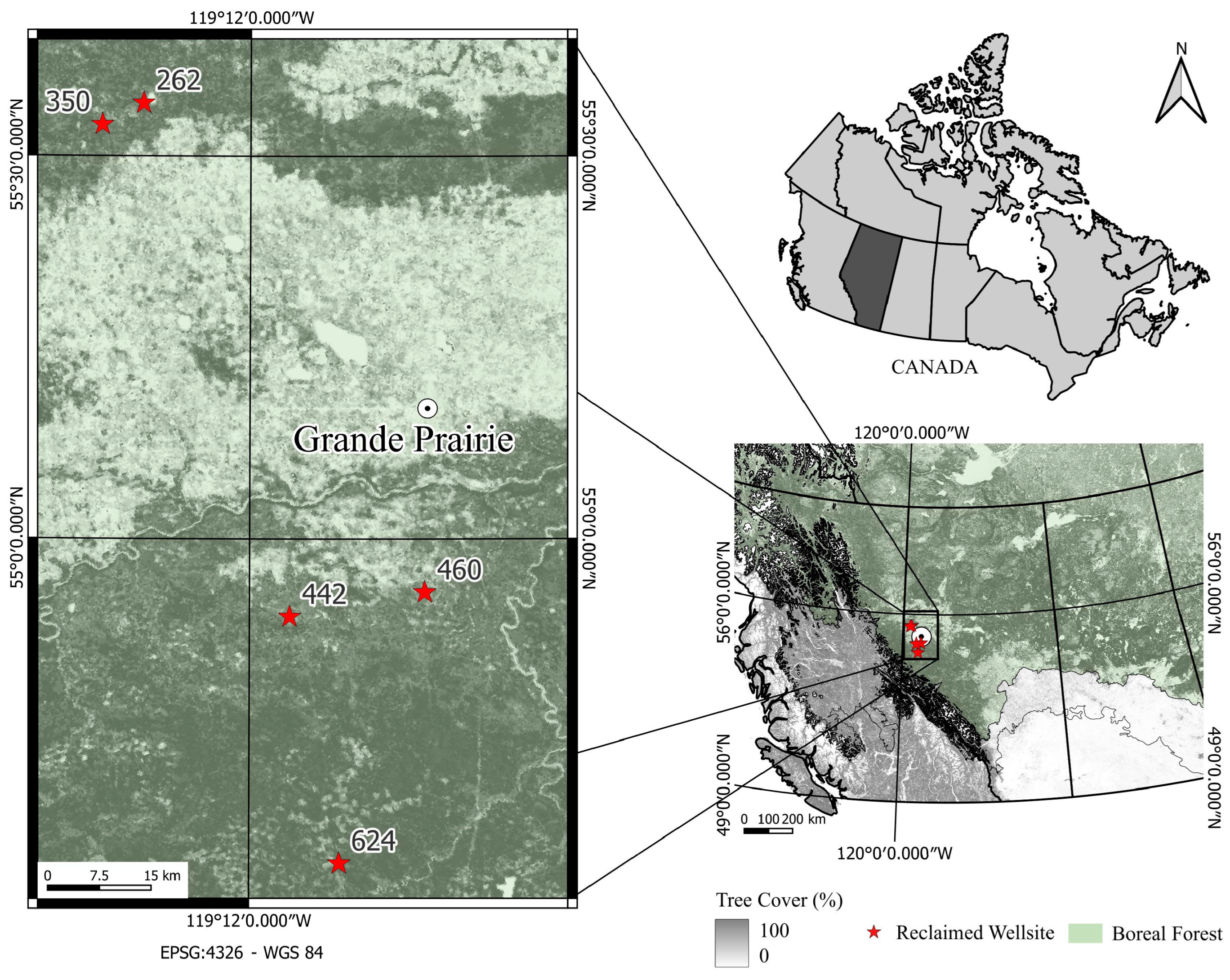

2.1. Study Area

2.2. Data Acquisition and Preparation

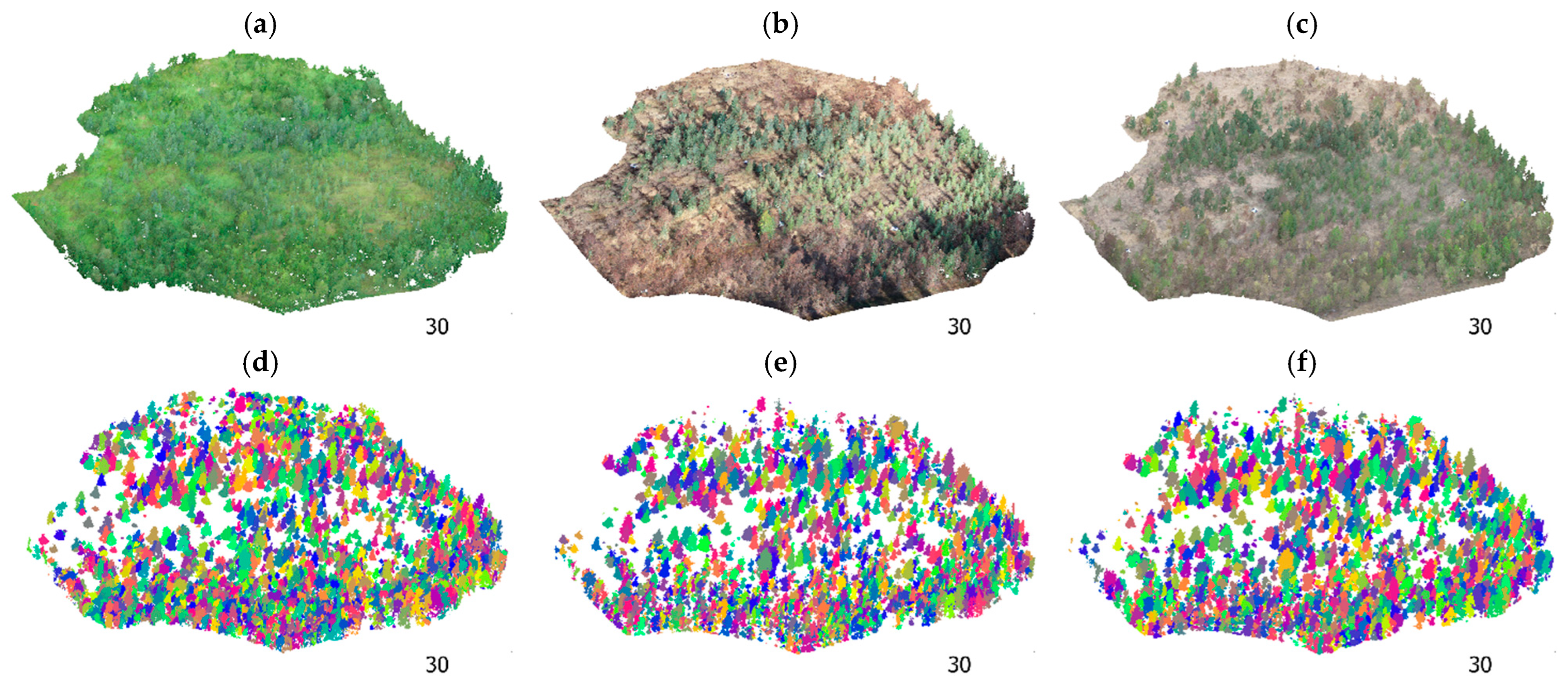

2.2.1. UAV-LiDAR Data

2.2.2. Aerial MS Data

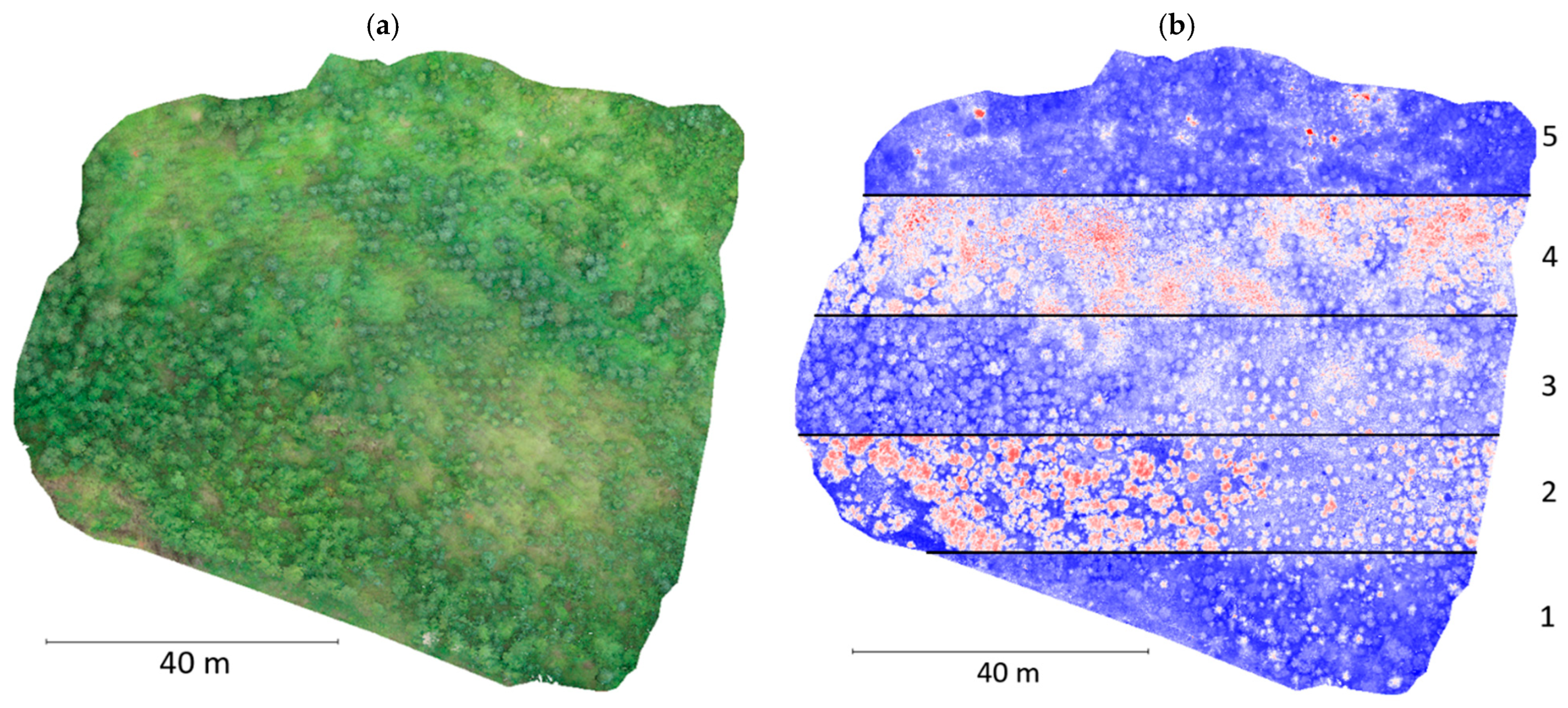

2.2.3. LiDAR and MS Data Fusion

2.2.4. Field Data for Model Validation

3. Methodology

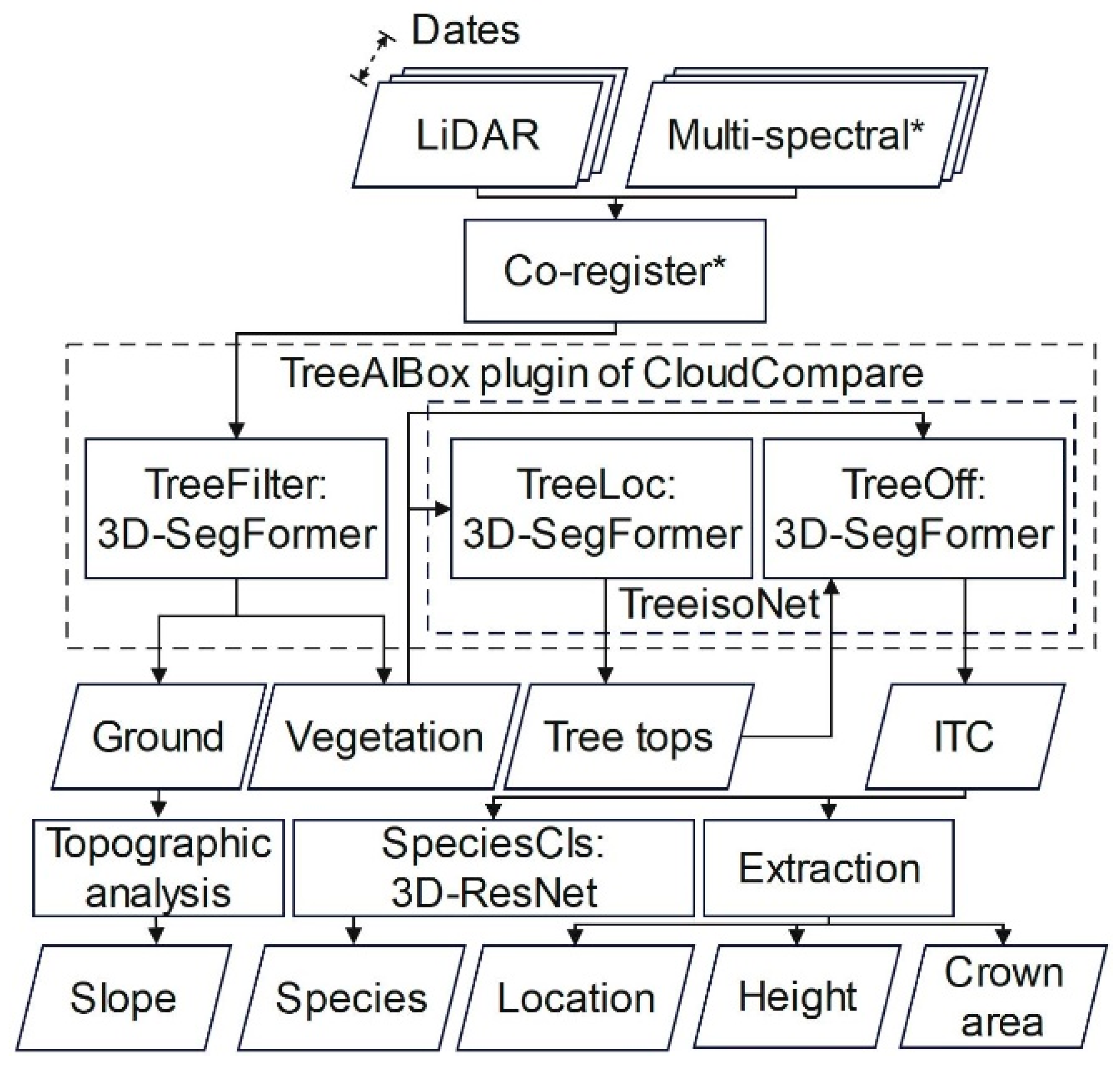

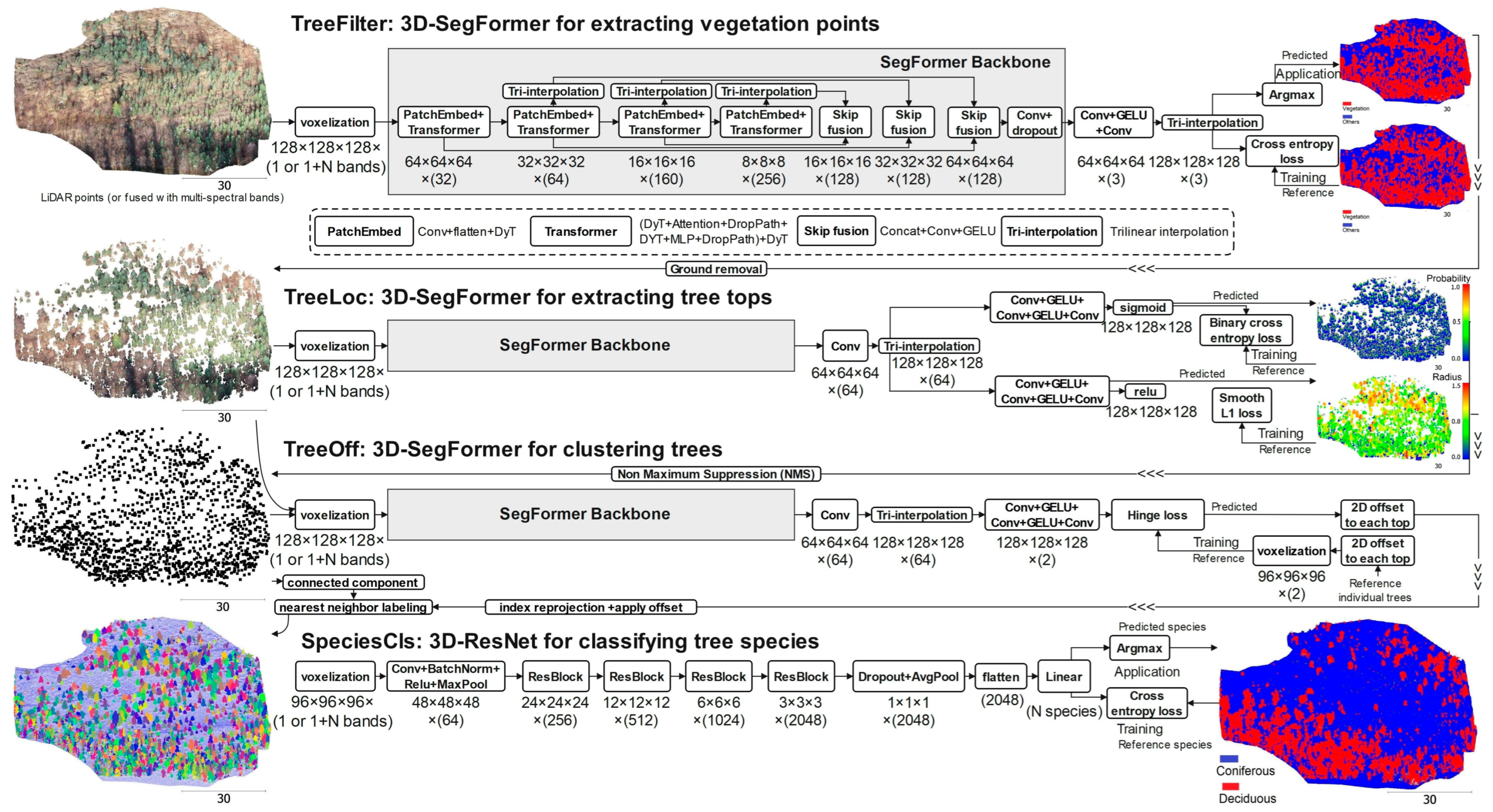

3.1. Workflow Description

3.2. Model Training and Accuracy Assessment

3.3. Model Evaluation and Application

4. Results

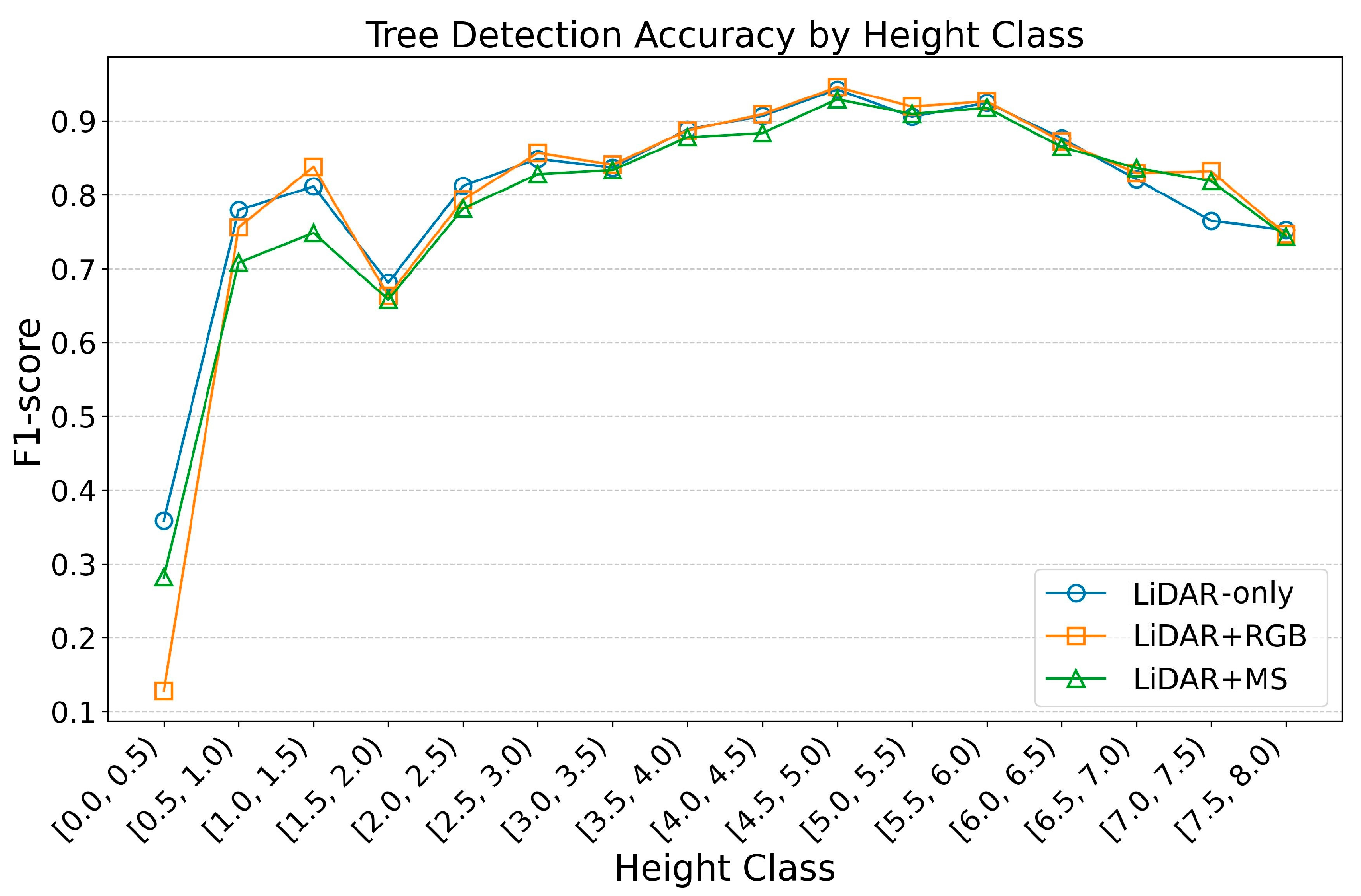

4.1. Effect of Additional Spectral Data on Model Accuracy

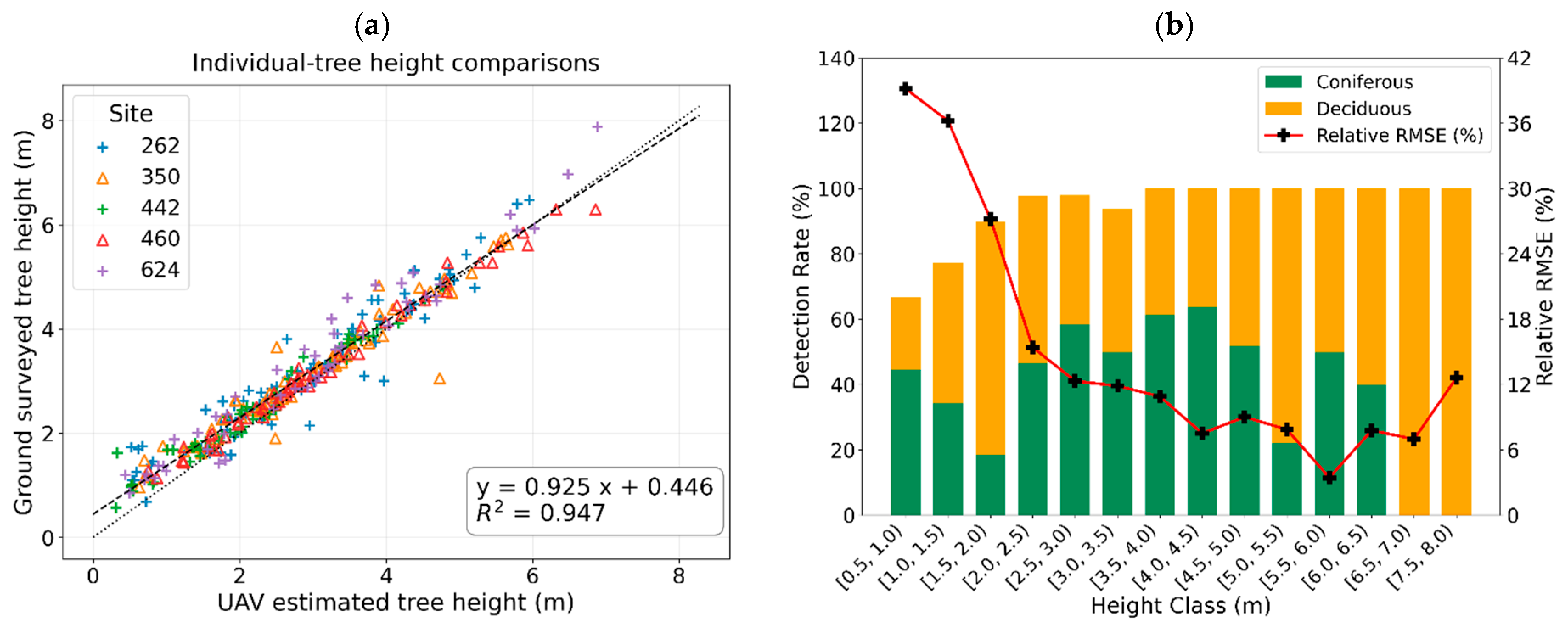

4.2. Validation of LiDAR-Derived Heights and Detection Rates with Reference Trees

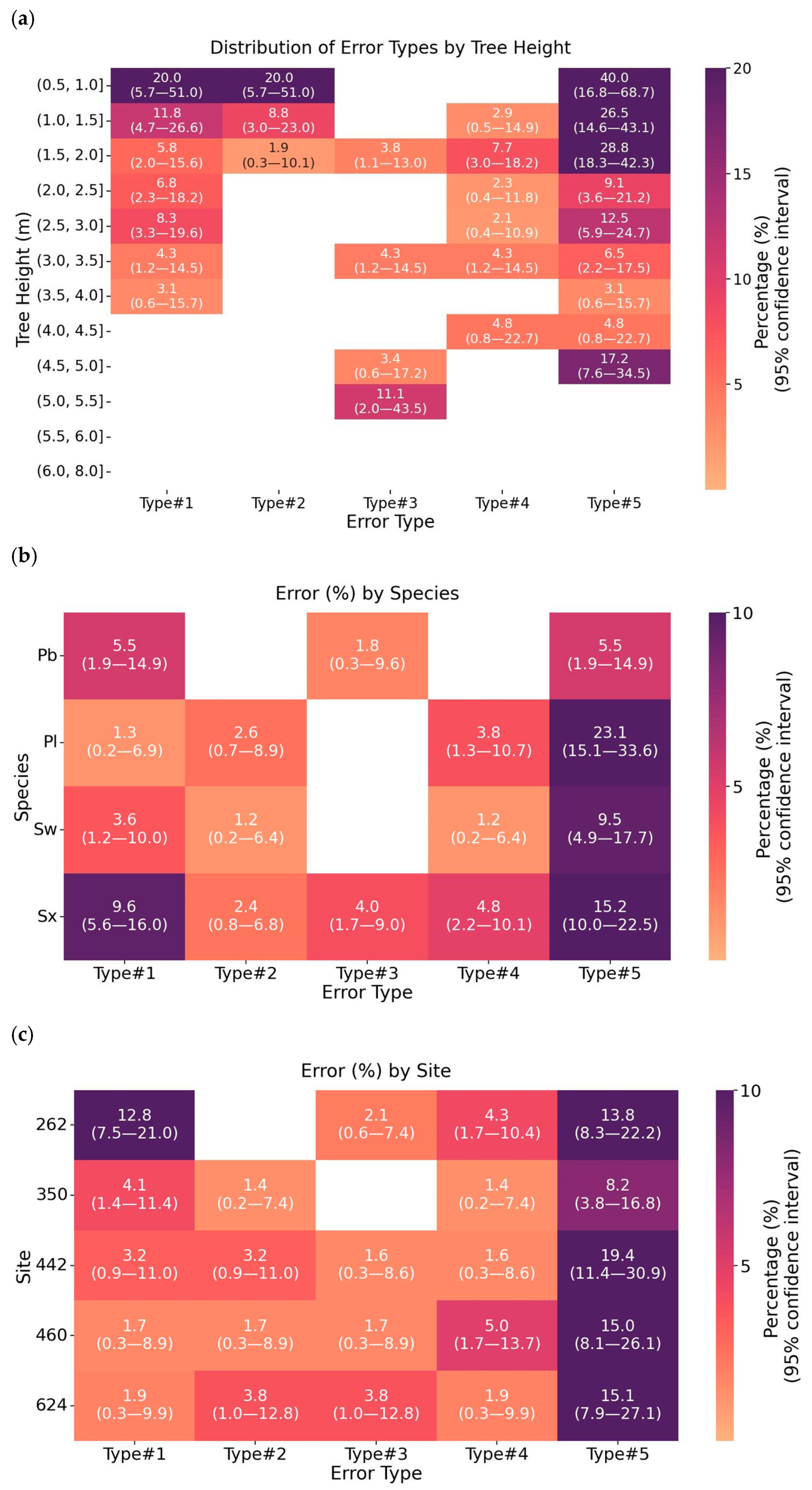

4.3. LiDAR Error Sources

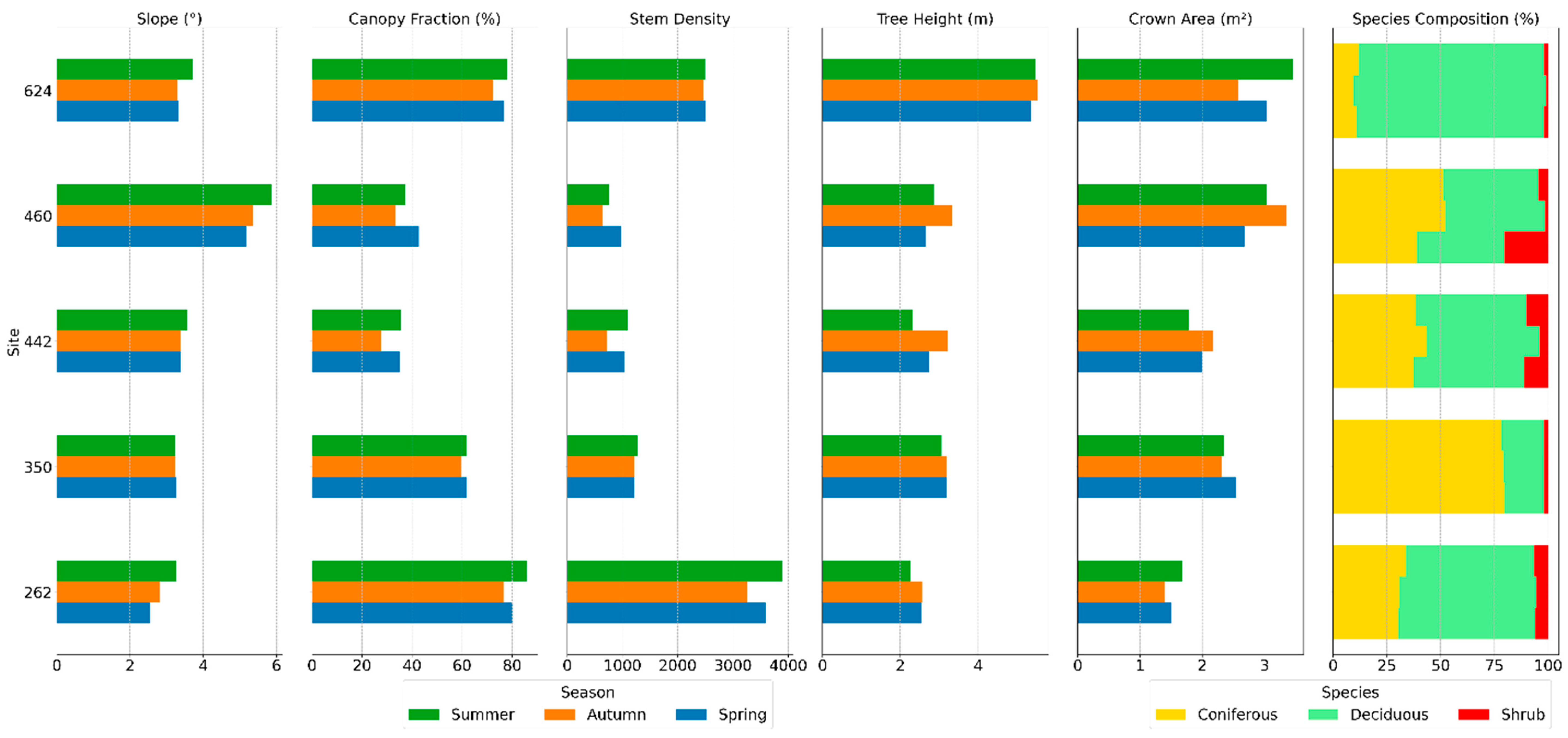

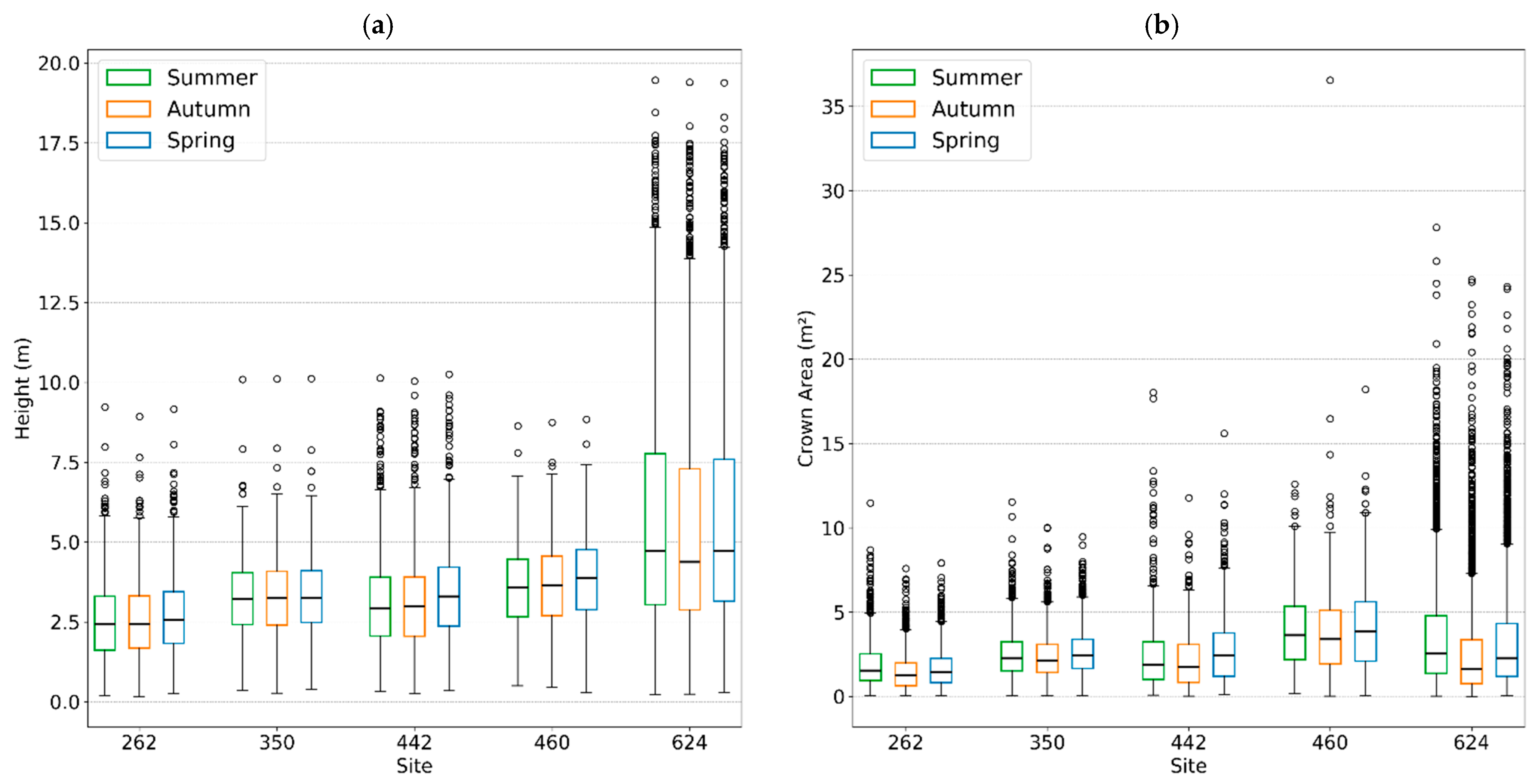

4.4. Capturing Seasonal Changes for Wellsite Monitoring

5. Discussion

5.1. Challenges in Short Tree Detection and Height Estimation

5.2. Impact of Spectral and Geometric Domains on ITD and Classification

5.3. Season Selection for UAV Survey of Trees on Wellsites

5.4. Study Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Uncrewed aerial vehicle |
| LiDAR | Light detection and ranging |
| MS | Multispectral |
| mIoU | Mean intersection-over-union |
| DL | Deep learning |
| ITD | Individual tree detection |
| NIR | Near-infrared |
| GCP | Ground control points |
| GNSS | Global navigation satellite system |
| GSD | Ground sample distance |
| PPP | Precise point positioning |
| DTM | Digital terrain model |
| DEM | Digital elevation model |

Appendix A

| Wellsite ID | Species | Mean Top Tree Height (SD), m | Year of Planting | Reference Trees Measured (n) |
|---|---|---|---|---|
| 262 | Sw, Pb, Sx | 4.07 (1.98) | 2007 | Sw (25), Pb (15), Sx (28) |
| 350 | Sw, Sx | 4.34 (1.06) | 2006 | Sw (28), Sx (17) |
| 442 | Pl, Sw, Sx | 2.91 (1.68) | 2009 | Pl (27), Sw (3), Sx (18) |
| 460 | Pl, Pb, Sx | 3.82 (1.23) | 2009 | Pl (25), Pb (20), Sx (6) |
| 624 | Pl, Pb, Sw, Sx | 4.79 (2.49) | 2009 | Pl (9), Pb (19), Sx (17) |
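Detection rates against field reference trees, such as those counted in the table above, require pairing each LiDAR-detected tree top with at most one reference tree. The matching rule used in the study is not recoverable from this text. The following minimal sketch assumes a greedy, shortest-distance-first match within a horizontal radius; the 1.0 m radius is an illustrative value, not taken from the source, and `match_trees` and `detection_rate` are hypothetical names.

```python
import math


def match_trees(detected, reference, max_dist=1.0):
    """Greedily pair detected tree tops with field reference trees (hypothetical helper).

    detected, reference: lists of (x, y) positions in the same map units.
    max_dist: largest horizontal distance accepted as a match (assumed value).
    Returns (detected_index, reference_index) pairs; each tree is used at most
    once, and the closest candidate pairs are accepted first.
    """
    candidates = []
    for i, (dx, dy) in enumerate(detected):
        for j, (rx, ry) in enumerate(reference):
            d = math.hypot(dx - rx, dy - ry)
            if d <= max_dist:
                candidates.append((d, i, j))
    candidates.sort()  # shortest distances first
    used_det, used_ref, pairs = set(), set(), []
    for _, i, j in candidates:
        if i not in used_det and j not in used_ref:
            used_det.add(i)
            used_ref.add(j)
            pairs.append((i, j))
    return pairs


def detection_rate(detected, reference, max_dist=1.0):
    """Share of reference trees that received a matching detection."""
    if not reference:
        raise ValueError("no reference trees")
    return len(match_trees(detected, reference, max_dist)) / len(reference)


# Illustrative coordinates only, not field data.
tops = [(0.0, 0.0), (5.0, 5.0), (10.0, 10.0)]
field = [(0.3, 0.4), (5.5, 5.0), (20.0, 20.0)]
print(match_trees(tops, field), detection_rate(tops, field))
```

Dividing the number of matches by the number of detections instead of reference trees gives precision; an optimal (Hungarian) assignment is a stricter alternative to the greedy rule shown here.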

| Step | Voxel Division; Voxel Resolution | Training Samples, Site ID (Date) | Testing Samples, Site ID (Date) |
|---|---|---|---|
| TreeFilter | 128 × 128 × 128; 15 × 15 × 15 cm | 10 scans, all sites (August/October 2023) | 5 scans, all sites (May 2024) |
| TreeLoc | 128 × 128 × 128; 20 × 20 × 20 cm | 19,030 trees, all sites (August/October 2023) | 10,175 trees, all sites (May 2024) |
| TreeOff | 128 × 128 × 128; 10 × 10 × 20 cm | 1000 trees, wellsite 262 (August 2023) | 297 trees, wellsite 262 (August 2023) |
| SpeciesCls | 96 × 96 × 96; 30 × 30 × 30 cm | 172 trees, matched trees from field sample | 74 trees, matched trees from field sample |
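Reading "Voxel Division" as the number of voxels per axis and "Resolution" as the voxel edge length (an interpretation; the table does not define the terms), each model input covers a fixed block: 128 × 0.15 m = 19.2 m per side for TreeFilter, 128 × 0.20 m = 25.6 m for TreeLoc, 12.8 m horizontally by 25.6 m vertically for TreeOff, and 96 × 0.30 m = 28.8 m for SpeciesCls. The following minimal sketch shows how points could be binned into such a grid. `voxelize` is a hypothetical helper, and the boolean occupancy encoding is an assumption; the models may store other per-voxel features.

```python
import numpy as np


def voxelize(points, division=(128, 128, 128), resolution=(0.15, 0.15, 0.15), origin=None):
    """Bin an (N, 3) point array (metres) into a boolean occupancy grid (hypothetical helper).

    division: number of voxels along x, y, z.
    resolution: voxel edge lengths in metres; may be anisotropic, e.g. (0.10, 0.10, 0.20).
    origin: lower corner of the block; defaults to the per-axis minimum of the points.
    Points outside the block are dropped.
    Returns (grid, idx): the occupancy grid and the integer voxel index of each kept point.
    """
    pts = np.asarray(points, dtype=float)
    div = np.asarray(division, dtype=int)
    res = np.asarray(resolution, dtype=float)
    org = pts.min(axis=0) if origin is None else np.asarray(origin, dtype=float)
    # Integer voxel coordinates of every point relative to the block origin.
    idx = np.floor((pts - org) / res).astype(int)
    inside = np.all((idx >= 0) & (idx < div), axis=1)
    idx = idx[inside]
    grid = np.zeros(tuple(div), dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid, idx


# Block extent implied by each configuration (division times resolution), in metres.
print(np.array([128, 128, 128]) * np.array([0.10, 0.10, 0.20]))
```

Anisotropic voxels, as in the TreeOff row, let the grid cover more height than width with the same number of cells.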

| Module (Process) | Metric | LiDAR Point Cloud Only * | LiDAR + RGB * | LiDAR + MS * |
|---|---|---|---|---|
| TreeFilter: Vegetation filtering | mIoU | 0.93 | 0.93 | 0.93 |
| TreeLoc: Tree top detection | Precision | 0.87 | 0.89 | 0.89 |
| | Recall | 0.79 | 0.77 | 0.78 |
| | F1 | 0.83 | 0.83 | 0.83 |
| TreeOff: Tree segmentation | mIoU | 0.83 | 0.83 | 0.84 |
| Species classification | mIoU | 0.78 | 0.84 | 0.88 |
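The scores in the table follow standard definitions, sketched below under the assumption that true positives have already been established by matching. F1 is the harmonic mean of precision and recall, so the reported values can be cross-checked: for LiDAR only, 2 × 0.87 × 0.79 / (0.87 + 0.79) ≈ 0.828, which rounds to 0.83. For mIoU, the sketch averages per-class IoU over the classes present in either labelling; how the study averaged classes is not stated in this text. All function names are hypothetical.

```python
import numpy as np


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(tp, fp, fn):
    """Detection scores from counts of true positives, false positives and false negatives."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return precision, recall, f1_score(precision, recall)


def mean_iou(y_true, y_pred, n_classes):
    """Mean intersection-over-union of per-point (or per-tree) class labels.

    Classes absent from both labellings are skipped (an assumed convention).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    ious = []
    for c in range(n_classes):
        t = y_true == c
        p = y_pred == c
        union = np.logical_or(t, p).sum()
        if union == 0:
            continue
        ious.append(np.logical_and(t, p).sum() / union)
    return float(np.mean(ious))


# Cross-check the rounded TreeLoc F1 values reported for the three inputs.
for prec, rec in [(0.87, 0.79), (0.89, 0.77), (0.89, 0.78)]:
    print(prec, rec, round(f1_score(prec, rec), 2))
```

Because the table reports rounded precision and recall, this check can only confirm F1 to two decimals.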

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Movchan, D.; Xi, Z.; Van Dongen, A.; Selvaraj, C.; Degenhardt, D. UAV-Based Wellsite Reclamation Monitoring Using Transformer-Based Deep Learning on Multi-Seasonal LiDAR and Multispectral Data. Remote Sens. 2025, 17, 3440. https://doi.org/10.3390/rs17203440

Movchan D, Xi Z, Van Dongen A, Selvaraj C, Degenhardt D. UAV-Based Wellsite Reclamation Monitoring Using Transformer-Based Deep Learning on Multi-Seasonal LiDAR and Multispectral Data. Remote Sensing. 2025; 17(20):3440. https://doi.org/10.3390/rs17203440

Chicago/Turabian Style: Movchan, Dmytro, Zhouxin Xi, Angeline Van Dongen, Charumitha Selvaraj, and Dani Degenhardt. 2025. "UAV-Based Wellsite Reclamation Monitoring Using Transformer-Based Deep Learning on Multi-Seasonal LiDAR and Multispectral Data" Remote Sensing 17, no. 20: 3440. https://doi.org/10.3390/rs17203440

APA Style: Movchan, D., Xi, Z., Van Dongen, A., Selvaraj, C., & Degenhardt, D. (2025). UAV-Based Wellsite Reclamation Monitoring Using Transformer-Based Deep Learning on Multi-Seasonal LiDAR and Multispectral Data. Remote Sensing, 17(20), 3440. https://doi.org/10.3390/rs17203440