Assessment of the Effectiveness of Spectral Indices Derived from EnMAP Hyperspectral Imageries Using Machine Learning and Deep Learning Models for Winter Wheat Yield Prediction

Abstract

Highlights

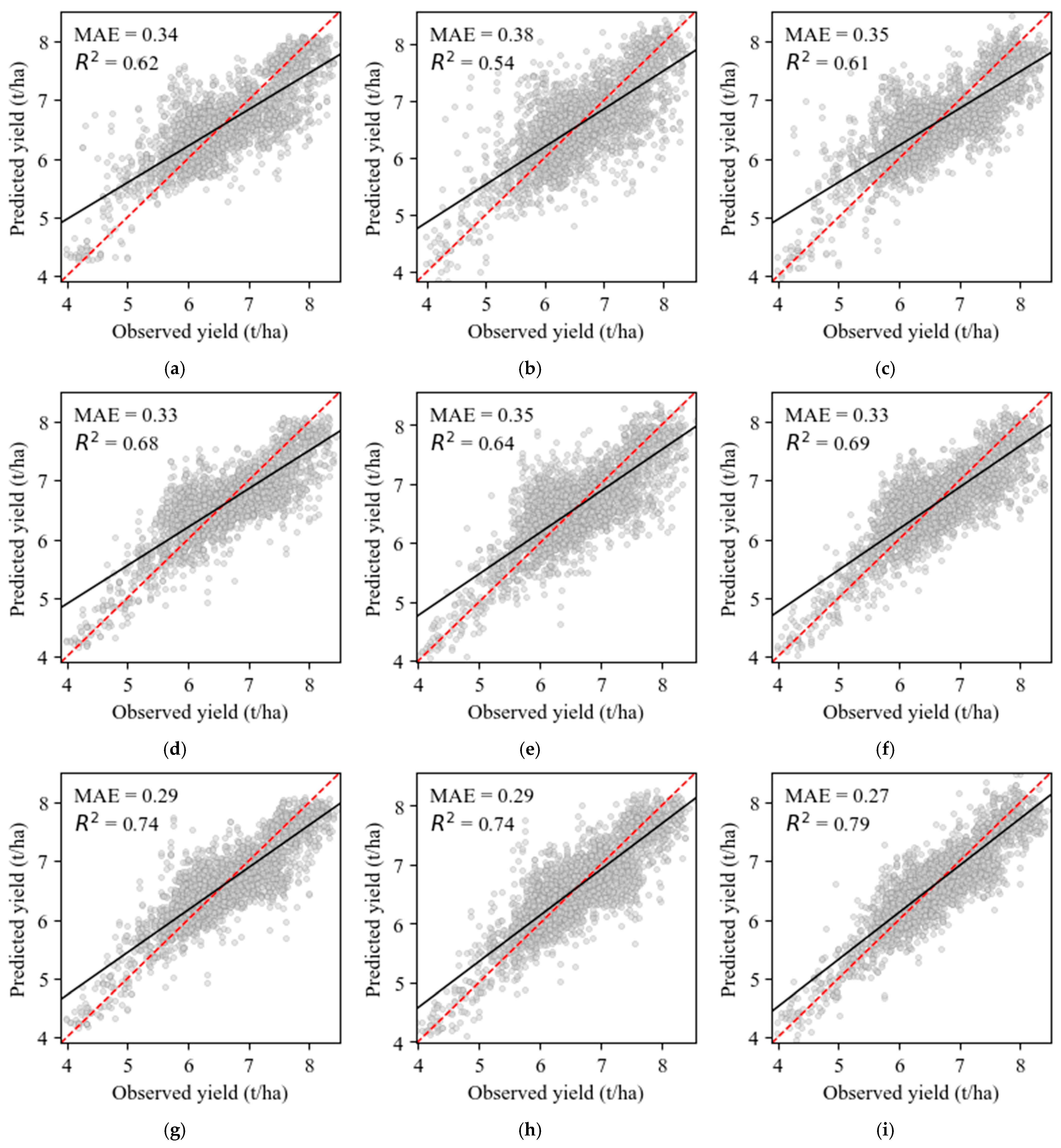

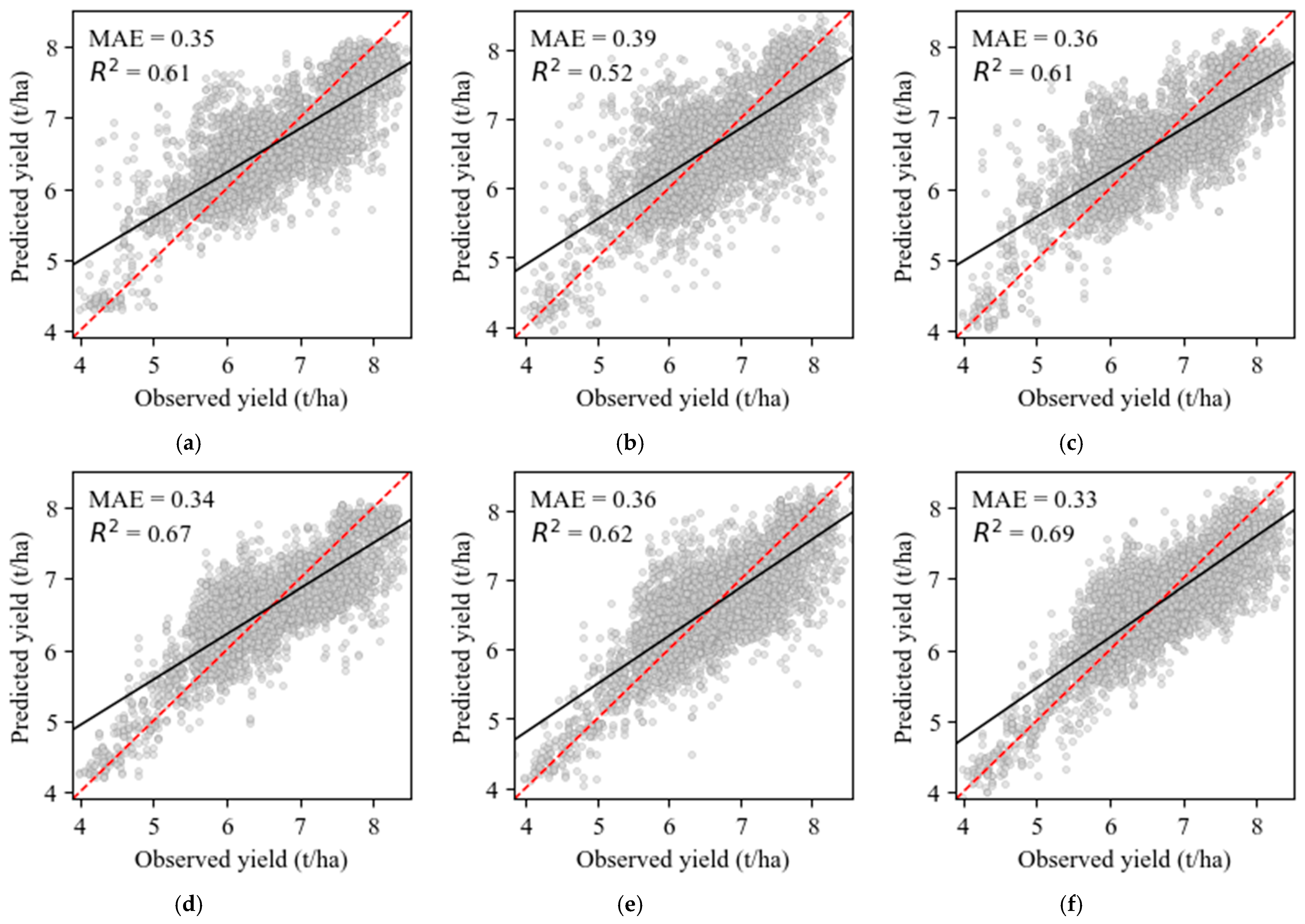

- Multi-temporal EnMAP hyperspectral data combined with machine learning and deep learning models significantly improved the accuracy of winter wheat yield prediction (R² up to 0.79).

- SWIR indices were particularly important for early-season estimation, whereas VNIR indices became dominant during later growth stages.

- Integrating hyperspectral observations across phenological stages enables more robust and reliable yield forecasts for precision agriculture.

- Future missions, such as ESA’s CHIME, will further enhance large-scale, operational crop yield monitoring by providing frequent, high-resolution hyperspectral data.

1. Introduction

2. Materials and Methods

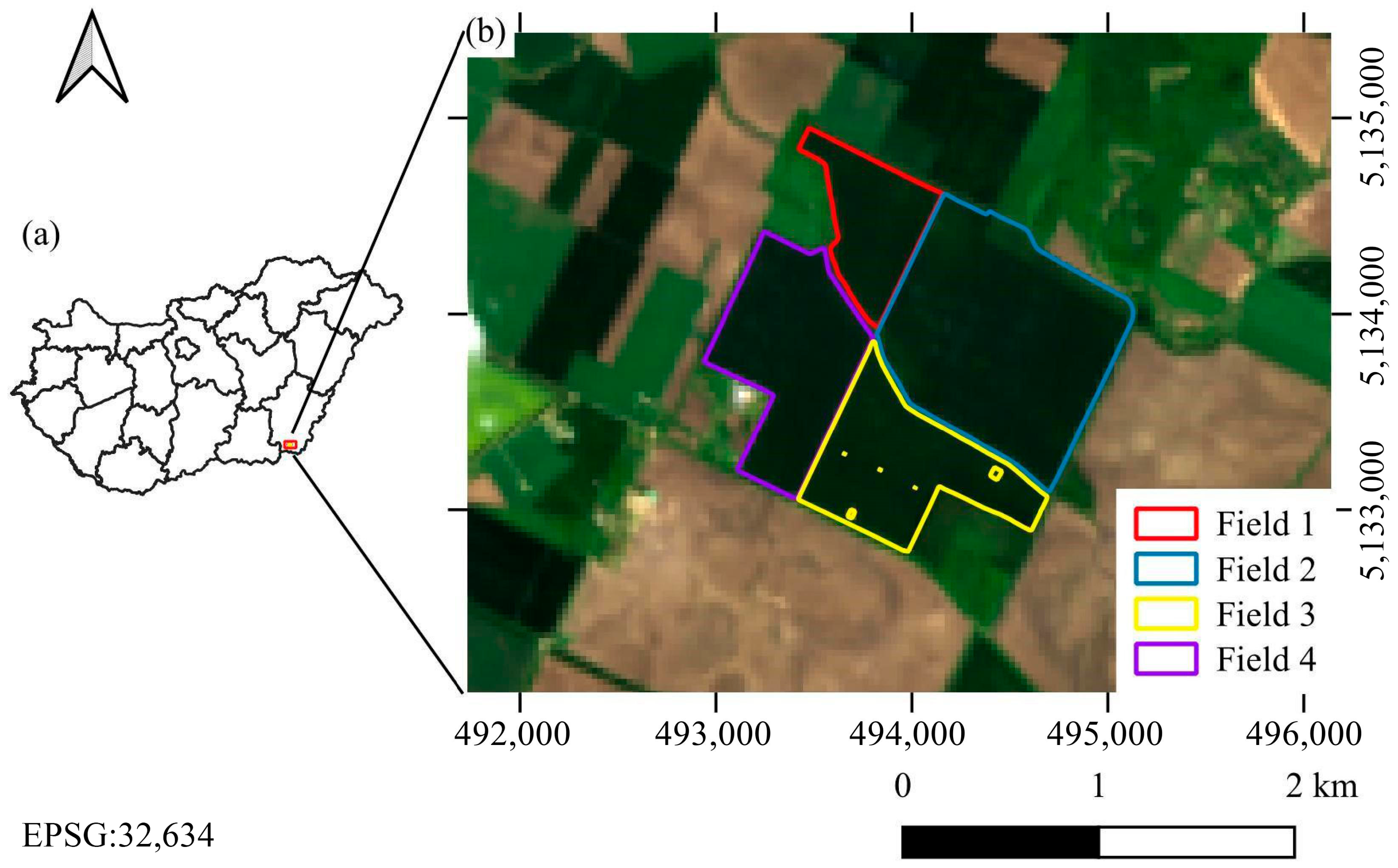

2.1. Study Area

2.2. Cultivar Characteristics

2.3. Study Period and Phenological Context

2.3.1. Agro–Climatic Conditions During the 2023 Growing Season

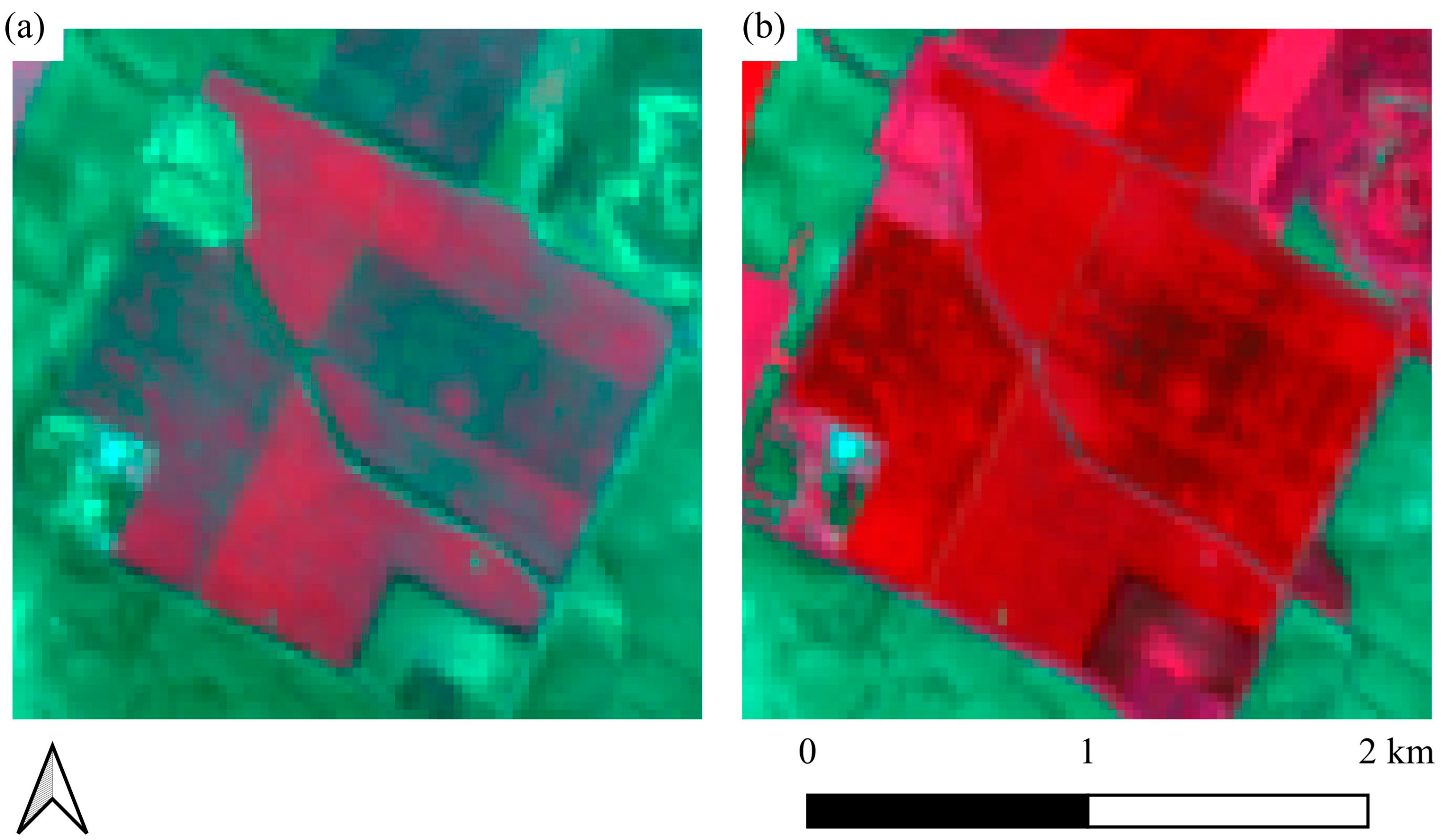

2.3.2. Phenological Development and Spectral Characteristics in 2023

2.4. Preprocessing of the Study Fields and Yield Data

Hyperspectral Dataset

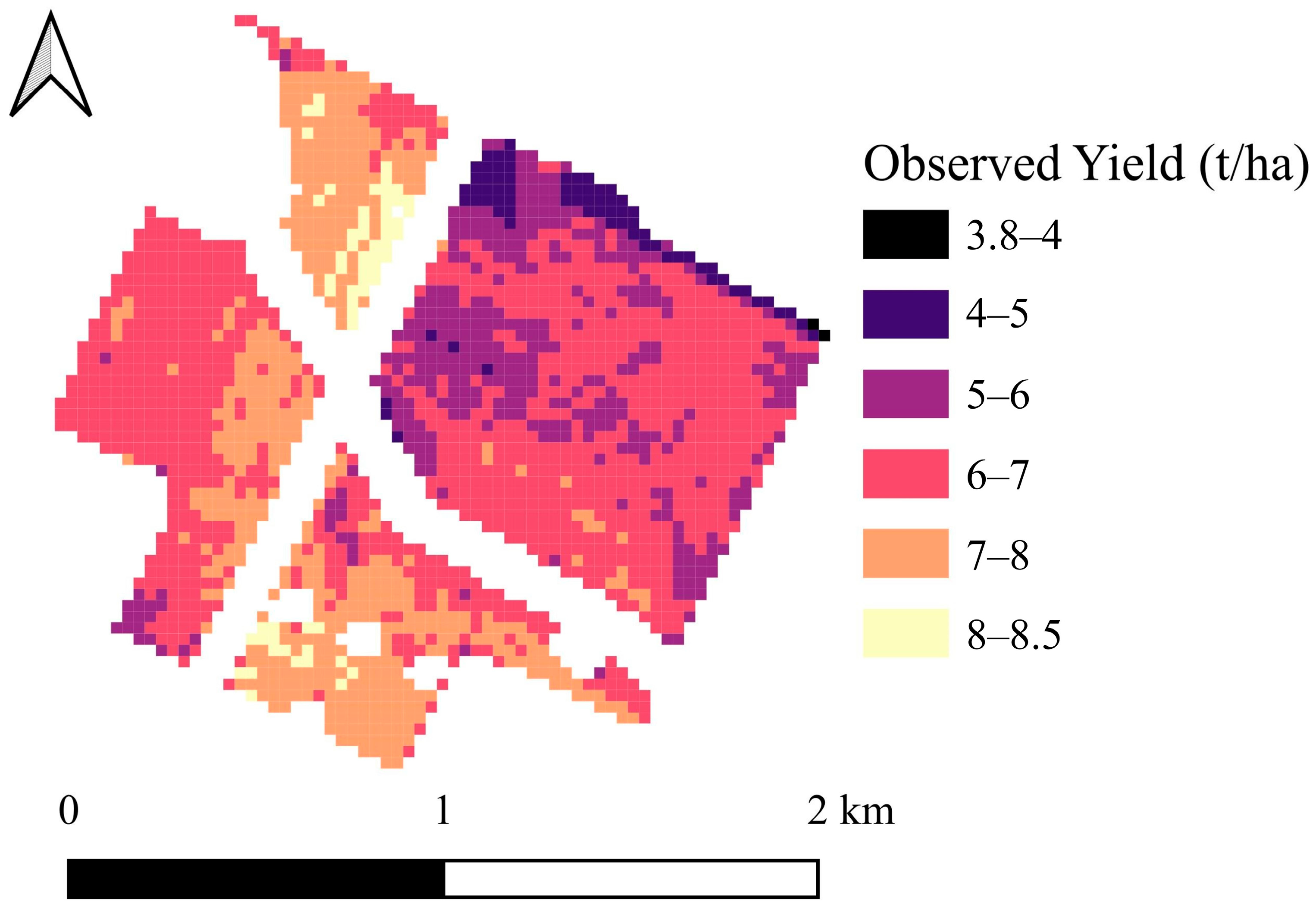

2.5. Yield Data

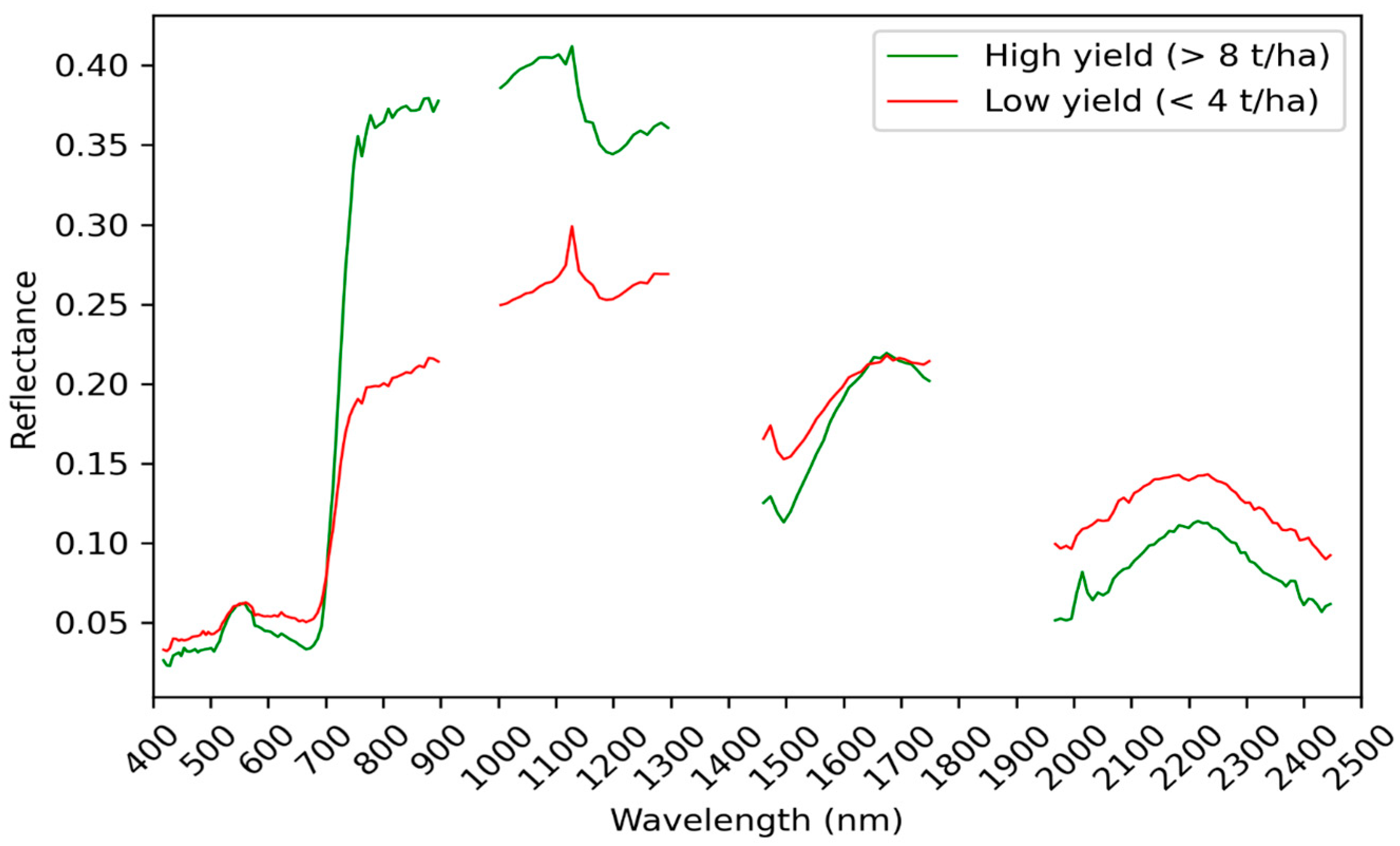

2.6. Spectral Characteristics of Yield Extremes

2.7. Calculation of Vegetation Indices

2.8. Model Training

2.9. Validation

2.10. Feature Importance

3. Results

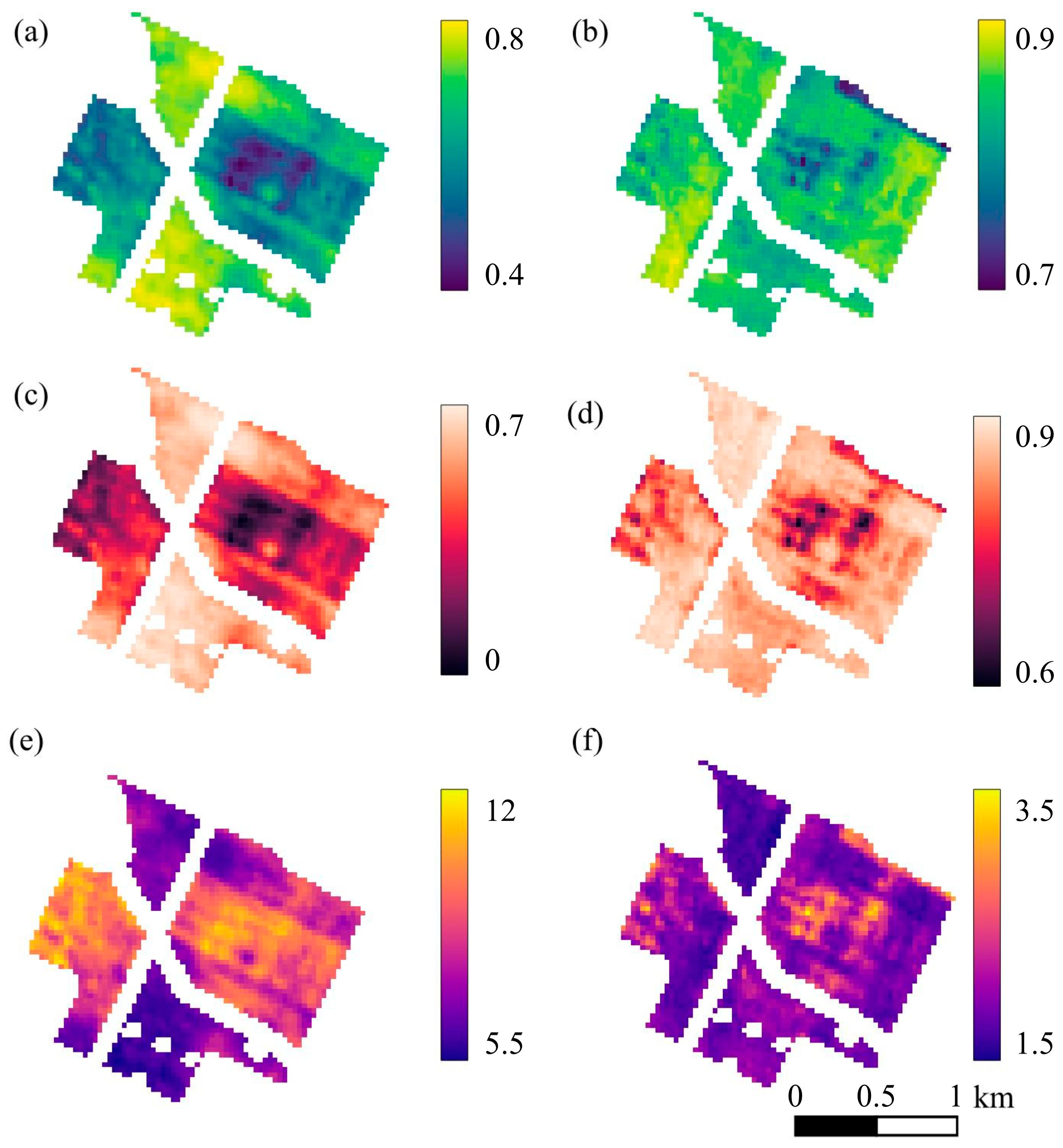

3.1. Spectral Predictor Variables and Feature Importance

3.2. Yield Prediction Validation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARI | Anthocyanin Reflectance Index |
| CHIME | Copernicus Hyperspectral Imaging Mission for the Environment |
| CNN | Convolutional Neural Network |
| CRI2 | Carotenoid Reflectance Index 2 |
| DEM | Digital Elevation Model |
| ESA | European Space Agency |
| EVI | Enhanced Vegetation Index |
| EVI2 | Two-band Enhanced Vegetation Index |
| GB | Gradient Boosting |
| gNDVI | Green Normalized Difference Vegetation Index |
| GVMI | Global Vegetation Moisture Index |
| hNDVI | Hyperspectral NDVI |
| LAI | Leaf Area Index |
| MAE | Mean Absolute Error |
| MLP | Multilayer Perceptron |
| MSI | Moisture Stress Index |
| NDVI | Normalized Difference Vegetation Index |
| NDWI | Normalized Difference Water Index |
| NIR | Near-Infrared |
| R² | Coefficient of Determination |
| RF | Random Forest |
| RFR | Random Forest Regression |
| SAR | Synthetic Aperture Radar |
| SWIR | Shortwave Infrared |
| SWIRVI | Shortwave Infrared Vegetation Index |
| TCARI | Transformed Chlorophyll Absorption in Reflectance Index |
| TVI | Transformed Vegetation Index |
| VIS | Visible |
| VNIR | Visible and Near-Infrared |

| Index Name | Main Category | Formula |
|---|---|---|
| Anthocyanin Reflectance Index (ARI1) | Carotenoids and anthocyanins | (1/550 nm) − (1/700 nm) |
| Carotenoid Reflectance Index 2 (CRI2) | Carotenoid content/plant stress | (1/510 nm) − (1/700 nm) |
| Green Normalized Difference Vegetation Index (gNDVI) | Vegetation chlorophyll content | (800 nm − 550 nm)/(800 nm + 550 nm) |
| Hyperspectral NDVI (hNDVI) | Chlorophyll content and structure | (750 nm − 670 nm)/(750 nm + 670 nm) (with dynamically selected wavelengths) |
| Transformed Chlorophyll Absorption in Reflectance Index (TCARI) | Chlorophyll content | 3 × [(700 nm − 670 nm) − 0.2 × (700 nm − 550 nm) × (700 nm/670 nm)] |
| Transformed Vegetation Index (TVI) | Vegetation vigor | √[(800 nm − 550 nm)/(800 nm + 550 nm) + 0.5] |
| Two-band Enhanced Vegetation Index (EVI2) | Vegetation vigor | 2.5 × (800 nm − 650 nm)/(800 nm + 2.4 × 650 nm + 1) |

| Index Name | Main Category | Formula |
|---|---|---|
| Global Vegetation Moisture Index (GVMI) | Vegetation moisture content | ((860 nm + 0.1) − (1240 nm + 0.02))/((860 nm + 0.1) + (1240 nm + 0.02)) |
| Moisture Stress Index (MSI) | Leaf water content | 1600 nm/820 nm |
| Normalized Difference Water Index (NDWI) | Vegetation water content | (860 nm − 1240 nm)/(860 nm + 1240 nm) |
| Shortwave Infrared Vegetation Index (SWIRVI) | Dry matter/biomass | 37.27 × (2210 nm + 2090 nm) + 26.2 × (2208 nm − 2090 nm) − 0.57 |
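As a rough illustration of how the index formulas in the tables above could be evaluated on a hyperspectral cube, the following Python sketch reads each "λ nm" term as the reflectance of the band whose centre wavelength is closest to λ. The helper names `band` and `spectral_indices`, the `(rows, cols, bands)` array layout, and the nearest-band rule are assumptions made for this example and are not taken from the article's processing chain. Only a subset of the listed indices is shown.

```python
import numpy as np


def band(cube, wavelengths, target_nm):
    """Return the reflectance layer whose centre wavelength is nearest target_nm.

    cube has shape (rows, cols, bands); wavelengths has one entry per band (nm).
    """
    idx = int(np.argmin(np.abs(np.asarray(wavelengths) - target_nm)))
    return cube[..., idx]


def spectral_indices(cube, wavelengths):
    """Compute a subset of the tabulated VNIR and SWIR indices per pixel."""
    def r(nm):
        return band(cube, wavelengths, nm)

    return {
        "ARI1": 1.0 / r(550) - 1.0 / r(700),
        "CRI2": 1.0 / r(510) - 1.0 / r(700),
        "hNDVI": (r(750) - r(670)) / (r(750) + r(670)),
        "TVI": np.sqrt((r(800) - r(550)) / (r(800) + r(550)) + 0.5),
        "GVMI": ((r(860) + 0.1) - (r(1240) + 0.02))
        / ((r(860) + 0.1) + (r(1240) + 0.02)),
        "MSI": r(1600) / r(820),
        "NDWI": (r(860) - r(1240)) / (r(860) + r(1240)),
    }
```

Each returned entry is a two-dimensional array with one value per pixel, so the indices can be averaged per field or stacked as predictor variables. Pixels with zero reflectance in a denominator band would need masking before use.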

| Model | Parameter | Value/Type |
|---|---|---|
| Random Forest (RF) | Number of trees | 500 |
| | Maximum depth | 15 |
| | Minimum leaf size | 5 |
| | Sampling strategy | Bagging |
| | Random state | 42 |
| Gradient Boosting (GB) | Number of trees | 500 |
| | Maximum depth | 10 |
| | Minimum leaf size | 10 |
| | Learning rate | 0.3 |
| | Regularization (λ) | 1 |
| | Boosting strategy | Sequential residual correction |
| | Random state | 42 |
| Multilayer Perceptron (MLP) | Hidden layers | (128, 64, 32) neurons |
| | Activation function | ReLU |
| | Optimizer | Adam |
| | Learning rate | 0.001 |
| | Regularization (α, L2) | 0.001 |
| | Loss function | Mean squared error |
| | Maximum iterations | 500 |
| | Random state | 42 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mucsi, L.; Litkey-Kovács, D.; Bonus, K.; Farmonov, N.; Elgendy, A.; Aji, L.; Sóti, M. Assessment of the Effectiveness of Spectral Indices Derived from EnMAP Hyperspectral Imageries Using Machine Learning and Deep Learning Models for Winter Wheat Yield Prediction. Remote Sens. 2025, 17, 3426. https://doi.org/10.3390/rs17203426
