Assessing Pacific Madrone Blight with UAS Remote Sensing Under Different Skylight Conditions

Abstract

1. Introduction

1.1. Pacific Madrones

1.2. Foliar Blight

1.3. UAS Trends and Research Gaps of Previous Disease Monitoring Studies

1.4. Prior Studies on Blight Detection in Pacific Madrones

1.5. Prior Studies on Variable Illumination Conditions and UAS Imagery

1.6. Prior Studies on Crown Delineation Using UAS Imagery

1.7. Objective

2. Materials and Methods

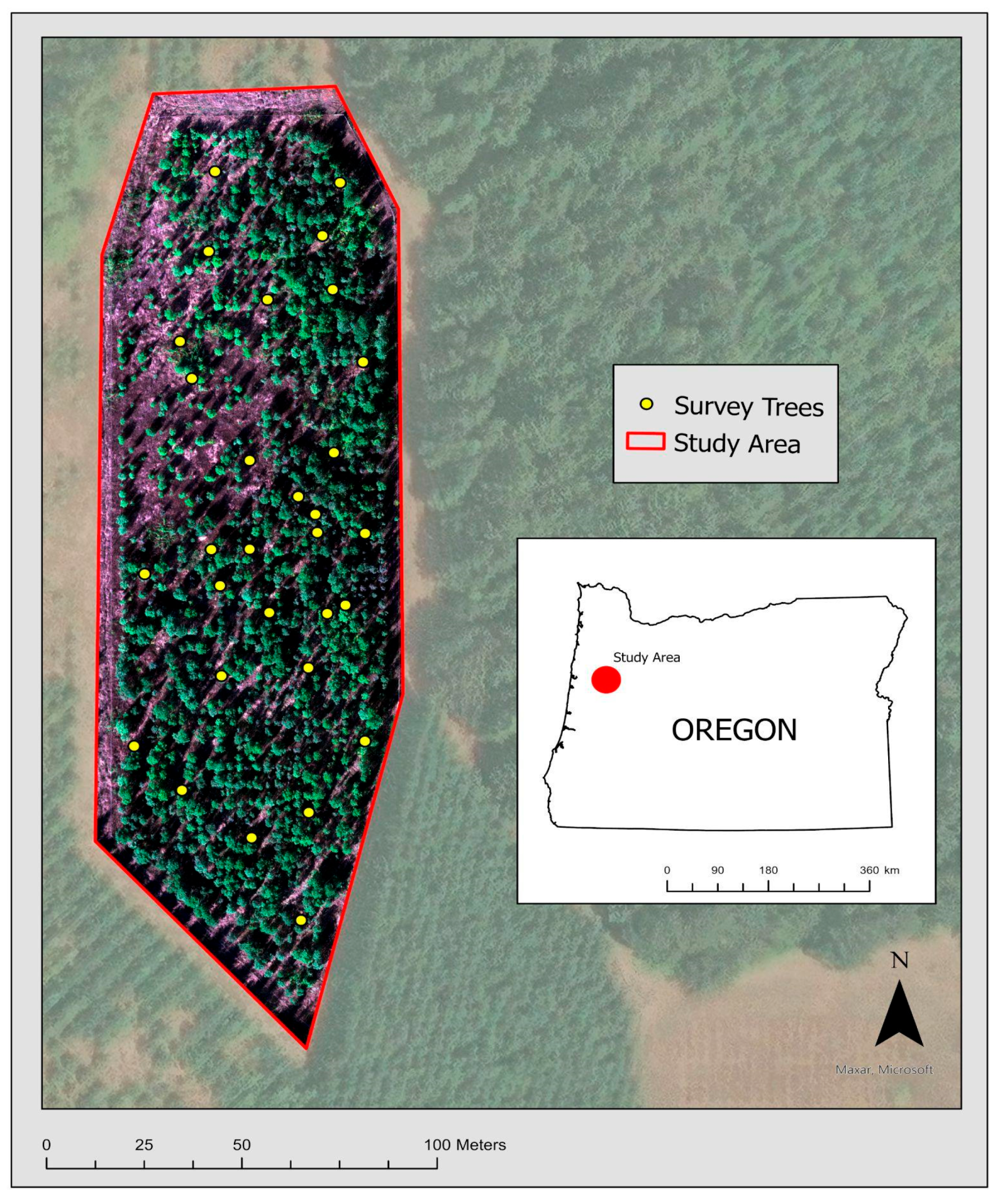

2.1. Site Data and Acquisition

2.2. Ground Survey

2.3. UAS Survey

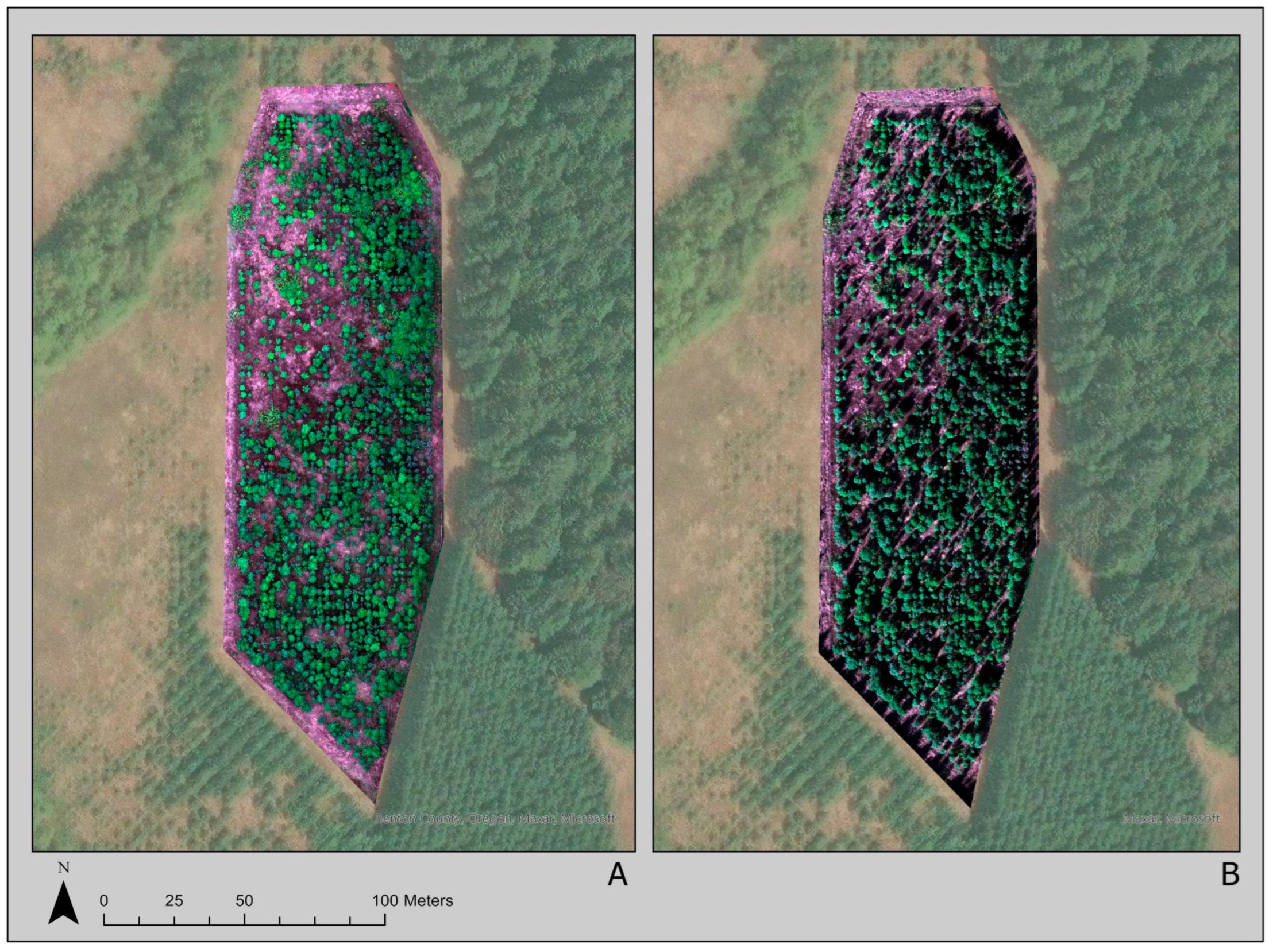

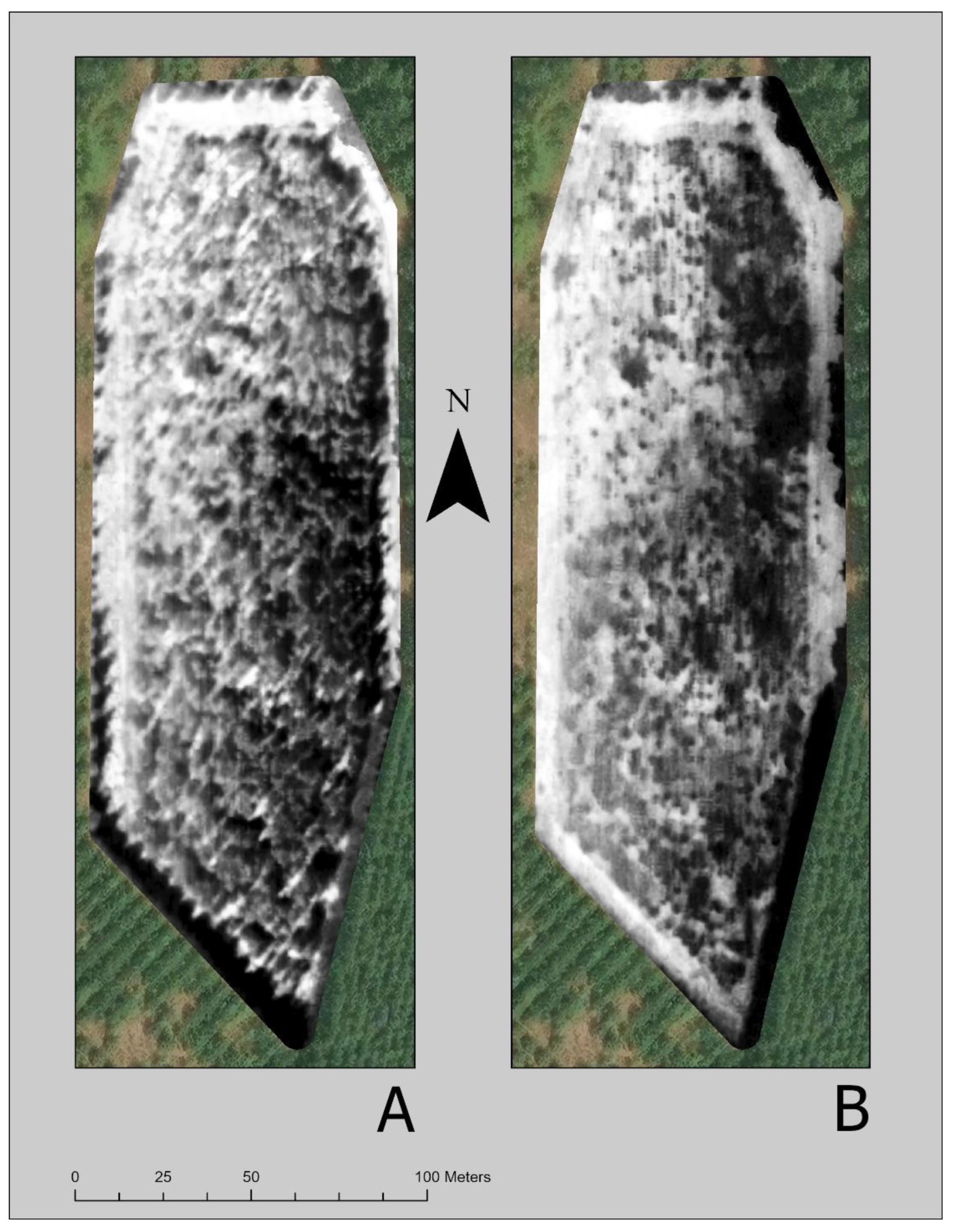

2.4. Image Processing in Metashape

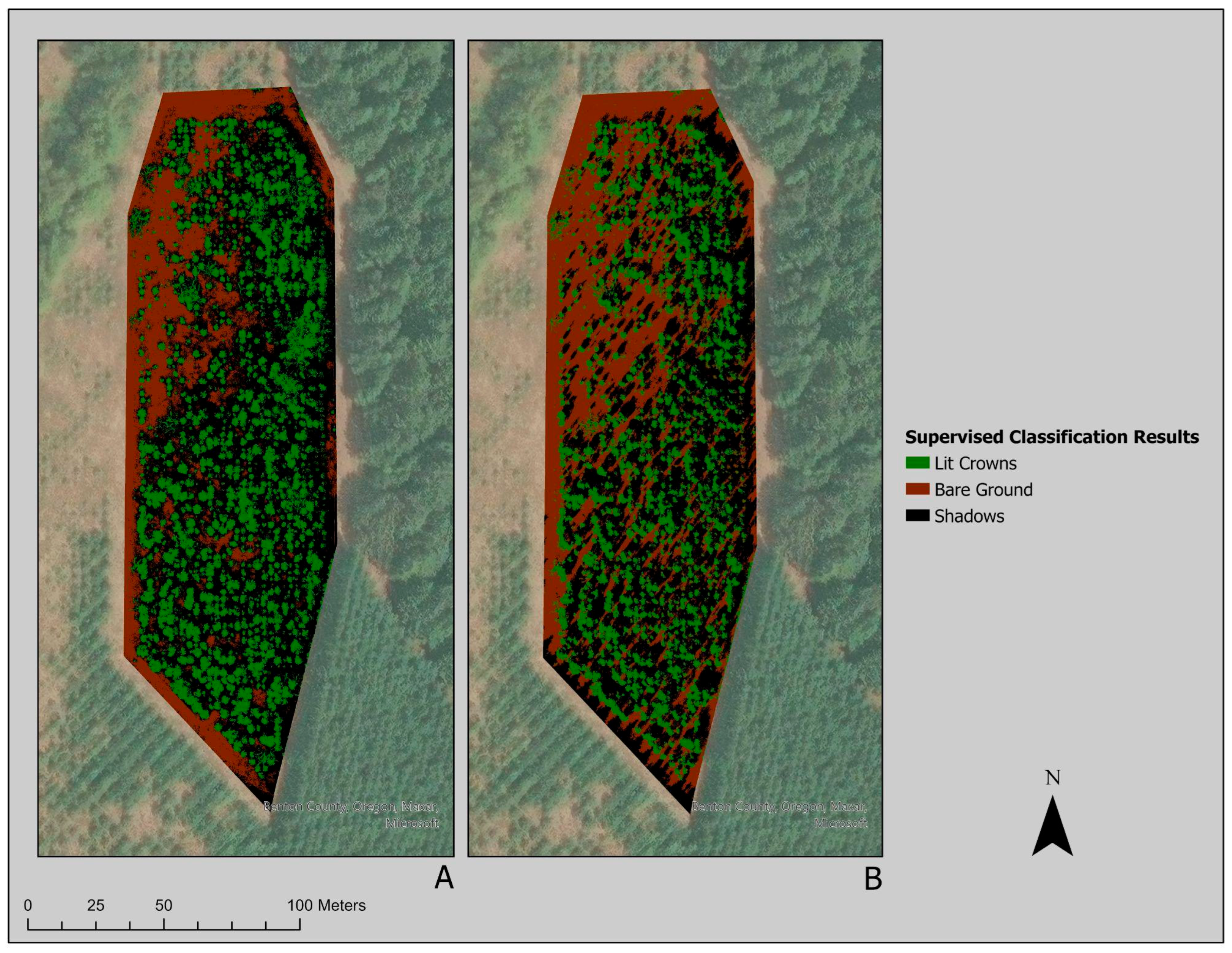

2.5. Supervised Classification

Classifying and Extracting Sunny and Cloudy Crowns for Entire Pacific Madrone Plot Using Support Vector Machines

- library(corrplot)

- M1 <- cor(crn_stats_su[, c("B", "G", "R", "RE", "NIR", "LWIR", "TGI", "GRVI", "NDVI", "NDRE", "GNDVI", "MSR", "MSRE", "GCI", "RECI")])

- corrplot(M1, method = "number")

- M2 <- cor(crn_stats_cl[, c("B", "G", "R", "RE", "NIR", "LWIR", "TGI", "GRVI", "NDVI", "NDRE", "GNDVI", "MSR", "MSRE", "GCI", "RECI")])

- corrplot(M2, method = "number")

- b <- cor(M1, M2)

- corrplot(b, addCoef.col = "green", tl.cex = 1.2, tl.col = "black", method = "color")

2.6. Analysis of Survey Trees Using Spectral Variables of Interest

- t.test(cl_d$B, su_d$B, paired = TRUE)

- t.test(cl_d$G, su_d$G, paired = TRUE)

- t.test(cl_d$R, su_d$R, paired = TRUE)

- survB <- lm(survey_condition_test$B[1:29] ~ survey_condition_test$B[30:58], data = survey_condition_test)

- summary(survB)
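The two checks listed above, a paired t-test per band and a simple regression of one sky condition against the other, can be sketched on simulated data. This is a minimal illustration under stated assumptions, not the study's script or data: the values in `su_d` and `cl_d` are randomly generated, the offset and noise level are arbitrary, and only the 29-crown sample size is taken from the section headings.

```r
# Hypothetical stand-in for the survey-crown statistics: 29 crowns measured
# under sunny (su_d) and cloudy (cl_d) skies. All values are simulated.
set.seed(42)
n_crowns <- 29
su_d <- data.frame(B = runif(n_crowns, min = 0.02, max = 0.06))
cl_d <- data.frame(B = su_d$B - 0.01 + rnorm(n_crowns, sd = 0.003))

# Paired t-test: is the mean per-crown difference (cloudy - sunny) zero?
tt <- t.test(cl_d$B, su_d$B, paired = TRUE)
mean_diff <- unname(tt$estimate)

# Simple linear regression of cloudy on sunny values for the same crowns.
paired_values <- data.frame(cloudy = cl_d$B, sunny = su_d$B)
fit_summary <- summary(lm(cloudy ~ sunny, data = paired_values))

# One row per band, with the quantities the results tables report.
result <- data.frame(
  band = "B",
  mean_difference = mean_diff,
  p_value = tt$p.value,
  adj_r2 = fit_summary$adj.r.squared,
  rse = fit_summary$sigma
)
print(result)
```

Keeping the two conditions in separate named columns is a design choice of this sketch. It avoids relying on row positions such as `[1:29]` and `[30:58]` to tell the conditions apart.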

Blight Index Modeling of the Survey Tree Crowns Across Sunny and Cloudy Conditions

- sunny_data$blight_0a[sunny_data$Blight_0 < 25] <- 1

- sunny_data$blight_0a[sunny_data$Blight_0 >= 25 & sunny_data$Blight_0 <= 50] <- 2

- sunny_data$blight_0a[sunny_data$Blight_0 > 50 & sunny_data$Blight_0 <= 75] <- 3

- sunny_data$blight_0a[sunny_data$Blight_0 > 75] <- 4

- …

- sunny_data$blight_ind <- 0 * sunny_data$blight_0a + 1 * sunny_data$blight_0_25a + 5 * sunny_data$blight_25_50a + 25 * sunny_data$blight_50a

- model1 <- summary(lm(blight_ind ~ G + RE + Height + MSRE + NDRE + GNDVI + GCI + RECI, data = survey_condition_test))

- model1

- model2 <- summary(lm(blight_ind ~ B + R + Height + LWIR + NDRE + MSRE + RECI, data = survey_condition_test))

- model2

- model3 <- summary(lm(blight_ind ~ B + R + Height + NIR + LWIR + TGI + NDVI + GRVI + MSR, data = survey_condition_test))

- model3

- model4 <- summary(lm(blight_ind ~ R + B + Height + GCI + NIR + NDVI + MSR + GNDVI, data = survey_condition_test))

- model4

- model5 <- summary(lm(blight_ind ~ R + B + Height + GCI + NIR + MSR + GNDVI, data = survey_condition_test))

- model5

- model5
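The scoring and modeling steps in the listing above can be sketched end to end as follows. This is an illustrative sketch only. The raw column names `Blight_0_25`, `Blight_25_50`, and `Blight_50` are assumed by analogy with `Blight_0`, because the listing elides that step. The listing also does not say what the four percentage columns measure, so they are treated here simply as values between 0 and 100. The helper names `score_class` and `blight_index` are hypothetical, and all data are simulated.

```r
# Map a percentage to the 1-4 class score used in the listing:
# < 25 -> 1, 25 to 50 -> 2, > 50 to 75 -> 3, > 75 -> 4.
score_class <- function(pct) {
  ifelse(pct < 25, 1L, ifelse(pct <= 50, 2L, ifelse(pct <= 75, 3L, 4L)))
}

# Weighted sum of the four class scores with the 0 / 1 / 5 / 25 weights.
blight_index <- function(s0, s0_25, s25_50, s50) {
  0 * s0 + 1 * s0_25 + 5 * s25_50 + 25 * s50
}

# Simulated crowns (hypothetical values, not survey data).
set.seed(1)
n <- 29
trees <- data.frame(
  Blight_0     = runif(n, 0, 100),
  Blight_0_25  = runif(n, 0, 100),  # assumed column name
  Blight_25_50 = runif(n, 0, 100),  # assumed column name
  Blight_50    = runif(n, 0, 100),  # assumed column name
  Height       = runif(n, 2, 8),
  NDRE         = runif(n, 0.1, 0.5),
  GNDVI        = runif(n, 0.3, 0.8)
)

trees$blight_ind <- blight_index(
  score_class(trees$Blight_0),
  score_class(trees$Blight_0_25),
  score_class(trees$Blight_25_50),
  score_class(trees$Blight_50)
)

# Fit two candidate predictor sets and compare adjusted R^2 and RSE.
candidates <- list(
  m_a = blight_ind ~ Height + NDRE + GNDVI,
  m_b = blight_ind ~ Height + NDRE
)
comparison <- do.call(rbind, lapply(names(candidates), function(nm) {
  s <- summary(lm(candidates[[nm]], data = trees))
  data.frame(model = nm, adj_r2 = s$adj.r.squared, rse = s$sigma)
}))
print(comparison)
```

Because the simulated predictors are unrelated to the index, the adjusted R² values here carry no meaning. The sketch only shows the mechanics of scoring, weighting, and comparing candidate models.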

3. Results

3.1. Results of Supervised Classification of Entire Pacific Madrone Plot Using Support Vector Machines

3.2. Supervised Classification Accuracy Assessment

3.3. Results of Linear Regression of 29 Survey Tree Crowns

3.4. Results of Paired T-Tests of 29 Survey Tree Crowns

3.5. Results of Blight Index Modeling Using Multiple Linear Regression

4. Discussion

4.1. Contribution to Literature

4.2. Flight Timing

4.3. Connection to Madrone Health

5. Conclusions

5.1. Key Findings

5.2. Future Directions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Immel, D.L. Plant Guide: Pacific Madrone; USDA, NRCS: Washington, DC, USA, 2006. Available online: https://plants.usda.gov/DocumentLibrary/plantguide/pdf/cs_arme.pdf (accessed on 11 November 2024).

2. Bennett, M.; Shaw, D.C. Diseases and Insect Pests of Pacific Madrone; Oregon State University, Extension Service: Corvallis, OR, USA, 2008.

3. Elliott, M. The Decline of Pacific Madrone (Arbutus menziesii Pursh) in Urban and Natural Environments: Its Causes and Management. Ph.D. Thesis, University of Washington, Seattle, WA, USA, 1999.

4. Duarte, A.; Borralho, N.; Cabral, P.; Caetano, M. Recent advances in forest insect pests and diseases monitoring using UAV-based data: A systematic review. Forests 2022, 13, 911.

5. Barker, M.I.; Burnett, J.D.; Haddad, T.; Hirsch, W.; Kang, D.K.; Wing, M.G. Multi-temporal Pacific madrone leaf blight assessment with unoccupied aircraft systems. Ann. For. Res. 2023, 66, 171–181.

6. Wing, M.G.; Barker, M. Applying unoccupied aircraft system multispectral remote sensing to examine blight in a Pacific madrone orchard. Int. J. For. Hortic. 2024, 10, 20–30.

7. de Souza, R.; Buchhart, C.; Heil, K.; Plass, J.; Padilla, F.M.; Schmidhalter, U. Effect of time of day and sky conditions on different vegetation indices calculated from active and passive sensors and images taken from UAV. Remote Sens. 2021, 13, 1691.

8. Hakala, T.; Honkavaara, E.; Saari, H.; Mäkynen, J.; Kaivosoja, J.; Pesonen, L.; Pölönen, I. Spectral imaging from UAVs under varying illumination conditions. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 189–194.

9. Slade, G.; Anderson, K.; Graham, H.A.; Cunliffe, A.M. Repeated drone photogrammetry surveys demonstrate that reconstructed canopy heights are sensitive to wind speed but relatively insensitive to illumination conditions. Int. J. Remote Sens. 2025, 46, 24–41.

10. Arroyo-Mora, J.P.; Kalacska, M.; Løke, T.; Schläpfer, D.; Coops, N.C.; Lucanus, O.; Leblanc, G. Assessing the impact of illumination on UAV pushbroom hyperspectral imagery collected under various cloud cover conditions. Remote Sens. Environ. 2021, 258, 112396.

11. Guerra-Hernández, J.; González-Ferreiro, E.; Monleón, V.J.; Faias, S.P.; Tomé, M.; Díaz-Varela, R.A. Use of multi-temporal UAV-derived imagery for estimating individual tree growth in Pinus pinea stands. Forests 2017, 8, 300.

12. Gu, J.; Grybas, H.; Congalton, R.G. A comparison of forest tree crown delineation from unmanned aerial imagery using canopy height models vs. spectral lightness. Forests 2020, 11, 605.

13. Gu, J.; Grybas, H.; Congalton, R.G. Individual tree crown delineation from UAS imagery based on region growing and growth space considerations. Remote Sens. 2020, 12, 2363.

14. Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry remote sensing from unmanned aerial vehicles: A review focusing on the data, processing and potentialities. Remote Sens. 2020, 12, 1046.

15. PRISM Climate Group, Oregon State University. Available online: https://prism.oregonstate.edu (accessed on 5 December 2024).

16. DeWald, L.E.; Elliott, M.; Sniezko, R.A.; Chastagner, G.A. Geographic and local variation in Pacific madrone (Arbutus menziesii) leaf blight. In Proceedings of the 6th International Workshop on the Genetics of Tree-Parasite Interactions: Tree Resistance to Insects and Diseases: Putting Promise into Practice, Mt. Sterling, OH, USA, 5–10 August 2018.

17. Nesbit, P.R.; Hugenholtz, C.H. Enhancing UAV–SFM 3D model accuracy in high-relief landscapes by incorporating oblique images. Remote Sens. 2019, 11, 239.

18. Hostens, D.S.; Dogwiler, T.; Hess, J.W.; Pavlowsky, R.T.; Bendix, J.; Martin, D.T. Assessing the role of sUAS mission design in the accuracy of digital surface models derived from structure-from-motion photogrammetry. In sUAS Applications in Geography; Springer: Cham, Switzerland, 2022; pp. 123–156.

19. Agisoft. Agisoft Metashape User Manual; Agisoft: St. Petersburg, Russia, 2024; Available online: https://www.agisoft.com/downloads/user-manuals/ (accessed on 11 November 2024).

20. MicaSense, Inc. What is the Center Wavelength and Bandwidth of Each Filter for MicaSense Sensors? MicaSense, Inc.: Seattle, WA, USA, 2024; Available online: https://support.micasense.com/hc/en-us/articles/214878778-What-is-the-center-wavelength-and-bandwidth-of-each-filter-for-MicaSense-sensors (accessed on 27 August 2025).

21. Hunt, E.R., Jr.; Daughtry, C.S.; Eitel, J.U.; Long, D.S. Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 2011, 103, 1090–1099.

22. Hunt, E.R., Jr.; Doraiswamy, P.C.; McMurtrey, J.E.; Daughtry, C.S.; Perry, E.M.; Akhmedov, B. A visible band index for remote sensing leaf chlorophyll content at the canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 103–112.

23. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

24. Rouse, J.W., Jr.; Haas, R.H.; Deering, D.W.; Schell, J.A.; Harlan, J.C. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; Final Report, Type III for the Period September 1972–November 1974; Texas A&M University: Remote Sensing Center: College Station, TX, USA, 1974.

25. Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.U.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of crop water stress, nitrogen status and canopy density using ground-based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16 July 2000; Volume 1619. No. 6.

26. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

27. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

28. Cao, Q.; Miao, Y.; Wang, H.; Huang, S.; Cheng, S.; Khosla, R.; Jiang, R. Non-destructive estimation of rice plant nitrogen status with Crop Circle multispectral active canopy sensor. Field Crops Res. 2013, 154, 133–144.

29. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

30. Luo, H.; Quaas, J.; Han, Y. Diurnally asymmetric cloud cover trends amplify greenhouse warming. Sci. Adv. 2024, 10, eado5179.

31. Xiao, D.; Pan, Y.; Feng, J.; Yin, J.; Liu, Y.; He, L. Remote sensing detection algorithm for apple fire blight based on UAV multispectral image. Comput. Electron. Agric. 2022, 199, 107137.

32. Mol, W.; Heusinkveld, B.; Mangan, M.R.; Hartogensis, O.; Veerman, M.; van Heerwaarden, C. Observed patterns of surface solar irradiance under cloudy and clear-sky conditions. Q. J. R. Meteorol. Soc. 2024, 150, 2338–2363.

33. Wang, S.; Baum, A.; Zarco-Tejada, P.J.; Dam-Hansen, C.; Thorseth, A.; Bauer-Gottwein, P.; Bandini, F.; Garcia, M. Unmanned Aerial System multispectral mapping for low and variable solar irradiance conditions: Potential of tensor decomposition. ISPRS J. Photogramm. Remote Sens. 2019, 155, 58–71.

34. Ahlawat, V.; Jhorar, O.; Kumar, L.; Backhouse, D. Using hyperspectral remote sensing as a tool for early detection of leaf rust in blueberries. In Proceedings of the International Symposium on Remote Sensing of Environment—The GEOSS Era: Towards Operational Environmental Monitoring, Sydney, Australia, 10–15 April 2011.

35. Gorretta, N.; Nouri, M.; Herrero, A.; Gowen, A.; Roger, J.M. Early detection of the fungal disease "apple scab" using SWIR hyperspectral imaging. In Proceedings of the 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019; pp. 1–4.

36. Stark, B.; McGee, M.; Chen, Y. Short wave infrared (SWIR) imaging systems using small Unmanned Aerial Systems (sUAS). In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 495–501.

37. Ji, L.; Zhang, L.; Wylie, B.K.; Rover, J. On the terminology of the spectral vegetation index (NIR − SWIR)/(NIR + SWIR). Int. J. Remote Sens. 2011, 32, 6901–6909.

38. Marques, P.; Pádua, L.; Adão, T.; Hruška, J.; Peres, E.; Sousa, A.; Sousa, J.J. UAV-based automatic detection and monitoring of chestnut trees. Remote Sens. 2019, 11, 855.

| Band Name or Vegetation Index | Formula * | Reference |
|---|---|---|
| B | 475 ± 32 nm | [20] |
| G | 560 ± 27 nm | [20] |
| R | 668 ± 14 nm | [20] |
| RE | 717 ± 12 nm | [20] |
| NIR | 842 ± 57 nm | [20] |
| LWIR | ~11 µm (11,000 nm), bandwidth ~6 µm | [20] |
| TGI | −0.5 × [190 × (R − G) − 120 × (R − B)] | [21,22] |
| GRVI | NIR/G | [23] |
| NDVI | (NIR − R)/(NIR + R) | [24] |
| NDRE | (NIR − RE)/(NIR + RE) | [25] |
| GNDVI | (NIR − G)/(NIR + G) | [26] |
| MSR | (NIR/R − 1)/(√(NIR/R) + 1) | [27] |
| MSRE | (NIR/RE − 1)/(√(NIR/RE) + 1) | [28] |
| GCI | (NIR/G) − 1 | [29] |
| RECI | (NIR/RE) − 1 | [29] |
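The index formulas in the table above translate directly into vectorized R. The sketch below is illustrative only. It assumes the table rows follow the same order as the variable names used in the correlation code (TGI, GRVI, NDVI, NDRE, GNDVI, MSR, MSRE, GCI, RECI). The function name `vegetation_indices` is hypothetical, and the input reflectances are made-up values, not measurements from the study.

```r
# Compute the nine vegetation indices from per-crown band values.
# Inputs are numeric vectors of equal length (e.g., mean crown reflectance).
vegetation_indices <- function(B, G, R, RE, NIR) {
  data.frame(
    TGI   = -0.5 * (190 * (R - G) - 120 * (R - B)),
    GRVI  = NIR / G,
    NDVI  = (NIR - R) / (NIR + R),
    NDRE  = (NIR - RE) / (NIR + RE),
    GNDVI = (NIR - G) / (NIR + G),
    MSR   = (NIR / R - 1) / (sqrt(NIR / R) + 1),
    MSRE  = (NIR / RE - 1) / (sqrt(NIR / RE) + 1),
    GCI   = NIR / G - 1,
    RECI  = NIR / RE - 1
  )
}

# Made-up reflectances for a single healthy-looking crown.
vi <- vegetation_indices(B = 0.04, G = 0.10, R = 0.05, RE = 0.20, NIR = 0.50)
print(round(vi, 3))
```

Because every operation is element-wise, the same function works on whole columns of a crown-statistics table without modification.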

| Prediction (Class) | Reference 1 | Reference 2 | Reference 3 |
|---|---|---|---|
| 1 | 32 | 0 | 2 |
| 2 | 1 | 26 | 6 |
| 3 | 0 | 0 | 33 |
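Standard accuracy metrics can be derived from the confusion matrix above. The sketch below is a minimal illustration, assuming that rows are predicted classes and columns are reference classes, as the header suggests. The values it prints are computed only from the nine counts shown and are not quoted from the article. The variable names are hypothetical.

```r
# Confusion matrix as shown: rows = predicted class, columns = reference class.
cm <- matrix(
  c(32,  0,  2,
     1, 26,  6,
     0,  0, 33),
  nrow = 3, byrow = TRUE,
  dimnames = list(prediction = c("1", "2", "3"), reference = c("1", "2", "3"))
)

n_total <- sum(cm)

# Overall accuracy: share of samples on the diagonal.
overall_accuracy <- sum(diag(cm)) / n_total      # 0.91

# User's accuracy (per predicted class) and producer's accuracy
# (per reference class).
users_accuracy <- diag(cm) / rowSums(cm)
producers_accuracy <- diag(cm) / colSums(cm)

# Cohen's kappa: agreement beyond what the marginal totals would give by chance.
expected_agreement <- sum(rowSums(cm) * colSums(cm)) / n_total^2
cohen_kappa <- (overall_accuracy - expected_agreement) / (1 - expected_agreement)

print(round(c(overall = overall_accuracy, kappa = cohen_kappa), 3))
print(round(rbind(users = users_accuracy, producers = producers_accuracy), 3))
```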

| Band/Index | Adjusted R² | p-Value | RSE |
|---|---|---|---|
| B | 0.66 | <0.005 | 0.00 |
| G | 0.82 | <0.005 | 0.00 |
| R | 0.62 | <0.005 | 0.00 |
| RE | 0.80 | <0.005 | 0.03 |
| NIR | 0.84 | <0.005 | 0.05 |
| LWIR | 0.23 | <0.005 | 1.67 |
| TGI | 0.87 | <0.005 | 0.54 |
| GRVI | 0.91 | <0.005 | 0.00 |
| NDVI | 0.95 | <0.005 | 0.00 |
| NDRE | 0.95 | <0.005 | 0.01 |
| GNDVI | 0.92 | <0.005 | 0.00 |
| MSR | 0.92 | <0.005 | 0.01 |
| MSRE | 0.95 | <0.005 | 0.03 |
| GCI | 0.92 | <0.005 | 0.48 |
| RECI | 0.95 | <0.005 | 0.07 |

| Band/Index | Mean Difference | p-Value |
|---|---|---|
| B | −0.012 | <0.005 |
| G | −0.024 | <0.005 |
| R | 0.016 | <0.005 |
| RE | −0.094 | <0.005 |
| NIR | −0.211 | <0.005 |
| LWIR | −4.183 | <0.005 |
| TGI | −2.394 | <0.005 |
| GRVI | −0.505 | <0.005 |
| NDVI | −0.108 | <0.005 |
| NDRE | −0.008 | <0.005 |
| GNDVI | −0.018 | <0.005 |
| MSR | −9.903 | <0.005 |
| MSRE | −0.023 | <0.005 |
| GCI | −0.823 | <0.005 |
| RECI | −0.056 | <0.005 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Winfield, M.C.; Wing, M.G.; Wood, J.H.; Graham, S.; Anderson, A.M.; Hawks, D.C.; Miller, A.H. Assessing Pacific Madrone Blight with UAS Remote Sensing Under Different Skylight Conditions. Remote Sens. 2025, 17, 3141. https://doi.org/10.3390/rs17183141
