Abstract

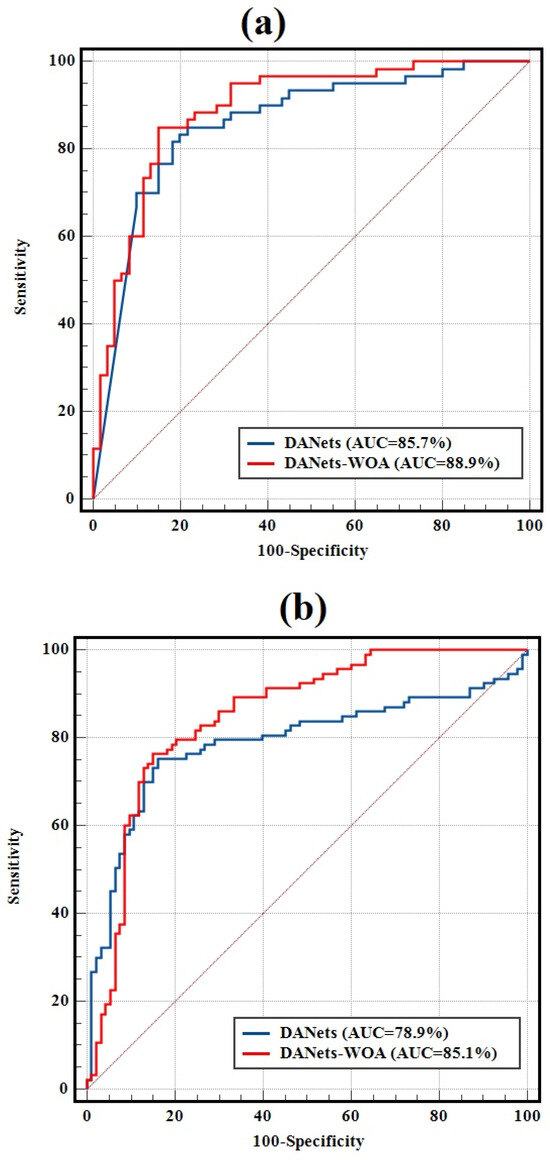

This study employed Deep Abstract Networks (DANets), independently and in combination with the Whale Optimization Algorithm (WOA), to generate high-resolution susceptibility maps for drought and wildfire hazards in the Oroqen Autonomous Banner in Inner Mongolia. Presence samples included 309 wildfire points from MODIS active fire data and 200 drought points derived from a custom Standardized Drought Condition Index. DANets-WOA models showed clear performance improvements over their stand-alone counterparts. For drought susceptibility, RMSE was reduced from 0.28 to 0.21, MAE from 0.17 to 0.11, and AUC improved from 85.7% to 88.9%. Wildfire susceptibility mapping also improved, with RMSE decreasing from 0.39 to 0.36, MAE from 0.32 to 0.28, and AUC increasing from 78.9% to 85.1%. Loss function plots indicated improved convergence and reduced overfitting following optimization. A pairwise z-statistic analysis revealed significant differences (p < 0.05) in susceptibility classifications between the two modeling approaches. Notably, the overlap of drought and wildfire susceptibilities within the forest–steppe transitional zone reflects a climatically and ecologically stressed corridor, where moisture stress, vegetation gradients, and human land use converge to amplify multi-hazard risk beyond the sum of individual threats. The integration of DANets with the WOA demonstrates a robust and scalable framework for dual-hazard modeling.

1. Introduction

Natural hazards such as droughts and wildfires have long posed serious threats to environmental stability, economic security, and human livelihoods worldwide. In recent decades, the frequency, intensity, and spatial extent of these hazards have escalated significantly, driven by climate change, land-use modifications, and anthropogenic pressures [1,2,3]. Particularly in semi-arid and forested regions, the complex interplay between drought and wildfire generates cascading risks that severely challenge existing management frameworks [4]. Understanding and modeling these dual hazards in an integrated manner is therefore not only scientifically imperative but also critical for informed decision-making and sustainable risk mitigation [3,5,6]. Among vulnerable landscapes, the Oroqen Autonomous Banner in Inner Mongolia presents a unique case where the convergence of climatic dryness, vegetative stress, and land-use dynamics renders the ecosystem highly susceptible to both drought-induced degradation and wildfire outbreaks. The convergence of prolonged drought conditions and enhanced wildfire susceptibility creates feedback loops, whereby dry biomass accumulation, vegetation stress, and soil desiccation mutually reinforce the likelihood and severity of fire events [7]. Conversely, wildfires can further exacerbate drought conditions by destroying vegetation cover, inducing soil hydrophobicity, reducing soil moisture retention, and altering local hydrological and microclimatic processes, thereby amplifying long-term ecosystem vulnerability [8]. Consequently, traditional single-hazard approaches are increasingly inadequate for capturing the full spectrum of environmental risks, necessitating a shift toward holistic, multi-hazard modeling frameworks [9].

Despite growing recognition of the need for integrated risk assessment, dual hazard modeling of drought and wildfire remains a relatively underexplored area within the broader field of natural hazards research. Conventional risk models have largely evolved along parallel but separate trajectories, often addressing drought and wildfire as isolated phenomena without systematically accounting for their interdependencies [10,11]. This methodological fragmentation hinders the development of comprehensive risk mitigation strategies, particularly in ecosystems where the two hazards are closely intertwined [12,13]. Furthermore, accurately modeling the joint occurrence and interaction of drought and wildfire presents significant challenges, given the highly nonlinear, multi-scalar, and stochastic nature of the underlying environmental processes [14,15,16]. These challenges underscore the urgent demand for innovative modeling approaches capable of assimilating heterogeneous data sources, capturing complex spatial–temporal dependencies, and offering predictive insights into coupled hazard dynamics [17,18,19,20].

Traditional drought modeling has relied on statistical and data-driven approaches, with machine learning (ML) methods such as Support Vector Machines (SVM) [21], Random Forests (RF) [22], and Gradient Boosting Machines (GBM) [23] contributing substantially to classification and prediction tasks. In recent years, advances in deep learning (DL), a specialized branch of ML, have enabled the use of architectures like Convolutional Neural Networks (CNNs) [24] and Long Short-Term Memory (LSTM) networks [25], which excel at extracting complex spatial and temporal patterns from large remote sensing datasets. Additionally, unsupervised data mining techniques, including clustering algorithms such as K-means [26] and Self-Organizing Maps (SOM) [27], support drought pattern recognition and early warning system development. Similarly, wildfire hazard modeling has evolved from early empirical statistical models, such as Decision Trees (DT) [28] and Logistic Regression (LR) [29], to increasingly sophisticated ML algorithms. Classical ML methods like RF [30], Artificial Neural Networks (ANN) [31], and Extreme Gradient Boosting (XGBoost) [32] have been widely employed for wildfire susceptibility mapping, taking advantage of diverse and high-dimensional remote sensing data. More recently, advances in DL have enabled the use of architectures such as CNNs [33], which are well suited for extracting spatial features from satellite imagery, and sequence models like Recurrent Neural Networks (RNNs) and LSTM networks [34], which have demonstrated strong performance in predicting fire spread and temporal dynamics.
This methodological shift has been driven chiefly by three factors: the rapid growth in the availability, volume, and quality of Earth observation datasets; increasing recognition of the highly nonlinear, multi-factorial nature of drought and wildfire initiation and spread; and the demand for models capable of learning the complex spatial and temporal dependencies inherent in these phenomena. Furthermore, continual advances in computational resources and open-source machine learning frameworks have facilitated the adoption of more complex data-driven models in practical management and forecasting. This transition in the methodological landscape is summarized in recent reviews [35,36,37,38].

Despite these advancements, integrated dual hazard modeling—where drought and wildfire interactions are jointly captured—remains an emerging frontier. A limited number of studies have attempted to address these coupled systems. For example, Jolly et al. [39] introduced a global fire-weather season length dataset that implicitly linked drought and fire conditions, although not through a predictive deep learning framework. In another case, Abatzoglou and Williams [40] emphasized the role of anthropogenic climate change in enhancing drought-fire synergies across the western United States. Recently, hybrid ML models such as RF-LSTM ensembles [41] and DL-integrated hazard maps [42,43] have started to surface, pointing toward the necessity of more robust and scalable approaches capable of handling dual hazard complexities. Nevertheless, many of these models either lack optimization in their deep learning architecture or fail to systematically balance model accuracy with computational efficiency. While these RF-LSTM and DL-based hazard mapping approaches represent important steps toward integrated dual-hazard modeling, they remain limited by their reliance on sequence or image-based architectures, often requiring extensive temporal data and lacking specialized mechanisms for jointly abstracting and representing heterogeneous tabular predictor variables common in environmental hazard research. In contrast, the Deep Abstract Network (DANet) is specifically developed for structured tabular data, utilizing abstraction layers that group and hierarchically encode interactions among diverse environmental, climatic, and anthropogenic predictors. By doing so, this architecture builds higher-level representations that are well suited to capture the complex, nonlinear relationships among climatic, vegetative, and human factors driving both drought and wildfire hazards, enabling finer-scale, context-aware susceptibility mapping. 
This architecture enables the model to jointly encode and abstract direct and indirect (bidirectional) interactions between predictors at multiple levels, providing a more effective approach to modeling the intertwined dynamics of dual hazards than conventional deep learning models. Moreover, coupling the DANet with a metaheuristic algorithm like the Whale Optimization Algorithm (WOA) enables comprehensive, automated hyperparameter optimization, systematically tuning the model to each hazard’s unique feature structure and reducing overfitting risks. The WOA was chosen with careful consideration for its computational efficiency, strong exploration–exploitation balance, and proven robustness in high-dimensional deep learning optimization tasks. This integration not only improves predictive performance relative to both algorithm-specific and ensemble deep learning models, but also enhances model interpretability and adaptability across varying hazard contexts—advantages particularly pertinent for multi-hazard frameworks where risk factors and data variety are substantial. Thus, this study addresses a key methodological gap by integrating the DANet and WOA into a unified, automated workflow, specifically tailored for high-dimensional, dual-hazard susceptibility mapping—a notable advance over previous hybrid or sequence-based deep learning methods.

The advent of novel deep learning architectures such as DANets offers a promising avenue for dual hazard modeling. DANets are specifically designed to capture multi-level feature abstractions through progressive encoding layers, making them particularly suited for complex environmental systems characterized by nonlinear, hierarchical interactions. However, like many deep learning models, DANets are sensitive to hyperparameter settings and may suffer from local minima entrapment or overfitting when trained on highly variable environmental data. To overcome these limitations, the integration of metaheuristic optimization algorithms has been increasingly explored. In particular, the WOA, inspired by the social foraging behavior of humpback whales, has demonstrated impressive performance in optimizing hyperparameters and enhancing deep network convergence [44]. Combining DANets with the WOA thus represents a novel and potent strategy for improving dual hazard prediction accuracy, robustness, and generalizability. In particular, while the original algorithmic form of the DANet and WOA remains unchanged, the methodological innovation is twofold: first, we joined the DANet and WOA routines into a single coupled modeling workflow for each hazard, allowing robust metaheuristic-based hyperparameter optimization and performance comparison; second, for each individual hazard, the DANet architecture was specifically configured with hazard-appropriate predictors and evidence samples, resulting in a unique input structure and abstraction hierarchy for drought and wildfire modeling, respectively. This tailored model architecture enables optimal feature abstraction and predictive performance within each hazard context and provides a transparent, reproducible workflow for future applications. To our knowledge, this is the first integrated workflow in which the DANet architecture is systematically reconfigured for each hazard scenario and fully optimized via metaheuristic search.
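To make the metaheuristic search concrete, the following is a minimal sketch of the basic WOA update rules (shrinking encirclement, random exploration, and the spiral bubble-net move). It is illustrative only and not the implementation used in this study: the objective `toy_validation_loss` is a hypothetical stand-in for a DANet validation loss, and the two tuned quantities (log learning rate and dropout rate), their bounds, the population size, and the iteration count are all assumptions.

```python
import math
import random


def woa_minimize(objective, bounds, n_whales=20, n_iter=100, seed=0):
    """Minimise `objective` inside the box `bounds` with a basic WOA."""
    rng = random.Random(seed)

    def clip(x):
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(x, bounds)]

    # Random initial population inside the search box.
    whales = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_whales)]
    scores = [objective(w) for w in whales]
    best_idx = min(range(n_whales), key=scores.__getitem__)
    best_x, best_f = list(whales[best_idx]), scores[best_idx]

    for t in range(n_iter):
        a = 2.0 * (1.0 - t / n_iter)  # decreases linearly from 2 towards 0
        for i, x in enumerate(whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                # Encircle the best whale (|A| < 1) or explore around a random one.
                ref = best_x if abs(A) < 1.0 else whales[rng.randrange(n_whales)]
                new = [r - A * abs(C * r - v) for r, v in zip(ref, x)]
            else:
                # Spiral move around the best whale (spiral shape constant b = 1).
                ell = rng.uniform(-1.0, 1.0)
                spiral = math.exp(ell) * math.cos(2.0 * math.pi * ell)
                new = [abs(b - v) * spiral + b for b, v in zip(best_x, x)]
            whales[i] = clip(new)
            f = objective(whales[i])
            if f < best_f:  # the best solution is updated as soon as it improves
                best_x, best_f = list(whales[i]), f
    return best_x, best_f


def toy_validation_loss(params):
    """Hypothetical surrogate: smooth bowl over (log10 learning rate, dropout)."""
    log_lr, dropout = params
    return (log_lr + 3.0) ** 2 + (dropout - 0.2) ** 2


BOUNDS = [(-5.0, -1.0), (0.0, 0.5)]  # assumed search ranges
best_params, best_loss = woa_minimize(toy_validation_loss, BOUNDS)
print(best_params, best_loss)
```

In an actual workflow, each call to the objective would train and validate a DANet with the candidate hyperparameters, so the population size and iteration count directly set the computational budget.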

Methodological novelty in this work arises from the development of a fully customized deep learning–metaheuristic framework, purpose-built for multi-hazard susceptibility assessment in the unique context of drought and wildfire hazards. While the DANet and WOA each exist independently in the literature, this study integrates them within a unified modeling architecture that is meticulously tailored to the heterogeneity, predictor composition, and hazard-specific data characteristics of the study domain. Regarding application, to our knowledge, this is the first documented case in which the DANet and WOA have been jointly implemented for either drought or wildfire susceptibility mapping, let alone within a single integrated dual-hazard framework. However, the contribution of this work extends beyond merely being the first such application: the framework was engineered as a single executable workflow in which each hazard scenario is governed by a structurally distinct DANet model, with input layer dimensions, abstraction hierarchies, and hyperparameters dynamically configured to reflect the statistically filtered and thematically relevant predictors for that hazard. This hazard-driven architectural customization ensures that the network’s feature abstraction process operates in a problem-specific manner, extracting representations that are optimally aligned with the environmental, climatic, and anthropogenic drivers of each hazard type. In particular, the abstraction layers are reorganized for each hazard so that groups of predictors with related functional roles (e.g., vegetation indices, topographic variables, climate measures) are encoded jointly in the early stages, while deeper layers are tuned to re-abstract cross-group interactions that statistical pre-analysis identifies as especially pertinent to that hazard’s occurrence patterns. 
The embedded WOA routine systematically and autonomously tunes hyperparameters for each hazard model, ensuring performance is not dependent on manual trial-and-error but on a reproducible, algorithmic search of the model space. By binding model architecture design, hazard-specific input structuring, and metaheuristic optimization into a single reproducible codebase, this framework advances current practice beyond generic deep learning application, offering an adaptable and transparent methodology that can be ported to other dual-hazard contexts with high-dimensional predictor sets. In the case of the present study, the optimized outputs for drought and wildfire are subsequently combined to identify spatially coincident zones of elevated susceptibility, enabling integrated multi-hazard susceptibility appraisal at fine spatial resolution.

In light of these considerations, this study aims to develop and compare two advanced dual hazard modeling frameworks: (i) a stand-alone DANet model, and (ii) an optimized DANet model coupled with the WOA. By applying these frameworks to the Oroqen Autonomous Banner watershed, the research seeks to generate high-resolution drought and wildfire susceptibility predictions, elucidate the interactive mechanisms underlying these hazards, and contribute toward the advancement of integrated hazard modeling methodologies. The findings are anticipated to offer valuable insights for regional hazard preparedness, adaptive management strategies, and future research directions in the domain of environmental risk assessment.

2. Materials and Methods

2.1. Study Area

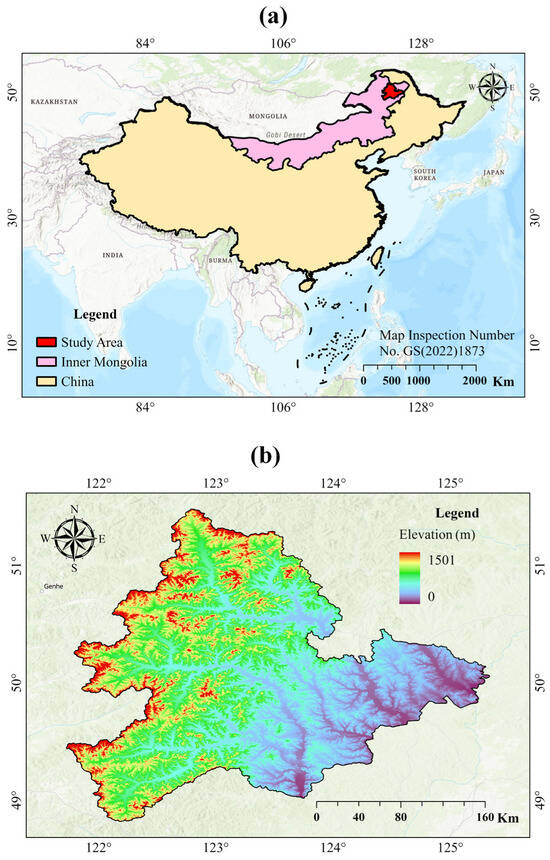

The Oroqen Banner lies in China’s northern Greater Khingan range (Figure 1), a cold-temperate zone (semi-humid to humid) that has warmed significantly. Long-term reconstructions show a clear warming trend: for example, Zhao et al. [45] report that since 1707 the northern Greater Khingan Mountains warmed at ~0.06 °C per decade (with a slight increase in precipitation). Modern records corroborate this: across the broader Mongolian Plateau, mean annual temperature rose by ~2.24 °C (1940–2015) while precipitation fell ~7%, implying more frequent drought stress. Such trends suggest heightened risks for vegetation stress, wildfire susceptibility, and ecological shifts in the Oroqen region. In short, the Oroqen region has experienced rising temperatures and generally drier conditions in recent decades, consistent with regional trends in Inner Mongolia and adjacent Mongolia. Inner Mongolia’s grasslands and forests are highly fire-prone, especially in spring and autumn. Zhang et al. [46] note that Inner Mongolia (22% of China’s grasslands) sees frequent grassland fires, with most ignitions in spring and fall. Many of these fires historically occurred near farmland edges. However, strong fire-control policies in recent decades have sharply reduced China’s wildfire activity: from 2003 to 2022 nationwide fire frequency fell by ≈63% (from 51.7 × 10³ to 19.1 × 10³ fires per year) and fire radiative power by ≈81% [47]. Despite this national decline, Oroqen Banner remains classified as a moderate-to-high wildfire risk zone due to persistent dry periods, high fuel loads in grasslands and forests, and frequent spring winds. Thus, Oroqen Banner remains vulnerable to wildfires (as illustrated by photo evidence of firefighters battling a 2012 grassland blaze in the region), and local fire dynamics require continued monitoring and adaptive management under changing climate conditions.

Figure 1.

Geographic location of the study area: (a) the position of Oroqen Autonomous Banner within China and the Inner Mongolia Autonomous Region; (b) the study area’s topography as depicted by the digital elevation model (DEM).

Recent land cover analyses reveal dynamic shifts in Inner Mongolia. Grasslands once degraded by drought and overgrazing have shown a net recovery since the early 2000s. A 30 m Landsat-based dataset (1991–2020) finds that Inner Mongolia’s grassland area stabilized and even grew after 2005, due to large-scale restoration projects and subsidies. For example, Tong et al. [48] report that grassland area, after fluctuating in the 1990s, rose substantially post-2005 under ecological protection programs. Cropland expanded modestly: Chi et al. [49] found a net increase of ≈15,543 km² of farmland across Inner Mongolia (1990–2018), concentrated in eastern parts of the region (the Oroqen Banner lies toward the eastern grassland zone). Meanwhile, afforestation efforts have stabilized forest cover: nationwide “Grain-for-Green” and conservation programs dramatically reduced deforestation and expanded woodlands after 2000, with Inner Mongolia among the regions showing forest recovery. Nevertheless, recent drought events and overgrazing pressures have caused localized degradation patches, indicating that ecosystem recovery is spatially variable and fragile in some sectors of the Oroqen Banner. In summary, the Oroqen study area has seen grassland area recover and farmland modestly grow, reflecting China’s regional re-greening policies, but also exhibits areas requiring ongoing ecological restoration efforts. The Oroqen Banner supports rich cold-temperate ecosystems. Its forests, dominated by Larix gmelinii and birch, form part of China’s northern boreal forest belt. These forests provide vital ecosystem services, including air purification, watershed regulation, and habitat stability across the Greater Khingan region. Luo et al. [50] highlight that L. gmelinii exhibits altitude-dependent growth responses to climatic variables, particularly temperature and precipitation, indicating its sensitivity to environmental change. 
This species plays a key role in maintaining ecological balance and supporting understory plant communities and wildlife such as reindeer, elk, and migratory birds. However, projections under warming climate scenarios suggest a potential northward contraction in the suitable habitat range for L. gmelinii, raising concerns about long-term forest stability and associated ecosystem functions. In addition, parts of Oroqen Banner contain wetland and riparian habitats important for migratory birds (e.g., cranes), further underscoring the area’s biodiversity value. Overall, the Banner’s mixed conifer–deciduous forests and grasslands form a biologically rich mosaic essential for regional ecological health, yet one increasingly vulnerable to climate-induced transformations.

The Oroqen are a small, highly vulnerable indigenous group. Numbering well under 10,000, they occupy remote village communities in the Hinggan Mountains. Traditionally hunter–gatherers and reindeer herders, the Oroqen have seen their livelihoods upended by modern policies. Hunting has been banned for decades, forcing most Oroqen to settle as farmers or laborers. Cultural change has been rapid: Whaley [51] reports that by the 21st century most Oroqen people were primarily farmers, with limited continuation of traditional practices. Their culture and language are under severe pressure: only about 1 in 6 Oroqen now speaks the indigenous language fluently, and intergenerational transmission has effectively ceased. Ward [52] notes that Oroqen culture is “cut adrift” from its animistic forest context, with traditions eroding as elders age and youth show little interest. While community development programs are underway, cultural and economic challenges persist, contributing to socio-ecological vulnerability in the region. In sum, the Oroqen community faces multiple vulnerabilities (geographic isolation, reduced customary land-use practices, cultural assimilation, and limited economic diversification) that may constrain adaptive capacity under future environmental change.

2.2. Data Sources and Processing

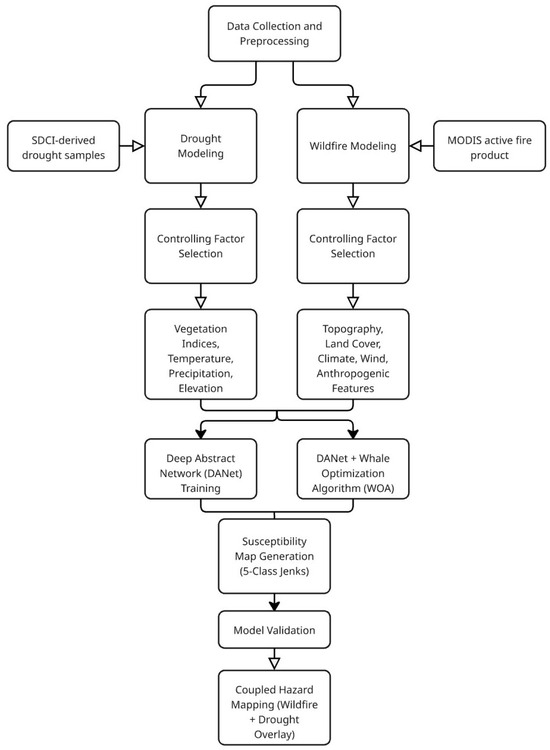

The methodological flowchart of this study is presented in Figure 2, comprising data collection and preprocessing, controlling factor selection (separate for each hazard), model development, optimization phase, hazard susceptibility mapping, model validation and performance assessment (including ROC curve, AUC, RMSE, MAE, loss functions, and pairwise z-statistic), and integrated hazard mapping and interpretation.
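As an illustration of the validation metrics named above, the sketch below computes RMSE, MAE, and AUC for a small set of made-up labels and scores. It assumes that the errors are measured between binary presence/absence labels (1/0) and predicted susceptibility scores in [0, 1], and it obtains the AUC from its rank interpretation (the probability that a presence sample outranks an absence sample) rather than by tracing the ROC curve explicitly.

```python
import math


def rmse(y_true, y_score):
    """Root mean square error between labels and predicted scores."""
    return math.sqrt(sum((t - s) ** 2 for t, s in zip(y_true, y_score)) / len(y_true))


def mae(y_true, y_score):
    """Mean absolute error between labels and predicted scores."""
    return sum(abs(t - s) for t, s in zip(y_true, y_score)) / len(y_true)


def auc(y_true, y_score):
    """AUC as the share of presence/absence pairs ranked correctly (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up validation sample: three presence and three absence points.
y_true = [1, 1, 1, 0, 0, 0]
y_score = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]

print(rmse(y_true, y_score), mae(y_true, y_score), auc(y_true, y_score))
```

In this toy sample, eight of the nine presence/absence pairs are ranked correctly, so the AUC is 8/9.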

Figure 2.

Methodological workflow for dual-hazard susceptibility modeling. SDCI-derived drought samples (Standardized Drought Condition Index) and MODIS active fire data (Moderate Resolution Imaging Spectroradiometer) serve as input evidence. For both drought and wildfire, controlling factors are selected and processed before modeling is conducted using either a Deep Abstract Network (DANet) alone or combined with the Whale Optimization Algorithm (WOA) for optimization. Susceptibility maps are classified using the five-class Jenks method, followed by model validation and the development of a coupled hazard overlay.
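The coupled hazard overlay can be pictured with a small sketch. It assumes that both susceptibility maps have already been reclassified into five classes (1 = very low, 5 = very high) on the same grid, and that a cell counts as a coincident hotspot when both hazards reach class 4 or above. The threshold and the toy grids are assumptions made for illustration; the text above does not specify the overlay rule.

```python
def coincident_high(drought_cls, wildfire_cls, threshold=4):
    """Flag cells where both hazards fall in class `threshold` or above."""
    return [
        [d >= threshold and w >= threshold for d, w in zip(d_row, w_row)]
        for d_row, w_row in zip(drought_cls, wildfire_cls)
    ]


# Toy 2 x 3 grids of class labels (1-5), aligned cell by cell.
drought = [[1, 4, 5], [3, 5, 2]]
wildfire = [[2, 5, 4], [4, 3, 5]]

mask = coincident_high(drought, wildfire)
print(mask)
```

Only the two cells in which both grids hold a 4 or a 5 are flagged; cells that are high for a single hazard are left out.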

2.2.1. Preparation of Evidence Layers (Presence Data)

The preparation of evidence layers, corresponding to the presence or absence of drought and wildfire events, constituted a crucial step toward developing reliable predictive models. Given the different nature and manifestation patterns of droughts and wildfires, the methodology for preparing the evidence layers was adapted accordingly to ensure maximum representativeness and scientific accuracy.

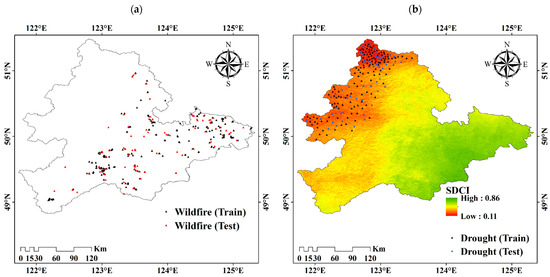

For wildfire presence data, we relied primarily on the active fire detections captured by satellite-borne thermal sensors, particularly the Moderate Resolution Imaging Spectroradiometer (MODIS) active fire product (https://firms.modaps.eosdis.nasa.gov/active_fire/, accessed on 26 April 2025). This globally recognized dataset provides consistent and reliable records of active fire events, minimizing uncertainties related to ground-based reporting. We extracted a total of 309 wildfire occurrence points spanning the period from 2020 to 2024 (Figure 3a). The points were subjected to rigorous spatial filtering to eliminate duplicates and to minimize spatial autocorrelation effects, thereby enhancing the statistical independence and robustness of the training samples used in subsequent modeling stages.
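One simple way to carry out this kind of spatial filtering is greedy distance-based thinning, sketched below. The procedure and the 1 km minimum spacing are assumptions chosen for illustration (the exact filtering rule is not given here), and the coordinates are made-up points rather than actual fire detections.

```python
import math


def haversine_km(p, q):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def thin_points(points, min_dist_km=1.0):
    """Keep a point only if it lies at least `min_dist_km` from every kept point."""
    kept = []
    for p in points:
        if all(haversine_km(p, q) >= min_dist_km for q in kept):
            kept.append(p)
    return kept


# Made-up detections: one exact duplicate, one near-duplicate, one distant point.
detections = [
    (50.600, 123.700),
    (50.600, 123.700),   # duplicate record
    (50.601, 123.701),   # roughly 0.13 km from the first point
    (50.700, 123.900),   # more than 10 km away
]

filtered = thin_points(detections)
print(filtered)
```

Because the pass is greedy, the outcome depends on the order of the input points; sorting by detection confidence or date beforehand would be one way to control which record of a cluster is retained.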

Figure 3.

Spatial distribution of extracted evidence samples for (a) wildfire and (b) drought across the study area (SDCI: Standardized Drought Condition Index).

The preparation of drought evidence layers required a more nuanced approach, given that drought events are gradual, diffuse, and often spatially heterogeneous phenomena rather than discrete occurrences. To address this, we initially derived a Standardized Drought Condition Index (SDCI) by integrating three remote sensing-based indices: the Vegetation Condition Index (VCI), the Temperature Condition Index (TCI), and the Precipitation Condition Index (PCI). This composite index was designed to capture multiple dimensions of drought manifestation, from vegetation stress to thermal and precipitation anomalies, within a standardized framework. After calculating the SDCI, we identified 200 drought presence samples by selecting locations corresponding to the highest SDCI values, which represented the most severe agrometeorological drought conditions over the study period (Figure 3b). This approach ensured that the constructed drought inventory reflected extreme drought events, thereby supporting a more accurate susceptibility modeling effort. The three vegetation and meteorological indices integrated into the SDCI calculation algorithm are presented in Table 1 [53,54,55,56,57].

Table 1.

Data sources and descriptions of remote sensing indices used for the identification and preparation of drought evidence layers.
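The sample selection step can be sketched as follows. The sketch assumes that the three component indices have already been scaled to a common [0, 1] range and combines them with equal weights; both the scaling and the weights are placeholders, since the actual SDCI formulation is defined by the authors and is not reproduced here. Locations are then ranked and the highest-valued ones are retained, as described in the text.

```python
def sdci(vci, tci, pci, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted combination of the three condition indices (weights are assumed)."""
    w_v, w_t, w_p = weights
    return w_v * vci + w_t * tci + w_p * pci


def top_k_locations(index_by_location, k):
    """Return the k locations with the highest index values."""
    ranked = sorted(index_by_location.items(), key=lambda kv: kv[1], reverse=True)
    return [loc for loc, _ in ranked[:k]]


# Made-up (VCI, TCI, PCI) triples for four hypothetical grid cells.
cells = {
    "A": (0.9, 0.8, 0.7),
    "B": (0.2, 0.3, 0.1),
    "C": (0.6, 0.7, 0.8),
    "D": (0.5, 0.4, 0.6),
}

scores = {loc: sdci(*parts) for loc, parts in cells.items()}
samples = top_k_locations(scores, k=2)
print(samples)
```

In the study itself the same ranking would be applied to the full SDCI raster with k = 200.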

2.2.2. Preparation of Predictors

Understanding the complex interplay of natural and anthropogenic variables in the occurrence of wildfires and droughts requires a comprehensive and scientifically grounded approach to data selection. In the present study, the controlling factors were carefully chosen based on an extensive review of previous research, theoretical frameworks regarding environmental hazards, and the specific biophysical processes that govern the manifestation of each phenomenon. To capture the full complexity inherent in wildfire and drought dynamics, we divided the potential influencing factors into four broad thematic categories: topographic, climatic, vegetation-related, and anthropogenic variables. This classification enabled a holistic evaluation of susceptibility patterns, as each group of factors contributes differently to the phenomena under investigation. Topographic factors, including elevation, slope, and aspect, were selected to account for the influence of terrain on hydrological behavior, microclimatic conditions, and vegetation distribution, all of which can critically affect both drought persistence and wildfire spread. Climatic variables such as temperature, rainfall, and wind speed were included due to their primary role in controlling moisture availability, fuel aridity, ignition potential, and fire propagation characteristics. Vegetation indices, particularly those sensitive to chlorophyll content, water stress, and biomass density, were chosen to serve as proxies for ecosystem health, fuel conditions, and moisture anomalies, providing real-time insights into environmental stress levels. Finally, anthropogenic factors, including population density and distance to roads, were incorporated to reflect the human footprint, given that human activities often act as either direct triggers or amplifiers of environmental hazards. 
This multi-factorial approach ensures that the models built for wildfire and drought susceptibility mapping are capable of capturing not only the biophysical predisposition of the landscape but also the human-induced vulnerabilities that increasingly drive hazard occurrence under changing climatic conditions.

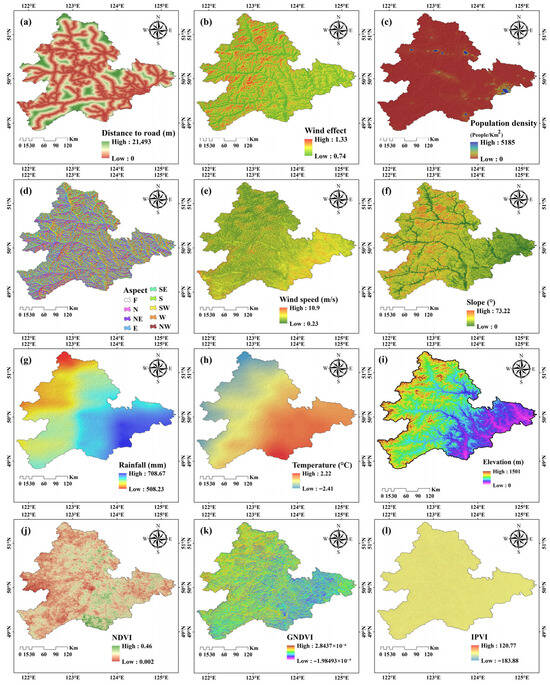

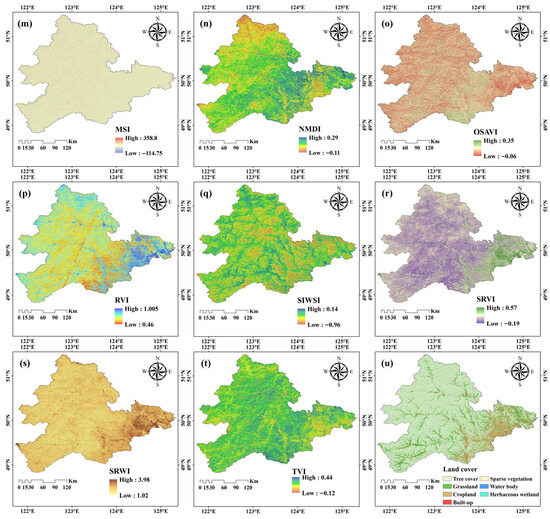

The selection of controlling factors for drought susceptibility modeling in this study is grounded in an extensive review of the relevant literature, which consistently highlights the importance of topographic, climatic, and vegetation indices in capturing drought risk and dynamics [4,9,21,23,24]. Similarly, the wildfire susceptibility predictors were chosen in alignment with established studies that emphasize the key roles of terrain attributes, weather variables, vegetation conditions, and anthropogenic influences in wildfire occurrence and propagation (e.g., [3,5,6,17,19,33]). Table 2 and Table 3 present detailed information on the datasets used for drought and wildfire susceptibility modeling, respectively, including the source, temporal coverage, spatial resolution, and the specific causative role attributed to each factor. The corresponding spatial distributions of these predictor variables are illustrated in Figure 4 through thematic maps.

Table 2.

Controlling factors used for drought susceptibility modeling.

Table 3.

Controlling factors used for wildfire susceptibility modeling.

Figure 4.

Thematic maps of the selected predictors used in wildfire and drought susceptibility modeling: (a) distance to road (m), (b) wind effect, (c) population density (people/km²), (d) aspect, (e) wind speed (m/s), (f) slope (degree), (g) rainfall (mm), (h) temperature (°C), (i) elevation (m), (j) NDVI (Normalized Difference Vegetation Index), (k) GNDVI (Green Normalized Difference Vegetation Index), (l) IPVI (Infrared Percentage Vegetation Index), (m) MSI (Moisture Stress Index), (n) NMDI (Normalized Multi-Band Drought Index), (o) OSAVI (Optimized Soil-Adjusted Vegetation Index), (p) RVI (Ratio-Based Vegetation Index), (q) SIWSI (Shortwave Infrared Water Stress Index), (r) SRVI (Stress-Related Vegetation Index), (s) SRWI (Simple Ratio Water Index), (t) TVI (Transformed Vegetation Index), and (u) land cover.

2.3. Multicollinearity Analysis of Controlling Factors

An indispensable part of preparing the data for modeling involved conducting a rigorous multicollinearity analysis of the selected controlling factors. Multicollinearity, the condition where two or more independent variables are highly linearly correlated, can severely distort the outcomes of statistical and machine learning models by inflating variances of estimated coefficients and by making the interpretation of variable importance problematic. To prevent such issues, we calculated the Variance Inflation Factor (VIF) for each variable individually. Variables that exhibited a VIF value exceeding the commonly accepted threshold of 10 were considered to exhibit problematic multicollinearity and were removed from the dataset. This multicollinearity screening was performed separately for the sets of factors selected for wildfire and drought susceptibility modeling, acknowledging that the interrelationships among variables might differ between the two phenomena. By retaining only variables that demonstrated acceptable independence, we ensured the reliability, stability, and interpretability of the modeling outputs. Moreover, this preprocessing step enhanced the predictive power of the models by eliminating redundant information and focusing the model training process on the truly informative attributes. Following multicollinearity analysis, all retained variables were standardized to ensure comparability and to prevent variables with larger numerical ranges from unduly influencing the model training process. These prepared and screened datasets formed the foundation for the subsequent stages of model development and validation.
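The screening and standardization steps can be illustrated with a short NumPy sketch. It uses the identity that the VIF of predictor j equals the j-th diagonal element of the inverse correlation matrix, which is equivalent to 1 / (1 − R_j²). Removing predictors one at a time, starting with the largest VIF, is an assumption about the procedure (all offending variables could instead be removed in a single pass), and the predictor names and synthetic data are purely illustrative.

```python
import numpy as np


def vif(X):
    """VIF per column: diagonal of the inverse of the correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(corr))


def drop_high_vif(X, names, threshold=10.0):
    """Drop the predictor with the largest VIF until all VIFs are <= threshold."""
    X = np.asarray(X, dtype=float)
    names = list(names)
    while X.shape[1] > 1:
        scores = vif(X)
        worst = int(np.argmax(scores))
        if scores[worst] <= threshold:
            break
        X = np.delete(X, worst, axis=1)
        names.pop(worst)
    return X, names


def standardize(X):
    """Z-score each column (zero mean, unit standard deviation)."""
    return (X - X.mean(axis=0)) / X.std(axis=0)


# Synthetic predictors: "gndvi" is built to be almost collinear with "ndvi".
rng = np.random.default_rng(0)
ndvi = rng.normal(size=200)
slope = rng.normal(size=200)
gndvi = ndvi + 0.05 * rng.normal(size=200)
X = np.column_stack([ndvi, slope, gndvi])

X_kept, kept_names = drop_high_vif(X, ["ndvi", "slope", "gndvi"])
Z = standardize(X_kept)
print(kept_names, vif(X_kept))
```

One of the two collinear vegetation columns is removed, after which the remaining predictors have VIF values close to 1 and are rescaled to comparable ranges.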

2.4. Deep Abstract Network (DANet) Model for Susceptibility Mapping

Accurately mapping where droughts and wildfires are likely to occur requires more than simply feeding every available dataset into an algorithm and hoping for meaningful predictions. While these hazards are similar enough to share certain broad environmental controls, such as climatic patterns and vegetation conditions, they differ in their manifestation and underlying triggers to the extent that each demands a tailored modeling approach. Both droughts and wildfires may be influenced by prolonged periods of hot and dry weather, yet drought typically emerges gradually over weeks or months as extended rainfall deficits deplete soil moisture and vegetation health. In contrast, wildfires can ignite and spread rapidly, sometimes within hours or even minutes, driven by sudden wind changes, the presence of dry fuels, or human activities. This combination of shared and hazard-specific drivers creates a multi-layered, high-dimensional challenge: a successful model must accommodate diverse predictor types, including climatic variables, vegetation indices, topographic measures, and anthropogenic factors, while ensuring that the relationships most relevant to each hazard dominate the learned representation. The DANet architecture is particularly suited to this challenge because it is purposely built for structured, tabular environmental data, where predictor variables come from multiple measurement scales and functional domains. Unlike image-based architectures such as CNNs, the DANet does not require spatially contiguous pixel grids or long temporal sequences; instead, it processes predictors through thematic abstraction layers that group related variables (e.g., climate, vegetation, topography, human impact) before recombining them at deeper levels. 
This approach mirrors how hazard scientists mentally organize drivers, enabling the network to first strengthen “within-domain” relationships (such as rainfall–soil moisture for drought) and then explore “cross-domain” interactions (such as drought-induced vegetation stress facilitating wildfire spread). Additionally, the DANet’s residual shortcut connections preserve hazard-specific baseline signals from the raw predictors, preventing the loss of slow-moving drought trends or sudden wildfire precursors during feature transformations. This combination of domain-grouped abstraction, controlled cross-integration, and baseline preservation is what allows the DANet to capture both the gradual and sudden dynamics of these hazards within a single, unified framework, while the architecture itself can still be tuned separately for drought and wildfire using the WOA.

The DANet is a neural architecture specifically designed for tabular data, making it well suited for modeling environmental hazards characterized by heterogeneous and structured datasets [58]. The core innovation of the DANet lies in its Abstract Layer (AbstLay), which groups correlated input features to generate higher-level semantic abstractions. These abstractions facilitate the modeling of complex interactions among environmental variables, enhancing the predictive performance of the network. The architecture of the DANet comprises multiple stacked AbstLays, each learning to capture and abstract feature interactions at different levels. Additionally, a specialized shortcut path is integrated to fetch information directly from raw input features, assisting in feature interactions across various abstraction levels. This design enables the network to maintain a balance between learning deep feature representations and preserving essential raw feature information.

The Deep Abstract Network processes an input feature matrix $X \in \mathbb{R}^{n \times d}$, where $n$ is the number of samples and $d$ is the number of predictors. The abstraction computed at the $l$-th layer is [58]:

$$A^{(l)} = \sigma\left(H^{(l-1)} W^{(l)} + b^{(l)}\right) \tag{1}$$

where $\sigma$ is the activation function (e.g., Rectified Linear Unit, known as ReLU), while $W^{(l)}$ and $b^{(l)}$ are learnable weights and biases. A shortcut connection allows the network to combine transformed and original features:

$$H^{(l)} = \alpha A^{(l)} + (1 - \alpha) H^{(0)} \tag{2}$$

where $H^{(0)}$ is $X$ (the input layer) and $\alpha$ is a scalar balancing the abstracted and raw features.
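The stacked abstraction-plus-shortcut idea can be illustrated with a deliberately simplified sketch for a single sample. It assumes a ReLU affine abstraction and a convex blend, governed by a hypothetical scalar `alpha`, between the abstracted features and the raw input. It omits the feature-grouping masks of the reference DANet [58] and is not the implementation used in this study; the function names are illustrative only.

```python
def relu(vector):
    """Element-wise Rectified Linear Unit."""
    return [max(0.0, v) for v in vector]

def affine(weights, x, bias):
    """Compute W x + b for one sample (weights given as a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def forward(x_raw, layers, alpha=0.5):
    """Stack abstraction steps; each step blends relu(W h + b) with the raw input.

    alpha = 1 keeps only the abstracted features, alpha = 0 only the raw ones.
    Every layer must preserve the feature dimension in this toy version.
    """
    h = list(x_raw)
    for weights, bias in layers:
        abstracted = relu(affine(weights, h, bias))
        h = [alpha * a + (1.0 - alpha) * x for a, x in zip(abstracted, x_raw)]
    return h

identity = [[1.0, 0.0], [0.0, 1.0]]
sample = [1.0, -2.0]
blended = forward(sample, [(identity, [0.0, 0.0])], alpha=0.5)
abstract_only = forward(sample, [(identity, [0.0, 0.0])], alpha=1.0)
print(blended, abstract_only)
```

With the identity weights used here, ReLU removes the negative feature, while the shortcut restores half of its raw value. This is the sense in which a shortcut preserves baseline signals that a transformation would otherwise discard.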

A central aspect of this study’s methodology is that the input layer and subsequent abstraction layers of the DANet were not fixed but were distinctly structured for each hazard scenario to reflect the set of selected predictors. Specifically, since each hazard (drought or wildfire) was characterized by a different collection of statistically filtered and thematically relevant input variables (Table 2 and Table 3), the input dimension ($d$ in $X \in \mathbb{R}^{n \times d}$) and internal abstraction layer groupings were both variable and hazard-dependent. This directly altered the topology of the network: for example, the number of neurons in the input layer and the pattern by which features were abstracted at each level differed between the drought and wildfire DANet models. Likewise, the number of abstraction layers itself (among other hyperparameters) was ultimately tuned via the optimization process for each hazard to maximize performance as measured by validation metrics. In practical terms, this means the DANet model trained for drought susceptibility had a unique input structure, abstraction hierarchy, and layer configuration tailored to the drought predictors and their interactions, and likewise for wildfire. This hazard-driven architectural adjustment makes each model structurally optimized for its specific data context, and thus maximizes the network’s capacity to extract meaningful patterns for susceptibility mapping.

While Equations (1) and (2) describe the standard, hazard-agnostic form of the DANet, our framework modifies each mathematical stage so that the architecture, parameterization, and objective are explicitly dependent on the hazard being modeled. This means that the variables $X$, $W$, $b$, and $\alpha$, and even the loss function $\mathcal{L}$, are indexed by hazard type $h$, and in several cases also by thematic predictor group $g$. These adaptations ensure the following conditions:

- The dimensionality and composition of the input layer match the hazard’s unique set of predictors.

- The abstraction layers are formed and connected according to hazard-relevant functional groupings.

- The cross-group integration strategy reflects plausible environmental interactions between domains.

- The shortcut connection preserves the optimal amount of raw information for each hazard.

- The loss function emphasizes the error characteristics most critical for that hazard’s practical management.

The following five subsections introduce each of these hazard-specific adaptations in plain terms, before presenting their mathematical definitions.

- Sequence 1: Hazard-Tailored Input Layer

We start by building two separate “gateways” into the model, one for drought and one for wildfire. Each gateway lets in only the predictors that truly matter for that hazard, chosen after statistical checks to remove noise and redundant data. In simple terms, a drought model does not waste “brainpower” on fire-specific variables, and vice versa. This means the very first layer of the network already “speaks the language” of the hazard it is studying, because the number and type of inputs match that hazard’s unique environmental fingerprint. The mathematical form follows:

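$$X_h \in \mathbb{R}^{n_h \times d_h}, \qquad h \in \{\text{drought}, \text{wildfire}\} \tag{3}$$

where $h$ is the hazard type (drought or wildfire), $n_h$ denotes the number of samples for hazard $h$, and $d_h$ represents the number of hazard-specific, statistically filtered predictors.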

- Sequence 2: Hazard-Specific Abstraction Layer Grouping

Once the right predictors are in, we do not just toss them all into a blender. Instead, the network organizes them into themed groups that mirror how hazard experts think about the world. Climate variables sit together, vegetation measures share a room, topographic features form their own cluster, and human-influence indicators acquire their own corner. For drought, the climate–vegetation conversations inside the network are the most important. For wildfire, the loudest chatter happens between slope, wind, and fuel dryness. These groups make it easier for the model to build relationships within the most meaningful “domains” first. Mathematically, this step follows the expression:

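$$A_{h,g}^{(l)} = \sigma_{h,g}\left(H_{h,g}^{(l-1)} W_{h,g}^{(l)} + b_{h,g}^{(l)}\right) \tag{4}$$

where $g$ is the thematic predictor group index for hazard $h$; $H_{h,g}^{(l-1)}$ is the input of that group from the previous layer; $W_{h,g}^{(l)}$ and $b_{h,g}^{(l)}$ are hazard/group-specific weights and biases; and $\sigma_{h,g}$ represents the activation function for hazard $h$ and group $g$.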

- Sequence 3: Cross-Group Abstraction

As the model digs deeper, it stops looking only within each group and starts mixing ideas across them. This is where drought-altered vegetation might interact with windy conditions to heighten fire potential. The network recombines the groups into a single conversation to learn cross-domain interactions. Without this step, the model would reason only “within boxes” and miss the bigger picture. The mathematical form follows:

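$$A_h^{(l)} = \sigma_h\left(\left[A_{h,1}^{(l-1)} \,\Vert\, \cdots \,\Vert\, A_{h,G_h}^{(l-1)}\right] W_h^{(l)} + b_h^{(l)}\right) \tag{5}$$

where $[\,\cdot \,\Vert\, \cdot\,]$ denotes concatenation of the $G_h$ group feature vectors along the feature axis for hazard $h$ at the previous layer.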

- Sequence 4: Hazard-Specific Shortcut Connection

Sometimes the raw, untouched input variables still carry vital information that should not be lost. To prevent this, we add a “shortcut” that sends some of the original data forward alongside the processed version. The amount of original data carried along this “spare cable” differs between the two hazards: drought prediction might keep more of the raw climate patterns, while wildfire prediction might benefit more from transformed feature maps. Mathematically, it follows the expression:

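$$H_h^{(l)} = \alpha_h^{(l)} A_h^{(l)} + \left(1 - \alpha_h^{(l)}\right) X_h \tag{6}$$

where $\alpha_h^{(l)}$ controls the hazard-specific blend of learned vs. raw features at layer $l$.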

- Sequence 5: Hazard-Dependent Objective Function

Finally, the model needs to judge its performance, but drought and wildfire are graded differently. Drought is more about catching persistent biases; wildfire focuses on avoiding huge, one-off misses. So, our “report card” changes its weighting to match the hazard’s priorities. In mathematical terms:

where a hazard-specific weighting parameter tunes the error sensitivity according to hazard $h$.

For model training, a supervised learning approach was adopted, utilizing presence and absence samples for each hazard as ground-truth labels. The input dataset included all selected and multicollinearity-filtered controlling factors, resampled to a unified 30 m spatial grid to ensure consistency across layers, align with the highest-resolution predictors, and enable high-resolution hazard mapping outputs. For all coarse-to-fine resampling steps, we used bilinear interpolation for continuous variables, chosen for its ability to smooth transitions between coarse grid values while avoiding the blockiness of nearest-neighbor resampling. The dataset was randomly split into training and testing subsets with a 70:30 ratio, ensuring adequate representation of both presence and absence points in each subset [12]. Initially, baseline hyperparameters were selected based on the model’s default values, including 64 output feature dimensions, 64 virtual batches, 5 masks, and 8 abstract layers. The MAE-based loss function was minimized over 100 epochs, with early stopping criteria based on validation loss fluctuations to prevent overfitting and ensure training efficiency. Subsequently, these baseline hyperparameters were subject to further optimization using the WOA, as detailed in the next section. The integration of WOA allowed for dynamic fine-tuning of key hyperparameters, aiming to elevate model predictive performance and stability. Upon successful training, the DANet model produced continuous susceptibility probability maps for both wildfire and drought across the study area. These continuous outputs were subsequently classified into five discrete susceptibility classes using the natural breaks (Jenks optimization) method, enabling a clear and intuitive representation of varying risk zones. 
The final trained DANet models, both solitary and WOA-coupled, were rigorously evaluated using multiple performance metrics, as described in subsequent sections.
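A 70:30 split that keeps both presence and absence points represented in each subset can be sketched as a stratified random split. The example below is illustrative only: the function name, the seed, and the assumption of one absence point per presence point (309 wildfire presences plus 309 absences) are hypothetical, since the exact sampling procedure is not detailed here.

```python
import random

def stratified_split(labels, train_frac=0.7, seed=42):
    """Return (train_idx, test_idx) with each class split at train_frac."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)                      # random order within the class
        cut = round(train_frac * len(idx))    # class-wise 70 % boundary
        train.extend(idx[:cut])
        test.extend(idx[cut:])
    return sorted(train), sorted(test)

# Assumed label vector: 1 = presence, 0 = absence (equal counts are an assumption).
labels = [1] * 309 + [0] * 309
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Splitting within each class, rather than over the pooled samples, guarantees that the presence-to-absence ratio is the same in the training and testing subsets.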

2.5. Whale Optimization Algorithm (WOA) for Hyperparameter Tuning

Choosing the right architecture for a DANet, deciding how many layers to include, how many neurons each layer should have, the strength of skip connections, and the type of activation functions to employ, can have a profound effect on predictive performance. Attempting to tune all these hyperparameters by hand is akin to guessing the correct combination to a safe without knowing how many dials there are or how many digits each can take. To address this challenge in a systematic and efficient way, the WOA was selected to automate the tuning process. The WOA draws inspiration from the hunting strategy of humpback whales known as “bubble-net feeding”, and alternates between two complementary behaviors. In its exploration phase, it searches broadly across the parameter space, testing widely different configurations such as varying the number of abstraction layers or dramatically altering neuron counts. In its exploitation phase, it focuses on the most promising solutions found so far, refining them by making targeted adjustments such as fine-tuning skip connection weights in a wildfire-specific model. This approach inherently avoids the risk of bias toward one hazard’s optimal configuration: a drought-focused DANet might achieve its best performance with deeper architectures to capture slow-developing cumulative effects, whereas a wildfire-focused DANet might benefit from fewer but wider layers to detect rapidly changing spatial patterns. By treating each hazard’s architecture search independently, the WOA ensures that wildfire models do not “inherit” drought-optimized parameters and vice versa. Additionally, the WOA is highly efficient in high-dimensional optimization problems, achieving near-optimal solutions with far fewer evaluations than exhaustive strategies such as grid search, a crucial advantage when each trial entails retraining a deep network. 
Finally, the WOA’s adaptability allows it to perform well even when optimization landscapes differ markedly between hazards, dynamically adjusting its search strategy without the need for reprogramming.

In other words, the WOA combines exploration and exploitation phases effectively via its adaptive encircling and bubble-net feeding mechanisms, which makes it particularly adept at avoiding premature convergence and navigating complex, high-dimensional search spaces often encountered in deep learning hyperparameter tuning [44]. The WOA also demonstrates competitive performance with respect to convergence speed and solution accuracy in parameter-rich optimization tasks. However, there is no universally best metaheuristic algorithm; comparative results often depend on dataset characteristics, the specifics of the optimization problem, and the model architecture. Additional advantages of the WOA include its ease of implementation, minimal parameter tuning requirements, and scalability to large optimization problems. In contrast to traditional search strategies such as grid and random search, which become computationally prohibitive for complex deep learning models, the WOA’s adaptive mechanism accelerates convergence toward optimal solutions while efficiently navigating high-dimensional parameter spaces. These factors collectively underpin the choice of the WOA as an efficient, robust, and generalizable optimizer for coupling with the DANet architecture in the context of multi-hazard susceptibility mapping.

A key methodological innovation of this study is the integration of the DANet and WOA into a single coupled modeling workflow. This unified framework enables fully automated, end-to-end hyperparameter optimization and model training for susceptibility mapping of each hazard, minimizing manual intervention and ensuring that the best-performing DANet architecture is systematically identified for both drought and wildfire scenarios. The DANet and WOA work together much like an architect and a consultant. The DANet acts as the architect, capable of designing a model structure tailored to the “climate” of each hazard, in this case, the specific conditions and data characteristics of drought and wildfire. The WOA plays the role of the consultant, running simulations to refine those designs until they are ideally suited to each hazard’s needs. For drought, the WOA may determine that a deeper network with more layers is best, allowing the model to capture the gradual, long-term interplay between climate conditions and vegetation stress. For wildfire, the WOA might settle on a shallower but wider network, better able to detect rapid ignition and spread patterns driven by sudden changes in wind, fuel, and terrain. This partnership between the DANet and WOA addresses two core challenges at once. First, each hazard receives a network architecture that is custom-built to reflect its own underlying drivers, rather than relying on a one-size-fits-all model. Second, the optimization process is entirely automatic and reproducible, with each design tested and tuned using the performance measures most relevant to the hazard it is intended to predict.

Hyperparameter tuning involves finding the optimal configuration of a model’s training and architectural parameters, such as the learning rate, batch size, and the number of layers, to enhance model accuracy and minimize overfitting. To optimize the DANet model’s performance, the WOA was integrated as a metaheuristic optimization method. The WOA is inspired by the hunting behavior of humpback whales and is known for its ability to efficiently explore large search spaces and converge to optimal or near-optimal solutions. The WOA searches for optimal hyperparameters by updating each candidate solution based on the simulated encircling of prey [44]:

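$$\vec{D} = \left|\vec{C} \cdot \vec{X}^{*}(t) - \vec{X}(t)\right|, \qquad \vec{X}(t+1) = \vec{X}^{*}(t) - \vec{A} \cdot \vec{D} \tag{8}$$

where $\vec{X}(t)$ is the current position (hyperparameters), $\vec{X}^{*}(t)$ is the best-known position, $\vec{D}$ is the distance between them, while $\vec{A}$ and $\vec{C}$ are coefficients controlling exploration and exploitation and are updated per iteration.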

The WOA is particularly useful in high-dimensional optimization problems, such as hyperparameter tuning, where traditional methods like grid search or random search may not be as effective. The WOA operates by simulating the search for food by whales, adjusting the positions of potential solutions based on an exploration–exploitation strategy. This allows the algorithm to balance global search (exploration) and local search (exploitation), which is crucial when optimizing complex models like the DANet. In this study, the WOA was employed to fine-tune the hyperparameters of the DANet model. It adjusted parameters such as the learning rate, number of epochs, batch size, and other model-specific parameters to improve the performance of the model. The optimization process was conducted in conjunction with the model training, allowing for real-time adjustments during the learning process. The performance of the hyperparameter-tuned DANet model was evaluated using multiple metrics, such as Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE), to ensure that the model was accurately capturing the underlying patterns of wildfire and drought susceptibility. The final tuned parameters were used in the subsequent stages of model evaluation and validation.
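The exploration–exploitation mechanics of the WOA can be sketched compactly. The example below follows the commonly described form of the algorithm (encircling the best solution, random search when the coefficient |A| is at least 1, and a spiral move), with scalar coefficients per whale for brevity. The toy objective stands in for a validation loss, and the population size, iteration count, and bounds are assumptions rather than the settings used in this study.

```python
import math
import random

def woa_minimize(objective, bounds, n_whales=12, n_iter=40, seed=0, b=1.0):
    """Minimal Whale Optimization Algorithm sketch for box-bounded minimization."""
    rng = random.Random(seed)

    def clip(pos):
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(pos, bounds)]

    whales = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_whales)]
    best = list(min(whales, key=objective))
    best_fit = objective(best)
    history = [best_fit]

    for t in range(n_iter):
        a = 2.0 * (1.0 - t / n_iter)           # decreases linearly from 2 to 0
        for i, x in enumerate(whales):
            A = 2.0 * a * rng.random() - a      # exploration/exploitation switch
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                # |A| < 1: encircle the best whale; otherwise follow a random whale.
                ref = best if abs(A) < 1.0 else rng.choice(whales)
                new = [r - A * abs(C * r - xi) for r, xi in zip(ref, x)]
            else:
                # Spiral (bubble-net) move around the best position.
                l = rng.uniform(-1.0, 1.0)
                new = [abs(bv - xi) * math.exp(b * l) * math.cos(2 * math.pi * l) + bv
                       for bv, xi in zip(best, x)]
            whales[i] = clip(new)
        candidate = min(whales, key=objective)
        if objective(candidate) < best_fit:
            best, best_fit = list(candidate), objective(candidate)
        history.append(best_fit)
    return best, best_fit, history

def toy_loss(position):
    """Stand-in for a validation loss over two continuous hyperparameters."""
    x, y = position
    return (x - 3.0) ** 2 + (y + 1.0) ** 2

best, best_fit, history = woa_minimize(toy_loss, [(-5.0, 5.0), (-5.0, 5.0)])
print(best, best_fit)
```

In an actual tuning run, each call to the objective would train and validate a DANet with the candidate hyperparameters, and integer-valued settings such as the number of abstract layers would need to be rounded before evaluation.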

2.6. Generation of Susceptibility Maps and Five-Class Classification

After training the Deep Abstract Network (DANet) under both modeling scenarios (i.e., solitary and coupled with WOA) for wildfire and drought susceptibility prediction, the continuous output probability maps were generated for both phenomena across the entire study area. These susceptibility maps represent the likelihood of wildfire and drought occurrences at each grid cell, with values ranging between 0 and 1. The continuous probability outputs allow for a detailed view of potential risk zones, but they need to be categorized for practical use in risk assessment and management. Therefore, a classification process was applied to group the continuous susceptibility values into distinct risk categories. To achieve this, the natural breaks (Jenks) optimization method was employed. This method is widely used for classifying continuous data into discrete categories based on natural groupings inherent in the data. The Jenks scheme minimizes the variance within each class and maximizes the variance between the classes, ensuring that the classes are as distinct as possible. For this study, the continuous susceptibility scores from the DANet model were grouped into five classes, reflecting varying degrees of risk: very low, low, moderate, high, and very high, as suggested by Pourghasemi et al. [12] and Kornejady et al. [59,60]. The five-point classification was intended to facilitate practical interpretation and decision-making. The Natural Breaks method was chosen due to its ability to create meaningful categories that are sensitive to the underlying spatial patterns of wildfire and drought occurrences. The resulting susceptibility maps were then visualized and analyzed to identify key areas requiring management interventions, with the highest risk zones prioritized for immediate action.
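The natural breaks criterion (minimum within-class variance) can be illustrated with an exact dynamic-programming sketch. It is quadratic in the number of values, so it suits a sample of susceptibility scores rather than a full 30 m raster, for which GIS software typically applies the method to a sample or histogram. The scores below are invented for illustration, and the function names are hypothetical.

```python
from bisect import bisect_left

def jenks_breaks(values, n_classes=5):
    """Upper bounds of n_classes groups minimizing total within-class squared deviation."""
    x = sorted(values)
    n = len(x)
    if n < n_classes:
        raise ValueError("need at least as many values as classes")
    # Prefix sums give the squared deviation of any slice x[i:j] in O(1).
    s1, s2 = [0.0], [0.0]
    for v in x:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def ssd(i, j):
        total = s1[j] - s1[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    inf = float("inf")
    cost = [[inf] * (n + 1) for _ in range(n_classes + 1)]
    cut = [[0] * (n + 1) for _ in range(n_classes + 1)]
    cost[0][0] = 0.0
    for k in range(1, n_classes + 1):
        for j in range(k, n + 1):
            for i in range(k - 1, j):          # last class is x[i:j]
                c = cost[k - 1][i] + ssd(i, j)
                if c < cost[k][j]:
                    cost[k][j], cut[k][j] = c, i
    bounds, j = [], n
    for k in range(n_classes, 0, -1):          # backtrack the class boundaries
        bounds.append(x[j - 1])
        j = cut[k][j]
    return bounds[::-1]

def classify(value, breaks):
    """Map a score to a class number 1..len(breaks); upper bounds are inclusive."""
    return min(bisect_left(breaks, value), len(breaks) - 1) + 1

LABELS = ["very low", "low", "moderate", "high", "very high"]
scores = [0.02, 0.03, 0.05, 0.20, 0.22, 0.25, 0.45, 0.48, 0.50,
          0.70, 0.72, 0.75, 0.93, 0.95, 0.97]   # hypothetical susceptibility values
breaks = jenks_breaks(scores, n_classes=5)
print(breaks, LABELS[classify(0.22, breaks) - 1])
```

Because the breaks follow gaps in the data rather than fixed intervals, class widths are unequal in general, which is the property that makes the scheme sensitive to the distribution of the susceptibility scores.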

2.7. Model Performance Evaluation and Comparison

In order to assess the performance of the Deep Abstract Network (DANet) model in predicting wildfire and drought susceptibility, several performance metrics and loss function behaviors were analyzed. This section focuses on the statistical metrics used to evaluate the model’s effectiveness, as well as the behavior of the loss function during training and validation. The model performance was compared across different configurations, including the solitary DANet and the coupled model where the DANet was combined with the WOA for hyperparameter tuning.

2.7.1. Statistical Metrics: RMSE and MAE Assessment

To evaluate the accuracy of the model’s predictions, two key statistical metrics were used: Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE). RMSE measures the square root of the average squared differences between predicted and observed values following Equation (9), providing insight into the magnitude of prediction errors. It is particularly sensitive to large errors and is widely used for assessing model performance. On the other hand, MAE calculates the average of the absolute differences between the predicted and actual values following Equation (10), providing a more direct measure of prediction accuracy without emphasizing larger deviations.

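$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{9}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{10}$$

where $y_i$ is the observed value and $\hat{y}_i$ is the predicted value for the $i$-th sample, and $n$ is the number of samples.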

For both wildfire and drought susceptibility predictions, these metrics were calculated for the solitary DANet model and the coupled model. The comparison between these configurations helped evaluate the impact of hyperparameter optimization via the WOA on model performance. These statistical metrics provided a clear indication of how well the model was able to predict susceptibility levels for each phenomenon.
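As a brief worked example of the two metrics, the sketch below computes RMSE and MAE for four hypothetical samples with binary presence/absence labels and predicted susceptibility probabilities. The values are invented for illustration only.

```python
import math

def rmse(observed, predicted):
    """Root Mean Squared Error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed))

def mae(observed, predicted):
    """Mean Absolute Error."""
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)

observed = [1, 0, 1, 0]              # hypothetical presence (1) / absence (0) labels
predicted = [0.9, 0.2, 0.4, 0.1]     # hypothetical predicted probabilities

rmse_value = rmse(observed, predicted)
mae_value = mae(observed, predicted)
print(round(rmse_value, 3), round(mae_value, 3))
```

The single large error (0.6) raises the RMSE above the MAE, which illustrates why RMSE is the more sensitive of the two metrics to occasional large misses.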

2.7.2. Loss Function Behavior Analysis

The loss function behavior was analyzed by tracking both the training loss and validation loss over the course of model training. Monitoring the loss function is crucial to assess the model’s ability to generalize, avoid overfitting, and ensure convergence. For the DANet model, the MAE-based loss function was employed, as it is appropriate for regression tasks and enables the model to optimize its prediction of susceptibility levels [61]. Throughout the training process, both the training and validation losses were recorded for each epoch. The training loss is calculated using the data the model was trained on, while the validation loss is based on a separate set of data that was not used during training. The progression of these losses provided valuable insights into how well the model was fitting the data and whether overfitting or underfitting was occurring. The loss behavior analysis helped identify the need for early stopping to prevent overfitting. Early stopping was implemented by monitoring the validation loss, and if it started to increase while the training loss continued to decrease, the training process was halted. This technique helped to maintain a balance between fitting the model to the training data and ensuring it could generalize to new data effectively.
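The early stopping rule can be sketched as a patience-based check on the validation loss. The patience value, the `min_delta` tolerance, and the loss sequence below are assumptions for illustration; the exact stopping criterion used in training is not specified beyond the description above.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improvement stalls after epoch 3.
val_losses = [0.40, 0.33, 0.29, 0.27, 0.28, 0.29, 0.30, 0.31]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(stop_epoch, best_epoch)
```

In practice the model weights from the best epoch, rather than the stopping epoch, are usually the ones retained.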

2.7.3. Model Validation Using ROC Curve and AUC Values

The model validation process aims to assess the discriminatory ability of the predictive models by utilizing the Receiver Operating Characteristic (ROC) curve and calculating the Area Under the Curve (AUC) values. The ROC curve is a graphical representation that illustrates the performance of the model across various threshold values, plotting the true positive rate (TPR) against the false positive rate (FPR) [62,63]. This curve helps evaluate the trade-off between sensitivity and specificity in model predictions. In this study, we employed ROC curve analysis to compare the performance of both the solitary and coupled models for wildfire and drought susceptibility prediction. The models’ outputs, which consist of continuous susceptibility probability maps, were transformed into binary classifications using varying threshold values. By systematically adjusting the threshold, we were able to observe the change in TPR and FPR. The ROC curve was then plotted, where an ideal model would exhibit a curve that hugs the upper-left corner of the plot, corresponding to a high TPR and a low FPR.

The AUC value, which quantifies the overall ability of the model to distinguish between positive and negative instances, was calculated. AUC values range from 0 to 1, where a value of 1 represents perfect classification, and a value of 0.5 indicates a model performing no better than random chance. AUC values closer to 1 indicate stronger model performance in terms of its predictive power. For each model (both solitary and coupled with WOA), the AUC values were compared to assess their relative performance. The higher the AUC value, the better the model’s ability to correctly classify the areas of high susceptibility to wildfire and drought. The ROC and AUC metrics provided an essential validation step, confirming the robustness of the predictive models in producing accurate susceptibility maps for the study area. This model validation approach is crucial in determining the real-world applicability and reliability of the developed susceptibility maps, ensuring that the models perform well under different conditions and are capable of providing accurate risk assessments.
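The threshold sweep behind the ROC curve and the trapezoidal AUC can be written out in a few lines. The labels and scores below are hypothetical, and the function names are illustrative; libraries such as scikit-learn provide equivalent routines.

```python
def roc_points(labels, scores):
    """(FPR, TPR) pairs obtained by lowering the threshold over all distinct scores."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2.0
               for (x1, y1), (x2, y2) in zip(points, points[1:]))

labels = [1, 1, 0, 1, 0, 0]                 # hypothetical presence/absence labels
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]     # hypothetical susceptibility scores
points = roc_points(labels, scores)
auc_value = auc(points)
print(points, auc_value)
```

Here eight of the nine presence–absence pairs are ranked correctly, so the AUC equals 8/9, which matches its interpretation as the probability that a randomly chosen presence point scores higher than a randomly chosen absence point.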

2.7.4. Statistical Comparison of Susceptibility Classifications

Pairwise z-statistic analysis was used to statistically compare the susceptibility classifications generated by the solitary and coupled modeling scenarios. This comparison aimed to assess whether there were significant differences between the susceptibility maps produced under the two different modeling setups (i.e., solitary DANet model versus the coupled model enhanced by the Whale Optimization Algorithm). To perform the comparison, the susceptibility classes for both wildfire and drought were first assigned using the five-class classification scheme based on the natural breaks (Jenks) optimization method, as described in earlier sections. The susceptibility classes were divided into discrete categories, such as very low, low, moderate, high, and very high, representing varying degrees of risk for wildfire and drought occurrences. A pairwise z-statistic analysis was conducted to determine whether the differences in susceptibility classifications between the two models were statistically significant. The z-statistic test is commonly used for comparing two proportions and assessing whether the observed difference in proportions is greater than what might be expected by chance alone. In this case, the proportions represent the number of pixels or areas classified into each susceptibility class under both the solitary and coupled models. The formula for the z-statistic follows [64,65]:

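$$z = \frac{p_1 - p_2}{\sqrt{p\,(1 - p)\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} \tag{11}$$

where $p_1$ and $p_2$ are the proportions of pixels in each class for the solitary and coupled models, respectively; $p$ is the pooled proportion, calculated as $p = \dfrac{n_1 p_1 + n_2 p_2}{n_1 + n_2}$, where $n_1$ and $n_2$ are the number of pixels in each group; and the denominator accounts for the variance in the two proportions.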

In this study, the z-statistic test was performed for all susceptibility classes as an overall comparison. A z-statistic whose absolute value exceeds the critical threshold (1.96 at the 95% confidence level) indicates that there is a significant difference in the susceptibility classification between the two models. By conducting the pairwise z-statistic analysis, we were able to statistically evaluate whether the addition of the WOA in the coupled model changed the classification of susceptibility in a statistically significant manner. This analysis provided valuable insight into the performance differences of the two modeling approaches, offering a quantitative measure of how the coupled model might enhance classification accuracy compared to the solitary model.
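A two-proportion z-test of this kind can be sketched as follows. The pixel counts are hypothetical (1800 versus 2100 of 10,000 pixels falling in one susceptibility class under the two models) and serve only to show the arithmetic. The two-sided p-value uses the standard normal distribution via `math.erfc`.

```python
import math

def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z-statistic for counts x1 of n1 and x2 of n2."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    return (p1 - p2) / se

def two_sided_p(z):
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))

# Hypothetical counts of pixels in one class: solitary model vs. coupled model.
z_value = two_proportion_z(1800, 10000, 2100, 10000)
p_value = two_sided_p(z_value)
significant = abs(z_value) > 1.96          # 95 % confidence level
print(round(z_value, 3), p_value, significant)
```

With very large pixel counts, even small differences in class proportions become statistically significant, so the magnitude of the difference deserves attention alongside the test result.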

2.7.5. Coupled Hazard Mapping and Interpretation

To facilitate a comprehensive understanding of multi-hazard dynamics, the final step involved the generation of a coupled hazard map by overlaying the individual susceptibility maps for wildfire and drought. This integrated mapping approach enabled the identification of areas subjected to simultaneous threats, as well as zones predominantly vulnerable to either wildfire or drought alone. Importantly, while regions exposed to both wildfire and drought hazards are of particular concern due to the compounded risks they pose, areas exhibiting a singular threat—either wildfire or drought susceptibility alone—were also systematically captured in the coupled map. Recognizing singular hazard zones is crucial, as isolated threats can still cause severe environmental, economic, and societal impacts. Therefore, the coupled mapping framework was designed not only to highlight multi-hazard hotspots but also to guide management efforts toward regions at risk from individual hazards.
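One simple way to realize such an overlay is to combine the two five-class maps cell by cell. The sketch below is an assumption about the overlay rule, not the procedure used in this study: it treats classes 4 and 5 (high and very high) as "susceptible" and labels each cell accordingly. The threshold, the category names, and the tiny example grids are all hypothetical.

```python
def couple_cell(drought_class, wildfire_class, high=4):
    """Label one grid cell from its drought and wildfire classes (1..5)."""
    drought_prone = drought_class >= high
    wildfire_prone = wildfire_class >= high
    if drought_prone and wildfire_prone:
        return "both"
    if drought_prone:
        return "drought only"
    if wildfire_prone:
        return "wildfire only"
    return "neither"

def couple_maps(drought_grid, wildfire_grid, high=4):
    """Cell-by-cell overlay of two equally shaped class grids."""
    return [[couple_cell(d, w, high) for d, w in zip(d_row, w_row)]
            for d_row, w_row in zip(drought_grid, wildfire_grid)]

# Hypothetical 2 x 2 class grids (1 = very low ... 5 = very high).
drought_grid = [[5, 2], [4, 1]]
wildfire_grid = [[4, 5], [2, 1]]
coupled = couple_maps(drought_grid, wildfire_grid)
print(coupled)
```

Keeping the single-hazard categories separate from the "both" category preserves the distinction between multi-hazard hotspots and zones exposed to only one threat.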

3. Results and Discussion

3.1. Multicollinearity Diagnostics of Input Factors

The multicollinearity analysis for drought susceptibility modeling revealed substantial Variance Inflation Factor (VIF) values across several predictor variables (Table 4). Specifically, SRVI exhibited a very high VIF of 95.468, followed by SRWI at 55.505 and RVI at 26.676, far exceeding the acceptable threshold of 10. Additionally, DEM (6.194), SIWSI (6.352), and Temperature (9.523) recorded moderate VIF values but remained below the critical limit. Most other variables, including GNDVI (4.555), IPVI (1.043), MSI (1.023), NDVI (2.009), and NMDI (3.321), displayed VIFs well within acceptable bounds. These results suggest that while the majority of predictors exhibited tolerable collinearity, a few vegetation indices were highly redundant and could potentially compromise model stability if not appropriately handled. For wildfire susceptibility modeling, the multicollinearity results revealed no critical concerns (Table 4). All variables demonstrated VIF values below 10, with DEM (8.116) and Temperature (7.177) being the highest but still within the acceptable range. Environmental and anthropogenic factors, such as Aspect (1.056), Distance to Road (1.097), Land Cover (1.710), and Population Density (1.040), displayed low VIFs, indicating a largely independent feature set. This suggests that the wildfire dataset was structurally less prone to multicollinearity issues compared to the drought dataset, likely due to the broader variety of predictor types involved.

Table 4.

Variance Inflation Factor (VIF) values for drought and wildfire susceptibility modeling predictors.

Overall, the drought susceptibility predictors presented moderate to severe multicollinearity, mainly among remote sensing indices measuring similar biophysical characteristics. In contrast, the wildfire modeling predictors maintained acceptable independence. These findings underscore the importance of robust model architectures like the DANet that can dynamically prioritize and weight input features to minimize the risk of redundancy-driven instability, especially for drought susceptibility assessments.

3.2. Hyperparameter Optimization with WOA

The WOA was employed to optimize the key hyperparameters of the DANet models for both drought and wildfire susceptibility mapping. The optimization process identified distinct sets of hyperparameters that maximized model performance, measured as the best R² score achieved on the validation data. The results of the hyperparameter tuning are summarized in Table 5. For drought susceptibility modeling, the optimized hyperparameters included an output feature dimension of 9, a virtual batch size of 43, five masks, and four abstract layers. These settings collectively contributed to achieving a strong R² value of 0.81 during validation, indicating a high explanatory power of the tuned model in capturing drought susceptibility patterns across the study area. In the case of wildfire susceptibility modeling, the WOA determined an optimal output feature dimension of 32, a virtual batch size of 16, four masks, and two abstract layers. The wildfire model achieved a lower but still respectable R² value of 0.67. This difference can be attributed to the inherently more stochastic nature of wildfire occurrences, which may introduce greater variability and complexity that are more difficult to model compared to drought patterns.

Table 5.

Initial and optimized hyperparameters and best R² values for drought and wildfire models using the solitary and WOA-coupled DANet modeling scenarios.

Overall, the application of the WOA enabled an efficient and effective search through the hyperparameter space. The tuned models, therefore, served as the basis for the subsequent generation and evaluation of susceptibility maps under the coupled (DANet-WOA) model scenario.

3.3. Training and Validation Performance: RMSE and MAE Analysis

Model performance during the training and validation phases was evaluated using two common statistical metrics, RMSE and MAE, presented in Table 6 for both drought and wildfire susceptibility modeling under two scenarios: the stand-alone Deep Abstract Network (DANet) and the WOA-optimized DANet (DANet-WOA). For drought susceptibility mapping, the solitary DANet model achieved an RMSE of 0.20 on the training set and 0.28 on the validation set, along with MAE values of 0.13 and 0.17, respectively. The DANet-WOA model performed better, with RMSE values of 0.13 and 0.21 for training and validation, respectively, and MAE values of 0.07 and 0.11. Similarly, for wildfire susceptibility modeling, the solitary DANet yielded RMSE values of 0.32 (training) and 0.39 (validation) and MAE values of 0.26 and 0.32. After hyperparameter tuning with the WOA, the DANet-WOA model achieved lower RMSE values of 0.28 and 0.36 and MAE values of 0.22 and 0.28 for training and validation, respectively. These results indicate that integrating the Whale Optimization Algorithm lowered prediction errors on both the training and validation datasets for both hazards, with a larger improvement for drought than for wildfire. The reductions in RMSE and MAE point to the effectiveness of WOA-based hyperparameter optimization in fine-tuning model complexity and improving predictive accuracy. The training–validation RMSE gap itself remained at about 0.07–0.08 in both scenarios, so the evidence for reduced overfitting comes mainly from the loss trajectories (Section 3.4) rather than from these metrics.
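For reference, the two metrics and the relative size of the reported improvements can be computed as in the sketch below. The `rmse` and `mae` helpers follow the standard definitions. The `reported` dictionary holds only the validation values quoted in the text, and the small `y_true`/`y_pred` arrays are made-up numbers used solely to exercise the functions.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def relative_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    return 100.0 * (before - after) / before

# Made-up labels and predictions, only to demonstrate the two functions.
y_true = [0, 1, 1, 0]
y_pred = [0.1, 0.8, 0.6, 0.3]
print(rmse(y_true, y_pred), mae(y_true, y_pred))

# Validation metrics quoted in the text: (DANet, DANet-WOA).
reported = {
    "drought": {"rmse": (0.28, 0.21), "mae": (0.17, 0.11)},
    "wildfire": {"rmse": (0.39, 0.36), "mae": (0.32, 0.28)},
}
reductions = {
    hazard: {m: round(relative_reduction(*pair), 1) for m, pair in metrics.items()}
    for hazard, metrics in reported.items()
}
print(reductions)
```

Expressed this way, the validation RMSE falls by about 25% for drought and about 8% for wildfire, which makes the uneven benefit of tuning across the two hazards easier to see.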

Table 6.

RMSE and MAE values of drought and wildfire susceptibility models under DANet and DANet-WOA scenarios.

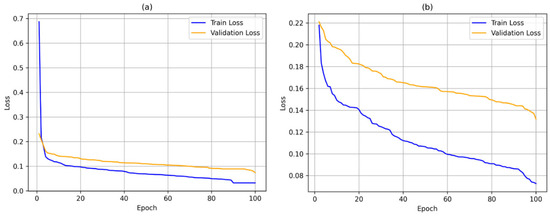

3.4. Loss Function Trajectory During Model Training

The loss function plots provide insight into the learning dynamics and generalization behavior of the models for both hazards. For the solitary DANets (Figure 5), the drought model (Figure 5a) shows a relatively steep decline in both training and validation loss within the first 10–15 epochs, followed by stabilization with gradual improvement. However, a visible gap persists between the training and validation losses, suggesting moderate overfitting. For wildfire (Figure 5b), the losses also trend downward, but the gap between the training and validation curves is more pronounced and the curves remain almost parallel, indicating that the original DANets generalize less well for wildfire hazard modeling.

Figure 5.

Loss function of DANets: (a) drought and (b) wildfire.

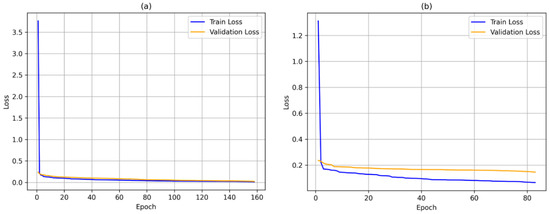

The WOA-tuned DANets (DANets-WOA; Figure 6) behave notably differently. For drought (Figure 6a), the model rapidly minimizes both training and validation losses within the first 10–20 epochs, and the two curves stay very close throughout training. This indicates that WOA-based hyperparameter tuning improved generalization and mitigated overfitting. Moreover, the final loss values are substantially lower than those of the solitary DANets. For wildfire (Figure 6b), convergence is much smoother than with the solitary DANets, and while a slight gap remains, it is narrower and shrinks progressively across epochs, supporting the effectiveness of WOA tuning even for the more complex and spatially stochastic wildfire data. Overall, these observations suggest that DANets-WOA not only converged faster but also achieved a better balance between fitting and generalization for both hazards, underscoring the role of hyperparameter optimization in model robustness.

Figure 6.

Loss function of DANets-WOA: (a) drought and (b) wildfire.

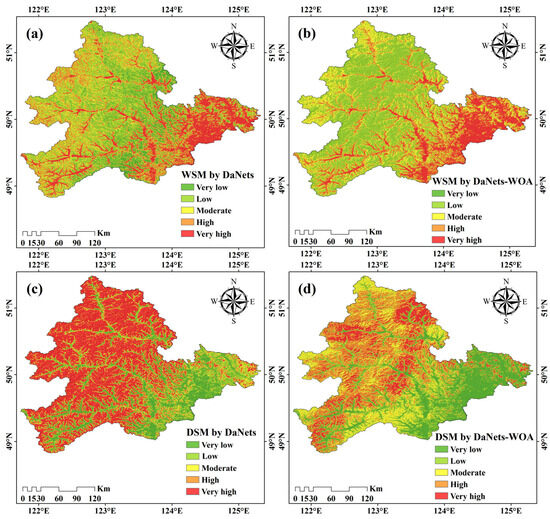
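The visual comparison of the curves can be complemented by a simple numeric summary of the training–validation gap. The sketch below is one possible way to do this. The helper `mean_gap`, the choice of averaging over the last 10 epochs, and the loss curves themselves are assumptions made for illustration; the curves are synthetic and are not the study's loss histories.

```python
import numpy as np

def mean_gap(train_loss, val_loss, last_k=10):
    """Average (validation - training) loss over the final `last_k` epochs."""
    train = np.asarray(train_loss, dtype=float)[-last_k:]
    val = np.asarray(val_loss, dtype=float)[-last_k:]
    return float(np.mean(val - train))

# Synthetic curves for illustration only.
epochs = np.arange(100)
train = 0.05 + 0.5 * np.exp(-epochs / 8.0)           # fast early decline
val_parallel = train + 0.08                          # gap stays constant
val_closing = train + 0.08 * np.exp(-epochs / 40.0)  # gap shrinks over time

gap_parallel = mean_gap(train, val_parallel)
gap_closing = mean_gap(train, val_closing)
print(gap_parallel, gap_closing)
```

A persistent, roughly constant gap corresponds to the parallel-curve behavior described for the solitary wildfire model, while a gap that decays toward zero corresponds to the narrowing pattern described for the tuned models.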

3.5. Susceptibility Map Generation for Wildfire and Drought

The generation of susceptibility maps using both the solitary DANets models and their WOA-optimized counterparts reveals spatial patterns that are the cumulative result of interactions among multiple environmental, climatic, and anthropogenic factors. In Figure 7, four maps are presented—two for wildfire susceptibility (from the solitary and WOA-tuned models) and two for drought susceptibility—each of which reflects the underlying gradients evident in the factor maps. By carefully comparing these outcomes with the mapping of individual parameters such as topography, vegetation indices, climatic conditions, and human-related influences, it becomes possible to elucidate the mechanisms driving the spatial distribution of risk.

Figure 7.

Wildfire and drought susceptibility maps under solitary and WOA-tuned DANet models: (a) wildfire susceptibility by DANet, (b) wildfire susceptibility by DANet-WOA, (c) drought susceptibility by DANet, and (d) drought susceptibility by DANet-WOA.

For wildfire susceptibility, the maps show that the eastern parts of the Oroqen Autonomous Banner are consistently marked by “high” and “very high” risk, a result that may seem unexpected at first glance because these areas also receive relatively high precipitation. A closer examination of the contributing factors resolves this apparent paradox. In the east, high temperatures coincide with the elevated precipitation, and rainfall is likely episodic rather than sustained, so high temperatures and rapid evaporation dry out fuels quickly. The region also exhibits topographic features (moderate to steep slopes and sun-exposed aspects) that enhance the drying effect of solar radiation. The wind factor maps reinforce this interpretation by showing higher wind speeds and a pronounced wind effect in these areas, conditions that disperse moisture, expedite drying, and facilitate rapid fire propagation. Moreover, the eastern region stands out for its proximity to roads and elevated population density, which increase the likelihood of accidental ignitions. The land cover configuration further contributes to the risk: the eastern landscape is a mosaic of cropland interspersed with fragmented tree cover, creating multiple fuel continuity interfaces. Thus, even though precipitation is relatively high, intermittent rainfall combined with strong drying conditions under high ambient temperatures and wind results in a net increase in wildfire susceptibility. After applying the Whale Optimization Algorithm, the wildfire susceptibility map becomes more refined, with the high-risk patches in the east attaining greater spatial coherence and over-predicted zones in less critical areas being markedly reduced. This refinement suggests that the optimized model more effectively integrates the complex interplay of high temperature, variable precipitation, wind dynamics, topographic predispositions, and anthropogenic influences.

In contrast, the drought susceptibility maps present a different yet equally multi-faceted spatial pattern. The solitary drought model produces a widespread and diffuse pattern of “high” and “very high” susceptibility across the central and western portions of the study area. Here, a suite of vegetation-related indices—including the MSI, NMDI, OSAVI, and TVI—clearly signal areas suffering from pronounced moisture deficits. In these regions, the vegetation indices point to reduced plant vigor and higher moisture stress, which are further compounded by climatic factors: the factor maps consistently reveal that these areas are subject to moderate-to-high temperatures and comparatively low or temporally uneven rainfall. Additionally, elevation influences the local microclimate, subtly modifying precipitation patterns and enhancing drought effects in some zones. Alongside these climatic drivers, supplementary vegetation indices such as NDVI, GNDVI, and IPVI underscore the weakened state of the vegetation, while RVI, SIWSI, and SRWI help delineate pockets of critically low water availability. The collective signal from these indices is that the central and western areas experience conditions where sustained moisture deficits lead to prolonged stress on the ecosystem. Upon optimization with the Whale Optimization Algorithm, the drought susceptibility map transitions from a diffuse pattern to one with sharper and more coherent boundaries between different susceptibility classes. These refined boundaries closely mirror the abrupt spatial changes seen in the temperature, rainfall, and vegetation index maps, indicating that the optimized model is capturing localized variations in moisture availability and vegetative response more precisely.

Overall, the integrated analysis of the susceptibility and factor maps indicates that the spatial distribution of both wildfire and drought hazards in the Oroqen Autonomous Banner is determined not by any single factor but by the interplay among many. For wildfire, although the eastern areas receive relatively high precipitation, the overriding influence of high temperatures, strong winds, topographic predispositions (such as steep slopes and sun-exposed aspects), and heightened anthropogenic activity collectively exacerbates the fire risk. For drought, high temperature (except along a small portion of the western and northwesternmost ridges), insufficient and episodically distributed rainfall, and widespread signs of vegetation stress combine to create extensive zones of vulnerability, particularly in the central and western regions. The maps refined through WOA tuning show less spatial noise and overgeneralization, yielding outputs that more closely reflect the underlying environmental gradients. These results strengthen the susceptibility modeling and provide actionable insights for risk mitigation and management strategies in the region.

3.6. Multi-Hazard-Coupled Mapping and Spatial Interpretation

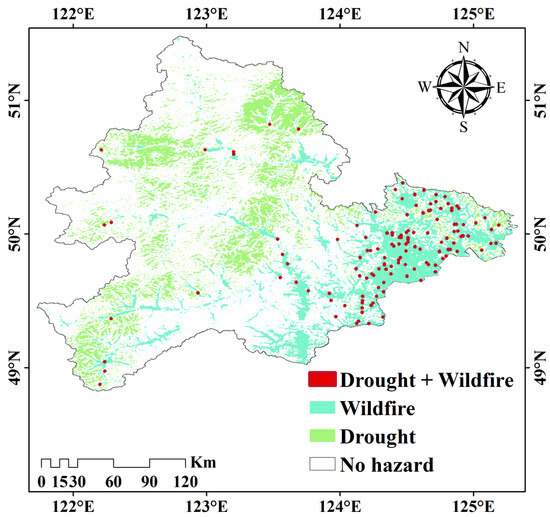

The multi-hazard-coupled map, integrating both wildfire and drought susceptibility assessments, provides critical insights into the spatial distribution and interaction dynamics of these two hazards across the study area. As shown in the coupled hazard map (Figure 8), the landscape was classified into four distinct categories: areas affected solely by drought, areas prone solely to wildfire, regions experiencing the co-occurrence of both hazards, and zones largely free from either threat.

Figure 8.

Multi-hazard coupling map showing the spatial distribution of drought, wildfire, and overlapping drought–wildfire hazard zones across the study area.
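The four-category coupling described above can be sketched as a cell-wise overlay of two susceptibility rasters. The example below is a minimal illustration, assuming that a cell counts as hazard-prone when its susceptibility is at or above a cutoff. The function `couple_hazards`, the 0.5 threshold, the class codes, and the 2 × 2 arrays are all hypothetical and do not reproduce the rule or data used in the study.

```python
import numpy as np

# Class codes for the coupled map (assumed encoding).
NO_HAZARD, DROUGHT_ONLY, WILDFIRE_ONLY, BOTH = 0, 1, 2, 3

def couple_hazards(drought, wildfire, threshold=0.5):
    """Overlay two susceptibility rasters into a four-class map."""
    drought_mask = np.asarray(drought) >= threshold
    wildfire_mask = np.asarray(wildfire) >= threshold
    # Drought contributes 1 and wildfire contributes 2, so the sum is
    # 0 (neither), 1 (drought only), 2 (wildfire only), or 3 (both).
    return drought_mask.astype(np.uint8) + 2 * wildfire_mask.astype(np.uint8)

# Tiny made-up rasters with one cell of each category.
drought = np.array([[0.9, 0.2], [0.7, 0.1]])
wildfire = np.array([[0.1, 0.8], [0.6, 0.3]])
coupled = couple_hazards(drought, wildfire)

# Share of the area falling in each class.
shares = np.bincount(coupled.ravel(), minlength=4) / coupled.size
print(coupled)
print(shares)
```

The class shares give a quick way to compare the extent of single-hazard and dual-hazard zones once the same overlay is applied to full-resolution maps.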

Spatial analysis reveals notable hazard segregation shaped by land cover types. The eastern parts of the study area—characterized by extensive cropland and interspersed patches of tree cover (Figure 4u)—show a pronounced dominance of wildfire susceptibility, with a spreading pattern toward the central and western grasslands, particularly in open spaces embedded within dense forest cover. This pattern is consistent with the vegetation structure: dense biomass, particularly in cropland–forest transitional zones, provides ample fuel loads, and when combined with dry climatic anomalies, elevates wildfire risk. Cropland areas, though anthropogenically managed, can accumulate combustible residues, further increasing fire potential under drought conditions. Conversely, the western and central parts of the study area, dominated by continuous tree cover and grassland, are predominantly affected by drought hazards. These areas are generally typified by more open canopies, semi-arid microclimates, and limited water retention capacity, making them highly vulnerable to prolonged dry periods and meteorological droughts.

The sporadic co-occurrence of drought and wildfire hazards is especially prominent in ecotonal zones, that is, transitional areas where cropland, sparse vegetation, and tree cover intermix. Here, drought-induced vegetation stress raises flammability, creating an environment highly conducive to fire ignition and rapid spread. Although the spatial extent of dual-hazard zones is limited compared with single-hazard zones, their location in transitional landscapes amplifies their importance for hazard management. Another important observation is the spatial configuration of no-hazard zones, which are predominantly concentrated in regions dominated by dense, healthy tree cover and, to a lesser extent, herbaceous wetlands. These land cover types likely confer greater resilience due to better water retention, microclimatic stability, and fire-resistant ecological characteristics. Overall, the coupled hazard mapping emphasizes three key insights: (1) multi-hazard hotspots, although spatially limited, pose substantial compounded risks and should be prioritized for integrated management strategies; (2) single-hazard zones, especially extensive drought-prone grasslands and wildfire-prone croplands, require hazard-specific mitigation and should not be overlooked, as repeated exposure could gradually erode ecological and socio-economic resilience; and (3) landscape heterogeneity plays a decisive role in hazard dynamics, suggesting that one-size-fits-all mitigation strategies are unlikely to be effective and that spatially nuanced, land cover-informed approaches are needed. Thus, the coupled mapping approach offers a decision-support tool for designing proactive, landscape-adapted, multi-hazard risk-reduction frameworks aimed at enhancing environmental sustainability and human security in the region.

3.7. ROC Curve and AUC Performance Evaluation