1. Introduction

Optical remote sensing images constitute a fundamental Earth observation data source, providing multispectral information for monitoring land surface dynamics and playing indispensable roles in environmental assessment [1,2], resource management [3], disaster early warning [4,5], and other applications such as hyperspectral image reconstruction [6]. However, atmospheric interference caused by cloud cover results in information loss in 60–70% of optical data through radiation attenuation and geometric occlusion [7], creating a critical bottleneck that constrains both the temporal availability and the spatial integrity of remote sensing observations [8]. Addressing this challenge requires dedicated research on cloud removal methods that restore data usability, thereby ensuring the reliability of climate monitoring, disaster response systems, and sustainable resource management frameworks.

The existing methods for cloud removal are primarily classified into two groups: traditional model-based approaches and deep learning-based methods. Model-based methods leverage spatiotemporal and spectral correlations within optical remote sensing images to reconstruct cloud-covered regions. In contrast, deep learning approaches utilize deep neural networks to either capture the complex characteristics of optical images or model the relationship between observed and reconstructed images, enabling cloud removal through data-driven optimization.

Traditional model-based approaches for thick cloud removal are mainly categorized into three classes: spatial-based, spectral-based, and temporal-based methods. Spatial-based methods reconstruct the information under clouds by exploiting the spatial correlations within a single image, e.g., image inpainting methods [9,10,11]. Zhu et al. [12] propose an enhanced neighborhood similar pixel interpolator (NSPI) to address thick cloud removal. Maalouf et al. [13] apply the Bandelet transform together with geometric flow to reconstruct cloud-affected regions in remote sensing images. These methods generally perform well on small or structurally simple cloud regions but often struggle with large and complex cloud patterns.

Spectral-based methods [14,15,16] reconstruct cloud-contaminated regions by leveraging auxiliary spectral bands and exploiting the spectral correlations among different wavelengths. Li et al. [15] introduce a multilinear regression method that restores missing observations in the sixth band of Aqua MODIS through spectral correlation analysis. Shen et al. [16] reconstruct cloud-contaminated regions by leveraging the spectral correlations among multispectral bands. These methods can handle large-scale cloud regions under certain conditions, but their performance degrades when inter-band correlation is low or auxiliary information is lacking. Consequently, they are typically applied to thin cloud removal.

Temporal-based methods [17,18,19,20,21,22,23,24,25,26,27] exploit the periodic revisits of optical satellites over the same region, using multitemporal remote sensing images to reconstruct cloud-covered areas through temporal redundancy. Lin et al. [18] use information cloning and the temporal correlations of multitemporal satellite images to reconstruct cloud-covered regions via a Poisson equation-based global optimization. Li et al. [19] combine sequential radiometric calibration with residual correction to effectively remove thick clouds using complementary temporal data. Peng et al. [20] model the global and temporal correlations of remote sensing images using tensor ring decomposition combined with a deep feature fidelity term. Li et al. [21] further develop a tensor ring decomposition-based method that incorporates a gradient-domain fidelity term to enhance temporal consistency in the reconstruction. Ji et al. [28] propose a blind cloud removal (i.e., removing clouds without a predefined cloud mask) and detection method employing tensor singular value decomposition along with a group sparse function. These methods effectively improve the accuracy and robustness of cloud removal by jointly modeling the spatial and temporal correlations in multitemporal images.

With the rapid advance of deep learning in image processing, its superior feature extraction and nonlinear representation capabilities have shown great promise for cloud removal in optical remote sensing images. Most existing methods use convolutional neural networks (CNNs) [29,30,31,32,33], which characterize the local spatiotemporal–spectral properties of remote sensing images using various convolutional kernels. However, the locality of convolution operations limits their ability to capture global features. Zhang et al. [32] propose a learning framework based on spatiotemporal patch groups with a global–local loss function to effectively remove clouds and shadows. Recently, Transformer-based methods have been introduced for cloud removal [34,35,36]. These methods use attention mechanisms to capture long-range dependencies and effectively model non-local spatial or temporal features in remote sensing images. To further enhance reconstruction fidelity, generative adversarial networks (GANs) have been widely adopted [37,38,39,40]. To tackle SAR-to-optical image conversion and cloud contamination removal, a dual-GAN model incorporating dilated residual inception blocks is developed in [40]. Yang et al. [39] introduce a GAN-based framework guided by structural representations of ground objects, leveraging structural feature learning to improve cloud removal performance. Wang et al. [41] develop an SAR-guided spatial–spectral network to reconstruct cloud-contaminated optical images. Czerkawski et al. [42] introduce a deep learning method based on the Deep Image Prior (DIP). Despite their strong expressive power for modeling land-cover structures, most deep learning methods are data-driven and may suffer from generalization issues when applied to images with different resolutions, spectral characteristics, or cloud patterns.

The existing model-based methods typically characterize the prior knowledge of cloud-free component images by manually designing regularization terms from a discrete perspective. However, due to the inherent limitations of handcrafted regularizations, these approaches often fail to accurately model the underlying image priors. To overcome this difficulty, this work proposes a blind cloud removal model from a continuous perspective, in which the cloud-free image is represented by a tensor function and the cloud component is modeled using a band-wise sparse function. Specifically, the cloud-free image is constructed via a tensor function based on implicit neural representation (INR) and Tucker decomposition [43]. By exploiting the powerful representational capability of INRs and the inherent low-rank property of Tucker decomposition, the model effectively captures the image's global correlations and local smoothness. Furthermore, considering that thick clouds obstruct visible light, a band-wise sparse function is proposed to capture the structural characteristics of the cloud component. To preserve the information in cloud-free regions during reconstruction, a thresholding strategy is employed to obtain an initial cloud mask, which is further expanded using convolution to ensure that cloud-covered areas are fully detected. To solve the developed model efficiently, we design an alternating minimization algorithm that decouples the optimization into three interpretable subproblems: cloud-free reconstruction, cloud component estimation, and cloud detection. Comprehensive evaluations on synthetic and real-world data demonstrate that the proposed method achieves superior cloud removal performance and better preserves fine image details.
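To illustrate what "a tensor function built from INR and Tucker decomposition" can look like, the NumPy sketch below samples a Tucker-structured tensor whose factor matrices are produced by coordinate-based networks. This is not the authors' implementation. The following choices are all assumptions made for illustration: the three-way layout (height × width × merged spectral-temporal channels), the sine-activated one-hidden-layer factor MLPs, the ranks, and the random, untrained weights. In the actual method, the core and factor networks would be optimized to fit the observed data.

```python
import numpy as np


def init_factor_mlp(rng, hidden, rank):
    """Random (untrained) one-hidden-layer MLP mapping a scalar coordinate to R^rank (hypothetical)."""
    return {
        "W1": rng.normal(scale=3.0, size=(1, hidden)),
        "b1": rng.uniform(-np.pi, np.pi, size=hidden),
        "W2": rng.normal(scale=1.0 / np.sqrt(hidden), size=(hidden, rank)),
    }


def factor_mlp(params, coords):
    """Evaluate a factor function at 1-D coordinates: shape (n,) -> (n, rank)."""
    hidden = np.sin(coords[:, None] @ params["W1"] + params["b1"])  # sine activation (assumed)
    return hidden @ params["W2"]


def tucker_tensor_function(core, mlps, coord_axes):
    """Sample X[i, j, k] = sum_{a,b,c} G[a,b,c] U1[i,a] U2[j,b] U3[k,c], where U_m = f_m(coords_m)."""
    U1, U2, U3 = (factor_mlp(p, c) for p, c in zip(mlps, coord_axes))
    return np.einsum("abc,ia,jb,kc->ijk", core, U1, U2, U3)


rng = np.random.default_rng(0)
ranks = (4, 4, 3)                      # hypothetical Tucker ranks
core = rng.normal(size=ranks)          # core tensor G
mlps = [init_factor_mlp(rng, hidden=32, rank=r) for r in ranks]

# Sample on a 16 x 16 spatial grid with 6 merged spectral-temporal channels.
channels = np.linspace(-1.0, 1.0, 6)
X = tucker_tensor_function(core, mlps, [np.linspace(-1.0, 1.0, 16)] * 2 + [channels])

# The same parameters can be queried on a finer 32 x 32 spatial grid.
X_fine = tucker_tensor_function(core, mlps, [np.linspace(-1.0, 1.0, 32)] * 2 + [channels])
```

Because each factor is a function of continuous coordinates, the same parameters can be sampled at any resolution. The sampled tensor also inherits the low-rank structure: its mode-1 unfolding has rank at most 4 here.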

The main contributions of this paper are outlined below:

A continuous blind cloud removal model is developed in which the cloud-free image is represented by a tensor function constructed via implicit neural representation and Tucker decomposition. This formulation effectively captures both the global correlations and local smoothness of the image.

A band-wise group sparsity function is introduced to model the spectral and spatial properties of clouds, enabling accurate characterization of cloud structures in the absence of a cloud mask. Furthermore, a thresholding- and convolution-based dilation strategy is designed to automatically detect cloud regions and ensure complete coverage of cloud-contaminated areas.

An alternating minimization algorithm is designed to solve the proposed model. Comprehensive evaluations on synthetic and real-world datasets demonstrate its superior cloud removal performance and enhanced detail preservation.
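The sketch below makes the band-wise group sparsity and the alternating minimization idea concrete on a deliberately simplified toy problem:

$\min_{L,S}\ \tfrac{1}{2}\|Y-L-S\|_F^2 + \lambda\sum_{h,w,t}\|S_{h,w,:,t}\|_2 \quad \text{s.t.} \quad \operatorname{rank}(L)\le r.$

It rests on three assumptions. First, each group is the spectral fiber of one pixel at one time node, reflecting that thick clouds occlude all bands of a pixel together. Second, the cloud-free update uses a truncated SVD as a stand-in for the INR-Tucker tensor function. Third, no cloud detection or box-constraint step is included. All function names are hypothetical. This is not the paper's algorithm.

```python
import numpy as np


def group_norm(S):
    """Band-wise group sparsity: sum over (h, w, t) of the l2 norm of the spectral fiber S[h, w, :, t]."""
    return float(np.linalg.norm(S, axis=2).sum())


def group_soft_threshold(V, tau):
    """Proximal operator of tau * group_norm: shrink each spectral fiber's l2 norm by tau."""
    norms = np.linalg.norm(V, axis=2, keepdims=True)
    scale = np.maximum(0.0, 1.0 - tau / np.maximum(norms, 1e-12))
    return V * scale


def truncated_svd(V, rank):
    """Best rank-`rank` approximation of the (H*W) x (B*T) unfolding (Eckart-Young)."""
    H, W, B, T = V.shape
    U, s, Vt = np.linalg.svd(V.reshape(H * W, B * T), full_matrices=False)
    return ((U[:, :rank] * s[:rank]) @ Vt[:rank]).reshape(H, W, B, T)


def objective(Y, L, S, lam):
    """Toy objective: data fit plus band-wise group sparsity on the cloud component."""
    return 0.5 * np.sum((Y - L - S) ** 2) + lam * group_norm(S)


def toy_alternating_minimization(Y, rank, lam, n_iter=30):
    """Block coordinate descent; each update exactly minimizes over one block."""
    S = np.zeros_like(Y)
    history = []
    for _ in range(n_iter):
        L = truncated_svd(Y - S, rank)           # cloud-free update (low-rank stand-in)
        S = group_soft_threshold(Y - L, lam)     # cloud update (band-wise group sparse)
        history.append(objective(Y, L, S, lam))
    return L, S, history


# Synthetic example: rank-2 background plus a bright patch covering all bands at time node 0.
rng = np.random.default_rng(1)
H, W, B, T = 12, 12, 4, 3
Y = (rng.normal(size=(H * W, 2)) @ rng.normal(size=(2, B * T))).reshape(H, W, B, T)
Y[3:7, 3:7, :, 0] += 2.0
L, S, history = toy_alternating_minimization(Y, rank=2, lam=0.5)
```

Each step is an exact minimizer of its block, so the recorded objective values never increase.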

The organization of the paper is outlined below.

Section 2 introduces the fundamental notations and definitions necessary for understanding our approach.

Section 3 presents the continuous tensor function for modeling the cloud-free image component, the band-wise sparse function for representing the cloud component, and the adaptive thresholding-based cloud detection strategy. Furthermore, a unified framework is designed to jointly detect clouds and reconstruct cloud-contaminated regions.

Section 4 presents comprehensive experimental results on synthetic and real-world datasets to verify the effectiveness of the proposed approach.

Finally, Section 5 concludes the paper.

4. Experiments

To thoroughly assess cloud removal effectiveness, we compare the proposed method with both model-based and deep learning-based approaches, including HaLRTC [25], ALM-IPG [26], TVLRSDC [27], BC-SLRpGS [28], and MT [42]. Among them, HaLRTC, ALM-IPG, TVLRSDC, and BC-SLRpGS are model-based methods, while MT is a deep learning-based approach. HaLRTC and ALM-IPG require cloud masks, whereas BC-SLRpGS, TVLRSDC, MT, and our proposed method operate in a blind cloud removal setting. All methods were carefully tuned for each individual dataset. For the comparison methods, parameters were set according to the authors' original recommendations or tuned via grid search within the ranges suggested in their papers. For the proposed method, the quantile level used in cloud detection and the two regularization parameters (listed in Algorithm 1) were tuned by grid search, and the final values were selected to maximize the mean PSNR on the validation set for each dataset. A detailed discussion of parameter sensitivity is provided in Section 4.5. In the following sections, the performance of the proposed method is tested through both synthetic and real-world experiments. Unless otherwise specified, all experiments were conducted on a workstation equipped with an Intel Core i7-14650HX CPU (Intel Corporation, Santa Clara, CA, USA), 48 GB RAM, a 1 TB hard drive, and an NVIDIA GeForce RTX 4060 Laptop GPU with 8 GB memory (NVIDIA Corporation, Santa Clara, CA, USA). The code implementation of the proposed method is available online at https://github.com/Pfive2025/Cloud-Removal.git (accessed on 25 August 2025).
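The parameter selection procedure described above is an exhaustive search over a Cartesian grid of candidate values. The following sketch shows that procedure in plain Python. Several parts are hypothetical placeholders: the `evaluate` callable (for example, one that runs the method with the given parameters and returns the mean validation PSNR), the parameter names `q` and `lam`, and the toy score surface, which stands in for an actual reconstruction run.

```python
import itertools


def grid_search(evaluate, grid):
    """Exhaustively score every parameter combination and keep the best.

    evaluate: hypothetical callable(params: dict) -> float, e.g. mean validation PSNR.
    grid: mapping from parameter name to the list of candidate values.
    """
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy usage: a made-up score surface stands in for running the reconstruction method.
def toy_score(p):
    return -(p["q"] - 0.9) ** 2 - (p["lam"] - 0.01) ** 2


best, score = grid_search(toy_score, {"q": [0.5, 0.7, 0.9], "lam": [0.001, 0.01, 0.1]})
```

The cost grows with the product of the grid sizes, which is why the search is usually restricted to a few key hyperparameters.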

4.1. Synthetic Experiments

This section is dedicated to evaluating the performance of the proposed model using five simulated datasets and six cloud masks.

4.1.1. Dataset and Metric

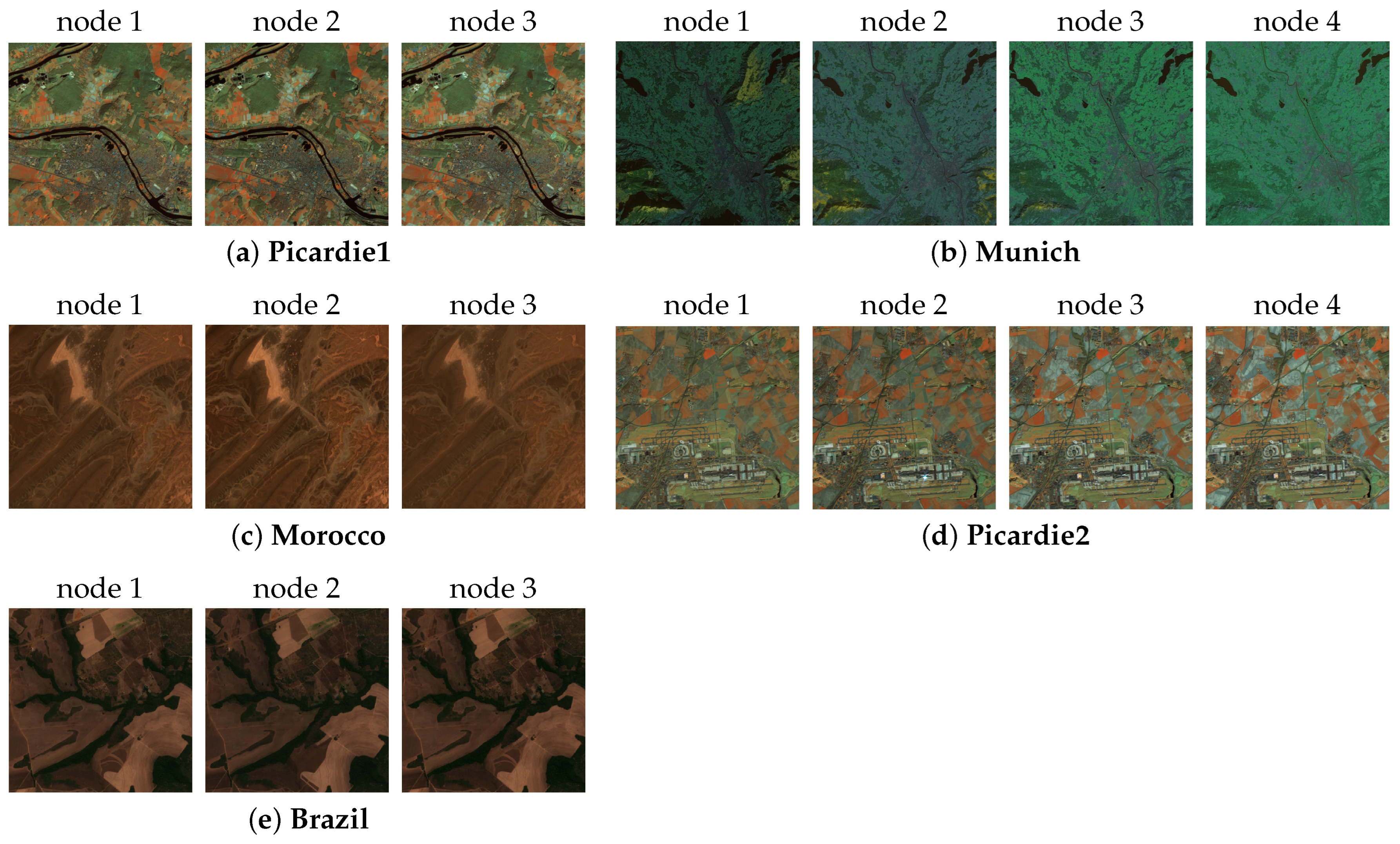

The details of the simulated datasets are provided in Table 2, and representative visual examples are illustrated in Figure 1. The spatial resolution is described by the ground sampling distance (GSD). Specifically, the Munich dataset is acquired from Landsat-8 with a GSD of 30 m; the Picardie1 and Picardie2 datasets are captured by Sentinel-2 with a GSD of 20 m; and the Morocco and Brazil datasets are obtained from Sentinel-2 with a GSD of 10 m.

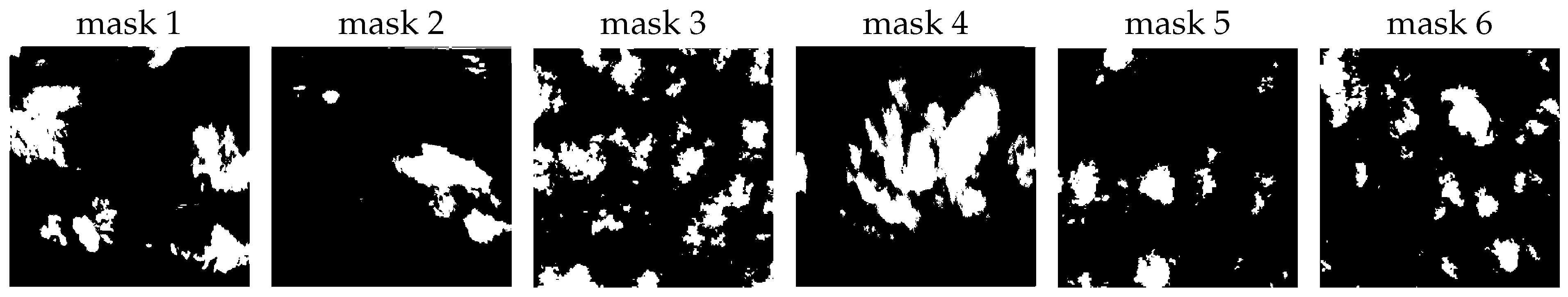

Figure 2 displays the cloud masks used in the experiments, which are extracted from real-world cloud-contaminated remote sensing images (https://dataspace.copernicus.eu/, accessed on 25 August 2025).

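To quantitatively assess the cloud removal performance, we employ three widely used metrics: $\mathrm{PSNR}_t$, $\mathrm{SSIM}_t$, and $\mathrm{CC}_t$. These denote, respectively, the Peak Signal-to-Noise Ratio (PSNR), the Structural Similarity Index Measure (SSIM), and the Correlation Coefficient (CC) at time node $t$. Higher values of all three metrics indicate better reconstruction performance. These metrics are defined as follows:

$$
\mathrm{PSNR}_t = \frac{1}{B}\sum_{b=1}^{B} 10\log_{10}\frac{\mathrm{MAX}_{b,t}^{2}}{\frac{1}{N}\big\|\hat{\mathbf{X}}_{b,t}-\mathbf{X}_{b,t}\big\|_F^{2}},
$$

$$
\mathrm{SSIM}_t = \frac{1}{B}\sum_{b=1}^{B}\frac{\big(2\mu_{\hat{\mathbf{X}}_{b,t}}\mu_{\mathbf{X}_{b,t}}+c_1\big)\big(2\sigma_{\hat{\mathbf{X}}_{b,t}\mathbf{X}_{b,t}}+c_2\big)}{\big(\mu_{\hat{\mathbf{X}}_{b,t}}^{2}+\mu_{\mathbf{X}_{b,t}}^{2}+c_1\big)\big(\sigma_{\hat{\mathbf{X}}_{b,t}}^{2}+\sigma_{\mathbf{X}_{b,t}}^{2}+c_2\big)},
$$

$$
\mathrm{CC}_t = \frac{\sum_{i}\big(\hat{x}_{t,i}-\bar{\hat{x}}_{t}\big)\big(x_{t,i}-\bar{x}_{t}\big)}{\sqrt{\sum_{i}\big(\hat{x}_{t,i}-\bar{\hat{x}}_{t}\big)^{2}}\sqrt{\sum_{i}\big(x_{t,i}-\bar{x}_{t}\big)^{2}}}.
$$

The symbols are defined as follows:

- $\mathbf{X}_{b,t}$ denotes the $b$-th band of the ground truth image at time node $t$, and $\hat{\mathbf{X}}_{b,t}$ is the corresponding reconstructed band.
- $B$ is the number of bands, and $N$ is the number of pixels per band.
- $\mathrm{MAX}_{b,t}$ is the maximum pixel value of $\mathbf{X}_{b,t}$.
- $\mu_{\hat{\mathbf{X}}_{b,t}}$ and $\mu_{\mathbf{X}_{b,t}}$ are the means, and $\sigma_{\hat{\mathbf{X}}_{b,t}}$ and $\sigma_{\mathbf{X}_{b,t}}$ the standard deviations, of $\hat{\mathbf{X}}_{b,t}$ and $\mathbf{X}_{b,t}$, respectively.
- $\sigma_{\hat{\mathbf{X}}_{b,t}\mathbf{X}_{b,t}}$ denotes the covariance between $\hat{\mathbf{X}}_{b,t}$ and $\mathbf{X}_{b,t}$.
- $c_1$ and $c_2$ are predefined constants.
- $\hat{x}_{t,i}$ and $x_{t,i}$ denote the $i$-th pixel of the reconstructed and ground truth images at the $t$-th time node, respectively, and $\bar{\hat{x}}_{t}$ and $\bar{x}_{t}$ are their corresponding mean values.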

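For datasets in which multiple time nodes are affected by clouds, we use the mean values of $\mathrm{PSNR}_t$ and $\mathrm{SSIM}_t$ across all cloud-contaminated time nodes to assess the overall cloud removal performance. These are denoted as MPSNR and MSSIM, respectively, and defined as

$$
\mathrm{MPSNR} = \frac{1}{T_c}\sum_{t\in\mathcal{T}_c}\mathrm{PSNR}_t, \qquad \mathrm{MSSIM} = \frac{1}{T_c}\sum_{t\in\mathcal{T}_c}\mathrm{SSIM}_t,
$$

where $\mathcal{T}_c$ is the set of cloud-contaminated time nodes and $T_c = |\mathcal{T}_c|$ indicates their count in the dataset. MCC is defined analogously by averaging $\mathrm{CC}_t$.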
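A NumPy sketch of the three metrics and their averages over cloud-contaminated time nodes follows. It makes several assumptions. Images are arrays of shape (B, H, W) per time node. PSNR and SSIM are averaged over bands. CC is computed over all pixels of a time node. SSIM uses global (whole-band) statistics; widely used implementations such as scikit-image's `structural_similarity` instead use local sliding windows and will generally give different values. The default constants c1 = 0.01² and c2 = 0.03² assume data scaled to [0, 1]. All function names are illustrative.

```python
import numpy as np


def psnr_t(gt, rec):
    """Band-averaged PSNR for one time node; gt and rec have shape (B, H, W)."""
    vals = []
    for g, r in zip(gt, rec):
        mse = np.mean((r - g) ** 2)
        vals.append(10.0 * np.log10(g.max() ** 2 / mse))   # MAX taken from the ground-truth band
    return float(np.mean(vals))


def ssim_t(gt, rec, c1=0.01 ** 2, c2=0.03 ** 2):
    """Band-averaged SSIM using global (whole-band) statistics; c1, c2 assume data in [0, 1]."""
    vals = []
    for g, r in zip(gt, rec):
        mu_g, mu_r = g.mean(), r.mean()
        cov = np.mean((g - mu_g) * (r - mu_r))
        num = (2 * mu_r * mu_g + c1) * (2 * cov + c2)
        den = (mu_r ** 2 + mu_g ** 2 + c1) * (r.var() + g.var() + c2)
        vals.append(num / den)
    return float(np.mean(vals))


def cc_t(gt, rec):
    """Pearson correlation over all pixels (and bands) of one time node."""
    g = gt.ravel() - gt.mean()
    r = rec.ravel() - rec.mean()
    return float(np.sum(r * g) / (np.sqrt(np.sum(r ** 2)) * np.sqrt(np.sum(g ** 2))))


def mean_metric(metric, gts, recs, cloudy_nodes):
    """MPSNR / MSSIM / MCC: average a per-node metric over the cloud-contaminated time nodes."""
    return float(np.mean([metric(gts[t], recs[t]) for t in cloudy_nodes]))


gt = np.full((2, 4, 4), 0.5)
gt[:, 0, 0] = 1.0          # MAX = 1 in each band
rec = gt + 0.1             # constant offset: MSE = 0.01, so PSNR is about 20 dB
print(psnr_t(gt, rec), ssim_t(gt, gt), cc_t(gt, rec))
```

A constant offset leaves CC at 1 while lowering PSNR. This shows why the three metrics are reported together.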

4.1.2. Quantitative Comparison

The quantitative results (PSNR, SSIM, and CC) for various cloud removal techniques applied to simulated datasets are presented in

Table 3, with the highest values for each metric highlighted in bold. In this table,

,

, and

represent the PSNR, SSIM, and CC values at time node

t, while MPSNR, MSSIM, and MCC denote the average PSNR, SSIM, and CC across all cloud-contaminated time nodes.

To better reflect the complexity of cloud distributions in real-world remote sensing scenarios, the test datasets include Brazil, Morocco, and Munich, where only the first time node is contaminated by clouds, as well as Picardie1 and Picardie2, which contain clouds in multiple time nodes.

As shown in the table, the proposed method outperforms the other methods in terms of PSNR, SSIM, and CC in most cases. For the Picardie2 dataset, all the temporal images are cloud-contaminated, and certain spatial regions are occluded at every time node, making reconstruction particularly challenging. As reported in Table 3, the proposed method achieves the highest MPSNR, MSSIM, and MCC on this dataset, demonstrating strong robustness under severe degradation. At time node 2, its margin over the competing methods is smaller than at the other time nodes. This may be due to higher scene complexity and more pronounced land-cover variation; nevertheless, the proposed method still delivers the best PSNR, SSIM, and CC among all the compared approaches. Moreover, across all four time nodes, the proposed method maintains relatively stable performance, whereas the accuracy of some competing methods varies substantially between time nodes.

Overall, the proposed method achieves the highest average performance across all the datasets, with an average PSNR of 52.16 dB and an average SSIM of 0.9953, clearly outperforming all the competing methods. In addition to accuracy, Table 3 reports the runtime (in seconds) of each method on the same hardware platform. The proposed method attains efficiency comparable to that of model-based methods such as TVLRSDC and ALM-IPG while being substantially faster than deep learning approaches that involve large-scale network inference. This indicates that our method strikes a favorable balance between reconstruction quality and computational efficiency. These results highlight the effectiveness and practicality of the proposed continuous blind cloud removal framework under diverse cloud conditions.

4.1.3. Qualitative Comparison

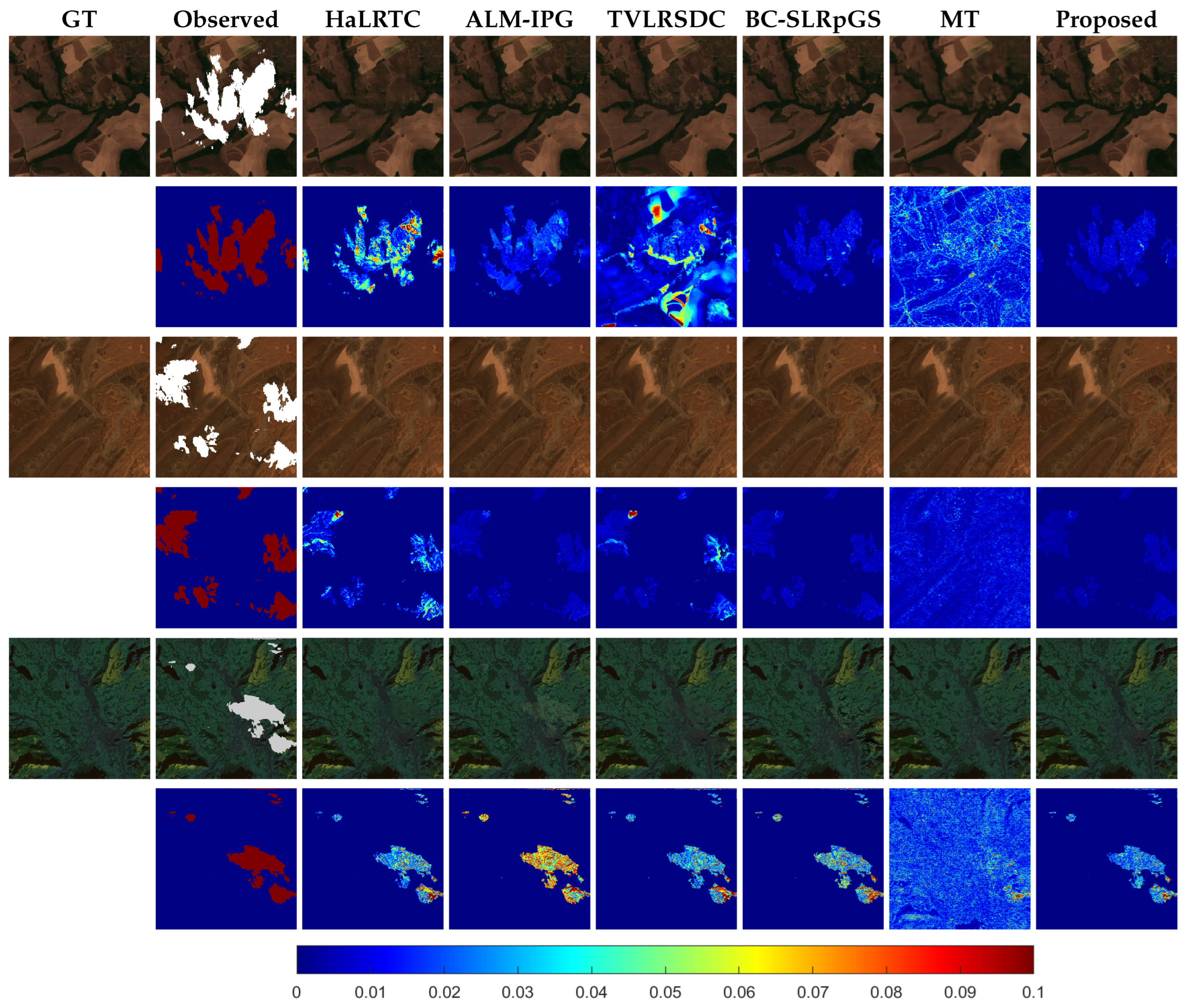

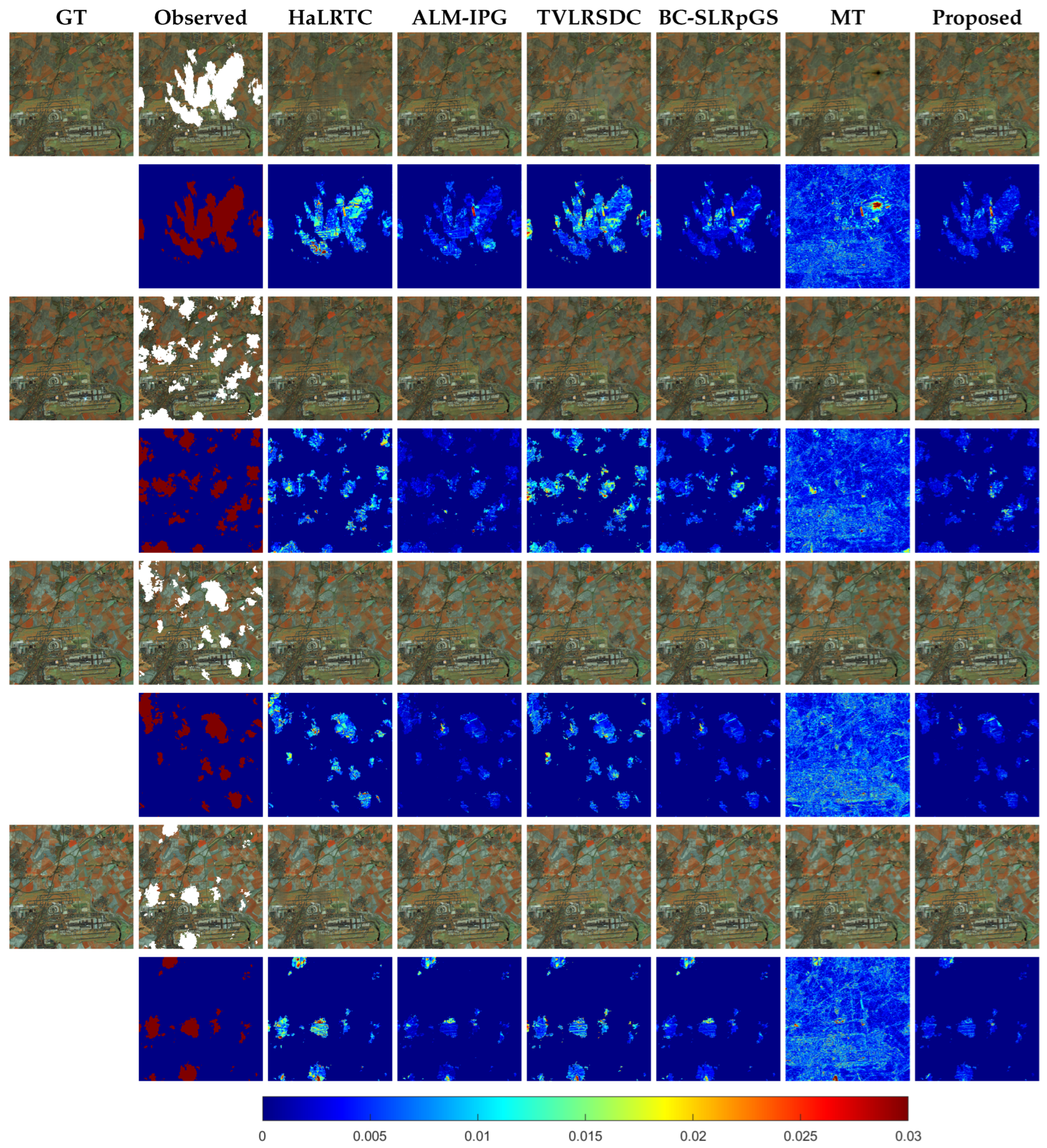

In this section, we compare the cloud removal capabilities of the different methods by presenting both the visual results and the residual maps (i.e., the differences between the reconstructions and the ground truth).

The results for the Brazil, Morocco, and Munich datasets are illustrated in Figure 3, arranged from top to bottom in that order. For each dataset, the top row shows the cloud removal results, and the corresponding residual maps are shown below. In these simulated experiments, only the first time node is contaminated by clouds. As shown in the figure, all the methods produce visually plausible reconstructions, but the residual maps reveal finer differences. Specifically, HaLRTC, ALM-IPG, TVLRSDC, and BC-SLRpGS exhibit large residuals with respect to the ground truth, as indicated by the red regions in their residual maps. Although MT does not produce large red areas in the residual maps, it alters the information in regions that were not contaminated by clouds in the observed image, resulting in lower overall reconstruction quality. In contrast, the proposed method not only achieves superior visual quality but also yields the smallest residuals, with the fewest red regions in its residual maps. This indicates that our reconstructions are the closest to the ground truth among all the compared methods.

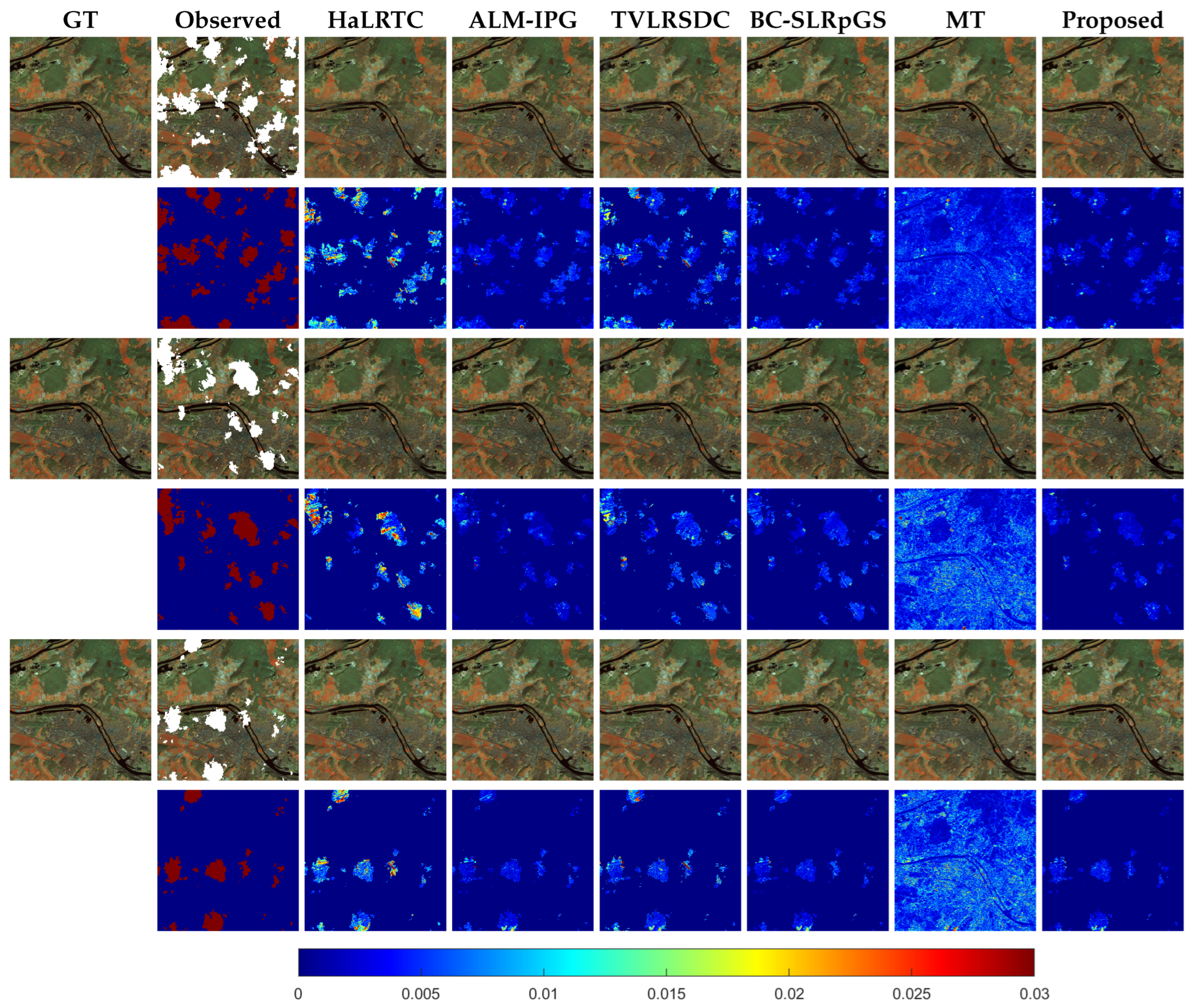

The cloud removal results of each method on the Picardie1 and Picardie2 datasets are presented in Figure 4 and Figure 5, respectively. In both datasets, all the temporal images are cloud-contaminated, and some spatial regions are occluded at all the time nodes. This leads to severe information loss and poses significant challenges for cloud removal. As the figures show, the cloud removal performance of HaLRTC, TVLRSDC, and MT is less satisfactory:

- HaLRTC produces overly smooth results and loses substantial detail.
- TVLRSDC shows inconsistencies between the reconstructed regions and their surroundings.
- MT alters the information in regions that were not contaminated by clouds in the observed image, leading to degraded visual quality.

In contrast, ALM-IPG, BC-SLRpGS, and our approach yield more accurate and visually consistent reconstructions. Of these, ALM-IPG is provided with a cloud mask, whereas BC-SLRpGS and our approach perform blind cloud removal. The residual maps further show that the proposed method produces fewer large residual values, indicating that it preserves more fine details and achieves reconstructions closer to the ground truth.

4.2. Real Experiments

In this section, we evaluate the cloud removal performance of the proposed approach on two real-world cloud-contaminated datasets.

4.2.1. Data

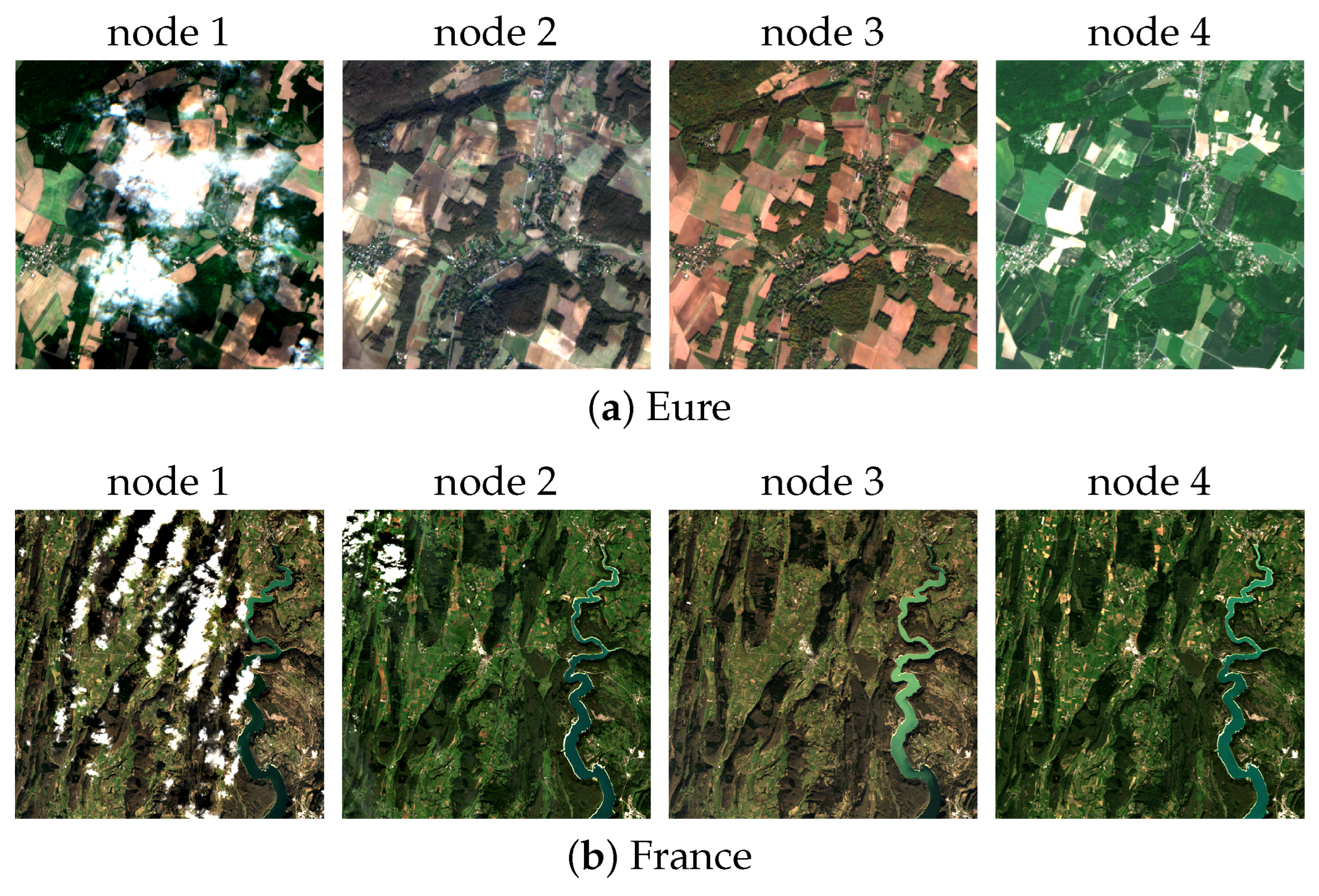

Detailed information on the real datasets used in this section is summarized in Table 4, and the corresponding visual examples are provided in Figure 6. The Eure dataset, captured by Sentinel-2 with a spatial resolution of 10 m, consists of four time nodes (each with four spectral bands), one of which is contaminated by clouds. The France dataset, acquired by Landsat-8 with a spatial resolution of 30 m, also contains four time nodes (each with seven bands), two of which are cloud-contaminated. As Figure 6 shows, the images captured at different times in both datasets exhibit significant variations, which further increases the difficulty of cloud removal.

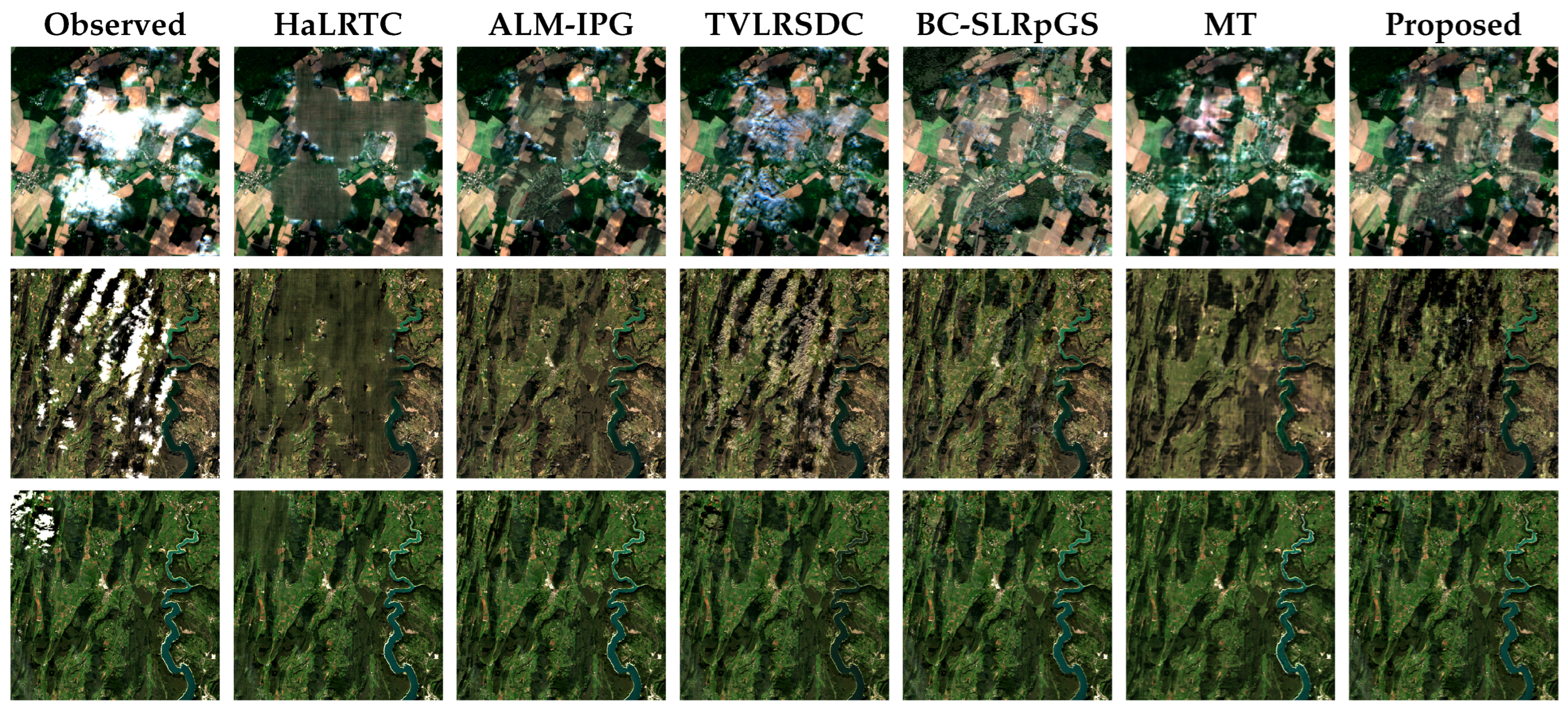

4.2.2. Qualitative Comparison

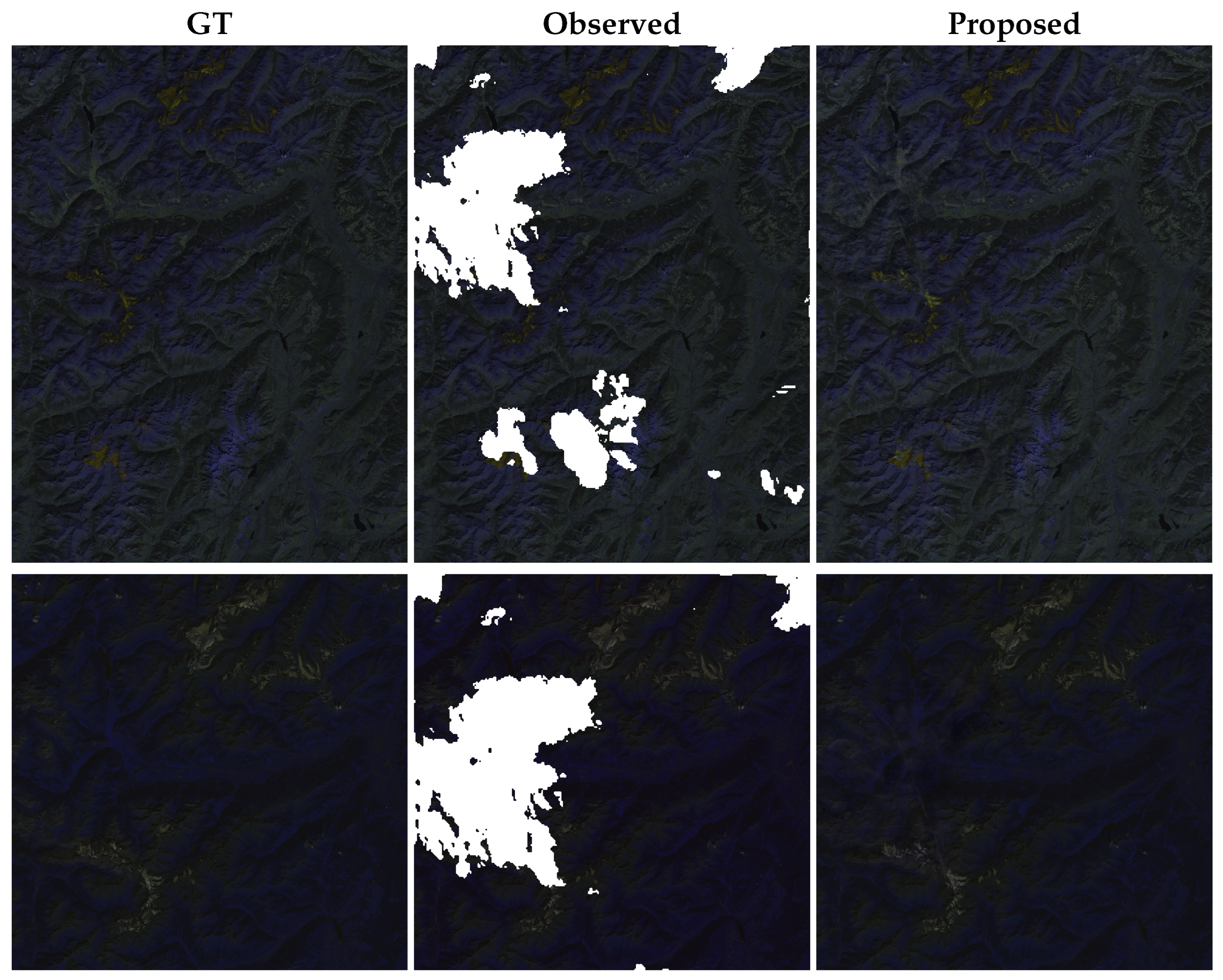

The cloud removal results of all the methods on the two real-world datasets are shown in Figure 7. The first row presents the results for the Eure dataset, and the second and third rows show the first and second cloud-contaminated time nodes of the France dataset. As shown in the figure, HaLRTC fails to effectively reconstruct the cloud-covered areas, particularly on the Eure dataset, where it is unable to recover any meaningful information. The results of ALM-IPG exhibit noticeable inconsistencies between the reconstructed regions and the surrounding cloud-free areas. The results of TVLRSDC and MT still contain visible cloud remnants, suggesting that these methods fail to fully restore the cloud-contaminated information. Both BC-SLRpGS and the proposed method achieve visually pleasing results. Notably, the proposed method better preserves details, demonstrating its superior ability to recover structural information in real-world cloud-contaminated remote sensing images.

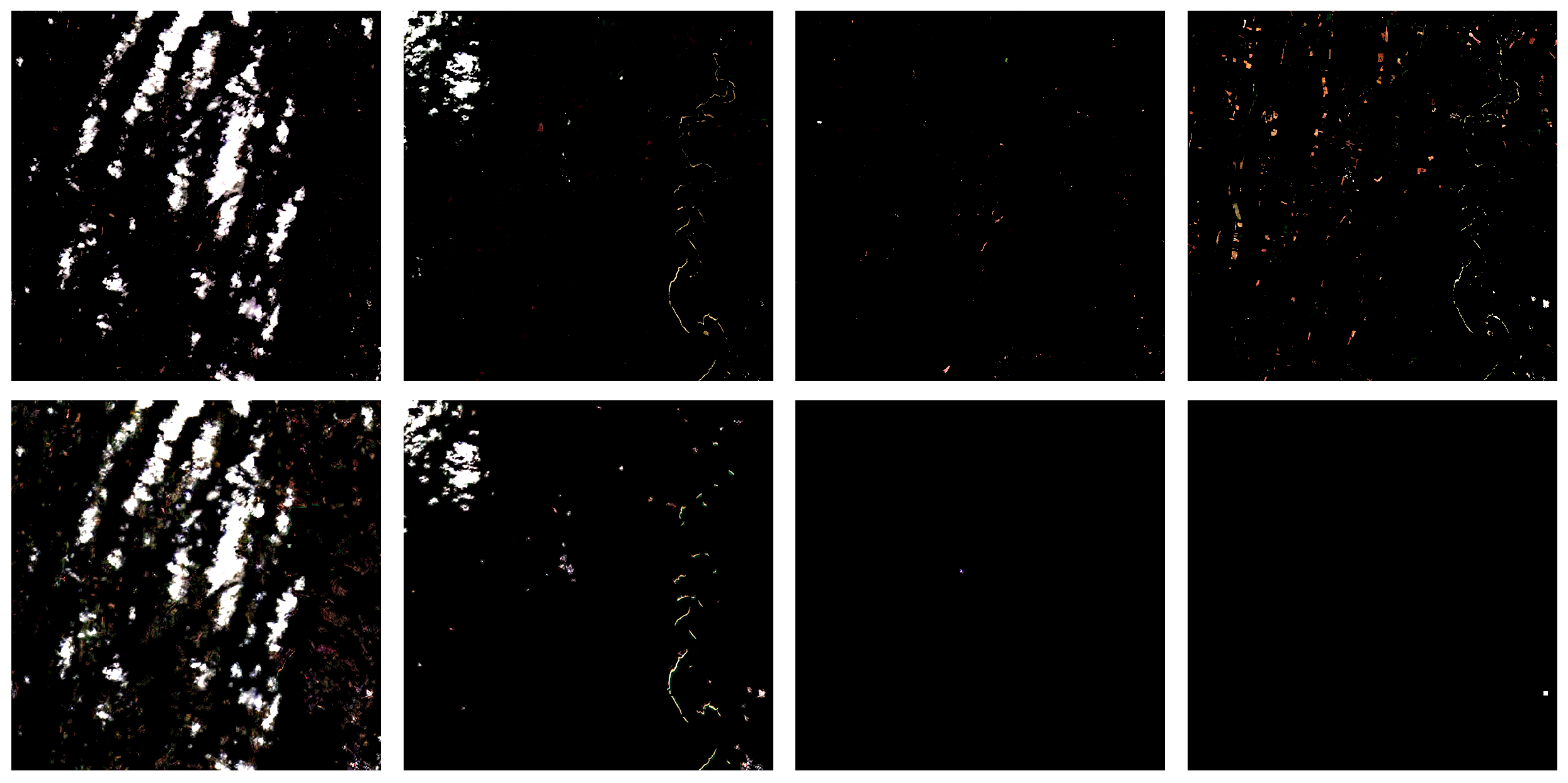

4.2.3. Cloud Detection Results

In this section, we evaluate the cloud detection performance of the proposed method. Among the compared methods, TVLRSDC, BC-SLRpGS, and MT, like our approach, perform blind cloud removal. As demonstrated in the previous experiments, both TVLRSDC and MT tend to alter information in areas unaffected by clouds in the observed images, indicating poor cloud detection performance. Therefore, this section focuses on comparing the cloud detection performance of BC-SLRpGS and our approach.

The cloud detection results (i.e., the estimated cloud components) on the Eure and France datasets are illustrated in Figure 8 and Figure 9. In each figure, the first row shows the cloud detection results of BC-SLRpGS, and the second row shows those of our approach. For time nodes without cloud contamination, BC-SLRpGS incorrectly identifies non-cloud regions as clouds. These are the second, third, and fourth time nodes of the Eure dataset and the third and fourth time nodes of the France dataset. This misdetection leads to undesired modifications of the clean temporal images, ultimately degrading the overall reconstruction quality. In contrast, the proposed method detects almost no cloud components in these cloud-free time node images, preserving the original content where no cloud is present. These results demonstrate that, compared with BC-SLRpGS, the proposed method achieves more precise cloud detection, which in turn contributes to more effective and reliable cloud removal.

4.3. Scalability on Large-Size Images

To further evaluate the scalability of the proposed method, we performed experiments on two large remote sensing images (https://dataspace.copernicus.eu/data-collections, accessed on 25 August 2025). As reported in Table 5, the method consistently achieves high reconstruction accuracy (PSNR above 41 dB and SSIM above 0.98) while maintaining practical computational efficiency (20–34 min per image).

All large-scale experiments were conducted on a server equipped with an Intel Xeon(R) Gold 6348 CPU (2.60 GHz, Intel Corporation, Santa Clara, CA, USA), 100 GB RAM, and an NVIDIA A800 GPU (80 GB memory, NVIDIA Corporation, Santa Clara, CA, USA). The method was implemented in PyTorch 2.1.2 with CUDA 11.8, and the runtimes reported in Table 5 correspond to single-GPU execution.

Figure 10 provides visual examples on the two large test images, showing that the proposed approach successfully reconstructs fine spatial structures even at very high resolutions. These results confirm that the continuous tensor function representation scales well and is applicable to large-scale remote sensing scenarios in practice.

4.4. Ablation Study

To evaluate the contribution of the continuous tensor function representation, we replaced it with a discrete low-rank Tucker decomposition while keeping all the other settings unchanged. As shown in Table 6, our continuous formulation achieves notable improvements, demonstrating its superior capability in modeling fine image details.

To evaluate the influence of the temporal dimension T on the performance of the proposed method, we conducted experiments on the Munich simulated dataset with different values of T. Table 7 reports the quantitative results in terms of PSNR and SSIM. Increasing T consistently improves the restoration quality. For example, the PSNR increases from 33.38 dB to 38.28 dB, and the SSIM improves from 0.9455 to 0.9766, as T increases (see Table 7 for the corresponding values of T). This performance gain can be attributed to the richer complementary information and temporal redundancy provided by additional cloud-free observations, which facilitate more accurate recovery of cloud-covered regions.

In addition, the morphological erosion step is adopted as a preprocessing operation to stabilize detection across different quantile levels. Without erosion, a smaller quantile threshold is typically required to achieve accurate detection, whereas with erosion, comparable detection results can be obtained even with a larger quantile threshold. Since morphological erosion is not the main contribution of this work, we keep it as a fixed auxiliary operation rather than a tunable component and do not provide further ablation studies on this factor.

4.5. Hyperparameter Sensitivity Analysis

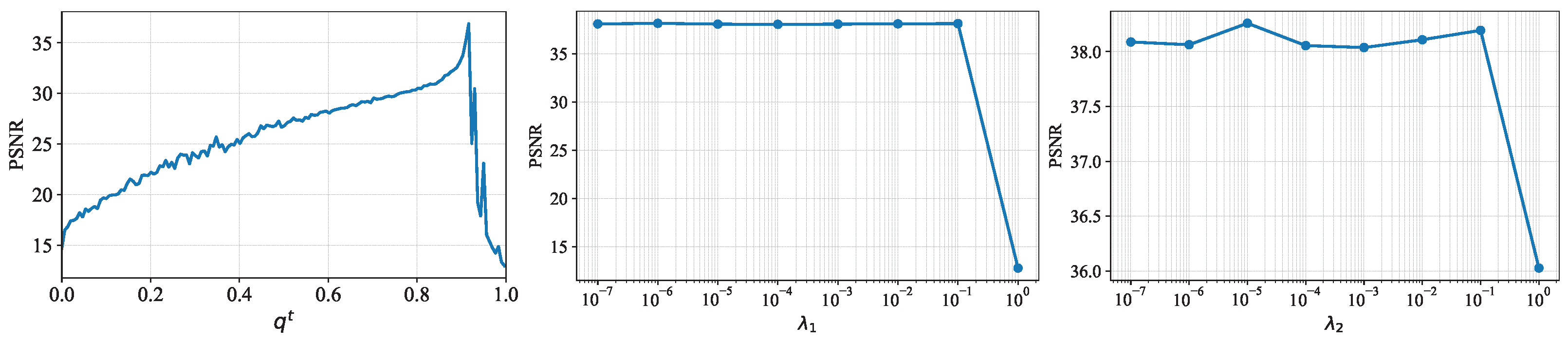

We further conducted a sensitivity analysis on three key hyperparameters of the proposed method: the quantile level used in cloud detection and the two regularization parameters. Experiments were performed on the Munich dataset, and the PSNR values were recorded while varying each parameter independently. Specifically, the quantile level is varied from 0.0 to 1.0 with a step size of 0.1, while each regularization parameter is tested over a set of candidate values.

The results are plotted in Figure 11. As the quantile level increases, the PSNR initially rises, reaches its maximum, and then decreases. A smaller quantile level corresponds to a lower threshold, which enlarges the detected cloud regions and potentially introduces more false positives. Conversely, an overly large quantile level raises the threshold, so that parts of the clouds may go undetected. For the two regularization parameters, the PSNR remains relatively stable, with minor fluctuations, over the first seven tested values but decreases at larger values. This indicates that excessively large regularization weights may over-smooth the reconstruction. These results suggest that quantile levels around 0.9, together with regularization parameters within the stable range, offer robust performance.
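The quantile-threshold behavior discussed above can be reproduced with a small sketch of threshold-based cloud detection followed by convolution-based dilation. This is an illustrative assumption, not the paper's detection step. The cloud intensity is taken as the band-averaged absolute value of an estimated cloud component, and the mask is expanded with a box convolution whose kernel size is hypothetical. The function name `detect_clouds` and the `kernel_size` parameter are also hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def detect_clouds(cloud_component, q, kernel_size=3):
    """Quantile-threshold a cloud-intensity map and dilate it with a box convolution.

    cloud_component: (B, H, W) estimated cloud layer for one time node (assumed input).
    q: quantile level in [0, 1]; a smaller q gives a lower threshold and a larger mask.
    """
    intensity = np.abs(cloud_component).mean(axis=0)        # band-averaged magnitude
    mask = (intensity > np.quantile(intensity, q)).astype(float)
    pad = kernel_size // 2
    windows = sliding_window_view(np.pad(mask, pad), (kernel_size, kernel_size))
    counts = windows.sum(axis=(-2, -1))                      # box-filter response per pixel
    return counts > 0                                        # any detected neighbour -> cloud


# A single bright pixel is expanded into a 3 x 3 cloud region.
cloud = np.zeros((2, 8, 8))
cloud[:, 3, 3] = 1.0
mask = detect_clouds(cloud, q=0.9)
```

Lowering q lowers the threshold, so the mask can only grow, which mirrors the false-positive behavior described above. An all-zero cloud component yields an empty mask.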

5. Conclusions

This paper proposed a novel continuous blind cloud removal model to address the limitations of existing discrete low-rank and sparse prior-based methods. Unlike traditional approaches that rely on manually designed regularization terms, the proposed method represents the cloud-free image component using a continuous tensor function that integrates implicit neural representations with low-rank tensor decomposition. This formulation enables more accurate modeling of both global correlations and local smoothness in remote sensing imagery. For the cloud component, we introduced a band-wise sparse function that effectively captures both the spectral and spatial features of clouds. To preserve details in cloud-free regions during reconstruction, we designed a box constraint guided by an adaptive thresholding-based cloud detection strategy, further enhanced by morphological erosion to ensure precise delineation of cloud boundaries. An alternating minimization algorithm was designed to solve the proposed model efficiently. Extensive evaluations on both simulated and real-world datasets showed that our method consistently outperforms or remains competitive with state-of-the-art approaches in terms of both visual quality and quantitative metrics. These results validate the efficiency and reliability of the proposed continuous framework in tackling cloud removal challenges in optical remote sensing imagery.

While the proposed method demonstrates strong performance across diverse datasets, several limitations remain. First, when applied to extremely large-scale datasets or ultra-high-resolution images, the computational cost may become significant due to the optimization-based nature of the approach, even though our experiments show competitive runtime on moderate-scale data. Second, in scenes with highly dynamic land-cover changes (e.g., rapid vegetation growth or seasonal flooding), the temporal smoothness prior in our model may not fully capture abrupt variations, potentially leading to over-smoothing or incomplete reconstruction in rapidly changing regions.

In future work, we will focus on improving the scalability of the method for large-scale datasets, enhancing adaptability to highly dynamic scenes, and exploring the integration of multimodal data to further improve reconstruction performance.