SARFT-GAN: Semantic-Aware ARConv Fused Top-k Generative Adversarial Network for Remote Sensing Image Denoising

Abstract

Highlights

- We propose ARConv Fused Top-k Attention: it fuses geometry-adaptive ARConv with sparse Top-k attention to couple fine-grained local modeling and long-range aggregation.

- We propose SARFT-GAN: it embeds ARConv Fused Top-k Attention into the generator and introduces a Semantic-Aware Discriminator to exploit semantic priors.

- The method improves perceptual realism and semantic consistency of denoised imagery, benefiting downstream tasks.

1. Introduction

- We develop an ARConv Fused Top-k Attention module that combines geometry-adaptive sampling with sparsified correlation, overcoming the rigidity of fixed kernels and the noise sensitivity of dense attention.

- We introduce a Semantic-Aware Discriminator that leverages priors from pre-trained vision–language models to guide the generator toward physically plausible textures and fine-grained semantic consistency.

- We conduct comprehensive experiments across 3 noise levels × 4 land-cover scenarios (12 settings) and a real-image set, achieving state-of-the-art LPIPS/FID and competitive PSNR/SSIM.
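The "sparsified correlation" named in the first contribution can be illustrated with a generic Top-k attention step. The snippet below is only a minimal NumPy sketch, not the authors' module: the function name `topk_attention`, the single-head layout, and the scaled dot-product scoring are assumptions made for illustration, and the ARConv branch is not modelled at all.

```python
import numpy as np

def topk_attention(q, k, v, top_k):
    """Single-head attention that keeps only the top_k largest scores per query.

    Hypothetical illustration; shapes are q: (n_q, d), k: (n_k, d), v: (n_k, d_v).
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)  # dense (n_q, n_k) correlation map
    # The top_k-th largest score in each row serves as that row's threshold.
    kth = np.partition(scores, -top_k, axis=-1)[:, -top_k][:, None]
    # Weaker correlations are masked out before the softmax.
    masked = np.where(scores >= kth, scores, -np.inf)
    masked = masked - masked.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(masked)  # exp(-inf) == 0 for masked entries
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
q = rng.normal(size=(5, 8))
k = rng.normal(size=(7, 8))
v = rng.normal(size=(7, 4))
out, w = topk_attention(q, k, v, top_k=3)
print(out.shape, (w > 0).sum(axis=-1))
```

Each query then aggregates values from only its `top_k` strongest matches, which is the general reason sparse attention is less exposed to noisy, weakly correlated tokens than a dense softmax.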

2. Related Work

2.1. Traditional Image-Denoising Methods

2.1.1. Filter-Based Methods

2.1.2. Statistical Learning-Based Methods

2.2. Deep Learning-Based Methods

2.2.1. CNN-Based

2.2.2. GAN-Based

3. Method

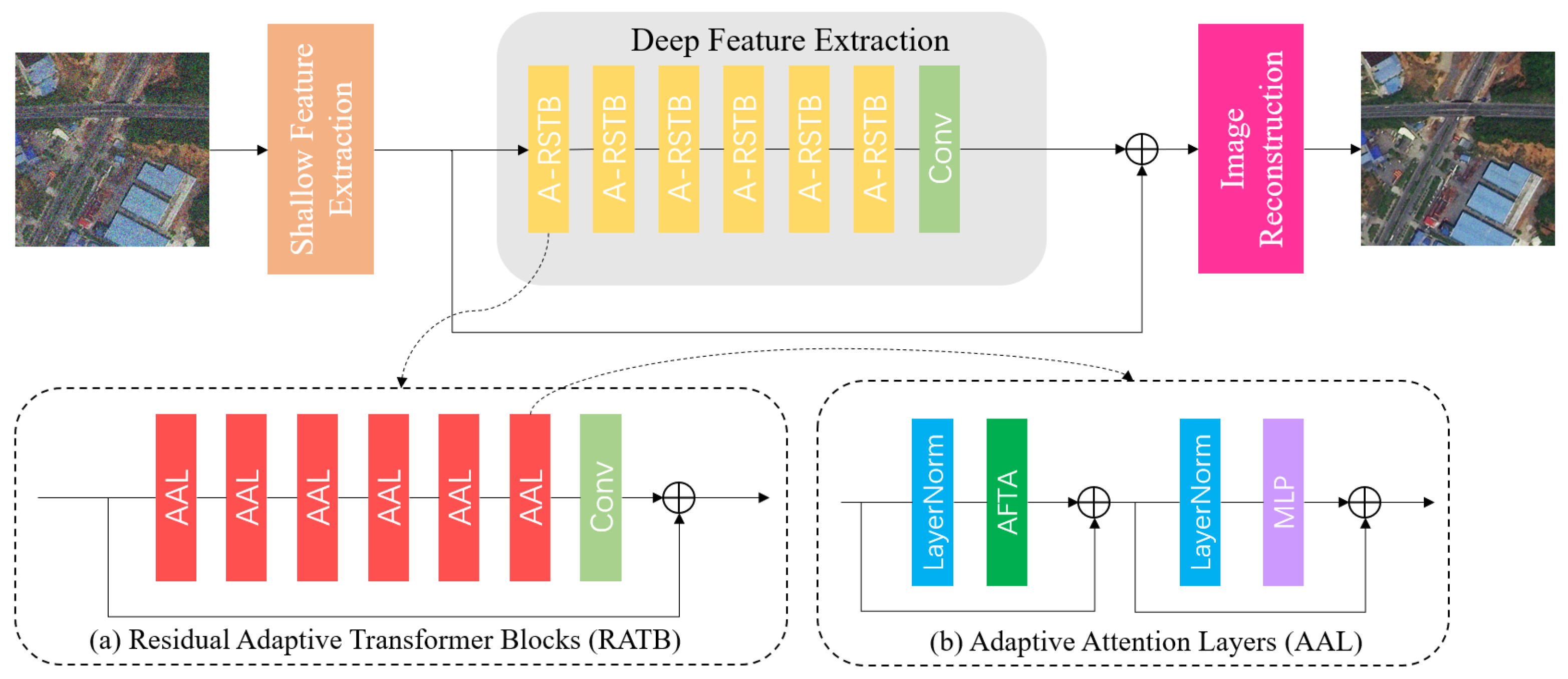

3.1. Generator

3.1.1. Overview

- (1) Shallow Feature Extraction

- (2) Deep Feature Extraction

- (3) Image Reconstruction Module

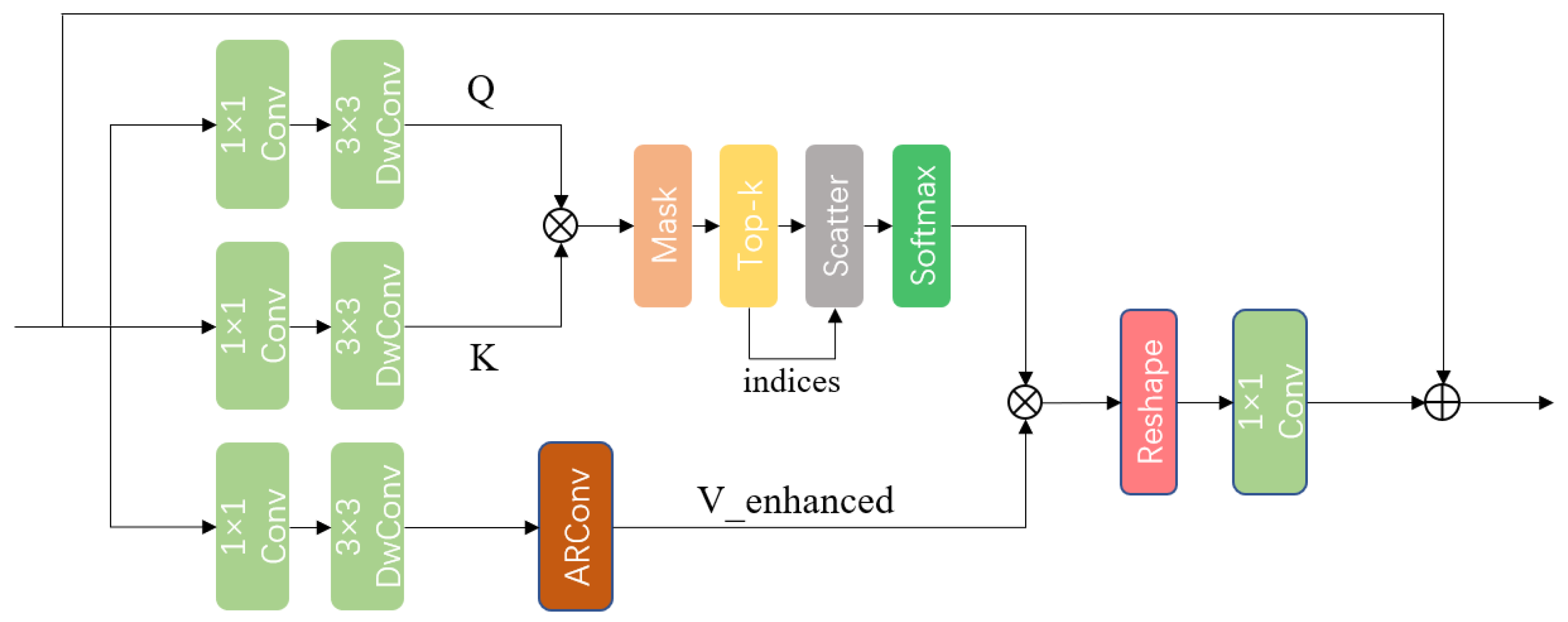

3.1.2. ARConv Fused Top-k Attention

3.1.3. ARConv

- (1) Dynamic Kernel Parameter Learning

- (2) Dynamic Sparse Sampling Mechanism

- (3) Affine Transformation for Spatial Adaptability

3.2. Semantic-Aware Discriminator

4. Experiment

4.1. Settings

4.1.1. Datasets

4.1.2. Implementation Details

4.1.3. Evaluation Metrics
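PSNR is one of the metrics reported in the result tables. As a reminder of its standard definition, the following is a small NumPy sketch; the function name `psnr` and the default `data_range=255.0` (8-bit images) are assumptions for illustration and may not match the authors' evaluation code.

```python
import numpy as np

def psnr(reference, estimate, data_range=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)  # mean squared error
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

clean = np.full((4, 4), 100.0)
noisy = clean + 1.0  # uniform error of one gray level, so MSE = 1
print(psnr(clean, noisy))
```

Higher values indicate a smaller pixel-wise error, which is why PSNR is marked with ↑ in the tables, whereas LPIPS and FID are marked with ↓.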

4.2. Comparisons with State-of-the-Art Algorithms

4.2.1. Quantitative Comparison

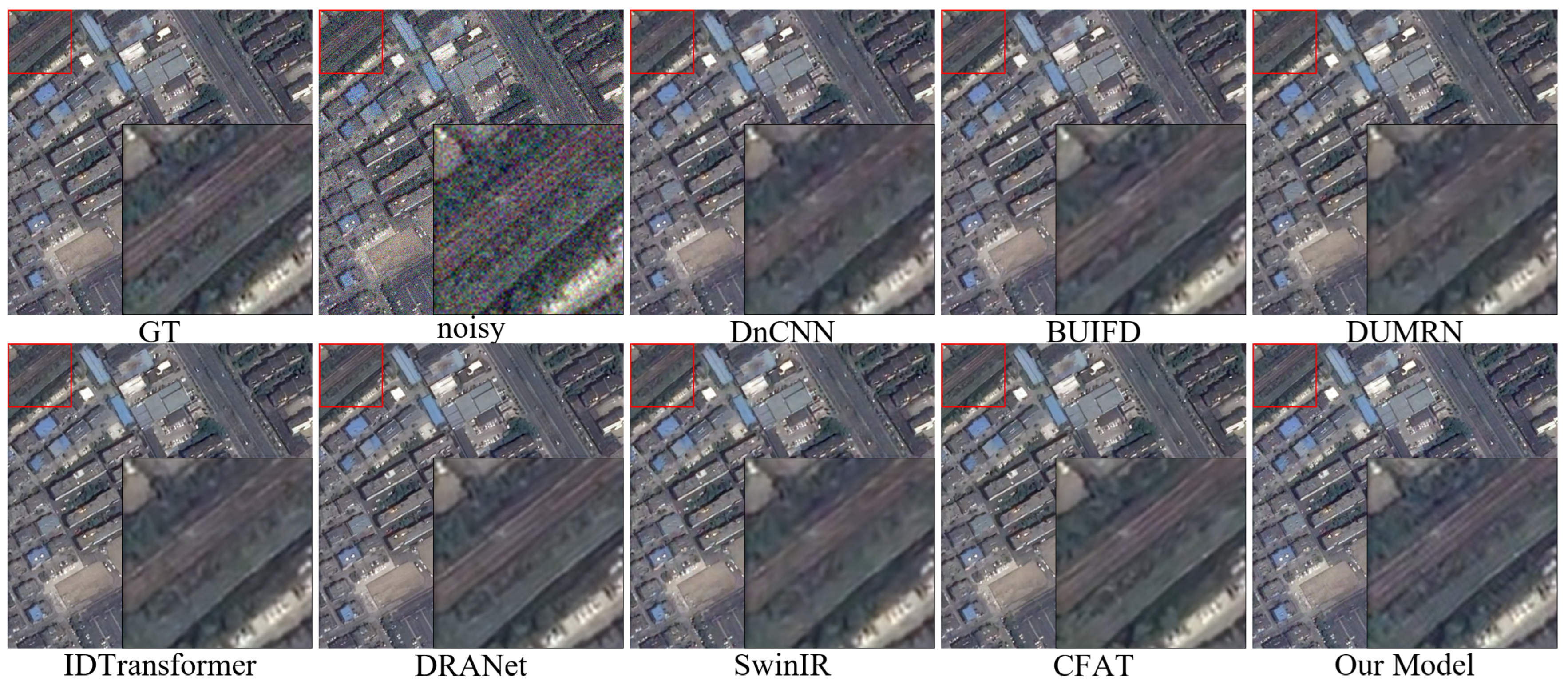

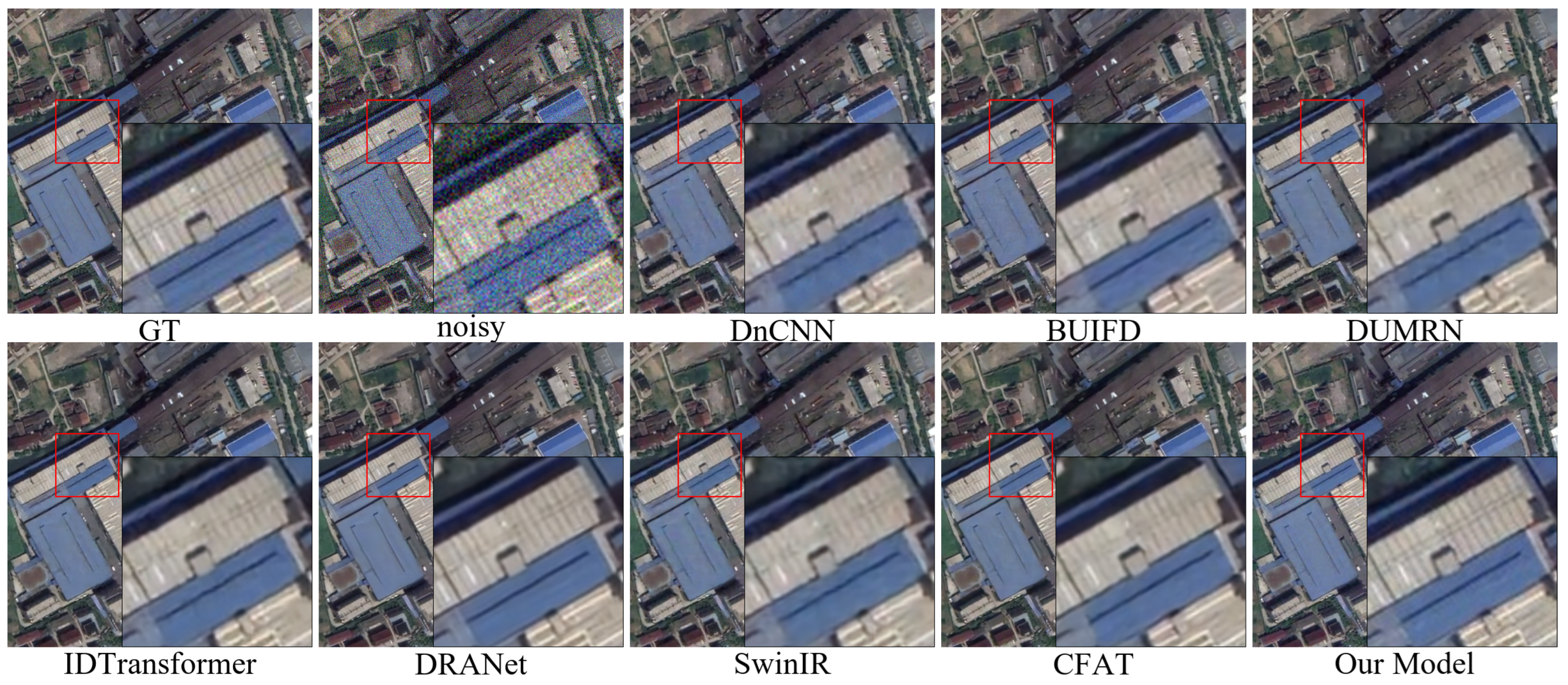

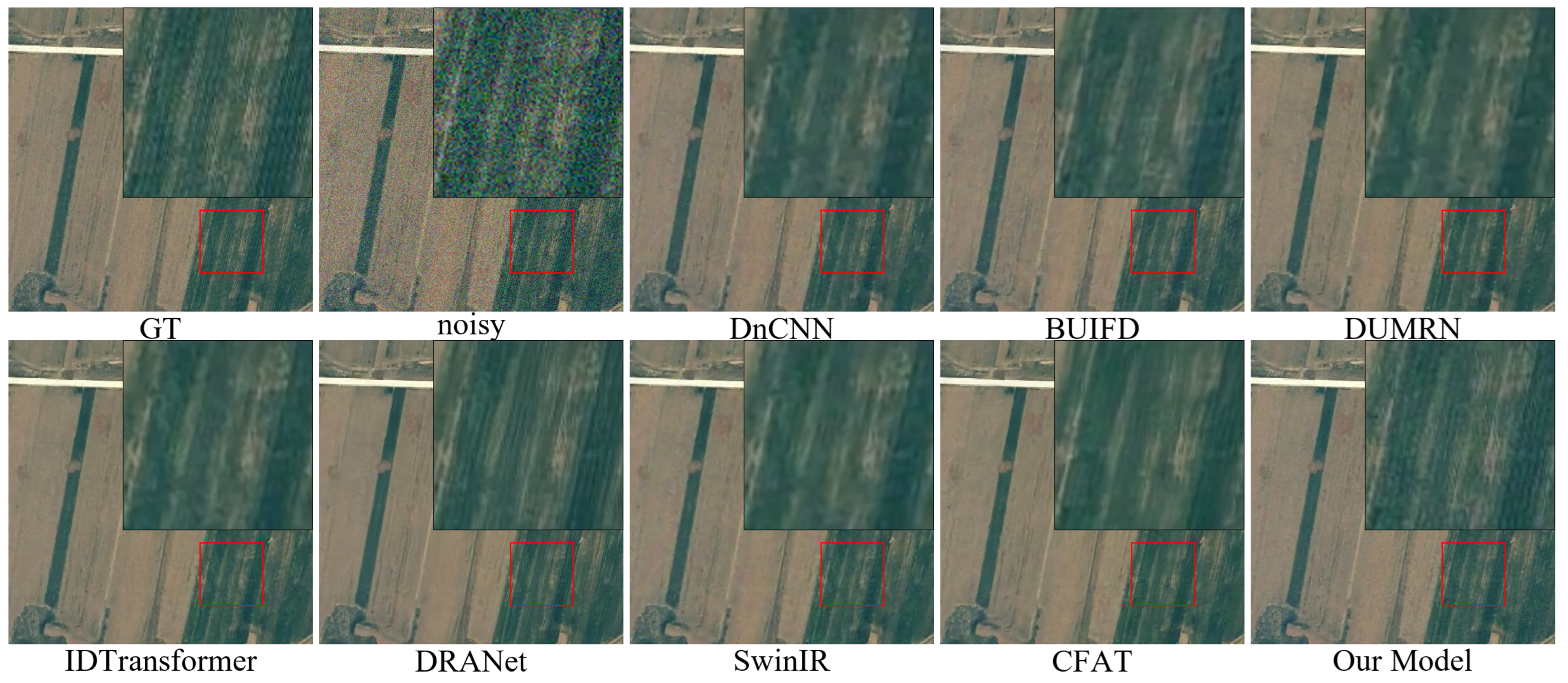

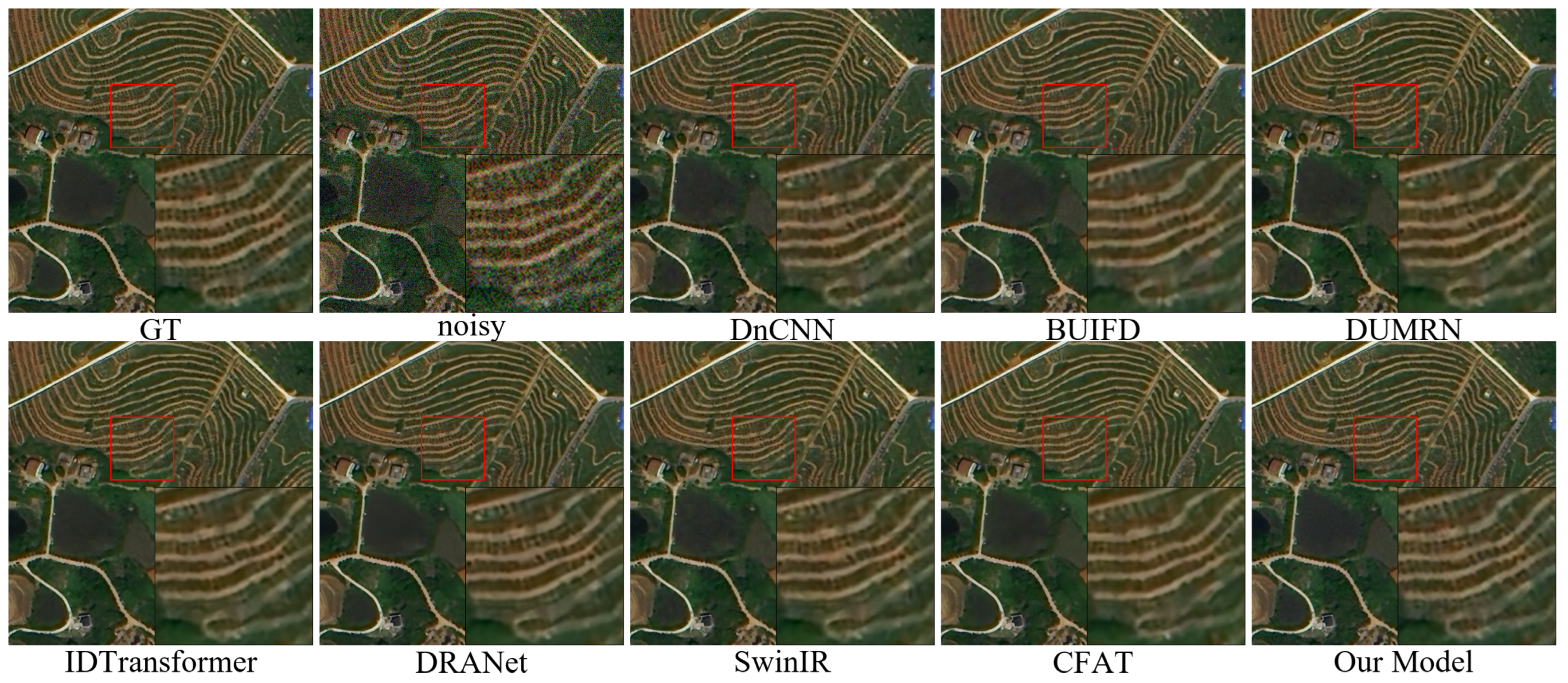

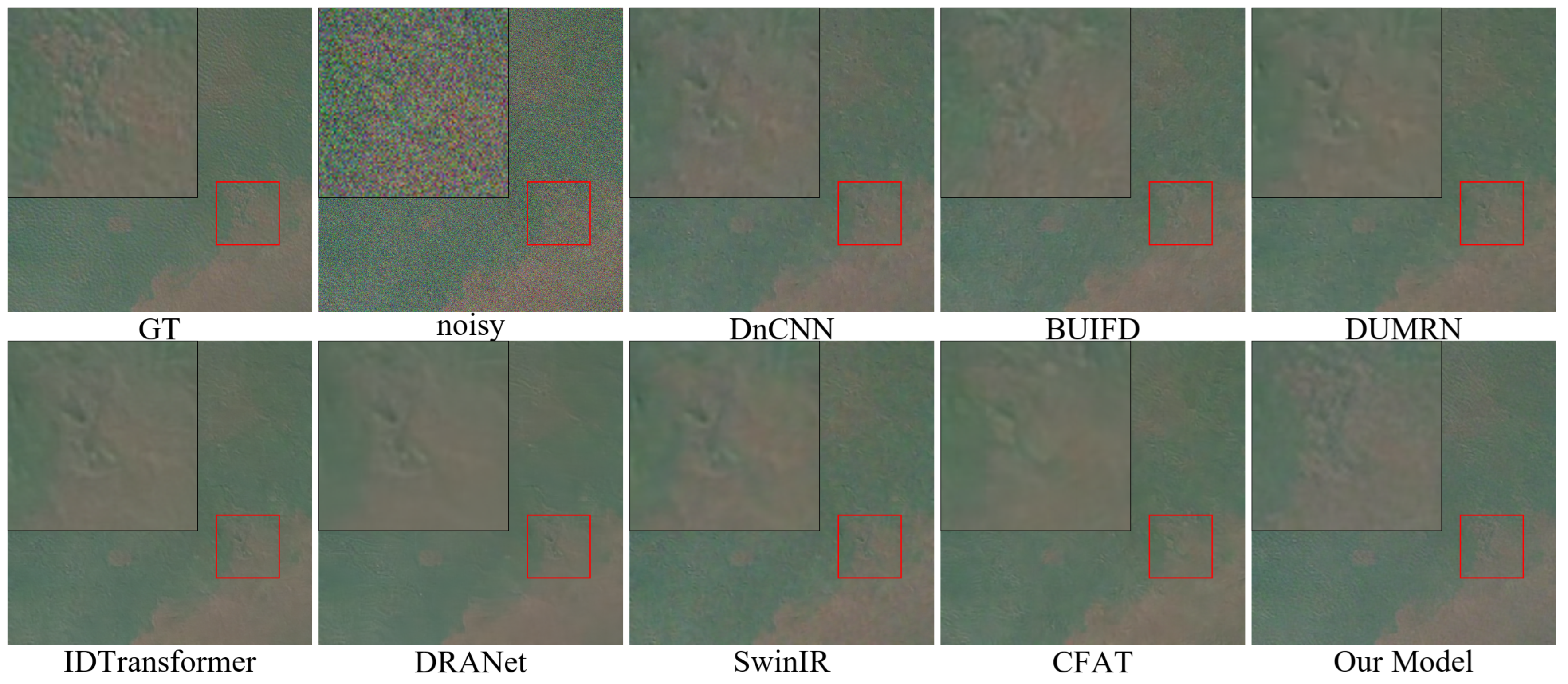

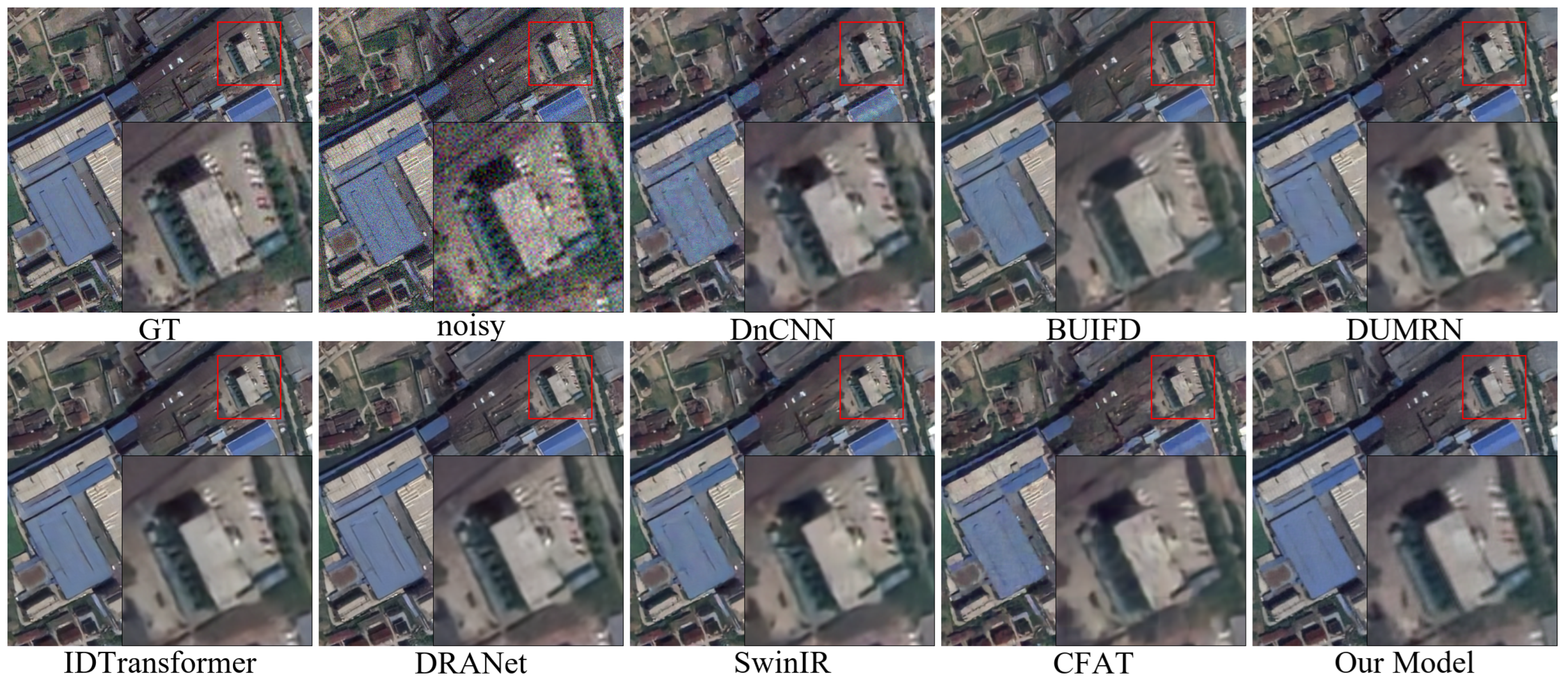

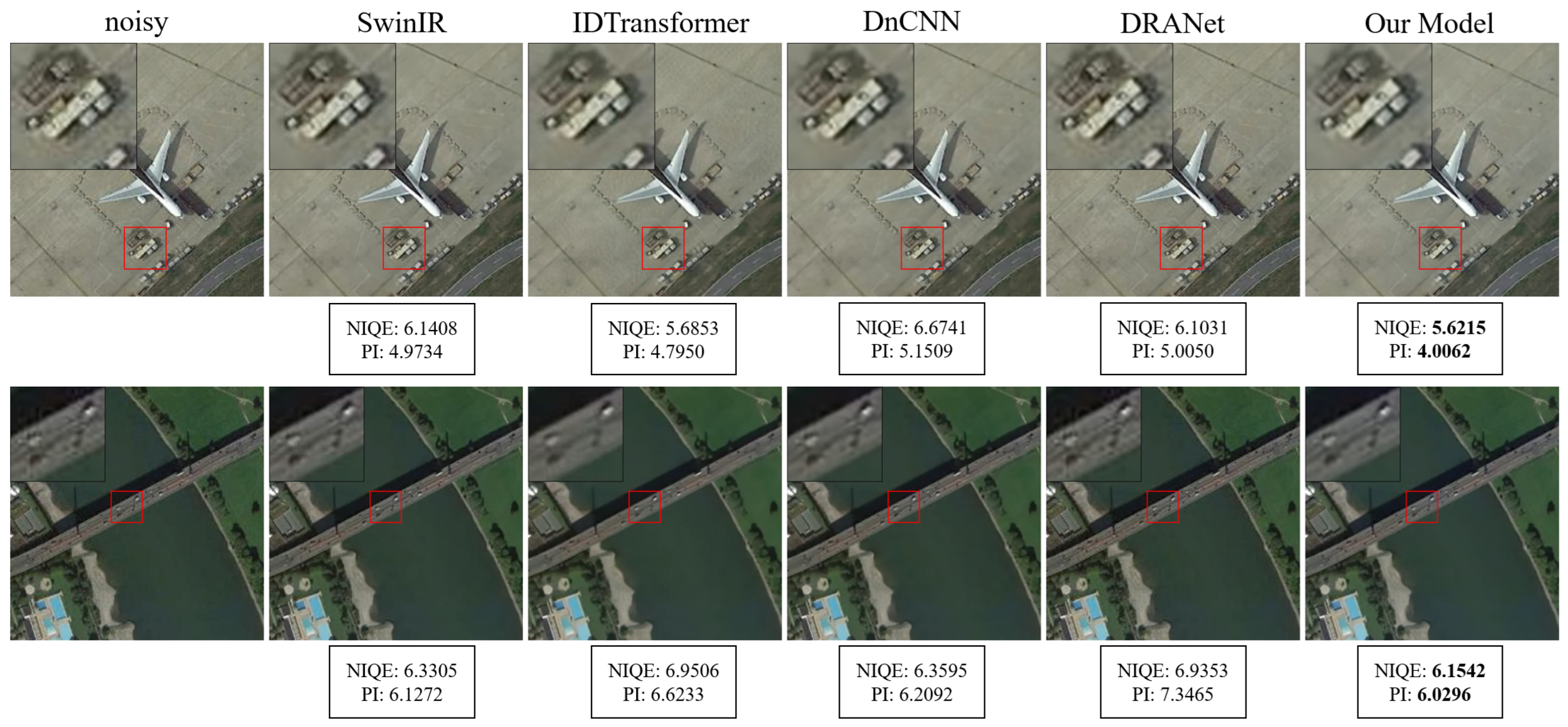

4.2.2. Qualitative Comparison

4.3. Real-World Noisy Remote Sensing Images

4.4. Model Complexity and Runtime Comparison

4.5. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Scenario | Metric | BM3D | DnCNN | BUIFD | DUMRN | DRANet | IDTransformer | SwinIR | CFAT | Our Model |
|---|---|---|---|---|---|---|---|---|---|---|
| Building | PSNR ↑ | 35.54 | 36.75 | 33.94 | 36.71 | 37.32 | 36.80 | 36.55 | 36.04 | 36.82 |
| | SSIM ↑ | 0.9359 | 0.9490 | 0.9488 | 0.9481 | 0.9553 | 0.9494 | 0.9528 | 0.9459 | 0.9563 |
| | LPIPS ↓ | 0.2046 | 0.1450 | 0.1822 | 0.1490 | 0.1241 | 0.1427 | 0.1334 | 0.1496 | 0.1220 |
| | FID ↓ | 86.24 | 27.50 | 39.31 | 30.40 | 28.19 | 32.05 | 28.21 | 30.34 | 21.32 |
| Farmland | PSNR ↑ | 37.65 | 38.19 | 34.06 | 38.25 | 39.20 | 38.55 | 38.95 | 37.51 | 38.45 |
| | SSIM ↑ | 0.9225 | 0.9344 | 0.9334 | 0.9295 | 0.9454 | 0.9358 | 0.9363 | 0.9294 | 0.9385 |
| | LPIPS ↓ | 0.2668 | 0.2183 | 0.2486 | 0.2269 | 0.1789 | 0.2106 | 0.1989 | 0.2230 | 0.1770 |
| | FID ↓ | 128.87 | 68.16 | 77.82 | 72.62 | 58.73 | 68.44 | 65.62 | 71.05 | 51.85 |
| Vegetation | PSNR ↑ | 36.23 | 37.65 | 34.63 | 37.56 | 37.32 | 37.66 | 37.86 | 36.63 | 37.99 |
| | SSIM ↑ | 0.9156 | 0.9405 | 0.9385 | 0.9380 | 0.9391 | 0.9395 | 0.9429 | 0.9362 | 0.9446 |
| | LPIPS ↓ | 0.2507 | 0.1847 | 0.2079 | 0.1902 | 0.1610 | 0.1826 | 0.1780 | 0.1824 | 0.1537 |
| | FID ↓ | 93.93 | 41.91 | 50.32 | 42.40 | 40.72 | 44.51 | 41.78 | 40.03 | 38.12 |
| Water | PSNR ↑ | 42.33 | 42.61 | 38.96 | 42.06 | 43.60 | 42.77 | 43.30 | 39.69 | 42.34 |
| | SSIM ↑ | 0.9633 | 0.9644 | 0.9598 | 0.9622 | 0.9705 | 0.9642 | 0.9636 | 0.9617 | 0.9659 |
| | LPIPS ↓ | 0.2836 | 0.2943 | 0.3582 | 0.2818 | 0.2487 | 0.3217 | 0.3113 | 0.2892 | 0.1924 |
| | FID ↓ | 161.24 | 82.77 | 106.62 | 80.40 | 111.28 | 107.47 | 117.70 | 89.52 | 59.24 |

| Scenario | Metric | BM3D | DnCNN | BUIFD | DUMRN | DRANet | IDTransformer | SwinIR | CFAT | Our Model |
|---|---|---|---|---|---|---|---|---|---|---|
| Building | PSNR ↑ | 32.73 | 33.39 | 34.02 | 34.01 | 34.33 | 34.14 | 33.91 | 34.21 | 34.64 |
| | SSIM ↑ | 0.8914 | 0.9076 | 0.9084 | 0.9140 | 0.9262 | 0.9162 | 0.9122 | 0.9195 | 0.9170 |
| | LPIPS ↓ | 0.2842 | 0.2273 | 0.2096 | 0.2233 | 0.1871 | 0.2055 | 0.2294 | 0.2068 | 0.1993 |
| | FID ↓ | 140.74 | 60.93 | 52.59 | 65.89 | 64.70 | 58.71 | 76.26 | 67.17 | 48.02 |
| Farmland | PSNR ↑ | 35.33 | 35.77 | 36.07 | 35.97 | 36.68 | 36.28 | 35.92 | 36.44 | 36.98 |
| | SSIM ↑ | 0.8795 | 0.8877 | 0.8978 | 0.8900 | 0.9175 | 0.8999 | 0.8915 | 0.9064 | 0.8990 |
| | LPIPS ↓ | 0.3456 | 0.3130 | 0.2892 | 0.3106 | 0.2695 | 0.2767 | 0.3131 | 0.2777 | 0.2441 |
| | FID ↓ | 164.58 | 118.73 | 107.23 | 123.45 | 106.76 | 116.13 | 120.53 | 121.16 | 101.74 |
| Vegetation | PSNR ↑ | 33.35 | 34.74 | 34.62 | 34.46 | 34.92 | 35.06 | 34.80 | 34.91 | 35.25 |
| | SSIM ↑ | 0.8487 | 0.8900 | 0.8887 | 0.8939 | 0.9039 | 0.8993 | 0.8912 | 0.8972 | 0.8946 |
| | LPIPS ↓ | 0.3400 | 0.2665 | 0.2583 | 0.2712 | 0.2377 | 0.2444 | 0.2760 | 0.2621 | 0.2352 |
| | FID ↓ | 145.51 | 73.97 | 79.26 | 75.85 | 77.86 | 72.57 | 93.02 | 82.92 | 71.19 |
| Water | PSNR ↑ | 40.41 | 40.19 | 39.91 | 40.67 | 41.74 | 41.24 | 40.12 | 40.81 | 41.89 |
| | SSIM ↑ | 0.9540 | 0.9508 | 0.9533 | 0.9552 | 0.9604 | 0.9578 | 0.9521 | 0.9581 | 0.9597 |
| | LPIPS ↓ | 0.3489 | 0.3932 | 0.3756 | 0.3828 | 0.2955 | 0.3489 | 0.3673 | 0.3877 | 0.2353 |
| | FID ↓ | 196.00 | 128.62 | 169.29 | 141.43 | 172.15 | 173.04 | 145.18 | 180.15 | 102.88 |

| Scenario | Metric | BM3D | DnCNN | BUIFD | DUMRN | DRANet | IDTransformer | SwinIR | CFAT | Our Model |
|---|---|---|---|---|---|---|---|---|---|---|
| Building | PSNR ↑ | 28.79 | 29.40 | 30.01 | 30.60 | 30.78 | 30.94 | 30.69 | 30.42 | 31.23 |
| | SSIM ↑ | 0.7951 | 0.8144 | 0.8421 | 0.8453 | 0.8578 | 0.8543 | 0.8455 | 0.8401 | 0.8648 |
| | LPIPS ↓ | 0.4144 | 0.3633 | 0.3139 | 0.3336 | 0.2747 | 0.3112 | 0.3268 | 0.3347 | 0.3072 |
| | FID ↓ | 210.23 | 127.03 | 138.30 | 138.30 | 113.62 | 117.68 | 145.56 | 113.21 | 97.37 |
| Farmland | PSNR ↑ | 32.37 | 32.93 | 32.87 | 33.39 | 34.08 | 33.87 | 33.48 | 33.09 | 34.27 |
| | SSIM ↑ | 0.8153 | 0.8243 | 0.8343 | 0.8348 | 0.8645 | 0.8464 | 0.8337 | 0.8325 | 0.8632 |
| | LPIPS ↓ | 0.4643 | 0.4268 | 0.3964 | 0.4035 | 0.3376 | 0.3897 | 0.4099 | 0.4039 | 0.3820 |
| | FID ↓ | 251.98 | 188.32 | 186.64 | 189.70 | 174.61 | 179.79 | 210.20 | 184.45 | 167.58 |
| Vegetation | PSNR ↑ | 29.49 | 31.40 | 29.63 | 31.64 | 31.98 | 31.81 | 31.55 | 31.39 | 31.95 |
| | SSIM ↑ | 0.7335 | 0.7923 | 0.7667 | 0.8010 | 0.8073 | 0.8092 | 0.7950 | 0.8005 | 0.8193 |
| | LPIPS ↓ | 0.4601 | 0.3999 | 0.4176 | 0.3895 | 0.3544 | 0.3534 | 0.3943 | 0.3660 | 0.3478 |
| | FID ↓ | 223.78 | 158.42 | 183.06 | 166.36 | 142.29 | 132.30 | 170.74 | 127.64 | 126.88 |
| Water | PSNR ↑ | 34.20 | 36.98 | 33.01 | 38.25 | 39.36 | 39.13 | 38.46 | 37.75 | 39.03 |
| | SSIM ↑ | 0.9368 | 0.9253 | 0.9370 | 0.9282 | 0.9487 | 0.9403 | 0.9416 | 0.9386 | 0.9408 |
| | LPIPS ↓ | 0.4359 | 0.4752 | 0.3896 | 0.4448 | 0.3315 | 0.3607 | 0.4032 | 0.4057 | 0.3044 |
| | FID ↓ | 259.41 | 220.70 | 222.97 | 256.40 | 200.96 | 188.22 | 223.35 | 218.11 | 162.06 |

| Metric | DnCNN | SwinIR | DRANet | IDTransformer | CFAT | Our Model |
|---|---|---|---|---|---|---|
| FLOPs [G] | 129.08 | 2644.22 | 2082.41 | 486.15 | 4978.76 | 2867.39 |
| Params [M] | 0.56 | 11.46 | 1.62 | 18.53 | 21.49 | 11.56 |
| Average Inference Time [ms] | 1.5 | 1243.9 | 188 | 236.2 | 8123.2 | 25.8 |

| Method | PSNR ↑ | SSIM ↑ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|
| Model-1 | 37.22 | 0.9423 | 0.1722 | 50.64 |
| Model-2 | 37.93 | 0.9483 | 0.1692 | 47.58 |
| Model-3 | 37.95 | 0.9496 | 0.1843 | 52.35 |
| Model-4 (Ours) | 38.91 | 0.9513 | 0.1613 | 42.63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, H.; Duan, R.; Sun, G.; Zhang, H.; Chen, F.; Yang, F.; Cao, J. SARFT-GAN: Semantic-Aware ARConv Fused Top-k Generative Adversarial Network for Remote Sensing Image Denoising. Remote Sens. 2025, 17, 3114. https://doi.org/10.3390/rs17173114
