1. Introduction

In recent years, the rapid development of synthetic aperture radar (SAR) technology has attracted widespread global attention. Its ability to provide high-resolution imaging in all weather conditions and at any time of day plays a crucial role in applications such as surface monitoring, disaster response, and maritime security [1,2,3]. With the growth of commercial space ventures and sustained investments from major international space agencies, systems such as the European Space Agency’s Sentinel-1 series have seen continuous upgrades. Additionally, companies such as Capella Space in the United States and ICEYE in Finland have launched multiple small SAR satellites, enabling more frequent and flexible image acquisition. China’s Gaofen series and the Jilin-1 SAR constellation are also expanding, generating vast amounts of image data for both global marine and terrestrial monitoring. In this context, SAR-based ship detection has become a critical technology for ensuring maritime traffic safety, conducting emergency search and rescue operations, and performing national defense early-warning tasks [4,5,6].

The complex ocean background and the diversity of ships make ship detection in SAR images challenging. Interference such as side scatterers, sea clutter, and differences in sea-ice reflection often leads to false alarms [7]. At the same time, differences in the size, shape, and attitude of ships make it difficult to summarize a unified feature model [8]. Speckle noise in SAR images reduces image clarity and contrast and blurs ship edges, especially for small ships, resulting in missed detections and inaccurate contour estimation [9,10,11,12]. Moreover, with the increasing volume of high-resolution remote sensing data, achieving rapid ship detection on resource-constrained satellite or airborne platforms has become one of the core challenges in current SAR research. Therefore, minimizing the computational and storage costs of the detection method without compromising detection accuracy is the main focus of this study [13].

To address the challenges mentioned above, researchers have conducted extensive investigations into SAR-based ship detection, which can currently be divided into two main categories: traditional target detection algorithms and deep-learning-based detection algorithms.

In the early stage of the development of SAR ship detection technology, traditional detection methods played a leading role [14]. The constant false alarm rate (CFAR) method dynamically adjusts the detection threshold according to the statistical characteristics of local background clutter to ensure a constant false alarm probability. With a uniform background, CFAR can effectively recognize ship targets [15,16]. However, when there are multiple strong interfering scatterers in the background, its detection performance is significantly reduced [17,18]. Edge detection methods, such as Sobel and Canny, recognize the ship contour by using the gray gradient change between the ship and the background [19,20,21]. However, the inherent speckle of SAR images often leads to a large number of false edges in the detection results. In addition, for ships with irregular contours or partial occlusion, it is difficult to extract edge information, which, in turn, reduces the detection accuracy. Although traditional detection algorithms are simple to implement, their accuracy and generalization are significantly limited when handling the complex and dynamic conditions in SAR images. This has driven the development of deep-learning-based detection algorithms.
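To make the CFAR thresholding described above concrete, the following is a minimal sketch of a one-dimensional cell-averaging CFAR detector. It is an illustration rather than the implementation used in the cited works: the function name `ca_cfar_1d`, the window sizes, and the assumption of exponentially distributed clutter power (which gives the closed-form threshold multiplier) are all choices made here for the example.

```python
def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a list of non-negative power samples.

    Assumes exponentially distributed clutter power (illustrative choice).
    Returns the indices of cells declared as detections.
    """
    n = len(power)
    half = num_train // 2
    # Threshold multiplier that keeps the false-alarm probability at pfa.
    alpha = num_train * (pfa ** (-1.0 / num_train) - 1.0)
    detections = []
    for i in range(n):
        lo_start, lo_end = i - num_guard - half, i - num_guard
        hi_start, hi_end = i + num_guard + 1, i + num_guard + 1 + half
        if lo_start < 0 or hi_end > n:
            continue  # skip edge cells that lack a full training window
        # Training cells on both sides, excluding the guard cells.
        train = power[lo_start:lo_end] + power[hi_start:hi_end]
        noise = sum(train) / len(train)
        if power[i] > alpha * noise:
            detections.append(i)
    return detections


# Uniform background with one bright target.
single = [1.0] * 40
single[20] = 50.0

# Same background with a second strong scatterer close to the first.
crowded = [1.0] * 40
crowded[20] = 50.0
crowded[24] = 50.0
```

In this toy setting the single bright cell is detected, whereas in the crowded case each strong scatterer falls inside the other's training window, inflates the local noise estimate, and masks both targets. This is the degradation under multiple interfering scatterers noted above.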

Recent advances in deep learning, particularly in automatic feature extraction and complex pattern recognition, have revolutionized SAR ship detection [22,23,24]. Convolutional Neural Networks (CNNs), with their multi-layer convolution and pooling structures, effectively capture the shape features of ships in SAR images [25,26,27]. Faster R-CNN, a two-stage detection model [28], is applicable to SAR ship detection but relies on anchor-box mechanisms that introduce numerous hyperparameters, complicating model tuning. Moreover, detection accuracy drops for unconventional ships whose shapes or sizes fall outside predefined anchor-box ranges. To address this, Fully Convolutional One-Stage (FCOS) detection was proposed [29], determining ship locations and sizes by measuring the distance from each pixel to the ship’s boundary [30]. Although it removes anchor boxes, FCOS’s per-pixel processing demands high computational resources when applied to large-scale SAR images, resulting in longer detection times [31]. Additionally, transformer architectures [32], with their self-attention mechanisms, excel at handling long-range dependencies and global context, achieving high accuracy in complex scenes. The RT-DETR method, introduced in 2024, employs an efficient hybrid encoder and query selection for real-time applications, pushing DETR towards practical deployment [33]. Nevertheless, even considering only the prediction process during deployment, DETR’s efficiency still falls short. Additionally, applying a transformer to SAR-image-based ship detection tasks is challenged by the feature discrepancies between ship characteristics in SAR and optical images. SAR images often contain numerous small ship targets, which diminishes the advantage of transformers in capturing global features. In this context, the extraction of local features becomes critical, and thus, the performance improvement of transformers in SAR-image-based object detection is not significant compared to traditional CNNs.

The You Only Look Once (YOLO) series, a significant branch of object detection, attracts widespread attention due to its exceptional speed in detection [34,35]. YOLOv1 pioneers the division of input images into multiple grids, with each grid responsible for predicting object bounding boxes and class probabilities, significantly improving detection speed. YOLOv3 introduces a multi-scale detection mechanism, enabling effective detection of objects of various sizes [36]. YOLOv4 [37] and YOLOv5 [38] optimize network structures and training strategies, maintaining high speed while improving detection accuracy. YOLOv6 combines the EfficientRep Backbone and Rep-PAN Neck architecture to reduce computational load and increase inference speed [39], and its anchor-free mechanism significantly improves the detection of ships, particularly small targets. YOLOv7 further enhances the robustness of SAR image detection by introducing new activation functions, optimizing the backbone network, and applying advanced data augmentation strategies [40]. The adaptive anchor-box technique in YOLOv7, based on data-driven adjustments, improves ship detection accuracy under varying conditions. YOLOv8 introduces advanced feature extraction and fusion mechanisms, redesigns the backbone and neck structures to support multi-scale detection [41], and optimizes training strategies and loss functions to accelerate convergence and enhance detection accuracy, making it an ideal choice for real-time maritime ship monitoring.

Recent studies have further optimized YOLOv8 for lightweight ship detection. Li and Wang [42] proposed EGM-YOLOv8, which integrates Efficient Channel Attention (ECA), a lightweight GELAN–PANet fusion, and MPDIoU loss to improve recall and reduce parameters and computation, achieving a better balance between accuracy and efficiency for real-time maritime applications. Similarly, Gao et al. [43] introduced an improved YOLOv8n with DualConv, a Slim-neck using GSConv and VoVGSCSP, SEAM attention, and MPDIoU, significantly enhancing detection accuracy while maintaining a lightweight design on the SeaShips dataset.

However, the methods mentioned above are primarily designed for optical images, which rely on visible-light reflection and provide rich color and texture information. In contrast, SAR images are generated by centimeter-wave radar signal backscatter, exhibiting unique geometric distortions and multiplicative noise. Therefore, directly applying methods designed for optical images to ship detection in SAR images results in a decline in detection performance. Given these differences, many studies focus on developing ship detection methods specifically tailored for SAR images. These methods take into account the unique imaging mechanism and data characteristics of SAR and design a range of specialized neural network structures to enhance detection accuracy [44,45,46,47,48]. However, these methods often face challenges such as high computational complexity, slow prediction speeds, and difficulties in deployment on SAR platforms with limited computational power and storage capacity.

To address these challenges, this paper proposes a lightweight SAR-Star-GSConv-YOLOv10 (SSGY) neural network for ship detection in SAR images. The network is based on the YOLOv10 framework, with customized designs for key modules such as the backbone, neck, and loss function. This approach aims to reduce model parameters and computational complexity while improving detection accuracy and adaptability to ships of varying scenes and scales. Ultimately, the method supports reliable ship detection applications on resource-constrained SAR platforms. The specific contributions are as follows:

A multi-scale kernel StarNet network structure is designed and used as the backbone network. The convolution kernel size differs across feature extraction stages, which enhances the feature extraction ability of the model for ships of different scales and effectively reduces the computational complexity. In addition, dilated convolution and efficient channel attention (ECA) modules are integrated to further improve the ability to detect ships in complex backgrounds.

A lightweight neck network based on GSConv is proposed, effectively reducing the high computational resource consumption of the classic YOLO neck. Particularly when handling multi-scale ship features in SAR images, this design reduces the number of parameters and computational load, significantly improving feature fusion efficiency and detection accuracy.

A composite loss function integrating detection loss, bounding box loss, and distribution focal loss (DFL) is proposed. This combination enables the model to better learn ship features in SAR images, improving detection accuracy and robustness. Furthermore, the detection head adopts a consistent dual assignment strategy and utilizes depthwise separable convolutions to reduce parameters and computational load, allowing training without the need for non-maximum suppression (NMS), thereby further optimizing model performance.

The organization of this paper is as follows:

Section 2 introduces the YOLOv10 object detection algorithm and reviews related work on ship detection in SAR images.

Section 3 provides a detailed description of the proposed SSGY network architecture, covering the overall framework, the enhanced StarNet backbone, the lightweight neck network, the detection head, and the loss function.

Section 4 presents the experimental details, including the datasets used, experimental settings, ablation studies, and comparative experiments with other detection methods.

Section 5 contains the discussion, and Section 6 concludes the paper.

3. Method

This section presents the main modules and design ideas of the proposed SSGY network.

3.1. Overview of the SSGY Network Model Structure

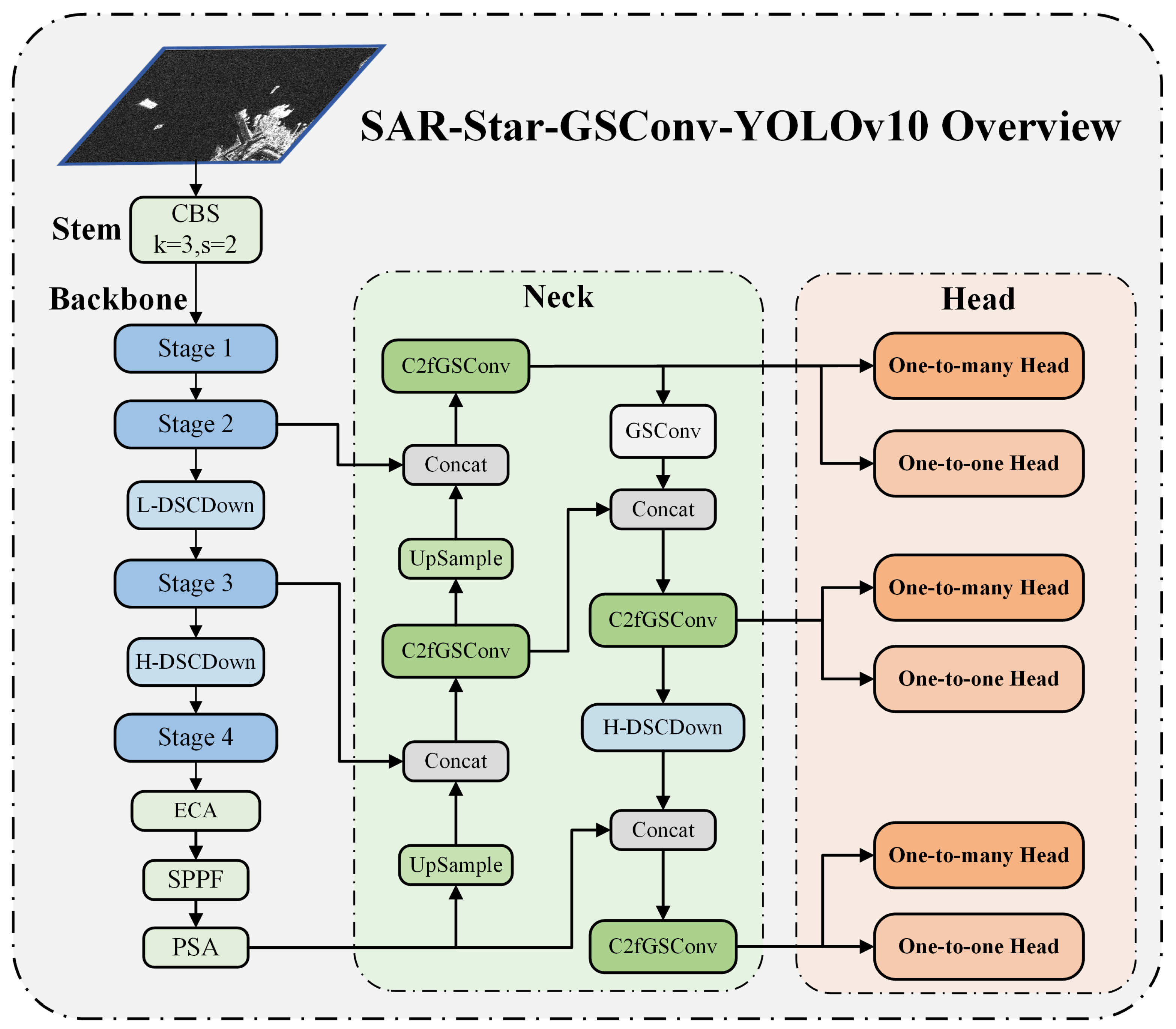

For SAR platforms with limited computational resources, such as those deployed on satellites or aircraft, this paper proposes the SAR-Star-GSConv-YOLOv10 network. The SSGY network is a lightweight neural network model specifically designed for ship target detection in SAR images. Within the YOLOv10 series, YOLOv10n distinguishes itself by having the fewest parameters and the fastest detection speed while maintaining high detection accuracy. Consequently, YOLOv10n is selected as the baseline framework for the network design. The overall architecture of the SAR-Star-GSConv-YOLOv10 network is illustrated in Figure 2.

Here, we briefly describe the SSGY network structure. (1) Input: Receives SAR single-look complex images, resizing them to 640 × 640 × 3 for network input. (2) Stem: Extracts initial features with a 3 × 3 convolution (stride 2, padding 1), followed by batch normalization and SiLU activation. (3) Backbone: Based on an improved StarNet, enhancing feature extraction to capture multi-level semantic information. (4) Neck: A lightweight GSConv-based module for multi-layer feature fusion tailored to SAR images. (5) Head: Uses an NMS-free head to directly output predictions, bypassing traditional NMS post-processing. (6) Loss Function: A composite loss function combining classification and bounding box regression tasks to guide model learning and improve detection accuracy. The following subsections describe the key modules of the SSGY network model in detail.

3.2. StarNet Backbone Network Based on Multi-Scale Kernels

The main function of the backbone network in the detection model is to extract the target features of different scales in the input data. For ship detection tasks in SAR images, it is necessary to capture spatial information and object relationships, provide robust feature representation for subsequent modules, and support accurate target positioning.

As shown in Figure 2, the backbone network of YOLOv10 relies on feature extraction modules such as rank-guided block convolution, spatial channel decoupling downsampling, and C2fCIB. Although these modules combine linear and nonlinear operations, in SAR images, ship targets are greatly affected by complex background noise, clutter, and scatterers of different scales. As a result, the multi-layer convolution and feature fusion in the YOLOv10 backbone network cannot effectively capture subtle feature changes and incur high computational complexity. Although the spatial channel decoupling downsampling optimizes the downsampling process, the reduction of computational load is limited, and it is difficult to meet the requirements of real-time ship detection.

Therefore, a lightweight network structure based on StarNet is designed to mine multi-scale features from SAR images. The star operation typically refers to element-wise multiplication, which is used to fuse features from different subspaces [51]. A single-layer star operation is generally represented as follows:

$$\left(W_1^{\mathrm{T}} X + B_1\right) * \left(W_2^{\mathrm{T}} X + B_2\right),$$

where $W_1$ and $W_2$ are weight matrices, $X$ is the input feature, and $B_1$ and $B_2$ are bias terms. To simplify the analysis process, the bias terms can be disregarded, resulting in $\left(W_1^{\mathrm{T}} X\right) * \left(W_2^{\mathrm{T}} X\right)$. It can be observed that this operation maps the input from a computationally efficient low-dimensional space with $d$ channels to an approximately $\left(d/\sqrt{2}\right)^2$-dimensional implicit feature space. This means that each element interacts with the other elements through multiplication, so the number of implicit nonlinear dimensions grows quadratically within a single layer and exponentially as star layers are stacked, without increasing computational overhead. Therefore, this work constructs a backbone network based on star operations, aiming to map the features of ship targets in SAR images to a high-dimensional nonlinear feature space while achieving a lightweight backbone network design.
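The dimension count behind the star operation can be checked numerically. The sketch below is only an illustration for a single output channel without bias; the function names `star` and `star_expanded` are hypothetical. It expands the product of two linear projections into a weighted sum over the distinct monomials $x_i x_j$ and counts them. For an input of length $n$ there are $n(n+1)/2$ such terms, which is roughly $(n/\sqrt{2})^2$.

```python
from itertools import combinations_with_replacement


def star(x, w1, w2):
    """Single-output star operation without bias: (w1 . x) * (w2 . x)."""
    a = sum(wi * xi for wi, xi in zip(w1, x))
    b = sum(wi * xi for wi, xi in zip(w2, x))
    return a * b


def star_expanded(x, w1, w2):
    """Same value as star(), written as a sum over distinct monomials x_i * x_j.

    Returns (value, number_of_implicit_terms).
    """
    total = 0.0
    terms = 0
    for i, j in combinations_with_replacement(range(len(x)), 2):
        if i == j:
            coeff = w1[i] * w2[i]
        else:
            coeff = w1[i] * w2[j] + w1[j] * w2[i]
        total += coeff * x[i] * x[j]
        terms += 1
    return total, terms


x = [1.0, 2.0, 3.0, 4.0]
w1 = [0.5, -1.0, 2.0, 0.25]
w2 = [1.0, 0.5, -0.5, 2.0]
value, n_terms = star_expanded(x, w1, w2)
```

The two forms agree in value, while the expanded form makes explicit that two cheap linear projections followed by one multiplication implicitly mix all pairwise products of the input.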

Based on receptive field theory, smaller convolution kernels in the lower layers help the network focus on local features, minimizing noise and irrelevant information while reducing computational complexity. Larger kernels in the higher layers expand the receptive field, which helps to extract global features and semantic information, thereby improving the detection accuracy.

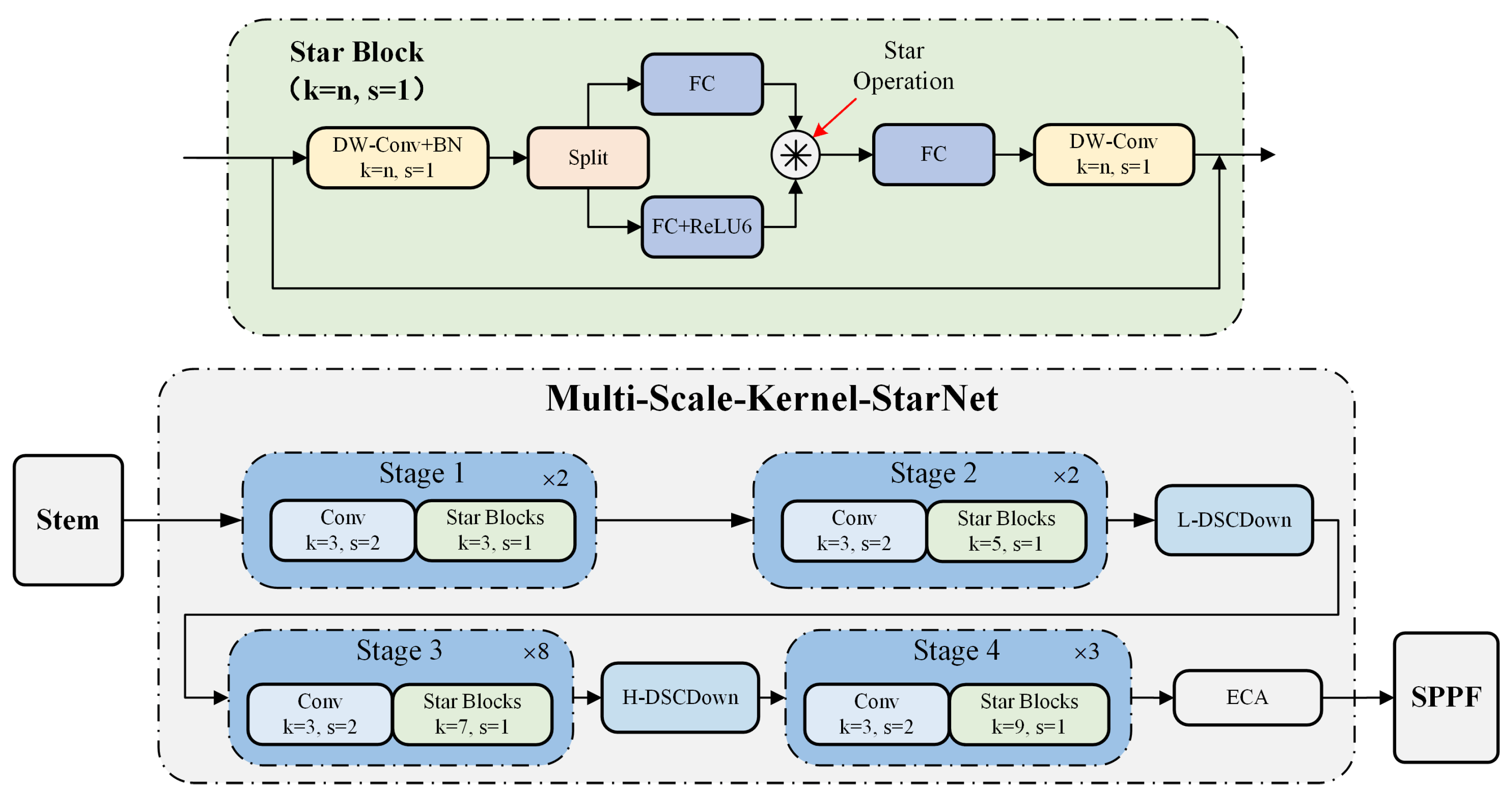

This paper proposes a flexible and lightweight multi-scale kernel StarNet (MSKS) as the backbone of SSGY, with its structure shown in Figure 3. For the lower-stage Star Blocks (Stages 1 and 2), we use smaller convolution kernels of size 3 × 3 and 5 × 5, stacked n times. The 3 × 3 kernel, commonly used in CNNs, excels at local feature extraction while maintaining low computational cost. The 5 × 5 kernel slightly expands the receptive field, capturing richer local feature combinations with minimal added computational load. For higher-level features (Stages 3 and 4), we employ 7 × 7 and 9 × 9 kernels. The 7 × 7 kernel enhances the receptive field, capturing more contextual information for better semantic understanding of the target, while the 9 × 9 kernel further expands the receptive field to capture global ship features. This kernel size configuration was determined through experimental comparison with alternative combinations and found to provide the optimal balance between detection accuracy, receptive field coverage, and computational efficiency for real-time SAR ship detection. Larger kernels improve detection accuracy for large ships, ensuring that the entire shape and contours are captured, thus minimizing misidentification of ship parts as separate targets.

Moreover, this paper introduces dilated convolutions to further expand the receptive field of the backbone network. Unlike conventional convolutions, which adjust kernel size and stride to control the receptive field, dilated convolutions introduce gaps between kernel elements, allowing for significant receptive field expansion at the same computational cost. By applying dilated convolutions with varying dilation rates on feature maps of different resolutions, this method effectively extracts multi-scale features from SAR images, improving detection accuracy in scenarios with both small boats and large aircraft carriers.
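The effect of dilation on the receptive field follows from the standard relation $k_{\mathrm{eff}} = d\,(k - 1) + 1$ for a kernel of size $k$ and dilation rate $d$. The short sketch below applies this relation; the helper names and the 3 × 3 kernel used in the example are assumptions for illustration and are not taken from the network definition.

```python
def effective_kernel(kernel, dilation):
    """Span covered by a dilated kernel along one axis."""
    return dilation * (kernel - 1) + 1


def receptive_field(layers):
    """Receptive field of a stack of conv layers along one axis.

    layers: list of (kernel, stride, dilation) tuples, ordered input to output.
    """
    rf, jump = 1, 1
    for kernel, stride, dilation in layers:
        rf += (effective_kernel(kernel, dilation) - 1) * jump
        jump *= stride
    return rf


# A 3-tap kernel keeps 3 weights per axis but covers a wider span when dilated.
span_d1 = effective_kernel(3, 1)
span_d2 = effective_kernel(3, 2)
span_d3 = effective_kernel(3, 3)

# Two stride-2 layers, without and with dilation 2 in the second layer.
rf_plain = receptive_field([(3, 2, 1), (3, 2, 1)])
rf_dilated = receptive_field([(3, 2, 1), (3, 2, 2)])
```

The number of weights and multiply-accumulate operations is the same in both stacks; only the sampling pattern of the second layer changes.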

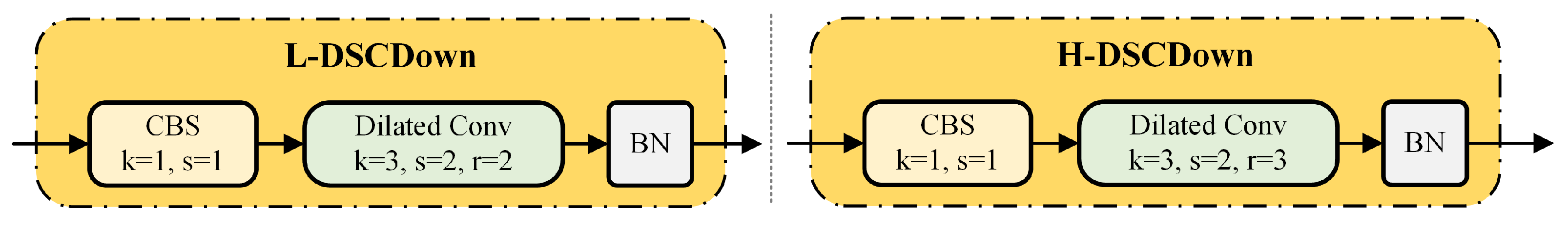

We therefore propose a spatial channel decoupling downsampling module based on dilated convolutions, referred to as the DSCDown module, with its specific structure shown in Figure 4. Since the P2 and P3 levels primarily handle low-level features, which contain more detailed information but less semantic information, dilated convolutions with a dilation rate of 2 (L-DSCDown) are used to moderately expand the receptive field and promote the integration of local features. In contrast, the P4 and P5 levels process mid-level features that contain more semantic information and have a lower resolution. A larger dilation rate (set to 3) is used at these levels to better integrate semantic information while avoiding the introduction of excessive noise, leading to the use of H-DSCDown.

In the feature extraction process of the backbone network, different channels contain different information relevant to the target. For example, some channels represent the contours and edges of the ship, while others contain sea clutter or shoreline target information. Through the channel attention mechanism, the network can focus on and enhance the channels related to the target features, while reducing the interference from irrelevant channels. This is especially beneficial for the detection of small ships, as the channel attention mechanism helps to focus on high-resolution features. Based on this, the efficient channel attention (ECA) module is introduced after multi-level feature extraction in the MSKS network for the following reasons:

High computational efficiency: The ECA module is a lightweight channel attention mechanism using 1D convolutions, achieving low computational cost, making it ideal for real-time detection frameworks like YOLOv10.

Adaptive channel attention: The ECA module adjusts the size of the 1D convolution kernel based on the number of input channels, allowing flexibility across varying channel counts at different feature levels in YOLOv10, without extensive manual tuning.

Enhanced feature representation capability: The ECA module highlights relevant ship target features in SAR images while suppressing background clutter, improving the network’s ability to focus on target-related information.

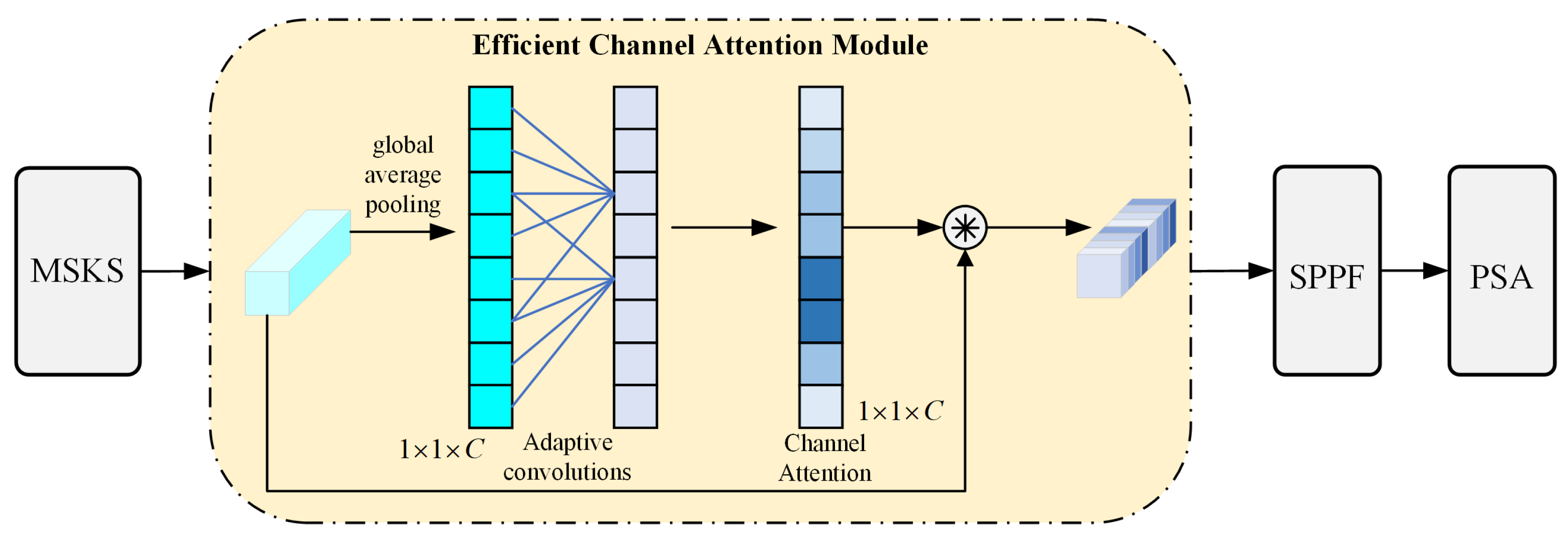

The ECA module is implemented by first aggregating the features output by MSKS. Specifically, as shown in Figure 5, for an input feature map $X$, global average pooling is applied to compress the spatial information into a channel-level statistical metric,

$$y = \frac{1}{H W} \sum_{i=1}^{H} \sum_{j=1}^{W} X(i, j),$$

where $X \in \mathbb{R}^{C \times H \times W}$ represents the input feature map; this operation extracts the overall characteristics of the channels by compressing the spatial dimensions. Based on the global descriptor vector $y$, a one-dimensional convolution with kernel size $k$ is utilized to capture the inter-channel relationships. The convolution kernel size $k$ is dynamically determined based on the number of channels $C$, as per the following formula:

$$k = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{\mathrm{odd}},$$

where $\gamma$ and $b$ are predefined hyperparameters and $|\cdot|_{\mathrm{odd}}$ denotes the nearest odd number. In this study, optimal values are determined through experiments to maximize the ECA performance. Subsequently, a one-dimensional convolution operation is applied to $y$ to obtain the channel attention weights $\omega$:

$$\omega = \sigma\left(\mathrm{Conv1D}_k(y)\right),$$

where $\sigma$ is the sigmoid function. Finally, the attention weights $\omega$ are multiplied with the original feature map $X$ channel-wise to obtain the enhanced feature map $\tilde{X}$:

$$\tilde{X} = \omega \otimes X.$$

This channel-wise reweighting strengthens the model’s attention to important channels and avoids the computational complexity of fully connected layers.
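The ECA steps described above (global average pooling, adaptive kernel size, 1D convolution across channels, sigmoid, channel-wise scaling) can be sketched in plain Python as follows. This is a minimal illustration and not the trained module: the defaults `gamma=2` and `b=1` are assumptions taken from common ECA practice (the paper states that its own values were chosen experimentally), the rounding rule follows the widely used reference implementation, and the uniform `weights` stand in for learned convolution weights.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: truncate, then bump even values to the next odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature_map, gamma=2, b=1, weights=None):
    """feature_map: list of C channels, each an H x W list of lists of floats.

    Returns (reweighted_feature_map, attention_weights).
    """
    c = len(feature_map)
    k = eca_kernel_size(c, gamma, b)
    if weights is None:
        weights = [1.0 / k] * k  # placeholder for learned 1D-conv weights
    # Global average pooling: one scalar descriptor per channel.
    y = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Zero-padded 1D convolution across the channel axis, then sigmoid.
    pad = k // 2
    padded = [0.0] * pad + y + [0.0] * pad
    omega = []
    for i in range(c):
        z = sum(w * padded[i + j] for j, w in enumerate(weights))
        omega.append(1.0 / (1.0 + math.exp(-z)))
    # Scale every channel by its attention weight.
    out = [[[omega[ci] * v for v in row] for row in ch]
           for ci, ch in enumerate(feature_map)]
    return out, omega


# Toy input: 8 channels of size 2 x 2, channel c filled with the value c.
fm = [[[float(c)] * 2 for _ in range(2)] for c in range(8)]
enhanced, attn = eca(fm)
```

Because the 1D convolution only touches `k` neighbouring channel descriptors, the number of attention parameters is `k` rather than the order of `C * C` required by a pair of fully connected layers.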

Although we select the ECA module [52], we also consider other attention mechanisms, such as the SE and CBAM modules. The SE module uses fully connected layers for channel attention, which can introduce computational overhead in resource-limited settings. The CBAM module combines channel and spatial attention, but excessive focus on spatial details may neglect important inter-channel information, especially for SAR images with complex backgrounds. Experimental comparisons (detailed in Section 4.3) show that the ECA module provides a better balance between performance and computational efficiency for our network structure and task. It is worth noting that the ECA module outputs the attention features to the SPPF so that it can be fused based on more important channel information when fusing features of different scales, thereby improving the fusion quality.

In summary, adding the ECA module to the backbone network can optimize the channel attention. This provides a more discriminative representation for the input features of the subsequent neck structure.

3.3. Lightweight Neck Network Based on C2fGSConv

In SAR images, ship targets vary greatly in scale, from small patrol boats to large aircraft carriers, with distinct feature representations at different scales. The Neck modules in the YOLO series fuse multi-level features extracted by the backbone network, providing discriminative feature information for subsequent detection heads. However, existing fusion mechanisms may not fully meet the needs of multi-scale ship detection in SAR images. During our research, we identified the following issues with current neck architectures:

Computational Resource Consumption: Modules such as C2f or C2fCIB use conventional convolutions such as CBS, which have high computational complexity. The computational load depends on the kernel size, the number of input and output channels, and the feature-map dimensions. These convolutions consume significant resources and time. In airborne or spaceborne SAR image processing, this high complexity hinders rapid deployment and efficient operation, limiting the model’s scalability and adaptability in practical applications.

Feature Fusion Effectiveness: Although feature fusion through upsampling (feature pyramid networks) and downsampling (path aggregation networks) is effective, small-scale ship features often weaken after fusion due to information loss during upsampling or insufficient fusion with other scales. This affects the model’s ability to detect small ships. In low-resolution SAR images, focusing on global feature fusion is crucial for small target detection. However, the existing structure cannot automatically adapt to this requirement, thus reducing the detection accuracy in complex scenes.

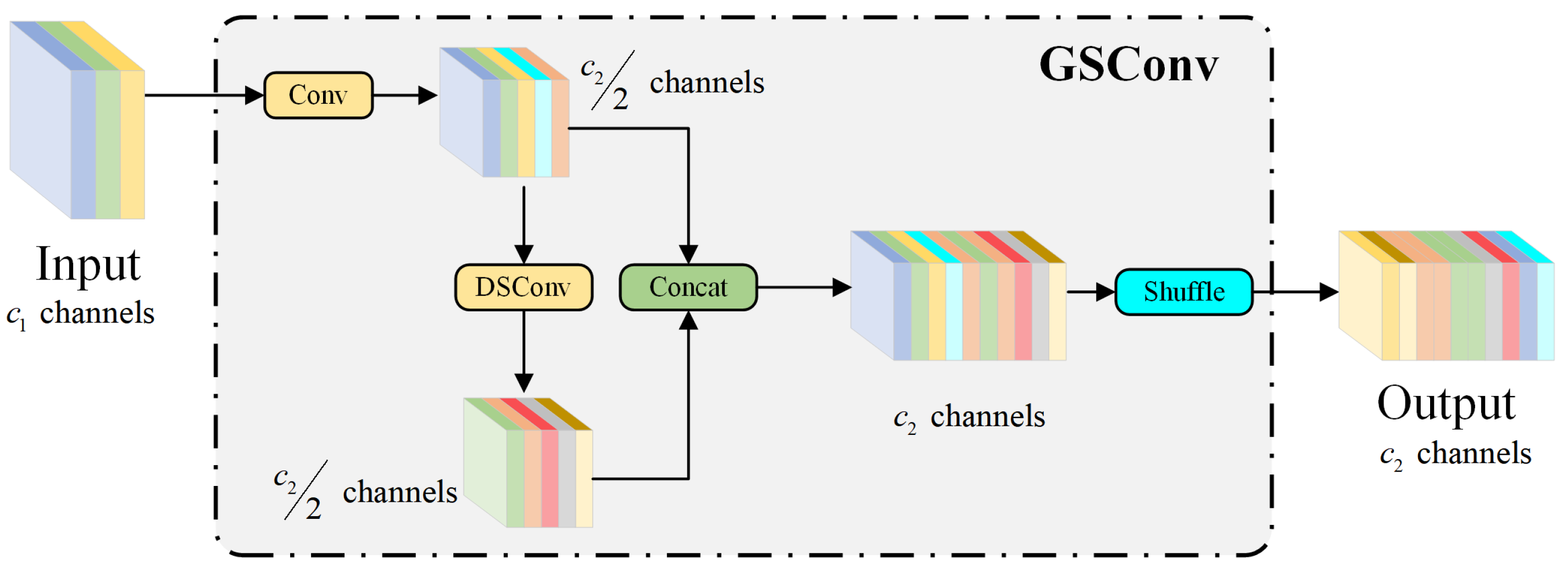

Although YOLOv10 adopts a lightweight design, such as using depthwise separable convolution (DSC) to reduce the number of parameters and computation, there are still notable limitations. Specifically, DSC reduces feature representation ability by separating the channel information of the input image, which leads to lower detection accuracy compared to standard convolution (SC). This limitation is especially prominent in the SAR image ship detection task of this study. GSConv, as a novel lightweight convolution method, cleverly combines the advantages of SC and DSC [53]. It retains a feature representation ability close to that of SC while significantly reducing computational overhead.

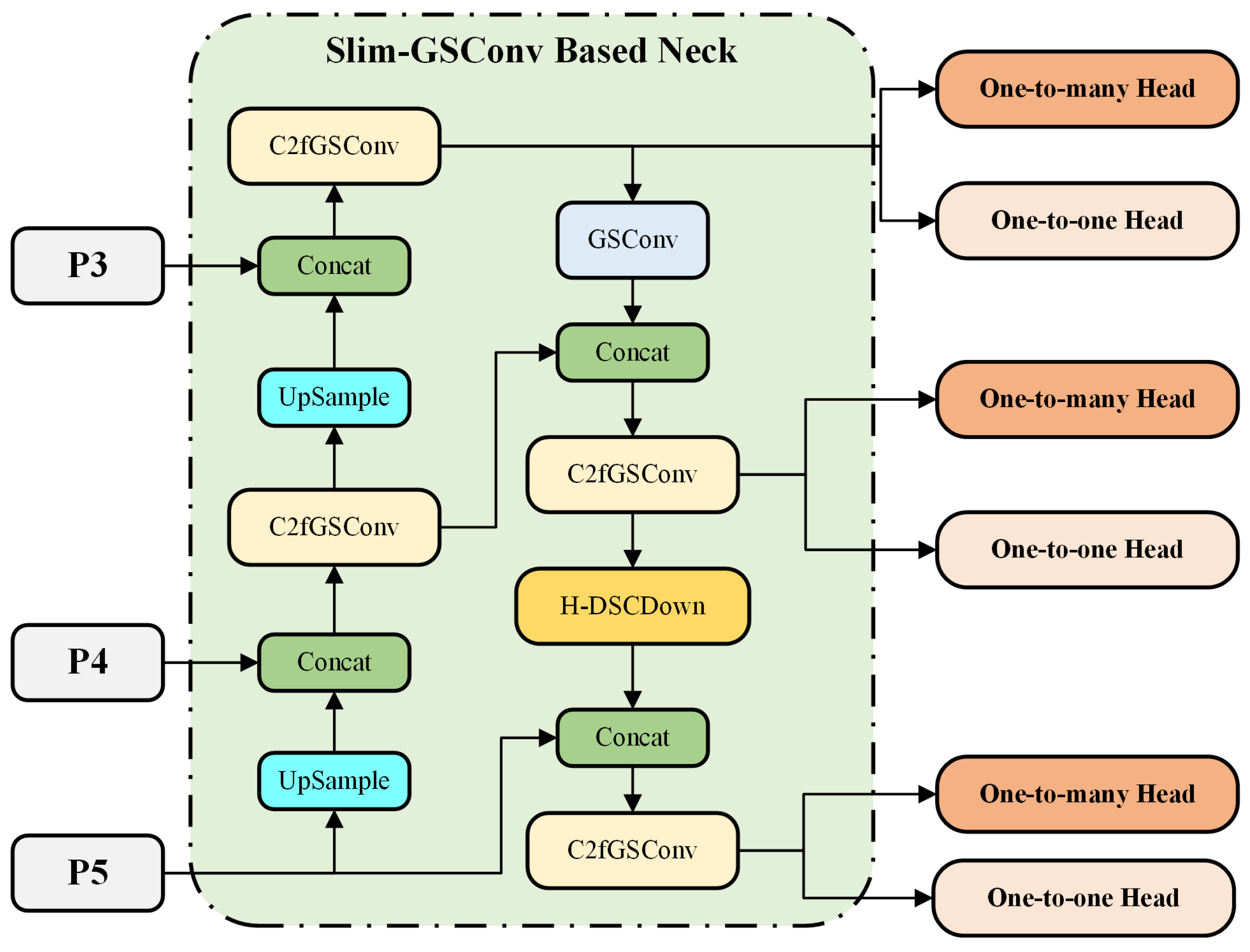

Figure 6 illustrates the structure of GSConv, where the main branch uses SC to focus on channel information, and the auxiliary branch uses DSC to focus on spatial information. These two branches are then merged via a shuffling operation, fully exploiting both spatial and channel features of ship targets. The computational overhead of GSConv is approximately 50% that of SC, and it retains hidden connections between channels while reducing the computational burden. This demonstrates that GSConv can achieve more efficient feature representation with fewer parameters in the Neck architecture.
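The roughly 50% figure can be reproduced with a simple weight count. The sketch below assumes the commonly described GSConv layout, in which a standard convolution produces half of the output channels, a 5 × 5 depthwise convolution processes that half, and the two halves are concatenated and shuffled. Bias and normalization parameters are ignored, and all function names are hypothetical.

```python
def sc_params(c_in, c_out, k):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out


def gsconv_params(c_in, c_out, k, dw_k=5):
    """Weights of GSConv under the layout assumed above."""
    half = c_out // 2
    dense = k * k * c_in * half      # standard conv producing half the channels
    depthwise = dw_k * dw_k * half   # depthwise conv applied to that half
    return dense + depthwise


def channel_shuffle(channels, groups=2):
    """Interleave channel groups, e.g. [a0, a1, b0, b1] -> [a0, b0, a1, b1]."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]


standard = sc_params(128, 128, 3)
gs = gsconv_params(128, 128, 3)
ratio = gs / standard
shuffled = channel_shuffle(["sc0", "sc1", "dw0", "dw1"])
```

For 128 input and output channels with a 3 × 3 kernel the ratio is slightly above one half, since the depthwise branch adds only a small number of weights. The shuffle step interleaves the channels from the two branches so that later layers see mixed SC and DSC features.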

To achieve an optimal balance between accuracy and speed and to efficiently fuse features, this paper proposes a lightweight Neck network structure based on GSConv, enhancing the suitability of SSGY for SAR image ship detection tasks on spaceborne or airborne platforms. The decision to exclude GSConv from the backbone network is due to the increasing depth of the backbone layers, which exacerbates data flow resistance and significantly increases inference time. By the time features reach the Neck, they have been sufficiently compressed (with channel dimensions at their maximum and spatial dimensions at their minimum), and the required transformations are relatively moderate. Consequently, GSConv is more effective in the Neck, where it processes the connected feature maps with minimal redundancy, eliminating the need for further compression and enabling more efficient attention mechanisms.

Figure 7 shows the lightweight neck network structure based on GSConv designed in this study. The C2fGSConv module in the figure is a feature fusion processing module based on GSConv. For its specific structure, refer to Figure 8. It is important to note that the activation function for the CBS module in YOLOv10 is SiLU. However, during the experiments, we found that changing the activation function in GSConv to Mish can significantly enhance the detection performance of ships in SAR images. The C2fGSConv module follows the design philosophy of C2f, and the GBM module serves as the basic lightweight convolution module, where the parameters k and s represent the kernel size and stride of the first standard convolution block in GSConv, respectively. Here, the default kernel size of the depthwise separable convolution is set to 5, and the stride is set to 1.

The introduction of the C2fGSConv module between P4 and P3 enhances the model’s multi-scale feature processing capability. Features at the P4 layer, characterized by higher semantic information but lower resolution, are fused with features from the P3 layer, which provide higher resolution but relatively less semantic information. The C2fGSConv module effectively combines these features, enabling the model to leverage the semantic richness from P4 for accurate classification and the high-resolution details from P3 for precise localization in ship detection tasks. This improved multi-scale feature fusion enhances detection accuracy, particularly for ships of varying sizes and resolutions in SAR images.

Thus, the neck structure of SSGY has successfully reduced computational load and parameter count through the incorporation of the GSConv module. Specific parameter quantification results can be found in Table 1, where comparisons with the original neck structure are provided. This optimization facilitates faster feature fusion and transmission during SAR image processing, thereby improving the overall operational efficiency of the model.

3.4. Detection Head

The YOLOv10 head structure processes multi-scale feature maps from the Neck, facilitating the detection of objects across a wide range of sizes. This is particularly crucial for SAR ship detection, as it enables accurate identification of both small, distant fishing vessels and large, nearby cargo ships. The head network consists of convolutional layers that extract and fuse features from the input maps, along with prediction layers responsible for object localization (bounding box regression) and classification. Together, these components enable comprehensive object detection.

In the YOLO series, the head structure typically includes a classification head and a regression head, which are responsible for object classification and position regression, respectively. Analysis of the detection head indicates that the classification head generally requires more parameters and computational complexity than the regression head. This is due to the greater complexity of the classification head, which must distinguish between multiple object categories. However, experiments replacing the output with true regression and classification values while setting the corresponding loss to zero show that removing the regression module results in a significant drop in validation accuracy compared to removing the classification module. This suggests that accurately predicting the object’s location is more complex than distinguishing its category, especially in complex scenarios with targets of varying sizes and shapes, as precise localization requires the model to learn more intricate spatial information.

The SSGY method adopts the consistent dual assignment strategy from YOLOv10 to facilitate NMS-free training. Specifically, both the one-to-many and one-to-one heads are incorporated during training. The one-to-many branch offers extensive supervision signals, improving the model’s ability to learn diverse object features, while the one-to-one branch primarily operates during inference. This design significantly reduces the computational complexity of the deployed model. Furthermore, to decrease the parameter count and computational load of the classification head, two 3 × 3 convolutional layers are replaced with depthwise separable convolutions, as illustrated in Figure 9.

3.5. Joint Loss Function

The loss function typically consists of two main components: classification loss and bounding box (Bbox) loss. In the classification loss part, we use binary cross-entropy (BCE) loss to predict the presence or absence of ships in SAR images, framing the problem as a binary classification task with the goal of determining the presence of a ship. The BCE loss function is as follows:

$$L_{\mathrm{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log p_i + \left(1 - y_i\right) \log\left(1 - p_i\right) \right],$$

where $N$ represents the number of samples, $y_i$ denotes the ground-truth label for the $i$-th sample (with 0 indicating the absence of a ship and 1 indicating the presence of a ship), and $p_i$ is the model-predicted probability of a ship being present in the image.
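As a quick numerical check of the BCE definition, the following sketch evaluates the loss for a small batch. The function name and the clamping constant `eps`, which only guards against taking the logarithm of zero, are assumptions made for this example.

```python
import math


def bce_loss(labels, probs, eps=1e-7):
    """Mean binary cross-entropy over paired labels (0 or 1) and probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


uninformed = bce_loss([1, 0], [0.5, 0.5])   # equals ln 2
confident = bce_loss([1, 0], [0.9, 0.1])    # correct and confident
wrong = bce_loss([1, 0], [0.1, 0.9])        # confident but wrong
```

An uninformed prediction of 0.5 yields a loss of ln 2, confident correct predictions reduce it, and confident wrong predictions are penalized heavily.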

The other component of the loss function is the bounding box loss. The Intersection over Union (IoU) is defined as the ratio of the overlapping area between the predicted and ground-truth boxes to the area of their union, formally expressed by $IoU = |B \cap B^{gt}| / |B \cup B^{gt}|$, where $B$ and $B^{gt}$ denote the predicted and ground-truth boxes. Given the diverse shapes and positions of ships in SAR imagery, we introduce the complete intersection-over-union (CIoU) loss, which incorporates the IoU, the distance between bounding box centers, and the aspect ratio:

$$L_{CIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v$$

where $\rho(b, b^{gt})$ is the Euclidean distance between the centers of the predicted and ground-truth boxes, $c$ denotes the diagonal length of the smallest enclosing rectangle that bounds both the predicted and ground-truth boxes, $v$ measures the aspect ratio difference between them, and $\alpha$ is a weighting factor that balances this discrepancy.
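The CIoU loss can be sketched for a single pair of boxes as follows. This is a minimal plain-Python version of the standard CIoU definition, assuming boxes in `(x1, y1, x2, y2)` corner format; the function names and the `eps` stabilizer are illustrative and not taken from the SSGY implementation.

```python
import math


def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss: 1 - IoU + center-distance penalty + aspect-ratio penalty."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    i = iou(pred, gt)
    # Squared distance between the two box centers.
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 \
         + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2
    # Squared diagonal of the smallest enclosing rectangle.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (
        math.atan((gx2 - gx1) / (gy2 - gy1 + eps))
        - math.atan((px2 - px1) / (py2 - py1 + eps))
    ) ** 2
    alpha = v / (1 - i + v + eps)
    return 1 - i + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # perfectly aligned boxes
print(ciou_loss((0, 0, 2, 2), (3, 3, 5, 5)))  # disjoint boxes, same shape
```

Unlike the plain IoU loss, the center-distance term still provides a useful signal when the boxes do not overlap at all.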

However, the distribution of ships in SAR scenarios is uneven, with some areas being densely populated and others being sparse. Traditional loss functions do not adequately handle this variation in detection requirements across regions. Moreover, this imbalance may result in imprecise annotations, negatively affecting models trained with loss functions based on CIoU. The Weighted IoU (WIoU) introduces a weighting mechanism that can mitigate the negative impact of inaccurate annotations on model training to some extent. By assigning higher weights to specific regions or categories, this loss function improves detection performance in scenarios with class imbalance or highly complex target distributions [54]. Therefore, this paper adopts $L_{WIoU}$ in the bounding box loss function, with its calculation formula as follows:

$$L_{WIoU} = R_{WIoU} \cdot L_{IoU}, \qquad R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{\left(W_g^2 + H_g^2\right)^{*}}\right)$$

where $L_{IoU} = 1 - IoU$, $x$ and $y$ denote the center coordinates of the predicted bounding box, while $x_{gt}$ and $y_{gt}$ represent the center coordinates of the ground-truth bounding box. Meanwhile, $W_g$ and $H_g$ indicate the width and height, respectively, of the minimum enclosing rectangle. The $*$ signifies that the term within the parentheses is detached from the computational graph, which prevents it from producing gradients that could hinder convergence.
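A minimal sketch of a WIoU-style loss for one box pair is given below, following the commonly cited WIoU v1 form (an exponential center-distance factor multiplying the IoU loss). Boxes are assumed to be in `(x1, y1, x2, y2)` format, and the function names are illustrative. Plain Python has no gradients, so the detachment of the enclosing-box term is only noted in a comment.

```python
import math


def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1_loss(pred, gt, eps=1e-9):
    """WIoU v1-style loss: distance-based factor times (1 - IoU)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    # Box centers.
    x, y = (px1 + px2) / 2, (py1 + py2) / 2
    x_gt, y_gt = (gx1 + gx2) / 2, (gy1 + gy2) / 2
    # Width and height of the minimum enclosing rectangle.
    w_g = max(px2, gx2) - min(px1, gx1)
    h_g = max(py2, gy2) - min(py1, gy1)
    # In an autograd framework this denominator would be detached
    # from the graph so that it contributes no gradient.
    denom = w_g ** 2 + h_g ** 2 + eps
    r_wiou = math.exp(((x - x_gt) ** 2 + (y - y_gt) ** 2) / denom)
    return r_wiou * (1.0 - iou(pred, gt))


print(wiou_v1_loss((0, 0, 2, 2), (0, 0, 2, 2)))
print(wiou_v1_loss((0, 0, 2, 2), (3, 3, 5, 5)))
```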

In SAR ocean scenes, targets usually appear against a complex noisy background and exhibit a variety of shapes and scales. In response to these challenges, this study combines the CIoU with the WIoU to optimize the geometric features and regional importance of bounding boxes. The proposed combined loss function treats both $L_{CIoU}$ and $L_{WIoU}$ as independent loss terms, which are then weighted and summed. The formulation is given as follows:

$$L_{Bbox} = \lambda L_{CIoU} + (1 - \lambda) L_{WIoU}$$

where $\lambda$ is a coefficient that controls the weighting of CIoU and WIoU, balancing their contributions in the overall loss. This value is typically determined through cross-validation or adjusted empirically based on domain expertise.
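The weighted sum of the two box losses can be illustrated with a short sketch. It assumes a convex combination governed by a single coefficient, here called `lam`; both the exact form of the combination and the default value of 0.5 are assumptions for illustration, not values reported in this paper.

```python
def combined_box_loss(l_ciou: float, l_wiou: float, lam: float = 0.5) -> float:
    """Blend two precomputed box losses with one balancing coefficient.

    lam = 1.0 uses only the CIoU term, lam = 0.0 only the WIoU term.
    The convex form and the default value are illustrative assumptions.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * l_ciou + (1.0 - lam) * l_wiou


# Example with made-up loss values for a single box pair.
print(combined_box_loss(1.0, 2.0, lam=0.25))
```

A convex combination keeps the blended loss on the same scale as its two inputs, which makes the coefficient easier to tune than two independent weights.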

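Distribution Focal Loss (DFL) addresses uncertainty in bounding box predictions by modeling multiple potential positions for bounding box coordinates. In SAR imagery, ship boundaries can become indistinct or partially occluded due to imaging principles and environmental factors. By enabling finer optimization of predicted boxes, DFL improves the accuracy of bounding box regression. It is computed as the cross-entropy between the ground-truth and predicted distributions over the discretized coordinate values:

$$L_{DFL} = -\sum_{i} q_i \log(p_i)$$

where $q_i$ represents the ground-truth probability distribution for each box and $p_i$ is the corresponding predicted probability. Consequently, the total loss function designed in this study can be expressed as follows:

$$L_{total} = \lambda_1 L_{BCE} + \lambda_2 L_{Bbox} + \lambda_3 L_{DFL}$$

where $L_{BCE}$ is the classification loss, $L_{Bbox}$ is the combined CIoU and WIoU bounding box loss, and $L_{DFL}$ is the distribution focal loss. The values of $\lambda_1$, $\lambda_2$, and $\lambda_3$ were set based on prior research and refined empirically to balance accuracy and efficiency, and the chosen loss components were selected after considering commonly used alternatives.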
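The DFL term and the final weighted sum described above can be sketched as follows. The DFL part follows the usual formulation in which a continuous target offset is split between its two nearest integer bins; the function names, the bin count, and the weights used in the example are hypothetical and are not the values used in this study.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def dfl_loss(logits, target, eps=1e-12):
    """Distribution focal loss for one box side.

    logits: unnormalized scores over discrete offsets 0..n-1 (n >= 2)
    target: continuous ground-truth offset within [0, n-1]
    """
    n = len(logits)
    if n < 2 or not 0 <= target <= n - 1:
        raise ValueError("need at least two bins and a target inside the bin range")
    probs = softmax(logits)
    left = min(int(math.floor(target)), n - 2)
    right = left + 1
    # Two-point target distribution: closer bin receives the larger weight.
    w_left = right - target
    w_right = target - left
    return -(w_left * math.log(max(probs[left], eps))
             + w_right * math.log(max(probs[right], eps)))


def total_loss(l_cls, l_box, l_dfl, w_cls, w_box, w_dfl):
    """Weighted sum of classification, box, and DFL terms."""
    return w_cls * l_cls + w_box * l_box + w_dfl * l_dfl


# Uniform prediction over 4 bins with a target halfway between bins 1 and 2.
print(dfl_loss([0.0, 0.0, 0.0, 0.0], 1.5))
# Placeholder weights and loss values, for illustration only.
print(total_loss(0.5, 1.0, 0.25, w_cls=1.0, w_box=2.0, w_dfl=4.0))
```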

In summary, the designed multi-task loss function jointly optimizes position, category, and confidence, which can further improve the accuracy of ship detection in SAR images.

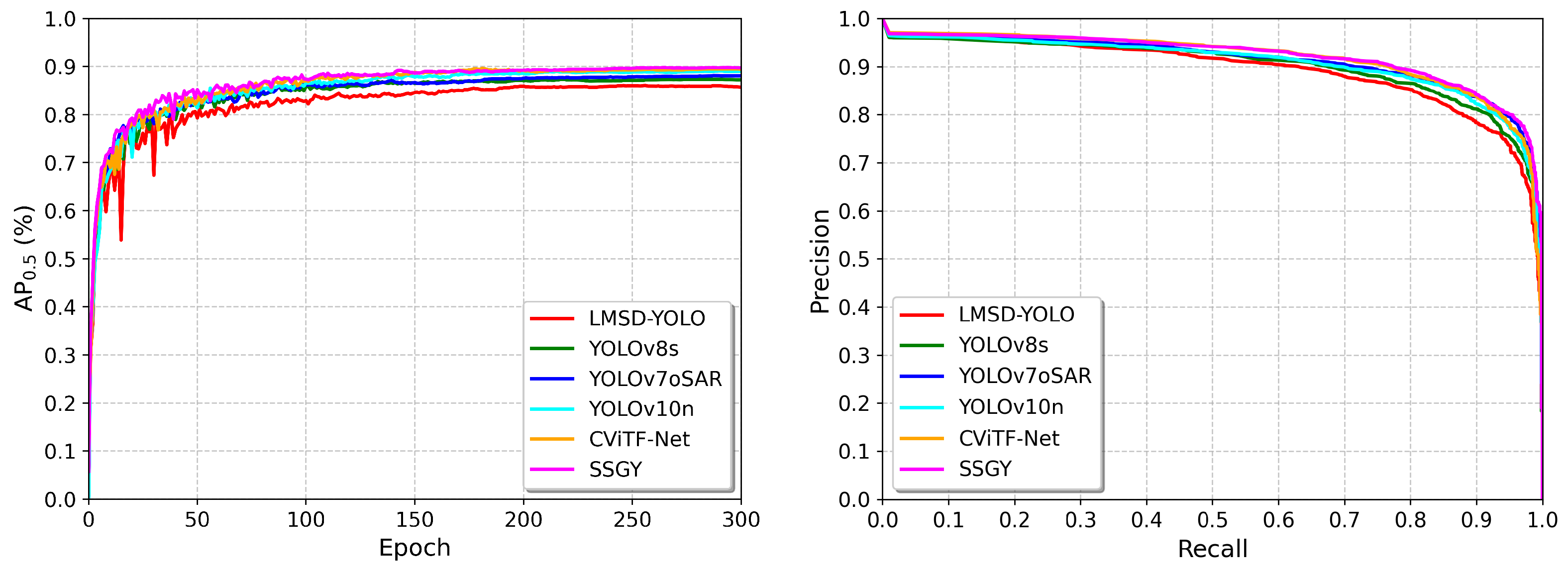

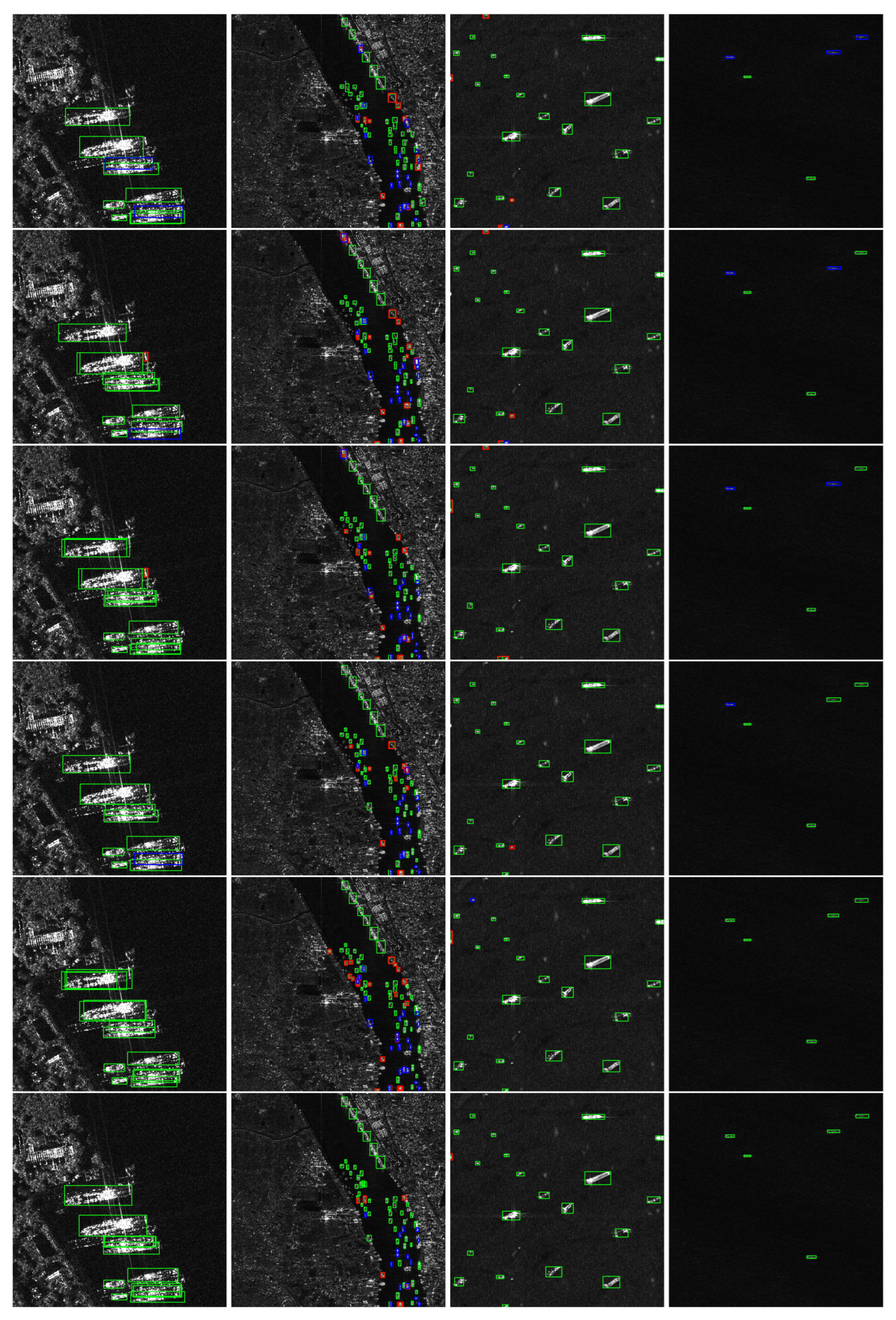

5. Discussion

This paper presents a lightweight SAR image ship detection model, SAR-Star-GSConv-YOLOv10 (SSGY), which optimizes feature extraction through the multi-scale kernel StarNet in the backbone network. The neck network utilizes GSConv for efficient feature fusion, and the detection head employs strategies to minimize computational cost. The loss function integrates multiple components to enhance detection performance. With just 1.4 M parameters, SSGY significantly reduces storage requirements compared to models such as YOLOv10n (2.3 M parameters), making it highly deployable. Its 5.4 G FLOPs, much lower than CViTF-Net’s 205.74 G, ensure reduced computational load, lower time and energy consumption, and efficient operation on SAR platforms with limited computing resources, offering a practical solution for real-world applications.

Recently, an oriented ship detection method for optical remote sensing images (CGTC-RYOLO) [57] combined a contextual global attention mechanism with lightweight task-specific context decoupling to improve detection of multi-scale, arbitrary-orientation, and densely arranged ships. Both CGTC-RYOLO and SSGY aim to enhance detection accuracy and efficiency, but they are optimized for different sensing modalities and challenges. CGTC-RYOLO focuses on optical imagery, leveraging global attention and angle classification to improve rotation robustness. In contrast, SSGY targets SAR imagery, where complex marine backgrounds and speckle noise dominate, and it emphasizes extreme lightweight design (1.4 M parameters, 5.4 G FLOPs) to support deployment on resource-constrained SAR platforms. The proposed method complements optical-based approaches by extending lightweight ship detection into the SAR domain.

In dense scenes, MSKS enlarges the effective receptive field through large kernels and dilated convolutions, while DSCDown preserves fine structural cues. Together, these modules separate adjacent hulls and reduce duplicate or missed detections. The dual-assignment head and distribution focal loss further refine positive sample selection and boundary regression under heavy overlap. For strong iceberg-like scatterers, the efficient channel attention module boosts channels that respond to elongated manmade structures and suppresses responses dominated by background clutter. The combination of CIoU and WIoU in the localization objective penalizes center displacement and aspect ratio inconsistencies, discouraging boxes from fitting amorphous bright clutter and lowering the false alarm rate.

Theoretically, MSKS offers significant advantages as the backbone network. Its star-shaped operation fuses features and maps them to a high-dimensional nonlinear space, enabling the extraction of complex ship features. Smaller convolution kernels in the lower layers reduce computational cost and enhance detail extraction, while larger kernels in higher layers expand the receptive field to capture semantic information. The integration of dilated convolutions and the ECA module further optimizes multi-scale feature capture and channel attention. In the ablation experiments, using YOLOv10n as the baseline, the introduction of the MSKS module improves precision and reduces parameters and FLOPs. When combined with modules such as DSCDown and ECA, detection performance is further enhanced, demonstrating the MSKS module’s effectiveness and crucial role in SAR image ship detection.
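To make the receptive-field argument concrete, the sketch below computes the effective extent of a dilated convolution kernel using the standard relation k_eff = k + (k − 1)(d − 1). The kernel sizes and dilation rates in the example are hypothetical and are not the MSKS settings.

```python
def effective_kernel(k: int, dilation: int) -> int:
    """Spatial extent covered by a k x k kernel with the given dilation."""
    if k < 1 or dilation < 1:
        raise ValueError("kernel size and dilation must be positive")
    return k + (k - 1) * (dilation - 1)


# Hypothetical configurations, for illustration only.
for k, d in [(3, 1), (3, 2), (7, 3)]:
    print(f"k={k}, dilation={d} -> effective size {effective_kernel(k, d)}")
```

Dilation widens the covered area without adding weights, which is why it pairs well with a lightweight backbone.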

In SAR image ship detection, the neck structure based on C2fGSConv plays a crucial role. GSConv combines the benefits of standard convolution (SC) and depthwise separable convolution (DSC), effectively capturing both spatial and channel features within the neck network. This integration enhances detection accuracy while reducing computational cost, especially during feature fusion at the P3 and P4 layers. In the ablation experiments, the CBS neck structure was used as the baseline. The GBSiLu structure reduces the parameter count but increases FLOPs. The C2fGSConv structure with the GBMish activation function, however, further improves accuracy without altering the parameter count. It therefore retains the reduced parameter count while improving detection accuracy, which benefits ship detection on resource-constrained SAR platforms.

Despite the effectiveness of the proposed method, hyperparameter sensitivity remains a challenge, particularly in terms of generalization and adaptability to complex SAR environments. While a set of hyperparameters has been determined experimentally, performance fluctuations may arise when the model encounters SAR images with varied data distributions. To address this, we will categorize SAR image data based on factors such as imaging conditions, ship types, and resolution. Using traditional methods, we will first determine the hyperparameter range and then refine the search with Bayesian optimization to identify optimal values. The model will be iteratively adjusted and validated against new data until the performance meets the desired standards.